Abstract

OBJECTIVE

Determine whether bilateral loudness balancing during mapping of bilateral cochlear implants (CIs) produces fused, punctate, and centered auditory images that facilitate lateralization with stimulation on single-electrode pairs.

DESIGN

Adopting procedures similar to those that are practiced clinically, we used direct stimulation to obtain most-comfortable levels (C levels) in recipients of bilateral CIs. Three pairs of electrodes, located in the base, middle, and apex of the electrode array, were tested. These electrode pairs were loudness-balanced by playing right-left electrode pairs sequentially. In experiment 1, we measured the location, number, and compactness of auditory images in 11 participants in a subjective fusion experiment. In experiment 2, we measured the location and number of the auditory images while imposing a range of interaural level differences (ILDs) in 13 participants in a lateralization experiment. Six of these participants repeated the mapping process and lateralization experiment over three separate days to determine the variability in the procedure.

RESULTS

In approximately 80% of instances, bilateral loudness balancing was achieved from relatively small adjustments to the C levels (≤3 clinical current units, CU). More important, however, was the observation that in four of eleven participants, simultaneous bilateral stimulation regularly elicited percepts that were not fused into a single auditory object. Across all participants, approximately 23% of percepts were not perceived as fused; this contrasts with the 1-2% incidence of diplacusis observed with normal-hearing individuals. In addition to the unfused images, the perceived location was often offset from the physical ILD. On the whole, only 45% of percepts presented with an ILD of 0 CU were perceived as fused and heard in the center of the head. Taken together, these results suggest that distortions to the spatial map remain common in bilateral CI recipients even after careful bilateral loudness balancing.

CONCLUSION

The primary conclusion from these experiments is that, even after bilateral loudness balancing, bilateral CI recipients still regularly perceive stimuli that are either unfused, offset from the assumed zero ILD, or both. Thus, while current clinical mapping procedures for bilateral CIs are sufficient to enable many of the benefits of bilateral hearing, they may not elicit percepts that are thought to be optimal for sound-source location. As a result, in the absence of new developments in signal processing for CIs, new mapping procedures may need to be developed for bilateral CI recipients to maximize the benefits of bilateral hearing.

INTRODUCTION

Binaural hearing is of great importance because it enables or enhances the ability to 1) localize sounds in the horizontal plane and 2) understand speech in noisy environments. The localization of sounds in the horizontal plane is performed by neurally computing an across-ear comparison of the arrival times (interaural time differences, ITDs) and level (interaural level differences, ILDs) of a sound (Middlebrooks and Green, 1991). Speech understanding in noisy environments is greatly facilitated by the better-ear effect (Bronkhorst and Plomp, 1988; Culling et al., 2012; Hawley et al., 2004; Loizou et al., 2009). This occurs when one ear has a better signal-to-noise ratio, even if only for a very brief spectral-temporal moment. Speech understanding in noisy environments is also improved because of binaural unmasking, which is facilitated by sound sources having different ITDs (Bronkhorst and Plomp, 1988; Hawley et al., 2004).

Because of the numerous benefits associated with binaural hearing, an increasing number of individuals with severe-to-profound hearing impairment are receiving bilateral cochlear implants (CIs). Consistent with this decision, recipients of bilateral CIs have improved speech understanding in noise relative to monaural CI use. Recipients of bilateral CIs appear to gain a ‘better-ear advantage’ up to about 5 dB (Buss et al., 2008; Litovsky et al., 2006; Müller et al., 2002; Schleich et al., 2004; Tyler et al., 2002; van Hoesel and Tyler, 2003). However, the benefits to speech understanding are limited in bilateral CI recipients relative to those of normal-hearing individuals. For example, limited amounts of binaural unmasking have been measured, either in the free field (Buss et al., 2008; Dunn et al., 2008; Litovsky et al., 2009; van Hoesel and Tyler, 2003) or using direct-stimulation input that bypasses the microphones and uses research processors (Loizou et al., 2009; van Hoesel et al., 2008).

As with measures of speech understanding in noise, bilateral CI recipients appear to derive some benefit with regard to sound localization, but not as much as individuals with normal hearing. Notably, sound localization with bilateral CIs is much better compared to that with a unilateral CI. For example, when using a single CI and a clinical sound processor with an omni-directional microphone, individuals are nearly at chance performance when attempting to localize sounds in the free field (Litovsky et al., 2012). Using bilateral CIs, however, participants have much improved sound-localization abilities, albeit not as good as those observed for normal hearing listeners in quiet or background noise (Kerber and Seeber, 2012; Litovsky et al., 2012).

Several studies have now indicated that bilateral CI users mostly rely on ILDs for sound localization with clinical processors (Aronoff et al., 2010, 2012; Grantham et al., 2008; Seeber and Fastl, 2008). It is possible to detect ITDs with clinical processors from the slowly-varying envelope of the signal (Laback et al., 2004) or with direct stimulation where there is bilaterally synchronized timing (Litovsky et al., 2012). However, the lack of fine structure encoding and bilateral synchronization of the clinical processors makes ITDs an inferior localization cue compared to ILDs in bilateral CI users. Therefore, for present CI technology, it is imperative to focus on how ILDs are processed.

It is our conjecture that recipients of bilateral cochlear implants receive poorly encoded and inconsistent ILD and ITD cues, which results in an increased localization error, or ‘localization blur’ (Blauert, 1997), relative to individuals with normal hearing. In recipients of bilateral cochlear implants, an increased localization blur could manifest itself perceptually in at least two different ways. In one, individuals might be unable to fuse input from each ear into a single image. This may result from differences in the site of stimulation in each ear (Kan et al., 2013; Long et al., 2003; Poon et al., 2009). Such differences could theoretically result from disparities in electrode insertion depth between the ears. In a second possible source of localization blur, the perceived location of the signal might be offset and/or less precise than the location perceived by individuals with normal hearing.

Supporting the idea of localization blur, when bilateral CI localization response patterns are examined, it appears that individuals generally demonstrate relatively coarse resolution in their localization judgments compared to normal-hearing participants. For example, bilateral CI recipients tend to have either two (left or right), three (left, center, right), or four response regions (0, 15, 30 and 45–75 degrees within a hemifield) (Dorman et al., 2014; Majdak et al., 2011). These rough representations may result from inconsistent presentation of cues for sound-source location. In unsynchronized clinical processors, inconsistent onset ITDs can be introduced because there is no bilateral synchronization. Perhaps most relevant for recipients of bilateral CIs, there may be inconsistent ILD cues that differ from the physical ILD presented to the individual CI recipient. These inconsistent ILD cues may arise from several possible sources, one of which could be the independent automatic-gain control in the two processors; this variable has been previously shown to affect just-noticeable differences for ILDs in users of bilateral hearing aids (Musa-Shufani et al., 2006). The idea of inconsistent ILD cues in this population aligns with data obtained in bilateral CI recipients tested with clinical speech processors. In most such studies, ILD sensitivity and localization abilities approached, but were worse than, those observed in normal-hearing individuals (Dorman et al., 2014; Grantham et al., 2008; Laback et al., 2004). Conversely, one report showed that bilateral CI recipients may have at least as good and maybe better localization abilities than normal-hearing participants when only ILD cues are presented (Aronoff et al., 2012).

In an ideal scenario, inconsistent ILD cues or localization blur could be minimized during mapping of the CIs by the recipient’s audiologist. At present, however, there is little consensus on the best procedures for mapping bilateral CIs, nor do audiologists have a large number of available tools to optimize bilateral CI fittings. Generally, clinical mapping procedures for bilateral CIs consist largely of fitting each CI independently. A key component of this mapping process is to determine, in each ear, the amount of current needed to elicit a maximally comfortable level of loudness. This level is determined either for each individual electrode or for a group of electrodes simultaneously. In some instances, the audiologist may then make loudness adjustments to one or both ears based on the percepts elicited by using both CIs when listening to conversational speech or other sounds. Such manipulations are not always made in a systematic manner, however, and are rarely conducted across individual electrode pairs. These conventional fitting procedures have clearly been effective for re-establishing speech-understanding abilities in both ears. Moreover, conventional procedures also can restore some of the benefits of binaural hearing, including improved speech understanding in noise due to the head-shadow effect, and some access to ILD cues for sound-source location. However, there is also reason to think that such procedures may not elicit percepts that are thought to be optimal for sound-source location.

In a recent report, Goupell et al. (2013a) argued that conventional mapping procedures for bilateral CIs do not control the perceived intracranial position (i.e., lateralization) of an auditory image and that distortions to a participant’s spatial maps would be introduced. We assume that in an undistorted spatial map, individuals perceive a given sound as a single, fused image at a location which is consistent with the characteristics of the signal. For example, if a signal is presented to both ears at the same loudness with an ITD of 0 µs and an ILD of 0 dB, then the participant should perceive a single, fused image at the midline of the head. Similarly, if a signal with an ITD and ILD favoring one ear is presented, the participant should perceive a single image that is shifted in location. In Goupell et al. (2013a), it was shown in an archival meta-analysis that determining most-comfortable (C) levels independently across the ears often introduced substantial offsets in lateralization of an auditory image using direct stimulation and single-electrode pairs, which would normally be assumed to be centered. This is an unfortunate finding for present clinical mapping procedures. In that same study, an experiment showed how lateralization with ILDs was inconsistent across different stimulation levels as measured in percentage of the dynamic range for participants, thus also likely being a factor contributing to the localization blur hypothesis for realistic (i.e., modulated) stimuli like speech.

The existence of possible distortions of a spatial map with conventional mapping procedures raises the question of whether alternate, more detailed mapping procedures for bilateral CIs have the potential to yield percepts that are robust for sound-source location. One likely candidate in this regard would be systematic bilateral loudness balancing across individual electrodes. Electrode-by-electrode bilateral loudness balancing is not performed on a consistent basis in clinical environments. However, it is possible that it may enhance the accuracy and consistency of ILD-based cues to sound location by increasing the likelihood that a signal presented to each ear at the same intensity would be perceived as centered. Once this anchor has been established, changes in the ILD would hopefully be more likely to result in corresponding perceived changes in sound location. Therefore, the goal of the present investigation was to examine whether careful bilateral loudness balancing produced 1) fused auditory percepts and 2) auditory image locations that were consistent with the physical ILD. The use of bilateral loudness balancing addresses a weakness of Goupell et al. (2013a), as the lateralization offsets observed in their bilateral CI recipients could have resulted from between-ear loudness differences; in that study, only unilaterally balanced C levels were employed. Moreover, the use of bilateral loudness balancing is at present more likely to be clinically relevant than other approaches such as auditory-image centering¹. In current clinical practice, loudness is one of the main tools utilized by audiologists when mapping cochlear implants. With regard to bilateral cochlear implants, it is common practice for many audiologists to conduct loudness balancing, both within and across ears, when programming these devices. Our goal in this experiment was to hew as closely as possible to procedures with which audiologists are readily familiar, and to observe whether such procedures could elicit percepts thought to be ideal for sound-source location.

That being said, careful loudness balancing may not necessarily facilitate a ‘fused’ intracranial image, particularly if a lack of fusion results from differences in the site of stimulation resulting from different electrode insertion depths in each ear (see Kan et al., 2013, 2015; Long et al., 2003; Poon et al., 2009). Nonetheless, there are two reasons why we chose to measure fusion after careful bilateral loudness balancing. First, on a fundamental level, the present paradigm represents an opportunity to further our understanding of how frequently ‘unfused’ percepts are reported in bilateral CI recipients with procedures that approximate those used clinically. This is important given that such suboptimal percepts are a likely contributor to the differences in localization abilities observed between recipients of bilateral CIs and individuals with normal hearing. Second, if there is an ILD, whether intended (via physical manipulation of the stimuli) or unintended (via unbalanced loudness percepts), then this may obscure the existence of an unfused image. In other words, if one ear is sufficiently louder than the other ear, the soft ear may not be perceptually salient. However, if the two ears were loudness balanced, it would be clearer that two separate images would be perceived. In this way, loudness balancing could be interpreted as affecting whether a signal is perceived as fused, regardless of whether it actually ‘improves’ the incidence of fused percepts.

MATERIALS AND METHODS

Participants and Equipment

Twenty-one post-lingually deafened bilateral CI users participated in this experiment. Their hearing histories and etiologies are shown in Table I. All had Nucleus-type implants with 24 electrodes (Nucleus24, Freedom, or N5).

Table I.

Demographic and device characteristics for the bilateral CI recipients tested in this investigation.

| Subject Code | Age (yrs) | Etiology | Onset of Profound Deafness (age in years) | Length of Profound Hearing Loss (years) | Sequential/Simultaneous | Right Internal Device | Right CI Use (years) | Left Internal Device | Left CI Use (years) | Time Between Ears (years) |
|---|---|---|---|---|---|---|---|---|---|---|
| J2P | 53 | Unknown | 4 | 49 | Sequential | CI512 | 1.91 | CI24RE (CA) | 6.00 | 4.09 |
| JLB | 59 | Unknown | 36 | 23 | Sequential | CI24RE (CA) | 3.26 | CI24R (CA) | 7.73 | 4.47 |
| Y4G | 43 | Measles/Mumps | 22 | 21 | Simultaneous | CI24M | 10.03 | CI24M | 10.03 | 0.00 |
| S6G | 75 | Family History | 70 | 4 | Simultaneous | CI24RE (CA) | 3.53 | CI24RE (CA) | 3.53 | 0.00 |
| RSB | 55 | Otosclerosis | 21 | 34 | Sequential | CI512 | 1.73 | CI24R (ST) | 12.68 | 10.95 |
| D7P | 52 | Chronic middle ear pathology | 40 | 11 | Sequential | CI24M | 10.87 | CI24R (CA) | 8.09 | 2.78 |
| A8M | 72 | Family History | 47 | 24 | Sequential | 24R (CS) | 9.24 | CI24R (CS) | 9.71 | 0.47 |
| SEW | 56 | Unknown | 32 | 24 | Sequential | CI512 | 0.52 | CI24M | 10.76 | 10.23 |
| W9P | 56 | Unknown | 29 | 26 | Simultaneous | N512 | 0.92 | N512 | 0.92 | 0.00 |
| NEP | 18 | Cogan's syndrome/head injury | 9 | 9 | Sequential | 24RE (CA) | 3.75 | CI24RE (CA) | 8.84 | 5.08 |
| AMB | 54 | Barotrauma | 32 | 21 | Sequential | CI512 | 2.42 | CI24RE (CA) | 3.43 | 1.01 |
| RJJ | 76 | Unknown | 66 | 11 | Simultaneous | CI24M | 10.54 | CI24R (CS) | 10.54 | 0.00 |
| OBB | 78 | NIHL-military | 52 | 26 | Sequential | N512 | 1.20 | CI24RE | 6.27 | 5.06 |
| NOO | 53 | Unknown | 11 | 42 | Sequential | CI24R (CS) | 10.46 | CI24RE (CA) | 3.66 | 6.80 |
| CAA | 21 | Unknown | 14 | 7 | Sequential | CI512 | 0.5 | CI512 | 1.5 | 1 |
| CAB | 79 | Unknown | 75 | 4 | Sequential | CI24R | 5 | CI24M | 7 | 2 |
| CAC | 67 | Unknown | 5 | 46 | Sequential | CI24RE | 9 | CI24M | 16 | 7 |
| CAD | 73 | Hereditary | 54 | 10 | Sequential | CI24R (CS) | 3 | CI24R | 9 | 6 |
| IBA | 75 | Unknown | 21 | 47 | Sequential | CI512 | 1 | CI24R (CS) | 7 | 6 |
| IBF | 61 | Hereditary | 51 | 3 | Sequential | CI24RE | 7 | CI24RE | 5 | 2 |

Electrical pulses were presented directly to the participants via a pair of bilaterally synchronized L34 speech processors controlled by a Nucleus Implant Communicator (NIC, Cochlear Ltd.; Sydney, Australia). The system was connected to a personal computer running custom software programmed in MATLAB (The MathWorks; Massachusetts).

Stimuli

Biphasic and monopolar electrical pulses that had a 25-µs phase duration were presented to participants in a train that was 500 ms in duration. There was no temporal windowing on the pulse train. The pulse rate matched a participant’s clinical rate, typically 900 pulses per second (pps) (see Table I). Bilateral stimulation was presented with zero ITD on pairs of electrodes that were matched in number. Explicit pitch-matching was not performed because it is not a common clinical practice, and the goal here was to emulate practices that are commonly utilized clinically.

Procedure

Loudness Mapping and Unilateral Loudness Balancing

The T and C levels for electrode pairs located in the apex, middle, and base of the cochlea (typically electrodes numbered 4, 12, and 20, respectively) were obtained in each ear using procedures similar to those utilized clinically. Participants were presented with a pulse train of a given intensity (given here in clinical current units, or CUs), and the participant reported its perceived loudness. The experimenter then adjusted the signal higher or lower in intensity, and presented the stimulus again. T levels were defined as the value at which the signal was barely audible, while C levels were defined as the value at which the signal was perceived as being most comfortable. The set of T and C levels for all the electrodes is called the loudness map. After determining the loudness map, the C levels were compared across electrodes within an ear by sequentially playing the pulse trains with an interstimulus interval of 500 ms. Any electrodes that were perceived as softer or louder than the other electrodes were adjusted so that all of the electrodes had C levels that were perceived as being equally loud.

Bilateral Loudness Balancing

For each electrode pair at the apex, middle, or base, bilateral loudness balancing was achieved by stimulating the two electrodes of the pair sequentially with a 500-ms interstimulus interval, first in the left ear and then in the right. The initial presentation levels were the unilaterally loudness-balanced C levels (ULBC). The loudness of the stimulus in the right ear was adjusted to match that in the left ear by having the participant tell the experimenter to increase or decrease the intensity (i.e., the C level) accordingly. These adjustments continued until the two electrodes were perceived as equally loud; the resulting levels are referred to as the bilaterally loudness-balanced C levels (BLBC).

Experiment 1: Subjective Fusion

The goal of this experiment was to determine whether bilateral loudness balancing elicited 1) a fused image and 2) a perceived location that was generally consistent with the presented ILD. Toward this goal, we utilized a subjective fusion task that was the same as that used in Goupell et al. (2013b) and Kan et al. (2013). A randomly selected electrode pair (apex, middle, or base) was stimulated simultaneously at an ILD of 0, ±5, or ±10 CUs applied to the BLBC levels. Then, the participant reported what auditory image was elicited by this stimulation using the graphical user interface (GUI) shown in Figure 1.

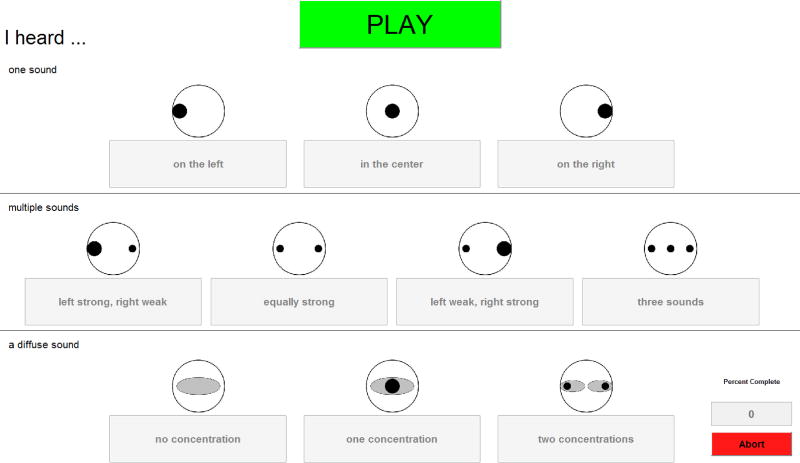

Figure 1.

The graphical-user interface (GUI) used for the Fusion task. Responses in the top row are chosen for fused, punctate percepts. Responses in the second row are selected when the participant hears two or three sounds. Finally, responses in the bottom row are chosen for diffuse sounds of different types.

Responses were categorized into one of three major groups, with sub-groups in each category, as follows: (1) one auditory image (on the left, in the center, on the right), (2) multiple auditory images (left strong, right weak; equally strong; left weak, right strong; three images), and (3) diffuse auditory image(s) (no concentration, one concentration, two concentrations). Participants initiated each trial by pressing a button, and were allowed to repeat any stimulus. Each of the three electrode pairs and five ILDs was presented at random 10 times, for a total of 150 trials. Eleven participants completed this protocol.
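The trial structure described above (three electrode pairs, five ILDs, ten repetitions each) can be sketched as a randomized trial list. This is a minimal illustration only: the names `ELECTRODE_PAIRS`, `ILDS_CU`, and `build_trial_list` are hypothetical, and shuffling the whole 150-trial block at once is an assumption about how "presented at random" was implemented.

```python
import itertools
import random

# Conditions taken from the text; all identifiers are hypothetical.
ELECTRODE_PAIRS = ["apex", "middle", "base"]
ILDS_CU = [-10, -5, 0, 5, 10]  # ILD in clinical current units (CU)
REPETITIONS = 10


def build_trial_list(seed=None):
    """Return a shuffled list of (electrode_pair, ild_cu) trials.

    Assumption: all repetitions of all conditions are shuffled together
    in one block rather than randomized within sub-blocks.
    """
    rng = random.Random(seed)
    conditions = list(itertools.product(ELECTRODE_PAIRS, ILDS_CU))
    trials = conditions * REPETITIONS
    rng.shuffle(trials)
    return trials


trials = build_trial_list(seed=1)
print(len(trials))  # 150
```

Each of the 15 conditions appears exactly 10 times in the returned list, which matches the total of 150 trials stated in the text.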

Experiment 2: Lateralization of ILDs

The goal of this experiment was to determine whether there is a perceived lateral offset with varying levels of ILD. In the lateralization task (Goupell et al., 2013a; Kan et al., 2013), an electrode pair (apex, middle, or base) was randomly selected and stimulated simultaneously with an ILD of 0, ±5, or ±10 CUs. Then, the participant reported 1) the number of sound sources that they heard (one, two, or three) and 2) the perceived intracranial location of each source. Participants recorded the perceived intracranial location by clicking on slider bars superimposed on a schematic of a head (see Figure 2 for an example of this GUI). The bars allowed participants to report only the lateral position of the auditory images. If multiple images were heard, participants were instructed to respond with the most salient, strongest, or loudest source on the topmost bar. The responses were translated to a numerical scale, where the left ear is –10, center is 0, and the right ear is +10. Participants were allowed to repeat any stimulus and they ended each trial with a button press. Each of the three electrode pairs and five ILDs was presented at random 10 times, for a total of 150 trials. Lateralization data for the most salient source were fit with a function that had the form

| (1) |

where x is the ILD value, and A, σ, µX, and µY are the parameters optimized to fit the data².
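To make the role of the four fitted parameters concrete, the sketch below fits a scaled and shifted cumulative-normal curve to lateralization responses. The functional form used here, A·erf((x − µX)/(√2·σ)) + µY, is an assumption chosen to be consistent with the named parameters and with the cumulative normal distribution fits mentioned in the figure captions; it is not necessarily the exact expression used in the study. The coarse grid search, the function names, and the example data are likewise hypothetical; a real analysis would use a proper least-squares optimizer.

```python
import itertools
import math


def lateralization_curve(x, A, sigma, mu_x, mu_y):
    """Assumed form: A scales the range, sigma sets the slope,
    mu_x shifts the curve along the ILD axis, mu_y shifts it vertically."""
    return A * math.erf((x - mu_x) / (math.sqrt(2.0) * sigma)) + mu_y


def sum_squared_error(params, ilds, responses):
    """Sum of squared residuals between the curve and the responses."""
    return sum((lateralization_curve(x, *params) - y) ** 2
               for x, y in zip(ilds, responses))


def grid_fit(ilds, responses):
    """Coarse grid search over (A, sigma, mu_x, mu_y); illustration only."""
    candidates = itertools.product(
        range(1, 11),            # A
        [1, 2, 4, 6, 8, 12],     # sigma (CU)
        range(-10, 11),          # mu_x (CU)
        range(-5, 6),            # mu_y (response-scale units)
    )
    return min(candidates, key=lambda p: sum_squared_error(p, ilds, responses))


# Hypothetical, noise-free responses generated from known parameters.
example_ilds = [-10, -5, 0, 5, 10]
example_responses = [lateralization_curve(x, 8, 4, -2, 1) for x in example_ilds]
print(grid_fit(example_ilds, example_responses))  # (8, 4, -2, 1)
```

Under this assumed form, a nonzero µX or µY corresponds to a curve that does not pass through zero lateralization at an ILD of 0 CU, which is the kind of offset examined in Experiment 2.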

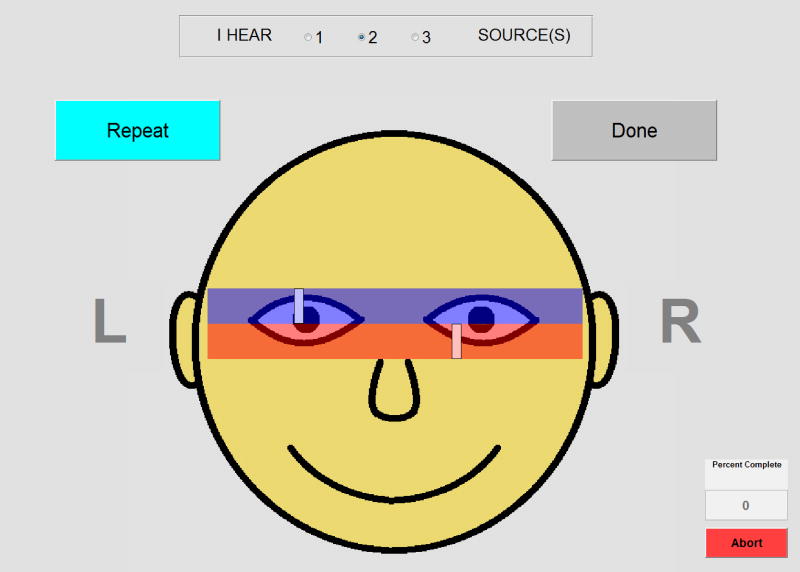

Figure 2.

The GUI used for the Lateralization task. At the top of the GUI, participants can indicate whether they heard one, two, or three sounds. Then, for each sound heard, participants can move the rectangle via a mouse until it matches the location of the perceived image within their head.

Thirteen participants completed this protocol, six of whom completed it three times on different days. The rationale for repeating the lateralization task on three consecutive days was to evaluate the consistency of the procedure as if it were performed by an audiologist on three separate visits. Performance on the lateralization task was very similar from one session to the next, and the data from each session were combined for data analyses.

RESULTS

Bilateral loudness balancing

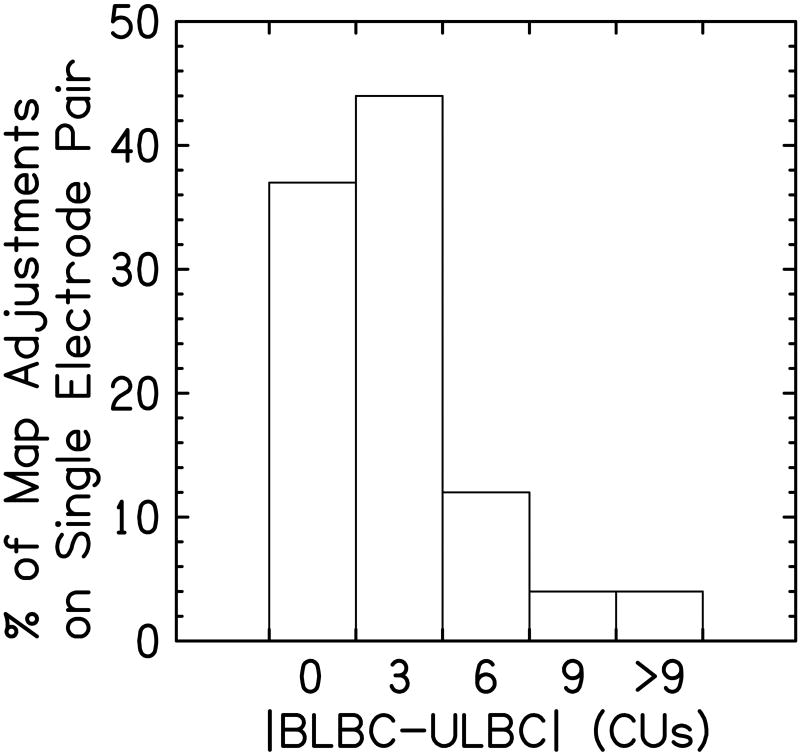

In this investigation, bilateral loudness balancing was generally achieved through relatively small adjustments to the ULBC levels. Figure 3 shows a bar graph indicating how frequently a given number of CUs was needed to change the unilaterally loudness-balanced levels to the bilaterally loudness-balanced levels. Here, small adjustments (≤ 3 CUs) were sufficient to achieve bilateral loudness balancing in 80% of instances. In the remaining instances, however, considerably larger adjustments were required, with approximately 8% of instances requiring C level adjustments ≥ 9 CUs.
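The tally behind these percentages can be sketched as follows. The function name `adjustment_summary` and the C levels in the example are hypothetical and are not data from the study; the sketch only assumes that each adjustment is the absolute difference between the BLBC and ULBC level of the adjusted electrode.

```python
def adjustment_summary(ulbc_levels, blbc_levels):
    """Return the percentage of small (<= 3 CU) and large (>= 9 CU) adjustments.

    Both arguments are lists of C levels in clinical current units (CU),
    one entry per adjusted electrode.
    """
    diffs = [abs(b - u) for u, b in zip(ulbc_levels, blbc_levels)]
    n = len(diffs)
    small = 100.0 * sum(d <= 3 for d in diffs) / n
    large = 100.0 * sum(d >= 9 for d in diffs) / n
    return small, large


# Hypothetical levels: adjustments of 1, 0, 3, 10, and 2 CU.
ulbc = [180, 175, 190, 200, 185]
blbc = [181, 175, 187, 210, 183]
print(adjustment_summary(ulbc, blbc))  # (80.0, 20.0)
```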

Figure 3.

Shown here is the percentage of map adjustments as a function of the difference between the bilaterally loudness-balanced C levels (BLBC) and the unilaterally loudness-balanced C levels (ULBC). The left-most bar indicates that no adjustment was needed between the ULBC and BLBC levels. Bars further to the right reflect progressively larger adjustments.

Experiment 1: Subjective Fusion

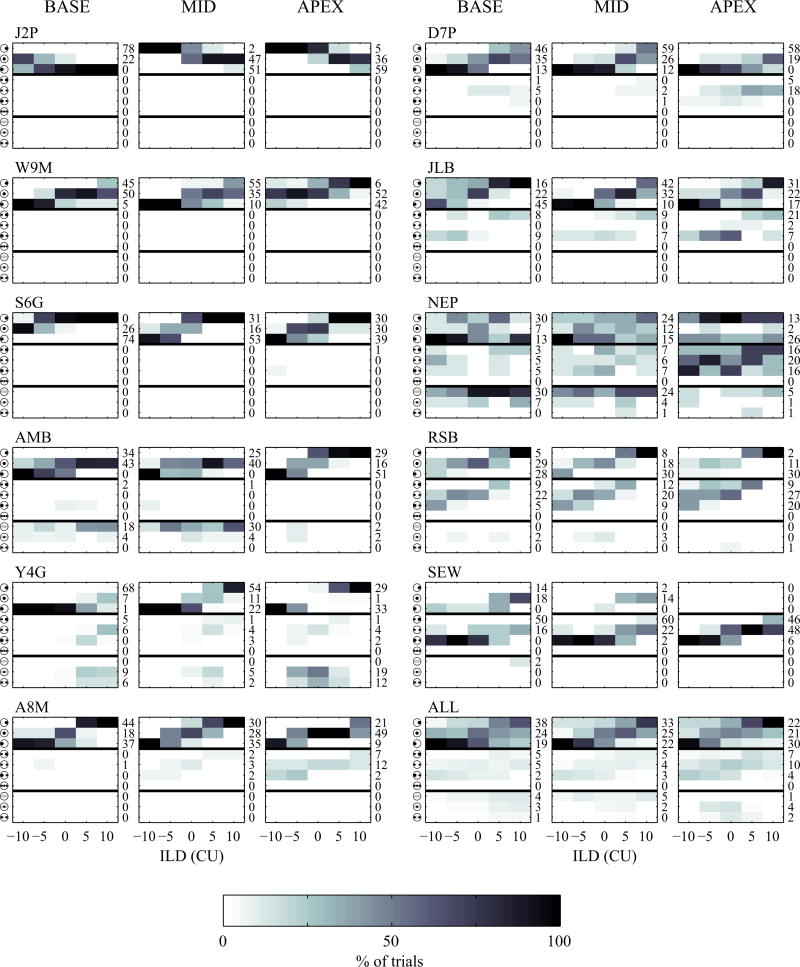

On average, results from the subjective fusion task indicate that only 77.8% of responses were perceived as being a single intracranial image. Figure 4 shows the perceived images for the 11 participants who completed the subjective fusion task for electrode pairs located in the apex, middle, and base of the cochlea. For each panel, rows 1–3 (top rows) indicate the participant perceived a single, fused sound. Rows 4–7 (middle) indicate that multiple sounds were perceived. Rows 8–10 indicate that a diffuse percept was reported. It is apparent that there are several instances in which participants do not perceive a single, fused sound, and the perceived location of many sounds is offset relative to the expected location. In addition, only 44.8% of responses were perceived as both fused and centered when BLBC levels were presented (i.e., the ILD was 0 CU). Thus, even after undergoing careful bilateral loudness balancing, there were a substantial number of responses that could be classified as sub-optimal for sound-source location, because they were not fused or were not perceived in the appropriate location.

Figure 4.

Shown here are the subjective fusion results as a function of interaural level difference (ILD). Each row in each panel represents a possible response. The first three rows represent punctate, fused responses in which the participant perceived a single, fused image. The next four rows correspond to responses with two or three compact images. The last three rows reflect responses with diffuse auditory image(s). Results are shown for each participant for electrodes located in the base, middle, and apex of the cochlea.

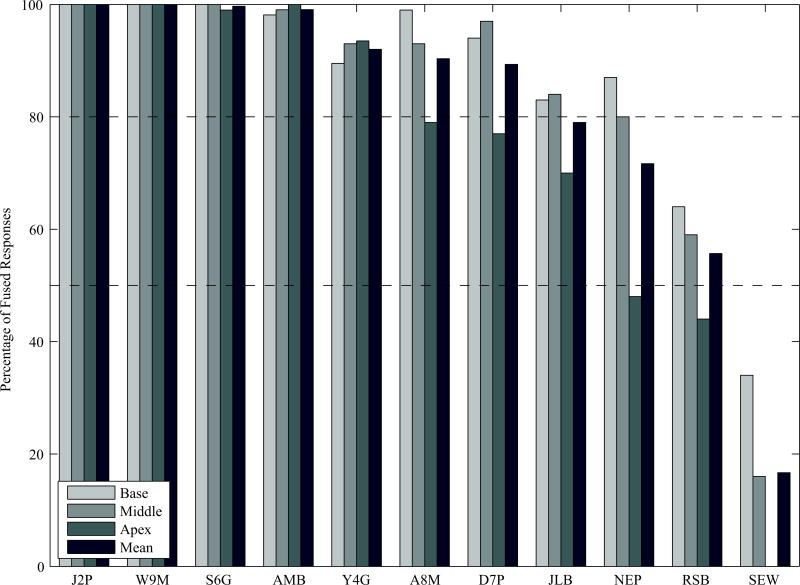

Figure 5 shows the percentage of fused responses reported by the 11 participants tested in this investigation; a mean percentage is provided, as well as the percentage of fused responses for each electrode position. There are two main points to be gleaned from these data. First, there was considerable variability between participants with regard to the percentage of responses that were perceived as being fused. Four of the 11 participants (J2P, W9P, S6G, and AMB) perceived virtually all stimuli as being fused, while another three participants (Y4G, A8M, and D7P) perceived over 80% of signals as being fused. In contrast, the remaining four participants reported perceiving a considerable number of non-fused responses. In addition, the number of fused responses was largely independent of the ILD as it ranged from −10 CU to +10 CU across all participants.

Figure 5.

Plotted here is the percentage of fused responses for each electrode pair in each participant. Within a given participant, the left bar corresponds to the most basal electrode pair, followed by the middle pair, the most apical pair, and finally the mean value across all electrode pairs.

Second, a smaller number of fused responses were reported for the apical electrode pair than for the electrode pairs stimulated in the base or middle of the array. Across all individuals tested here, twice as many ‘non-fused’ responses were reported for the most apical electrode pair relative to the middle and basal electrode pairs. Decreases of >10% for the apical electrode pair were observed in six participants, and of those participants who reported a between-electrode difference in the percentage of fused responses, all six reported the lowest percentage with the most apical electrode pair.

Experiment 2: Lateralization

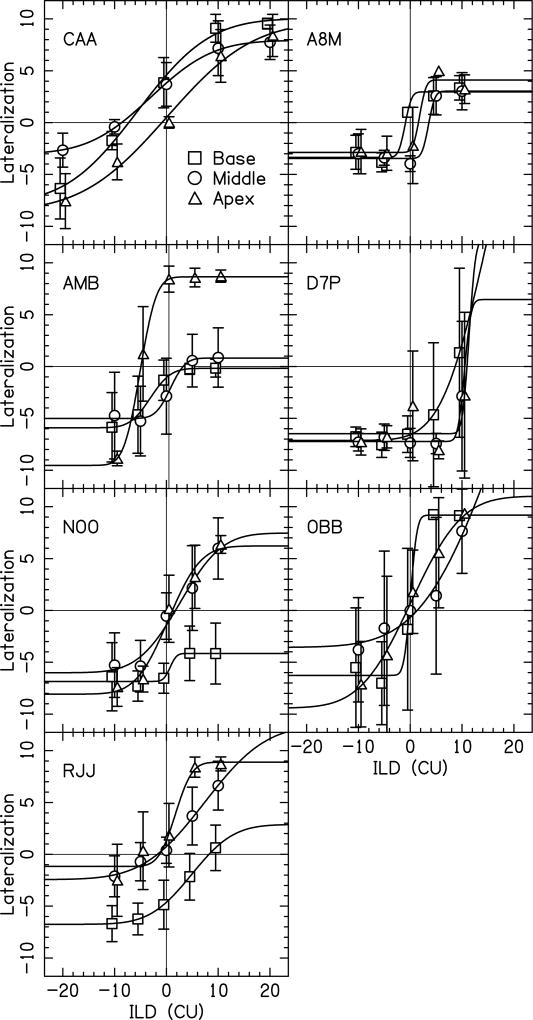

The results from this investigation indicate that, even after careful bilateral loudness balancing, bilateral CI recipients still perceive lateralization offsets that differ considerably from the physical ILD that was presented (in CU). Figures 6 and 7 show the perceived lateralization offset as a function of ILD for 13 participants tested here. There are two points to be derived from these figures.

Figure 6.

Shown here are data from the lateralization task for seven participants who completed this task only once. Each panel depicts data from a different participant. Within each panel, data are shown for the electrode pairs in the base (squares), middle (circles) and apex (triangles) of the cochlea. Data points show the mean lateralization for a condition. Error bars indicate ± one standard deviation from the mean. Fits to the data were performed using a cumulative normal distribution function.

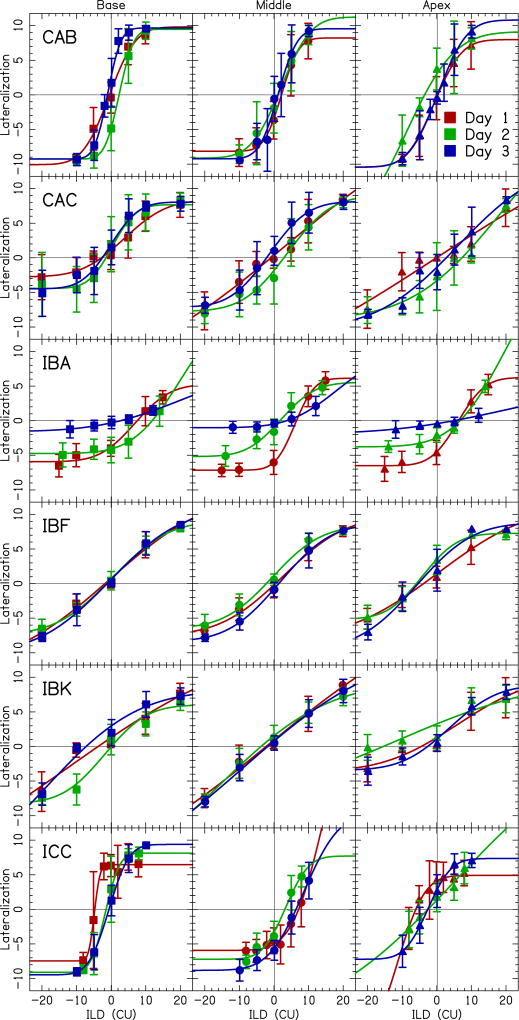

Figure 7.

Shown here are data for the lateralization task for the six participants who completed this task on three consecutive days. Within a given participant, the left panel corresponds to the basal electrode pair, the center panel corresponds to the middle pair, and the right panel corresponds to the apical electrode pair. Squares reflect data from the basal electrode pair, circles from the middle pair, and triangles from the apical pair. Different testing sessions are represented by different colors where red is day 1, green is day 2, and blue is day 3. Data points show the mean lateralization for a condition. Error bars indicate ± one standard deviation from the mean. Fits to the data were performed using a cumulative normal distribution function.

First, when presented with an ILD of 0 CU, 31% (20/65) of responses were perceived as having an intracranial image that was not centered within the head. An image was classified as not centered when its mean lateralization was more than one standard deviation away from zero lateralization (i.e., the center of the head). Figure 6 shows the data for the seven participants tested only once, while Figure 7 shows data for the six individuals tested in three different sessions; in both figures, non-centered images were observed with an ILD of 0 CU. Moreover, the perceived lateralization offsets sometimes differed for electrode pairs stimulated in different regions of the array (e.g., CAA, AMB, and NOO). Finally, the perceived lateralization offset varied considerably among individuals across the range of ILDs presented here.
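The centering criterion described in this paragraph can be expressed as a short check. This is a sketch under stated assumptions: the function name `is_centered` and the example responses are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not specify which form was used.

```python
import statistics


def is_centered(responses):
    """Return True if the mean lateralization lies within +/-1 SD of zero.

    `responses` are lateralization judgments at ILD = 0 CU on the
    -10 (left ear) to +10 (right ear) scale.
    """
    mean = statistics.mean(responses)
    sd = statistics.stdev(responses)  # sample SD (assumption)
    return abs(mean) <= sd


# Hypothetical responses for two conditions.
print(is_centered([-1, 0, 1, 2, -2, 0, 1, -1, 0, 0]))  # True
print(is_centered([4, 5, 6, 5, 4, 6, 5, 5, 4, 6]))     # False
```

With this criterion, a highly variable set of responses is more likely to be counted as centered, which is one reason a stricter offset measure is considered later in this section.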

The second key conclusion from Experiment 2 is that, for the six individuals tested across three consecutive days, perceived lateralization responses were generally consistent across testing sessions. This can be seen in the overlap amongst the curves representing different testing sessions, and in the observation that the shape of these curves was generally similar over time. IBA was the only individual who appeared to show a systematic change in perceived lateralization responses over time, with lateralization curves becoming progressively flatter with each successive testing session. Analysis of the BLBC levels for IBA showed a systematic increase in the right ear over the three days, which aided in obtaining a centered percept but appears to reduce IBA’s ability to lateralize with ILDs.

We reported that 31% of the responses were significantly off-center for an ILD of 0 CU; however, this may be an epiphenomenon of a task that is naturally highly variable. Therefore, to better quantify the percentage of responses that were reported as being significantly offset from the actual ILD, we calculated the inconsistency of the lateralization responses for the six participants who repeated the lateralization task over three consecutive days. The inconsistency could have been a result of different maps across days and/or different participant response patterns that are a result of different sensitivity to ILDs. To quantify the inconsistency, we calculated the standard deviation of the average lateralization responses measured across days. In other words, we calculated the standard deviation (SD) using

| (2) |

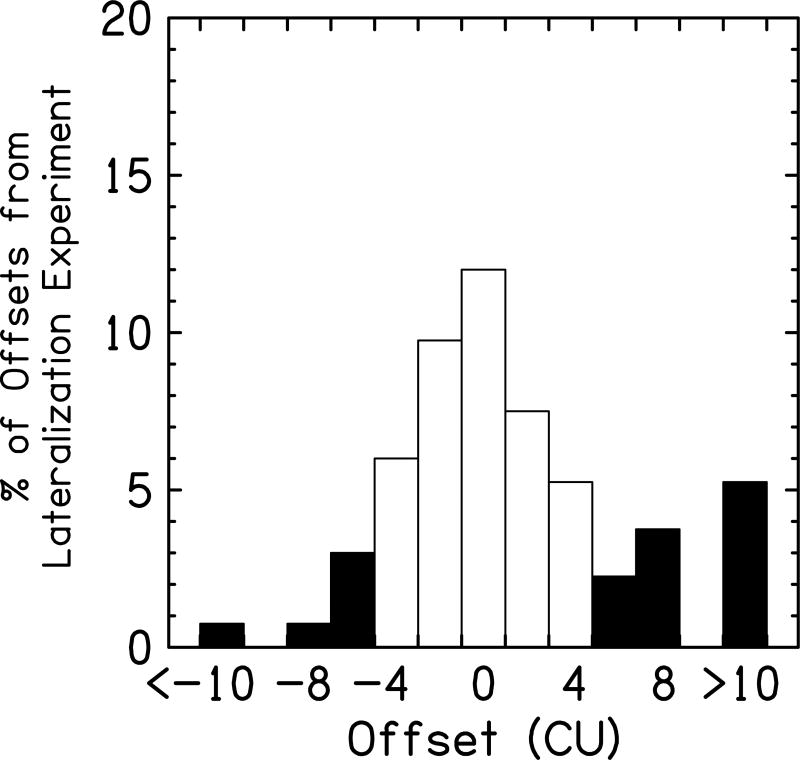

where P is the participant, D is the day, L is the average lateralization response at ILD = 0 CU, and L̄ is the lateralization response at ILD = 0 CU averaged over days. Using this technique, we measured an SD of 2.2 CU. This suggests that, on the whole, the perceived locations of the stimuli were largely consistent between sessions. Then, we determined the percentage of responses that were offset ≥5 CU from the ILD that was presented. These responses were classified as significant offsets, as they fall outside the 95% confidence interval (i.e., they are more than 2 standard deviations from the mean). We plotted the percentage of perceived responses that were offset from the actual ILD (see Fig. 8). Here, for the six participants tested across three consecutive days, 28% of responses were classified as significantly offset from the presented ILD, suggesting that more than a quarter of the bilaterally loudness-balanced electrode pairs were significantly offset from center using this far stricter criterion.
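The across-day consistency measure and the ≥5 CU offset criterion described above can be sketched in a few lines of Python. This is an illustrative sketch only. The pooling and normalization (dividing by the total number of participant-day values) are assumptions, because the exact form of Equation 2 is not reproduced in the text, and the function names and example values are hypothetical rather than study data.

```python
from math import sqrt


def across_day_sd(responses):
    """Pooled SD of per-day mean lateralization at ILD = 0 CU.

    responses maps a participant label to a list of per-day means (L).
    Each value is compared with that participant's mean over days (L-bar).
    Assumption: squared deviations are pooled over all participants and
    days and divided by the total count.
    """
    squared_devs = []
    for daily_means in responses.values():
        mean_over_days = sum(daily_means) / len(daily_means)
        squared_devs.extend((x - mean_over_days) ** 2 for x in daily_means)
    return sqrt(sum(squared_devs) / len(squared_devs))


def percent_offset(offsets_cu, criterion_cu=5.0):
    """Percentage of responses whose absolute offset meets the criterion."""
    flagged = sum(1 for o in offsets_cu if abs(o) >= criterion_cu)
    return 100.0 * flagged / len(offsets_cu)


# Hypothetical example values (not data from this study).
example = {"P1": [1.0, 3.0, 2.0], "P2": [-2.0, 0.0, 2.0]}
sd = across_day_sd(example)
share = percent_offset([0.0, 5.0, -6.0, 2.0])
```

With the reported SD of 2.2 CU, twice the SD is 4.4 CU, which is consistent with the 5 CU criterion used in the text.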

Figure 8.

Plotted here is the percentage of offsets measured in the lateralization experiment for all of the participants. White bars represent lateralization curves within two standard deviations of zero offset (i.e., <5 CUs), while the black bars reflect offsets greater than two standard deviations.

DISCUSSION

The primary result from these experiments is that, even after bilateral loudness balancing, bilateral CI recipients still regularly perceive stimuli that are either unfused (Figs. 4 and 5), perceived as offset from the assumed zero ILD (Figs. 6, 7, and 8), or both. Such percepts are generally thought to be suboptimal for sound-source localization, and their pervasiveness in bilateral CI recipients likely contributes to the numerous reports indicating that bilateral CI recipients have poorer sound-localization abilities than normal-hearing individuals. In the present case, it appears that such unfused and/or offset percepts cannot be easily ameliorated without significant changes to either the mapping of bilateral CIs, or to the signal processing of the speech processor(s) themselves, because unfused or offset percepts were readily observed even after careful bilateral loudness balancing.

It is noteworthy that in most instances, careful bilateral loudness balancing required relatively small adjustments to the ULBC levels when compared with levels obtained individually in each ear. Generally, clinical mapping procedures for bilateral implants consist of fitting each CI independently; a key component of this process is finding levels in each ear that are perceived as being maximally comfortable. When this process was expanded to include careful loudness balancing between each ear, we observed that only small adjustments to the ULBC levels were necessary (≤ 3 CUs) in 80% of instances (see Fig. 3). If we assume that optimal cues to sound-source location in bilateral CI recipients are contingent upon stimuli being perceived as equally loud in each ear, the observation that conventional fitting procedures and mapping procedures based on careful bilateral loudness balancing yielded largely equivalent ULBC and BLBC levels suggests that both types of fitting procedures will generally yield similar access to sound-localization cues. Conversely, in normal-hearing individuals the binaural system is extremely sensitive to small differences in time and intensity between each ear. Thus, we cannot rule out the possibility that even small differences in loudness percepts between each ear could be relevant for accurate encoding and perception of ILDs.

While most individuals required only small adjustments to reach bilateral loudness balance, there were some instances in Fig. 3 in which sizeable adjustments to the ULBC levels were necessary before achieving BLBC levels, despite each ear initially being fit to a comfortable level. While it is not entirely clear why this would occur, two possible explanations seem most plausible. First, some participants may prefer the sound quality in one ear vs. another, perhaps due to sizeable differences in speech perception scores in each ear. By this logic, some participants have become accustomed to listening to the ‘secondary’ ear at a lower level, or prefer that ear to be stimulated at a lower intensity than the ear they perceive as their ‘better’ ear. In this way, sounds could be perceived as comfortably loud in each ear, but may not be loudness balanced. An alternate possibility is that there may be a range of CUs in each ear that elicits a ‘comfortable’ loudness to the participant, and in some individuals this range may be considerably larger than others. By this logic, when measuring C levels in each ear independently, one could fall at different parts of this range, and thus have each ear be perceived as ‘comfortably loud’ while not being bilaterally loudness balanced.

While conventional fitting procedures for bilateral CIs generally yield percepts that are bilaterally loudness balanced, it is striking that on average 23% of responses in Figs. 4 and 5 were not perceived as being fused into a single intracranial image. Such ‘unfused’ responses were observed regularly in four of the eleven participants tested here. The incidence of such diplacusis is in stark contrast to the rates of 1–2% reported in the general population (Blom, 2010). Moreover, the percentage of ‘unfused’ responses observed with our CI recipients was 10% larger than that previously observed with normal-hearing individuals listening to pulse trains intended to simulate bilateral CI use (Goupell et al., 2013b). Taken together, these data are consistent with the idea that bilateral CI recipients sometimes have difficulties integrating information between ears.

It is unclear why the rates of non-fused images are greater in the bilateral CI population than in the general populace, but there are at least two explanations. First, an inability to fuse bilaterally-presented stimuli may stem from differences in the site of stimulation in each ear; such differences could arise from between-ear discrepancies in electrode insertion depth, or varying degrees of neural survival between ears. By this line of thought, when an individual has a between-ear mismatch in the site of stimulation to which they have not adapted, this individual would be less likely to fuse information from each ear into a single auditory image. Consistent with this account, when between-ear mismatches in the site of stimulation were imposed on bilateral CI recipients, these individuals were more likely to report an unfused intracranial image (Kan et al., 2013; Long et al., 2003; Poon et al., 2009). A similar result was observed with normal-hearing individuals who listened to a CI simulation with input signals that were both matched and mismatched in frequency to each ear (Goupell et al., 2013b). In the present investigation, we cannot confirm the presence or absence of between-ear mismatches in site of stimulation, as we do not have imaging data confirming or pitch-matching data approximating the electrode location. Taking data from our previous studies, we believe that the number-matched pairs had small pitch mismatches, as has been observed in other studies (Kan et al., 2015). However, the incidence of unfused responses suggests that there may have been an unadapted between-ear mismatch of some sort in four of the eleven individuals tested here.

Another possible explanation for why bilateral CI recipients cannot fuse bilateral information stems from the individual’s extent of bilateral experience. By this line of thought, bilateral fusion only occurs when the individual 1) has a normally-developed binaural system, as would be the case in individuals who lost their hearing well into adulthood, and 2) has been a user of bilateral CIs for enough time to enable them to learn to fuse the information in each ear. The importance of bilateral hearing experience has been noted on several occasions in both adult and pediatric bilateral CI recipients. In adults, ITD sensitivity was shown to improve with bilateral CI use in four sequentially-implanted individuals (Poon et al., 2009). In these individuals, shorter amounts of binaural deprivation prior to implantation were associated with faster acquisition of ITD sensitivity. In a different investigation, ITD sensitivity was observed in adults who were post-lingually, but not in those who were pre-lingually deafened (Litovsky et al., 2010). Here, it is worth noting that in this study both pre- and post-lingually deafened individuals were sensitive to ILD cues. In children, the effects of experience on bilateral CI use are well documented, as the ability to lateralize sound is improved when the child has used both CIs for a period of time (Asp et al., 2011; Grieco-Calub and Litovsky, 2010; Litovsky et al., 2006). Moreover, there is evidence indicating that auditory evoked potentials become more mature in sequentially-implanted children when the time between the first and second CI is short (Gordon et al., 2011, 2010) and that bilateral CI use can prevent cortical reorganization resulting from unilateral CI use (Gordon et al., 2013). Taken together, these data provide strong support for the idea that experience with binaural hearing plays a significant role in the perceptual abilities of individuals with bilateral CIs.

In the present study, participant NEP regularly reported multiple images and at the time of testing had characteristics that may be consistent with the bilateral experience hypothesis. For example, NEP lost hearing as a child, and was a long-term user of hearing aids and of bimodal hearing (CI + contralateral hearing aid) prior to implantation. Thus, this individual likely spent much of her life without consistent access to reliable binaural cues, as both hearing aids (Musa-Shufani et al., 2006) and CIs (Goupell et al., 2013a; Kan et al., 2013) can distort cues to binaural hearing. This lack of experience with “good” binaural cues for bilateral stimulation may then have contributed to her inability to fuse information from each ear. Conversely, it is also worth noting that J2P also lost her hearing at a very young age, but still perceived all signals as being fused, suggesting that having lost hearing at a young age does not necessarily mean that an individual will not fuse stimuli from each ear into a single image.

It is less clear at this time, however, why fused responses were consistently less common for the most apical electrode pair (see Fig. 5). Indirect evidence for a reduced ability to fuse signals in the apex of the cochlea may be inferred from data which show better performance for basal than apical electrode pairs on an ITD-discrimination task (Kan and Litovsky, 2014; Laback et al., 2014). If we assume that fusion is crucial for optimal performance on these tasks, then the observation that sometimes performance on these tasks is worse in the apex of the cochlea may be interpreted as indirect evidence for a reduced ability to fuse signals from each ear within this region of the cochlea. While this explanation has some merit, it should also be noted that other investigators have noted no difference between apical and basal electrode pairs on similar tasks (Litovsky et al., 2010; van Hoesel et al., 2009). Another possible explanation for the present data is that there may be a spatial mismatch between the two ears which is worse in the apex of the cochlea. However, this point remains speculative without appropriate imaging data.

Regardless of the precise mechanism responsible for the lack of fusion in these individuals, it is notable that these distortions to the spatial map were largely consistent across different testing sessions (see Fig. 7). For those participants tested on the lateralization task for three consecutive days, the standard deviation of responses across our participants was about 2 CUs, which is relatively small compared to the typical dynamic range of 30–40 CUs for a Cochlear CI user. Moreover, the general shape of these responses was largely consistent across sessions for five of six participants, with only participant IBA revealing a change in response pattern across different testing sessions. This result suggests that the distorted spatial maps observed here are unlikely to be an epiphenomenon, but rather reflect the perceptions elicited by controlled electrical stimulation in these participants.

Taken together, the present data suggest that even after bilateral loudness balancing, current fitting procedures for recipients of bilateral CIs may not elicit percepts that are optimal for sound-source localization. For example, in the present study, when individuals were presented with an ILD of 0 CU, a fused, centered image was only reported on 44.8% of trials. In contrast, normal-hearing participants, when presented with signals of the same frequency and intensity to each ear via headphones, almost universally hear a single intracranial image centered in the middle of the head.

One implication of these data is that, in the absence of new developments in signal processing for CIs, new mapping procedures may need to be developed for bilateral CI recipients to maximize the benefits of bilateral hearing. What is less clear is the type of CI mapping changes that would need to take place to enable optimal perception of bilateral cues for sound source location. One possibility would be to create a ‘localization’ map (Goupell et al., 2013a). While this mapping procedure is largely theoretical at this point, it is based on the concept of adjusting C levels in both ears in order to ensure that an image is perceived as centered when acoustic signals of equal intensity are presented to each ear. Further, the C levels in each ear could be adjusted to ensure that, with a change in ILD, the participant perceives an appropriate change in location. While this procedure has the potential to ensure that ILD cues for sound location are represented as faithfully as possible, there are some limitations to its implementation. First, such a procedure is likely to be too time-consuming for clinical use, at least with currently available procedures. Second, it would ideally need to occur at multiple stimulus levels, as the growth of loudness may differ between ears (Kirby et al., 2012), which means that an appropriate ‘localization map’ could vary with input signal level. Finally, the proposed procedure for a localization map may not facilitate fusing input from each ear into a single auditory image, as it cannot account for differences in electrode insertion depth, or neural survival.

A second possible fitting procedure for bilateral CIs which could facilitate fusion would be to create a ‘self-selected’ map. A self-selected map could be utilized if a clinician suspects that a given patient has a between-ear mismatch in site of stimulation to which they have not fully adapted. In this procedure, the frequency table could be adjusted until the individual reports that a given table maximizes bilateral speech intelligibility or integration [see (Fitzgerald et al., 2013) for a report on the feasibility of selecting frequency tables]. The assumption underlying this approach is that, if the individual selects a table in one ear that differs from the contralateral ear, then that individual may have a between-ear mismatch to which they have not fully adapted. Then, by adjusting the frequency table in the speech processor to that selected by the individual, it is possible that the two CIs would elicit percepts that are matched as closely as possible in each ear, thereby facilitating fusion. This approach was implemented in one of the participants here (D7P). With the initial protocol, this individual only reported a fused percept on ~85% of trials, with nearly 25% of responses reported as unfused for the E20 electrode pair. This individual selected a preferred frequency table in one ear (63–5188 Hz) that differed from the standard table (188-7938 Hz); the self-selected table was perceived by the individual as maximizing speech intelligibility, and ‘making the two ears blend together.’ After using the self-selected frequency table in that ear for one month, D7P repeated the subjective fusion task outlined in Experiment 1. Notably, in this test session all signals were perceived as fused regardless of which electrode was stimulated. Moreover, fusion was achieved with no change in speech-understanding ability. Thus, the ‘self-selected’ procedure has potential to facilitate fusion in bilateral CI recipients. 
However, as with the above-suggested ‘localization map,’ there are again some limitations with the self-selected procedure. First and most important, self-selection of a preferred frequency table requires custom software to be completed in a time-efficient manner; such software is not currently clinically available. Second, the self-selection procedure may not ensure that signals are perceived as having a location that is consistent with the physical ILD.

While the present data suggest that current mapping procedures for bilateral CIs may introduce spatial distortions that cannot be readily fixed by bilateral loudness balancing, it is worth noting that these data were obtained using signals that are atypical with regard to what a given bilateral CI user hears in his or her daily life. For example, the signals here consisted of stimulation of single-electrode pairs. These differ vastly from the complex signals widely encountered in daily living, which likely stimulate multiple electrodes. This raises the possibility that the results observed here may not be fully generalizable for daily CI use. For example, it is possible that complex signals which stimulate multiple electrodes are more readily fused into a single intracranial image than stimulation of single-electrode pairs. While this may be the case, it is important to note that in individuals with normal hearing, signals similar to those utilized here are both fused, and change in perceived location in a manner that is consistent with the presented ILD. In contrast, the present data suggest that even careful bilateral-loudness balancing did not ensure that bilateral CI recipients would perceive fused percepts at a location consistent with the presented ILD.

In summary, the present data suggest that mapping procedures for bilateral CIs utilizing bilateral loudness balancing may yield a distorted spatial map. Even after careful loudness balancing, simultaneous stimulation of electrode pairs elicited percepts that were often unfused, perceived as offset from the presented ILD, or both. Thus, while conventional fitting procedures are sufficient for bilateral CI recipients to receive many of the benefits to bilateral hearing, they may not be sufficient to receive the maximal benefits of bilateral hearing. To the extent that optimal bilateral performance is possible in bilateral CI recipients, these data suggest that either significant advances in signal processing are required for CI speech processors, or new procedures need to be developed to optimize the fitting of bilateral CIs.

Acknowledgments

We would like to thank Cochlear Ltd. for providing the testing equipment and technical support. We would also like to thank Tanvi Thakkar, Olga Stakhovskaya, Kyle Easter, and Katelyn Glassman for help in data collection. This work was supported by NIH Grant R00 DC09459 (M. B. Fitzgerald), R00 DC010206 (M. J. Goupell), P30 DC004664 (Center of Comparative Evolutionary Biology of Hearing core grant), R01 DC003083 (R. Y. Litovsky funded A. Kan), and P30 HD03352 (Waisman Center core grant).

This work met all requirements for ethical research put forth by the IRBs of the New York University School of Medicine, Maryland University, and the University of Wisconsin.

Footnotes

We have no other conflicts of interest.

In an auditory-image centering approach, the audiologist could stimulate both ears simultaneously, and ask the patient where in their head a given image is perceived. Then, the current level provided to one ear could be adjusted until the image is perceived as centered in the patient’s head. In this approach, the ability to center assumes that the patient has fused both ears into a single auditory image, as centering is likely to be impossible, or largely meaningless, if the patient does not perceive a single image.

In this instance we have 4 free parameters to fit 5 points. In the case of multiple sources, the secondary and tertiary responses were ignored. While suboptimal in some respects, this function has been previously used to fit similar data. As a result the shape of the underlying function is well understood. In this instance we think our approach is appropriate given 1) the typically sigmoidal shape of the data (justifying the functional form), 2) the different perceived extents of laterality (free parameter A), 3) the different slopes of the function that might be related to different dynamic ranges across subjects (free parameter σ), and 4) the fact that the offsets are the major concern in this work (the two free parameters µx and µy).
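As an illustration of how the four free parameters named in this footnote could enter a cumulative-normal fit, a minimal Python sketch follows. The exact functional form used in the study is not given in this section, so the parameterization below (a cumulative normal rescaled to span ±A and shifted by µx and µy) is an assumption, and the function name and example values are hypothetical.

```python
from math import erf, sqrt


def lateralization_curve(ild_cu, A, sigma, mu_x, mu_y):
    """Assumed four-parameter cumulative-normal lateralization function.

    A     : perceived extent of laterality (half-range of the curve)
    sigma : slope parameter (larger sigma gives a shallower curve)
    mu_x  : horizontal offset, in CU, along the ILD axis
    mu_y  : vertical offset of the perceived lateralization
    """
    # Standard normal CDF evaluated at the shifted, scaled ILD.
    phi = 0.5 * (1.0 + erf((ild_cu - mu_x) / (sigma * sqrt(2.0))))
    # Rescale from (0, 1) to (-A, +A), then shift vertically.
    return A * (2.0 * phi - 1.0) + mu_y


# Hypothetical parameter values, chosen only to show the behavior.
at_offset = lateralization_curve(3.0, A=8.0, sigma=5.0, mu_x=3.0, mu_y=1.0)
far_right = lateralization_curve(1000.0, A=8.0, sigma=5.0, mu_x=3.0, mu_y=1.0)
```

Under this assumed form, the curve passes through µy when the ILD equals µx and saturates at µy ± A for large ILDs, which is why the two offset parameters capture how far a percept sits from the presented ILD.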

References

- Aronoff JM, Freed DJ, Fisher LM, Pal I, Soli SD. Cochlear implant patients’ localization using interaural level differences exceeds that of untrained normal hearing listeners. J. Acoust. Soc. Am. 2012;131:EL382–387. doi: 10.1121/1.3699017.

- Aronoff JM, Yoon Y, Freed DJ, Vermiglio AJ, Pal I, Soli SD. The use of interaural time and level difference cues by bilateral cochlear implant users. J. Acoust. Soc. Am. 2010;127:EL87. doi: 10.1121/1.3298451.

- Asp F, Eskilsson G, Berninger E. Horizontal sound localization in children with bilateral cochlear implants: effects of auditory experience and age at implantation. Otol. Neurotol. Off. Publ. Am. Otol. Soc. Am. Neurotol. Soc. Eur. Acad. Otol. Neurotol. 2011;32:558–564. doi: 10.1097/MAO.0b013e318218cfbd.

- Blauert J. Spatial Hearing: The Psychophysics of Human Sound Localization. Massachusetts Institute of Technology; 1997.

- Blom JD. A Dictionary of Hallucinations. Springer; New York: 2010.

- Bronkhorst AW, Plomp R. The effect of head-induced interaural time and level differences on speech intelligibility in noise. J. Acoust. Soc. Am. 1988;83:1508–1516. doi: 10.1121/1.395906.

- Buss E, Pillsbury HC, Buchman CA, Pillsbury CH, Clark MS, Haynes DS, Labadie RF, Amberg S, Roland PS, Kruger P, et al. Multicenter US bilateral MED-EL cochlear implantation study: speech perception over the first year of use. Ear Hear. 2008;29:20–32. doi: 10.1097/AUD.0b013e31815d7467.

- Culling JF, Jelfs S, Talbert A, Grange JA, Backhouse SS. The benefit of bilateral versus unilateral cochlear implantation to speech intelligibility in noise. Ear Hear. 2012;33:673–682. doi: 10.1097/AUD.0b013e3182587356.

- Dorman MF, Loiselle L, Stohl J, Yost WA, Spahr A, Brown C, Cook S. Interaural Level Differences and Sound Source Localization for Bilateral Cochlear Implant Patients. Ear Hear. 2014. doi: 10.1097/AUD.0000000000000057.

- Dunn CC, Tyler RS, Oakley S, Gantz BJ, Noble W. Comparison of speech recognition and localization performance in bilateral and unilateral cochlear implant users matched on duration of deafness and age at implantation. Ear Hear. 2008;29:352–359. doi: 10.1097/AUD.0b013e318167b870.

- Fitzgerald MB, Sagi E, Morbiwala TA, Tan C-T, Svirsky MA. Feasibility of real-time selection of frequency tables in an acoustic simulation of a cochlear implant. Ear Hear. 2013;34:763–772. doi: 10.1097/AUD.0b013e3182967534.

- Gordon KA, Jiwani S, Papsin BC. What is the optimal timing for bilateral cochlear implantation in children? Cochlear Implants Int. 2011;(12 Suppl 2):S8–14. doi: 10.1179/146701011X13074645127199.

- Gordon KA, Wong DDE, Papsin BC. Cortical function in children receiving bilateral cochlear implants simultaneously or after a period of interimplant delay. Otol. Neurotol. Off. Publ. Am. Otol. Soc. Am. Neurotol. Soc. Eur. Acad. Otol. Neurotol. 2010;31:1293–1299. doi: 10.1097/MAO.0b013e3181e8f965.

- Gordon KA, Wong DDE, Papsin BC. Bilateral input protects the cortex from unilaterally-driven reorganization in children who are deaf. Brain J. Neurol. 2013;136:1609–1625. doi: 10.1093/brain/awt052.

- Goupell MJ, Kan A, Litovsky RY. Mapping procedures can produce non-centered auditory images in bilateral cochlear implantees. J. Acoust. Soc. Am. 2013a;133:EL101–107. doi: 10.1121/1.4776772.

- Goupell MJ, Stoelb C, Kan A, Litovsky RY. Effect of mismatched place-of-stimulation on the salience of binaural cues in conditions that simulate bilateral cochlear-implant listening. J. Acoust. Soc. Am. 2013b;133:2272–2287. doi: 10.1121/1.4792936.

- Grantham DW, Ashmead DH, Ricketts TA, Haynes DS, Labadie RF. Interaural time and level difference thresholds for acoustically presented signals in post-lingually deafened adults fitted with bilateral cochlear implants using CIS+ processing. Ear Hear. 2008;29:33–44. doi: 10.1097/AUD.0b013e31815d636f.

- Grieco-Calub TM, Litovsky RY. Sound localization skills in children who use bilateral cochlear implants and in children with normal acoustic hearing. Ear Hear. 2010;31:645–656. doi: 10.1097/AUD.0b013e3181e50a1d.

- Hawley ML, Litovsky RY, Culling JF. The benefit of binaural hearing in a cocktail party: Effect of location and type of interferer. J. Acoust. Soc. Am. 2004;115:833. doi: 10.1121/1.1639908.

- Kan A, Litovsky RY. Binaural hearing with electrical stimulation. Hear. Res. 2014. doi: 10.1016/j.heares.2014.08.005.

- Kan A, Litovsky RY, Goupell MJ. Effects of interaural pitch-matching and auditory image centering on binaural sensitivity in cochlear-implant users. Ear Hear. 2015. doi: 10.1097/AUD.0000000000000135. [e-pub ahead of print]

- Kan A, Stoelb C, Litovsky RY, Goupell MJ. Effect of mismatched place-of-stimulation on binaural fusion and lateralization in bilateral cochlear-implant users. J. Acoust. Soc. Am. 2013;134:2923–2936. doi: 10.1121/1.4820889.

- Kerber S, Seeber BU. Sound Localization in Noise by Normal-Hearing Listeners and Cochlear Implant Users. Ear Hear. 2012;33:445–457. doi: 10.1097/AUD.0b013e318257607b.

- Kirby B, Brown C, Abbas P, Etler C, O’Brien S. Relationships Between Electrically Evoked Potentials and Loudness Growth in Bilateral Cochlear Implant Users. Ear Hear. 2012;33:389–398. doi: 10.1097/AUD.0b013e318239adb8.

- Laback B, Pok S-M, Baumgartner W-D, Deutsch WA, Schmid K. Sensitivity to Interaural Level and Envelope Time Differences of Two Bilateral Cochlear Implant Listeners Using Clinical Sound Processors. Ear Hear. 2004;25:488–500. doi: 10.1097/01.aud.0000145124.85517.e8.

- Laback B, Egger K, Majdak P. Perception and coding of interaural time differences with bilateral cochlear implants. Hear. Res. 2014. doi: 10.1016/j.heares.2014.10.004. [e-pub ahead of print]

- Litovsky R, Parkinson A, Arcaroli J, Sammeth C. Simultaneous Bilateral Cochlear Implantation in Adults: A Multicenter Clinical Study. Ear Hear. 2006;27:714–731. doi: 10.1097/01.aud.0000246816.50820.42.

- Litovsky RY, Goupell MJ, Godar S, Grieco-Calub T, Jones GL, Garadat SN, Agrawal S, Kan A, Todd A, Hess C, Misurelli S. Studies on Bilateral Cochlear Implants at the University of Wisconsin Binaural Hearing and Speech Lab. J. Am. Acad. Audiol. 2012. doi: 10.3766/jaaa.23.6.9.

- Litovsky RY, Jones GL, Agrawal S, van Hoesel R. Effect of age at onset of deafness on binaural sensitivity in electric hearing in humans. J. Acoust. Soc. Am. 2010;127:400. doi: 10.1121/1.3257546.

- Litovsky RY, Parkinson A, Arcaroli J. Spatial Hearing and Speech Intelligibility in Bilateral Cochlear Implant Users. Ear Hear. 2009;30:419–431. doi: 10.1097/AUD.0b013e3181a165be.

- Loizou PC, Hu Y, Litovsky R, Yu G, Peters R, Lake J, Roland P. Speech recognition by bilateral cochlear implant users in a cocktail-party setting. J. Acoust. Soc. Am. 2009;125:372. doi: 10.1121/1.3036175.

- Long CJ, Eddington DK, Colburn HS, Rabinowitz WM. Binaural sensitivity as a function of interaural electrode position with a bilateral cochlear implant user. J. Acoust. Soc. Am. 2003;114:1565–1574. doi: 10.1121/1.1603765.

- Majdak P, Goupell MJ, Laback B. Two-dimensional localization of virtual sound sources in cochlear-implant listeners. Ear Hear. 2011;32:198–208. doi: 10.1097/AUD.0b013e3181f4dfe9.

- Middlebrooks JC, Green DM. Sound localization by human listeners. Annu. Rev. Psychol. 1991;42:135–159. doi: 10.1146/annurev.ps.42.020191.001031.

- Müller J, Schon F, Helms J. Speech understanding in quiet and noise in bilateral users of the MED-EL COMBI 40/40+ cochlear implant system. Ear Hear. 2002;23:198–206. doi: 10.1097/00003446-200206000-00004.

- Musa-Shufani S, Walger M, von Wedel H, Meister H. Influence of dynamic compression on directional hearing in the horizontal plane. Ear Hear. 2006;27:279–285. doi: 10.1097/01.aud.0000215972.68797.5e.

- Poon BB, Eddington DK, Noel V, Colburn HS. Sensitivity to interaural time difference with bilateral cochlear implants: Development over time and effect of interaural electrode spacing. J. Acoust. Soc. Am. 2009;126:806–815. doi: 10.1121/1.3158821.

- Schleich P, Nopp P, D’Haese P. Head Shadow, Squelch, and Summation Effects in Bilateral Users of the MED-EL COMBI 40/40+ Cochlear Implant. Ear Hear. 2004;25:197–204. doi: 10.1097/01.AUD.0000130792.43315.97.

- Seeber BU, Fastl H. Localization cues with bilateral cochlear implants. J. Acoust. Soc. Am. 2008;123:1030. doi: 10.1121/1.2821965.

- Tyler RS, Gantz BJ, Rubinstein JT, Wilson BS, Parkinson AJ, Wolaver A, Preece JP, Witt S, Lowder MW. Three-month results with bilateral cochlear implants. Ear Hear. 2002;23:80S–89S. doi: 10.1097/00003446-200202001-00010.

- Van Hoesel R, Böhm M, Pesch J, Vandali A, Battmer RD, Lenarz T. Binaural speech unmasking and localization in noise with bilateral cochlear implants using envelope and fine-timing based strategies. J. Acoust. Soc. Am. 2008;123:2249–2263. doi: 10.1121/1.2875229.

- Van Hoesel RJM, Jones GL, Litovsky RY. Interaural time-delay sensitivity in bilateral cochlear implant users: effects of pulse rate, modulation rate, and place of stimulation. J. Assoc. Res. Otolaryngol. JARO. 2009;10:557–567. doi: 10.1007/s10162-009-0175-x.

- Van Hoesel RJM, Tyler RS. Speech perception, localization, and lateralization with bilateral cochlear implants. J. Acoust. Soc. Am. 2003;113:1617. doi: 10.1121/1.1539520.