Abstract

One of the trends in collaborative learning is using mobile devices for supporting the process and products of collaboration, which has been forming the field of mobile-computer-supported collaborative learning (mCSCL). Although mobile devices have become valuable collaborative learning tools, evaluative evidence for their substantial contributions to collaborative learning is still scarce. The present meta-analysis, which included 48 peer-reviewed journal articles and doctoral dissertations written over a 16-year period (2000–2015) involving 5,294 participants, revealed that mCSCL has produced meaningful improvements for collaborative learning, with an overall mean effect size of 0.516. Moderator variables, such as domain subject, group size, teaching method, intervention duration, and reward method were related to different effect sizes. The results provided implications for future research and practice, such as suggestions on how to appropriately use the functionalities of mobile devices, how to best leverage mCSCL through effective group learning mechanisms, and what outcome variables should be included in future studies to fully elucidate the process and products of mCSCL.

Keywords: collaborative learning, critical synthesis, mCSCL, meta-analysis, mobile device

Computer-supported collaborative learning (CSCL), which was based on and transformed from cooperative or collaborative learning (CL), aims to employ computer technology to facilitate collaboration, discussion, and exchanges among peers or between students and teachers and help achieve the goal of knowledge sharing and knowledge creation (Kirschner, Beers, Boshuizen, & Gijselaers, 2008; Koschmann, 1996; Stahl, Koschmann, & Suthers, 2006). Kreijns, Kirschner, and Jochems (2002) proposed that there were five stages in the CSCL learning process: copresence, awareness, communication, collaboration, and coordination. This five-stage model described the core tasks of CSCL and the essential elements for the success of CSCL. CSCL retains the advantages of adaptiveness and flexibility of individual-based computer-based learning while avoiding the disadvantages of insufficient learner interaction and lack of feedback (Gay, Stefanone, Grace-Martin, & Hembrooke, 2001; Kreijns, Kirschner, & Jochems, 2003). Recent research syntheses have recognized the positive effects of CSCL and also pointed out the insufficiencies of CSCL (e.g., Gress, Fior, Hadwin, & Winne, 2010; Kreijns et al., 2003; Noroozi, Weinberger, Biemans, Mulder, & Chizari, 2012; Resta & Laferrière, 2007; Soller, Martínez, Jermann, & Muehlenbrock, 2005).

The emergence of wireless technology and a variety of mobile-device innovations have received a great deal of attention in the field of education (Sung, Chang, & Liu, 2016; Sung, Chang, & Yang, 2015). Mobile devices offer features of portability, social connectivity, context sensitivity, and individuality, which desktop computers might not offer (Chinnery, 2006; Gao, Liu, & Paas, 2016; Lan & Lin, 2016; Song, 2014; Zheng & Yu, 2016). Mobile devices have made learning movable, real-time, collaborative, and seamless (Kukulska-Hulme, 2009; Wong & Looi, 2011), which may be called “mobile learning” in general. The unique properties of mobile devices have also been incorporated into CL, forming an emerging research subfield of CSCL called mobile-computer-supported collaborative learning (mCSCL).

Mobile-Computer-Supported Collaborative Learning

Paraphrasing Resta and Laferrière (2007), mCSCL can be defined as using mobile devices to enhance collaborative and cooperative learning that involves the use of small groups in which students work together to maximize their own and each other’s learning. The essential element of mCSCL is that it must simultaneously integrate the characteristics of mobile devices and cooperative learning in teaching activities (Zurita & Nussbaum, 2007). In addition to using the mobile device as a tool or platform for CSCL, researchers have claimed that mCSCL can increase a learner’s active participation in activities by providing more opportunities for instant interaction between a learner and his or her peers via the use of mobile devices (Patten, Sánchez, & Tangney, 2006; Ryu & Parsons, 2012). The powerful computational capabilities of current mobile devices have allowed exchanges between group members to become more efficient (Asabere, 2012; L. Johnson, Levine, Smith, & Stone, 2010). The portability and mobility of mobile devices make it possible for every CL group member to communicate in different locations and at different times. The individualized interface and functions, which allow the sharing of the work process and products, empower the coordination between learners and their interactions in their group (Song, 2014).

Despite the optimistic claims about mCSCL, the empirical effects of mCSCL have been equivocal. For example, Z. C. Lin (2013) found that the impact of an mCSCL curriculum on the learning achievement of nursing students far exceeded that of mobile devices that are used simply for personal learning. Similarly, Uzunboylu, Cavus, and Ercag (2009) indicated that mCSCL produces better learning attitudes among college students than do lectures. Sánchez and Olivares (2011) found that applying mCSCL-based teaching to junior high school courses enhanced students’ leadership, willingness and sense of responsibility to cooperate with their peers, and problem-solving ability, and Lan, Sung, and Chang (2007) observed that applying mCSCL to a group-based reading course was effective at improving interaction and motivation among group members. In contrast, Jones, Antonenko, and Greenwood (2012) found that mCSCL and personal mobile-based learning produced similar results in science learning, and Delgado-Almonte, Andreu, and Pedraja-Rejas (2010) found that mCSCL and learning through conventional lectures had similar impacts on the test performance of learners of engineering. Persky and Pollack (2010) studied the application of mCSCL in college medical courses and found that mCSCL and personal mobile-based learning did not present any significant difference in knowledge application and integration.

The Activity Theory–Based Framework for mCSCL

Just as there is ambiguity in the effects of mCSCL, there are diverse approaches to mCSCL studies, which vary in their emphasis on methodology, participants, tools, processes, products, and so on. Previous research has also indicated that several factors influence the effects of mCSCL, such as group composition (e.g., Organisation for Economic Co-operation and Development, 2013), interactions between group members (e.g., Greiff, Holt, & Funke, 2013; Hsu & Ching, 2013; Organisation for Economic Co-operation and Development, 2013), implementation setting (e.g., Song, 2014), hardware and software used (e.g., Asabere, 2012; Hsu & Ching, 2013; Song, 2014), and intervention duration (e.g., Song, 2014). To elucidate the dynamics of these factors, the present research uses activity theory (AT) as a model for illustrating the essential components of mCSCL. Based on the original AT system of Engestrom (1987) and the revised AT of Sharples, Taylor, and Vavoula (2007) and Zurita and Nussbaum (2007) for mobile learning and mCSCL, we propose a notional AT-mCSCL framework for further illustrating the mediating effects of some moderator variables between tools (i.e., the mobile devices) and the objective (i.e., the outcomes) of mCSCL research.

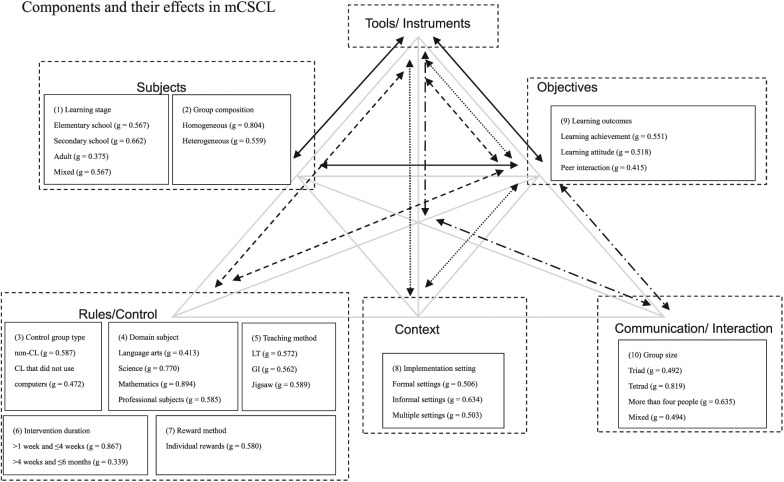

The AT-mCSCL framework includes the following six primary elements (see Figure 1):

Figure 1.

The activity theory–based mobile-computer-supported collaborative learning framework. Adapted from Engestrom (1987, p. 136).

Tools/instruments: Any tools or instruments that may be used in an mCSCL activity, which may be artifacts (e.g., hardware and software) or learning resources (e.g., the distributed intelligence from peers or tutors) for mCSCL

Subjects: All the people who may participate in mCSCL, such as students across different ages, teachers with different levels of teaching expertise, or group members or competitors

Objectives: The goals of mCSCL, such as knowledge creation, problem solving, sharing/interaction, or promoting learning motivation

Rules/control: The standards or rules that regulate the mCSCL activity, such as the procedure for teaching scripts designed for mCSCL, the rules for rewards, cooperative methods, or curriculum designated (e.g., intervention duration, domain subject, and control group type) for mCSCL projects

Context: The physical (e.g., formal learning in classrooms or informal learning in museums), psychological (e.g., students’ perception in heterogeneous groups), or social (e.g., competitive ambience among groups) circumstances in which mCSCL is applied

Communication/interaction: How the users and mCSCL technologies interact (e.g., how the technological affordances support students’ argumentation) or the communication modes among the mCSCL learners (e.g., face-to-face vs. distance learning)

The six elements in the framework can be used as a foundation for illustrating the content of mCSCL or general mobile learning studies (e.g., Frohberg, Goth, & Schwabe, 2009; Zurita & Nussbaum, 2007).

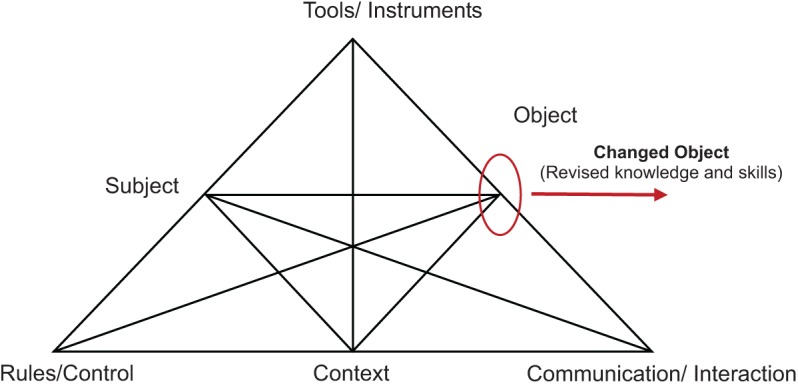

Moreover, the interactive connections among the six elements also establish a dynamic system for analyzing mCSCL activities and research. For example, the upper part of the triangle in Figure 2 contains the tools/instruments, subjects, and objectives, which illustrate the mediating effects of different subjects on learning outcomes. The tools/instruments–context–objectives triangle may illustrate the mediating effects of learning environments on learning results, the tools/instruments–rules/control–objectives triangle may illustrate the mediating effects of rules used on learning outcomes, and the tools/instruments–communication/interaction–objectives triangle may illustrate the mediating effects of different communication/interaction types on learning outcomes. In the present study, we used the AT-mCSCL framework along with the findings of previous research to choose moderating variables (e.g., the subjects, rules/control, context, and communication/interaction), which may affect the learning outcomes (i.e., the objectives) of mCSCL studies. More details are presented in the Coding Framework section.

Figure 2.

The moderating effects of components in the activity theory–based mobile-computer-supported collaborative learning framework. CL = collaborative learning; LT = learning together; GI = group investigation.

Review Studies on mCSCL

Research reviews on the effects of mCSCL are scarce. Asabere (2012) investigated mCSCL-related literature through a qualitative method and concluded that mCSCL is superior to CSCL in terms of mobility and instantaneity of feedback and that mCSCL also has a positive impact on interactions among learners but not necessarily on learners’ higher order thinking. Asabere also proposed that the quality of the hardware and software used for mCSCL significantly influences the interaction and communication among learners. Hsu and Ching (2013) concluded on the basis of their qualitative review that mCSCL is effective at improving the sense of engagement and communication efficiency of learners and enhancing students’ learning performance. Song (2014) found that most mCSCL studies suffered from the limitation of short teaching duration, which might overestimate the teaching results due to the influence of the novelty effect. She also pointed out that the effects of mCSCL might be affected by moderating variables such as learning stages, intervention duration, and domain subjects.

The above-described reviews suffer from two major shortcomings. First, they use a qualitative approach, which may be able to describe and synthesize the status quo of mCSCL but does little to evaluate the actual effectiveness of mCSCL; therefore, whether or not mCSCL benefits users more than other pedagogies remains inconclusive. Second, most of the qualitative review studies were not able to systematically address the influences of various moderator variables on the effects of mCSCL, which makes it difficult to provide a complete perspective on mCSCL application and gauge the problems encountered.

Purposes of the Present Research

Based on the limitations of the current review research on mCSCL and the fact that there is a lack of meta-analyses about mCSCL, this study has the following goals: (a) to provide statistical information about the applications of mCSCL studies in a systematic way by discussing moderator variables, such as independent variables/control group types, dependent variables/learning outcomes, learning stage of participants, domain subject, group size, group composition, teaching method, intervention duration, implementation setting, and reward method; (b) to determine the overall effects of mCSCL studies on learning performance; (c) to compare the effect size (ES) of the various moderator variables; and (d) to provide suggestions for follow-up mCSCL research and practices. The specific research questions addressed are as follows:

What is the overall effect of mCSCL research on learning performance in terms of learning achievement, learning attitude, and peer interaction?

Are there different ESs when mCSCL groups are contrasted with different control groups such as noncooperative groups, cooperative groups that did not use computers, and CSCL groups that did not use mobile devices?

Do mCSCL programs produce different effects for different types of learning outcomes such as learning achievement, learning attitude, and peer interaction?

Do mCSCL programs produce different effects for learners of different learning stages?

Do mCSCL programs produce different effects for different domain subjects?

Do mCSCL effects vary with different group sizes of participants in programs?

Will the homogeneous or heterogeneous composition of groups affect mCSCL effects?

Do different teaching methods implemented in mCSCL produce different effects?

Do different intervention durations of mCSCL produce different effects?

Do mCSCL activities implemented in different settings produce different effects?

Do mCSCL effects vary with different reward methods?

Method

Data Source and Searching Strategy

Several reviews about mCSCL have indicated that studies related to mobile and ubiquitous learning or mCSCL were published in academic journals only after 2000 (e.g., Hsu & Ching, 2013; Hwang & Tsai, 2011; Song, 2014). Therefore, the present study set the period for searching the mCSCL articles between January 1, 2000, and December 31, 2015. The reference searching procedure proposed by Cooper (2010) was used to collect mCSCL-related references.

We conducted searches of electronic databases, manually searched across important publications, and also searched for relevant cited references in the identified research articles. The primary data sources for the study included journal articles, conference papers, and doctoral dissertations indexed in the Web of Knowledge Social Sciences Citation Index or in the ERIC database. Additionally, because of the limited language competence of the authors, only articles written in English were included. Based on the key concepts and keywords of mobile learning, CSCL, or mCSCL in previous reviews (e.g., Frohberg et al., 2009; Hsu & Ching, 2013; Song, 2014), the following two sets of keywords were used: (a) keywords related to mobile technology, including “mobile,” “wireless,” “ubiquitous,” “wearable,” “portable,” “handheld,” “mobile phone,” “PDA,” “palmtop,” “Web pad,” “tablet PC,” “tablet computer,” “laptop,” “e-book,” “digital pen,” and “clickers,” and (b) keywords related to CL, including “cooperative learning,” “collaborative learning,” “small group,” “peer learning,” and “group learning.”

We searched the titles, abstracts, keywords, and topics of each article. For the search, the keywords in each set were first connected by a Boolean OR operator within the respective set, and a Boolean AND operator was subsequently used to combine keywords across the two sets in order to achieve the maximum number of mCSCL-related combinations. This study also examined the search results to find out which journals have published most of the mCSCL-related articles. The journals that published more than 20 mCSCL-related articles during the 2000–2015 period, including the Australian Journal of Educational Technology, British Journal of Educational Technology, Computers & Education, Computer Assisted Language Learning, Educational Technology Research and Development, Journal of Computer Assisted Learning, Language Learning & Technology, International Journal of Computer-Supported Collaborative Learning, and ReCALL, were treated as highly mCSCL-related journals, and manual searches of these journals were also conducted. Finally, to ensure that no relevant literature was overlooked, we also looked at the references cited in the research articles of empirical and review studies. Additional data found this way were then added so as to produce a comprehensive data pool.
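The keyword combination described above can be sketched in a few lines of Python. This is only an illustration: the two keyword lists come from the text, but the function names (`or_group`, `build_query`) and the quoted-phrase query syntax are assumptions, since each database has its own search grammar.

```python
# Keyword sets as listed in the text. The query syntax is an assumption;
# ERIC and the Web of Knowledge each use their own search grammar.
MOBILE_TERMS = [
    "mobile", "wireless", "ubiquitous", "wearable", "portable", "handheld",
    "mobile phone", "PDA", "palmtop", "Web pad", "tablet PC",
    "tablet computer", "laptop", "e-book", "digital pen", "clickers",
]
CL_TERMS = [
    "cooperative learning", "collaborative learning", "small group",
    "peer learning", "group learning",
]


def or_group(terms):
    """Join terms with OR, quoting each one so multiword phrases stay intact."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(set_a, set_b):
    """Combine the two OR-groups with a single AND (hypothetical helper)."""
    return f"{or_group(set_a)} AND {or_group(set_b)}"


query = build_query(MOBILE_TERMS, CL_TERMS)
# Number of mobile-term x CL-term pairings the combined query covers.
n_pairs = len(MOBILE_TERMS) * len(CL_TERMS)
print(query)
print(n_pairs)  # 16 * 5 = 80
```

Joining with OR inside each set and AND across sets is what lets a single query stand in for every pairing of a mobile-technology term with a CL term.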

Search Results

Initial Screening

Using the keywords described in the Data Source and Searching Strategy section to search the databases, the first stage of the study identified 950 mCSCL-related abstracts published between 2000 and 2015 (300 articles in ERIC and 650 articles in the Institute of Scientific Information [ISI] index). Two researchers of this study read the title, abstract, and keywords of the 950 articles and decided whether the articles were closely related to mCSCL. The criteria of relatedness were (a) whether the study used mobile devices as the research tool and (b) whether the study was related to cooperative/CL/teaching situations and purposes. If articles used any mCSCL keywords described in the Data Source and Searching Strategy section but the contents were not related to mCSCL (e.g., topics about the relationships between social groups and social mobility), they were excluded. The two researchers had a consistency rate of around 92%, and they negotiated inconsistencies by reading and discussing the full texts of the articles. This process resulted in the selection of 347 articles (110 articles in ERIC and 237 articles in ISI).

Screening of Experimental Studies

The second stage focused on literature related to experimental studies. The scope of analysis covered preexperimental studies (including a one-group posttest design and a one-group pretest–posttest design; Ary, Jacobs, & Razavieh, 2002), true experiments (including an equivalent-group pretest–posttest design, an equivalent-group posttest design, and a randomized posttest design; Ary et al., 2002), and quasi experiments (including a nonequivalent-group pretest–posttest design and a counterbalanced design; Ary et al., 2002; Best & Kahn, 2003). Some studies that were labeled as “cases” actually employed experimental designs (e.g., Kim, Lee, & Kim, 2014; Melero, Hernández-Leo, Sun, Santos, & Blat, 2015) and were included in our analyses. However, any case studies with a qualitative analysis or single-case studies with an experimental design were excluded from this study (e.g., Laru, Järvelä, & Clariana, 2012; Pérez-Sanagustín, Santos, Hernández-Leo, & Blat, 2012). Two researchers read the full text of the 347 articles, and the agreement rate was around 97%. The researchers resolved their inconsistency by discussing the content of the articles on which they did not have consensus. This stage eliminated conceptual research and review articles (n = 84, 27 articles in ERIC and 57 articles in ISI), case studies and qualitative studies (n = 50, 16 articles in ERIC and 34 articles in ISI), surveys (n = 97, 31 articles in ERIC and 66 articles in ISI), and single-subject research (n = 1, 1 article in ISI). The overlapped studies that were reported in both ERIC and ISI indexes (n = 33) were also removed, which left 82 articles for inclusion (consisting of 81 journal articles and 1 doctoral dissertation).

Screening for Inclusion or Exclusion From the Meta-Analysis

Two screening criteria were applied in the third stage. The first involved determining whether an article met the purpose of the present study of comparing the dependent variables/learning outcomes (i.e., learning achievement, learning attitude, or peer interaction; see the Coding Framework section for more details about the measurement of dependent variables) under the independent variable of applying and not applying mCSCL in teaching scenarios. In this case, the experimental group had to involve mCSCL, and the control group had to involve either non-CL (e.g., individual learning; McDonough & Foote, 2015) or CL that did not use mobile devices (e.g., CL that uses desktop or printed textbooks in learning activities; Choi & Im, 2015; Hung & Young, 2015).

The second criterion involved determining whether the final data produced in an experiment met the requirements of a meta-analysis (Lipsey & Wilson, 2001) and could be applied to calculate an ES. The two researchers involved in the second stage of screening read the 82 articles and decided which articles to include in the meta-analysis. Their agreement rate was around 88%, and they resolved their inconsistencies by discussing the content of any article on which they did not initially have consensus. Applying the above-described screening criteria resulted in the exclusion of 15 studies in which mCSCL was implemented in both the experimental and control groups (e.g., K. Lai & White, 2014; T. J. Lin, Duh, Li, Wang, & Tsai, 2013). In addition, 19 articles that did not have sufficient information for ES calculations were excluded, such as those that did not report the number of people in each group, those that did not report the results for statistical inferences, or those whose statistical inference results were not sufficient to calculate ESs (e.g., Baloian & Zurita, 2012; Li, Dow, Lin, & Lin, 2012). After the third stage of screening, there were 48 articles left (47 journal articles and 1 doctoral dissertation), all of which were published between 2000 and 2015.
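The counts reported across the three screening stages can be checked with simple arithmetic. The sketch below uses only numbers stated in the text; the dictionary keys and variable names are illustrative labels, not terms from the study.

```python
# Counts taken from the three screening stages reported in the text.
initial_hits = {"ERIC": 300, "ISI": 650}
after_stage_1 = {"ERIC": 110, "ISI": 237}

stage_2_exclusions = {
    "conceptual_or_review": 84,
    "case_or_qualitative": 50,
    "survey": 97,
    "single_subject": 1,
    "duplicates_across_indexes": 33,
}
stage_3_exclusions = {
    "mcscl_in_both_groups": 15,
    "insufficient_data_for_es": 19,
}

n_initial = sum(initial_hits.values())
n_stage_1 = sum(after_stage_1.values())
n_stage_2 = n_stage_1 - sum(stage_2_exclusions.values())
n_stage_3 = n_stage_2 - sum(stage_3_exclusions.values())

print(n_initial, n_stage_1, n_stage_2, n_stage_3)  # 950 347 82 48
```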

Coding Framework

The study descriptors consisted of the author names, publication year, and title.

Variables Regarding Experimental Design

The present study included the following 10 variables related to experimental design: independent variable/control group types, dependent variables/learning outcomes, learning stage, domain subject, group size, group composition, teaching method, intervention duration, implementation setting, and reward method. These variables are described as follows:

Independent variables/control group types: The present study was concerned with the relative benefits of different types of CL, especially the relative effects of contrasting mCSCL with non-CL (i.e., individual learning), CL that did not use computers, and CSCL that did not use mobile devices (e.g., CL with desktops). Therefore, experimental designs employing any of the three types of control groups listed above were coded for further comparison of their ESs.

Dependent variables/learning outcomes: The present study was concerned with the relative effects of mCSCL on different types of dependent variables/learning outcomes, which can be categorized into learning achievement (e.g., K. Lai & White, 2012), learning attitude (e.g., Uzunboylu et al., 2009), and peer interaction (e.g., Choi & Im, 2015). Learning achievement is measured by methods such as standardized tests, teacher-made tests, or researcher-made tests. The content of such tests includes knowledge acquisition, comprehension, and utilization. In contrast, learning attitude is measured subjectively using methods such as opinions, reactions, satisfaction level, or course evaluation. The content of such assessments includes motivation (or self-efficacy), interest, perception (regarding the atmosphere, satisfaction level, usefulness, feelings about the activities or about the collaboration, etc.), and behavioral characteristics (e.g., frequency of use). Peer interaction refers to the interaction between subjects (e.g., the number of discussions during the process of mCSCL). The content includes cooperative behavior (e.g., Sánchez & Olivares, 2011), interactive behavior (e.g., C. C. Liu, Chung, Chen, & Liu, 2009), and interactive perception (e.g., Kim et al., 2014).

Learning stage: Previous research has proposed that learners of different school levels might benefit differently from mobile learning (e.g., Song, 2014) or CL (e.g., Kyndt et al., 2013). To investigate if learners of different learning stages have different beneficial effects from mCSCL, the present study coded learning stage as a moderator variable, which included kindergarten, elementary school, junior high school, senior high school, college, graduate school, and adults, as well as combinations of different learning stages.

Domain subject: Previous research has indicated that CL may have a different effect when implemented in different domain subjects (Kyndt et al., 2013; Lou et al., 1996; Qin, Johnson, & Johnson, 1995). The dimensions coded included all the domain subjects used in the 48 articles, such as language arts (e.g., English, Chinese, Hebrew, and Spanish), social studies (e.g., history and geography), science (e.g., physics, chemistry, and biology), mathematics, specific abilities (e.g., teamwork skill), and professional subjects (e.g., health care programs, finance and economics, education, computer and information technology, and engineering projects).

Group size: This refers to the number of learners in the mCSCL groups. Lou et al. (1996) mentioned that group size influences the interactive behavior among group members and the results for CL applications. The present study separated the groups into four sizes: dyad, triad, tetrad, and more than four people.

Group composition: This refers to how students are assigned to groups. Researchers have proposed that heterogeneous grouping may be better than homogeneous grouping (e.g., Noroozi et al., 2012). We categorized studies as using either homogeneous (i.e., students with the same or similar attributes, traits, or learning performance) or heterogeneous grouping (i.e., students with a wide variety of attributes, traits, or learning performance).

Teaching method: This refers to the instructional methods of CL. D. W. Johnson, Johnson, and Stanne (2000) pointed out that the type of pedagogy influences how members of CL groups build knowledge and their interaction processes. The present study used D. W. Johnson et al.’s categories and categorized the teaching methods into learning together (LT), team game tournament (TGT), group investigation (GI), academic controversy, jigsaw, student teams achievement division (STAD), complex instruction, team accelerated instruction, team teaching, cooperative integrated reading and composition, and other methods.

Intervention duration: This refers to the length of time over which mCSCL-based teaching activities were applied. Researchers have proposed the importance of intervention duration for CL (Resta & Laferrière, 2007; Slavin, 1993; Springer, Stanne, & Donovan, 1999). To compare the relative effects of mCSCL implemented with different intervention durations, the present study categorized the intervention duration into the following dimensions (e.g., Song, 2014): ≤4 hours, >4 hours and ≤24 hours, >1 day and ≤7 days, >1 week and ≤4 weeks, >4 weeks and ≤6 months, and >6 months.

Implementation setting: One of the distinguishing functionalities of mobile devices is that mobile learning tasks can be implemented in different settings (Melero et al., 2015). Springer et al. (1999) also found that CL implemented in different settings might demonstrate different effects. The present study divided the implementation setting of mCSCL into formal learning settings (i.e., classroom, laboratory, library, hospital, and work place), informal learning settings (i.e., museum and outdoors), multiple settings (i.e., two or more settings were used in learning activities), and “not mentioned” for the comparison of effects.

Reward method: This refers to the reward or scoring methods used for mCSCL members. Slavin (1995, 2011) proposed that different reward methods might influence the productivity of CL activities. The present study used the same categories as Slavin’s (1983) CL meta-analysis and categorized the reward methods into group rewards for individual learning, group rewards for group outcomes, and individual rewards.
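As an illustration of how one of the coded variables could be operationalized, the intervention-duration categories listed above can be expressed as a binning function. This is a sketch under stated assumptions: durations are converted to a single unit (hours), and "6 months" is approximated as 26 weeks. Neither convention is given in the text, and the function name `duration_category` is hypothetical.

```python
from bisect import bisect_left

HOURS_PER_DAY = 24
HOURS_PER_WEEK = 7 * HOURS_PER_DAY
# Assumption: "6 months" is approximated as 26 weeks; the text gives no conversion.
HOURS_PER_6_MONTHS = 26 * HOURS_PER_WEEK

# Inclusive upper bound (in hours) of every category except the last.
UPPER_BOUNDS = [4, HOURS_PER_DAY, HOURS_PER_WEEK, 4 * HOURS_PER_WEEK, HOURS_PER_6_MONTHS]
LABELS = [
    "≤4 hours",
    ">4 hours and ≤24 hours",
    ">1 day and ≤7 days",
    ">1 week and ≤4 weeks",
    ">4 weeks and ≤6 months",
    ">6 months",
]


def duration_category(hours):
    """Map an intervention duration in hours to one of the six categories."""
    if hours <= 0:
        raise ValueError("duration must be positive")
    # bisect_left keeps a value equal to an upper bound in the lower category.
    return LABELS[bisect_left(UPPER_BOUNDS, hours)]


print(duration_category(4))       # ≤4 hours
print(duration_category(3 * 24))  # >1 day and ≤7 days
```

Using inclusive upper bounds mirrors the "≤" boundaries in the category definitions, so a duration of exactly 4 hours or exactly 7 days falls in the lower of the two adjacent categories.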

We obtained relevant information about the moderator variables described above through the research method sections of the research articles. Furthermore, the coding process for these 10 variables was based on the procedure suggested by Cooper (2010) and first involved two researchers (coders) reaching consensus on the definitions of the entries and their related variables by selecting and discussing two exemplar articles. The coders then independently coded 10 articles, discussed their differences in coding results (the initial coding showed a consistency of 90%), and negotiated to reach a consensus. Missing values were handled in the study by coding them as 0, indicating them as being “not mentioned.” Finally, the coders coded all of the remaining articles, discussed the differences in the codes, and negotiated until consensus was reached. The Comprehensive Meta-Analysis Software (Borenstein, Hedges, Higgins, & Rothstein, 2005) was used for the coding process.
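The consistency rate between two coders can be illustrated as a simple percent agreement. The codes below are hypothetical and only show the calculation; the text does not say whether agreement was computed per article or per coded entry, so the per-entry comparison here is an assumption.

```python
NOT_MENTIONED = 0  # the text codes missing values as 0 ("not mentioned")


def percent_agreement(codes_a, codes_b):
    """Share of entries on which two coders assigned the same code."""
    if len(codes_a) != len(codes_b):
        raise ValueError("both coders must code the same entries")
    if not codes_a:
        raise ValueError("at least one coded entry is required")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


# Hypothetical group-size codes for 10 articles:
# 1 = dyad, 2 = triad, 3 = tetrad, 4 = more than four, 0 = not mentioned.
coder_1 = [1, 2, 2, 3, NOT_MENTIONED, 4, 1, 3, 2, NOT_MENTIONED]
coder_2 = [1, 2, 2, 3, NOT_MENTIONED, 4, 1, 3, 4, NOT_MENTIONED]

rate = percent_agreement(coder_1, coder_2)
# Positions that the coders would need to discuss to reach consensus.
disagreements = [i for i, (a, b) in enumerate(zip(coder_1, coder_2)) if a != b]
print(rate, disagreements)  # 0.9 [8]
```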

Data Analysis

Calculating ES

This study adopted the following steps proposed by Borenstein, Hedges, Higgins, and Rothstein (2009) to calculate and combine the ESs:

Calculate the ES of each article. An article may contain different studies; for example, Jones et al. (2012) included two outcome variables, learning achievement and learning attitude, which were treated as two studies. A study may contain different measures for the same outcome variable; for example, Persky and Pollack (2010) used three cognition tests to measure learning achievement. Different measures in the same study were calculated as separate ESs (Cohen’s standardized mean difference d) before being combined according to the formula of Borenstein et al. (2009, p. 227) into a single ES representing that study. For articles with multiple studies, after the ES of each study was obtained, these ESs were combined into a single ES for the article. We call this the ES of “learning performance” in the present study. It is noteworthy that different formulae for calculating the ESs were employed for studies employing different experimental designs. The formula proposed by Lipsey and Wilson (2001, p. 48) was adopted for a true-experimental design (random assignment of participants) without a pretest. The formula proposed by Furtak, Seidel, Iverson, and Briggs (2012, p. 311) and Morris (2008, p. 369) was adopted for a true- or quasi-experimental design that had pre- and posttests. The formula proposed by Borenstein et al. (2009, p. 29) was adopted for a preexperimental design with no control group.

Combine the ESs of all the articles to calculate the overall weighted average ES of the present meta-analysis study, the Hedges’s g, through the formula of Borenstein et al. (2009, p. 66).

Use the random effects model of Borenstein et al. (2009, pp. 73–74) to calculate the confidence interval of the overall average ES for mCSCL.

Use a heterogeneity analysis (producing the Q_B value) to check if the ESs were influenced by specific moderator variables.
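The four steps above can be sketched with standard textbook formulas. This sketch covers only the simplest case (two independent groups compared at posttest) and does not reproduce the design-specific formulas cited in the first step. The DerSimonian-Laird estimator of the between-study variance and the use of a separate variance estimate within each subgroup are assumptions, and all function names and data are hypothetical.

```python
import math


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Return (g, var_g) for two independent groups compared at posttest."""
    df = n1 + n2 - 2
    s_pooled = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    d = (m1 - m2) / s_pooled  # Cohen's d
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    return j * d, j**2 * var_d


def pool(effects, variances, tau2=0.0):
    """Inverse-variance weighted mean and its variance (tau2=0: fixed effect)."""
    weights = [1 / (v + tau2) for v in variances]
    mean = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return mean, 1 / sum(weights)


def q_statistic(effects, variances):
    """Cochran's Q: weighted squared deviations from the fixed-effect mean."""
    mean, _ = pool(effects, variances)
    return sum((y - mean) ** 2 / v for y, v in zip(effects, variances))


def random_effects(effects, variances):
    """DerSimonian-Laird pooling: returns (mean, variance of mean, tau^2)."""
    tau2 = 0.0
    if len(effects) > 1:
        w = [1 / v for v in variances]
        c = sum(w) - sum(wi**2 for wi in w) / sum(w)
        q = q_statistic(effects, variances)
        tau2 = max(0.0, (q - (len(effects) - 1)) / c)
    mean, var = pool(effects, variances, tau2)
    return mean, var, tau2


def ci95(mean, var):
    """Normal-approximation 95% confidence interval."""
    half = 1.96 * math.sqrt(var)
    return mean - half, mean + half


def q_between(subgroups):
    """Q_B for a moderator: Q computed across the pooled subgroup means.

    `subgroups` is a list of (effects, variances) pairs, one per category.
    Compare the result with a chi-square with len(subgroups) - 1 df.
    """
    means, variances = [], []
    for effects, var_list in subgroups:
        mean, var, _ = random_effects(effects, var_list)
        means.append(mean)
        variances.append(var)
    return q_statistic(means, variances)


# Hypothetical data, not taken from the included studies.
g, var_g = hedges_g(m1=10.0, sd1=2.0, n1=10, m2=8.0, sd2=2.0, n2=10)
effects = [0.2, 0.5, 0.8]
variances = [0.04, 0.04, 0.04]
mean, var, tau2 = random_effects(effects, variances)
low, high = ci95(mean, var)
print(f"g = {g:.3f}; pooled mean = {mean:.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

Passing `tau2` into `pool` is what distinguishes the random-effects mean from the fixed-effect mean: each study's weight becomes the inverse of its own variance plus the estimated between-study variance.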

Investigating Publication Bias

The study adopted two methods to investigate publication bias. The first method was the fail-safe N (i.e., the classic fail-safe N) as proposed by Rosenthal (1979), which estimates the number of studies with nonsignificant results (unpublished data) needed to cause the mean ES to become statistically nonsignificant. A comparison value of 5n + 10 (where n represents the total number of studies included in the meta-analysis) was applied to the investigation. A fail-safe N of larger than 5n + 10 indicates that the nonsignificant results provided by any unpublished studies are not likely to influence the average ES of the study’s meta-analysis. This study also adopted the modified fail-safe N proposed by Orwin (1983), which estimates the number of studies with nonsignificant results (unpublished data) needed to cause the average ES to be lower than the smallest effect deemed to be important.
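The two fail-safe N statistics and the 5n + 10 comparison value can be sketched as follows. The formulas are the commonly cited forms of Rosenthal's and Orwin's methods. The one-tailed critical value of 1.645, the assumed mean ES of zero for missing studies, and the example inputs are assumptions for illustration, not values reported in the text.

```python
def rosenthal_fail_safe_n(z_scores, z_alpha=1.645):
    """Classic fail-safe N: null studies needed to make the combined Z nonsignificant.

    z_alpha = 1.645 is the one-tailed .05 critical value (an assumed default).
    """
    k = len(z_scores)
    return sum(z_scores) ** 2 / z_alpha**2 - k


def orwin_fail_safe_n(k, mean_es, criterion_es, missing_es=0.0):
    """Orwin's N: missing studies needed to pull the mean ES down to criterion_es.

    missing_es is the assumed mean ES of the missing studies (0 by default).
    """
    return k * (mean_es - criterion_es) / (criterion_es - missing_es)


def tolerance_level(n_studies):
    """Comparison value 5n + 10 described in the text."""
    return 5 * n_studies + 10


# With the 48 included articles, the comparison value is 5 * 48 + 10.
print(tolerance_level(48))  # 250

# Hypothetical inputs to show the two calculations.
example_rosenthal = rosenthal_fail_safe_n([2.0] * 10)
example_orwin = orwin_fail_safe_n(k=10, mean_es=0.5, criterion_es=0.1)
print(round(example_rosenthal, 1), round(example_orwin, 1))
```

A classic fail-safe N above `tolerance_level(n)` is read as evidence that unpublished null results are unlikely to overturn the average ES, which matches the decision rule stated in the paragraph above.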

The second method used to investigate publication bias in this study was “trim and fill,” as proposed by Duval and Tweedie (2000), which not only estimates the number of missing studies but also yields a corrected average ES by employing data augmentation and reestimation techniques. These computations were performed in the present study using Comprehensive Meta-Analysis software (Version 2.0). The results generated by the analysis of both fixed and random effects are reported herein.

Results

Descriptive Information

Table 1 presents the distribution of moderator variables and their corresponding percentages in mCSCL. In total, there were 48 articles, 163 ESs (before integration), and 5,294 participants. Regarding the independent variables/control group types, non-CL was used more often than CL that did not use computers and CSCL that did not use mobile devices. With respect to dependent variables/learning outcomes, the most frequently studied was learning achievement, followed by learning attitude and peer interaction. Regarding learning stage, the largest proportion of studies was conducted at the college level, followed by elementary school, senior high school, and mixed. The most frequently studied subject was language arts, followed by science and social studies.

Table 1.

Categories and proportion of studies for the 48 included articles

| Variable | Category | No. of studies (k) | Proportion of studies |
|---|---|---|---|
| Control group types | 1. Non-CL | 31 | .646 |
| | 2. CL that did not use computers | 11 | .229 |
| | 3. CSCL that did not use mobile devices | 6 | .125 |
| Learning outcomes<sup>a</sup> | 1. Learning achievement | 39 | .661 |
| | 2. Learning attitude | 13 | .220 |
| | 3. Peer interaction | 7 | .119 |
| Learning stage | 1. Elementary school | 16 | .333 |
| | 2. Junior high school | 3 | .063 |
| | 3. Senior high school | 6 | .125 |
| | 4. College | 17 | .354 |
| | 5. Teacher | 1 | .021 |
| | 6. Mixed | 5 | .104 |
| Domain subject | 1. Language arts | 11 | .229 |
| | 2. Social studies | 6 | .125 |
| | 3. Science | 9 | .188 |
| | 4. Mathematics | 5 | .104 |
| | 5. Specific abilities | 5 | .104 |
| | 6. Health care programs | 4 | .083 |
| | 7. Finance and economics | 1 | .021 |
| | 8. Education | 1 | .021 |
| | 9. Computer and information technology | 3 | .063 |
| | 10. Engineering projects | 3 | .063 |
| Group size | 0. Not mentioned | 9 | .188 |
| | 1. Dyad | 2 | .042 |
| | 2. Triad | 10 | .208 |
| | 3. Tetrad | 6 | .125 |
| | 4. More than four people | 7 | .146 |
| | 5. Mixed | 14 | .292 |
| Group composition | 0. Not mentioned | 27 | .563 |
| | 1. Homogeneous | 4 | .083 |
| | 2. Heterogeneous | 17 | .354 |
| Teaching method | 1. LT | 29 | .604 |
| | 2. TGT | 4 | .083 |
| | 3. GI | 7 | .146 |
| | 4. Jigsaw | 6 | .125 |
| | 5. STAD | 2 | .042 |
| Intervention duration | 0. Not mentioned | 4 | .083 |
| | 1. ≤4 hours | 6 | .125 |
| | 2. >4 hours and ≤24 hours | 0 | .000 |
| | 3. >1 day and ≤7 days | 2 | .042 |
| | 4. >1 week and ≤4 weeks | 16 | .333 |
| | 5. >4 weeks and ≤6 months | 15 | .313 |
| | 6. >6 months | 5 | .104 |
| Implementation setting | 0. Not mentioned | 0 | .000 |
| | 1. Classroom | 35 | .729 |
| | 2. Museum | 1 | .021 |
| | 3. Outdoors | 3 | .063 |
| | 4. Multiple settings | 8 | .167 |
| | 5. Library | 1 | .021 |
| Reward method | 0. Not mentioned | 1 | .021 |
| | 1. Group rewards for individual learning | 4 | .083 |
| | 2. Group rewards for group outcomes | 4 | .083 |
| | 3. Individual rewards | 39 | .813 |

Note. CL = collaborative learning; CSCL = computer-supported collaborative learning; LT = learning together; TGT = team game tournament; GI = group investigation; STAD = student teams achievement division.

<sup>a</sup>Some articles included more than one outcome variable.

As for group size, the most frequently studied category was mixed, followed by triad. Regarding group composition, heterogeneous groups were used more often than homogeneous groups. The most frequently used teaching method was LT, followed by GI and jigsaw. The most frequent intervention duration was >1 week and ≤4 weeks, followed by >4 weeks and ≤6 months. The setting for more than half of the studies was a classroom, followed by multiple settings. The reward method used in the interventions was mostly individual rewards, followed by group rewards for individual learning and group rewards for group outcomes.

Evaluation of Publication Bias

This study used the fail-safe N method (Orwin, 1983; Rosenthal, 1979), as well as the trim-and-fill method (Duval & Tweedie, 2000). The results presented in Table 2 indicate that the classic fail-safe N was 3,372, meaning that 3,372 missing articles with nonsignificant results would be needed to render the overall effect statistically nonsignificant (p > .05). This number is far higher than the tolerable number of articles, which was 250 (5n + 10, where n is the total number of articles). In addition, the results for Orwin’s (1983) fail-safe N in Table 3 indicate that 2,124 missing articles would be needed to bring Hedges’s g to a “trivial” level (g < 0.01). The trim-and-fill results indicate that five missing articles would be needed to make the asymmetric distribution of ESs symmetrical. With five missing articles imputed, the overall effect would become 0.402 (p < .05) under the fixed effects model and 0.399 (p < .05) under the random effects model. Although this adjustment would reduce the strength of the effect, the results would still be statistically significant. Taken together, the results of these two methods suggest that this meta-analysis was not substantially affected by publication bias.

Table 2.

Results for the classic fail-safe N

| Statistic | Value |
|---|---|
| z for observed studies | 16.54 |
| p for observed studies | .00 |
| Alpha | .05 |
| Tail | 2.00 |
| z for alpha | 1.96 |
| No. of observed studies | 48 |
| No. of missing studies that would bring the p value to >alpha | 3,372 |

Table 3.

Results for Orwin’s fail-safe N

| Statistic | Value |
|---|---|
| Hedges’s g in observed studies (fixed effect) | 0.45 |
| Criterion for a “trivial” Hedges’s g | 0.01 |
| Mean Hedges’s g in missing studies | 0.00 |
| No. of missing studies needed to reduce Hedges’s g to <0.01 | 2,124 |
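The fail-safe N values in Tables 2 and 3 can be approximated from the reported summary statistics. The sketch below assumes the textbook forms of Rosenthal's and Orwin's formulas, takes the combined z in Table 2 to be a Stouffer-type z across the 48 articles, and uses hypothetical function names. Because the tabled inputs are rounded, the outputs come close to the reported 3,372 and 2,124 (about 3,370 and 2,112) rather than matching them exactly.

```python
def rosenthal_failsafe_n(combined_z, k, z_alpha=1.96):
    """Number of null-result studies needed to push the combined z below z_alpha."""
    return k * (combined_z / z_alpha) ** 2 - k

def orwin_failsafe_n(k, g_observed, g_trivial, g_missing=0.0):
    """Number of missing studies (mean effect g_missing) needed to reach g_trivial."""
    return k * (g_observed - g_trivial) / (g_trivial - g_missing)

def tolerance_level(k):
    """Rosenthal's 5n + 10 benchmark for a robust fail-safe N."""
    return 5 * k + 10

k = 48  # number of included articles
classic_n = rosenthal_failsafe_n(combined_z=16.54, k=k)        # inputs from Table 2
orwin_n = orwin_failsafe_n(k, g_observed=0.45, g_trivial=0.01)  # inputs from Table 3
benchmark = tolerance_level(k)                                  # 250
```

Both estimates exceed the 5n + 10 benchmark by a wide margin, which is the comparison the text relies on.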

The Overall ES for Learning Performance

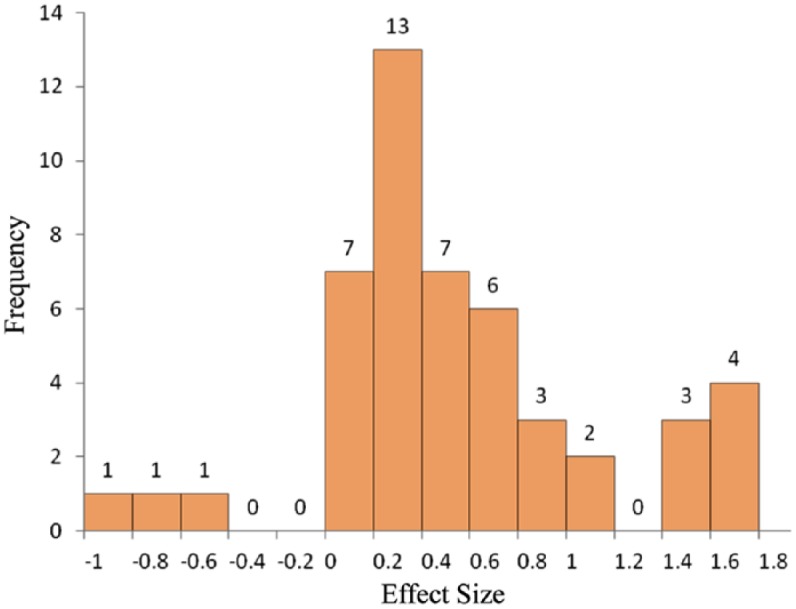

There were 163 ESs in the 48 articles. The distribution of the ESs of the 48 articles is shown in Figure 3. Using the procedure of Lipsey and Wilson (2001), an extreme-value analysis revealed no extreme values (i.e., those more than 3 standard deviations from the mean) for the entire collection of 48 articles. When the ESs of the 48 included articles were integrated using the procedure of Borenstein et al. (2009) with a random effects model, the overall mean ES was 0.516, with a 95% confidence interval of [0.377, 0.655]. According to Cohen (1988, 1992), ESs of 0.80, 0.50, and 0.20 are considered large, medium, and small, respectively. Thus, the present findings suggest that mCSCL has a medium ES; in other words, about 69.71% of the learners using mCSCL performed better than the average learner who did not use mobile devices in CL or did not undergo CL. Q statistics revealed that the ESs in the meta-analysis were heterogeneous (Qtotal = 293.990, z = 7.279, p < .001), which indicates that there were differences among the ESs that were attributable to sources other than subject-level sampling error.
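The 69.71% figure is consistent with Cohen's U3, the share of one group expected to score above the mean of the other when both are normally distributed with equal variances. The following sketch reproduces it from the reported ES and, as a rough consistency check, recovers the standard error and z value from the reported confidence interval. The variable names are illustrative, and reading the reported z = 7.279 as the test of the overall mean ES is an assumption.

```python
import math

def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

g = 0.516                       # overall mean ES reported in the text
ci_low, ci_high = 0.377, 0.655  # reported 95% confidence interval

u3 = normal_cdf(g)                    # proportion above the comparison mean
se = (ci_high - ci_low) / (2 * 1.96)  # standard error implied by the CI
z = g / se                            # approximate z for the overall effect

print(f"U3 = {u3:.2%}, SE = {se:.3f}, z = {z:.2f}")
```

Small differences from the published values are expected because the inputs are rounded to three decimals.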

Figure 3.

Histogram of the effect sizes for the 48 included articles of this meta-analysis.

The ESs of Learning Performance for Moderator Variables

The random effects model was used to analyze the ES of each variable. In this research, levels of some moderators had small numbers of articles (see Table 1); the levels with small numbers of articles were merged. For learning stage, junior and senior high schools were combined into “secondary school,” and college and teacher were bundled together into “adult.” For domain subject, health care programs, finance and economics, education, computer and information technology, and engineering projects were combined into “professional subjects.” Some intervention durations were also combined, with ≤4 hours, >4 hours and ≤24 hours, and >1 day and ≤7 days becoming “≤1 week.” For implementation setting, classroom and library were combined into a “formal settings” category, and museum and outdoors were bundled together into one “informal settings” category. Table 4 lists the ESs for the moderator variables.
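The merging rules described above amount to a simple recoding of category labels. The sketch below applies them to the article counts from Table 1 and confirms that the merged counts equal the k values listed in Table 4. The dictionary layout and function name are illustrative assumptions, not part of the original analysis.

```python
from collections import Counter

# Article counts (k) per original level, copied from Table 1
table1_k = {
    "learning_stage": {"Elementary school": 16, "Junior high school": 3,
                       "Senior high school": 6, "College": 17, "Teacher": 1, "Mixed": 5},
    "domain_subject": {"Language arts": 11, "Social studies": 6, "Science": 9,
                       "Mathematics": 5, "Specific abilities": 5,
                       "Health care programs": 4, "Finance and economics": 1,
                       "Education": 1, "Computer and information technology": 3,
                       "Engineering projects": 3},
    "duration": {"≤4 hours": 6, ">4 hours and ≤24 hours": 0, ">1 day and ≤7 days": 2},
    "setting": {"Classroom": 35, "Library": 1, "Museum": 1, "Outdoors": 3,
                "Multiple settings": 8},
}

# Original level -> merged level, following the rules stated in the text
merge_map = {
    "Junior high school": "Secondary school", "Senior high school": "Secondary school",
    "College": "Adult", "Teacher": "Adult",
    "Health care programs": "Professional subjects",
    "Finance and economics": "Professional subjects",
    "Education": "Professional subjects",
    "Computer and information technology": "Professional subjects",
    "Engineering projects": "Professional subjects",
    "≤4 hours": "≤1 week", ">4 hours and ≤24 hours": "≤1 week",
    ">1 day and ≤7 days": "≤1 week",
    "Classroom": "Formal settings", "Library": "Formal settings",
    "Museum": "Informal settings", "Outdoors": "Informal settings",
}

def merge_levels(counts, mapping):
    """Sum article counts over merged levels; unmapped levels keep their label."""
    merged = Counter()
    for level, k in counts.items():
        merged[mapping.get(level, level)] += k
    return dict(merged)

merged_k = {name: merge_levels(counts, merge_map) for name, counts in table1_k.items()}
```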

Table 4.

Learning-performance ESs of categories and their related moderator variables

| Category | k | g | z | 95% CI | QB | df |
|---|---|---|---|---|---|---|
| Control group types | | | | | 3.282 | 2 |
| 1. Non-CL | 31 | 0.587 | 6.832*** | [0.419, 0.756] | | |
| 2. CL that did not use computers | 11 | 0.472 | 2.976** | [0.161, 0.784] | | |
| 3. CSCL that did not use mobile devices | 6 | 0.201 | 1.012 | [−0.188, 0.590] | | |
| Learning outcomes | | | | | 0.465 | 2 |
| 1. Learning achievement | 39 | 0.551 | 6.979*** | [0.396, 0.705] | | |
| 2. Learning attitude | 13 | 0.518 | 3.828*** | [0.253, 0.783] | | |
| 3. Peer interaction | 7 | 0.415 | 2.249* | [0.053, 0.776] | | |
| Learning stage | | | | | 2.378 | 3 |
| 1. Elementary school | 16 | 0.567 | 4.446*** | [0.317, 0.817] | | |
| 2. Secondary school | 9 | 0.662 | 4.017*** | [0.313, 0.822] | | |
| 3. Adult | 18 | 0.375 | 3.089** | [0.137, 0.613] | | |
| 4. Mixed | 5 | 0.576 | 2.609** | [0.143, 1.009] | | |
| Domain subject | | | | | 10.581 | 5 |
| 1. Language arts | 11 | 0.413 | 2.548* | [0.095, 0.730] | | |
| 2. Social studies | 6 | 0.223 | 1.045 | [−0.195, 0.642] | | |
| 3. Science | 9 | 0.770 | 4.531*** | [0.437, 1.103] | | |
| 4. Mathematics | 5 | 0.894 | 3.583*** | [0.437, 1.351] | | |
| 5. Specific abilities | 5 | 0.124 | 0.565 | [−0.305, 0.552] | | |
| 6. Professional subjects | 12 | 0.585 | 3.911*** | [0.292, 0.879] | | |
| Group size | | | | | 3.885 | 5 |
| 0. Not mentioned | 9 | 0.368 | 2.207* | [0.041, 0.694] | | |
| 1. Dyad | 2 | 0.216 | 0.579 | [−0.515, 0.947] | | |
| 2. Triad | 10 | 0.492 | 2.905** | [0.160, 0.824] | | |
| 3. Tetrad | 6 | 0.819 | 3.862*** | [0.403, 1.234] | | |
| 4. More than four people | 7 | 0.635 | 3.217** | [0.248, 1.021] | | |
| 5. Mixed | 14 | 0.494 | 3.668*** | [0.230, 0.759] | | |
| Group composition | | | | | 1.992 | 2 |
| 0. Not mentioned | 27 | 0.449 | 4.803*** | [0.266, 0.632] | | |
| 1. Homogeneous | 4 | 0.804 | 3.243** | [0.318, 1.290] | | |
| 2. Heterogeneous | 17 | 0.559 | 4.654*** | [0.323, 0.794] | | |
| Teaching method | | | | | 1.232 | 4 |
| 1. LT | 29 | 0.527 | 5.626*** | [0.343, 0.710] | | |
| 2. TGT | 4 | 0.442 | 1.797 | [−0.040, 0.924] | | |
| 3. GI | 7 | 0.562 | 2.846** | [0.175, 0.949] | | |
| 4. Jigsaw | 6 | 0.589 | 2.819** | [0.180, 0.999] | | |
| 5. STAD | 2 | 0.162 | 0.446 | [−0.549, 0.873] | | |
| Intervention duration | | | | | 13.519** | 4 |
| 0. Not mentioned | 4 | 0.643 | 2.424* | [0.123, 1.164] | | |
| 1. ≤1 week | 8 | 0.239 | 1.354 | [−0.107, 0.586] | | |
| 2. >1 week and ≤4 weeks | 16 | 0.867 | 6.991*** | [0.624, 1.109] | | |
| 3. >4 weeks and ≤6 months | 15 | 0.339 | 2.694** | [0.092, 0.586] | | |
| 4. >6 months | 5 | 0.330 | 1.646 | [−0.063, 0.723] | | |
| Implementation setting | | | | | 0.253 | 2 |
| 1. Formal settings (classroom, library) | 36 | 0.506 | 5.948*** | [0.339, 0.672] | | |
| 2. Informal settings (museum, outdoors) | 4 | 0.634 | 2.596** | [0.155, 1.113] | | |
| 3. Multiple settings | 8 | 0.503 | 2.839** | [0.156, 0.851] | | |
| Reward method | | | | | 6.179 | 3 |
| 0. Not mentioned | 1 | 0.632 | 1.404 | [−0.250, 1.513] | | |
| 1. Group rewards for individual learning | 4 | 0.476 | 1.820 | [−0.037, 0.989] | | |
| 2. Group rewards for group outcomes | 4 | −0.069 | −0.278 | [−0.560, 0.421] | | |
| 3. Individual rewards | 39 | 0.580 | 7.089*** | [0.420, 0.740] | | |

Note. ES = effect size; CI = confidence interval; df = degrees of freedom; CL = collaborative learning; CSCL = computer-supported collaborative learning; LT = learning together; TGT = team game tournament; GI = group investigation; STAD = student teams achievement division.

*p < .05. **p < .01. ***p < .001.
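The between-category statistic QB in Table 4 can be approximated from the subgroup estimates alone. The sketch below assumes that QB is the weighted sum of squared deviations of the subgroup mean ESs from their weighted grand mean, with weights equal to the inverse squared standard errors recovered from the reported 95% confidence intervals. The function name and the hard-coded chi-square critical values at the .05 level are illustrative additions. Under these assumptions the computation yields roughly 3.28 for control group types and 13.57 for intervention duration, close to the reported 3.282 and 13.519.

```python
def q_between(levels, z_crit=1.96):
    """Approximate QB from (g, ci_low, ci_high) tuples, one per subgroup."""
    weights, effects = [], []
    for g, lo, hi in levels:
        se = (hi - lo) / (2 * z_crit)  # SE implied by the 95% CI
        weights.append(1 / se**2)
        effects.append(g)
    grand_mean = sum(w * g for w, g in zip(weights, effects)) / sum(weights)
    return sum(w * (g - grand_mean) ** 2 for w, g in zip(weights, effects))

# Chi-square critical values at alpha = .05, keyed by degrees of freedom
CHI2_CRIT_05 = {2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070}

# Subgroup rows copied from Table 4
control_types = [(0.587, 0.419, 0.756), (0.472, 0.161, 0.784), (0.201, -0.188, 0.590)]
duration = [(0.643, 0.123, 1.164), (0.239, -0.107, 0.586), (0.867, 0.624, 1.109),
            (0.339, 0.092, 0.586), (0.330, -0.063, 0.723)]

qb_control = q_between(control_types)
qb_duration = q_between(duration)

# QB is significant when it exceeds the critical value for df = levels - 1
control_significant = qb_control > CHI2_CRIT_05[len(control_types) - 1]
duration_significant = qb_duration > CHI2_CRIT_05[len(duration) - 1]
```

The same comparison applied to the reported values shows why domain subject (QB = 10.581, df = 5) falls just short of the 11.070 threshold while intervention duration exceeds its threshold of 9.488.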

Independent Variables/Control Group Types

The mCSCL studies using non-CL as their control group demonstrated the largest ES, followed by control groups of CL that did not use computers and control groups of CSCL that used desktops but not mobile devices. QB did not reach statistical significance (see Table 4 and the tools/instruments–rules/control–objectives triangle in Figure 2).

Dependent Variables/Learning Outcomes

When using mobile devices for different learning outcomes, the ES was largest for studies in which the learning outcome was learning achievement, followed by learning attitude (including motivation, interest, and engagement) and peer interaction. QB did not reach statistical significance at the .05 level. Please refer to Figure 2, specifically the tools/instruments–subjects–objectives, the tools/instruments–rules/control–objectives, and the tools/instruments–context–objectives triangles.

Learning Stage

The mCSCL studies implemented in the learning stage of secondary school produced the largest ES, followed by learners with mixed ages, elementary school, and adult. QB did not reach statistical significance. Please refer to Figure 2, specifically the tools/instruments–subjects–objectives triangle.

Domain Subject

The mCSCL studies implemented in the mathematics domain produced the largest ES, followed by science, professional subjects, and language arts. The ESs for studies with the domain subjects of social studies and specific abilities were not significant. QB did not reach statistical significance. Please refer to Figure 2, specifically the tools/instruments–rules/control–objectives triangle. Furthermore, when language arts and social studies were combined into “humanities and social sciences” and mathematics and science were bundled together into “mathematics and science,” the ES was largest for studies in which the domain subject was mathematics and science, followed by professional subjects and humanities and social sciences. In addition, the ES for studies of specific abilities was not significant. In this combined analysis, QB reached statistical significance, indicating that the ESs differed significantly among the various categories.

Group Size

When the “not mentioned” category was ignored, the mCSCL studies in which group size was a tetrad produced the largest ES, followed by groups with more than four people, mixed group sizes, and triads. In addition, the ES for studies with the dyad group size was not significant. QB did not reach statistical significance. Please refer to Figure 2, specifically the tools/instruments–communication/interaction–objectives triangle. Because only two of the 48 included articles examined the dyad group size (Table 1), the dyad and triad categories were also combined into “dyad/triad.” The results showed that studies in which the group size was dyad/triad had a medium ES.

Group Composition

When the “not mentioned” category was ignored, the mCSCL studies with homogeneous group composition had a larger ES than those with heterogeneous groups. QB did not reach statistical significance. Please refer to Figure 2, specifically the tools/instruments–subjects–objectives triangle.

Teaching Method

The mCSCL studies using the jigsaw teaching method produced the largest ES, followed by GI. The studies using TGT and STAD did not yield significant ESs. QB did not reach statistical significance. Please refer to Figure 2, specifically the tools/instruments–rules/control–objectives triangle. It is noteworthy that structured CL scenarios that emphasized designated steps (e.g., TGT and STAD) and a division of labor among the team members (e.g., GI and jigsaw; Doymus, 2008; Slavin, 1983) for implementing learning activities were used in only 6 and 13 studies (Table 1), respectively. In contrast, most of the included studies used LT as their teaching method (Table 1). We combined TGT, GI, jigsaw, and STAD into the “structured mCSCL” category for comparison with the “ill-structured” LT category. The results showed that studies with structured mCSCL had an ES similar to that of ill-structured mCSCL; QB did not reach statistical significance.

Intervention Duration

The ES was largest when the mCSCL studies lasted for >1 week and ≤4 weeks. The ESs for studies lasting for ≤1 week and >6 months were not significant. QB reached statistical significance, indicating that the ESs differed significantly among the various categories. Please refer to Figure 2, specifically the tools/instruments–rules/control–objectives triangle.

Implementation Setting

The mCSCL studies that were implemented in informal settings had the largest ES, followed by formal settings and multiple settings. QB did not reach significance at the p < .05 level. Please refer to Figure 2, specifically the tools/instruments–context–objectives triangle.

Reward Method

When the “not mentioned” category was ignored, the studies using individual reward methods had the largest ES. No significant ESs were found for the categories of group rewards for individual learning and group rewards for group outcomes. QB did not reach statistical significance. Please refer to Figure 2, specifically the tools/instruments–rules/control–objectives triangle. Given that most of the articles included in our study used individual rewards (Table 1), the present study also bundled the categories of group rewards for individual learning and group rewards for group outcomes into a single category, “group rewards.” The results showed that when the “not mentioned” category was ignored, individual rewards had a medium ES; the ES for group rewards was again not significant. QB did not reach statistical significance.

Discussion

The Effects of Moderator Variables

The effects of mCSCL might be moderated by different variables. Previous research has indicated that factors such as group composition, interactions between group members, implementation setting, and intervention duration may influence CSCL effects. In the present study, we organized these factors in the AT-mCSCL framework in Figure 1. The analysis of those moderating effects provided evidence converging with previous research that the effects of mCSCL may vary with different moderating variables; the corresponding effect sizes are visualized in Figure 2. A more detailed discussion of moderating effects follows.

Independent Variables/Control Group Types

Previous studies have confirmed the effects of CSCL (e.g., Lou, Abrami, & d’Apollonia, 2001; Resta & Laferrière, 2007), and our study provided converging evidence for such effects: Compared with control groups of individual learning and of group learning without computers, mCSCL, as a branch of CSCL, produced significantly beneficial effects. However, compared with the control group of regular CSCL using desktops instead of mobile devices, mCSCL did not produce significant effects (g = 0.201; see Table 4). This finding suggests that the most essential element of CSCL may not be the delivery device; instead, the design of CSCL, such as learning scenarios, mechanisms for encouraging interaction, and reward methods, may be more important for enhancing the effects of CSCL. To illuminate the effects of mCSCL, researchers and practitioners may need to pay more attention to how to exploit the functionalities of mobile devices by matching them with the core elements of CSCL.

Dependent Variables/Learning Outcomes

Our results indicate that mCSCL is beneficial for different learning outcomes. The results are similar to findings in the meta-analyses by Springer et al. (1999) and Kyndt et al. (2013), who also found that the effects of cooperative learning were beneficial for the categories of learning achievement and learning attitude. These findings may echo researchers’ claims that the positive interdependence, reward structure, and group dynamics in cooperative learning processes can facilitate students’ learning (e.g., D. W. Johnson & Johnson, 1998; Slavin, 2012). Furthermore, the current meta-analysis also provided supportive evidence for the positive influence of cooperative learning on students’ peer interaction, which was scarcely investigated in previous CL or CSCL meta-analyses. This finding also supported researchers’ claims that mobile devices’ functionalities of sharing and feedback may facilitate group interaction (e.g., Asabere, 2012; Hsu & Ching, 2013; Song, 2014). Some empirical research might illustrate those effects. For instance, Valdivia and Nussbaum (2007) found that face-to-face cooperative learning assisted by mobile devices (personal digital assistants) enabled learners to receive timely feedback and express their views, which enhanced interaction among peers. Zurita and Nussbaum (2007) also discovered that cooperative learning assisted by mobile devices and wireless Internet allowed learners to discuss their views with peers whenever they encountered problems and enabled teachers to quickly grasp each group’s learning progress and give timely feedback or guidance, thus improving the social interactive performance of learners.

Researchers have also investigated factors that may affect interaction in cooperative learning. For example, White (2006) found that giving everyone a connected mobile device enhanced the effects of interaction among group members. In addition, Klein and Schnackenberg (2000) discovered that the effects of interaction may be influenced by affiliation among the group members. High-affiliation groups exhibited more helping and on-task group behaviors conducive to the completion of learning tasks. However, they also exhibited more off-task behaviors that are not linked to learning and might be detrimental to the completion of learning tasks. It is therefore very important to monitor cooperative learning groups and keep track of how they learn.

Domain Subject

We found that mCSCL had smaller positive effects on social studies and language arts than on science and mathematics, which is similar to the findings of Kyndt et al. (2013), Lou et al. (1996), and Qin et al. (1995), who found that the effects of cooperative learning on mathematics and science were superior to the effects on language arts and social studies. These authors proposed that learning mathematics and science may need more peer-based interaction and inquiry; therefore, interaction and problem-solving procedures in a small-group setting may produce larger effects in those subjects (e.g., K. Lai & White, 2012; Uzunboylu et al., 2009). For instance, Uzunboylu et al. (2009) used mobile learning systems to help students learn about the natural environment. During the learning process, students used mobile devices to record their observations, then discussed their findings with their peers. The study found that the features of mobile devices, namely real-time feedback and freedom from the limitations of time and place, helped learners collect information in their science classes more efficiently and also helped them find appropriate methods to solve problems, which led to positive cognitive understanding and learning attitudes.

K. Lai and White (2012) used software on mobile devices to assist students in cooperative learning activities in geometry, where learners were asked to complete group tasks using cooperation and discussion. The research found that intervention with mobile devices enhanced learning performance by increasing the efficiency of cooperation among group members as they sought to understand the geometry lesson and the questions asked. In addition, the intervention provided learners with more opportunities to present their views and receive timely feedback.

On the other hand, C. P. Chen, Shih, and Ma (2014) used mobile devices to assist in CL activities in social studies. They found that the individual learning groups had better learning achievements than the mCSCL groups. A possible reason is that their learning goals for social studies focused on the learners’ knowledge and understanding of the materials, whereas science and mathematics emphasize peer-based interaction and inquiry (Lou et al., 1996; Qin et al., 1995). Kyndt et al. (2013) also indicated that learning tasks/concepts in mathematics are usually more structural/hierarchical than those in social sciences and languages. The benefits of peer tutoring in groups may be more pronounced for hierarchical/structural learning tasks/concepts in science and mathematics (Lou et al., 1996).

Group Size

Our findings differ somewhat from those of previous research (e.g., Schellens & Valcke, 2006; Strijbos, Martens, & Jochems, 2004), which proposed that in CSCL, smaller groups produced better interaction and performance than large groups; groups containing three or four members are the most appropriate. However, mCSCL may demonstrate different styles and features because mCSCL group interactions are not limited by distance, and the real-time display of the discussion process, products, and comments in mobile devices may also enhance the efficiency and effectiveness of group members. Furthermore, groups containing more members may increase the diversity of viewpoints and mutual feedback, which may also satisfy the needs of different group members (e.g., C. M. Chen, 2013; T. Y. Liu, Tan, & Chu, 2009).

Group Composition

Previous studies indicated that heterogeneous groups may have better effects in CL. For example, some research indicated that heterogeneous groups could provide greater degrees of critical thinking, providing and receiving explanations, and perspective taking in discussing materials; such grouping not only helps low-ability students get more learning support but also allows high-ability students to deepen their learning impressions (e.g., Clark, Stegmann, Weinberger, Menekse, & Erkens, 2008; Dalton, Hannafin, & Hooper, 1989; Swing & Peterson, 1982). Nevertheless, Hooper and Hannafin (1991) indicated that learners’ abilities may interact with the composition of groups: High-ability learners benefited more from homogeneous groups, whereas low-ability learners benefited more from heterogeneous groups. Furthermore, some researchers (Lou, Abrami, & Spence, 2000; Webb & Palincsar, 1996) proposed that group composition needs to take into account not only learners’ abilities but also instructional purposes. Therefore, although we found that the difference in effects between homogeneous and heterogeneous groups was not obvious in mCSCL, more elaborate experimental designs aimed at investigating group composition are warranted.

Teaching Method

We found that TGT and STAD groups were less productive than LT, Jigsaw, and GI. This finding was consistent with D. W. Johnson et al. (2000), who indicated that competitive factors in learning activities, one of the features of TGT and STAD, may reduce learning performance. The reason TGT and STAD groups did not exhibit a significant effect may be that both methods emphasized competition by executing tournaments or individual quizzes in the learning activities (Kyndt et al., 2013; Slavin, 1983, 1990).

We also found that in mCSCL, researchers employed more ill-structured scenarios (e.g., LT) than structured scenarios (e.g., Jigsaw). This may be due to LT only loosely encouraging whole-group discussion among team members, as well as its lack of a designated learning procedure making the design of learning activities easier (D. W. Johnson & Johnson, 1998; Kyndt et al., 2013; Slavin, 1983, 2012). Previous researchers (Lou et al., 2001; Slavin, 2012; Vogel, Wecker, Kollar, & Fischer, 2016) have proposed that structured CL scenarios may be more effective than ill-structured or loosely defined CL scenarios. For instance, Vogel et al. (2016) found that CSCL scripts may function as a kind of sociocognitive scaffolding and promote learning outcomes more obviously than ill-structured CSCL. However, we found that when applying mobile devices with CL activities, structured CL did not show any advantages. There are at least two possible reasons for this finding. The first is that mobile devices compensated for the deficiency of ill-structured scenarios through certain beneficial functionalities, such as real-time sharing and feedback. The other may be that although certain mCSCL studies claimed to have used structured teaching methods (e.g., Jigsaw, GI, STAD, TGT), in reality, they did not strictly follow the mandated steps of the structured teaching method in their cooperative learning activities, resulting in the lack of a clear group target and individual accountability, which then reduced the expected learning outcomes (e.g., Slavin, 1995, 2012).

For example, both Chen et al. (2014) and Yuretich and Kanner (2015) used GI as a teaching method for implementing cooperative learning activities, and they discovered that the mCSCL group did not significantly outperform the control groups in terms of learning achievements. Another possible reason could be that these studies did not formulate clear regulations for the division of labor and the procedure for executing the tasks among the team members, which might not only result in inefficient group processes, such as social loafing, but also reduce learning performance. Based on the second possible reason, more technology-based assistance may be needed to help teachers easily and efficiently build their scripts for task structure (e.g., Miller & Hadwin, 2015; Vogel et al., 2016; Weinberger, Stegmann, & Fischer, 2010) and to help bolster participants’ awareness of the cooperative process and products during their interaction with peers (e.g., Janssen, Erkens, Kirschner, & Kanselaar, 2010; Winne, Hadwin, & Gress, 2010).

Intervention Duration

Researchers of CSCL or CL have highlighted the importance of time for CL (e.g., Resta & Laferrière, 2007; Springer et al., 1999). Slavin (1977, 1993) proposed an even more concrete intervention duration for CL: CL needed to last longer than 1 week or even several weeks to achieve beneficial learning effects. Our study provided converging evidence supporting those claims, because mCSCL studies that lasted 1 week or less did not show significant effects. Furthermore, most of the studies with a teaching duration of 1 week or less were one-shot interventions (75.0% of these interventions lasted up to 4 hours, as indicated in Table 1). It was hardly possible for members of a small group to become familiar with team members, tasks, hardware, and software, let alone fulfill their functional roles in discussion, feedback, and problem solving, given such a short time frame.

Another interesting finding in our study was that the effects decreased when the intervention duration was longer than 4 weeks: Durations between 1 and 4 weeks demonstrated more prominent effects than those between 4 weeks and 6 months. Moreover, mCSCL studies with durations longer than 6 months did not show significant effects. Cheung and Slavin (2013) proposed that the novelty effect may produce better technology-based-learning results in the short term but not necessarily in the long run. This hypothesis may be one of the reasons why mCSCL programs lasting between 4 weeks and 6 months did not show better effects than programs of 1 to 4 weeks. The lower ESs for long-term interventions of more than 6 months may be due to a loss of the sense of novelty in the devices themselves and a loss of interest in the routine scenarios. Teachers might not be able to provide innovative teaching methods and might even reduce the amount of time allocated for students to spend with mobile devices if there is no appropriate logistical support for hardware maintenance, software supplementation, and curriculum design during a long-term program (Sandberg, Maris, & de Geus, 2011).

Monotonous teaching scenarios and learning software may result in the learners not maintaining their motivation to use mobile devices for learning, which then reduces the frequency of using mobile devices in the classrooms and ultimately makes mobile use in teaching superficial (Fleischer, 2012). For instance, Persky and Pollack (2010) divided learners into two groups: The experimental group engaged in more than 6 months of mCSCL, with learners studying through group discussion, whereas the control group was given traditional large-class lectures. They found that, compared with traditional large-class lectures, long-term mCSCL may enhance learner efficiency in acquiring information because mobile devices enable rapid access to information, but the experimental group did not significantly outperform the control group in terms of learning achievements. Delgado-Almonte et al. (2010) also found that long-term mCSCL groups did not perform significantly better on achievement tests. A possible reason is that both studies employed long-term interventions, but their teaching methods only encouraged group discussions and lacked clear teaching goals and appropriate logistical support in learning activities, which may have reduced the interest and participation of learners (Fleischer, 2012; Sandberg et al., 2011; Slavin, 2012).

Implementation Setting

Springer et al. (1999) found that CL performance was better in informal settings than in formal settings. This result could be due to learners having higher motivation for learning in informal settings such as outdoors or museums; in addition, the use of new learning tools induces a novelty effect that boosts the motivation of learners (e.g., T. Y. Liu et al., 2009; Melero et al., 2015). For example, in T. Y. Liu et al. (2009), students assisted by mobile devices were more engaged in natural science outdoor cooperative learning activities than students without mobile-device assistance. T. Y. Liu et al. (2009) suggested that this might be because an outdoor learning environment gave learners direct access to what they were learning about, and this immersive learning enhanced interest levels and learning achievements. Melero et al. (2015) used mobile devices and a learning-through-playing method in an outdoor setting where learners worked in groups to carry out inquiry-oriented activities. They found that an outdoor learning setting created a sense of novelty for learners and helped raise levels of interest and participation; consequently, learning achievements also improved significantly.

Reward Method

Our study found that, in mCSCL, using individual rewards might produce better effects than using group rewards. The research findings of Slavin (1983, 1995, 2011) similarly indicated that in a CL environment, group rewards based on the group outcome are the least effective. Slavin (1983, 1995, 2011) noted that group rewards based on the group outcome may often result in little incentive for group members to explain concepts to one another and in the workload being distributed unequally among group members (e.g., Kim et al., 2014; C. C. Liu et al., 2009). For instance, Kim et al. (2014) used group rewards based on the group outcome to implement the mCSCL learning activities. They found that, compared with non-mCSCL groups, the mCSCL group did not show a better learning outcome. A possible explanation could be that mCSCL group members with a higher level of performance might carry most of the workload, while group members with a lower level of learning performance could contribute little, exploit the skills of the high performers, and still get the same reward, which might result in overall lower quality group interactions and products.

Conclusions and Implications

The present meta-analysis and critical synthesis on mCSCL provide substantial evidence for the overall effect of using mobile devices in CSCL and how those effects vary with moderator variables. Using mobile devices for CL produced an overall positive effect, with a moderate mean ES of 0.516, which means that the average student in the experimental groups learning with mobile-device-based collaborative/cooperative scenarios scored higher than around 69.71% of their counterparts who learned individually with mobile devices or who learned in groups without the aid of mobile devices. Furthermore, based on the AT-mCSCL framework, this study also revealed the close relationships between moderator variables and mCSCL effects (Figure 2), which can be summarized as follows:

mCSCL produced clearly positive effects when compared to control groups of individual learning or group learning without computers, but there was no significant difference between mCSCL and regular CSCL.

mCSCL was beneficial to students’ learning achievement, learning attitude, and peer interaction.

Students in different learning stages had similarly beneficial effects from mCSCL.

mCSCL produced more positive effects on mathematics and science outcomes than on language arts and social studies outcomes.

Larger groups, such as those with four or more members, produced better effects than smaller groups, such as those with two or three members, with mCSCL.

Homogeneous group composition and heterogeneous group composition demonstrated similar effects with mCSCL.

Teaching methods with competition, such as TGT and STAD, demonstrated inferior effects to teaching methods with no competition, such as Jigsaw and GI. Structured collaborative scenarios had similar effects to ill-structured collaborative scenarios.

Mid-length intervention durations, such as interventions between 1 and 4 weeks or interventions between 1 and 6 months, demonstrated effects superior to short intervention durations lasting less than 1 week or long interventions lasting more than 6 months.

mCSCL in informal settings had similar effects to formal settings.

mCSCL implemented with individual rewards demonstrated effects superior to mCSCL with group reward methods.

The findings above may provide insight into the optimal arrangement of mCSCL activities regarding the precondition (e.g., subjects and settings), process (e.g., intervention duration, reward method, and teaching method), and product (e.g., learning achievement, learning attitude, and peer interaction) variables, which are the central concerns of CSCL researchers and practitioners. Based on these findings, several implications for further research and practice of mCSCL are discussed.
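The percentile interpretation of the overall ES reported at the start of this section can be checked with a short calculation. The sketch below assumes that the ES is a standardized mean difference and that scores in both groups are normally distributed with equal variance, so that the share of the comparison distribution lying below the experimental-group mean is the standard normal cumulative probability at the ES. The function names are illustrative and are not taken from the original analysis.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def percent_below_experimental_mean(effect_size: float) -> float:
    """Percentage of the comparison distribution that lies below the
    experimental-group mean, assuming normality and equal variances."""
    return 100.0 * normal_cdf(effect_size)


# Overall mean ES reported in the text.
overall_es = 0.516
print(f"{percent_below_experimental_mean(overall_es):.2f}%")
```

Under these assumptions, an ES of 0.516 corresponds to roughly 69.7%, which matches the figure quoted in the text; an ES of 0 would correspond to 50%.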

Enhancing the Effects of CSCL Through Appropriate Use of the Functionalities of Mobile Devices

Compared with traditional personal computers, mobile devices provide a more personalized learning interface, instant information access, context awareness, and instant messaging. Our review study found that using these features may help increase the effects of CSCL. For example, personalized interfaces and instant messaging can facilitate the sharing of information, either face-to-face or online, thus increasing interaction among the members of small groups; instant information access through context-awareness techniques can facilitate the gathering and analysis of information and, consequently, help learners engage in inquiry and interaction with the environment. Furthermore, the portability and interconnectivity of learning devices help integrate formal and informal settings, which makes CL activities in different situations more tightly connected, helping achieve the goal of seamless learning. Because mobile devices are among the platforms and tools with the most potential for CSCL, their functionalities may play an important role in ameliorating the problems and challenges associated with CSCL implementations, such as insufficient information sharing, lack of instant interaction among members, and difficulty integrating learning activities outside classrooms (Asabere, 2012; Laurillard, 2009; Song, 2014).

Leveraging the Effects of mCSCL Through Effective Group Learning Mechanisms

In addition to the functionalities of mobile devices, another key factor that will influence the effectiveness of mCSCL is the mechanism for effective CL, such as intervention duration, group size, teaching method, and reward method. Efforts should be made to identify the critical leverage points that may optimize the effects of integrating mobile devices with group learning. For example, mCSCL interventions lasting less than 1 week may not produce significant effects because the time is insufficient for learners to become familiar with the learning tasks, scenarios, partners, and hardware/software. Interventions lasting more than 6 months need to pay more attention to three issues: how to provide sufficient logistics, such as variability in learning scenarios and software for enhancing teachers’ ability to arrange group learning scripts; how to monitor and encourage students’ interaction and proper workflow; and how to support teachers’ professional development for their leadership roles so that CSCL yields more productive outcomes (Resta & Laferrière, 2007).

Regarding the reward method, our research, as well as that of Slavin (1983, 1995, 2011), indicates that when rewards are distributed individually, the overall effect is better than when group rewards are given for group outcomes. This result not only provides insight for designing reward guidance for mCSCL but also shows the importance of finding a balance between individual performance-based fairness and group-based accountability in mCSCL. Furthermore, previous researchers have indicated the benefits of a smaller group size for CSCL (e.g., Lou et al., 1996; Noroozi et al., 2012). However, our study revealed that group size deserves additional scrutiny when mCSCL is used, because mCSCL may empower group members to have equal access and opportunity to participate with fewer interaction restrictions caused by group size, time, and location. Therefore, a sufficient number of group members may bring in more diverse input and insights and more opportunities for interaction. Nevertheless, more studies are needed to establish the optimal group size for different types of mCSCL.

Equally Focusing on the Process and Products of mCSCL Research and Practice

In CSCL, quality group learning processes, such as coaching, sharing, and negotiation, are necessary conditions for quality products, especially for higher level products like problem solving and creation. Even though product-level variables are essential, the fundamental contribution of group-level variables to a necessarily social intervention should be obvious. Yet, despite the importance of group learning processes, CSCL studies have focused on product variables (Gress et al., 2010). The same phenomenon appeared in our present review research: Only 7 (14.6%) of the 48 articles reviewed were concerned with interaction issues during mCSCL activities. As for the way in which group interaction was investigated, most studies merely counted the frequencies of certain interactive behaviors, and almost none were concerned with the sequence or dynamic patterns of interactions between group members. Important issues, such as the way in which the functionalities of mobile devices (e.g., instant sharing and feedback) influence group interaction or the role mobile devices play in the peer interactions of different-sized groups, have not yet been clarified. Furthermore, even where mCSCL researchers focused on product variables, most of the learning activities were limited to knowledge and skill acquisition, instead of higher level cognitive abilities, such as complex problem solving and knowledge creation, which may be among the most desirable products for illustrating the power of CSCL.

At least two methods may help researchers and practitioners to facilitate the mCSCL administration process and product creation. First, software for promoting learners’ reflection on their role functions in a team (e.g., Järvelä et al., 2015; Winne et al., 2010) or software for reminding students of their progress and the quality of their created products during collaboration (e.g., Janssen et al., 2010; Nussbaum & Edwards, 2011; Stegmann, Wecker, Weinberger, & Fischer, 2012) can be incorporated into mCSCL environments to enhance the effects of group work and reduce the load of data collection.

Second, research designs appropriate for gathering diverse process and product data in mCSCL, such as mixed research methods (e.g., Schrire, 2006), may be considered for their multiple data sources. Furthermore, data analysis methods that may capture the complete picture and dynamics of group interaction, such as quantitative content analysis (e.g., Riffe, Lacy, & Fico, 2005), lag sequential analysis (e.g., Hou & Wang, 2015; Yang, Chen, & Hwang, 2015), and social network analysis (e.g., Scott, 2000), may be adopted in mCSCL studies.
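To illustrate how a sequential method differs from simple frequency counting, the following is a minimal sketch of a lag-1 sequential analysis. It assumes that group interaction has already been coded into a single ordered stream of behavior codes. The codes and the sequence shown are hypothetical and are not data from any reviewed study, and the adjusted-residual formula is one common formulation rather than necessarily the procedure used in the studies cited above.

```python
from collections import Counter
from math import sqrt


def lag1_adjusted_residuals(sequence):
    """Return {(given, target): z} for every lag-1 transition.

    z is the adjusted residual: (observed - expected) divided by
    sqrt(expected * (1 - row_share) * (1 - column_share)).
    """
    pairs = list(zip(sequence, sequence[1:]))      # consecutive code pairs
    n = len(pairs)
    observed = Counter(pairs)
    row = Counter(given for given, _ in pairs)     # totals per "given" code
    col = Counter(target for _, target in pairs)   # totals per "target" code

    residuals = {}
    for given in row:
        for target in col:
            expected = row[given] * col[target] / n
            spread = sqrt(expected * (1 - row[given] / n) * (1 - col[target] / n))
            diff = observed.get((given, target), 0) - expected
            residuals[(given, target)] = diff / spread if spread > 0 else float("nan")
    return residuals


# Hypothetical coded stream: Q = question, A = answer, S = share resource.
coded = ["Q", "A", "Q", "A", "S", "Q", "A", "S", "S", "Q", "A"]
z = lag1_adjusted_residuals(coded)
for (given, target), value in sorted(z.items()):
    print(f"{given} -> {target}: z = {value:+.2f}")
```

A transition whose adjusted residual exceeds about 1.96 occurs more often than expected by chance at the .05 level; in this toy sequence, questions are followed by answers far more often than chance would predict, which is a pattern that frequency counts alone would not reveal. A sequence this short serves only to show the mechanics and would be far too small for real inference.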

Research Limitations

Despite our findings and implications, this study is subject to several limitations. First, owing to the limited number of empirical mCSCL studies, the quality of experimental research was not used as a criterion for the inclusion or exclusion of research samples. As the number of mCSCL studies grows, some approaches to examining the quality of experimental studies, such as the Best Evidence Synthesis (e.g., Cheung & Slavin, 2013) and the Study Design and Implementations Assessment Device (Valentine & Cooper, 2008), may be adopted as screening standards for selecting higher quality empirical mCSCL studies for meta-analysis. A larger pool of mCSCL studies may also increase the power of meta-analysis, especially for certain categories of moderators with very limited numbers of studies (e.g., “STAD” of teaching methods and “dyad” of group size). Second, the study samples included in this study covered earlier studies (e.g., two studies published in 2004), and as such, the types of mobile technologies used may vary significantly. Future research may consider using technology type as a moderator when comparing the effects of mCSCL.

Authors