Significance

We present a law of human perception. The law expresses a mathematical relation between our ability to perceptually discriminate a stimulus from similar ones and our bias in the perceived stimulus value. We derived the relation based on theoretical assumptions about how the brain represents sensory information and how it interprets this information to create a percept. Our main assumption is that both encoding and decoding are optimized for the specific statistical structure of the sensory environment. We found substantial experimental support for the law in the literature, including biases and changes in discriminability induced by contextual modulation (e.g., adaptation). Our results imply that human perception generally relies on statistically optimized processes.

Keywords: perceptual behavior, efficient coding, Bayesian observer, Weber–Fechner, stimulus statistics

Abstract

Perception of a stimulus can be characterized by two fundamental psychophysical measures: how well the stimulus can be discriminated from similar ones (discrimination threshold) and how strongly the perceived stimulus value deviates on average from the true stimulus value (perceptual bias). We demonstrate that perceptual bias and discriminability, as functions of the stimulus value, follow a surprisingly simple mathematical relation. The relation, which is derived from a theory combining optimal encoding and decoding, is well supported by a wide range of reported psychophysical data including perceptual changes induced by contextual modulation. The large empirical support indicates that the proposed relation may represent a psychophysical law in human perception. Our results imply that the computational processes of sensory encoding and perceptual decoding are matched and optimized based on identical assumptions about the statistical structure of the sensory environment.

Perception is a subjective experience that is shaped by the expectations and beliefs of an observer (1). Psychophysical measures provide an objective yet indirect characterization of this experience by describing the dependency between the physical properties of a stimulus and the corresponding perceptually guided behavior (2).

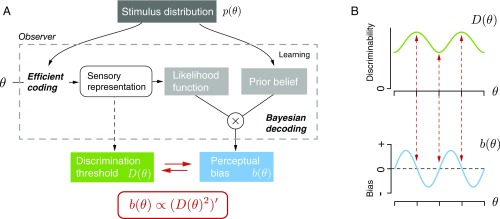

Two fundamental measures characterize an observer’s perception of a stimulus. Discrimination threshold indicates the sensitivity of an observer to small changes in a stimulus variable (Fig. 1A). The threshold depends on the quality with which the stimulus variable is represented in the brain (2) (i.e., encoded; Fig. 1B); a more accurate representation results in a lower discrimination threshold. In contrast, perceptual bias is a measure that reflects the degree to which an observer’s perception deviates on average from the true stimulus value. Perceptual bias is typically assumed to result from prior beliefs and reward expectations with which the observer interprets the sensory evidence (1), and thus is determined by factors that seem not directly related to the sensory representation of the stimulus. As a result, it has long been believed that there is no reason to expect any lawful relation between perceptual bias and discrimination threshold (3).

Fig. 1.

Psychophysical characterization and modeling of perception. (A) Perception of a stimulus variable (e.g., the angular tilt of the leaning tower of Pisa) can be characterized by an observer’s discrimination threshold and perceptual bias. Discrimination threshold specifies how well an observer can discriminate small deviations around the particular stimulus orientation given that there is noise in perceptual processing (green arrow). Perceptual bias specifies how much, on average, the perceived orientations over repeated presentations (thin lines) deviate from the true stimulus orientation (blue arrow). (B) Modeling perception as an encoding–decoding processing cascade. Discriminability is limited by the characteristics of the encoding process, i.e., the quality of the internal sensory representation $m$. Perceptual bias, however, also depends on the decoding process that typically involves cognitive factors such as prior beliefs and reward expectations. Both discrimination threshold and perceptual bias can be characterized with appropriate psychophysical methods (indicated by dashed arrows).

Here, however, we derive a direct mathematical relation between discrimination threshold and perceptual bias based on a recently proposed observer theory of perception (4, 7). The key idea is that both the encoding and the decoding process of the observer are optimally adapted to the statistical structure of the perceptual task (Fig. 2A). Specifically, we assume encoding to be efficient (8, 9) such that it maximizes the information in the sensory representation about the stimulus given a limit on the overall available coding resources (10). The assumption implies a sensory representation whose coding resources are allocated according to the stimulus distribution $p(\theta)$. This results in the encoding constraint

$$p(\theta) \propto \sqrt{J(\theta)} \tag{1}$$

where the Fisher information $J(\theta)$ represents the coding accuracy of the sensory representation (4, 11–13). Fisher information provides a lower bound on the discrimination threshold $D(\theta)$ irrespective of whether the estimator is biased or not, which can be formulated as $D(\theta) \geq c/\sqrt{J(\theta)}$, where $c$ is a constant (5, 6). Rather than assuming a tight bound, we make the weaker assumption that the bound is equally “loose” over the range of the stimulus value $\theta$. Using the encoding constraint (Eq. 1) above, we can express discrimination threshold in terms of the stimulus distribution as

$$D(\theta) \propto \frac{1}{p(\theta)} \tag{2}$$

Similarly, we have shown that the perceptual bias $b(\theta)$ of the Bayesian observer model (Fig. 2A) can also be expressed in terms of the stimulus distribution (4, 7). Assuming that uncertainty in the perceptual process is dominated by internal (neural) noise and that the noise is relatively small, we can analytically derive the observer’s bias as

$$b(\theta) \propto \left(\frac{1}{p(\theta)^2}\right)' \tag{3}$$

We can show that the expression holds independently of the details of the assumed loss function for a large family of symmetric loss functions (Supporting Information). Magnitude and sign of its proportionality coefficient, however, depend on several factors, including the noise magnitude and the loss function. Finally, because Eq. 2 implies $D(\theta)^2 \propto 1/p(\theta)^2$, combining Eqs. 2 and 3 yields a direct functional relation between perceptual bias and discrimination threshold in the form of

$$b(\theta) \propto \left(D(\theta)^2\right)' \tag{4}$$

i.e., perceptual bias (as a function of the stimulus variable) is proportional to the slope of the square of the discrimination threshold (Fig. 2B).
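The chain from Eq. 2 to Eq. 4 can be checked numerically. The following is a minimal sketch, not the analysis used in the paper: the bell-shaped prior, the grid, and the choice of setting all proportionality constants to 1 are assumptions made purely for illustration, and `threshold_and_bias` is a hypothetical helper name.

```python
import numpy as np

def threshold_and_bias(prior, theta):
    """Evaluate Eqs. 2 and 3 on a grid, with both proportionality constants set to 1."""
    threshold = 1.0 / prior                      # Eq. 2: D is proportional to 1/p
    bias = np.gradient(1.0 / prior**2, theta)    # Eq. 3: b is proportional to (1/p^2)'
    return threshold, bias

# Hypothetical bell-shaped prior on a linear stimulus axis (illustration only).
theta = np.linspace(-2.0, 2.0, 2001)
prior = np.exp(-0.5 * theta**2)
prior /= prior.sum() * (theta[1] - theta[0])     # normalize with a Riemann sum

threshold, bias = threshold_and_bias(prior, theta)

# Eq. 4: the same bias obtained from the slope of the squared threshold.
bias_from_threshold = np.gradient(threshold**2, theta)

# Index of the threshold minimum, where Eq. 4 predicts zero bias.
i_min = int(np.argmin(threshold))
```

In this sketch the bias vanishes where the threshold has its minimum and changes sign on either side of it, which is the qualitative pattern described for Fig. 2B. The actual sign depends on the proportionality coefficient, which is not fixed here.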

Fig. 2.

Observer model that links perceptual bias and discrimination threshold. (A) Our theory of perception proposes that encoding and decoding are both optimized for a given stimulus distribution $p(\theta)$ (4). Based on this theory, the encoding accuracy characterized by Fisher information $J(\theta)$ and the bias $b(\theta)$ of the Bayesian decoder are both dependent on the stimulus distribution $p(\theta)$. With Fisher information providing a lower bound on discriminability (5, 6), we can mathematically formulate the relation between perceptual bias and discrimination threshold as $b(\theta) \propto (D(\theta)^2)'$. (B) Arbitrarily chosen example highlighting the characteristics of the relation: Bias is zero at the extrema of the discrimination threshold (red arrows) and largest for stimulus values where the threshold changes most rapidly. Thus, the magnitude of perceptual bias typically does not covary with the magnitude of the discrimination threshold.

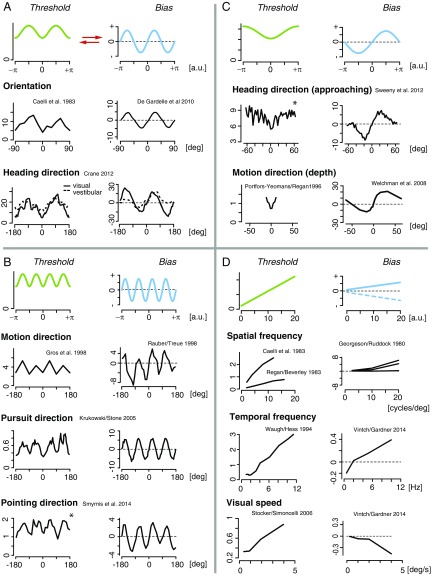

We tested the surprisingly simple relation against a wide range of existing psychophysical data. Figs. 3 and 4 show data for those perceptual variables for which both discrimination threshold and perceptual bias have been reported over a sufficiently large stimulus range. We grouped the examples according to their characteristic bias–threshold patterns. The first group consists of a set of circular variables (Fig. 3 A–C). It includes local visual orientation, probably the most well-studied perceptual variable. Orientation perception exhibits the so-called oblique effect (38), which describes the observation that the discrimination threshold peaks at the oblique orientations yet is lowest for cardinal orientations (14). Based on the oblique effect, Eq. 4 predicts that perceptual bias is zero at, and only at, both cardinal and oblique orientations. Measured bias functions confirm this prediction (15). Other circular variables that exhibit similar patterns are heading direction using either visual or vestibular information (16), 2D motion direction measured with a two alternative forced-choice (2AFC) procedure (17, 18) or by smooth pursuit eye movements (19), pointing direction (20), and motion direction in depth (21, 22). The relation also holds for the more high-level perceptual variable of perceived heading direction of approaching biological motion (human pedestrian) (23) as shown in Fig. 3C.
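The prediction for the oblique effect can be illustrated with a toy calculation. The sketch below assumes a hypothetical squared-threshold curve that is lowest at the cardinal orientations and highest at the obliques; the functional form, the grid spacing, and the unit proportionality constant are illustrative assumptions and are not fitted to the data in refs. 14 and 15.

```python
import numpy as np

def circular_derivative(values, step):
    """Central difference that wraps around, suitable for a circular variable."""
    return (np.roll(values, -1) - np.roll(values, 1)) / (2.0 * step)

step_deg = 0.5
theta_deg = np.arange(0.0, 180.0, step_deg)      # orientation has a period of 180 deg

# Hypothetical squared threshold: minima at 0 and 90 deg, maxima at 45 and 135 deg.
squared_threshold = 1.0 - 0.5 * np.cos(np.deg2rad(4.0 * theta_deg))

# Relation between bias and threshold with the proportionality constant set to 1.
bias = circular_derivative(squared_threshold, step_deg)

def bias_at(deg):
    """Look up the predicted bias at an orientation that lies on the grid."""
    return bias[int(round(deg / step_deg))]
```

Under these assumptions the predicted bias crosses zero at 0°, 45°, 90°, and 135° and takes opposite signs on the two sides of each oblique orientation.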

Fig. 3.

Predicted and measured bias–threshold patterns in perception. Data are organized into different groups. Green/blue curves represent the predicted discrimination threshold/bias. (A) Discrimination threshold (14) and bias (15) data for perceived orientation and heading direction (16) (solid lines for visual stimulation, and dashed lines for vestibular stimulation). (B) We found similar patterns for perceived motion direction [measured both with a 2AFC procedure (17, 18) or with smooth pursuit behavior (19)] and pointing direction [where subjects had to estimate the direction of a visually presented arrow (20)]. (C) The predicted relation also holds for perceived motion direction in depth (21, 22), as well as for the perception of higher-level stimuli such as the approaching heading direction of a person (23), although the quality of the available data is limited. (D) The bias–threshold relation is different for magnitude (i.e., noncircular) variables: Discrimination threshold is typically proportional to the stimulus value [Weber’s law (24)], and thus we predict that perceptual bias is also linear in stimulus value. Reported patterns for visually perceived spatial frequency (14, 25, 34), temporal frequency (26, 27), and grating speed (27, 28) match this prediction. All data curves are replotted from their corresponding publications, except bias data for orientation (15) and threshold data for visual speed (28), which both were derived by analyzing the original data. Stars (*) mark data that represent perceptual variability rather than discrimination thresholds (see also Figs. S2 and S3 for more details).

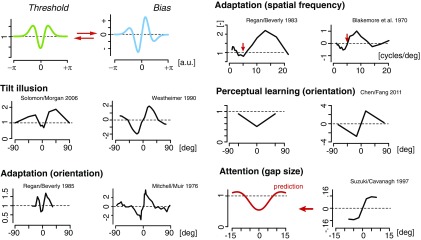

Fig. 4.

Predicted and measured bias–threshold patterns induced by various forms of contextual modulation. Spatial context as in the tilt illusion (29, 30) [or using motion stimuli (31)] and temporal context during adaptation experiments [orientation (32, 33), relative to adaptor orientation; spatial frequency (34, 35), red arrows indicating the value of the adaptor stimulus] induce similar biases and changes in thresholds that are qualitatively well predicted (relative to the unadapted condition). The predicted relation seems to also hold for perceptual changes induced by perceptual learning (36) and spatial attention. Spatial attention has been tied to repulsive biases (37) at the locus of attention and is also known for improvements in discriminability, although little is known about how discriminability changes with stimulus value. All data curves are replotted from the corresponding publications. All data represent biases and changes in threshold (ratio) relative to the corresponding control conditions (no surround; preadapt; prelearn; nonattended).

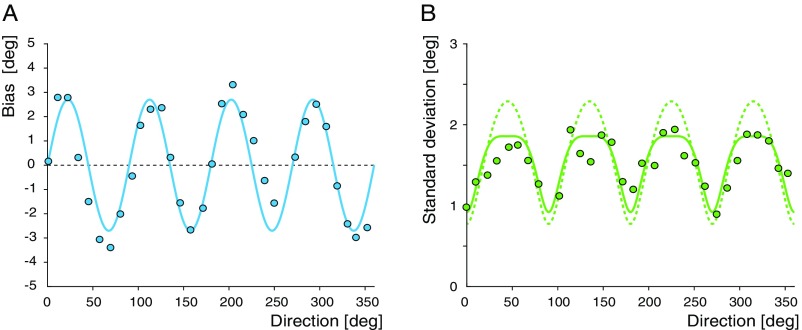

Fig. S3.

Variance prediction for pointing direction dataset (20). (A) Bias data and sinewave approximation (bold line). (B) Pointing variability (data) superimposed with the prediction (based on the sinewave approximation) according to Eq. S18 (bold line) and the prediction for discrimination threshold according to Eq. S15 (dashed line). Both predictions are made using the same values for their common free parameters . See also Fig. S2.

The second group contains noncircular magnitude variables for which discrimination threshold (approximately) follows Weber’s law (24) and linearly increases with magnitude (Fig. 3D). We predict that these variables should exhibit a perceptual bias that is also linear in stimulus magnitude. Indeed, we found this to be true for spatial frequency [threshold (14, 34, 39), bias (25)] as well as temporal frequency [threshold (40), bias (27)] in vision. Visual speed is another example for which discrimination threshold approximately follows Weber’s law (28) and bias is also approximately linear with stimulus speed (27), although, in contrast to the other examples, with a negative proportionality coefficient. A possible explanation for the sign difference may be that speed perception is governed by a loss function that differs from the loss functions for the other variables. In perceiving the speed of a moving object, an observer’s emphasis might be on estimating the speed “just right” to maximize the chance to, e.g., successfully intercept the object. A loss function that approximates the “all-or-nothing” characteristics of the $L_0$ norm would serve that goal, and would also predict the negative sign of the proportionality coefficient (see Supporting Information). Finally, perceived weight, one of the classical examples for illustrating Weber’s law (24), also seems to be consistent with the proposed bias–threshold pattern (41).

The last group contains bias–threshold patterns that are not intrinsic to individual specific variables but are induced by contextual modulation (Fig. 4). Spatial context as in the tilt illusion can induce characteristic repulsive biases in the perceived stimulus orientation away from the orientation of the spatial surround (30). The corresponding change in discrimination threshold (29) well matches the predicted pattern based on our theoretically derived relation. A similar bias–threshold pattern has been reported when probing motion direction instead of orientation (31). Furthermore, similar patterns have been observed for temporal context, i.e., as the result of adaptation. Adaptation-induced biases and changes in discrimination threshold for perceived visual orientation (32, 33) and spatial frequency (34, 35) are qualitatively in agreement with the prediction. At a slightly longer time scale, perceptual learning is also known to reduce discrimination thresholds. We therefore predict that perceptual learning also induces repulsive biases away from the learned stimulus value. This prediction is indeed confirmed by data for learning orientation (36) and motion direction (42), albeit the existing data are sparse. Finally, attention has been known as a mechanism that can decrease discrimination threshold (43). We predict that this decrease should coincide with a repulsive bias in the perceived stimulus variable. Although limited in extent, data from a Vernier gap size estimation experiment are in agreement with this prediction (37) and are further supported by recent results (44). In sum, the derived relation can readily explain a wide array of empirical observations across different perceptual variables, sensory modalities, and contextual modulations.

Based on the strong empirical support, we argue that we have identified a universal law of human perception. It provides a unified and parsimonious characterization of the relation between discrimination threshold and perceptual bias, which are the two main psychophysical measures characterizing the perception of a stimulus variable. Only a very few quantitative laws are known in perceptual science, including Weber–Fechner’s law (2, 24) and Stevens’ law (45). These laws express simple empirical regularities which provide a compact yet generally valid description of the data. The law we have proposed here shares the same virtue. However, unlike these previous laws, our law is not the result of empirical observations but rather was derived based on theoretical considerations of optimal encoding and decoding (4). Thus, as such, it does not merely describe perceptual behavior but rather reflects our understanding of why perception exhibits such characteristics in the first place. Note that, without the theory, we would not have discovered the general empirical regularity between discrimination threshold and bias. It is conceivable that some of the theoretical assumptions we made in deriving the law may prove incorrect (see Fig. S1) despite the fact that the law itself is empirically well supported. It is difficult to imagine, however, how a lawful relation between perceptual bias and discrimination threshold could emerge without a functional constraint that tightly links the encoding and decoding processes of perception (Fig. 2A).

Fig. S1.

Bayesian observer model simulations vs. analytic approximations (Eqs. 2 and 3). Discrimination threshold and perceptual bias are shown for a given prior distribution and four increasing levels of sensory (internal) noise. We assumed additive noise following a von Mises distribution whose concentration parameter $\kappa$ determined the noise magnitude (high $\kappa$ corresponds to low magnitude). Black lines represent the predicted curves according to Eqs. 2 and 3, and the colored lines represent the predicted curves according to numerical simulations of the full Bayesian observer model. Note that, while threshold and bias curves of the observer model show noticeable differences from the curves predicted by the analytic approximations, the lawful relation between the two still persists to an almost perfect extent. This is illustrated by how well the discrimination thresholds predicted by the law based on the numerically computed bias of the observer model (dashed purple lines) match the actual discrimination thresholds of the observer model (green lines).

The law allows us to predict either perceptual bias based on measured data for discrimination threshold or vice versa. One general prediction is that stimulus variables that follow Weber’s law should exhibit perceptual biases that are linearly proportional to the stimulus value as demonstrated with examples in Fig. 3D. Furthermore, because perceptual illusions are often examples of a strong form of perceptual bias induced by changes in context, we predict that these illusions should be accompanied with substantial threshold changes according to our law.
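The Weber's law prediction follows in one line from the relation between bias and squared threshold. As a worked sketch, assume a threshold that is exactly proportional to the stimulus value, with a hypothetical Weber fraction $w$ (a symbol introduced here only for illustration):

```latex
D(\theta) = w\,\theta
\quad\Longrightarrow\quad
b(\theta) \;\propto\; \left(D(\theta)^2\right)' = \left(w^2\theta^2\right)' = 2\,w^2\,\theta
```

The bias is therefore linear in $\theta$, with a slope whose sign and magnitude are set by the undetermined proportionality coefficient. Under the same assumption, the relation between threshold and stimulus distribution corresponds to $p(\theta) \propto 1/\theta$.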

Perceptual biases can arise for different reasons, not all of which are aligned with the assumptions we made in our derivations. In particular, because we assumed that the uncertainty in the inference process is predominantly due to internal (neural) noise of the observer, we do not expect the proposed law to hold under conditions where stimulus ambiguity/noise is the dominant source of uncertainty. In this case, we expect discrimination threshold to be mainly determined by the stimulus uncertainty and not the prior expectations as we have assumed (Eq. 2).

It is worth noting that the law can also be expressed in terms of perceptual variance rather than discrimination threshold. This can be useful because some psychophysical experiments designed for measuring perceptual bias (e.g., by a method of adjustment) often record variance in subjects’ estimates as well. Using the Cramer–Rao bound on the variance $\sigma^2(\theta)$ of a biased estimator with bias derivative $b'(\theta)$, we can rewrite Eq. 4 as

$$b(\theta) \propto \left(\frac{\sigma^2(\theta)}{\left(1 + b'(\theta)\right)^2}\right)' \tag{5}$$

For relatively small and smoothly changing biases, the predictions for variance and discrimination threshold are similar (see Supporting Information for details).

Last but not least, perhaps the most surprising finding is that the law seems to hold for bias and discriminability patterns induced by contextual modulation (Fig. 4). This implies not only that changes in encoding and decoding can happen immediately (e.g., spatial context), or at least on short time scales, but also that these changes are matched between encoding and decoding by relying on identical assumptions (i.e., prior expectations) about the structure of the sensory environment. This fundamentally contrasts with existing theories that assume mismatches between encoding and decoding (i.e., the “coding catastrophe”) to be responsible for many of the known contextually modulated bias effects (46). It also contrasts with findings that put the locus of perceptual learning either at the encoding (47) or at the decoding (48) level; we predict learning to occur at both levels. Whether these contextual priors actually match the stimulus distributions within these contexts or not is unclear and remains a subject for future studies. Data from spatial attention experiments (Fig. 4) at least suggest that they may reflect subjective rather than objective expectations. This would imply that the distinction is not relevant in the context of the observer model considered here (Fig. 2A) and that efficient encoding and Bayesian decoding are both optimized for identical prior expectations, irrespective of whether these expectations are subjective or objective. We believe that the proposed law and its underlying theoretical assumptions have profound implications for the computations and neural mechanisms governing perception, which we have just started to explore.

SI Text

This document consists of three parts: The first part provides derivations of all of the components (i.e., essentially Eqs. 1–3) used in expressing the relation between perceptual bias and the discrimination threshold. Note that most of these derivations have been described before, and we only include them here to make the paper self-contained. In the second part, we derive the relation between perceptual bias and variance (rather than discrimination threshold). Finally, in the last part, we prove that the proposed relation between discrimination threshold (or variance) and perceptual bias is not limited to the $L_2$ loss case but holds for a large class of symmetric loss functions.

Part 1: Relation Between Perceptual Bias and Discrimination Threshold.

Relation between Fisher information and stimulus distribution (prior).

A key assumption of the observer model used here (4) is that encoding is efficient in the sense that it seeks to maximize the mutual information $I[\theta, m]$ between a scalar stimulus variable $\theta$ and its sensory representation $m$ (9, 10). Fisher information $J(\theta)$, defined as

$$J(\theta) = \int \left(\frac{\partial \ln p(m \mid \theta)}{\partial \theta}\right)^2 p(m \mid \theta)\, dm \tag{S1}$$

can approximate mutual information in the regime of small Gaussian noise (11, 13) through

$$I[\theta, m] = \frac{1}{2}\ln\!\left(\frac{S^2}{2\pi e}\right) - KL\!\left(p(\theta)\,\Big\|\,\frac{\sqrt{J(\theta)}}{S}\right) \tag{S2}$$

where $S = \int \sqrt{J(\theta)}\, d\theta$ and $KL(\cdot\,\|\,\cdot)$ represents the (always nonnegative) Kullback–Leibler divergence (49).

We have previously shown (4, 13) that, by imposing a constraint to bound the total coding resource of the form

$$\int \sqrt{J(\theta)}\, d\theta \leq C \tag{S3}$$

where $C$ is a constant, maximizing mutual information requires the $KL$ term in Eq. S2 to be zero. This is the case if

$$p(\theta) \propto \sqrt{J(\theta)} \tag{S4}$$

i.e., if the limited coding resources as measured by Fisher information are matched to the stimulus prior distribution $p(\theta)$. Several theoretical studies (4, 11, 12, 50) have previously derived and identified the constraint Eq. S4, which we refer to as the “efficient coding constraint.”

Relation between Fisher information and discrimination threshold.

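Fisher information provides a lower bound on the discrimination threshold (5), including for biased estimators (6). Below, we provide a shortened version of the derivation for the biased estimator according to ref. 6.

Consider two stimulus values $\theta_1$ and $\theta_2$. With the assumption $\theta_2 > \theta_1$, it follows that $\Delta\theta = \theta_2 - \theta_1 > 0$. Signal detection theory (3) provides a formal description of how bias and variance for the estimates $\hat\theta_1$, $\hat\theta_2$ of the two stimulus values determine the probability that $\hat\theta_2 > \hat\theta_1$, i.e., that the discrimination of the two stimulus values based on the estimates is correct. We can write

$$P(\hat\theta_2 > \hat\theta_1) = \frac{1}{2}\,\mathrm{erfc}\!\left(-\frac{d'}{2}\right) \tag{S5}$$

by making the assumption that both estimates are Gaussian distributed, where the function $\mathrm{erfc}$ is defined as

$$\mathrm{erfc}(x) = \frac{2}{\sqrt{\pi}} \int_x^{\infty} e^{-t^2}\, dt \tag{S6}$$

and $d'$ is the normalized distance (referred to as d-prime) between the distributions for the two estimates $\hat\theta_1$, $\hat\theta_2$. The distance can be expressed as

$$d' = \frac{\mu(\theta_2) - \mu(\theta_1)}{\sqrt{\tfrac{1}{2}\left(\sigma^2(\theta_1) + \sigma^2(\theta_2)\right)}} \tag{S7}$$

where $\mu(\theta)$ and $\sigma^2(\theta)$ denote the mean and the variance of each of the two Gaussian distributions.

By writing $\mu(\theta) = \theta + b(\theta)$, where $b(\theta)$ is the estimation bias at $\theta$, we can approximate $d'$ for a small $\Delta\theta$ as

$$d' = \frac{\Delta\theta + b(\theta_2) - b(\theta_1)}{\sqrt{\tfrac{1}{2}\left(\sigma^2(\theta_1) + \sigma^2(\theta_2)\right)}} \approx \frac{\Delta\theta\,\left(1 + b'(\theta)\right)}{\sigma(\theta)} \tag{S8}$$

where, in the last step, we have exploited the assumption of small $\Delta\theta$ by (i) approximating $\sigma(\theta_1)$ and $\sigma(\theta_2)$ as $\sigma(\theta)$ and (ii) approximating $b(\theta_2) - b(\theta_1)$ using $b'(\theta)\,\Delta\theta$. For a given discrimination criterion (measured in percent correct), the corresponding $d'$ can be obtained via Eq. S5. We will refer to this value as $d'_c$. With Eq. S8, we then can express the discrimination threshold $\Delta\theta$, which we will formally denote as $D(\theta)$, as

$$D(\theta) = \frac{d'_c\, \sigma(\theta)}{1 + b'(\theta)} \tag{S9}$$

The Cramer–Rao bound for a biased estimator is given as

$$\sigma^2(\theta) \geq \frac{\left(1 + b'(\theta)\right)^2}{J(\theta)} \tag{S10}$$

Strictly, the Cramer–Rao bound is only formulated for linear variables. However, for small noise levels, we can approximate circular variables as locally linear variables, and thus apply the Cramer–Rao bound. The approximation will break down for nonlocal problems, in which case the metrics and topology of the circular space also make the calculation of several key statistical quantities (e.g., mean, variance) substantially different from the way they are computed in the linear space. Rearranging Eq. S10 (for $1 + b'(\theta) > 0$), we get

$$\frac{\sigma(\theta)}{1 + b'(\theta)} \geq \frac{1}{\sqrt{J(\theta)}} \tag{S11}$$

By combining Eqs. S11 and S9, we find that the discrimination threshold is lower bounded by the Fisher information, i.e.,

$$D(\theta) \geq \frac{d'_c}{\sqrt{J(\theta)}} \tag{S12}$$

Finally, assuming that the Cramer–Rao bound is equally loose (not necessarily tight) for every $\theta$, we obtain the relation between Fisher information and discrimination threshold,

$$D(\theta) \propto \frac{1}{\sqrt{J(\theta)}} \tag{S13}$$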
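The signal detection step of this derivation can be sketched in a few lines. The code assumes Gaussian-distributed estimates, for which the probability of a correct discrimination equals $\frac{1}{2}\mathrm{erfc}(-d'/2)$ when $d'$ is normalized by the root of the average variance. The function names and the 75% correct criterion are hypothetical choices made for illustration.

```python
import math
from statistics import NormalDist

def percent_correct(d_prime):
    """Probability that the larger stimulus yields the larger estimate (Gaussian case)."""
    return 0.5 * math.erfc(-d_prime / 2.0)

def criterion_d_prime(p_correct):
    """Invert percent_correct: the d' that corresponds to a chosen criterion."""
    return math.sqrt(2.0) * NormalDist().inv_cdf(p_correct)

def discrimination_threshold(sigma, bias_slope, p_correct=0.75):
    """Threshold implied by the small-difference approximation of d'.

    sigma is the SD of the estimate, bias_slope is the local derivative of the bias.
    """
    return criterion_d_prime(p_correct) * sigma / (1.0 + bias_slope)

# Example: same estimation noise, with and without a locally increasing bias.
unbiased = discrimination_threshold(sigma=1.0, bias_slope=0.0)
expanded = discrimination_threshold(sigma=1.0, bias_slope=0.5)
```

A positive bias slope stretches the mapping from stimulus to estimate and therefore lowers the threshold for a fixed estimation SD, which is why the bias derivative appears in the bound for biased estimators.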

Relation between perceptual bias and stimulus distribution (L2 loss).

We have previously derived an analytic expression for perceptual bias $b(\theta)$ as a function of the stimulus distribution $p(\theta)$, assuming a Bayesian decoder that minimizes squared error ($L_2$ loss) (4). Assuming relatively small noise and a smooth stimulus distribution, we found

$$b(\theta) \propto \left(\frac{1}{p(\theta)^2}\right)' \tag{S14}$$

As we show in Generalization to Different Loss Functions, this functional relation holds for a large family of symmetric loss functions, including some of the most commonly used loss functions (e.g., the $L_1$ loss function). Thus there seems little use in rederiving Eq. S14 for the special case of mean squared error loss.

Putting it together: The relation between perceptual bias and discrimination threshold.

By combining Eqs. S4, S13, and S14, we obtain our main result, the proposed relation between perceptual bias and discrimination threshold,

$$b(\theta) \propto \left(D(\theta)^2\right)' \tag{S15}$$

In Generalization to Different Loss Functions, we will prove that this relation generalizes to a large class of symmetric loss functions of the Bayesian decoder.

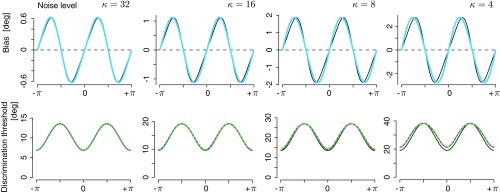

Validation of the analytic expressions for discrimination threshold and perceptual bias.

The analytic expressions for both the discrimination threshold and the perceptual bias (Eqs. 2 and 3) relied on a set of assumptions and approximations (mostly with regard to the magnitude of the noise). To provide some insight into how these assumptions affect our results, we simulated the full Bayesian observer model (as briefly described in Generalization to Different Loss Functions and in more detail in ref. 4) for various sensory (internal) noise magnitudes, and then compared the bias and threshold curves of the observer model with those predicted by the two analytic expressions.

Fig. S1 shows this comparison for increasing levels of noise. As expected, the approximations are good under small noise conditions and smoothly degrade with increasing noise levels. The largest noise level shown () corresponds to an estimation variance of a circular variable of approximately 30°. Importantly, while the numerically computed discrimination threshold and perceptual bias of the full Bayesian observer model are noticeably different from the curves given by the analytic expressions (Eqs. 2 and 3), they still almost perfectly obey the proposed law.

Part 2: Relation Between Estimation Bias and Variance.

The relation between discrimination threshold and bias can be rewritten in terms of estimation variance rather than threshold. This can be useful when trying to analyze datasets obtained with an estimation task (e.g., the method of adjustment). These datasets typically consist of a subject’s estimates over repeated trials, and thus allow the simultaneous estimate of perceptual bias and variance. While discrimination threshold and estimation variance are directly related, the reformulation in terms of variance results in a slightly more complex expression.

We start with the Cramer–Rao bound on the variance of a biased estimator, that is,

| [S16] |

where is the Fisher information and is the derivative of the bias. Using the characteristic efficient coding constraint Eq. S4 of our Bayesian observer model, we can express the variance in terms of the prior expectation as

| [S17] |

where we again make the assumption that the bound is equally loose across the entire range of . Combining Eq. S17 with the above derived dependency of the bias on the prior expectation Eq. S14, we find

| [S18] |

Compared with the original expression Eq. S14, the formulation is identical for variance except that it is scaled by . Given the bias , we can predict variance as

| [S19] |

while the reverse is generally not easy because it would involve solving a nonlinear differential equation. Obviously, when is constant, the relation simplifies to

| [S20] |

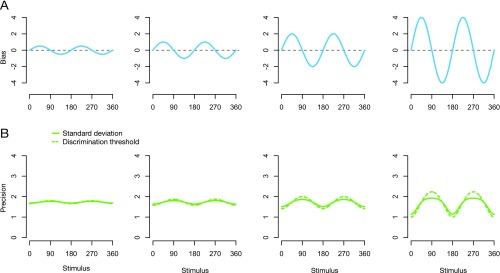

and the predicted relation between the bias and variance has identical functional form to the one between the bias and discrimination threshold. This should be the case for magnitude variables (i.e., variables that follow Weber’s law), as the perceptual bias for these variables is approximately linear in the stimulus value , and thus is constant (Fig. 3D). Similarly, for low noise levels, the biases become small and thus, assuming reasonably smooth bias curves, the term in Eq. S18 approximates 1. The relation between variance and bias again approximates Eq. S20. As the noise increases, the term becomes effective, and predictions for variance and squared discrimination threshold start to deviate from each other. Simulations shown in Fig. S2 demonstrate this effect.

Fig. S2.

Comparing the predictions for discrimination thresholds and variance (i.e., SD). (A) Perceptual bias is modeled as , with four different values (0.5, 1, 2, 4). (B) A comparison between the predictions for discrimination threshold (dashed lines) and the SD based on the bias curves in A. Discrimination threshold is modeled as . For SD, we use . for all of the panels. When is small, the threshold and SD behave similarly (up to a scale factor, which we have set to be 1 here for simplicity). As increases, the difference between the two becomes larger.

The two predictions remain qualitatively similar, which was the reason for us to include the pointing direction dataset (20) in our collection of examples (Fig. 3) even though it reports estimation variance rather than discrimination threshold.
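The difference between the two predictions can be explored with a short numerical sketch. It assumes a hypothetical sinusoidal bias curve, unit proportionality constants, and an integration constant of 1. These are illustrative choices and are not the parameters used for Fig. S2 or Fig. S3.

```python
import numpy as np

def predict_threshold_and_sd(bias, theta, c=1.0):
    """Predict threshold and SD from a bias curve.

    The squared threshold is the running integral of the bias plus a constant c;
    the SD is the threshold multiplied by (1 + b'), following the Cramer-Rao
    bound for a biased estimator. Proportionality constants are set to 1.
    """
    increments = 0.5 * (bias[1:] + bias[:-1]) * np.diff(theta)   # trapezoid rule
    integral = np.concatenate(([0.0], np.cumsum(increments)))
    threshold = np.sqrt(integral + c)
    sd = (1.0 + np.gradient(bias, theta)) * threshold
    return threshold, sd

theta = np.linspace(0.0, np.pi, 721)

def relative_gap(amplitude):
    """Largest relative difference between predicted SD and threshold."""
    bias = amplitude * np.sin(2.0 * theta)       # hypothetical bias curve
    threshold, sd = predict_threshold_and_sd(bias, theta)
    return float(np.max(np.abs(sd - threshold) / threshold))

small_gap = relative_gap(0.01)   # small bias: the two predictions nearly coincide
large_gap = relative_gap(0.4)    # large bias: the predictions separate
```

In this sketch the relative gap equals the largest value of $|b'(\theta)|$, so it grows in proportion to the bias amplitude, in line with the qualitative statement that the two predictions diverge as biases become larger.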

Part 3: Generalization to Different Loss Functions.

Below, we show that the expression for bias (Eq. S15) generalizes to a large class of symmetric loss functions. Therefore, the main result of the paper, the predicted relation between discrimination threshold (or variance) and perceptual bias, is general. We first focus on the family of $L_p$ loss functions and then consider the case of more general symmetric loss functions at the end.

Bayesian observer model with efficient sensory representation.

We consider a Bayesian observer model that relies on the efficient encoding of sensory information (4). Given a stimulus with value $\theta_0$, we assume the generative model for the encoding stage as

| $\tilde{m} = F(\theta_0) + \delta$ | [S21] |

where $\tilde{m}$ is the noisy sensory measurement, and $\delta$ represents the internal noise, which we assume is additive Gaussian with small variance $\sigma^2$. $F$ represents the encoder (Fig. 1) and is a one-to-one mapping from stimulus space to an internal sensory space with units $\tilde{\theta}$. This internal sensory space (and thus the mapping $F$) is defined as the space in which Fisher information is uniform (and thus the discrimination threshold is uniform). For all following derivations, we use the tilde notation $\tilde{\cdot}$ to indicate any variable that is expressed in units of this space, e.g., $\tilde{\theta} = F(\theta)$.

Due to the efficient coding constraint (Eq. S4) of the observer model, the mapping $F$ is defined as

| $F(\theta) = \int_{-\infty}^{\theta} p(\chi)\, d\chi$ | [S22] |

which is the cumulative distribution function of the prior distribution $p(\theta)$.
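The encoding stage described above can be sketched numerically. The following is a minimal illustration, assuming the generative model is "measurement = F(stimulus) + Gaussian noise" with $F$ the cumulative of the prior, as stated in the text. The standard normal prior, the grid limits, and the helper names `make_encoder` and `encode` are hypothetical choices made only for this example.

```python
import numpy as np

def make_encoder(prior_pdf, lo, hi, n=20001):
    """Return (F, F_inv), where F is the numerically integrated prior CDF."""
    grid = np.linspace(lo, hi, n)
    pdf = prior_pdf(grid)
    # Cumulative trapezoidal integral of the prior, normalized so F(hi) = 1.
    steps = 0.5 * (pdf[1:] + pdf[:-1]) * np.diff(grid)
    cdf = np.concatenate(([0.0], np.cumsum(steps)))
    cdf /= cdf[-1]
    F = lambda theta: np.interp(theta, grid, cdf)   # stimulus -> sensory units
    F_inv = lambda u: np.interp(u, cdf, grid)       # sensory -> stimulus units
    return F, F_inv

def encode(theta0, F, sigma, rng, size=1):
    """Draw noisy sensory measurements F(theta0) + delta, delta ~ N(0, sigma^2)."""
    return F(theta0) + sigma * rng.standard_normal(size)

# Hypothetical example prior: standard normal density restricted to [-6, 6].
prior = lambda t: np.exp(-0.5 * t**2) / np.sqrt(2.0 * np.pi)
F, F_inv = make_encoder(prior, -6.0, 6.0)

rng = np.random.default_rng(0)
m_tilde = encode(0.5, F, sigma=0.01, rng=rng, size=5)
# Map the measurements back to stimulus units (clipped to the valid CDF range).
m = F_inv(np.clip(m_tilde, 0.0, 1.0))
print(m_tilde, m)
```

Because $F$ is the prior CDF, stimuli drawn from the prior are uniformly distributed in the sensory space, which is why the prior is treated as flat there in the derivations that follow.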

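With the above assumption of Gaussian noise, the posterior distribution can be expressed as

| $p(\theta \mid \tilde{m}) \propto p(\theta)\, \exp\left(-\frac{(F(\theta) - \tilde{m})^2}{2\sigma^2}\right)$ | [S23] |

Note that we formulate the inference problem in units of the stimulus space where a loss function has a clear physical interpretation. The loss function is defined as the $L_p$ norm (the exponent $p$ is not to be confused with the prior density $p(\theta)$)

| $L(\hat{\theta}, \theta) = \lvert \hat{\theta} - \theta \rvert^{p}$ | [S24] |

between the estimate $\hat{\theta}$ and the actual stimulus value $\theta$. The corresponding Bayesian estimate is the one that minimizes the Bayes risk

| $R(\hat{\theta}) = \int L(\hat{\theta}, \theta)\, p(\theta \mid \tilde{m})\, d\theta$ | [S25] |

Below, we will derive how the bias of this estimate depends on the specifics of the loss function Eq. S24. Note that we have previously derived the bias for the $L_2$ loss (mean squared error estimate) (4).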

L1 loss.

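The Bayesian estimate that minimizes the $L_1$ loss function corresponds to the median of the posterior distribution. Note that the median is invariant to the particular parametrization of the posterior based on the following mathematical fact: Assuming $x_0$ is the median of random variable $X$, for a one-to-one mapping $G$, $G(x_0)$ is the median of random variable $G(X)$. Because the posterior distribution for $\tilde{\theta}$ is Gaussian and centered around $\tilde{m}$ (the prior is flat in the internal space), the median for $\tilde{\theta}$ is simply $\tilde{m}$. Mapping the value back to the stimulus space via $F^{-1}$, the median for $\theta$ becomes $F^{-1}(\tilde{m})$, and thus the estimate $\hat{\theta}_{L_1} = F^{-1}(\tilde{m})$. The bias then can be rewritten as

| $b_{L_1}(\theta_0) = \langle \hat{\theta}_{L_1} \rangle - \theta_0 = \langle F^{-1}(\tilde{m}) \rangle - \theta_0 = \langle m \rangle - \theta_0$ | [S26] |

Note that the density of $m = F^{-1}(\tilde{m})$ (with $F^{-1}$ the inverse mapping of Eq. S22) follows

| $p(m \mid \theta_0) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(F(m) - F(\theta_0))^2}{2\sigma^2}\right) p(m)$ | [S27] |

where $p(m)$ represents the prior density evaluated at $m$. Thus,

| $\langle m \rangle - \theta_0 = \int (m - \theta_0)\, p(m \mid \theta_0)\, dm = \int \left(F^{-1}(\tilde{m}) - \theta_0\right) \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(\tilde{m} - \tilde{\theta}_0)^2}{2\sigma^2}\right) d\tilde{m}$ | [S28] |

where we performed a change of variable from $m$ to $\tilde{m}$. Assuming the prior density to be smooth, we expand $F^{-1}(\tilde{m})$ around $\tilde{\theta}_0 = F(\theta_0)$ up to the second order,

| $F^{-1}(\tilde{m}) \approx \theta_0 + \frac{1}{p(\theta_0)} (\tilde{m} - \tilde{\theta}_0) + \frac{1}{2} \left(\frac{1}{p(\theta)}\right)'_{\tilde{\theta}} \Big\rvert_{\theta_0} (\tilde{m} - \tilde{\theta}_0)^2$ | [S29] |

Note that the derivative is taken with respect to $\tilde{\theta}$, as indicated by the subscript $\tilde{\theta}$. Applying this expansion, we find that

| $\langle m \rangle - \theta_0 \approx \int \left[ \frac{\tilde{m} - \tilde{\theta}_0}{p(\theta_0)} + \frac{1}{2} \left(\frac{1}{p(\theta)}\right)'_{\tilde{\theta}} \Big\rvert_{\theta_0} (\tilde{m} - \tilde{\theta}_0)^2 \right] \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(\tilde{m} - \tilde{\theta}_0)^2}{2\sigma^2}\right) d\tilde{m} = \frac{1}{2} \left(\frac{1}{p(\theta)}\right)'_{\tilde{\theta}} \Big\rvert_{\theta_0} \int x^2\, \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{x^2}{2\sigma^2}\right) dx$ | [S30] |

where, in the last step, we have defined $x = \tilde{m} - \tilde{\theta}_0$ to simplify the notation; the term linear in $x$ vanishes by symmetry. Evaluating the Gaussian integral $\int x^2\, \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{x^2}{2\sigma^2}\right) dx = \sigma^2$, we then find that

| $\langle m \rangle - \theta_0 \approx \frac{\sigma^2}{2} \left(\frac{1}{p(\theta)}\right)'_{\tilde{\theta}} \Big\rvert_{\theta_0} = \frac{\sigma^2}{2}\, \frac{1}{p(\theta_0)} \left(\frac{1}{p(\theta)}\right)' \Big\rvert_{\theta_0} = \frac{\sigma^2}{4} \left(\frac{1}{p(\theta)^2}\right)' \Big\rvert_{\theta_0}$ | [S31] |

Here the second equality uses $d\tilde{\theta} = p(\theta)\, d\theta$, and the prime without subscript denotes the derivative with respect to $\theta$. It is important to note that this expression for $\langle m \rangle - \theta_0$ does not depend on the loss function of the decoder, because it does not involve the estimate $\hat{\theta}$. It is determined merely by the encoding process. We will use this result again when we consider other loss functions. With Eqs. S26 and S31, we then can express the bias for any $\theta_0$ as

| $b_{L_1}(\theta_0) \approx \frac{\sigma^2}{4} \left(\frac{1}{p(\theta)^2}\right)' \Big\rvert_{\theta_0}$ | [S32] |

Therefore, $b_{L_1}(\theta) \propto \left(1/p(\theta)^2\right)'$.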
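As a numerical sanity check of the small-noise argument for the posterior-median estimate, the sketch below averages $F^{-1}(F(\theta_0) + \delta)$ over the Gaussian noise $\delta$ and compares the resulting bias with the leading-order term $\frac{\sigma^2}{4}\left(1/p(\theta)^2\right)'$ that follows from a second-order expansion of $F^{-1}$. The logistic prior (chosen because its CDF and inverse are available in closed form), the noise level, and all function names are assumptions made for illustration and are not taken from the paper.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Assumed example prior: logistic density, with closed-form CDF and inverse.
def F(theta):
    return 1.0 / (1.0 + np.exp(-theta))

def F_inv(u):
    return np.log(u / (1.0 - u))

def prior(theta):
    u = F(theta)
    return u * (1.0 - u)

def inv_sq_prior_slope(theta, h=1e-4):
    """Central-difference estimate of d/dtheta [1 / p(theta)^2]."""
    g = lambda t: 1.0 / prior(t) ** 2
    return (g(theta + h) - g(theta - h)) / (2.0 * h)

def l1_bias(theta0, sigma, n_nodes=40):
    """Bias of the posterior-median estimate F_inv(m_tilde), averaged over noise."""
    x, w = hermegauss(n_nodes)            # Gauss-Hermite rule, weight exp(-x^2 / 2)
    m_tilde = F(theta0) + sigma * x       # noisy measurements in sensory units
    estimates = F_inv(m_tilde)            # median of the posterior in stimulus units
    return np.sum(w * estimates) / np.sqrt(2.0 * np.pi) - theta0

sigma = 0.01
for theta0 in (-1.0, 0.5, 1.0):
    numeric = l1_bias(theta0, sigma)
    predicted = 0.25 * sigma**2 * inv_sq_prior_slope(theta0)
    print(f"theta0={theta0:+.1f}  numeric={numeric:.3e}  predicted={predicted:.3e}")
```

For this prior, which peaks at zero, the computed bias points away from the peak on both sides, and the two columns should agree closely as long as $\sigma$ is small relative to the scale over which the prior changes.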

L0 loss (MAP estimate).

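The Bayesian estimate that minimizes the $L_0$ loss corresponds to the mode of the posterior distribution. Computing the mode of the posterior Eq. S23 is equivalent to computing the mode of the logarithm of the posterior, thus

| $\frac{\partial}{\partial \theta} \log p(\theta \mid \tilde{m}) = \frac{p'(\theta)}{p(\theta)} - \frac{\left(F(\theta) - \tilde{m}\right) p(\theta)}{\sigma^2}$ | [S33] |

Applying a first-order Taylor expansion on $F(\theta)$ around $m = F^{-1}(\tilde{m})$, we obtain the approximation

| $F(\theta) \approx \tilde{m} + p(m)\,(\theta - m)$ | [S34] |

and can reexpress Eq. S33 as

| $\frac{\partial}{\partial \theta} \log p(\theta \mid \tilde{m}) \approx \frac{p'(\theta)}{p(\theta)} - \frac{p(m)\, p(\theta)\, (\theta - m)}{\sigma^2}$ | [S35] |

Setting the left-hand side to zero, we find that the difference between the optimal Bayesian estimate $\hat{\theta}_{L_0}$ and the measurement $m$ can be approximated by a constant related to $\sigma^2$ and $p(\theta_0)$, i.e.,

| $\hat{\theta}_{L_0} - m \approx \frac{\sigma^2\, p'(\hat{\theta}_{L_0})}{p(\hat{\theta}_{L_0})^2\, p(m)} \approx \frac{\sigma^2\, p'(\theta_0)}{p(\theta_0)^3} = -\frac{\sigma^2}{2} \left(\frac{1}{p(\theta)^2}\right)' \Big\rvert_{\theta_0}$ | [S36] |

where we approximated both $\hat{\theta}_{L_0}$ and $m$ by $\theta_0$ because $\sigma$ is assumed to be small. The expectation of this difference is

| $\langle \hat{\theta}_{L_0} - m \rangle \approx -\frac{\sigma^2}{2} \left(\frac{1}{p(\theta)^2}\right)' \Big\rvert_{\theta_0}$ | [S37] |

With Eq. S31, we then can express the bias for the MAP estimator as

| $b_{L_0}(\theta_0) = \langle \hat{\theta}_{L_0} - m \rangle + \langle m \rangle - \theta_0 \approx -\frac{\sigma^2}{4} \left(\frac{1}{p(\theta)^2}\right)' \Big\rvert_{\theta_0}$ | [S38] |

Therefore, $b_{L_0}(\theta) \propto \left(1/p(\theta)^2\right)'$. Note that the coefficient of proportionality is negative.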
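The sign reversal for the MAP estimate can be checked numerically. The sketch below finds the posterior mode in stimulus space for each noisy measurement and compares the average bias with $-\frac{\sigma^2}{4}\left(1/p(\theta)^2\right)'$, the leading-order value implied by the first-order argument above. The logistic prior, the Newton iteration on $u = F(\theta)$, the noise level, and all function names are illustrative assumptions, not part of the paper.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Assumed example prior: logistic density with CDF F(theta) = 1 / (1 + exp(-theta)),
# so that p = u (1 - u) and p'/p = 1 - 2 u, where u = F(theta).
def F(theta):
    return 1.0 / (1.0 + np.exp(-theta))

def F_inv(u):
    return np.log(u / (1.0 - u))

def map_estimate(m_tilde, sigma, n_iter=20):
    """Posterior mode in stimulus space via Newton's method on u = F(theta).

    Stationarity of log p(theta) - (F(theta) - m_tilde)^2 / (2 sigma^2) reads
    (u - m_tilde) u (1 - u) - sigma^2 (1 - 2 u) = 0 for the logistic prior.
    """
    u = np.array(m_tilde, dtype=float)    # start the iteration at the measurement
    for _ in range(n_iter):
        g = (u - m_tilde) * u * (1.0 - u) - sigma**2 * (1.0 - 2.0 * u)
        dg = u * (1.0 - u) + (u - m_tilde) * (1.0 - 2.0 * u) + 2.0 * sigma**2
        u = u - g / dg
    return F_inv(u)

def map_bias(theta0, sigma, n_nodes=40):
    """Average of (MAP estimate - theta0) over the Gaussian measurement noise."""
    x, w = hermegauss(n_nodes)            # Gauss-Hermite rule, weight exp(-x^2 / 2)
    m_tilde = F(theta0) + sigma * x
    return np.sum(w * map_estimate(m_tilde, sigma)) / np.sqrt(2.0 * np.pi) - theta0

def inv_sq_prior_slope(theta):
    """Analytic d/dtheta [1 / p(theta)^2] for the logistic prior."""
    u = F(theta)
    return -2.0 * (1.0 - 2.0 * u) / (u * (1.0 - u)) ** 2

sigma = 0.01
for theta0 in (-1.0, 0.5, 1.0):
    numeric = map_bias(theta0, sigma)
    predicted = -0.25 * sigma**2 * inv_sq_prior_slope(theta0)
    print(f"theta0={theta0:+.1f}  numeric={numeric:.3e}  predicted={predicted:.3e}")
```

In this example the MAP bias points toward the peak of the prior, opposite to the direction obtained for the posterior median.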

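L2q loss function ($q \geq 1$, and $q$ is an integer).

The Bayesian estimate under $L_{2q}$ loss cannot be expressed in closed form, in general. However, we can compute the leading term of the bias of this estimator when the noise variance $\sigma^2$ is small.

Again, the goal is to find the estimator $\hat{\theta}$, which minimizes the following Bayes risk:

| $R(\hat{\theta}) = \int (\hat{\theta} - \theta)^{2q}\, p(\theta \mid \tilde{m})\, d\theta$ | [S39] |

By examining the second-order derivative of $R$ with respect to $\hat{\theta}$, we find that the problem is a convex optimization problem. Therefore, there exists one and only one solution. We begin by rewriting

| $R(\hat{\theta}) = \int \left(\hat{\theta} - F^{-1}(\tilde{\theta})\right)^{2q} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(\tilde{\theta} - \tilde{m})^2}{2\sigma^2}\right) d\tilde{\theta}$ | [S40] |

Applying a second-order Taylor expansion on $F^{-1}$ (Eq. S22) around $\tilde{m}$, we find

| $F^{-1}(\tilde{\theta}) \approx m + \frac{1}{p(m)} (\tilde{\theta} - \tilde{m}) + \frac{1}{2} \left(\frac{1}{p(\theta)}\right)'_{\tilde{\theta}} \Big\rvert_{m} (\tilde{\theta} - \tilde{m})^2$ | [S41] |

To simplify notation, we define $\alpha = 1/p(m)$ and $\beta = \frac{1}{2} \left(1/p(\theta)\right)'_{\tilde{\theta}} \big\rvert_{m}$. Given these notations,

| $F^{-1}(\tilde{\theta}) \approx m + \alpha\, (\tilde{\theta} - \tilde{m}) + \beta\, (\tilde{\theta} - \tilde{m})^2$ | [S42] |

Now the Bayes risk can be approximated as

| $R(\hat{\theta}) \approx \int \left(\hat{\theta} - m - \alpha\, (\tilde{\theta} - \tilde{m}) - \beta\, (\tilde{\theta} - \tilde{m})^2\right)^{2q} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(\tilde{\theta} - \tilde{m})^2}{2\sigma^2}\right) d\tilde{\theta}$ | [S43] |

To find the estimate $\hat{\theta}$ that minimizes $R$, we take the first-order derivative with respect to $\hat{\theta}$ (which is equivalent to the first-order derivative with respect to $\hat{\theta} - m$ because of the linear dependence on $\hat{\theta}$), and set it to zero. We find that $\hat{\theta}$ should approximately satisfy the following equation:

| $\int \left(\hat{\theta} - m - \alpha\, (\tilde{\theta} - \tilde{m}) - \beta\, (\tilde{\theta} - \tilde{m})^2\right)^{2q-1} \exp\left(-\frac{(\tilde{\theta} - \tilde{m})^2}{2\sigma^2}\right) d\tilde{\theta} = 0$ | [S44] |

Denoting $x = \tilde{\theta} - \tilde{m}$ and $\epsilon = \hat{\theta} - m$, the above expression can be simplified as

| $\int \left(\epsilon - \alpha x - \beta x^2\right)^{2q-1} \exp\left(-\frac{x^2}{2\sigma^2}\right) dx = 0$ | [S45] |

We expand the left-hand side, exploiting (due to the symmetry) the fact that

| $\int x^{2k+1} \exp\left(-\frac{x^2}{2\sigma^2}\right) dx = 0$ | [S46] |

where $k$ is an arbitrary nonnegative integer, and obtain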

| [S47] |

where are the corresponding coefficients which we didn’t explicitly express for simplicity. Using the Gaussian integral

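| $\int x^{2k}\, \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{x^2}{2\sigma^2}\right) dx = (2k-1)!!\; \sigma^{2k}$ | [S48] |

where $k$ is an arbitrary positive integer, we use the notation $!!$ for the double factorial and obtain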

| [S49] |

where are the corresponding coefficients. Ignoring the high-order terms, we obtain

| [S50] |

which can be rewritten as

| [S51] |

It can be verified that the coefficients satisfy the following property:

| [S52] |

The left-hand side of Eq. S51 is a continuous function of that we define as . We find that

| [S53] |

Due to the Intermediate Value Theorem, there must exist a solution for in the interval , and, because the problem is convex, this solution is unique. This tells us that . As a result, all of the terms on the left-hand side of Eq. S51 are of the order , except for the first term , which is of the order .

For small , the higher-order terms can be ignored, and the only relevant term left is . Thus, for small , we only need to solve the equation

| [S54] |

With the constraint Eq. S52 on the coefficients , this results in

| [S55] |

and thus

| [S56] |

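The bias of the Bayesian estimator for any $L_{2q}$ loss function can then be approximated as

| $b_{L_{2q}}(\theta_0) = \langle \hat{\theta} - m \rangle + \langle m \rangle - \theta_0 \approx \frac{q\, \sigma^2}{2} \left(\frac{1}{p(\theta)^2}\right)' \Big\rvert_{\theta_0}$ | [S57] |

where we have again used Eq. S31.

Therefore, $b_{L_{2q}}(\theta) \propto \left(1/p(\theta)^2\right)'$.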
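The proportionality claimed for even-powered losses can be probed numerically. The sketch below minimizes the expected loss $\langle (\hat{\theta} - \theta)^{2q} \rangle$ under the posterior for each measurement, averages the estimate over the measurement noise, and reports the ratio of the resulting bias to $\sigma^2 \left(1/p(\theta)^2\right)'$. If the small-noise argument holds, this ratio should be roughly constant across stimulus values for a given $q$; a leading-order calculation along the lines above suggests a value near $q/2$. The logistic prior, the Newton solver, the noise level, and all names are assumptions for illustration only.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Assumed example prior: logistic density with closed-form CDF and inverse.
def F(theta):
    return 1.0 / (1.0 + np.exp(-theta))

def F_inv(u):
    return np.log(u / (1.0 - u))

def inv_sq_prior_slope(theta):
    """Analytic d/dtheta [1 / p(theta)^2] for the logistic prior p = u (1 - u)."""
    u = F(theta)
    return -2.0 * (1.0 - 2.0 * u) / (u * (1.0 - u)) ** 2

def l2q_estimate(m_tilde, sigma, q, x, wn, n_iter=30):
    """Minimize the posterior expectation of (t - theta)^(2q) for each measurement."""
    # The posterior in sensory units is N(m_tilde, sigma^2) because the prior is flat there.
    theta = F_inv(m_tilde[:, None] + sigma * x[None, :])   # posterior support in stimulus units
    t = theta @ wn                                         # start at the posterior mean
    for _ in range(n_iter):                                # Newton steps on the convex risk
        d = t[:, None] - theta
        grad = (d ** (2 * q - 1)) @ wn
        hess = ((2 * q - 1) * d ** (2 * q - 2)) @ wn
        t = t - grad / hess
    return t

def l2q_bias(theta0, sigma, q, n_nodes=30):
    """Average of (L_2q estimate - theta0) over the Gaussian measurement noise."""
    x, w = hermegauss(n_nodes)       # Gauss-Hermite rule, weight exp(-x^2 / 2)
    wn = w / w.sum()                 # normalized weights of a standard normal
    m_tilde = F(theta0) + sigma * x
    return l2q_estimate(m_tilde, sigma, q, x, wn) @ wn - theta0

sigma = 0.01
for q in (1, 2):
    for theta0 in (-1.0, 0.5, 1.0):
        ratio = l2q_bias(theta0, sigma, q) / (sigma**2 * inv_sq_prior_slope(theta0))
        print(f"q={q}  theta0={theta0:+.1f}  bias / (sigma^2 (1/p^2)') = {ratio:.3f}")
```

The case $q = 1$ reduces to the posterior mean, for which the Newton iteration converges in a single step.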

L2q−1 loss function ($q$ is an integer).

We can use techniques similar to those for the $L_{2q}$ case to compute the bias for an $L_{2q-1}$ loss function. The main difference is that we now have to consider an additional term that, however, as we show below, can be neglected. For an $L_{2q-1}$ loss, the Bayesian estimator minimizes the Bayes risk

| $R(\hat{\theta}) = \int \lvert \hat{\theta} - \theta \rvert^{2q-1}\, p(\theta \mid \tilde{m})\, d\theta$ | [S58] |

Similar to the $L_{2q}$ case, the optimization problem is convex and thus has one and only one solution. We solve for the extremum by setting $\partial R / \partial \hat{\theta} = 0$. Applying a Taylor expansion, we find

| [S59] |

where the term is introduced without being explicitly spelled out. We will deal with this term later.

To simplify notation, we define . It follows that

| [S60] |

Let us define . The goal then is to find the which satisfies . We will take advantage of the fact that the problem has a unique solution.

We first deal with . Similar to the analysis performed for the case, we can verify that, for small ,

| [S61] |

By verifying the coefficients , we find that to first order approximately satisfies .

Furthermore, we find that also satisfies . The reason is that, for , is of higher order than . Thus, can be ignored. Specifically, we can calculate the order of as

| [S62] |

We have assumed in the above calculation for the convenience of notation. The same technique applies and the result holds for the case of .

Thus, is the only solution of when is small. Similar to the case of the loss function, we then can express the bias of the Bayesian estimate as

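| $b_{L_{2q-1}}(\theta_0) = \langle \hat{\theta} - m \rangle + \langle m \rangle - \theta_0 \approx \frac{(2q-1)\, \sigma^2}{4} \left(\frac{1}{p(\theta)^2}\right)' \Big\rvert_{\theta_0}$ | [S63] |

Therefore, $b_{L_{2q-1}}(\theta) \propto \left(1/p(\theta)^2\right)'$.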

General symmetric loss function.

For an arbitrary symmetric loss function with being smooth except at , the Bayesian estimator is the estimator that minimizes the Bayes risk

| [S64] |

Suppose that where is an integer. Then the bias of the Bayesian estimator is approximately the bias of the Bayesian estimator corresponding to the loss function, which is what we have derived above.

We thus conclude that $b(\theta) \propto \left(1/p(\theta)^2\right)'$ represents a general description of the bias for a large set of symmetric loss functions.

Acknowledgments

We thank V. De Gardelle, S. Kouider, and J. Sackur for sharing their data. We thank Josh Gold and Michael Eisenstein for helpful comments and suggestions on earlier versions of the manuscript. This research was performed while both authors were at the University of Pennsylvania. We thank the Office of Naval Research for supporting the work (Grant N000141110744).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1619153114/-/DCSupplemental.

References

- 1. Helmholtz Hv. Handbuch der Physiologischen Optik, Allgemeine Enzyklopädie der Physik. Vol 9. Voss; Leipzig, Germany: 1867.

- 2. Fechner GT. Vorschule der Ästhetik. Vol 1. Breitkopf and Härtel; Leipzig, Germany: 1876.

- 3. Green D, Swets J. Signal Detection Theory and Psychophysics. Wiley; New York: 1966.

- 4. Wei XX, Stocker AA. A Bayesian observer model constrained by efficient coding can explain “anti-Bayesian” percepts. Nat Neurosci. 2015;18:1509–1517. doi: 10.1038/nn.4105.

- 5. Seung H, Sompolinsky H. Simple models for reading neuronal population codes. Proc Natl Acad Sci USA. 1993;90:10749–10753. doi: 10.1073/pnas.90.22.10749.

- 6. Seriès P, Stocker AA, Simoncelli EP. Is the homunculus ‘aware’ of sensory adaptation? Neural Comput. 2009;21:3271–3304. doi: 10.1162/neco.2009.09-08-869.

- 7. Wei XX, Stocker AA. Efficient coding provides a direct link between prior and likelihood in perceptual Bayesian inference. In: Advances in Neural Information Processing Systems NIPS 25. MIT Press; Cambridge, MA: 2012. pp. 1313–1321.

- 8. Attneave F. Some informational aspects of visual perception. Psychol Rev. 1954;61:183–193. doi: 10.1037/h0054663.

- 9. Barlow HB. Possible principles underlying the transformation of sensory messages. In: Rosenblith W, editor. Sensory Communication. MIT Press; Cambridge, MA: 1961. pp. 217–234.

- 10. Linsker R. Self-organization in a perceptual network. Computer. 1988;21:105–117.

- 11. Brunel N, Nadal JP. Mutual information, Fisher information, and population coding. Neural Comput. 1998;10:1731–1757. doi: 10.1162/089976698300017115.

- 12. Ganguli D, Simoncelli EP. Efficient sensory encoding and Bayesian inference with heterogeneous neural populations. Neural Comput. 2014;26:2103–2134. doi: 10.1162/NECO_a_00638.

- 13. Wei XX, Stocker AA. Mutual information, Fisher information and efficient coding. Neural Comput. 2016;28:305–326. doi: 10.1162/NECO_a_00804.

- 14. Caelli T, Brettel H, Rentschler I, Hilz R. Discrimination thresholds in the two-dimensional spatial frequency domain. Vision Res. 1983;23:129–133. doi: 10.1016/0042-6989(83)90135-9.

- 15. De Gardelle V, Kouider S, Sackur J. An oblique illusion modulated by visibility: Non-monotonic sensory integration in orientation processing. J Vis. 2010;10:6. doi: 10.1167/10.10.6.

- 16. Crane BT. Direction specific biases in human visual and vestibular heading perception. PLoS One. 2012;7:e51383. doi: 10.1371/journal.pone.0051383.

- 17. Gros BL, Blake R, Hiris E. Anisotropies in visual motion perception: A fresh look. J Opt Soc Am A Opt Image Sci Vis. 1998;15:2003–2011. doi: 10.1364/josaa.15.002003.

- 18. Rauber HJ, Treue S. Reference repulsion when judging the direction of visual motion. Perception. 1998;27:393–402. doi: 10.1068/p270393.

- 19. Krukowski AE, Stone LS. Expansion of direction space around the cardinal axes revealed by smooth pursuit eye movements. Neuron. 2005;45:315–323. doi: 10.1016/j.neuron.2005.01.005.

- 20. Smyrnis N, Mantas A, Evdokimidis I. Two independent sources of anisotropy in the visual representation of direction in 2-D space. Exp Brain Res. 2014;232:2317–2324. doi: 10.1007/s00221-014-3928-7.

- 21. Portfors-Yeomans C, Regan D. Cyclopean discrimination thresholds for the direction and speed of motion in depth. Vision Res. 1996;36:3265–3279. doi: 10.1016/0042-6989(96)00065-x.

- 22. Welchman AE, Lam JM, Bülthoff HH. Bayesian motion estimation accounts for a surprising bias in 3D vision. Proc Natl Acad Sci USA. 2008;105:12087–12092. doi: 10.1073/pnas.0804378105.

- 23. Sweeny TD, Haroz S, Whitney D. Reference repulsion in the categorical perception of biological motion. Vision Res. 2012;64:26–34. doi: 10.1016/j.visres.2012.05.008.

- 24. Weber EH. The Sense of Touch. Ross HE, Murray DJ, translators. Academic; San Diego: 1978.

- 25. Georgeson M, Ruddock K. Spatial frequency analysis in early visual processing. Philos Trans R Soc Lond B Biol Sci. 1980;290:11–22. doi: 10.1098/rstb.1980.0079.

- 26. Waugh S, Hess R. Suprathreshold temporal-frequency discrimination in the fovea and the periphery. J Opt Soc Am A Opt Image Sci Vis. 1994;11:1199–1212. doi: 10.1364/josaa.11.001199.

- 27. Vintch B, Gardner JL. Cortical correlates of human motion perception biases. J Neurosci. 2014;34:2592–2604. doi: 10.1523/JNEUROSCI.2809-13.2014.

- 28. Stocker AA, Simoncelli EP. Noise characteristics and prior expectations in human visual speed perception. Nat Neurosci. 2006;9:578–585. doi: 10.1038/nn1669.

- 29. Solomon JA, Morgan MJ. Stochastic re-calibration: Contextual effects on perceived tilt. Proc Biol Sci. 2006;273:2681–2686. doi: 10.1098/rspb.2006.3634.

- 30. Westheimer G. Simultaneous orientation contrast for lines in the human fovea. Vision Res. 1990;30:1913–1921. doi: 10.1016/0042-6989(90)90167-j.

- 31. Tzvetanov T, Womelsdorf T. Predicting human perceptual decisions by decoding neuronal information profiles. Biol Cybern. 2008;98:397–411. doi: 10.1007/s00422-008-0226-0.

- 32. Regan D, Beverley K. Postadaptation orientation discrimination. J Opt Soc Am A. 1985;2:147–155. doi: 10.1364/josaa.2.000147.

- 33. Mitchell DE, Muir DW. Does the tilt after-effect occur in the oblique meridian? Vision Res. 1976;16:609–613. doi: 10.1016/0042-6989(76)90007-9.

- 34. Regan D, Beverley K. Spatial-frequency discrimination and detection: Comparison of postadaptation thresholds. J Opt Soc Am A. 1983;73:1684–1690. doi: 10.1364/josa.73.001684.

- 35. Blakemore C, Nachmias J, Sutton P. The perceived spatial frequency shift: Evidence for frequency-selective neurones in the human brain. J Physiol. 1970;210:727–750. doi: 10.1113/jphysiol.1970.sp009238.

- 36. Chen N, Fang F. Tilt aftereffect from orientation discrimination learning. Exp Brain Res. 2011;215:227–234. doi: 10.1007/s00221-011-2895-5.

- 37. Suzuki S, Cavanagh P. Focused attention distorts visual space: An attentional repulsion effect. J Exp Psychol Hum Percept Perform. 1997;23:443–463. doi: 10.1037//0096-1523.23.2.443.

- 38. Appelle S. Perception and discrimination as function of stimulus orientation: The “oblique effect” in man and animals. Psychol Bull. 1972;78:266–278. doi: 10.1037/h0033117.

- 39. Campbell FW, Nachmias J, Jukes J. Spatial-frequency discrimination in human vision. J Opt Soc Am. 1970;60:555–559. doi: 10.1364/josa.60.000555.

- 40. Mandler M. Temporal frequency discrimination above threshold. Vision Res. 1984;24:1873–1880. doi: 10.1016/0042-6989(84)90020-8.

- 41. Ross HE. Constant errors in weight judgements as a function of the size of the differential threshold. Br J Psychol. 1964;55:133–141. doi: 10.1111/j.2044-8295.1964.tb02713.x.

- 42. Szpiro S, Spering M, Carrasco M. Perceptual learning modifies untrained pursuit eye movements. J Vis. 2014;14:8. doi: 10.1167/14.8.8.

- 43. Carrasco M, McElree B. Covert attention accelerates the rate of visual information processing. Proc Natl Acad Sci USA. 2001;98:5363–5367. doi: 10.1073/pnas.081074098.

- 44. Cutrone E. Attention, appearance, and neural computation. PhD thesis. New York University; New York: 2017.

- 45. Stevens SS. On the psychophysical law. Psychol Rev. 1957;64:153–181. doi: 10.1037/h0046162.

- 46. Schwartz O, Hsu A, Dayan P. Space and time in visual context. Nat Rev Neurosci. 2007;8:522–535. doi: 10.1038/nrn2155.

- 47. Schoups A, Vogels R, Qian N, Orban G. Practising orientation identification improves orientation coding in V1 neurons. Nature. 2001;412:549–553. doi: 10.1038/35087601.

- 48. Law CT, Gold JI. Neural correlates of perceptual learning in a sensory-motor, but not a sensory, cortical area. Nat Neurosci. 2008;11:505–513. doi: 10.1038/nn2070.

- 49. Kullback S, Leibler RA. On information and sufficiency. Ann Math Stat. 1951;22:79–86.

- 50. McDonnell MD, Stocks NG. Maximally informative stimuli and tuning curves for sigmoidal rate-coding neurons and populations. Phys Rev Lett. 2008;101:058103. doi: 10.1103/PhysRevLett.101.058103.