Abstract

Addressing how neural circuits underlie behavior is routinely done by measuring electrical activity from single neurons in experimental sessions. While such recordings yield snapshots of neural dynamics during specified tasks, they are ill-suited for tracking single-unit activity over longer timescales relevant for most developmental and learning processes, or for capturing neural dynamics across different behavioral states. Here we describe an automated platform for continuous long-term recordings of neural activity and behavior in freely moving rodents. An unsupervised algorithm identifies and tracks the activity of single units over weeks of recording, dramatically simplifying the analysis of large datasets. Months-long recordings from motor cortex and striatum made and analyzed with our system revealed remarkable stability in basic neuronal properties, such as firing rates and inter-spike interval distributions. Interneuronal correlations and the representation of different movements and behaviors were similarly stable. This establishes the feasibility of high-throughput long-term extracellular recordings in behaving animals.

Research organism: Rat

Introduction

The goal of systems neuroscience is to understand how neural activity generates behavior. A common approach is to record from neuronal populations in targeted brain areas during experimental sessions while subjects perform designated tasks. Such intermittent recordings provide brief ‘snapshots’ of task-related neural dynamics (Georgopoulos et al., 1986; Hanks et al., 2015; Murakami et al., 2014), but fail to address how neural activity is modulated outside of task context and across a wide range of active and inactive behavioral states (for exceptions see Ambrose et al., 2016; Evarts, 1964; Gulati et al., 2014; Hengen et al., 2016; Hirase et al., 2001; Lin et al., 2006; Mizuseki and Buzsáki, 2013; Santhanam et al., 2007; Wilson and McNaughton, 1994). Furthermore, intermittent recordings are ill-suited for reliably tracking the same neurons over time (Dickey et al., 2009; Emondi et al., 2004; Fraser and Schwartz, 2012; McMahon et al., 2014a; Santhanam et al., 2007; Tolias et al., 2007), making it difficult to discern how neural activity and task representations are shaped by developmental and learning processes that evolve over longer timescales (Ganguly et al., 2011; Jog et al., 1999; Lütcke et al., 2013; Marder and Goaillard, 2006; Peters et al., 2014; Singer et al., 2013).

Addressing such fundamental questions would be greatly helped by recording neural activity and behavior continuously over days and weeks in freely moving animals. Such longitudinal recordings would allow the activity of single neurons to be followed over more trials, experimental conditions, and behavioral states, thus increasing the power with which inferences about neural function can be made (Lütcke et al., 2013; McMahon et al., 2014a). Recording continuously outside of task context would also reveal how task-related neural dynamics and behavior are affected by long-term performance history (Bair et al., 2001; Chaisanguanthum et al., 2014; Morcos and Harvey, 2016), changes in internal state (Arieli et al., 1996), spontaneous expression of innate behaviors (Aldridge and Berridge, 1998), and replay of task-related activity in different behavioral contexts (Carr et al., 2011; Foster and Wilson, 2006; Gulati et al., 2014; Wilson and McNaughton, 1994).

While in vivo calcium imaging allows the same neuronal population to be recorded intermittently over long durations (Huber et al., 2012; Liberti et al., 2016; Peters et al., 2014; Rose et al., 2016; Ziv et al., 2013), photobleaching, phototoxicity, and cytotoxicity (Grienberger and Konnerth, 2012; Looger and Griesbeck, 2012), as well as the requirements for head-restraint in many versions of such experiments (Dombeck et al., 2007; Huber et al., 2012; Peters et al., 2014), make the method unsuitable for continuous long-term recordings. Calcium imaging also has relatively poor temporal resolution (Grienberger and Konnerth, 2012; Vogelstein et al., 2009), limiting its ability to resolve precise spike patterns (Vogelstein et al., 2010; Yaksi and Friedrich, 2006) (but see Gong et al., 2015 for an alternative high-speed voltage sensor). In contrast, extracellular recordings using electrode arrays can measure the activity of many single neurons simultaneously with sub-millisecond resolution (Buzsáki, 2004). Despite the unique benefits of continuous (24/7) long-term electrical recordings, they are not routinely performed. A major reason is the inherently laborious and difficult process of reliably and efficiently tracking the activity of single units from such longitudinal datasets (Einevoll et al., 2012), wherein firing rates of individual neurons can vary over many orders of magnitude (Hromádka et al., 2008; Mizuseki and Buzsáki, 2013) and spike waveforms change over time (Dickey et al., 2009; Emondi et al., 2004; Fraser and Schwartz, 2012; Okun et al., 2016; Santhanam et al., 2007; Tolias et al., 2007).

To address this, we designed and deployed a modular and low-cost recording system that enables fully automated long-term continuous extracellular recordings from large numbers of neurons in freely behaving rodents engaged in natural behaviors and prescribed tasks. To efficiently parse the large streams of neural data, we developed an unsupervised spike-sorting algorithm that automatically processes the raw data from electrode array recordings, and clusters spiking events into putative single units, tracking their activity over long timescales. We validated our algorithm on ground-truth datasets and found that it surpassed the performance of spike-sorting methods that assume stationarity of spike waveforms. We used this integrated system to record from motor cortex and striatum continuously over several months, and address an ongoing debate (Clopath et al., 2017; Lütcke et al., 2013) about whether the brain is stable (Ganguly and Carmena, 2009; Greenberg and Wilson, 2004; McMahon et al., 2014b; Peters et al., 2014; Rose et al., 2016) or not (Carmena et al., 2005; Huber et al., 2012; Liberti et al., 2016; Mankin et al., 2012; Morcos and Harvey, 2016; Rokni et al., 2007; Ziv et al., 2013) over long timescales, an issue that has been previously addressed using intermittent calcium imaging (Huber et al., 2012; Liberti et al., 2016; Morcos and Harvey, 2016; Peters et al., 2014; Rose et al., 2016; Ziv et al., 2013) and extracellular recordings (Carmena et al., 2005; Ganguly and Carmena, 2009; Greenberg and Wilson, 2004; Mankin et al., 2012; McMahon et al., 2014b; Rokni et al., 2007). Our continuous, long-term recordings revealed a remarkable stability in basic neuronal properties of isolated single units, such as firing rates and inter-spike interval distributions. Interneuronal correlations and movement tuning across a range of behaviors were similarly stable over several weeks.

Results

Infrastructure for automated long-term neural recordings in behaving animals

We developed experimental infrastructure for continuous long-term extracellular recordings in behaving rodents (Figure 1A, Figure 1—figure supplement 1). Our starting point was ARTS, a fully Automated Rodent Training System we previously developed (Poddar et al., 2013). In ARTS, the animal’s home-cage doubles as the experimental chamber, making it a suitable platform for continuous long-term recordings.

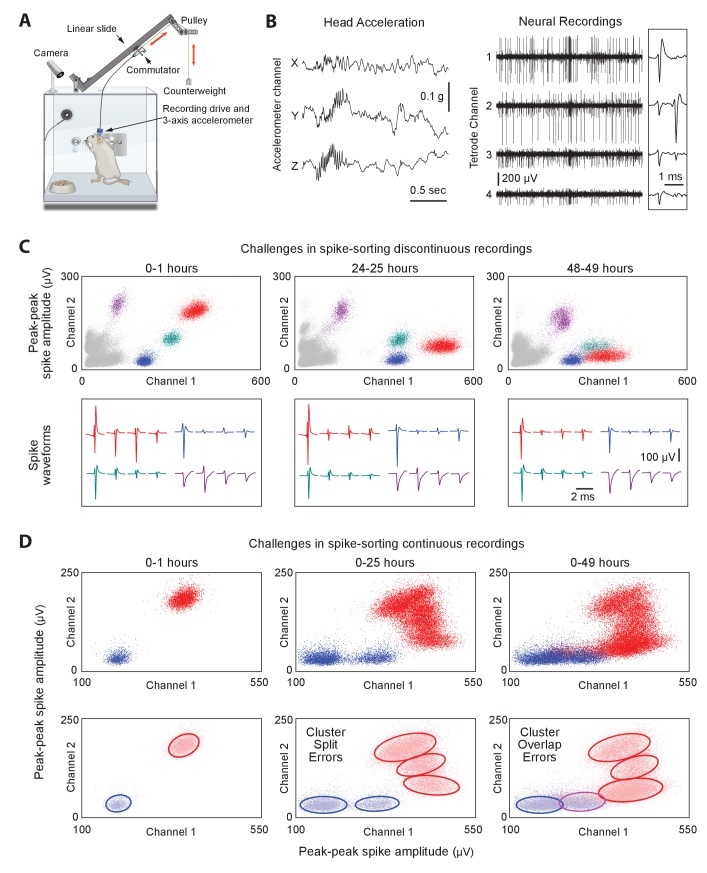

Figure 1. Long-term continuous neural and behavioral recordings in behaving rodents pose challenges for traditional methods of spike-sorting.

(A) Adapting our automated rodent training system (ARTS) for long-term electrophysiology. Rats engage in natural behaviors and prescribed motor tasks in their home-cages, while neural data is continuously acquired from implanted electrodes. The tethering cable connects the head-stage to a commutator mounted on a carriage that moves along a low-friction linear slide. The commutator-carriage is counterweighted to eliminate slack in the tethering cable. Behavior is continuously monitored and recorded using a camera and a 3-axis accelerometer. (B) Example of a recording segment showing high-resolution behavioral and neural data simultaneously acquired from a head-mounted 3-axis accelerometer (left) and a tetrode (right) implanted in the motor cortex, respectively. (Inset) A 2 ms zoom-in of the tetrode recording segment. (C) Drift in spike waveforms over time makes it difficult to identify the same units across discontinuous recording sessions. Peak-to-peak spike amplitudes (top) and spike waveforms (bottom) for four distinct units on the same tetrode for hour-long excerpts, at 24 hr intervals, from a representative long-term continuous recording in the rat motor cortex 4 months after electrode implantation. Different units are indicated by distinct colors. We tracked units over days using a novel spike-sorting algorithm we developed to cluster continuously recorded neural data (see Figure 2). (D) Continuous extracellular recordings pose challenges for spike-sorting methods assuming stationarity in spike shapes. (Top) Peak-to-peak spike amplitudes of two continuously recorded units (same as in C) accumulated over 1 hr (left), 25 hr (middle) and 49 hr (right). (Bottom) Drift in spike waveforms can lead to inappropriate splitting (middle, right panels) of single-units and/or merging (right panel) of distinct units, even though these two units are separable in the hour-long ‘sessions’ shown in C.

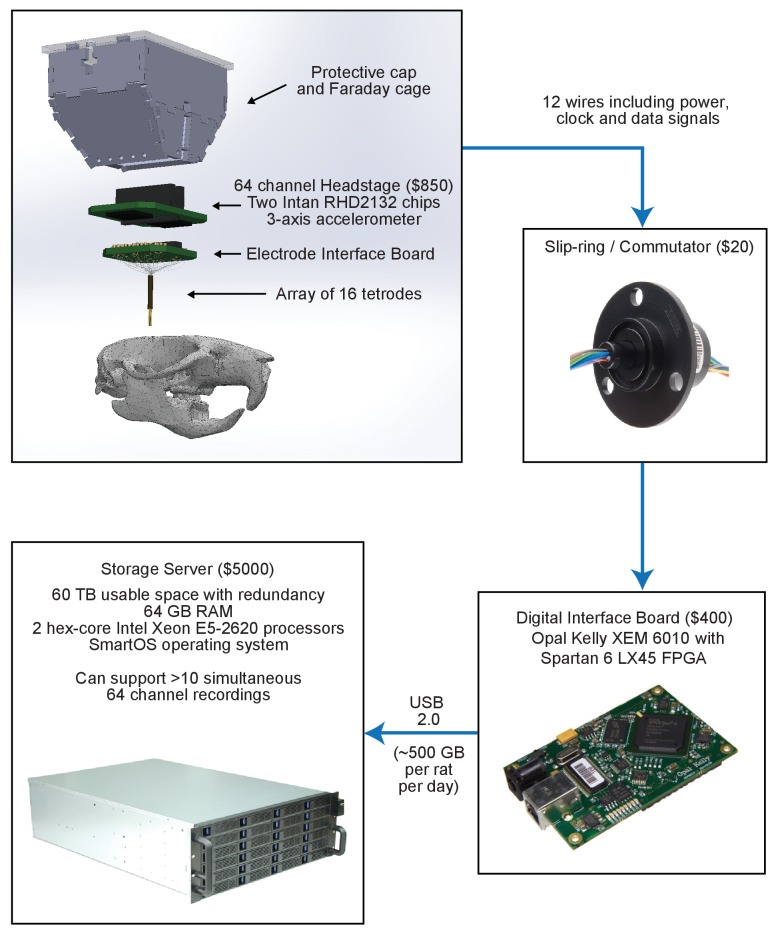

Figure 1—figure supplement 1. Overview of custom-built hardware for extracellular recordings in behaving rodents.

To ensure that animals remain reliably and comfortably connected to the recording apparatus over months-long experiments, we designed a variation on the standard tethering system that allows experimental subjects to move around freely while preventing them from reaching for (and chewing) the signal cable (Figure 1A; see Materials and methods for details). Our solution connects the implanted recording device via a cable to a passive commutator (Sutton and Miller, 1963) attached to a carriage that rides on a low-friction linear slide. The carriage is counterweighted by a pulley, resulting in a small constant upwards force (<10 g) on the cable that keeps it taut and out of the animal’s reach without unduly affecting its movements. The recording extension can easily be added to our custom home-cages, allowing animals that have been trained, prescreened, and deemed suitable for recordings to be implanted with electrode drives, and placed back into their familiar training environment (i.e. home-cage) for recordings.

Extracellular signals recorded in behaving animals from 16 implanted tetrodes at 30 kHz sampling rate are amplified and digitized on a custom-designed head-stage (Figure 1B and Figure 1—figure supplement 1, Materials and methods). To characterize the behavior of animals throughout the recordings, the head-stage features a 3-axis accelerometer that measures head movements at high temporal resolution (Venkatraman et al., 2010) (Figure 1A–B). We also record continuous video of the rats’ behavior with a wide-angle camera (30 fps) above the cage (Materials and methods). The large volumes of behavioral and neural data (~0.5 TB/day/rat) are streamed to custom-built high-capacity servers (Figure 1—figure supplement 1).
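As a rough check on the data volumes quoted above, the raw neural stream can be estimated from the channel count and sampling rate. The sketch below assumes 16-bit samples, which is our assumption for illustration and is not stated in the text.

```python
# Back-of-the-envelope estimate of the raw neural data rate per rat.
# Channel count and sampling rate are taken from the text.
N_CHANNELS = 64             # 16 tetrodes x 4 channels
SAMPLING_RATE_HZ = 30_000   # 30 kHz per channel
BYTES_PER_SAMPLE = 2        # ASSUMPTION: 16-bit samples (not stated in the text)
SECONDS_PER_DAY = 24 * 60 * 60

neural_bytes_per_day = (
    N_CHANNELS * SAMPLING_RATE_HZ * BYTES_PER_SAMPLE * SECONDS_PER_DAY
)
neural_tb_per_day = neural_bytes_per_day / 1e12  # decimal terabytes

print(f"Raw neural stream: {neural_tb_per_day:.2f} TB/day")
```

Under this assumption the neural stream alone would come to about a third of a terabyte per day; the ~0.5 TB/day/rat figure quoted above covers both behavioral and neural data.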

A fast automated spike tracker (FAST) for long-term neural recordings

Extracting single-unit spiking activity from raw data collected over weeks and months of continuous extracellular recordings presents a significant challenge for which there is currently no adequate solution. Parsing such large datasets must necessarily rely on automated spike-sorting methods. These face three major challenges (Rey et al., 2015a). First, they must reliably capture the activity of simultaneously recorded neurons whose firing rates can vary over many orders of magnitude (Hromádka et al., 2008; Mizuseki and Buzsáki, 2013). Second, they have to contend with spike shapes from recorded units changing significantly over time (Figure 1C) (Dickey et al., 2009; Emondi et al., 2004; Fraser and Schwartz, 2012). This can lead to sorting errors such as incorrect splitting of a single unit’s spikes into multiple clusters, or incorrect merging of spikes from multiple units in the same cluster (Figure 1D). Third, automated methods must be capable of processing very large numbers of spikes in a reliable and efficient manner (in our experience, >10¹⁰ spikes per rat over a time span of 3 months for 64-channel recordings).

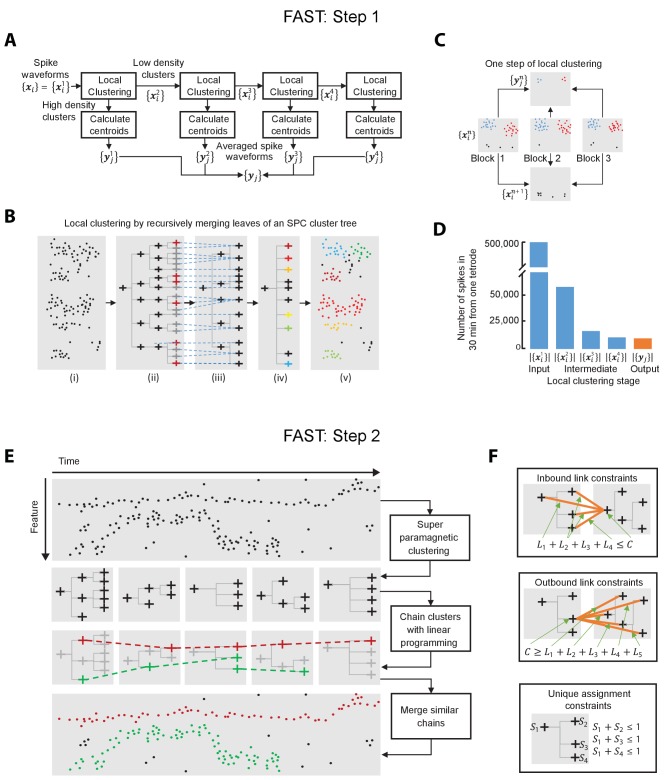

Here we present an unsupervised spike-sorting algorithm that meets these challenges and tracks single units over months-long timescales. Our Fast Automated Spike Tracker (FAST) (Poddar et al., 2017) comprises two main steps (Figure 2). First, to compress the datasets and normalize for large variations in firing rates between units, it applies ‘local clustering’ to create de-noised representations of spiking events in the data (Figure 2A–C). In a second step, FAST chains together de-noised spikes belonging to the same putative single unit over time using an integer linear programming algorithm (Figure 2D) (Vazquez-Reina et al., 2011). FAST is an efficient and modular data processing pipeline that, depending on overall spike rates, runs two to three times faster on our custom-built storage servers (Figure 1—figure supplement 1) than the rate at which the data (64 electrodes) is acquired. This implies that FAST could, in principle, also be used for online sorting, although we are currently running it offline.

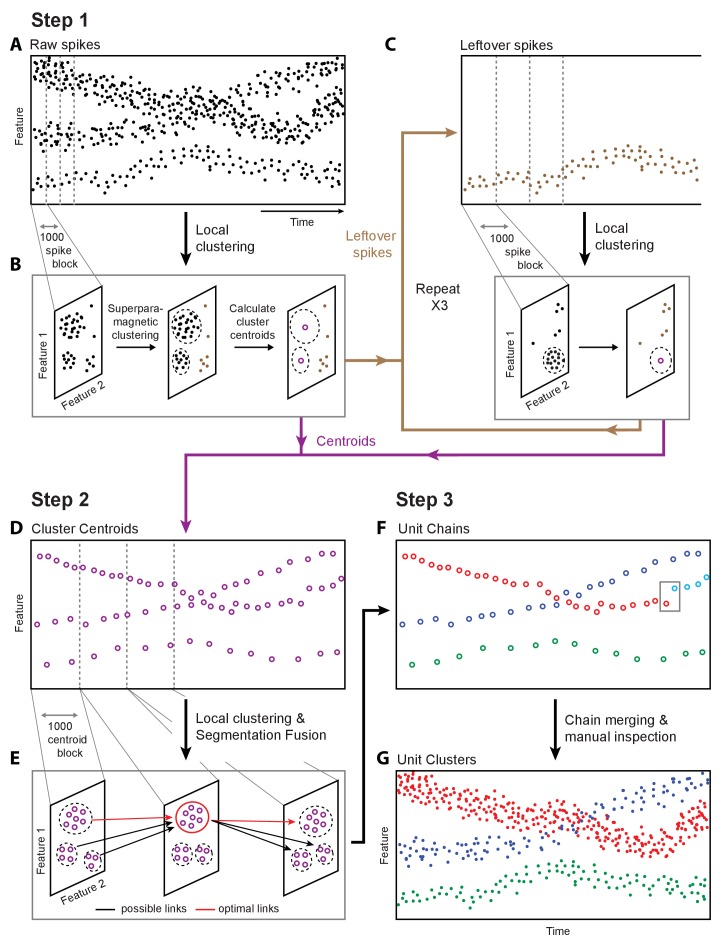

Figure 2. Overview of fast automated spike tracker (FAST), an unsupervised algorithm for spike-sorting continuous, long-term recordings.

(A–C) Step 1 of FAST. (A) Cartoon plot of spike waveform feature (such as amplitude) over time. Spikes are grouped into consecutive blocks of 1000 (indicated by gray dashed lines). (B) Superparamagnetic clustering is performed on each 1000 spike-block to compress and de-noise the raw spike dataset. Centroids (indicated by purple circles) of high-density clusters comprising more than 15 spikes are calculated. These correspond to units with higher firing rates. (C) Leftover spikes in low-density clusters (indicated by brown dots), corresponding to units with lower firing rates, are pooled into 1000 spike blocks and subject to the local clustering step. This process is repeated for a total of 4 iterations in order to account for spikes from units across a wide range of firing rates. Cluster centroids representing averaged spike waveforms from each round of local clustering are carried forward to Step 2 of FAST. (D–E) Step 2 of FAST. (D) Centroids from all rounds of local clustering in Step 1 are pooled into blocks of size 1000 (dashed grey lines) and local superparamagnetic clustering is performed on each block. (E) The resulting clusters are linked across time using a segmentation fusion algorithm to yield cluster-chains corresponding to single units. (F) Step 3 of FAST. In the final step, the output of the automated sorting (top) is visually inspected and similar chains merged across time to yield the final single-unit clusters (bottom).

Figure 2—figure supplement 1. Algorithm for identifying spikes from tetrode recordings.

Figure 2—figure supplement 2. Algorithms for local clustering of spike waveforms and linking cluster trees to track units over time (Steps 1 and 2 of FAST).

Figure 2—figure supplement 3. Recommended workflow for manual verification step of FAST.

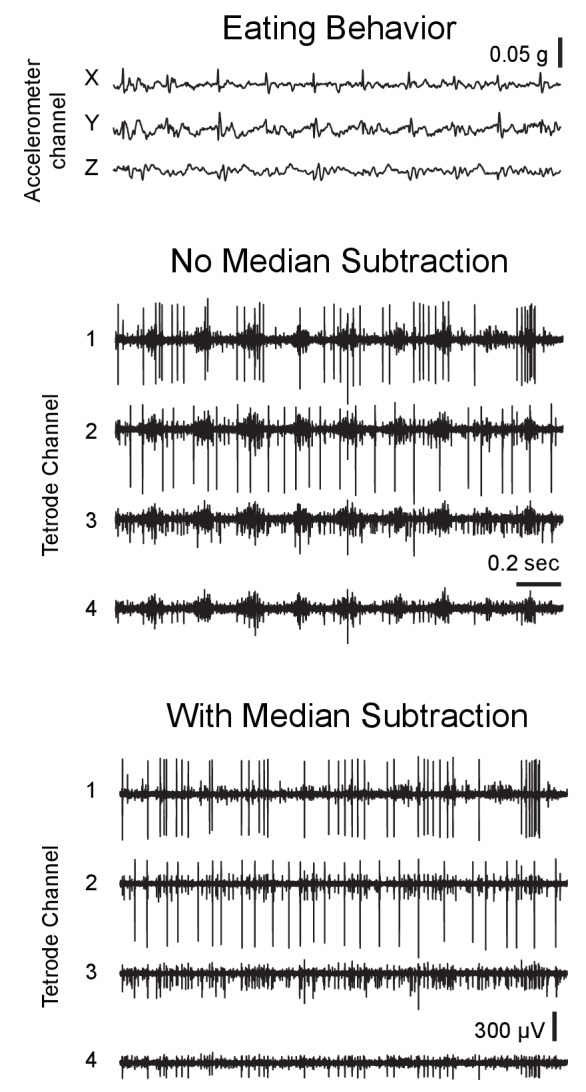

Figure 2—figure supplement 4. Effect of median subtraction on recording noise in behaving rats.

To parse and compress the raw data, FAST first identifies and extracts spike events (‘snippets’) by bandpass filtering and thresholding each electrode channel (Materials and methods, Figure 2—figure supplement 1). Four or more rounds of clustering are then performed on blocks of 1000 consecutive spike ‘snippets’ by means of an automated superparamagnetic clustering routine, a step we call ‘local clustering’ (Blatt et al., 1996; Quiroga et al., 2004) (Materials and methods, Figure 2B and Figure 2—figure supplement 2A–D). Spikes in a block that belong to the same cluster are replaced by their centroid, a step that effectively de-noises and compresses the data by representing groups of similar spikes with a single waveform. The number of spikes per block was empirically determined to balance the trade-off between computation time and accuracy of superparamagnetic clustering (see Materials and methods). The goal of this step is not to reliably find all spike waveforms associated with a single unit, but to be reasonably certain that the waveforms being averaged over are similar enough to be from the same single unit.

Due to large differences in firing rates between units, the initial blocks of 1000 spikes will be dominated by high firing rate units. Spikes from more sparsely firing cells that do not contribute at least 15 spikes to a cluster in a given block are carried forward to the next round of local clustering, where previously assigned spikes have been removed (Materials and methods, Figure 2C, Figure 2—figure supplement 2A–D). Applying this method of pooling and local clustering sequentially four times generates a de-noised dataset that accounts for large differences in the firing rates of simultaneously recorded units (Figure 2C, Materials and methods).
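The pooling-and-reclustering logic described above can be sketched as follows. This is a minimal illustration, not the FAST implementation: the function names are hypothetical, spikes are reduced to a single amplitude feature, and a simple gap-based grouping (`toy_cluster`) stands in for superparamagnetic clustering. Only the block size (1000), the minimum cluster size (15) and the number of rounds (4) come from the text.

```python
from statistics import mean

BLOCK_SIZE = 1000       # spikes per block (from the text)
MIN_CLUSTER_SIZE = 15   # spikes needed to form a high-density cluster (from the text)
N_ROUNDS = 4            # rounds of pooling and local clustering (from the text)


def toy_cluster(amplitudes, tol):
    """Stand-in for superparamagnetic clustering (ASSUMPTION): sort the
    amplitudes and start a new cluster wherever neighbors differ by > tol."""
    order = sorted(range(len(amplitudes)), key=lambda i: amplitudes[i])
    clusters, current = [], [order[0]]
    for prev, idx in zip(order, order[1:]):
        if amplitudes[idx] - amplitudes[prev] > tol:
            clusters.append(current)
            current = []
        current.append(idx)
    clusters.append(current)
    return clusters


def local_clustering(spikes, tol=5.0):
    """spikes: list of (time, amplitude) tuples sorted by time.
    Returns (centroids, leftovers); each centroid is (mean time, mean amplitude, n)."""
    centroids, pool = [], list(spikes)
    for _ in range(N_ROUNDS):
        leftovers = []
        for start in range(0, len(pool), BLOCK_SIZE):
            block = pool[start:start + BLOCK_SIZE]
            for members in toy_cluster([a for _, a in block], tol):
                if len(members) >= MIN_CLUSTER_SIZE:
                    # Replace a dense cluster by its centroid (de-noise, compress).
                    centroids.append((mean(block[i][0] for i in members),
                                      mean(block[i][1] for i in members),
                                      len(members)))
                else:
                    # Sparse spikes are carried forward to the next round.
                    leftovers.extend(block[i] for i in members)
        pool = sorted(leftovers)
        if not pool:
            break
    return centroids, pool


# Toy data: a high-rate unit (amplitude 100) and a sparse unit (amplitude 200)
# that contributes only 10 spikes to each block of 1000.
spikes = [(float(t), 200.0 if t % 100 == 0 else 100.0) for t in range(3000)]
centroids, leftovers = local_clustering(spikes)
```

In this toy example the sparse unit never reaches 15 spikes within a first-round block, but its pooled leftovers form a dense cluster in the second round, which is the behavior the iterative scheme is meant to achieve.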

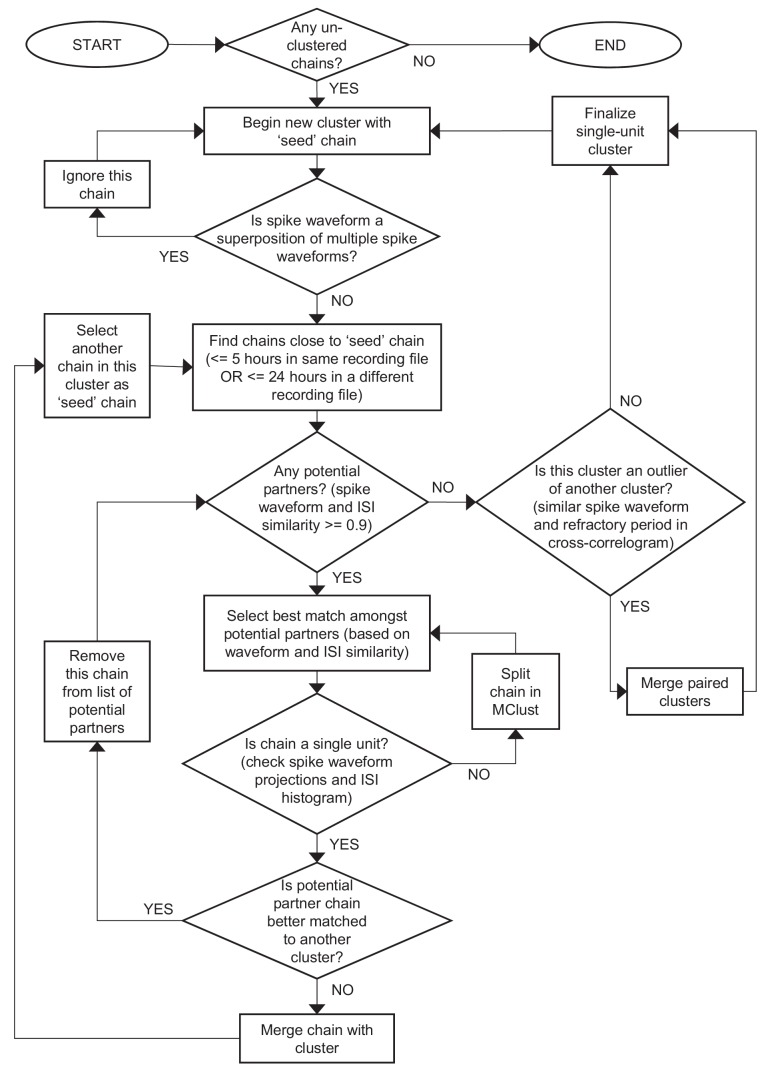

The second step of the FAST algorithm is inspired by an automated method (‘segmentation fusion’) that links similar elements over cross-sections of longitudinal datasets in a globally optimal manner (Materials and methods, Figure 2D–E, Figure 2—figure supplement 2E–F). Segmentation fusion has been used to trace processes of individual neurons across stacks of two-dimensional serial electron-microscope images (Kasthuri et al., 2015; Vazquez-Reina et al., 2011). We adapted this method to link similar de-noised spikes across consecutive blocks into ‘chains’ containing the spikes of putative single units over longer timescales (Figure 2F). This algorithm allows us to automatically track the same units over days and weeks of recording.
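To make the idea of chaining centroids across consecutive blocks concrete, the sketch below links each centroid to its nearest unclaimed neighbor in the next block. It is deliberately simplified: FAST uses a globally optimal integer linear programming formulation (segmentation fusion), whereas this stand-in is a greedy one-to-one matcher. The function name, the two-dimensional feature vectors and the distance threshold are all hypothetical.

```python
import math


def link_blocks(blocks, max_dist):
    """Greedy stand-in for the linking step (NOT segmentation fusion).

    blocks: list of blocks, each a list of centroid feature vectors (tuples).
    Returns chains, each a list of (block_index, centroid_index) pairs."""
    chains = []
    open_chains = {}  # centroid index in the previous block -> its chain
    for b, centroids in enumerate(blocks):
        new_open = {}
        if b > 0:
            # All candidate links between the previous block and this one,
            # sorted so that the closest pairs are linked first.
            pairs = sorted(
                (math.dist(blocks[b - 1][i], c), i, j)
                for i in open_chains
                for j, c in enumerate(centroids)
            )
            used_prev, used_next = set(), set()
            for dist, i, j in pairs:
                if dist > max_dist:
                    break
                if i in used_prev or j in used_next:
                    continue
                used_prev.add(i)
                used_next.add(j)
                open_chains[i].append((b, j))
                new_open[j] = open_chains[i]
        # Centroids without a link start a new chain.
        for j in range(len(centroids)):
            if j not in new_open:
                chain = [(b, j)]
                chains.append(chain)
                new_open[j] = chain
        open_chains = new_open
    return chains


# Toy example: unit A drifts slowly, unit B disappears after the second
# block, and a new unit C appears in the third block.
blocks = [
    [(100.0, 50.0), (60.0, 80.0)],
    [(61.0, 80.0), (103.0, 50.0)],
    [(106.0, 50.0), (20.0, 20.0)],
]
chains = link_blocks(blocks, max_dist=10.0)
```

Because links are only made between adjacent blocks, a unit whose waveform drifts gradually can stay in one chain even when its first and last centroids are far apart, which is the property that makes continuous recordings easier to track than discontinuous ones.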

In a final post-processing and verification step, we use a semi-automated method (Dhawale et al., 2017a) to link ‘chains’ belonging to the same putative single unit together, and perform visual inspection of each unit (Figure 2F–G and Figure 2—figure supplement 3). A detailed description of the various steps involved in the automated spike-sorting can be found in Materials and methods. Below, we describe how we validated the spike-sorting performance of FAST using ground-truth datasets.

Validation of FAST on ground-truth datasets

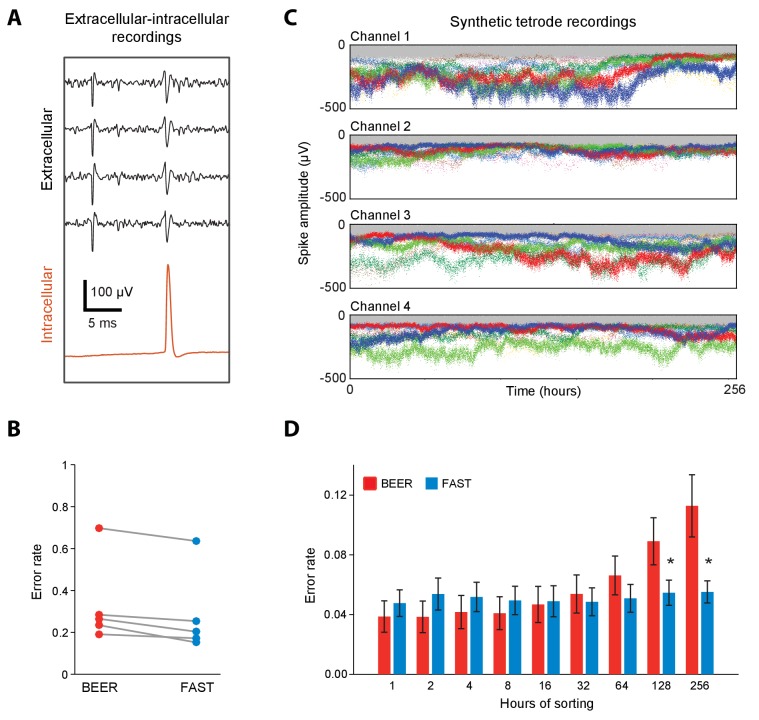

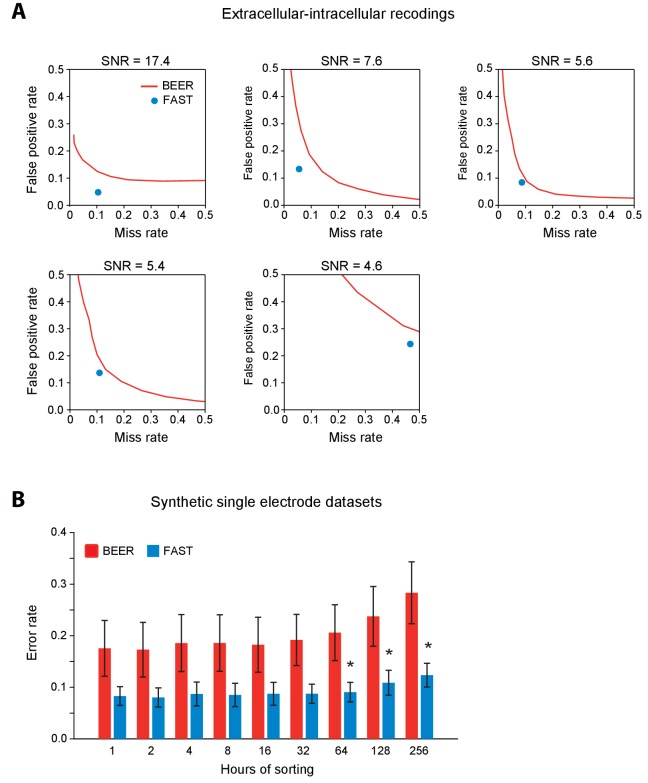

To measure the spike-sorting capabilities of FAST, we used a publicly available dataset (Harris et al., 2000; Henze et al., 2009) comprising paired intracellular and extracellular (tetrode) recordings in the anesthetized rat hippocampus. The intracellular recordings can be used to determine the true spike-times for any unit also captured simultaneously on the tetrodes (Figure 3A). To benchmark the performance of FAST, we compared its error rate to that of the best ellipsoidal error rate (BEER), a measure which represents the optimal performance achievable by standard clustering methods (Harris et al., 2000) (see Materials and methods). We found that FAST performed as well as BEER on these relatively short (4–6 min long) recordings (Figure 3B and Figure 3—figure supplement 1A, n = 5 recordings).
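The error rate used in this comparison is the number of misclassified spikes (false positives plus false negatives) divided by the number of ground-truth spikes. A minimal sketch of that calculation is given below; the function name and the 0.5 ms matching tolerance are assumptions for illustration and are not taken from the text.

```python
def sorting_error_rate(sorted_times, truth_times, tol=0.0005):
    """Error rate = (false positives + false negatives) / ground-truth spikes.

    Both inputs are ascending lists of spike times in seconds. A sorted spike
    counts as a hit if it lies within `tol` seconds of an unmatched
    ground-truth spike (the tolerance is a HYPOTHETICAL choice)."""
    i = j = matched = 0
    while i < len(sorted_times) and j < len(truth_times):
        diff = sorted_times[i] - truth_times[j]
        if abs(diff) <= tol:
            matched += 1
            i += 1
            j += 1
        elif diff < 0:
            i += 1  # sorted spike with no ground-truth partner (false positive)
        else:
            j += 1  # ground-truth spike that was missed (false negative)
    false_positives = len(sorted_times) - matched
    false_negatives = len(truth_times) - matched
    return (false_positives + false_negatives) / len(truth_times)


truth = [0.010, 0.050, 0.120, 0.300]       # intracellular (ground-truth) spikes
sorted_unit = [0.0101, 0.0502, 0.200, 0.3003]  # spikes assigned by the sorter
error_rate = sorting_error_rate(sorted_unit, truth)
```

In this toy example three spikes match, one ground-truth spike is missed and one sorted spike is spurious, so the error rate is 2/4. Note that with this definition the rate can exceed 1 when false positives are numerous.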

Figure 3. Validation of spike-sorting performance of FAST using ground-truth datasets.

(A) Example traces of simultaneous extracellular tetrode (black) and intracellular electrode (red) recordings from the anesthetized rat hippocampus (Harris et al., 2000; Henze et al., 2009). The intracellular trace can be used to identify ground-truth spike times of a single unit recorded on the tetrode. (B) Spike-sorting error rate of FAST on the paired extracellular-intracellular recording datasets (n = 5) from (Henze et al., 2009), in comparison to the best ellipsoidal error rate (BEER), a measure of the optimal performance of standard spike-sorting algorithms (see Materials and methods, Harris et al., 2000). The error rate was calculated by dividing the number of misclassified spikes (sum of false positives and false negatives) by the number of ground-truth spikes for each unit. (C) A representative synthetic tetrode recording dataset in which we model realistic fluctuations in spike amplitudes over 256 hr (see Materials and methods). The four plots show simulated spike amplitudes on the four channels of a tetrode. Colored dots indicate spikes from eight distinct units while gray dots represent multi-unit background activity. For visual clarity, we have plotted the amplitude of every 100th spike in the dataset. (D) Spike-sorting error rates of FAST (blue bars) applied to the synthetic tetrode datasets (n = 48 units from six tetrodes), in comparison to the BEER measure (red bars) over different durations of the simulated recordings. Error-bars represent standard error of the mean. * indicates p<0.05 after applying the Šidák correction for multiple comparisons.

Figure 3—figure supplement 1. Validation of spike-sorting performance of FAST using ground-truth extracellular-intracellular recordings and synthetic single electrode datasets.

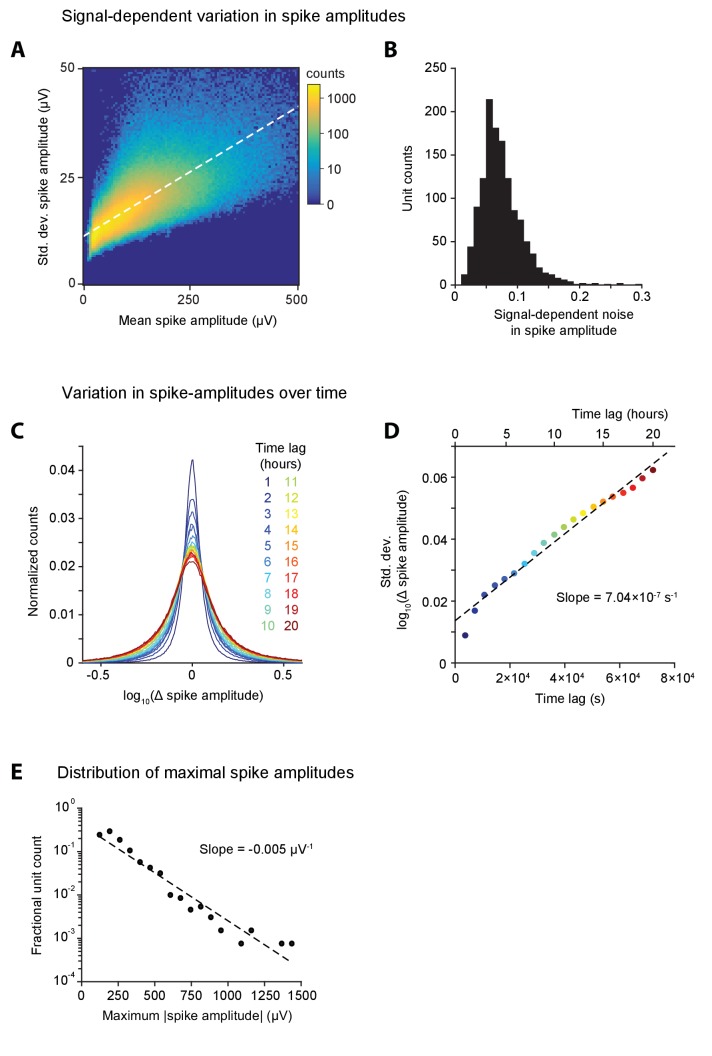

Figure 3—figure supplement 2. Analyzing long-term recordings in the DLS and MC to characterize variation in unit spike amplitude.

Next we wanted to quantify FAST’s ability to isolate and track units over time despite non-stationarity in their spike waveforms. Because experimental ground-truth datasets recorded continuously over days to weeks are not available, we constructed synthetic tetrode recordings (Dhawale et al., 2017b; Martinez et al., 2009) from eight distinct units whose spike amplitudes fluctuated over 256 hr (10.7 days) following a geometric random walk process (Rossant et al., 2016) (Figure 3C, Figure 3—figure supplement 2, and Materials and methods). To make these synthetic recordings as realistic as possible, we measured several parameters, including the variance of the random walk process, the distribution of spike amplitudes, and the degree of signal-dependent noise, using our own long-term recordings in the rodent motor cortex and striatum (see Figure 3—figure supplement 2, Materials and methods, and the section ‘Continuous long-term recordings from striatum and motor cortex’). We then compared the sorting performance of FAST to BEER on increasingly larger subsets – from 1 hr to the full 256 hr – of the synthetic recordings (n = 48 units from 6 simulated tetrodes). We expected the performance of BEER to decrease, on average, with accumulated recording time, as drift increases the likelihood of overlap between different units in spike waveform feature space, and this is what we observed (Figure 3D). In contrast, FAST, which performed comparably to BEER for short recording durations, significantly outperformed it when the recordings spanned days (Figure 3D). FAST also performed better than BEER over recording durations longer than 64 hr for single electrode recording configurations that are commonly used in non-human primates (Figure 3—figure supplement 1B).

The large differences in the sorting error rates between the experimental (Figure 3B) and synthetic datasets (Figure 3D) can be largely attributed to the relatively small spike amplitudes (<100 µV) (Harris et al., 2000) and, consequently, lower signal-to-noise ratio (SNR = 8.1 ± 5.3, mean ± SD, n = 5 units) of units identified in both intracellular and extracellular recordings, in comparison to our in vivo extracellular recordings on which the simulated datasets are modeled (SNR = 15.0 ± 7.3, n = 2572 units, Figure 4F).
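For readers unfamiliar with the geometric random walk used to model amplitude drift in the synthetic recordings, the sketch below shows the basic process: the logarithm of the amplitude takes Gaussian steps, so the amplitude changes multiplicatively and stays positive. The function name, starting amplitude, step size and hourly time step are illustrative assumptions, not the parameters measured from the recordings.

```python
import math
import random


def geometric_random_walk(a0, sigma, n_steps, seed=0):
    """Simulate amplitude drift as a geometric random walk.

    a0: starting amplitude (e.g. in microvolts); sigma: standard deviation of
    each Gaussian step in log-amplitude. All values used here are HYPOTHETICAL."""
    rng = random.Random(seed)
    amplitudes = [a0]
    for _ in range(n_steps):
        # Multiplicative update: log-amplitude performs an ordinary random walk.
        amplitudes.append(amplitudes[-1] * math.exp(rng.gauss(0.0, sigma)))
    return amplitudes


# One step per hour over 256 hr, starting from a 150 microvolt unit.
drift = geometric_random_walk(a0=150.0, sigma=0.02, n_steps=256)
```

Because the variance of log-amplitude grows with the number of steps, two units that are well separated over one hour become increasingly likely to overlap in amplitude space as more hours are pooled, which is the effect that penalizes methods assuming stationary waveforms.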

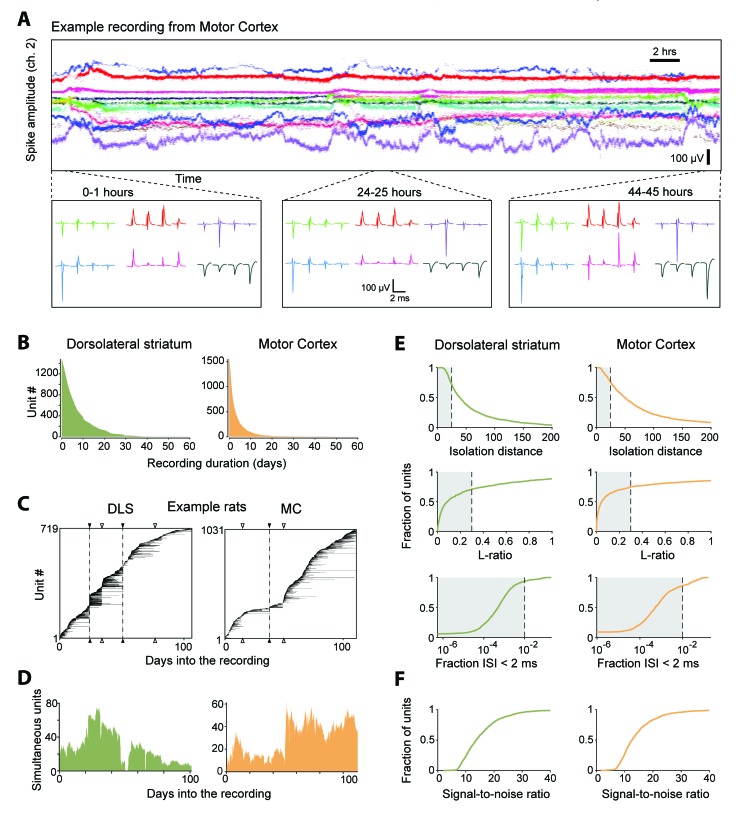

Figure 4. Single units isolated and tracked from continuous months-long recordings made in dorsolateral striatum (DLS) and motor cortex (MC) of behaving rats.

(A) The results of applying FAST to a representative continuous tetrode recording in the rodent motor cortex spanning 45 hr. Each point represents spike amplitudes on channel 2 of a tetrode, with different colors representing distinct units. For visual clarity we have plotted the amplitude of de-noised spike centroids (see Figure 2), not raw spike amplitudes. Insets (bottom) show average spike waveforms of six example units on the four channels of the tetrode at 0, 24 and 44 hr into the recording. (B) Holding times for all units recorded in the DLS (left, green) and MC (right, orange), sorted by duration. (C) Temporal profile of units recorded in DLS (left) and MC (right) from two example rats over a period of ~3 months. Unit recording times are indicated by black bars, and are sorted by when they were first identified in the recording. Black triangles and dotted lines indicate times at which the electrode array was intentionally advanced into the brain by turning the micro-drive. Open triangles indicate times at which the population of recorded units changed spontaneously. (D) Number of simultaneously recorded units in the DLS (left, green) and MC (right, orange) as a function of time in the example recordings shown in C. (E) Cumulative distributions of average cluster isolation quality for all units recorded in DLS (left, green) and MC (right, orange). Cluster quality was measured by the isolation distance (top), L-ratio (middle), and fraction of inter-spike intervals under 2 ms (bottom). Dotted lines mark the quality thresholds for each of these measures. Shaded regions denote acceptable values. (F) Cumulative distributions of average signal-to-noise ratios for all units recorded in DLS (left, green) and MC (right, orange).

Figure 4—figure supplement 1. Benchmarking the performance of discontinuous unit-tracking methods using continuous long-term datasets sorted by FAST.

Having validated FAST’s spike-sorting performance on both experimental and synthetic datasets, we next describe how our algorithm parses data acquired from continuous long-term tetrode recordings, but we note that it can be adapted to efficiently analyze other types of electrode array or single electrode recordings – whether continuous or intermittent.

Continuous long-term recordings from striatum and motor cortex

To demonstrate the utility of our experimental platform and analysis pipeline for long-term neural recordings, we implanted tetrode drives (16 tetrodes, 64 channels) into dorsolateral striatum (n = 2) or motor cortex (n = 3) of Long Evans rats (Materials and methods). We recorded electrophysiological and behavioral data continuously (Figure 4A), with only brief interruptions, for more than 3 months. We note that our recordings terminated not because of technical issues with the implant or recording apparatus, but because we deemed the experiments to have reached their end points.

We used our automated spike-sorting method (FAST) to cluster spike waveforms into putative single units, isolating a total of 1550 units from motor cortex and 1477 units from striatum (Figure 4). On average, we could track single units over several days (mean: 3.2 days for motor cortex and 7.4 days for striatum), with significant fractions of units tracked continuously for more than a week (11% and 36% in motor cortex and striatum, respectively) and even a month (0.4% in motor cortex and 1.7% in striatum, Figure 4B). Periods of stable recordings were interrupted by either intentional advancement of the electrode drive or spontaneous ‘events’ likely related to the sudden movement of the electrodes (Figure 4C). On average, we recorded simultaneously from 19 ± 15 units in motor cortex and 41 ± 20 units in striatum (mean ± standard deviation) (two example rats shown in Figure 4D).

The quality of single unit isolation was assessed by computing quantitative measures of cluster quality, that is, cluster isolation distance (Harris et al., 2000), L-ratio (Schmitzer-Torbert et al., 2005), and presence of a clean refractory period (Hill et al., 2011; Lewicki, 1998) (Figure 4E). Assessing the mean cluster quality of the units over their recording lifetimes, we found that 61.4% of motor cortical (n = 952) and 64.6% of striatal units (n = 954) satisfied our conservative criteria (Quirk et al., 2009; Schmitzer-Torbert et al., 2005; Sigurdsson et al., 2010) (isolation distance ≥ 25, L-ratio ≤ 0.3, and fraction of ISIs below 2 ms ≤ 1%). However, 83.7% of motor cortical units (n = 1298) and 93.2% of striatal units (n = 1376) met these criteria for at least one hour of recording. The average signal-to-noise ratio of units isolated from the DLS and MC was found to be 15.4 ± 7.4 and 14.5 ± 7.2, respectively (Figure 4F).
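The three quality criteria can be applied as a simple filter. The sketch below computes the refractory-period measure directly from spike times and takes isolation distance and L-ratio as precomputed inputs, since their calculation depends on feature-space details not covered here. The function names are hypothetical; the thresholds are those quoted above.

```python
def isi_violation_fraction(spike_times, refractory=0.002):
    """Fraction of inter-spike intervals shorter than `refractory` seconds."""
    isis = [b - a for a, b in zip(spike_times, spike_times[1:])]
    if not isis:
        return 0.0
    return sum(isi < refractory for isi in isis) / len(isis)


def passes_quality(isolation_distance, l_ratio, isi_fraction):
    """Apply the thresholds from the text: isolation distance of at least 25,
    L-ratio of at most 0.3, and at most 1% of ISIs below 2 ms."""
    return isolation_distance >= 25 and l_ratio <= 0.3 and isi_fraction <= 0.01


# Toy spike train (seconds) with one 1 ms interval among four ISIs.
toy_spikes = [0.000, 0.010, 0.011, 0.050, 0.120]
violation = isi_violation_fraction(toy_spikes)

# Isolation distance and L-ratio values below are made up for illustration.
contaminated_ok = passes_quality(30.0, 0.1, violation)
clean_ok = passes_quality(30.0, 0.1, 0.005)
```

A unit must satisfy all three thresholds at once, so a cluster that is well separated in feature space is still rejected if too many of its intervals fall inside the refractory period.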

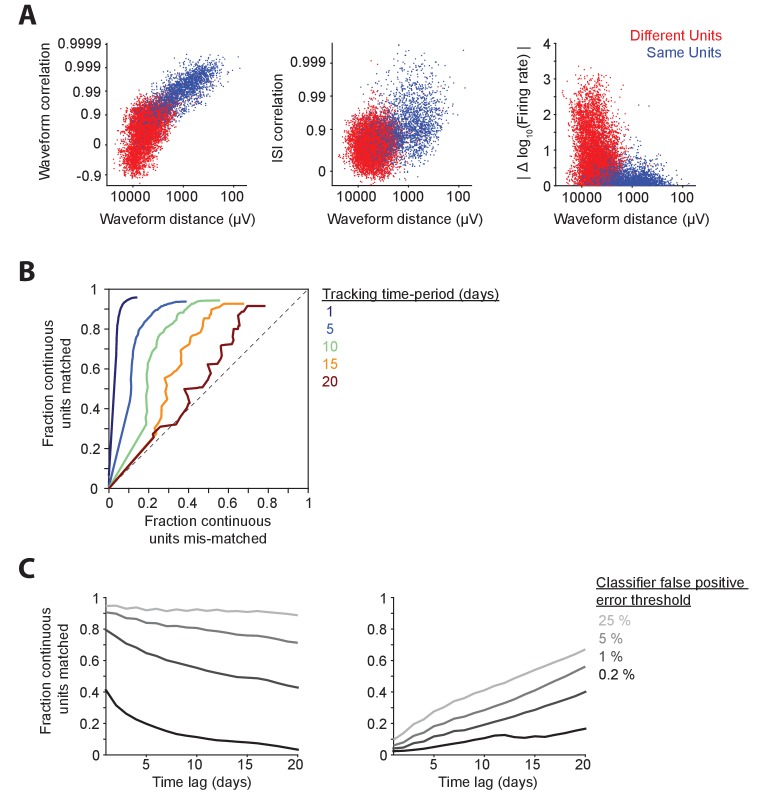

Previous attempts to track populations of extracellularly recorded units over time have relied on matching units across discontinuous recording sessions based on similarity metrics such as spike waveform distance (Dickey et al., 2009; Emondi et al., 2004; Fraser and Schwartz, 2012; Ganguly and Carmena, 2009; Greenberg and Wilson, 2004; Jackson and Fetz, 2007; McMahon et al., 2014a; Okun et al., 2016; Thompson and Best, 1990; Tolias et al., 2007) and activity measures including firing rates and inter-spike interval histograms (Dickey et al., 2009; Fraser and Schwartz, 2012). Given that spike waveforms of single-units can undergo significant changes even within a day (Figure 1C,D), a major concern with discontinuous tracking methods is the difficulty in verifying their performance. Since we were able to reliably track units continuously over weeks, we used the sorted single-units as ‘ground truth’ data with which to benchmark the performance of day-by-day discontinuous tracking methods (Figure 4—figure supplement 1). In our data set, we found discontinuous tracking over day-long gaps to be highly error-prone (Figure 4—figure supplement 1B–C). When applying tolerant thresholds for tracking units across time, a large fraction of distinct units were labeled as the same (false positives), while at conservative thresholds only a small proportion of the units were reliably tracked across days (Figure 4—figure supplement 1C).

The ability to record and analyze the activity of large populations of single units continuously over days and weeks allows neural processes that occur over long timescales to be interrogated. Below we analyze and describe single neuron activity, interneuronal correlations, and the relationship between neuronal dynamics and different behavioral states, over weeks-long timescales.

Stability of neural activity

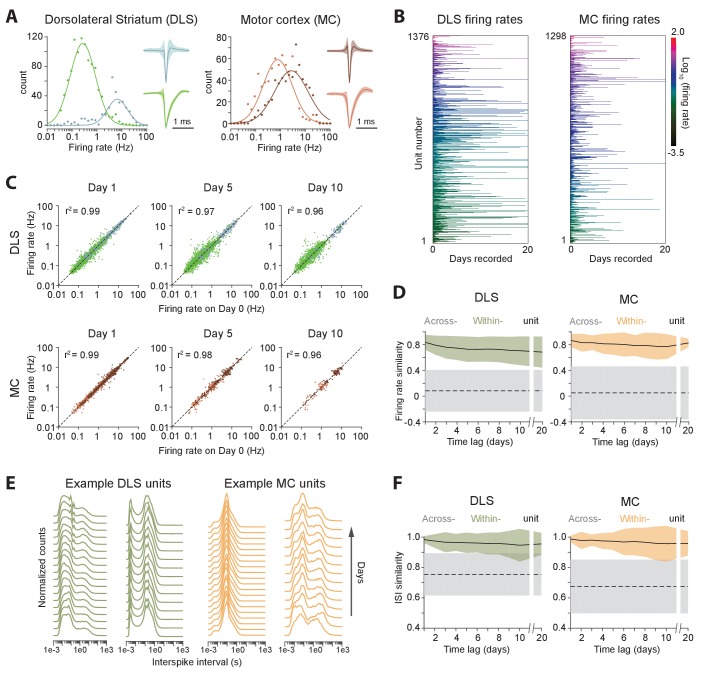

We clustered motor cortical and striatal units into putative principal neurons and interneurons based on their spike shapes and firing rates (Barthó et al., 2004; Berke et al., 2004; Connors and Gutnick, 1990), thus identifying 366 fast spiking and 1111 medium spiny neurons in striatum, and 686 fast spiking and 864 regular spiking neurons in motor cortex (Figure 5A). Consistent with previous reports (Hromádka et al., 2008; Mizuseki and Buzsáki, 2013), average firing rates appeared log-normally distributed and varied over more than three orders of magnitude (from 0.029 Hz to 40.2 Hz in striatum, and 0.031 Hz to 44.8 Hz in motor cortex; Figure 5A).
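The span of firing rates quoted above can be checked with a short calculation of the number of decades between the lowest and highest average rates in each area. The variable names are ours; the rates are those reported in the text.

```python
import math

# (lowest, highest) average firing rates in Hz, as reported in the text.
rate_ranges_hz = {
    "striatum": (0.029, 40.2),
    "motor cortex": (0.031, 44.8),
}

# Orders of magnitude spanned = log10 of the ratio of highest to lowest rate.
orders_of_magnitude = {
    area: math.log10(highest / lowest)
    for area, (lowest, highest) in rate_ranges_hz.items()
}

for area, decades in orders_of_magnitude.items():
    print(f"{area}: {decades:.2f} orders of magnitude")
```

Both areas come out slightly above three decades, consistent with the statement that rates varied over more than three orders of magnitude.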

Figure 5. Long-term stability of single unit activity.

(A) Histograms of average firing rates for units recorded in DLS (left) and MC (right). Putative cell-types, medium spiny neurons (MSN, blue) and fast-spiking interneurons (FSI, green) in DLS, and regular spiking (RS, brown) and fast spiking (FS, red) neurons in the MC, were classified based on spike shape and firing rate (Materials and methods). The continuous traces are log-normal fits to the firing rate distributions of each putative cell-type. Insets show average peak-normalized waveform shapes for MSNs (left-bottom) and FSIs (left-top), and RS (right-bottom) and FS (right-top) neurons. Shading represents the standard deviation of the spike waveforms. (B) Firing rates of DLS (left) and MC (right) units over 20 days of recording. The color scale indicates firing rate on a log-scale, calculated in one-hour blocks. Units have been sorted by average firing rate. (C) Scatter plots of unit firing rates over time-lags of 1 (left), 5 (middle) and 10 (right) days for DLS (top) and MC (bottom). The dashed lines indicate equality. Every dot is a comparison of a unit’s firing from a baseline day to 1, 5 or 10 days later. The color of the dot indicates putative cell-type as per (A). Each unit may contribute multiple data points, depending on the length of the recording. Day 1: n = 4398 comparisons for striatum and n = 1458 for cortex; Day 5: n = 2471 comparisons for striatum and n = 615 for cortex; Day 10: n = 1347 comparisons for striatum and n = 268 for cortex. (D) Stability of unit firing rates over time. The firing rate similarity (see Materials and methods) was measured across time-lags of 1 to 20 days for the same unit (within-unit, solid lines), or between simultaneously recorded units (across-unit, dashed lines) in DLS (left) and MC (right). Colored shaded regions indicate the standard deviation of within-unit firing rate similarity, over all units. Grey shaded regions indicate standard deviation of across-unit firing rate similarity, over all time-bins. 
(E) Inter-spike interval (ISI) histograms for example units in DLS (left, green) and MC (right, orange) over two weeks of continuous recordings. Each line represents the normalized ISI histogram measured on a particular day. (F) Stability of unit ISI distributions over time. Correlations between ISI distributions were measured across time-lags of 1 to 20 days for the same unit (within-unit, solid lines), or between simultaneously recorded units (across-unit, dashed lines) in DLS (left) and MC (right). Colored shaded regions indicate the standard deviation of within-unit ISI similarity, over all units. Grey shaded regions indicate standard deviation of across-unit ISI similarity, over all time-bins.

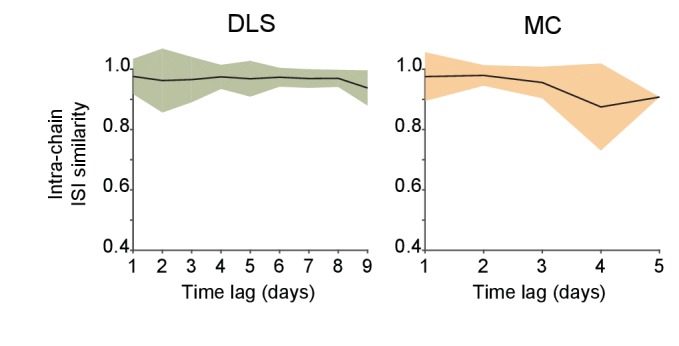

Figure 5—figure supplement 1. Stability over time of ISI distributions computed from spike chains that were automatically identified by FAST.

Average firing rates remained largely unchanged even over 20 days of recording (Figure 5B–D), suggesting that activity levels of single units in both cortex and striatum are stable over long timescales, and that individual units maintain their own firing rate set-point (Hengen et al., 2016; Marder and Goaillard, 2006). We next asked whether neurons also maintain second-order spiking statistics. The inter-spike interval (ISI) distribution is a popular metric that is sensitive to a cell’s mode of spiking (bursting, tonic etc.) and other intrinsic properties, such as refractoriness and spike frequency adaptation. Similar to firing rate, we found that the ISI distribution of single units remained largely unchanged across days (Figure 5E–F). To verify whether our ISI similarity criterion for manual merging of FAST-generated spike chains (see Materials and methods) biased our measurements of ISI stability, we also analyzed the stability of the ISI computed from chains generated by the fully automated steps 1 and 2 of FAST, and found it to be similarly stable over multiple days (Figure 5—figure supplement 1).

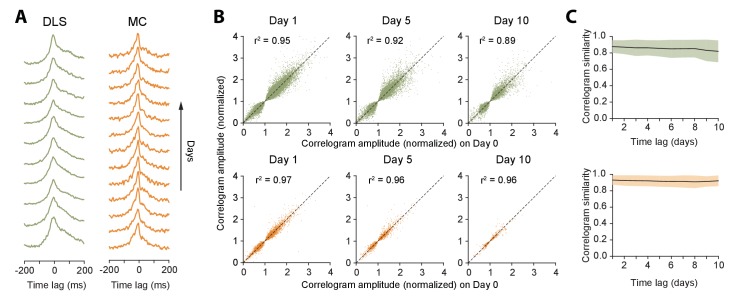

Measures of single unit activity do not adequately address the stability of the network in which the neurons are embedded, as they do not account for interneuronal correlations (Abbott and Dayan, 1999; Ecker et al., 2010; Nienborg et al., 2012; Okun et al., 2015; Salinas and Sejnowski, 2001; Singer, 1999). To address this, we calculated the cross-correlograms of all simultaneously recorded neuron pairs (Figure 6A, Materials and methods). We found that 21.1% of striatal pairs (n = 18697 pairs) and 37.6% of motor cortex pairs (n = 9235 pairs) were significantly correlated (Materials and methods). The average absolute time-lag of significantly correlated pairs was 13.0 ± 26.3 ms (median: 4 ms) and 23.9 ± 28.8 ms (median: 13.0 ms) for striatum and motor cortex, respectively. The pairwise spike correlations were remarkably stable, remaining essentially unchanged even after 10 days (Figure 6B–C), consistent with a very stable underlying network (Grutzendler et al., 2002; Yang et al., 2009).

Figure 6. Stability of network dynamics.

(A) Correlograms for example unit pairs from DLS (left) and MC (right) over 10+ days. Each line represents the normalized correlogram measured on a particular day. (B) Scatter plots comparing the correlogram amplitude (normalized) of a unit pair on a baseline day to the same pair’s peak correlation 1, 5 or 10 days later, for DLS (top) and MC (bottom). The black line indicates equality. Values > 1 (or <1) correspond to positive (or negative) correlations (see Materials and methods). Day 1: n = 48472 comparisons for DLS and n = 12148 for MC. Day 5: n = 19230 comparisons for DLS and n = 2881 for MC. Day 10: n = 7447 comparisons for DLS and n = 772 for MC. All unit pairs whose correlograms had at least 5000 spikes over a day’s period were included. (C) Stability of correlations over time. The correlogram similarity (see Materials and methods) was measured across time-lags of 1 to 10 days for pairs of units (solid lines) in DLS (top) and MC (bottom). Colored shaded regions indicate the standard deviation of the correlogram similarity between all pairs that had significant correlograms on at least one recording day (Materials and methods).

Stability of behavioral state-dependent activity

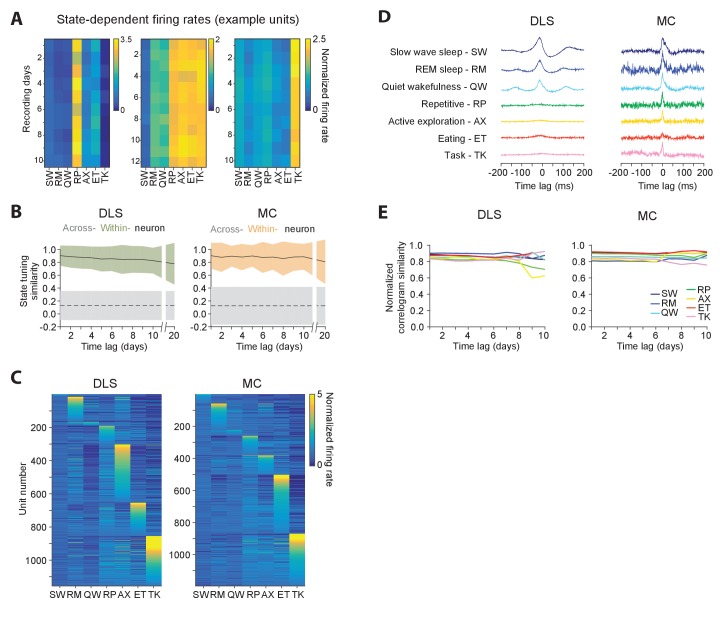

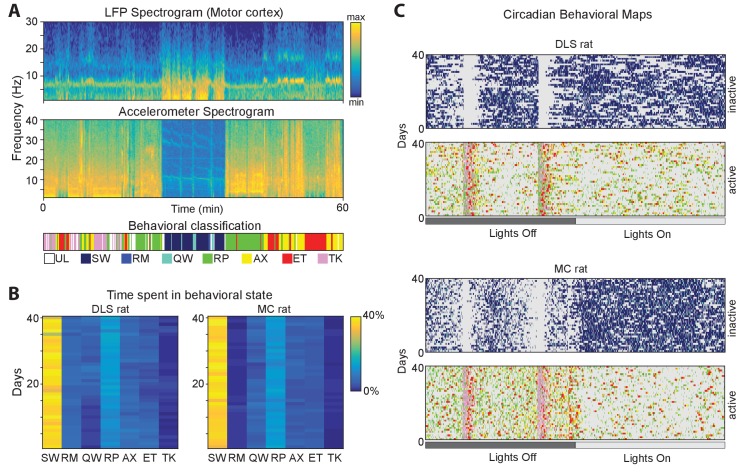

Our results demonstrated long-term stability in the time-averaged statistics of single units (Figure 5) as well as their interactions (Figure 6), both in motor cortex and striatum. However, the brain functions to control behavior, and the activity of both cortical and striatal units can be expected to differ for different behaviors. To better understand the relationship between the firing properties of single units and ongoing behavior, and to assess the stability of these relationships, we analyzed single unit activity in different behavioral states (Figure 7). To do this, we developed an algorithm for automatically classifying a rat’s behavior into ‘states’ using high-resolution measurements of head acceleration and local field potentials (LFPs). Our method distinguishes repetitive behaviors (such as grooming), eating, active exploration, task engagement as well as quiet wakefulness, rapid eye movement, and slow wave sleep (Gervasoni et al., 2004; Venkatraman et al., 2010) (Figure 7—figure supplement 1, Materials and methods), and assigns ~88% of the recording time to one of these states.

Figure 7. Stability of behavioral state-dependent activity patterns.

(A) Stability of average firing rates in different behavioral states (i.e. the unit’s ‘state-tuning’) across several days for example units recorded in the DLS (left) and MC (middle and right). The color-scale corresponds to the firing rate of a unit normalized by its average firing rate. The state abbreviations correspond to slow-wave sleep (SW), REM sleep (RM), quiet wakefulness (QW), repetitive (RP), active exploration (AX), eating (ET) and task execution (TK). (B) Stability of units’ state-tuning over time. Correlations between the state-tuning profiles (as in ‘A’) were measured across time-lags of 1 to 20 days for the same unit (within-unit, solid lines), or between simultaneously recorded units (across-unit, dashed lines) in MC (left) and DLS (right). Colored shaded regions indicate the standard deviation of within-unit state-tuning similarity, over all units. Grey shaded regions indicate standard deviation of across-unit state-tuning similarity, over all time-bins. (C) Diversity in state-tuning across all units recorded in the DLS (left) and MC (right). Units are sorted by the peak behavioral state in their state-tuning curves. (D) Cross-correlograms computed for example pairs of units in the DLS (left) and MC (right) in different behavioral states. Line colors represent behavioral state. (E) Stability of cross-correlograms measured within specific behavioral states. The correlation similarity (see Materials and methods) was measured across time-lags of 1 to 10 days (solid lines) in DLS (left) and MC (right). Line colors represent correlation similarity for different behavioral states, as per the plot legend.

Figure 7—figure supplement 1. Automated classification of behavioral states.

Behavioral state transitions, such as between sleep and wakefulness, have been shown to affect average firing rates (Evarts, 1964; Lee and Dan, 2012; Peña et al., 1999; Santhanam et al., 2007; Steriade et al., 1974; Vyazovskiy et al., 2009), inter-spike interval distributions (Mahon et al., 2006; Vyazovskiy et al., 2009), and neural synchrony (Gervasoni et al., 2004; Ribeiro et al., 2004; Wilson and McNaughton, 1994). However, most prior studies analyzed brief recording sessions in which only a narrow range of behavioral states could be sampled (Hirase et al., 2001; Mahon et al., 2006; Vyazovskiy et al., 2009; Wilson and McNaughton, 1994), leaving open the question of whether the modulation of neural dynamics across behavioral states is distinct for different neurons in a network and whether state-specific activity patterns of single neurons are stable over time. Not surprisingly, we found that firing rates of both striatal and motor cortical units depended on what the animal was doing (Figure 7A). Interestingly, the relative firing rates in different behavioral states (i.e. a cell’s ‘state tuning’) remained stable over timescales of several weeks (Figure 7A–B), even as they varied substantially between different neurons (Figure 7A,C). Additionally, we observed that interneuronal spike correlations were modulated by behavioral state (Figure 7D), and that this dependence was also stable over time (Figure 7E).

Stability in the coupling between neural and behavioral dynamics

Neurons in motor-related areas encode and generate motor output, yet the degree to which the relationship between single unit activity and various movement parameters (i.e. a cell’s ‘motor tuning’) is stable over days and weeks has been the subject of recent debate (Carmena et al., 2005; Chestek et al., 2007; Clopath et al., 2017; Ganguly and Carmena, 2009; Huber et al., 2012; Liberti et al., 2016; Peters et al., 2014; Rokni et al., 2007; Stevenson et al., 2011). Long-term continuous recordings during natural and learned behaviors constitute unique datasets for characterizing the stability of movement coding at the level of single neurons.

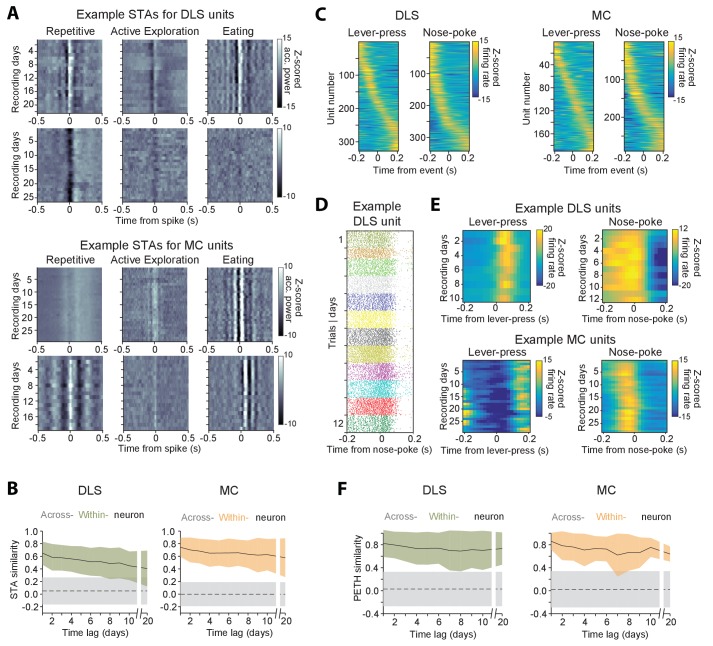

We addressed this by computing the ‘response fields’ (Cheney and Fetz, 1984; Cullen et al., 1993; Serruya et al., 2002; Wessberg et al., 2000), i.e. the spike-triggered average (STA) accelerometer power (Materials and methods), of neurons in different active states (Figure 8A; Materials and methods). The percentage of units with significant response fields during repetitive behavior, exploration, and eating was 19.7% (n = 291), 9.5% (n = 140) and 18.4% (n = 272), respectively, for the striatum, and 18.7% (n = 290), 7.6% (n = 118) and 22.5% (n = 349) for the motor cortex. Movement STAs varied substantially between neurons and across behavioral states, but were stable for the same unit when compared over at least 20 days, within a particular state (Figure 8A–B).

Figure 8. Stability of behavioral representations.

(A) Spike-triggered average (STA) accelerometer power calculated daily in three different behavioral states – repetitive behavior (left), active exploration (middle) and eating (right). Shown are four example units recorded from DLS (top two rows) and MC (bottom two rows). (B) Stability of STAs over time. Correlations between STAs were measured across time-lags of 1 to 20 days for the same unit (within-unit, solid lines), or between simultaneously recorded units (across-unit, dashed lines) in DLS (left) and MC (right). Colored shaded regions indicate the standard deviation of within-unit STA similarity (averaged over the three behavioral states in ‘A’), over all units. Grey shaded regions indicate standard deviation of across-unit STA similarity, over all time-bins. (C) Peri-event time histograms (PETHs) of DLS (left) and MC (right) unit activity, aligned to the timing of a lever-press or nose-poke during the execution of a skilled motor task. Plotted are the PETHs of units that had significant modulations in their firing rate in a time-window ±200 ms around the time of the behavioral event (Materials and methods). The color scale indicates Z-scored firing rate. Units are sorted based on the times of peaks in their PETHs. (D) Spike raster of an example DLS unit over 12 days, aligned to the time of a nose-poke event. Each dot represents a spike-time on a particular trial. The color of the dot indicates day of recording. (E) PETHs computed over several days for example DLS (top) and MC (bottom) units to lever-press (left) and nose-poke (right) events in our task. (F) Stability of task PETHs over time. Correlations between PETHs were measured across time-lags of 1 to 20 days for the same unit (within-unit, solid lines), or between simultaneously recorded units (across-unit, dashed lines) in DLS (left) and MC (right). Colored shaded regions indicate the standard deviation of within-unit PETH similarity (averaged across lever-press and nose-poke events), over all units. Grey shaded regions indicate standard deviation of across-unit PETH similarity (averaged across lever-press and nose-poke events), over all time-bins.

Figure 8—figure supplement 1. Measurements of long-term stability of neural dynamics and motor representations for individual units.

Another measure used to characterize neural coding of behavior on fast timescales is the ‘peri-event time histogram’ (PETH), that is, the average neural activity around the time of a salient behavioral event. We calculated PETHs for two events reliably expressed during the execution of a skill our subjects performed in daily training sessions (Kawai et al., 2015) (Materials and methods): (i) the first lever-press in a learned lever-press sequence and (ii) entry into the reward port after successful trials (‘nose-poke’). The fraction of units whose activity was significantly locked to the lever-press or nose-poke (see Materials and methods) was 22.2% (n = 328) and 23.2% (n = 343) in striatum, and 12.3% (n = 190) and 18.7% (n = 290) in motor cortex (Figure 8C), respectively. When comparing PETHs of different units for the same behavioral events, we observed that the times of peak firing were distributed over a range of delays relative to the time of the events (Figure 8C). However, despite the heterogeneity in PETHs across the population of units, the PETHs of individual units were remarkably similar when compared across days (Figure 8D–F).
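As an illustration of the PETH definition above, the following is a minimal Python sketch that averages binned spike counts around a set of event times. The function name `peth`, the ±0.2 s window and the 50 ms bin width are illustrative assumptions, not the parameters used in this study.

```python
def peth(spike_times, event_times, window=0.2, bin_width=0.05):
    """Mean firing rate (Hz) in bins spanning +/- window seconds around events.

    spike_times and event_times are in seconds. The window and bin width
    are hypothetical defaults chosen for illustration only.
    """
    n_bins = int(round(2 * window / bin_width))
    counts = [0] * n_bins
    for ev in event_times:
        for t in spike_times:
            rel = t - ev  # spike time relative to the event
            if -window <= rel < window:
                idx = int((rel + window) // bin_width)
                counts[min(idx, n_bins - 1)] += 1
    n_events = len(event_times)
    # Convert summed counts to a trial-averaged rate in spikes per second.
    rates = [c / (n_events * bin_width) for c in counts]
    bin_starts = [-window + i * bin_width for i in range(n_bins)]
    return bin_starts, rates


# Two events; the unit fires shortly after each one.
starts, rates = peth([1.01, 2.01, 2.12], [1.0, 2.0])
```

Comparing such PETHs for the same unit across days, for example by correlation, is one way to quantify the across-day similarity described above.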

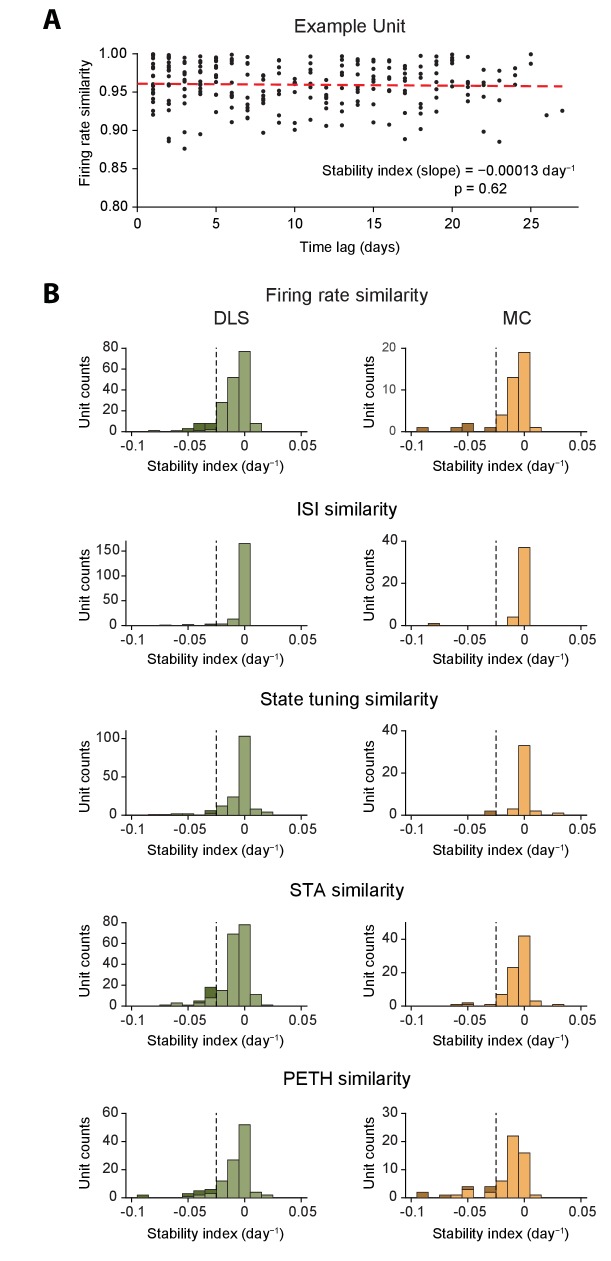

Stability of individual units

Our population-level analyses showed that many aspects of neural activity, such as firing rates, inter-spike intervals and behavioral representations, are stable over many weeks. While these results are consistent with the majority of recorded units being stable over these timescales, they do not rule out the existence of a smaller subpopulation of units whose dynamics may be unstable over time. To probe this, we performed a regression analysis to measure the dependence of different similarity metrics on elapsed time (Figure 8—figure supplement 1A), and quantified the stability index of individual units as the slope of the best-fit line. The distribution of stability indices was unimodal and centered at 0 day⁻¹ for every similarity metric, indicating that the vast majority (between 88% and 98%) of recorded units were stable (Figure 8—figure supplement 1B, Table 2) given a conservative threshold (slope ≥ −0.025 day⁻¹ and p ≥ 0.05). Interestingly, a negative skew was also apparent in these distributions, implying that a small fraction (~2–12%) of our recorded units were relatively less stable over longer timescales (Figure 8—figure supplement 1B, Table 2); however, no unit was found to have a stability index lower than −0.1 day⁻¹.
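The stability index can be sketched as an ordinary least-squares slope of a similarity metric against time-lag. The Python example below is a minimal illustration under stated assumptions: the function name and the two example units are hypothetical, and only the slope condition of the threshold is checked (the accompanying significance test is omitted).

```python
def stability_index(lags_days, similarity):
    """Least-squares slope of similarity versus time-lag, in units of 1/day."""
    n = len(lags_days)
    mean_x = sum(lags_days) / n
    mean_y = sum(similarity) / n
    sxx = sum((x - mean_x) ** 2 for x in lags_days)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(lags_days, similarity))
    return sxy / sxx


SLOPE_THRESHOLD = -0.025  # per day, the slope threshold quoted in the text

lags = [1, 5, 10, 15, 20]
steady_unit = [0.95, 0.94, 0.95, 0.93, 0.94]    # hypothetical similarities
drifting_unit = [0.95, 0.80, 0.60, 0.40, 0.20]  # hypothetical similarities

for name, sim in [("steady", steady_unit), ("drifting", drifting_unit)]:
    slope = stability_index(lags, sim)
    print(name, round(slope, 4), slope >= SLOPE_THRESHOLD)
```

A slope near zero means the similarity metric does not decay with elapsed time, whereas a strongly negative slope indicates a unit whose activity pattern drifts.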

Table 2. Proportion of units that have stable neural dynamics and motor representations in the striatum and motor cortex.

| Activity metric | Dorsolateral striatum: % stable | Dorsolateral striatum: n | Motor cortex: % stable | Motor cortex: n |
|---|---|---|---|---|
| Firing rate | 90.3 | 186 | 88.1 | 42 |
| ISI histogram | 97.9 | 187 | 97.6 | 42 |
| State tuning | 93.9 | 163 | 92.7 | 41 |
| STA | 93.6 | 202 | 95.0 | 80 |
| PETH | 89.4 | 113 | 89.5 | 57 |

Discussion

Recording from populations of single neurons in behaving animals has traditionally been a laborious undertaking both in terms of experimentation and analysis. Automating this process, and extending the recordings over weeks and months, increases the efficiency and power of such experiments in multiple ways. First, it eliminates the manual steps of intermittent recordings, such as transferring animals to and from their recording chambers, plugging and unplugging recording cables etc., which, besides being time-consuming, can be detrimental to the recordings (Buzsáki et al., 2015) and stressful for the animals (Dobrakovová and Jurcovicová, 1984; Longordo et al., 2011). Second, when combined with fully automated home-cage training (Poddar et al., 2013), this approach allows the neural correlates of weeks- and months-long learning processes to be studied in an efficient manner. Indeed, our system should enable a single researcher to supervise tens, if not hundreds, of recordings simultaneously, thus radically improving the ease and throughput with which such experiments can be performed. Third, continuous recordings allow the activity of the same neurons to be tracked over days and weeks, allowing their activity patterns to be pooled and compared for more trials, and across different experimental conditions and behavioral states, thus increasing the power with which the principles of neural function can be inferred (Lütcke et al., 2013; McMahon et al., 2014a).

Despite their many advantages, continuous long-term neural recordings are rarely performed. This is in large part because state-of-the-art methods for processing extracellular recordings require significant human input and hence make the analysis of large datasets prohibitively time-consuming (Hengen et al., 2016). Our fast automated spike tracking (FAST) algorithm overcomes this bottleneck, thereby dramatically increasing the feasibility of continuous and high-throughput long-term recordings of extracellular signals in behaving animals.

Long-term stability of neural dynamics

Recording the activity of the same neuronal population over long time periods can be done by means of functional calcium imaging (Huber et al., 2012; Lütcke et al., 2013; Peters et al., 2014; Rose et al., 2016). However, the calcium signal reports a relatively slow correlate of neural activity that can make it difficult to reliably resolve individual spike events and hence to assess fine timescale neuronal interactions (Grienberger and Konnerth, 2012; Lütcke et al., 2013). Furthermore, calcium indicators function as chelators of free calcium ions (Hires et al., 2008; Tsien, 1980) and could interfere with calcium-dependent plasticity processes (Zucker, 1999) and, over the long-term, compromise the health of cells (Looger and Griesbeck, 2012). These variables may have contributed to discrepancies in studies using longitudinal calcium imaging, with many reporting dramatic day-to-day fluctuations in neural activity patterns (Driscoll et al., 2017; Huber et al., 2012; Liberti et al., 2016; Ziv et al., 2013) while some others report more stable representations (Peters et al., 2014; Rose et al., 2016), leaving open the question of how stable the brain really is (Clopath et al., 2017).

While electrical measurements of spiking activity do not suffer from the same drawbacks, intermittent recordings may fail to reliably track the same neurons over multiple days (Figure 3—figure supplement 1D–E) (Dickey et al., 2009; Emondi et al., 2004; Fraser and Schwartz, 2012; Tolias et al., 2007). Attempts at inferring long-term stability of movement-related neural dynamics from such experiments have produced conflicting results, with many studies reporting stability (Bondar et al., 2009; Chestek et al., 2007; Fraser and Schwartz, 2012; Ganguly and Carmena, 2009; Greenberg and Wilson, 2004; Nuyujukian et al., 2014), while others find significant fluctuations even within a single day (Carmena et al., 2005; Mankin et al., 2012; Perge et al., 2013; Rokni et al., 2007). Such contrasting findings could be due to systematic errors in unit tracking across discontinuous recording sessions (Figure 4—figure supplement 1), or the choice of statistical framework used to assess stability (Rokni et al., 2007; Stevenson et al., 2011). Complications may also arise from the use of activity metrics, such as firing rate and ISI distributions, to track units in many studies (Dickey et al., 2009; Emondi et al., 2004; Fraser and Schwartz, 2012; Ganguly and Carmena, 2009; Greenberg and Wilson, 2004; Jackson and Fetz, 2007; McMahon et al., 2014a; Okun et al., 2016; Thompson and Best, 1990; Tolias et al., 2007), which may bias datasets towards subsets of units whose spike waveforms (Figure 1C–D) and neural dynamics (Figure 8—figure supplement 1) do not change with time.

Our continuous recordings, which allow for the reliable characterization of single neuron properties over long time periods, suggest a remarkable stability in the spiking statistics (Figure 5), neuronal interactions (Figure 6), and tuning properties (Figures 7 and 8) of the majority of motor cortical and striatal units. Taken together with studies showing long-term structural stability at the level of synapses (Grutzendler et al., 2002; Yang et al., 2009), our findings support the view that these neural networks are, overall, highly stable systems, both in terms of their dynamics and connectivity.

Discrepancies between our findings and other studies that report long-term instability of neural activity could, in some cases, be due to inherent differences between brain areas. Neural dynamics of circuits involved in transient information storage such as the hippocampus (Mankin et al., 2012; Ziv et al., 2013), and flexible sensorimotor associations such as the parietal cortex (Morcos and Harvey, 2016) may be intrinsically more unstable than circuits responsible for sensory processing or motor control (Ganguly and Carmena, 2009; McMahon et al., 2014b; Peters et al., 2014; Rose et al., 2016). In line with this hypothesis, recent studies have found higher synaptic turnover in the hippocampus (Attardo et al., 2015) in comparison to primary sensory and motor cortices (Holtmaat et al., 2005; Xu et al., 2009; Yang et al., 2009), although it is not clear whether structural plasticity functions to destabilize or stabilize neural activity (Clopath et al., 2017). In this context, our long-term electrical recording and analysis platform could be used to verify whether spiking activity in these associative areas is as unstable as their reported calcium dynamics.

In this study we recorded from rats that had already learned a sequence task in order to answer the question: is the brain stable when behavior is stable? We hypothesize that neural activity would be far more variable when an animal is learning a task or motor skill (Kawai et al., 2015). Our experimental platform makes it feasible to characterize learning-related changes in task representations and relate them to reorganization of network dynamics over long time-scales.

Future improvements

There is a continued push to improve recording technology to increase the number of neurons that can be simultaneously recorded from (Berényi et al., 2014; Buzsáki et al., 2015; Du et al., 2011; Viventi et al., 2011), as well as make the recordings more stable (Alivisatos et al., 2013; Chestek et al., 2011; Cogan, 2008; Fu et al., 2016; Guitchounts et al., 2013). Our modular and flexible experimental platform can easily incorporate such innovations. Importantly, our novel and automated analysis pipeline (FAST) solves a major bottleneck downstream of these solutions by allowing increasingly large datasets to be efficiently parsed, thus making automated recording and analysis of large populations of neurons in behaving animals a feasible prospect.

Materials and methods

Animals

The care and experimental manipulation of all animals were reviewed and approved by the Harvard Institutional Animal Care and Use Committee. Experimental subjects were female Long Evans rats (RRID:RGD_2308852), 3–8 months old at the start of the experiment (n = 5, Charles River).

Behavioral training

Before implantation, rats were trained twice daily on a timed lever-pressing task (Kawai et al., 2015) using our fully automated home-cage training system (Poddar et al., 2013). Once animals reached asymptotic (expert) performance on the task, they underwent surgery to implant tetrode drives into dorsolateral striatum (n = 2) or motor cortex (n = 3). After 7 days of recovery, rats were returned to their home-cages, which had been outfitted with an electrophysiology recording extension (Figure 1A, Figure 1—figure supplement 1). The cage was placed in an acoustic isolation box, and training on the task resumed. Neural and behavioral data were recorded continuously for 12–16 weeks with only brief interruptions (median time of 0.2 hr) for occasional troubleshooting.

Continuous behavioral monitoring

To monitor the rats’ head movements continuously, we placed a small 3-axis accelerometer (ADXL 335, Analog Devices) on the recording head-stage (Figure 1—figure supplement 1). The output of the accelerometer was sampled at 7.5 kHz per axis. We also recorded 24/7 continuous video at 30 frames per second with a CCD camera (Flea 3, Point Grey) or a webcam (Agama V-1325R). Video was synchronized to electrophysiological signals by recording TTL pulses from the CCD cameras that signaled frame capture times.

Surgery

Rats underwent surgery to implant a custom-built recording device (see ‘Tetrode arrays’). Animals were anesthetized with 1–3% isoflurane and placed in a stereotax. The skin was removed to expose the skull and five bone screws (MD-1310, BASi), including one soldered to a 200 µm diameter silver ground wire (786500, A-M Systems), were driven into the skull to anchor the implant. A stainless-steel reference wire (50 µm diameter, 790700, A-M Systems) was implanted in the external capsule to a depth of 2.5 mm, at a location posterior and contralateral to the implant site of the electrode array. A 4–5 mm diameter craniotomy was made at a location 2 mm anterior and 3 mm lateral to bregma for targeting electrodes to motor cortex, and 0.5 mm anterior and 4 mm lateral to bregma for targeting dorsolateral striatum. After removing the dura-mater, the pia-mater surrounding the implant site was glued to the skull with cyanoacrylate glue (Krazy glue). The pia-mater was then weakened using a solution of 20 mg/ml collagenase (Sigma) and 0.36 mM calcium chloride in 50 mM HEPES buffer (pH 7.4) in order to minimize dimpling of the brain surface during electrode penetration (Kralik et al., 2001). The 16-tetrode array was then slowly lowered to the desired depth of 1.85 mm for motor cortex and 4.5 mm for striatum. The microdrive was encased in a protective shield and cemented to the skull by applying a base layer of Metabond luting cement (Parkell) followed by a layer of dental acrylic (A-M Systems).

Histology

At the end of the experiments, animals were anesthetized and anodal current (30 µA for 30 s) was passed through select electrodes to create micro-lesions at the electrode tips. Animals were transcardially perfused with phosphate-buffered saline (PBS) and subsequently fixed with 4% paraformaldehyde (PFA, Electron Microscopy Sciences) in PBS. Brains were removed and post-fixed in 4% PFA. Coronal sections (60 µm) were cut on a Vibratome (Leica), mounted, and stained with cresyl violet to reconstruct the location of implanted electrodes. All electrode tracks were consistent with the recordings having been done in the targeted areas.

Tetrode arrays

Tetrodes were fabricated by twisting together short lengths of four 12.5 µm diameter nichrome wires (Redi Ohm 800, Sandvik-Kanthal), after which they were bound together by melting their polyimide insulation with a heat-gun. An array of 16 such tetrodes was attached to a custom-built microdrive, advanced by a 0–80 threaded screw (~320 µm per full turn). The wires were electroplated in a gold (5355, SIFCO) and 0.1% carbon nanotubes (Cheap Tubes dispersed MWNTs, 95wt% <8 nm) solution with 0.1% polyvinylpyrrolidone surfactant (PVP-40, Sigma-Aldrich) to lower electrode impedances to ~100–150 kΩ (Ferguson et al., 2009; Keefer et al., 2008). The prepared electrode array was then implanted into the motor cortex or striatum.

Tethering system

We used extruded aluminum with integrated V-shaped rails for the linear-slide (Inventables, Part #25142–03), with matching wheels (with V-shaped grooves) on the carriage (Inventables, Part #25203–02). The bearings in the wheels were degreased and coated with WD-40 to minimize friction. The carriage plate was custom designed (design files available upon request) and fabricated by laser cutting 3 mm acrylic. A low-cost 15-channel commutator (SRC-022 slip-ring, Hangzhou-Prosper Ltd) was mounted onto the carriage plate. The commutator was suspended with a counterweight using low friction pulleys (Misumi Part # MBFN23-2.1). We used a 14 conductor cable (Mouser, Part # 517-3600B/14100SF) to connect our custom designed head-stage to the commutator. The outer PVC insulation of the cable bundle was removed to reduce the weight of the cable and to make it more flexible. The same cable was used to connect the commutator to a custom designed data acquisition system. This cable needs to be as flexible as possible to minimize forces on the animal’s head.

Recording hardware

Our lightweight, low-noise and low-cost system for multi-channel extracellular electrophysiology (Figure 1—figure supplement 1) uses a 64-channel custom-designed head-stage (made of 2 RHD2132 ICs from Intan Technologies) that weighs less than four grams and measures 18 mm × 28 mm. The head-stage filters, amplifies, and digitizes extracellular neural signals at 16 bits/sample and 30 kHz per channel. An FPGA module (Opal Kelly XEM6010 with Xilinx Spartan-6 FPGA) interfaces the head-stage with a computer that stores and displays the acquired data. Since the neural signals are digitized at the head-stage, the system is low-noise and can support long cable lengths. Design files and a complete parts list for all components of the system are available on request. The parts for a complete 64-channel electrophysiology extension, including head-stage, FPGA board, commutator, and pulley system, but excluding the recording computer, cost less than $1500.
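For a sense of scale, the raw data rate implied by these acquisition parameters can be worked out as follows. This is a back-of-the-envelope estimate that assumes uncompressed storage of the 64 neural channels only, and excludes the accelerometer and video streams:

$$
64\ \text{channels} \times 30{,}000\ \tfrac{\text{samples}}{\text{s}} \times 2\ \tfrac{\text{bytes}}{\text{sample}} = 3.84 \times 10^{6}\ \tfrac{\text{bytes}}{\text{s}}
$$

$$
3.84 \times 10^{6}\ \tfrac{\text{bytes}}{\text{s}} \times 86{,}400\ \tfrac{\text{s}}{\text{day}} \approx 3.3 \times 10^{11}\ \tfrac{\text{bytes}}{\text{day}} \approx 330\ \text{GB per day}
$$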

Automatic classification of behavior

We developed an unsupervised algorithm to classify behaviors based on accelerometer data, LFPs, spike times, and task event times (Gervasoni et al., 2004; Venkatraman et al., 2010). Behaviors were classified at 1 s resolution into one of the following ‘states’: repetitive (RP), eating (ET), active exploration (AX), task engagement (TK), quiet wakefulness (QW), rapid eye movement sleep (RM) or slow-wave sleep (SW).

Processing of accelerometer data

We computed the L2 norm of the accelerometer signal as follows:

$$
\lVert a(t) \rVert = \sqrt{\sum_{i=1}^{3} a_i(t)^2} \tag{1}
$$

where $a_i$ is the accelerometer signal on channel $i$. The accelerometer norm was then band-pass filtered between 0.5 Hz and 150 Hz using a fourth order Butterworth filter. We then computed the multitaper spectrogram (0–40 Hz) of the accelerometer signal in 1 s bins using Chronux (Mitra and Bokil, 2007) and measured the total accelerometer power in the 2–5 Hz frequency bands. The accelerometer power had a bimodal distribution with one narrow peak at low values corresponding to immobility and a secondary broad peak corresponding to epochs of movement. Time-bins with accelerometer power within two standard deviations of the lower immobility peak were termed ‘inactive’, while time-bins with power greater than the ‘mobility threshold’ – four standard deviations above the immobility peak – were termed ‘active’.
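The norm and the two thresholds described above can be sketched in Python as follows. This is a minimal illustration, not the analysis code: the band-pass filtering and multitaper spectrogram steps are omitted, `peak_mean` and `peak_sd` (the location and width of the immobility peak) are hypothetical inputs because the text does not specify how they were estimated, and treating every bin at or below the peak plus two standard deviations as inactive is an assumption.

```python
import math


def accel_norm(ax, ay, az):
    """L2 norm of a 3-axis accelerometer signal, sample by sample."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(ax, ay, az)]


def label_bins(power, peak_mean, peak_sd):
    """Label 1 s bins of accelerometer power as active or inactive.

    Bins between the two thresholds are left unclassified (assumption).
    """
    immobility_threshold = peak_mean + 2 * peak_sd
    mobility_threshold = peak_mean + 4 * peak_sd
    labels = []
    for p in power:
        if p > mobility_threshold:
            labels.append("active")
        elif p <= immobility_threshold:
            labels.append("inactive")
        else:
            labels.append("unclassified")
    return labels


print(accel_norm([3.0], [4.0], [0.0]))
print(label_bins([1.0, 1.5, 2.5, 3.5], peak_mean=1.0, peak_sd=0.5))
```

Leaving a gap between the two thresholds keeps ambiguous bins out of both classes, at the cost of not labeling every second of the recording.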

To verify the accuracy of our accelerometer-based separation of active and inactive behaviors, we calculated the percent overlap between our automated separation and that of a human observer who manually scored active and inactive periods using simultaneously recorded webcam video data (n = 29831 s of video from 4 rats).

| | Inactive (auto) | Active (auto) |
|---|---|---|
| Inactive (manual) | 92.9% | 7.1% |
| Active (manual) | 3.1% | 96.9% |

Processing of the LFP

We extracted LFPs by down-sampling the raw data 100-fold (from 30 kHz to 300 Hz) by two applications of a fourth order 5-fold decimating Chebychev filter followed by a single application of a fourth order 4-fold decimating Chebychev filter. We then computed the multitaper spectrogram (0–20 Hz) for the LFP signal recorded on each electrode with a moving window of width 5 s and step-size 1 s using Chronux (Mitra and Bokil, 2007), and then averaged these spectrograms across all electrodes to yield the final LFP spectrogram. We used PCA to reduce the dimensionality of the spectrogram to 20 components and then fit a three component Gaussian mixture model to cluster the LFP spectrograms into three distinct brain states. Based on earlier studies (Gervasoni et al., 2004; Lin et al., 2006), these states were characterized by high delta (0–4 Hz) power (e.g. slow wave sleep), high type-II theta (7–9 Hz) power (e.g. REM sleep or active exploration) and high type-I theta (5–7 Hz) power (e.g. quiet wakefulness or repetitive behaviors).

Classification of inactive states – REM and slow-wave sleep, and quiet wakefulness

Inactive states, that is, when rats are immobile, were identified as bins with total accelerometer power below the immobility threshold (see ‘Processing of accelerometer data’ above). Slow-wave sleep (SW) states were distinguished by high delta power (see ‘Processing of the LFP’ above), REM sleep states (RM) were distinguished by high type-II theta power, and quiet wakefulness states (QW) were distinguished by high type-I theta power (Bland, 1986; Gervasoni et al., 2004; Lin et al., 2006). Consistent with prior studies (Gervasoni et al., 2004), we found that rats spent most of their time sleeping (44 ± 3%) or resting (6 ± 2%) (Figure 7—figure supplement 1B). Sleep occurred at all hours, but was more frequent when lights were on (58 ± 7%) versus when they were off (33 ± 9%), consistent with the nocturnal habits of rats (Figure 7—figure supplement 1C).

Classification of active states – repetitive behaviors, eating, task engagement and active exploration

Active states were characterized by the accelerometer power being above the mobility threshold (see ‘Processing of accelerometer data’ above).

Eating (ET) epochs were classified based on a strong, broad-band common-mode oscillatory signal on all electrodes, caused by electrical interference from the muscles of mastication. To extract eating epochs, we median-filtered the raw data across all channels and computed a multitaper spectrogram (0–40 Hz) using Chronux, with a window width of 5 s and a step size of 1 s. After reducing the dimensionality of the spectrogram to 20 components by PCA, we fit a Gaussian mixture model and identified the cluster(s) corresponding to the broad-band noise characteristic of eating.

Task engagement (TK) was identified based on lever-press event times. If less than 2 s (corresponding to the average trial length) had elapsed between consecutive presses, the time between them was classified as task engagement.
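
The lever-press rule can be sketched as follows. This is a minimal illustration under stated assumptions: press times are given in seconds, and adjacent qualifying intervals are merged into a single bout, a choice made here for readability and not described in the text. The function name `task_engagement_intervals` is hypothetical.

```python
def task_engagement_intervals(press_times, max_gap=2.0):
    """Return (start, end) intervals spanning presses less than max_gap apart."""
    times = sorted(press_times)
    intervals = []
    for prev, nxt in zip(times, times[1:]):
        if nxt - prev < max_gap:
            if intervals and intervals[-1][1] == prev:
                # Extend the ongoing bout (merging is an assumption of this sketch).
                intervals[-1] = (intervals[-1][0], nxt)
            else:
                intervals.append((prev, nxt))
    return intervals


# Toy lever-press times in seconds (illustrative only).
presses = [10.0, 10.7, 11.9, 20.0, 25.0, 25.5]
bouts = task_engagement_intervals(presses)
```

Time bins falling inside any returned interval would then be candidates for the TK label.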

Active epochs that were not classified as eating or task engagement were classified as active exploration (AX) or repetitive behavior (RP), depending on whether their LFP spectrograms showed high type-II or high type-I theta power, respectively, based on prior reports (Bland, 1986; Gervasoni et al., 2004; Sainsbury et al., 1987).

The algorithm labeled each time bin exclusively, i.e. only one label was possible for each bin. Bins corresponding to multiple states (e.g. eating and grooming) were classified as unlabeled (UL), as were times when no data were available due to brief pauses in the recording (9% of time-bins were unlabeled).
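
One possible reading of the exclusive labeling scheme is sketched below. It is an illustration only: the per-bin inputs (`movement`, `lfp_state`, `eating`, `task`) are hypothetical names for the outputs of the steps described above, and the handling of conflicts (for example, a bin flagged as both eating and task engagement, or an active bin dominated by delta power) is an assumption, not something the text specifies.

```python
# LFP cluster -> label, for immobile and mobile bins respectively.
INACTIVE_LABELS = {"delta": "SW", "theta2": "RM", "theta1": "QW"}
ACTIVE_LABELS = {"theta2": "AX", "theta1": "RP"}


def label_bin(has_data, movement, lfp_state, eating=False, task=False):
    """Return exactly one behavioral-state label for a time bin."""
    if not has_data or movement is None:
        return "UL"  # recording pause, or power between the two thresholds
    if movement == "inactive":
        return INACTIVE_LABELS.get(lfp_state, "UL")
    if eating and task:
        return "UL"  # assumed: conflicting evidence leaves the bin unlabeled
    if eating:
        return "ET"
    if task:
        return "TK"
    return ACTIVE_LABELS.get(lfp_state, "UL")


example = [
    label_bin(True, "inactive", "delta"),
    label_bin(True, "active", "theta2"),
    label_bin(True, "active", "theta1", eating=True),
    label_bin(False, "active", "theta2"),
]
```

Because every branch returns a single string, no bin can carry more than one label.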

Removal of seizure-like epochs

Long Evans rats are susceptible to absence seizures, characterized by strong ~8 Hz synchronous oscillations in neural activity and associated whisker twitching (Berke et al., 2004; Gervasoni et al., 2004; Nicolelis et al., 1995). To identify seizure-like episodes, we calculated the autocorrelation of the raw spike data on all electrodes in 2 s windows. To identify peaks associated with seizure-like states, we calculated the periodogram of each autocorrelation function and evaluated the power in the 7–9 Hz frequency range. The distribution of these values had a Gaussian shape with a non-Gaussian long tail towards high values. The threshold for seizure-like states was set to the 95th percentile of the Gaussian distribution. Using this classification, 11% of time-bins were classified as seizure-like (12 ± 2% for the DLS-implanted rats and 11 ± 2% for the MC-implanted rats), similar to previously published reports (Shaw, 2004). These epochs were removed from the behavioral state analysis.
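
The percentile threshold can be illustrated with a short sketch. The text does not say how the Gaussian component was fitted, so the code below assumes a robust fit: the median as the mean, and the median absolute deviation scaled by 1.4826 as the standard deviation, which limits the influence of the long tail. The function name `seizure_threshold` and the input values are hypothetical.

```python
from statistics import NormalDist, median


def seizure_threshold(theta_power, percentile=0.95):
    """Threshold at a percentile of a Gaussian fitted to the bulk of the data."""
    mu = median(theta_power)
    mad = median([abs(p - mu) for p in theta_power])
    sigma = 1.4826 * mad  # MAD-to-s.d. scaling for a normal distribution
    return NormalDist(mu, sigma).inv_cdf(percentile)


# Toy 7-9 Hz power values per 2 s window; the last two form the long tail.
theta_power = [1.0, 1.2, 0.8, 1.1, 0.9, 1.0, 5.0, 6.0]
threshold = seizure_threshold(theta_power)
seizure_like = [p > threshold for p in theta_power]
```

In this toy example only the two tail values exceed the threshold and would be excluded from the behavioral state analysis.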

Data storage and computing infrastructure

Hardware setup

Our recordings (64 channels at 30 kHz per channel) generate 1 terabyte (TB) of raw data every 2 days. To cope with these demands, we developed a low-cost, reliable, high I/O bandwidth storage solution with a custom lightweight fileserver. Each storage server consists of 24 4 TB spinning SATA hard disks connected in parallel to a dual-socket Intel server-class motherboard via the high-bandwidth PCI-E interface. The ZFS file system (bundled with the open-source SmartOS operating system) manages the data in a redundant configuration that allows any two disks in the 24-disk array to fail simultaneously without data loss. Due to the redundancy, each server has 60 TB of usable space that can be read at approximately 16 gigabits per second (Gbps). This high I/O bandwidth is critical for data backup, recovery and integrity verification.

Distributed computing software setup

To fully utilize available CPU and I/O resources, we parallelized the data processing (Dean and Ghemawat, 2008). Thread-level parallelization inside a single process is the simplest approach, with coordination between threads orchestrated through shared memory. However, this approach only works on a single machine and does not scale to a cluster of computers. The typical approach to cluster-level parallelization is to coordinate the parallel computations running both within a machine and across machines by exchanging messages between the concurrently running processes. The ‘map-reduce’ abstraction conceptualizes a computation as having two phases: a ‘map’ phase, which first processes small subsets of the data in parallel, and a ‘reduce’ phase, which then serially aggregates the results of the ‘map’ phase. Since much of our data analysis is I/O limited (for example, computing statistics of spike waveform amplitudes), we developed a custom distributed computing infrastructure for ‘map-reduce’-like computations on the .NET platform. The major novelty of our framework is that, rather than moving the output of the map computation over the network to a central node that performs the reduce computations, it moves the reduce computation, in the correct serial order, to the machines that hold the output of the map computations. If the output of the map computation is voluminous compared to the output of each step of the reduce computation, our approach consumes significantly less time and I/O bandwidth. We have used this framework both in a virtual cluster of 5 virtual machines running on each of the aforementioned SmartOS-based storage servers and in Microsoft’s commercial cloud computing platform, Windows Azure.
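
The map and serial-reduce phases can be illustrated on a single machine. The sketch below is written in Python and is not the authors' .NET framework; in particular, it does not reproduce the relocation of the reduce step to the machines holding the map output. It only shows the abstraction, using the spike-amplitude statistics mentioned above as the example. All function names and data are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def map_block(amplitudes):
    """Map: summarize one block of spike amplitudes independently."""
    return (len(amplitudes), sum(amplitudes), sum(a * a for a in amplitudes))


def reduce_step(acc, partial):
    """Reduce: fold one block summary into the running totals."""
    return tuple(x + y for x, y in zip(acc, partial))


def amplitude_stats(blocks, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in input order, so block order is kept.
        partials = list(pool.map(map_block, blocks))
    acc = (0, 0.0, 0.0)
    for partial in partials:  # serial reduce, one block at a time
        acc = reduce_step(acc, partial)
    n, total, total_sq = acc
    mean = total / n
    variance = total_sq / n - mean * mean  # population variance
    return n, mean, variance


# Toy spike amplitudes (µV) split into three blocks.
blocks = [[50.0, 70.0], [60.0], [80.0, 90.0, 70.0]]
n, mean, variance = amplitude_stats(blocks)
```

Each map output here is three numbers per block, so the reduce step is cheap. The design described in the text targets the opposite case, where map outputs are large and moving them over the network would dominate the cost.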

Analysis of neural data

Automated spike-sorting algorithm

We developed a new and automated spike-sorting algorithm (Poddar et al., 2017) that is able to efficiently track single units over long periods of time (code available at https://github.com/Olveczky-Lab/FAST; copy archived at https://github.com/elifesciences-publications/FAST). Our offline algorithm first identifies the spikes from the raw recordings and then performs two fully automated processing steps. A list of important algorithm parameters and their recommended values is provided in Table 1.

Table 1. List of parameters for the fully automated steps of FAST and their recommended values.

| Step | Parameter | Details | Recommended value |
|---|---|---|---|
| Snippeting | block size | Length of data segment to filter and snippet per block | 15 s |
| Snippeting | end padding | To account for edge effects in filtering | 100 ms |
| Snippeting | waveform samples pre and post | Samples per spike snippet to record before and after peak (not including peak sample) | 31 and 32 samples (total 64 samples @ 30 kHz sampling) |
| Snippeting | spike detection threshold | Threshold voltage to detect spikes | 7 × median absolute deviation of recording |
| Snippeting | spike return threshold | Threshold voltage below which the spike detection process is reset | 3 × median absolute deviation of recording |
| Snippeting | spike return number of samples | Number of samples the recorded voltage must stay under the spike return threshold to reset the spike detection process | 8 samples (0.27 ms @ 30 kHz sampling rate) |
| Clustering: Step 1 | spikes per block | Number of spikes per block for local clustering | 1000 spikes |
| Clustering: Step 1 | minimum and maximum SPC temps | Range of temperatures for super-paramagnetic clustering (SPC) | 0, 15 |
| Clustering: Step 1 | Threshold for recursive merging of SPC tree (‘a’) | Threshold distance between leaf node and its parent node in the SPC tree below which the leaf node is merged with its parent | 20 µV |
| Clustering: Step 1 | Minimum cluster size | Min. number of spikes in cluster to compute cluster centroid | 15 spikes |
| Clustering: Step 1 | Number of rounds of local clustering | Rounds of iterative multi-scale clustering | 4 |
| Clustering: Step 2 | Spike centroids per block | Number of spike cluster centroids per block for generating SPC tree prior to segmentation fusion | 1000 centroids |
| Clustering: Step 2 | Cluster trees per block | Number of cluster trees per block for segmentation fusion | 10 cluster trees |
| Clustering: Step 2 | Segmentation fusion block overlap | Number of cluster trees overlap between blocks for segmentation fusion | 5 cluster trees |
| Clustering: Step 2 | ‘s’, ‘k’ | Parameters for sigmoid scaling of link weights to be between 0 and 1 in segmentation fusion | s = 0.005, k = 0.03 |
| Clustering: Step 2 | Link similarity threshold | Similarity threshold for link weights between cluster trees in segmentation fusion | 0.02 (range 0–1) |
| Clustering: Step 2 | Link similarity threshold for straggler nodes | Similarity threshold for joining leftover nodes to an existing chain in segmentation fusion | 0.02 (range 0–1) |

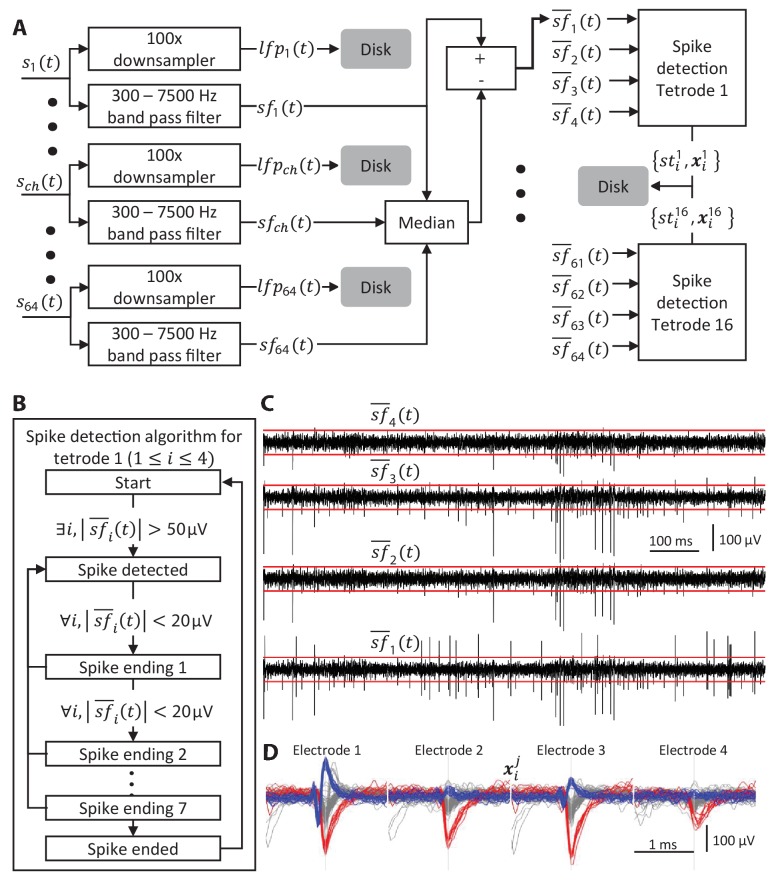

Spike identification

We first extract spike snippets, that is, the extracellular voltage traces associated with action potentials, automatically from the raw data. A schematic of this process is shown in Figure 2—figure supplement 1. First, the signal from each channel is partitioned into 15 s blocks, with an additional 100 ms added to each end of the block to account for edge effects in filtering. Then, for each block of each channel, the raw data is filtered with a fourth-order elliptic band-pass filter (cut-off frequencies 300 Hz and 7500 Hz), first in the forward and then in the reverse direction, to preserve accurate spike times and spike waveforms. For each sample in each block, the median across all channels is subtracted from every channel to eliminate common-mode noise that may arise from rapid movement or behaviors such as eating (Figure 2—figure supplement 4). Finally, a threshold-crossing algorithm is used to detect spikes independently for each tetrode (Figure 2—figure supplement 1). In our recordings, we use a threshold of 50 µV, which corresponds to about 7 times the median absolute deviation (m.a.d.) of the signal (average m.a.d. = 7.6 ± 0.6 µV in our recordings), but we note that this value is not hard-coded in FAST and can be changed to an exact multiple of the measured noise. After detecting a threshold crossing, we find the sample that corresponds to the local maximum of the event, defined as the maximum of the absolute value across the four channels of a tetrode. A 2 ms (64-sample) snippet of the signal centered on the local maximum is extracted from all channels. Thus, each putative spike in a tetrode recording is characterized by the time of the local maximum and a 256-dimensional vector (64 samples × 4 channels).
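
The threshold-crossing step can be sketched as follows, using the detection threshold, return threshold and snippet lengths listed in Table 1. This is a simplified single-channel illustration, not the FAST implementation: it assumes the trace has already been band-pass filtered and had the common-mode median subtracted, and it omits the maximum across the four tetrode channels. The names `mad` and `detect_spikes` are hypothetical.

```python
from statistics import median


def mad(signal):
    """Median absolute deviation of the trace."""
    center = median(signal)
    return median([abs(v - center) for v in signal])


def detect_spikes(signal, detect_k=7.0, return_k=3.0, return_samples=8,
                  pre=31, post=32):
    """Return (peak_index, snippet) pairs for threshold-crossing events."""
    noise = mad(signal)
    detect_thr, return_thr = detect_k * noise, return_k * noise
    peaks, i, n = [], 0, len(signal)
    while i < n:
        if abs(signal[i]) < detect_thr:
            i += 1
            continue
        # Threshold crossed: track the largest |v| until the trace has stayed
        # under the return threshold for `return_samples` consecutive samples.
        peak, quiet = i, 0
        while i < n and quiet < return_samples:
            if abs(signal[i]) > abs(signal[peak]):
                peak = i
            quiet = quiet + 1 if abs(signal[i]) < return_thr else 0
            i += 1
        peaks.append(peak)
    # Keep only events with a full window: pre + peak + post = 64 samples.
    return [(p, signal[p - pre:p + post + 1])
            for p in peaks if p - pre >= 0 and p + post < n]


# Toy trace (µV): +/-1 µV baseline with one negative-going spike at sample 100.
trace = [1.0 if i % 2 == 0 else -1.0 for i in range(200)]
trace[99:102] = [-20.0, -60.0, -25.0]
events = detect_spikes(trace)
```

In the toy trace the m.a.d. is 2 µV, giving detection and return thresholds of 14 µV and 6 µV. The single event is centered on sample 100, with the peak at position 31 of its 64-sample snippet.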

Each 15 s block of each tetrode can be processed in parallel. However, since the number of spikes in any given 15 s block is not known in advance, the extracted spike snippets must be written to disk serially. Efficiently utilizing all CPU cores while queuing disk read/write operations asynchronously is essential for keeping this step faster than real time. On our storage server, the filtering/spike detection step runs 15 times faster than the acquisition rate. To extract LFPs, we down-sample the raw data 100-fold (from 30 kHz to 300 Hz) by two applications of a fourth-order 5-fold decimating Chebyshev filter followed by a single application of a fourth-order 4-fold decimating Chebyshev filter. After extracting the spike snippets and the LFPs, the raw data is deleted, resulting in a 5- to 10-fold reduction in storage space requirements.
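
The block layout that makes this parallel processing possible can be sketched as index arithmetic. The example below is illustrative only: it assumes the 15 s block size, 100 ms padding and 30 kHz sampling rate given above, and that padding is clipped at the start and end of the recording. The function name `padded_blocks` is hypothetical.

```python
def padded_blocks(n_samples, fs=30_000, block_s=15.0, pad_s=0.1):
    """Return (read_start, block_start, block_stop, read_stop) sample indices.

    Samples in [read_start, read_stop) are filtered; only spikes detected in
    [block_start, block_stop) would be kept, so padding is never counted twice.
    """
    block, pad = round(block_s * fs), round(pad_s * fs)
    out = []
    for start in range(0, n_samples, block):
        stop = min(start + block, n_samples)
        out.append((max(start - pad, 0), start, stop, min(stop + pad, n_samples)))
    return out


# One million samples (about 33 s at 30 kHz) split into three blocks.
layout = padded_blocks(1_000_000)
```

Because each tuple is self-contained, blocks can be handed to separate workers, and only the final write of the extracted snippets needs to happen in block order.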

A typical week-long recording from 16 tetrodes results in over a billion putative spikes. While most putative spikes are low amplitude and inseparable from noise, the spike detection threshold cannot be substantially increased without losing many cleanly isolatable single units. Assigning billions of putative spikes to clusters corresponding to single units in a largely automated fashion is critical for realizing the full potential of continuous 24/7 neural recordings.

Automatic processing step 1. local clustering and de-noising