Abstract

Many accounts of reward-based choice argue for distinct component processes that are serial and functionally localized. In this article, we argue for an alternative viewpoint, in which choices emerge from repeated computations that are distributed across many brain regions. We emphasize how several features of neuroanatomy may support the implementation of choice, including mutual inhibition in recurrent neural networks and the hierarchical organisation of timescales for information processing across the cortex. This account also suggests that certain correlates of value may be emergent rather than represented explicitly in the brain.

Neurobiologists have long been interested in developing mechanistic models to explain how we evaluate options and choose the best course of action1–3. Many of these models take a modular perspective. That is, they assume, even if only tacitly, that goal-directed choice can be subdivided into a set of discrete component processes, and that the neural implementation of these processes is both serial and localized4–7. The component processes typically include the evaluation of options, the comparison of option values in the absence of any other factors, the selection of an appropriate action plan, and the monitoring of the outcome of the choice. These component processes are generally assumed to correspond to discrete neural computations that are implemented in distinct neural structures.

An alternative perspective takes features of neural circuit anatomy as a starting point, and constructs circuit-based models that predict both behavioural and neural data while retaining biological plausibility at their core8–14. Recent research using such an approach emphasizes three overarching principles of reward-based choice. First, decisions may be formed in a distributed fashion across many brain regions that act in concert and perform similar computations. Second, the distributed networks implementing choices are highly recurrent in nature, which affects the kinds of computation that are performed. Third, these distributed and recurrent networks are organised into functional and temporal hierarchies.

Centring behavioural models on neural circuit plausibility builds on several traditions. Its origins can be traced back to mid-century pioneers such as Wiener, Hull, Hebb, and McCulloch and Pitts15,16. These scholars took advantage of then-new discoveries in neurophysiology and computer science to propose neurally plausible computational theories. The next generation of scientists, including Grossberg, Hopfield, McClelland, Rumelhart and Hinton, developed these ideas to model entire neural systems. The key insight of this tradition, from McCulloch and Pitts to the present day, was that neuron-like units that performed biophysically plausible computations and were connected in simple ways could perform astonishingly rich computations17–20. Unlike conventional computing architectures, such systems do not have dedicated memory and processing subsystems. Memory and computation are instead interwoven and distributed broadly throughout the system17. This is directly relevant to choice models, because evaluation depends critically on memory, and comparison is a basic computation for choice.

These traditions continue to influence neuroscience to this day. In decision making, their influence is evident in the work of computational neuroscientists such as Wang, Frank, O’Reilly, Rolls and Cisek, among others8–13. Recently, several studies have tested key empirical predictions of circuit-based accounts of decision-making. Moreover, these advances have coincided with a resurgence of interest in neural networks in machine learning and computer science21, and with methodological breakthroughs in neuroanatomy22 and circuit manipulation23. These methods should allow far more rigorous testing and refinement of circuit-level models in the near future. It is therefore particularly timely to consider the contribution that such models make to our understanding of reward-guided choice.

In this Opinion article, we first outline the key principles behind a distributed, hierarchical and recurrent account of reward-guided choice. We then discuss some empirical motivations for this account. We argue that the known and emerging neuroscience of simple economic choice is consistent with several important properties of circuit-based models.

The distributed, hierarchical, and recurrent approach differs qualitatively from modular explanations of reward-guided choice in that it is eliminative24. This means that the neural implementation of choice does not necessarily recapitulate the steps or modules often used to describe the overall process. Instead, choice algorithms may be thought of as emergent properties of network activity. This change in perspective leads to a different core set of research questions than the modular approach (BOX 1).

Box 1. A change of perspective.

Adopting a circuit-based perspective of reward-based choice reframes the questions that we ask about how decisions are formed, and alters our interpretation of the resulting neural data. Fundamentally, it changes the question from “What is represented?” to “How is the computation implemented?” To be more specific, the modular perspective has led us to ask questions such as: How is value computed and represented in the brain? In what regions do the evaluation, comparison and selection steps occur? In what ‘space’ (for example, goods-based or action-based) does value comparison occur? Do the computations performed in a particular brain region precede or follow the decision? What are the qualitative functional differences between different regions of the reward system?

By contrast, the distributed, hierarchical and recurrent perspective of reward-based choice makes a different set of assumptions, and these in turn lead to different research questions. First, it assumes that the component processes of choice may not be localised to particular computations in discrete brain areas. Instead, the components may be distributed across many regions simultaneously — implementing fundamentally similar, canonical computations. What is the nature of these computations, and how do different brain regions interact as choices are made?

The second assumption is that a comparison may not occur in a single decision space: decision spaces may reflect the anatomical connections of a given region, or even be artefacts of the experimental design. How does the hierarchical organization of decision and reward areas lead to effective choices? And how does it explain the observed hemodynamic and neuronal response patterns?

Third, most brain regions are both pre- and post-decisional. Equally, because decision formation occurs gradually, most regions may be better classified as mid-decisional. How does neuroanatomy produce a gradual transformation from offers to choices? What role do the brain’s ubiquitous recurrent and feedback connections play in that process?

Fourth, value, or at least certain correlates of value, may not be represented per se. The implementation of choice need not recapitulate the algorithms that can be used to describe the overall choice process. Instead, the algorithm is an emergent property of the system: certain correlates of value could therefore emerge naturally as a consequence of how neural dynamics unfold across different trials. What is the structure of these dynamics, and why might they give rise to value correlates? Which correlates of value is this true for?

Properties of the framework

Distributed

A distributed decision is one in which separate elements perform subsidiary computations that, when combined, produce the eventual choice. Well-known examples include the actions of individual voters in a national election and the selection of hive sites by swarms of bees25 (BOX 2). In each of these cases, individual elements process a small (and often noisy) fragment of the overall input, possibly including the outcomes of other agents, to make an overarching single decision in the aggregate.

Box 2. Distributed decision-making in bee swarms.

Although modular decision systems are often intuitive — the functions map directly onto the structure — distributed ones are not. The decision process of a bee swarm provides a natural and intuitive example of a distributed decision system.

In late spring, a hive of bees will enter into a swarm state and begin the process of choosing a new hive site25,159. Individual scout bees make reconnaissance flights to identify and evaluate potential sites. The ideal sites are open, dry cavities of medium volume that are located high up in the canopy, protected from wind, and facing south; thus adaptive decisions need to optimize across many dimensions. On finding a potential site, each scout returns to the swarm and signals its location and quality through a specific pattern of dancing. Dances signalling high-quality sites can recruit other bees to investigate the same site. Subsequent bees evaluate the popularity of a potential site by counting the number of visitors there. When scouts detect a quorum of other scouts at a hive site (around 20 bees), they transmit an activation signal to the swarm. Bee swarms even show a distinct mutual inhibition signal that reduces the chance of costly ambivalence160.

Beehive decision-making has several notable features that make it a good analogy for distributed decision-making25. First, there is no localized evaluation: no individual bee has more than an extremely limited amount of information about the world, and each bee’s behaviour is remarkably stochastic. Second, there is no central decision-maker: no individual or subgroup makes the decision; instead, it arises in a well-understood emergent manner from the simple rules followed by individuals. Thus, removing any bee or bees would degrade performance in a graded rather than all-or-none manner. Third, there is very little stable functional specialization: scouts are drawn at random and serve as site selectors, as observers of other bees’ dances, and as both members and monitors of the hive site quorum. Fourth, information about the value and location of options is firmly linked at every step of the process, thus sidestepping the otherwise difficult binding problem associated with choice and selection45.

As a consequence, the steps of evaluation, comparison and selection are clearly performed by bees, but at the same time they are not performed by dedicated subsets of bees or at specific times. No individual bee ever has knowledge of more than one hive site, so no bee performs a comparison159; instead the comparison step emerges as a consequence of the types of interactions the bees are programmed to perform.
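The rules in this box can be caricatured in a short agent-based simulation. The sketch below is a toy written for illustration, not the model of refs 25,159: the function name swarm_choice and every parameter (discovery, recruitment, abandonment and stop-signal probabilities, and a quorum of 20 out of 100 scouts) are hypothetical. Each scout only ever holds one site and knows only that site's quality; no scout compares sites, yet a single site is selected once its count reaches quorum.

```python
import numpy as np

def swarm_choice(qualities, n_scouts=100, quorum=20, discover=0.02,
                 recruit=0.5, abandon=0.05, stop=0.3, max_steps=5000, seed=0):
    """Toy swarm: return (site, step) once any site's scout count reaches quorum."""
    rng = np.random.default_rng(seed)
    q = np.asarray(qualities, dtype=float)
    n_sites = q.size
    site = np.full(n_scouts, -1)              # -1 marks an uncommitted scout
    for step in range(1, max_steps + 1):
        counts = np.bincount(site[site >= 0], minlength=n_sites)
        if counts.max() >= quorum:            # quorum sensing triggers the move
            return int(counts.argmax()), step
        # dance intensity per site grows with its quality and its advocates
        dance = counts * q / n_scouts
        new_site = site.copy()
        for i in range(n_scouts):
            if site[i] < 0:
                if rng.random() < discover:   # independent discovery
                    new_site[i] = rng.integers(n_sites)
                elif dance.sum() > 0 and rng.random() < recruit * dance.sum():
                    # follow one dance, picked in proportion to dance intensity
                    new_site[i] = rng.choice(n_sites, p=dance / dance.sum())
            else:
                own = site[i]
                # stop signals arrive from scouts committed to rival sites
                rivals = (counts.sum() - counts[own]) / n_scouts
                if rng.random() < abandon * (1.0 - q[own]) + stop * rivals:
                    new_site[i] = -1
        site = new_site
    counts = np.bincount(site[site >= 0], minlength=n_sites)
    return int(counts.argmax()), max_steps

site, step = swarm_choice([0.3, 0.5, 0.9], seed=1)
print(f"site {site} reached quorum at step {step}")
```

Rerunning with different seeds can give different outcomes, because each scout behaves randomly; site quality enters only through dance intensity and the abandonment rate, and the stop-signal term plays the role of the cross-inhibition described above.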

A neuron can be thought of as a small and informationally limited decision-maker26,27. It non-linearly transforms its dendritic inputs to its firing rate. However, this transformation of inputs to outputs does not have to be completed by a single neuron (or layer of neurons) to be useful. Neurons providing incremental change may instead contribute as part of a well-organised distributed system that includes multiple brain regions. Any neuron whose activity influences the behaviour of the network can be thought of as participating in that behaviour. In a decision-making network, this means that individual neurons may not require a pure representation of decision parameters to contribute to the decision-making process28.

Distributed transformations within cortical circuits

Distributed decisions often involve a single simple computation repeated in each element on different inputs. One strong candidate for that computation in economic choice is competition through mutual inhibition. Mutual inhibition is a common motif that is found throughout the nervous system, and is often considered part of a basic repertoire of neural circuits29,30. Effective competition via mutual inhibition can be mediated in a biophysically realistic cortical circuit model by an appropriate choice of synaptic weights11,13 (FIG. 1a). Several recent results indicate that mutual inhibition may be at the core of reward-guided choice31–42.
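A reduced, two-population firing-rate caricature conveys the core idea of competition via mutual inhibition. It is a sketch under stated assumptions, not the spiking attractor network of ref. 11: the sigmoid rate function, the self-excitation and inhibition weights, the input scaling c and the 0.5 decision margin are all hypothetical choices. The value of each option raises the external drive to its own population, and each population inhibits the other until one wins.

```python
import numpy as np

def sigmoid(x, gain=4.0, theta=1.0):
    """Saturating rate function (assumed form and parameters)."""
    return 1.0 / (1.0 + np.exp(-gain * (x - theta)))

def mutual_inhibition_choice(v_a, v_b, w_self=1.0, w_inh=2.0, c=0.3,
                             noise=0.0, tau=0.02, dt=0.001, t_max=1.0,
                             margin=0.5, seed=0):
    """Two-population rate model; returns (choice, decision time in s)."""
    rng = np.random.default_rng(seed)
    r = np.zeros(2)                              # rates of A units, B units
    drive_ext = 1.0 + c * np.array([v_a, v_b])   # value-scaled external input
    for step in range(int(round(t_max / dt))):
        # each population excites itself and inhibits its competitor
        recurrent = w_self * r - w_inh * r[::-1]
        drive = drive_ext + recurrent + noise * rng.standard_normal(2)
        r = r + (dt / tau) * (-r + sigmoid(drive))
        if abs(r[0] - r[1]) > margin:            # one population has won
            return ("A" if r[0] > r[1] else "B"), (step + 1) * dt
    return ("A" if r[0] > r[1] else "B"), t_max

print(mutual_inhibition_choice(1.0, 0.0))
print(mutual_inhibition_choice(0.6, 0.4))
```

Because the recurrent dynamics amplify any difference between the two rates, a small value difference (0.6 versus 0.4) yields a later decision time than a large one (1.0 versus 0.0); adding input noise lets choices between equal-valued options vary across seeds, as in the model of FIG. 1a.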

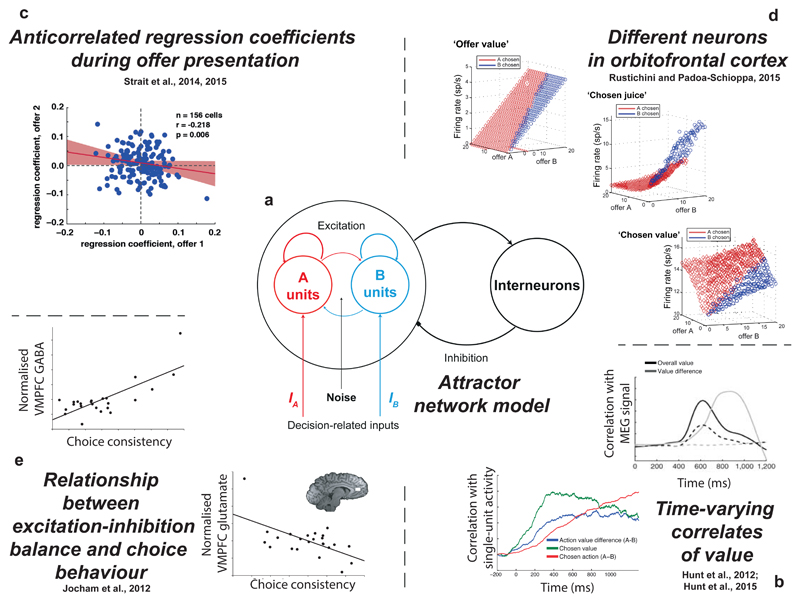

Figure 1. Evidence for competition via mutual inhibition during reward-guided choice.

a | A biophysical attractor network model of decision-making11 relies upon effective competition via mutual inhibition between ‘A units’ and ‘B units’, which are populations of neurons selective for option A and option B, respectively. Noise is added as an input to all excitatory units in the network, causing choice behaviour to vary stochastically across simulations. This model has been used to explain various findings. b | The model predicts variation in correlates of value as a function of time31,32. In human magnetoencephalography data31, the model predicts a transition from ‘overall value’ correlates to ‘value difference’ correlates. In macaque single units recorded from dorsolateral PFC32, it predicts a sequence of value correlates from the original difference in options available to the eventual categorical choice. c | The model predicts an anticorrelation of regression coefficients between different presented offers. This is observed in single-neuron data recorded from ventromedial PFC and ventral striatum during sequential choice34,35. d | In orbitofrontal cortex, different neurons are found that respond to the values of different juice offers, the value of the chosen offer, or the identity of the chosen juice49. It has been proposed that these neuronal classes correspond to different classes of neurons in the network model36, whose response properties are shown here. e | The model predicts variation in choice behaviour as the balance between excitation and inhibition is altered. This matches evidence from human subjects, whose choice consistency correlates with resting concentrations of glutamate and GABA in ventromedial PFC, indexed using magnetic resonance spectroscopy33.

A recent study using magnetoencephalography demonstrated that human ventromedial prefrontal cortex (vmPFC) and intraparietal sulcus (IPS) expressed a key signature of mutual inhibition during economic choice: a transition from encoding the sum of the chosen and unchosen values to encoding their difference31 (FIG. 1b). This signature was subsequently also found in a study of local field potentials (LFPs) recorded from several subregions of the macaque PFC, including the orbitofrontal cortex (OFC), the dorsolateral prefrontal cortex (DLPFC) and the anterior cingulate cortex (ACC), confirming the generality of the mutual inhibition principle across species32.

Single-neuron recordings in macaques provide further evidence for a mutual inhibition process. In a binary choice, neurons in both the vmPFC34 and the ventral striatum (VS)35 encode the values of the two offers through monotonic changes in firing rate. During the comparison period of the task, the directions of the tuning curves (positive or negative) for the two offers are opposed34,35 (FIG. 1c). Consequently, the ensemble activity of both areas functions as a comparator between the values of the two offers. The fact that similar effects are seen in both areas, and with largely overlapping time courses, suggests that neither is the sole site of comparison, but that comparison may take place in both regions simultaneously.
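The analysis behind FIG. 1c can be illustrated on synthetic data. In the sketch below the opposed tuning is built in by assumption (each simulated neuron responds to v_A − v_B with its own gain and sign), so it demonstrates what the regression measures rather than providing evidence for it; all names and numbers are hypothetical. Regressing each neuron's rate on both offer values gives coefficients that are anticorrelated across the population, and projecting population activity onto those coefficients yields a single value-difference (comparator) signal.

```python
import numpy as np

rng = np.random.default_rng(0)
n_neurons, n_trials = 200, 500
v_a = rng.uniform(0.0, 1.0, n_trials)        # synthetic values of offer A
v_b = rng.uniform(0.0, 1.0, n_trials)        # synthetic values of offer B

# Assumed generative model: each neuron's rate depends on v_a - v_b with a
# neuron-specific gain w (either sign), plus a baseline and Gaussian noise.
w = rng.normal(0.0, 1.0, n_neurons)
baseline = rng.uniform(5.0, 15.0, n_neurons)
rates = (baseline[:, None] + w[:, None] * (v_a - v_b)[None, :]
         + rng.normal(0.0, 1.0, (n_neurons, n_trials)))

# Regress every neuron's rate on both offer values at once.
X = np.column_stack([np.ones(n_trials), v_a, v_b])
betas, *_ = np.linalg.lstsq(X, rates.T, rcond=None)   # shape (3, n_neurons)
beta_a, beta_b = betas[1], betas[2]
coef_corr = np.corrcoef(beta_a, beta_b)[0, 1]

# Projecting the population onto beta_a yields a single comparator signal.
readout = beta_a @ (rates - rates.mean(axis=1, keepdims=True))
readout_corr = np.corrcoef(readout, v_a - v_b)[0, 1]
print(f"beta_A vs beta_B: r = {coef_corr:.2f}; "
      f"readout vs v_A - v_B: r = {readout_corr:.2f}")
```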

Nor are the vmPFC and the VS likely to be unique in this respect; the mutual inhibition model has also been used to capture the dynamics of single-unit activity in the OFC36 (FIG. 1d), the DLPFC32 and the lateral intraparietal cortex (LIP)39,42. Data from studies in the dorsal premotor cortex (PMd) are also consistent with a mutual inhibition process. In this region, neural responses during economic decisions encode the relative value of targets in their response fields, and show additional sensitivity to the physical distance between those response fields40,41. This finding suggests that even ostensibly motor areas are part of the distributed choice network.

Distributed transformations across cortical circuits

The body of work described above suggests that decisions occur through repeated mutual inhibition computations occurring simultaneously in both the motor and the abstract value domains. This implies that comparison is not the unique purview of any single brain area43–45. The distributed account instead suggests that multiple areas may perform a similar non-specialized function: they may all perform a comparison operation like mutual inhibition on the inputs received. Importantly, however, those inputs would differ by region. The nature of the competition occurring in any given area would then depend upon the interaction of the particular demands of the task46 and each area’s anatomical inputs47,48. For example, tuning properties of neurons in the OFC may be relatively specialized for gustatory comparisons49, whereas neurons in cingulate cortex may appear specialized for motor cost evaluations50.

This idea of multiple distributed comparators may help to resolve differing interpretations of imaging studies in neuroeconomics, which have attempted to localise the regions that are most critical to value comparison. In blood-oxygen-level-dependent (BOLD) functional MRI (fMRI) studies, debate has particularly centred on different regions of medial frontal cortex. For example, one study used the mutual inhibition model to predict how variation in levels of vmPFC GABAergic inhibition, indexed via magnetic resonance spectroscopy in humans, related to cross-subject variation in both choice stochasticity and value correlates in the vmPFC BOLD fMRI signal33 (FIG. 1e). A related study argued that because activity within a mutual inhibition model is highest at the end of the choice process, and this activity is persistent, the fMRI signal will be greatest for faster (easier) decisions, as is typically the case in the vmPFC51. However, other studies have argued that one should consider only the activity accumulated until a decision boundary is reached. In this case, the fMRI signal would be higher for slower (more difficult) decisions, as is typically found in the dorsomedial PFC52.
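The two readouts can be contrasted in a minimal bounded-accumulator simulation. The assumptions are ours, not those of refs 51,52: a single accumulated variable x whose drift scales with value difference, |x| as a proxy for the neural activity that the BOLD signal sums, activity held at the bound once a decision is made, and a fixed 2 s analysis window. Summing activity only until the bound favours slow, difficult trials; summing over the whole window, including persistent post-decision activity, favours fast, easy trials.

```python
import numpy as np

def simulate_readouts(drift, n_trials=2000, bound=1.0, sigma=1.0,
                      dt=0.001, window=2.0, seed=0):
    """Mean summed |x| before the bound, and over a fixed analysis window."""
    rng = np.random.default_rng(seed)
    x = np.zeros(n_trials)
    done = np.zeros(n_trials, dtype=bool)
    until_bound = np.zeros(n_trials)
    whole_window = np.zeros(n_trials)
    for _ in range(int(round(window / dt))):
        step = drift * dt + sigma * np.sqrt(dt) * rng.standard_normal(n_trials)
        x = np.where(done, x, np.clip(x + step, -bound, bound))  # hold at bound
        done |= np.abs(x) >= bound
        activity = np.abs(x) * dt
        until_bound += np.where(done, 0.0, activity)   # stop counting at bound
        whole_window += activity                        # persistent activity counts
    return until_bound.mean(), whole_window.mean()

easy = simulate_readouts(drift=4.0)    # large value difference
hard = simulate_readouts(drift=0.5)    # small value difference
print("until bound  (easy, hard):", round(easy[0], 3), round(hard[0], 3))
print("whole window (easy, hard):", round(easy[1], 3), round(hard[1], 3))
```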

A potential reconciliation of these results would be that both regions implement a mutual inhibition process but differ in their response properties post-choice after a decision bound has been reached. This explanation is supported by the event-related profile of LFP recordings from multiple subregions of the PFC during reward-based choice32. It is also supported by the simultaneous emergence of single unit choice-related signals across six simultaneously recorded cortical regions in perceptual choice53, and the demonstration that motor output (corticospinal excitability) is already biased as a decision is unfolding54.

The question then arises of how different areas interact as choices are made. Here, whole-brain techniques (such as fMRI) come into their own55. Although anatomical connectivity is stable, functional connectivity is more flexible. One recent study examined changes in functional connectivity during multi-attribute choices involving integration of stimulus-based and action-based attributes37. A model of choice in which competition via mutual inhibition occurred at multiple levels (stimuli, actions and attributes) best explained the subjects’ choices. BOLD fMRI signal in the IPS matched the model’s predictions for the competition over which attribute was most relevant, with the value difference signal exhibiting opposing signs for the relevant and irrelevant attributes. Notably, changes in IPS functional connectivity to other brain areas depended on which attribute was most relevant to the current choice. Connectivity with the OFC increased when stimuli were relevant, whereas connectivity with the putamen increased when actions were relevant. A related study of multidimensional learning demonstrated that the IPS is also particularly active when subjects update their understanding of the relevance of particular dimensions for guiding future choices56.

Recurrent

The predominant paradigm in studies of simple economic choice has been N-alternative forced choice, in which two or more options are presented simultaneously to the subject and they select the most valuable. However, real-world choice is often sequential rather than simultaneous in nature57,58. Even ostensibly simultaneous choices may be made sequential by virtue of limits imposed by attention or noise that needs to be integrated out across time2,14,59. The relationship between simultaneous and sequential accounts of choice parallels a distinction made between the computational roles of two distinct architectures of neural networks21: feedforward and recurrent architectures.

Two different classes of network architecture

Feedforward neural networks, recently popularised through the impressive performance of convolutional networks (ConvNets) in computer vision60,61, contain units whose activity depends only on the currently presented input. Such networks are ideally suited to tasks involving classification of multiple, simultaneously presented input features, such as pixel intensities in an image. The design of the ‘local convolution’ and ‘max pooling’ steps in a ConvNet was based on the response properties of primary visual cortex (V1) simple and complex cells, respectively60. Modern feedforward ConvNet models strikingly reproduce single-unit responses along the visual hierarchy62,63.

However, feedforward networks are poorly adapted to tasks that require some form of persistent memory for previous states across time. Recurrent neural networks (RNNs), by contrast, contain units that receive not only inputs from other network layers but also their own output from the previous time step (time t − 1)18,64. This allows RNNs to sustain memory for inputs long after they have been removed, so that temporally extended computations can be performed on sequential inputs65,66. One of the original successes of such networks was to show how a biologically plausible network could account for working-memory responses in the PFC67,68. The recurrence of these networks recapitulates the high degree of recurrent connectivity observed empirically in prefrontal circuits relative to other parts of the cortex69,70.
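The difference between the two architectures can be seen with a single unit. In this hypothetical sketch the weights (w_in = 2, w_rec = 1.5) are arbitrary, and the tanh nonlinearity is a common but assumed choice. A feedforward unit returns to zero as soon as a brief input is removed, whereas a recurrent unit that receives its own output from time t − 1 can hold a trace of the input indefinitely.

```python
import numpy as np

def feedforward(inputs, w_in=2.0):
    """Each output depends only on the input presented at that time step."""
    return np.tanh(w_in * np.asarray(inputs, dtype=float))

def recurrent(inputs, w_in=2.0, w_rec=1.5):
    """h[t] = tanh(w_in * x[t] + w_rec * h[t-1]): the unit also sees its past output."""
    h = np.zeros(len(inputs))
    prev = 0.0
    for t, x in enumerate(inputs):
        prev = np.tanh(w_in * x + w_rec * prev)
        h[t] = prev
    return h

pulse = np.zeros(20)
pulse[2] = 1.0                 # brief input, removed after one time step
ff_out = feedforward(pulse)
rnn_out = recurrent(pulse)
print(ff_out[-1], rnn_out[-1])
```

With w_rec = 1.5 the recurrent unit has a stable non-zero fixed point (about 0.86), so the pulse switches it into a self-sustaining state; with w_rec at or below 1 the only stable state is zero and the trace decays.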

RNNs as a naturalistic substrate for sequential choices

At first glance, the simultaneous simple economic choices between two goods often studied in the lab appear well suited to being solved with a feedforward network. The options in such choices are, after all, presented to the subject simultaneously and statically. However, reaction times in such tasks vary systematically as a function of value, and this implies that the underlying computation is dynamic rather than static71. Recent models in neuroeconomics have therefore moved away from static economic accounts of choice towards temporally extended algorithms, in which noisy estimates of value are integrated sequentially over time14,52. These algorithms can be formally related to the model of competition via mutual inhibition discussed above (FIG. 1)72. This network exemplifies how a biologically plausible RNN could implement an evidence accumulation algorithm11.
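One standard way to make the link between mutual inhibition and evidence accumulation explicit is to linearize the competition. Assume (as a simplification, not the biophysical model of FIG. 1a) two units with activities y_1 and y_2, leak k, mutual inhibition w, value-dependent inputs I_1 and I_2, and independent noise of amplitude c. Taking the difference between the two units gives:

```latex
\begin{aligned}
dy_1 &= (I_1 - k\,y_1 - w\,y_2)\,dt + c\,dW_1,\\
dy_2 &= (I_2 - k\,y_2 - w\,y_1)\,dt + c\,dW_2,\\
x &\equiv \frac{y_1 - y_2}{\sqrt{2}}, \qquad dW \equiv \frac{dW_1 - dW_2}{\sqrt{2}},\\
dx &= \Bigl[(w - k)\,x + \frac{I_1 - I_2}{\sqrt{2}}\Bigr]dt + c\,dW.
\end{aligned}
```

When inhibition balances leak (w = k), the first term vanishes and x performs drift diffusion with drift proportional to the input (value) difference, so that reaction times to a fixed bound lengthen as the two values become closer; when w > k, the difference grows on its own, as in the attractor regime.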

But why should the brain use a recurrent network if a feedforward architecture appears sufficient? One reason may be the sequential structure of the choices that are typically faced in natural environments, such as foraging decisions. These are probably the choices that have most heavily shaped the evolution of the frontal cortex43,73. Foraging theory emphasizes that nearly all decisions must be made strategically, which requires a telescoping representation of rewards and their future allocation distribution74,75. For example, when macaque monkeys perform a foraging task, activity within the anterior cingulate cortex (ACC) accumulates slowly over time in a depleting resource environment until a fixed firing threshold is reached, at which point a change in behaviour is triggered76.
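The threshold mechanism can be sketched as a simple rise-to-threshold rule. This is a hypothetical toy, not a fit to the data of ref. 76: the accumulator input (how far the current harvest falls below an assumed background reward rate), the depletion factor and the threshold are all illustrative. Activity builds across successive decisions to stay, and the patch is abandoned on the first trial on which activity reaches a fixed threshold.

```python
def patch_leaving_trial(initial_reward=10.0, depletion=0.9, background=5.0,
                        threshold=8.0, max_trials=100):
    """Return the trial on which a toy accumulator crosses a fixed threshold."""
    accumulator = 0.0
    reward = initial_reward
    for trial in range(1, max_trials + 1):
        # hypothetical input: shortfall of the current harvest relative to
        # the average reward rate assumed to be available elsewhere
        accumulator += max(background - reward, 0.0)
        if accumulator >= threshold:
            return trial                      # fixed threshold -> leave the patch
        reward *= depletion                   # the patch depletes with each harvest
    return None

print(patch_leaving_trial())                  # 14
print(patch_leaving_trial(depletion=0.7))     # 7
```

Faster depletion drives the accumulator up more quickly, so the same fixed threshold is reached after fewer decisions to stay.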

The algorithmic structures of economic and foraging models are certainly very different, and one possibility would be that they are implemented by different brain systems, with the former being feedforward and the latter recurrent. We consider it more likely, however, that evolution will have developed a general approach to solving both types of decisions using temporally extended, recurrent implementations. The neural mechanisms of economic choice may even build on the underlying architecture laid down to solve foraging choices using recurrent computations. This fits well with modern ideas in neuroeconomic accounts that argue for temporal integration of noisy value estimates across time14,52,71, with attention being sequentially allocated to different choice options59,77.

Understanding recurrent network computations

The majority of neuroscientific studies to date using RNNs have used networks containing hand-tuned synaptic weights to elicit specific behaviours11 (FIG. 1a). Although this is a valuable starting point, it produces an unrealistic homogeneity of neuronal responses that does not match the empirically observed heterogeneity in cortical populations78–80. Recently introduced automatic training algorithms for RNNs have vastly increased their capacity to perform a wide variety of tasks and also, potentially, to accurately describe neural data81,82. A major challenge is then to understand the nature of the computations that an RNN is performing after it has been automatically trained.

An elegant solution to this challenge is to perform ‘reverse engineering’ on RNNs that have been fit to data83. This was exemplified in a recent study of PFC population responses, in which the PFC performed both the selection and the integration of relevant information in a context-dependent perceptual decision task84. The authors trained a randomly connected RNN to capture key features of the behavioural data; activity in the trained RNN matched with several key features of the neural population data. Reverse engineering of the RNN then revealed the mechanisms whereby such a computation could be achieved within a single cortical circuit. Two stable line attractors were present within the trained RNN; the selection of relevant inputs depended on how the network’s activity relaxed towards these line attractors under different contexts. Similar analyses of population recordings should yield important insights into the recurrent computations supporting reward-based choice in the near future85.
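The logic of reverse engineering can be shown on a network small enough to analyse by hand. The sketch below uses a hypothetical two-unit linear RNN whose weights are constructed, rather than trained, to contain one line attractor (eigenvalue 1) and one decaying direction (eigenvalue 0.5). A trained nonlinear network such as that of ref. 84 instead requires a numerical search for fixed points and linearization around them. Here, inspecting the eigenvalues of the recurrent weights identifies the attractor, and simulation confirms that activity relaxes onto it.

```python
import numpy as np

# Hypothetical 2-unit linear RNN, h[t+1] = W @ h[t], built with eigenvalues
# 1.0 (a line attractor) and 0.5 (a direction along which activity decays).
attractor_dir = np.array([1.0, 1.0]) / np.sqrt(2.0)
decay_dir = np.array([1.0, -1.0]) / np.sqrt(2.0)
V = np.column_stack([attractor_dir, decay_dir])
W = V @ np.diag([1.0, 0.5]) @ np.linalg.inv(V)

# "Reverse engineering": inspect the linear dynamics implied by the weights.
eigvals, eigvecs = np.linalg.eig(W)
slow_mode = eigvecs[:, np.argmax(eigvals.real)]
print("eigenvalues:", np.round(eigvals.real, 3))

# Any initial state relaxes onto the line spanned by slow_mode; where it
# lands on that line is then remembered indefinitely.
h = np.array([0.9, -0.2])
for _ in range(50):
    h = W @ h
print("final state:", np.round(h, 3))
```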

Hierarchical

A hierarchical organization is a cardinal feature of the brain’s reward and decision-making system. At each stage of the hierarchy, information from the preceding area is converted into a more abstract and more comprehensive form. One feature of the hierarchy of brain areas associated with economic choice is that there are new inputs at each point. Thus, for example, the OFC receives gustatory inputs, the vmPFC receives limbic inputs, the subgenual ACC receives hypothalamic inputs, and the dorsal ACC receives motor inputs86. These inputs provide a way for different factors to enter into the distributed network subserving choice. They also allow each area to make a different specific contribution to choice even if the general role of incorporating information into ongoing decisions is similar. This viewpoint differs from one in which all factors that influence choices must come together at a single point to create a single value scale before they can influence choice.

Hierarchical RNNs allow for multiple timescales

Humans excel at tasks that demand online organisation of behaviour across multiple differing timescales. Such tasks are particularly sensitive to PFC damage87. It has been hypothesised that the presence of parallel and hierarchical architectures within PFC allows different pieces of information to be remembered and parsed at multiple timescales88,89. This idea recapitulates recent developments in the design of RNNs in computer science. In particular, by making recurrent networks multi-layered or ‘deep’21,90, it is possible to dramatically improve performance on tasks that operate over multiple timescales (such as the generation of meaningful sentences to describe images91, and the recognition of speech65). The relevant timescales in speech are not specified to the network before training; instead, automated training of multiple connected RNNs extracts the relevant temporal structure and uses it to generate the requisite output. In decision-making, the ability of a population of cortical neurons to exhibit multiple timescales of integration over a previous history of rewards has recently been shown empirically92. These timescales vary in a manner that depends upon the timescale of integration of the animal’s current choice behaviour92,93.
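Multiple integration timescales can be illustrated with a bank of leaky integrators, each a hypothetical stand-in for a neuron that tracks reward history with its own time constant (here 2, 10 and 50 trials, chosen arbitrarily). This is a deliberately simple substitute for the deep RNNs and recorded populations discussed above, not a model of them. After a switch from reward to no reward, fast units update almost immediately, whereas slow units retain a longer memory of the previous reward rate.

```python
import numpy as np

def leaky_integrators(rewards, taus):
    """Exponentially weighted reward history at several time constants (in trials)."""
    taus = np.asarray(taus, dtype=float)
    alphas = 1.0 - np.exp(-1.0 / taus)       # per-trial update rate for each unit
    v = np.zeros_like(taus)
    history = np.zeros((len(rewards), taus.size))
    for t, r in enumerate(rewards):
        v = v + alphas * (r - v)             # each unit integrates at its own rate
        history[t] = v
    return history

# Hypothetical schedule: rewarded on every trial, then never rewarded.
rewards = np.r_[np.ones(50), np.zeros(50)]
history = leaky_integrators(rewards, taus=[2, 10, 50])
drop = history[49] - history[54]             # change over 5 trials after the switch
print(np.round(drop, 2))
```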

Hierarchical timescales for information processing in cortex

A hierarchy of timescales across brain regions has recently been explicated in a large-scale network model of dynamical processing in non-human primate neocortex. One study combined a mean-field reduction of an RNN within each cortical area with detailed knowledge of anatomical connectivity between different areas derived from tracer studies94. This established a mechanism whereby multiple timescales could coexist within a single anatomical network, explaining the temporal structure of neurophysiological data recorded at rest across different cortical areas95. This work suggests an explanation for why early sensory areas possess an inherently transient temporal structure (changing across tens of milliseconds) while higher regions (for example, in the PFC) show a more sustained, longer-lasting temporal profile (across hundreds of milliseconds or longer) (FIG. 2a).
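The ‘intrinsic timescale’ used to order cortical areas in FIG. 2a is read off the rate of decay of the autocorrelation of activity at rest. The sketch below estimates it from synthetic first-order autoregressive fluctuations with known timescales of 50 ms and 300 ms; the synthetic data, the 50 ms bins and the log-linear fit over three lags are our assumptions, whereas FIG. 2a is based on spike counts from recorded neurons.

```python
import numpy as np

def simulate_ar1(tau_ms, bin_ms=50.0, n_bins=20000, seed=0):
    """Synthetic 'spike-count' fluctuations with a known timescale tau_ms."""
    rng = np.random.default_rng(seed)
    phi = np.exp(-bin_ms / tau_ms)            # lag-one correlation implied by tau
    x = np.zeros(n_bins)
    for t in range(1, n_bins):
        x[t] = phi * x[t - 1] + rng.standard_normal()
    return x

def intrinsic_timescale(x, bin_ms=50.0, max_lag=3):
    """Estimate tau from the decay of the autocorrelation (log-linear fit)."""
    lags = np.arange(1, max_lag + 1)
    ac = np.array([np.corrcoef(x[:-k], x[k:])[0, 1] for k in lags])
    keep = ac > 0                             # the log is defined only for positive values
    slope = np.polyfit(lags[keep] * bin_ms, np.log(ac[keep]), 1)[0]
    return -1.0 / slope

fast = intrinsic_timescale(simulate_ar1(tau_ms=50.0, seed=1))
slow = intrinsic_timescale(simulate_ar1(tau_ms=300.0, seed=2))
print(f"estimated timescales: {fast:.0f} ms vs {slow:.0f} ms")
```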

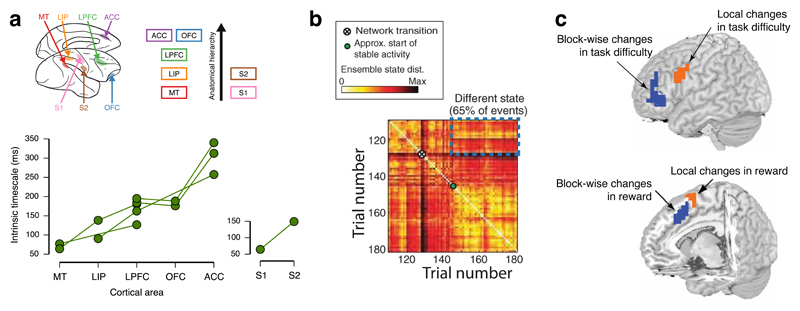

Figure 2. Hierarchical organisation of cortical timescales and its relationship with reward-guided choice.

a | The ‘intrinsic timescale’ of different cortical regions can be indexed by examining the rate of decay of their spike rate autocorrelation while the subject is at rest95. This reveals a hierarchical organisation of cortical timescales, with lower areas having rapidly changing activity, and higher areas having more persistent activity (bottom panel; data points from the same data set are joined). The medial prefrontal cortex (PFC), in particular the anterior cingulate cortex (ACC), has the longest intrinsic timescale. b | The panel shows persistent network activity, and network ‘resets’ during behavioural shifts, in rat medial PFC in a reward-guided exploration task100. Each element of the matrix reflects the trial-to-trial similarity of neuronal ensemble activity. The lighter and darker elements reflect more similar and more dissimilar network activity, respectively. Large, bright blocks reflect persistent, stable states across trials. Abrupt network transitions, and relaxations into different stable states, can be readily detected within network activity, and are related to behavioural shifts. c | The panel depicts the rostro-caudal hierarchy of temporal organisation in the PFC104. A similar hierarchy can be seen on the medial and lateral surfaces for internal motivation (reward) and task difficulty, respectively. Whereas more anterior regions reflect block-wise changes in reward or in task difficulty, more posterior regions reflect trial-to-trial changes. MT, middle temporal area; LIP, lateral intraparietal cortex; LPFC, lateral PFC; OFC, orbitofrontal cortex; S1, primary somatosensory cortex; S2, secondary somatosensory cortex.

Recent decision-making paradigms have combined evidence accumulation at both slow (inter-trial) and fast (intra-trial) timescales96. It might then be predicted that evidence accumulation at slow timescales would be supported by cortical regions with sustained temporal structure. Interestingly, the dACC emerges as having the most sustained temporal structure among the regions that have been characterised thus far95. This observation sits well with studies that have linked ACC activity to disengagement from a foreground option in foraging tasks75,76,97,98 and during exploration99. A foreground option may be held in mind over many trials, but disengagements are linked to abrupt and coordinated changes in network activity in the medial PFC100 (FIG. 2b). Prior to disengagement, ramping activity is observed both in the medial PFC–dACC76,101,102 and in another region associated with exploration99, the rostrolateral PFC102.

Relationship to cognitive hierarchies

The observed relationship between anatomical and temporal hierarchies may be related to hierarchical accounts of PFC function in other cognitive paradigms103. One such account suggests that two parallel streams of rostro-caudal organization exist within the PFC, along its medial and lateral surfaces, respectively104 (FIG. 2c). Data from BOLD fMRI studies suggest that a hierarchy relating to states of internal motivation exists along the medial surface104–106, with more anterior portions reflecting block-wise changes in reward value and more posterior portions reflecting trial-to-trial changes. By contrast, studies indicate that a hierarchy of cognitive control processes exists along the lateral surface104,107,108. Again, anterior regions of the lateral surface exhibit sustained changes reflecting the complexity of the current block, whereas more posterior regions of this surface reflect task complexity only on relevant individual trials. Similar rostro-caudal PFC hierarchies have also been found in a related study in which activation transferred to the striatum during the course of hierarchical rule learning109. This observation was well described by a hierarchically organized network model of corticostriatal interactions110 inspired by a particularly successful form of RNN known as ‘long short-term memory’111,112.
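A single memory cell is enough to show the gating idea that LSTM networks contribute. This minimal sketch uses the standard textbook LSTM equations. It is not the corticostriatal model of ref 110. The scalar weights are set to 1 and the gate biases are fixed by hand (our assumption) so that the effect of each gate is easy to see. With the input gate closed and the forget gate open, a stored value survives a stream of distracting inputs. Closing the forget gate wipes it.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h, c, p):
    """One step of a standard scalar LSTM cell (textbook equations)."""
    i = sigmoid(p["wi"] * x + p["ui"] * h + p["bi"])    # input gate
    f = sigmoid(p["wf"] * x + p["uf"] * h + p["bf"])    # forget gate
    o = sigmoid(p["wo"] * x + p["uo"] * h + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h + p["bg"])  # candidate content
    c_new = f * c + i * g         # gated update of the memory cell
    h_new = o * math.tanh(c_new)  # gated readout
    return h_new, c_new


def run_cell(bias_input, bias_forget, c0=0.8, steps=20, seed=0):
    """Feed random distractor inputs to one cell and return its final state."""
    rng = random.Random(seed)
    p = {k: 1.0 for k in ("wi", "ui", "wf", "uf", "wo", "uo", "wg", "ug")}
    p.update(bi=bias_input, bf=bias_forget, bo=0.0, bg=0.0)
    h, c = 0.0, c0
    for _ in range(steps):
        h, c = lstm_step(rng.uniform(-1.0, 1.0), h, c, p)
    return c


# Input gate near 0 and forget gate near 1: the stored value is maintained.
c_maintained = run_cell(bias_input=-10.0, bias_forget=10.0)
# Input gate near 0 and forget gate near 0: the stored value is wiped.
c_cleared = run_cell(bias_input=-10.0, bias_forget=-10.0)
print(round(c_maintained, 3), round(c_cleared, 3))
```

In a trained network, gate activations would depend on the inputs and the recurrent state, which is what lets a model learn when to update and when to maintain information.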

Evidence for the framework

Lesions and component processes

An important property of distributed computing systems is graceful degradation17. Because information is stored broadly, small amounts of damage to the system are seldom catastrophic; major impairments come only with large amounts of damage. Damage selectively impairs difficult retrieval processes and spares easier ones. Brain lesions are the biological analogue of damage to a connectionist network, and they have long provided an important source of evidence for functional specialization. In the visual system, lesions to the middle temporal area cause akinetopsia113 and lesions to the fusiform face area cause prosopagnosia114, confirming functional specialization for motion perception and face processing, respectively.
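A classic Hopfield network19 can demonstrate graceful degradation. The sketch below is illustrative only. It stores a few random patterns with a Hebbian rule. It then ‘lesions’ the network by deleting a random fraction of connections, a crude stand-in for brain damage that we chose for simplicity, and measures how well a degraded cue is completed. The network size, number of patterns and lesion fractions are hypothetical.

```python
import random


def store(patterns):
    """Hebbian weights W_ij = (1/N) * sum over patterns of x_i * x_j; no self-connections."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for x in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += x[i] * x[j] / n
    return w


def lesion(w, fraction, rng):
    """Copy of w with a random fraction of symmetric connections set to zero."""
    n = len(w)
    out = [row[:] for row in w]
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < fraction:
                out[i][j] = out[j][i] = 0.0
    return out


def recall(w, cue, sweeps=5):
    """Asynchronous sign updates; a unit with zero input keeps its state."""
    s = list(cue)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            if h > 0:
                s[i] = 1
            elif h < 0:
                s[i] = -1
    return s


def overlap(a, b):
    """1.0 means perfect recall; about 0.0 means chance."""
    return sum(x * y for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
N_UNITS, N_PATTERNS = 100, 3
patterns = [[rng.choice((-1, 1)) for _ in range(N_UNITS)] for _ in range(N_PATTERNS)]
weights = store(patterns)
target = patterns[0]
cue = [-x if rng.random() < 0.1 else x for x in target]  # degraded retrieval cue

recall_quality = {}
for fraction in (0.0, 0.25, 0.5, 0.75, 0.95):
    damaged = lesion(weights, fraction, rng)
    recall_quality[fraction] = overlap(recall(damaged, cue), target)
    print(f"{fraction:.0%} of connections removed: overlap {recall_quality[fraction]:.2f}")
```

Each memory is spread across all connections. In this small example, removing a quarter or half of them therefore leaves recall essentially intact. The printed overlaps show where, for this particular network, recall starts to suffer under heavier damage.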

It has been natural for experimenters to design lesion studies that allow conceptually different components of choice to be assigned to different brain regions, and the results of these studies have supported a degree of functional specialisation within the PFC115–117. However, given how profound the impact of PFC lesions on day-to-day choices can be118, the impairments they produce in laboratory choice tasks are often surprisingly mild. PFC lesions sometimes lead to graded deficits in choosing, with difficult decisions impaired but easier ones spared115. It is possible that these deficits are mild because the tasks seek to differentiate component processes of economic choice rather than addressing the temporally extended nature of real-world economic decisions87.
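A closed-form example shows one way a distributed system could produce such graded deficits. Purely for illustration, it assumes that a choice is the sign of the average of many redundant, independently noisy estimates of the value difference, and that a lesion simply removes some of those estimates. Averaging over fewer units increases noise. As a result, accuracy falls sharply on hard choices (small value differences), while easy choices stay near ceiling. The unit counts and noise level are hypothetical.

```python
import math


def p_correct(value_diff, n_units, noise_sd):
    """Accuracy when the choice is the sign of the mean of n_units
    independent, equally noisy estimates of the value difference."""
    z = value_diff * math.sqrt(n_units) / noise_sd
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF


INTACT, LESIONED, NOISE_SD = 100, 25, 5.0  # hypothetical: lesion removes 75% of units
for label, dv in (("hard", 0.5), ("easy", 3.0)):
    before = p_correct(dv, INTACT, NOISE_SD)
    after = p_correct(dv, LESIONED, NOISE_SD)
    print(f"{label}: {before:.3f} -> {after:.3f}")
# hard: 0.841 -> 0.691
# easy: 1.000 -> 0.999
```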

The variety of cognitive functions affected by PFC lesions also casts doubt on the idea that choice, or even value comparison, is a specialisation of any single brain region. This doubt is supported by functional imaging data from studies of simple economic choice: different types of comparison signal are observed in different brain areas, depending upon the task at hand32,46,52,75. Moreover, regions implicated in choice in lesion and fMRI studies are not specific to choice: they are associated with cognitive processes that include working memory, strategic planning, executive control, reasoning and social cognition119. Together, this evidence suggests that many brain regions will collectively contribute to the process of comparison, and also that brain regions subserving choice make their contribution as part of a larger supporting suite of cognitive functions. By way of analogy, reward-guided choice seems to operate less like motion- or face-processing and more like the ability to drive a car, form a political preference or do calculus: such abilities rely on coordinated computations across many brain structures and systems.

Ubiquitous value correlates

Another feature of connectionist networks is that their storage of memories depends on distributed synaptic weight changes across all parts of a network, rather than at a single site17. Value is closely related to memory: it is a feature of an option that is inferred from associations with reward, which are based on past experiences120. In connectionist systems, memories are widely distributed (a feature leading to graceful degradation, discussed above), and traces of those memories can be observed throughout the network17; one might then predict that the same could also be true of value.
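A delta-rule learner gives a minimal sketch of value stored in distributed weights rather than in any single unit. We chose a Rescorla–Wagner-style update as the learning rule for this illustration. Three hypothetical options are coded by overlapping sets of input units. After learning, each option's value can be read out only as a weighted sum, and every weight carries a trace of the rewards experienced. The option codes and reward values are illustrative assumptions.

```python
def learn_values(features, rewards, n_epochs=200, lr=0.1):
    """Delta-rule learning of one shared weight vector over distributed features."""
    n_units = len(next(iter(features.values())))
    w = [0.0] * n_units
    for _ in range(n_epochs):
        for name, f in features.items():
            prediction = sum(wk * fk for wk, fk in zip(w, f))
            error = rewards[name] - prediction  # reward prediction error
            for k in range(n_units):
                w[k] += lr * error * f[k]
    return w


def value_of(w, f):
    """Read out an option's value as a weighted sum over its active units."""
    return sum(wk * fk for wk, fk in zip(w, f))


# Hypothetical options coded by overlapping sets of 12 input units.
FEATURES = {
    "A": [1] * 6 + [0] * 6,
    "B": [0] * 3 + [1] * 6 + [0] * 3,
    "C": [0] * 6 + [1] * 6,
}
REWARDS = {"A": 1.0, "B": 0.0, "C": 0.5}

w = learn_values(FEATURES, REWARDS)
print({name: round(value_of(w, f), 3) for name, f in FEATURES.items()})
print([round(wk, 3) for wk in w])
```

Every weight in the learned vector is non-zero. Traces of value therefore appear throughout the input layer, even though no unit is dedicated to representing it.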

It is indeed the case that value correlates can be found in multiple brain regions. Correlates of value are seen in core reward regions such as the OFC49, the vmPFC121 and the VS122, and they are also observed in the amygdala, the insula, the dorsal striatum, the midbrain, the pregenual, subgenual, dorsal anterior and posterior cingulate cortices, the dorsolateral and ventrolateral PFC, the IPS, and even the sensory and motor cortices40,123–130. One recent neuroimaging study found that over 30% of the brain exhibited such signals131. Although there are certainly differences between the types of value-related information represented in these regions, there are also many overlaps.

Despite the ubiquity of value correlates, it often remains unclear whether activity in any area truly represents value, or what precise definition of value we should use28,132,133. One common criticism of neuroeconomic studies is that putative value correlates often reflect other correlated variables, such as attention, salience or even subthreshold premotor activation. There have certainly been some important efforts to disambiguate value correlates from alternative explanations28,133,134. Of course, it is possible that one of these value correlates genuinely represents value in the formal sense. However, in distributed systems, representation is often an emergent property; that is, it is driven by the specific pattern of connections between units and is not a property of any particular unit. In other words, value may be a network routing principle rather than a quantity that is represented. This eliminative view of value would be consistent with a subset of both classic studies and recent work in behavioural economics on process models of economic choice58,135,136.

One particular value signal is probably an emergent property. Recent work indicates that the ‘chosen value’ variable is a by-product of variation in decision dynamics across trials. To understand why, consider the model of competition via mutual inhibition discussed above11 (FIG. 1). Although the activities of many units in this network model correlate with chosen value, this quantity does not need to be represented to form a decision. Instead, the correlation may arise naturally as a consequence of the varying speed at which network dynamics unfold on different trials31 (BOX 3).
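A stripped-down version of competition via mutual inhibition makes the link between chosen value and decision speed concrete. This hypothetical two-accumulator sketch is far simpler than the spiking attractor network of ref 11. Each accumulator is driven by one option's value and inhibits the other, and the first to reach a threshold determines the choice. No variable in the code stores chosen value. Even so, with noise switched off (the default), the higher-valued option wins, and a higher chosen value produces a faster decision. Parameter values are illustrative.

```python
import random


def compete(v1, v2, w_inh=0.5, threshold=10.0, dt=0.01, noise_sd=0.0,
            max_steps=100_000, seed=0):
    """Two accumulators, each driven by one option's value and inhibited by the other.

    Returns (choice, decision_time); choice is 1, 2, or None if no bound is reached.
    """
    rng = random.Random(seed)
    x1 = x2 = 0.0
    for step in range(1, max_steps + 1):
        # Both increments use the previous state (a simultaneous Euler step).
        dx1 = (v1 - w_inh * x2) * dt + rng.gauss(0.0, noise_sd) * dt ** 0.5
        dx2 = (v2 - w_inh * x1) * dt + rng.gauss(0.0, noise_sd) * dt ** 0.5
        x1 = max(0.0, x1 + dx1)  # activity cannot go negative
        x2 = max(0.0, x2 + dx2)
        if x1 >= threshold or x2 >= threshold:
            return (1 if x1 >= x2 else 2), step * dt
    return None, max_steps * dt


choice_a, time_a = compete(2.0, 1.0)  # higher chosen value
choice_b, time_b = compete(1.5, 1.0)  # lower chosen value, same unchosen value
print(choice_a, round(time_a, 2), choice_b, round(time_b, 2))
```

Setting `noise_sd` above zero makes choices probabilistic, so that lower-valued options are sometimes chosen. Decision time still depends on how strongly the winning accumulator is driven.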

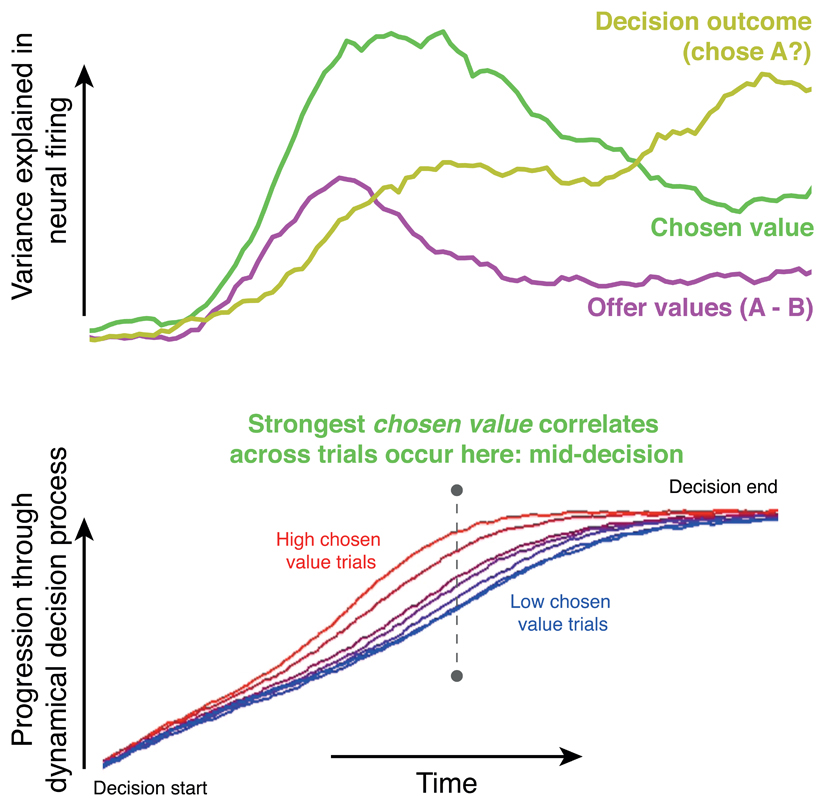

Box 3. Value representations as epiphenomena of decision dynamics.

One particularly ubiquitous signal during reward-guided decision tasks is a representation of chosen value34,35,39,49,129. This representation is isolated by correlating some measure of neural activity (firing rates, local field potentials (LFPs) or functional MRI signals) with the value of the option that will eventually be chosen on that trial. In measurements that are time-varying, this correlation can be repeated across many different timepoints, isolating the timepoint at which maximal variance is explained (see the figure, part a (green line)).

Why might this signal occur so commonly? Is it expressly represented by the brain, or is it an artefact? A clue comes from examining brain areas that also carry representations of other decision variables. These include the offer values of the options available, and the eventual categorical choice that the subject will make. Several studies32,39,129 show that the maximal variance explained by chosen value occurs between an initial representation of offer values and the final representation of choices (see the figure, part a). Chosen value representations emerge as the decision is being formed, rather than after the choice is completed.

Decision formation is, of course, a dynamical process occurring at different rates on different trials71. Crucially, chosen value influences decision speed. Indeed, at a fixed timepoint in the ‘middle’ of the decision process, the decision may have neared completion on some trials (typically those with high chosen value), whereas on others it may be a long way from completion. Activity in any part of the brain that reflects the progression of this dynamical process will thus correlate with the chosen value at the mid-decision point (see the figure, part b). Notably, these dynamics have several possible neural substrates, including ramp-to-threshold accumulation in single neurons2, neural population trajectories through a low-dimensional manifold137, or bulk activity observed at the level of LFP or magnetoencephalography (MEG) signals32. In each case, correlates of chosen value would naturally emerge as a consequence of varying dynamics across trials. This idea has, for example, been used to explain the origin of a commonly observed ‘unchosen minus chosen value’ signal in dorsomedial prefrontal cortex using functional MRI52. Additionally, a recent study estimated the speed at which dynamics unfolded on a trial-by-trial basis using LFP and MEG data32. The authors found that this single-trial estimate of decision dynamics explained some of the variance that was previously explained by chosen value, implying that the underlying cause of chosen value correlates was indeed linked to decision speed.
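The argument of this box can be reproduced in a few lines. The sketch below simulates hypothetical recordings that contain no chosen value variable at all. On each trial, a decision-progress signal ramps at a rate set by the chosen value until it reaches a bound, and measurement noise is added at every timepoint. All of this is an assumption for illustration. Activity is then correlated with chosen value separately at each timepoint, as in part a of the figure. The resulting correlation is weak early, peaks mid-decision and falls to around zero once every trial has reached the bound.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation; returns 0.0 if either vector has zero variance."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb) if va > 0 and vb > 0 else 0.0


def simulate_progress(n_trials=200, n_times=13, bound=10.0, meas_sd=1.0, seed=0):
    """Hypothetical recordings of decision progress: a ramp whose slope equals the
    chosen value, capped at a bound, plus measurement noise at every timepoint."""
    rng = random.Random(seed)
    chosen, activity = [], []
    for _ in range(n_trials):
        v = rng.uniform(1.0, 3.0)
        chosen.append(v)
        activity.append([min(bound, v * t) + rng.gauss(0.0, meas_sd)
                         for t in range(n_times)])
    return chosen, activity


chosen, activity = simulate_progress()
# Correlate activity with chosen value separately at each timepoint.
r_by_time = [pearson([trial[t] for trial in activity], chosen)
             for t in range(len(activity[0]))]
peak_time = max(range(len(r_by_time)), key=lambda t: r_by_time[t])
print([round(r, 2) for r in r_by_time], "peak at t =", peak_time)
```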

The above findings are in line with those in recent studies of motor control, which indicate that motor cortical activity is better understood as a dynamical system than as one that represents movement parameters137,138. This line of work suggests that chosen value correlates emerge as a necessary consequence of recurrent network dynamics in mediating competition139, and, again, that chosen value may not be represented per se. It remains possible that other ostensible value correlates (such as offer value and experienced value) are also by-products of the computations that underlie choice and are not reified in the activity of dedicated reward neurons or regions135.

Elusive pure value

A modular view of reward-based choice predicts that certain brain regions or populations of neurons should be specialised for value, meaning that they respond primarily to the values of options. Because value computation is a key intermediate stage in economic choice, the existence of specialized value regions or neurons is an important prediction of modular theories. By contrast, distributed theories do not demand any specialized value computation. Instead, in these theories, value is distributed broadly across a large number of regions, and value signals are predicted to appear in neurons that also have roles unrelated to valuation.

Some meta-analyses of neuroimaging data have argued that certain brain areas are central to ‘pure valuation’140. However, even in putative core reward regions such as the vmPFC, OFC and VS, a wealth of information processing occurs that is not related to value. For instance, the vmPFC is engaged by several ostensibly value-neutral factors, including autobiographical memory141, spatial navigation142, imagination143 and social cognition144; the OFC is engaged by non-reward processes such as conflict, working memory and rule encoding145,146. Likewise, factors that modulate individual neurons in these areas may include ‘valueless’ changes in outcome expectancy147,148, previous outcomes92, intention to switch and other strategy variables149, metacognition150, spatial positions of offers and choices45, rules and task set146, and even irrelevant task variables151. It is possible that these apparently value-neutral signals are observed because the tasks nevertheless demand the computation of value. However, it is becoming clearer that neurons in most, if not all, value-relevant regions encode a large number of task-relevant variables simultaneously, a property known as mixed selectivity152,153.
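A toy population can show why mixed selectivity is compatible with the absence of pure value neurons. In this sketch, each hypothetical neuron responds to a random mixture of value, offer position and rule, with weights and noise levels that are our assumptions. No single neuron therefore tracks value closely. A linear readout fitted across the population nonetheless recovers value almost perfectly. The least-squares fit uses a small hand-written Gaussian elimination so that the example needs only the standard library.

```python
import math
import random


def pearson(a, b):
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


rng = random.Random(0)
N_NEURONS, N_TRIALS = 20, 300
# Hypothetical neurons: each mixes value, offer position and rule with weights of
# magnitude 0.5-1.5 and random sign, plus private noise.
mix = [[rng.choice((-1, 1)) * rng.uniform(0.5, 1.5) for _ in range(3)]
       for _ in range(N_NEURONS)]
task = [(rng.gauss(0, 1), rng.choice((-1.0, 1.0)), rng.choice((-1.0, 1.0)))
        for _ in range(N_TRIALS)]
value = [t[0] for t in task]
rates = [[sum(wk * zk for wk, zk in zip(wn, t)) + rng.gauss(0, 0.5) for wn in mix]
         for t in task]

# How closely does each single neuron track value?
single_neuron_r = [abs(pearson([row[n] for row in rates], value)) for n in range(N_NEURONS)]

# Linear population readout: least squares with an intercept, via the normal equations.
design = [row + [1.0] for row in rates]
p = len(design[0])
xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
xty = [sum(d[i] * y for d, y in zip(design, value)) for i in range(p)]
beta = solve(xtx, xty)
decoded = [sum(b * d for b, d in zip(beta, row)) for row in design]
decoded_r = pearson(decoded, value)
print(f"best single neuron |r| = {max(single_neuron_r):.2f}; population readout r = {decoded_r:.2f}")
```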

Neuroanatomy

Finally, the strongest evidence for a distributed, hierarchical and recurrent approach to choice comes from neuroanatomy. Of course, the original work that provides the foundation for this approach developed side by side with progress in understanding the anatomy (and physiology) of the nervous system15. Perceptrons, feed-forward networks, parallel distributed networks, Hebbian learning and ConvNets were all inspired by observations about brain structure and physiology.

In the cortex, anatomical studies have indicated a reciprocal feedforward and feedback structure154. This architecture was quantified via detailed tracer studies in macaques155 and diffusion imaging studies in humans22. Connectivity differences between adjacent prefrontal regions are smaller than is often appreciated, and adjacent regions generally blur into each other gradually rather than showing categorical boundaries156. Likewise, when considering the organization of subcortical brain regions, it is now accepted that cortico-thalamo-basal ganglia loops are not segregated as once thought157 but instead show strong functional convergence86.

Local intraregional connections in the cortex also argue for a distributed and recurrent organization, particularly in the ‘higher’ cortical areas that subserve cognitive functions. The dendritic arbors of PFC pyramidal cells are endowed with many more dendritic spines than those of pyramidal cells in sensory areas, meaning that a single neuron in prefrontal areas 10, 11 or 12 receives 16 times the number of excitatory inputs of a neuron in the primary visual cortex (V1)70. The majority of these cortical connections are local rather than long-distance, allowing these circuits to have a highly recurrent organization that is similar to that observed in RNNs.

Discussion

In this article, we have presented an overview of recent work suggesting that reward-based decisions reflect the outcome of a distributed, hierarchical and recurrent computational process. These ideas have their genesis in connectionist and neural network models that have also been used to understand perception and memory, among other processes. More recently, these ideas have been integrated to form detailed models of economic or reward-based decisions8–14.

According to the distributed view, the implementation of economic choice differs from a description of how it works at a more abstract level: choice is an emergent consequence of the interactions of small computational elements158. By contrast, much recent research into the neurobiology of choice has adopted a modular framework, in which major components of choice map directly onto brain structures and discrete computations. This framework encourages scholars to look, for example, for specialized sites of evaluation, comparison and action selection. The change in viewpoint we propose leads us to reframe this debate and many other central questions (BOX 1).

The work we describe here raises a philosophical issue that has long influenced cognitive science: mental representation16. It has long been unclear whether we ‘represent’ mental concepts, that is, whether specific patterns of brain activity serve to recapitulate a mental version of some external event or object. Although neural correlates of important events and objects are observed, these correlations may be consequences of internal processes. Recent work provides two reasons to doubt that value, at least, is explicitly represented in the brain. First, with regard to chosen value, although this variable is decodable, it may be an artefact of the way neural data are analysed32. Second, with regard to value more broadly, the case is less clear, but connectionist models suggest that it is possible to construct networks that make good choices without explicit value representations; these networks at the very least have a similar flavour to neuroanatomy17.

Paul and Patricia Churchland have articulated the notion of eliminative materialism, which includes the suggestion that the natural categories we use to describe psychological phenomena do a poor job of capturing the organization of the brain processes that generate our mental lives24. Economic choice may be a case in point. Neither choice as a whole nor its stages of evaluation, comparison, selection and monitoring necessarily corresponds to discrete anatomical substrates, to discrete neuron types, or even to discrete computations. Instead, they may be emergent consequences of processing units performing simple operations on their inputs, operations that are radically different from those of the system as a whole24.

Acknowledgments

We are grateful to many colleagues with whom interactions have shaped ideas within this article, in particular T. Behrens, T. Blanchard, S. Kennerley, J. Pearson, S. Piantadosi, M. Platt, M. Rushworth and T. Seeley. We thank H. Barron, A. de Berker, R. Dolan, M.A. Noonan and T. Seow for comments on a previous draft of the manuscript. L.T.H. is supported by a Sir Henry Wellcome Fellowship from the Wellcome Trust (098830/Z/12/Z). B.Y.H. is supported by an NIH award (DA037229).

Biographies

Laurence Hunt completed his DPhil in Neuroscience at the University of Oxford in 2012, and was then awarded a Sir Henry Wellcome Postdoctoral Fellowship to move to University College London (UCL). He is currently a senior research associate in the Sobell Department of Motor Neuroscience and the Wellcome Trust Centre for Neuroimaging at UCL. His research draws together neurophysiological data in animal models with imaging data in humans to understand the neural basis of decision-making. In his spare time, he enjoys singing, both with the London Oriana Choir and occasionally on the terraces of Oxford United.

Ben Hayden is an associate professor of Brain and Cognitive Sciences at the University of Rochester. He got his Ph.D. at UC Berkeley with Jack Gallant in 2005 and did a post-doc with Michael Platt at Duke until 2011. His lab studies the neural bases of economic choice and executive control, with a focus on problems involving foraging, curiosity, and risk. His lab website is http://www.haydenlab.com.

Footnotes

Competing interests statement

The authors declare no competing interests.

References

- 1. Glimcher P, Fehr E. Neuroeconomics: decision-making and the brain. Academic Press; 2014.

- 2. Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- 3. Dolan RJ, Dayan P. Goals and habits in the brain. Neuron. 2013;80:312–325. doi: 10.1016/j.neuron.2013.09.007.

- 4. Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- 5. Kable JW, Glimcher PW. The neurobiology of decision: consensus and controversy. Neuron. 2009;63:733–745. doi: 10.1016/j.neuron.2009.09.003.

- 6. Rangel A, Hare T. Neural computations associated with goal-directed choice. Curr Opin Neurobiol. 2010;20:262–270. doi: 10.1016/j.conb.2010.03.001.

- 7. Padoa-Schioppa C. Neurobiology of economic choice: a good-based model. Annu Rev Neurosci. 2011;34:333–359. doi: 10.1146/annurev-neuro-061010-113648.

- 8. Frank MJ. An Introduction to Model-Based Cognitive Neuroscience. Springer; New York: 2015. pp. 159–177.

- 9. Wang XJ. Neural dynamics and circuit mechanisms of decision-making. Curr Opin Neurobiol. 2012;22:1039–1046. doi: 10.1016/j.conb.2012.08.006.

- 10. Pezzulo G, Cisek P. Navigating the affordance landscape: feedback control as a process model of behavior and cognition. Trends Cogn Sci. 2016;20:414–424. doi: 10.1016/j.tics.2016.03.013.

- 11. Wang XJ. Probabilistic decision making by slow reverberation in cortical circuits. Neuron. 2002;36:955–968. doi: 10.1016/s0896-6273(02)01092-9.

- 12. Rolls ET. Emotion and decision making explained. Oxford University Press; 2013.

- 13. Machens CK, Romo R, Brody CD. Flexible control of mutual inhibition: a neural model of two-interval discrimination. Science. 2005;307:1121–1124. doi: 10.1126/science.1104171.

- 14. Krajbich I, Armel C, Rangel A. Visual fixations and the computation and comparison of value in simple choice. Nat Neurosci. 2010;13:1292–1298. doi: 10.1038/nn.2635.

- 15. Medler DA. A brief history of connectionism. Neural Computing Surveys. 1998;1:18–72.

- 16. Gardner H. The mind's new science: a history of the cognitive revolution. Basic Books; 1985.

- 17. McClelland JL, Rumelhart DE. Explorations in parallel distributed processing: a handbook of models, programs, and exercises. MIT Press; 1989.

- 18. Rumelhart DE, Hinton GE, Williams RJ. Learning representations by back-propagating errors. Nature. 1986;323:533–536. doi: 10.1038/323533a0.

- 19. Hopfield JJ. Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci U S A. 1982;79:2554–2558. doi: 10.1073/pnas.79.8.2554.

- 20. Carpenter GA, Grossberg S. A massively parallel architecture for a self-organizing neural pattern recognition machine. Comput Vision Graph. 1987;37:54–115. doi: 10.1016/s0734-189x(87)80014-2.

- 21. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 22. Jbabdi S, Sotiropoulos SN, Haber SN, Van Essen DC, Behrens TE. Measuring macroscopic brain connections in vivo. Nat Neurosci. 2015;18:1546–1555. doi: 10.1038/nn.4134.

- 23. Fenno L, Yizhar O, Deisseroth K. The development and application of optogenetics. Annu Rev Neurosci. 2011;34:389–412. doi: 10.1146/annurev-neuro-061010-113817.

- 24. Churchland PM. Eliminative materialism and the propositional attitudes. J Philos. 1981;78:67. doi: 10.2307/2025900.

- 25. Seeley TD. Honeybee democracy. Princeton University Press; 2010.

- 26. London M, Häusser M. Dendritic computation. Annu Rev Neurosci. 2005;28:503–532. doi: 10.1146/annurev.neuro.28.061604.135703.

- 27. Couzin ID. Collective cognition in animal groups. Trends Cogn Sci. 2009;13:36–43. doi: 10.1016/j.tics.2008.10.002.

- 28. O'Doherty JP. The problem with value. Neurosci Biobehav Rev. 2014;43:259–268. doi: 10.1016/j.neubiorev.2014.03.027.

- 29. Grillner S. Neurobiological bases of rhythmic motor acts in vertebrates. Science. 1985;228:143–149. doi: 10.1126/science.3975635.

- 30. Kovac M, Davis W. Behavioral choice: neural mechanisms in Pleurobranchaea. Science. 1977;198:632–634. doi: 10.1126/science.918659.

- 31. Hunt LT, et al. Mechanisms underlying cortical activity during value-guided choice. Nat Neurosci. 2012;15:470–476. doi: 10.1038/nn.3017.

- 32. Hunt LT, Behrens TE, Hosokawa T, Wallis JD, Kennerley SW. Capturing the temporal evolution of choice across prefrontal cortex. eLife. 2015:e11945. doi: 10.7554/eLife.11945.

- 33. Jocham G, Hunt LT, Near J, Behrens TE. A mechanism for value-guided choice based on the excitation-inhibition balance in prefrontal cortex. Nat Neurosci. 2012;15:960–961. doi: 10.1038/nn.3140.

- 34. Strait CE, Blanchard TC, Hayden BY. Reward value comparison via mutual inhibition in ventromedial prefrontal cortex. Neuron. 2014;82:1357–1366. doi: 10.1016/j.neuron.2014.04.032.

- 35. Strait CE, Sleezer BJ, Hayden BY. Signatures of value comparison in ventral striatum neurons. PLoS Biol. 2015;13:e1002173. doi: 10.1371/journal.pbio.1002173.

- 36. Rustichini A, Padoa-Schioppa C. A neuro-computational model of economic decisions. J Neurophysiol. 2015. doi: 10.1152/jn.00184.2015.

- 37. Hunt LT, Dolan RJ, Behrens TE. Hierarchical competitions subserving multi-attribute choice. Nat Neurosci. 2014;17:1613–1622. doi: 10.1038/nn.3836.

- 38. Chau BK, Kolling N, Hunt LT, Walton ME, Rushworth MF. A neural mechanism underlying failure of optimal choice with multiple alternatives. Nat Neurosci. 2014;17:463–470. doi: 10.1038/nn.3649.

- 39. Louie K, Glimcher PW. Separating value from choice: delay discounting activity in the lateral intraparietal area. J Neurosci. 2010;30:5498–5507. doi: 10.1523/jneurosci.5742-09.2010.

- 40. Pastor-Bernier A, Cisek P. Neural correlates of biased competition in premotor cortex. J Neurosci. 2011;31:7083–7088. doi: 10.1523/JNEUROSCI.5681-10.2011.

- 41. Cisek P. Integrated neural processes for defining potential actions and deciding between them: a computational model. J Neurosci. 2006;26:9761–9770. doi: 10.1523/JNEUROSCI.5605-05.2006.

- 42. Soltani A. A biophysically based neural model of matching law behavior: melioration by stochastic synapses. J Neurosci. 2006;26:3731–3744. doi: 10.1523/jneurosci.5159-05.2006.

- 43. Rushworth MF, Kolling N, Sallet J, Mars RB. Valuation and decision-making in frontal cortex: one or many serial or parallel systems? Curr Opin Neurobiol. 2012;22:946–955. doi: 10.1016/j.conb.2012.04.011.

- 44. Cisek P. Making decisions through a distributed consensus. Curr Opin Neurobiol. 2012;22:927–936. doi: 10.1016/j.conb.2012.05.007.

- 45.Strait CE, et al. Neuronal selectivity for spatial positions of offers and choices in five reward regions. J Neurophysiol. 2016;115:1098–1111. doi: 10.1152/jn.00325.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Hunt LT, Woolrich MW, Rushworth MF, Behrens TE. Trial-type dependent frames of reference for value comparison. PLoS Comput Biol. 2013;9:e1003225. doi: 10.1371/journal.pcbi.1003225. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Passingham RE, Stephan KE, Kötter R. The anatomical basis of functional localization in the cortex. Nat Rev Neurosci. 2002;3:606–616. doi: 10.1038/nrn893. [DOI] [PubMed] [Google Scholar]

- 48.Neubert FX, Mars RB, Sallet J, Rushworth MF. Connectivity reveals relationship of brain areas for reward-guided learning and decision making in human and monkey frontal cortex. Proc Natl Acad Sci U S A. 2015;112:E2695–2704. doi: 10.1073/pnas.1410767112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Hosokawa T, Kennerley SW, Sloan J, Wallis JD. Single-neuron mechanisms underlying cost-benefit analysis in frontal cortex. J Neurosci. 2013;33:17385–17397. doi: 10.1523/jneurosci.2221-13.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Rolls ET, Grabenhorst F, Deco G. Choice, difficulty, and confidence in the brain. Neuroimage. 2010;53:694–706. doi: 10.1016/j.neuroimage.2010.06.073. [DOI] [PubMed] [Google Scholar]

- 52.Hare TA, Schultz W, Camerer CF, O'Doherty JP, Rangel A. Transformation of stimulus value signals into motor commands during simple choice. Proc Natl Acad Sci U S A. 2011;108:18120–18125. doi: 10.1073/pnas.1109322108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Siegel M, Buschman TJ, Miller EK. Cortical information flow during flexible sensorimotor decisions. Science. 2015;348:1352–1355. doi: 10.1126/science.aab0551. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Klein-Flugge MC, Bestmann S. Time-dependent changes in human corticospinal excitability reveal value-based competition for action during decision processing. J Neurosci. 2012;32:8373–8382. doi: 10.1523/JNEUROSCI.0270-12.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.O’Reilly JX, Woolrich MW, Behrens TEJ, Smith SM, Johansen-Berg H. Tools of the trade: psychophysiological interactions and functional connectivity. Soc Cogn Affect Neurosci. 2012;7:604–609. doi: 10.1093/scan/nss055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Niv Y, et al. Reinforcement learning in multidimensional environments relies on attention mechanisms. J Neurosci. 2015;35:8145–8157. doi: 10.1523/jneurosci.2978-14.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Freidin E, Kacelnik A. Rational choice, context dependence, and the value of information in European starlings (Sturnus vulgaris) Science. 2011;334:1000–1002. doi: 10.1126/science.1209626. [DOI] [PubMed] [Google Scholar]

- 58.Bettman James R, Luce Mary F, Payne John W. Constructive consumer choice processes. J Consum Res. 1998;25:187–217. doi: 10.1086/209535. [DOI] [Google Scholar]

- 59.Rich EL, Wallis JD. Decoding subjective decisions from orbitofrontal cortex. Nat Neurosci. 2016;19:973–980. doi: 10.1038/nn.4320. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.LeCun Y, et al. Backpropagation applied to handwritten ZIP code recognition. Neural Comput. 1989;1:541–551. doi: 10.1162/neco.1989.1.4.541. [DOI] [Google Scholar]

- 61.Krizhevsky A, Sutskever I, Hinton G. ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems. 2012;25:1090–1098. [Google Scholar]

- 62.Yamins DL, et al. Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proc Natl Acad Sci U S A. 2014;111:8619–8624. doi: 10.1073/pnas.1403112111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Kriegeskorte N. Deep neural networks: a new framework for modeling biological vision and brain information processing. Annu Rev Vision Sci. 2015;1:417–446. doi: 10.10.1146/annurev-vision-082114-035447. [DOI] [PubMed] [Google Scholar]

- 64.Graves A. Supervised sequence labelling with recurrent neural networks. Springer; 2012. [Google Scholar]

- 65.Graves A, Mohamed A-r, Hinton G. Speech recognition with deep recurrent neural networks. ArXiv e-prints. 2013;1303 < http://adsabs.harvard.edu/abs/2013arXiv1303.5778G>. [Google Scholar]

- 66.Weston J, Chopra S, Bordes A. Memory networks. ArXiv e-prints. 2014;1410 < http://adsabs.harvard.edu/abs/2014arXiv1410.3916W>. [Google Scholar]

- 67.Amari S-i. Dynamics of pattern formation in lateral-inhibition type neural fields. Biol Cybern. 1977;27:77–87. doi: 10.1007/bf00337259.

- 68.Compte A. Synaptic mechanisms and network dynamics underlying spatial working memory in a cortical network model. Cereb Cortex. 2000;10:910–923. doi: 10.1093/cercor/10.9.910.

- 69.Wang X-J. In: Principles of frontal lobe function. Stuss DT, Knight RT, editors. Vol. 15. Oxford University Press; 2013. pp. 226–248.

- 70.Elston GN. Pyramidal cells of the frontal lobe: all the more spinous to think with. J Neurosci. 2000;20:RC95. doi: 10.1523/JNEUROSCI.20-18-j0002.2000.

- 71.Busemeyer JR, Townsend JT. Decision field theory: a dynamic-cognitive approach to decision making in an uncertain environment. Psychol Rev. 1993;100:432–459. doi: 10.1037/0033-295x.100.3.432.

- 72.Bogacz R, Brown E, Moehlis J, Holmes P, Cohen JD. The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychol Rev. 2006;113:700–765. doi: 10.1037/0033-295X.113.4.700.

- 73.Passingham RE, Wise SP. The neurobiology of the prefrontal cortex: anatomy, evolution, and the origin of insight. 1st edn. Oxford University Press; 2012.

- 74.Stephens DW, Krebs JR. Foraging theory. Princeton University Press; 1986.

- 75.Kolling N, Behrens TE, Mars RB, Rushworth MF. Neural mechanisms of foraging. Science. 2012;336:95–98. doi: 10.1126/science.1216930.

- 76.Hayden BY, Pearson JM, Platt ML. Neuronal basis of sequential foraging decisions in a patchy environment. Nat Neurosci. 2011;14:933–939. doi: 10.1038/nn.2856.

- 77.Lim SL, O'Doherty JP, Rangel A. The decision value computations in the vmPFC and striatum use a relative value code that is guided by visual attention. J Neurosci. 2011;31:13214–13223. doi: 10.1523/JNEUROSCI.1246-11.2011.

- 78.Harvey CD, Coen P, Tank DW. Choice-specific sequences in parietal cortex during a virtual-navigation decision task. Nature. 2012;484:62–68. doi: 10.1038/nature10918.

- 79.Stokes MG. 'Activity-silent' working memory in prefrontal cortex: a dynamic coding framework. Trends Cogn Sci. 2015;19:394–405. doi: 10.1016/j.tics.2015.05.004.

- 80.Cavanagh SE, Wallis JD, Kennerley SW, Hunt LT. Autocorrelation structure at rest predicts value correlates of single neurons during reward-guided choice. eLife. 2016;5:e18937. doi: 10.7554/eLife.18937.

- 81.Sussillo D, Abbott LF. Generating coherent patterns of activity from chaotic neural networks. Neuron. 2009;63:544–557. doi: 10.1016/j.neuron.2009.07.018.

- 82.Hennequin G, Vogels TP, Gerstner W. Optimal control of transient dynamics in balanced networks supports generation of complex movements. Neuron. 2014;82:1394–1406. doi: 10.1016/j.neuron.2014.04.045.

- 83.Sussillo D, Barak O. Opening the black box: low-dimensional dynamics in high-dimensional recurrent neural networks. Neural Comput. 2013;25:626–649. doi: 10.1162/NECO_a_00409.

- 84.Mante V, Sussillo D, Shenoy KV, Newsome WT. Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature. 2013;503:78–84. doi: 10.1038/nature12742.

- 85.Song HF, Yang GR, Wang X-J. Reward-based training of recurrent neural networks for cognitive and value-based tasks. bioRxiv. 2016. doi: 10.7554/eLife.21492. http://biorxiv.org/content/early/2016/08/19/070375.

- 86.Haber SN, Behrens TE. The neural network underlying incentive-based learning: implications for interpreting circuit disruptions in psychiatric disorders. Neuron. 2014;83:1019–1039. doi: 10.1016/j.neuron.2014.08.031.

- 87.Shallice T, Burgess PW. Deficits in strategy application following frontal lobe damage in man. Brain. 1991;114(Pt 2):727–741. doi: 10.1093/brain/114.2.727.

- 88.Fuster JM. The prefrontal cortex--an update: time is of the essence. Neuron. 2001;30:319–333. doi: 10.1016/s0896-6273(01)00285-9.

- 89.Holroyd CB, Yeung N. Motivation of extended behaviors by anterior cingulate cortex. Trends Cogn Sci. 2012;16:122–128. doi: 10.1016/j.tics.2011.12.008.

- 90.Pascanu R, Gulcehre C, Cho K, Bengio Y. How to construct deep recurrent neural networks. ArXiv e-prints. 2013; arXiv:1312.6026. http://adsabs.harvard.edu/abs/2013arXiv1312.6026P.

- 91.Karpathy A, Fei-Fei L. Deep visual-semantic alignments for generating image descriptions. Proceedings - IEEE Computer Society Conference on Computer Vision and Pattern Recognition. 2015. doi: 10.1109/TPAMI.2016.2598339. https://arxiv.org/abs/1412.2306.

- 92.Bernacchia A, Seo H, Lee D, Wang X-J. A reservoir of time constants for memory traces in cortical neurons. Nat Neurosci. 2011;14:366–372. doi: 10.1038/nn.2752.

- 93.Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS. Learning the value of information in an uncertain world. Nat Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

- 94.Chaudhuri R, Knoblauch K, Gariel M-A, Kennedy H, Wang X-J. A large-scale circuit mechanism for hierarchical dynamical processing in the primate cortex. Neuron. 2015;88:419–431. doi: 10.1016/j.neuron.2015.09.008.

- 95.Murray JD, et al. A hierarchy of intrinsic timescales across primate cortex. Nat Neurosci. 2014;17:1661–1663. doi: 10.1038/nn.3862.

- 96.Purcell BA, Kiani R. Hierarchical decision processes that operate over distinct timescales underlie choice and changes in strategy. Proc Natl Acad Sci U S A. 2016;113:E4531–E4540. doi: 10.1073/pnas.1524685113.

- 97.Boorman ED, Rushworth MF, Behrens TE. Ventromedial prefrontal and anterior cingulate cortex adopt choice and default reference frames during sequential multi-alternative choice. J Neurosci. 2013;33:2242–2253. doi: 10.1523/JNEUROSCI.3022-12.2013.

- 98.Wittmann MK, et al. Predictive decision making driven by multiple time-linked reward representations in the anterior cingulate cortex. Nat Commun. 2016;7:12327. doi: 10.1038/ncomms12327.

- 99.Daw ND, O'Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- 100.Karlsson MP, Tervo DGR, Karpova AY. Network resets in medial prefrontal cortex mark the onset of behavioral uncertainty. Science. 2012;338:135–139. doi: 10.1126/science.1226518.

- 101.Blanchard TC, Strait CE, Hayden BY. Ramping ensemble activity in dorsal anterior cingulate neurons during persistent commitment to a decision. J Neurophysiol. 2015;114:2439–2449. doi: 10.1152/jn.00711.2015.

- 102.Desrochers TM, Chatham CH, Badre D. The necessity of rostrolateral prefrontal cortex for higher-level sequential behavior. Neuron. 2015;87:1357–1368. doi: 10.1016/j.neuron.2015.08.026.

- 103.Badre D, D'Esposito M. Is the rostro-caudal axis of the frontal lobe hierarchical? Nat Rev Neurosci. 2009;10:659–669. doi: 10.1038/nrn2667.

- 104.Kouneiher F, Charron S, Koechlin E. Motivation and cognitive control in the human prefrontal cortex. Nat Neurosci. 2009;12:939–945. doi: 10.1038/nn.2321.

- 105.O'Reilly RC. The what and how of prefrontal cortical organization. Trends Neurosci. 2010;33:355–361. doi: 10.1016/j.tins.2010.05.002.

- 106.Zarr N, Brown JW. Hierarchical error representation in medial prefrontal cortex. Neuroimage. 2016;124:238–247. doi: 10.1016/j.neuroimage.2015.08.063.

- 107.Nee DE, Brown JW. Rostral–caudal gradients of abstraction revealed by multi-variate pattern analysis of working memory. Neuroimage. 2012;63:1285–1294. doi: 10.1016/j.neuroimage.2012.08.034.

- 108.Nee DE, D'Esposito M. The hierarchical organization of the lateral prefrontal cortex. eLife. 2016;5:e12112. doi: 10.7554/eLife.12112.

- 109.Badre D, Kayser AS, D'Esposito M. Frontal cortex and the discovery of abstract action rules. Neuron. 2010;66:315–326. doi: 10.1016/j.neuron.2010.03.025.

- 110.Frank MJ, Badre D. Mechanisms of hierarchical reinforcement learning in corticostriatal circuits 1: computational analysis. Cereb Cortex. 2012;22:509–526. doi: 10.1093/cercor/bhr114.

- 111.Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9:1735–1780. doi: 10.1162/neco.1997.9.8.1735.

- 112.O'Reilly RC, Frank MJ. Making working memory work: a computational model of learning in the prefrontal cortex and basal ganglia. Neural Comput. 2006;18:283–328. doi: 10.1162/089976606775093909.

- 113.Dursteler MR, Wurtz RH, Newsome WT. Directional pursuit deficits following lesions of the foveal representation within the superior temporal sulcus of the macaque monkey. J Neurophysiol. 1987;57:1262–1287. doi: 10.1152/jn.1987.57.5.1262.

- 114.Barton JJ, Press DZ, Keenan JP, O'Connor M. Lesions of the fusiform face area impair perception of facial configuration in prosopagnosia. Neurology. 2002;58:71–78. doi: 10.1212/wnl.58.1.71.

- 115.Noonan MP, et al. Separate value comparison and learning mechanisms in macaque medial and lateral orbitofrontal cortex. Proc Natl Acad Sci U S A. 2010;107:20547–20552. doi: 10.1073/pnas.1012246107.

- 116.Walton ME, Behrens TE, Buckley MJ, Rudebeck PH, Rushworth MF. Separable learning systems in the macaque brain and the role of orbitofrontal cortex in contingent learning. Neuron. 2010;65:927–939. doi: 10.1016/j.neuron.2010.02.027.

- 117.Vaidya AR, Fellows LK. Testing necessary regional frontal contributions to value assessment and fixation-based updating. Nat Commun. 2015;6:10120. doi: 10.1038/ncomms10120.

- 118.Eslinger PJ, Damasio AR. Severe disturbance of higher cognition after bilateral frontal lobe ablation: patient EVR. Neurology. 1985;35:1731–1741. doi: 10.1212/wnl.35.12.1731.

- 119.Szczepanski SM, Knight RT. Insights into human behavior from lesions to the prefrontal cortex. Neuron. 2014;83:1002–1018. doi: 10.1016/j.neuron.2014.08.011.

- 120.Shadlen MN, Shohamy D. Decision making and sequential sampling from memory. Neuron. 2016;90:927–939. doi: 10.1016/j.neuron.2016.04.036.

- 121.Plassmann H, O'Doherty J, Rangel A. Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. J Neurosci. 2007;27:9984–9988. doi: 10.1523/JNEUROSCI.2131-07.2007.

- 122.Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J Neurosci. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

- 123.McCoy AN, Platt ML. Risk-sensitive neurons in macaque posterior cingulate cortex. Nat Neurosci. 2005;8:1220–1227. doi: 10.1038/nn1523.

- 124.Kim S, Hwang J, Lee D. Prefrontal coding of temporally discounted values during intertemporal choice. Neuron. 2008;59:161–172. doi: 10.1016/j.neuron.2008.05.010.

- 125.Louie K, Grattan LE, Glimcher PW. Reward value-based gain control: divisive normalization in parietal cortex. J Neurosci. 2011;31:10627–10639. doi: 10.1523/JNEUROSCI.1237-11.2011.

- 126.Shuler MG. Reward timing in the primary visual cortex. Science. 2006;311:1606–1609. doi: 10.1126/science.1123513.

- 127.Philiastides MG, Biele G, Heekeren HR. A mechanistic account of value computation in the human brain. Proc Natl Acad Sci U S A. 2010;107:9430–9435. doi: 10.1073/pnas.1001732107.

- 128.Peck CJ, Lau B, Salzman CD. The primate amygdala combines information about space and value. Nat Neurosci. 2013;16:340–348. doi: 10.1038/nn.3328.

- 129.Cai X, Kim S, Lee D. Heterogeneous coding of temporally discounted values in the dorsal and ventral striatum during intertemporal choice. Neuron. 2011;69:170–182. doi: 10.1016/j.neuron.2010.11.041.

- 130.Heilbronner SR, Hayden BY. Dorsal anterior cingulate cortex: a bottom-up view. Annu Rev Neurosci. 2016;39:149–170. doi: 10.1146/annurev-neuro-070815-013952.

- 131.Vickery TJ, Chun MM, Lee D. Ubiquity and specificity of reinforcement signals throughout the human brain. Neuron. 2011;72:166–177. doi: 10.1016/j.neuron.2011.08.011.