Abstract

Robots are increasingly envisaged as our future cohabitants. However, while considerable progress has been made in recent years in terms of their technological realization, the ability of robots to interact with humans in an intuitive and social way is still quite limited. An important challenge for social robotics is to determine how to design robots that can perceive the user’s needs, feelings, and intentions, and adapt to users over a broad range of cognitive abilities. It is conceivable that if robots were able to adequately demonstrate these skills, humans would eventually accept them as social companions. We argue that the best way to achieve this is using a systematic experimental approach based on behavioral and physiological neuroscience methods such as motion/eye-tracking, electroencephalography, or functional near-infrared spectroscopy embedded in interactive human–robot paradigms. This approach requires understanding how humans interact with each other, how they perform tasks together and how they develop feelings of social connection over time, and using these insights to formulate design principles that make social robots attuned to the workings of the human brain. In this review, we put forward the argument that the likelihood of artificial agents being perceived as social companions can be increased by designing them in a way that they are perceived as intentional agents that activate areas in the human brain involved in social-cognitive processing. We first review literature related to social-cognitive processes and mechanisms involved in human–human interactions, and highlight the importance of perceiving others as intentional agents to activate these social brain areas. We then discuss how attribution of intentionality can positively affect human–robot interaction by (a) fostering feelings of social connection, empathy and prosociality, and by (b) enhancing performance on joint human–robot tasks. 
Lastly, we describe circumstances under which attribution of intentionality to robot agents might be disadvantageous, and discuss challenges associated with designing social robots that are inspired by neuroscientific principles.

Keywords: attribution of intentionality, mind perception, social robotics, human–robot interaction, social neuroscience

Introduction

Robots are becoming a vision for societies of the near future, partially due to a growing need for assistance beyond what is currently possible with a human workforce (Ward et al., 2011). Robots can assist humans in a wide spectrum of domains (Tapus and Matarić, 2006; Cabibihan et al., 2013) that are not necessarily limited to the three d’s (dirty, dangerous, dull) of robotics, where robots are envisaged to assist humans during tasks that are hazardous, repetitive, or prone to errors (Takayama et al., 2008). On the contrary, there is a plethora of other domains where robots can (and perhaps should be) deployed, including entertainment, teaching, and health care: Pet robots like Paro (Shibata et al., 2001), or AIBO (developed by Sony1, see also Fujita and Kitano, 1998) or the huggable pillow-phone robot, Hugvie (Yamazaki et al., 2016) are used for elderly patients to reduce loneliness, increase social communicativeness, or improve cognitive performance (Tapus et al., 2007; Birks et al., 2016), and have positive effects on mood, emotional expressiveness and social bonding among dementia patients (Martin et al., 2013; Birks et al., 2016). In addition to their applicability for elderly patients (Wada et al., 2005; Wada and Shibata, 2006), social robots (a) are used in therapeutical interventions for children with autism spectrum disorder to help practice social skills, such as joint attention, turn-taking or emotion understanding (Dautenhahn, 2003; Robins et al., 2005; Ricks and Colton, 2010; Scassellati et al., 2012; Tapus et al., 2012; Cabibihan et al., 2013; Anzalone et al., 2014; Bekele et al., 2014; Kajopoulos et al., 2015; Warren et al., 2015), and (b) improve outcomes for patients with sensorimotor impairments during rehabilitation (Hogan and Krebs, 2004; Prange et al., 2006; Basteris et al., 2014). 
Outside the clinical context, social robots foster collaboration in the workplace (Hinds et al., 2004), improve learning (Mubin et al., 2013), and problem solving (Chang et al., 2010; Castledine and Chalmers, 2011; Kory and Breazeal, 2014), and deepen students’ understanding of mathematics and science in the classroom (Fernandes et al., 2006; Church et al., 2010). They also facilitate activities in daily lives, either as friendly companions at home (Kidd and Breazeal, 2008; Graf et al., 2009), or as assistants in supermarkets and airports (Triebel et al., 2016).

Despite these many positive examples in which robots support and assist their human counterparts in everyday life, general attitudes toward robots are not always positive (Flandorfer, 2012). In fact, the general public can be quite skeptical with respect to the introduction of robot assistants in everyday life (Bartneck and Reichenbach, 2005), especially when aspects like signing over decision-making or control to the robot are at stake (Scopelliti et al., 2005). Pop culture, myths and novels in western cultures also often depict robots or artificial agents as a threat to humanity (Kaplan, 2004). As a result, users might be worried about violations of their privacy or about becoming dependent on robot technology (Cortellessa et al., 2008), and elderly individuals in particular might be concerned about integrating robots into their home environment (Scopelliti et al., 2005). Concerns have also been raised regarding the potential of robots to contribute to social isolation and deprivation of human contact (Sharkey, 2008), and assistive robots are at risk of becoming stigmatized by the media as tools for lonely, old and dependent users. In line with this concern, elderly individuals are reluctant to accept robots as social companions for themselves, although they acknowledge their potential benefits for other user groups (Neven, 2010).

Overall, these studies reveal that humans can be willing to accept social robots in some contexts but might be reluctant to do so in others. Consequently, research in social robotics needs to determine not only how to design robots that optimally support stakeholders with different cognitive and technical abilities, but also which features robots need to have in order to be accepted as social companions that understand our needs, feelings and intentions, and can share valuable experiences with humans. One problem with the current state of social robotics research is that it often lacks systematicity, and as a result, the particular features that facilitate treating robots as social companions are not sufficiently specified. We suggest addressing this issue by using behavioral and physiological neuroscience methods (e.g., eye-tracking, EEG, fNIRS, fMRI) in robotics research with the goal of objectively measuring how humans react to robot agents, how they perform tasks with robots and how they develop mutual understanding and social engagement over time. In this context, we note that each method has advantages and disadvantages, and is suitable for specific questions but not others (for examples, see Table 1 and Figure 1). Insights gained from applying these methods can then be used to formulate design principles for social robots that are attuned to the workings of the human brain. In particular, we argue that if robots are to be treated as social companions, they should evoke mechanisms of social cognition in the brain that are typically activated when humans interact with other humans, such as joint attention (Moore and Dunham, 1995; Baron-Cohen, 1997), spatial perspective-taking (Tversky and Hard, 2009; Zwickel, 2009; Samson et al., 2010), action understanding (Gallese et al., 1996; Rizzolatti and Craighero, 2004; Brass et al., 2007), turn-taking (Knapp et al., 2013), and mentalizing (Baron-Cohen, 1997; Frith and Frith, 2006a).

Table 1.

Advantages and disadvantages of measures used to investigate human–robot interaction, together with example questions that can be best addressed with the respective measure; ERP, event related potential; PSP, postsynaptic potential; fMRI, functional magnetic resonance imaging; fNIRS, functional near infrared spectroscopy; TDS, transcranial doppler sonography; BF, blood flow.

| Method | Advantages | Disadvantages | Questions (examples) |
|---|---|---|---|
| Subjective measures: Likert scales; implicit association; interviews | Explicit processes; inexpensive; easy-to-implement | Subjective measures; social acceptability bias; disrupts natural interaction; no implicit processes; no performance measure | Traits; attitudes; acceptance; judgments; likability; classification/stereotyping |
| Performance measures: reaction times; error rates | Objective measures; implicit/explicit processes; inexpensive; easy-to-implement | Disrupts natural interaction; needs specified goals; indirect neural measure | Effectiveness/efficiency; competition; distraction; cognitive load; social attention; joint action; search and rescue |
| Behavioral measures: eye tracking; motion tracking | Objective measures; implicit processes; relatively inexpensive; exploratory research; non-disruptive | Some discomfort; feeling of unnaturalness; indirect neural measure; not suitable for everyone | Free exploration (mobile); natural interaction (mobile); social attention (mobile); social dynamics; preferences; stress; cognitive load; movement kinematics |
| Physiological measures: heart rate; skin conductance; respiratory rate | Objective measures; implicit processes; relatively inexpensive; non-disruptive | Not specific in terms of cognitive processes; indirect neural measure; low temporal resolution | Stress; alertness; engagement; cognitive load |
| Electroencephalography: ERPs; (time-) frequency | Objective measures; implicit processes; relatively inexpensive; non-disruptive; direct neural measure (PSP); high temporal resolution; source localization possible | Some discomfort; feeling of unnaturalness; timely to set-up; bound to laboratory setting; low spatial resolution; movement/other artifacts | Engagement; social reward; task monitoring; error processing; entrainment; conflict processing; social attention; joint action; violation of expectation |
| Neuroimaging: fMRI; fNIRS; TDS | Objective measures; implicit processes; non-disruptive; direct neural measure (BF); high spatial resolution; source localization possible | Some discomfort; feeling of unnaturalness; expensive; low temporal resolution; movement/other artifacts | Social reward; social attention; bonding; empathy; imitation; anthropomorphism; mind perception |

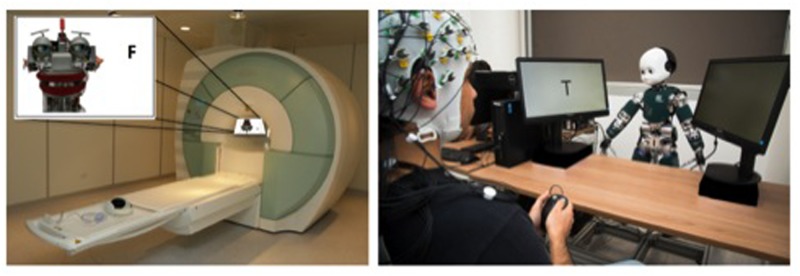

FIGURE 1.

Investigation of human–robot interaction with the use of neuroscientific methods. The image on the left illustrates the setup of an fMRI experiment measuring changes in blood flow in social brain areas during a joint attention task with the robot EDDIE (designed by Technical University of Munich). Participants are asked to respond as fast and accurately as possible to the identity of a target letter (“F” vs. “T”) that is either looked at or not looked at by EDDIE. Changes in activation in social brain areas can be captured with a high spatial resolution, but no natural interactivity with the robot can be achieved (i.e., interaction needs to be imagined: offline social cognition). The image on the right shows a setup where neural processes associated with joint attention are examined using EEG and eye-tracking during interactions with the robot iCub (designed at the Istituto Italiano di Tecnologia by Metta et al., 2008). Similar to the previous example, participants are asked to react to the identity of a target letter (“T” vs. “V”) that is either looked at or not looked at by iCub. Mechanisms of joint attention can be captured with high temporal resolution during relatively natural interactions (i.e., online social cognition). Written informed consent has been obtained for publication of the identifiable image on the right.
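To illustrate how a performance measure from joint attention tasks like those in Figure 1 might be quantified, the following minimal Python sketch computes a gaze-cueing effect as the difference in mean reaction time between targets that were not looked at and targets that were looked at by the robot. The trial format, the field names (`cued`, `rt_ms`, `correct`), the function name and the example values are hypothetical and are not taken from the studies described here; restricting the analysis to correct responses is likewise an assumed convention.

```python
from statistics import mean


def gaze_cueing_effect(trials):
    """Return mean RT on uncued trials minus mean RT on cued trials (ms).

    `trials` is a list of dicts with the hypothetical keys
    'cued' (bool: did the robot look at the target?),
    'rt_ms' (float: reaction time) and 'correct' (bool).
    Only correct responses enter the analysis (an assumed convention).
    """
    cued = [t["rt_ms"] for t in trials if t["correct"] and t["cued"]]
    uncued = [t["rt_ms"] for t in trials if t["correct"] and not t["cued"]]
    if not cued or not uncued:
        raise ValueError("need at least one correct trial per condition")
    return mean(uncued) - mean(cued)


# Made-up example data for a single participant.
example_trials = [
    {"cued": True, "rt_ms": 410.0, "correct": True},
    {"cued": True, "rt_ms": 430.0, "correct": True},
    {"cued": False, "rt_ms": 455.0, "correct": True},
    {"cued": False, "rt_ms": 465.0, "correct": True},
    {"cued": False, "rt_ms": 900.0, "correct": False},  # error trial, excluded
]

effect_ms = gaze_cueing_effect(example_trials)
print(f"Gaze-cueing effect: {effect_ms:.1f} ms")
```

A positive value would indicate faster responses to targets the robot looked at, which is the pattern usually interpreted as attention following the robot's gaze.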

But how can we make robots and other automated agents appear social? Research suggests that the two most important aspects for artificial agents to appear social are human-like appearance and behavior (Tapus and Matarić, 2006; Waytz et al., 2010b; Wykowska et al., 2016), with behavior probably being even more critical than appearance (a speculation that needs to be tested empirically). The effectiveness of behavior in inducing perceptions of humanness can be seen in Sci-Fi movies, where agents with not very human-like appearance like C-3PO, Wall-E, or Baymax can be perceived as social entities that evoke sympathy or affinity because their behavior is so human-like. On the other hand, human-looking agents like Data (‘Star Trek’) or Terminator (‘Terminator’) can evoke a sense of oddness or discomfort when they show mechanistic behavior. We suggest that research should build upon these observations and investigate (a) which physical and behavioral agent features are associated with humanness and are therefore able to make artificial entities appear social, and (b) how perceiving artificial agents as social entities affects attitudes, acceptance and performance in human–robot interaction. In order to accomplish that, it is useful to first understand the neural and cognitive underpinnings of social cognition in human–human interaction and then examine whether similar mechanisms can be activated in human–robot interaction. The ultimate goal is to create robots that are human-like enough to evoke mechanisms of social cognition in human interaction partners, and to achieve this with the use of neuroscientific and psychological methods.

This review focuses on humanoid robots (as opposed to robots with other non-humanoid shapes) for the following reasons. First, the goal of this review is to understand social interactions between humans and robots that live in shared environments. These environments are typically designed to match human movement and cognitive capabilities (in terms of physical space, ergonomics, or interfaces). Robots that are supposed to act as social interaction partners in the future need to fit in these human-attuned environments by emulating human form and cognition. For example, a legged service robot at a restaurant would be able to step over obstacles with which a wheeled robot might have trouble. Similarly, a robot of human-like width and height would be able to move around in human environments better than a larger robot. Human shape also allows the robot to communicate internal states like emotions (e.g., via facial expression or body posture) or intentions (e.g., via social cues like gestures or changes in gaze direction) in a natural way. Second, robots with a humanoid appearance have the potential to provide a number of desired functions within a single platform (e.g., service, learning, companionship), which allows for a more general and flexible use than more specialized platforms without human features. For example, in a home environment, a humanoid robot can manipulate kitchen utensils and appliances to cook (oven, fridge, dishwasher, etc.). The same robot can press buttons and control light settings, switch the television on and off, serve food, and utilize all tools that are already available at home, simplifying the humanoid robots’ deployment and increasing their usefulness, without substantially modifying the human environment. 
Lastly, the goal of this review is to advocate for the integration of behavioral and physiological neuroscience methods in the design and evaluation of social robots able to engage in social interactions, which requires robot platforms that are human-like enough to activate mechanisms in the human brain in a fashion similar to human interaction partners. Since many social-cognitive brain mechanisms are sensitive to human appearance and behavior (see “Observing Intentional Agents Activates Social Brain Areas”), humanoid robot designs are the most promising, but not necessarily the only avenue to accomplish this goal (for research on animal-like and fictional robot designs, see for instance, Shibata et al., 2001 or Kozima and Nakagawa, 2007). However, we acknowledge that for more specific and focused applications, other robot designs can be more suitable (Fujita and Kitano, 1998; Johnson and Demiris, 2005).

In this review, we argue that one of the main factors that contributes to robots being treated as social entities is their ability to be perceived as intentional2 beings with a mind (see “Can Robots be Perceived as Intentional Agents?”), so that they activate brain areas involved in social-cognitive processes in a similar way as human interaction partners do (see “Observing Intentional Agents Activates Social Brain Areas”). Since intentionality is a feature that can be ascribed to non-human agents or withdrawn from human agents (Gray et al., 2007), it is important to understand the principles underlying the attribution of intentionality to others, and to examine its effects on attitudes, acceptance and performance in human–human and human–robot interaction (see “Effects of Mind Perception on Attitudes and Performance in HRI”). The ultimate goal is to design social robots that trigger attributions of intentionality with a high likelihood and activate social-cognitive areas in the human brain (see “Designing Robots as Intentional Agents,” for examples of robot designs that are in line with neuroscientific models of the social brain).

Can Robots Be Perceived As Intentional Agents?

In human–human interactions, we activate brain areas responsible for social-cognitive processing and make inferences about what others think, feel and intend based on observing their behavior (Frith and Frith, 2006a,b). However, before we usually make inferences about intentions or emotions, we need to perceive others as intentional beings, with the general ability of having internal states (i.e., mind perception; Gray et al., 2007). Attributing internal states in social interactions is the default mode for human agents, but this might not automatically happen during interactions with artificial agents like Siri, Waymo, or Jibo3 due to their ambiguous mind status. As a result, human brain areas specialized in processing inputs of intentional agents might not be sufficiently activated when interacting with robot agents, which can potentially have negative consequences on attitudes and performance in human–robot interactions. We suggest that this issue should be addressed in social robotics by incorporating neuroscientific methods in the engineering design cycle, with the goal of designing robots that activate social brain areas in a similar manner as human interaction partners do. Robots that are attuned to the human cognitive system have the potential to make human–robot interaction more intuitive, and can positively affect acceptance and performance within human–robot teams.

Luckily for human–robot interaction, perceiving mind is not exclusive to agents that actually have a mind, but can also be triggered by agents who are not believed to have a mind (i.e., robots, avatars, self-driving cars) or agents with ambiguous mind status (i.e., animals; Gray et al., 2007). Mind is in the eye of the beholder, which means that it can be ascribed to others or denied, based on cognitive or motivational features associated with the perceiver, as well as physical and behavioral features of the perceived agent (Waytz et al., 2010b). For instance, being in need of social connection or lacking system-specific knowledge has been shown to increase the likelihood that human characteristics like ‘having a mind’ are ascribed to non-human agents (i.e., anthropomorphism; Rosset, 2008; Hackel et al., 2014), while feeling socially rejected or witnessing harmful acts being done to others by human beings decreases the extent to which mind is perceived in them (i.e., dehumanization; Epley et al., 2007; Bastian and Haslam, 2010; Waytz et al., 2010a). The human tendency to anthropomorphize others is so strong that some of us readily perceive craters on the moon as the ‘man in the moon,’ burnt areas on a toast as ‘Jesus,’ or the front of a car as ‘having a face,’ and are not surprised when Tom Hanks becomes friends with the volleyball Wilson (‘Cast Away’), or when Joaquin Phoenix falls in love with his virtual agent Samantha (‘Her’).

In line with these observations, psychological research has shown that anthropomorphism, and specifically mind perception, are highly automatic processes that activate social areas in the human brain in a bottom–up fashion (Gao et al., 2010; Looser and Wheatley, 2010; Wheatley et al., 2011; Schein and Gray, 2015), triggered by human-like facial features (Maurer et al., 2002; Looser and Wheatley, 2010; Balas and Tonsager, 2014; Schein and Gray, 2015; Deska et al., 2016), or biological motion (Castelli et al., 2000). Due to the automatic nature of mind perception, intentional agents can be differentiated from non-intentional agents within a few hundred milliseconds (Wheatley et al., 2011; Looser et al., 2013), and even just passively viewing stimuli that trigger mind perception is sufficient to induce activation in a wide range of brain regions implicated in social cognition (Wagner et al., 2011).

Using the anthropomorphic model when making inferences about the behavior of non-human entities makes sense given that we are experts in what it means to be human, but have no phenomenological knowledge about what it means to be non-human (Nagel, 1974; Gould, 1998). Thus, when we interact with entities for which we lack specific knowledge, we commonly choose the ‘human’ model to predict their behavior, such as blaming God for events that we cannot explain or thinking that computers want to sabotage us when they simply start to malfunction (Rosset, 2008). Once the human model is activated, we can use it to infer particular intentions behind observed actions (i.e., mentalizing) or to reason about emotional states underlying facial expressions or changes in body language (i.e., empathizing). We do this by imagining what we would intend or feel if we were in a comparable situation (Buccino et al., 2001; Umilta et al., 2001; Rizzolatti and Craighero, 2004), which gives us immediate phenomenological access to the internal states of others. Despite the advantage of being able to reason about their internal states, automatically activating the anthropomorphic model when interacting with robots could also have negative consequences when it leads to incorrect predictions because the behavioral repertoire of the robot does not perfectly overlap with human behavior (Bisio et al., 2014), or when it induces a cognitive conflict because certain robot features trigger mind perception (e.g., appearance), while others hinder mind perception (e.g., motion; Chaminade et al., 2007; Saygin et al., 2012; see “Negative Effects of Mind Perception in Social Interactions”). For a detailed discussion of costs and benefits associated with anthropomorphism in human–robot interaction, see also Złotowski et al. (2015).

In sum, these studies suggest that non-human agents have the potential to trigger mind perception, as long as they display observable signs of intentionality, such as human-like appearance and/or behavior. In this review, we argue that mind perception has the potential to positively affect human–robot interaction by (a) activating the social brain network involved in action understanding and mentalizing, (b) enhancing feelings of social connection, empathy and prosociality, and (c) fostering performance during joint action tasks. However, we also discuss circumstances in which mind perception might be disadvantageous for human–robot interaction, and suggest robot design features that allow humans to flexibly activate and deactivate the ‘human’ model when interacting with robot agents.

Observing Intentional Agents Activates Social Brain Areas

In order to successfully interact with others, we need to understand and predict their behavior (see “Performing Actions Together: Action Understanding and Joint Action”), and be able to make inferences about their intentions and emotions (see “Making Inferences about Internal States: Mentalizing and Empathizing”). The human brain is highly specialized in understanding the behaviors and internal states of others, and contains areas that are specifically activated when we interact with other social entities (i.e., social brain; Adolphs, 2009). Understanding actions is subserved by frontoparietal networks of the action-perception system (APS), while reasoning about internal states activates the temporo-parietal junction (TPJ), as well as prefrontal areas like the medial prefrontal cortex (mPFC), and anterior cingulate cortex (ACC; Brothers, 2002; Saxe and Kanwisher, 2003; Amodio and Frith, 2006; Adolphs, 2009; van Overwalle, 2009; Saygin et al., 2012; see Figure 2). Activation within the social brain network is predictive of how much we like others, how strongly we empathize with them, and how well we understand their actions (Ames et al., 2008; Cikara et al., 2011; Gutsell and Inzlicht, 2012), and can therefore be used as a proxy to estimate the degree of socialness that is ascribed to others. Although non-human agents can generally activate the social brain network, the strength of activation depends on the degree to which they are perceived as human-like entities with a mind (Blakemore and Decety, 2001; Gallese et al., 2004; Chaminade et al., 2007). The following sections describe the social brain network in more detail and discuss whether social robots can activate these brain areas, and if so, under which conditions.

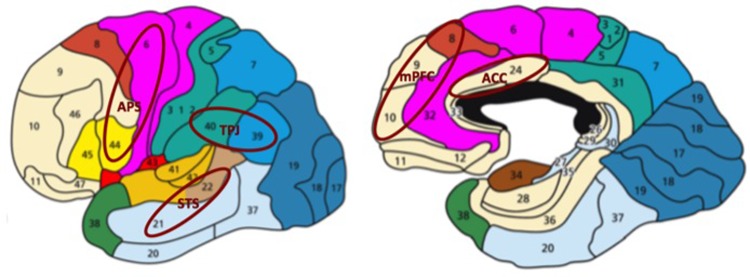

FIGURE 2.

Social brain network consisting of the action perception system (APS, mainly Brodmann areas 6, 44, but also 4 and 40), superior temporal sulcus (STS; Brodmann areas 21, 22), temporo-parietal junction (TPJ; Brodmann areas 39, 40), medial prefrontal cortex (mPFC; Brodmann areas 8, 9, 10, 32) and anterior cingulate cortex (ACC; Brodmann area 24). APS and STS detect biological motion and make inferences about low-level action goals from observed behavior. TPJ and mPFC are involved in mentalizing about high-level action goals and stable person features. ACC is associated with the attribution of mental states to non-human entities. The image has been modified (the original image was retrieved from: http://2.bp.blogspot.com/-SE4Yb_SRjdw/T6rNRgvRedI/AAAAAAAAAA0/FaU50ZemOCY/s1600/brodmann.png).

Performing Actions Together: Action Understanding and Joint Action

One key mechanism in social interactions is the ability to understand the actions of others, that is: being able to tell what sort of action is executed, and based on what kind of intention. Action understanding in the primate brain is based on shared representations that are activated both when an action is executed and when a similar action is observed in others (i.e., resonance; Gallese et al., 1996; Decety and Grèzes, 1999). Observing the actions of others facilitates the execution of a similar action (i.e., motor imitation), and hinders the execution of a different action (i.e., motor interference), since both action observation and execution activate the same neural network (Kilner et al., 2003; Oztop et al., 2005; Press et al., 2005). Imitation/interference effects are observed, for instance, when participants perform continuous unidirectional arm movements while observing continuous arm movements in the same/orthogonal direction or when being asked to perform an opening/closing gesture with their hand while observing opening/closing gestures in others (Kilner et al., 2003; Oztop et al., 2005; Press et al., 2005). Shared representations are also essential for performing joint actions, where two or more individuals coordinate their actions in time and space to achieve a shared action goal (Sebanz et al., 2005; Sebanz and Knoblich, 2009). For instance, when performing an action coordination task with another person (e.g., to cause a moving circle to overlap with a moving dot), we need to represent our own action (e.g., accelerating the circle) together with the action the other person is performing (e.g., slowing down the moving circle) in order to accomplish a shared action goal (e.g., establish overlap between the circle and the dot; Knoblich and Jordan, 2003). 
Performing a task together with another person also impacts action planning (Sebanz et al., 2006), and action monitoring (van Schie et al., 2004), which provides further support for the involvement of shared representations in the execution of joint actions.
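As a rough illustration of how motor interference of the kind described above could be quantified from motion-tracking data, the sketch below compares the variance of a movement along the axis orthogonal to the instructed direction between an incongruent and a congruent observation condition. This is a simplified sketch under stated assumptions, not the analysis pipeline of any study cited here: the use of orthogonal variance as the dependent measure, the two-dimensional sample format, the function names and the example values are all hypothetical.

```python
from statistics import pvariance


def orthogonal_variance(positions, instructed_axis):
    """Variance of position along the axis orthogonal to the instructed movement.

    `positions` is a list of (x, y) samples, here assumed to be in millimetres;
    `instructed_axis` is 'x' or 'y', the direction participants were told to move in.
    """
    if instructed_axis not in ("x", "y"):
        raise ValueError("instructed_axis must be 'x' or 'y'")
    orthogonal_index = 1 if instructed_axis == "x" else 0
    return pvariance([sample[orthogonal_index] for sample in positions])


def interference_index(congruent, incongruent, instructed_axis):
    """Orthogonal variance in the incongruent minus the congruent condition.

    Larger positive values would suggest stronger interference from the
    observed movement (assumed interpretation).
    """
    return (orthogonal_variance(incongruent, instructed_axis)
            - orthogonal_variance(congruent, instructed_axis))


# Made-up samples of an instructed horizontal ('x') arm movement.
congruent_samples = [(0, 0), (10, 1), (20, -1), (30, 0)]
incongruent_samples = [(0, 0), (10, 4), (20, -4), (30, 0)]

index = interference_index(congruent_samples, incongruent_samples, "x")
print(f"Interference index: {index:.2f} mm^2")
```

Computing the same index separately for human and robot observation conditions would then allow the two agents to be compared on a common scale.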

In the primate brain, action understanding and execution activate the APS, including temporal areas like the posterior superior temporal sulcus (pSTS), involved in processing biological motion, as well as frontoparietal areas like the inferior parietal cortex and ventral premotor cortex (IPC and vPMC), responsible for inferring the intentions underlying observed actions (Saygin et al., 2004; Becchio et al., 2006; Pobric and Hamilton, 2006; Grafton and Hamilton, 2007; Saygin, 2007). In non-human primates, the IPC and vPMC are known to contain mirror neurons that fire both during action observation and execution, and infer intentions by simulating the action outcome as if the observer was executing the actions himself (Gallese et al., 1996, 2004; Keysers and Perrett, 2004; Rizzolatti and Craighero, 2004; Iacoboni, 2005). Although there is agreement that action understanding in humans is also based on the principles of resonance (Umilta et al., 2001; Kilner et al., 2003; Oztop et al., 2005; Press et al., 2005), the particular role of mirror neurons in this process still needs to be determined (Dinstein et al., 2007; Chong et al., 2008; Kilner et al., 2009; Mukamel et al., 2010; Saygin et al., 2012).

Given the importance of action understanding in human–robot interaction, it is essential to examine whether activation within the APS is exclusive to human agents or whether robotic agents can also activate this network. Robots were initially not assumed to activate the APS due to the fact that activation in this network is sensitive to the observation of biological motion and intentional behavior. In line with this assumption, initial studies on action understanding in human–robot interaction either found no motor resonance for the observation of robot actions (Kilner et al., 2003) or found it to a significantly smaller degree than for the observation of human agents (Oztop et al., 2005; Press et al., 2005; Oberman et al., 2007). Follow-up studies consistently showed that motor resonance can be induced by robot agents, but that its degree seems to depend on features like physical appearance (Chaminade et al., 2007; Kupferberg et al., 2012), motion kinematics (Bisio et al., 2014), or visibility of the full body (Chaminade and Cheng, 2009). In contrast, beliefs regarding the humanness of the observed agent did not have an impact on the presence or absence of motor resonance (Press et al., 2006). Yet another set of studies showed that motor resonance during interactions with robot agents can even reach levels comparable to those for human agents, but only when participants were explicitly instructed to pay attention to the robots’ actions (Gazzola et al., 2007; Cross et al., 2011; Wykowska et al., 2014a), or when given additional time to familiarize themselves with the robots’ actions (Press et al., 2007). These findings suggest that participants naturally pay more attention to human actions than robot actions, with the consequence that brain areas involved in action understanding and prediction might be under-activated during interactions with robots. 
However, this effect can be reversed if participants are encouraged to process robot actions at a sufficient level of detail, either via instruction or via increased familiarization time.

Altogether, these studies suggest that robots have the potential to activate the human APS, at the very least in a reduced fashion, but under certain conditions even to a similar degree as human interaction partners. The degree of activation in APS depends on physical factors, such as the appearance or kinematic profile of a robot agent, as well as cognitive factors, such as one’s willingness to reason about a robot’s intentionality or the level of expertise one has with a particular robotic system. This means that low-level mechanisms of social cognition are not specifically sensitive to the identity of an interaction partner, and can be activated by robot agents as long as their actions map onto the human motor repertoire, and people are motivated to pay attention to them.

Making Inferences about Internal States: Mentalizing and Empathizing

When navigating social environments, we need to understand how others feel (i.e., empathizing; Baron-Cohen, 2005; Singer, 2006), and what they intend to do (i.e., mentalizing: Frith and Frith, 2003, 2006a). Similar to joint action, empathizing and mentalizing are based on shared representations that allow us to infer the emotions and intentions of others by simulating what we would feel or intend in a comparable situation (i.e., represent the behavior of others in our own reference frame; de Guzman et al., 2016; Steinbeis, 2016). In terms of empathizing, seeing or imagining the emotional states of others automatically activates similar states in the observer, thereby creating a shared representation at the neural and physiological level (Preston and de Waal, 2002). For instance, receiving a painful stimulus and observing the stimulus being presented to others activates similar brain areas, involving the anterior insula, rostral ACC, brain stem, and cerebellum (Singer et al., 2004). Similarly, smelling disgusting odors and seeing faces disgusted by the presentation of the same odors activates shared representations in the anterior insula (Wicker et al., 2003), and being touched and observing someone else being touched at the same parts of the body activates similar areas within the secondary somatosensory cortex (SII; Keysers et al., 2004).

When studying mentalizing, researchers typically present participants with stories that involve false-belief manipulations (Wimmer and Perner, 1983; Baron-Cohen et al., 1985) and require them to (a) take the perspective of others in order to understand whether and how their representation of the situation differs from their own (Epley et al., 2004; Epley and Caruso, 2009), (b) make inferences about what others are interested in based on non-verbal cues like gaze direction (Friesen and Kingstone, 1998), and (c) reason about how others currently feel based on facial expressions or body postures (Baron-Cohen, 2005; Singer, 2006). In the human brain, processes related to mentalizing are subserved by a distributed network consisting of temporal areas like the TPJ, as well as prefrontal areas like the mPFC and ACC (Ruby and Decety, 2001; Chaminade and Decety, 2002; Farrer et al., 2003; Grèzes et al., 2004, 2006; van Overwalle, 2009). Bilateral TPJ is involved in inferring intentions based on sensory input (Gallagher et al., 2000; Ruby and Decety, 2001; Chaminade and Decety, 2002; Saxe and Kanwisher, 2003; Grèzes et al., 2004, 2006; Ohnishi et al., 2004; Perner et al., 2006; Saxe and Powell, 2006), and allows differentiating self from other intentions via perspective-taking (Ruby and Decety, 2001; Chaminade and Decety, 2002; Farrer et al., 2003; van Overwalle, 2009). Although both sides of the TPJ have basic mentalizing and perspective-taking abilities, expertise regarding these functions seems to be lateralized, with the left side being more specialized in perspective-taking (Samson et al., 2004), and the right side being more involved in mentalizing (Gallagher et al., 2002; Frith and Frith, 2003; Saxe and Kanwisher, 2003; Saxe and Wexler, 2005; Costa et al., 2008). Activation within left TPJ is also associated with attributions of humanness (Chaminade et al., 2007; Zink et al., 2011) and intentionality (Perner et al., 2006) to non-human agents, and gray matter volume in left TPJ has been shown to be a reliable predictor for individual differences in anthropomorphizing non-human agents (Cullen et al., 2014). Right TPJ is specialized for inferring intentions underlying observed human behavior, and shows stronger activation for intentional than non-intentional or random actions (Gallagher et al., 2002; Cavanna and Trimble, 2006; Krach et al., 2008; Chaminade et al., 2012). In addition to its involvement in mentalizing, the TPJ also serves as a convergence point for processing social and non-social information (Mitchell, 2008; Scholz et al., 2009; Chang et al., 2013; Krall et al., 2015, 2016).

When we make inferences about the internal states of others, it is essential to incorporate knowledge about their dispositions and preferences into the mentalizing process, in particular in long-term interactions (van Overwalle, 2009). This requires the ability to represent behaviors over a long period of time, across different circumstances and with different social partners, and is associated with activation in the mPFC (Frith and Frith, 2001; Decety and Chaminade, 2003; Gallagher and Frith, 2003; Amodio and Frith, 2006). Neurons in the mPFC have the ability to discharge over extended periods of time and across different events (Wood and Grafman, 2003; Huey et al., 2006), and their activation is positively correlated to the degree of background knowledge we have about another person (Saxe and Wexler, 2005). The ventral mPFC is associated with reasoning about the emotional states of others (Hynes et al., 2006; Vollm et al., 2006), while the dorsal mPFC is more recruited during triadic interactions involving two agents and one object of interest (Brass et al., 2005; Jackson et al., 2006; Mitchell et al., 2006). Due to a high degree of interconnectivity with other brain areas, the mPFC can process a wealth of neural input and is capable of implementing abstract inferences regarding interpersonal information (Leslie et al., 2004; Amodio and Frith, 2006). Similar to the TPJ, the mPFC is more strongly activated by agents who are believed to have a mind (Krach et al., 2008; Riedl et al., 2014).

Perceiving others as intentional entities is particularly associated with activation in the ACC, a cortical midline structure extending from the genu to the corpus callosum (Barch et al., 2001). The anterior ACC is activated when we attribute internal states to others, and responds more strongly during interactions with intentional agents versus non-intentional agents (Gallagher et al., 2002), as well as during interactions that require real-time mentalizing rather than retrospective inferences about mental states based on stories or images (Gallagher et al., 2000; McCabe et al., 2001). In addition, the dorsal ACC is involved in processing uncertainty, while the ventral ACC is responsible for monitoring emotions in self and others (Bush et al., 2000; Barch et al., 2001; Critchley et al., 2003; Nomura et al., 2003; Amodio and Frith, 2006). Similar to the mPFC, the ACC is highly interconnected with other brain areas and plays an integrative role in both social and non-social cognitive processes (Allman et al., 2001).

In sum, these studies show that activation in brain areas related to empathizing and mentalizing is modulated by the degree to which interaction partners are perceived to have a mind, with stronger activation for intentional agents (i.e., humans) compared to non-intentional agents (i.e., robots; Leyens et al., 2000; Gallagher et al., 2002; Sanfey et al., 2003; Harris and Fiske, 2006; Krach et al., 2008; Demoulin et al., 2009; Chaminade et al., 2010; Spunt et al., 2015; Suzuki et al., 2015). Although further studies are necessary to determine the constraints under which robot agents activate the empathizing and mentalizing networks, the aforementioned studies provide preliminary evidence that activation in social brain areas involved in higher-order social-cognitive processes like empathizing and mentalizing (i.e., mPFC, TPJ, insula) depends more strongly on mind perception than activation in social brain areas involved in lower-level social-cognitive processing like action understanding (i.e., APS). In particular, it was shown that the APS can reach levels of activation during human–robot interaction that are similar to human–human interaction if certain constraints are met (i.e., human appearance and motor kinematics), while a comparable effect has not been reported for areas like the mPFC, TPJ or insula (i.e., these areas are activated by robot agents, but to a lesser degree than by human agents). Interestingly, these neuroscientific findings are in line with behavioral studies showing that humans seem to be willing to treat robots as entities with agency (i.e., ability to plan and act), but are reluctant to perceive them as entities that can experience internal states (i.e., ability to sense and feel; Gray et al., 2007). In consequence, research in social robotics would benefit from identifying conditions under which artificial agents engage mechanisms of higher-order social cognition in the human brain, which may necessitate some effort to specifically design robots as intentional and empathetic agents (Gonsior et al., 2012; Silva et al., 2016).

Effects of Mind Perception on Attitudes and Performance in HRI

Mind perception is not only essential for triggering activation in social brain areas; it also has an impact on how we think and feel about others, and how we perform actions with them (see Waytz et al., 2010b; for a review). These effects on social interactions are mainly positive: mind perception enhances the degree of social connection felt towards others, leads to more prosocial behaviors, motivates others to adhere to moral standards, and improves performance on joint action tasks (see “Positive Effects of Mind Perception in Social Interactions”). Under some circumstances, however, mind perception can be disadvantageous in social interactions, in particular, when the mind status of an agent is ambiguous and evokes categorical uncertainty (i.e., ambiguity regarding whether to classify the agent as human or robot), or when an agent’s behavior deviates strongly enough from human behavior so that an anthropomorphic model would lead to incorrect predictions (see “Negative Effects of Mind Perception in Social Interactions”).

Positive Effects of Mind Perception in Social Interactions

Treating others as agents with a mind makes us feel socially connected with them and fosters prosocial behaviors, such as decreased cheating and increased generosity (Bering and Johnson, 2005; Haley and Fessler, 2005; Gray et al., 2007, 2012; Shariff and Norenzayan, 2007; Epley et al., 2008). The effect of perceiving a mind in others on prosociality is so strong that simply presenting a pair of eyes during task execution or asking participants to perform a task in front of an audience significantly decreases cheating behaviors and motivates people to perpetuate moral standards (Haley and Fessler, 2005). The positive effect of mind perception on prosocial behavior is even stronger when the interaction partner is similar to the perceiver or believed to belong to his ingroup (Shariff and Norenzayan, 2007; Graham and Haidt, 2010). Agents not being perceived as having a mind, on the other hand, are perceived as being incapable of experiencing emotional states, which makes them unlikely recipients of empathy, morality or prosociality (Haslam, 2006; Hein et al., 2010; Cikara et al., 2011; Harris and Fiske, 2011; Gutsell and Inzlicht, 2012), and makes people feel less guilty when performing harmful acts toward them (Castano and Giner-Sorolla, 2006; Cehajic et al., 2009).

Mind perception also determines whether moral rights are granted to others and how strongly they are judged when showing immoral or harmful behaviors. According to Gray et al. (2007), agents that have a high ability to experience internal and external states, but a low ability to manipulate the environment (e.g., babies or puppies) are treated as ‘moral patients’ who deserve protection, are granted moral rights, and are associated with accidental rather than intentional negative behavior. Agents that display a high degree of agency, but only a low degree of experience (e.g., robots or corporations) are labeled as ‘moral agents’ with full moral responsibilities and the ability to show intentional behavior, in particular when it is harmful. Moral patients are seen as subservient or animalistic, and are more likely to be oppressed against their will or robbed of their human rights (Fiske et al., 2002, 2007), while moral agents are perceived as cold and robotic, and are more likely to be harmed by others (Fiske et al., 2002, 2007; Loughnan and Haslam, 2007). Consequently, in order to be respected both as a moral patient, deserving of protection and moral rights, and as a moral agent, capable of showing intentional behavior, agents need to be ascribed the ability to experience as well as the ability to act. However, while human agents have this set of features by default, robots are typically associated with a limited capability to sense themselves, others and their environments (i.e., reduced ability to experience), with the consequence that they are more likely to be denied moral rights and judged more harshly for behaviors that lead to negative consequences (Gray et al., 2007). This can potentially be prevented by designing robots whose physical and behavioral features trigger mind perception with a high likelihood (e.g., the robot Leonardo; Breazeal et al., 2005).

Believing that an agent has a mind has also been shown to increase the social relevance ascribed to its actions, which can improve performance during social interactions: participants, for instance, follow the eye movements of an agent more strongly when they are believed to reflect intentional compared to preprogrammed or random behavior (Wiese et al., 2012; Wykowska et al., 2014b; Caruana et al., 2016; Özdem et al., 2016; see Figure 3). Similarly, perceiving the actions of others as intentional determines how intensely we experience their outcomes (Barrett, 2004; Gilbert et al., 2004): an electric shock hurts more when it is believed to be administered on purpose rather than accidentally (Gray and Wegner, 2008), and intentional harms are judged more rigorously than accidental ones (Ohtsubo, 2007; Cushman, 2008). Perceiving human features like ‘having a mind’ in non-human agents has also been shown to induce social facilitation effects on human performance (Bartneck, 2003; cf. Hoyt et al., 2003; Woods et al., 2005; Park and Catrambone, 2007; Zanbaka et al., 2007; Riether et al., 2012; Hertz and Wiese, 2017), and to foster learning via social reinforcement (Druin and Hendler, 2000; Robins et al., 2005; see Figure 4). The facilitatory effect of the presence of an intentional robot on performance becomes even more prominent with an increasing degree of physical embodiment of the robot (Bartneck, 2003; Hoyt et al., 2003; Zanbaka et al., 2007).

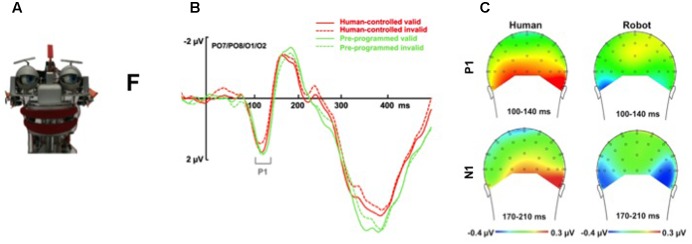

FIGURE 3.

Effect of mind perception on the social relevance of observed behavior. (A) Participants are asked to perform a joint attention task (react as fast and accurately as possible to the identity of a target letter: “F” vs. “T”) with the robot EDDIE (designed by Technical University of Munich). Results show that changes in gaze direction are followed more strongly when the eye movements are believed to be intentional versus pre-programmed. (B) Grand average ERP waveforms time-locked to the onset of the target for the pool of O1/O2/PO7/PO8 electrodes show that the belief that eye movements are intentional enhances sensory gain control mechanisms, with larger P1 validity effects (i.e., difference between valid and invalid trials) for changes in gaze direction that are believed to be intentional (i.e., human-controlled, red lines) versus non-intentional (i.e., pre-programmed, green lines). (C) Topographical maps of voltage distribution (posterior view) show that observing an intentional agent (left panel) versus a pre-programmed agent (right panel) modulates mechanisms of joint attention in occipital and parietal areas (suggesting that attribution of mental states affects visual and early attentional processes). The time interval of the P1 component (100–140 ms) is presented in the upper panel. The time interval of the N1 component (170–210 ms) is presented in the lower panel. For more details see: Wykowska et al. (2014b).
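
The validity effect mentioned in the caption above is a simple difference score between invalidly and validly cued trials. The following minimal sketch shows how such a score could be computed from reaction times. The numbers are invented purely for illustration and are not data from Wykowska et al. (2014b); the function name and the sign convention (invalid minus valid) are assumptions of this sketch.

```python
from statistics import mean


def validity_effect(valid_rts, invalid_rts):
    """Gaze-cueing validity effect in ms: mean invalid RT minus mean valid RT.

    Positive values mean that targets at the gazed-at location were answered
    faster. The sign convention is an assumption of this sketch.
    """
    return mean(invalid_rts) - mean(valid_rts)


# Hypothetical reaction times (ms), invented purely for illustration.
intentional = {"valid": [410, 420, 430], "invalid": [450, 460, 470]}
preprogrammed = {"valid": [420, 430, 440], "invalid": [435, 445, 455]}

effect_intentional = validity_effect(intentional["valid"], intentional["invalid"])
effect_preprogrammed = validity_effect(preprogrammed["valid"], preprogrammed["invalid"])
```

With these invented numbers, the effect is 40 ms in the hypothetical "intentional" condition and 15 ms in the "pre-programmed" one. An analogous difference score could be formed from P1 amplitudes instead of reaction times.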

FIGURE 4.

Social facilitation effects in Human–Robot Interaction. Perceiving robot agents as having a mind can induce social facilitation effects on human performance (i.e., presence of a robot agent facilitates performance on simple tasks, but worsens performance on difficult tasks) and foster learning via social reinforcement (i.e., robot provides social cues like smiling for wanted behaviors). The facilitating and reinforcing abilities of companion robots can be used in the classroom to improve learning (left image) or during driving to verbally and non-verbally encourage wanted driving behaviors (right image), for example. Written informed consent has been obtained for publication of the identifiable image on the left. The image on the right was modified (the original image of the driving simulator was retrieved from http://stevevolk.com; the original image of the robot was retrieved from: http://newsroom.toyota.co.jp/).

Negative Effects of Mind Perception in Social Interactions

Automatically perceiving mind or human-likeness in non-human agents can also have negative consequences, in particular when an agent is hard to categorize as human versus non-human (Cheetham et al., 2011, 2014; Hackel et al., 2014), or when the anthropomorphic model is not the best predictor for agent behavior (Epley et al., 2007). With regard to categorization difficulties, psychological research has shown that perceiving humanness in others follows a categorical pattern, with agents either being treated as ‘human’ or ‘non-human’ based on their physical features, except at the category boundary located at around 63% of physical humanness, where humanness ratings are ambiguous (Looser and Wheatley, 2010; Cheetham et al., 2011, 2014; Hackel et al., 2014; Martini et al., 2016). The consequence is that pairs of stimuli straddling the category boundary are easier to discriminate (i.e., same or different stimuli?), but harder to categorize (i.e., human or non-human?) than equally similar stimulus pairs located on the same side of the boundary (Repp, 1984; Harnad, 1987; Goldstone and Hendrickson, 2010; Looser and Wheatley, 2010; Cheetham et al., 2011, 2014). Categorizing agents located at the human–nonhuman category boundary results in increased response times and decreased accuracy rates (Cheetham et al., 2011, 2014), consistent with cognitive conflict processing. Trying to resolve this cognitive conflict takes up cognitive resources and can therefore have detrimental effects on performance during tasks that are conjointly performed with agents with an ambiguous mind status (Mandell et al., 2017; Weis and Wiese, 2017).
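
The categorical pattern described above can be illustrated with a simple psychometric function. The sketch below assumes a logistic relationship between physical humanness and the probability of a "human" response, centered on the 63% boundary reported in the text. The logistic form, the slope value, and the use of binary entropy as an index of ambiguity are assumptions made for illustration and are not taken from the cited studies.

```python
import math

BOUNDARY = 63.0  # % physical humanness at the category boundary (from the text)
SLOPE = 0.25     # hypothetical steepness; not taken from any cited study


def p_human(humanness):
    """Probability of a 'human' response under an assumed logistic model."""
    return 1.0 / (1.0 + math.exp(-SLOPE * (humanness - BOUNDARY)))


def ambiguity(humanness):
    """Binary entropy (bits) of the response; peaks at 1.0 where p = 0.5."""
    p = p_human(humanness)
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


# Hypothetical morph continuum sampled in steps of 5%.
levels = range(0, 101, 5)
most_ambiguous = max(levels, key=ambiguity)
```

Under these assumptions, responses are close to "non-human" or "human" across most of the continuum, and the sampled morph level closest to the boundary (65%) is the most ambiguous one.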

The categorization conflict at the human–nonhuman boundary has also been associated with the uncanny valley phenomenon, where positive attitudes toward non-human agents initially increase as the agents’ physical humanness increases and then drop dramatically as the agents start to look human-like but not perfectly human (i.e., uncanny valley), just to recover and reach a maximum for agents that are fully human (Mori, 1970; Kätsyri et al., 2015). In particular, it was argued that negative affective reactions associated with uncanny stimuli could be the result of conflict resolution processes triggered by categorical ambiguity during categorization response selection (Cheetham et al., 2011; Burleigh et al., 2013; Kätsyri et al., 2015; see Figure 5). Alternatively, perceptions of uncanniness could also be due to a mismatch of agent features, where one feature, for instance physical appearance, suggests that the agent might be human, but another feature, for instance lack of biological motion, suggests otherwise (i.e., perceptual mismatch; Seyama and Nagayama, 2007; MacDorman et al., 2009; Mitchell et al., 2011; Saygin et al., 2012; Kätsyri et al., 2015). Both the categorical ambiguity and the perceptual mismatch hypothesis are based on the assumption that physical agent features drive the automatic selection of a neural model that can be used to predict agent behavior, and that categorical ambiguity of the agent or perceptual mismatch of its features can lead to the selection of an inaccurate neural model, which is associated with error processing and might therefore trigger negative affective reactions (Saygin et al., 2012)4.

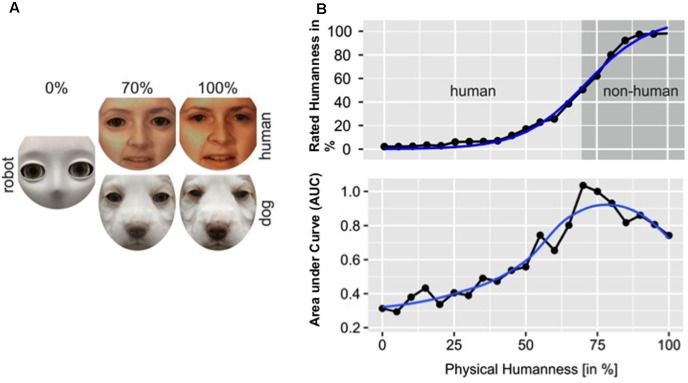

FIGURE 5.

Mind perception can induce a cognitive conflict for agents with ambiguous physical appearance. (A) Effects of mind perception triggered by physical appearance can be measured using a morphing procedure (e.g., image of a robot is morphed into image of a human or a dog in steps of 5%). (B) Mind perception follows a qualitative (i.e., significant changes in mind ratings occur only after a critical level of physical humanness is reached) rather than a quantitative pattern (i.e., likelihood for perceiving mind increases in a linear fashion with physical humanness). The significant change in mind ratings occurs when the category boundary between human and non-human is crossed (upper panel). Agents located at the category boundary are ambiguous in terms of their mind status, and trying to categorize them as human versus non-human causes a cognitive conflict, which takes cognitive resources to resolve. The degree of cognitive conflict that is induced by a categorical decision can be measured using mouse-tracking (i.e., the more curved the mouse movement, the larger the cognitive conflict; see Freeman and Ambady, 2010). For more details regarding the experiment on cognitive conflict in HRI: Weis and Wiese (2017).
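
The curvature of a mouse movement mentioned in the caption above can be quantified in several ways. One index commonly used for this purpose is the maximum deviation of the recorded trajectory from the straight line between its start and end point. The sketch below is a minimal illustration of that index, not code from Freeman and Ambady (2010) or Weis and Wiese (2017); the function name and the example coordinates are hypothetical.

```python
import math


def max_deviation(trajectory):
    """Largest perpendicular distance of any sample from the straight line
    joining the first and the last point of the trajectory."""
    (x0, y0), (x1, y1) = trajectory[0], trajectory[-1]
    length = math.hypot(x1 - x0, y1 - y0)
    if length == 0:
        raise ValueError("start and end point coincide")
    return max(
        abs((x1 - x0) * (y0 - y) - (x0 - x) * (y1 - y0)) / length
        for x, y in trajectory
    )


# Hypothetical trajectories (arbitrary screen units).
direct = [(0, 0), (1, 1), (2, 2)]           # straight path to the response
curved = [(0, 0), (0, 1), (0, 2), (2, 2)]   # path that bends away first

md_direct = max_deviation(direct)
md_curved = max_deviation(curved)
```

A straight movement yields a deviation of zero, whereas the curved example yields a larger value, which would be read as stronger cognitive conflict during the categorization decision.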

For human–robot interaction, this means that designs should be avoided that (a) trigger categorization difficulties due to physical ambiguity, or (b) cause perceptual mismatch by incorporating human- and machine-like features into the same robot platform. The research also suggests that in order to predict internal states and behaviors of non-human agents, humans need to be able to flexibly activate, correct and apply anthropomorphic knowledge to come up with the best possible prediction given the current circumstances (Epley et al., 2007): when interacting with unfamiliar or novel systems, it makes sense to activate anthropomorphic knowledge and use it as a basis to predict how the agent thinks, feels and behaves. However, as specific agent knowledge becomes available with more experience, the anthropomorphic model needs to be adjusted to match the agent’s actual capabilities (Gilbert, 1991; Gilbert and Malone, 1995), even more so when precise predictions of behavior are required or when future interactions with the agent are anticipated (Epley et al., 2007). Robots that can trigger both an anthropomorphic and a mechanistic mental model also have the advantage that humans can switch between these models depending on their current need for social contact and affiliation or effective task performance. In consequence, this means that robot design should not only focus on mind perception and associated processes of mentalizing and empathizing, but should also equip robots with triggers that activate machine mental models in situations where the anthropomorphic model could potentially lead to incorrect predictions. For example, if a robot cannot grasp an object to pass it to the human user due to hardware or software limitations, it would be useful for the user to understand the underlying reasons so he/she does not blame the robot for a lack of good intentions. This can be achieved, for example, through the use of informative verbal messages (Lee et al., 2010).

Designing Robots As Intentional Agents

In this section, we explore a number of studies privileging models of the components of the social brain tested in real robots, in real-time human–robot interaction. In doing so, we survey some of the engineering work and technological limitations related to the implementation of interactive robots.

In designing intentional agents, we need to consider the appearance of a robot as well as its behavior (Tapus and Matarić, 2006; Waytz et al., 2010b; Wykowska et al., 2016). Robot appearance is concerned with the ‘bodyware’ or hardware of the machine, while behavior concerns the observable results of the workings of its ‘mindware’ or software. In advanced robot designs, there is a tighter link between the body- and mindware, since often what can be done and how depends on the joint design of hardware and software. Engineering approaches do not necessarily reflect solutions that have any resemblance to their natural counterparts although there is a tradition of robotic research that utilized neuroscience studies as a starting point (Kawato, 1999; Scassellati, 2001; Demiris et al., 2014). Although these approaches led to accurate models of muscular-skeletal systems (Mizuuchi et al., 2006; Pfeifer et al., 2012), facial features (Oh et al., 2006; Becker-Asano et al., 2010), and human kinematics (Kaneko et al., 2009; Metta et al., 2010), they are limited in their ability to reproduce movements accurately in all possible contexts due to technological limitations impacting the range and speed of motion (i.e., mechanics of rigid bodies connected through rotary joints). Furthermore, when talking about mindware, an important distinction needs to be made between neurally accurate models – often proof of principles – and actual working implementations on real hardware, with profound differences between computers and human brains impeding accurate real-time neural simulations of large brain systems, such as those of the social brain. This, however, does not necessarily influence focused experiments targeting specific mechanisms of social-cognitive processing, such as action understanding (via APS) or intention and emotion understanding (via TPJ, mPFC and insula; Oztop et al., 2013).

To build robots that are perceived as intentional agents, we need to ask whether it is even necessary that they accurately emulate human behavior or whether it is sufficient for them to just display certain aspects of human behavior that are most strongly associated with the perception of intentionality (Yamaoka et al., 2007). Given the technological limitations associated with trying to reproduce large brain networks in artificial agents, the goal needs to be the identification of a minimal set of features that can reliably trigger mind perception in non-human agents. Neuroscientists need to identify these features and investigate their effects on attitudes and performance in human–robot interactions, while engineers can help with designing the robot body structure in a way that faithfully implements this minimal set of behavioral parameters in terms of kinematics, dynamics, electronics, and computation. As a corollary to this question, trying to build robots that are perceived as intentional agents can also help to elucidate whether the minimal set of parameters relates to a specific architecture and how tuning various parameters affects the way a robot is perceived.

From an engineering perspective, research in robotics and artificial intelligence that may have an impact on intentionality is vast (see Feil-Seifer and Matarić, 2009; for a review). First attempts to build socially competent robots can be traced back to the MIT robots Kismet (Breazeal, 2003) and Cog (Brooks et al., 1999). With Kismet, Breazeal and Scassellati (1999) studied how an expressive robot elicited appropriate social responses in humans by displaying attention and turn-taking mechanisms. They also identified some of the requirements of the visual system of such robots (Breazeal et al., 2001), for example the advantages of foveated vision, eye contact (and therefore detecting the eyes of the interactant in the visual scene), and a number of sensorimotor control loops (e.g., avoid and seek objects and people). Scassellati (2002) went further and took some first steps toward implementing a theory of mind for the robot Cog based on an established psychological model for mentalizing developed by Baron-Cohen (1997). Among other features, the model possesses a human-like attentional system that distinguishes living agents from non-living objects based on basic perceptual features like optical flow. In particular, the model relies on an Intentionality Detector (ID) that labels actions as intentional based on their goal-directedness, as well as an Eye Direction Detector (EDD) that allows the robot to shift its attention to locations in space that are gazed at by its human interaction partner. Although the ID on Cog was relatively simple, dealing exclusively with the issue of animacy versus no animacy, it nevertheless had the advantage of being based on a psychologically sound and empirically derived model of mentalization.
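
To make the role of an Eye Direction Detector more concrete, the following minimal sketch selects the object that lies closest to a partner's line of gaze, so that attention could be shifted to it. It is a simplified 2D illustration and not the implementation used on Cog: the function name, the scene layout, and the 15° tolerance are hypothetical, and a real system would first have to estimate eye position and gaze direction from camera images.

```python
import math


def gazed_at_object(eye_pos, gaze_dir, objects, max_angle_deg=15.0):
    """Return the name of the object closest to the gaze ray, or None.

    eye_pos and gaze_dir are 2D tuples; objects maps names to (x, y).
    The angular tolerance is a hypothetical parameter of this sketch.
    """
    best_name, best_angle = None, max_angle_deg
    gaze_angle = math.atan2(gaze_dir[1], gaze_dir[0])
    for name, (ox, oy) in objects.items():
        obj_angle = math.atan2(oy - eye_pos[1], ox - eye_pos[0])
        # Smallest absolute angular difference, wrapped to [0, pi].
        diff = abs((obj_angle - gaze_angle + math.pi) % (2 * math.pi) - math.pi)
        diff_deg = math.degrees(diff)
        if diff_deg <= best_angle:
            best_name, best_angle = name, diff_deg
    return best_name


# Hypothetical scene: the partner's eyes are at the origin.
scene = {"cup": (2.0, 0.1), "ball": (0.0, 2.0), "book": (-1.0, 0.0)}

target_right = gazed_at_object((0.0, 0.0), (1.0, 0.0), scene)
target_up = gazed_at_object((0.0, 0.0), (0.0, 1.0), scene)
target_none = gazed_at_object((0.0, 0.0), (1.0, -1.0), scene)
```

In this toy scene, a gaze to the right selects the cup, a gaze upward selects the ball, and a gaze toward empty space selects nothing.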

Following the discovery of mirror neurons in non-human primates and their involvement in action understanding (Gallese et al., 1996), neuroscientifically inspired approaches to robotics mainly focused on developing models for action recognition and imitation (Metta et al., 2006; Oztop et al., 2013). The key concept of shared sensorimotor representations, dating back to Liberman and Mattingly (1985), guided a variety of implementations utilizing, for example, recurrent neural networks (Tani et al., 2004) or various other machine-learning methods that learn direct-inverse models from examples (Oztop et al., 2006; Demiris, 2007). Among these attempts to implement a mirror neuron system in artificial agents, some models were more neuroscientifically accurate than others (Arbib et al., 2000; see Oztop et al., 2013; for a review). More recently, the use of RGB-D cameras boosted the ability to extract meaningful parameters automatically from images, allowing robots to engage in more complex social interactions with their human counterparts. The use of convolutional neural networks made a further step toward robust body pose/skeleton extraction from images (even 2D; see Cao et al., 2016), which is a fundamental component for robots to interact in a complex way within a social context.

Pointeau et al. (2013) utilized both object recognition and human posture detection to give a humanoid robot the ability to implement spatial perspective taking during the execution of a shared task in human–robot interaction. Spatial reasoning was implemented via simulation of the environment in 3D, which allowed for disambiguating linguistic constructs (e.g., ‘object on the left’). An autobiographical memory was utilized to learn the structure of the shared task, which was represented as a sequence of elemental steps allowing the robot to take the human’s perspective and to step in at any given point of the task execution. Although this architecture bears some resemblance to certain brain functions, such as memorizing sequences and spatial perspective taking, its implementation still relies exclusively on engineering methods, utilizing simple tables and strictly symbolic representations, instead of neurologically plausible mechanisms.
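
The disambiguation of a construct like ‘object on the left’ can be illustrated with a small geometric sketch: whether an object is on the left or right depends on whose position and heading are used as the reference frame. The code below is a simplified 2D illustration under assumed conventions (headings in degrees, counterclockwise from the positive x-axis). It is not the 3D simulation used by Pointeau et al. (2013), and all names and coordinates are hypothetical.

```python
import math


def side_from_perspective(observer_pos, observer_heading_deg, object_pos):
    """Classify an object as 'left', 'right' or 'in line' for an observer.

    The heading is given in degrees, counterclockwise from the +x axis
    (an assumed convention for this sketch).
    """
    hx = math.cos(math.radians(observer_heading_deg))
    hy = math.sin(math.radians(observer_heading_deg))
    dx = object_pos[0] - observer_pos[0]
    dy = object_pos[1] - observer_pos[1]
    # 2D cross product: positive if the object lies counterclockwise
    # of the heading direction, i.e., on the observer's left.
    cross = hx * dy - hy * dx
    if abs(cross) < 1e-9:
        return "in line"
    return "left" if cross > 0 else "right"


# Hypothetical layout: robot and human face each other across a table.
robot_pos, robot_heading = (0.0, 0.0), 90.0    # robot faces +y
human_pos, human_heading = (0.0, 4.0), 270.0   # human faces -y
cup = (1.0, 2.0)

side_for_robot = side_from_perspective(robot_pos, robot_heading, cup)
side_for_human = side_from_perspective(human_pos, human_heading, cup)
```

In this layout the same cup is on the robot's right but on the human's left, which is why a robot that interprets ‘the object on the left’ from its own frame of reference would pick the wrong object.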

Other areas of research relevant to the design of robots as intentional agents include image and object recognition, as well as spatial reasoning. In terms of object recognition, brain-inspired models dominated the field for several years (Serre et al., 2005), but are being replaced by the modern “brute force” approach of using very large neural networks and managing the increased computational cost through specialized processors (e.g., GPUs), resulting in an improvement in performance of orders of magnitude (Krizhevsky et al., 2012). In terms of spatial navigation, roboticists have developed a set of standard methods including probabilistic localization techniques and planning impact-free movements (O’Donnell and Lozano-Pérez, 1989; Thrun, 2002), some of which are also building blocks for robot controllers that help avoid contact and/or reach properly during human–robot interaction (Kulić and Croft, 2005; De Santis et al., 2008). Other active research directions within the theme of spatial reasoning explore how to represent spatial data (i.e., objects and people in 3D, their spatial relationships), and how to connect linguistic constructs that imply spatial relationships with reasoning (Sugiyama et al., 2006; Gold et al., 2009; Hato et al., 2010). Spatial knowledge is one element required for correctly interpreting deictic gestures, which by their nature depend on both the gesture itself and general knowledge about the environment, and which in human–human interaction usually co-occur with utterances. Therefore, for the robot to understand them, localization and recognition of the hand configuration, the spatial configuration of objects/people in the world, and speech recognition have to be integrated (Brooks and Breazeal, 2006).

In summary, this short overview indicates that some of the problems in designing intentional robots require competencies that span the whole range of human cognitive skills in both perceptual and reasoning terms, and that psychologically and neuroscientifically sound implementations thereof are for the most part missing. Furthermore, while important aspects of human–robot interaction are currently addressed in isolated models, a more integrated architecture that combines cognitive and social functioning does not yet exist, and the effects of the existing models on mind perception, attitudes, and performance in human–robot interaction have not been sufficiently investigated. In the future, neuroscientists and roboticists need to work together to identify at least a minimal set of physical and behavioral robot features that have the potential to activate the same areas in the human brain as human interaction partners do. Doing so does not guarantee that the exact functioning of the human neural system can be emulated in artificial agents, but it at least increases the likelihood that robot agents are treated as if they were intentional agents.

Conclusion

We highlight that the design of social robots should be based on methods of cognitive neuroscience in order to determine robot features (e.g., behavioral features, such as timing of saccades, head-eye coordination, frequency and length of gaze toward a human user) that activate mechanisms of social cognition in the human brain. Neuroscientific results inform us about what these mechanisms are, how they are implemented in human neural architecture and when they are activated. These results can also inspire research in artificial intelligence and robotics so that robot architectures can be based on principles similar to those operating in the human brain (even if this is at present often a challenging enterprise due to technological limitations), and allow for more human-like behaviors of robots. As one of the key factors activating mechanisms of social cognition is attribution of intentionality to robots, it is important to understand the conditions under which humans perceive robots as intentional agents, and what consequences attribution of intentionality may have for human–robot interaction. Although adopting an anthropomorphic mental model in explaining the behaviors of robot agents usually has positive consequences for attitudes and performance in human–robot interaction, in some cases it might reduce the quality of human–robot interaction, in particular when some agent features trigger mind perception and others do not. Therefore, it is extremely important to design robots based on systematic studies, perhaps with an iterative approach, in order to understand which parameters of the robot’s behavior and appearance activate the social brain and elicit attribution of intentionality, and whether in certain cases it is better not to evoke mind attribution.

Author Contributions

EW and AW conceptualized the paper and created the figures. AW wrote the sections “Introduction” and “Conclusion”; EW wrote the sections “Can Robots Be Perceived as Intentional Agents?,” “Observing Intentional Agents Activates Social Brain Areas,” and “Effects of Mind Perception on Attitudes and Performance in HRI”; and GM wrote the section “Designing Robots as Intentional Agents.”

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement no. 715058) to AW.

Footnotes

Note that we use the term ‘intentionality’ in the philosophical sense: “Intentionality is the power of minds to be about, to represent, or to stand for, things, properties and states of affairs” (Jacob, 2014). In other words, intentionality is the property of mental states of referring to something. For example, a belief (mental state) is about something, refers to something. This way of defining intentionality might differ from the common use of the term “intentional” (commonly, “intentional” might be understood as “done on purpose” or “deliberate”). The philosophical meaning dates back to Brentano: “Every mental phenomenon is characterized by what the Scholastics of the Middle Ages called the intentional (or mental) inexistence of an object, and what we might call, though not wholly unambiguously, reference to a content, direction toward an object (which is not to be understood here as meaning a thing), or immanent objectivity” (Brentano, 1874, p. 68). We use the term ‘intentional’ in the philosophical sense in order to highlight the aspect of robots being potentially perceived as agents with mental states (as opposed to only mindless machines).

Webpages for Siri (https://www.apple.com/ios/siri/), Waymo (https://waymo.com/), Jibo (https://www.jibo.com/).

Please note that conflicts might also have positive effects in human–robot interaction, given that they might lead human interaction partners to update their internal representation of the robot to better match its abilities and features.

References

- Adolphs R. (2009). The social brain: neural basis of social knowledge. Annu. Rev. Psychol. 60 693–716. doi: 10.1146/annurev.psych.60.110707.163514

- Allman J. M., Hakeem A., Erwin J. M., Nimchinsky E., Hof P. (2001). The anterior cingulate cortex: the evolution of an interface between emotion and cognition. Ann. N. Y. Acad. Sci. 935 107–117. doi: 10.1111/j.1749-6632.2001.tb03476.x

- Ames D. L., Jenkins A. C., Banaji M. R., Mitchell J. P. (2008). Taking another person’s perspective increases self-referential neural processing. Psychol. Sci. 19 642–644. doi: 10.1111/j.1467-9280.2008.02135.x

- Amodio D. M., Frith C. D. (2006). Meeting of minds: the medial frontal cortex and social cognition. Nat. Rev. Neurosci. 7 268–277. doi: 10.1038/nrn1884

- Anzalone S. M., Tilmont E., Boucenna S., Xavier J., Jouen A.-L., Bodeau N., et al. (2014). How children with autism spectrum disorder behave and explore the 4-dimensional (spatial 3D + time) environment during a joint attention induction task with a robot. Res. Autism Spectr. Disord. 8 814–826. doi: 10.1016/j.rasd.2014.03.002

- Arbib M. A., Billard A., Iacoboni M., Oztop E. (2000). Synthetic brain imaging: grasping, mirror neurons and imitation. Neural Netw. 13 975–997. doi: 10.1016/S0893-6080(00)00070-8

- Balas B., Tonsager C. (2014). Face animacy is not all in the eyes: evidence from contrast chimeras. Perception 43 355–367. doi: 10.1068/p7696

- Barch D. M., Braver T. S., Akbudak E., Conturo T., Ollinger J., Snyder A. (2001). Anterior cingulate cortex and response conflict: effects of response modality and processing domain. Cereb. Cortex 11 837–848. doi: 10.1093/cercor/11.9.837

- Baron-Cohen S. (1997). Mindblindness: An Essay on Autism and Theory of Mind. Boston, MA: MIT Press.

- Baron-Cohen S. (2005). “The empathizing system: a revision of the 1994 model of the mindreading system,” in Origins of the Social Mind, eds Ellis B., Bjorklund D. (New York City, NY: Guilford Publications).

- Baron-Cohen S., Leslie A. M., Frith U. (1985). Does the autistic child have a theory of mind? Cognition 21 37–46. doi: 10.1016/0010-0277(85)90022-8

- Barrett J. L. (2004). Why Would Anyone Believe in God? Lanham, MD: AltaMira Press.

- Bartneck C. (2003). “Interacting with an embodied emotional character,” in Proceedings of the 2003 International Conference on Designing Pleasurable Products and Interfaces, DPPI, Pittsburgh, PA, 55–60. doi: 10.1145/782896.782911

- Bartneck C., Reichenbach J. (2005). Subtle emotional expressions of synthetic characters. Int. J. Hum. Comput. Stud. 62 179–192. doi: 10.1016/j.ijhcs.2004.11.006

- Basteris A., Nijenhuis S. M., Stienen A. H., Buurke J. H., Prange G. B., Amirabdollahian F. (2014). Training modalities in robot-mediated upper limb rehabilitation in stroke: a framework for classification based on a systematic review. J. Neuroeng. Rehabil. 11:111. doi: 10.1186/1743-0003-11-111

- Bastian B., Haslam N. (2010). Excluded from humanity: the dehumanizing effects of social ostracism. J. Exp. Soc. Psychol. 46 107–113. doi: 10.1016/j.jesp.2009.06.022

- Becchio C., Adenzato M., Bara B. (2006). How the brain understands intention: different neural circuits identify the componential features of motor and prior intentions. Conscious. Cogn. 15 64–74. doi: 10.1016/j.concog.2005.03.006

- Becker-Asano C., Ogawa K., Nishio S., Ishiguro H. (2010). “Exploring the uncanny valley with Geminoid HI-1 in a real-world application,” in Proceedings of IADIS International Conference Interfaces and Human Computer Interaction, Freiburg, 121–128.

- Bekele E., Crittendon J. A., Swanson A., Sarkar N., Warren Z. E. (2014). Pilot clinical application of an adaptive robotic system for young children with autism. Autism 18 598–608. doi: 10.1177/1362361313479454

- Bering J. M., Johnson D. D. P. (2005). O Lord, you perceive my thoughts from afar: recursiveness and the evolution of supernatural agency. J. Cogn. Cult. 5 118–141. doi: 10.1163/1568537054068679

- Birks M., Bodak M., Barlas J., Harwood J., Pether M. (2016). Robotic seals as therapeutic tools in an aged care facility: a qualitative study. J. Aging Res. 2016 1–7. doi: 10.1155/2016/8569602

- Bisio A., Sciutti A., Nori F., Metta G., Fadiga L., Sandini G., et al. (2014). Motor contagion during human-human and human-robot interaction. PLOS ONE 9:e106172. doi: 10.1371/journal.pone.0106172

- Blakemore S.-J., Decety J. (2001). From the perception of action to the understanding of intention. Nat. Rev. Neurosci. 2 561–567. doi: 10.1038/35086023

- Brass M., Derrfuss J., von Cramon D. Y. (2005). The inhibition of imitative and overlearned responses: a functional double dissociation. Neuropsychologia 43 89–98. doi: 10.1016/j.neuropsychologia.2004.06.018

- Brass M., Schmitt R., Spengler S., Gergely G. (2007). Investigating action understanding: inferential processes versus action simulation. Curr. Biol. 17 2117–2121. doi: 10.1016/j.cub.2007.11.057

- Breazeal C. (2003). Toward sociable robots. Rob. Auton. Syst. 42 167–175. doi: 10.1016/S0921-8890(02)00373-1

- Breazeal C., Buchsbaum D., Gray J., Gatenby D., Blumberg B. (2005). Learning from and about others: towards using imitation to bootstrap the social understanding of others by robots. Artif. Life 11 31–62. doi: 10.1162/1064546053278955

- Breazeal C., Edsinger A., Fitzpatrick P., Scassellati B. (2001). Active vision for sociable robots. IEEE Trans. Syst. Man Cybern. Syst. 31 443–453. doi: 10.1109/3468.952718

- Breazeal C., Scassellati B. (1999). “How to build robots that make friends and influence people,” in Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems IROS’99, Vol. 2, Kyoto, 858–863. doi: 10.1109/IROS.1999.812787

- Brentano F. (1874). Psychology from an Empirical Standpoint, trans. Rancurello A. C., Terrell D. B., McAlister L. L. London: Routledge.

- Brooks A. G., Breazeal C. (2006). “Working with robots and objects: revisiting deictic reference for achieving spatial common ground,” in Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction (New York City, NY: Association for Computing Machinery), 297–304. doi: 10.1145/1121241.1121292

- Brooks R. A., Breazeal C., Marjanović M., Scassellati B., Williamson M. M. (1999). “The cog project: building a humanoid robot,” in Computation for Metaphors, Analogy, and Agents, ed. Nehaniv C. L. (Berlin: Springer), 52–87.

- Brothers L. (2002). “The social brain: a project for integrating primate behavior and neurophysiology in a new domain,” in Foundations in Social Neuroscience, ed. Cacioppo J. T. (London: MIT Press), 367–385.

- Buccino G., Binkofski F., Fink G. R., Fadiga L., Fogassi L., Gallese V., et al. (2001). Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur. J. Neurosci. 13 400–404. doi: 10.1111/j.1460-9568.2001.01385.x

- Burleigh T. J., Schoenherr J. R., Lacroix G. L. (2013). Does the uncanny valley exist? An empirical test of the relationship between eeriness and the human likeness of digitally created faces. Comput. Hum. Behav. 29 759–771. doi: 10.1016/j.chb.2012.11.021