Abstract

Objective

To assess the predictive potential of the complete response pattern from the University of Pennsylvania Smell Identification Test for the diagnosis of Parkinson's disease.

Methods

We analyzed a large dataset from the Arizona Study of Aging and Neurodegenerative Disorders, a longitudinal clinicopathological study of health and disease in elderly volunteers. Using the complete pattern of responses to all 40 items in each subject's test, we built predictive models of neurodegenerative disease, and we validated these models out of sample by comparing model predictions against postmortem pathological diagnosis.

Results

Consistent with anatomical considerations, we found that the specific test response pattern had additional predictive power compared with a conventional measure – total test score – in Parkinson's disease, but not Alzheimer's disease. We also identified specific test questions that carry the greatest predictive power for disease diagnosis.

Interpretation

Olfactory ability has typically been assessed with either self‐report or total score on a multiple choice test. We showed that a more accurate clinical diagnosis can be made using the pattern of responses to all the test questions, and validated this against the “gold standard” of pathological diagnosis. Information in the response pattern also suggests specific modifications to the standard test that may optimize predictive power under the typical clinical constraint of limited time. We recommend that future studies retain the individual item responses for each subject, and not just the total score, both to enable more accurate diagnosis and to enable additional future insights.

Introduction

Olfactory decline is a hallmark of both normal aging and neurodegenerative diseases, including Alzheimer's disease (AD) and Parkinson's disease (PD). In PD in particular, olfactory dysfunction may precede the motor symptoms of the disease by up to 7 years.1 Indeed, during the earliest premotor stages of PD, pathology is already present in olfactory‐related areas.2 The Movement Disorder Society (MDS) recently created research criteria for the diagnosis of prodromal PD,3 with olfactory loss as one biomarker. While the main pathological indicator of PD is intraneuronal aggregation of α‐synuclein into Lewy bodies, other synucleinopathies, including dementia with Lewy bodies (DLB) and incidental Lewy body disease (ILBD), have also been associated with olfactory dysfunction.4, 5, 6 Thus, in many cases PD and related disorders might be thought of as diseases of olfaction before they afflict other brain areas. Consistent with this view, the unified staging system for Lewy body disorders defines Stage I as “olfactory bulb‐only” pathology.7 Olfactory dysfunction is thus the proverbial “canary in the coal mine” for PD. Because early clinical diagnosis of PD by standard neurological examination is unreliable,8 there is strong motivation to improve clinical diagnosis by making better use of potentially informative olfactory information. Olfactory testing may also help to distinguish PD from other disorders that have a motor, but not necessarily an olfactory, component.

Here we consider whether olfactory testing could enable early diagnosis of PD. Previous studies have shown that clinically diagnosed PD is associated with olfactory dysfunction, most of them using the now common University of Pennsylvania Smell Identification Test (UPSIT), a multiple choice test.6, 9 Such testing is critical for assessing olfactory dysfunction, since self‐report can be unreliable.10 However, the only information typically retained from a UPSIT test, the total score, may be a crude measure for guiding diagnosis: while the mean total score of the PD subpopulation is lower than that of healthy subjects,9 the sensitivity and specificity of the total score in distinguishing PD from non‐PD‐related olfactory deficits may be too low, or may be reached too late in disease progression, to be clinically valuable.11 But what if the pattern of olfactory decline due to PD differs from that due to other age‐related pathologies or to normal aging? Specifically, what if the total UPSIT score masks a particular pattern of correct and incorrect answers on individual test questions that has predictive power exceeding that of the total score, or that could be useful in cases where the total score is not predictive at all? If so, a subset of UPSIT questions, chosen on the basis of the response patterns associated with specific phenotypic subpopulations, could be administered to patients in the clinic to gain more information in less time than the traditional 40‐question UPSIT allows. While abbreviated versions of the UPSIT do exist,12, 13 the specific questions on these minitests have not been pathologically validated for use in the diagnosis of specific diseases.

Here we add pathological validation of disease phenotypes by leveraging the Brain and Body Donation Program (BBDP) dataset from the Arizona Study of Aging and Neurodegenerative Disorders (AZSAND).14 This dataset has three key strengths. First, it contains postmortem neuropathological information about each subject, providing a diagnostic gold standard that overcomes the limited accuracy of a purely clinical diagnosis. This also allows us to identify, and attempt to predict, related diseases that do not show up in clinical assessment, that may be mistaken for PD in the clinic, or that otherwise confound analysis. Second, for some subjects it contains longitudinal information (not explored here), including itemized UPSIT responses collected over the course of aging and disease progression. Third, it contains abundant related data that can be used to identify confounds and to isolate the specific contribution of measured olfactory dysfunction to the predictive power of the UPSIT response pattern.

We previously used these data to show a strong association between olfactory dysfunction – as measured by total UPSIT score – and pathological diagnosis of PD and ILBD, as confirmed in postmortem examination.6 Here we extend this result to show that the specific pattern of UPSIT responses contains even greater diagnostic power than the total score alone. We also isolate the specific test questions with the greatest diagnostic power for PD, opening the door to future variants of the test that focus exclusively on these questions.

Material and Methods

Data

All data were obtained from the Banner Sun Health Research Institute Brain and Body Donation Program (BBDP, https://www.brainandbodydonationprogram.org), in association with the Arizona Study of Aging and Neurodegenerative Disorders (AZSAND), a longitudinal research study of elderly individuals living in Maricopa County, Arizona, USA. Both antemortem clinical and postmortem neuropathological data were available for N = 198 deceased individuals (86 female) who took at least one UPSIT; these data reflect information available through March 2016. Age at death was 86.4 ± 8.0 years. Subjects older than 90 years at the time of death were assigned an age of 95 in this dataset to protect anonymity. Some subjects took the UPSIT on multiple occasions, but only each subject's last UPSIT prior to death (taken 2.5 ± 2.0 years before death) was used here for classification. Age at the time of the last UPSIT ranged from 54 to 95 years; counts of final clinicopathological diagnoses6 are provided in Table 1. The BBDP's enrollment criteria enrich for certain disease phenotypes; consequently, the prevalence of both neurodegenerative disease and olfactory dysfunction is unlikely to reflect an age‐matched distribution from the wider community. In particular, healthy, normosmic individuals are undersampled in this study. All subjects signed written informed consent approved by the Banner Institutional Review Board. All data are available to other researchers upon request.
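As a minimal sketch, assuming a hypothetical long-format export with one row per UPSIT administration (the column names `subject_id`, `test_date`, `death_date`, and `age_at_death`, and the toy records, are invented for illustration), selecting each subject's final pre‐death UPSIT and applying the age recoding described above could look like this in pandas:

```python
import pandas as pd

# Hypothetical long-format table: one row per UPSIT administration.
tests = pd.DataFrame({
    "subject_id": [1, 1, 2, 2, 2],
    "test_date": pd.to_datetime(["2008-05-01", "2011-03-15",
                                 "2009-01-10", "2012-07-20", "2014-02-02"]),
    "death_date": pd.to_datetime(["2013-06-30"] * 2 + ["2015-09-01"] * 3),
    "age_at_death": [92, 92, 88, 88, 88],
})

# Keep only tests taken before death, then the most recent test per subject.
prior = tests[tests["test_date"] < tests["death_date"]]
last = prior.loc[prior.groupby("subject_id")["test_date"].idxmax()].copy()

# Interval between the final test and death, in years.
last["years_before_death"] = (last["death_date"] - last["test_date"]).dt.days / 365.25

# Ages at death above 90 are recoded to 95 to protect anonymity.
last.loc[last["age_at_death"] > 90, "age_at_death"] = 95
print(last[["subject_id", "test_date", "years_before_death", "age_at_death"]])
```

Using `idxmax` on the test date keeps one full row per subject, so any other per-test columns (such as the 40 itemized responses) travel with the selected administration.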

Table 1.

Number of subjects with each postmortem diagnosis

| Diagnosis | N |
|---|---|
| AD only | 54 |
| PD only | 23 |
| PSP only | 7 |
| AD and PD | 7 |
| AD and PSP | 3 |
| PD and PSP | 2 |
| All of these | 0 |
| None of these | 80 |

AD, Alzheimer's disease; PD, Parkinson's disease; PSP, progressive supranuclear palsy.

Statistics and predictive modeling

Data obtained from the BBDP were analyzed using the Python packages pandas and scikit‐learn (sklearn), and all analysis code, including recipes for reproducing all figures and tables, is available at http://github.com/rgerkin/UPSIT. Because the number of features used for prediction is high, we used machine learning techniques, which can outperform standard multivariate regression in this setting, and took steps to minimize the possibility of overfitting. Each possible response to each UPSIT question (a, b, c, or d) was coded as a mutually exclusive binary input feature to the classifier, so each question had five possible outcomes: one of the four responses, or nonresponse. In preliminary work we found that a support vector machine (SVM) and linear discriminant analysis (LDA), each with default sklearn parameters, outperformed all other classification techniques we tried on a wide range of problems related to this dataset. We consequently used a simple average of these two classifiers for all classification work reported in Figures 1, 2, and 3. To avoid overfitting and to increase the likelihood that the results would generalize beyond the data collected to date, the classifier was trained on only a subset of the data and then assessed on the remaining data (“out‐of‐sample”). Specifically, the classifier was trained on 80% of the data and tested on the remaining 20%, and this was repeated 100 times with unique, random train/test splits. ROC curves were computed from the ensemble test‐set performance across these splits and smoothed using Gaussian kernel density estimation with a bandwidth of 0.2. Statistical significance of differences between ROC curves was computed using an analogue of the Mann–Whitney U statistic.15 For Figure 3 we used only the LDA, because its coefficients are more interpretable. Coefficients for the shuffled data in Figure 3 were obtained by shuffling the diagnostic labels (i.e., PD = 0 or PD = 1) across subjects and refitting the classifier; this was repeated 100 times to produce a null distribution of coefficients.
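The modeling pipeline described above can be sketched with numpy and scikit‐learn as follows. This is an illustration under stated assumptions, not the code in the authors' repository: responses are assumed to arrive as integer codes (0 = no response, 1 to 4 = a to d); nonresponse is assumed to be encoded as all four indicators equal to zero (consistent with the 160 coefficients in Figure 3); the two classifiers are assumed to be averaged through their predicted probabilities, which requires `probability=True` on `SVC`; and the ROC AUC is computed from pooled test‐set predictions without the kernel smoothing step. The helper names and synthetic data are hypothetical.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import ShuffleSplit
from sklearn.svm import SVC

N_QUESTIONS, N_CHOICES = 40, 4  # answers a-d; nonresponse -> all four indicators 0


def one_hot_responses(responses):
    """Encode an (n_subjects, 40) array of codes (0 = no response, 1-4 = a-d)
    as an (n_subjects, 160) matrix of mutually exclusive binary features."""
    responses = np.asarray(responses)
    X = np.zeros((responses.shape[0], N_QUESTIONS * N_CHOICES))
    rows, qs = np.nonzero(responses > 0)
    X[rows, qs * N_CHOICES + responses[rows, qs] - 1] = 1.0
    return X


def averaged_proba(X_train, y_train, X_test):
    """Average the predicted PD probability of an SVM and an LDA (default settings,
    except that the SVM is asked for probability estimates)."""
    svm = SVC(probability=True, random_state=0).fit(X_train, y_train)
    lda = LinearDiscriminantAnalysis().fit(X_train, y_train)
    return 0.5 * (svm.predict_proba(X_test)[:, 1] + lda.predict_proba(X_test)[:, 1])


def out_of_sample_auc(X, y, n_splits=100, seed=0):
    """Repeated random 80/20 splits; pool every test-set prediction and
    return a single (unsmoothed) ROC AUC."""
    splitter = ShuffleSplit(n_splits=n_splits, test_size=0.2, random_state=seed)
    scores, labels = [], []
    for train, test in splitter.split(X):
        scores.append(averaged_proba(X[train], y[train], X[test]))
        labels.append(y[test])
    return roc_auc_score(np.concatenate(labels), np.concatenate(scores))


# Toy usage on synthetic data (not BBDP data): "PD" subjects answer correctly less often.
rng = np.random.default_rng(0)
n = 300
y = rng.integers(0, 2, size=n)
key = rng.integers(1, 5, size=N_QUESTIONS)              # hypothetical answer key
p_correct = np.where(y == 1, 0.5, 0.85)[:, None]
guesses = rng.integers(0, 5, size=(n, N_QUESTIONS))     # random answer or nonresponse
responses = np.where(rng.random((n, N_QUESTIONS)) < p_correct, key, guesses)
auc = out_of_sample_auc(one_hot_responses(responses), y, n_splits=20)
print(f"pooled out-of-sample AUC: {auc:.2f}")
```

Swapping `one_hot_responses(...)` for a single column of total scores would give the total‐score baseline that the itemized classifier is compared against.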

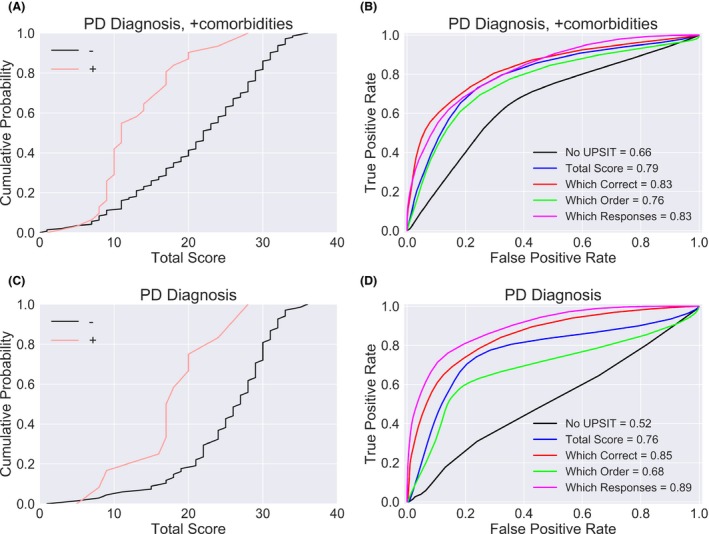

Figure 1.

The specific UPSIT response pattern is diagnostic of PD. (A) Subjects with pathologically confirmed PD (red) had poorer overall performance on the UPSIT than subjects who did not (black) (see also Driver‐Dunckley et al.6). (B) The UPSIT test score (blue) improves diagnostic accuracy of PD relative to a simple qualitative self‐assessment of olfactory dysfunction (black). For this sample in which subjects with comorbidities were included, additional details of the UPSIT test performance, such as the correctness of each response (red), the position of the responses (green), or the pattern of responses (magenta), did not further improve accuracy. Area under the curve (AUC) for each classifier is provided in the legend. (C) Similar to A, but excluding subjects with pathologically confirmed dementias such as AD, reflecting a “pure” sample of PD versus non‐PD. (D) Using the pure sample from (C), a classifier that uses the individuals’ responses to each of the 40 UPSIT questions significantly outperforms one that only uses the total number of questions answered correctly.

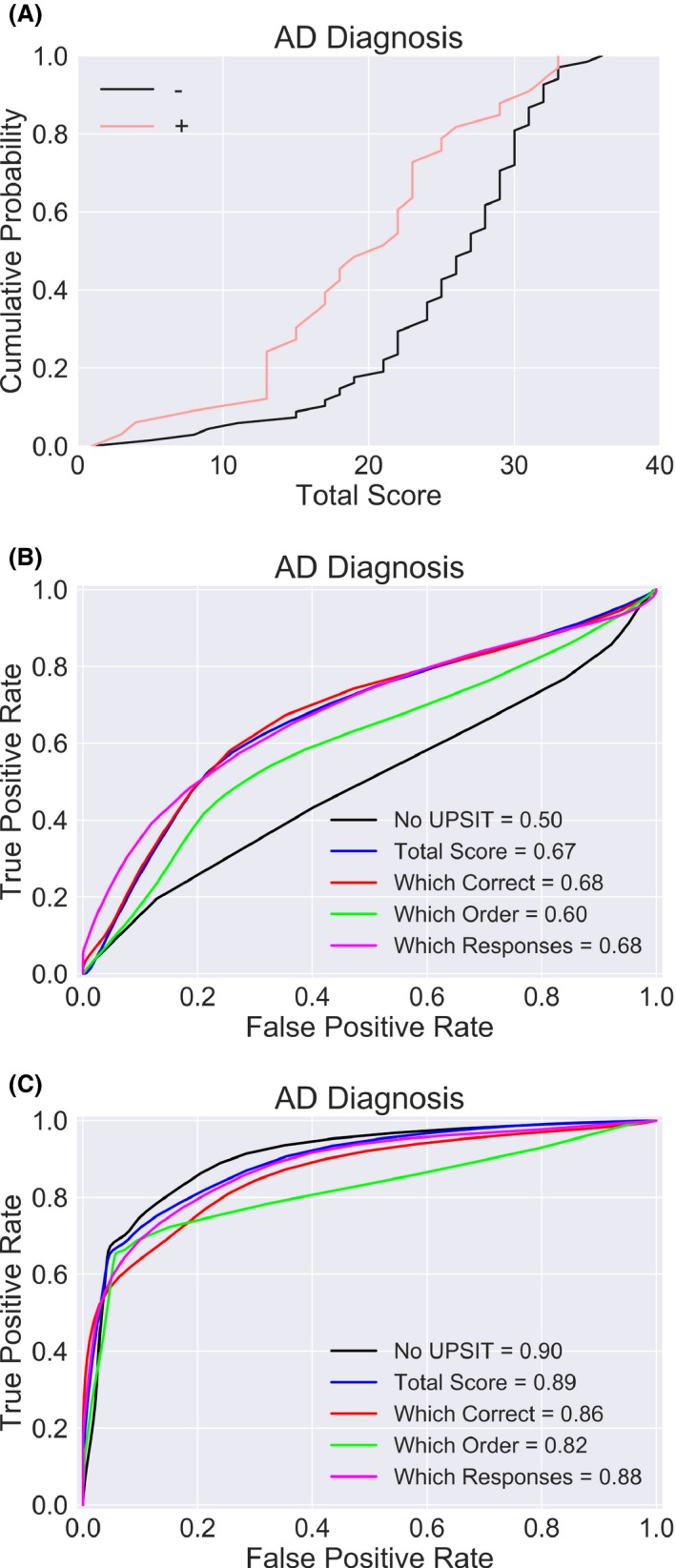

Figure 2.

AD diagnosis is not improved by using the UPSIT. (A) Subjects with AD perform more poorly on the UPSIT than controls. (B) Adding the UPSIT still results in weak classification performance. (C) Adding the MMSE substantially improves classification performance.

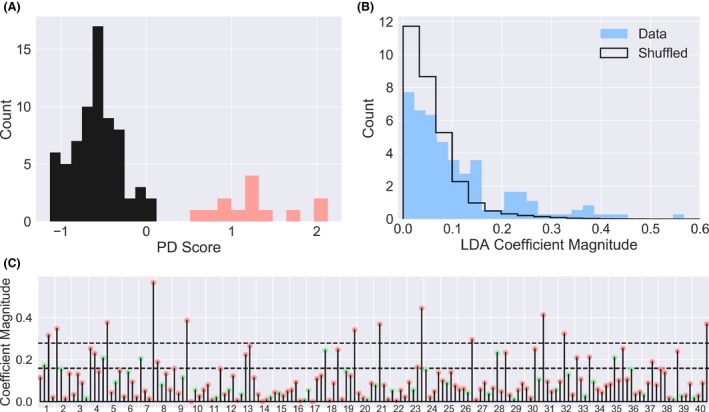

Figure 3.

Specific incorrect responses inform PD diagnosis. (A) A classifier fit to the entire nondemented subject sample and mapped onto the fit's first principal dimension distinguishes PD (red) from control (black) subjects. (B) The coefficients (corresponding to the informativeness of each possible response to each UPSIT question) of the classifier have a distribution with a longer tail than coefficients obtained using the same classifier applied to the same data with diagnostic labels shuffled. (C) All 160 coefficients (4 per UPSIT question) are plotted, with green corresponding to the correct answer to each question, and red to each of the three incorrect answers. The 95th and 99th percentiles of the shuffled distribution are shown as dashed lines.

Results

As the AZSAND is an active program, here we update and confirm our previous results6 using a larger sample size (Fig. 1A). We then constructed a classifier (Material and Methods) and used it with a small subset of clinical data to predict the pathological phenotype subsequently determined by postmortem brain examination.

The classifier was provided with basic information such as the age and gender of the subjects at the time of clinical assessment, as well as a subjective, qualitative self‐assessment of olfactory function (“normal,” “reduced,” or “absent”) (Fig. 1B, black line). It also had access to either the total UPSIT score (Fig. 1B, blue line), the correctness of each response (red line), or the response itself (i.e., which multiple choice answer the subject selected, magenta line). All classifiers using UPSIT information were substantially more accurate (P < 0.001, Mann–Whitney U test) than the one using only qualitative olfactory information, but none of the former differed significantly from each other. However, this initial analysis included subjects with pathologically confirmed comorbidities such as AD or other dementing disorders, which are very likely to reduce UPSIT performance for reasons unrelated to olfactory dysfunction. After removing these subjects from the analysis, leaving a “pure” PD and non‐PD sample (Fig. 1C), we retrained the classifier and found that the specific response pattern on the UPSIT test provided significantly more diagnostic information than the total score alone (Fig. 1D, AUC = 0.89 vs. AUC = 0.76, P < 0.01). At one cutoff, the classifier using the itemized response data approached 80% sensitivity with 80% specificity.
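The Mann–Whitney connection behind these AUC comparisons can be made concrete: the AUC equals the probability that a randomly chosen positive subject receives a higher classifier score than a randomly chosen negative one. Below is a minimal standard‐library sketch, assuming the Hanley–McNeil (1982) standard‐error approximation and, for simplicity, treating the two AUCs as independent; the exact test in the authors' code may differ (for instance, by accounting for the correlation between curves computed on the same subjects). The function names are hypothetical.

```python
import math


def auc_mann_whitney(pos_scores, neg_scores):
    """AUC as the Mann-Whitney probability that a random positive outranks a
    random negative (ties count one half)."""
    wins = 0.0
    for p in pos_scores:
        for q in neg_scores:
            wins += 1.0 if p > q else 0.5 if p == q else 0.0
    return wins / (len(pos_scores) * len(neg_scores))


def hanley_mcneil_se(auc, n_pos, n_neg):
    """Standard error of an AUC using the Hanley-McNeil (1982) approximation."""
    q1 = auc / (2 - auc)
    q2 = 2 * auc ** 2 / (1 + auc)
    var = (auc * (1 - auc) + (n_pos - 1) * (q1 - auc ** 2)
           + (n_neg - 1) * (q2 - auc ** 2)) / (n_pos * n_neg)
    return math.sqrt(var)


def compare_aucs(auc1, auc2, n_pos, n_neg):
    """Two-sided z-test for a difference between two AUCs, treating them as
    independent. Curves from the same subjects are usually positively
    correlated, so ignoring that correlation makes this test conservative."""
    se1 = hanley_mcneil_se(auc1, n_pos, n_neg)
    se2 = hanley_mcneil_se(auc2, n_pos, n_neg)
    z = (auc1 - auc2) / math.sqrt(se1 ** 2 + se2 ** 2)
    return z, math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value


toy_auc = auc_mann_whitney([0.9, 0.8], [0.1, 0.85])  # 3 of 4 pairs ranked correctly: 0.75
```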

There were no significant differences in the performance of the best classifier between male and female subjects (AUC = 0.89 ± 0.05 vs. 0.89 ± 0.06, P > 0.5). Classification was slightly more accurate for subjects younger than the median age than for older subjects (AUC = 0.91 ± 0.05 vs. 0.85 ± 0.06, P = 0.06), but this difference was not significant. We also considered the possibility that the value of the itemized response data might be driven by a preference for responses in a certain position in the list of possible answers; for example, certain subjects might always choose multiple choice answer “d.” However, a classifier exploiting this possibility instead of the full response pattern actually performed worse (AUC = 0.68) than one using the total score alone (AUC = 0.76). Consequently, a simple order effect cannot explain our results.
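The position‐preference control can be sketched as a feature transformation that keeps only how often each answer position was chosen and discards question identity; these five proportions would then replace the 160 response features in the same classifier. The integer coding (0 = no response, 1 to 4 = a to d) and the function name are assumptions for illustration:

```python
import numpy as np


def position_features(responses):
    """Collapse each subject's 40 answers into the fraction of questions on which
    each position was chosen, ignoring which question it was.

    responses: (n_subjects, 40) integer codes, 0 = no response, 1-4 = a-d.
    Returns an (n_subjects, 5) array of [none, a, b, c, d] proportions.
    """
    responses = np.asarray(responses)
    counts = np.stack([(responses == code).sum(axis=1) for code in range(5)], axis=1)
    return counts / responses.shape[1]


# A subject who always picks "d", and one who cycles through a-d.
demo = position_features([[4] * 40, [1, 2, 3, 4] * 10])
```

Because these features cannot tell questions apart, any diagnostic signal they carry must come from a response‐position bias rather than from which odors were misidentified.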

PD might specifically impair olfactory performance by damaging brain areas involved in the early stages of olfactory processing, whereas AD might impair olfaction through a more general mechanism involving higher order brain regions. Consequently, we expected that although AD would reduce UPSIT performance for cognitive reasons (Fig. 2A), the test itself would be unlikely to provide especially useful diagnostic information. Consistent with this hypothesis, using a “pure” AD sample (i.e., with no other significant confounding pathologies), we found that no variant of the classifier performed well (Fig. 2B). To confirm that these subpopulations were nonetheless distinguishable in principle, we added the total score from the Mini‐Mental State Examination (MMSE) to the classifier. This yielded classification performance for AD similar to that obtained for PD using the UPSIT response pattern alone (Fig. 2C), and largely obviated the value of the UPSIT in AD diagnosis.

The motor symptoms that initially suggest the possibility of PD can also be produced by other movement disorders, such as progressive supranuclear palsy (PSP). This can make accurate diagnosis challenging, especially in the early stages of disease.8 Since PSP is not known for specific olfactory pathology, this suggests that the UPSIT test might be used for differential diagnosis of PD versus PSP, as demonstrated previously.16 We offer preliminary support for the possibility that the specific pattern of responses on the test can be used in a similar way to distinguish PD from PSP (AUC = 0.91); however, sample size for this particular comparison is limited in our dataset and so this result should be considered speculative.

Since the specific pattern of responses appeared to be more informative than the total score, we asked whether some questions and/or responses might be more informative than others, which might suggest faster and more efficient diagnostics that rely only on those. We fit the classifier to the entire dataset of nondemented subjects, and then projected the data back onto the first principal dimension of the resulting fit. This allowed us to visualize a “score” for each subject associated with a likelihood of PD (Fig. 3A). Because this score is fit to the entire dataset, it overstates how cleanly PD can be distinguished from non‐PD in practice, but it nonetheless represents the best fit to the data. We then examined the coefficients associated with each possible UPSIT question response (40 questions × 4 responses per question = 160 coefficients) to see whether some were especially informative. To define a threshold for “informative,” we also shuffled the diagnostic labels and recomputed the same coefficients. Since classification after shuffling amounts to fitting statistical noise, the distribution of coefficients so obtained represents a null hypothesis that we can use to assess significance. The true distribution had a longer tail than the one obtained via shuffling (Fig. 3B), suggesting that some of the largest coefficients were meaningfully informative. We then plotted these coefficients and identified those that exceeded the 99th percentile of the null distribution. While only 1.6 coefficients (1% of 160) would be expected to exceed this threshold by chance, we observed 12 (Fig. 3C). These coefficients correspond to particular “wrong” answers on individual UPSIT questions, specifically questions 1, 2, 5, 7, 9, 19, 21, 23, 26, 31, 32, and 40. They reflect not getting the answer wrong per se, but choosing a specific wrong answer, as detailed in Table 2. Eleven of these 12 remained significant at P < 0.05 after controlling for the false discovery rate.
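The shuffle test and the false discovery rate step can be sketched as below. This assumes one‐sided (upper‐tail) empirical p‐values, pooling coefficients from all 100 shuffles into a single null sample, and the Benjamini–Hochberg procedure as the FDR method; the text does not specify these details, and the function names and synthetic data are hypothetical.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis


def coefficient_null(X, y, n_shuffles=100, seed=0):
    """Fit LDA to the true labels and to label-shuffled copies; return the true
    coefficients and all shuffled coefficients pooled into one null sample."""
    rng = np.random.default_rng(seed)
    true_coef = LinearDiscriminantAnalysis().fit(X, y).coef_.ravel()
    null = [LinearDiscriminantAnalysis().fit(X, rng.permutation(y)).coef_.ravel()
            for _ in range(n_shuffles)]
    return true_coef, np.concatenate(null)


def empirical_pvalues(true_coef, null):
    """One-sided (upper-tail) permutation p-value for each coefficient."""
    exceed = (null[None, :] >= true_coef[:, None]).sum(axis=1)
    return (1 + exceed) / (1 + null.size)


def benjamini_hochberg(pvals, alpha=0.05):
    """Boolean mask of p-values that survive Benjamini-Hochberg FDR control."""
    p = np.asarray(pvals)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= alpha * np.arange(1, m + 1) / m
    k = np.nonzero(below)[0].max() + 1 if below.any() else 0
    keep = np.zeros(m, dtype=bool)
    keep[order[:k]] = True
    return keep


# Toy usage (synthetic data): only feature 0 carries signal.
rng = np.random.default_rng(1)
X = rng.integers(0, 2, size=(200, 10)).astype(float)
y = (X[:, 0] + 0.3 * rng.standard_normal(200) > 0.5).astype(int)
true_coef, null = coefficient_null(X, y)
threshold = np.percentile(null, 99)
n_exceeding = int((true_coef > threshold).sum())
expected_by_chance = 0.01 * true_coef.size  # with 160 coefficients: 1.6
significant = benjamini_hochberg(empirical_pvalues(true_coef, null))
```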

Table 2.

The 12 UPSIT question/response pairs with significant diagnostic power, showing the correct odor and the incorrect response that distinguishes PD from controls

| Question | Correct odor | Incorrect response | PD (proportion) | Control (proportion) |
|---|---|---|---|---|
| 1 | Pizza | Peanuts | 0.22 | 0.07 |
| 2 | Bubble gum | Dill pickle | 0.22 | 0.06 |
| 5 | Motor oil | Grass | 0.43 | 0.14 |
| 7 | Banana | Motor oil | 0.48 | 0.07 |
| 9 | Leather | Apple | 0.26 | 0.04 |
| 19 | Chocolate | Black pepper | 0.35 | 0.04 |
| 21 | Lilac | Chili | 0.39 | 0.06 |
| 23 | Peach | Pizza | 0.13 | 0.01 |
| 26 | Pineapple | Onion | 0.30 | 0.06 |
| 31 | Paint thinner | Watermelon | 0.22 | 0.03 |
| 32 | Grass | Gingerbread | 0.39 | 0.17 |
| 40 | Peanut | Root beer | 0.22 | 0.06 |

For each phenotype the proportion of subjects providing that specific incorrect response is shown. UPSIT, University of Pennsylvania Smell Identification Test; PD, Parkinson's disease.
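The proportions in Table 2 are within‐group frequencies. A minimal pandas sketch, assuming a hypothetical wide table with one row per subject, a `diagnosis` column, and one column per question holding the chosen odor name (the column names and toy rows are invented):

```python
import pandas as pd


def response_proportions(df, question, response, group_col="diagnosis"):
    """Fraction of subjects in each diagnostic group who chose `response`
    on `question` (one row per subject, one column per question)."""
    chose = df[question] == response
    return chose.groupby(df[group_col]).mean()


# Hypothetical toy data for question 7 (correct answer: banana).
toy = pd.DataFrame({
    "diagnosis": ["PD", "PD", "CTRL", "CTRL", "CTRL"],
    "Q7": ["motor oil", "banana", "banana", "banana", "motor oil"],
})
props = response_proportions(toy, "Q7", "motor oil")
```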

Discussion

Olfactory dysfunction is a hallmark of some neurodegenerative diseases, including PD. Previous studies have reported that poor performance on the UPSIT is correlated with PD, but it was unclear whether this test had enough predictive power to be of clinical value. Part of this uncertainty originates from the use of a purely clinical diagnosis of PD as the gold standard, which may be inaccurate, especially in the early stages of the disease.8 Here we overcame this issue by using postmortem pathological assessment of subjects as the gold standard, made possible by the ongoing AZSAND and BBDP.14

The UPSIT is typically scored as the total number of questions answered correctly, which is a reasonable measure of overall olfactory identification ability. However, because a low score might arise from PD, another neurodegenerative disease, a peripheral olfactory disorder, dementia, or normal aging, it may be difficult to use that score to make a specific clinical diagnosis. We hypothesized that the specific pattern of incorrect answers might help to disambiguate these cases, either because PD subjects answer specific questions incorrectly that other subjects do not, or because they answer them incorrectly in specific ways. For example, confusing the odor of lavender with pizza might reflect a different underlying pathology than confusing it with motor oil. We provided support for this hypothesis by showing that a classifier constructed from the specific pattern of responses (and nonresponses) to test questions significantly outperformed (out‐of‐sample) one that used only the total score. In contrast, and as we had hypothesized, we did not observe such improved performance when the same method was applied to the diagnosis of AD in our sample, presumably because smell deficits in AD do not necessarily reflect specific defects of the olfactory system.

What specific features of the UPSIT response pattern are useful for PD diagnosis? Can these be exploited to construct a more parsimonious test that collects the most diagnostically relevant information about olfactory dysfunction more quickly? We found that 12 incorrect responses (one for each of 12 questions) were significantly predictive of PD, whereas fewer than 2 were expected by chance. Although we identified the specific incorrect responses to those questions that were most informative, these responses may not reflect such a detailed perceptual deficit; for example, the particular incorrect responses observed may have resulted from subjects sharing similar guessing strategies when they could not identify the odor. Consequently, it remains possible that what matters is not the specific responses but simply which questions were answered incorrectly, regardless of how they were answered. Indeed, the classifier that used only the correctness of the answers performed nearly as well as the one that used the detailed response pattern (Fig. 1D). Classification performance would likely still decrease if only these 12 responses were considered, because even responses with individually nonsignificant effects are likely to provide valuable information in aggregate. However, in clinical settings where time or fatigue is a concern, an abbreviated version of the test focusing on those 12 questions might be a reasonable or even superior alternative to the B‐SIT test otherwise used in that scenario,12 at least for the diagnosis of PD. This also suggests that differential diagnosis might be improved by an adaptive version of the UPSIT, in which questions are presented out of order and each subsequent question is chosen in part on the basis of the subject's responses to previous questions. This would maximize the amount of useful diagnostic information collected in a given amount of time in the clinic, and would make partially completed tests more interpretable. Naturally, the diagnostic power of any biomarker is best understood in the context of what other, potentially more accurate or less expensive, biomarkers already provide. It remains to be seen whether patterns of dysosmia specific to and diagnostic of PD are correlated with or captured by other available biomarkers.
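To illustrate what an adaptive UPSIT might look like, here is a minimal sketch that picks each next question to maximize the expected reduction in uncertainty about PD. It is not a method from this study: it assumes hypothetical per‐question probabilities of a correct answer in PD and in controls, scores only correctness (not the specific wrong answer), and treats answers as conditionally independent given diagnosis.

```python
import math


def entropy(p):
    """Binary entropy, in bits, of a PD probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def update(prior, correct, p_pd, p_ctrl):
    """Bayes update of P(PD) after one answer, treating answers as conditionally
    independent given diagnosis (a naive-Bayes simplification)."""
    like_pd = p_pd if correct else 1 - p_pd
    like_ctrl = p_ctrl if correct else 1 - p_ctrl
    joint_pd = prior * like_pd
    return joint_pd / (joint_pd + (1 - prior) * like_ctrl)


def next_question(prior, remaining, p_correct):
    """Choose the remaining question with the largest expected reduction in
    uncertainty about PD. p_correct maps question -> (P(correct | PD),
    P(correct | control)); these values are hypothetical inputs."""
    def expected_gain(q):
        p_pd, p_ctrl = p_correct[q]
        p_right = prior * p_pd + (1 - prior) * p_ctrl
        after = (p_right * entropy(update(prior, True, p_pd, p_ctrl))
                 + (1 - p_right) * entropy(update(prior, False, p_pd, p_ctrl)))
        return entropy(prior) - after
    return max(remaining, key=expected_gain)


# Hypothetical per-question accuracies: question 2 separates the groups, question 1 does not.
table = {1: (0.5, 0.5), 2: (0.2, 0.9)}
first = next_question(0.5, [1, 2], table)          # -> 2
posterior = update(0.5, False, *table[first])      # a wrong answer raises P(PD)
```

Extending the likelihoods from correct/incorrect to the specific response chosen would let such a scheme exploit the response‐level signal in Table 2.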

Because hyposmia is considered a risk factor for PD, it has been used as an enrollment criterion for studies of the development of the disease in otherwise healthy adults.17 Abbreviated questionnaires have struggled to identify preclinical PD18; the results shown here suggest that the predictive power of such questionnaires might be further optimized based on the diagnostic value of specific questions. However, this hope is predicated on the assumption that clinical and preclinical PD share a similar detailed olfactory phenotype, differing mostly in magnitude rather than having different specific patterns of dysosmia, an idea which has not been thoroughly investigated.

Finally, we strongly recommend that clinicians and researchers retain the specific responses (a, b, c, d, or no response) for each of the 40 UPSIT questions for each subject, and not just the subjects’ total scores. This more refined data will enable future investigations of the diagnostic power of olfactory testing, including on specific subpopulations.
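One way to follow this recommendation is to store the itemized responses as the primary record and derive the total score from them, since the reverse is impossible. The schema below is a hypothetical sketch, and the answer key is a placeholder supplied by the caller rather than the actual UPSIT key:

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

VALID = ("a", "b", "c", "d", None)  # None = no response


@dataclass(frozen=True)
class UpsitRecord:
    """Itemized responses from one UPSIT administration (hypothetical schema)."""
    subject_id: str
    responses: Tuple[Optional[str], ...]  # exactly 40 entries, in question order

    def __post_init__(self):
        if len(self.responses) != 40:
            raise ValueError(f"expected 40 responses, got {len(self.responses)}")
        bad = [r for r in self.responses if r not in VALID]
        if bad:
            raise ValueError(f"invalid responses: {bad}")

    def total_score(self, answer_key: Sequence[str]) -> int:
        """The conventional total score, recomputed from the itemized responses."""
        return sum(r == k for r, k in zip(self.responses, answer_key))


# The item-level record can always reproduce the total score.
record = UpsitRecord("S001", tuple(["a"] * 30 + ["b"] * 9 + [None]))
score = record.total_score(["a"] * 40)   # placeholder answer key -> 30
```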

Author Contributions

RCG, CHA, and JGH conceived and designed the study. CHA, HAS, EDD, SHM, JNC, MNS, CB, TGB, and RCG acquired and analyzed the data. RCG, CHA, JGH, HAS, EDD, SHM, JNC, BHS, MNS, CB, and TGB drafted the manuscript and/or figures. We have no potential conflicts of interest to report.

Conflict of Interest

None declared.

Acknowledgments

The Arizona Study of Aging and Neurodegenerative Disorders is supported by the National Institute of Neurological Disorders and Stroke (U24 NS072026 National Brain and Tissue Resource for Parkinson's Disease and Related Disorders), the National Institute on Aging (P30 AG19610 Arizona Alzheimer's Disease Core Center), the Arizona Department of Health Services (contract 211002, Arizona Alzheimer's Research Center), the Arizona Biomedical Research Commission (contracts 4001, 0011, 05‐901, and 1001 to the Arizona Parkinson's Disease Consortium), the Michael J. Fox Foundation for Parkinson's Research, the Mayo Clinic Foundation, and the Sun Health Foundation. We also thank the Arizona Alzheimer's Consortium, the National Institute on Aging (AG002132 Core C), the CurePSP Foundation, the Alzheimer's Association, the Henry M. Jackson Foundation (HU0001‐15‐2‐0020), Daiichi Sankyo Co., Ltd., the National Institute of Mental Health (R01MH106674), and the National Institute of Biomedical Imaging and Bioengineering (R01EB021711) for funding, the Banner Brain and Body Donation Program for making the data available, and the many individuals who contributed their brains to make this study possible.

Funding Statement

This work was funded by National Institute of Neurological Disorders and Stroke grant U24 NS072026; National Institute on Aging grants P30 AG19610 and AG002132; Arizona Department of Health Services grant 211002; Arizona Biomedical Research Commission grants 4001, 0011, 05‐901, and 1001; the Michael J. Fox Foundation for Parkinson's Research; the Mayo Clinic Foundation; the Sun Health Foundation; the Arizona Alzheimer's Consortium; the CurePSP Foundation; the Alzheimer's Association; Henry M. Jackson Foundation grant HU0001‐15‐2‐0020; Daiichi Sankyo Co., Ltd.; National Institute of Mental Health grant R01MH106674; and National Institute of Biomedical Imaging and Bioengineering grant R01EB021711.

References

- 1. Ross GW, Petrovitch H, Abbott RD, et al. Association of olfactory dysfunction with risk for future Parkinson's disease. Ann Neurol 2008;63:167–173.

- 2. Braak H, Del Tredici K, Rüb U, et al. Staging of brain pathology related to sporadic Parkinson's disease. Neurobiol Aging 2003;24:197–211.

- 3. Berg D, Postuma RB, Adler CH, et al. MDS research criteria for prodromal Parkinson's disease. Mov Disord 2015;30:1600–1611.

- 4. Saito Y, Ruberu N, Sawabe M, et al. Lewy body‐related alpha‐synucleinopathy in aging. J Neuropathol Exp Neurol 2004;63:742–749.

- 5. Beach TG, White CL, Hladik CL, et al.; Arizona Parkinson's Disease Consortium. Olfactory bulb alpha‐synucleinopathy has high specificity and sensitivity for Lewy body disorders. Acta Neuropathol 2009;117:169–174.

- 6. Driver‐Dunckley E, Adler C, Hentz J, et al.; Arizona Parkinson Disease Consortium. Olfactory dysfunction in incidental Lewy body disease and Parkinson's disease. Parkinsonism Relat Disord 2014;20:1260–1262.

- 7. Beach T, Adler C, Lue L, et al.; Arizona Parkinson's Disease Consortium. Unified staging system for Lewy body disorders: correlation with nigrostriatal degeneration, cognitive impairment and motor dysfunction. Acta Neuropathol 2009;117:613–634.

- 8. Adler C, Beach T, Hentz J, et al. Low clinical diagnostic accuracy of early vs advanced Parkinson disease: clinicopathologic study. Neurology 2014;83:406–412.

- 9. Doty R. Olfaction in Parkinson's disease and related disorders. Neurobiol Dis 2012;46:527–552.

- 10. Shill H, Hentz J, Caviness J, et al. Unawareness of hyposmia in elderly people with and without Parkinson's disease. Mov Disord Clin Pract 2016;3:43–47.

- 11. Rodríguez‐Violante M, Gonzalez‐Latapi P, Camacho‐Ordonez A, et al. Low specificity and sensitivity of smell identification testing for the diagnosis of Parkinson's disease. Arq Neuropsiquiatr 2014;72:33–37.

- 12. Doty RL, Marcus A, Lee WW. Development of the 12‐item Cross‐Cultural Smell Identification Test (CC‐SIT). Laryngoscope 1996;106:353–356.

- 13. Tabert M, Liu X, Doty R, et al. A 10‐item smell identification scale related to risk for Alzheimer's disease. Ann Neurol 2005;58:155–160.

- 14. Beach TG, Adler CH, Sue LI, et al. Arizona Study of Aging and Neurodegenerative Disorders and Brain and Body Donation Program. Neuropathology 2015;35:354–389.

- 15. Hanley JA, McNeil BJ. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 1982;143:29–36.

- 16. Doty R, Golbe LI, McKeown DA, et al. Olfactory testing differentiates between progressive supranuclear palsy and idiopathic Parkinson's disease. Neurology 1993;43:962–965.

- 17. Siderowf A, Jennings D, Eberly S, et al.; PARS Investigators. Impaired olfaction and other prodromal features in the Parkinson At‐Risk Syndrome Study. Mov Disord 2012;27:406–412.

- 18. Dahodwala N, Kubersky L, Siderowf A; PARS Investigators. Can a screening questionnaire accurately identify mild Parkinsonian signs? Neuroepidemiology 2012;39:171–175.