Abstract

This study evaluated the impact of a patient-centered medical home (PCMH) pilot on utilization, costs, and quality and assessed variation in PCMH components. Data included the New Hampshire Comprehensive Healthcare Information System and Medical Home Index (MHI) scores for 9 pilot sites. A quasi-experimental, difference-in-difference model with propensity score-matched comparison group was employed. MHI scores were collected in late 2011. There were no statistically significant findings for utilization, cost, or quality in the expected direction. MHI scores suggest variation in type and level of implemented features. Understanding site-specific PCMH components and targeted change enacted by PCMHs is critical for future evaluation.

Keywords: commercial insurance, delivery system reform, patient-centered medical home, primary care

Health care organizations are increasingly looking for ways to address rising health care costs, inconsistent quality, and fragmentation in the US health care system. The predominant payment system, fee-for-service, rewards volume over value (Aaron & Ginsburg, 2009; Goroll & Schoenbaum, 2012; Miller, 2009), does not incentivize coordination between providers or care settings (Miller, 2009), and does not invest in health information technology, chronic condition management, or broader practice and systemwide improvements (Abrams et al., 2011; Barr, 2008; Ginsburg et al., 2008). As the number of people with multiple chronic conditions grows, the task of providing and coordinating care becomes increasingly complex (Matlow et al., 2006). Communication errors are common, information sharing is insufficient, and handoffs between individual physicians, hospitals, and other health care providers are poorly coordinated (Institute of Medicine, 2001; Matlow et al., 2006).

The patient-centered medical home (PCMH) model aims to improve quality and reduce costs by treating the patient as a whole person, improving care coordination, and providing advanced team-based primary care and population health management supported by health information technology. Evidence on the impact of PCMH models is mixed (Christensen et al., 2013; Friedberg et al., 2014; Hoff et al., 2012; Rosenthal et al., 2013). Recent findings from nonintegrated primary care settings on the impact of the PCMH on utilization, costs, and quality show moderate improvements. A study by Friedberg et al. (2014) evaluated the impact of the Southeast Pennsylvania Chronic Care Initiative, a multipayer PCMH pilot guided by NCQA recognition. The authors reported improvement in one diabetes process measure (nephropathy monitoring) for PCMH sites relative to non-PCMH sites, but no statistically significant improvements in utilization or cost measures by PCMH sites relative to non-PCMH sites over 3 years (Friedberg et al., 2014). A study of the Rhode Island Chronic Care Sustainability Initiative showed lower rates of ambulatory care–sensitive emergency department (ED) visits for PCMH sites relative to non-PCMH sites but no other statistically significant improvements in quality measures or remaining utilization and cost measures (Rosenthal et al., 2013).

This study evaluates the NH Citizens Health Initiative Multi-Stakeholder Medical Home Pilot, a multipayer demonstration of the PCMH model in 9 primary care practices in New Hampshire, to better understand whether the PCMH model can improve quality and reduce costs and to explore variation in the level of implemented components. This study contributes to our understanding of whether transformation to a PCMH as defined by NCQA recognition yields positive outcomes compared with practices without comparable PCMH recognition. This study hypothesized that PCMH-recognized pilot practices would show better utilization (eg, fewer hospitalizations and less ED use), lower total costs, and improved quality relative to non-PCMH sites.

To participate in the pilot, all sites were expected to obtain level 1 recognition by the 2008 NCQA Physician Practice Connections–Patient-Centered Medical Home standards by July 1, 2009, the start date for payments that included a fixed per member per month (PMPM) amount (on average $4 PMPM), in addition to existing fee-for-service payments (New Hampshire Citizens Health Initiative, 2008). The 4 major commercial payers in New Hampshire participated through PMPM payments to pilot sites and participation in the steering committee. Sites participated in regular conference calls facilitated by a convener but designed their specific PCMH models independently.

METHODS

The primary purpose of this study was to explore the impact of this PCMH pilot on utilization, costs, and quality using formal NCQA recognition as the indicator of PCMH status. All the pilot sites in this study achieved the same recognition level (ie, the highest level, level 3). Comparison sites had no formal NCQA PCMH recognition. A secondary part of this study was to better understand variation that might exist among similarly recognized PCMH practices. While qualitative findings illustrating some of this variation have been published elsewhere (Flieger, 2016), data from the Medical Home Index (MHI) aim to describe potential variation quantitatively among pilot practices.

Research questions and hypotheses

RQ1: Does formal recognition as a PCMH and additional PMPM payment improve utilization, costs, and quality outcomes?

H1: Pilot sites will exhibit better utilization (eg, lower hospitalizations and ED use), lower total costs, and better quality related to preventive and chronic care measures after the start of PMPM payments, relative to nonpilot sites.

H2: Greater improvement in utilization, costs, and quality will be observed for the population with 2 or more chronic conditions for pilot sites, relative to non-pilot sites.

RQ2: How do PCMH models vary across the pilot sites?

H1: Levels of implementation of PCMH components will vary by site, as measured through the MHI.

Study design

This study uses a quasi-experimental design with a propensity score–matched, nonequivalent comparison group identified at the practice level. Nine diverse practices across New Hampshire participated in the pilot including a mix of federally qualified health centers, look-alike community health centers, health system–affiliated practices, multispecialty practices, and nurse practitioner–owned and operated practices. Comparison practices were identified as practices in New Hampshire that were not NCQA recognized as PCMHs during the study period, the majority of which had at least 2 providers. Providers were attributed to practices based on the best available information on practice websites for provider listings and associated provider identifiers in claims data.

Data sources and analysis tools

New Hampshire Comprehensive Health Care Information System

The primary data source for this analysis was the New Hampshire Comprehensive Health Care Information System, a multipayer health care claims database that includes claims data from commercial payers in New Hampshire. The data set was established by the state of New Hampshire to make health care data more available to insurers, employers, providers, purchasers, and state agencies for the purposes of reviewing utilization, expenditures, and performance (OnPoint Health Data, 2011). Because these data do not include Medicare and Medicaid recipients, the findings presented here are generalizable to a relatively healthier working age population. This study period is July 2007 through June 2011, including 2 years of preintervention period data and 2 years of postintervention period data, with July 2009 as the start of the postperiod.

Medical Home Index (MHI)

To assess further variation in the PCMH models implemented, each practice completed an MHI. The 2008 NCQA recognition criteria offer one way to score a practice’s achievement on 9 standards related to the PCMH. Since all sites achieved level 3, additional data were collected to explore further variation in “medical homeness” across pilot sites. The MHI in adult primary care (full version), designed by the Center for Medical Home Improvement (CMHI), is a validated self-assessment and classification tool to measure and quantify the level of medical homeness of a primary care practice (CMHI, 2008). The MHI has been cited as one of the tools for practice facilitators to use to assess a practice’s medical homeness (Geonnotti et al., 2015). The full version used here has 25 questions spread across 6 domains: organizational capacity, chronic condition management, care coordination, community outreach, data management, and quality improvement/change. Practice representatives must identify at what level (level 1 through level 4) the practice “currently provides care for patients with [a] chronic health condition.” The practice representatives then indicate whether practice performance within that level is “partial” (some activity within that level) or “complete” (all activity within that level). This yields 8 possible points within each question (CMHI, 2008). Total MHI scores range from 25 to 200 and are created by the sum of scores across the 6 domains specified earlier. It takes about 20 minutes to complete the tool, and input from administrative and clinical staff is needed. Each practice completed the MHI at the end of the extended pilot period as part of a research site visit by the author. A site-level score for medical homeness was constructed. The Cronbach α, factor analysis, and summary statistics are presented.
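The scoring rule described above can be illustrated with a minimal sketch. The point mapping (level 1 partial = 1 through level 4 complete = 8) is an assumption inferred from the statement that each question yields 8 possible points and that totals range from 25 to 200; the function names are hypothetical and are not part of the CMHI tool.

```python
from typing import Iterable, Tuple

N_QUESTIONS = 25  # full adult version of the MHI, per the text


def question_points(level: int, complete: bool) -> int:
    """Map one response to 1..8 points (assumed mapping, see lead-in)."""
    if level not in (1, 2, 3, 4):
        raise ValueError("level must be 1, 2, 3, or 4")
    # "Partial" at a level scores one point below "complete" at that level.
    return 2 * level - (0 if complete else 1)


def total_mhi_score(responses: Iterable[Tuple[int, bool]]) -> int:
    """Sum question points over all 25 (level, complete) responses."""
    responses = list(responses)
    if len(responses) != N_QUESTIONS:
        raise ValueError("expected 25 responses")
    return sum(question_points(level, complete) for level, complete in responses)
```

Under this assumed mapping, a practice answering "level 1, partial" on every question scores 25 and one answering "level 4, complete" on every question scores 200, which matches the stated range.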

Utilization, cost, and quality measures

This study draws on the recommendations of the PCMH Evaluators’ Collaborative for measure selection and specification in the areas of utilization, cost, and quality (Rosenthal et al., 2012) and is consistent with the methods specified in a recent PCMH meta-analysis. Claims-based utilization and cost measures are reported per 1000 member-months and included acute hospital admissions; ambulatory care–sensitive hospital admissions, as defined by the Agency for Healthcare Research and Quality’s Prevention Quality Indicators, including only the chronic care subset; ED visits; ambulatory care–sensitive ED visits, defined by the NYU ED algorithm (primary care treatable, and emergent-preventable/avoidable); primary care visits; specialty care visits; and total costs (excluding pharmacy). Claims-based quality measures were defined according to 2012 HEDIS (Healthcare Effectiveness Data and Information Set) specifications—some with modified look-back periods based on available data—and selected on the basis of the areas emphasized by the pilot sites for quality improvement activities. These quality measures included 4 diabetes process measures: HbA1c (glycated hemoglobin A1c) testing, LDL (low-density lipoprotein) testing, nephropathy screening and treatment, and dilated retinal eye examination; and breast cancer screening, colon cancer screening, and cervical cancer screening. Utilization, cost, and quality measures were analyzed for the entire population as well as a subpopulation of patients with 2 or more chronic conditions identified using risk adjustment software.
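As a small illustration of the reporting unit, a rate per 1000 member-months can be computed as in the sketch below. The function name and the example counts are hypothetical and are not drawn from the study data.

```python
def rate_per_1000_member_months(events: float, member_months: float) -> float:
    """Return events per 1000 member-months of enrollment."""
    if member_months <= 0:
        raise ValueError("member_months must be positive")
    return 1000.0 * events / member_months


# Hypothetical example: 334 ED visits observed over 10,000 member-months.
example_rate = rate_per_1000_member_months(334, 10_000)
```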

Attribution

Each patient was assigned to a primary care practice retrospectively based on utilization. To be attributed to a practice, patients needed to be enrolled in health insurance for at least 3 of the 6 months in the time period analyzed. Patients were attributed to a practice based on where they received the plurality of their primary care, as indicated through Evaluation and Management visits. Patients who switched attribution between pilot and comparison sites throughout the study period were excluded. However, patients who switched between practices within the pilot or comparison groups were reattributed in each 6-month period. Moreover, if patients were initially attributed to a practice but then did not have a visit in a given year, they maintained the same attribution as the year before to capture as much of the care as possible.
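The attribution rules above can be sketched for a single patient and a single 6-month period as follows. This is an illustration only: the function name is hypothetical, the tie-breaking rule (alphabetical by practice identifier) is an assumption because the text does not say how ties were resolved, and the exclusion of patients who switched between pilot and comparison groups is not modeled.

```python
from collections import Counter
from typing import Optional, Sequence


def attribute_patient(
    visit_practices: Sequence[str],
    months_enrolled: int,
    previous: Optional[str] = None,
) -> Optional[str]:
    """Attribute a patient to a practice for one 6-month period.

    visit_practices: practice identifier for each Evaluation and Management visit.
    months_enrolled: months of insurance enrollment in the period (0 to 6).
    previous: attribution carried over from the prior period, if any.
    """
    if months_enrolled < 3:
        return None  # fails the enrollment requirement
    if not visit_practices:
        return previous  # no visits: keep the earlier attribution
    counts = Counter(visit_practices)
    top = max(counts.values())
    # Plurality rule; ties broken alphabetically (an assumption).
    return min(p for p, n in counts.items() if n == top)
```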

Analysis

To assess the impact of the PCMH, a difference-in-differences model was estimated using a pre-post design with controls for 6-month increments. Quality measures were analyzed at the year level. To account for clustering of patients at practices, a random effect for site was included. On the basis of consistent sensitivity analyses, an ordinary least-squares method was reported for utilization and cost analyses for ease of interpretation.
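One generic way to write a difference-in-differences specification of this kind is sketched below. The notation is introduced here for illustration and is not taken from the study; the exact functional form the author estimated is not stated in the text.

$$
Y_{ijt} = \beta_0 + \beta_1\,\mathrm{Pilot}_j + \beta_2\,\mathrm{Post}_t + \beta_3\,(\mathrm{Pilot}_j \times \mathrm{Post}_t) + \gamma^{\top} X_{ijt} + u_j + \varepsilon_{ijt}
$$

In this sketch, $i$ indexes patients, $j$ practices, and $t$ time periods; $\mathrm{Pilot}_j$ indicates a pilot practice, $\mathrm{Post}_t$ indicates a period after the start of PMPM payments, $X_{ijt}$ is a vector of covariates, and $u_j$ is a site-level random effect. The coefficient $\beta_3$ is the difference-in-differences estimator. Without covariates it reduces to the change in the pilot group minus the change in the comparison group:

$$
\beta_3 = \left(\bar{Y}_{\text{pilot, post}} - \bar{Y}_{\text{pilot, pre}}\right) - \left(\bar{Y}_{\text{comparison, post}} - \bar{Y}_{\text{comparison, pre}}\right)
$$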

The following variables were included in all models: pilot status, time, difference-in-difference estimator, risk score, age band, sex, and provider number. Risk adjustment was conducted using the Johns Hopkins ACG® System (version 10.0) risk adjustment software and was based on age, gender, and diagnoses in the first year of enrollment for each patient and applied throughout the remainder of the study period. A customized comparison sample was used, derived from propensity score matching using nearest neighbor methodology and the greedy algorithm without replacement. Propensity score matching was conducted at the practice level to recognize the practice-level intervention (Rosenthal et al., 2013) and facilitated more balanced pilot and comparison groups based on t tests before and after matching.
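Greedy nearest-neighbor matching without replacement can be sketched as follows. This is not the author's implementation: the 1:1 matching ratio, the processing order of pilot practices, and the function name are assumptions made for illustration, and the propensity scores are taken as already estimated.

```python
from typing import Dict


def greedy_match(
    pilot_scores: Dict[str, float],
    comparison_scores: Dict[str, float],
) -> Dict[str, str]:
    """Match each pilot practice to its nearest unused comparison practice.

    Both arguments map a practice identifier to an estimated propensity score.
    Pilot practices are processed in insertion order (an assumption); each
    comparison practice can be used at most once (without replacement).
    """
    available = dict(comparison_scores)
    matches: Dict[str, str] = {}
    for pilot_id, score in pilot_scores.items():
        if not available:
            break  # no comparison practices left to match
        # Greedy step: take the closest remaining score, never revisited later.
        best = min(available, key=lambda c: abs(available[c] - score))
        matches[pilot_id] = best
        del available[best]
    return matches
```

Because the algorithm is greedy, the result can depend on the order in which pilot practices are processed; an optimal matching procedure would minimize total distance instead.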

FINDINGS

Utilization and cost

There were no statistically significant findings for the impact of the PCMH pilot on utilization, cost, and quality outcomes in the expected direction. Specifically, there was no difference in ED visits, ambulatory care–sensitive ED visits, acute hospital admissions, ambulatory care–sensitive admissions for the chronic composite, primary care visits, or specialty care visits. The only statistically significant finding indicated that the total costs (excluding pharmacy) were higher for the pilot sites than the comparison sites (Table 1). Similarly, there were no statistically significant results for the subpopulation with 2 or more chronic conditions, including no statistically significant difference for costs.

Table 1.

Utilization and Cost Analysis per 1000 Member Months and DID Estimate

| Measure | Preperiod: Pilot | Preperiod: Comparison | Postperiod: Pilot | Postperiod: Comparison | D-I-D Estimate (SE) |
|---|---|---|---|---|---|
| Primary care visits | 359.48 | 350.55 | 361.44 | 357.39 | −0.0334 (0.037) |
| Specialist visits | 150.98 | 149.73 | 175.72 | 172.36 | 0.0463 (0.032) |
| ACS emergency department visits | 1.90 | 1.69 | 1.94 | 1.65 | 0.0007 (0.0006) |
| Emergency department visits | 33.37 | 31.65 | 34.18 | 32.53 | 0.005 (0.0053) |
| ACS inpatient admissions | 0.25 | 0.21 | 0.31 | 0.30 | 0.0001 (0.0002) |
| Inpatient admissions | 5.42 | 5.07 | 6.38 | 6.08 | 0.0011 (0.0012) |
| Total costs | 173 016.87 | 166 374.24 | 202 556.17 | 182 325.45 | 80.42<sup>a</sup> (31.80) |

Abbreviations: ACS, ambulatory care sensitive; D-I-D, difference-in-difference; SE, standard error.

<sup>a</sup>P < .05.

Quality

There were no statistically significant quality findings for the pilot sites relative to the comparison sites (Table 2). This remained true for the subpopulation with 2 or more chronic conditions. A sensitivity analysis was conducted without adjusting for patient characteristics (eg, age band, sex, and risk score), and the results remained the same.

Table 2.

Quality Analyses, Average Rate per Period and D-I-D Estimate

| Measure | Preperiod: Pilot | Preperiod: Comparison | Postperiod: Pilot | Postperiod: Comparison | D-I-D Estimate (SE)<sup>a</sup> |
|---|---|---|---|---|---|
| Cervical cancer screening<sup>b</sup> | 0.32 | 0.33 | 0.35 | 0.34 | 0.0032 (0.0469) |
| Breast cancer screening | 0.82 | 0.80 | 0.80 | 0.78 | 0.001 (0.013) |
| Colon cancer screening<sup>b</sup> | 0.38 | 0.40 | 0.34 | 0.35 | 0.0069 (0.0147) |
| Dilated eye examinations for patients with diabetes | 0.91 | 0.90 | 0.91 | 0.92 | −0.0192 (0.012) |
| HbA1c testing for patients with diabetes | 0.77 | 0.76 | 0.76 | 0.75 | −0.0054 (0.016) |
| Lipid control in patients with diabetes | 0.61 | 0.57 | 0.59 | 0.54 | −0.0006 (0.0159) |
| Nephropathy screening/treatment for patients with diabetes | 0.60 | 0.57 | 0.59 | 0.54 | 0.0046 (0.0177) |

Abbreviations: D-I-D, difference-in-difference; HbA1c, glycated hemoglobin A1c; SE, standard error.

<sup>a</sup>No D-I-D estimates reached statistical significance at the P < .05 level.

<sup>b</sup>Look-back periods prescribed by HEDIS were shortened to the time frames available in the data.

Sensitivity analyses

Several sensitivity analyses were conducted, including varying attribution models (eg, attributing in the preperiod and following the same group into the postperiod, as well as cross-sectional attribution in both the pre- and postperiods); generalized estimating equations for the utilization analyses to account for the high number of zeros; and matching at the patient level rather than the practice level. None of these sensitivity analyses showed a consistent trend toward better performance by the pilot sites relative to the comparison sites.

Medical Home Index

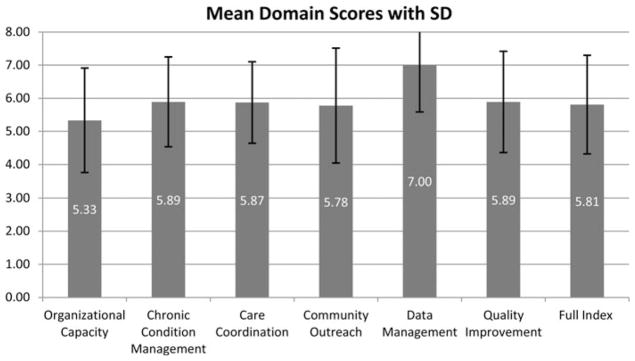

The Cronbach α was 0.76, indicating good reliability and consistency, suggesting that these 6 domains together measure an underlying construct. Factor analysis indicated that 2 factors were retained, with eigenvalues of 3.08 and 1.74, respectively. Factor 1 accounted for 56.41% of the variance, whereas both factors together accounted for 88.24% of the variance, suggesting the MHI may be best treated as 2 separate constructs. The first 4 dimensions that load on factor 1 seem to be more about organizational characteristics and care delivery (MH-Org), whereas the last 2 dimensions focus more on the use of data (MH-Data). Summary statistics for the full index and the 2 factors are presented in Table 3. Overall, the mean score per question (out of 8) in each of these subscores was higher for MH-Data than MH-Org. The Figure shows the mean score by domain along with standard deviations to illustrate the variation in each domain among practices that all achieved level 3 NCQA recognition.
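For readers unfamiliar with the reliability statistic reported above, the standard Cronbach α formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), can be sketched as below. The function name is hypothetical, the input layout (one row of domain scores per practice) is an assumption, and no study data are reproduced here.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(rows: Sequence[Sequence[float]]) -> float:
    """Compute Cronbach's alpha from a respondents-by-items score matrix.

    rows: one sequence per respondent (here, per practice), each holding
    k item scores (here, k domain scores). Sample variances are used.
    """
    if len(rows) < 2:
        raise ValueError("need at least 2 respondents")
    k = len(rows[0])
    if k < 2:
        raise ValueError("need at least 2 items")
    columns = list(zip(*rows))  # transpose to one tuple per item
    item_variance_sum = sum(variance(col) for col in columns)
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)
```

When every item moves in lockstep across respondents, the variance of the totals is as large as it can be relative to the item variances and α equals 1; weakly related items pull α toward 0.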

Table 3.

Medical Home Index Summary Statistics

| Statistic | Medical Home Index (Total) | Medical Homeness-Organization (MH-Org) | Medical Homeness-Data (MH-Data) |
|---|---|---|---|
| Minimum | 96 (out of 200) | 71 (out of 168) | 16 (out of 32) |
| Maximum | 167 (out of 200) | 145 (out of 168) | 30 (out of 32) |
| Mean total | 145.22 (out of 200) | 119.44 (out of 168) | 25.78 (out of 32) |
| SD | 20.99 | 20.27 | 4.35 |
| Mean per question | 5.69 (out of 8) | 5.69 (out of 8) | 6.44 (out of 8) |
| SD | 1.49 | 1.56 | 1.56 |

Abbreviation: SD, standard deviation.

Figure.

Mean Medical Home Index domain scores with standard deviation.

DISCUSSION

There were limited statistically significant findings for the PCMH sites relative to the non-PCMH sites. Moreover, there is clearly variation in the PCMH models implemented in the 9 pilot sites, even at the end of the pilot period, suggesting there is not one single PCMH model. This is consistent with recent findings that suggest that how practices enact features under the umbrella of the PCMH varies significantly; thus, PCMH models are dissimilar both in concept and in implementation (Bitton et al., 2012; Hearld et al., 2013; Hoff et al., 2012). This, together with the nonsignificant findings for utilization and quality, and the higher cost outcomes, may suggest a number of interpretations of these analyses.

First, looking at the presence of a PCMH model (eg, NCQA recognition as an on-off switch) and expecting there to be a comprehensive impact on a variety of patient outcomes might be overly simplistic. More attention should be paid to assessing specific structural features (eg, primary care practices calling patients within 24 hours of hospital discharge) and their impact on specific outcomes (eg, readmission rates) through quantitative modeling. The observed variation in the MHI scores by component supports this idea. A targeted transformation could more clearly focus on improving specific outcomes of interest within either of these domains. Further research is needed to carefully identify what components may be driving the positive outcomes observed in some of the earlier studies conducted in integrated health systems (Gilfillan et al., 2010; Reid et al., 2010), and whether there is a certain package of interventions that can be more successful in some contexts than others. For example, a recent study of PCMH implementation in the Veterans Health Administration examined the link between specific organizational processes of care and patient outcomes and found mixed results (Werner et al., 2014). Consistent with previous recommendations (Bitton et al., 2012), policy makers should pay special attention to the nature of the PCMH model implemented and the variation in the model within the demonstration itself.

Second, two years since the start of payments might be too short a time to see measurable change in these outcomes because many of the practices reported they were still implementing PCMH-related changes at the time of the MHI, despite being able to achieve recognition as a PCMH much earlier. MHI findings reinforce this idea, highlighting the difference between the data management aspects of the model, which were more emphasized in the 2008 NCQA tool (Rittenhouse & Shortell, 2009; O’Malley, Peikes, & Ginsburg, 2008), and the organizational aspects, which scored lower on the MHI. One could hypothesize that it is the organizational transformation that would be expected to have a more robust impact on the outcomes measured here and perhaps take longer to implement. For this reason, longer-term analyses of PCMHs are warranted (Calman et al., 2013).

Third, the comparison sites, as identified here, could share some characteristics with formally recognized PCMH sites, reducing the differences in PCMH components between the pilot and comparison groups. As PCMHs proliferate, it will be even more difficult to find a non-PCMH comparison group based on lack of NCQA recognition, since some practices have adopted PCMH components without formal recognition whereas others have dropped formal recognition after PCMH transformation. This reiterates the importance of assessing the presence of PCMH characteristics rather than PCMH recognition (Yes/No).

Fourth, given that the PCMH is targeting outcomes that are generally found among sicker patients (eg, ED visits, hospitalizations), it might be harder to detect an effect using the population studied here. While these results did not show different findings between the full population and those with 2 or more chronic conditions, more work is needed to understand whether the PCMH model is best suited for all patients served by primary care or whether it is more effective when targeted toward higher need patients. Moreover, since this study was completed with commercially insured beneficiaries only, to reflect the participating payers in this pilot, it is possible that an analysis of Medicare or Medicaid participants receiving care at these pilot practices might demonstrate different results. This issue is further impacted by the attribution approach used here, which draws all users of primary care into the study sample. Future studies that are sufficiently powered should explore the relative impact of the PCMH on patients who are more frequent visitors of primary care compared with relatively healthy, less frequent visitors to further isolate different aspects of care management within the PCMH model.

Fifth, the lack of a practice facilitator to support each practice’s transformation may be a contributing factor to the variation in PCMH models observed here and may also be a missed opportunity for shared learning. There is evidence that practice facilitators play a critical role in primary care transformation efforts (Taylor et al., 2013). Practices looking to become PCMHs should explore the role of practice facilitation in the transformation process (Geonnotti et al., 2015) and how more targeted transformation efforts, with the help of a facilitator, could focus on specific utilization, cost, and quality outcomes of interest.

The variation we observed among practices with the highest level of recognition suggests that the 2008 NCQA recognition guidelines leave considerable room for implementation variation. While many revisions have been made to the NCQA guidelines since the start of this pilot, further work is needed to refine recognition tools to more precisely reflect care delivery. In our view, however, no fixed criteria will ever adequately distinguish robust PCMHs from meager ones. It is inevitable that such criteria will be dominated by structural measures (eg, referral tracking), whereas more subjective concepts such as team-based culture or care coordination roles will be incompletely captured. As our understanding of key components of the PCMH model becomes more refined, perhaps, these tools and others can start to reweight the criteria that go into assessing the status of a PCMH to create a spectrum-based understanding and give higher weight to those aspects that are shown to have more of an impact on specific utilization, cost, and quality outcomes. While NCQA and other PCMH recognition bodies should continue to refine recognition criteria, we need to continue to go beyond the easily measured structures and processes and capture the management approaches that help practices effectively adopt a fundamentally new orientation to patient-centered care.

Several limitations to this study should be noted. As is the nature of these types of quasi-experimental designs, it is not fully possible to test for any differences between the pilot practices and the comparison sites. While the matching process indicated that these groups were more similar after matching, it is still not possible to fully eliminate any potential selection bias or to measure whether there are other reasons that these two groups would be different.

The attributed patient populations are based on retrospective primary care use, rather than patient designation. While this is a reasonable and common approach, given the lack of available data on patients’ choice of primary care practice, it does effectively limit the population studied to users of primary care. Thus, patients who do not seek primary care are not attributed to any practice even if they use other types of care (eg, ED) or would, if asked, consider themselves a member of a given primary care practice. In this way, to the extent that pilot practices are making more of an effort to get patients into the office for primary care visits, this could have the effect of penalizing them (from an evaluation perspective) when patients do seek care in the ED or are hospitalized, since those visits would be more likely to be associated with that PCMH site, relative to the comparison practices that may not have reached out to those patients for their primary care visits. While several sensitivity analyses have been conducted to assess attribution approaches showing consistently nonsignificant results, the limitations of retrospective attribution remain.

In addition, the attribution of providers to practices is imperfect. Specifically, providers are attributed on the basis of the data available on provider websites and only at one point in time, namely, after the end of the pilot. Thus, this does not account for any movement in providers over time and may be biased to more accurately reflect the latter part of the study period. More work is needed to develop better data systems to link providers to practices to improve evaluation research of practice-level interventions.

Unfortunately, it was not possible to use the MHI in robust analyses comparing pilot sites with comparison sites since only the pilot sites completed this tool. While analyses were conducted using the MHI and claims data for the pilot sites (not reported here), sensitivity analyses suggested that results were not consistent based on the small sample size of practices (N = 9) and the need to account for practice-level clustering. Future studies should try to capture this type of variation among both pilot and comparison sites to tease apart some of the variation that is embedded in the on-off switch of PCMH status.

This study contributes to the growing body of literature on the impact of PCMHs on utilization, cost, and quality in nonintegrated primary care settings. Overall, these findings suggest little impact on desired outcomes after 2 years of implementation but point to some potential challenges of evaluating PCMH efforts earlier in the transformation process and highlight the variation that exists across similarly recognized PCMH practices. Again, we are made aware: If you have seen one medical home, you have seen one medical home.

Acknowledgments

This project was supported by grant no. R36HS021385 from the Agency for Healthcare Research and Quality. Funding was also provided by the Endowment for Health (grant no. 2208). The author is grateful to the New Hampshire Citizens Health Initiative, The Center for Medical Home Improvement, the pilot practices, and members of her dissertation committee for their guidance with this work: Chris Tompkins, Jody Hoffer Gittell, Meredith Rosenthal, and John Chapman.

Footnotes

The content is solely the responsibility of the author and does not necessarily represent the official views of the Agency for Healthcare Research and Quality. No further conflicts of interest are declared.

References

- Aaron HJ, Ginsburg PB. Is health spending excessive? If so, what can we do about it? Health Affairs. 2009;28(5):1260–1275. doi: 10.1377/hlthaff.28.5.1260. [DOI] [PubMed] [Google Scholar]

- Abrams M, Nuzum R, Mika S, Lawlor G. Realizing health reform’s potential: How the affordable care act will strengthen primary care and benefit patients, providers, and payers. New York, NY: The Commonwealth Fund; 2011. [PubMed] [Google Scholar]

- Barr MS. The need to test the patient-centered medical home. JAMA. 2008;300(7):834. doi: 10.1001/jama.300.7.834. [DOI] [PubMed] [Google Scholar]

- Bitton A, Schwartz GR, Stewart EE, Henderson DE, Keohane CA, Bates DW, Schiff GD. Off the hamster wheel? Qualitative evaluation of a payment-linked patient-centered medical home (PCMH) pilot. Milbank Quarterly. 2012;90(3):484–515. doi: 10.1111/j.1468-0009.2012.00672.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Calman NS, Hauser D, Weiss L, Waltermaurer E, Molina-Ortiz E, Chantarat T, Bozack A. Becoming a patient-centered medical home: A 9-year transition for a network of federally qualified health centers. Annals of Family Medicine. 2013;11(1):S68–S73. doi: 10.1370/afm.1547. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Center for Medical Home Improvement. The Medical Home Index: Adult: Measuring the organization and delivery of primary care for all adults and their families. Concord, NH: Author; 2008. [Google Scholar]

- Christensen EW, Dorrance KA, Ramchandani S, Lynch S, Whitmore CC, Borsky AE, … Bickett TA. Impact of a patient-centered medical home on access, quality, and cost. Military Medicine. 2013;178(2):135–141. doi: 10.7205/milmed-d-12-00220. [DOI] [PubMed] [Google Scholar]

- Flieger SP. Implementing the patient-centered medical home in complex adaptive systems: Becoming a relationship-centered patient-centered medical home. Health Care Management Review. 2016 doi: 10.1097/HMR.0000000000000100. Advance online publication. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friedberg MW, Schneider EC, Rosenthal MB, Volpp KG, Werner RM. Association between participation in a multipayer medical home intervention and changes in quality, utilization, and costs of care. JAMA. 2014;311(8):815–825. doi: 10.1001/jama.2014.353. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Geonnotti K, Taylor EF, Peikes D, Schottenfeld L, Burak H, McNellis R, Genevro J. Engaging primary care practices in quality improvement: Strategies for practice facilitators. Rockville, MD: Agency for Healthcare Research and Quality; 2015. [Google Scholar]

- Gilfillan RJ, Tomcavage J, Rosenthal MB, Davis DE, Graham J, Roy JA, … Steele JGD. Value and the medical home: Effects of transformed primary care. American Journal of Managed Care. 2010;16:607–614.

- Ginsburg PB, Maxfield M, O’Malley AS, Peikes D, Pham HH. Making medical homes work: Moving from concept to practice. Policy Perspective. Washington, DC: Center for Studying Health System Change; 2008. pp. 1–20.

- Goroll AH, Schoenbaum SC. Payment reform for primary care within the accountable care organization: A critical issue for health system reform. JAMA. 2012;308(6):577–578. doi: 10.1001/jama.2012.8696.

- Hearld LR, Weech-Maldonado R, Asagbra OE. Variations in patient-centered medical home capacity: A linear growth curve analysis. Medical Care Research and Review. 2013;70(6):597–620. doi: 10.1177/1077558713498117.

- Hoff T, Weller W, DePuccio M. The patient-centered medical home: A review of recent research. Medical Care Research and Review. 2012;69(6):619–644. doi: 10.1177/1077558712447688.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy of Sciences; 2001.

- Matlow AG, Wright JG, Zimmerman B, Thomson K, Valente M. How can the principles of complexity science be applied to improve the coordination of care for complex pediatric patients? Quality and Safety in Health Care. 2006;15(2):85–88. doi: 10.1136/qshc.2005.014605.

- Miller HD. From volume to value: Better ways to pay for health care. Health Affairs. 2009;28(5):1418–1428. doi: 10.1377/hlthaff.28.5.1418.

- New Hampshire Citizens Health Initiative. New Hampshire multi-stakeholder medical home handbook, Version 2.0. Bow, NH: Author; 2008.

- O’Malley AS, Peikes D, Ginsburg PB. Qualifying a physician practice as a medical home. Washington, DC: Center for Studying Health System Change; 2008.

- OnPoint Health Data. The New Hampshire Comprehensive Health Care Information System (NH CHIS) commercial limited use data dictionary, Version 2.1. Concord, NH: New Hampshire Department of Health and Human Services; 2011.

- Reid RJ, Coleman K, Johnson EA, Fishman PA, Hsu C, Soman MP, … Larson EB. The Group Health medical home at year two: Cost savings, higher patient satisfaction, and less burnout for providers. Health Affairs. 2010;29(5):835–843. doi: 10.1377/hlthaff.2010.0158.

- Rittenhouse DR, Shortell SM. The patient-centered medical home: Will it stand the test of health reform? JAMA. 2009;301(19):2038–2040. doi: 10.1001/jama.2009.691.

- Rosenthal MB, Abrams MK, Bitton A. Patient-Centered Medical Home Evaluators’ Collaborative. Recommended core measures for evaluating the patient-centered medical home: Cost, utilization, and clinical quality. New York, NY: The Commonwealth Fund; 2012.

- Rosenthal MB, Friedberg MW, Singer SJ, Eastman D, Li Z, Schneider EC. Effect of a multipayer patient-centered medical home on health care utilization and quality: The Rhode Island Chronic Care Sustainability Initiative pilot program. JAMA Internal Medicine. 2013;173(20):1907–1913. doi: 10.1001/jamainternmed.2013.10063.

- Taylor EF, Machta RM, Meyers DS, Genevro J, Peikes DN. Enhancing the primary care team to provide redesigned care: The roles of practice facilitators and care managers. Annals of Family Medicine. 2013;11(1):80–83. doi: 10.1370/afm.1462.

- Werner RM, Canamucio A, Shea JA, True G. The medical home transformation in the Veterans Health Administration: An evaluation of early changes in primary care delivery. Health Services Research. 2014;49(4):1329–1347. doi: 10.1111/1475-6773.12155.