Abstract

Objective

We examined how cognitive and linguistic skills affect speech recognition in noise for children with normal hearing. Children with better working memory and language abilities were expected to have better speech recognition in noise than peers with poorer skills in these domains.

Design

As part of a prospective, cross-sectional study, children with normal hearing completed speech recognition testing in noise with three types of stimuli: (1) monosyllabic words, (2) syntactically correct but semantically anomalous sentences and (3) semantically and syntactically anomalous word sequences. Measures of vocabulary, syntax and working memory were used to predict individual differences in speech recognition in noise.

Study sample

Ninety-six children with normal hearing, who were between 5 and 12 years of age.

Results

Higher working memory was associated with better speech recognition in noise for all three stimulus types. Higher vocabulary abilities were associated with better recognition in noise for sentences and word sequences, but not for words.

Conclusions

Working memory and language both influence children’s speech recognition in noise, but the relationships vary across types of stimuli. These findings suggest that clinical assessment of speech recognition is likely to reflect underlying cognitive and linguistic abilities, in addition to a child’s auditory skills, consistent with the Ease of Language Understanding model.

Keywords: Behavioural measures, paediatric, speech perception, psychoacoustics/hearing science, noise

Introduction

School-age children communicate, socialise and learn in environments with background noise and reverberation (Nelson & Soli, 2000; Knecht et al, 2002). Because understanding speech in noise is important for academic and social functioning, assessment of speech recognition in noise is often used by audiologists to quantify the likelihood of listening difficulties in classrooms, social situations and at home (Schafer, 2010; Muñoz et al, 2012). Measures of speech recognition in noise are also used as outcome measures to monitor auditory development in children with hearing aids or cochlear implants (Blamey et al, 2001; DesJardin et al, 2009; McCreery et al, 2015). Individual variability in speech recognition in noise, even in typically developing children with normal hearing, complicates the differentiation of normal from atypical performance. The protracted and variable developmental trajectory for speech recognition in noise has been attributed to the parallel maturation of cognitive and linguistic abilities during childhood (Wightman & Allen, 1992; Caldwell & Nittrouer, 2013; Nittrouer et al, 2013). Yet, several investigations have failed to find consistent relationships between cognitive and linguistic abilities and speech recognition in school-age children (Eisenberg et al, 2000; Fallon et al, 2000; Talarico et al, 2006). The purpose of this study was to further examine how cognitive and linguistic skills predict children’s speech recognition in noise for stimuli with varying linguistic complexity. A clearer understanding of how these factors support speech recognition is an important first step towards improving the use and interpretation of clinical speech recognition tests for children.

The ability to understand speech is dependent on a combination of auditory, cognitive and linguistic abilities (for a review, see Mattys et al, 2012). The process of temporarily maintaining incoming sensory information for cognitive processing is known as working memory (Baddeley, 2000; Cowan, 2004). Once a speech signal is at least partially audible, the incoming stream of acoustic information must be temporarily maintained so that it can be compared to the listener’s accumulated linguistic knowledge in long-term memory. The ability to temporarily store and maintain the speech signal in working memory and the accumulated linguistic knowledge both contribute to the process of speech recognition. There are a number of factors related to the listener, stimuli and interactions between those factors that could affect speech recognition and the degree to which listeners must recruit cognitive abilities and linguistic knowledge when understanding speech. Norman and Bobrow (1975) referred to resource-limited and data-limited processes as a framework to describe cognitive processing of sensory information. Resource-limited processes refer to the limited capacity of cognitive systems, including working memory, for processing information. Data-limited processes refer to the interaction between the stimulus (signal data-limits) and the listener’s linguistic representations in long-term memory (memory data-limits). In speech recognition, signal data-limits would include how audible the stimulus is to the listener and linguistic context, whereas memory data-limits would include the listener’s accumulated knowledge of linguistic context, the talker and environment. Children who are listening in background noise have disadvantages on resource- and data-limited fronts: acoustic cues in the stimulus are limited by noise and linguistic knowledge is still developing, as are their working memory abilities. 
However, few studies have examined the interplay between these factors in speech recognition for typically developing children.

The Ease of Language Understanding (ELU) model

In adults, a theoretical account has been developed to describe the relationship between cognitive processes and speech understanding, known as the Ease of Language Understanding (ELU) model (see Rönnberg et al, 2013 for review). As in the more general information processing model of Norman & Bobrow (1975), the ELU model describes the relationships between the listener’s cognitive resources, stimulus factors and the listener’s knowledge of language and experience (often referred to in the ELU model as semantic and episodic long-term memory). A key component of the ELU model is that working memory capacity will predict individual differences in speech recognition, a finding that has been replicated across numerous studies with adult listeners using both verbal (Pichora-Fuller et al, 1995; Lunner, 2003; Rönnberg, 2003; McKellin et al, 2007) and visuospatial (Sörqvist et al, 2012) measures of working memory capacity. Since the development of the ELU model, advantages for understanding speech in noise for adults with stronger working memory capacity have been demonstrated for adults with hearing loss across a wide range of adverse listening conditions (Humes & Floyd, 2005; Arehart et al, 2013; see Akeroyd, 2008 for review).

Despite extensive support for the ELU model for speech understanding in adults in previous studies, the model has not been investigated in children. The ELU model could be tested as a plausible model for the development of speech recognition in noise in children, particularly since working memory undergoes rapid development during childhood. Research assessing the influence of working memory on speech recognition for typically developing children has been limited. In support of the predictions of the ELU, school-age children with higher working memory abilities have better simple and complex sentence recognition in quiet than peers with poorer working memory abilities (Magimairaj & Montgomery, 2012). Lalonde & Holt (2014) also reported a positive relationship between speech discrimination abilities in quiet and parent ratings of working memory for a group of 2-year-old children. The effects of working memory abilities on speech recognition in data-limited conditions, such as in noise or with spectral degradation, for children have not been consistent with findings from the adult literature. For example, Eisenberg et al (2000) found that working memory abilities were not related to the recognition of spectrally-degraded words or sentences after the effects of age were considered. Further evaluation of the effects of working memory capacity on children’s speech recognition in noise is needed.

The effects of language on speech recognition in noise

The listener’s language abilities support speech recognition in noise within the ELU model. In order to understand speech, phonological representations of the acoustic signal must be maintained temporarily in working memory, so that they can be compared against accumulated linguistic knowledge in long-term memory, also referred to as semantic memory. The specific linguistic skills that are involved in recognising speech may depend on the complexity of linguistic information in the stimuli. For words in isolation, the processes that describe the development of recognition in noise have been summarised as the Lexical Restructuring Model (LRM; Metsala & Walley, 1998). The LRM predicts that as children learn an increasing number of new words, the similarity between words in their lexicon necessitates a shift in how words are processed. Younger children process words as whole units, but increasing vocabulary during early school-age requires differentiation of distinct phonological representations to distinguish between similar words in the child’s lexicon. For speech recognition in noise, the LRM predicts that children with larger vocabularies would be better able to recognise words in noise because they have experience accommodating phonologically similar words as their lexicons have expanded. The ELU model would make a similar, but more general prediction that language abilities, including vocabulary, support word recognition in noise. There is support for both models in the literature, as children with higher scores on standardised tests of vocabulary have stronger speech recognition abilities in noise or conditions of stimulus degradation than peers with lower vocabulary in some studies (Garlock et al, 2001; Munson, 2001; Vance et al, 2009; McCreery & Stelmachowicz, 2011; Vance & Martindale, 2012). 
However, in other studies (Eisenberg et al, 2000; Stelmachowicz et al, 2000; Nittrouer et al, 2013), vocabulary has not been a consistent predictor of word recognition under degraded conditions.

The predictions of the LRM are specific to word recognition, but general predictions about language ability from the ELU model can also be extended to other types of stimuli. For recognition of sentences in noise, adult listeners also rely on sentence-level syntactic or semantic cues to support recognition (McElree et al, 2003). Because sentences are longer than words, working memory has also been shown to facilitate the use of linguistic cues in adults. Adults with stronger working memory capacity are more likely to use sentence-level semantic information than adults with poorer working memory capacity (Zekveld et al, 2011). In children, higher semantic and syntactic abilities have been associated with stronger recognition skills in quiet (Entwisle & Frasure, 1974), but not in noise (Jerger et al, 1981). For both word- and sentence-level materials, the relationship between language abilities and speech recognition is much less consistent in studies with typically developing children than in studies with adults. The use of different stimuli and measures of language and cognitive abilities across studies may have contributed to the inconsistent findings in the previous literature on linguistic and cognitive contributors to speech recognition in noise. The use of a range of speech perception, linguistic and cognitive measures in a single study may help to identify which skills support speech perception as the complexity of the stimulus is varied. A better understanding of how children use language knowledge would be beneficial for interpretation of clinical speech recognition tests and the design of new stimuli and tasks that better reflect realistic listening conditions.

Goals and hypotheses

The overall goal of this study was to assess the influence of working memory and language abilities on speech recognition in background noise for school-age children. To examine how the relationship between working memory, language abilities and speech recognition varied across different linguistic contexts, three different types of speech stimuli (monosyllabic words, sentences with simple syntactic structure with no semantic meaning, and sequences of three or four words without syntactic structure or semantic meaning) were used to assess speech recognition in noise. Standardised measures of language and working memory abilities were conducted and used to assess the relationship between speech recognition in noise and cognitive and linguistic abilities. Vocabulary and syntax measures allowed an examination of how individual language abilities influenced recognition for words and sentences, respectively. Working memory tests included both verbal and visuospatial tasks. Simple and complex span working memory tasks for each modality were used. This combination of multiple measures of working memory was included to create a representative construct of working memory capacity. Based on previous research with adults and the ELU model, two predictions were made:

(1) Children with higher working memory abilities would recognise speech at lower signal-to-noise ratios (SNRs) than children with lower working memory abilities, consistent with the ELU model that has been validated in adults.

(2) Children with stronger vocabularies would have better speech recognition in noise than peers with poorer vocabularies. Likewise, children with better syntactic skills would have better sentence recognition in noise, due to linguistic structure that facilitates sentence recognition. Knowledge of syntax was not predicted to relate to speech recognition for isolated words or word sequences, since neither of these stimuli contains syntactic cues.

Method

Subjects

Ninety-six typically developing children (48 male; 48 female) with normal hearing, ranging in age from 5 to 12 years, participated in the study. Subjects were recruited through the Boys Town National Research Hospital (BTNRH) Research Subject Core Database. All participants were native speakers of English. All participants’ hearing was screened at 15 dB HL in each ear across the octave frequencies of 500, 1000, 2000, 4000 and 8000 Hz. The screening was completed using a GSI-61 audiometer while participants wore TDH-39 headphones in a sound-treated audiometric booth. Participants were compensated $15/hour for their participation and were offered a prize and book at the completion of the session.

Materials

Speech recognition stimuli

Speech recognition was assessed using three types of stimuli: monosyllabic words, syntactically correct sentences with no semantic meaning (low-predictability; LP), and sequences of four words without syntactic structure or semantic meaning (zero-predictability; ZP). All target words for the three stimulus types were within the lexicon of first grade children based on an online child lexical database (Storkel & Hoover, 2010). Five hundred seventy-five monosyllabic words were used. Two hundred forty-five LP sentences were created by selecting four words to form syntactically correct but semantically meaningless sentences (example: The jaws giggle at the frosty tractor, in which the four target words are jaws, giggle, frosty and tractor). Two hundred thirty-four ZP sequences were created by selecting four random words and ensuring that the order of words did not create a valid syntactic structure (example: Ghost four smart tooth). The stimuli were spoken by a young adult female talker and recorded with custom recording software using a Shure 53 BETA head-worn boom microphone (Shure Incorporated, Niles, IL) at a sampling rate of 22,050 Hz. The best exemplar of each token was selected by having one examiner listen to three recordings of each stimulus. The stimuli were cropped to have 100 ms of quiet before and after each token. The tokens were then equated in root-mean-square level using Praat (Boersma & Weenink, 2001). To determine intelligibility of the stimuli in quiet, a group of 46 children between 6 and 12 years of age with normal hearing listened at 65 dB SPL to a random subset of stimuli that included either 20 monosyllabic words or five sentences or sequences with four keywords each. The mean percent correct in quiet was 96% for the monosyllabic words, 94.1% for LP sentences and 93.5% for ZP sequences.
Steady-state masking noise based on the long-term average speech spectrum for the female talker was created in MATLAB (MathWorks, Natick, MA) by taking a Fast Fourier Transform of a concatenated sound file containing all of the stimuli, randomising the phase of the signal at each sample point, and then taking the inverse Fast Fourier Transform. The noise started 100 ms prior to each stimulus and ended 250 ms after each stimulus.
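The noise-generation steps described above (transform, randomise phase, inverse transform) can be sketched as follows. This is a minimal illustration in Python rather than the authors' MATLAB code. It assumes that the phase is randomised per frequency bin with conjugate symmetry enforced so the output is real, and it uses a naive DFT and a short synthetic signal (`speech_like`, hypothetical) in place of the concatenated recordings.

```python
import cmath
import math
import random


def dft(x):
    """Naive discrete Fourier transform (O(n^2)); adequate for a short demo."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def phase_randomised_noise(signal, rng):
    """Return noise with the magnitude spectrum of `signal` and random phase."""
    n = len(signal)
    spectrum = dft(signal)
    shaped = [0j] * n
    shaped[0] = spectrum[0]  # DC term is left unchanged
    for k in range(1, (n - 1) // 2 + 1):
        phase = rng.uniform(0.0, 2.0 * math.pi)
        shaped[k] = abs(spectrum[k]) * cmath.exp(1j * phase)
        shaped[n - k] = shaped[k].conjugate()  # conjugate symmetry keeps the output real
    if n % 2 == 0:
        shaped[n // 2] = spectrum[n // 2]  # Nyquist bin is left unchanged
    return [value.real for value in idft(shaped)]


# Hypothetical stand-in for the concatenated speech file: two sinusoids, 64 samples.
rng = random.Random(0)
speech_like = [
    math.sin(2 * math.pi * 3 * t / 64) + 0.5 * math.sin(2 * math.pi * 7 * t / 64)
    for t in range(64)
]
noise = phase_randomised_noise(speech_like, rng)
```

Because only the phase is changed, the noise keeps the long-term magnitude spectrum of the input while losing its temporal structure, which is what makes it a steady-state, speech-shaped masker.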

Working memory measures

Four subtests of the Automated Working Memory Assessment (AWMA; Alloway, 2007) were used to document visuospatial and verbal working memory ability using simple and complex span tasks for each sensory modality. The AWMA subtests were selected because of established reliability and validity for measuring working memory in typically developing children (Alloway et al, 2006). Table 1 shows the working memory measures categorised by span (simple vs. complex) and sensory modality (verbal and visuospatial). The visuospatial subtests included Dot Matrix and Odd-one-out. The Dot Matrix subtest is a simple span task that required children to watch a red dot appear in a sequence of positions on a 4 × 4 grid. Children were asked to indicate the sequential order of positions of the red dot on an empty grid as the number of positions increased on subsequent trials. The Odd-one-out subtest is a complex span task that required children to identify which shape out of a panel of three was different from the other two and to recall the odd shape's position on an empty panel. As trials progressed, children indicated which shape was different on an increasing number of panels presented serially, and then recalled all of the positions in the order they were presented. Verbal working memory tasks included the Nonword Recall and Counting Recall subtests. The Nonword Recall subtest is a simple span task that required children to listen to and repeat back a series of monosyllabic nonsense words. Some words contained word-final /r/ clusters (nerm, karn, dorb). Articulation errors for these clusters were not counted as incorrect if the clusters were not part of the child's phonological repertoire, as determined by the Goldman-Fristoe Test of Articulation. Testing started with one nonsense word per block and increased up to a maximum of six nonwords per block. The Counting Recall subtest is a complex span task that involved counting aloud how many red circles appeared on a panel with various red and blue shapes. Following the presentation of shapes, children were required to recall how many red circles were counted. As trials progressed, children counted the red circles on multiple panels and then recalled how many they had counted in sequential order of presentation.

Table 1.

Working memory measures by sensory modality and span complexity.

| | Simple span | Complex span |
|---|---|---|
| Verbal | Nonword recall | Counting recall |
| Visuospatial | Dot matrix | Odd-one-out |

Speech and language measures

The Goldman–Fristoe Test of Articulation 2 (Goldman & Fristoe, 2000) was administered to participants. The Goldman–Fristoe measures articulation accuracy and was used to ensure that children’s speech production error patterns would not interfere with judging correctness of their speech perception. None of the children had scores poorer than 1.5 standard deviations from the mean for their chronological age; thus, none of the children were excluded due to articulation concerns. Children completed the Peabody Picture Vocabulary Test, Fourth edition (PPVT; Dunn & Dunn, 2012) as a measure of receptive vocabulary. Participants were shown a page with four picture items and asked to find the target vocabulary word (e.g., “Show me avocado”). The Test of Reception of Grammar, Version 2 (TROG-2; Bishop, 2003), was used as a measure of receptive syntactic knowledge. Participants were shown a page with four pictures while an examiner read a sentence describing only one of the pictures. The subject was instructed to point to or say the picture number that best represented the meaning of the sentence. Sentences were categorised into 20 blocks representing different syntactic structures and levels of complexity. Each block contained four test sentences.

Instrumentation

All procedures were completed in a sound-treated audiometric test booth. Speech perception stimuli were presented from a personal computer using MATLAB and a MOTU Track 16 USB Audio Interface (MOTU, Cambridge, MA) via Sennheiser HD 25-1 II headphones (Sennheiser Electronic Corporation, Old Lyme, CT). The stimuli were calibrated using a Larson-Davis (Depew, NY) sound-level meter. Working memory tasks were presented from a personal computer in sound field via a JBL LSR2300 (JBL Professional, Northridge, CA) loudspeaker at a level of 65 dB SPL.

Procedure

Speech recognition and measures of language and working memory were completed in a single test session. The order of assessments was randomised across subjects. For speech recognition, an interleaved, adaptive tracking procedure was used to obtain the signal-to-noise ratio (SNR) at which children reached 29% and 71% correct for each listening condition (Levitt, 1971). To measure the SNR for 29% correct, the noise level was increased after one correct response and decreased after two consecutive incorrect responses. To measure the SNR for 71% correct, the noise level was increased after two consecutive correct responses and decreased after one incorrect response. There were a total of 6 reversals. The step size was 18 dB for the first reversal, 9 dB for the second reversal, 6 dB for the third reversal and 3 dB for the remaining reversals. The minimum SNR for the adaptive procedure was −20 dB and the maximum was 60 dB. The level of the speech stimulus was fixed at 65 dB SPL. The SNR at 29% and 71% correct was calculated by averaging the SNR from the last three reversals. Stimuli for each speech recognition condition were selected at random from the overall stimulus set for each type without replacement. Participants were instructed to listen to the stimulus and repeat back what they heard. Children were encouraged to guess when unsure of what they heard.
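The two tracking rules can be illustrated with a short simulation. Under a transformed up-down rule, the track that makes the task harder only after two consecutive correct responses settles where p² = 0.5 (p ≈ 0.71), and the track that makes it easier only after two consecutive incorrect responses settles where (1 − p)² = 0.5 (p ≈ 0.29). The sketch below is not the authors' MATLAB implementation. The listener model (`listener`, a logistic function), the starting SNR of 10 dB, and the reading of the step-size schedule as "the step in force after 0, 1, 2 and 3 or more reversals" are all assumptions, and the two tracks are run separately rather than interleaved.

```python
import math
import random

STEP_SIZES_DB = [18, 9, 6, 3]      # assumed: step in force after 0, 1, 2 and 3+ reversals
MIN_SNR_DB, MAX_SNR_DB = -20, 60   # SNR limits given in the text
N_REVERSALS = 6


def run_track(target, p_correct, start_snr_db, rng, max_trials=1000):
    """Simulate one adaptive track (target is 29 or 71).

    p_correct(snr_db) gives the probability of a correct response.
    Returns the mean SNR (dB) at the last three reversals.
    """
    if target not in (29, 71):
        raise ValueError("target must be 29 or 71")
    snr = start_snr_db
    reversals = []
    run = 0              # consecutive responses counted towards a two-in-a-row rule
    last_direction = 0   # +1: SNR raised (noise lowered); -1: SNR lowered (noise raised)
    for _ in range(max_trials):
        if len(reversals) == N_REVERSALS:
            break
        correct = rng.random() < p_correct(snr)
        direction = 0
        if target == 71:
            # noise up after two consecutive correct, noise down after one incorrect
            if correct:
                run += 1
                if run == 2:
                    direction, run = -1, 0
            else:
                direction, run = 1, 0
        else:
            # noise up after one correct, noise down after two consecutive incorrect
            if correct:
                direction, run = -1, 0
            else:
                run += 1
                if run == 2:
                    direction, run = 1, 0
        if direction == 0:
            continue
        if last_direction and direction != last_direction:
            reversals.append(snr)  # the track changed direction at this SNR
        last_direction = direction
        step = STEP_SIZES_DB[min(len(reversals), len(STEP_SIZES_DB) - 1)]
        snr = max(MIN_SNR_DB, min(MAX_SNR_DB, snr + direction * step))
    if len(reversals) < N_REVERSALS:
        raise RuntimeError("track did not reach the required number of reversals")
    return sum(reversals[-3:]) / 3


def listener(snr_db):
    """Hypothetical listener: logistic psychometric function centred on 0 dB SNR."""
    return 1.0 / (1.0 + math.exp(-snr_db / 2.0))


rng = random.Random(1)
snr29 = [run_track(29, listener, 10, rng) for _ in range(300)]
snr71 = [run_track(71, listener, 10, rng) for _ in range(300)]
mean29 = sum(snr29) / len(snr29)
mean71 = sum(snr71) / len(snr71)
```

Averaged over many simulated tracks, the 71% track settles at a higher SNR than the 29% track, which is the separation the two-point procedure relies on.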

For working memory assessment, all subtests of the AWMA began with instruction and practice trials to ensure that each child understood each task. Testing began with a block of six test trials that contained only one recall item. After correctly recalling four out of six trials within the test block, the program automatically advanced to the next block, which contained an additional recall item. Test trials were continued until the child incorrectly recalled three out of six trials within a block or all test blocks were administered. For PPVT and TROG, the testing was completed in a quiet laboratory office setting. For the PPVT, testing was completed when a child reached a criterion level of performance based on 8 incorrect items out of a set of 12. The number of words correctly identified was summed as the raw score. For the TROG, testing was completed when children incorrectly responded to at least one item in five consecutive blocks or when all blocks were administered.
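The block-advancement and discontinue rules for the AWMA subtests can be expressed as a small loop. The sketch below is illustrative only: the function name, the input format and the scoring (a simple count of correctly recalled trials that were administered) are assumptions and do not reproduce the AWMA software's own scoring.

```python
def administer_span_task(outcomes_by_block, trials_per_block=6,
                         advance_after=4, stop_after_errors=3):
    """Apply the advance/discontinue rules to recorded trial outcomes.

    outcomes_by_block[i] lists trial outcomes (True = correct recall) for the
    block with i + 1 recall items. Returns (correct trials, blocks started).
    """
    total_correct = 0
    blocks_started = 0
    for outcomes in outcomes_by_block:
        blocks_started += 1
        correct = errors = 0
        for outcome in outcomes[:trials_per_block]:
            if outcome:
                correct += 1
            else:
                errors += 1
            # Stop the block as soon as either criterion is met.
            if correct == advance_after or errors == stop_after_errors:
                break
        total_correct += correct
        if correct < advance_after:
            break  # discontinue rule met (or no further trials were recorded)
    return total_correct, blocks_started


# Hypothetical child: passes blocks 1 and 2, then makes three errors in block 3.
example = [
    [True, True, True, True],
    [True, False, True, True, True],
    [False, True, False, False],
]
result = administer_span_task(example)
```

With six trials per block, one of the two criteria (four correct or three incorrect) is always reached by the sixth trial, so every block ends in either an advance or a discontinue decision.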

Statistical analyses

All statistical analyses were performed using the R software interface (Version 3.0.2; R Core Team, 2014). Pearson correlations were evaluated for all predictor variables. The effect of stimulus type (word, LP sentence and ZP sequence) on the SNRs required for 29% and 71% correct was assessed with a linear mixed model with stimulus and level as factors using the lme4 package for R (Bates et al, 2014). Linear mixed models were selected because they allow for specification of random intercepts for each participant to account for correlation of repeated measures within each subject. Linear mixed models for each stimulus type were also conducted to evaluate which linguistic and cognitive factors predicted speech recognition in noise. To avoid potential collinearity between different measures of working memory, a total working memory score based on the sum of the raw scores for each AWMA subtest was constructed for each subject. Age, receptive vocabulary (PPVT), and total working memory were used as predictors for all three stimulus types. Receptive syntax (TROG) was used as a predictor for the LP sentences only, since the LP sentences were the only stimulus that had syntactic structure. Raw scores were used in linear mixed models for all linguistic and cognitive variables. Age was included as a covariate, which allowed us to estimate the effect of each variable while controlling for the increases in raw scores that occur with age. All predictor variables were mean-centred to minimise the potential for multicollinearity. Variance inflation factors for individual predictors never exceeded 2.5. Regression assumptions, including normality, were assessed through residual analysis and there was no evidence of violation of the modelling assumptions.
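One way to write the per-stimulus random-intercept model is sketched below. The notation is an assumption introduced here for illustration and does not appear in the original analysis: i indexes participants, j indexes the two levels (29% and 71% correct), a superscript c marks a mean-centred predictor, and u_i is the participant-specific random intercept. For the LP sentence model, a further term for the mean-centred TROG-2 raw score would be added.

```latex
\mathrm{SNR}_{ij} = \beta_0 + \beta_1\,\mathrm{Level}_{j}
  + \beta_2\,\mathrm{Age}^{c}_{i}
  + \beta_3\,\mathrm{PPVT}^{c}_{i}
  + \beta_4\,\mathrm{WM}^{c}_{i}
  + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^{2}),
\quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^{2})

X^{c}_{i} = X_i - \bar{X},
\qquad
\mathrm{VIF}_k = \frac{1}{1 - R_k^{2}}
```

Here R_k² is the proportion of variance in predictor k explained by the remaining predictors, so a variance inflation factor near 1 indicates little overlap among predictors.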

Results

A subset of children were unable to reach 71% correct for the four-target-word sentence and sequence conditions at even the greatest SNR (60 dB); in those cases, data were not included in the analysis for the condition in which they could not complete the task. This occurred for 26 children in the LP sentence condition and 33 children in the ZP sequence condition. Of those excluded, 22 children were missing data for both LP and ZP conditions, 4 children were missing data only for LP and 11 children were missing data only for ZP. A series of one-way ANOVAs was conducted to determine if the children who could not complete the speech recognition task in the LP and ZP conditions differed in terms of age, vocabulary (PPVT) or working memory from the children who could complete the task. Table 2 includes the comparison of mean scores between the children included and excluded from each analysis.

Table 2.

Comparison of age, language and working memory between participants who reached criterion performance (included) and those who did not (excluded) in the LP sentence and ZP sequence conditions.

| Measure | LP included | LP excluded | ZP included | ZP excluded |
|---|---|---|---|---|
| Age (years) | 8.6 | 8.8 | 8.7 | 8.3 |
| Difference | F(1,96) = 0.06, p = 0.82 | | F(1,96) = 0.55, p = 0.47 | |
| PPVT | 110 | 111 | 110 | 112 |
| Difference | F(1,96) = 0.22, p = 0.64 | | F(1,96) = 0.46, p = 0.49 | |
| AWMA Total WM | 110 | 109 | 109 | 108 |
| Difference | F(1,96) = 0.17, p = 0.68 | | F(1,96) = 1.5, p = 0.22 | |

LP = Low-predictability sentences; ZP = Zero-predictability sequences; PPVT = Peabody Picture Vocabulary Test; AWMA Total WM = Automated Working Memory Assessment (AWMA) Total Composite of Raw scores. Each Difference row gives the included vs. excluded comparison for the LP and ZP conditions, respectively.

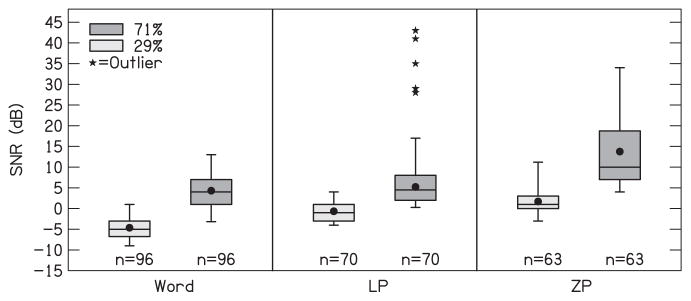

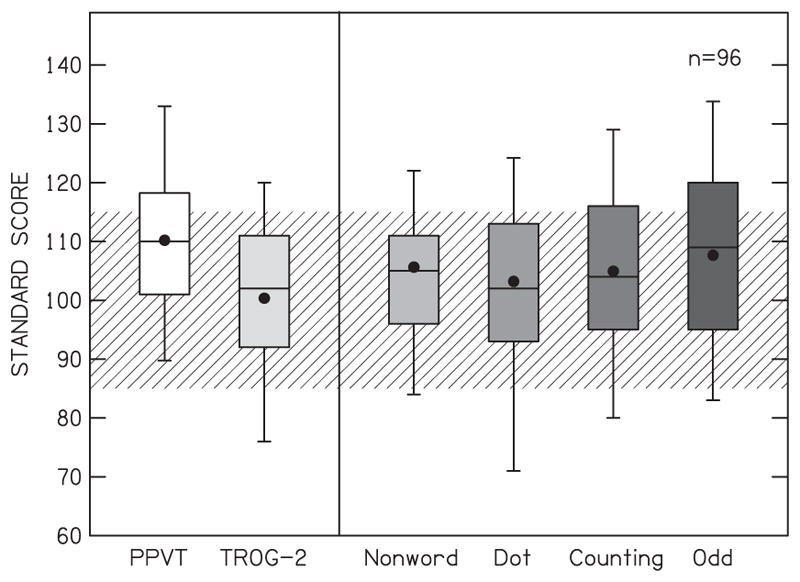

Figure 1 displays the standard scores for the measures of language and working memory. All of the children in the study had standard scores within 2 standard deviations of the mean for their age based on the normative sample for each test. Table 3 shows the descriptive statistics for speech recognition, age and cognitive and linguistic predictor variables. Figure 2 displays the SNR for 29% and 71% correct recognition for monosyllabic words, LP sentences and ZP sequences. For the linear mixed model with effects for stimulus and SNR (29% vs 71%), the random intercept had a variance of 10.2 and a standard deviation of 3.1 for each participant. The main effects for stimulus [F(2,286) =76.1, p<0.001] and SNR [F(1,190) =344.9, p<0.001] were significant. The SNR for 71% was 9.1 dB higher than the SNR for 29%. The average SNR for words was 4 dB lower than the SNR for LP sentences. The SNR for LP sentences was 2 dB lower than the SNR for ZP sequences. The interaction between stimulus and SNR was not significant.

Figure 1.

Box plots of the standard scores for language and cognitive measures for children who participated in the study. The boxes represent the interquartile range (25th–75th percentiles) and the whiskers represent the range of the 5th and 95th percentiles. The horizontal lines within each bar represent the medians and the filled circles represent the mean. The hatched area represents 1 standard deviation from the normative mean for each measure. PPVT =Peabody Picture Vocabulary Test; TROG =Test of Reception of Grammar; Nonword =Automated Working Memory Assessment (AWMA) Nonword Recall Subtest; Dot =AWMA Dot Matrix Subtest; Counting =AWMA Counting Recall Subtest; Odd =AWMA Odd-one-out subtest.

Table 3.

Descriptive statistics.

| Speech recognition (SNR, dB) | Mean | SD |
|---|---|---|
| Word (29%/71%) | −4.6/4.3 | 3.1/7.2 |
| LP (29%/71%) | −3/6.1 | 2.3/7.4 |
| ZP (29%/71%) | 1.8/11.5 | 2.3/8.3 |
| Predictors | | |
| Age (years) | 8.7 | 2.2 |
| PPVT: Raw/SS | 147/110.4 | 29.6/13.1 |
| TROG-2: Raw/SS | 13.9/100.5 | 3.8/12.9 |
| Nonword: Raw/SS | 12.5/105.6 | 3.5/11.9 |
| Dot: Raw/SS | 20.3/102.9 | 6.6/15.6 |
| Counting: Raw/SS | 16.5/104.8 | 6.7/14.3 |
| Odd: Raw/SS | 17.5/107.5 | 6.7/15.1 |
| AWMA Total WM | 66.9 | 20.1 |

SNR = Signal-to-noise ratio; SS = Standard score; LP = Low-predictability sentences; ZP = Zero-predictability sequences; PPVT = Peabody Picture Vocabulary Test; TROG-2 = Test of Reception of Grammar; Nonword = Automated Working Memory Assessment (AWMA) Nonword Recall Subtest; Dot = AWMA Dot Matrix Subtest; Counting = AWMA Counting Recall Subtest; Odd = AWMA Odd-one-out subtest.

Figure 2.

Box plots of the signal-to-noise ratios (SNRs) for 71% (dark grey) and 29% (light grey) for words, low predictability (LP) sentences and zero-predictability (ZP) sequences. The boxes represent the interquartile range (25th–75th percentiles) and the whiskers represent the range of the 5th and 95th percentiles. The horizontal lines within each bar represent the medians and the filled circles represent the means. The stars represent data points that were outside of the range for the 5th–95th percentiles.

Table 4 displays the Pearson correlations between predictor variables. There were significant positive correlations between all of the predictor variables. The SNR for 50% correct was calculated for each listener as the average of the SNR for 29% and SNR for 71% correct for each stimulus type. Table 5 displays the Pearson correlations between the SNR for 50% correct and predictor variables. Lower 50% SNR was associated with higher age, vocabulary, syntactic knowledge and working memory abilities. To analyse the predictors of individual variability in speech recognition in noise, linear mixed models were used for each stimulus type with a random intercept for each participant. Predictors in the full model for each stimulus type were age in months, receptive vocabulary (PPVT), and total working memory score (AWMA). For the LP sentence model, syntax (TROG-2) was included as an additional predictor due to the syntactic structure of those stimuli. A main effect for level was included in each model to contrast the effects of predictors for the 29% and 71% SNRs. None of the higher-order interactions were significant; therefore, they were not included in the model for each stimulus. Table 6 includes the model statistics for each stimulus type. The 71% SNR was approximately 9 dB higher than the 29% SNR for each of the three types of stimuli (p<0.0001; 8.9 dB for words, 8.7 dB for LP sentences and 9.6 dB for ZP sequences). While older children had lower SNRs across all three types of stimuli, age was not a significant predictor. Children with higher total working memory scores had lower SNRs for all three types of stimuli (Words, r = 0.18, p = 0.04; LP sentences, r = 0.29, p = 0.009; and ZP sequences, r = 0.32, p = 0.002) than children with lower working memory scores. Children with higher vocabulary scores had lower SNRs for LP sentences (r = 0.46, p = 0.05) and ZP sequences (r = 0.45, p = 0.03) than children with lower scores in vocabulary.
Vocabulary was not related to word recognition in noise after accounting for other predictors. Receptive syntax and age were not significant predictors of LP sentence recognition in noise after accounting for other predictors.
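The derivation of the 50% SNR and its correlation with a predictor can be illustrated with a short sketch. The listener values below are hypothetical and are not data from the study; the function names `snr50` and `pearson_r` are likewise chosen here for illustration only.

```python
from math import sqrt


def snr50(snr29: float, snr71: float) -> float:
    """Estimate the SNR for 50% correct as the mean of the 29% and 71% SNRs."""
    return (snr29 + snr71) / 2.0


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical SNRs in dB for five listeners (NOT data from the study).
snr29 = [-6.0, -4.0, -8.0, -2.0, -5.0]
snr71 = [3.0, 5.0, 0.0, 7.0, 4.0]
# Hypothetical raw working memory composite scores for the same listeners.
wm_raw = [95, 80, 120, 70, 100]

snr50_values = [snr50(low, high) for low, high in zip(snr29, snr71)]
r = pearson_r(snr50_values, wm_raw)
print(snr50_values)
print(f"r = {r:.2f}")
```

A negative correlation in this sketch corresponds to the pattern in Table 5: listeners with higher scores on a predictor need a lower (better) SNR to reach 50% correct.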

Table 4.

Pearson correlations between predictor variables.

| | Age | PPVT | TROG-2 | Nonword | Dot | Counting | Odd | Total WM |
|---|---|---|---|---|---|---|---|---|
| Age | 1 | 0.85a | 0.64a | 0.53a | 0.76a | 0.76a | 0.68a | 0.82a |
| PPVT | | 1 | 0.73a | 0.53a | 0.67a | 0.70a | 0.69a | 0.76a |
| TROG-2 | | | 1 | 0.57a | 0.51a | 0.60a | 0.59a | 0.66a |
| Nonword | | | | 1 | 0.53a | 0.59a | 0.44a | 0.69a |
| Dot | | | | | 1 | 0.70a | 0.70a | 0.89a |
| Counting | | | | | | 1 | 0.70a | 0.90a |
| Odd | | | | | | | 1 | 0.87a |
| Total WM | | | | | | | | 1 |

PPVT = Peabody Picture Vocabulary Test; TROG-2 = Test of Reception of Grammar; Nonword = Automated Working Memory Assessment (AWMA) Nonword Recall Subtest; Dot = AWMA Dot Matrix Subtest; Counting = AWMA Counting Recall Subtest; Odd = AWMA Odd-one-out Subtest; Total WM = Composite score of AWMA working memory subtests. Correlations for standardised measures are based on raw scores.

a: p<0.05 (two-tailed).

Table 5.

Pearson correlations between speech recognition and age, language and working memory.

| SNR50 | Age in months | Language: PPVT | Language: TROG-2 | Working memory: Nonword | Working memory: Dot | Working memory: Counting | Working memory: Odd |
|---|---|---|---|---|---|---|---|
| Words | −0.39a | −0.35a | −0.41a | −0.28a | −0.39a | −0.39a | −0.40a |
| LP | −0.40a | −0.52a | −0.42a | −0.47a | −0.44a | −0.44a | −0.45a |
| ZP | −0.25 | −0.30a | −0.24 | −0.49a | −0.34a | −0.43a | −0.25a |

SNR50 = Signal-to-noise ratio for 50% correct; LP = Low-predictability sentences; ZP = Zero-predictability sequences; PPVT = Peabody Picture Vocabulary Test; TROG-2 = Test of Reception of Grammar; Nonword = Automated Working Memory Assessment (AWMA) Nonword Recall Subtest; Dot = AWMA Dot Matrix Subtest; Counting = AWMA Counting Recall Subtest; Odd = AWMA Odd-one-out Subtest. Correlations are based on raw scores.

a: p<0.05.

Table 6.

Linear mixed models.

| Stimulus | Random intercept variance | Predictors | t | p |
|---|---|---|---|---|
| Word | 7.4, SD = 2.7 | PPVT | 1.5 | 0.13 |
| | | Age (months) | −1.4 | 0.14 |
| | | **Total WM** | −1.9 | 0.04 |
| | | **SNR (29%/71%)** | 13.4 | <0.0001 |
| LP | 37.9, SD = 6.2 | **PPVT** | −1.9 | 0.05 |
| | | Age (months) | 1.5 | 0.14 |
| | | **Total WM** | −3.1 | 0.009 |
| | | TROG | −0.6 | 0.52 |
| | | **SNR (29%/71%)** | 8.0 | <0.0001 |
| ZP | 31.54, SD = 5.6 | **PPVT** | −2.1 | 0.03 |
| | | Age (months) | 1.7 | 0.09 |
| | | **Total WM** | −3.1 | 0.002 |
| | | **SNR (29%/71%)** | 10.9 | <0.0001 |

SD = standard deviation; LP = Low-predictability sentences; ZP = Zero-predictability sequences; PPVT = Peabody Picture Vocabulary Test; Total WM = composite of all AWMA subtest scores; SNR = signal-to-noise ratio; TROG = Test of Reception of Grammar; significant individual predictors are noted in bold. Predictors for standardised measures are based on raw scores.
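The structure of the random-intercept models summarised in Table 6 can be sketched as an equation. The notation is assumed here for illustration and does not come from the original analysis: i indexes children, j indexes the two levels (29% and 71% correct), the β terms are fixed-effect coefficients, u_i is the random intercept for child i, and ε_ij is the residual. The normal distributions shown are the standard assumptions of a linear mixed model, and the TROG-2 term would be added only for the LP sentence model.

```latex
\begin{aligned}
\mathrm{SNR}_{ij} &= \beta_0 + \beta_1\,\mathrm{Age}_i + \beta_2\,\mathrm{PPVT}_i
  + \beta_3\,\mathrm{WM}_i + \beta_4\,\mathrm{Level}_j + u_i + \varepsilon_{ij},\\
u_i &\sim \mathcal{N}\!\left(0, \sigma_u^2\right), \qquad
\varepsilon_{ij} \sim \mathcal{N}\!\left(0, \sigma^2\right)
\end{aligned}
```

In this notation, the "Random intercept variance" column of Table 6 corresponds to σ_u², and the "SNR (29%/71%)" row corresponds to the coefficient on Level.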

Discussion

This study quantified the influence of working memory and language abilities on speech recognition in noise in typically developing children for three types of stimuli that varied in linguistic complexity. The SNRs for 29% and 71% correct were measured for monosyllabic words, sentences with syntax and no semantic meaning (LP), and sequences of four monosyllabic words without syntactic structure or semantic meaning (ZP). Two predictions were made based on previous research related to the ELU model for adult listeners: (1) Children with stronger working memory abilities would have better speech recognition in noise and (2) Children with stronger language skills would have better speech recognition in noise than peers with poorer language skills. Specifically, children with higher receptive vocabulary abilities were expected to have better speech recognition in noise for all three stimulus types. Children with higher receptive syntactic abilities were expected to have better speech recognition for sentences with syntax. The results offered partial support for these predictions. Children with stronger working memory abilities had better speech recognition in noise across all three stimulus types, consistent with the ELU model. Higher receptive vocabularies were associated with lower (better) SNRs for LP sentences and ZP sequences, but not monosyllabic words. Receptive syntax and age were not significant predictors of speech recognition in noise after controlling for the other predictors. These results have implications for understanding the factors that support speech recognition in noise, the clinical assessment of children’s speech recognition, as well as the development of speech recognition materials for children.

Children’s working memory and speech recognition in noise

Children with higher working memory scores had better speech recognition in noise for all three stimulus types. The finding that working memory supports speech recognition in noise for children is consistent with the ELU model (Rönnberg et al, 2013) and with previous studies that have demonstrated advantages in sentence recognition in quiet for children with stronger working memory abilities (Magimairaj & Montgomery, 2012; Lalonde & Holt, 2014). The finding that working memory facilitates recognition of LP sentences and ZP sequences was expected, given previous studies in adults demonstrating that working memory abilities are positively related to sentence recognition in noise (McElree et al, 2003; Zekveld et al, 2011). The relationship between working memory and monosyllabic word recognition appears to contradict previous research, which did not show a relationship between working memory and word recognition in noise for children (Eisenberg et al, 2000). Eisenberg and colleagues used a forward digit span task to quantify working memory in their participants, which characterises the simple span of working memory. Multiple measures of working memory that represented storage (simple span) or storage and concurrent processing (complex span), as well as verbal and visuospatial modalities, were included in this study to help determine whether the complexity or modality of working memory tasks mattered for predicting speech recognition in noise. Complex span working memory tasks, which include both storage and processing of information, are often the most consistent predictors of speech recognition in noise in the adult literature (Rönnberg et al, 2013). We did not find that to be the case for children in this study; rather, high correlations between the working memory measures prevented a comparison of the contributions of simple vs. complex span tasks. Future research may be able to examine the effects of working memory task complexity on speech recognition in noise for children.

Children’s language abilities and speech recognition

Counter to the hypotheses of the study, receptive vocabulary skills were not predictive of individual differences in speech recognition in noise for monosyllabic words. However, children with higher vocabulary abilities did have better speech recognition in noise for LP sentences and ZP sequences than children with poorer vocabulary abilities. The finding that vocabulary abilities were not associated with word recognition in noise went against our predictions and does not appear to be consistent with predictions from the LRM (Metsala & Walley, 1998) or the ELU model. The LRM suggests that children with better vocabularies are better able to perceive phonological differences between similar words because of their experience accommodating a larger number of novel words into their lexicon, which should also yield advantages for word recognition in noise. The ELU model would predict that children who have stronger language abilities, in general, will have advantages recognising speech in noise across different types of stimuli.

There could be several reasons for this apparent discrepancy. First, previous studies of the relationship between word recognition in noise and vocabulary have shown mixed findings. Some studies found a positive relationship between word recognition and vocabulary abilities consistent with the predictions of the LRM (Blamey et al, 2001; Munson, 2001; McCreery et al, 2015), but other studies have not (Eisenberg et al, 2000; Nittrouer et al, 2013). Several factors could have contributed to this inconsistency. The shared variance between working memory and vocabulary abilities in this study could have limited the ability to statistically differentiate the unique contributions of each variable to speech recognition in noise. The age of acquisition for word stimuli used in speech recognition tasks is another factor that may affect the relationship between vocabulary and speech recognition in children. Garlock et al (2001) examined the influence of vocabulary abilities on recognition for words that varied in the age of acquisition (early- vs late-acquired words). The speech recognition benefit for children with stronger vocabulary skills was limited to the conditions where the stimuli consisted of later-acquired words. Early-acquired words may not require the ability to detect fine-grained phonological differences between similar words. The lack of a relationship between vocabulary abilities and word recognition in this study and several previous studies may have arisen because these studies ensured that all of the words were within the lexicon of the younger children (e.g. Eisenberg et al, 2000; Nittrouer et al, 2013). However, including only early-acquired words may limit the extent to which children’s vocabularies contribute to word perception performance. The positive effects of vocabulary on LP sentences and ZP sequences suggest that children may rely on vocabulary or other related language abilities when the speech recognition task is more challenging. Vocabulary may also be related to other language abilities that were not measured in this study but that affected speech recognition in noise for LP sentences and ZP sequences. The finding that higher vocabulary scores were associated with better recognition in noise for LP sentences and ZP sequences is consistent with the ELU model.

Receptive syntax abilities were not related to LP sentence recognition after controlling for the effects of vocabulary and working memory. This observation went against our prediction that children with higher receptive syntax abilities would have an advantage listening in noise for stimuli with syntactic structure, like the LP sentences. The lack of an effect for receptive syntax on speech recognition in noise may have been related to the decision to use simple, subject-verb-object syntax as the only syntactic structure for the LP sentences. The inclusion of multiple syntactic structures and more complex sentence constructions could have provided an advantage for children with stronger receptive syntax. Additionally, receptive syntax ability measured by the TROG-2 was correlated with working memory and vocabulary abilities (Table 4). Thus, some of the effects of receptive syntax may have been accounted for in the statistical model by working memory or vocabulary abilities.

The effects of age on children’s speech recognition in noise

As in multiple previous studies of speech recognition in children with normal hearing (Elliott, 1979; Marshall et al, 1979; Hnath-Chisolm et al, 1998; Fallon et al, 2000; Johnson, 2000; Eisenberg et al, 2000; Blandy & Lutman, 2005; Talarico et al, 2006; Scollie, 2008; McCreery et al, 2010; McCreery & Stelmachowicz, 2011; Leibold & Buss, 2013), speech recognition in noise in this study improved with age for monosyllabic words and two different types of sentences. After accounting for working memory and vocabulary, however, age was not a significant predictor of speech recognition in noise. We interpret these results to suggest that age-related variability observed in previous studies is partly related to individual differences in working memory and linguistic abilities. Incorporating cognitive and linguistic skills into models of speech recognition for children would improve predictions of speech recognition in data-limited conditions, such as in background noise or in reverberation, including in classrooms and other realistic communication environments.

Clinical implications

The current findings have clinical implications for speech recognition testing as part of hearing assessment. Although clinical speech recognition tests are often considered to be indices of auditory abilities, working memory and vocabulary abilities influence speech recognition in noise for children with normal hearing. Even for stimuli like monosyllabic words, which might be chosen to minimise the cognitive demands of the clinical speech recognition task, children with higher working memory abilities were able to recognise words at lower SNRs than peers with more limited working memory abilities. Poor word recognition performance, particularly in background noise, should be interpreted cautiously because there are multiple potential contributing factors. For LP sentence and ZP sequence conditions, ~25–30% of school-age children in the study could not reach a criterion level of speech recognition performance in noise. This suggests that limiting the semantic and syntactic information available in speech recognition stimuli may reduce the number of children who can complete clinical speech recognition tasks with these stimuli.

Limitations and future directions

The current study has several limitations. The sample only included typically developing children from English-speaking homes. Children from diverse language backgrounds and those with other disabilities may be at risk for difficulties listening in background noise, but it is unclear whether a similar pattern of performance, and a similar relationship between cognitive and linguistic skills and speech recognition, would be observed in those populations. Furthermore, it is unclear whether the relationship would be different with other types of masking noise, such as maskers composed of two competing talkers (Hillock-Dunn et al, 2014; Corbin et al, 2016). Future research should seek to extend these results to children with hearing loss and to stimuli and maskers that better approximate what children listen to in realistic situations.

Another potential limitation is that high correlations among different measures of working memory prevented exploration of the specific aspects of working memory that might support speech recognition. Verbal and visuospatial modalities, as well as measures that reflect storage alone or concurrent storage and processing of information, were all related to speech recognition in noise across all three stimulus conditions to a similar degree. This raises questions about whether or not these results reflect the contributions of working memory specifically, or some other general factor related to intelligence. The current study did not include other measures of intelligence, such as verbal or nonverbal intelligence quotient, primarily because several previous studies have shown a limited relationship between these measures of general intelligence and speech perception in children with normal hearing in the age range for this study (Fallon et al, 2000; Talarico et al, 2006). Given the shared variance between predictors of language and working memory, the statistical power of the study also may have been insufficient to detect differences in the unique contributions of these variables to individual differences in speech recognition in noise. Future studies could attempt to quantify a broader range of cognitive variables to help to determine whether the effects observed in this study are related to working memory or other executive functions that are related to working memory. Larger sample sizes may also help to detect smaller effects of predictors with shared variance.
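The degree of overlap among predictors can be made concrete with a small calculation on the correlations reported in Table 4. The sketch below squares each correlation to obtain the proportion of shared variance and, as an added illustration that is not part of the original analysis, computes the variance inflation factor (VIF) that would apply if only that pair of predictors were entered in a regression. The dictionary and function names are hypothetical, and the full models contained more predictors, so these two-predictor values should be read as a rough lower bound.

```python
# Pairwise Pearson correlations copied from Table 4.
table4_r = {
    ("Age", "PPVT"): 0.85,
    ("Age", "Total WM"): 0.82,
    ("PPVT", "Total WM"): 0.76,
    ("PPVT", "TROG-2"): 0.73,
    ("TROG-2", "Total WM"): 0.66,
}


def shared_variance(r: float) -> float:
    """Proportion of variance two variables share (coefficient of determination)."""
    return r * r


def two_predictor_vif(r: float) -> float:
    """Variance inflation factor when exactly two predictors correlate at r."""
    if abs(r) >= 1.0:
        raise ValueError("VIF is undefined for perfectly correlated predictors")
    return 1.0 / (1.0 - r * r)


for (first, second), r in table4_r.items():
    print(
        f"{first} ~ {second}: r = {r:.2f}, "
        f"shared variance = {shared_variance(r):.0%}, "
        f"two-predictor VIF = {two_predictor_vif(r):.2f}"
    )
```

On these figures, age and receptive vocabulary share roughly 72% of their variance, which illustrates why the unique contribution of either predictor is difficult to isolate in a sample of this size.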

Conclusions

The purpose of this study was to determine the impact of working memory and language abilities on speech recognition in noise for typically developing children with normal hearing. Children with better working memory abilities had better speech recognition in noise than children with poorer working memory abilities, consistent with the ELU model that has been validated in adults. Receptive vocabulary skills were positively related to recognition of LP sentences and ZP sequences. However, children with higher receptive vocabulary abilities did not have an advantage over peers with poorer vocabulary for word recognition in noise. The lack of predictive ability for word recognition in noise may have been related to the use of only words that were within the lexicon of the youngest children in the sample. Sentence-level syntactic skills were not related to LP sentence recognition in noise after controlling for vocabulary and working memory. These results suggest that age-related changes in speech recognition in noise among school-age children are related, at least in part, to the development of working memory and linguistic skills. Models that attempt to predict speech recognition in children should include individual measures of working memory and linguistic skills.

Acknowledgments

Preliminary data for this manuscript were presented at the 2014 Annual Scientific and Technology Conference of the American Auditory Society. This work was supported by grants from NIH-NIDCD (R03 DC012635, R01 DC013591, P30 DC004662, T32 DC000013). The authors wish to thank Ellen Hatala and Kris Fernau for help with data collection and development of figures.

Abbreviations

- ELU: Ease of Language Understanding

- LRM: Lexical Restructuring Model

- LP: Low-predictability

- ZP: Zero-predictability

- AWMA: Automated Working Memory Assessment

- PPVT: Peabody Picture Vocabulary Test

- TROG: Test of Reception of Grammar

- SNR: Signal-to-noise ratio

Footnotes

Declaration of interest: The authors report no conflicts of interest. The authors alone are responsible for the content and writing of this article. This work was supported by grants from NIH-NIDCD (R03 DC012635, R01 DC013591, P30 DC004662, T32 DC000013).

References

- Akeroyd MA. Are individual differences in speech reception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. Int J Audiol. 2008;47:S53–S71. doi: 10.1080/14992020802301142.

- Alloway TP. Automated Working Memory Assessment. London: Pearson; 2007.

- Alloway TP, Gathercole SE, Pickering SJ. Verbal and visuospatial short-term and working memory in children: Are they separable? Child Dev. 2006;77:1698–1716. doi: 10.1111/j.1467-8624.2006.00968.x.

- Arehart KH, Souza P, Baca R, Kates JM. Working memory, age, and hearing loss: Susceptibility to hearing aid distortion. Ear Hear. 2013;34:251. doi: 10.1097/AUD.0b013e318271aa5e.

- Baddeley AD. Short-term and working memory. In: Tulving E, Craik FIM, editors. The Oxford Handbook of Memory. Oxford: Oxford University Press; 2000. pp. 77–92.

- Bates D, Maechler M, Bolker B, Walker S. lme4: Linear mixed-effects models using Eigen and S4. R Package Version. 2014:1.

- Bishop DV. Test for Reception of Grammar: TROG-2 version 2. Pearson Assessment; 2003.

- Blamey PJ, Sarant JZ, Paatsch LE, Barry JG, Bow CP, et al. Relationships among speech perception, production, language, hearing loss, and age in children with impaired hearing. J Speech Lang Hear Res. 2001;44:264–285. doi: 10.1044/1092-4388(2001/022).

- Blandy S, Lutman M. Hearing threshold levels and speech recognition in noise in 7-year-olds. Int J Audiol. 2005;44:435–443. doi: 10.1080/14992020500189203.

- Boersma P, Weenink D. Praat, a system for doing phonetics by computer. 2001.

- Caldwell A, Nittrouer S. Speech perception in noise by children with cochlear implants. J Speech Lang Hear Res. 2013;56:13–30. doi: 10.1044/1092-4388(2012/11-0338).

- Corbin NE, Bonino AY, Buss E, Leibold LJ. Development of open-set word recognition in children: Speech-shaped noise and two-talker speech maskers. Ear Hear. 2016;37:55–63. doi: 10.1097/AUD.0000000000000201.

- Cowan N. On the psychophysics of memory. In: Kaernbach C, Schröger E, Müller H, editors. Psychophysics Beyond Sensation: Laws and Invariants of Human Cognition. Scientific Psychology Series. Mahwah, NJ: Erlbaum; 2004. pp. 313–319.

- Davidson LS, Skinner MW. Audibility and speech perception of children using wide dynamic range compression hearing aids. Am J Audiol. 2006;15:141–153. doi: 10.1044/1059-0889(2006/018).

- DesJardin JL, Ambrose SE, Martinez AS, Eisenberg LS. Relationships between speech perception abilities and spoken language skills in young children with hearing loss. Int J Audiol. 2009;48:248–259. doi: 10.1080/14992020802607423.

- Dunn LM, Dunn DM. Peabody Picture Vocabulary Test (PPVT-4). Johannesburg: Pearson Education Inc; 2012.

- Elliott LL. Performance of children aged 9 to 17 years on a test of speech intelligibility in noise using sentence material with controlled word predictability. J Acoust Soc Am. 1979;66:651–653. doi: 10.1121/1.383691.

- Eisenberg LS, Shannon RV, Martinez AS, Wygonski J, Boothroyd A. Speech recognition with reduced spectral cues as a function of age. J Acoust Soc Am. 2000;107:2704–2710. doi: 10.1121/1.428656.

- Entwisle DR, Frasure NE. A contradiction resolved: Children’s processing of syntactic cues. Dev Psychol. 1974;10:852–857.

- Fallon M, Trehub SE, Schneider BA. Children’s perception of speech in multitalker babble. J Acoust Soc Am. 2000;108:3023–3029. doi: 10.1121/1.1323233.

- Garlock VM, Walley AC, Metsala JL. Age-of-acquisition, word frequency, and neighborhood density effects on spoken word recognition by children and adults. J Mem Lang. 2001;45:468–492.

- Goldman R, Fristoe M. Goldman-Fristoe Test of Articulation-2 (GFTA-2). Circle Pines, MN: American Guidance Service; 2000.

- Hillock-Dunn A, Taylor C, Buss E, Leibold LJ. Assessing speech perception in children with hearing loss: What conventional clinical tools may miss. Ear Hear. 2014;36:e57–e60. doi: 10.1097/AUD.0000000000000110.

- Hnath-Chisolm TE, Laipply E, Boothroyd A. Age-related changes on a children’s test of sensory-level speech perception capacity. J Speech Lang Hear Res. 1998;41:94–106. doi: 10.1044/jslhr.4101.94.

- Humes LE, Floyd SS. Measures of working memory, sequence learning, and speech recognition in the elderly. J Speech Lang Hear Res. 2005;48:224–235. doi: 10.1044/1092-4388(2005/016).

- Jerger S, Jerger J, Lewis S. Pediatric speech intelligibility test. II. Effect of receptive language age and chronological age. Int J Pediatr Otorhinolaryngol. 1981;3:101–118. doi: 10.1016/0165-5876(81)90026-4.

- Johnson CE. Children’s phoneme identification in reverberation and noise. J Speech Lang Hear Res. 2000;43:144–157. doi: 10.1044/jslhr.4301.144.

- Knecht HA, Nelson PB, Whitelaw GM, Feth LL. Background noise levels and reverberation times in unoccupied classrooms: Predictions and measurements. Am J Audiol. 2002;11:65–71. doi: 10.1044/1059-0889(2002/009).

- Lalonde K, Holt RF. Cognitive and linguistic sources of variance in 2-year-olds’ speech-sound discrimination: A preliminary investigation. J Speech Lang Hear Res. 2014;57:308–326. doi: 10.1044/1092-4388(2013/12-0227).

- Leibold LJ, Buss E. Children’s identification of consonants in a speech-shaped noise or a two-talker masker. J Speech Lang Hear Res. 2013;56:1144–1155. doi: 10.1044/1092-4388(2012/12-0011).

- Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49:467–477.

- Lunner T. Cognitive function in relation to hearing aid use. Int J Audiol. 2003;42:S49–S58. doi: 10.3109/14992020309074624.

- Magimairaj BM, Montgomery JW. Children’s verbal working memory: Role of processing complexity in predicting spoken sentence comprehension. J Speech Lang Hear Res. 2012;55:669–682. doi: 10.1044/1092-4388(2011/11-0111).

- Marshall L, Brandt JF, Marston LE, Ruder K. Changes in number and type of errors on repetition of acoustically distorted sentences as a function of age in normal children. J Am Aud Soc. 1979;4:218–225.

- Mattys SL, Davis MH, Bradlow AR, Scott SK. Speech recognition in adverse conditions: A review. Lang Cogn Process. 2012;27:953–978.

- McElree B, Foraker S, Dyer L. Memory structures that subserve sentence comprehension. J Mem Lang. 2003;48:67–91.

- McCreery R, Ito R, Spratford M, Lewis D, Hoover B, et al. Performance-intensity functions for normal-hearing adults and children using computer-aided speech perception assessment. Ear Hear. 2010;31:95–101. doi: 10.1097/AUD.0b013e3181bc7702.

- McCreery RW, Walker EA, Spratford M, Oleson J, Bentler R, et al. Speech recognition and parent ratings from auditory development questionnaires in children who are hard of hearing. Ear Hear. 2015;36:60S–75S. doi: 10.1097/AUD.0000000000000213.

- McCreery RW, Stelmachowicz PG. Audibility-based predictions of speech recognition for children and adults with normal hearing. J Acoust Soc Am. 2011;130:4070–4081. doi: 10.1121/1.3658476.

- McKellin WH, Shahin K, Hodgson M, Jamieson J, Pichora-Fuller K. Pragmatics of conversation and communication in noisy settings. J Pragmat. 2007;39:2159–2184.

- Metsala JL, Walley AC. Spoken vocabulary growth and the segmental restructuring of lexical representations: Precursors to phonemic awareness and early reading ability. 1998.

- Munson B. Relationships between vocabulary size and spoken word recognition in children aged 3 to 7. Contemp Issues Commun Sci Disord. 2001;28:20–29.

- Muñoz K, Blaiser DK, Schofield H. Aided speech perception testing practices for three-to-six-year old children with permanent hearing loss. J Educ Aud. 2012;18:53–60.

- Nelson PB, Soli S. Acoustical barriers to learning: Children at risk in every classroom. Lang Speech Hear Serv Sch. 2000;31:356–361. doi: 10.1044/0161-1461.3104.356.

- Nittrouer S, Caldwell-Tarr A, Tarr E, Lowenstein JH, Rice C, et al. Improving speech-in-noise recognition for children with hearing loss: Potential effects of language abilities, binaural summation, and head shadow. Int J Audiol. 2013;52:513–525. doi: 10.3109/14992027.2013.792957.

- Norman DA, Bobrow DG. On data-limited and resource-limited processes. Cogn Psychol. 1975;7:44–64.

- Pichora-Fuller MK, Schneider BA, Daneman M. How young and old adults listen to and remember speech in noise. J Acoust Soc Am. 1995;97:593–608. doi: 10.1121/1.412282.

- R Core Development Team. R: A language and environment for statistical computing [Computer software]. 2014. www.R-project.org.

- Rönnberg J. Cognition in the hearing impaired and deaf as a bridge between signal and dialogue: A framework and a model. Int J Audiol. 2003;42:S68–S76. doi: 10.3109/14992020309074626.

- Rönnberg J, Lunner T, Zekveld A, Sörqvist P, Danielsson H, et al. The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Front Syst Neurosci. 2013;7:31. doi: 10.3389/fnsys.2013.00031.

- Schafer EC. Speech perception in noise measures for children: A critical review and case studies. J Educ Aud. 2010;16:4–15.

- Scollie SD. Children’s speech recognition scores: The speech Intelligibility Index and proficiency factors for age and hearing level. Ear Hear. 2008;29:543–556. doi: 10.1097/AUD.0b013e3181734a02.

- Sörqvist P, Stenfelt S, Rönnberg J. Working memory capacity and visual–verbal cognitive load modulate auditory–sensory gating in the brainstem: Toward a unified view of attention. J Cogn Neurosci. 2012;24:2147–2154. doi: 10.1162/jocn_a_00275.

- Stelmachowicz PG, Hoover BM, Lewis DE, Kortekaas RW, Pittman AL. The relation between stimulus context, speech audibility, and perception for normal-hearing and hearing-impaired children. J Speech Lang Hear Res. 2000;43:902–914. doi: 10.1044/jslhr.4304.902.

- Storkel HL, Hoover JR. An online calculator to compute phonotactic probability and neighborhood density on the basis of child corpora of spoken American English. Behav Res Methods. 2010;42:497–506. doi: 10.3758/BRM.42.2.497.

- Talarico M, Abdilla G, Aliferis M, Balazic I, Giaprakis I, et al. Effect of age and cognition on childhood speech in noise perception abilities. Audiol Neurootol. 2006;12:13–19. doi: 10.1159/000096153.

- Vance M, Martindale N. Assessing speech perception in children with language difficulties: Effects of background noise and phonetic contrast. Int J Speech-Lang Pathol. 2012;14:48–58. doi: 10.3109/17549507.2011.616602.

- Vance M, Rosen S, Coleman M. Assessing speech perception in young children and relationships with language skills. Int J Audiol. 2009;48:708–717. doi: 10.1080/14992020902930550.

- Wightman F, Allen P. Individual differences in auditory capability among preschool children. In: Werner LA, Rubel EW, editors. Developmental Psychoacoustics. Washington, DC: American Psychological Association; 1992. pp. 113–133.

- Zekveld AA, Kramer SE, Festen JM. Cognitive load during speech perception in noise: The influence of age, hearing loss, and cognition on the pupil response. Ear Hear. 2011;32:498–510. doi: 10.1097/AUD.0b013e31820512bb.