Abstract

Sensitivity to others’ emotions is foundational for many aspects of human life, yet computational models do not currently approach the sensitivity and specificity of human emotion knowledge. Perception of isolated physical expressions largely supplies ambiguous, low-dimensional, and noisy information about others' emotional states. By contrast, observers attribute specific granular emotions to another person based on inferences of how she interprets (or “appraises”) external events in relation to her other mental states (goals, beliefs, moral values, costs). These attributions share neural mechanisms with other reasoning about minds. Situating emotion concepts in a formal model of people's intuitive theories about other minds is necessary to effectively capture humans' fine-grained emotion understanding.

Introduction

“I’d rather write an encyclopedia about common emotions,” he admitted. “From A for ‘Anxiety about picking up hitchhikers’ to E for ‘Early risers’ smugness’ through to Z for ‘Zealous toe concealment, or the fear that the sight of your feet might destroy someone’s love for you.’ ” — The little Paris bookshop by Nina George.

If your friend is experiencing early risers’ smugness, how would you know? From a quick glance at her face and posture, you see she is experiencing a low-arousal positive emotion. To refine this attribution, though, you would need knowledge of the context and cause of the emotion. She is more likely to feel smug, you know intuitively, if she chose to wake up early (rather than being woken involuntarily by a screaming baby) and if she used those extra hours to her relative advantage (rather than wasting them counting sheep). As this example illustrates, human observers can recognize and reason about highly-differentiated, or fine-grained, emotions. Here we propose that fine-grained emotion concepts are best captured in a Bayesian hierarchical generative model of the intuitive theory of other minds [1*].

The role of concepts in emotion has been much disputed [2–5]. This question is particularly hard for first person emotions: when I myself feel anxious, what is the role of my concept of “anxiety” in the construction of my experience? Here, we selectively tackle an easier problem: the problem of other minds. We recognize anxiety in our friends, distinguish their anxiety from their disappointment or regret, and try to respond in appropriate ways [6]; but how do we make such specific and accurate emotion attributions to another person? In order to formally address that question, we situate emotion concepts in a computational model of the intuitive theory of mind [1*,7]. (Note that intuitive or lay theories are causally structured, but generally not explicit, declarative, or introspectively accessible [8]).

Situating emotion concepts within an intuitive theory of mind

Initial scientific descriptions of an “intuitive theory of mind” focused on its application to predicting others’ intentional actions [9]. Minimally, intentional actions can be predicted (and explained) as consequences of the agent’s beliefs and desires, and modeled as inverse planning [10]. Subsequent models have considerably extended this basic premise to capture causal relations between other kinds of mental states. For example: Greg’s choices additionally depend on (what he believes about) the costs of his actions [11]; his beliefs update in response to new evidence [7]; his actions are influenced by his habits [12]; and so on. A hierarchical Bayesian model of this intuitive causal theory can explain both observers’ forward inferences (predicting Greg’s actions given his beliefs and desires) and inverse inferences (inferring Greg’s beliefs and desires given his actions) [10].
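The forward and inverse inferences described above can be illustrated with a minimal sketch. It is not the model of [10]: the two actions, the utilities, the softmax rationality parameter `beta`, and the uniform prior are all assumptions made for illustration, and beliefs are left out so that only Greg's desire is inferred.

```python
import math

def action_likelihood(action, desire, beta=2.0):
    """P(action | desire) under a softmax ("noisily rational") planner.

    Utilities are illustrative: reaching for the desired item is worth 1,
    reaching for anything else is worth 0.
    """
    actions = ["reach_apple", "reach_cookie"]
    utility = {a: (1.0 if a == "reach_" + desire else 0.0) for a in actions}
    z = sum(math.exp(beta * utility[a]) for a in actions)
    return math.exp(beta * utility[action]) / z

def infer_desire(action, prior):
    """Inverse inference: P(desire | action) by Bayes' rule."""
    unnorm = {d: prior[d] * action_likelihood(action, d) for d in prior}
    total = sum(unnorm.values())
    return {d: p / total for d, p in unnorm.items()}

prior = {"apple": 0.5, "cookie": 0.5}  # hypothetical prior over Greg's desires
# Forward inference: predict Greg's action from a known desire.
p_forward = action_likelihood("reach_cookie", "cookie")
# Inverse inference: recover Greg's desire from his observed action.
posterior = infer_desire("reach_cookie", prior)
print(p_forward, posterior)
```

The same generative function, `action_likelihood`, serves both directions: it is evaluated directly for prediction and inverted with Bayes' rule for explanation.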

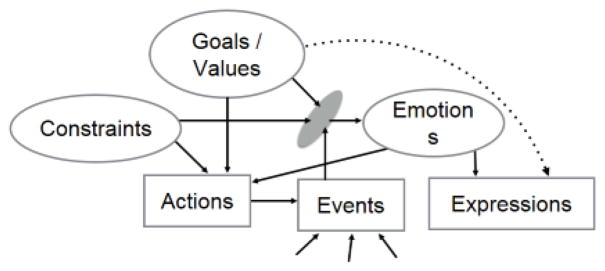

People readily incorporate emotions in their intuitive reasoning about other minds [13–15], but only recently have computational models of theory of mind been elaborated to include emotion concepts. Minimally, in the intuitive theory, emotions (or emotional reactions) are caused by how the person interprets (or “appraises”) external events in relation to his other mental states (goals, beliefs, moral values, costs, traits, etc.; Figure 1). For example, Greg’s emotional reactions will depend on whether (according to Greg) external events fulfill his goals, contradict his beliefs, reduce the constraints or costs of his preferred actions, violate his values, and so on. As with intentional actions, the same intuitive theory also supports inverse inferences. In the intuitive theory, emotions (which are internal mental states) cause emotional expressions (which are externally observable behaviors), so observers can use perceived emotional behaviors to infer underlying emotions (i.e. perform an inverse inference from observed effects to unobserved cause). Situating emotion concepts within the intuitive theory of mind in this way may seem obvious, but has many ramifications, some of which we explore in the remainder of this article.

Figure 1.

A box and arrow simplification of part of the intuitive causal theory of other minds. Ovals denote unobservable, internal states; rectangles are externally observable. Constraints include appraisals of the costs of actions, what is possible, what is controllable, and other beliefs. Goals and values include not only local goals and intentions but also long-term values like relationships and status, and therefore can directly influence expressions. At the core of the model is the inferred “appraisal” process: interpreting external events through the lens of their relevance for one’s goals, beliefs, costs, and so on. Inferred appraisals cause emotions (internal states) which cause expressions (observable behaviors). An observer can therefore predict emotions based on inferred appraisals (following the causal arrows) or from the observed expressions (inverse of the causal arrows). Compare similar models in [14,18**,19].
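One way to write down the causal structure in Figure 1 is sketched here. The notation is ours and is introduced only for illustration: $x$ is the external event, $m$ the target's other mental states (goals, beliefs, values, costs), $a$ the inferred appraisal, $e$ the emotion, and $o$ the observed expression. The sketch assumes that expressions depend on the event only through the emotion, so it omits the direct influence of goals and values on expressions.

```latex
\begin{align}
P(e \mid x) &= \sum_{m}\sum_{a} P(e \mid a)\, P(a \mid x, m)\, P(m)
  && \text{(forward: event to emotion)} \\
P(e \mid o) &\propto P(o \mid e)\, P(e)
  && \text{(inverse: expression to emotion)} \\
P(e \mid x, o) &\propto P(o \mid e)\, P(e \mid x)
  && \text{(both sources combined)}
\end{align}
```

Under these assumptions, the forward prediction acts as a prior over emotions that the observed expression then reweights.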

Specificity and development of emotion inference

First, this approach offers a natural, systematic way to formalize highly-differentiated predictions of others’ emotions, and the links between those predictions and the rest of our sophisticated reasoning about other minds. Although no existing model has yet fulfilled this promise, parts of the intuitive theory of mind have already been well-described in Bayesian generative causal models [16*,17]. Capitalizing on this progress, the same formalizations can be used to model (some) human emotion predictions. For example, in a simple lottery context, two parameters of the target’s appraisal could be inferred directly from a description of the event — his overall reward, and his prediction error — and combined to capture in quantitative detail the emotions that observers predicted [18**]. Relatedly, Wu and colleagues showed participants simple moral scenarios, in which Grace puts white powder in another girl’s coffee [19]. The powder turns out to be poison, and the girl dies. Participants use Grace’s smiling facial expression to infer both that Grace knew the powder was poison, and that she wanted the girl to die. These inferences could be precisely described as inverse inferences in the participants’ intuitive theory of mind. (In the real world, observers make similarly momentous inverse inferences based on emotional reactions [20,21].)
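The lottery example can be made concrete with a small sketch in which two appraisal variables, reward and prediction error, are computed from a description of the event and combined linearly. The linear form, the emotion labels, the rating scale, and every weight below are assumptions chosen by hand for illustration; they are not the fitted model or values reported in [18**].

```python
def appraise(outcomes, probabilities, won):
    """Compute two appraisal variables for a lottery outcome.

    reward: the amount the player actually won.
    prediction_error: the amount won minus the expected value of the lottery.
    """
    expected = sum(o * p for o, p in zip(outcomes, probabilities))
    return {"reward": won, "prediction_error": won - expected}

def predict_emotions(appraisal, weights):
    """Predicted intensity of each emotion as a weighted sum of appraisals."""
    return {
        emotion: w["bias"]
        + w["reward"] * appraisal["reward"]
        + w["prediction_error"] * appraisal["prediction_error"]
        for emotion, w in weights.items()
    }

# Hypothetical weights, chosen by hand for illustration (not fitted values).
WEIGHTS = {
    "happy": {"bias": 3.0, "reward": 0.02, "prediction_error": 0.03},
    "disappointed": {"bias": 3.0, "reward": -0.01, "prediction_error": -0.04},
}

# A wheel with three possible payouts and their probabilities.
wheel = ([20, 50, 100], [0.25, 0.25, 0.5])
low = predict_emotions(appraise(*wheel, won=20), WEIGHTS)
high = predict_emotions(appraise(*wheel, won=100), WEIGHTS)
print(low, high)
```

Because the prediction error depends on the whole wheel and not only on the amount won, the same payout can yield different predicted emotions on different wheels.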

Even children’s earliest understanding of others’ emotions implies (simple) inferred appraisals. Based on an agent’s observed motion path (and a principle of rational action), preverbal infants can infer the agent’s goal (e.g. to get over the wall); then, relative to that goal, infants can distinguish between outcomes that the agent would appraise as goal-consistent or not [22]. Critically, by 10 months old, infants also appear to predict a relevant emotion (or affective state) that causes subsequent expressions (laughing or crying) and are surprised if the agent whose goal was fulfilled then shows a negative-valence behavior, crying [23**] (see also [24]). During development, children’s intuitive theory of mind becomes more sophisticated, and their third-person emotion attributions follow suit [15,25–30]. (Note that while some developmental psychologists reserve the term “theory of mind” for a meta-representational understanding of beliefs, e.g. [30], here the Bayesian model of theory of mind is a generative causal theory, encompassing goals and actions as well as beliefs, costs, and values [10,16*].)

The long-term goal, however, is not just to capture one or two components of observers’ emotion knowledge; rather, it is to develop a formal model that captures all of the same inferred appraisals as human observers do. Promising for this line of work, when given human labels for the target’s appraisals, computational models can already capture a relatively wide and differentiated range of human emotion predictions. Two recent studies provide converging evidence. Using human ratings for 25 appraisal features, a model correctly chose an emotion label (out of 14) for 51% of 6000 real-life events; only 10% of the model’s choices were judged “wrong” by human observers [31]. Similarly, using human ratings for 38 inferred appraisals, a simple model correctly chose the emotion label (out of 20) for 57% of 200 short stories; human accuracy on the same test was 63% [32**]. These models do not yet capture the link from the event to the target’s values, goals, beliefs, and costs, and thus to inferred appraisals. Still, the models’ success suggests that once these links are included, the intuitive theory of mind will capture a substantial portion of shared human knowledge about emotions.
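To show what choosing an emotion label from appraisal ratings can look like, here is a minimal sketch using a nearest-centroid classifier. This technique is swapped in for illustration and is not claimed to be the method of [31] or [32**]; the three appraisal features, the emotion labels, and all ratings are invented toy values.

```python
import math

def centroid(vectors):
    """Mean appraisal vector for one emotion label."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]

def classify(appraisal, centroids):
    """Choose the label whose centroid is closest in appraisal space."""
    return min(centroids, key=lambda label: math.dist(appraisal, centroids[label]))

# Toy ratings on three made-up appraisal features:
# (goal_congruence, caused_by_self, expected), each on a 0-1 scale.
training = {
    "pride":  [[0.9, 0.9, 0.6], [0.8, 1.0, 0.5]],
    "regret": [[0.1, 0.9, 0.4], [0.2, 0.8, 0.3]],
    "anger":  [[0.1, 0.1, 0.2], [0.2, 0.0, 0.3]],
}
centroids = {label: centroid(vs) for label, vs in training.items()}

# A new event rated as goal-incongruent and self-caused.
print(classify([0.15, 0.85, 0.5], centroids))
```

In this toy space, regret and anger share low goal congruence and are separated only by the self-causation feature, which hints at why a richer set of appraisals supports finer distinctions.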

Ambiguous perception and precise predictions

Second, our proposal offers novel insight into predictions based on combinations of inferred appraisals (forward inference) and perceived emotional expressions (inverse inference). People intuit that faces contain the most revealing information about others’ emotions [33,34]. Perhaps surprisingly, mounting scientific evidence shows that human emotion attribution from faces is actually uncertain, noisy, and low-dimensional [35–37]. Many different emotions can be attributed to the same facial configuration [38*–41]; and the space of emotions perceived in faces can be captured in just a handful of dimensions [42,43*]. Even the valence of the event (goal-congruent or not) is not reliably perceived in high-intensity faces: the exact same facial configuration can be attributed to extreme joy (the unexpected return of a child from military service), extreme distress (witnessing a terrorist attack), extreme pleasure (orgasm), or extreme pain (unanesthetized nipple piercing) with equal plausibility [33]. To disambiguate these emotions, observers rely on body posture (open arms, lifted chest [44]) or inferred appraisals of the event (“he won the race” [45]).

Although both body posture and event information are known to disambiguate emotion recognition [33,37,45,46], our model makes a novel distinction between inverse inferences (from bodies) and forward inferences (from event-appraisals). On one hand, observers intuitively infer a common cause (an underlying emotion) of observable face, body and vocal cues. Thus, integrating facial and body configuration, as well as vocal tone, can improve the reliability and specificity of inverse inferences [44,47,48]. Postural information is less ambiguous than facial configuration when perceived at high intensity, from a distance, etc. [44]; similarly, vocal bursts are more informative for distinguishing among positive emotions [49]. As a result, depending on the context, the modality with the most reliable information will appear to dominate emotion attributions [18**,46]; when one cue is ambiguous, cues from other modalities can “sharpen” the inferred cause by shifting attributions among similar, or nearby, emotions [37]. On the other hand, event information is intuitively relevant to the cause of the emotion, rather than its consequences. Additional event information can make emotion attributions more reliable not only by continuously shifting among similar emotions, but also by selecting among separated possibilities [50], because partial event knowledge can generate predictions of distinct (dissimilar, non-overlapping) alternative emotions (e.g. how will he feel after he asks his crush on a first date?). This difference between forward and inverse inferences has been obscured in prior research that confused postural and event-context cues: for example, a photograph of nipple piercing [33] contains mainly event information supporting inferred appraisals, not an emotional posture.
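The common-cause account of inverse inference can be sketched with standard reliability-weighted cue combination, in the spirit of the cue integration framework [46]. The sketch assumes independent Gaussian cues about a single latent valence value, and the means and variances are hypothetical. It covers only the integration of expressive cues, not the forward inference from event information.

```python
def integrate_cues(cues):
    """Combine independent Gaussian cues about one latent quantity.

    Each cue is (mean, variance). The combined estimate weights every cue
    by its precision (1 / variance), so reliable cues dominate.
    """
    precisions = [1.0 / var for _, var in cues]
    total = sum(precisions)
    mean = sum(m * p for (m, _), p in zip(cues, precisions)) / total
    return mean, 1.0 / total

# Hypothetical valence estimates on a -1 (negative) to +1 (positive) scale.
face = (0.0, 1.0)     # high-intensity face: ambiguous, high variance
body = (0.75, 0.25)   # posture: clearer, low variance
mean, var = integrate_cues([face, body])
print(mean, var)
```

The combined estimate sits closer to the more reliable cue and has lower variance than either cue alone, which is one way to read the claim that the most reliable modality appears to dominate.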

Relatedly, we can distinguish between two ways that “dynamic” facial expressions contain more information than static ones [51]. On the one hand, dynamic change can more precisely differentiate expressive from structural facial features (e.g. a person with dark brows from a person making an angry expression) [52–55]. Dynamic change can also provide more clarity on mixed expressions, by separating the mixture in time [56]. In these ways, dynamic expressions may lead to more specific or more confident inverse inferences (though observers can also be surprisingly insensitive to dynamic information per se [36,57]). On the other hand, when temporal change in the face coincides with temporal change in the external event structure, dynamics support forward inference by highlighting the emotionally-relevant aspect of an event [58]. For example, observers are generally quite insensitive to elements of surprise (“wide-eyed”) in mixed expressions [19,42]. When a change of expression is temporally coincident with an event outcome, though, observers accurately infer that the information was unexpected and change their inferred appraisals accordingly [19]. The temporal sequence of emotions can further constrain inferred appraisals; if people intuit that cognitive processes occur at different speeds then the order of expressions can indicate which hidden mental variable is associated with which emotion.

Third, we propose that there is a key asymmetry between forward and inverse inferences of emotion. The forward inference depends on inferred appraisals which are highly differentiated and granular. However, people’s intuitive theory of mind is also biased and based on simplifying heuristics, inducing systematic errors [59]. We assume people share our desires, values, and norms [60]. We underestimate people’s ability to cope, recover, and rebound from significant events [61,62]. These biases in the intuitive theory of mind translate into systematic errors in predictions of emotions. By contrast, inverse inference from emotional expressions is uncertain and low-dimensional, but also relatively accurate and unbiased. Combining both sources is therefore uniquely powerful: forward inferences from inferred appraisals can suggest highly specific, granular, differentiated predictions of another person’s emotions; perception of that person’s expressions can confirm or contradict these predictions, allowing for rapid correction within a reduced possibility space.
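The complementary roles of the two inferences can be sketched as a Bayesian update, with the forward prediction as a fine-grained prior and the observed expression as a coarse likelihood. The emotion labels, the prior probabilities, the assumption that expressions carry only valence, and the `reliability` value are all hypothetical choices made for illustration.

```python
def normalize(weights):
    """Rescale non-negative weights so they sum to 1."""
    total = sum(weights.values())
    return {k: v / total for k, v in weights.items()}

# Forward inference: a specific but possibly biased prediction from inferred
# appraisals (hypothetical numbers for a friend who woke up early).
forward_prior = normalize(
    {"smug": 0.5, "content": 0.2, "irritated": 0.25, "exhausted": 0.05}
)

# Inverse inference: expressions are modeled as carrying only coarse valence.
VALENCE = {
    "smug": "positive", "content": "positive",
    "irritated": "negative", "exhausted": "negative",
}

def expression_likelihood(observed_valence, emotion, reliability=0.8):
    """P(observed valence | emotion): correct with probability `reliability`."""
    return reliability if VALENCE[emotion] == observed_valence else 1.0 - reliability

def combine(prior, observed_valence):
    """Posterior over emotions given the forward prior and an observed expression."""
    return normalize(
        {e: p * expression_likelihood(observed_valence, e) for e, p in prior.items()}
    )

confirmed = combine(forward_prior, "positive")   # expression agrees with prediction
corrected = combine(forward_prior, "negative")   # expression contradicts it
print(confirmed, corrected)
```

In this sketch the expression never distinguishes smugness from contentment, since their ratio is set entirely by the forward prior, but a negative expression is enough to redirect the attribution toward the negative emotions.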

Neural representations of fine-grained emotion concepts

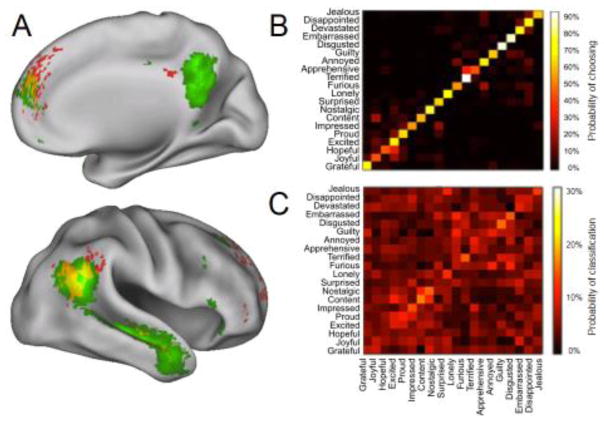

Finally, situating emotion concepts within the intuitive theory of mind fits well with recent neuroscientific evidence. Highly-differentiated representations of others’ emotions are almost exclusively found in brain regions associated with theory of mind, especially in temporo-parietal and medial frontal cortex [32**,63,64] (Figure 2). These representations are abstract and amodal, generalizing across emotions inferred from stories, events, and expressions [32**,65]. By contrast, perception of emotional expressions, and even integration of those expressions across modalities, depends on distinct brain regions, especially the superior temporal sulcus [66–70]. These two processes are dissociable in individual differences [71–73], and in neurodegenerative disorders [74]. Taken together, these lines of evidence strongly support the link between emotion concepts and the rest of an observer’s intuitive theory of mind.

Figure 2.

(A) Fine-grained discrimination of others’ emotions (20-way classification) from short verbal narratives (in red) depends on the same brain regions that are involved in intuitive reasoning about other minds (green), especially temporo-parietal junction and medial prefrontal cortex. (B) The behavioral confusion matrix for human participants: bright colors show the probability of a human choosing each label, averaged over 10 stories in each of 20 categories. (C) The confusion matrix based on patterns of response in brain regions associated with theory of mind. Adapted with permission from [32**].

Conclusion

Two lines of scientific research have made substantial progress in parallel, and now stand to make even more progress in concert. On the one hand, formal computational models have begun to capture the core of people’s intuitive theory of mind. These models can accurately model inferences over continuous quantitative variables, within abstract hierarchical structures. As of yet, however, these models have made limited progress in the domain of emotion understanding. On the other hand, the conceptual act theory of emotion attribution identifies the powerful influence of emotion concepts on emotion attribution (though emotion concepts are usually operationalized as words, or labels) [75,76]. Appraisal theory describes some of the content of shared knowledge about emotional events (though as a hand-picked and manually-coded list, rather than a generative causal model) [31]. Using the intuitive theory of mind as a framework to formalize observers’ inferences about a target’s appraisals offers a powerful tool to capture, and even recreate in a computer [77], our detailed knowledge of how others feel.

Highlights

Humans recognize and reason about specific fine-grained emotions in others.

Generative causal models of people's intuitive theory of mind can formalize human emotion attributions.

Observed emotional expressions yield low-dimensional, noisy and uncertain emotion attributions.

Forward predictions of others' emotions based on event structure are precise but subject to systematic biases.

These complementary inferences can synergize to yield rich, granular understandings of others' minds.

Footnotes

We thank the NSF Center for Brains, Minds and Machines (CCF-1231216) and NIH Grant 1R01 MH096914-01A1 (R.S.) for supporting the work; and Josh Tenenbaum, Laura Schulz and Stefano Anzellotti for vigorous discussion.

References

- 1*. Lake BM, Ullman TD, Tenenbaum JB, Gershman SJ. Building Machines That Learn and Think Like People. Behav Brain Sci. 2016:1–101. doi: 10.1017/S0140525X16001837. Comprehensive argument for using computational generative models to build human-like artificial intelligence to address the current major shortcoming of machine learning. Stresses the use of intuitive theories of mind to infer human cognitive processes and proposes machine learning challenges that target specific methodical leaps in service of this aim.

- 2. Barrett LF. The Conceptual Act Theory: A Précis. Emotion Review. 2014;6:292–297. doi: 10.1177/1754073914534479.

- 3. Moors A. Flavors of Appraisal Theories of Emotion. Emotion Review. 2014;6:303–307. doi: 10.1177/1754073914534477.

- 4. Tracy JL. An Evolutionary Approach to Understanding Distinct Emotions. Emotion Review. 2014;6:308–312. doi: 10.1177/1754073914534478.

- 5. Adolphs R. How should neuroscience study emotions? by distinguishing emotion states, concepts, and experiences. Social Cognitive and Affective Neuroscience. 2016:nsw153–8. doi: 10.1093/scan/nsw153.

- 6. Zaki J, Williams WC. Interpersonal emotion regulation. Emotion. 2013;13:803–810. doi: 10.1037/a0033839.

- 7. Baker CL, Jara-Ettinger J, Saxe R, Tenenbaum JB. Rational quantitative attribution of beliefs, desires, and percepts in human mentalizing. Nat Hum Behav. 2017 In press.

- 8. Murphy GL, Medin DL. The role of theories in conceptual coherence. Psychological Review. 1985;92:289–316.

- 9. Wellman HM. Making minds: How theory of mind develops. Oxford University Press; 2014.

- 10. Baker CL, Saxe R, Tenenbaum JB. Action understanding as inverse planning. Cognition. 2009;113:329–349. doi: 10.1016/j.cognition.2009.07.005.

- 11. Jara-Ettinger J, Gweon H, Tenenbaum JB, Schulz LE. Children's understanding of the costs and rewards underlying rational action. Cognition. 2015;140:14–23. doi: 10.1016/j.cognition.2015.03.006.

- 12. Gershman SJ, Gerstenberg T, Baker CL, Cushman FA. Plans, Habits, and Theory of Mind. PLoS ONE. 2016;11:e0162246–24. doi: 10.1371/journal.pone.0162246.

- 13. Ong DC, Zaki J, Goodman ND. Emotions in lay explanations of behavior. CogSci. 2016:360–365.

- 14. Böhm G, Pfister H-R. How people explain their own and others' behavior: a theory of lay causal explanations. Front Psychol. 2015;6:139. doi: 10.3389/fpsyg.2015.00139.

- 15. Harris PL, de Rosnay M, Pons F. Understanding emotion. In: Barrett LF, Lewis M, Haviland-Jones JM, editors. Handbook of Emotions. 4. 2016.

- 16*. Jara-Ettinger J, Gweon H, Schulz LE, Tenenbaum JB. The Naïve Utility Calculus: Computational Principles Underlying Commonsense Psychology. Trends Cogn Sci. 2016;20:589–604. doi: 10.1016/j.tics.2016.05.011. Formal generative Bayesian model of how both children and adults use intuitive theories of others' subjective utility functions to infer the contents of their minds, and employ these inferences as bases for action. Such inferences can be sophisticated and abstract, spanning others' beliefs, desires, preferences, prior knowledge, and character traits.

- 17. Moutoussis M, Fearon P, El-Deredy W, Dolan RJ, Friston KJ. Bayesian inferences about the self (and others): A review. Consciousness and Cognition. 2014;25:67–76. doi: 10.1016/j.concog.2014.01.009.

- 18**. Ong DC, Zaki J, Goodman ND. Affective cognition: Exploring lay theories of emotion. Cognition. 2015;143:141–162. doi: 10.1016/j.cognition.2015.06.010. Uses a formal intuitive theory of mind to model observer’s quantitative predictions of others’ emotions (in a lottery context) based on inferred event appraisals, based on emotional expressions, and based on combinations of both sources of information.

- 19. Wu Y, Baker CL, Tenenbaum JB. Joint inferences of belief and desire from facial expressions. CogSci. 2014:1796–1801.

- 20. The weeping Oscar Pistorius and a final question: Has it all been an act? 2014 https://www.washingtonpost.com/news/morning-mix/wp/2014/10/15/the-weeping-oscar-pistorius-and-a-final-question-has-it-all-been-an-act.

- 21. Armstrong K, Semien R, Miller TC, Glass I. Anatomy of Doubt, This American Life. 2016 https://www.thisamericanlife.org/radio-archives/episode/581/anatomy-of-doubt.

- 22. Gergely G, Csibra G. Teleological reasoning in infancy: the naïve theory of rational action. Trends Cogn Sci. 2003;7:287–292. doi: 10.1016/S1364-6613(03)00128-1.

- 23**. Skerry AE, Spelke ES. Preverbal infants identify emotional reactions that are incongruent with goal outcomes. Cognition. 2014;130:204–216. doi: 10.1016/j.cognition.2013.11.002. Three elegant experiments provide evidence that 10-month-old infants predict that an agent’s emotional reactions will depend on whether the agent’s inferred goals are fulfilled or not.

- 24. Repacholi BM, Meltzoff AN, Toub TS, Ruba AL. Infants’ generalizations about other people’s emotions: Foundations for trait-like attributions. Developmental Psychology. 2016;52:364–378. doi: 10.1037/dev0000097.

- 25. Ong DC, Asaba M, Gweon H. Young children and adults integrate past expectations and current outcomes to reason about others' emotions. CogSci. 2016:135–140.

- 26. Ronfard S, Harris PL. When will Little Red Riding Hood become scared? Children’s attribution of mental states to a story character. Developmental Psychology. 2014;50:283–292. doi: 10.1037/a0032970.

- 27. Ornaghi V, Grazzani I. The relationship between emotional-state language and emotion understanding: A study with school-age children. Cognition & Emotion. 2013;27:356–366. doi: 10.1080/02699931.2012.711745.

- 28. Nelson JA, de Lucca Freitas LB, O’Brien M, Calkins SD, Leerkes EM, Marcovitch S. Preschool-aged children's understanding of gratitude: Relations with emotion and mental state knowledge. British Journal of Developmental Psychology. 2012;31:42–56. doi: 10.1111/j.2044-835X.2012.02077.x.

- 29. Weimer AA, Sallquist J, Bolnick RR. Young Children's Emotion Comprehension and Theory of Mind Understanding. Early Education & Development. 2012;23:280–301. doi: 10.1080/10409289.2010.517694.

- 30. O'Brien M, Miner Weaver J, Nelson JA, Calkins SD, Leerkes EM, Marcovitch S. Longitudinal associations between children's understanding of emotions and theory of mind. Cognition & Emotion. 2011;25:1074–1086. doi: 10.1080/02699931.2010.518417.

- 31. Scherer KR, Meuleman B. Human Emotion Experiences Can Be Predicted on Theoretical Grounds: Evidence from Verbal Labeling. PLoS ONE. 2013;8:e58166–8. doi: 10.1371/journal.pone.0058166.

- 32**. Skerry AE, Saxe R. Neural Representations of Emotion Are Organized around Abstract Event Features. Current Biology. 2015;25:1945–1954. doi: 10.1016/j.cub.2015.06.009. Fine-grained emotion distinctions (20-way classification) were measured behaviorally and neurally in human observers. Information relevant to these distinctions was present in brain regions involved in intuitive theory of mind. Both behavioral and neural representations were well captured by a high-dimensional space of abstract event features.

- 33. Aviezer H, Trope Y, Todorov A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science. 2012;338:1225–1229. doi: 10.1126/science.1224313.

- 34. Bonnefon J-F, Hopfensitz A, De Neys W. The modular nature of trustworthiness detection. J Exp Psychol Gen. 2013;142:143–150. doi: 10.1037/a0028930.

- 35. Russell JA. A Sceptical Look at Faces as Emotion Signals. In: Abell C, Smith J, editors. The Expression of Emotion: Philosophical, Psychological and Legal Perspectives. Cambridge University Press; Cambridge: 2016. pp. 157–172.

- 36. Bartlett MS, Littlewort GC, Frank MG, Lee K. Automatic Decoding of Facial Movements Reveals Deceptive Pain Expressions. Current Biology. 2014;24:738–743. doi: 10.1016/j.cub.2014.02.009.

- 37. Hassin RR, Aviezer H, Bentin S. Inherently Ambiguous: Facial Expressions of Emotions, in Context. Emotion Review. 2013;5:60–65. doi: 10.1177/1754073912451331.

- 38*. Wenzler S, Levine S, van Dick R, Oertel-Knöchel V, Aviezer H. Beyond pleasure and pain: Facial expression ambiguity in adults and children during intense situations. Emotion. 2016;16:807–814. doi: 10.1037/emo0000185. A replication and extension of Aviezer et al. [33], finding that observers cannot discriminate high intensity positive versus negative emotions, based on naturally occurring facial expressions.

- 39.Aviezer H, Messinger DS, Zangvil S, Mattson WI, Gangi DN, Todorov A. Thrill of victory or agony of defeat? Perceivers fail to utilize information in facial movements. Emotion. 2015;15:791–797. doi: 10.1037/emo0000073. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Widen SC, Russell JA. Differentiation in preschooler's categories of emotion. Emotion. 2010;10:651–661. doi: 10.1037/a0019005. [DOI] [PubMed] [Google Scholar]

- 41.Russell JA, Suzuki N, Ishida N. Canadian Greek and Japanese freely produced emotion labels for facial expressions. Motivation and Emotion. 1993;17:337–351. doi: 10.1007/BF00992324. [DOI] [Google Scholar]

- 42.Mehu M, Scherer KR. Emotion categories and dimensions in the facial communication of affect: An integrated approach. Emotion. 2015;15:798–811. doi: 10.1037/a0039416. [DOI] [PubMed] [Google Scholar]

- 43*.Dobs K, Bülthoff I, Breidt M, Vuong QC, Curio C, Schultz J. Quantifying human sensitivity to spatio-temporal information in dynamic faces. Vision Res. 2014;100:78–87. doi: 10.1016/j.visres.2014.04.009. Systematic manipulation of spatio-temporal information in dynamic faces found that people used sparse cues to process facial motion and gave noisy similarity ratings, but were highly sensitive to deviations from natural motion dynamics. [DOI] [PubMed] [Google Scholar]

- 44.Martinez L, Falvello VB, Aviezer H, Todorov A. Contributions of facial expressions and body language to the rapid perception of dynamic emotions. Cognition & Emotion. 2015;30:939–952. doi: 10.1080/02699931.2015.1035229. [DOI] [PubMed] [Google Scholar]

- 45*.Kayyal M, Widen S, Russell JA. Context is more powerful than we think: Contextual cues override facial cues even for valence. Emotion. 2015;15:287–291. doi: 10.1037/emo0000032. Observers viewed real photographs of Olympic athletes at the moment of winning or losing their event, and were given either correct or misleading information about the context. Verbal descriptions of the event dominated the facial expression in determining the attributed emotion. [DOI] [PubMed] [Google Scholar]

- 46.Zaki J. Cue Integration: A Common Framework for Social Cognition and Physical Perception. Perspectives on Psychological Science. 2013;8:296–312. doi: 10.1177/1745691613475454. [DOI] [PubMed] [Google Scholar]

- 47.de Gelder B, de Borst AW, Watson R. The perception of emotion in body expressions. WIREs Cogni Sci. 2015;6:149–158. doi: 10.1002/wcs.1335. [DOI] [PubMed] [Google Scholar]

- 48.Schlegel K, Grandjean D, Scherer KR. Emotion recognition: Unidimensional ability or a set of modality- and emotion-specific skills? Personality and Individual Differences. 2012;53:16–21. doi: 10.1016/j.paid.2012.01.026. [DOI] [Google Scholar]

- 49.Simon-Thomas ER, Keltner DJ, Sauter D, Sinicropi-Yao L, Abramson A. The voice conveys specific emotions: Evidence from vocal burst displays. Emotion. 2009;9:838–846. doi: 10.1037/a0017810. [DOI] [PubMed] [Google Scholar]

- 50.Ma WJ, Zhou X, Ross LA, Foxe JJ, Parra LC. Lip-Reading Aids Word Recognition Most in Moderate Noise: A Bayesian Explanation Using High-Dimensional Feature Space. PLoS ONE. 2009;4:e4638–14. doi: 10.1371/journal.pone.0004638.. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Krumhuber EG, Scherer KR. The Look of Fear from the Eyes Varies with the Dynamic Sequence of Facial Actions. Swiss Journal of Psychology. 2016;75:5–14. doi: 10.1024/1421-0185/a000166. [DOI] [Google Scholar]

- 52.Hehman E, Flake JK, Freeman JB. Static and Dynamic Facial Cues Differentially Affect the Consistency of Social Evaluations. Pers Soc Psychol Bull. 2015;41:1123–1134. doi: 10.1177/0146167215591495.

- 53.Todorov A, Olivola CY, Dotsch R, Mende-Siedlecki P. Social Attributions from Faces: Determinants, Consequences, Accuracy, and Functional Significance. Annu Rev Psychol. 2015;66:519–545. doi: 10.1146/annurev-psych-113011-143831.

- 54.Todorov A, Porter JM. Misleading First Impressions. Psychol Sci. 2014;25:1404–1417. doi: 10.1177/0956797614532474.

- 55.Goren A, Todorov A. Two faces are better than one: Eliminating false trait associations with faces. Social Cognition. 2009;27:222–248. doi: 10.1521/soco.2009.27.2.222.

- 56.Jack RE, Garrod OGB, Schyns PG. Dynamic Facial Expressions of Emotion Transmit an Evolving Hierarchy of Signals over Time. Current Biology. 2014;24:187–192. doi: 10.1016/j.cub.2013.11.064.

- 57.Widen SC, Russell JA. Do Dynamic Facial Expressions Convey Emotions to Children Better Than Do Static Ones? Journal of Cognition and Development. 2015;16:802–811. doi: 10.1080/15248372.2014.916295.

- 58.Mumenthaler C, Sander D. Automatic integration of social information in emotion recognition. J Exp Psychol Gen. 2015;144:392–399. doi: 10.1037/xge0000059.

- 59.Saxe R. Against simulation: the argument from error. Trends Cogn Sci. 2005;9:174–179. doi: 10.1016/j.tics.2005.01.012.

- 60.Coleman MD. Emotion and the False Consensus Effect. Current Psychology. 2016:1–7. doi: 10.1007/s12144-016-9489-0.

- 61.Miloyan B, Suddendorf T. Feelings of the future. Trends Cogn Sci. 2015;19:196–200. doi: 10.1016/j.tics.2015.01.008.

- 62.Cooney G, Gilbert DT, Wilson TD. The unforeseen costs of extraordinary experience. Psychol Sci. 2014;25:2259–2265. doi: 10.1177/0956797614551372.

- 63.Sebastian CL, Fontaine NMG, Bird G, Blakemore S-J, De Brito SA, McCrory EJP, et al. Neural processing associated with cognitive and affective Theory of Mind in adolescents and adults. Social Cognitive and Affective Neuroscience. 2012;7:53–63. doi: 10.1093/scan/nsr023.

- 64.Ferrari C, Lega C, Vernice M, Tamietto M, Mende-Siedlecki P, Vecchi T, et al. The Dorsomedial Prefrontal Cortex Plays a Causal Role in Integrating Social Impressions from Faces and Verbal Descriptions. Cereb Cortex. 2016;26:156–165. doi: 10.1093/cercor/bhu186.

- 65.Skerry AE, Saxe R. A common neural code for perceived and inferred emotion. J Neurosci. 2014;34:15997–16008. doi: 10.1523/JNEUROSCI.1676-14.2014.

- 66.Lee KH, Siegle GJ. Different brain activity in response to emotional faces alone and augmented by contextual information. Psychophysiology. 2014;51:1147–1157. doi: 10.1111/psyp.12254.

- 67.Watson R, Latinus M, Noguchi T, Garrod O, Crabbe F, Belin P. Crossmodal adaptation in right posterior superior temporal sulcus during face-voice emotional integration. J Neurosci. 2014;34:6813–6821. doi: 10.1523/JNEUROSCI.4478-13.2014.

- 68.Srinivasan R, Golomb JD, Martinez AM. A Neural Basis of Facial Action Recognition in Humans. Journal of Neuroscience. 2016;36:4434–4442. doi: 10.1523/JNEUROSCI.1704-15.2016.

- 69.Wegrzyn M, Riehle M, Labudda K, Woermann F, Baumgartner F, Pollmann S, et al. Investigating the brain basis of facial expression perception using multi-voxel pattern analysis. Cortex. 2015;69:131–140. doi: 10.1016/j.cortex.2015.05.003.

- 70.Kotz SA, Kalberlah C, Bahlmann J, Friederici AD, Haynes J-D. Predicting vocal emotion expressions from the human brain. Hum Brain Mapp. 2012;34:1971–1981. doi: 10.1002/hbm.22041.

- 71.Anderson LC, Rice K, Chrabaszcz J, Redcay E. Tracking the Neurodevelopmental Correlates of Mental State Inference in Early Childhood. Dev Neuropsychol. 2015;40:379–394. doi: 10.1080/87565641.2015.1119836.

- 72.Rice K, Redcay E. Spontaneous mentalizing captures variability in the cortical thickness of social brain regions. Social Cognitive and Affective Neuroscience. 2015;10:327–334. doi: 10.1093/scan/nsu081.

- 73.Rice K, Viscomi B, Riggins T, Redcay E. Amygdala volume linked to individual differences in mental state inference in early childhood and adulthood. Dev Cogn Neurosci. 2014;8:153–163. doi: 10.1016/j.dcn.2013.09.003.

- 74.Lindquist KA, Gendron M, Barrett LF, Dickerson BC. Emotion perception, but not affect perception, is impaired with semantic memory loss. Emotion. 2014;14:375–387. doi: 10.1037/a0035293.

- 75.Lindquist KA, Satpute AB, Gendron M. Does Language Do More Than Communicate Emotion? Curr Dir Psychol Sci. 2015;24:99–108. doi: 10.1177/0963721414553440.

- 76.Lindquist KA, Gendron M. What's in a Word? Language Constructs Emotion Perception. Emotion Review. 2013;5:66–71. doi: 10.1177/1754073912451351.

- 77.Gratch J, Marsella S. Appraisal Models. In: Calvo RA, D'Mello S, Gratch J, Kappas A, editors. The Oxford Handbook of Affective Computing. Oxford University Press; USA: 2014. pp. 54–67.