Abstract

Background

Kenya has implemented the Strengthening Laboratory Management Toward Accreditation (SLMTA) programme to facilitate quality improvement in medical laboratories and to support national accreditation goals. Continuous quality improvement after SLMTA completion is needed to ensure sustainability and continue progress toward accreditation.

Methods

Audits were conducted by qualified, independent auditors to assess the performance of five enrolled laboratories using the Stepwise Laboratory Quality Improvement Process Towards Accreditation (SLIPTA) checklist. End-of-programme (exit) and one year post-programme (surveillance) audits were compared for overall score, star level (from zero to five, based on scores) and scores for each of the 12 Quality System Essential (QSE) areas that make up the SLIPTA checklist.

Results

All laboratories improved from exit to surveillance audit (median improvement 38 percentage points, range 5–45 percentage points). Two laboratories improved from zero to one star, two improved from zero to three stars and one laboratory improved from three to four stars. The lowest median QSE scores at exit were: internal audit; corrective action; and occurrence management and process improvement (< 20%). Each of the 12 QSEs improved substantially at surveillance audit, with the greatest improvement in client management and customer service, internal audit and information management (≥ 50 percentage points). The two laboratories with the greatest overall improvement focused heavily on the internal audit and corrective action QSEs.

Conclusion

Whilst all laboratories improved from exit to surveillance audit, those that focused on the internal audit and corrective action QSEs improved substantially more than those that did not; internal audits and corrective actions may have acted as catalysts, leading to improvements in other QSEs. Systematic identification of core areas and best practices to address them is a critical step toward strengthening public medical laboratories.

Introduction

Accurate, timely and affordable medical diagnosis to support patient care and management remains a challenge in developing countries. Many laboratories are ill prepared to respond to health emergencies, yet their services are critical for the detection of new pathogens and containment of disease outbreaks.1 Establishing a quality management system (QMS) to support accreditation is a demanding process that requires strong organisational skills, motivation and substantial investment, which may be overwhelming and unfeasible for public medical laboratories with limited resources. In addition to the cost of developing these systems, a further barrier is the lack of awareness amongst healthcare workers of the benefits of accreditation for health services. Several publications have highlighted the advantages of a comprehensive QMS in the laboratory setting and have identified QMS areas at risk of failure.2,3,4,5 Accreditation is now widely recognised as an essential element of strengthening the QMS in public health laboratories and may also have a positive effect on other sectors of the healthcare system.6,7

The past few years have seen increased support for health systems in Africa through funding from initiatives such as the US President’s Emergency Plan for AIDS Relief (PEPFAR); the Global Fund to Fight AIDS, Tuberculosis and Malaria; the Global Health Initiative; and organisations such as the World Bank.8 Strengthening integrated laboratory services within public health laboratories, as opposed to focusing on disease-specific programmes, has been encouraged as a means of establishing a cost-effective laboratory system.9 The growing recognition of the importance of laboratory services has resulted in the launch of several important initiatives including the Strengthening Laboratory Management Toward Accreditation (SLMTA) programme.

SLMTA was developed by the US Centers for Disease Control and Prevention (CDC) in collaboration with the American Society for Clinical Pathology (ASCP), the Clinton Health Access Initiative and the World Health Organization’s Regional Office for Africa (WHO AFRO), with the aim of promoting immediate measurable improvement in the laboratories of developing countries.10,11,12

The Kenya Health Service delivers medical laboratory services through a network of 958 laboratories, of which 70% belong to the government, 20% to nongovernmental organisations and 10% to the private sector.13 As of the end of 2012, eight (< 1%) of the 958 laboratories in Kenya had been accredited, none of them in the government sector.4 As accreditation of health facilities is a key goal of the country’s 2008–2012 Strategic Plan,13 Kenya’s Ministry of Health (MOH) established a National Accreditation Steering Committee to coordinate laboratory accreditation activities. After SLMTA’s launch in July 2009, the MOH, with support from CDC’s Kenya office, adopted the programme and enrolled 53 laboratories in six cohorts between 2010 and 2013 from amongst national reference, provincial and district-level laboratories. The MOH set a target to accredit at least five of the 13 laboratories in the first cohort to the International Organization for Standardization (ISO) 15189 standard by the end of 2014. Six main partners have helped implement SLMTA in Kenya: ASCP, A Global Health Care Public Foundation (AGHPF), Management Sciences for Health (MSH), the Kenya AIDS Vaccine Initiative (KAVI), the African Field Epidemiology Network (AFENET) and the World Bank. To date, 20 laboratories have graduated from the SLMTA programme.

After completing the SLMTA programme, laboratories still had substantial quality gaps to fill before seeking accreditation. This paper reviews the performance of five laboratories from the first SLMTA cohort in Kenya, highlighting the progress made in the year after completion of the programme and identifying areas critical to implementation and sustainability of a sound QMS.

Research methods and design

Site selection

Five laboratories from the first SLMTA cohort in Kenya were selected for this evaluation based on two primary factors: they had the same group of mentors and mentorship schedules after the exit audit; and all were audited during the same timeframe by the same team of auditors. For the purpose of confidentiality these laboratories are presented as A–E. Laboratory B is a private clinical research laboratory, whilst the other four are government-run provincial-level laboratories with significant automation in haematology and flow cytometry but only semi-automated equipment in clinical chemistry.

SLMTA training

The SLMTA training for the first cohort took place in 2010 and consisted of three four-day workshops. Module one and cross-cutting activities were taught in workshop one, modules two to six in workshop two and modules seven to 10 in workshop three. The trainers were SLMTA Training-of-Trainers (TOT) graduates employed by CDC’s Kenya office. Two staff members from each of the five laboratories participated in the workshops. These participants were quality managers and laboratory managers whose responsibilities included overseeing QMS implementation as well as training and supervising laboratory personnel. The workshops were separated by three-month intervals, during which participants implemented improvement projects based on what they had learned at the previous workshop and on laboratory-specific quality gaps.

Audits

Baseline audits were conducted in April 2010, one month before the first SLMTA workshop. Since they were conducted by non-certified auditors, their findings are not included in this analysis.

Exit audits were conducted in August 2011, eight months after the third workshop. Surveillance audits were conducted one year later, in August 2012.

Audits were conducted using WHO AFRO’s Stepwise Laboratory Quality Improvement Process Towards Accreditation (SLIPTA) checklist. The SLIPTA checklist comprises 111 questions subdivided into 12 sections that represent the Quality System Essentials (QSEs) defined by the Clinical and Laboratory Standards Institute.14 Each positively answered question was allocated two, three or five points; partially positive answers were awarded one point. A star rating was assigned as follows: five stars (244–258 points, ≥ 95% compliance), four stars (219–243 points, 85% – 94% compliance), three stars (193–218 points, 75% – 84% compliance), two stars (167–192 points, 65% – 74% compliance) and one star (142–166 points, 55% – 64% compliance). A score of 141 points or less (< 55%) received zero stars.15
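The star bands above amount to a threshold lookup on the total checklist score. The Python sketch below encodes them, assuming the 258-point maximum implied by the five-star band; the function names are illustrative and not part of any SLIPTA tool. Because the bands are defined in points, the compliance percentage for a given score can fall slightly outside the rounded ranges quoted (219 points, for example, is just under 85% of 258), so the sketch treats the point bands as authoritative.

```python
MAX_POINTS = 258  # upper end of the five-star band (assumed checklist maximum)

# (minimum points, star level), checked from the highest band downwards
STAR_BANDS = [
    (244, 5),  # five stars, >= 95% compliance
    (219, 4),  # four stars, 85%-94%
    (193, 3),  # three stars, 75%-84%
    (167, 2),  # two stars, 65%-74%
    (142, 1),  # one star, 55%-64%
]


def slipta_stars(points: int) -> int:
    """Return the star level for a total checklist score in points."""
    if not 0 <= points <= MAX_POINTS:
        raise ValueError(f"score must lie between 0 and {MAX_POINTS} points")
    for minimum, stars in STAR_BANDS:
        if points >= minimum:
            return stars
    return 0  # 141 points or less (< 55%)


def compliance_percent(points: int) -> float:
    """Express a checklist score as a percentage of the maximum."""
    return 100 * points / MAX_POINTS


for score in (141, 142, 218, 219, 244):
    print(f"{score} points = {compliance_percent(score):.1f}% -> {slipta_stars(score)} stars")
```

Checking the bands from the top down means each score only needs to clear a single lower bound, so no upper bounds have to be stored.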

Both exit and surveillance audits were conducted by auditors certified by the Kenya Accreditation Service (KENAS), the sole national accreditation body in Kenya. Auditors were selected on the basis of training and technical expertise, as well as having had no previous engagement with the laboratory being audited to safeguard impartiality. All auditors had completed core training on SLIPTA as well as training on ISO requirements for quality and competency in medical laboratories (ISO 15189) and guidelines for QMS auditing (ISO 19011), had attended an annual assessor refresher course and had conducted at least one SLIPTA audit. The composition of each audit team was based on the scope of the laboratory being audited.

Methods of audit included: (1) review of documents; (2) review of records (procedures, meeting minutes, quality control data, corrective actions, work plans); (3) staff interviews; and (4) observation and witnessing of testing procedures. Interviews used open-ended questions to obtain the information required by the SLIPTA checklist. The laboratory management and staff received prior notification of the audit dates and auditors’ names. However, the auditors selected the staff and the procedures to be observed, using sampling techniques that ensured the objectivity and impartiality of the audit process, so that staff had no prior knowledge of who would be observed. Audits were conducted by two auditors per laboratory over a two-day period. Each auditor recorded findings on an individual checklist; the auditors then compiled a consensus checklist that included the final score and the star rating for each laboratory. A summary report of all findings (both positive and negative) and a corrective action request form for each nonconformity were completed by the auditors on site and submitted to the laboratory management at the end of the second day of the audit. A senior laboratory staff member was required to sign each form to acknowledge receipt of the findings. Any divergent opinions between the auditors and the laboratories were resolved on site before the final report was written.

Mentorship

Mentorship during SLMTA implementation was unstructured, with mentors visiting laboratories for unspecified periods of time without concrete work plans. This deficiency was partly due to a lack of mentor training and the absence of a proper national mentorship programme as of 2010. In contrast, after the exit audit, new mentors were embedded in the five facilities for two weeks per month for four months. During these eight weeks on site, the mentors followed comprehensive work plans covering the entire SLIPTA checklist. The mentors were consultants in QMS implementation in medical laboratories, contracted by the implementing partner. Their qualifications included a diploma or bachelor’s degree in Medical Laboratory Sciences, quality management training based on ISO 15189 and the SLIPTA checklist, and experience working in an accredited laboratory or one undergoing the accreditation process.

Data analysis

Data were extracted retrospectively from the comprehensive SLIPTA audit reports and corrective action forms for each laboratory, held at the KENAS offices, and then analysed. We compared the exit and surveillance audit scores, evaluated performance in the individual QSEs and identified key areas of progress and challenges in implementing a sound QMS. We also examined nonconformities at the exit and surveillance audits, focusing on the identification of common issues. Any nonconformity identified at both the exit and surveillance audits in the same laboratory was classified as a recurring nonconformity. Statistical analysis was performed using GraphPad Prism software Version 6.02 (GraphPad Software, La Jolla, California, USA, www.graphpad.com) and Microsoft® Excel.
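The recurring-nonconformity rule is, in effect, a set intersection performed per laboratory. The sketch below assumes that each nonconformity can be reduced to a comparable identifier, such as the checklist clause it was raised against; the identifiers and the function name are hypothetical, and in practice deciding whether two findings describe the same issue may require auditor judgement.

```python
def classify_findings(exit_findings: set, surveillance_findings: set) -> dict:
    """Compare one laboratory's nonconformities across two audits.

    Findings are assumed to be keyed by a comparable identifier
    (here, a hypothetical checklist clause number).
    """
    return {
        "recurring": exit_findings & surveillance_findings,  # present at both audits
        "resolved": exit_findings - surveillance_findings,   # exit audit only
        "new": surveillance_findings - exit_findings,        # surveillance audit only
    }


# Hypothetical clause identifiers for a single laboratory (not study data).
exit_audit = {"1.2", "4.3", "8.1", "11.2"}
surveillance_audit = {"4.3", "11.2", "12.5"}

result = classify_findings(exit_audit, surveillance_audit)
print(sorted(result["recurring"]))
```

The same comparison also separates resolved and newly raised findings, which can help when judging whether a corrective action plan addressed the exit audit findings.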

Results

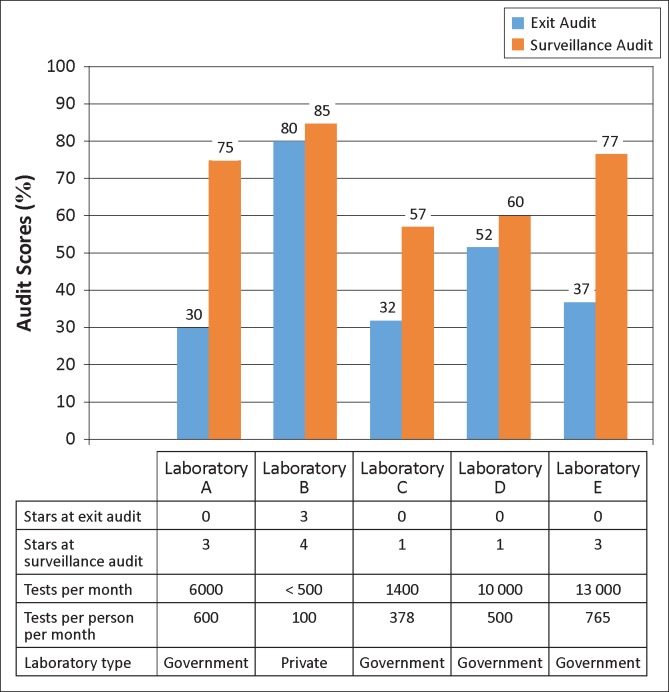

Exit audit scores were below the one-star level for all laboratories except B, which scored three stars at exit. All of the laboratories increased their scores from exit to surveillance audit; two achieved one star, two achieved three stars and the laboratory that began at three stars achieved four stars (Figure 1). Median scores increased from 37% at exit to 75% at surveillance audit (improvement range 5–45 percentage points).

FIGURE 1.

Performance of the five laboratories based on Stepwise Laboratory Quality Improvement Process Towards Accreditation (SLIPTA) checklist scores and star ratings.

The two laboratories with the highest per-person workload (A and E) had the most improvement from exit to surveillance audit, whilst the laboratory with the lowest volume (B) and highest exit score had the least improvement (Figure 1).

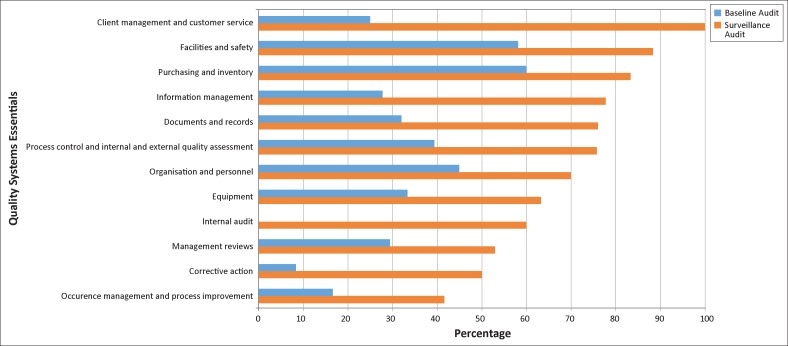

The lowest median QSE scores at exit were in internal audit, corrective action, and occurrence management and process improvement (all < 20%) (Figure 2). The highest medians were in purchasing and inventory, facilities and safety, and organisation and personnel (all > 45%). Each of the 12 QSEs improved substantially at the surveillance audit (range 24–75 percentage points), with the greatest improvement in client management and customer service, internal audit and information management (≥ 50 percentage points). The smallest improvements were in purchasing and inventory, management reviews, organisation and personnel, and occurrence management and process improvement (≤ 25 percentage points). By the surveillance audit, the areas of greatest challenge were occurrence management and process improvement, corrective action and management reviews (all ≤ 55%), whilst the strongest areas were client management and customer service, facilities and safety, purchasing and inventory, and information management (all ≥ 78%) (Figure 2).

FIGURE 2.

Median scores of the five laboratories in the 12 Quality System Essentials (QSEs).

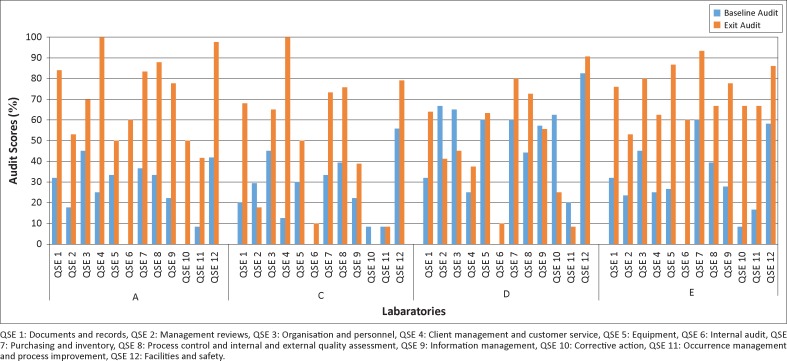

In order to examine more closely the relationship between specific areas of improvement and overall success, we compared results for laboratories A and E, which started with zero stars at exit (median 34%) and reached three stars at surveillance (median 76%), with those for laboratories C and D, which also started at zero stars (median 42%) but reached only one star at surveillance (median 59%). We excluded laboratory B from this sub-analysis because it was markedly smaller than the others and started with a substantially higher score at exit. The most striking results were for the internal audit and corrective action QSEs. All four laboratories scored 0% in internal audit at exit. Laboratories A and E both excelled in this area post-SLMTA, increasing their scores to 60% by the surveillance audit. Laboratories C and D, on the other hand, put little focus on this area, scoring only 10% at the surveillance audit (Figure 3). Moreover, laboratories A and E both excelled in corrective action, increasing from 0% to 50% and from 8% to 67%, respectively, whilst laboratories C and D decreased from 8% to 0% and from 63% to 25%, respectively. Excluding internal audit and corrective action, the other QSEs for laboratories A and E improved by a median of 48 percentage points from exit to surveillance audit, whereas those for laboratories C and D improved by a median of 15 percentage points. No other QSEs showed such consistent results.
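The sub-analysis above compares median QSE improvements after setting aside the internal audit and corrective action QSEs. The paper does not state whether per-QSE changes were pooled across laboratories A and E or summarised per laboratory; the sketch below assumes pooling, and the function name and all scores are hypothetical placeholders rather than the study data.

```python
from statistics import median


def pooled_median_improvement(labs, exclude=frozenset()):
    """Median per-QSE change (percentage points), pooled across laboratories.

    `labs` is a list of (exit_scores, surveillance_scores) pairs, each a dict
    mapping QSE name -> score (%). QSEs named in `exclude` are skipped.
    """
    changes = []
    for exit_scores, surveillance_scores in labs:
        for qse, before in exit_scores.items():
            if qse not in exclude:
                changes.append(surveillance_scores[qse] - before)
    return median(changes)


# Hypothetical scores for two laboratories and a subset of QSEs (not study data).
lab_1 = (
    {"internal audit": 0, "corrective action": 10, "documents and records": 30,
     "equipment": 40, "information management": 20},
    {"internal audit": 60, "corrective action": 60, "documents and records": 70,
     "equipment": 75, "information management": 80},
)
lab_2 = (
    {"internal audit": 0, "corrective action": 0, "documents and records": 20,
     "equipment": 50, "information management": 10},
    {"internal audit": 60, "corrective action": 50, "documents and records": 60,
     "equipment": 80, "information management": 70},
)

core = {"internal audit", "corrective action"}
print(pooled_median_improvement([lab_1, lab_2], exclude=core))
```

Summarising per laboratory instead would simply mean calling the function once per laboratory; with only a handful of QSEs the two approaches can give different medians, which is why the pooling choice is worth stating.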

FIGURE 3.

Performance of laboratories A, C, D and E in the 12 Quality System Essentials (QSEs).

Ten nonconformities were common to all five laboratories: lack of critical procedures, lack of or incomplete management review records, incomplete personnel files, lack of equipment or method validation, lack of equipment calibration records, deficient internal audit, inconsistent internal quality control monitoring, unacceptable proficiency testing results, ineffective corrective action and deficient quality indicator monitoring (Table 1). At the exit audits, laboratories A and E had the most nonconformities. However, these laboratories developed corrective action plans that covered most of the nonconformities, so that by the surveillance audit their nonconformities were reduced by more than half. Laboratories C and D, on the other hand, reduced their nonconformities by only 12% – 40% at the surveillance audit, with many recurring issues.

TABLE 1.

Number of nonconformities at exit and surveillance audits and implementation of the corrective action plan by the laboratory.

| Laboratory | Nonconformities at exit audit (n) | Nonconformities at surveillance audit (n) | Coverage of nonconformities in corrective action plan (%) | Recurring* nonconformities at surveillance audit** (n) | Recurring* nonconformities at surveillance audit** (%) |
|---|---|---|---|---|---|
| Laboratory A | 100 | 31 | 69 | 20 | 65 |
| Laboratory B | 23 | 19 | 17 | 12 | 63 |
| Laboratory C | 87 | 52 | 40 | 38 | 73 |
| Laboratory D | 60 | 53 | 12 | 30 | 56 |
| Laboratory E | 88 | 36 | 59 | 24 | 66 |

*Any nonconformity identified at both exit and surveillance audits in the same laboratory was classified as a recurring nonconformity.

Common nonconformities (N = 10) included lack of critical procedures, lack of or incomplete management review records, incomplete personnel files, lack of equipment or method validation, lack of equipment calibration records, deficient internal audit, inconsistent internal quality control monitoring, unacceptable proficiency testing results, ineffective corrective action and deficient quality indicator monitoring.
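The percentages discussed in the text follow directly from the counts in Table 1. The sketch below recomputes two of them for each laboratory: the percentage reduction in nonconformities from exit to surveillance audit, and recurring nonconformities as a share of those found at surveillance. The counts are copied from the table; the dictionary and function names are illustrative.

```python
# Counts from Table 1: (at exit audit, at surveillance audit, recurring at surveillance)
TABLE_1 = {
    "A": (100, 31, 20),
    "B": (23, 19, 12),
    "C": (87, 52, 38),
    "D": (60, 53, 30),
    "E": (88, 36, 24),
}


def percent_reduction(exit_n: int, surveillance_n: int) -> float:
    """Fall in the number of nonconformities, as a percentage of the exit count."""
    return 100 * (exit_n - surveillance_n) / exit_n


def recurring_share(surveillance_n: int, recurring_n: int) -> float:
    """Recurring nonconformities as a percentage of surveillance findings."""
    return 100 * recurring_n / surveillance_n


for lab, (exit_n, surv_n, rec_n) in TABLE_1.items():
    print(
        f"Laboratory {lab}: {percent_reduction(exit_n, surv_n):.0f}% fewer "
        f"nonconformities; {recurring_share(surv_n, rec_n):.0f}% of remaining "
        f"findings were recurring"
    )
```

The recomputed reductions match the figures quoted in the text: more than half for laboratories A and E, and 12% to 40% for laboratories C and D.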

Discussion

All five laboratories in this study made notable improvements from exit to surveillance audit, displaying sustained and even increased efforts after completion of the SLMTA programme. Whilst laboratory B outperformed the other laboratories at both its exit and surveillance audits, this may in part reflect the smaller size, lower workload and less complicated management system of this small private research laboratory compared with the four hospital laboratories. Laboratories C and D reached one star at their surveillance audits, recording more modest improvements than laboratories A and E, which reached three stars despite having a higher number of tests per technician. Overall, the results suggest that the intensive mentorship provided after SLMTA may have helped to accelerate quality improvement.

In an attempt to identify the factors associated with the relatively greater success of laboratories A and E, we closely examined their improvements by QSE. For the most part, all of the laboratories tended to struggle in the same areas (primarily internal audit, corrective action, and occurrence management and process improvement) and to excel in the same areas (organisation and personnel, facilities and safety, and purchasing and inventory). However, laboratories A and E improved substantially in the internal audit QSE, increasing their scores from 0% to 60% by the surveillance audit; laboratories C and D did not. Laboratories A and E also improved markedly in corrective action, whereas scores for this QSE decreased in laboratories C and D. Furthermore, improvements in these two QSEs alone do not account for the greater overall improvement of laboratories A and E, as their scores in other areas also improved substantially more than those of laboratories C and D (median 48 versus 15 percentage points).

The ‘internal audit’ QSE involves checking whether internal audits have been conducted by trained auditors and examining the corrective actions carried out to rectify the audit findings. ISO 15189 mandates that internal audits be conducted at least annually.16 Conducting internal audits can help a laboratory stay focused and provides concrete information with which to better understand its own areas of weakness and make decisions for improvement.17 The American Association for Laboratory Accreditation suggests that internal audits are critical because they allow laboratories to identify the root and potential causes of problems, which can then be eliminated to prevent the problems from occurring in the first place or from recurring after correction. Corrective or preventive action is then taken to address a problem and eliminate its root cause.18 Thus, in laboratories A and E, internal audits and corrective actions may have acted as catalysts, facilitating improvement in other areas. In addition, discussion of internal audit results within the laboratory may have helped to develop an overall quality culture, not only amongst laboratory staff but also amongst hospital management, which has been identified elsewhere as a key factor in SLMTA success.19 Although this hypothesis is based on a very small number of laboratories, and other small studies have reported varied findings,20,21,22 our results suggest that further evaluation of the benefits of conducting regular internal audits followed by corrective action is warranted.

The 12 QSEs can be divided into three quality stages: resource management (pre-analytical), process management (analytical) and improvement management (post-analytical).23 The three QSEs with the lowest scores for these laboratories at exit audit (internal audit, corrective action, and occurrence management and process improvement) are all part of the improvement management stage. Datema et al.24 point out that this stage is given the lowest scoring weight of the three quality stages in the SLIPTA system (16% of the total score, compared with 48% for resource management and 36% for process management in the original scoring structure; and 20%, 48% and 33%, respectively, in the current scoring structure).15 They argue that ‘this stage is of prime importance for sustaining the continuous improvement cycle’24 and that by awarding it fewer points, laboratories may be ‘less stimulated to invest effort in improvement management’.24
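Datema et al.’s concern can be made concrete with simple arithmetic. The sketch below uses only the stage shares quoted above and the percentage bands of the star-rating scheme described in the Methods, and asks what the highest attainable overall score would be if a laboratory earned every point outside the improvement management stage and none inside it. The helper names are illustrative, and because the quoted current shares sum to 101% through rounding, the second result is approximate.

```python
# Stage shares of the total SLIPTA score (%), as quoted from Datema et al.
ORIGINAL_SHARES = {
    "resource management": 48,
    "process management": 36,
    "improvement management": 16,
}
CURRENT_SHARES = {  # rounded figures; they sum to 101
    "resource management": 48,
    "process management": 33,
    "improvement management": 20,
}

# Lower bound (% compliance) of each star band, highest first.
STAR_FLOORS = [(95, 5), (85, 4), (75, 3), (65, 2), (55, 1)]


def stars_for_percent(percent: float) -> int:
    """Map an overall compliance percentage to a star level."""
    for floor, stars in STAR_FLOORS:
        if percent >= floor:
            return stars
    return 0


def ceiling_without_stage(shares: dict, stage: str = "improvement management") -> int:
    """Best attainable score (%) if no points are earned in `stage`."""
    return sum(share for name, share in shares.items() if name != stage)


for label, shares in (("original", ORIGINAL_SHARES), ("current", CURRENT_SHARES)):
    ceiling = ceiling_without_stage(shares)
    print(f"{label}: ceiling {ceiling}% -> {stars_for_percent(ceiling)} stars")
```

Under both structures the ceiling falls in the three-star band, illustrating how a laboratory could in principle reach three stars with no credit at all for the improvement management stage.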

Overall, data on nonconformities obtained from the exit and surveillance audit reports showed that the actions taken to address deficiencies were often inadequate and, in most instances, root causes were not identified and eliminated. The completeness and implementation of the corrective action work plan varied between laboratories. Laboratories A and E developed focused corrective action work plans based on the findings of the exit audit and implemented them thoroughly. In contrast, laboratories C and D made less progress in implementing corrective action plans. Notably, despite attaining four stars, laboratory B had 19 nonconformities at the surveillance audit, including 12 recurring nonconformities from the exit audit. It may be that this laboratory had already covered the easier parts of the checklist during SLMTA implementation and the remaining weak areas, such as occurrence management and internal audit, were the hardest to implement and sustain post-SLMTA. Recurring nonconformities are graded as major events and could potentially lead to a serious breakdown of the QMS if not addressed in a timely fashion. Thus, even laboratories with high SLIPTA scores may still have major nonconformities that could pose a threat to sustainability of the QMS and prevent accreditation.

Limitations

The circumstances in each country and in every laboratory within a country are unique, each facing multiple interconnected challenges. Whilst our analysis of five laboratories identified some interesting findings, it will be critical to expand this analysis using data from a larger number of laboratories in multiple settings. This will give a better picture of performance trends across the 12 QSEs both during and after SLMTA implementation, as well as allowing for generalisation of the important issues facing laboratories today and the critical challenges impeding success and sustainability. Our study was limited by the lack of comparable baseline data from which to evaluate the common issues that existed before SLMTA implementation, as baseline data were excluded in order to minimise variability in auditor scoring. Finally, additional surveillance audits will be needed to assess the long-term sustainability of programme results.

Conclusion

This analysis reveals common gaps in laboratory QSEs that can be addressed during and after SLMTA implementation. In particular, internal audits and subsequent corrective actions may play key roles in catalysing improvements in other areas, although a more systematic global evaluation is needed in order to generalise common problems and determine the best practices to address them.

Acknowledgements

This publication was made possible by support from PEPFAR phase II through Cooperative Agreement number 5 U2G PS001285-02 from the CDC, Division of Global HIV/AIDS (DGHA).

We thank Dr. Jane Mwangi from CDC’s Kenya office for her valuable suggestions and the mentors of the first cohort laboratories, especially Lucy Otieno, Moses Oyaya, Florence Mawere and Hanington Ahenda, as well as the staff from laboratories A–E for providing valuable information.

Competing interests

The authors declare that they have no financial or personal relationship(s) that may have inappropriately influenced them in writing this article.

Authors’ contributions

R.N.M. (KENAS) collected, analysed and interpreted the data and wrote the manuscript. D.M.M. (KENAS) and A.D.M. (KENAS) assisted in data collection. S.M.O. (KENAS) was involved in both auditor selection and data collection. S.K.M. (KENAS) provided guidance on selection of facilities to include in this analysis. C.J.S. (CDC, Atlanta) was involved in data analysis and interpretation. S.M. (Botswana-Harvard AIDS Institute Partnership) assisted in data analysis. E.T.L. (CDC, Atlanta) assisted in data analysis and interpretation and significantly shaped the focus of the article.

CDC disclaimer

The findings and conclusions in this paper are those of the author(s) and do not necessarily represent the official position of the CDC or the Government of Kenya.

Footnotes

How to cite this article: Maina RN, Mengo DM, Mohamud AD, et al. Progressing beyond SLMTA: Are internal audits and corrective action the key drivers of quality improvement? Afr J Lab Med. 2014;3(2), Art. #222, 7 pages. http://dx.doi.org/10.4102/ajlm.v3i2.222

References

- 1. Nkengasong JN, Nsubuga P, Nwanyanwu O, et al. Laboratory systems and services are critical in global health: Time to end the neglect? Am J Clin Pathol. 2010;134(3):368–373. http://dx.doi.org/10.1309/AJCPMPSINQ9BRMU6

- 2. Nicklin W, Dickson S. The value and impact of accreditation in health care: A review of the literature. Canada: Accreditation Canada; 2009.

- 3. Harper JC, Sengupta S, Vesela K, et al. Accreditation of the PGD laboratory. Hum Reprod. 2010;25(4):1051–1065. http://dx.doi.org/10.1093/humrep/dep450

- 4. Schroeder LF, Amukele T. Medical laboratories in sub-Saharan Africa that meet international quality standards. Am J Clin Pathol. 2013;141(6):791–795. http://dx.doi.org/10.1309/AJCPQ5KTKAGSSCFN

- 5. Zeh CE, Inzaule SC, Magero VO, et al. Field experience in implementing ISO 15189 in Kisumu, Kenya. Am J Clin Pathol. 2010;134(3):410–418. http://dx.doi.org/10.1309/AJCPZIRKDUS5LK2D

- 6. Peter TF, Rotz PD, Blair DH, et al. Impact of laboratory accreditation on patient care and the health system. Am J Clin Pathol. 2010;134(4):550–555. http://dx.doi.org/10.1309/AJCPH1SKQ1HNWGHF

- 7. Alkhenizan A, Shaw C. Impact of accreditation on the quality of healthcare services: A systematic review of the literature. Ann Saudi Med. 2011;31(4):407–416. http://dx.doi.org/10.4103/0256-4947.83204

- 8. McCoy D, Chand S, Sridhar D. Global health funding: How much, where it comes from and where it goes. Health Policy Plan. 2009;24(6):407–417. http://dx.doi.org/10.1093/heapol/czp026

- 9. Parsons LM, Somoskovi A, Lee E, et al. Global health: Integrating national laboratory health system and services in resource-limited settings. Afr J Lab Med. 2012;1(1), 5 pages.

- 10. Yao K, McKinney B, Murphy A, et al. Improving quality management systems of laboratories in developing countries: An innovative training approach to accelerate laboratory accreditation. Am J Clin Pathol. 2010;134(3):401–409. http://dx.doi.org/10.1309/AJCPNBBL53FWUIQJ

- 11. Luman ET, Yao K, Nkengasong JN. A comprehensive review of the SLMTA literature part 1: Content analysis and future priorities. Afr J Lab Med. 2014;3(2), Art. #265, 11 pages. http://dx.doi.org/10.4102/ajlm.v3i2.265

- 12. Gershy-Damet GM, Rotz P, Cross D, et al. The World Health Organization African region laboratory accreditation process: Improving the quality of laboratory systems in the African region. Am J Clin Pathol. 2010;134(3):393–400. http://dx.doi.org/10.1309/AJCPTUUC2V1WJQBM

- 13. Ministry of Public Health and Sanitation. Strategic plan. The second national health sector strategic plan of Kenya. Reversing the trends [document on the Internet]. c2008 [cited 2014 Oct 07]. Available from: http://www.internationalhealthpartnership.net/fileadmin/uploads/ihp/Documents/Country_Pages/Kenya/Kenya%20ministy_of_public_health_and_sanitation_strategic_plan%202008-2012.pdf

- 14. Clinical and Laboratory Standards Institute. Application of a quality management system model for laboratory services; Approved Guideline – Third Edition. CLSI document GP26-A3 [ISBN 1-56238-553-4]. Clinical and Laboratory Standards Institute; 2004.

- 15. World Health Organization Regional Office for Africa. WHO-AFRO Stepwise Laboratory Quality Improvement Process Towards Accreditation (SLIPTA) Checklist [document on the Internet]. c2012 [cited 2013 May 31]. Available from: http://www.afro.who.int/en/clusters-a-programmes/hss/blood-safety-laboratories-a-health-technology/blt-highlights/3859-who-guide-for-the-stepwise-laboratory-improvement-process-towards-accreditation-in-the-african-region-with-checklist.html

- 16. International Organization for Standardization. ISO 15189 Medical laboratories – Requirements for quality and competence. Geneva: International Organization for Standardization; 2005.

- 17. Clinical and Laboratory Standards Institute. Assessments: Laboratory internal audit program; Approved Guideline – Vol. 33 No. 15. CLSI document QMS15-A [ISBN 1-56238-895-9]. Clinical and Laboratory Standards Institute; 2013.

- 18. American Association for Laboratory Accreditation. Internal auditing course training manual [document on the Internet]. c2012 [cited 2014 Jul 03]. Available from: http://www.aavld.org/assets/Accreditation/Accreditation_Documents/internal%20auditor%20course%20handbook.pdf

- 19. Maruti PM, Mulianga EA, Wambani LN, et al. Creating a sustainable culture of quality through the SLMTA programme in a district hospital laboratory in Kenya. Afr J Lab Med. 2014;3(2), Art. #201, 5 pages. http://dx.doi.org/10.4102/ajlm.v3i2.201

- 20. Mothabeng D, Maruta T, Lebina M, et al. Strengthening laboratory management towards accreditation: The Lesotho experience. Afr J Lab Med. 2012;1(1), Art. #9, 7 pages.

- 21. Makokha EP, Mwalili S, Basiye FL, et al. Using standard and institutional mentorship models to implement SLMTA in Kenya. Afr J Lab Med. 2014;3(2), Art. #220, 8 pages. http://dx.doi.org/10.4102/ajlm.v3i2.220

- 22. Mokobela KO, Moatshe MT, Modukanele M. Accelerating the spread of laboratory quality improvement efforts in Botswana. Afr J Lab Med. 2014;3(2), Art. #207, 6 pages. http://dx.doi.org/10.4102/ajlm.v3i2.207

- 23. International Organization for Standardization. ISO 9001:2008 Quality management systems – Requirements. Geneva: International Organization for Standardization; 2008.

- 24. Datema TA, Oskam L, van Beers SM, et al. Critical review of the Stepwise Laboratory Improvement Process Towards Accreditation (SLIPTA): Suggestions for harmonization, implementation and improvement. Trop Med Int Health. 2012;17(3):361–367.