Abstract

Purpose

Emerging evidence shows that interactive virtual environments (VEs) may be a promising tool for studying sensorimotor processes and for rehabilitation. However, the potential of VEs to recruit action observation-execution neural networks is largely unknown. For the first time, a functional MRI-compatible virtual reality (VR) system has been developed to provide a window into brain-behavior interactions. This system can measure complex hand and finger movements and simultaneously stream these kinematic data to control the motion of virtual representations of human hands.

Methods

In a blocked fMRI design, thirteen healthy subjects observed, with the intent to imitate (OTI), finger sequences performed by a virtual hand avatar seen in first-person perspective and animated by pre-recorded kinematic data. Subjects then imitated the observed sequence while viewing the virtual hand avatar animated by their own movement in real time. These blocks were interleaved with rest periods, during which subjects viewed static virtual hand avatars, and with control trials in which the avatars were replaced by moving non-anthropomorphic objects.

Results

We report three main findings. First, both observation with intent to imitate and imitation with real-time virtual avatar feedback were associated with activation in a distributed frontoparietal network typically recruited for the observation and execution of real-world actions. Second, we noted a time-variant increase in left insular cortex activation during observation with intent to imitate actions performed by the virtual avatar. Third, imitation with virtual avatar feedback (relative to the control condition) was associated with a localized recruitment of the angular gyrus, precuneus, and extrastriate body area, regions that (along with the insular cortex) are associated with the sense of agency.

Conclusions

Our data suggest that the virtual hand avatars may have served as disembodied training tools in the observation condition and as embodied “extensions” of the subject’s own body (pseudo-tools) in the imitation condition. These data advance our understanding of brain-behavior interactions when performing actions in VEs and have implications for the development of observation- and imitation-based VR rehabilitation paradigms.

Keywords: Virtual environment, VR, motor control, imitation, hand

1. Introduction

Technologies such as virtual reality (VR) are experiencing a period of rapid growth and offer exceptional opportunities to extend the reach of services across a variety of disciplines. Virtual environments (VEs) can present rich, complex multimodal sensory information to the user and can elicit a substantial feeling of realness and agency in the individual immersed in such an artificial world (Riva et al., 2006). VR has become an indispensable training tool in many areas, including healthcare, where physicians receive surgical training (McCloy and Stone 2001), patients receive cognitive therapies (Powers and Emmelkamp 2008), and soldiers benefit from post-traumatic stress disorder therapies (Rizzo et al., 2008). VR has also shown great value for the rehabilitation of patients with disordered movement due to neurological dysfunction (Holden 2005; Gaggioli et al., 2006; Merians et al., 2006; Merians et al., In Press). In this setting, VR can incorporate newer therapeutic models that may be instrumental in facilitating the voluntary production of movement, including observation (Altschuler 2005; Buccino et al., 2006; Celnik et al., 2006), imagery (Butler and Page 2006), and imitation therapies (Gaggioli et al., 2006). Although VR shows promise for improving some aspects of movement, the effect of interacting in VEs on brain activity remains unknown, even in neurologically intact individuals.

Two experiences in the VR environment are of particular interest: observation with the intent to imitate, and the integration of real experiences with virtual experiences of one’s own movement. Virtual environments might serve as a tireless model for observation therapy aimed at facilitating the voluntary production of movement. Moreover, interactive VEs can be used as a powerful tool to modulate feedback during training and motor learning, facilitating recovery through various plasticity mechanisms. However, there is little evidence with which to test this hypothesis in patients with neurological diseases (Pomeroy et al., 2005), and studies in control subjects are needed to determine the effectiveness of such an approach.

The purpose of this project was two-fold: 1) to develop a VR system that could be used with functional magnetic resonance imaging (fMRI) for concurrent measurement of motor behavior and brain activity, and 2) to delineate the brain-behavior interactions that may occur as subjects interact in the VE. We hope that a better understanding of brain-behavior relations during interaction in VEs will better guide the use of VEs for therapeutic applications. Our long-term objective is to identify the essential elements of the VE sensorimotor experience that may selectively modulate neural reorganization for the rehabilitation of patients with neural dysfunction. Such discoveries would offer a foundation for evidence-based VR therapies, with implications for fields as diverse as computational neuroscience, neuroplasticity, neural prosthetics, and human-computer interface design. Here we present a proof of concept of a novel VR system that can be integrated with fMRI to allow the study of brain-behavior interactions.

Our initial goal was to design a system capable of measuring complex coordination of the hand and fingers (which have over 20 degrees of freedom) and of delivering reliable, real-time visual feedback of a virtual representation of the moving hand. We have tested our system with the following hardware components. To track hand-arm motion, we used left and right Immersion CyberGloves (Immersion, 2006: http://www.immersion.com) and Ascension Flock of Birds 6-degree-of-freedom sensors (Ascension Technology Corp., Flock of Birds, http://www.ascension-tech.com). In addition, we have used two haptic devices: a CyberGrasp (Immersion Corp.) exoskeleton to mechanically perturb finger motion and a HapticMaster (Moog FCS) 3-degree-of-freedom manipulandum to perturb arm motion. Virtual environments have been designed using C++/OpenGL or Virtools (Dassault Systèmes, Virtools Dev 3.5, 2006: http://www.virtools.com) (Fig. 1A,B). Movement of the hands depicted in the virtual environment is an exact representation of the movement of the subject’s hands in real space. For example, in the Piano Trainer (Fig. 1B), the movement of the virtual piano keys and the corresponding musical sounds are determined by the interaction between the virtual finger and the virtual key, computed with a collision detection algorithm. These and similar environments have been used successfully by our group to train patients after stroke (Adamovich et al., 2005; Merians et al., 2006; Adamovich et al., 2008; Adamovich et al., In Press).
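The collision detection algorithm used in the Piano Trainer is not specified. As an illustration only, the sketch below assumes that each fingertip is approximated by a sphere and each key by an axis-aligned box, and applies the standard sphere-box overlap test (clamp the sphere centre to the box, then compare the squared distance with the squared radius). The names `Box`, `fingertip_hits_key`, and `pressed_keys`, the key labels, and all coordinates are hypothetical and are not taken from the authors’ software.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box approximating one virtual piano key (hypothetical)."""
    lo: tuple  # (x, y, z) minimum corner
    hi: tuple  # (x, y, z) maximum corner


def fingertip_hits_key(tip, radius, key):
    """True if a fingertip sphere (centre `tip`, radius `radius`) overlaps `key`."""
    # Closest point of the box to the sphere centre, clamped axis by axis.
    closest = [min(max(c, lo), hi) for c, lo, hi in zip(tip, key.lo, key.hi)]
    dist_sq = sum((c - p) ** 2 for c, p in zip(tip, closest))
    return dist_sq <= radius ** 2


def pressed_keys(tips, radius, keys):
    """Sorted names of keys touched by any fingertip in the current frame."""
    return sorted(name for name, box in keys.items()
                  if any(fingertip_hits_key(t, radius, box) for t in tips))


# Two adjacent keys and two fingertips; only the first fingertip touches a key.
keys = {"C4": Box((0, 0, 0), (1, 1, 4)), "D4": Box((1.1, 0, 0), (2.1, 1, 4))}
print(pressed_keys([(0.5, 1.2, 2.0), (1.6, 1.5, 2.0)], 0.3, keys))  # ['C4']
```

In a real environment a key-press event (key motion and sound) would be triggered on the frame where a key first enters the returned list.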

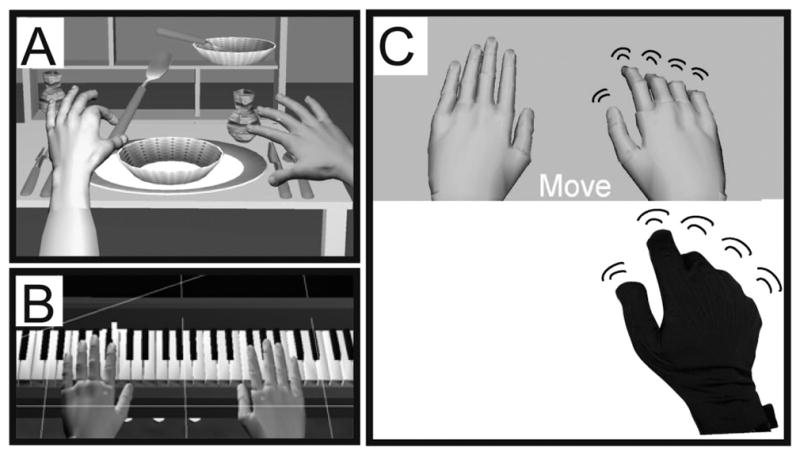

Fig. 1.

A sample of our currently developed virtual environments. A. Dining Table Scene, B. Piano Trainer, C. The virtual environment used in the current paradigm. We extracted the essential component common to all of our virtual environments, the virtual hands, over a plain background. Below them is a picture of a subject’s hand wearing a 5DT data glove that actuated motion of the virtual hand models.

To directly investigate how controlling a virtual representation of one’s hands in real time affects neural activation, we extracted the essential element common to all of our environments: a pair of virtual hands (Fig. 1C). We imaged healthy subjects at 3 T as they performed a simple finger movement task. We used the MRI-compatible 5DT data glove to measure subjects’ hand movements in real time and to actuate the motion of virtual hands viewed by the subjects in first-person perspective. Subjects observed virtual finger movements with the intention to imitate them afterwards, and subsequently imitated the observed movements while viewing the virtual hands animated in real time by their actual movement. Perani and coworkers (Perani et al., 2001) investigated the effects of observing animations of virtual and real hands on brain activity and found that observing animated virtual hands was associated with weaker activation in sensorimotor networks than observing real hands. However, in these studies the visual feedback did not depict the subject’s own real-time movement and was not presented in first-person perspective. More recently, Farrer and coworkers (Farrer et al., 2007) presented subjects with visual feedback from a live video feed of their moving hands during the fMRI session. By manipulating the temporal delay between movement and feedback, they investigated the neural networks involved in the sense of agency, i.e., the sense of control over one’s actions. Results from this and previous work (Farrer et al., 2003) suggested that the angular and insular cortices may be involved in this function. These studies lead us to hypothesize that interaction in a VE in first-person perspective should elicit activation in the insular and inferior parietal cortices, regions known to be recruited in agency-related tasks (Farrer et al., 2003; Corradi-Dell’acqua et al., 2008).
Additionally, our study allows us to investigate whether these networks can be recruited when subjects observe, with the intent to imitate, the movements of VR representations of human hands. We hypothesize that in individuals naïve to our VE, repeated exposure should increase activation of agency-related networks.

If we can show proof of concept for using virtual reality feedback to selectively facilitate brain circuits in healthy individuals, then this technology may have profound implications for use in rehabilitation and in the study of basic brain mechanisms (e.g., neuroplasticity).

2. Methods

2.1. Subjects

Thirteen healthy (mean ± 1SD, 27.7 ± 3.4 years old, 9 males) right-handed (Oldfield 1971) subjects with no history of neurological or orthopedic diseases participated after signing informed consent forms approved by the IRB committees of NYU and NJIT.

2.2. Description of the virtual reality system

To investigate the role of VR in facilitating movement and activating motor-related brain regions, we developed a task-based virtual reality simulation for use in the fMRI scanner. Figure 1C shows the fundamental element of the training system, the VR representations of the user’s hands. Movement of the virtual hand models is actuated in real time by the subject’s own hand motion. The virtual environment was developed using Virtools with the VRPack plugin, which communicates with the open-source VRPN (Virtual Reality Peripheral Network) (Taylor 2006). For the present experiments, we used an MRI-compatible right-hand 5DT Data Glove 16 MRI (Fifth Dimension Technologies, 5DT Data Glove 16 MRI, http://www.5dt.com) with fiberoptic sensors to measure 14 joint angles of the hand: the five metacarpophalangeal (MCP) joints, the five proximal interphalangeal (PIP) joints, and four abduction angles. The 5DT glove is metal-free and therefore safe to operate in an MRI environment. Subjects wore the data glove in the magnet, and a set of 5-meter fiberoptic cables ran from the glove into the console room through an access port in the wall. In the console room, the fiberoptic signals were digitized and fed through a serial port into the personal computer that ran the simulation. The simulation was displayed to the subjects by a rear projector behind the magnet, and subjects viewed it through a rear-facing mirror placed above their eyes.

2.3. Experimental protocol

Naïve subjects who had never been exposed to our VE interface were tested in four conditions: 1) OTI: subjects observed, with the intent to imitate, the virtual hand flexing the index, middle, ring, and pinky fingers in a randomly defined sequential order. The virtual hands were anthropometrically shaped and resembled real hands (Fig. 1C). In this condition, the virtual hands were animated by data obtained from pre-recorded movements of a subject performing a finger sequence, and a new finger sequence was used on each trial; 2) MOVE h: subjects executed the observed sequence while receiving real-time visual feedback of the virtual hand actuated by their own movement; 3) WATCH e: subjects observed a non-anthropomorphic object, an ellipsoid, rotating about its long axis. The ellipsoid matched the virtual hand in size, color, movement frequency, and visual field position, controlling for these non-specific effects. No intention to imitate was required in this condition; 4) MOVE e: subjects executed a previously observed finger sequence while viewing the rotating ellipsoid, whose motion was not controlled by their own movement. In all conditions, a pair of right and left virtual hands or ellipsoids was displayed, but only the right object moved. All movements were performed with the subject’s right hand.

The scanning session was arranged as 16 nine-second miniblocks, with each of the four conditions (OTI, MOVE h, WATCH e, MOVE e) repeated four times throughout the session. In miniblocks requiring observation without movement, subjects rested their hands on their laps; in miniblocks requiring movement, subjects lifted their hands slightly off their laps, just enough to allow finger motion. Miniblocks were separated by rest intervals whose duration varied randomly between 5 and 10 seconds, introducing temporal jitter into the fMRI acquisition. During these intervals, subjects were instructed to view the two virtual hand models displayed statically on the screen and to rest both hands on their laps.
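A minimal sketch of how such a jittered miniblock schedule could be generated is shown below. The block duration (9 s), the four repetitions per condition, and the 5–10 s rest range come from the protocol above; the fixed cycling order of conditions, the uniform distribution of rest durations, and the first miniblock starting at t = 0 are assumptions made for illustration, and `build_schedule` is a hypothetical helper rather than the stimulus software actually used.

```python
import random

CONDITIONS = ("OTI", "MOVE h", "WATCH e", "MOVE e")
BLOCK_S = 9.0             # miniblock duration (s)
REPEATS = 4               # repetitions of each condition
REST_RANGE = (5.0, 10.0)  # jittered rest interval between miniblocks (s)


def build_schedule(seed=None):
    """Return (condition, onset_s, duration_s) tuples for the 16 miniblocks.

    Assumptions: conditions cycle in the fixed order of CONDITIONS, rest
    durations are drawn uniformly from REST_RANGE, and the first miniblock
    starts at t = 0.
    """
    rng = random.Random(seed)
    schedule = []
    t = 0.0
    for i in range(REPEATS * len(CONDITIONS)):
        if i > 0:
            t += rng.uniform(*REST_RANGE)  # static-hands rest interval
        cond = CONDITIONS[i % len(CONDITIONS)]
        schedule.append((cond, t, BLOCK_S))
        t += BLOCK_S
    return schedule


for cond, onset, dur in build_schedule(seed=0)[:4]:
    print(f"{cond:8s} onset {onset:6.2f} s  duration {dur:.0f} s")
```

Seeding the generator makes a given jitter sequence reproducible, which helps when the same onsets must later be entered into the design matrix.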

2.4. Glove calibration

The glove must be calibrated separately for each user before the start of the session. Two glove measurements are recorded: 1) with the hand fully closed into a fist such that the five MCP and five PIP joints are maximally flexed and form a 90° angle, and 2) with the hand fully open, palm down on a level surface (fingers abducted). To convert the fiberoptic signal to a joint angle, the difference between the MCP and PIP sensor readings in the closed and open hand postures is divided by 90°. This yields a calibration “gain”, which is applied in real time so that virtual hand movements correspond to the subject’s own movement.
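The two-posture calibration amounts to a linear mapping from raw sensor units to degrees. The sketch below follows the description above (open hand taken as 0°, fist as 90°, gain equal to the reading difference divided by 90°) and assumes the sensor response is linear between the two postures. `SensorCalibration` and the raw values in the example are hypothetical; they are not part of the 5DT software.

```python
from dataclasses import dataclass

FIST_ANGLE_DEG = 90.0  # MCP/PIP flexion in the closed-fist posture


@dataclass
class SensorCalibration:
    """Two-posture linear calibration for one glove sensor (hypothetical helper)."""
    open_reading: float    # raw value with the hand flat (taken as 0 deg)
    closed_reading: float  # raw value with the hand in a fist (taken as 90 deg)

    @property
    def gain(self) -> float:
        """Raw sensor units per degree: (closed - open) / 90."""
        diff = self.closed_reading - self.open_reading
        if diff == 0:
            raise ValueError("open and closed readings must differ")
        return diff / FIST_ANGLE_DEG

    def to_degrees(self, raw: float) -> float:
        """Convert a raw reading to a joint angle, assuming a linear sensor."""
        return (raw - self.open_reading) / self.gain


# Example with made-up raw values for one MCP sensor.
mcp_index = SensorCalibration(open_reading=100.0, closed_reading=1000.0)
print(mcp_index.gain, mcp_index.to_degrees(550.0))  # 10.0 45.0
```

One calibration object per MCP and PIP sensor would be created from the two recorded postures and applied to every incoming sample.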

2.5. Behavioral measures

Finger motion data obtained from the 5DT glove during the fMRI session were analyzed offline using custom-written Matlab (MathWorks, Inc.) software to confirm that subjects followed the task instructions and that finger movements during the execution epochs were consistent across conditions (to ensure that differences in finger movement did not account for any differences in brain activation). To this end, the amplitude of each finger’s movement was measured and submitted to a repeated-measures analysis of variance (ANOVA) with within-subject factors CONDITION (hand, ellipsoid), FINGER (index, middle, ring, pinky), and MINIBLOCK (1, 2, 3, 4). The statistical threshold was set at alpha = 0.05.
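One way to extract per-finger movement amplitude for each execution epoch from the time-stamped glove stream is sketched below. It assumes amplitude is defined as the range (maximum minus minimum) of the MCP angle within an epoch; the text reports “peak joint excursion” without giving the exact definition, and the original analysis was done in Matlab. The function name and data layout are hypothetical. The resulting values, arranged with one row per subject, finger, condition, and miniblock, could then be entered into a repeated-measures ANOVA (for example, `statsmodels.stats.anova.AnovaRM` in Python).

```python
FINGERS = ("index", "middle", "ring", "pinky")


def epoch_amplitudes(samples, epochs):
    """Per-epoch, per-finger MCP movement amplitude in degrees.

    samples: list of (time_s, {finger: mcp_angle_deg}) from the glove stream.
    epochs:  list of (label, start_s, end_s) execution epochs.
    Returns {(label, finger): max - min angle within [start_s, end_s)}.
    """
    amplitudes = {}
    for label, start, end in epochs:
        window = [angles for t, angles in samples if start <= t < end]
        if not window:
            continue  # no samples fell inside this epoch
        for finger in FINGERS:
            values = [angles[finger] for angles in window]
            amplitudes[(label, finger)] = max(values) - min(values)
    return amplitudes


samples = [
    (0.0, {"index": 0, "middle": 0, "ring": 0, "pinky": 0}),
    (0.5, {"index": 30, "middle": 20, "ring": 10, "pinky": 5}),
    (1.0, {"index": 5, "middle": 5, "ring": 5, "pinky": 5}),
    (2.0, {"index": 50, "middle": 50, "ring": 50, "pinky": 50}),
]
print(epoch_amplitudes(samples, [("MOVE h 1", 0.0, 1.5)]))
```

Using a half-open window keeps a sample that falls exactly on an epoch boundary from being counted in two epochs.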

2.6. Synchronization with collection of fMRI data

Three components of this system are synchronized in time: the collection of hand joint angles from the instrumented glove, the motion of the virtual hands, and the acquisition of fMRI images. After calibration, glove data collection was synchronized with the first functional volume of each functional imaging run by a TTL trigger (backtick) signal transmitted from the scanner to the computer controlling the glove. From that point, glove data were collected in a continuous stream until the visual presentation program terminated at the end of each functional run. As glove data were acquired, they were time-stamped and saved for offline analysis.
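Because the TTL trigger marks the start of the first functional volume, each time-stamped glove sample can be assigned to a volume by dividing its delay from the trigger by the TR (2500 ms; Section 2.7). The sketch below assumes a single trigger per run, a constant TR, and a shared clock for the trigger and the samples; the optional `n_discard` argument mimics dropping the first two volumes during preprocessing. Both helpers are hypothetical.

```python
TR_S = 2.5  # repetition time (s), from Section 2.7


def volume_index(sample_time_s, trigger_time_s, tr_s=TR_S):
    """Index of the functional volume during which a glove sample was acquired.

    The trigger marks the start of volume 0. Returns None for samples that
    precede the trigger.
    """
    dt = sample_time_s - trigger_time_s
    if dt < 0:
        return None
    return int(dt // tr_s)


def bin_by_volume(timestamps, trigger_time_s, tr_s=TR_S, n_discard=0):
    """Group sample timestamps by volume, optionally dropping the first
    n_discard volumes and renumbering the remaining ones from 0."""
    bins = {}
    for t in timestamps:
        v = volume_index(t, trigger_time_s, tr_s)
        if v is None or v < n_discard:
            continue
        bins.setdefault(v - n_discard, []).append(t)
    return bins


print(bin_by_volume([10.0, 11.0, 13.0, 15.1, 16.0], trigger_time_s=10.0, n_discard=2))
```

Over long runs, small drifts between the scanner and stimulus-computer clocks could make a single start trigger insufficient; recording every volume trigger would remove that assumption.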

2.7. fMRI data acquisition and preprocessing

Magnetic resonance imaging was performed at NYU’s Center for Brain Imaging on a research-dedicated 3-T Siemens Allegra head-only scanner with a Siemens standard head coil. Structural (T1-weighted) and functional images (TR = 2500 ms, TE = 30 ms, FOV = 192 mm, flip angle = 90°, bandwidth = 4112 Hz/px, echo spacing = 0.31 ms, 3 × 3 × 3 mm voxels, 46 slices) were acquired. Functional data were preprocessed with SPM5 (http://www.fil.ion.ucl.ac.uk/spm/). The first two volumes were discarded to allow the MR signal to reach steady state. Each subject’s functional volumes were realigned to the first volume, co-registered, spatially normalized to the Montreal Neurological Institute (MNI) template, and smoothed using an 8 mm Gaussian kernel.

2.8. fMRI analysis

fMRI data were analyzed with SPM5. We were interested in two primary effects. First, we analyzed task-specific activation related to interacting in the VE, i.e., activation related to observing virtual hand motion with the intent to imitate and activation related to execution. To rule out non-specific visual feedback effects, we subtracted activation in the ellipsoid conditions from that in the corresponding virtual hand conditions, yielding the contrasts 1) OTI > WATCH e and 2) MOVE h > MOVE e. Second, we asked whether increased exposure to the VE led to time-varying changes in activation, as might be expected if the virtual hand became embodied. For this, we modeled the miniblock number as a separate column in the design matrix and tested whether activation increased parametrically across the miniblocks, separately for each condition. Activation was considered significant at a voxel-level threshold of P < 0.001 (uncorrected) with a minimum cluster extent of 10 voxels. Each subject’s data were analyzed using a fixed-effects model, and the resulting contrast images were submitted to a random-effects group analysis.
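To illustrate the parametric analysis, the sketch below builds two regressors for one condition: a main-effect boxcar and a boxcar scaled by the mean-centred miniblock number (1 to 4), each convolved with a double-gamma haemodynamic response function (HRF) and sampled once per TR. This is only a rough analogue of SPM5’s parametric modulation, which additionally uses its own canonical HRF parameters, microtime resolution, orthogonalization, and high-pass filtering; the HRF shape here (gamma shapes 6 and 16, undershoot ratio 1/6), the onsets in the example, and all function names are assumptions. A positive weight on the second column (contrast [0, 1]) corresponds to activation increasing across miniblocks.

```python
import math

TR_S = 2.5  # repetition time (s), from Section 2.7


def canonical_hrf(t):
    """Double-gamma HRF (assumed gamma shapes 6 and 16, undershoot ratio 1/6)."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def _convolve(signal, kernel):
    """Causal discrete convolution truncated to len(signal)."""
    out = [0.0] * len(signal)
    for i, x in enumerate(signal):
        if x == 0.0:
            continue
        for j, h in enumerate(kernel):
            if i + j >= len(out):
                break
            out[i + j] += x * h
    return out


def block_regressors(onsets, duration, n_scans, tr=TR_S, dt=0.1):
    """Return (main, parametric) regressors sampled at each scan onset."""
    n_fine = int(round(n_scans * tr / dt))
    k = len(onsets)
    # Miniblock numbers 1..k, mean-centred so the modulator sums to zero.
    mods = [(i + 1) - (k + 1) / 2 for i in range(k)]
    main = [0.0] * n_fine
    param = [0.0] * n_fine
    for onset, m in zip(onsets, mods):
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration) / dt)))
        for j in range(start, stop):
            main[j] = 1.0
            param[j] = m
    kernel = [canonical_hrf(i * dt) * dt for i in range(int(round(32 / dt)))]
    step = int(round(tr / dt))
    return (_convolve(main, kernel)[::step],
            _convolve(param, kernel)[::step])


# Four 9-s miniblocks of one condition in a hypothetical 60-scan run.
main, param = block_regressors([0.0, 30.0, 60.0, 90.0], 9.0, 60)
```

Mean-centring the miniblock number keeps the parametric column from absorbing the average task response, so its weight reflects only the change across miniblocks.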

3. Results

In the imaging experiment, we sought to answer two critical questions. First, are the networks recruited for observing and executing actions in VE similar to those known to be engaged for observation and execution of real-world actions? Second, does activity in these neural circuits change as one becomes more familiarized with the VE?

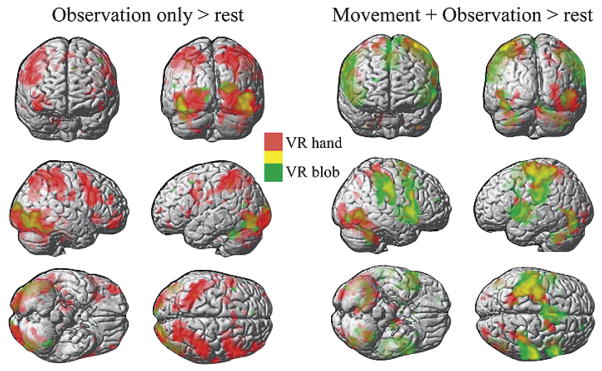

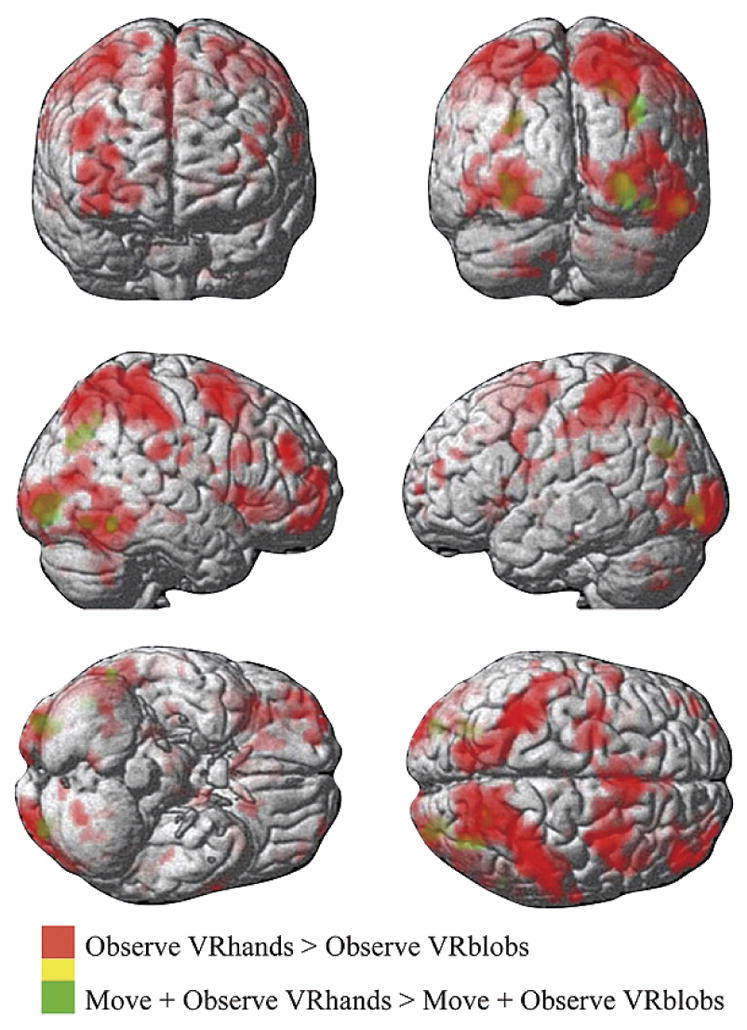

3.1. Activation when observing movements in virtual reality

Figure 2 (left panels) shows the activation patterns when subjects observed a sequential finger movement in the virtual environment. Note that in the observation condition, subjects were instructed to “observe with the intention to imitate afterwards”. In the ellipsoid condition, by contrast, subjects viewed a display that shared most of the features of the virtual hands condition but offered no action to imitate. This allowed us to dissociate “passive” from “active” observation. Observing virtual hands perform a finger sequence was associated with a distributed network (Table 1) including the left parietal cortex (somatosensory cortex and intraparietal sulcus) extending into the anterior bank of the central sulcus (motor cortex), the bilateral anterior insula, the bilateral frontal lobes (right precentral gyrus and left inferior frontal gyrus, pars opercularis), the bilateral occipital lobe, and the right anterior/posterior intermediate cerebellum. In contrast, observing the rotating ellipsoids was associated with activation limited to the bilateral occipital lobe and the left superior lateral cerebellum (Table 1). The OTI > WATCH e contrast (Fig. 3 and Table 2) was computed to subtract out activation associated with low-level features of observation in VR (such as object motion, position, and color) and with observation without an intention to imitate an action. Regions activated in this contrast included the fusiform gyrus of the temporal cortex, the superior parietal lobe including the precuneus and intraparietal sulcus, the anterior insula, the middle frontal gyrus, and the medial frontal lobe.

Fig. 2.

Left panels: Simple main effect of observation only in VR versus rest. In red are regions activated when subjects observed, with the intent to imitate (OTI), a virtual hand performing a natural pre-recorded finger sequence. In green are regions activated when subjects passively viewed a rotating ellipsoid (WATCH e, see Methods). Right panels: Simple main effect of execution versus rest. In red are regions activated when subjects imitated the finger sequence (observed in the OTI condition) with real-time control of representations of their hands in VR (MOVE h). In green are regions activated when subjects performed the finger sequence while viewing rotating ellipsoids that were not controlled by the subject’s motion (MOVE e). Yellow depicts regions where the red and green activations overlap. All activations are thresholded at p < 0.001 with an extent of 10 voxels.

Table 1.

Regions in MNI space showing significant activation for the main contrasts of interest. Data are thresholded at p < 0.001 (voxel level, uncorrected) with a minimum cluster extent of k = 10 voxels. k, cluster extent (voxels); p(FDR), FDR-corrected p value; p(unc), uncorrected p value. IPL, inferior parietal lobule; IPS, intraparietal sulcus; ITG, inferior temporal gyrus; MTG, middle temporal gyrus; STG, superior temporal gyrus; MFG, middle frontal gyrus; SFG, superior frontal gyrus; SFS, superior frontal sulcus.

| Region | Side | x, y, z (mm) | k | t value | z value | p(FDR) | p(unc) |
|---|---|---|---|---|---|---|---|
| OTI > REST | | | | | | | |
| Anterior insula | R | 34 20 −4 | 350 | 10.07 | 4.96 | 0.005 | 0.000 |
| | L | −34 20 2 | 50 | 7.93 | 4.49 | 0.005 | 0.000 |
| IPL, angular gyrus | L | −44 −32 44 | 2686 | 9.31 | 4.81 | 0.005 | 0.000 |
| IPL, postcentral gyrus | L | −66 −16 20 | 23 | 5.48 | 3.73 | 0.005 | 0.000 |
| Precuneus, IPS, IPL | R | 14 −76 56 | 3893 | 8.92 | 4.73 | 0.005 | 0.000 |
| Caudal SFS | L | −24 2 50 | 158 | 7.55 | 4.39 | 0.005 | 0.000 |
| MFG, precentral sulcus/gyrus | L | −52 12 38 | 382 | 6.8 | 4.18 | 0.005 | 0.000 |
| Pars orbitalis | R | 46 50 −10 | 261 | 8.09 | 4.53 | 0.005 | 0.000 |
| | L | −46 46 −16 | 34 | 6.16 | 3.97 | 0.005 | 0.000 |
| | L | −26 64 4 | 25 | 5.06 | 3.56 | 0.006 | 0.000 |
| Pars triangularis | L | −48 28 12 | 12 | 7.02 | 4.24 | 0.005 | 0.000 |
| Pars opercularis, precentral gyrus | R | 66 −12 34 | 122 | 6.68 | 4.14 | 0.005 | 0.000 |
| | R | 52 18 22 | 1975 | 7.2 | 4.29 | 0.005 | 0.000 |
| | L | −54 18 4 | 39 | 5.43 | 3.71 | 0.005 | 0.000 |
| ITG | R | 26 −16 −28 | 114 | 6.31 | 4.02 | 0.005 | 0.000 |
| ITG | R | 38 −24 −12 | 48 | 6.1 | 3.95 | 0.005 | 0.000 |
| Anterior cingulate | R | 18 22 42 | 65 | 6.96 | 4.23 | 0.005 | 0.000 |
| | L | −8 30 38 | 35 | 6.15 | 3.97 | 0.005 | 0.000 |
| | L | −8 12 60 | 145 | 6.03 | 3.93 | 0.005 | 0.000 |
| Putamen | L | −24 16 12 | 20 | 5.34 | 3.68 | 0.005 | 0.000 |
| Dentate | R | 24 −60 −38 | 23 | 5.03 | 3.55 | 0.006 | 0.000 |
| Occipito-parietal | L | −26 −72 22 | 154 | 4.99 | 3.53 | 0.006 | 0.000 |
| Occipito-temporal | R | 46 −68 2 | 4701 | 7.7 | 4.43 | 0.005 | 0.000 |
| Calcarine sulcus | L | −26 −102 −4 | 2729 | 6.9 | 4.21 | 0.005 | 0.000 |
| WATCH e > REST | | | | | | | |
| Inferior occipito-temporal | R | 48 −68 2 | 1218 | 7.96 | 4.5 | 0.068 | 0.000 |
| | L | −44 −70 −16 | 644 | 8.06 | 4.52 | 0.068 | 0.000 |
| Occipital pole | R | 20 −96 10 | 284 | 6.07 | 3.94 | 0.068 | 0.000 |
| Occipital pole | L | −8 −104 −4 | 35 | 7.4 | 4.35 | 0.068 | 0.000 |
| | L | −22 −96 6 | 84 | 5.11 | 3.58 | 0.068 | 0.000 |
| Caudal IPS | R | 36 −44 54 | 10 | 4.38 | 3.26 | 0.068 | 0.001 |
| | L | −36 −46 38 | 17 | 6.29 | 4.02 | 0.068 | 0.000 |
| | L | −34 −44 48 | 34 | 5.01 | 3.54 | 0.068 | 0.000 |
| Anterior intermediate cerebellum | L | −38 −48 −28 | 203 | 6.2 | 3.98 | 0.068 | 0.000 |
| MTG | L | −54 −66 16 | 15 | 5.25 | 3.64 | 0.068 | 0.000 |
| MFG, intermediate | L | −44 14 52 | 16 | 4.69 | 3.41 | 0.068 | 0.000 |
| Anterior cingulate | L | −8 40 40 | 16 | 4.57 | 3.35 | 0.068 | 0.000 |
| MOVE h > REST | | | | | | | |
| Intermediate cerebellum, dentate | R | 28 −38 −50 | 1260 | 7.39 | 4.35 | 0.01 | 0.000 |
| SFG, precentral gyrus | L | −22 −4 74 | 4045 | 6.76 | 4.16 | 0.01 | 0.000 |
| | R | 16 2 58 | 303 | 6.78 | 4.17 | 0.01 | 0.000 |
| Occipito-temporal | R | 44 −66 −10 | 1856 | 6.18 | 3.98 | 0.01 | 0.000 |
| Posterior insula | L | −44 −8 4 | 732 | 6.08 | 3.94 | 0.01 | 0.000 |
| Intermediate insula | R | 50 6 4 | 1185 | 6.06 | 3.94 | 0.01 | 0.000 |
| Anterior, intermediate cingulate | L | −6 4 48 | 638 | 5.94 | 3.90 | 0.01 | 0.000 |
| Central sulcus, pre/postcentral gyri | R | 46 −26 32 | 1572 | 5.73 | 3.82 | 0.01 | 0.000 |
| Precuneus | R | 16 −70 52 | 103 | 5.70 | 3.81 | 0.01 | 0.000 |
| Lateral inferior occipital | L | −46 −74 −20 | 1007 | 5.51 | 3.74 | 0.01 | 0.000 |
| IPL, angular gyrus | R | 32 −70 22 | 53 | 5.30 | 3.66 | 0.01 | 0.000 |
| Intermediate putamen | R | 24 −2 6 | 52 | 5.26 | 3.64 | 0.01 | 0.000 |
| Pars opercularis | R | 56 6 42 | 20 | 4.61 | 3.37 | 0.01 | 0.000 |
| Occipital pole | L | −26 −86 −4 | 96 | 4.54 | 3.34 | 0.01 | 0.000 |
| MOVE e > REST | | | | | | | |
| Intermediate insula, frontoparietal operculum, central sulcus, pre/postcentral gyrus | L | −58 −2 2 | 8268 | 8.05 | 4.52 | 0.006 | 0.000 |
| Central sulcus, pre/postcentral gyrus | R | 56 −20 44 | 2614 | 7.97 | 4.5 | 0.006 | 0.000 |
| Anterior-posterior insula | R | 54 16 −6 | 2687 | 7.66 | 4.42 | 0.006 | 0.000 |
| Inferior occipital-temporal | R | 44 −64 0 | 543 | 6.76 | 4.16 | 0.006 | 0.000 |
| Inferior lateral temporal lobe | L | −38 −76 −18 | 482 | 6.09 | 3.95 | 0.006 | 0.000 |
| Intermediate, superior cerebellum | R | 44 −56 −40 | 653 | 5.9 | 3.88 | 0.006 | 0.000 |
| | L | −27 −70 −38 | 434 | 5.92 | 3.89 | 0.006 | 0.000 |
| Precuneus | L | −14 −54 54 | 63 | 5.59 | 3.77 | 0.006 | 0.000 |
| Caudal MFG | L | −56 2 44 | 19 | 5.56 | 3.76 | 0.006 | 0.000 |
| Anterior insula | L | −32 18 4 | 59 | 5.52 | 3.74 | 0.006 | 0.000 |
| Intermediate putamen | R | 18 2 12 | 70 | 5.33 | 3.67 | 0.006 | 0.000 |
| Rostral SFS | R | 22 50 20 | 36 | 4.96 | 3.52 | 0.007 | 0.000 |

Fig. 3.

Regions activated in the OTI > WATCH e (red) and MOVE h > MOVE e (green) contrasts.

Table 2.

Regions in MNI space showing significant activation for the secondary contrasts of interest not reported in Table 1. Data are thresholded at p < 0.001 (voxel level, uncorrected) with a minimum cluster extent of k = 10 voxels. k, cluster extent (voxels); p(FDR), FDR-corrected p value; p(unc), uncorrected p value. IPL, inferior parietal lobule; IPS, intraparietal sulcus; SPL, superior parietal lobule; ITG, inferior temporal gyrus; MTG, middle temporal gyrus; STG, superior temporal gyrus; MFG, middle frontal gyrus; SFG, superior frontal gyrus; SFS, superior frontal sulcus.

| Region | Side | x, y, z (mm) | k | t value | z value | p(FDR) | p(unc) |
|---|---|---|---|---|---|---|---|
| OTI > WATCH e | | | | | | | |
| Precuneus, IPS, SPL, IPL, angular gyrus, postcentral gyrus, central sulcus | R | 16 −68 50 | 7595 | 14.4 | 5.64 | 0.001 | 0.000 |
| Central sulcus, anterior-posterior bank | R | 66 −14 24 | 66 | 6.14 | 3.97 | 0.002 | 0.000 |
| Lateral parieto-occipital | L | −34 −80 18 | 273 | 7.97 | 4.5 | 0.001 | 0.000 |
| | R | 44 −74 12 | 2848 | 12.87 | 5.43 | 0.001 | 0.000 |
| Anterior insula | L | −32 14 10 | 40 | 6.63 | 4.13 | 0.002 | 0.000 |
| | L | −40 18 0 | 41 | 5.77 | 3.84 | 0.003 | 0.000 |
| | R | 38 18 2 | 1783 | 10.50 | 5.05 | 0.001 | 0.000 |
| Frontal pole | L | −28 64 6 | 24 | 5.8 | 3.85 | 0.003 | 0.000 |
| Caudal SFG, precentral gyrus | L | −20 −4 62 | 712 | 7.86 | 4.47 | 0.002 | 0.000 |
| Rostral SFS, SFG, MFG | R | 34 4 56 | 2522 | 9.3 | 4.81 | 0.001 | 0.000 |
| Rostral MFG | L | −58 10 34 | 121 | 8.1 | 4.53 | 0.001 | 0.000 |
| | R | 46 44 26 | 376 | 10 | 4.95 | 0.001 | 0.000 |
| Pars orbitalis | L | −44 52 14 | 59 | 6.02 | 3.92 | 0.003 | 0.000 |
| Pars opercularis, precentral gyrus | L | −62 4 18 | 58 | 6.33 | 4.03 | 0.002 | 0.000 |
| | R | 46 20 26 | 285 | 9.56 | 4.86 | 0.001 | 0.000 |
| Occipital pole | L | −26 −104 −2 | 1005 | 8.84 | 4.71 | 0.001 | 0.000 |
| ITG | R | 26 −32 −2 | 87 | 6.19 | 3.98 | 0.002 | 0.000 |
| | R | 38 −20 −10 | 51 | 9.45 | 4.84 | 0.001 | 0.000 |
| Rostral lateral sulcus | L | −64 −34 22 | 28 | 5.97 | 3.91 | 0.003 | 0.000 |
| | L | −50 −36 26 | 73 | 5.82 | 3.86 | 0.003 | 0.000 |
| Cerebellar vermis | R | 6 −62 −34 | 65 | 6.84 | 4.19 | 0.002 | 0.000 |
| Intermediate inferior cerebellum | L | −20 −74 −48 | 156 | 6.78 | 4.17 | 0.002 | 0.000 |
| | L | −34 −62 −34 | 41 | 4.98 | 3.53 | 0.005 | 0.000 |
| | R | 40 −58 −44 | 193 | 6.79 | 4.17 | 0.002 | 0.000 |
| Posterior putamen | R | 20 0 20 | 79 | 8.18 | 4.55 | 0.001 | 0.000 |
| Caudate | L | −14 20 −4 | 125 | 8.05 | 4.52 | 0.001 | 0.000 |
| MOVE h > MOVE e | | | | | | | |
| IPL, angular gyrus | L | −26 −70 32 | 88 | 6.29 | 4.02 | 0.239 | 0.000 |
| | R | 38 −68 38 | 124 | 7.25 | 4.31 | 0.239 | 0.000 |
| Precuneus | R | 18 −62 46 | 53 | 4.89 | 3.49 | 0.239 | 0.000 |
| Occipital pole | L | −26 −86 −4 | 122 | 5.19 | 3.62 | 0.239 | 0.000 |
| | R | 26 −86 −6 | 253 | 6.77 | 4.17 | 0.239 | 0.000 |
| ITG, intermediate | R | 58 −54 −14 | 56 | 6.34 | 4.03 | 0.239 | 0.000 |
| | R | 40 −68 −12 | 40 | 4.96 | 3.52 | 0.239 | 0.000 |
| WATCH e > OTI | | | | | | | |
| Cuneus, calcarine sulcus | L | −12 −94 20 | 97 | 7 | 4.24 | 0.511 | 0.000 |
| Cuneus | R | 18 −80 24 | 10 | 5 | 3.54 | 0.541 | 0.000 |
| Inferior occipital | R | 6 −62 0 | 110 | 6.8 | 4.18 | 0.511 | 0.000 |
| MOVE e > MOVE h | | | | | | | |
| Corpus callosum | L | −10 −16 32 | 112 | 12.33 | 5.35 | 0.009 | 0.000 |
| Cuneus | L | −10 −88 34 | 331 | 6.85 | 4.19 | 0.075 | 0.000 |
| Frontal pole | L | −16 58 14 | 94 | 8.9 | 4.72 | 0.049 | 0.000 |
| STG, rostral | R | 62 4 −6 | 21 | 5.44 | 3.71 | 0.096 | 0.000 |
| SFS, intermediate | L | −20 20 50 | 54 | 7.11 | 4.27 | 0.075 | 0.000 |
| MFG, rostral | R | 22 46 16 | 68 | 6.15 | 3.97 | 0.075 | 0.000 |
| MFG, precentral sulcus | R | 34 −6 38 | 76 | 7.73 | 4.44 | 0.075 | 0.000 |
| Pars orbitalis | L | −36 56 −4 | 37 | 5.62 | 3.78 | 0.091 | 0.000 |
| OTI block 4 > 3 > 2 > 1 | | | | | | | |
| Posterior-intermediate insula | L | −46 −16 14 | 163 | 9.04 | 4.75 | 0.066 | 0.000 |

3.2. Activation when executing movements in virtual reality

Figure 2 (right panels) shows activation when participants executed the sequential finger movements while receiving VR feedback of either the virtual hands driven by their own motion (red areas) or the ellipsoids (green areas). Both conditions were associated with activation of the right cerebellar cortex, extensive activation of the left sensorimotor cortex that included much of the postcentral and precentral gyri, the right inferior parietal lobule, and the bilateral insular cortex (Table 1). Additionally, feedback of the VR hands was associated with activation of the right fusiform gyrus. The contrast of VR hand feedback minus ellipsoid feedback (Table 2, Fig. 3) revealed activation of the bilateral angular gyri, precuneus, inferior occipital lobe, and occipitotemporal junction. Because finger movement did not differ between the two conditions (see Section 3.4), it is not surprising that the sensorimotor cortex activation associated with each condition was not evident after the subtraction.

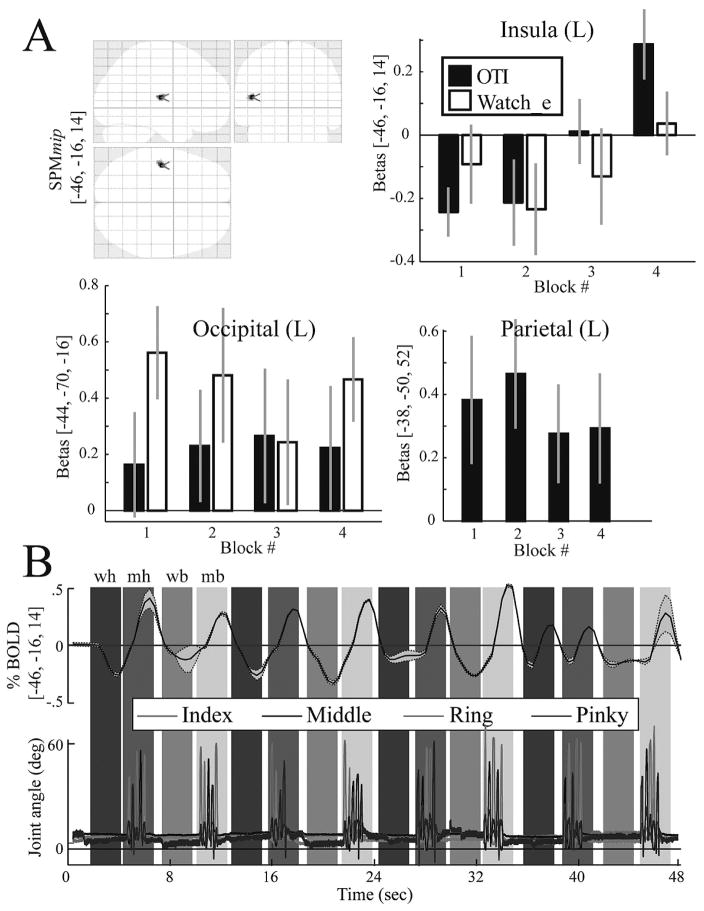

3.3. Activation during observation that changed over time

To determine whether brain activation changed over time as subjects became familiar with interacting in the VE, we analyzed parametric changes in the BOLD signal across the four miniblocks of each execution and observation condition. BOLD signal increases were found in the OTI condition in the left posterior insula (Fig. 4 and Table 2) and in the MOVE h condition in the right inferior occipital lobe (figure not shown; see Table 2 for coordinates). Note that these time-variant changes in the BOLD signal occurred even though movement kinematics did not differ across miniblocks in the observation and execution conditions (see Section 3.4). No other significant time-variant changes in the BOLD signal were found.

Fig. 4.

A. The top left panel shows, on an SPM glass brain, the only region (the insula) that showed a significant time-variant increase in activation during the OTI condition. The remaining three panels show bar plots of the beta values at three cortical locations: the insula site shown in the glass brain and two control sites that were recruited in the simple main effect contrast. The time-variant increase is evident only in the insula and only in the OTI condition. Bar plots for the parietal site in the WATCH e condition are not shown because this site was not recruited in the simple main effect. B. Simultaneously recorded time series of the BOLD signal (top; group mean ± 1 SD) and of the joint angles of the four fingers (bottom; one representative subject). Shaded vertical bars denote the condition epochs (wh, OTI; mh, MOVE h; we, WATCH e; me, MOVE e).

3.4. Behavioral data

Inspection of the finger kinematics acquired during the fMRI experiment revealed that all subjects complied with the task, keeping their fingers still during the observation epochs and performing the correct finger sequences in the execution epochs. The bottom panel of Fig. 4 shows a representative subject's MCP joint angle excursion for the index, middle, ring, and pinky fingers across one block. An ANOVA on peak joint excursion (movement extent) at the MCP joints revealed a significant main effect of FINGER (F(3,9) = 8.9; p = 0.005): the greatest excursion occurred at the index MCP (range: 27°–38°) and the least at the pinky MCP (range: 17°–25°). No other main effects or interactions were significant for movement extent (p > 0.05), suggesting that movement of each finger was consistent across epochs and conditions.
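As an illustration of this behavioral analysis, the sketch below computes peak-to-peak MCP excursion for one epoch and runs a one-way repeated-measures ANOVA with FINGER as the within-subject factor. This is a simplification: the reported analysis also covered epochs and conditions, and the excursion values below are invented for illustration, not data from this study. The functions `peak_excursion` and `rm_anova_oneway` are hypothetical helpers, not part of an existing library.

```python
import numpy as np

def peak_excursion(angles_deg):
    """Peak-to-peak excursion (degrees) of one joint-angle trace in one epoch."""
    a = np.asarray(angles_deg, dtype=float)
    return a.max() - a.min()

def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: array of shape (n_subjects, k_levels), one value per subject and level.
    Returns (F, df_effect, df_error).
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand = data.mean()
    ss_effect = n * ((data.mean(axis=0) - grand) ** 2).sum()   # between levels
    ss_subject = k * ((data.mean(axis=1) - grand) ** 2).sum()  # between subjects
    ss_total = ((data - grand) ** 2).sum()
    ss_error = ss_total - ss_effect - ss_subject               # level x subject
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_effect / df_effect) / (ss_error / df_error)
    return F, df_effect, df_error

# One MCP trace (made-up samples, degrees): excursion = 33 - 5 = 28.
print(peak_excursion([5.0, 12.0, 33.0, 20.0, 6.0]))

# Invented per-subject mean excursions (deg); columns: index, middle, ring, pinky.
excursion = np.array([
    [30.0, 26.0, 22.0, 19.0],
    [34.0, 29.0, 24.0, 21.0],
    [28.0, 27.0, 20.0, 18.0],
    [36.0, 30.0, 25.0, 23.0],
    [31.0, 28.0, 23.0, 20.0],
])
F, df_effect, df_error = rm_anova_oneway(excursion)
```

The subject term is removed from the error sum of squares, which is what distinguishes the repeated-measures test from a between-groups ANOVA; a p-value can then be obtained from the F distribution, for example with `scipy.stats.f.sf(F, df_effect, df_error)`.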

4. Discussion

Observation and imitation are among the most powerful and influential mechanisms of human skill learning. Because the neural networks for observation and execution overlap substantially, stroke patients may benefit from observing embodied actions or effectors during the acute phase after stroke, when they are largely immobile. In support of this, simple observation of actions has been shown to accelerate functional recovery after stroke (Celnik et al., 2008). To allow subjects to embody the movements they observe, we developed realistic representations of human hands in virtual reality that can be actuated in real time by an actor's hands. We used this MR-compatible interactive virtual environment to study the neural networks involved in observing and imitating complex hand movements.

A longstanding obstacle to understanding the real-time link between brain and motor behavior has been the incompatibility of human motion measurement technology with MRI environments. Recently, innovative devices capable of measuring the kinematics and kinetics of one- (1D) and two- (2D) degree-of-freedom movements, as well as delivering forces/torques to subjects' movements, have been successfully integrated with MRI environments with negligible device-to-MRI and MRI-to-device artifacts (Ehrsson et al., 2001; Diedrichsen et al., 2005; Tunik et al., 2007; Vaillancourt et al., 2007). These devices allow brain-behavior interactions to be studied in real time for 1D and 2D movements, but they are limited in their capacity to study complex movements such as hand coordination. Moreover, the visual feedback presented in these studies was a moving cursor rather than a moving body part.

Our study yields four important findings. First, we show that kinematic recording of hand movements, virtual reality-based feedback, and task-related measurement of neural responses with fMRI can be integrated simultaneously. Second, we demonstrate that intentional observation of to-be-imitated hand actions presented in a VE in the 1st person perspective recruits a bilateral frontoparietal network similar to that recruited for observing actions performed in the real world. Third, we show an increase in activation in the left insular cortex as participants became more familiar with the relationship between their own movement and that of the virtual hand models. Fourth, we identify for the first time the involvement of the bilateral angular gyri, extrastriate body area, and left precuneus when controlling a virtual representation of one's own hands viewed in real time in the 1st person. We discuss each of these points below.

4.1. Capabilities of our VE training system

It is timely to consider how virtual environments can be exploited to facilitate functional recovery and neural reorganization. Although exercising in a virtual environment is at a nascent stage of exploration, an ever-increasing number of studies show that VE training has positive behavioral (Holden et al., 2007; Mirelman et al., 2008; Merians et al., In Press) and neural (You et al., 2005; You et al., 2005) effects. The overall VE architecture developed by our team was designed for rehabilitation of hand function in patients with various neurological disorders, including stroke and cerebral palsy. The system can accommodate patients with a broad array of dysfunctions and treatment goals. For example, it can integrate various sensors and actuators to seamlessly track the motion of the fingers, hands, and arms and to induce mechanical perturbations to the fingers or arms (Merians et al., In Press). What remains untested is whether the benefits of training in VE emerge simply because it is an entertaining practice environment or because interacting in a specially designed VE can selectively engage a frontoparietal action observation and action production network. The latter possibility may have profound implications for evidence-based neurorehabilitation methods and practices.

4.2. Frontoparietal involvement for observing with the intent to imitate actions in VE

In the OTI condition, subjects observed finger sequences performed by a virtual hand representation. The sequences did not involve consecutive fingers and varied from trial to trial, requiring subjects to observe each sequence actively in order to reproduce it on the subsequent trial. The simple main effect of the OTI condition versus viewing static virtual hands was associated with activation in a distributed, mostly bilateral network including the visual, sensorimotor, premotor, posterior parietal, and insular cortices. This network is consistent with numerous neuroimaging studies of the neural correlates of observing real-world hand movements. For example, observation of intransitive (non-object-oriented) actions in pictures or videos of real hands engages a distributed network spanning the frontal, parietal, and temporal lobes (Decety et al., 1997; Buccino et al., 2001; Grezes et al., 2001; Suchan et al., 2008). In contrast, activation in the simple main effect of observing ellipsoids (OE) versus static virtual hands was predominantly localized to visual processing areas (occipitotemporal cortex), making it unlikely that activation in the OTI condition was attributable to low-level stimulus properties such as object shape, color, motion, or position in the visual field. The OTI > OE contrast confirmed this finding. It has been suggested that the frontal and parietal regions mentioned above, particularly those of the mirror-neuron system, may form part of a network subserving internal simulation of action, which may resonate during intentional observation of movement (Rizzolatti and Luppino 2001; Gallese et al., 2004; Kilner et al., 2004). Our data extend this hypothesis, suggesting that observation of virtual but realistic effectors may also engage similar neural substrates.

An earlier fMRI study compared observation of grasping performed by high- and low-fidelity VR hands with observation of grasping performed by real hands (Perani et al., 2001). The authors found that observing grasping by real hands more strongly recruited the frontal and parietal cortices and that the degree of realism of the virtual hands had a negligible effect on higher-order sensorimotor centers. Similarly, the left ventral premotor cortex, a presumptive mirror-neuron site, has been shown to be more strongly recruited when participants observe grasping performed by a real rather than a robotic (nonbiological) hand (Tai et al., 2004). However, in these paradigms subjects 1) passively observed actions performed by another agent and 2) never practiced the observed action themselves with real-time feedback. Notably, in Perani et al.'s study, the bilateral precuneus and right inferior parietal lobule (BA 39, 40) were recruited during observation of real but not virtual hand grasping (see Table 2 in Perani et al., 2001). In our study, pairing observation of virtual hand actions (with the intent to imitate) with rehearsal of the observed action likely led to recruitment of these higher-order sensorimotor centers during observation alone (see OTI > OE in Table 2).

4.3. Time-varying activation in the insular cortex

An additional consideration when interacting in VR is that extended exposure to the virtual model may be needed to develop a sense of control or ownership over the virtual representation of one's own body. We tested this with a time-series analysis of the BOLD data, which revealed a parametric increase in the BOLD signal in the left posterior insular cortex for the OTI condition. Other cortical regions recruited in the OTI or OE conditions did not show such parametric increases. Note also that in the OTI condition subjects made no overt movements (see Results) but only observed, with the intention to imitate immediately afterward. To our knowledge, this is the first evidence of a time-variant change localized to the insular cortex driven by increased interaction with a virtual representation of one's hand. The increase in insular activation likely reflects the neural substrate underlying the emergence of a sensed relationship between self-movement and the movement of the virtual hands, which the subject controlled throughout the experiment. This interpretation is supported by lesion and neuroimaging data implicating the insular cortex in awareness of actions performed by the self and others (see discussion in the above section). For example, several recent reports noted increased insular activation as subjects became increasingly aware of being in control of an action (Farrer and Frith 2002; Farrer et al., 2003; Corradi-Dell'acqua et al., 2008). Along these lines, we observed a parametric increase during observation with the intent to imitate but not during the MOVE h condition.

The parametric increase in BOLD across blocks is unlikely to be explained by movement-related changes, since our analyses of movement kinematics (collected concurrently with fMRI using a data glove) revealed no significant changes in performance across the movement blocks and no movement in the observation blocks. The parametric changes in BOLD likely reflect perceptuo-motor influences of interacting in VR; the exact source of this modulation of brain activity is the focus of our ongoing studies.

4.4. Control of a virtual representation of your own hands

Subjects executed sequential finger movements while receiving simultaneous feedback of their movement through VR hands that they controlled or through rotation of virtual ellipsoids not actuated by the subjects. Movement under both feedback conditions led to distributed activation in networks known to be recruited for sequential finger movement (Grafton et al., 1996). To identify regions sensitive to feedback from the VR hand models, we subtracted the VR ellipsoids contrast from the VR hands contrast. This subtraction revealed activation in the left precuneus, bilateral angular gyri, and left extrastriate body area, consistent with recently hypothesized functions of these regions, which we discuss in turn.

4.5. Contralateral precuneus

Functional neuroimaging work in humans and unit recordings in non-human primates suggest that the parietal cortex is integral to sensorimotor integration, a process in which visual and proprioceptive information is integrated with efference copies of motor commands to generate an internal representation of the current state of the body (Pellijeff et al., 2006). A number of related processes that presumably require sensorimotor integration, such as motor imagery and the sense of control over an action (i.e., the sense of agency), are associated with activation of regions within the parietal cortex, particularly the precuneus in the case of imagery (Vingerhoets et al., 2002; Hanakawa et al., 2003; Cavanna and Trimble 2006). Tracing studies mapping the corticocortical connections of the precuneus demonstrate that this region is reciprocally connected with higher-order centers in the superior and inferior parietal lobules, lateral and medial premotor areas, the prefrontal cortex, and the cingulate cortex, further supporting its role in sensorimotor integration.

4.6. Bilateral angular gyri

Tracing studies in monkeys demonstrate that area PG (the putative homologue of the human angular gyrus) is connected with higher-order sensorimotor centers, including the rostral regions of the inferior parietal lobule, pre-SMA, and ventral premotor cortex (area F5b) (Gregoriou et al., 2006; Rozzi et al., 2006). Tractography in healthy humans using diffusion tensor imaging reveals a similar pattern: the angular gyrus has strong connectivity with the ventral premotor cortex and with the parahippocampal gyrus, which is implicated in spatial perception (Rushworth et al., 2006). Cells in area PG (area 7a) have complex visual and somatosensory response properties, suggesting that this region is involved in egocentric and allocentric space perception, particularly for guiding motor actions (Blum 1985; Blatt et al., 1990; MacKay 1992; Yokochi et al., 2003), perhaps as part of the dorsal visual stream. Human imaging studies support a role for the angular gyrus in the attribution of agency (Farrer and Frith 2002; Farrer et al., 2003; Farrer et al., 2007).

4.7. Extrastriate body area

The extrastriate body area (EBA) lies in the lateral occipitotemporal cortex, at approximately the posterior inferior temporal sulcus/middle temporal gyrus (Peelen and Downing 2005; Spiridon et al., 2006), near the visual motion processing area MT (V5) and the lateral occipital area (LO), which is selective for object form (Downing et al., 2001; Downing et al., 2007). Peelen and Downing (2007) provide a detailed review of the EBA's role in perception. Human neuroimaging work shows that the EBA is selectively recruited when observing images of body parts (relative to images of faces or objects) and, like the angular gyrus and insular cortex, appears to be important for identifying the agent of an observed movement (David et al., 2007). Virtual lesions of the EBA induced by transcranial magnetic stimulation transiently impair performance on match-to-sample tasks involving images of body parts (Urgesi et al., 2004). This literature suggests that the EBA activation in the VRhands > VRellipse contrast in our study reflects processing specific to observing moving virtual body parts.

4.8. General conclusion

In our study, subjects alternated between observing actions performed by the virtual hand models in order to imitate them, and actually controlling the VR hand models, whose motion was temporally and spatially congruent with their own. A parsimonious explanation is that the VR hand models served as disembodied training tools in the former condition and as embodied “extensions” of the subject’s own body, or “pseudo-tools,” in the latter. Our results suggest that the time-variant activation of the insula in the observation epochs may have reflected an improved ability to disembody the VR hands, whereas the recruitment of a network involving the precuneus, angular gyrus, and extrastriate body area in the execution condition may be attributable to the role of these regions in integrating visual feedback of the VR hand models with concurrent proprioceptive feedback and efference copies of motor commands.

Acknowledgments

Special thanks to On-Yee Lo, Jeffrey Lewis, and Qinyin Qiu for expert technical assistance. This work was supported by institutional funds provided by New York University, Steinhardt School of Culture, Education, and Human Development (ET), University of Medicine and Dentistry of New Jersey, Department of Rehabilitation and Movement Science (ET), and by the NIH grant HD 42161 (SA) and National Institute on Disability and Rehabilitation Research RERC Grant # H133E050011 (SA).

References

- Fifth Dimension Technologies. 5DT Data Glove 16 MRI. Available from: http://www.5dt.com.

- Adamovich S, Fluet G, Merians A, Mathai A, Qiu Q. Recovery of hand function in virtual reality: training hemi-paretic hand and arm together or separately. 28th EMBC Annual International Conference Engineering in Medicine and Biology Society; Vancouver, Canada. 2008. pp. 3475–3478.

- Adamovich S, Fluet G, Qiu Q, Mathai A, Merians A. Incorporating haptic effects into three-dimensional virtual environments to train the hemiparetic upper extremity. IEEE Transactions on Neural Systems and Rehabilitation Engineering. In Press. doi: 10.1109/TNSRE.2009.2028830.

- Adamovich S, Merians A, Boian R, Tremaine M, Burdea G, Recce M, Poizner H. A virtual reality (VR)-based exercise system for hand rehabilitation post stroke. Presence. 2005;14:161–174. doi: 10.1109/IEMBS.2004.1404364.

- Altschuler EL. Interaction of vision and movement via a mirror. Perception. 2005;34(9):1153–5. doi: 10.1068/p3409bn.

- Blatt GJ, Andersen RA, Stoner GR. Visual receptive field organization and corticocortical connections of the lateral intraparietal area (area LIP) in the macaque. J Comp Neurol. 1990;299(4):421–45. doi: 10.1002/cne.902990404.

- Blum B. Manipulation reach and visual reach neurons in the inferior parietal lobule of the rhesus monkey. Behav Brain Res. 1985;18(2):167–73. doi: 10.1016/0166-4328(85)90072-5.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund HJ. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur J Neurosci. 2001;13(2):400–4.

- Buccino G, Solodkin A, Small SL. Functions of the mirror neuron system: implications for neurorehabilitation. Cogn Behav Neurol. 2006;19(1):55–63. doi: 10.1097/00146965-200603000-00007.

- Butler AJ, Page SJ. Mental practice with motor imagery: evidence for motor recovery and cortical reorganization after stroke. Arch Phys Med Rehabil. 2006;87(12 Suppl 2):S2–11. doi: 10.1016/j.apmr.2006.08.326.

- Cavanna AE, Trimble MR. The precuneus: a review of its functional anatomy and behavioural correlates. Brain. 2006;129(Pt 3):564–83. doi: 10.1093/brain/awl004.

- Celnik P, Stefan K, Hummel F, Duque J, Classen J, Cohen LG. Encoding a motor memory in the older adult by action observation. Neuroimage. 2006;29(2):677–84. doi: 10.1016/j.neuroimage.2005.07.039.

- Celnik P, Webster B, Glasser DM, Cohen LG. Effects of action observation on physical training after stroke. Stroke. 2008;39(6):1814–20. doi: 10.1161/STROKEAHA.107.508184.

- Corradi-Dell’acqua C, Ueno K, Ogawa A, Cheng K, Rumiati RI, Iriki A. Effects of shifting perspective of the self: an fMRI study. Neuroimage. 2008;40(4):1902–11. doi: 10.1016/j.neuroimage.2007.12.062.

- David N, Cohen MX, Newen A, Bewernick BH, Shah NJ, Fink GR, Vogeley K. The extrastriate cortex distinguishes between the consequences of one’s own and others’ behavior. Neuroimage. 2007;36(3):1004–14. doi: 10.1016/j.neuroimage.2007.03.030.

- Decety J, Grezes J, Costes N, Perani D, Jeannerod M, Procyk E, Grassi F, Fazio F. Brain activity during observation of actions. Influence of action content and subject’s strategy. Brain. 1997;120(Pt 10):1763–77. doi: 10.1093/brain/120.10.1763.

- Diedrichsen J, Hashambhoy Y, Rane T, Shadmehr R. Neural correlates of reach errors. J Neurosci. 2005;25(43):9919–31. doi: 10.1523/JNEUROSCI.1874-05.2005.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293(5539):2470–3. doi: 10.1126/science.1063414.

- Downing PE, Wiggett AJ, Peelen MV. Functional magnetic resonance imaging investigation of overlapping lateral occipitotemporal activations using multi-voxel pattern analysis. J Neurosci. 2007;27(1):226–33. doi: 10.1523/JNEUROSCI.3619-06.2007.

- Ehrsson HH, Fagergren E, Forssberg H. Differential fronto-parietal activation depending on force used in a precision grip task: an fMRI study. J Neurophysiol. 2001;85(6):2613–23. doi: 10.1152/jn.2001.85.6.2613.

- Farrer C, Franck N, Georgieff N, Frith CD, Decety J, Jeannerod M. Modulating the experience of agency: a positron emission tomography study. Neuroimage. 2003;18(2):324–33. doi: 10.1016/s1053-8119(02)00041-1.

- Farrer C, Frey SH, Van Horn JD, Tunik E, Turk D, Inati S, Grafton ST. The Angular Gyrus Computes Action Awareness Representations. Cereb Cortex. 2007. doi: 10.1093/cercor/bhm050.

- Farrer C, Frith CD. Experiencing oneself vs another person as being the cause of an action: the neural correlates of the experience of agency. Neuroimage. 2002;15(3):596–603. doi: 10.1006/nimg.2001.1009.

- Gaggioli A, Meneghini A, Morganti F, Alcaniz M, Riva G. A strategy for computer-assisted mental practice in stroke rehabilitation. Neurorehabil Neural Repair. 2006;20(4):503–7. doi: 10.1177/1545968306290224.

- Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends Cogn Sci. 2004;8(9):396–403. doi: 10.1016/j.tics.2004.07.002.

- Grafton ST, Fagg AH, Woods RP, Arbib MA. Functional anatomy of pointing and grasping in humans. Cereb Cortex. 1996;6(2):226–37. doi: 10.1093/cercor/6.2.226.

- Gregoriou GG, Borra E, Matelli M, Luppino G. Architectonic organization of the inferior parietal convexity of the macaque monkey. J Comp Neurol. 2006;496(3):422–51. doi: 10.1002/cne.20933.

- Grezes J, Fonlupt P, Bertenthal B, Delon-Martin C, Segebarth C, Decety J. Does perception of biological motion rely on specific brain regions? Neuroimage. 2001;13(5):775–85. doi: 10.1006/nimg.2000.0740.

- Hanakawa T, Immisch I, Toma K, Dimyan MA, Van Gelderen P, Hallett M. Functional properties of brain areas associated with motor execution and imagery. J Neurophysiol. 2003;89(2):989–1002. doi: 10.1152/jn.00132.2002.

- Holden MK. Virtual environments for motor rehabilitation: review. Cyberpsychol Behav. 2005;8(3):187–211; discussion 212–9. doi: 10.1089/cpb.2005.8.187.

- Holden MK, Dyar TA, Dayan-Cimadoro L. Telerehabilitation using a virtual environment improves upper extremity function in patients with stroke. IEEE Trans Neural Syst Rehabil Eng. 2007;15(1):36–42. doi: 10.1109/TNSRE.2007.891388.

- Kilner JM, Paulignan Y, Boussaoud D. Functional connectivity during real vs imagined visuomotor tasks: an EEG study. Neuroreport. 2004;15(4):637–42. doi: 10.1097/00001756-200403220-00013.

- MacKay WA. Properties of reach-related neuronal activity in cortical area 7A. J Neurophysiol. 1992;67(5):1335–45. doi: 10.1152/jn.1992.67.5.1335.

- McCloy R, Stone R. Science, medicine, and the future. Virtual reality in surgery. BMJ. 2001;323(7318):912–5. doi: 10.1136/bmj.323.7318.912.

- Merians AS, Poizner H, Boian R, Burdea G, Adamovich S. Sensorimotor training in a virtual reality environment: does it improve functional recovery poststroke? Neurorehabil Neural Repair. 2006;20(2):252–67. doi: 10.1177/1545968306286914.

- Merians AS, Tunik E, Adamovich SV. Virtual Reality to Maximize Function for Hand and Arm Rehabilitation: Exploration of Neural Mechanisms. In: Gaggioli A, editor. Innovation in Rehabilitation Technology. IOS Press; In Press.

- Mirelman A, Bonato P, Deutsch JE. Effects of Training With a Robot-Virtual Reality System Compared With a Robot Alone on the Gait of Individuals After Stroke. Stroke. 2008 Nov 6. doi: 10.1161/STROKEAHA.108.516328. [Epub ahead of print]

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- Peelen MV, Downing PE. Within-subject reproducibility of category-specific visual activation with functional MRI. Hum Brain Mapp. 2005;25(4):402–8. doi: 10.1002/hbm.20116.

- Peelen MV, Downing PE. The neural basis of visual body perception. Nat Rev Neurosci. 2007;8(8):636–48. doi: 10.1038/nrn2195.

- Pellijeff A, Bonilha L, Morgan PS, McKenzie K, Jackson SR. Parietal updating of limb posture: an event-related fMRI study. Neuropsychologia. 2006;44(13):2685–90. doi: 10.1016/j.neuropsychologia.2006.01.009.

- Perani D, Fazio F, Borghese NA, Tettamanti M, Ferrari S, Decety J, Gilardi MC. Different brain correlates for watching real and virtual hand actions. Neuroimage. 2001;14(3):749–58. doi: 10.1006/nimg.2001.0872.

- Pomeroy VM, Clark CA, Miller JS, Baron JC, Markus HS, Tallis RC. The potential for utilizing the “mirror neurone system” to enhance recovery of the severely affected upper limb early after stroke: a review and hypothesis. Neurorehabil Neural Repair. 2005;19(1):4–13. doi: 10.1177/1545968304274351.

- Powers MB, Emmelkamp PM. Virtual reality exposure therapy for anxiety disorders: A meta-analysis. J Anxiety Disord. 2008;22(3):561–9. doi: 10.1016/j.janxdis.2007.04.006.

- Riva G, Castelnuovo G, Mantovani F. Transformation of flow in rehabilitation: the role of advanced communication technologies. Behav Res Methods. 2006;38(2):237–44. doi: 10.3758/bf03192775.

- Rizzo AA, Graap K, Perlman K, McLay RN, Rothbaum BO, Reger G, Parsons T, Difede J, Pair J. Virtual Iraq: initial results from a VR exposure therapy application for combat-related PTSD. Stud Health Technol Inform. 2008;132:420–5.

- Rizzolatti G, Luppino G. The cortical motor system. Neuron. 2001;31(6):889–901. doi: 10.1016/s0896-6273(01)00423-8.

- Rozzi S, Calzavara R, Belmalih A, Borra E, Gregoriou GG, Matelli M, Luppino G. Cortical connections of the inferior parietal cortical convexity of the macaque monkey. Cereb Cortex. 2006;16(10):1389–417. doi: 10.1093/cercor/bhj076.

- Rushworth MF, Behrens TE, Johansen-Berg H. Connection patterns distinguish 3 regions of human parietal cortex. Cereb Cortex. 2006;16(10):1418–30. doi: 10.1093/cercor/bhj079.

- Spiridon M, Fischl B, Kanwisher N. Location and spatial profile of category-specific regions in human extrastriate cortex. Hum Brain Mapp. 2006;27(1):77–89. doi: 10.1002/hbm.20169.

- Suchan B, Melde C, Herzog H, Homberg V, Seitz RJ. Activation differences in observation of hand movements for imitation or velocity judgement. Behav Brain Res. 2008;188(1):78–83. doi: 10.1016/j.bbr.2007.10.021.

- Tai YF, Scherfler C, Brooks DJ, Sawamoto N, Castiello U. The human premotor cortex is ‘mirror’ only for biological actions. Curr Biol. 2004;14(2):117–20. doi: 10.1016/j.cub.2004.01.005.

- The Virtual Reality Peripheral Network (VRPN). Available from: http://www.cs.unc.edu/Research/vrpn.

- Tunik E, Schmitt PJ, Grafton ST. BOLD coherence reveals segregated functional neural interactions when adapting to distinct torque perturbations. J Neurophysiol. 2007;97(3):2107–20. doi: 10.1152/jn.00405.2006.

- Urgesi C, Berlucchi G, Aglioti SM. Magnetic stimulation of extrastriate body area impairs visual processing of nonfacial body parts. Curr Biol. 2004;14(23):2130–4. doi: 10.1016/j.cub.2004.11.031.

- Vaillancourt DE, Yu H, Mayka MA, Corcos DM. Role of the basal ganglia and frontal cortex in selecting and producing internally guided force pulses. Neuroimage. 2007;36(3):793–803. doi: 10.1016/j.neuroimage.2007.03.002.

- Vingerhoets G, de Lange FP, Vandemaele P, Deblaere K, Achten E. Motor imagery in mental rotation: an fMRI study. Neuroimage. 2002;17(3):1623–33. doi: 10.1006/nimg.2002.1290.

- Yokochi H, Tanaka M, Kumashiro M, Iriki A. Inferior parietal somatosensory neurons coding face-hand coordination in Japanese macaques. Somatosens Mot Res. 2003;20(2):115–25. doi: 10.1080/0899022031000105145.

- You SH, Jang SH, Kim YH, Hallett M, Ahn SH, Kwon YH, Kim JH, Lee MY. Virtual reality-induced cortical reorganization and associated locomotor recovery in chronic stroke: an experimenter-blind randomized study. Stroke. 2005;36(6):1166–71. doi: 10.1161/01.STR.0000162715.43417.91.

- You SH, Jang SH, Kim YH, Kwon YH, Barrow I, Hallett M. Cortical reorganization induced by virtual reality therapy in a child with hemiparetic cerebral palsy. Dev Med Child Neurol. 2005;47(9):628–35.