Abstract

Purpose

Real‐world evidence (RWE) is derived from real‐world data (RWD), which include data from retrospective or prospective observational studies and observational registries. RWE provides insights beyond those addressed by randomized controlled trials, and RWE studies aim to improve health care decision making.

Methods

The International Society for Pharmacoeconomics and Outcomes Research (ISPOR) and the International Society for Pharmacoepidemiology (ISPE) created a task force to make recommendations regarding good procedural practices that would enhance decision makers' confidence in evidence derived from RWD studies. Peer review by ISPOR/ISPE members and task force participants provided a consensus‐building iterative process for the topics and framing of recommendations.

Results

The ISPOR/ISPE Task Force recommendations cover seven topics, including study registration, replicability, and stakeholder involvement in RWE studies. These recommendations, in concert with earlier recommendations about study methodology, provide a trustworthy foundation for the expanded use of RWE in health care decision making.

Conclusion

The focus of these recommendations is good procedural practices for studies that test a specific hypothesis in a specific population. We recognize that some of the recommendations in this report may not be widely adopted without appropriate incentives from decision makers, journal editors, and other key stakeholders.

Keywords: comparative effectiveness, decision making, guidelines, pharmacoepidemiology, real‐world data, treatment effectiveness

1. INTRODUCTION

Real‐world evidence (RWE) is obtained from analyzing real‐world data (RWD). RWD are defined here briefly as data generated during routine clinical practice, outside the context of randomized controlled trials (RCTs).1, 2 This includes data from retrospective or prospective observational studies and observational registries; some also consider data from single‐arm clinical trials to be RWD. As stated in a 2007 International Society for Pharmacoeconomics and Outcomes Research (ISPOR) task force report, “Evidence is generated according to a research plan and interpreted accordingly, whereas data is but one component of the research plan. Evidence is shaped, while data simply are raw materials and alone are non‐informative.” RWE can inform the application of evidence from RCTs to health care decision making and provide insights beyond those addressed by RCTs. RWD studies assess both the care and the health outcomes of patients in routine clinical practice, and they produce RWE. In contrast to RCTs, in which treatment is assigned at random, in routine practice patients and their clinicians choose treatments on the basis of the patient's clinical characteristics and preferences. However, because the factors that influence treatment choice in clinical practice may also influence clinical outcomes (ie, confounding), RWD studies generally cannot yield definitive causal conclusions about the effects of treatment.

Currently, most regulatory bodies and Health Technology Assessment (HTA) organizations use RWE for descriptive analyses (eg, disease epidemiology, treatment patterns, and burden of illness) and to assess treatment safety (the incidence of adverse effects) but not treatment effectiveness, whereas other decision makers also leverage RWD to varying extents with respect to effectiveness and comparative effectiveness.3 With increasing attention paid to the applicability of evidence to specific target populations, however, clinicians, payers, HTA organizations, regulators, and clinical guideline developers are likely to turn to RWE to sharpen decision making that heretofore had been guided principally by RCTs (frequently using a placebo control) in narrowly defined populations.

Commonly voiced concerns about RWD studies include uncertainty about their internal validity, inaccurate recording of health events, missing data, and opaque reporting of conduct and results.4 Critics of such studies also worry that the RWE literature is biased because of “data dredging” (ie, conducting multiple analyses until one provides the hoped‐for result) and selective publication (ie, journals' preference for publishing positive results).5, 6, 7, 8 As a first step toward addressing these concerns, ISPOR and the International Society for Pharmacoepidemiology (ISPE) created a task force to make recommendations regarding good procedural practices that would enhance decision makers' confidence in evidence derived from RWD studies.
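Why data dredging is a concern can be illustrated with an idealized calculation. Assume (for illustration only; these assumptions are ours, not the task force's) k independent analyses of a treatment that truly has no effect, each tested at significance level alpha = 0.05. The probability that at least one analysis appears "significant" is then 1 - (1 - alpha)^k. The short Python sketch below evaluates this formula; the function name is hypothetical, and real analyses of a single data set are usually correlated, so the figures are an idealization rather than an estimate for any particular study.

```python
def prob_at_least_one_false_positive(k: int, alpha: float = 0.05) -> float:
    """Chance that at least one of k independent analyses of a truly null
    treatment effect reaches p < alpha (the family-wise error rate)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    # Each null analysis stays non-significant with probability (1 - alpha);
    # under independence, all k stay non-significant with probability (1 - alpha) ** k.
    return 1.0 - (1.0 - alpha) ** k


# Hypothetical numbers of analyses run on the same question
for k in (1, 5, 10, 20):
    print(f"{k:>2} analyses: {prob_at_least_one_false_positive(k):.3f}")
```

Under these assumptions the loop prints 0.050, 0.226, 0.401 and 0.642: with 20 unplanned analyses, a spurious "positive" finding becomes more likely than not, which is the risk that a prespecified hypothesis and analysis plan is meant to contain.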

We define good procedural practices (or good “study hygiene”) as policies about the planning, execution, and dissemination of RWD studies that help to assure the public of the integrity of the research process and enhance confidence in the RWE produced from RWD studies. Journal editors and regulatory agencies have established good procedural practices for pre‐approval RCTs, including study registration of trials on a public website prior to their conduct, the completion of an a priori protocol and data analysis plan, accountability for documenting any changes in study procedures, and the expectation that all RCT results will be made public.9, 10 A statement of complementary practices for RWD studies of treatment effectiveness is lacking. Our report aims to fill this gap.

We differentiate 2 categories of RWD studies aiming to provide data on treatment effectiveness that may differ in their procedural approach:

Exploratory Treatment Effectiveness Studies. These studies typically do not hypothesize the presence of a specific treatment effect and/or its magnitude. They primarily serve as a first step to learn about possible treatment effectiveness. While the quality of exploratory studies may be high and enrich our understanding, the process of conducting exploratory studies is generally less preplanned and allows for process adjustments as investigators gain knowledge of the data.

Hypothesis Evaluating Treatment Effectiveness (HETE) Studies. These studies evaluate the presence or absence of a prespecified effect and/or its magnitude. The purpose of a HETE study is to test a specific hypothesis in a specific population. When evaluated in conjunction with other evidence, the results may lead to treatment recommendations by showing, for example, whether a treatment effect observed in RCTs is also observed in the real world, where low adherence and other factors may alter treatment effectiveness.

We note that both exploratory and HETE studies can provide important insights based on clinical observations, evolving treatment paradigms, and other scientific insights. However, the focus of the recommendations in this report is good procedural practices for HETE studies (ie, studies with explicit a priori hypotheses).

We recognize that procedural integrity is necessary but not sufficient for including RWD studies in the body of evidence that health policy makers use to make a decision. Other factors also play a role, including the study design, adjudication of outcomes (if relevant), the transparency and methodological rigor of the analyses, and the size of the treatment effect. Nonetheless, this report focuses on the procedural practices that enhance the trustworthiness of the larger research process, particularly for studies of the effectiveness or comparative effectiveness of pharmaceutical and biologic treatments.

The scope of this report does not, by design, directly address several important considerations when RWD studies are reviewed by decision makers:

Good practices for the design, analysis, and reporting of observational RWD studies (ie, methodological standards). These issues have been addressed by ISPOR, ISPE, the US Food and Drug Administration (FDA), the European Medicines Agency, the European Network of Centres for Pharmacoepidemiology and Pharmacovigilance (ENCePP®), and the European Network for Health Technology Assessment.11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23 Their recommendations aim to improve the validity and relevance of RWD studies by adequately addressing methodological issues including confounding, bias, and uncertainty, which are the explicit objections raised by payers and regulatory bodies to using RWD studies to inform decision making.

Good practices for the conduct of pragmatic clinical trials (pRCTs or PCTs). pRCTs are randomized trials with varying amounts of pragmatic (real world) elements, including the use of electronic health records or claims data to obtain study outcomes.24

We take no position on whether, and in which context, policy makers may deem observational studies sufficiently transparent to routinely support initial regulatory approval and reimbursement.

The focus of this report is RWE examining the effectiveness of pharmaceutical or biologic therapies. Although the recommendations of this report will have relevance for all types of therapeutic health technologies, we recognize that applying these recommendations to technologies other than drugs (eg, medical devices) might require further refinement because of differences in the evidence required for regulatory approval.25

The current report, led by ISPOR, is one product of the joint ISPOR‐ISPE Special Task Force. A second report, where ISPE took the lead, addresses transparency in the reporting of the implementation of RWD studies; this is a requirement for designing and executing studies to reproduce the results of RWD studies.26 The purposes of these 2 reports are complementary. They address several key aspects of transparency in overall study planning and procedural practices (ie, study hygiene) and transparent implementation of studies to facilitate study reproducibility. Along with available guidance documents on transparently reporting study results, these 2 reports aim to provide guidance that will ultimately lead to increased confidence in using RWE for decision making in health care.18, 27, 28 We see our recommendations as the beginning of a public discussion that will provide an impetus for all parties to converge on broadly agreed practices. Our intended audiences include all relevant stakeholders globally in the generation and use of RWE including researchers, funders of research, patients, payers, providers, regulatory agencies, systematic reviewers, and HTA authorities. This report may also inform related current science policy initiatives in the United States and Europe, including FDA initiatives to expand use of RWE in drug labeling, FDA draft guidance regarding using RWE for medical devices, the 21st Century Cures legislation and Adaptive Pathways considerations.29, 30, 31, 32

2. RECOMMENDATIONS AND RATIONALES

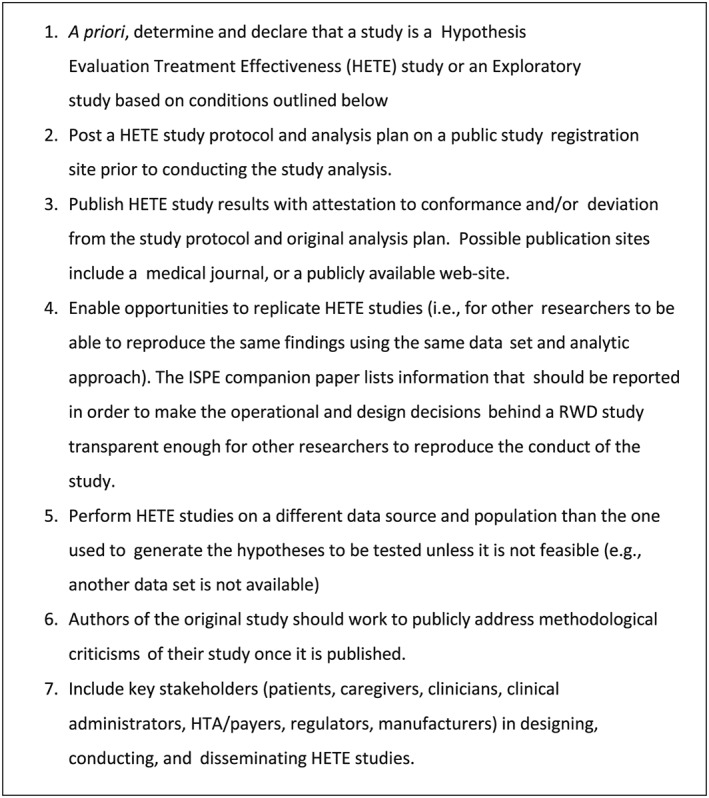

Our recommendations for good procedural practices for HETE studies are summarized in Figure 1; they are presented, along with the rationales for these recommendations, below:

-

1

A priori, determine and declare that study is a “HETE” or “exploratory” study based on conditions we outline below.

Figure 1.

Recommendations for good procedural practices for Hypothesis Evaluating Treatment Effectiveness Studies

A HETE RWD study that aims to assess treatment effectiveness or comparative effectiveness is intended to test a specific hypothesis in a specific population. It is analogous to a confirmatory clinical trial, which the International Conference on Harmonisation (ICH) has defined, for drug regulation purposes, as “an adequately controlled trial in which the hypotheses are stated in advance and evaluated”; the concept has also been discussed in other experimental contexts.33, 34, 35

We recommend disclosing the rationale for the research question and for the study hypothesis to improve transparency and provide a basis for interpreting the study results (a compelling hypothesis increases the probability that the results are true). Study hypotheses may be derived from a variety of sources: an exploratory data analysis on other RWD sources, meta‐analyses or reanalyses (possibly on subgroups) of RCT data that reveal gaps in the evidence, results of other observational studies, changes in clinical practice, clinician or patient perceptions, expert opinion, decision models, or the underlying basic science. If the source was an exploratory analysis of a real‐world data set, that data set should be identified. The rationale for choosing the source of RWD for the HETE study should also be described.

2. Post a HETE study protocol and analysis plan on a public study registration site prior to conducting the study analysis.

The posting of a study protocol and analysis plan on a public registration site provides researchers with the opportunity to publicly declare the “intent” of the study—exploratory or hypothesis evaluation—as well as basic information about the study. Registration in advance of beginning a study is a key step in reducing publication bias because it allows systematic reviewers to assemble a more complete body of evidence by including studies that were partially completed or were inconclusive and therefore less likely to be published in a journal. For transparency, posting of exploratory study protocols is strongly encouraged.

A number of options are available for registering observational studies, including the EU Post‐authorisation Study Register, ClinicalTrials.gov, and HSRProj.23, 36, 37 ClinicalTrials.gov was started by the National Library of Medicine of the National Institutes of Health as a registry of publicly and privately supported clinical studies, so that information about trials and other clinical studies of health technologies would be publicly available. The site was designed for RCTs; some observational studies have been registered there, but the process is not straightforward. It may be possible to modify the site to facilitate the registration of observational studies. In 1995, the National Library of Medicine began a similar effort, HSRProj, to provide information on health services research (which includes RWD studies). HSRProj is a free, openly accessible, searchable database of information on ongoing and recently completed health services and policy research projects. Although the stated purpose of HSRProj is to provide “information on newly funded projects before outcomes are published,” registration records general information about study protocols. The EU Post‐authorisation Study Register, hosted by ENCePP, is a publicly available register of non‐interventional post‐authorization studies, with a focus on observational studies, either prospective or retrospective; it accepts study protocols and study results. The University hospital Medical Information Network Clinical Trials Registry (UMIN‐CTR), hosted by the University hospital Medical Information Network Center in Japan, is also a publicly available register of both observational and interventional studies.38 In addition to these sites, health researchers should consider study registration sites developed by social scientists, who have also developed a formal framework for describing research designs.39 We recognize that none of the current options is ideal and that the choice of registry will require further discussion among stakeholders.

3. Publish HETE study results with attestation to conformance with and/or deviation from the original analysis plan. Possible publication sites include a medical journal or a publicly available website.

Full and complete reporting of HETE studies, along with the initial declaration of their hypothesis‐evaluation intent and registration of the study protocol, is an important step toward earning the confidence of decision makers. Along with study registration, we recommend publishing HETE study results together with the study protocol, as proposed in Wang et al.26, 40 Comprehensive reporting involves several elements. Seek to publish a HETE study in a venue that the public can access easily, preferably as a peer‐reviewed full manuscript or, if it is not published in a journal, on a publicly available website. Any publication must attest to any deviation from the study protocol or the original data analysis plan, detailing the modified elements as they appeared in the original protocol and in the final protocol. Some journals and funder websites now publish study protocols. The publication should follow reporting guidelines (the STROBE and RECORD statements for observational studies and the SPIRIT recommendations for the content of a clinical trial protocol) and should make public the study design and operational study results, as outlined in the ISPE‐led companion report.26, 27, 40, 41 The coding rules and study parameters that generated the study results should be made publicly available.

4. Enable opportunities for replication of HETE studies whenever feasible (ie, for other researchers to be able to reproduce the same findings using the same data set and analytic approach).

Full transparency in design and operational parameters, data sharing, and open access in clinical research will not only increase confidence in the results but will also foster the reuse of clinical data.40 This is discussed in more detail in the ISPE‐led companion paper. The feasibility of replication will depend on whether the data set can be made available to independent researchers.

5. Perform HETE studies on a different data source and population than the one used to generate the hypotheses to be tested, unless it is not feasible.

When a clinically significant finding emerges from an exploratory analysis of observational data or a formal exploratory RWD study, good practice requires that a HETE study analyze a different data source and population; otherwise, the HETE analysis risks replicating a finding that is specific to a given data source or population. Even so, confirmation in a second, different database does not guarantee validity.42, 43 In some situations, replication in another data source is impossible for practical reasons. For example, if an association with a rare outcome was detected in the FDA Sentinel data infrastructure of >160 million lives, one would be hard pressed to find an equally sized high‐quality data source more representative of the at‐risk population. In other situations, using the same data set may be appropriate. If the study hypothesis has been sufficiently sharpened on the basis of a signal from an analysis of a subsample of the data set used for an exploratory study, and no other data sets are available, then the same data source may be considered for hypothesis evaluation.44 Because many consider this practice a departure from good science, a publication should acknowledge the risks involved in acting upon the results. In such cases, the report should describe thoroughly how and why the data analysis plan evolved.

6. Authors of the original study should work to publicly address methodological criticisms of their study once it is published.

Public discussion of disagreements regarding methodology is important both to the credibility of RWD studies and to advancing the field of observational research. Authors may want to collaborate on a reanalysis with the colleagues raising the criticism; in other cases, they may make the needed information or data available to facilitate reanalysis. Publishing the criticisms, together with the authors' responses or any reanalyses based on them, in a journal or on a public website would be useful.

7. Include key stakeholders (eg, patients, caregivers, clinicians, clinical administrators, HTA/payers, regulators, and manufacturers) in designing, conducting, and disseminating the research.

Many would agree that stakeholder involvement, particularly (but not only) of patients, helps ensure that RWD studies address questions that are meaningful to those who will be affected by, or must make decisions based on, the study's results (eg, Kirwin et al44 and Selby et al45). However, the participation of stakeholders in research is evolving, and best practices are still emerging. The best way to involve stakeholders is to be clear about the intent of stakeholder engagement, particularly for RWD studies. Investigators should consult regulators, HTA authorities/payers, clinicians, and/or patients on study design, survey instruments, outcome measures, and strategies for recruitment and dissemination, via advisory panel meetings, review of protocols, or other means. The specific consultative needs will depend on the intended use of the study, the end points involved, the novelty of the approach, the perceived reliability of the data, and other factors. The experience of the Patient‐Centered Outcomes Research Institute is useful in this regard.46, 47, 48

3. DISCUSSION

In health care, RWD studies are often the only source of evidence about the effects of a health care intervention in community practice and should be included in the body of evidence considered in health care decision making. Effective health care decision making relies not only on good procedural practices, as described in the Recommendations above, but also on methodologically sound science, transparency of study execution as discussed in the companion ISPE report, and appropriate interpretation of the evidence. Decision makers have to evaluate RWD study results in light of the usual criteria for causal inference.49 Decision makers who embrace evidence‐based medicine will look to the best available information on effectiveness, which will mean results from RCTs, RWD HETE studies, and, rarely, RWD exploratory studies. Prudence dictates that caution be exercised when using RWD studies as part of the evidence considered for informing policy decisions. Particularly for exploratory RWD studies, decision makers should expect greater detail regarding study procedures and conduct; they should thoroughly understand how and why the analysis deviated from the original protocol and how the data analysis plan evolved. A publication should acknowledge the risks involved in acting upon the results. Study registration brings greater transparency; therefore, greater credibility should be given to studies that are registered prior to their conduct, whether exploratory or HETE.

To date, lack of confidence in observational research (eg, RWD studies) has slowed the uptake of RWE into policy,50 and many of the concerns have focused on methodological issues, which deserve a brief mention. Threats to the validity of RWD studies of the effects of interventions include unmeasured confounding, measurement error, missing data, model misspecification, selection bias, and fraud.51 Scholarly journals publish many observational studies, often without requiring the authors to report a thorough exploration of these threats to validity. Unless the authors identify these threats and explain how they could bias the study results, consumers of observational studies must trust the study authors to have conducted the study with integrity and reported it transparently. Of equal concern for decision makers is the perceived ease with which RWD study results can be selectively chosen and published.

Establishing study registration as an essential element in clinical research will discourage the practice of ad hoc data mining and selective choice of results that can occur in observational health care studies.52 However, from the strict point of view of scientific discovery, study registration per se may be neither completely necessary (methodologically sound studies with large effect sizes may be useful whether or not they were registered before being conducted) nor sufficient (study registration does not guarantee quality or prevent scientific fraud). Nevertheless, when possible we recommend study registration, because a public record of all research on a topic would reduce the effect of publication bias, which in turn would significantly augment the credibility of a body of evidence. Registration also encourages deliberation, planning, and accountability, which enhance the trustworthiness of the research enterprise itself.

Even when researchers use sound methods and good procedural practices, the ultimate responsibility falls on decision makers to interpret study results for relevance and credibility; a joint ISPOR‐AMCP‐NPC Good Practices Report specifically addresses this task.17 For example, in large observational databases, even small effect sizes may be statistically significant but not clinically relevant to decision makers.
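The point about large databases can be made concrete with a hypothetical calculation. The Python sketch below applies a standard pooled two‐proportion z‐test (a technique chosen here only for illustration, not one prescribed by this report) to an invented 0.2 percentage‐point difference in outcome risk (10.0% vs 10.2%) at increasing sample sizes. The function name and all numbers are assumptions made for the example.

```python
import math


def two_proportion_z_test(events_a: int, n_a: int,
                          events_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z statistic, two-sided p-value)."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    # Pooled risk under the null hypothesis of no difference between groups
    pooled = (events_a + events_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


# Hypothetical risks of 10.0% vs 10.2% with equal group sizes
for n in (1_000, 100_000, 1_000_000):
    z, p = two_proportion_z_test(n // 10, n, n * 102 // 1000, n)
    print(f"n per group = {n:>9,}: z = {z:5.2f}, p = {p:.2g}")
```

With 1,000 patients per group the difference is far from statistically significant (p is about 0.88), whereas with 1,000,000 per group the same, arguably clinically trivial, difference gives p < 0.001. Whether 0.2 percentage points matters is a clinical judgment that the p‐value alone cannot settle.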

As detailed in the ISPE companion paper, sharing of data sets, programming code, and transparent reporting about key decisions and parameters used in study execution enhances the credibility of the clinical research enterprise.26 The proprietary nature of some data, the intellectual property embodied in the full programming code, and privacy concerns are realities; however, clear reporting about the parameters used in study execution could provide transparency. Given the feasibility challenges with data sharing, alternative solutions to facilitate reproducibility and transparency may need to be explored.

While regulatory bodies typically accept RWD studies for the assessment of adverse treatment effects, we believe that concerns about study integrity are part of the reason for the relatively limited adoption of RWD studies as credible evidence for beneficial treatment effects. Indeed, in the future, if not now, post‐approval RWD studies will generate the majority of the information about the benefits and harms associated with therapies. The current situation is not optimal because RCTs cannot answer all policy‐relevant questions and typically have limited external validity. Moreover, RCTs have become increasingly expensive and difficult to execute successfully. In addition, the increased focus on identifying the predictors of heterogeneity in treatment response requires data sets far larger than typical RCTs can provide. RWE based on RWD studies in typical clinical practice is critical to the operation of a learning health system, providing timely insights into what works best, for whom, and when, and informing the development of new applications for existing technology.

We recognize that some of the recommendations in this report may not be widely adopted without appropriate incentives from decision makers, journal editors, and other key stakeholders. It is beyond the scope of this report to suggest what appropriate incentives might be. A stakeholder meeting is being planned for October 2017 by ISPOR to begin a process of dissemination of these recommendations and to elicit input regarding a variety of issues including what would be the best venue for study registration and what might be appropriate incentives for encouraging adherence to the recommendations.

We believe that the recommendations of this ISPOR/ISPE task force can, in concert with earlier recommendations about study methodology, provide a trustworthy foundation for the expanded use of RWE in health care decision making.

ETHICS STATEMENT

The authors state that no ethical approval was needed.

DISCLAIMER

The views expressed in this article are the personal views of the authors and may not be understood or quoted as being made on behalf of or reflecting the position of the agencies or organizations with which the authors are affiliated.

ACKNOWLEDGEMENT

No financial support was received for this work. We would like to acknowledge the helpful comments of the following: Shohei Akita, Rene Allard, Patrizia Allegra, Tânia Maria Beume, Lance Brannman, Michael Cangelosi, Hanna Gyllensten, Gillian Hall, Kris Kahler, Serena Losi, Junjie Ma, Dan Malone, Kim McGuigan, Brigitta Monz, Dominic Muston, Eberechukwu Onukwugha, Lisa Shea, Emilie Taymor, Godofreda Vergeire‐Dalmacion, David van Brunt, Martin van der Graaff, Carla Vossen, and Xuanqian Xie.

Berger ML, Sox H, Willke RJ, et al. Good practices for real‐world data studies of treatment and/or comparative effectiveness: Recommendations from the joint ISPOR‐ISPE Special Task Force on real‐world evidence in health care decision making. Pharmacoepidemiol Drug Saf. 2017;26:1033–1039. https://doi.org/10.1002/pds.4297

Marc L. Berger is a self‐employed part‐time consultant.

This article is a joint publication by Pharmacoepidemiology and Drug Safety and Value in Health.

REFERENCES

- 1. Garrison LP, Neumann PJ, Erickson P, Marshall D, Mullins CD. Using real‐world data for coverage and payment decisions: The ISPOR real‐world data task force report. Value Health. 2007;10(5):326‐335.

- 2. Makady A, de Boer A, Hillege H, Klungel O, Goettsch W. What is real‐world data? A review of definitions based on literature and stakeholder interviews. Value Health. 2017;20(7):858‐865.

- 3. Makady A, Ham RT, de Boer A, Hillege H, Klungel O, Goettsch W. GetReal Workpackage. Policies for use of real‐world data in health technology assessment (HTA): A comparative study of six HTA agencies. Value Health. 2017;20(4):520‐532.

- 4. Sherman RE, Anderson SA, Dal Pan GJ, et al. Real‐world evidence—what is it and what can it tell us? N Engl J Med. 2016;375(23):2293‐2297.

- 5. Brixner DI, Holtorf AP, Neumann PJ, Malone DC, Watkins JB. Standardizing quality assessment of observational studies for decision making in health care. J Manag Care Pharm. 2009;15(3):275‐283. Available at: http://www.amcp.org/data/jmcp/275‐283.pdf

- 6. U.S. Food and Drug Administration. Guidance for industry and FDA staff. Best practices for conducting and reporting pharmacoepidemiologic safety studies using electronic healthcare data. May 2013. Available at: http://www.fda.gov/downloads/drugs/guidancecomplianceregulatoryinformation/guidances/ucm243537.pdf. Accessed December 10, 2015.

- 7. The PLOS Medicine Editors. Observational studies: Getting clear about transparency. PLoS Med. 2014;11(8):e1001711. https://doi.org/10.1371/journal.pmed.1001711

- 8. Segal JB, Kallich JD, Oppenheim ER, et al. Using certification to promote uptake of real‐world evidence by payers. J Manag Care Pharm. 2016;22(3):191‐196.

- 9. International Committee of Medical Journal Editors. http://www.icmje.org/recommendations/browse/publishing‐and‐editorial‐issues/clinical‐trial‐registration.html (accessed July 6, 2017)

- 10. U.S. Food and Drug Administration. https://clinicaltrials.gov/ct2/manage‐recs/fdaaa (accessed July 6, 2017)

- 11. Motheral B, Brooks J, Clark MA, et al. A checklist for retroactive database studies—report of the ISPOR task force on retrospective databases. Value Health. 2003;6(2):90‐97.

- 12. Berger ML, Mamdani M, Atkins D, Johnson ML. Good research practices for comparative effectiveness research: Defining, reporting and interpreting nonrandomized studies of treatment effects using secondary data sources: the ISPOR good research practices for retrospective database analysis task force report—part I. Value Health. 2009;12(8):1044‐1052.

- 13. Cox E, Martin BC, Van Staa T, Garbe E, Siebert U, Johnson ML. Good research practices for comparative effectiveness research: Approaches to mitigate bias and confounding in the design of non‐randomized studies of treatment effects using secondary data sources: The ISPOR good research practices for retrospective database analysis task force—part II. Value Health. 2009;12(8):1053‐1061.

- 14. Johnson ML, Crown W, Martin BC, Dormuth CR, Siebert U, Normand SL. Good research practices for comparative effectiveness research: analytic methods to improve causal inference from nonrandomized studies of treatment effects using secondary data sources: The ISPOR good research practices for retrospective database analysis task force report—part III. Value Health. 2009;12:1062‐1073.

- 15. Berger ML, Dreyer N, Anderson F, Towse A, Sedrakyan A, Normand SL. Prospective observational studies to assess comparative effectiveness: the ISPOR good research practices task force report. Value Health. 2012;15:217‐230. [DOI] [PubMed] [Google Scholar]

- 16. Garrison LP, Towse A, Briggs A, et al. Performance‐based risk‐sharing arrangements—good practices for design, implementation, and evaluation: report of the ISPOR good practices for performance‐based risk‐sharing arrangements task force. Value Health. 2013;16:703‐709.

- 17. Berger M, Martin B, Husereau D, et al. A questionnaire to assess the relevance and credibility of observational studies to inform healthcare decision making: an ISPOR‐AMCP‐NPC good practice task force report. Value Health. 2014;17(2):143‐156.

- 18. Public Policy Committee, International Society for Pharmacoepidemiology. Guidelines for good pharmacoepidemiology practices (GPP). Pharmacoepidemiol Drug Saf. 2016;25:2‐10.

- 19. US Food and Drug Administration. Guidance for industry and FDA staff: Best practices for conducting and reporting pharmacoepidemiologic safety studies using electronic healthcare data. May 2013. http://www.fda.gov/downloads/Drugs/GuidanceComplianceRegulatoryInformation/Guidances/UCM243537.pdf

- 20. US Food and Drug Administration. Guidance for industry: Good pharmacovigilance practices and pharmacoepidemiologic assessment. March 2005. http://www.fda.gov/downloads/regulatoryinformation/guidances/ucm126834.pdf

- 21. European Medicines Agency (EMA). Guideline on good pharmacovigilance practices (GVP) module VIII: post‐authorisation safety studies (Rev 2). August 2016. http://www.ema.europa.eu/docs/en_GB/document_library/Scientific_guideline/2012/06/WC500129137.pdf

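- 22. European Network for Health Technology Assessment (EUnetHTA). JA2 methodological guideline: internal validity of non‐randomised studies (NRS) on interventions. http://www.eunethta.eu/outputs/publication-ja2-methodological-guideline-internal-validity-non-randomised-studies-nrs-interv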

- 23. European Network of Centres for Pharmacoepidemiology and Pharmacovigilance (ENCePP). The European Union electronic register of post‐authorisation studies (EU PAS Register). http://www.encepp.eu/encepp_studies/indexRegister.shtml (accessed July 13, 2017).

- 24. Ford I, Norrie J. Pragmatic trials. N Engl J Med. 2016;375:454‐463.

- 25. Drummond M, Griffin A, Tarricone R. Economic evaluation for devices and drugs: same or different? Value Health. 2009;12(4):402‐404.

- 26. Wang SV, Brown J, Gagne JJ, Rassen JA, Klungel O, Douglas I, Smeeth L, Nguyen M, Sturkenboom M, Berger M, Eichler HG, Mullins D, Gini R, Schneeweiss S. Reporting to facilitate assessment of validity and improve reproducibility of healthcare database analyses 1.0. Working paper (May 3, 2017).

- 27. Benchimol EI, Smeeth L, Guttmann A, et al.; RECORD Working Committee. The REporting of studies Conducted using Observational Routinely‐collected health Data (RECORD) statement. PLoS Med. 2015;12(10):e1001885. https://doi.org/10.1371/journal.pmed.1001885

- 28. Vandenbroucke JP, von Elm E, Altman DG, et al. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): Explanation and elaboration. PLoS Med. 2007;4(10):e297. https://doi.org/10.1371/journal.pmed.0040297

- 29. US Food and Drug Administration. https://www.fda.gov/downloads/medicaldevices/deviceregulationandguidance/guidancedocuments/ucm513027.pdf (accessed April 29, 2017).

- 30. Tarricone R, Torbica A, Drummond M, Schreyögg J. Assessment of medical devices: Challenges and solutions. Health Econ. 2017;26(S1):1‐152 (accessed April 29, 2017).

- 31. US Congress. 21st Century Cures Act, H.R. 34, 114th Congress. https://www.congress.gov/bill/114th-congress/house-bill/34 (accessed April 29, 2017).

- 32. Eichler HG, Baird LG, Barker R, et al. From adaptive licensing to adaptive pathways: Delivering a flexible life‐span approach to bring new drugs to patients. Clin Pharmacol Ther. 2015;97(3):234‐246. https://doi.org/10.1002/cpt.59

- 33. International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use (ICH). ICH Harmonised Tripartite Guideline: Statistical Principles for Clinical Trials E9. February 5, 1998.

- 34. Wagenmakers EJ, Wetzels R, Borsboom D, van der Maas HL, Kievit RA. An agenda for purely confirmatory research. Perspect Psychol Sci. 2012;7(6):632‐638. https://doi.org/10.1177/1745691612463078

- 35. Kimmelman J, Mogil JS, Dirnagl U. Distinguishing between exploratory and confirmatory preclinical research will improve translation. PLoS Biol. 2014;12(5):e1001863.

- 36. ClinicalTrials.gov. https://clinicaltrials.gov (accessed March 21, 2017).

- 37. HSRProj. https://wwwcf.nlm.nih.gov/hsr_project/home_proj.cfm (accessed March 21, 2017).

- 38. University Hospital Medical Information Network Clinical Trials Registry (UMIN‐CTR). http://www.umin.ac.jp/ctr/index.htm (accessed July 5, 2017).

- 39. Blair G, Cooper J, Coppock A, Humphreys M. Declaring and diagnosing research designs. 2006. https://declaredesign.org/paper.pdf (accessed July 5, 2017).

- 40. Wang SV, Schneeweiss S, on behalf of the joint ISPE‐ISPOR Special Task Force on Real World Evidence in Health Care Decision Making. Reporting to improve reproducibility and facilitate validity assessment for healthcare database studies V1.0. Pharmacoepidemiol Drug Saf. 2017;26(9):1018‐1032.

- 41. Chan AW, Tetzlaff JM, Altman DG, et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med. 2013;158(3):200‐207.

- 42. Madigan D, Ryan P, Schuemie M, et al. Evaluating the impact of database heterogeneity on observational studies. Am J Epidemiol. 2013. https://doi.org/10.1093/aje/kwt010

- 43. Walker AM. Orthogonal predictions: Follow‐up questions for suggestive data. Pharmacoepidemiol Drug Saf. 2010;19(5):529‐532.

- 44. Kirwan JR, de Wit M, Frank L, et al. Emerging guidelines for patient engagement in research. Value Health. 2017;20(3):481‐486.

- 45. Selby JV, Forsythe LP, Sox HC. Stakeholder‐driven comparative effectiveness research: an update from PCORI. JAMA. 2015;314:2335‐2336.

- 46. Forsythe LP, Frank LB, Workman TA, Hilliard T, Harwell D, Fayish L. Patient, caregiver and clinician views on engagement in comparative effectiveness research. J Comp Eff Res. Epub ahead of print February 8, 2017. https://doi.org/10.2217/cer-2016-0062. PMID: 28173732.

- 47. Forsythe LP, Frank LB, Workman TA, Borsky A, Hilliard T, Harwell D, Fayish L. Health researcher views on comparative effectiveness research and research engagement. J Comp Eff Res. Epub ahead of print February 8, 2017. https://doi.org/10.2217/cer-2016-0063. PMID: 28173710.

- 48. Frank LB, Forsythe LP, Workman TA, Hilliard T, Lavelle M, Harwell D, Fayish L. Patient, caregiver and clinician use of comparative effectiveness research findings in care decisions: Results from a national study. J Comp Eff Res. Epub ahead of print February 8, 2017. https://doi.org/10.2217/cer-2016-0061. PMID: 28173724.

- 49. Hill AB. Observation and experiment. N Engl J Med. 1953;248:995‐1001.

- 50. IMI GetReal. D1.2: Current policies and perspectives. https://imigetreal.eu/Portals/1/Documents/01%20deliverables/GetReal%20D1.2%20Current%20Policies%20and%20Perspectives%20Final_webversion.pdf

- 51. Ioannidis JP. Why most published research findings are false. PLoS Med. 2005;2(8):e124.

- 52. Simmons JP, Nelson LD, Simonsohn U. False‐positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychol Sci. 2011;22(11):1359‐1366.