Abstract

We have developed a vision-based program, named X-FIDO (Xylella FastIdiosa Detector for O. europaea L.), to detect symptoms of Olive Quick Decline Syndrome (OQDS) on leaves of Olea europaea L. infected by Xylella fastidiosa. Previous work predicted disease from leaf images with deep learning but required a vast amount of data, which was obtained via crowdsourcing projects such as PlantVillage. This approach has limited applicability when samples need to be tested with traditional methods (e.g., PCR) to avoid incorrect training input, or for quarantine pests whose manipulation is restricted. In this paper, we demonstrate that transfer learning can be leveraged when it is not possible to collect thousands of new leaf images. Transfer learning is the re-application of an already trained deep learner to a new problem. We present a novel algorithm for fusing data at different levels of abstraction to improve the performance of the system. The algorithm discovers low-level features from raw data to automatically detect veins and colors that lead to symptomatic leaves. The experiment included images of 100 healthy leaves, 99 X. fastidiosa-positive leaves, and 100 X. fastidiosa-negative leaves with symptoms related to other stress factors (i.e., abiotic factors such as water stress, or other diseases). The program detects OQDS with an accuracy of 98.60 ± 1.47% in testing, showing great potential for image analysis of this disease. Results were obtained with a convolutional neural network trained with the stochastic gradient descent method, over ten trials with a 75/25 split of training and testing data. This work shows potential for massive screening of plants with reduced diagnosis time and cost.

Keywords: convolutional neural networks, deep learning, machine vision, transfer learning, Olea europaea, Xylella fastidiosa

Introduction

Olive trees are among the most cultivated plants in the world, with 10.2 million hectares planted in 2014 (FAO, 2017). In 2013, Xylella fastidiosa, a quarantine pathogen known as the causal agent of devastating diseases such as Pierce’s disease and Citrus variegated chlorosis (Chatterjee et al., 2008; Janse and Obradovic, 2010), was discovered in the Apulia region of Italy (Saponari et al., 2013). The pathogen (X. fastidiosa subsp. pauca strain CoDiRO) was associated with Olive Quick Decline Syndrome (OQDS, or Complesso del Disseccamento Rapido dell’Olivo, CoDiRO), which is causing the collapse and death of entire olive groves in some Apulian districts. Symptoms can vary but, in general, the disease presents as leaf scorching, drying, wilting and eventual death. In olive trees, tissue desiccation starts at the tip of the leaves and progresses toward the petiole, soon extending to the whole blade (Martelli, 2016). The first visual symptoms in an infected tree occur between 3 and 18 months after initial infection, depending on the time of year, tree age and variety. In olive trees, this latent period (the period between infection and the appearance of symptoms) will likely give the pathogen ample time to spread far from the initial point of introduction before it is detected; thus, large-scale monitoring is desirable. Several diagnostic protocols have been tested for the CoDiRO strain (Luvisi et al., 2017), such as ELISA (Loconsole et al., 2014), PCR (Minsavage et al., 1994; Guan et al., 2015), direct tissue blot immunoassay (Djelouah et al., 2014) and loop-mediated isothermal amplification (LAMP) (Harper et al., 2010; Yaseen et al., 2015). Two real-time PCR protocols are also available (Francis et al., 2006; Harper et al., 2010).
However, large-scale monitoring such as that carried out in Apulia (Martelli, 2016) requires screening plants to collect samples from symptomatic individuals; otherwise, the erratic distribution of the pathogen in the host may reduce the detection effectiveness of diagnostic tools. Presently, to detect OQDS, a human expert inspects the whole canopy (which may be considerable in size for centenary trees) for symptoms. Because the disease symptoms mainly appear visually on the leaves of an infected plant, computer vision has the potential to provide an effective and fast method for detecting leaf scorch (Luvisi et al., 2016).

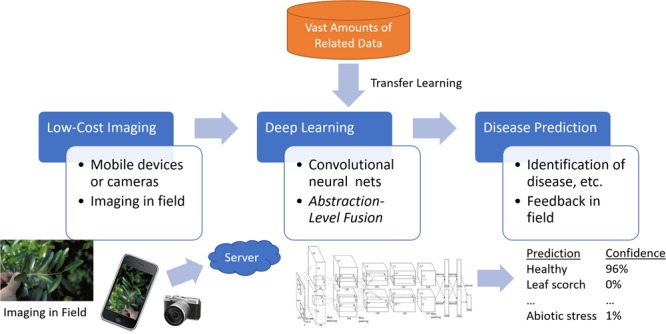

We propose a system that would enable growers to take photos of a possibly affected plant with their mobile device, upload the image from the mobile device or computer, have the image processed remotely through the cloud by a deep learning system, and receive a prompt diagnosis of the specimen (Figure 1).

FIGURE 1.

General system overview. A picture of an affected leaf is processed remotely by a server using deep learning. The user receives a report on the diagnosis of the specimen in the field.

In the following, we give a brief overview of the historical basis for quantitative analysis of plants, the development of image-based pattern recognition systems for processing leaves, and the impact of deep learning on pattern recognition systems that identify plants and detect diseases. The earliest work on the mathematical analysis of plants dates to Fisher (1936), who used floral measurements and discriminant analysis to determine the taxonomy of Iris plants. With the advent of LANDSAT technology in the 1970s, growers were able to quantify the health of whole fields of crops at a glance using satellite imagery (Wiegand et al., 1986). Computer methods for the analysis of individual plant specimens appear in the early 2000s. Haiyan and He (2005) combined backpropagation neural networks (artificial neural networks loosely inspired by the firing of synapses in the human brain) with expert systems (early AI methods based on IF-THEN rules) to pinpoint the origin of nutritional disorders in an orchard. Cunha (2003) applied computer vision techniques to analyze plant leaves imaged with a digital scanner; the algorithms automatically calculated morphological properties of the leaf such as area, perimeter, holes, width and length. One of the earliest works to automatically identify plant species from leaf images is a 2001 thesis by Söderkvist (2001), which used a variety of feature extraction methods (circularity of shape, wavelet transforms, Fourier descriptors, Hu’s moments, etc.) and machine learning methods (support vector machines and backpropagation; Chang and Lin, 2011). Backpropagation had, at best, mixed success. The data for this work were publicly released, and the 1,125 leaf images continue to be used as a benchmark dataset.

By the late 2000s, pattern recognition systems were being proposed that were much more successful at automatically identifying plant species and the presence of disease from leaf images. Pattern recognition is the field of study in which a computer automatically predicts the properties of an object from its percepts. The approaches can be categorized into two methodologies: local appearance methods and shape methods. In local appearance methods, the image is processed on a pixel-by-pixel basis, and the behavior of a local neighborhood is used to characterize each pixel. Often, a method will quantify the gradient pattern, i.e., the strength and direction of the edge at the local pixel. Nilsback and Zisserman (2008) characterized flower features with the Histogram of Oriented Gradients algorithm (Chen et al., 2014) and the Scale-Invariant Feature Transform (SIFT) (Liu et al., 2015), and a support vector machine predicted the species of a flower amongst 103 different categories. Xiao et al. (2010) applied Histogram of Oriented Gradients with Maximum Margin Criterion, an algorithm based on discriminant analysis that reduces the number of features used by the machine learning algorithm. The algorithm was tested on Söderkvist’s Swedish leaf dataset (Söderkvist, 2001) and the Intelligent Computing Laboratory’s (ICL) plant dataset of 17,032 leaf images of 220 different species. In shape methods, also known as segmentation and morphological-based methods, a segmentation algorithm pre-processes the leaf image to obtain an outline of the leaf. Shape features such as circularity and area are derived from the outline, and a machine learning algorithm predicts the disease or species from these features. Munisami et al. (2015) segmented leaf images with Otsu’s algorithm (Baxi and Vala, 2013), calculated leaf length, width, area, perimeter, hull area, axis length and centroid, and applied a k-nearest neighbors (k-NN) classifier for prediction. The system was tested on 640 images of 32 different plant species.

These methods follow the traditional paradigm of selecting a feature representation based on knowledge of the problem domain. Generally, an image-based pattern recognition system (a) transforms an image into a feature representation that best characterizes the relevant properties of an object, and (b) applies a machine learning algorithm that carries out the recognition task based on that representation. Selecting relevant features in step (a) is difficult, and features are often chosen by empirical analysis. The resulting system is ad hoc: it will not scale to the detection of other diseases or species, or even accommodate large changes in imaging conditions. Often, its sensitivity is too low for a production system.

Recently, deep learning has challenged the paradigm of focusing on feature representation. The term deep learning may have been used to describe neural networks as early as 1986 (Dechter, 1986), and backpropagation is not a new concept in the field of pattern recognition (LeCun et al., 1989). However, advances in computational power and the widely publicized performance in the ImageNet challenge (Krizhevsky et al., 2012) have renewed the scientific community’s interest in deep learning (LeCun et al., 2015). A deep learning algorithm learns features from raw data entirely from the ground up, combining both steps of a pattern recognition system, (a) and (b) in the previous paragraph. Deep learners have achieved recognition rates as high as 99.24% for the detection of some plant diseases and species (Mohanty et al., 2016) and show great potential for the field of agricultural engineering. Loosely speaking, deep learning is a collection of methods that (1) improve the optimization and generalization of neural networks, and (2) stack layers of transformations to enable the learning algorithm to develop higher levels of abstraction.

A deep learner’s performance increases as the amount of data increases, which enables the system to cope with a variety of imaging conditions, such as varying lighting, poor alignment, and improper cropping of the object. In contrast, increasing the amount of data for other pattern recognition algorithms (e.g., k-NN, support vector machines) yields diminishing returns and eventual stagnation. However, deep learning is not the universal solution to pattern recognition that it might appear to be. Its continued improvement in accuracy with increasing amounts of data is also its greatest disadvantage: a staggeringly large amount of data is required to train a deep learner to exceed a baseline machine learning algorithm’s performance. In the absence of such large amounts of training data, it is inadvisable to train a deep learner from scratch.

Some methods have addressed this by borrowing training data from a related problem domain, an approach called transfer learning (Bengio, 2012; Yosinski et al., 2014; Sadeghi and Testolin, 2017). The algorithm is trained on the related problem’s data and then re-trained on data for the problem at hand, so that the deep learner learns features relevant to the new problem. If the tasks are similar enough, such as predicting different plant diseases from images of leaves, the structures learned by the network remain relevant. This was verified in the field of facial emotion recognition (Kim et al., 2015): deep learners are trained on face identification databases with large amounts of data (millions to tens of millions of samples), then applied to the prediction of facial expression, where the data are insufficient to train a deep learner on their own (hundreds to thousands of samples). In this work, we apply this concept to the detection of leaf scorch in Olea europaea L.
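The transfer-learning recipe (train on a large related dataset, then re-train for the task at hand) can be illustrated with a minimal NumPy sketch. It assumes the simplest variant, in which the transferred layers are kept frozen and only a new output layer is fitted on the small target dataset. `W_FROZEN` is a hypothetical random stand-in for weights learned on source data, and the synthetic data, learning rate and function names are illustrative only; this is not the network used in this work.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for layers already trained on a large source dataset.
# In this sketch the weights are random and are never updated.
W_FROZEN = rng.normal(scale=0.5, size=(16, 8))


def frozen_features(x):
    """Frozen feature extractor: one ReLU layer whose weights stay fixed."""
    return np.maximum(x @ W_FROZEN, 0.0)


def train_new_head(x, y, n_classes, lr=0.1, epochs=300):
    """Fit only a new softmax output layer on the small target dataset."""
    f = frozen_features(x)
    w = np.zeros((f.shape[1], n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[y]
    for _ in range(epochs):
        logits = f @ w + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)
        grad = (p - onehot) / len(y)                 # d(cross-entropy)/d(logits)
        w -= lr * f.T @ grad                         # only the new head is updated
        b -= lr * grad.sum(axis=0)
    return w, b


def predict(x, w, b):
    return np.argmax(frozen_features(x) @ w + b, axis=1)


if __name__ == "__main__":
    # Small synthetic "target" dataset: 30 samples, 3 classes.
    x_small = rng.normal(size=(30, 16))
    y_small = rng.integers(0, 3, size=30)
    before = W_FROZEN.copy()
    w, b = train_new_head(x_small, y_small, n_classes=3)
    assert np.array_equal(before, W_FROZEN)  # transferred weights untouched
    print("training accuracy:", (predict(x_small, w, b) == y_small).mean())
```

Freezing is the most conservative choice when only hundreds of target samples exist; fine-tuning some transferred layers with a small learning rate is a common alternative.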

We also propose a novel deep fusion method that fuses the data with additional features at different levels of abstraction to improve performance when re-applying an already trained deep learner to a new problem. The network discovers relevant low-level features from the raw data to automatically detect veins and colors, and to describe events that lead to leaf scorch. Existing applications of deep learning investigated spatial relationships in the image; fusion, if used, occurred temporally, at the beginning of the processing pipeline, or mixed into the learning process. We improve upon this with a staggered data fusion scheme that injects relevant features at increasing levels of abstraction, while allowing the network to discover the complementary factors leading to disease. Investigating the non-linear relationships found by the network will allow us to better understand the links between leaf structure, leaf appearance, and disease symptoms.

In the following, we discuss the deep learning methods most similar to our work and describe how our work differs significantly from them. The method proposed in this work, Abstraction-Level Fusion, is inspired by Karpathy et al. (2014), in which a deep neural network is extended to process videos with three modes: early fusion, late fusion and slow fusion. In early fusion, frames are combined as input to the neural network. In late fusion, the additional video frames are injected at the first fully connected layer. Slow fusion is a balance between both approaches. In Abstraction-Level Fusion, there are multiple fully connected layers, and each fully connected layer receives additional low-level appearance features such as edge information or order statistics. The method in Karpathy et al. (2014) fuses temporal information, not additional appearance features.

Mohanty et al. (2016) investigated the applicability of two neural networks: AlexNet (Krizhevsky et al., 2012), the seminal neural network that brought deep learning to the attention of the pattern recognition community, and GoogLeNet (Szegedy et al., 2015), an improvement on AlexNet developed by Google that added inception modules to reduce the computational burden of a convolutional neural network. Various transfer learning and image preprocessing methods were tested on a database of 54,306 images of 14 species and 26 diseases obtained from the PlantVillage dataset 1. Pawara et al. (2017) carried out a similar study on the Leafsnap (Kumar et al., 2012) and Folio (Munisami et al., 2015) datasets, which contain 7,719 and 640 leaf images, respectively. Fujita et al. (2016) applied deep learning to 7,520 cucumber images of 7 different diseases and a healthy control. Unlike the other approaches, this work employed a VGG convolutional neural network (Simonyan and Zisserman, 2015). VGG networks differ from the other two architectures in that the early, filtering layers use much smaller kernels. We build upon previous work (Fujita et al., 2016; Mohanty et al., 2016; Pawara et al., 2017) by considering improvements to neural network architectures, and we are the first to apply these methods to leaf scorch detection in O. europaea L.

The focus of this paper is a system that processes an olive leaf image with computer vision algorithms able to detect OQDS symptoms. We present a novel deep learning framework that organizes the learning process into different levels of abstraction. The algorithm discovers low-level features from raw data to automatically detect veins and colors that lead to symptomatic leaves. The system has been implemented as a MATLAB standalone executable for Linux and Mac environments. The proposed system works in the absence of large amounts of training data, a useful feature when samples need to be tested with traditional methods (e.g., PCR) to avoid incorrect input, or for quarantine pests whose manipulation is restricted.

Materials and Methods

The system is described as follows. (1) For this pilot study, a leaf clipping from a plant to be tested is scanned; in the future, data will be collected from infected leaves in the field. (2) A mask of the leaf is automatically obtained by segmenting the leaf image with Otsu’s algorithm and filtering it with a small-window median filter to remove noise, and the image is cropped to the minimal bounding box enclosing the segmentation mask. (3) The image is resized to 256 × 256 pixels, because all images must have the same resolution to be processed by the machine learning algorithm in the following step. Resizing an image can make it difficult for humans to detect OQDS because it distorts symptom presentation (e.g., necrosis and spots). However, as long as all images are resized in the same way, resizing does not inhibit the machine learning algorithm’s ability to learn symptoms; the same procedure was used in related work (Mohanty et al., 2016). (4) The image is processed with the proposed Abstraction-Level Fusion for (5) diagnosis. An overview is given in Figure 2.
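Steps (2) and (3) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors’ MATLAB implementation: the scan is an 8-bit RGB image in which the leaf is darker than the scanner background, grayscale is taken as the plain channel mean, the “small-window” median filter is assumed to be 3 × 3, and resizing uses nearest-neighbor sampling. All function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def otsu_threshold(gray):
    """Otsu's threshold for an 8-bit image: maximizes between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                  # probability of class "<= t"
    mu = np.cumsum(prob * np.arange(256))    # cumulative mean up to level t
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b))


def median_filter(mask, size=3):
    """Binary median filter with a size x size window (edge-replicated borders)."""
    pad = size // 2
    padded = np.pad(mask.astype(np.uint8), pad, mode="edge")
    windows = sliding_window_view(padded, (size, size))
    return np.median(windows, axis=(-2, -1)) > 0.5


def crop_to_mask(image, mask):
    """Crop to the smallest bounding box that encloses the mask."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


def resize_nearest(image, size=256):
    """Nearest-neighbor resize to size x size (aspect ratio is not preserved)."""
    h, w = image.shape[:2]
    r = np.arange(size) * h // size
    c = np.arange(size) * w // size
    return image[r[:, None], c]


def preprocess(rgb):
    gray = rgb.mean(axis=2).astype(np.uint8)
    mask = median_filter(gray <= otsu_threshold(gray))  # leaf assumed darker
    return resize_nearest(crop_to_mask(rgb, mask))


if __name__ == "__main__":
    scan = np.full((400, 400, 3), 255, dtype=np.uint8)  # white background
    scan[100:200, 50:350] = 60                          # synthetic dark "leaf"
    print(preprocess(scan).shape)                       # (256, 256, 3)
```

Because the leaf is stretched to a square regardless of its original aspect ratio, the same distortion is applied to every image, which is the uniformity the text relies on.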

FIGURE 2.

System overview. Red: focus of work.

Plant Materials

Trials were carried out in orchards located in the Province of Lecce (Apulia, Italy), in which OQDS-symptomatic and symptomless trees of O. europaea L. were monitored: 24 plants grown in X. fastidiosa-infected areas (in orchards where all plants had shown OQDS since 2014, 1 year after the first pathogen detection) and 24 plants grown in orchards where the pathogen had not yet been detected and non-symptomatic plants were observed. Plants within groups were selected to have the same age (25–30 years), the same agronomic practices over the last 5 years, and similar pedoclimatic conditions.

To evaluate the presence of X. fastidiosa in olive trees, sampling was carried out in October 2016 in relation to symptom expression. For each sample of 40 or more leaves, petioles and basal portions of the leaf blade were cut with a sterile scalpel. Plant tissue (about 1 g, obtained from 20–25 leaves) was transferred into extraction bags (BIOREBA, Switzerland) for homogenization, and 4 mL of extraction buffer (0.2 M Tris–HCl pH 9, 0.4 M LiCl and 25 mM EDTA) was added to each bag. The remaining leaves were stored for image acquisition. Homogenization was performed using a semi-automatic homogenizer (Homex 6, BIOREBA) at 50% of maximum speed. DNA extraction was performed following Edwards et al. (1991).

The TaqMan quantitative PCR protocol with XF-F/R primers and the XF-P probe (Harper et al., 2010) was used. Each reaction was prepared in a total volume of 25 μL using 5 μL of a 20 ng/μL dilution of DNA extracted from 1 g of olive petioles, 200 nM probe, and 400 nM forward and reverse primers. The cycling conditions were 10 min at 95°C, followed by 40 cycles of 95°C for 15 s and 60°C for 1 min, with a final dissociation step of 95°C for 15 s, 60°C for 30 s and 95°C for 15 s.

Xylella fastidiosa-negative samples were also assayed by qPCR for Verticillium dahliae (Bilodeau et al., 2012), Colletotrichum spp., Colletotrichum acutatum and C. gloeosporioides (Garrido et al., 2009), and were visually evaluated for the presence of Stictis panizzei, Mycocentrospora cladosporioides and Spilocaea oleagina, or of disorders caused by abiotic stress. Once health status was confirmed by qPCR and visual assessment, 99 unprocessed leaves from X. fastidiosa-positive samples, 100 unprocessed healthy leaves (asymptomatic samples negative for X. fastidiosa and the other tested/observed pathogens) and 100 unprocessed leaves from X. fastidiosa-negative samples showing other diseases or disorders were scanned at 300 dpi for image acquisition.

Abstraction-Level Fusion

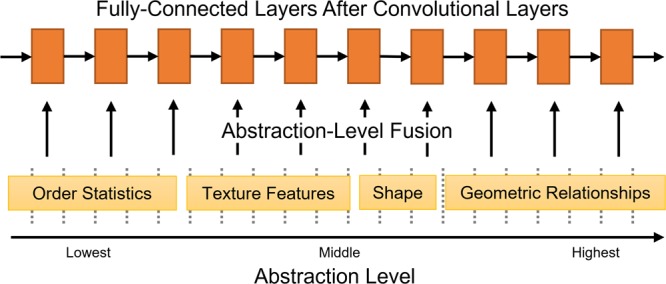

A convolutional neural network (LeCun and Bengio, 1995; Cireşan et al., 2011) takes the low-level pixel representation of an image as input. Each layer is connected to the next and is responsible for organizing the visual stimulus into increasingly non-local signals. As the signal passes through successive layers of the network, it becomes more complex and its level of abstraction increases. This concept inspired us to assist the neural network by injecting features of increasing abstraction level into the fully connected portion of the network. A general overview of the method is given in Figure 3. The idea was originally proposed in a research plan (Rinaldi and Cruz, 2016); this work differs significantly in that the previous work used auto-encoders, whereas this work uses convolutional neural networks. The method is similar to learning with privileged information (Vapnik and Vashist, 2009; Vapnik, 2015), but differs in that it frames the injection of additional features at different levels of abstraction.

FIGURE 3.

A general overview of the idea of Abstraction-Level Fusion. After the convolutional, filtering portion of a convolutional neural network, each fully connected layer receives additional features at increasing levels of abstraction.

We guide the learning process by providing the fully connected layers of the network with engineered image features organized in increasing levels of abstraction, for example, order statistics of the intensity values, edge gradient patterns, or image moments from segmentation. With a conventional fully connected layer, we anticipate that the neural network would learn these structures on its own. The key idea of Abstraction-Level Fusion is that, by fusing this information into the network, the network may learn a complementary organization of signals alongside the information being provided. The full structure is described in Table 1. Note that Figure 3 describes the general idea; the embodiment of Abstraction-Level Fusion used in this work focuses on texture and shape features.
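The concatenation-based injection can be sketched as a NumPy forward pass through the fully connected portion of the network (layers 6 onward in Table 1). The convolutional stage is abstracted away as a precomputed flattened vector; the widths of that vector and of the shape-feature vector, the He-style random initialization, and all function names are hypothetical, because the text does not specify them. Training is omitted.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def init_params(n_conv, n_edge, n_shape, n_hidden=256, n_classes=3, seed=0):
    """Random weights sized for the fused inputs (hypothetical initialization)."""
    rng = np.random.default_rng(seed)

    def dense(n_in, n_out):
        return rng.normal(scale=np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out)

    W1, b1 = dense(n_conv + n_edge, n_hidden)     # FC layer 6: conv output + edge features
    W2, b2 = dense(n_hidden + n_shape, n_hidden)  # FC layer 8: hidden output + shape features
    W3, b3 = dense(n_hidden, n_classes)           # final FC layer (3 classes)
    return dict(W1=W1, b1=b1, W2=W2, b2=b2, W3=W3, b3=b3)


def fused_head(conv_out, edge_feats, shape_feats, p):
    """Fully connected head; extra features are injected by concatenation."""
    x = np.concatenate([conv_out, edge_feats], axis=1)
    h1 = relu(x @ p["W1"] + p["b1"])               # FC layer 6 + ReLU
    x = np.concatenate([h1, shape_feats], axis=1)
    h2 = relu(x @ p["W2"] + p["b2"])               # FC layer 8 + ReLU
    return softmax(h2 @ p["W3"] + p["b3"])         # final FC layer + softmax


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    params = init_params(n_conv=1024, n_edge=1024, n_shape=20)
    probs = fused_head(rng.random((4, 1024)), rng.random((4, 1024)),
                       rng.random((4, 20)), params)
    print(probs.round(3))   # one row of class probabilities per image
```

In a full implementation the gradients flow through the concatenations only into the learned weights; the injected features themselves are fixed inputs.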

Table 1.

Structure of the convolutional neural network employed in this work.

| Layer | Type | Abstraction-Level Feature |
|---|---|---|
| 0 | 2-D convolutional layer with 11 × 11 kernel, padding 6, stride 4 | – |
| 1 | Rectified linear activation function layer | – |
| 2 | Max pooling layer, downsample factor 2, stride 2 | – |
| 3 | 2-D convolutional layer with 7 × 7 kernel, padding 3, stride 4 | – |
| 4 | Rectified linear activation function layer | – |
| 5 | Max pooling layer, downsample factor 2, stride 2 | – |
| 6 | Fully connected layer, 256 neurons | Edge magnitudes: original image converted to grayscale, filtered by a Laplacian of Gaussian, and downsampled by a factor of 8 |
| 7 | Rectified linear activation function layer | – |
| 8 | Fully connected layer, 256 neurons | Shape features: area, perimeter, Hu’s moments (Hu, 1962), Zernike moments (Zhenjiang, 2000) |
| 9 | Rectified linear activation function layer | – |
| 10 | Fully connected layer, 3 neurons | – |
| 11 | Softmax classification layer | – |

Type: the type of layer. Abstraction-Level Feature: The additional features injected in the layer, if any. Features are injected by concatenation with the input from the previous layer.
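The spatial size of the feature maps reaching the first fully connected layer follows from the kernel, stride and padding values in Table 1. The sketch below assumes the common floor convention, out = floor((in + 2·pad - kernel) / stride) + 1, symmetric padding, and a 2 × 2 pooling window (our reading of “downsample factor 2”). Under those assumptions a 256 × 256 input shrinks to 4 × 4 maps; the flattened length also depends on the number of filters, which Table 1 does not give. The edge-magnitude feature injected at layer 6 is a 256/8 = 32 × 32 map, i.e., 1,024 values.

```python
def out_size(size, kernel, stride, pad=0):
    """Spatial output size of a convolution or pooling window (floor rounding)."""
    return (size + 2 * pad - kernel) // stride + 1


# (name, kernel, stride, padding) for the spatial layers in Table 1;
# the 2x2 pooling window is an assumption.
LAYERS = [
    ("conv 11x11", 11, 4, 6),
    ("max pool", 2, 2, 0),
    ("conv 7x7", 7, 4, 3),
    ("max pool", 2, 2, 0),
]


def trace(size=256):
    """Return (layer name, output size) after each spatial layer."""
    sizes = []
    for name, kernel, stride, pad in LAYERS:
        size = out_size(size, kernel, stride, pad)
        sizes.append((name, size))
    return sizes


if __name__ == "__main__":
    for name, size in trace():
        print(f"{name:>10}: {size} x {size}")
    edge = 256 // 8
    print(f"edge feature vector: {edge} x {edge} = {edge * edge} values")
```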

Implementation Details and Parameters

The network is implemented in MATLAB 2016 and run on a Dell Precision Rack 7910 with dual 8-core Intel Xeon E5-2630 v3 processors, 128 GB of DDR4 RDIMM ECC RAM, and an NVIDIA Quadro K6000 12 GB GPU. The network is initially trained on the PlantVillage dataset (Mohanty et al., 2016), then frozen and retrained on the new dataset. Note that Mohanty et al. (2016) used pre-trained AlexNet and GoogLeNet networks, whereas we train a modified LeNet from scratch on the PlantVillage dataset. Stochastic gradient descent is used with a batch size of 60, a base learning rate of 0.01, momentum of 0.9, and weight decay of 0.0005. A step-based learning rate schedule was not employed; gamma is 0.1. For validation, we perform 10 trials, each randomly splitting the data into 75% for training and 25% for testing.
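The optimization and validation settings can be sketched in NumPy. The update rule is the classic momentum formulation with weight decay applied as an L2 term on the gradient; we assume, rather than know, that this matches the MATLAB solver’s behavior. Gamma is omitted because in step-based schedules it is typically the factor by which the learning rate is dropped, and no such schedule was used. With 299 images (99 + 100 + 100), a 75/25 split yields 224 training and 75 test images, and a batch size of 60 gives four mini-batches per epoch (60, 60, 60, 44), assuming the last partial batch is kept. Function names are hypothetical.

```python
import numpy as np


def sgd_momentum_step(w, grad, velocity, lr=0.01, momentum=0.9, weight_decay=5e-4):
    """One SGD-with-momentum update; weight decay enters the gradient as an L2 term."""
    velocity = momentum * velocity - lr * (grad + weight_decay * w)
    return w + velocity, velocity


def random_splits(n_samples, n_trials=10, train_frac=0.75, seed=0):
    """Yield (train_idx, test_idx) for independent random 75/25 splits."""
    rng = np.random.default_rng(seed)
    n_train = int(train_frac * n_samples)
    for _ in range(n_trials):
        perm = rng.permutation(n_samples)
        yield perm[:n_train], perm[n_train:]


def minibatches(indices, batch_size=60, rng=None):
    """Shuffle training indices and cut them into mini-batches of up to batch_size."""
    if rng is None:
        rng = np.random.default_rng()
    order = rng.permutation(indices)
    return [order[i:i + batch_size] for i in range(0, len(order), batch_size)]


if __name__ == "__main__":
    for trial, (train_idx, test_idx) in enumerate(random_splits(299)):
        batches = minibatches(train_idx)
        print(trial, len(train_idx), len(test_idx), [len(b) for b in batches])
```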

We compare against three baseline methods, each combining a different feature representation with a Radial Basis Function (RBF) Support Vector Machine (SVM). For the SVM, γ is set to the inverse of the length of the feature vector and the cost c is set to 1. The feature vectors are z-scored based on the training data before the machine learning step. The three feature representations are an improved Gabor filter (Cruz et al., 2015), Uniform Local Binary Patterns (Almaev and Valstar, 2013), and SIFT features (Liu et al., 2015). These features were selected because they are representative of features currently used in the literature. For Background Suppressing Gabor Energy Filtering, we follow the parameters in Cruz et al. (2015), and the whole filtered image is taken as the feature vector. For Uniform Local Binary Patterns, we use 8 neighbors and a radius of 1. For SIFT features, we follow Liu et al. (2015): features are extracted sparsely with a stride of 12 pixels in the horizontal and vertical directions, images are registered by translating the centroid of the segmentation mask to the center of the image, and missing values are filled by repetition. Results are presented with the same validation method (ten random folds with a 75/25 training/testing split).
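The baseline preprocessing and kernel settings can be sketched as below: z-scoring uses statistics from the training split only, and the RBF kernel uses γ = 1/d for d-dimensional feature vectors. These settings (γ = 1/number of features, C = 1) coincide with the defaults of LIBSVM (Chang and Lin, 2011). The sketch stops at computing kernel matrices; the features are random stand-ins rather than Gabor, LBP or SIFT descriptors, and the SVM itself would come from a library such as LIBSVM. Function names are hypothetical.

```python
import numpy as np


def zscore_fit(x_train):
    """Per-feature mean and standard deviation, estimated on training data only."""
    mean = x_train.mean(axis=0)
    std = x_train.std(axis=0)
    std[std == 0] = 1.0                  # leave constant features unscaled
    return mean, std


def zscore_apply(x, mean, std):
    return (x - mean) / std


def rbf_kernel(a, b, gamma):
    """K[i, j] = exp(-gamma * ||a_i - b_j||^2)."""
    sq_dist = (a ** 2).sum(axis=1)[:, None] + (b ** 2).sum(axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))  # clip tiny negative round-off


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x_train = rng.normal(5.0, 2.0, size=(224, 59))    # stand-in feature vectors
    x_test = rng.normal(5.0, 2.0, size=(75, 59))
    mean, std = zscore_fit(x_train)                   # training statistics only
    z_train = zscore_apply(x_train, mean, std)
    z_test = zscore_apply(x_test, mean, std)
    gamma = 1.0 / z_train.shape[1]                    # gamma = 1 / feature length
    k_train = rbf_kernel(z_train, z_train, gamma)     # Gram matrix for training
    k_test = rbf_kernel(z_test, z_train, gamma)       # test-vs-train kernel
    print(k_train.shape, k_test.shape)
```

Fitting the z-score statistics on the training split alone keeps information from the test images out of the model, which matters when each trial re-draws the split.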

Results and Discussion

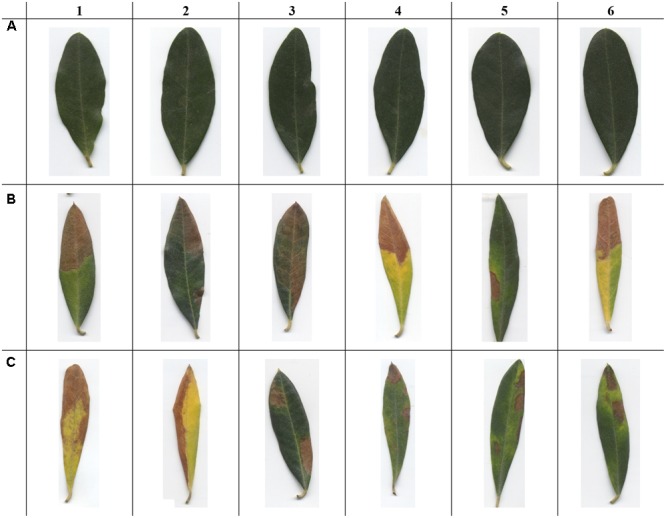

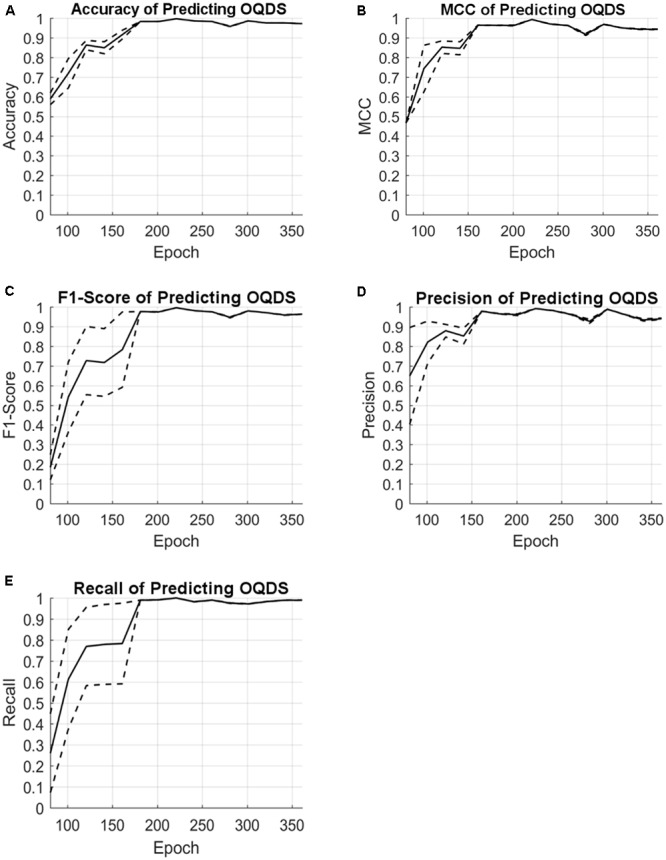

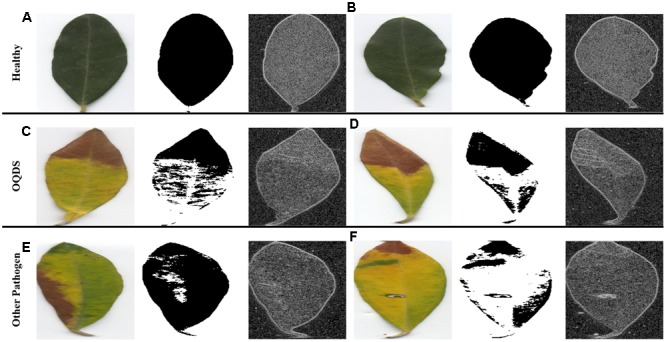

Example images of healthy leaves, OQDS-symptomatic leaves and leaves showing other disorders are shown in Figure 4. To stress the proposed detection system, the X. fastidiosa-negative leaves showing other disorders (Figure 4C) were chosen among samples that a layperson may more easily confuse with OQDS. Results of the proposed system for varying epoch limits are given in Figure 5. Numerical results for the proposed system are given in Table 2A and baseline results in Table 2B. The system performs exceptionally well despite being trained on only 224 images. Hundreds of samples are woefully insufficient for training a deep learner, and pretraining the network on the PlantVillage dataset improved performance. Additionally, including edge patterns and shape information contributed to the network’s performance, and the advantage can be visualized (Figure 6). While the network could in principle learn parameters to obtain the segmentation and edge maps in Figure 6, providing this information potentially improves convergence because the network does not have to learn these representations from scratch.

FIGURE 4.

Example images of Olea europaea L. used in this study. (A) Healthy control (asymptomatic leaves); (B) OQDS-symptomatic leaves (Xylella fastidiosa-positive samples); (C) X. fastidiosa-negative samples showing another pathogen/disorder. Note that the images are shown in the native, original aspect ratio of O. europaea L. leaves. It is easy to distinguish healthy leaves from non-healthy leaves (A vs. B or C). However, tissue desiccation on the leaf tip, when it occurs, is not exclusive to OQDS (C1, C4, and C6), so it is challenging to tell OQDS and non-OQDS samples apart (B vs. C).

FIGURE 5.

(A) Accuracy, (B) Matthews Correlation Coefficient (MCC), (C) F1-Score, (D) precision and (E) recall of predicting symptoms of Olive Quick Decline Syndrome (OQDS) in images of X. fastidiosa-positive leaves of O. europaea L. amongst healthy controls (asymptomatic leaves) or X. fastidiosa-negative leaves showing other disorders. Higher is better for all metrics.

Table 2.

Accuracy, Matthews Correlation Coefficient (MCC), F1-Score, Precision and Recall of predicting symptoms of Olive Quick Decline Syndrome (OQDS) in images of Xylella fastidiosa-positive leaves of Olea europaea L. amongst healthy controls (asymptomatic leaves) or X. fastidiosa-negative leaves showing other disorders.

(A) Proposed method

| Epoch | Accuracy (%) | Matthews Correlation Coefficient (MCC) [-1, 1] | F1-Score (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|
| 100 | 71.93 ± 27.32 | 0.5419 ± 0.4225 | 74.37 ± 34.41 | 82.14 ± 32.62 | 61.27 ± 48.73 |
| 150 | 84.91 ± 17.44 | 0.7173 ± 0.4150 | 84.65 ± 18.13 | 85.19 ± 20.07 | 77.87 ± 43.61 |
| 200 | 98.25 ± 2.15 | 0.9743 ± 0.3320 | 96.25 ± 4.63 | 96.05 ± 6.51 | 99.09 ± 2.03 |
| 250 | 98.60 ± 1.47 | 0.9811 ± 0.1930 | 97.02 ± 3.10 | 98.09 ± 2.62 | 98.18 ± 2.49 |
| 300 | 98.60 ± 1.47 | 0.9798 ± 0.2410 | 96.89 ± 3.45 | 98.82 ± 2.63 | 97.18 ± 2.71 |

(B) Comparison to other methods

| Method | Accuracy (%) | Matthews Correlation Coefficient (MCC) [-1, 1] | F1-Score (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|
| Background Suppressing Gabor Energy Filtering (Cruz et al., 2015) with RBF-SVM (Chang and Lin, 2011) | 63.11 ± 11.91 | 0.2271 ± 0.2517 | 65.52 ± 15.15 | 72.44 ± 14.30 | 65.28 ± 21.74 |
| Uniform Local Binary Patterns (Almaev and Valstar, 2013) and RBF-SVM (Chang and Lin, 2011) | 88.55 ± 16.71 | 0.7839 ± 0.2936 | 90.95 ± 11.97 | 92.12 ± 17.68 | 92.24 ± 6.16 |
| SIFT Features (Liu et al., 2015) and RBF-SVM (Chang and Lin, 2011) | 84.91 ± 17.44 | 0.7173 ± 0.4150 | 84.65 ± 18.13 | 85.19 ± 20.07 | 77.87 ± 43.61 |

Higher is better for all metrics.
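The metrics in Table 2 can be computed from confusion counts as sketched below. We assume a one-vs-rest reduction with OQDS as the positive class and the healthy and other-disorder classes as negatives, and we report mean ± sample standard deviation across trials; the text states neither choice. The counts in the usage example are hypothetical, not taken from the experiments, and the function names are illustrative.

```python
import math
import statistics


def binary_metrics(tp, fp, fn, tn):
    """Accuracy, MCC, F1, precision and recall from binary confusion counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0        # true positive rate
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": (tp + tn) / total, "mcc": mcc, "f1": f1,
            "precision": precision, "recall": recall}


def mean_std(values):
    """Mean and sample standard deviation across trials, as in 'x ± s'."""
    return statistics.mean(values), statistics.stdev(values)


if __name__ == "__main__":
    # Hypothetical test split of 75 images: 25 OQDS, 50 non-OQDS.
    print(binary_metrics(tp=24, fp=1, fn=1, tn=49))
    # accuracy = 73/75, precision = recall = F1 = 0.96, MCC = 1175/1250 = 0.94
```

MCC is reported alongside accuracy because the positive class is only about one third of the data; MCC stays informative under that imbalance, whereas accuracy can look high even for a classifier biased toward the majority class.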

FIGURE 6.

The advantage of including shape and texture. From left to right: the original image, the segmentation map (from which shape features such as moments are extracted), and the edge map. (A,B) Healthy (asymptomatic) leaves, (C,D) OQDS leaves, and (E,F) non-OQDS leaves. Healthy leaves do not have any notable features in either the segmentation or edge maps. OQDS results in a more yellow leaf, causing a distinct shape in the segmentation map; note the subtle lines in the dead area of the leaf in (D). While other pathogens/disorders also cause yellow leaves, the yellowing is not as orderly as in leaf scorch, and the dead areas lack the distinctive subtle lines seen in (D). Note that these images have been resized to 256 × 256 as part of Step (3) in Figure 2.

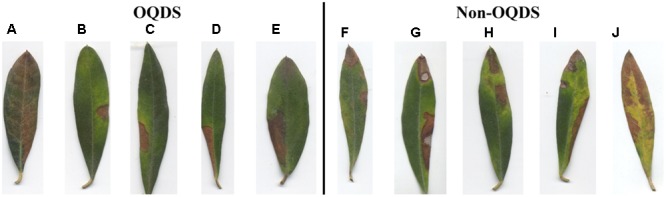

In Figure 7, we give examples of images that were misclassified more than once across the ten validation folds. In Figure 7A, the desiccated tissue has a very gentle intensity gradient compared to other images, and we suspect that the edge transition from desiccated to healthy tissue is not sharp enough to be detected by the convolutional layers of the neural network. In Figures 7B–E, tissue desiccation did not start at the tip of the leaf as expected. Conversely, in the non-OQDS images in Figures 7F–J, tissue desiccation appeared at the tip of the leaf, resembling OQDS.

FIGURE 7.

Examples of images that were misclassified more than once across the 10 validation folds. (A–E) Images of OQDS leaves. (F–J) Images of leaves with pathogens/diseases other than OQDS.

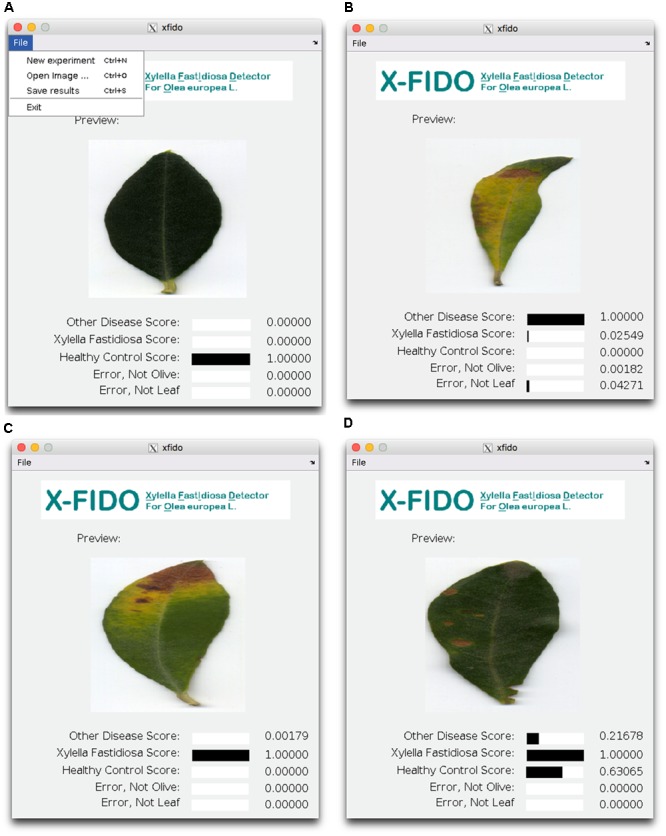

Description of Stand-Alone Executable Program

The model trained in the above experiments is compiled into a stand-alone MATLAB executable file 2. The program was developed to be as simple and intuitive as possible (Figure 8). The workflow is as follows. A user starts a new experiment and loads images of olive tree leaves to be processed. As each image is loaded, prediction is carried out automatically, and a preview of the image is displayed on screen together with the confidence score for each prediction category. The system detects symptoms of OQDS in images of X. fastidiosa-positive olive leaves amongst healthy controls (asymptomatic leaves) and X. fastidiosa-negative leaves showing other disorders. It can also detect two types of errors: (1) the user has provided a leaf that is not from Olea europaea L., and (2) a general error for when the user has provided an image that is not a leaf. Error case (1) was trained on 100 random images of non-olive species from the PlantVillage dataset. Error case (2) is useful for reporting to the user that the system is unsure of the content of the image; it was trained on 100 random images from Caltech 101 (Wang et al., 2006). As the user processes images, the program logs the file name, time, date, and confidence scores for each of the five categories. When the user has finished experimenting on a set of images, the “Save results” command stores the logged results in a comma-separated value (CSV) file. To start a new experiment and clear all current results, the user selects “New experiment.”
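The logging and “Save results” behavior described above can be sketched with Python’s standard `csv` module. The category labels, column names, number formatting and function names are hypothetical; the actual program is a compiled MATLAB executable whose file layout is not documented here. When writing to disk, the stream would be opened with `open(path, "w", newline="")`, as the `csv` documentation recommends.

```python
import csv
import datetime as dt
import io

# Hypothetical labels for the five output categories described in the text.
CATEGORIES = ["healthy", "OQDS", "other disorder", "not olive", "not a leaf"]
FIELDS = ["file", "date", "time", *CATEGORIES]


def log_prediction(log, filename, scores, when=None):
    """Append one row (file name, date, time, per-category confidence) to the log."""
    when = when if when is not None else dt.datetime.now()
    row = {"file": filename,
           "date": when.date().isoformat(),
           "time": when.time().isoformat(timespec="seconds")}
    row.update({name: f"{score:.4f}" for name, score in zip(CATEGORIES, scores)})
    log.append(row)


def save_results(log, stream):
    """Write all logged rows as CSV, mirroring the 'Save results' command."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(log)


if __name__ == "__main__":
    log = []  # "New experiment" would reset this list
    log_prediction(log, "leaf_001.png", [0.02, 0.95, 0.03, 0.0, 0.0])
    buffer = io.StringIO()
    save_results(log, buffer)
    print(buffer.getvalue())
```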

FIGURE 8.

Screen shots of the X-FIDO program. (A) The program is simple to operate and consists of three commands: New experiment; Open image, which prompts the user to open an image, automatically processes it and logs the confidence scores; and Save results, which saves all logged confidence scores to a comma-separated value (CSV) file. In this sub-figure, the program correctly classified a healthy control. (B) A non-OQDS pathogen/disorder. (C) OQDS (X. fastidiosa). (D) A very challenging non-OQDS sample that resembles OQDS because of very faint leaf-tip desiccation, and also resembles a healthy control because of its verdancy.
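The logging and export behaviour of the program can be sketched as follows. X-FIDO itself is a compiled MATLAB program. This Python sketch only mirrors the workflow described in the text (New experiment, Open image, Save results) and assumes that the classifier already returns one confidence score per category. The class name, method names, category labels, and CSV column layout are all hypothetical.

```python
import csv
import io
from datetime import datetime
from typing import Dict, List, Optional, TextIO

# Hypothetical labels for the five output categories described in the text.
CATEGORIES = ["healthy", "oqds", "non_oqds", "non_olive_leaf", "not_a_leaf"]
FIELDS = ["file_name", "date", "time"] + CATEGORIES


class ExperimentLog:
    """In-memory log of per-image confidence scores (illustrative only)."""

    def __init__(self) -> None:
        self.rows: List[Dict[str, str]] = []

    def new_experiment(self) -> None:
        # "New experiment": discard all logged results.
        self.rows.clear()

    def log_prediction(self, file_name: str, scores: Dict[str, float],
                       when: Optional[datetime] = None) -> None:
        # Called after each image is classified ("Open image").
        missing = set(CATEGORIES) - set(scores)
        if missing:
            raise ValueError(f"missing scores for: {sorted(missing)}")
        if when is None:
            when = datetime.now()
        row = {"file_name": file_name,
               "date": when.strftime("%Y-%m-%d"),
               "time": when.strftime("%H:%M:%S")}
        row.update({c: f"{scores[c]:.4f}" for c in CATEGORIES})
        self.rows.append(row)

    def save_results(self, out: TextIO) -> None:
        # "Save results": write a header row plus one row per logged image.
        writer = csv.DictWriter(out, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(self.rows)
```

In real use, `save_results` would receive a file opened with `open(path, "w", newline="")`. Keeping the log in memory until the user saves matches the described behaviour, where results persist only after "Save results" is chosen.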

Conclusion

Deep learning could revolutionize agriculture by enabling swift, effective identification of crop diseases from images of leaves (Ampatzidis et al., 2017). Transfer learning made it possible to apply deep learning despite the lack of sufficient training examples. Large leaf databases such as PlantVillage can enable deep learning for other plant species and diseases where it is too difficult to obtain the tens of thousands of images normally required. Collecting a large number of images may be difficult when samplers are unskilled. Furthermore, to train an automated symptom-recognition system efficiently, images should be collected from samples that were previously tested for the pathogen with traditional diagnostic methods, so that the training input contains no false positives or false negatives. This may not be feasible for large numbers of samples, or for quarantine pests whose manipulation is restricted.

We have demonstrated that leaf scorch in olive trees can be detected automatically from images of leaf clippings, and that it can be discriminated from other disorders or pathogens despite their strong similarity. However, although the true positive rate obtained with the proposed method was high, the specificity and sensitivity of traditional diagnostic methods such as ELISA or qPCR remain unmatched. This method may therefore serve as a tool to support sampling, or to pre-screen samples before diagnostic tests. At present, monitoring of OQDS in Europe relies heavily on visual inspection and on collecting samples from plants that show typical symptoms. The use of X. fastidiosa-infected leaves with advanced symptoms is therefore consistent with the aim of the tool. However, evaluating the proposed method on leaves with earlier symptoms remains a critical issue that needs to be investigated.
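The distinction between the true positive rate reported here and the performance of laboratory assays can be made concrete with the standard definitions: sensitivity (true positive rate) = TP/(TP + FN) and specificity = TN/(TN + FP). The sketch below computes both from confusion counts and summarizes per-trial true positive rates as mean ± standard deviation, the form of the 98.60 ± 1.47% figure given in the abstract. It assumes that OQDS is the positive class and that healthy and non-OQDS leaves both count as negatives. The use of the sample standard deviation (`statistics.stdev`) is also an assumption, because the text does not state which estimator was used. The function names are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Tuple


def sensitivity(tp: int, fn: int) -> float:
    """True positive rate: share of OQDS leaves that are flagged as OQDS."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """True negative rate: share of non-OQDS leaves not flagged as OQDS."""
    return tn / (tn + fp)


def summarize_tpr(trials: List[Tuple[int, int]]) -> Tuple[float, float]:
    """Mean and sample standard deviation, in percent, of per-trial TPR.

    Each trial is a (tp, fn) pair counted on that trial's test split.
    At least two trials are needed for the sample standard deviation.
    """
    rates = [100.0 * sensitivity(tp, fn) for tp, fn in trials]
    return mean(rates), stdev(rates)
```

A high true positive rate alone says nothing about false alarms. Specificity must be reported separately, which is why the comparison with ELISA or qPCR needs both figures.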

We also present a novel algorithm for framing a convolutional neural network, called Abstraction-Level Fusion, that injects additional feature vectors into the network. The layers are abstracted with increasing levels of complexity. In future work, the efficacy of Abstraction-Level Fusion will be evaluated on other convolutional neural networks such as VGG and Inception. We found that the PlantVillage dataset alone was sufficient for transfer learning, whereas previous work used pre-trained AlexNet and GoogLeNet networks.
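As a rough illustration of what injecting an additional feature vector into a network can mean, the sketch below concatenates a CNN feature vector with a separately computed feature vector. It then passes the result through a fully connected layer and a softmax. This is generic feature-level fusion by concatenation, not the authors' Abstraction-Level Fusion algorithm. The function names, feature contents, and layer shapes are hypothetical.

```python
import math
from typing import List, Sequence


def fuse(cnn_features: Sequence[float], injected: Sequence[float]) -> List[float]:
    """Concatenate network features with an injected feature vector."""
    return list(cnn_features) + list(injected)


def dense(x: Sequence[float], weights: Sequence[Sequence[float]],
          bias: Sequence[float]) -> List[float]:
    """Fully connected layer: one weight row and one bias per output unit."""
    if len(weights) != len(bias):
        raise ValueError("need one bias per weight row")
    if any(len(row) != len(x) for row in weights):
        raise ValueError("each weight row must match the fused feature length")
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert logits into confidence scores that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]
```

For example, `softmax(dense(fuse(cnn, extra), W, b))` yields one confidence score per class. The main design consequence of concatenation is that the classifier's input width grows by `len(extra)`, so the layer that receives the fused vector must be sized, and trained, for the combined length.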

The program shows potential for rapid, automatic detection of OQDS at reduced diagnosis time and cost. Because X. fastidiosa also causes leaf scorch symptoms in other host species, the technologies and algorithms developed in this work could be extended to the detection of X. fastidiosa in grapevine (Pierce’s disease) and to other leaf scorch-related diseases.

Author Contributions

Conceived and designed the experiments: AC, YA, and AL. Data acquisition of plant pathogen and diagnostic tests: AL and LDB. Processing, analysis and interpretation: AC and YA. Analyzed the data: AC and YA. Prepared the manuscript: AC and YA. Edited the manuscript: AL and LDB.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This material is based upon work that is supported by the National Institute of Food and Agriculture, United States Department of Agriculture, under award numbers 2016-38422-25544 and 2016-67032-25008.

Footnotes

References

- Almaev T. R., Valstar M. F. (2013). “Local Gabor binary patterns from three orthogonal planes for automatic facial expression recognition,” in Proceedings of the Humaine Association Conference on Affective Computing and Intelligent Interaction Geneva: 356–361. 10.1109/ACII.2013.65

- Ampatzidis Y. G., De Bellis L., Luvisi A. (2017). iPathology: robotic applications and management of plants and plant diseases. Sustainability 9:1010 10.3390/su9061010

- Baxi A., Vala H. (2013). A review on Otsu image segmentation algorithm. Int. J. Adv. Res. Comp. Eng. Technol. 2 387–389. 10.1007/s11548-009-0389-8

- Bengio Y. (2012). “Deep learning of representations for unsupervised and transfer learning,” in Poster at ICML Workshop on Unsupervised and Transfer Learning Edinburgh: 17–36.

- Bilodeau G. J., Koike S. T., Uribe P., Martin F. N. (2012). Development of an assay for rapid detection and quantification of Verticillium dahliae in soil. Phytopathology 102 331–343. 10.1094/PHYTO-05-11-0130

- Chang C. C., Lin C. J. (2011). LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2 1–27. 10.1145/1961189.1961199

- Chatterjee S., Almeida R. P. P., Lindow S. (2008). Living in two worlds: the plant and insect lifestyles of Xylella fastidiosa. Annu. Rev. Phytopathol. 46 243–271. 10.1146/annurev.phyto.45.062806.094342

- Chen J., Chen Z., Chi Z., Fu H. (2014). “Emotion recognition in the wild with feature fusion and multiple kernel learning,” in Proceedings of the 16th International Conference on Multimodal Interaction Istanbul: 508–513. 10.1145/2663204.2666277

- Cireşan D. C., Meier U., Masci J., Gambardella L. M., Schmidhuber J. (2011). “Flexible, high performance convolutional neural networks for image classification,” in Proceedings of the International Joint Conference on Artificial Intelligence Barcelona: 1237–1242.

- Cruz A. C., Bhanu B., Thakoor N. S. (2015). Background suppressing Gabor energy filtering. Pattern Recogn. Lett. 52 40–47. 10.1016/j.patrec.2014.10.001

- Cunha J. B. (2003). “Application of image processing techniques in the characterization of plant leafs,” in Proceedings of the 2003 IEEE International Symposium on Industrial Electronics Rio de Janeiro: 612–616. 10.1109/ISIE.2003.1267322

- Dechter R. (1986). “Learning while searching in constraint-satisfaction-problems,” in Proceedings of the 5th National Conference on Artificial Intelligence Philadelphia, PA: 178–183.

- Djelouah K., Frasheri D., Valentini F., D’Onghia A. M., Digiaro M. (2014). Direct tissue blot immunoassay for detection of Xylella fastidiosa in olive trees. Phytopathol. Mediterr. 53 559–564.

- Edwards K., Johnstone C., Thompson C. (1991). A simple and rapid method for the preparation of plant genomic DNA for PCR analysis. Nucleic Acids Res. 19 1349 10.1093/nar/19.6.1349

- FAO (2017). Data from: FAOSTAT. Available at: http://www.fao.org/faostat/en/#home

- Fisher R. A. (1936). The use of multiple measurements in taxonomic problems. Ann. Eugen. 7 179–188. 10.1111/j.1469-1809.1936.tb02137.x

- Francis M., Lin H., Cabrera-La Rosa J., Doddapaneni H., Civerolo E. L. (2006). Genome-based PCR primers for specific and sensitive detection and quantification of Xylella fastidiosa. Eur. J. Plant Pathol. 115 203–213. 10.1016/j.mimet.2012.11.008

- Fujita E., Kawasaki Y., Uga H., Kagiwada S., Iyatomi H. (2016). “Basic investigation on a robust and practical plant diagnostic system,” in Proceedings of the 2016 IEEE Machine Learning and its Applications Anaheim, CA: 989–992. 10.1109/ICMLA.2016.0178

- Garrido C., Carbú M., Fernández-Acero F. J., Boonham N., Colyer A., Cantoral J. M., et al. (2009). Development of protocols for detection of Colletotrichum acutatum and monitoring of strawberry anthracnose using qPCR. Plant Pathol. 58 43–51. 10.1111/j.1365-3059.2008.01933.x

- Guan W., Shao J., Elbeaino T., Davis R. E., Zhao T., Huang Q. (2015). Infecting and Italian olive-associated strains of Xylella fastidiosa by polymerase chain reaction. PLOS ONE 10:e0129330. 10.1371/journal.pone.0129330

- Haiyan S., He Y. (2005). “Crop nutrition diagnosis expert system based on artificial neural networks,” in Proceedings of the Third International Conference on Information Technology and Applications Sydney, NSW: 357–362. 10.1109/ICITA.2005.108

- Harper S. J., Ward L. I., Clover G. R. G. (2010). Development of LAMP and QPCR methods for the rapid detection of Xylella fastidiosa for quarantine and field applications. Phytopathology 100 1282–1288. 10.1094/PHYTO-06-10-0168

- Hu M. K. (1962). Visual pattern recognition by moment invariants. IRE Trans. Inform. Theor. 8 179–187. 10.1109/TIT.1962.1057692

- Janse J. D., Obradovic A. (2010). Xylella fastidiosa: its biology, diagnosis, control and risks. J. Plant Pathol. 92 35–48.

- Karpathy A., Toderici G., Shetty S., Leung T., Sukthankar R., Li F. F. (2014). “Large-scale video classification with convolutional neural networks,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Columbus, OH: 1725–1732. 10.1109/CVPR.2014.223

- Kim B., Lee H., Roh J., Lee S. (2015). “Hierarchical committee of deep CNNs with exponentially-weighted decision fusion for static facial expression recognition,” in Proceedings of the 2015 ACM on International Conference on Multimodal Interaction Seattle, WA: 427–434. 10.1145/2818346.2830590

- Krizhevsky A., Sutskever I., Hinton G. E. (2012). ImageNet classification with deep convolutional neural networks. Adv. Neur. In. 25 1–9.

- Kumar N., Belhumeur P. N., Biswas A., Jacobs D. W., Kress W. J., et al. (2012). “Leafsnap: a computer vision system for automatic plant species identification,” in Proceedings of the 12th European Conference on Computer Vision Firenze: 502–516. 10.1007/978-3-642-33709-3_36

- LeCun Y., Bengio Y. (1995). “Convolutional networks for images, speech, and time series,” in The Handbook of Brain Theory and Neural Networks ed. Arbib M. A. (Cambridge, MA: MIT Press) 255–258.

- LeCun Y., Bengio Y., Hinton G. (2015). Deep learning. Nature 521 436–444. 10.1038/nature14539

- LeCun Y., Boser B., Denker J. S., Henderson D., Howard R. E., Hubbard W., et al. (1989). Backpropagation applied to handwritten zip code recognition. Neural Comput. 1 541–551. 10.1162/neco.1989.1.4.541

- Liu C., Yuen J., Torralba A. (2015). Sift flow: dense correspondence across scenes and its applications. IEEE Trans. Pattern Anal. Mach. Intell. 33 15–49. 10.1109/TPAMI.2010.147

- Loconsole G., Potere O., Boscia D., Altamura G., Djelouah K., Elbeaino T., et al. (2014). Detection of Xylella fastidiosa in olive trees by molecular and serological methods. J. Plant Pathol. 96 7–14.

- Luvisi A., Ampatzidis Y., De Bellis L. (2016). Plant pathology and Information Technology: opportunity for management of disease outbreak and applications in regulation frameworks. Sustainability 8:831 10.3390/su8080831

- Luvisi A., Nicolì F., De Bellis L. (2017). Sustainable management of plant quarantine pests: the case of olive quick decline syndrome. Sustainability 9:659 10.3390/su9040659

- Martelli G. P. (2016). The current status of the quick decline syndrome of olive in southern Italy. Phytoparasitica 44 1–10. 10.1007/s12600-015-0498-6

- Minsavage G. V., Thompson C. M., Hopkins D. L., Leite R. M. V. B. C., Stall R. E. (1994). Development of a polymerase chain reaction protocol for detection of Xylella fastidiosa in plant tissue. Phytopathology 84 456–461. 10.1094/Phyto-84-456

- Mohanty S. P., Hughes D., Salathé M. (2016). Using deep learning for image-based plant disease detection. Front. Plant Sci. 7:1419. 10.3389/fpls.2016.01419

- Munisami T., Ramsurn M., Kishnah S., Pudaruth S. (2015). Plant leaf recognition using shape features and colour histogram with K-nearest neighbour classifiers. Procedia Comput. Sci. 58 740–747. 10.1016/j.procs.2015.08.095

- Nilsback M. E., Zisserman A. (2008). “Automated flower classification over a large number of classes,” in Proceedings of the 6th Indian Conference on Computer Vision, Graphics and Image Processing Bhubaneswar: 722–729. 10.1109/ICVGIP.2008.47

- Pawara P., Okafor E., Surinta O., Schomaker L., Wiering M. (2017). “Comparing local descriptors and bags of visual words to deep convolutional neural networks for plant recognition,” in Proceedings of the International Conference on Pattern Recognition Applications and Methods Porto: 479–486. 10.5220/0006196204790486

- Rinaldi A., Cruz A. C. (2016). “Deep context injection for super-resolution,” in Proceedings of the International Conference on Image Processing, Computer Vision and Pattern Recognition Las Vegas, NV: 353–354.

- Sadeghi Z., Testolin A. (2017). Learning representation hierarchies by sharing visual features: a computational investigation of Persian character recognition with unsupervised deep learning. Cogn. Process. 18 273–284. 10.1007/s10339-017-0796-7

- Saponari M., Boscia D., Nigro F., Martelli G. P. (2013). Identification of DNA sequences related to Xylella fastidiosa in oleander, almond and olive trees exhibiting leaf scorch symptoms in Apulia (Southern Italy). J. Plant Pathol. 95 659–668. 10.4454/JPP.V95I3.035

- Simonyan K., Zisserman A. (2015). “Very deep convolutional networks for large-scale image recognition,” in Proceedings of the International Conference on Learning Representations San Diego, CA.

- Söderkvist O. J. O. (2001). Computer Vision Classification of Leaves from Swedish Trees. Master’s thesis, Linkoping University; Linkoping.

- Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., et al. (2015). “Going deeper with convolutions,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Boston, MA: 1–9. 10.1109/CVPR.2015.7298594

- Vapnik V. (2015). Learning using privileged information: similarity control and knowledge transfer. J. Mach. Learn. Res. 16 2023–2049.

- Vapnik V., Vashist A. (2009). A new learning paradigm: learning using privileged information. Neural Netw. 22 544–557. 10.1016/j.neunet.2009.06.042

- Wang G., Zhang Y., Fei-Fei L. (2006). “Using dependent regions for object categorization in a generative framework,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition New York, NY: 1597–1604. 10.1109/CVPR.2006.324

- Wiegand C. L., Richardson A. J., Jackson R. D., Pinter P. J., Aase J. K., Smika D. E., et al. (1986). Development of agrometeorological crop model inputs from remotely sensed information. IEEE Trans. Geosci. Remote Sens. 24 90–98. 10.1109/TGRS.1986.289689

- Xiao X. Y., Hu R., Zhang S. W., Wang X. F. (2010). “HOG-based approach for leaf classification,” in Proceedings of the Advanced Intelligent Computing Theories and Applications Changsha: 149–155. 10.1007/978-3-642-14932-0_19

- Yaseen T., Drago S., Valentini F., Elbeaino T., Stampone G., Digiaro M., et al. (2015). On-site detection of Xylella fastidiosa in host plants and in “spy insects” using the real-time loop-mediated isothermal amplification method. Phytopathol. Mediterr. 54 488–496.

- Yosinski J., Clune J., Bengio Y., Lipson H. (2014). How transferable are features in deep neural networks? Adv. Neural Inf. Process Syst. 27 1–9.

- Zhenjiang M. (2000). Zernike moment-based image shape analysis and its application. Pattern Recogn. Lett. 21 169–177. 10.1016/S0167-8655(99)00144-0