An entire industry is booming on the promise that electronic health predictive analytics (e-HPA) can improve surgical outcomes by, for example, predicting whether a procedure is likely to benefit a patient compared with alternative treatments, or whether a patient will experience short- or long-term complications.1 The hope is that surgeons can use model predictions to improve the continuum of surgical care, including patient selection, informed consent, shared decision making, preoperative risk modification, and perioperative management, with the ultimate goal of producing better outcomes. Although much effort is expended developing and selling predictive models, almost no attention is paid to rigorously testing whether patients treated in health care settings that implement e-HPA have better outcomes than those treated in the absence of e-HPA. Therefore, practicing surgeons need to be skeptical regarding the inherent benefits of e-HPA, as should model developers, implementers, and patients. Before buying or using an e-HPA system, all stakeholders should insist on research that rigorously evaluates not only the accuracy of the predictive models, but also their effects on health outcomes when used in particular ways in real clinical settings. Without evidence of effectiveness and a careful examination of unintended consequences, implementation of e-HPA systems may produce more risks and/or costs than benefits.

Before describing the many ways even accurate and technically integrated e-HPA can fail to produce benefits to patients, we should review some characteristics of good predictive models, without which there is no hope. Good predictive models need to include important and modifiable outcomes, be adequately accurate (eg, sensitive, specific) given the clinical context, only include inputs that will be available at the time of decision or possible intervention, be cross-validated in data not used to develop the model, and be implemented in a usable and accessible technology platform. Moreover, model developers should be able and willing to transparently share the details of the model development methodology, model performance metrics, and model coefficients to facilitate replication, comparisons with alternative models, and refinements. The Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) standards and the recent Consensus Statement on E-HPA elaborate these points.2,3 Unfortunately, a disappointingly small number of publicly available predictive models meet these criteria.4 However, developing good predictive models that meet these criteria is just the beginning of successful implementation and perhaps easier than the downstream challenges to which we now turn.

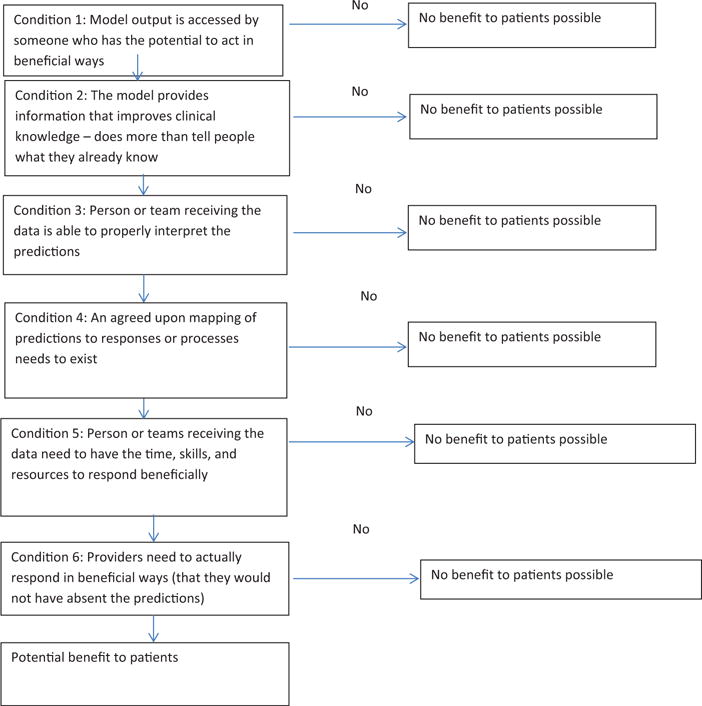

In the rare cases when a particular implementation of e-HPA is evaluated and shown to produce real improvements in treatment quality and outcomes,5 it is generally underappreciated how many intervening steps had to happen to translate model predictions into improved health. In prominent cases where the anticipated benefits of e-HPA failed to materialize,6 people are left wondering what went wrong along the pathway between accurate predictions and patient outcomes. Figure 1 represents a framework to better understand the conditions and events that must occur to enable good predictive models to create real value and benefit in the surgical context. The framework has at least 2 purposes. First, the framework is designed to sensitize all stakeholders, especially practicing surgeons, to the many implementation challenges that follow model development and technical integration. Second, the framework provides guidance to researchers and administrators who seek to rigorously test the clinical impacts of e-HPA, not only to estimate overall impacts, but also to identify processes that might have broken down if no effects are observed.

FIGURE 1. Framework to Guide Use, Implementation, and Evaluation of Accurate Surgical Predictive Models.

Condition 1 is that model outputs need to be accessed by someone who has the potential to act in beneficial ways. Prediction models are not helpful if nobody knows about them. Placing a risk calculator on a SharePoint Site or even as a pop-up in the electronic medical record does not ensure that potential users know of the model’s existence or will interact with it. Putting systems in place to track interactions with the model (eg, who, where, for which patients) is critical to understanding downstream clinical impacts or lack thereof.
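The kind of interaction tracking described for Condition 1 can be sketched in a few lines. The example below is a minimal illustration, not a description of any real e-HPA product: the `ModelAccess` record, its field names, and the two summary functions are hypothetical, and a real system would draw these events from the electronic medical record's audit logs.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class ModelAccess:
    """One hypothetical log entry: a user viewed a prediction."""
    user_role: str   # who (eg, "surgeon", "nurse")
    location: str    # where (eg, "preop_clinic")
    patient_id: str  # for which patient


def access_coverage(log: List[ModelAccess], eligible_patient_ids: Iterable[str]) -> float:
    """Fraction of eligible patients whose prediction was viewed at least once."""
    eligible = set(eligible_patient_ids)
    if not eligible:
        raise ValueError("eligible_patient_ids must not be empty")
    viewed = {entry.patient_id for entry in log} & eligible
    return len(viewed) / len(eligible)


def views_by_role(log: List[ModelAccess]) -> Counter:
    """Number of logged views per user role."""
    return Counter(entry.user_role for entry in log)


# Made-up log: two of four eligible patients had their prediction viewed.
log = [
    ModelAccess("surgeon", "preop_clinic", "p1"),
    ModelAccess("nurse", "preop_clinic", "p1"),
    ModelAccess("surgeon", "ward", "p2"),
]
print(access_coverage(log, ["p1", "p2", "p3", "p4"]))  # 0.5
print(views_by_role(log))
```

A low coverage figure would suggest that a null result in an outcome evaluation may reflect a failure of access rather than a failure of the model.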

Condition 2 is that models must produce information not already known to users. Thus, models can be accurate, but unhelpful unless they provide new insights. The best models accurately predict events that were not expected, accurately predict the absence of expected events, or help clinical staff refine the probability of events in patients with less certain risk, perhaps for informed consent or shared decision making.

Condition 3 is that model outputs need to be received by someone who understands how to interpret the information. Humans are notoriously bad at understanding statistical information. Many end users of e-HPA systems struggle with basic epidemiological and measurement concepts (eg, sensitivity, specificity, and confidence intervals). Packaging and presenting predictions in a way that fosters the intended interpretations is a complicated and important subfield of inquiry. Ensuring that end users understand what the predictions mean, and do not mean, is not a trivial problem.

Even if we could magically impart statistical and epidemiological fluency to end users, in many clinical contexts the jury is out regarding how to translate risk information into clinical action. If there exists an agreed-upon mapping of predictions to specific actions or processes (Condition 4), then recommendations can be generated by the e-HPA system without confusing people with predicted probabilities and confidence intervals. Unfortunately, there are many situations where scientifically based mappings do not exist. For example, how should the orthopedic surgeon respond to information that a candidate for total knee arthroplasty is at somewhat elevated risk of serious complications? Crudely, the impulse may be to delay or deny elective surgery to patients with elevated risk for complications. This may or may not be in the patient’s best interest depending on many measurement factors (eg, the quality and precision of the predictive model, false positive rate) and patient factors (eg, preoperative functioning, expected benefit, preferences, and whether the risk factors are modifiable). In other cases, the proper response to risk information may be better established and defined. But the value of a predictive model is tightly tied to the quality of our knowledge regarding how the information can be translated into beneficial actions without unintended consequences. To put this general problem in a more familiar context, health care systems should not screen for problems unless the gathered information is interpretable and actionable. Similarly, unless predictive models can produce interpretable data that can be mapped to scientifically supported responses (eg, delay surgery) or processes (eg, shared decision making), the likelihood of benefit decreases dramatically.
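To make the false positive concern concrete, the short sketch below applies Bayes' rule to compute the share of flagged patients who would actually go on to have a complication. The sensitivity, specificity, and complication rate are hypothetical numbers chosen for illustration only; they do not come from any published surgical risk model.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Probability that a patient flagged as high risk actually has the complication."""
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    flagged = true_positives + false_positives
    if flagged == 0.0:
        raise ValueError("no patients would be flagged with these inputs")
    return true_positives / flagged


# Hypothetical inputs: 80% sensitivity, 80% specificity, 5% complication rate.
ppv = positive_predictive_value(sensitivity=0.80, specificity=0.80, prevalence=0.05)
print(f"{ppv:.1%}")  # 17.4%
```

Under these assumed numbers, roughly 5 of every 6 patients flagged as high risk would not have had the complication, which is why a blanket policy of delaying or denying surgery to flagged patients could harm more people than it helps.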

Penultimately, even if people know how to respond, they need the time, skills, and resources to do so (Condition 5). Finally, providers need to actually respond (Condition 6). Knowing what to do and how to do it, even if time and resources are available, does not necessarily produce action. Perhaps there are too many competing demands when the model produces alerts. Perhaps too many false alarms have blunted motivation to act. Recipients of predictions need to take action; otherwise no benefits to patients will accrue.

The six conditions of the framework highlight the many ways even good surgical risk prediction models can fail to have the intended impact on patient outcomes. Instead of assuming that accurate predictions will magically result in improved health outcomes, it is critical to conduct rigorous evaluations (eg, cluster randomized trials, interrupted time series designs) to test if e-HPA systems produce better outcomes. Awareness of the pathway from prediction to patient outcomes should inform e-HPA implementation and evaluation. If each condition within the framework is measured within an evaluation design, then a postmortem of null results is more likely to find the point(s) of derailment. Predictive analytics hold promise for improving patient health, but unless each condition in the framework is met, this promise will remain unfulfilled.
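One way to picture such a postmortem is as a funnel across the six conditions. The sketch below is an illustration under stated assumptions: the field names are hypothetical labels for the six conditions, each record stands for one prediction delivered during an evaluation, the data are made up, and the conditions are treated as strictly sequential, which is a simplification of real clinical workflows.

```python
from typing import Dict, List, Tuple

# Hypothetical field names, one per condition in the framework, in order.
CONDITIONS = [
    "accessed",             # Condition 1
    "new_information",      # Condition 2
    "understood",           # Condition 3
    "action_mapped",        # Condition 4
    "resources_available",  # Condition 5
    "acted",                # Condition 6
]


def condition_funnel(records: List[Dict[str, bool]]) -> List[Tuple[str, int]]:
    """Count predictions that satisfy every condition up to and including each step."""
    remaining = list(records)
    funnel = []
    for condition in CONDITIONS:
        remaining = [r for r in remaining if r.get(condition, False)]
        funnel.append((condition, len(remaining)))
    return funnel


def largest_drop(funnel: List[Tuple[str, int]], total: int) -> Tuple[str, int]:
    """Return the condition with the biggest loss and the number of predictions lost there."""
    previous = total
    worst_name, worst_loss = "", -1
    for name, count in funnel:
        loss = previous - count
        if loss > worst_loss:
            worst_name, worst_loss = name, loss
        previous = count
    return worst_name, worst_loss


# Made-up evaluation data: 10 predictions delivered.
all_met = {c: True for c in CONDITIONS}
not_understood = {**all_met, "understood": False}
never_accessed = {c: False for c in CONDITIONS}
records = [all_met] * 2 + [not_understood] * 5 + [never_accessed] * 3

funnel = condition_funnel(records)
print(funnel)
print(largest_drop(funnel, total=len(records)))  # ('understood', 5)
```

In this invented example, most of the loss occurs at interpretation (Condition 3), which would point an evaluator toward how predictions are presented rather than toward model accuracy.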

Footnotes

Disclosure: The author declares no conflicts of interest.

References

- 1. Parikh RB, Kakad M, Bates DW. Integrating Predictive Analytics Into High-Value Care: The Dawn of Precision Delivery. JAMA. 2016;315:651–652. doi: 10.1001/jama.2015.19417.

- 2. Collins GS, Reitsma JB, Altman DG, et al. Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD). Ann Intern Med. 2015;162:735–736. doi: 10.7326/L15-5093-2.

- 3. Amarasingham R, Audet AM, Bates DW, et al. Consensus statement on electronic health predictive analytics: a guiding framework to address challenges. EGEMS (Wash DC). 2016;4:1163. doi: 10.13063/2327-9214.1163.

- 4. Bilimoria KY, Liu Y, Paruch JL, et al. Development and evaluation of the universal ACS NSQIP surgical risk calculator: a decision aid and informed consent tool for patients and surgeons. J Am Coll Surg. 2013;217:833–842. e831–833. doi: 10.1016/j.jamcollsurg.2013.07.385.

- 5. Wessler BS, Lai Yh L, Kramer W, et al. Clinical prediction models for cardiovascular disease: Tufts predictive analytics and comparative effectiveness clinical prediction model database. Circ Cardiovasc Qual Outcomes. 2015;8:368–375. doi: 10.1161/CIRCOUTCOMES.115.001693.

- 6. Connors A, et al. A controlled trial to improve care for seriously ill hospitalized patients. The study to understand prognoses and preferences for outcomes and risks of treatments (SUPPORT). The SUPPORT Principal Investigators. JAMA. 1995;274:1591–1598.