Abstract

Auditory brainstem responses (ABRs) and their steady-state counterpart (subcortical steady-state responses, SSSRs) are generally thought to be insensitive to cognitive demands. However, a handful of studies report that SSSRs are modulated by the subject’s focus of attention, either towards or away from an auditory stimulus. Here, we explored whether attentional focus affects the envelope-following response (EFR), a particular kind of SSSR, and, if so, whether the effects depend on which sound elements in a sound mixture the subject is attending (selective auditory attentional modulation) or on which sensory modality the subject is attending (inter-modal attentional modulation). We compared the strength of EFR-stimulus phase locking in human listeners under various tasks: listening to a monaural stimulus, selectively attending to a particular ear during dichotic stimulus presentation, and attending to visual stimuli while ignoring auditory inputs. We observed no systematic changes in the EFR across experimental manipulations, even though cortical EEG revealed attention-related modulations of alpha activity during the task. We conclude that attentional effects, if any, on human subcortical representation of sounds cannot be observed robustly using EFRs.

Keywords: Frequency-following response, FFR, Envelope-following response, Attention, Auditory processing

1. Introduction

The ability to selectively attend to a particular talker in “cocktail party” situations depends on the fidelity of sensory encoding throughout the auditory pathway (Shinn-Cunningham and Best, 2008). In human listeners, numerous electroencephalography (EEG), magnetoencephalography (MEG), and electrocorticography (ECoG) studies over the past four decades have shown that selective attention (i.e., “selecting” and focusing on a particular sound source from among multiple sound sources; Shinn-Cunningham, 2008) modulates how sound is encoded in primary auditory cortex. The P1-N1-P2 complex in the auditory-evoked potential (AEP), a stereotyped response occurring approximately 100 ms after sound onset that localizes to auditory cortex (Scherg et al., 1989), is enhanced when a listener actively pays attention to the evoking sound (e.g., Hillyard et al., 1973). Conversely, this response is suppressed when sounds are ignored in dichotic listening tasks (Sussman et al., 2005; Bidet-Caulet et al., 2007; Choi et al., 2013). The auditory steady-state response (ASSR), also originating in auditory cortex, has been reported to behave similarly during selective listening (Bharadwaj et al., 2014). “Inter-modal” selective attention (e.g., paying attention to visual stimuli while ignoring simultaneously presented auditory stimuli) has also been shown to modulate the strength of cortical auditory responses. Specifically, the magnitudes of both the AEP (Hackley et al., 1990; Choi et al., 2013) and the ASSR (Wittekindt et al., 2014) have been observed to increase when subjects are actively listening for an auditory stimulus compared to when they perform a visual task and ignore the same auditory inputs.

In contrast to the well-described effects of attention on auditory-related neuroelectrical responses originating in the cortex, it is less clear whether selective listening or inter-modal attentional shifts modulate responses originating in subcortical auditory structures. At least one previous study has suggested that attentional modulation of phase-locked neural activity may not occur at processing stages below auditory cortex (Gutschalk et al., 2008). However, there exist corticofugal projections from auditory cortex to subcortical structures that have the potential to modulate the function of lower nuclei (see Winer, 2006 for a concise review). What remains unclear is whether the actions and specific anatomical targets of these projections are specific enough to support selective attention, or even whether these projections to lower nuclei actively sculpt neural processing based on task demands at all.

In animals, efferent projections from auditory cortex play a role in the long-term plasticity of the neural firing properties of a number of different subcortical structures, including outer hair cells (Xiao and Suga, 2002), neurons in the inferior colliculus (IC; Yan and Suga, 1996, 1999; Bajo et al., 2010), and possibly other subcortical processing stages as well. Similar long-term changes have also been reported in humans, seen in modifications of subcortical steady-state responses (SSSRs) obtained from the brainstem (e.g., Skoe and Kraus, 2010b). Here, we focus not on long-term plasticity, but rather on the question of whether cortical feedback shapes subcortical processing to aid performance “online,” flexibly and in an immediate, task-dependent manner. Some studies in awake, behaving animals suggest that this occurs. Attention to visual stimuli was found to reduce the amplitude of transient evoked responses elicited by broadband auditory stimuli in the cochlear nucleus (Hernandez-Peon et al., 1956; Oatman, 1971, 1976) and the auditory nerve (Oatman, 1971, 1976), as well as of transient (Oatman and Anderson, 1977) and steady-state (Oatman and Anderson, 1980) responses to pure tones at a variety of frequencies in the cochlear nucleus of awake cats. Cochlear sensitivity, as measured by compound action potentials obtained from a round-window electrode in the chinchilla, was reduced when animals deployed visual attention compared to when they performed an auditory-only task (Delano et al., 2007). The spectrotemporal tuning of neurons in the IC has also been observed to change during detection tasks relative to passive listening (Slee and David, 2015). Yet despite these results from animal studies, there are no consistent reports of attention-related modulation of subcortical neuroelectrical activity in human listeners.

Correlates of subcortical auditory function can be observed non-invasively in humans by measuring compound neuroelectrical activity at scalp locations in response to auditory stimulation. The best known among these measures is the auditory brainstem response (ABR; Sohmer and Feinmesser, 1967; Jewett and Williston, 1971; Picton et al., 1981), a stereotypical response occurring within roughly the first 10 ms after presentation of a brief stimulus. Individual ABR peaks correspond to neural responses from different ascending stages of the auditory pathway, from the auditory nerve (wave I) through the lemnisci and the inferior colliculus (IC, wave V; Melcher and Kiang, 1996; Scherg and von Cramon, 1985). Neurons in the structures from which the ABR arises can also respond in a phase-locked manner to periodic stimulation, resulting in a measured potential known as the subcortical steady-state response (SSSR; also called the “frequency-following response”; Worden and Marsh, 1968; Skoe and Kraus, 2010a). By combining SSSRs in response to periodic stimuli presented in opposite polarities, one can separate responses that are phase-locked to the temporal fine structure (TFS) of the stimulus from those that are phase-locked to its envelope (Aiken and Picton, 2008). The response that is phase-locked to the relatively slowly varying stimulus envelope has been called the “amplitude-modulated following response” (AMFR; Kuwada et al., 2002) or the “envelope-following response” (EFR; Dolphin and Mountain, 1992); the latter term is used throughout the rest of this paper. The strength of the EFR, measured at the fundamental frequency of the envelope and its harmonics via spectral estimation techniques, reflects the summed activity of many peripheral auditory frequency channels and possibly of several subcortical nuclei (Chandrasekaran and Kraus, 2010; Shinn-Cunningham et al., 2013; Zhu et al., 2013).
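The polarity manipulation described above can be illustrated with a minimal sketch. It assumes an idealized model (our assumption, not a claim about real recordings) in which envelope-locked activity is identical for the two stimulus polarities while TFS-locked activity inverts with the stimulus. Under that model, half the sum of the two averaged responses isolates the envelope-following component and half the difference isolates the TFS-locked component. The function name `split_envelope_tfs` is hypothetical.

```python
import numpy as np

# Hypothetical helper (illustrative only), assuming an idealized polarity model.
def split_envelope_tfs(resp_pos, resp_neg):
    """Split averaged responses to a stimulus (resp_pos) and its polarity-inverted
    copy (resp_neg) into envelope-locked and TFS-locked components."""
    resp_pos = np.asarray(resp_pos, dtype=float)
    resp_neg = np.asarray(resp_neg, dtype=float)
    efr = 0.5 * (resp_pos + resp_neg)  # sign-flipping TFS terms cancel in the sum
    tfs = 0.5 * (resp_pos - resp_neg)  # polarity-invariant envelope terms cancel in the difference
    return efr, tfs

# Synthetic check: a 100 Hz envelope-locked term plus a 1 kHz TFS-locked term.
fs = 10_000
t = np.arange(0, 0.5, 1 / fs)
env_part = np.cos(2 * np.pi * 100 * t)
tfs_part = 0.3 * np.sin(2 * np.pi * 1000 * t)
efr, tfs = split_envelope_tfs(env_part + tfs_part, env_part - tfs_part)
print(np.allclose(efr, env_part), np.allclose(tfs, tfs_part))  # True True
```

In real data the two components are not perfectly separable this way (for example, rectification in the cochlea gives envelope-like energy in both polarities), so the sum and difference are best read as emphasizing, rather than purely isolating, each component.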

The prevailing practice within the audiology and auditory research community is to obtain ABR/SSSR recordings while subjects watch a silent movie (Skoe and Kraus, 2010a) or even while they are sedated or sleeping, with the latter method routinely used when conducting clinical ABR measurements (Burkard and McNeary, 2009; Sininger and Hyde, 2009). Such practices suggest that an individual’s cognitive state does not pose a concern to auditory researchers and clinicians interested in quantifying peripheral neural activity. The literature on the modulation of ABRs by attentional focus supports such practices, since the majority of studies examining the effect of attention on the ABR in human listeners have failed to find any effects of either inter-modal attention (e.g. Connolly et al., 1989; Gregory et al., 1989; Hackley et al., 1990) or selective auditory attention (e.g., Picton and Hillyard, 1974; Woldorff et al., 1987; Gregory et al., 1989). Similarly, EFRs specifically have been found to be insensitive to sleep and even general anesthesia (Cohen et al., 1991; Lins et al., 1996; Lins and Picton, 1995).

However, examination of the literature reveals several papers that appear to show the opposite, that ABRs and SSSRs are sensitive to a listener’s attentive state. Decreased amplitude and increased latency of ABR wave I and wave V have been reported when subjects attended to a visual stimulus compared to when there was no visual stimulus (Lukas, 1980; Brix, 1984). Several studies by Galbraith and colleagues reported that the amplitudes of SSSRs are modulated by both inter-modal attention (Galbraith et al., 2003) and selective auditory attention (Galbraith and Arroyo, 1993; Galbraith and Doan, 1995; Galbraith et al., 1998). More recently, Hairston and colleagues (2003) found that the SSSR amplitude to task-irrelevant tones decreased during an auditory task, but did not change during a visual task, potentially indicating a subcortical suppression of irrelevant stimuli in challenging listening situations. Finally, Lehmann and Schönwiesner (2014) reported changes in the EFR as a result of selective attention.

Given the presence of anatomical projections capable of supporting top-down modulation of peripheral structures, as well as the reports of top-down modulation observed in animal studies, the inconsistencies in the literature describing subcortical attentional effects in human scalp-recorded potentials are puzzling and deserve further consideration. This is especially true given the observation of subcortical attentional effects using other types of functional measurements of subcortical auditory processing, including otoacoustic emissions (OAEs; Giard et al., 1994; Maison et al., 2001; Wittekindt et al., 2014) and fMRI BOLD responses in IC (Rinne et al., 2008).

Here, we conducted two experiments designed to test the hypothesis that attention modulates subcortical neuroelectrical activity. Since cortical feedback could target any number of subcortical auditory processing sites, including different tonotopic channels, the likelihood of observing such feedback effects seems higher when presenting broadband periodic stimuli and quantifying neural activity via EFRs than with other stimulus-response combinations that could be used to assay subcortical neuroelectrical activity. As such, auditory stimuli in both experiments consisted of spoken digits, and the subcortical neural responses to these stimuli were quantified using the complex-domain phase-locking value (PLV; Lachaux et al., 1999; Bharadwaj and Shinn-Cunningham, 2014) derived from the EFR. Auditory stimuli were presented either monaurally or dichotically (one to each ear); during dichotic presentation, digits presented to each ear had asynchronous onsets and different fundamental frequencies, allowing the neural responses to attended and ignored digits to be resolved in time and frequency. Furthermore, to give selective auditory attentional feedback the “best shot” at independently modulating responses to attended and ignored digits, auditory stimuli were processed such that they had non-overlapping frequency content spanning a 6 kHz frequency range (via click-train “vocoding”; see Fig. 1 and Section 4). Our overall hypothesis was that selective attention to a particular auditory stream would enhance EFR phase locking to that stream when it was attended and/or diminish EFR phase locking to it when it was ignored.
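For readers unfamiliar with the PLV, a minimal single-channel sketch follows. For every FFT bin it computes the length of the mean unit phasor across epochs, so values near 1 indicate a consistent phase relative to stimulus onset and values near 0 indicate random phase. This is a simplification: the complex-domain PLV cited above also combines information across EEG channels, which the hypothetical `plv_spectrum` function below does not do, and the synthetic data are purely illustrative.

```python
import numpy as np

# Hypothetical helper (illustrative only): single-channel PLV, no channel combination.
def plv_spectrum(epochs, fs):
    """Phase-locking value per frequency bin.

    epochs: array of shape (n_epochs, n_samples), each epoch time-locked to a
    stimulus onset. Returns (freqs, plv)."""
    epochs = np.asarray(epochs, dtype=float)
    spectra = np.fft.rfft(epochs, axis=1)
    mag = np.abs(spectra)
    unit = spectra / np.where(mag == 0, 1.0, mag)  # keep phase only (guard against /0)
    plv = np.abs(unit.mean(axis=0))                # length of mean phasor across epochs
    freqs = np.fft.rfftfreq(epochs.shape[1], d=1.0 / fs)
    return freqs, plv

# Synthetic example: 100 one-second epochs, each a 97 Hz component plus noise.
rng = np.random.default_rng(0)
fs = 4000
t = np.arange(fs) / fs                                   # 1 s epochs -> 1 Hz bins
epochs = 0.5 * np.sin(2 * np.pi * 97 * t) + rng.normal(size=(100, fs))
freqs, plv = plv_spectrum(epochs, fs)
```

Because only phase enters the average, a weak but consistently timed component can yield a PLV near 1, while noise bins hover near the chance level of roughly 1/sqrt(number of epochs).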

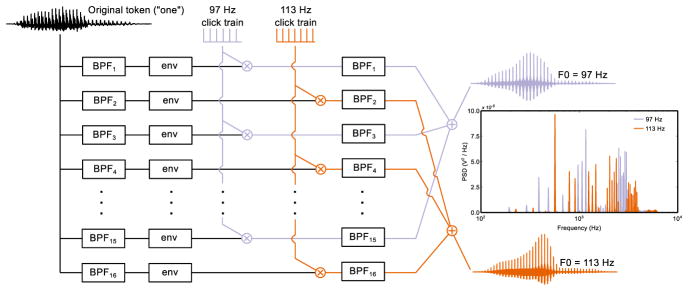

Fig. 1.

Example of the vocoding procedure for the speech token “one”. The original natural speech token was filtered into 16 frequency bands using 1/3-octave-wide filters. The filter output from odd-numbered bands was half-wave rectified and low-pass filtered, multiplied with a click train at 97 Hz, and filtered once more using the same bandpass filter. The outputs from this final stage were summed to obtain the final stimulus. Construction of the 113 Hz stimulus was similar, but used even-numbered bands and a click train at 113 Hz. The vocoding procedure resulted in speech streams with a fundamental frequency of 97 Hz or 113 Hz and with minimally overlapping power spectral density functions (inset, right). On each dichotic experimental trial, a digit stream with one of the two click rates was presented to one ear, and another digit stream with the other click rate was presented to the other ear.
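The vocoding steps in this caption can be sketched as follows. Several parameters are not given here and are assumptions chosen only for illustration: the lowest band center (200 Hz), the Butterworth filter order, the 50 Hz envelope cutoff, zero-phase filtering, and the sampling rate. The function names `click_train` and `click_vocode` are hypothetical, and noise stands in for a recorded speech token.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

# Hypothetical helpers for illustration; band layout, filter order, and
# envelope cutoff below are assumptions, not the published parameters.

def click_train(rate_hz, n_samples, fs):
    """Unit impulses at (rounded) multiples of fs / rate_hz samples."""
    x = np.zeros(n_samples)
    idx = np.round(np.arange(0, n_samples, fs / rate_hz)).astype(int)
    x[idx[idx < n_samples]] = 1.0
    return x

def click_vocode(speech, fs, rate_hz, use_odd_bands, n_bands=16,
                 f_lowest=200.0, env_cutoff=50.0, order=4):
    """Click-train vocoder using every other 1/3-octave band."""
    speech = np.asarray(speech, dtype=float)
    centers = f_lowest * 2.0 ** (np.arange(n_bands) / 3.0)  # 1/3-octave spacing
    # Odd-numbered bands (1, 3, 5, ...) are 0-based indices 0, 2, 4, ...
    selected = centers[0::2] if use_odd_bands else centers[1::2]
    clicks = click_train(rate_hz, speech.size, fs)
    env_sos = butter(order, env_cutoff, btype="lowpass", fs=fs, output="sos")
    out = np.zeros_like(speech)
    for fc in selected:
        edges = [fc * 2 ** (-1 / 6), fc * 2 ** (1 / 6)]     # 1/3-octave band edges
        band_sos = butter(order, edges, btype="bandpass", fs=fs, output="sos")
        band = sosfiltfilt(band_sos, speech)
        env = sosfiltfilt(env_sos, np.maximum(band, 0.0))  # half-wave rectify, low-pass
        out += sosfiltfilt(band_sos, env * clicks)          # envelope on clicks, refilter
    return out

# Example: vocode 0.5 s of noise (a stand-in for a speech token) at each click rate.
fs = 22050
rng = np.random.default_rng(1)
token = rng.normal(size=fs // 2)
stream_97 = click_vocode(token, fs, 97.0, use_odd_bands=True)
stream_113 = click_vocode(token, fs, 113.0, use_odd_bands=False)
```

Refiltering after multiplying by the click train matters: the product of an envelope and a click train is broadband, and only the second bandpass stage confines each stream to its own set of interleaved bands.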

2. Results

2.1. Experiment 1

In the first experiment, subjects were asked to listen to streams of spoken digits and respond whenever two consecutive, increasing digits were heard in the attended ear (e.g., “3” followed by “4”); this task was performed either in quiet (i.e., during monaural presentation) or while ignoring digits presented to the other ear (i.e., during selective listening in a dichotic setting). EEG was recorded during the task for the purpose of quantifying EFR-stimulus phase locking.

2.1.1. Behavioral results

Analysis of behavioral performance data indicated that subjects performed the task as instructed, and that they performed similarly regardless of whether they were presented with a monaural or dichotic stimulus. The mean d′ when subjects were attending to one sound stream while ignoring another (in the attended/dichotic condition) was 3.18 (range: 2.41–4.16). The mean d′ when subjects were presented with a single sound stream (in the attended/monaural condition) was 3.16 (1.88–4.63). This difference between conditions was not statistically significant [two-tailed, paired t-test; t(8)=0.09, p=0.94]. Examination of the natural logarithm of the bias parameter, log(β), suggested that in each condition subjects were more likely overall to categorize a digit in the attended ear as a non-target. Mean log(β) values were 4.47 (2.82–5.96) for the dichotic condition and 3.93 (2.17–5.29) for the monaural condition; this difference was not statistically significant [two-tailed, paired t-test; t(8)=1.82, p=0.11].
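The sensitivity and bias measures above come from equal-variance Gaussian signal detection theory: d′ = z(H) − z(F) and ln β = [z(F)² − z(H)²]/2, where H and F are the hit and false-alarm rates and z is the inverse standard normal CDF. A positive ln β indicates a conservative criterion, i.e., a tendency to call items non-targets. The sketch below assumes a log-linear correction (adding 0.5 to each count) to keep rates away from 0 and 1; whether and how the authors corrected extreme rates is not stated in this section, and the function name and counts are hypothetical.

```python
from scipy.stats import norm

# Hypothetical helper (illustrative only); the log-linear correction is an assumption.
def dprime_lnbeta(hits, misses, false_alarms, correct_rejections):
    """Equal-variance SDT sensitivity (d') and natural-log bias (ln beta)."""
    # Log-linear correction: avoids infinite z-scores at rates of exactly 0 or 1.
    h = (hits + 0.5) / (hits + misses + 1.0)
    f = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    zh, zf = norm.ppf(h), norm.ppf(f)
    d_prime = zh - zf
    ln_beta = (zf ** 2 - zh ** 2) / 2.0  # ln of pdf(zh)/pdf(zf); > 0 means conservative
    return d_prime, ln_beta

# Hypothetical counts: 45/50 targets detected, 2 false alarms in 200 non-targets.
d, b = dprime_lnbeta(45, 5, 2, 198)
```

With targets rare and false alarms very rare, as in a digit-pair detection task, large positive ln β values like those reported above are expected even for listeners with high d′.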

2.1.2. Phase-locking results

Grand-averaged plots of PLV as a function of frequency are shown in Fig. 2, with PLV z-scores for individual subjects at the fundamental frequency of each stimulus shown in Fig. 3. Regardless of which stimulus was being attended (97 Hz or 113 Hz click frequency), there was significant phase locking (i.e., z > 1.64) to the fundamental frequency of each of the two audio streams. Responses to higher harmonics were near the noise floor for almost all subjects. We also observed significant phase locking to odd harmonics of 60 Hz due to line noise. Because we did not jitter the digit onsets within a stream, digits recurred at a regular 2 Hz rate, so the line noise was at the same phase at the onset of each digit token within a single trial. The regular across-stream timing also produced a peak at the opposite click-train frequency in epochs from dichotic trials (i.e., a peak at 97 Hz in the epochs time-locked to 113 Hz digit onsets, and a peak at 113 Hz in the epochs time-locked to 97 Hz digit onsets).
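The phase-alignment argument above can be checked with a few lines of arithmetic. A 2 Hz digit rate places onsets 0.5 s apart, and 60 Hz × 0.5 s = 30 whole cycles, so line noise has the same phase at every onset and its PLV across epochs is 1. The sketch below, with a hypothetical `onset_plv` helper, compares that regular case with onsets jittered uniformly over 100 ms; the 100 ms jitter range is an assumption for illustration, not the value used in Experiment 2.

```python
import numpy as np

# Hypothetical helper (illustrative only).
def onset_plv(freq_hz, onsets_s):
    """PLV of a fixed sinusoid (phase 0 at t = 0) sampled at each epoch onset.

    The sinusoid's phase at an epoch starting at t0 is 2*pi*f*t0 (mod 2*pi)."""
    phases = 2 * np.pi * freq_hz * np.asarray(onsets_s, dtype=float)
    return np.abs(np.mean(np.exp(1j * phases)))

regular = np.arange(100) * 0.5                       # digits every 0.5 s (2 Hz)
rng = np.random.default_rng(0)
jittered = regular + rng.uniform(0.0, 0.1, size=regular.size)  # assumed 0-100 ms jitter

plv_regular = onset_plv(60.0, regular)    # ~1: 30 whole 60 Hz cycles between onsets
plv_jittered = onset_plv(60.0, jittered)  # small: jitter spans 6 full 60 Hz cycles
```

The same reasoning applies to the competing stream: any component whose phase is fixed relative to the analysis epochs, whether line noise or the other ear's click train, will show up as a PLV peak unless onset timing is randomized.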

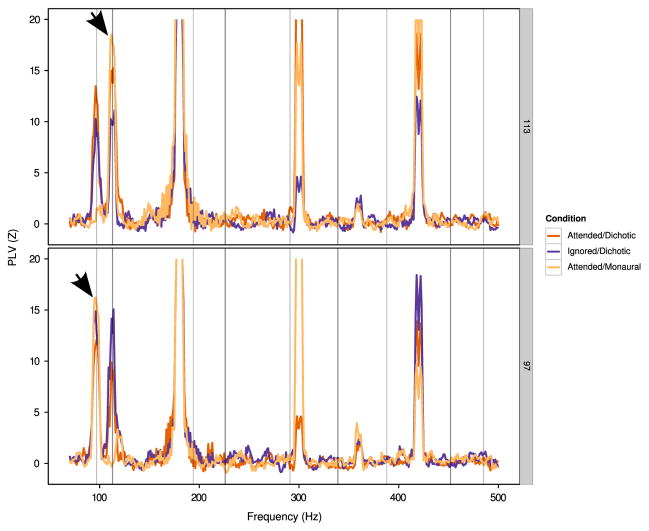

Fig. 2.

Average PLV z-score as a function of frequency in Experiment 1. Due to the high z-scores at odd multiples of 60 Hz (20 < z < 40; see text for explanation), the y-axes are truncated to the range of interest around the stimulus fundamental frequencies. Top row: PLV (z-score) as a function of frequency for 113 Hz stimuli. Bottom row: PLV (z-score) as a function of frequency for 97 Hz stimuli. Integer multiples of 97 Hz and 113 Hz are indicated by vertical lines. Arrows mark the peaks for which individual-subject data are shown in Fig. 3. The opposite-frequency peaks (i.e., the peak at 97 Hz in the top plot and the peak at 113 Hz in the bottom plot) arise from the lack of jitter in digit onset timing; such peaks were not present in Experiment 2 (see Fig. 4 and text for details).

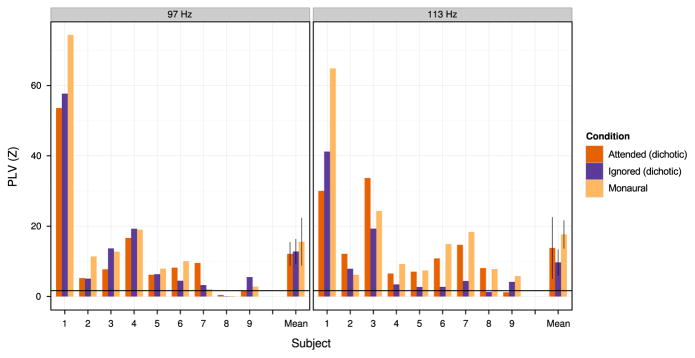

Fig. 3.

PLV z-scores at the fundamental frequency of each stimulus, shown for individual subjects in Experiment 1. Left column: Data for epochs corresponding to 97 Hz stimulus onsets (PLV z-score at 97 Hz). Right column: Data for epochs corresponding to 113 Hz stimulus onsets (PLV z-score at 113 Hz). The horizontal line indicates the 95th percentile of the standardized noise distribution. Error bars represent 95% confidence intervals about the mean with a within-subject correction applied (Morey, 2008; Chang, 2015).

Visual comparison of z-scores across conditions in Fig. 3 reveals no consistent effects of attention on phase locking. Although phase locking to the 113 Hz stimuli tended to be higher at 113 Hz for attended conditions compared to the ignored condition (Fig. 3, right; red and yellow bars tend to be higher than blue bars), the effect was small; moreover, no similar trend was observed for the 97 Hz stimuli (Fig. 3, left; no clear ordering to red, blue, and yellow bars across subjects).

An ANOVA was conducted on PLV z-scores, with experimental condition (attend during dichotic presentation, ignore during dichotic presentation, “attend” during monaural presentation), stimulus carrier frequency (i.e., click rate; 97 Hz or 113 Hz), and the interaction of the two factors treated as fixed effects. Subject was included in the model as a random effect. None of the fixed effects were found to be significant [experimental condition: F(2, 16)=2.76, p=0.09, generalized effect size (see Section 4); stimulus frequency: F(1, 8)=4.38 × 10⁻³, p=0.95, ; interaction: F(2, 16)=2.62, p=0.10, ].

Given that our experimental manipulations had no statistically significant effect on PLVs, we sought to quantify the evidence in favor of the null hypothesis given our results (as opposed to simply failing to reject it). PLV z-scores were subjected to a Bayes factor analysis (Kass and Raftery, 1995; Rouder et al., 2012). Given our data, a linear model that excludes experimental condition is 2.98 times as likely as one that includes both experimental condition and the interaction between experimental condition and stimulus frequency. Based on published guidelines (Kass and Raftery, 1995), our data provide at best slight evidence in favor of the null hypothesis; i.e., if, a priori, an effect of task on EFR strength is as likely as no effect of task, the no-effect hypothesis is about 3 times as likely to be true as the hypothesis that PLVs are affected by the experimental manipulation.
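The verbal labels used in this paper (“slight”, “positive”, “strong”) follow the Kass and Raftery (1995) scale, which bins a Bayes factor B as 1–3 (not worth more than a bare mention), 3–20 (positive), 20–150 (strong), and above 150 (very strong). With equal prior odds, B also converts directly into a posterior probability, B/(1 + B). A minimal sketch with hypothetical helper names:

```python
# Hypothetical helpers (illustrative only).

def kass_raftery_label(bf):
    """Kass and Raftery (1995) verbal category for a Bayes factor bf >= 1."""
    if bf < 1:
        raise ValueError("pass the Bayes factor in favor of the preferred model (>= 1)")
    if bf < 3:
        return "not worth more than a bare mention"
    if bf < 20:
        return "positive"
    if bf < 150:
        return "strong"
    return "very strong"

def posterior_prob(bf, prior_odds=1.0):
    """Posterior probability of the favored model given its Bayes factor and prior odds."""
    post_odds = bf * prior_odds
    return post_odds / (1.0 + post_odds)

for bf in (2.98, 6.05, 70.2):  # Bayes factors reported for Experiments 1 and 2
    print(bf, kass_raftery_label(bf), round(posterior_prob(bf), 3))
```

For B = 2.98, equal prior odds give a posterior probability of about 0.75 for the no-effect model, which is why this value counts only as slight evidence.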

2.2. Experiment 2

Results of Experiment 1 suggest that task conditions did not affect phase-locking in the EFR. Experiment 2 was conducted to test the same set of hypotheses regarding auditory attention, but with digit onset timings randomized within a single stream. This resulted in the randomization of relative onset timings of digits across ears when stimuli were presented dichotically. Furthermore, given that previous reports of modality-specific attentional modulation of subcortical responses are more consistent than reports of modulation due to selective, within-modality attention shifts (e.g., Lukas, 1980; Oatman and Anderson, 1980; Lukas, 1981; Bauer and Bayles, 1990; Galbraith et al., 2003; Delano et al., 2007; Wittekindt et al., 2014), we also introduced an additional condition in which participants attended to visual stimuli while ignoring monaurally presented auditory stimuli. The detection task employed in the second experiment was similar to the task utilized in Experiment 1 (detect two consecutive, increasing digits in the attended ear or, for the visual task, on a computer monitor), but with a small financial bonus contingent on performance levels to encourage participants’ maximum engagement with the task. We hypothesized that switching attention to a different sensory modality altogether would decrease the strength of EFR phase locking to auditory inputs, even if within-modality shifts of attention did not result in similar changes (as suggested by the results of Experiment 1).

2.2.1. Behavioral task performance

The mean d′ values were 3.10 (range: 2.62–3.86), 3.12 (2.04–4.62), and 3.27 (1.97–3.84) for the selective listening, monaural, and attend-visual tasks, respectively, indicating that all subjects were able to perform the task reasonably well. The difference in performance between conditions was not statistically significant [F(2, 22)=0.67, p=0.52, ]. Mean log(β) values were 4.52 (3.43–6.51), 4.42 (2.86–5.87), and 3.85 (1.77–5.80) for the selective listening, monaural, and attend-visual tasks, respectively, indicating that subjects had an overall bias towards classifying digits in the attended ear as non-targets. The difference between conditions was not statistically significant after applying the Greenhouse-Geisser correction for violation of sphericity [F(2, 22)=3.72, Greenhouse-Geisser corrected p=0.07, ].
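The Greenhouse-Geisser correction mentioned above shrinks both ANOVA degrees of freedom by an estimate ε of the departure from sphericity, ε = (Σλᵢ)² / [(k − 1) Σλᵢ²], where λᵢ are the eigenvalues of the covariance matrix of k − 1 orthonormal contrasts among the k conditions. ε equals 1 under sphericity and cannot fall below 1/(k − 1). The sketch below is an illustration with hypothetical function names, not the authors' analysis code.

```python
import numpy as np
from scipy.stats import f as f_dist

# Hypothetical helpers (illustrative only).

def gg_epsilon(data):
    """Greenhouse-Geisser epsilon for data of shape (n_subjects, k_conditions)."""
    data = np.asarray(data, dtype=float)
    k = data.shape[1]
    # Orthonormal basis whose first column spans the constant vector; the remaining
    # k - 1 columns are orthonormal contrasts (each sums to zero).
    q, _ = np.linalg.qr(np.column_stack([np.ones(k), np.eye(k)[:, : k - 1]]))
    contrasts = q[:, 1:]
    s = np.atleast_2d(np.cov(data @ contrasts, rowvar=False))
    # sum(eigenvalues) = trace(S); sum(eigenvalues**2) = sum of squared entries of symmetric S
    return np.trace(s) ** 2 / ((k - 1) * np.sum(s ** 2))

def gg_corrected_p(F, eps, k, n):
    """p-value for a one-way repeated-measures F with GG-corrected degrees of freedom."""
    return f_dist.sf(F, eps * (k - 1), eps * (k - 1) * (n - 1))

# Example on random data for 12 subjects and 3 conditions.
rng = np.random.default_rng(0)
eps = gg_epsilon(rng.normal(size=(12, 3)))
p_corrected = gg_corrected_p(3.72, eps, k=3, n=12)
```

Because ε ≤ 1, the corrected p-value is never smaller than the uncorrected one, which is why an F that looks significant with nominal degrees of freedom can become non-significant after correction.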

2.2.2. Phase-locking results

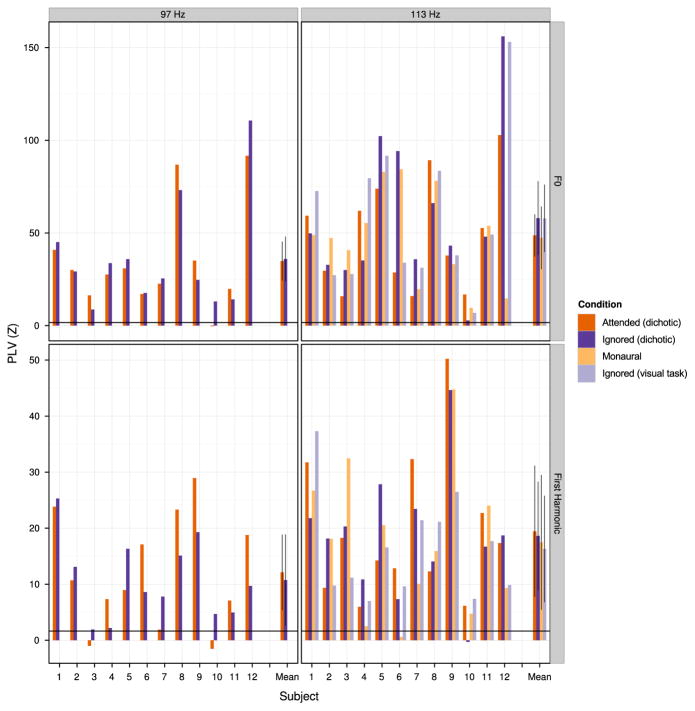

Grand-averaged plots of PLV as a function of frequency are shown in Fig. 4, with PLV z-scores for individual subjects at the fundamental frequency (F0) and second harmonic (2F0) of each stimulus shown in Fig. 5. Subjects exhibited significant phase-locked activity at the fundamental frequency of both streams (97 Hz and 113 Hz) and, in contrast to Experiment 1, also at integer multiples of these fundamentals (Fig. 4; the peaks of each PLV curve align with the frequencies indicated by the darker vertical lines). Additionally, there was no cross-frequency peak in the selective attention conditions; i.e., there was no significant phase locking to the 113 Hz stimuli in the 97 Hz epochs, and vice versa (compare Fig. 2 to Fig. 4). The absence of the peak at the opposing stimulus frequency, as well as the more robust responses at harmonics of the fundamental, is most likely due to the introduction of onset timing jitter within each digit stream. Jitter reduces phase locking to the fundamental frequency of the competing stream and to the line noise, since the phases of these components are no longer aligned across analysis epochs.

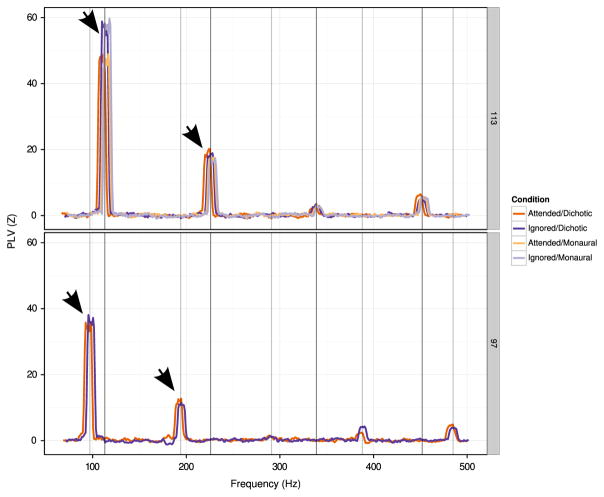

Fig. 4.

Average PLV z-score as a function of frequency in Experiment 2. Traces have been shifted slightly in the horizontal direction to aid visualization. Top row: PLV (z-score) as a function of frequency for 113 Hz stimuli. Bottom: PLV (z-score) as a function of frequency for 97 Hz stimuli. Integer multiples of 97 Hz and 113 Hz are indicated by vertical lines. Arrows represent peaks for which individual subject data is shown in Fig. 5.

Fig. 5.

PLV z-scores at the fundamental frequency and second harmonic of each stimulus, shown for individual subjects in Experiment 2. Top row: Z-scores at the stimulus fundamental frequency. Bottom row: Z-scores at the second harmonic. Left column: Data for epochs corresponding to 97 Hz stimulus onsets (PLV z-score at 97 Hz or 194 Hz). Right column: Data for epochs corresponding to 113 Hz stimulus onsets (PLV z-score at 113 Hz or 226 Hz). Horizontal lines in each panel indicate the 95th percentile of the standardized noise distribution. Error bars represent 95% confidence intervals about the mean with a within-subject correction applied (Morey, 2008; Chang, 2015).

When comparing across individual subjects, there did not appear to be a consistent effect of attentional condition on PLV strength (in Fig. 5, there is no consistent ordering of red and blue bars across subjects for the 97 Hz stimuli, nor of red, blue, yellow, and gray bars across subjects for the 113 Hz stimuli). The mean PLV z-scores for the 113 Hz stimuli at F0 tend to be higher in the “ignore” cases than in the “attend” cases (Fig. 5, upper right; blue and gray bars are higher than red and yellow bars). However, this trend is driven almost entirely by the results of subject 12, who showed very large responses in the “ignored” trials; this tendency was not otherwise present across subjects.

Due to the imbalance of stimulus frequency and attentional condition (four attentional conditions for 113 Hz stimuli, and two attentional conditions for 97 Hz stimuli), we chose to treat stimulus frequency and attentional condition as a single fixed effect (“experimental condition”) for the purpose of conducting ANOVA. Stimulus harmonic (F0 versus second harmonic) was treated as an additional fixed effect, and subject was treated as a random effect. ANOVA indicated that the interaction between experimental condition and harmonic was not significant [F(5,55)=1.53, p=0.20, ]. The effect of stimulus harmonic was significant [F(1, 11)=16.11, p<0.01, ], reflecting greater phase locking at F0 than at the second harmonic. The effect of experimental condition was also significant [F(5, 55)=4.09, Greenhouse-Geisser corrected p=0.02, ]. However, post-hoc pairwise t-tests with Bonferroni-Holm correction indicated that the only significant differences occurred when comparing data from 97 Hz stimulus presentations against data from 113 Hz stimulus presentations (Table 1). This outcome can be interpreted as PLVs to 113 Hz stimuli being greater than PLVs to 97 Hz stimuli, which is evident visually when comparing the left and right panels in Fig. 5. We note that a given natural speech token vocoded with a 113 Hz click train has more F0 cycles than the same token vocoded with a 97 Hz click train. This inherent difference in the physical stimuli may contribute to the difference in absolute PLV strength at these two frequencies.
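The Bonferroni-Holm step-down procedure used for these post-hoc tests can be written compactly: sort the m raw p-values, multiply the i-th smallest by (m − i + 1), enforce monotonicity with a running maximum, and cap the result at 1. The cap is consistent with the many entries of exactly 1.000 in Table 1. A minimal sketch with a hypothetical function name and made-up inputs:

```python
import numpy as np

def holm_adjust(pvals):
    """Bonferroni-Holm adjusted p-values (hypothetical helper, illustrative only)."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)                          # ascending raw p-values
    scaled = (m - np.arange(m)) * p[order]         # multiply i-th smallest by m - i + 1
    adjusted = np.minimum(np.maximum.accumulate(scaled), 1.0)  # monotone, capped at 1
    out = np.empty(m)
    out[order] = adjusted                          # restore original order
    return out

# Example with three hypothetical raw p-values.
print(holm_adjust([0.01, 0.04, 0.03]))
```

Holm's procedure controls the family-wise error rate like plain Bonferroni but is uniformly more powerful, since only the smallest p-value is multiplied by the full number of comparisons.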

Table 1.

Pairwise t-test results comparing experimental conditions (97 Hz and 113 Hz stimuli) for PLV data from Experiment 2. All p-values are shown after Bonferroni-Holm correction. Bold indicates statistical significance at α = 0.05. D = Dichotic, M = Monaural, V = Visual task, A = Attend, I = Ignore.

| | 97 Hz D/I | 113 Hz D/A | 113 Hz D/I | 113 Hz M/A | 113 Hz V/I |
|---|---|---|---|---|---|
| 97 Hz D/A | 1.000 | **0.018** | 0.066 | 1.000 | **0.034** |
| 97 Hz D/I | | **0.011** | **0.032** | 1.000 | **0.007** |
| 113 Hz D/A | | | 1.000 | 1.000 | 1.000 |
| 113 Hz D/I | | | | 1.000 | 1.000 |
| 113 Hz M/A | | | | | 1.000 |

We computed Bayes factors on this dataset to quantify evidence in favor of the null hypothesis, using the same fixed and random effect structure used in the ANOVA. Results indicated that a model that does not include experimental condition is 6.05 times more likely than a model that includes both experimental condition and the interaction between harmonic and experimental condition, indicating “positive” evidence against the latter (Kass and Raftery, 1995). When this procedure was repeated on the data from just the four attentional conditions for 113 Hz stimulus presentation, the model without attentional condition was found to be 70.2 times more likely than the model including attentional condition and its interaction with harmonic. This constitutes “strong” evidence against our experimental manipulations having an effect on the EFR.

2.2.3. Post-hoc cortical data analysis

In the absence of any subcortical attentional effects in Experiment 2, we turned to cortical responses (which dominate the low-frequency portions of the recorded signals) to see if there was evidence that subjects were directing their attentional focus appropriately. In conducting this post-hoc analysis, we noted some issues that complicate the interpretation of auditory-evoked cortical activity in our paradigm. First, over the course of each long trial, adaptation is likely to diminish the amplitude of canonical AEP components (Woods and Elmasian, 1986). Second, each individual token is sufficiently different in its spectrotemporal content that the latency and morphology of auditory-evoked peaks may differ substantially (Hansen et al., 1983; Sharma et al., 2000; Frye et al., 2007). Indeed, cursory inspection of cortically evoked AEP components, time-locked both to the epoch start and to the approximate onset of voicing in the speech stimuli, revealed features consistent with traditional AEP components in some, but not all, subjects; even when typical ERP components were present, their latencies and amplitudes varied from token to token and from subject to subject. Some recent studies have shown that the EEG signal correlates with the speech energy as a function of time; indeed, in mixtures of two speech waveforms, it is possible to use the strength of the correlation between the cortical response and the envelope of each of the competing speech waveforms to decode which stream a listener is attending (e.g., Lalor et al., 2009; Kerlin et al., 2010). Unfortunately, the broadband stimulus envelopes and onset times of our two competing streams were not independent of each other (indeed, they roughly alternated) and were relatively regular compared to natural, continuous speech, so such an analysis was not suitable for our data set.

Given that our paradigm was ill suited for evoked potential analysis, we investigated ongoing alpha band (8–13 Hz) oscillatory activity in Experiment 2 as a marker of attention. A number of recent studies associate alpha band activity with sensory suppression in a variety of tasks, including selective attention tasks (Foxe and Snyder, 2011). One recent study reported that during an inter-modal selective attention task using auditory and visual stimuli, alpha activity was reduced over frontal and parieto-occipital EEG sensor regions when subjects attended to visual stimuli compared to when they attended to audio stimuli (Wittekindt et al., 2014). Increased alpha activity over central and parietal sensors has also been associated with task difficulty on auditory-only tasks (Obleser et al., 2012; Obleser and Weisz, 2012).

Based on previous literature on alpha band activity and on our own experience with EEG, we chose to focus on alpha activity within the parieto-occipital sensor region. We hypothesized that the magnitude of alpha activity in these sensors would be reduced when participants performed the visual task compared to when they performed the auditory task. We additionally hypothesized that alpha band activity would be greater in the dichotic, auditory selective attention condition, where selective attention requires suppression of the competing stream, than in the monaural, auditory-only condition.

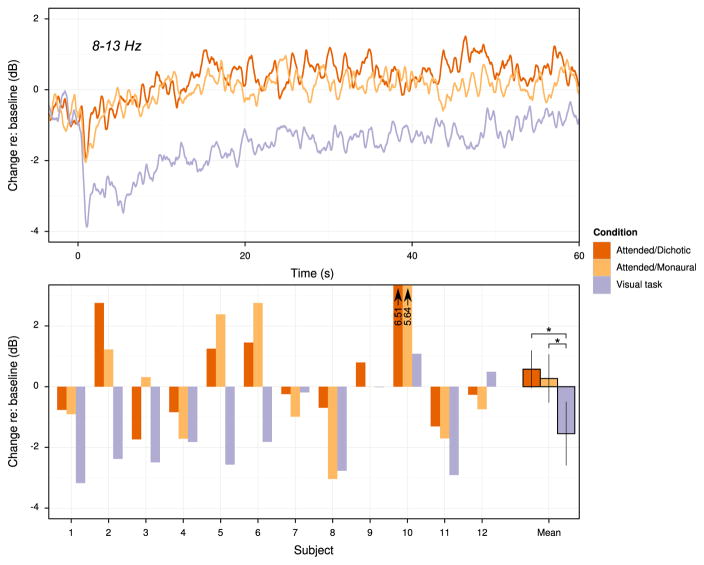

The time course of parieto-occipital sensor alpha activity averaged over subjects is shown in the upper panel of Fig. 6, with the mean difference between baseline alpha activity and alpha activity during the stimulus presentation period shown in the bottom panel for each subject. Inspection of the time course indicates a sharp drop in alpha activity in the first second after stimulus onset, with the activity during the visual task dropping most sharply. Activity in the auditory conditions returns to near- or above-baseline levels within about 5 s; in contrast, activity during the visual task remains below baseline over the entire course of the time period analyzed.

Fig. 6.

Summary of parieto-occipital alpha activity obtained from Experiment 2. Top: mean alpha amplitude across subjects over time, expressed in dB relative to the amplitude in the pretrial period (−3.5 to 0 s), shown for the pretrial period and the first 60 s of the trials. Bottom: change in alpha amplitude during stimulus presentation, expressed as dB relative to baseline amplitude, shown for individual subjects and for the group mean. Error bars represent 95% confidence intervals about the mean with a within-subject correction applied (Morey, 2008; Chang, 2015). Stars indicate significant pairwise differences (α = 0.05) obtained via t-tests after Bonferroni-Holm corrections were applied to p-values.
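The dB measure in this figure expresses alpha amplitude during the trial relative to the mean amplitude in the pretrial baseline (−3.5 to 0 s), i.e., 20·log10(A(t)/A_baseline). One common way to obtain A(t), sketched below, is to band-pass filter the EEG at 8–13 Hz and take the magnitude of the analytic (Hilbert) signal. The filter type, order, and zero-phase filtering are assumptions, the authors' actual time-frequency method is not described in this section, and the function name is hypothetical.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt

# Hypothetical helper (illustrative only); filter choices are assumptions.
def alpha_db_re_baseline(eeg, fs, onset_idx, band=(8.0, 13.0), baseline_s=3.5, order=4):
    """Alpha amplitude time course (dB re: mean pretrial amplitude) for one channel.

    eeg: 1-D signal; onset_idx: sample index of trial onset (t = 0)."""
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    amp = np.abs(hilbert(sosfiltfilt(sos, eeg)))        # instantaneous alpha amplitude
    n_base = int(round(baseline_s * fs))
    baseline = amp[onset_idx - n_base:onset_idx].mean()  # mean amplitude, -3.5 to 0 s
    return 20.0 * np.log10(amp / baseline)                # amplitude ratio in dB

# Synthetic check: a 10 Hz rhythm whose amplitude halves at trial onset (about -6 dB).
fs = 250
t = np.arange(int(14.5 * fs)) / fs
onset_idx = int(4.5 * fs)
eeg = np.where(t < 4.5, 1.0, 0.5) * np.sin(2 * np.pi * 10 * t)
db = alpha_db_re_baseline(eeg, fs, onset_idx)
```

Since 20·log10(0.5) ≈ −6 dB, halving the alpha amplitude maps to roughly −6 dB; the −1.55 dB group mean reported for the visual task therefore corresponds to an amplitude reduction of about 16%.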

The mean change between baseline alpha amplitude and alpha amplitude during task performance was +0.58 dB for the attended/dichotic condition, +0.27 dB for the attended/monaural condition, and −1.55 dB for the attend-visual condition. A one-way repeated-measures ANOVA on the alpha amplitude change revealed a significant effect of condition on the magnitude of change relative to baseline [F(2, 22) = 9.01, p = 0.01]. Paired, two-tailed t-tests with Bonferroni-Holm correction indicated that the alpha activity in the attend-visual condition was significantly lower than when subjects selectively attended to a stream in the dichotic condition (p = 0.01), as well as when they attended to the monaurally presented auditory stimuli (p = 0.04). The change in alpha amplitude did not differ significantly between the dichotic and monaural auditory conditions (p = 0.41).
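The statistical pipeline described above can be sketched as follows, assuming a 12 × 3 array of alpha changes (subjects × conditions; 12 subjects is inferred from the reported F(2, 22), not stated in this section). The one-way repeated-measures ANOVA is computed directly from sums of squares without any sphericity correction, and the Bonferroni-Holm step-down adjustment is applied to the three pairwise paired t-tests. The function names are hypothetical.

```python
import itertools
import numpy as np
from scipy import stats

def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA (no sphericity correction).

    data : (n_subjects, n_conditions) array; returns (F, df1, df2, p).
    """
    data = np.asarray(data, dtype=float)
    n, m = data.shape
    grand = data.mean()
    ss_cond = n * ((data.mean(axis=0) - grand) ** 2).sum()
    ss_subj = m * ((data.mean(axis=1) - grand) ** 2).sum()
    ss_err = ((data - grand) ** 2).sum() - ss_cond - ss_subj   # condition x subject residual
    df1, df2 = m - 1, (n - 1) * (m - 1)
    F = (ss_cond / df1) / (ss_err / df2)
    return F, df1, df2, stats.f.sf(F, df1, df2)

def holm(pvals):
    """Bonferroni-Holm adjusted p values, returned in the original order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    scaled = (m - np.arange(m)) * p[order]          # (m - k) * p_(k), k = 0..m-1
    adj = np.empty(m)
    adj[order] = np.minimum(np.maximum.accumulate(scaled), 1.0)  # keep monotone, cap at 1
    return adj

def pairwise_paired_t(data, labels):
    """Two-tailed paired t-tests for all condition pairs, Holm-adjusted."""
    data = np.asarray(data, dtype=float)
    pairs = list(itertools.combinations(range(data.shape[1]), 2))
    raw = [stats.ttest_rel(data[:, a], data[:, b]).pvalue for a, b in pairs]
    return {(labels[a], labels[b]): p for (a, b), p in zip(pairs, holm(raw))}
```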

3. Discussion

Selective attention involves selecting a particular source of information while suppressing competing sources (e.g., Shinn-Cunningham, 2008). It is well established that selective attention modulates cortical responses to sound, so that responses to the attended source are stronger than responses to an ignored sound (e.g., Fritz et al., 2007). Yet it is not clear whether attention modulates responses via corticofugal pathways in a way that can be consistently observed in non-invasive measures of subcortical responses, specifically EFRs. Even if selective auditory attention does not lead to a relative enhancement of EFRs elicited by attended over unattended sources in a sound mixture, EFRs may still be modified by whether a subject is attending to sound vs. attending to some other sensory modality.

Here, in two separate experiments, subjects either selectively listened to one sound stream or selectively attended to visual stimuli while ignoring auditory inputs. We observed no systematic variation in EFR PLV when listeners selectively attended vs. ignored a sound stream in a mixture of competing streams, even though we designed our auditory stimuli such that the two streams were separated in frequency content, pitch, and ear of presentation. Furthermore, the lack of systematic variation in EFR PLV with task demands, or even presentation scheme (i.e., monaural vs. dichotic), cannot be due to poor overall SNR, as overall levels of phase locking were well above the noise floor at the fundamental frequency of each digit stream in both experiments.

3.1. Experiment 1

For almost all subjects and conditions, there was significant phase locking to the fundamental frequency of the stimuli. However, we observed no consistent changes in the strength of phase locking that could be attributed to attentional focus. A Bayes factor analysis indicated that our data favored the null hypothesis by a factor of about 3.

While clear phase-locking peaks were found at the fundamental frequencies of 97 Hz and 113 Hz, we did not observe consistent, strong phase locking at the harmonics of these fundamental frequencies. When PLVs are used to analyze phase locking to the stimulus envelope, clear peaks can often be seen at the first few harmonics of the fundamental frequency of the stimulus, regardless of whether PLVs are obtained from a single electrode (e.g., Ruggles et al., 2012; Zhu et al., 2013) or derived from multiple electrodes (Bharadwaj and Shinn-Cunningham, 2014); however, the strength of these peaks is generally idiosyncratic across subjects. The weak phase locking at stimulus frequency harmonics observed here may have been due to interactions between the responses evoked by the two streams with different fundamental frequencies. The magnitude of SSSR harmonics increases with presentation level (Krishnan, 2002). Moreover, SSSR components at higher stimulus harmonics are more sensitive to SNR than the SSSR at the fundamental frequency (Zhu et al., 2013). Given that our listeners set the presentation level to a comfortable listening level, presentation level may also have influenced the amount of phase locking observed at the higher harmonics compared to what has been found in previous studies.

At the fundamental frequencies of the two competing streams, the PLV was significantly above the noise floor in almost all subjects and conditions, yet we found no consistent effects on the PLV that could be attributed to selective listening. Behavioral data (d′ > 3) suggest that our failure to find an effect is not because subjects listened passively rather than engaging in the task. One potential explanation for the null result is that listeners rapidly switched their attention back and forth between streams in dichotic trials rather than selectively listening to one stream, thereby cancelling out any expected neural effects of selective listening. The time needed to switch the focus of attention from one sound source to another is thought to be in the range of 200–300 ms (Shinn-Cunningham, 2008). Given that the digits in alternating streams were 250 ms apart, it is possible that subjects adopted such a strategy. However, such a strategy would interfere with, rather than help, task performance, and sustaining this kind of listening over a one-minute-long trial would be quite taxing, making it unlikely that subjects adopted it. Our pilot testing prior to the start of the experiment indicated that the least effortful way to perform the task was to direct attention to the signal presented in the to-be-attended ear and sustain focus on the target stream throughout each trial.

3.2. Experiment 2

There was significant phase locking to the fundamental frequency of the stimuli for all subjects in all conditions, as well as at the first harmonic frequency of the stimulus for most subjects and conditions. In spite of these gains, which could be attributed to better overall SNR relative to Experiment 1, we once again observed no consistent changes in the strength of phase locking that could be attributed to attentional focus, with a Bayes factor analysis indicating that our data provided “positive” to “strong” evidence (Kass and Raftery, 1995) in favor of the null hypothesis.

Behavioral performance on the dichotic and attend-monaural conditions was similar to what was observed in Experiment 1. However, it is difficult to directly compare task performance across the two experimental sessions. The financial incentive reward for correct responses may have pushed listeners to try harder on the task, but any resulting performance gains might have been offset by the fact that the digit onsets were temporally unpredictable due to the timing jitter introduced, which may have made the task harder.

We observed strong phase locking of the EFR to the stimulus fundamental frequency and at multiple harmonics across subjects, and overall, PLV z-scores were higher in Experiment 2 than in Experiment 1. We believe that the additional precautions taken in the design and presentation of the stimulus and in the analyses helped increase the strength of the phase locking that we observed. Specifically, we fixed the RMS presentation level in Experiment 2 rather than allowing subjects to control the stimulus level, as in Experiment 1, where subjects may have set the level too low to observe reliable phase locking at the harmonics. Presentation levels were also normalized on a per-token basis rather than on a per-stream basis as in Experiment 1. There may also have been less interference from the responses to the competing stream due to the temporal jitter introduced in the digit onsets. Finally, equating the number of epochs corresponding to each digit and polarity in the PLV computation, as opposed to simply equating the number of epochs per polarity as in Experiment 1, may also have led to more robust phase-locking estimates across conditions by averaging out differences in phase locking caused by differences in the temporal envelopes of different digits.

Examination of the alpha activity indicated that alpha activity remained lower than baseline alpha activity in the attend-visual trials, while returning to near- or above- baseline levels when subjects attended to auditory stimuli. These observations lend support to the argument that our failure to see changes in EFR strength across conditions is not due to the possibility that participants simply failed to direct attention or follow instructions. Neural oscillations in the alpha band have been associated with suppression of sensory inputs (Obleser et al., 2012; Wittekindt et al., 2014). Reduced parieto-occipital alpha activity during attention to a visual stimulus has been reported in numerous studies on visual information processing (e.g., Foxe et al., 1998) as well as during attention to visual stimuli during auditory stimulation (Wittekindt et al., 2014). Consistent with these reports, we observed greater cortical parieto-occipital alpha activity during selective listening compared to when listeners performed a visual task while ignoring auditory stimuli.

We had hypothesized that alpha activity would be higher during the attended/dichotic condition due to the additional cognitive load associated with selective listening. However, we did not observe a significant difference in alpha activity between the attended/monaural and attended/dichotic conditions. Previous reports associate the strength of alpha activity with task difficulty (Obleser and Weisz, 2012) and suggest that alpha activity may index the direction of spatial attention during selective listening tasks (Kerlin et al., 2010). It is as yet unclear whether such lateralized effects during auditory-only processing are due to anticipatory effects (e.g., from a pre-stimulus visual cue) or whether changes in alpha activity are directly related to where attention is directed in a sound scene (see Strauss et al., 2014). Our paradigm was not designed to examine this question; therefore, we did not try to localize activity to neural sources in order to determine whether lateralized alpha changes were present during auditory-only conditions.

In summary, even though EFR phase locking was stronger in Experiment 2, the qualitative outcome was identical to Experiment 1: EFR-derived PLVs were insensitive to task demands. Specifically, PLVs were unaffected by whether attention was directed to one stream versus another, whether attention was focused on a visual task rather than an auditory task, or even whether stimuli were presented dichotically versus monaurally. Based on the behavioral results, post-hoc analysis of cortical alpha activity, and informal reports from our subjects both during the breaks and after the experimental sessions were complete, we conclude that our failure to find attentional effects on the EFR is not due to the simple, uninteresting possibility that participants failed to follow instructions and direct their focus of attention appropriately.

3.3. Reconciling our negative findings with previous studies

Several previous studies have reported that attention does not affect the scalp-recorded ABR (Picton and Hillyard, 1974; Woldorff et al., 1987; Gregory et al., 1989; Connolly et al., 1989; Hackley et al., 1990). As the ABR and EFR are both generated by brainstem auditory structures, it is perhaps unsurprising that we found no significant effects of attention on the EFR. However, ABR and SSSR responses (including the EFR) tend to emphasize different features of neural responses. The ABR measures the transient response to the onset of a sound (typically a broadband click; Sohmer and Feinmesser, 1967; Jewett and Williston, 1971; Picton et al., 1981), with each peak representing neural activity from a particular structure along the auditory pathway (Melcher and Kiang, 1996). In contrast, SSSRs measure responses to ongoing neural activity that is phase-locked to the periodic structure of an input stimulus, reflecting steady-state activity that likely originates from multiple brainstem structures. SSSRs will not reflect modulation of neural populations that do not respond in a phase-locked manner to the input stimulus (e.g., Palombi et al., 2001, as cited in Carcagno et al., 2014).

While some earlier studies performed in humans have reported that there are no robust effects of attention on SSSRs (Hillyard and Picton, 1979; Galbraith and Kane, 1993), other studies have reported the opposite (Galbraith and Arroyo, 1993; Galbraith and Doan, 1995; Galbraith et al., 1998, 2003; Hairston et al., 2013; Lehmann and Schönwiesner, 2014). Yet on close examination, several of these later reports suffer from issues that make it difficult to conclude that the reported effects are due to task-dependent shifts in the focus of attention or cognitive state.

Lehmann and Schönwiesner (2014) reported selective attention effects that are idiosyncratic across subjects and are non-specific in their direction: for some subjects, SSSR strength was greater when attention was directed towards the corresponding stimulus, while for others, it decreased. Despite discussing SSSRs in general terms, the authors seem to focus specifically on the EFR in their study, since their analysis combines responses to alternate-polarity stimuli when computing spectral measurements (Lehmann and Schönwiesner, 2014, p. 3). Further, the bootstrap statistics used to assess attention effects at the individual level compared between-block effects of attention to an estimated variance of the EFR that depended primarily on within-block variability (Lehmann and Schönwiesner, 2014, Fig. 4). Taken as a whole, these results seem to support the conclusion that the EFR is not sensitive to attention (despite the title of the report), in agreement with the present study and other recent studies that failed to find attentional effects on the EFR (Dai and Shinn-Cunningham, 2014; Ruggles et al., 2014).

Among the studies conducted by Galbraith and colleagues on the modulation of SSSRs by attention, one reported no effect (Galbraith and Kane, 1993), while none of the studies claiming attentional modulation found a main effect of attention (Galbraith and Arroyo, 1993; Galbraith and Doan, 1995; Galbraith et al., 1998, 2003). In the studies claiming a positive effect of attention, conclusions are based on complex patterns of interactions between stimulus, analysis window, and other factors such as the recording montage. We suspect that the inconsistent findings in these studies are due in part to insufficient SNR for an accurate computation of spectral quantities. For example, Galbraith and Arroyo (1993) reported that attention affected the SSSR differently depending on both the stimulus frequency considered and the period over which the SSSR was analyzed. Specifically, they reported that attention enhanced the first half, but not the second half, of the 25-ms-long 400 Hz SSSR, yet enhanced the second half, but not the first half, of the 200 Hz SSSR. We suggest that 25 ms or 12.5 ms analysis windows may be too short to reliably assess the presence or absence of attentional effects without a very large number of stimulus repetitions, especially for pure-tone stimuli that excite a limited number of auditory channels and yield concomitantly weak responses at the scalp (Ananthanarayan and Durrant, 1992). Similarly, the SSSR results presented in Galbraith and Doan (1995) are based on 500 trials per condition, with approximately 43 ms of neural data from a single electrode analyzed per trial. The pattern of results, in which SSSR amplitudes in one condition (intensity discrimination of a pure tone) showed a large trend opposite to that in the other conditions, may reflect a genuine physiological effect. However, such a pattern could also be entirely due to insufficient SNR (and chance false positives).
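The concern about short analysis windows can be illustrated with simple arithmetic: a window of length T has a nominal frequency resolution of about 1/T and contains f·T cycles of a response at frequency f. The sketch below tabulates these quantities for the 25 ms and 12.5 ms windows discussed above, alongside the 500 ms epochs used in the present study for comparison.

```python
def window_stats(T, f):
    """Nominal frequency resolution (Hz) and cycles of an f-Hz response in a T-s window."""
    return 1.0 / T, f * T

for T in (0.5, 0.025, 0.0125):      # this study's epochs vs. 25 ms and 12.5 ms windows
    for f in (200.0, 400.0):        # SSSR frequencies discussed above
        res, cycles = window_stats(T, f)
        print(f"T = {T * 1e3:6.1f} ms, f = {f:3.0f} Hz: "
              f"resolution ~{res:5.1f} Hz, {cycles:6.1f} cycles")
```

A 12.5 ms window, for instance, spans only 2.5 cycles of a 200 Hz response, and its nominal resolution (80 Hz) is coarse relative to the response frequency.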

Most studies that have reported an effect of attention on SSSRs (all except Galbraith et al., 2003) did not consider the spectral noise floor when computing spectral power, or whether the noise floor differed across subjects, conditions, or stimulus frequencies (see Zhu et al., 2013). While Galbraith et al. (2003) considered a response metric incorporating the spectral noise floor, they did not report the overall magnitude of change due to attention. Because the signals driving the SSSR are quite small compared to measurement noise, all SSSR measures, especially magnitude measures, depend to some degree on background noise levels. Moreover, since cortical activity is arguably the largest source of noise at most frequencies, changes in listener state could cause changes in cortical function, which could translate into changes (or increased variability) in observed SSSR strength. Changes in noise due to overall subject state could be particularly problematic in listening tasks requiring a sustained focus of attention over relatively long periods of time (e.g., a lapse of listener arousal could affect a large set of trials from one particular condition, biasing results). Indeed, the deviant detection tasks used in most previous SSSR studies, as well as the detection task used in the present study, may be particularly prone to such issues due to the relatively long trial durations typically used in these experiments.
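One simple way to reference a spectral peak to its local noise floor is to compare power in the response bin with the mean power in flanking bins, excluding a guard band around the peak. The sketch below does this. The guard (2 Hz) and flank (10 Hz) widths are hypothetical choices, and this is not the specific metric used by Zhu et al. (2013) or Galbraith et al. (2003).

```python
import numpy as np

def snr_re_noise_floor(power, freqs, f0, guard_hz=2.0, flank_hz=10.0):
    """Power at the bin nearest f0 relative to the mean power of flanking bins, in dB.

    Flanking bins lie more than guard_hz and at most flank_hz away from the
    response bin (both widths are illustrative assumptions).
    """
    power = np.asarray(power, dtype=float)
    freqs = np.asarray(freqs, dtype=float)
    k = np.argmin(np.abs(freqs - f0))                   # response bin
    dist = np.abs(freqs - freqs[k])
    flank = (dist > guard_hz) & (dist <= flank_hz)      # local noise-floor bins
    return 10.0 * np.log10(power[k] / power[flank].mean())
```

With the peak power held fixed, raising the flanking power (e.g., through increased cortical noise) lowers the ratio, illustrating the confound described above.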

This possibility, that scalp-recorded measures have too low an SNR to reliably reveal subcortical attentional effects, is supported by reports of subcortical effects of attention in animal models, as well as reports of attention-related modulation of subcortical activity in humans obtained with techniques that have better spatial selectivity than SSSRs/EFRs. In cochlear nucleus responses, attention to visual stimuli decreases both transient activity (Hernandez-Peon et al., 1956; Oatman, 1971, 1976; Oatman and Anderson, 1977) and the amplitude of the steady-state response (Oatman and Anderson, 1980). In chinchillas, the magnitude of the compound action potential decreases and the cochlear microphonic amplitude increases during periods of visual attention (Delano et al., 2007). In the IC of awake, behaving ferrets, there is task-related modulation of neural responses (Slee and David, 2015). Modulation of BOLD responses localized to the IC during selective attention has also been reported in human listeners (Rinne et al., 2008). Subcortical attentional effects have also been noted at the level of the cochlea in humans: stimulus-frequency OAE recordings collected during selective listening (Giard et al., 1994; Maison et al., 2001) and distortion-product OAE recordings collected in an inter-modal attention task (Wittekindt et al., 2014) suggest that attention modulates responses in outer hair cells.

Based on the results of our study and others that have failed to find modulatory effects on the EFR, the effects observed on cochlear responses in humans may not be inherited by the subsequent neural structures that contribute to the EFR. Alternatively, such propagated effects may be small and subtle enough that they are masked by noise. Indeed, currents in deep neural structures must flow with high synchrony and in an appropriate direction to produce voltages large enough to be measurable on the scalp (Okada et al., 1997). Given past reports, it may be that attentional mechanisms target specific subcortical structures or particular neuronal populations, rather than operating as a blanket enhancement or suppression affecting all levels and channels of subcortical auditory processing. Such modulation may not robustly affect the EFR, even if brainstem and midbrain responses are modulated by descending efferent signals.

3.4. Caveats and conclusions

In computing PLVs, we combined responses to different vocoded digits with the same carrier signal, but different envelopes. Our assumption was that the primary drivers of EFR phase-locking are the harmonic peaks in each frequency band that arise from the click train vocoding procedure. However, the amount of energy in each vocoder band, and thus the energy driving each peripheral auditory channel, will differ across digits of the same carrier frequency due to the different temporal envelopes of the digits. We attempted to minimize the effects of this source of noise by equating the overall RMS energy per digit and the number of epochs per digit considered in the PLV calculation in Experiment 2, yet we still observed no consistent effect of attention on our results. In addition to supporting the hypothesis that subcortical attentional effects are small and perhaps localized, this also implies that it would be difficult to observe consistent attentional effects in EFR responses to more ecologically valid stimuli, such as natural sounds or speech.

SSSRs (including EFRs) are increasingly used within the auditory research community as tools to measure subcortical auditory coding fidelity (e.g., Picton et al., 2003; Purcell et al., 2004; Bharadwaj et al., 2015). Somewhat ironically, they are useful as tools for assessing sensory coding precision because they are relatively insensitive to a listener’s state of arousal. In addition to the present data, other recent studies (Dai and Shinn-Cunningham, 2014; Lehmann and Schönwiesner, 2014; Ruggles et al., 2014) and previous reports (Cohen et al., 1991; Lins et al., 1996; Lins and Picton, 1995) support this idea. Even if the structures generating the ABR or EFR are truly altered by attention or cognitive state, our results indicate that these changes are likely small enough that, for all practical purposes, their effect on scalp recordings of EFRs can be ignored. Our results reinforce the notion that more sensitive and specific assays of subcortical function are needed to better understand the contributions of online corticofugal modulation to everyday hearing abilities in human listeners.

4. Experimental procedures

4.1. Experiment 1

Ten subjects (4 males, 6 females, ages 18–28) recruited from the Boston University community participated in the experiment. Each subject was screened with an audiometer to confirm normal hearing thresholds at standard audiometric frequencies between 250 Hz and 8 kHz (defined as thresholds below 20 dB hearing level in each ear and no 20 dB interaural asymmetry at any tested frequency). Subjects signed informed consent forms approved by the Boston University Charles River Campus IRB and were compensated $20/h for their participation. Each subject participated in three or four two-hour experimental sessions, completing at most one session per day. An intermittent headphone connection problem led us to exclude data from one subject, as we could not reliably determine which of that subject’s sessions were affected by the problem with audio presentation. All other subjects had at least two sessions in which we were confident in the reliability of the headphone connection.

4.1.1. Stimuli

The spoken digits 1–5 were recorded by a male member of the laboratory using an Audio Technica (Tokyo, Japan) AT4033 microphone and digitized with 16-bit precision at a sampling rate of 44,100 Hz using an Apogee (Santa Monica, CA, USA) Duet audio interface with a 40 Hz high-pass filter applied. The speech tokens were then cropped using Digital Performer 7 software (MOTU; Cambridge, MA, USA).

All stimulus processing took place in Matlab (Mathworks; Natick, MA, USA). A 50 ms cosine-squared onset-offset ramp was applied to each of the original speech tokens. All tokens were downsampled to 24,414 Hz, then filtered with a first-order shelf filter (+3 dB at approximately 600 Hz, +13.5 dB at the Nyquist frequency) to boost the high-frequency content of the signal. The original speech was channel vocoded as illustrated in Fig. 1 using a regular click-train carrier with a fundamental frequency of either 97 or 113 Hz, one used for one stream and the other for the competing stream. Narrow-band envelope signals derived from the original speech tokens (16 in total) were imposed on the click train, replacing the temporal fine structure of the speech with a steady, monotone fundamental frequency while preserving the intelligibility of the speech tokens (Shannon et al., 1995). By design, the two fundamental frequencies were separated enough that the EFR to each stream could be isolated in the frequency domain. To further maximize the separation of the peripheral auditory representations of the two competing streams, each token was synthesized using only half of the vocoder bands (odd-numbered bands for 97 Hz stimuli, even-numbered bands for 113 Hz stimuli; see Fig. 1). With this design, when there were two competing streams (on dichotic trials), the streams were presented to different ears and also occupied complementary frequency bands, thus minimizing their spectral overlap and optimizing the potential to observe frequency-specific attentional modulation effects.
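The click-train vocoding step can be sketched in Python (the study itself used Matlab). This is a minimal sketch under stated assumptions: band edges are log-spaced between 80 and 8000 Hz (the actual edges appear in Fig. 1 but are not given in the text), filters are 4th-order Butterworth band-pass filters applied forward and backward, and each band envelope is the magnitude of the Hilbert transform without additional smoothing. Selecting 0-based band indices 0, 2, ..., 14 corresponds to the odd-numbered bands used for the 97 Hz stream.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, hilbert

def click_train(f0, n, fs):
    """n samples of unit clicks at multiples of 1/f0 s (rounded to the nearest sample)."""
    x = np.zeros(n)
    idx = np.round(np.arange(0.0, n / fs, 1.0 / f0) * fs).astype(int)
    x[idx[idx < n]] = 1.0
    return x

def click_vocode(speech, fs, f0, edges, bands):
    """Impose band envelopes of `speech` on a click train at f0 (illustrative sketch).

    edges : band edges in Hz (len = n_bands + 1); assumed, not from the text
    bands : 0-based indices of the vocoder bands to synthesize
    """
    carrier = click_train(f0, speech.size, fs)
    out = np.zeros(speech.size)
    for b in bands:
        sos = butter(4, (edges[b], edges[b + 1]), btype="bandpass", fs=fs, output="sos")
        env = np.abs(hilbert(sosfiltfilt(sos, speech)))   # band envelope of the speech
        out += sosfiltfilt(sos, env * carrier)            # envelope on clicks, re-band-limited
    return out
```

For example, `click_vocode(token, 24414, 97.0, np.geomspace(80, 8000, 17), range(0, 16, 2))` would synthesize a 97 Hz token from the odd-numbered bands under these assumptions.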

4.1.2. Digit stream construction

To create each dichotic stimulus (the “attended/dichotic” condition), one carrier click frequency was selected as the “attend” frequency and assigned to either the left or the right channel, and the other was designated the “distractor” frequency and assigned to the opposite channel; this assignment was made randomly on a trial-by-trial basis. For the attended channel, the 120 digits composing the stream were selected pseudo-randomly from the set of 5 digits vocoded with the carrier frequency to be attended. In the sequence of 120 tokens in the attended stream, any pair of temporally abutting, consecutively increasing digits (e.g., 1–2 or 2–3) was designated a target. Constraints were imposed such that (1) there were 4 to 6 targets in each stream, (2) target start positions were separated by at least 10 other digits, and (3) a target never started in the first three or the last three positions of the stream. The distractor digit stream was constructed similarly: 120 tokens were drawn from the set of 5 digits at the to-be-ignored carrier frequency, with the only constraint being that no consecutive, increasing digits could occur in the sequence. All digits within a single stream had a 500 ms inter-onset interval, and the to-be-ignored stream always started 250 ms after the to-be-attended stream, so that listeners heard digits alternating between the ears every 250 ms. (Unfortunately, because of these constant inter-token intervals, the 60 Hz power line noise was in the same phase for each of the 120 tokens in each of the two streams, leading to artifacts in the measurements at multiples of 60 Hz in this experiment; this issue was corrected in Experiment 2.) An additional set of monaural stimuli was constructed in a similar fashion, except that the distractor stream was not included (“attended/monaural”).
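The target constraints for the attended stream can be satisfied by first placing the target start positions and then filling the remaining slots so that no unintended increasing pair arises. The sketch below is one way to do this, not the procedure actually used. It interprets “separated by at least 10 other digits” as a difference of at least 11 between successive (0-based) start indices and draws digits uniformly from those allowed.

```python
import random

def target_starts(seq):
    """0-based indices i where seq[i], seq[i + 1] form an increasing consecutive pair."""
    return [i for i in range(len(seq) - 1) if seq[i + 1] == seq[i] + 1]

def attended_stream(n=120, n_min=4, n_max=6, min_gap=11, edge=3, rng=random):
    """Digits 1-5 with n_min..n_max targets and no unintended increasing pairs.

    min_gap=11 encodes "at least 10 other digits" between target starts
    (our interpretation); edge=3 keeps starts out of the first/last 3 slots.
    """
    while True:                                   # rejection-sample the start positions
        k = rng.randint(n_min, n_max)
        starts = sorted(rng.sample(range(edge, n - edge), k))
        if all(b - a >= min_gap for a, b in zip(starts, starts[1:])):
            break
    starts = set(starts)
    seq = []
    while len(seq) < n:
        prev = seq[-1] if seq else None
        if len(seq) in starts:                    # place a target pair d, d + 1
            d = rng.choice([x for x in range(1, 5) if prev is None or x != prev + 1])
            seq += [d, d + 1]
        else:                                     # filler that does not complete a pair with prev
            seq.append(rng.choice([x for x in range(1, 6) if prev is None or x != prev + 1]))
    return seq

def distractor_stream(n=120, rng=random):
    """Digits 1-5 with no increasing consecutive pair anywhere."""
    seq = [rng.randint(1, 5)]
    while len(seq) < n:
        seq.append(rng.choice([x for x in range(1, 6) if x != seq[-1] + 1]))
    return seq
```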
On half of all trials, the output stimulus was inverted in polarity to allow us to compute the subcortical response to the envelope rather than the response arising from the combination of phase-locked activity to the envelope and fine structure of the stimulus (Aiken and Picton, 2008; Skoe and Kraus, 2010a).
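The logic of polarity averaging can be illustrated with an idealized response model, assuming that the envelope-following component is identical for both stimulus polarities while the fine-structure component flips sign. Real responses only approximately satisfy this assumption, and the frequencies and amplitudes below are arbitrary illustrative values.

```python
import numpy as np

fs, f0, fc = 4096, 97.0, 500.0                 # illustrative values (assumptions)
t = np.arange(0, 0.5, 1 / fs)
env_resp = np.cos(2 * np.pi * f0 * t)          # envelope-following component
tfs_resp = 0.5 * np.cos(2 * np.pi * fc * t)    # fine-structure-following component

r_pos = env_resp + tfs_resp    # response to the original polarity
r_neg = env_resp - tfs_resp    # inverting the stimulus flips only the fine-structure part
efr = (r_pos + r_neg) / 2      # polarity average: fine structure cancels, envelope remains
tfs = (r_pos - r_neg) / 2      # polarity difference isolates the fine-structure part
```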

These manipulations (2 frequencies × 2 attended locations × 2 polarities for both dichotic and monaural stimuli) were counterbalanced across all experimental conditions for each session. Within an experimental session, each trial type was repeated twice, for a total of 32 trials per session.
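The count of 32 trials per session follows from the factorial design: 2 presentation modes × 2 attended frequencies × 2 attended sides × 2 polarities gives 16 trial types, each repeated twice. The sketch enumerates them; the shuffled presentation order is an assumption, since the ordering scheme is not described here.

```python
import itertools
import random

presentations = ("dichotic", "monaural")
freqs = (97, 113)                # attended carrier frequency (Hz)
sides = ("left", "right")        # attended ear
polarities = (+1, -1)
reps_per_session = 2

trial_types = list(itertools.product(presentations, freqs, sides, polarities))
session = trial_types * reps_per_session
random.shuffle(session)          # presentation order (randomization is an assumption)
print(len(trial_types), len(session))  # 16 32
```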

4.1.3. Stimulus presentation and task details

Experimental flow was controlled in Matlab with the PsychToolbox 3 extension (Brainard, 1997; Kleiner and Brainard, 2007) installed to present on-screen instructions. Matlab was interfaced with Tucker-Davis Technologies (Alachua, FL, USA) System 3 hardware for D/A conversion and playback over Etymotic (Elk Grove Village, IL, USA) ER-1 insert earphones. Listeners were seated in a sound-attenuating booth (Eckel; Cambridge, MA, USA) for the duration of the experiment. The audio presentation was set to a comfortable listening level for each subject (in the range of 66–73 dB SPL, computed using a 1 kHz pure tone with the same RMS level) within each session.

On each trial, a small cross was presented in the middle of the screen, and subjects were instructed to fixate their gaze on it without blinking excessively. Prior to the start of sound playback, a small arrow was drawn on screen to indicate the side (left or right) to which the subject should direct his or her attention. After 1 s, the arrow disappeared and audio playback commenced. Subjects pressed a button on a response box whenever they detected a target sequence (two sequential numbers in a row in the target stream). Subjects were scored as having missed a target if they did not respond within 2 s of the occurrence of the second digit in a target sequence; responses outside the two-second window were labeled as false alarms, with a corresponding false alarm rate computed as the ratio of false responses to the total number of non-target stimuli presented. Once playback was complete, the cross in the center of the screen turned red, and listeners were able to rest before beginning the next trial at their discretion. No feedback was given to subjects during the task. Each trial lasted a little longer than a minute, and subjects were given a short break after every 8 trials.
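The scoring rule can be sketched as follows, assuming times in seconds. Two details are our assumptions rather than reported rules: a press must occur at or after the onset of the target's second digit, and each press can be credited to at most one target, with any additional presses counted as false alarms.

```python
def score_responses(target_times, press_times, window=2.0):
    """Count hits, misses, and false alarms for one trial.

    target_times : onset times (s) of the second digit of each target pair
    press_times  : button-press times (s)
    """
    used = set()
    hits = 0
    for t in target_times:
        # first unused press within [t, t + window] is credited to this target
        match = next((j for j, p in enumerate(press_times)
                      if j not in used and t <= p <= t + window), None)
        if match is not None:
            used.add(match)
            hits += 1
    misses = len(target_times) - hits
    false_alarms = len(press_times) - len(used)   # every uncredited press
    return hits, misses, false_alarms
```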

Subject performance was quantified using signal detection theory. We used d′ and β as measures of sensitivity and response bias, respectively (Macmillan and Creelman, 2005; Abdi, 2007). In these computations, the hit rate was defined as the ratio of correct detections to the total number of target digit presentations within a condition, and the false alarm rate was defined as the ratio of the number of responses outside a target window to the total number of non-target digits presented to the attended ear within a condition. Extreme proportions of 0 or 1 were corrected by recomputing the ratios as 0.5/N or 1 − 0.5/N, respectively, where N is the number of trials used to compute the rates (Macmillan and Kaplan, 1985; Hautus, 1995).
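The d′ and β computations, including the 0.5/N correction for extreme proportions, can be written with the Python standard library alone. This is a minimal sketch; β is computed as the ratio of normal densities at the hit and false-alarm z-scores, exp((z_F² − z_H²)/2).

```python
from math import exp
from statistics import NormalDist

def rate(count, n):
    """Proportion count/n, with 0 -> 0.5/n and 1 -> 1 - 0.5/n."""
    r = count / n
    if r == 0:
        return 0.5 / n
    if r == 1:
        return 1 - 0.5 / n
    return r

def dprime_beta(hits, n_targets, false_alarms, n_nontargets):
    """Sensitivity d' = z(H) - z(F) and likelihood-ratio bias beta."""
    z = NormalDist().inv_cdf
    zh = z(rate(hits, n_targets))
    zf = z(rate(false_alarms, n_nontargets))
    return zh - zf, exp((zf ** 2 - zh ** 2) / 2)
```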

4.1.4. EEG recording and EFR analysis procedures

EEG data were collected from subjects during the task using a Biosemi (Amsterdam, Netherlands) ActiveTwo system sampling at 16,384 Hz. Responses were collected from 32 scalp electrodes. Data from a pair of reference electrodes, one affixed to each earlobe, were also collected for use in offline analysis. One additional channel recorded event markers indicating the time of each digit onset and each subject button press. Recordings were monitored online to ensure that subjects were not closing their eyes during audio playback.

Initial assessment of signal quality

The signal quality of the channels was assessed visually after applying a 70–1,800 Hz first-order Butterworth filter using the EDFBrowser software package (http://www.teuniz.net/edfbrowser/). Any channel observed to have an excessive number of noisy data points (e.g., motion artifacts, muscle fiber activity, or artifacts resulting from poor electrode contact, all of which are visually distinguishable from the electrical signals of interest) was noted and excluded from all subsequent analyses.

Phase-locking computations

After exclusion of channels identified as artifact-contaminated, the raw signals from the scalp and reference electrodes were filtered with a 70–1800 Hz FIR filter and downsampled to 4096 Hz, then time shifted to compensate for the group delay imposed by the bandpass filtering. The scalp data were then re-referenced to the average of the signal at the two earlobe electrodes. Data were split into 500-ms long epochs aligned to the starting point of the digit. These epochs were grouped according to whether (1) they corresponded to a digit from the attended stream, the ignored stream, or a monaural stream, (2) the stream had a carrier frequency (i.e., click rate) of 97 Hz or a carrier frequency of 113 Hz, and (3) the stimulus was presented in positive or negative polarity. We note that while our method of splitting the data resulted in responses from both the attended and the ignored digits being present in each epoch collected during dichotic stimulation, the responses to each digit should have distinct and dissociable frequency components, as the two digits had distinct, resolvable fundamental frequencies. Finally, trials showing deflections greater than 65 μV in any channel were marked as artifact-contaminated and discarded.
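The preprocessing chain can be sketched with SciPy as follows. Assumptions not stated in the text: a 1025-tap linear-phase FIR band-pass (the actual filter order is not given), decimation by simple sample selection after band-limiting below the new Nyquist frequency, and artifact rejection based on the peak absolute amplitude of each epoch across channels after filtering and re-referencing. Onsets are given in samples at the downsampled rate.

```python
import numpy as np
from scipy.signal import firwin, lfilter

def preprocess(raw, refs, onsets, fs_in=16384, fs_out=4096, band=(70.0, 1800.0),
               numtaps=1025, epoch_s=0.5, reject_v=65e-6):
    """Band-pass, downsample, re-reference, epoch, and reject EEG epochs.

    raw    : (n_channels, n_samples) scalp EEG in volts at fs_in
    refs   : (2, n_samples) left/right earlobe channels
    onsets : digit onset times in samples at fs_out
    Returns kept epochs (n_kept, n_channels, n) and a keep mask over usable onsets.
    """
    taps = firwin(numtaps, band, pass_zero=False, fs=fs_in)   # linear-phase FIR (assumed order)
    delay = (numtaps - 1) // 2                                 # FIR group delay in samples
    step = fs_in // fs_out

    def filt(x):
        y = lfilter(taps, 1.0, x, axis=-1)
        pad = np.zeros(y.shape[:-1] + (delay,))
        y = np.concatenate([y[..., delay:], pad], axis=-1)     # undo the group delay
        return y[..., ::step]                                   # passband is below new Nyquist

    eeg = filt(raw) - filt(refs).mean(axis=0)                   # average-earlobe reference
    n = int(round(epoch_s * fs_out))
    epochs = np.stack([eeg[:, o:o + n] for o in onsets if o + n <= eeg.shape[1]])
    keep = np.abs(epochs).max(axis=(1, 2)) <= reject_v          # peak-amplitude rejection
    return epochs[keep], keep
```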

EFR strength was quantified using the phase-locking value (PLV; Lachaux et al., 1999; Zhu et al., 2013). Because the vocoding procedure produced tokens with identical envelope phase relationships relative to one another, no token-specific adjustment of the response phase was necessary in the PLV computation. The SNR of the PLV estimates was improved by using the multi-taper, complex-valued eigenvalue decomposition method described in Bharadwaj and Shinn-Cunningham (2014), as implemented in the “ANLffr” version 0.1.0 software package (http://github.com/haribharadwaj/ANLffr). Briefly, the method involves multiplying the data from each epoch by a discrete prolate spheroidal sequence window (DPSS; also known as a Slepian sequence; Slepian, 1978), deriving a cross-spectral density matrix across channels from the data, and then performing an eigenvalue decomposition on this matrix to obtain the squared PLV (p²), combining spectral information across multiple channels. This procedure is then repeated on the data with different (orthogonal) tapers applied to the time-domain signal, and the final result is obtained by averaging the estimates obtained from each taper. The first three DPSS tapers for a time-half-bandwidth product (NW) of 2 were used for this analysis. A 4096-point FFT was used in computing the cross-spectral densities; at the 4096 Hz sampling rate, this yields a frequency axis sampled at 1 Hz.
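A simplified sketch of the multitaper, multichannel computation is shown below. It is not the ANLffr implementation. The function name and the way the cross-channel matrix is built (an outer product of per-channel, trial-averaged unit phasors) are assumptions. In this simplified form the matrix is rank one, so its largest eigenvalue divided by the channel count equals the channel-averaged single-channel squared PLV. The published method may weight channels differently, so treat this only as an illustration of the data flow: taper, FFT, cross-channel matrix, eigenvalue, average over tapers.

```python
import numpy as np
from scipy.signal.windows import dpss


def multitaper_multichannel_plv2(epochs, nw=2.0, n_tapers=3, nfft=4096):
    """Squared PLV per frequency bin, pooled across channels.

    epochs : (n_trials, n_channels, n_samples) array
    Returns nfft // 2 + 1 values (bin spacing fs / nfft, i.e. 1 Hz at 4096 Hz).
    Assumption: simplified rank-one cross-channel matrix (not ANLffr's code).
    """
    n_trials, n_channels, n_samples = epochs.shape
    tapers = dpss(n_samples, nw, n_tapers)  # (n_tapers, n_samples), unit energy
    estimates = []
    for taper in tapers:
        spec = np.fft.rfft(epochs * taper, n=nfft, axis=-1)
        # Keep only the phase of each trial's spectrum
        phasors = spec / np.maximum(np.abs(spec), np.finfo(float).tiny)
        c = phasors.mean(axis=0)  # (n_channels, n_freqs) trial-averaged phasors
        # Hermitian cross-channel matrix for every frequency bin
        csd = np.einsum("cf,df->fcd", c, c.conj())
        # Largest eigenvalue (eigvalsh sorts ascending), normalised by channels
        estimates.append(np.linalg.eigvalsh(csd)[:, -1] / n_channels)
    return np.mean(estimates, axis=0)  # average over tapers
```

Note that the odd-indexed DPSS taper sums to zero, so for a response centred exactly on a bin it contributes little at that bin. Averaging over tapers trades this for reduced variance in neighbouring bins.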

The variance and bias of PLV estimates derived using the complex PCA method depend on the number of electrodes used in the computations (Bharadwaj and Shinn-Cunningham, 2014). Because all subjects had clean signals from at least 20 electrodes, we fixed the number of electrodes used in the phase-locking calculations at 20: we first selected the 14 electrode sites that were clean in all subjects, then added electrodes at random from the remaining clean electrodes for that subject until the total reached 20.
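The electrode-selection rule can be sketched as follows. The channel names, the function name `choose_electrodes`, and the use of Python's `random.Random` with an optional seed are illustrative assumptions.

```python
import random


def choose_electrodes(good_channels, common_sites, n_total=20, seed=None):
    """Fixed common sites plus randomly drawn extra clean electrodes.

    Hypothetical helper: channel names and seeding are illustrative only.
    """
    missing = [ch for ch in common_sites if ch not in good_channels]
    if missing:
        raise ValueError(f"common sites not clean for this subject: {missing}")
    extras = [ch for ch in good_channels if ch not in common_sites]
    n_extra = n_total - len(common_sites)
    if n_extra > len(extras):
        raise ValueError("not enough clean electrodes to reach n_total")
    rng = random.Random(seed)
    # Sample without replacement so no electrode is used twice
    return list(common_sites) + rng.sample(extras, n_extra)
```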

The bias and variance of PLV estimates in general depend on the number of trials used in the computation (Bokil et al., 2007; Zhu et al., 2013). Because we were interested in within-subject effects of attention (i.e., the relative strength of the PLV across attentional states for a given subject), we analyzed the same number of electrodes and trials across the conditions of interest within a subject. For each subject, this number was the minimum number of trials available across attention conditions that allowed an equal number of positive- and negative-polarity trials to be included in each PLV calculation. Because each subject performed the task over multiple sessions, there may have been differences in cap positioning, background noise levels, or overall subject state across sessions that make it difficult to combine data across days. To avoid this confound, we performed PLV computations on data from a single recording session per subject, choosing the session that maximized the number of artifact-free trials available for analysis. Overall, these selection procedures resulted in PLVs being computed on a minimum of 572 trials/condition (286/polarity) for the noisiest dataset and a maximum of 952 trials/condition (476/polarity) for the dataset with the fewest artifacts. Bootstrapped estimates of PLVs in the frequency range 70–600 Hz were obtained by averaging the results of 240 PLV computations on resampled datasets, each obtained by sampling M trials with replacement from among the M trials available per polarity.
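A minimal sketch of the trial equalization and polarity-balanced bootstrap follows, for a single channel and a single frequency bin to keep it short. The function names are hypothetical. Keeping the first M trials of each polarity as the pool is an assumption, since the text does not say how the M trials were chosen.

```python
import numpy as np


def equalized_trial_count(counts):
    """Trials per polarity usable in every condition (M in the text).

    counts : {condition: (n_positive, n_negative)} artifact-free trial counts
    """
    return min(min(n_pos, n_neg) for n_pos, n_neg in counts.values())


def plv(phases):
    """Single-channel PLV from per-trial phases (radians) at one frequency."""
    return np.abs(np.exp(1j * np.asarray(phases)).mean())


def bootstrap_plv(pos_phases, neg_phases, m, n_boot=240, seed=0):
    """Mean PLV over n_boot resamples, each with m trials per polarity.

    Assumption: the first m trials of each polarity form the pool; each
    replicate then draws m of them with replacement.
    """
    rng = np.random.default_rng(seed)
    pos = np.asarray(pos_phases)[:m]
    neg = np.asarray(neg_phases)[:m]
    estimates = np.empty(n_boot)
    for b in range(n_boot):
        resampled = np.concatenate([rng.choice(pos, size=m, replace=True),
                                    rng.choice(neg, size=m, replace=True)])
        estimates[b] = plv(resampled)
    return estimates.mean()
```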

For each subject, condition, and frequency bin in this range, we empirically derived the noise-floor distribution (i.e., the expected PLV under our analysis procedures in the absence of any phase-locked neural activity; the null model) by reversing the phase of half of the trials and computing the resulting PLV. For PLVs computed on single-channel data, the noise floor for stationary noise is theoretically the same in every frequency bin and depends only on the number of trials available for analysis. In practice, the observed floor is close to this theoretical value, suggesting that non-stationarities in the measured noise do not strongly affect the results (Zhu et al., 2013). When the frequency-domain eigenvalue decomposition is used to obtain PLVs from multiple channels, however, this assumption may not hold at lower (cortical) frequencies if activity is strongly correlated across electrode sites. In the frequency range of interest in this study (above 70 Hz), these spatial correlations should be minimal. Because we observed no significant differences in the noise floor across conditions, we pooled the noise-floor estimates across all conditions and all frequency bins in the 70–600 Hz range for each subject $s$, and constructed a subject-specific noise distribution by computing the mean $\mu_{s,\mathrm{noise}}$ and variance $\sigma^2_{s,\mathrm{noise}}$ of the middle 95% of these values.
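The phase-reversal null model and the trimmed noise statistics can be sketched as below, again for one channel and one frequency bin. The function names are hypothetical, and the random choice of which half of the trials to invert is an assumption.

```python
import numpy as np


def noise_floor_plv(phases, rng):
    """PLV after inverting a random half of the trials (the null model).

    Inverting a trial's waveform shifts its phase by pi at every frequency,
    so any response that is phase-locked across trials cancels out.
    """
    z = np.exp(1j * np.asarray(phases))
    flip = rng.permutation(len(z)) < len(z) // 2  # random half of the trials
    return np.abs(np.where(flip, -z, z).mean())


def trimmed_noise_stats(noise_values, central=0.95):
    """Mean and variance of the central 95% of pooled noise-floor values."""
    v = np.asarray(noise_values, dtype=float)
    lo, hi = np.quantile(v, [(1 - central) / 2, (1 + central) / 2])
    core = v[(v >= lo) & (v <= hi)]
    return core.mean(), core.var(ddof=1)
```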

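The noise mean and variance were used to convert the bootstrapped phase-locking estimates from the original, phase-intact data into a z-score for each condition $c$, which was then used for all further analyses:

$$z_{s,c}(f) = \frac{\mathrm{PLV}_{s,c}(f) - \mu_{s,\mathrm{noise}}}{\sigma_{s,\mathrm{noise}}},$$

where $\sigma_{s,\mathrm{noise}}$ is the square root of the noise variance for subject $s$.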

Using this scaling, a peak may be considered “significantly above the noise floor” if its z-score exceeds 1.64, the 95th percentile of the standard normal distribution (i.e., a one-tailed criterion at α = 0.05).
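The z-score conversion and the 1.64 criterion amount to a few lines of arithmetic. The sketch below uses hypothetical function names and takes the noise mean and variance as given.

```python
import numpy as np
from scipy.stats import norm

# One-sided 95th percentile of the standard normal distribution (about 1.645)
Z_CRIT = norm.ppf(0.95)


def plv_zscore(plv_values, noise_mean, noise_var):
    """Express phase-locking estimates in noise-floor standard deviations."""
    return (np.asarray(plv_values, dtype=float) - noise_mean) / np.sqrt(noise_var)


def significant_bins(freqs, z, crit=Z_CRIT):
    """Frequencies whose z-score exceeds the criterion."""
    return np.asarray(freqs)[np.asarray(z) > crit]
```

For example, a PLV of 0.05 against a noise mean of 0.01 and a noise variance of 1e-4 gives z = (0.05 − 0.01) / 0.01 = 4, well above the criterion.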

Statistical analyses of z-scores were conducted in R (R Core Team, 2015). Repeated-measures ANOVAs were conducted using the ezANOVA function of the “ez” package (Lawrence, 2015). Effect sizes for ANOVA factors were quantified using generalized eta-squared ($\eta_G^2$), a measure of effect size suitable for comparisons across different experimental designs (Olejnik and Algina, 2003; Bakeman, 2005). When the sphericity assumption (equal variances of the differences between all pairs of conditions) was violated, a Greenhouse-Geisser correction was applied to the degrees of freedom of the reference F-distribution used to compute p-values from the F statistics (Keselman et al., 2001).
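For a single within-subject factor, the quantities named above can be sketched directly in Python. This mirrors the kind of output ezANOVA reports (F, generalized eta-squared, Greenhouse-Geisser epsilon and corrected p), but it is not that package's code. The function name is hypothetical, and the design is restricted to one factor for brevity.

```python
import numpy as np
from scipy.stats import f as f_dist


def rm_anova_oneway(y):
    """One-way repeated-measures ANOVA on y of shape (n_subjects, k_conditions)."""
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * ((y.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((y.mean(axis=1) - grand) ** 2).sum()
    ss_err = ((y - grand) ** 2).sum() - ss_cond - ss_subj
    df1, df2 = k - 1, (n - 1) * (k - 1)
    F = (ss_cond / df1) / (ss_err / df2)
    # Generalized eta-squared for a single within-subject factor
    ges = ss_cond / (ss_cond + ss_subj + ss_err)
    # Greenhouse-Geisser (Box) epsilon from orthonormal contrasts: the QR
    # factor's first column is parallel to the constant vector, so the
    # remaining k-1 columns are orthonormal contrasts.
    q, _ = np.linalg.qr(np.column_stack([np.ones(k), np.eye(k)[:, :k - 1]]))
    contrasts = q[:, 1:]
    m = contrasts.T @ np.cov(y, rowvar=False) @ contrasts
    eps = np.trace(m) ** 2 / ((k - 1) * np.trace(m @ m))
    p_gg = f_dist.sf(F, eps * df1, eps * df2)  # corrected degrees of freedom
    return {"F": F, "df": (df1, df2), "ges": ges, "eps": eps, "p_gg": p_gg}
```

Epsilon is bounded between 1/(k − 1) and 1. With two conditions it is exactly 1, so the correction only matters for factors with three or more levels.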

PLV z-scores were subjected to a Bayes factor analysis (Kass and Raftery, 1995; Rouder et al., 2012) using the “BayesFactor” R package (Morey et al., 2015). For this analysis, a default, non-informative Cauchy prior distribution was placed on the standardized effect size (Rouder et al., 2012).
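To illustrate what a Cauchy prior on the standardized effect size implies, the sketch below computes the JZS Bayes factor for a one-sample or paired t statistic by numerical integration. It writes the Cauchy(0, r) prior as a normal prior with variance g and an inverse-gamma(1/2, r²/2) prior on g. It covers only the t-test case, not the ANOVA-style Bayes factors the BayesFactor package can also compute. The scale r = √2/2 is an assumption here (it is the “medium” scale we understand ttestBF to use by default), and `jzs_bf10` is a hypothetical name.

```python
import math

from scipy.integrate import quad


def jzs_bf10(t, n, r=math.sqrt(2) / 2):
    """JZS Bayes factor BF10 for a one-sample or paired t statistic.

    Assumption: Cauchy(0, r) prior on effect size, with r = sqrt(2)/2.
    """
    nu = n - 1
    null_lik = (1 + t * t / nu) ** (-(nu + 1) / 2)

    def integrand(g):
        if g <= 0:
            return 0.0
        # Inverse-gamma(1/2, r^2/2) density of g, computed on the log scale
        log_prior = (math.log(r / math.sqrt(2 * math.pi))
                     - 1.5 * math.log(g) - r * r / (2 * g))
        lik = ((1 + n * g) ** -0.5
               * (1 + t * t / ((1 + n * g) * nu)) ** (-(nu + 1) / 2))
        return lik * math.exp(log_prior)

    alt_lik, _ = quad(integrand, 0, math.inf)
    return alt_lik / null_lik
```

Values below 1 favour the null hypothesis. For t = 0 the Bayes factor is always below 1, which is how this kind of analysis can express positive evidence for the absence of an effect.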

4.2. Experiment 2

Experiment 2 was similar to Experiment 1, except for some minor changes in stimulus construction and the addition of an “ignore-auditory” condition. These differences are described below.

Thirteen subjects (3 males, 10 females, age 20–29) recruited from the Boston University student population participated in Experiment 2; none had participated in Experiment 1. All subjects were native speakers of American English and were screened to ensure that they had hearing thresholds below 20 dB HL in both ears at frequencies between 250 Hz and 8 kHz. Subjects signed informed consent documents approved by the Boston University Charles River Campus IRB and were compensated $25/hour for their participation. Recordings were acquired in a single experimental session. The total recording time per subject was approximately 1.5 h, including breaks. Data from one subject were excluded because of unusually high PLVs (z-scores > 700), which suggested that an electromechanical artifact had compromised the measured signals.

4.2.1. Stimulus presentation and task details

Stimuli were generated as in Experiment 1, with the following key differences: (1) each stream contained 140 rather than 120 digits; (2) vocoded digits were equated in RMS energy on a per-token basis rather than on a per-trial basis; (3) digit onsets within each stream were jittered in time by up to 100 ms, with the constraint that no onset in one stream could occur within 25 ms of an onset in the other stream; (4) there were always exactly 4 target digits in the attended stream, rather than a random number between 4 and 6; (5) audio stimuli were presented at a fixed level across subjects (the same RMS level as a 1 kHz pure tone presented at 75 dB SPL), rather than at a “comfortable” level chosen subjectively by the subject; and (6) positive- and negative-polarity stimuli were included within the same trial, rather than all stimuli in a trial having the same polarity.
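One way to generate onset times that satisfy constraint (3) is rejection sampling, sketched below. The nominal onset spacing (`spacing_s`), the choice of a one-sided 0–100 ms jitter, and the function name are hypothetical; this section does not state how the jitter was actually drawn.

```python
import numpy as np


def jittered_onsets(n_digits, spacing_s, max_jitter_s=0.1, min_gap_s=0.025,
                    seed=None, max_tries=10_000):
    """Onset times (s) for two streams with no cross-stream onsets closer than min_gap_s.

    Assumptions: both streams share a hypothetical nominal grid (spacing_s)
    and each onset is delayed by a uniform 0-100 ms jitter.
    """
    rng = np.random.default_rng(seed)
    nominal = np.arange(n_digits) * spacing_s
    a = nominal + rng.uniform(0, max_jitter_s, n_digits)
    b = np.empty(n_digits)
    for i, t in enumerate(nominal):
        for _ in range(max_tries):
            cand = t + rng.uniform(0, max_jitter_s)
            # Accept only if the candidate is far enough from every onset in stream a
            if np.min(np.abs(a - cand)) >= min_gap_s:
                b[i] = cand
                break
        else:
            raise RuntimeError("could not satisfy the onset-separation constraint")
    return a, b
```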

There were three conditions in Experiment 2: (1) “attend” to a monaural digit stream (“monaural”); (2) selectively attend to one stream in a dichotic mixture comprising one stream at 113 Hz and one stream at 97 Hz (“selective attention”); and (3) attend to a visual digit stream during presentation of a monaural digit stream (“visual task”). In the visual task, a digit stream was presented at the center of the computer screen; the visual stream was similar to the auditory streams in its timing and content. These visual trials were included solely to determine whether responses evoked by auditory stimuli are altered when subjects focus attention on a non-auditory stimulus. As such, visual stimulus parameters (e.g., visual angle, luminance) were not controlled. In all cases, which ear received the stream to attend and which received the stream to ignore was counterbalanced across trials.

To ensure maximum engagement with the task and to incentivize selective listening on dichotic trials, subjects were paid a small financial bonus of about $0.05 for each correct target detection and penalized about $0.03 for each false alarm. These financial bonuses did not affect subjects’ base compensation, and were capped at a maximum of $10.00 in the event of perfect performance.

Stimuli were presented as in Experiment 1. At the beginning of each trial, a green arrow pointing either left or right (for attend-monaural and attend-dichotic trials) or the message “on-screen” (for attend-visual trials) appeared at the center of the computer screen to indicate the task type. A simultaneously presented red arrow indicated that subjects should ignore any sounds originating from that direction. Subjects pressed a button to acknowledge the instructions, after which the arrows were replaced with a single gray fixation cross at the center of the screen. Stimulus presentation then commenced. Participants completed the same task as in Experiment 1, pressing a button whenever they heard two consecutive, increasing digits when attending to an audio stream, or whenever they saw two consecutive, increasing digits presented on screen during the attend-visual trials. Participants were instructed to keep their eyes open without blinking excessively and to do their best to keep their gaze on the center of the screen for the duration of the trial. The fixation cross turning red signaled the end of a trial. Subjects’ responses were scored as in Experiment 1, but subjects were given feedback after each trial in the form of the number of correct responses, the number of false presses, and their running “bonus” total (described above). Participants were given a short break after every 12 trials.

4.2.2. EEG recording and EFR analysis procedures

EEG was collected from subjects using a Biosemi ActiveTwo system sampling at 4096 Hz while they performed the task. Subcortical EFR analysis procedures were similar to those in Experiment 1, with the following differences: (1) 23 electrodes were used in the computation of PLVs (15 electrode sites in common and 8 additional electrodes selected per subject), reflecting the number of “clean” electrodes available in the noisiest subject; (2) the number of trials used in the PLV calculations was fixed for each subject based on the minimum number of trials per data pool available when the dataset was broken down by attention condition, frequency, digit, side of presentation, and polarity. Across subjects, this procedure resulted in between 720 trials (36/digit/side/polarity) and 1060 trials (53/digit/side/polarity) being used for PLV calculations. Resampled datasets of equal size were constructed by selecting an equal number of trials with replacement from each data pool (digit/side/polarity) per subject and condition; PLVs were computed from these resampled pools. As in Experiment 1, the bootstrapping procedure was repeated 240 times to obtain final estimates of the stimulus-induced PLV as well as the noise floor distribution.
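The pool-equalized resampling can be sketched as below. Each trial carries a hashable pool label (for example a (digit, side, polarity) tuple). The per-pool count is the size of the smallest pool, and each bootstrap replicate draws that many trials with replacement from every pool. The function name and label format are hypothetical.

```python
import numpy as np
from collections import Counter


def stratified_resample(labels, seed=None):
    """One bootstrap replicate with an equal number of trials from every pool.

    labels : sequence of hashable pool keys, one per trial,
             e.g. hypothetical (digit, side, polarity) tuples.
    Returns (trial indices, trials drawn per pool).
    """
    rng = np.random.default_rng(seed)
    n_per_pool = min(Counter(labels).values())  # size of the smallest pool
    pools = {}
    for i, key in enumerate(labels):
        pools.setdefault(key, []).append(i)
    picks = [rng.choice(idx, size=n_per_pool, replace=True)
             for idx in pools.values()]
    return np.concatenate(picks), n_per_pool
```

With 5 digits, 2 sides, and 2 polarities (20 pools), a smallest pool of 36 trials gives 720 trials per replicate, matching the lower bound quoted above.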

Statistical analysis was performed in R using the same procedures previously described for Experiment 1.

4.2.3. Cortical alpha band analysis

Recordings from the 12 subjects for whom EFR PLVs were computed were used in this analysis. For these subjects, we considered the 36 trials in which they either attended to a 113 Hz stimulus in the dichotic condition, attended to a 113 Hz stimulus when presented monaurally, or ignored the 113 Hz stimulus while performing the visual task.

Data from electrode locations CP1, CP2, CP5, CP6, P3, Pz, P4, PO3, PO4, O1, Oz, and O2 (in 10–20 notation) were considered for this analysis. Channels from this set were visually inspected after the application of a 1–40 Hz first-order Butterworth bandpass filter. This resulted in three channels from a single subject being removed from further analyses (electrode locations Oz, O1, and O2 in Subject 12, all of which appeared to be making poor contact with the scalp).

Alpha activity was isolated by applying an 8–13 Hz bandpass FIR filter to the raw data and re-referencing the data to the average of the signal at the two earlobes; the filtered signals were then downsampled to 64 Hz. The envelope of this signal was extracted by full-wave rectifying it and low-pass filtering with a 31-tap FIR moving-average filter with Gaussian weighting (i.e., a Gaussian window in the time domain). The constant group delay introduced by each FIR filter was compensated by time shifting after filtering. The envelope amplitude was converted to decibels (dB) relative to each subject’s mean alpha activity in the 3.5 s before the start of stimulus presentation. After processing, approximately 64.2 s of data (including the 3.5-s baseline) were retained per trial.
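The alpha-envelope computation can be sketched as follows for a single channel that has already been re-referenced. Because re-referencing and filtering are both linear, applying them in either order gives the same result. The 4097-tap `firwin` band-pass design, the Gaussian standard deviation of 5 samples, and the use of 20·log10 for the amplitude-to-dB conversion are assumptions not given in the text, and `alpha_envelope_db` is a hypothetical name.

```python
import numpy as np
from scipy.signal import fftconvolve, firwin
from scipy.signal.windows import gaussian


def zero_delay_fir(x, taps):
    """Apply a symmetric odd-length FIR filter and undo its group delay."""
    delay = (len(taps) - 1) // 2
    return fftconvolve(x, taps)[delay:delay + len(x)]


def alpha_envelope_db(x, fs=4096, fs_out=64, baseline_s=3.5,
                      bp_taps=4097, smooth_taps=31, smooth_std=5.0):
    """Alpha-band (8-13 Hz) envelope in dB re the pre-stimulus baseline.

    x : 1-D signal, already re-referenced to the earlobe average.
    Assumptions: filter length, Gaussian width, and 20*log10 scaling.
    """
    bp = firwin(bp_taps, [8.0, 13.0], pass_zero=False, fs=fs)
    alpha = zero_delay_fir(x, bp)[:: fs // fs_out]  # band-pass, then downsample
    win = gaussian(smooth_taps, smooth_std)
    # Full-wave rectify, then smooth with a unit-gain Gaussian-weighted average
    env = zero_delay_fir(np.abs(alpha), win / win.sum())
    baseline = env[: int(baseline_s * fs_out)].mean()
    return 20 * np.log10(env / baseline)  # amplitude ratio in dB
```

As a check, doubling the amplitude of a 10 Hz oscillation after the baseline period should yield roughly +6 dB away from the filter edges.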

ANOVAs and t-tests for these data were conducted in R.

Acknowledgments