Abstract

Insufficient capacity to use evidence-based programs (EBPs) limits the impact of community-based organizations (CBOs) to improve population health and address health disparities. PLANET MassCONECT was a community-based participatory research (CBPR) project conducted in three Massachusetts communities. Researchers and practitioners co-created an intervention to build capacity among CBO staff members to systematically find, adapt, and evaluate EBPs. The project supported development of trainee social networks and this cross-sectional study examines the association between network engagement and EBP usage, an important goal of the capacity-building program. Trainee cohorts were enrolled from June 2010 to April 2012 and we collected community-specific network data in late 2013. The relationship of interest was communication among network members regarding the systematic approach to program planning presented in the intervention. For Communities A, B, and C, 39/59, 36/61, and 50/59 trainees responded to our survey, respectively. We conducted the full network analysis in Community C. The average degree, or number of connections with other trainees, is a useful marker of engagement; respondents averaged 6.6 reported connections. Degree was associated with recent use of EBPs, in a linear regression, adjusting for important covariates. The results call for further attention to practitioner networks that support the use of research evidence in community settings. Consideration of key contextual factors, including resource levels, turnover rates, and community complexity will be vital for success.

Keywords: Evidence-based programs, Community-based organizations, Community-based participatory research, Social network analysis, Dissemination, Implementation

BACKGROUND

The widespread dissemination and implementation of evidence-based programs (EBPs) in community settings has great potential to improve population health outcomes and mitigate health disparities [1, 2]. Community-based organizations (CBOs) can be more effectively leveraged for health promotion, including organizations working with the underserved, which are an important channel for reaching vulnerable populations [3, 4]. To realize the benefits of evidence-based public health, it is vital to improve the knowledge-production process to better meet the needs of end-users [5] and address capacity gaps that hinder the ability of CBOs to leverage the rich, growing evidence base [6]. Recent efforts in public health departments and other agencies suggest that capacity-building programs may offer an important way to close the gap in ability to use EBPs in community settings [7–9]. Capacity-building programs have been shown to increase practitioners’ knowledge, skills, motivation, and ability to find, implement, and evaluate EBPs [10–12]. However, it is unclear how best to design capacity-building programs to promote use of EBPs [10, 13].

A recent review of capacity-building programs for EBP usage highlights development of a social network among trainees as one of the factors that supports success. Other factors include training, technical assistance, tools, evaluations, incentives, collaboration between trainees and program developers, and adopters’ characteristics [12]. The importance of peer networks is unsurprising, given that the interpersonal relationships that make up social networks are established channels by which ideas and practices spread [14]. Networks impact adoption of innovations through social influence, modeling, and facilitating access to resources, including information [15, 16]. By connecting with others who are tackling similar professional challenges, practitioners can create and share practice-focused knowledge, exchange resources, engage in problem-solving, and establish themselves as local experts [17–20]. Yet, developing CBO practitioner networks may be a challenge given that: (a) substantial resources must be committed to network engagement to see benefits [19] and (b) CBOs tend to experience high staff turnover rates [21].

Given that it is not always clear how best to design capacity-building programs, a community-based participatory research (CBPR) approach may support solutions that meet the needs and contexts of practitioners. The CBPR approach emphasizes respect and co-learning among partners, capacity-building at individual and community levels, systems change, and the need to produce both research and action [22, 23]. For dissemination and implementation science, additional benefits include increased relevance and impact of research efforts and products, engaged processes for adaptation of evidence to diverse practice contexts, ability to leverage practice-based knowledge and ensure knowledge is “translated” from research to practice settings and vice versa, and a focus on sustainable change in practice systems [24–26].

Clearly, practitioner networks are expected to play an important role in implementation of EBPs in community settings, but engagement poses challenges in resource-constrained environments. This study brings together a unique focus on CBO-based practitioners; a goal of creating sustainable, community-based networks; and the use of a participatory approach to create a viable solution. We sought to answer the following question: Is network engagement associated with EBP use among trainees in a capacity-building program for CBO staff members?

METHODS

Intervention overview

Data for this study come from PLANET MassCONECT, a project that used participatory approaches to build capacity for systematic program planning among a diverse range of CBOs working with the underserved in three Massachusetts communities. The communities were defined in terms of their associated Community Health Network Areas, regional coalitions sponsored as a part of regionalization efforts by the Massachusetts Department of Public Health to bring members from different sectors (public, private, and non-profit) together to identify and address priority health areas [27]. As highlighted in Table 1 (which presents data for the main city in each of the three communities), the three communities were diverse in terms of community complexity and scale of health promotion efforts [28–31]. Community complexity is a measure of heterogeneity, in which more complex communities are larger and also more differentiated across sectors, organizations, and population segments [32]. Complexity is also a measure of differentiation and diffusion of power structures. There is an expectation that in more complex communities, one’s day-to-day interactions are less likely to be with kith and kin and more with peers, colleagues and those with aligned interests. Last, complexity influences the information environment in the community [33]. The three communities were selected for the parent grant in 2005 [34] because of their strong health coalitions and interest in using CBPR to address cancer disparities, and also because, as a set, they represented diversity on a number of indicators, including community complexity. Community A had the highest complexity as a large, urban area with great diversity in terms of sociodemographic composition and a large number of actors in the health sector. Community B had a medium level of complexity as it was a mid-size community, with great diversity, partly due to its status as a refugee resettlement area. It had a mid-range number of health sector organizations. Community C had the lowest complexity as it was the smallest and was fairly homogeneous with a predominantly Hispanic population.

Table 1.

Exemplar characteristics of community members and CBOs in the main city for each community in 2010, the start of the intervention

| | Community A | Community B | Community C |
|---|---|---|---|
| Community members | | | |
| Population | 617,680 | 181,041 | 76,377 |
| Racial/ethnic composition | | | |
| White, non-Hispanic | 53.9% | 69.4% | 42.8% |
| Black, non-Hispanic | 24.4% | 11.6% | 7.6% |
| Hispanic | 17.5% | 20.9% | 73.8% |
| Percent foreign-born | 27.0% | 21.4% | 38.3% |
| Percent living below the poverty line | 21.9% | 22.0% | 28.5% |
| Local organizations | | | |
| Number of CBOs addressing health promotion, 2009 | 72 | 42 | 32 |

Community-based participatory research approach

The study was conducted in collaboration with a Community Project Advisory Committee, an advisory group created for the project that included practitioner-leaders from each community (full list in the Acknowledgments section). The committee included interested partners from a previous CBPR grant. Following the principles of CBPR, academic and community partners co-developed the program in a manner that leveraged and built on strengths, resources, and expertise of all partners; emphasized the importance of knowledge and action; and facilitated collaborative, equitable involvement of partners throughout the research process [23]. The project came about as a result of joint issue selection, an important aspect of CBPR projects [23, 35]. Partners noted that local CBOs were being encouraged or mandated to use EBPs by funders, but did not have the capacity to find, use, and evaluate EBPs.

The advisory committee and study team co-developed and refined the capacity-building intervention and evaluations. We employed local community health educators in each partner community. For Community A, the health educator attended coalition meetings, but was based mainly at the investigators’ site. For Communities B and C, health educators were embedded in the local coalitions for the duration of the project and contributed a percentage of effort to the coalition’s work independent of the intervention (e.g., running working groups and supporting outreach efforts). This paper was written by investigators (SR, KV), the community engagement lead (SM), and one community partner (V-DM).

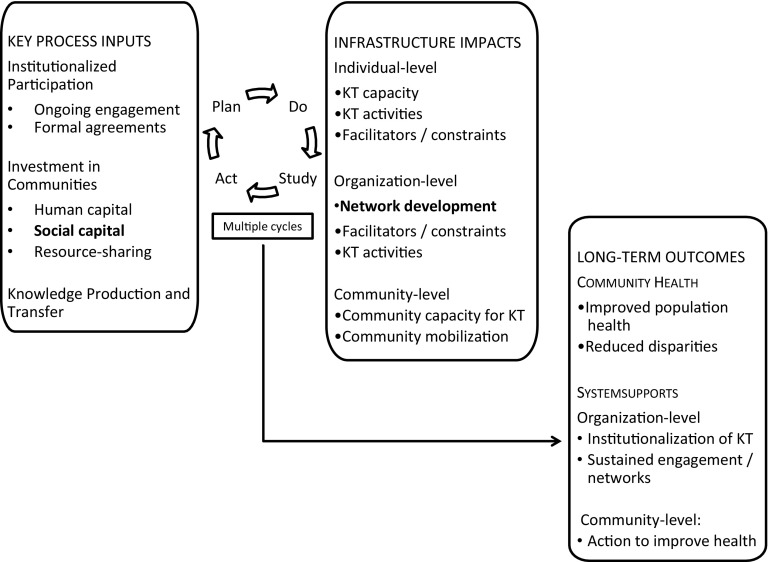

The Participatory Approach to Knowledge Translation (PaKT) Framework [36] guided the PLANET MassCONECT intervention. The framework posits that: (a) a system that allows for ongoing translation of evidence into health promotion and community change efforts can address many of the barriers to improving health and health disparities and (b) such a system requires sustained engagement with community stakeholders. Knowledge translation is conceptually linked to dissemination and implementation science, with an emphasis on “the exchange, synthesis, and ethically-sound application of knowledge—within a complex system of interactions among researchers and users” to improve health, services, and the overall healthcare system [37]. As seen in Fig. 1, there are three major inputs to the framework: institutionalized participation, investment in communities, and knowledge production/transfer, which are linked by iterative Plan-Do-Study-Act (PDSA) cycles [38, 39] to infrastructure impacts and ultimately, long-term outcomes. While the overall project included all of the inputs, the current study focuses on investment in social capital (in the form of network development) and impacts at the individual- and network-levels (in terms of individual-level practice and network structure).

Fig. 1.

The Participatory Approach to Knowledge Translation (PaKT) Framework, bold text highlights study focus

Capacity-building intervention components

The intervention included a number of components to support the goal of repeated engagement with trainees over time. These components included the following: (1) a skill-building workshop, typically delivered over two half-days, which focused on using data, finding partners, exploring intervention approaches, selecting/adapting an EBP, and evaluating the EBP; (2) a tool kit including a customized web portal, a training manual with handouts, and case studies; (3) networking events for additional training and to support the development of a network of dissemination specialists; (4) mini-grants to provide opportunities to apply the systematic approach to program planning; and (5) technical assistance provided by staff members. The training emphasized the use of key national resources, including the CDC Community Guide, which provides systematic reviews related to health promotion intervention strategies [40], and the NCI Cancer Control P.L.A.N.E.T., a web-based resource that provides data and EBPs for cancer control [41]. Trainees were enrolled on a rolling basis to allow for small class sizes (15–20 trainees per session) and to support interaction among trainees and between trainees and trainers. Additional details about the intervention are provided elsewhere [36].

Creating a network of dissemination specialists

The research and community partners had the goal of creating a network of dissemination specialists who could support the use of EBPs in their local communities and share knowledge, solve problems together, and act as resources to each other [18, 19, 42]. The use of a CBPR approach was important for our network development plans. First, we tapped into local practitioner networks to leverage local resources and support sustainable infrastructure. The team conducted recruitment and engagement within existing local health promotion networks, the Community Health Network Areas described above. Second, we facilitated network development by structuring additional training opportunities to include additional content as well as dedicated networking time, keeping the time and resource constraints of CBO practitioners in mind. Activities included four additional events that provided training and facilitated networking (e.g., grouping individuals for discussions by health topic or providing dedicated networking time); regular newsletters that included trainee activities; and a discussion board on the web portal. Third, at the advisory committee’s suggestion, we encouraged inter-organizational collaboration for the mini-grants. As with any social network, peer networks require time and resources to facilitate interaction [19], and the project team built both into network development activities.

Study design

The study utilized whole-network (or sociometric) analysis for each of the three communities. The methods and theoretical paradigm of social network analysis support our focus on: (1) capacity-building as a systems intervention [43] and (2) the importance of social relationships for knowledge sharing and practice change [44, 45]. Network analysis allows the opportunity to move beyond individual-level outcomes and capture outcomes at the dyad- and network-level. The first important design decision was boundary specification; we defined each network as the set of trainees who completed the training workshop and worked in the associated Community Health Network Area. The affiliation at the time of enrollment was assigned to the trainee throughout the intervention. In other words, if someone moved to another area or left their initial organization/position after enrollment, we still counted them as part of the original network as they could still be a resource to that community. The second design decision was to define the relationship of interest. We focused on communication related to the systematic approach (for finding, adapting, and evaluating EBPs) that was presented in the intervention. Communication linkages are well-established conduits for the flow of information and influence [14, 46].

Respondents

Practitioners were eligible for the PLANET MassCONECT intervention if they met the following criteria: they were adults aged 18+, worked for a non-profit organization or public sector service organization in one of the three partner communities, and were engaged in health promotion programming. We recruited trainees from diverse organizations, ranging from health-focused nonprofits to housing authorities to schools. As part of our participatory approach, the project team included local community health educators who were integrated into their local health promotion communities and thus were well-positioned to recruit for the study. We also collected referrals from trainees and depended on advisory committee members to promote the program and guide recruitment. Based on the advisory committee members’ recommendations, the training was positioned as a professional development opportunity subsidized by the National Cancer Institute. Cohorts of trainees were enrolled from June 2010 to April 2012, and intervention activities ended in December 2013. We recruited about 60 trainees from each community. The equal investment of study resources (e.g., number of individuals trained, stipends, and grant funds) into each of the three communities was a function of our CBPR approach.

Data collection

Data for this study come from immediate post-test surveys (collected at the end of the initial workshops, June 2010–April 2012) and the social network survey (September–December 2013). The immediate post-tests were collected in paper format at the end of the workshop or online via a secure data collection service for individuals unable to complete the survey in person. The social network analysis data were collected using a secure online data collection service, and follow-up included weekly contact with non-respondents, alternating between automated emails generated by the data collection system and phone calls from a community health educator. The social network analysis was not part of the original evaluation plan, but instead arose from an opportunity presented through a supplemental award.

Measures—trainee-level

Descriptive measures: Trainee characteristics. In the immediate post-test, we asked trainees a series of questions about their organization, position, and sociodemographics.

Independent variable: Degree. This variable is the number of connections a given trainee has in the network. It represents the set of connections that may be functionally useful to respondents; a network member who is more highly connected is better able to access network resources than one who is less connected [47, 48]. As part of the network survey, trainees were asked who they communicated with in the last 6 months about using some or all of the program planning steps presented in the intervention. Trainees were presented with a roster of fellow trainees from their community network to ease recall and selection [45].

Dependent variable: Number of EBPs used in the past 6 months. Our outcome variable was the number of EBPs trainees had used in the 6 months preceding the survey. The implementation of EBPs in practice settings is an established marker of success for capacity-building programs focused on EBPs [12]. As part of the social network survey, trainees were asked if they had used an EBP in their organization in the previous 6 months. Those who said yes were asked how many EBPs they had used. The response was open-ended.

Covariates: Pre-training EBP usage. In the immediate post-test, we asked trainees whether or not they had used EBPs prior to participating in the training. Perceived skills. In the network survey, we asked about their perceived skill levels for key EBP-related activities. We created a mean self-reported skill score, which averaged skills to find data, find partners, choose an appropriate EBP, adapt an EBP, and evaluate an EBP. Response options ranged from 1 (low) to 5 (high).
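As an illustration of the degree measure, the following minimal sketch counts connections from roster-based nominations. It assumes that a tie exists if either party reports it (that is, reports are symmetrized); the paper does not state how reports were combined, and the function name, trainee labels, and example data are hypothetical.

```python
from collections import defaultdict


def degrees_from_nominations(nominations, roster):
    """Return {trainee: degree}, counting each distinct contact once.

    Assumption (not stated in the paper): a tie exists if either party
    reports it, so reports are symmetrized before counting.
    """
    neighbors = defaultdict(set)
    for reporter, named in nominations.items():
        for other in named:
            if other == reporter or other not in roster:
                continue  # skip self-nominations and names outside the roster
            neighbors[reporter].add(other)
            neighbors[other].add(reporter)
    return {person: len(neighbors[person]) for person in roster}


# Hypothetical four-person roster; T4 neither names nor is named by anyone.
roster = ["T1", "T2", "T3", "T4"]
nominations = {"T1": ["T2", "T3"], "T2": ["T1"], "T3": []}
degrees = degrees_from_nominations(nominations, roster)
```

In this toy example T4 has a degree of zero and would appear as an isolate on a network map.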

Measures—network-level

These measures provide a high-level assessment of the exchange of information and resources [49]. Network density is the proportion of potential connections that were reported by network members. A denser (or more highly connected) network may be useful and effective for sharing information and resources, but a more sparsely connected network may provide greater access to diverse contacts and novel resources. The point at which density transitions from being an asset to a limitation is a function of both the characteristics of the network members and the kind of relationship being studied. A curvilinear relationship (resembling an inverse U) has been proposed between performance or spread of innovations and density [45]. As an example, Valente and colleagues studied network-level attributes among a group of coalitions and found that decreased network density was linked with increased adoption of EBPs. They suggested that in this case, a less dense network was critical for maintaining access to external ties that were a source of new knowledge. These findings show that it is not always clear what network structure is ideal to support adoption of innovations [16]. Network centralization is the extent to which the network is focused around a small number of members; it is reported here using Freeman’s degree centralization [50]. Highly centralized networks can spread information and resources from influential members efficiently and effectively and may also support the mobilization of network members (which could have important impacts for spreading EBP information or activities), but may not be as supportive of shared decision-making and member empowerment [51].
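The two network-level measures can be sketched from a list of member degrees. This is a minimal illustration that assumes an undirected (symmetrized) network with at least three members and uses the standard textbook form of Freeman's degree centralization; it is not the UCINET routine used in the study, and the function names are hypothetical.

```python
def density(degrees):
    """Proportion of possible undirected ties that are present."""
    n = len(degrees)
    # The sum of degrees counts every undirected tie twice, and there are
    # n * (n - 1) / 2 possible ties, so the factors of two cancel.
    return sum(degrees) / (n * (n - 1))


def freeman_degree_centralization(degrees):
    """Freeman's degree centralization for an undirected network.

    Sums each member's shortfall from the highest degree and divides by
    the maximum possible total, (n - 1) * (n - 2), reached by a star.
    """
    n = len(degrees)
    max_degree = max(degrees)
    return sum(max_degree - d for d in degrees) / ((n - 1) * (n - 2))


star = [4, 1, 1, 1, 1]   # one hub connected to four others
complete = [3, 3, 3, 3]  # everyone connected to everyone
```

A star network gives a centralization of 1 (fully focused on one member), while a complete network gives 0, which brackets the range within which a reported value can be read.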

Analyses

We conducted descriptive analyses to summarize trainees’ characteristics at intervention close. For the network analysis, our initial step was to create network maps for each of the communities using NetDraw [52], a computer program for visualizing social networks. In these maps, the positions of nodes (which represent trainees) in the diagrams are determined by a spring embedding algorithm, which puts network members who have many connections in the center of the diagram and also puts members who connect directly to each other or with few intermediaries closest to one another [53, 54]. Network members at the center of the diagram can be thought of as particularly engaged in the network of trainees [49]. Social network data are sensitive to missing data [44, 55] and degree (our main network measure of interest) is sensitive to missing data with less than an 80% response rate [56], precluding further analysis of network data for Communities A and B. For Community C, we utilized dedicated network analysis software, UCINET6 [53], to analyze the data. We conducted linear regressions using UCINET procedures developed specifically for network data, which contain observations that are not independent and do not meet the assumptions of classical statistical techniques. We utilized SAS v9.4 to analyze the non-network data [57]. The institutional review board at [blinded] reviewed all study protocols and related documents and deemed the study exempt from review [study reference number blinded].
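One common way to test a regression coefficient when observations are not independent is a permutation test, in which the outcome is repeatedly shuffled to build a reference distribution for the coefficient. The sketch below shows the idea for a single predictor. Whether it matches the exact UCINET procedure used here is an assumption, it omits the covariates of the adjusted model, and all names and data in it are hypothetical.

```python
import random


def ols_slope(x, y):
    """Ordinary least squares slope of y on a single predictor x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def permutation_p_value(x, y, n_perm=2000, seed=0):
    """Two-sided permutation p value for the slope of y on x."""
    rng = random.Random(seed)
    observed = ols_slope(x, y)
    shuffled = list(y)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break any link between x and y
        if abs(ols_slope(x, shuffled)) >= abs(observed):
            extreme += 1
    # Add one to numerator and denominator so the p value is never zero.
    return observed, (extreme + 1) / (n_perm + 1)


# Hypothetical data: degree (x) and number of EBPs used (y) for 8 trainees.
degree = [0, 1, 2, 3, 4, 5, 6, 7]
ebps_used = [0, 1, 1, 2, 3, 3, 4, 5]
slope, p_value = permutation_p_value(degree, ebps_used)
```

The p value is the share of shuffled datasets whose slope is at least as large in magnitude as the observed one, so it does not rely on the independence assumptions of classical tests.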

RESULTS

A total of 125 trainees participated in the network analysis survey, for a response rate of 70% overall and rates of 67, 59, and 85% for Communities A, B, and C, respectively. As seen in Table 2, trainees hailed from a diverse range of community organizations and many had program planning/outreach coordination roles. We also assessed turnover patterns among trainees and found that over one quarter left their original organization over the course of the intervention. We did not find a statistically significant relationship between turnover status and: (a) length of time in the program or (b) city affiliation. At the close of the intervention, about two thirds reported use of an EBP in the preceding 6 months and the median number used was 2.
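The response-rate screen described in the Methods can be sketched as follows, using the respondent and trainee counts reported in the abstract and the 80% cutoff cited for reliable degree estimates. The variable and function names are hypothetical.

```python
RESPONSE_THRESHOLD = 0.80  # cutoff cited in the Methods for degree estimates

# (respondents, trainees) per community, as reported in the abstract
counts = {"A": (39, 59), "B": (36, 61), "C": (50, 59)}


def communities_meeting_threshold(counts, threshold=RESPONSE_THRESHOLD):
    """Return the communities whose response rate reaches the threshold."""
    return [
        name
        for name, (respondents, trainees) in counts.items()
        if respondents / trainees >= threshold
    ]


eligible = communities_meeting_threshold(counts)
```

Only Community C clears the cutoff, which is why the detailed network analysis is limited to that community.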

Table 2.

Respondents’ characteristics at enrollment and at the close of the intervention (n = 125)

| Trainee characteristics at enrollment | Percent (%) |
|---|---|
| Organization type* | |
| Community-based organization | 33 |
| Private non-profit | 24 |
| Community-based health care center | 21 |
| Advocacy | 12 |
| Social service organization | 8 |
| Educational institution | 9 |
| Governmental | 7 |
| Other | 18 |
| Position type* | |
| Administrator | 23 |
| Healthcare provider | 11 |
| Other | 29 |
| Outreach coordinator | 24 |
| Program planner | 44 |
| Gender | |
| Female | 91 |
| Age | |
| 20–30 | 16 |
| 31–40 | 21 |
| 41–50 | 29 |
| 51–60 | 28 |
| 61+ | 6 |
| Highest level of education completed | |
| Some college or less | 10 |
| College graduate | 37 |
| Graduate or professional school | 53 |
| Race* | |
| White | 62 |
| Black | 17 |
| Other | 23 |
| Hispanic/Latino | |
| Yes | 75 |
| Prior receipt of EBP training | 34 |
| Prior use of any systematic approach for program planning | 40 |
| Practice outcomes reported at the close of the intervention | |
| Use of an EBP in last 6 months | 66 |
| Number of EBPs used in last 6 months (n = 74) | |
| 1 | 24 |
| 2 (median) | 31 |
| 3 | 20 |
| 4 | 11 |
| 5+ | 13 |
| Likely/highly likely to use planned approach in future | 82 |
| Turnover patterns at the close of the intervention | |
| Changed organizations at least once during follow-up | 27 |

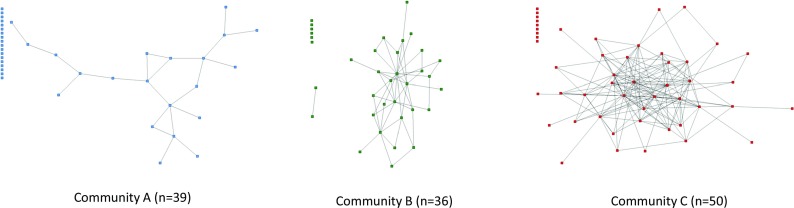

As seen in Fig. 2, the community-specific network maps demonstrate vastly different structures of the trainee communication networks in the three partner communities. Each node represents a trainee and lines represent reported communication about the systematic program planning approach. Nodes that are on the left side of each map without any lines connecting them to others are isolates, or respondents who did not have incoming/outgoing reports of connections. As noted in the “Methods” section, potential bias due to missing data prevented additional analyses for Communities A and B, thus we only present a detailed network analysis for Community C.

Fig. 2.

Trainee communication networks at intervention close, by community. Each node represents a trainee and lines represent reported communication about the systematic program planning approach

Community C network assessment

We calculated network-level metrics for the 50-person network and found that the overall density of the network (or the proportion of potential connections that have been realized) was 0.14. The average degree (or number of connections held by a given trainee) was 6.6 (SD = 6.6). The degree centralization was 0.434, suggesting that the network was not dominated by a subset of members [45]. We then turned our attention to the trainee-level to assess the impact of network engagement on a key outcome of the program—use of EBPs in practice settings. As seen in Table 3, we found a statistically significant relationship between the degree (the number of connections in the network) and number of EBPs used in practice in the 6 months preceding the assessment, adjusting for prior EBP use and average EBP skills (β = 0.11, p = 0.001). Average skills for EBP use were also significantly associated with EBP use (p = 0.01). The adjusted R-squared for the model was 0.34.

Table 3.

Association between degree (number of connections) and number of EBPs used in prior 6 months, adjusting for prior EBP use and average EBP skills (n = 48 trainees in Community C network)

| | β coefficient (SE) | p value |
|---|---|---|
| Intercept | −2.24 | **0.00** |
| Degree | 0.11 | **0.001** |
| EBP prior to initial workshop | −0.21 | 0.526 |
| Average skills for EBP use | 0.79 | **0.014** |

Adjusted R-squared was 0.34

Bolded figures are p<=0.05
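To make the coefficients in Table 3 concrete, the sketch below plugs them into the fitted linear equation for two hypothetical trainees who differ only in degree. It assumes prior EBP use is coded 0/1 and the skill score is on the 1 to 5 scale described in the Methods; the coding of the prior-use variable is not stated in the paper, and the function name and example values are illustrative only.

```python
# Coefficients as reported in Table 3
COEFFICIENTS = {
    "intercept": -2.24,
    "degree": 0.11,
    "prior_ebp_use": -0.21,  # assumed coding: 1 = used EBPs before training
    "mean_skill": 0.79,
}


def predicted_ebps(degree, prior_ebp_use, mean_skill):
    """Predicted number of EBPs used in the prior 6 months under the model."""
    c = COEFFICIENTS
    return (
        c["intercept"]
        + c["degree"] * degree
        + c["prior_ebp_use"] * prior_ebp_use
        + c["mean_skill"] * mean_skill
    )


# Two hypothetical trainees with the same skills and no prior EBP use
fewer_ties = predicted_ebps(degree=2, prior_ebp_use=0, mean_skill=4.0)
more_ties = predicted_ebps(degree=12, prior_ebp_use=0, mean_skill=4.0)
difference = more_ties - fewer_ties
```

Under these assumptions, ten additional connections correspond to roughly one more EBP used (10 × 0.11 = 1.1). Because the data are cross-sectional, this describes an association rather than a causal effect.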

The association between network connections and practice outcomes prompted investigation into the differences between those with above- and below-average levels of connections. We found statistically significant differences between groups in terms of their education level. Those with above-average connection levels had higher education levels (ANOVA, F = 2.9861, p = 0.0184). There were no significant differences by age and we could not analyze the data by gender due to insufficient variation.

Finally, we analyzed data related to network connections beyond the trainee network. At the year 1 follow-up, we asked trainees whether or not they had shared information with other colleagues related to the systematic approach to program planning that was taught in the workshop. Among the Community C respondents, 67% responded that they had shared information from the workshop with others. Among those who had shared information, 70% shared information with colleagues at the same organization, 30% shared information with colleagues at an organization similar to theirs, and 21% shared information with others (multiple responses permitted). About 55% of trainees who had shared information did so with two or three colleagues and the rest shared information with four or more colleagues. The most popular content shared was the URL for the customized, localized web portal (76%), followed by tools (58%), the systematic approach to program planning (42%), and other content (15%) (multiple responses permitted).

DISCUSSION

This study was conducted to assess the association between network engagement and use of EBPs among staff of community organizations who were trained in a systematic approach to program planning. Four main findings emerged from the analysis. First, we found that trainee network engagement was positively associated with use of EBPs in practice settings in the network for which we were able to conduct the network analysis. Second, we found that trainees shared intervention content with networks of colleagues outside the program. Third, our results highlight the potential impact of community context (such as community complexity and resource levels) on network engagement. Fourth, the results suggest that turnover in CBOs may warrant additional attention in the context of building infrastructure for EBP use in community settings. The findings also speak to the benefits and challenges of using participatory approaches for capacity-building efforts in community settings.

For Community C, we found that network engagement (measured as degree or the number of communication linkages a trainee reported) was positively associated with recent use of EBPs in practice. The model centered on network engagement explained a fair amount of variation in EBP usage (adjusted R² = 0.34). The finding was in the expected direction as increased engagement in the communication network was a marker of increased access to relevant resources, in other words, a marker of social capital [48]. The relationship is consistent with other findings that engagement in a network of CBO practitioners was linked to EBP-related skill transfer [58, 59] and EBP implementation [60]. There is a tension between maintaining sufficient connectivity to support the spread of information and resources and preserving access to outside information/engagement, the loss of which can reduce adoption of best practices [51]. A community of practitioners is only as powerful as the time and resources committed to the community [19] and a major challenge in CBOs is that time devoted to network engagement often translates into reduced time for service delivery [60]. At the community level, such investments may be offset by the gains accrued from peers teaching each other informally, which is often more cost-effective than outside, expert-delivered training [61]. Another potential solution comes in the form of intentionally altering networks, as demonstrated by a recent effort to rewire communication networks among mental health clinicians using an evidence-based treatment [62].

The Community C network analysis highlighted an unanticipated benefit of the capacity-building intervention: secondary diffusion of information. About two thirds of trainees reported sharing information from the intervention with other colleagues. These trainees often shared a practical resource, the localized web portal that supported systematic program planning. Although we do not have data regarding the impact of the secondary diffusion, receipt of information from a personal or professional contact can be a powerful influence on professional behavior change and there may be an opportunity to leverage trainees’ networks more effectively. The bulk of information-sharing was with colleagues at the trainees’ organizations and the literature highlights the potential for such sharing to facilitate EBP use, e.g., through informal learning processes [59] or social influence [14, 63]. Information shared with colleagues at other organizations may play an important role in exposing others to novel information/resources [63] or changing norms about the “way of work,” here the use of EBPs [64].

The third finding points to the importance of community context for network development. Community context is a well-established driver of CBPR-driven system change [65] and implementation outcomes [66]. We focused here on community complexity, a measure of heterogeneity [32] that affects network development. For example, given that homogeneity likely yields a denser network, it is useful to weigh the benefits of that density (e.g., supporting resource flow) against the costs (restricted access to external resources). It may be that in a smaller, more homogeneous community, CBO staff members are able to get more from the network, e.g., access to information and resources that directly pertain to their clientele. This would be an important advantage, given our previous work highlighting the challenge practitioners face in finding and accessing relevant data as well as adapting EBPs for the population subgroups they serve [67]. In this study, we trained roughly the same number of practitioners per community (as a function of our CBPR approach), which resulted in very different penetration rates across communities. Additionally, our locally based community health educators were much better able to make and sustain connections with CBOs and trainees in the smaller and medium-sized communities than those in the larger community. This is reflected in the gradient for the response rates for all of our surveys, though this is more extreme for the network analysis presented here, likely because it was added to the evaluation plan after the study team received a supplement.
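The response-rate gradient for the network survey can be worked out from the respondent and enrollment counts given in the abstract (39/59, 36/61, and 50/59 for Communities A, B, and C). The short Python sketch below only performs that arithmetic; the variable and function names are illustrative, and it makes no assumption about which community is the smaller or larger one.

```python
# (respondents, enrolled trainees) per community, as reported in the abstract.
network_survey = {"A": (39, 59), "B": (36, 61), "C": (50, 59)}


def response_rate(responded, enrolled):
    """Proportion of enrolled trainees who answered the network survey."""
    return responded / enrolled


rates = {
    community: response_rate(responded, enrolled)
    for community, (responded, enrolled) in network_survey.items()
}

for community in sorted(rates):
    print(f"Community {community}: {rates[community]:.1%}")
# Community A: 66.1%
# Community B: 59.0%
# Community C: 84.7%
```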

The results also point to the potential impact of turnover on efforts to disseminate and implement EBPs in community settings. More than one quarter of trainees changed organizations at least once during follow-up. A recent survey of nonprofits found the average turnover rate in 2015 was 19%. CBO staff turnover is a major challenge to capacity-building efforts, inter-organizational collaboration, institutional memory, and implementation of EBPs [21, 68, 69]. As one might imagine, it is much more difficult to build social capital within a defined group if many practitioners leave the area or are no longer in a position to apply the newly acquired skills. If the turnover rate and challenges identified in this analysis are reflective of what happens in public health at the local level, we require different strategies of investment to support infrastructure development. First, reducing turnover is a useful target, narrowly for EBP implementation and more broadly for health promotion goals. There is evidence that EBP implementation with ongoing monitoring may have a protective effect on turnover [70]. Additionally, EBP capacity-building efforts may result in reduced turnover if they are part of a larger professional development strategy [71]. Another set of targets comes from the perspective that turnover is simply a given in this environment. In this light, network development could include efforts to build “redundant” connections to protect against knowledge loss due to turnover [72] and could also promote ongoing, informal training among members of the peer network [59]. EBP-related learning could then become an organizational process that remains resilient after staff members leave and that attends to the training needs of new staff.
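One simple way to think about “redundant” connections is to ask who would be cut off from the network if a given trainee left. The Python sketch below illustrates that idea under stated assumptions: the tie list and trainee labels are hypothetical, the function names are invented for illustration, and this check was not part of the study's analysis.

```python
from collections import defaultdict


def build_partners(ties):
    """Map each trainee to the set of peers they communicate with."""
    partners = defaultdict(set)
    for a, b in ties:
        if a != b:
            partners[a].add(b)
            partners[b].add(a)
    return partners


def stranded_if_leaves(ties, leaver):
    """Return trainees whose only tie in the network is to `leaver`."""
    partners = build_partners(ties)
    return {p for p in partners[leaver] if partners[p] == {leaver}}


# Hypothetical toy network (not study data).
ties = [("t1", "t2"), ("t1", "t3"), ("t1", "t4"), ("t2", "t3"), ("t4", "t5")]

print(stranded_if_leaves(ties, "t4"))  # {'t5'}: t5 has no redundant tie
print(stranded_if_leaves(ties, "t1"))  # set(): every contact has another tie
```

A trainee such as the hypothetical t5 would be a natural target for an additional connection, since a single departure removes their only link to the peer network.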

Finally, the study highlights some of the benefits and challenges of using a CBPR approach. As noted previously, the intervention was co-developed, which is an important strategy for increasing intervention relevance and impact [26]. Based on community partners’ suggestions, we attempted to support connections in the context of existing networks—Community Health Network Areas—and also leveraged connections held by local community health educators who were part of the project team. We also crafted the network development plan in a manner that fit practitioners’ work environment and with an eye to sustainability. However, the benefits of a CBPR approach may have been more difficult to realize in the communities with greater complexity.

The results of the study must be interpreted in the context of its limitations. First, the main outcome (EBP usage) relies on self-report data and may be subject to social desirability bias, in which practitioners may have over-reported EBP usage as that was the clearly identified goal of the program. Another challenge of self-report data relates to the fact that practitioners may have a broader definition of EBPs than academics do, as we have found in previous work. However, the bulk of our training focused on understanding the characteristics that make a program “evidence-based”, so we expected to have established a common definition. We were not aware of relevant scales (focused on EBP processes broadly, as opposed to attitudes towards a given EBP) at the time of survey development, though validated scales are now available for such assessments [73]. The same social desirability limitation applies to the secondary diffusion reports. Second, we were only able to analyze network data in Community C, which may be unlike the other two networks in terms of trainee engagement as well as contextual factors related to the community and program-related factors, including connectedness to the local community health educator and program. Additionally, a network analysis such as this reflects findings in a single network and we are limited in our ability to generalize findings to other networks or situations. Finally, the data are cross-sectional and causation cannot be assessed. However, whether engagement drives EBP usage, EBP usage drives reliance on the network, or some combination, the potential for the network to be a useful support related to EBPs remains and warrants study.

The study has a number of strengths that outweigh the limitations. First, by using social network analysis, we were able to move beyond individual-level effects and capture system-level effects of public health initiatives, while making use of a set of methods underutilized in public health at this time [44]. Second, the focus on non-profit community organizations (versus government agencies) is valuable given the importance of CBOs for health promotion among the underserved. Third, the team conducted recruitment and engagement in the context of pre-existing local networks engaged in health promotion, the Community Health Network Areas, which increases the likelihood of a sustainable effect. In this vein, we did not seek to assess the development of new relationships or strengthening of existing relationships, but instead focused on infrastructure for EBP usage by examining communication regarding the systematic approach to program planning presented in the intervention.

The study highlights the potential and challenge of building a network of dissemination specialists. Future efforts should include greater integration of contextual factors into the development of capacity- and system-building interventions. Important factors in CBO settings include the following: (1) low-resource environments, which likely limit the amount of time practitioners can devote to network development/engagement; (2) community complexity, which impacts both the ability to develop a meaningful network and also the ability for network developers to have sufficient engagement with practitioners; and (3) turnover rates, which impact the ability to build a sustainable network. Consideration of context in capacity-building interventions may prompt network development within a more narrowly bounded community, as recommended elsewhere [74] or among a subset of practitioners/organizations particularly primed for change [64]. Peer networks are more likely to be built and sustained if the trainees and their organizations can identify concrete benefits of participation. As noted previously, important benefits may extend beyond implementation and programmatic outcomes to turnover and creation of channels for informal learning that can be accessed for a wide range of organizational goals. The study also highlights the fact that implementer/stakeholder networks are (1) important resources to leverage to support the execution of dissemination and implementation research activities and (2) important aspects of implementation context that can and should be studied as part of dissemination and implementation research studies [66, 75, 76]. Peer networks are only one of the strategies available for capacity-building and a detailed evaluation of their effect as part of an intervention package will be useful for advancing the field.

Overall, the results of this study reinforce the benefit of taking a systems perspective to promote the use of research evidence in practice settings. To leverage the potential power of a CBO practitioner network, dedicated time and resources are needed for practitioners to develop the necessary expertise, engage with peers, and begin to change the way in which health promotion programs leverage research evidence for the benefit of communities.

Acknowledgements

The study was conducted in collaboration with the PLANET MassCONECT C-PAC, which includes the authors and the following members: community partners: Clara Savage, EdD (Common Pathways*); David Aaronstein (Boston Alliance for Community Health/Health Resources in Action); Ediss Gandelman, MBA, MEd (Beth Israel Deaconess Medical Center*); investigators: Karen Emmons, PhD (Harvard T.H. Chan School of Public Health/Dana-Farber Cancer Institute*); Elaine Puleo, PhD (University of Massachusetts*); Glorian Sorensen, PhD, MPH (Harvard T.H. Chan School of Public Health/Dana-Farber Cancer Institute); and the PLANET MassCONECT Study Team: Jaclyn Alexander-Molloy, MS; Cassandra Andersen, BS; Carmenza Bruff, BS; and Josephine Crisostomo, MPH (Dana-Farber Cancer Institute*). * affiliation at time of intervention

Compliance with ethical standards

The institutional review board at The Harvard T.H. Chan School of Public Health reviewed all study protocols and related documents and deemed the study exempt from review (study reference number: 15630).

Footnotes

Implications

Practice: Supporting practitioners to engage with a network of peers may enhance dissemination and implementation of evidence-based strategies and programs, but will require dedicated staff time and resources.

Policy: Funders and policymakers should allocate sufficient resources to allow practitioners in community-based organizations to develop, maintain, and sustain local networks to take advantage of research evidence.

Research: Future research is needed to identify key contextual influences on the development and sustainability of networks that support dissemination and implementation activities in community settings.

An erratum to this article is available at https://doi.org/10.1007/s13142-017-0518-9.

References

- 1. Maibach, E. W., Van Duyn, M. A. S., & Bloodgood, B. (2006). A marketing perspective on disseminating evidence-based approaches to disease prevention and health promotion. Preventing Chronic Disease, 3(3).

- 2. World Health Organization. Health 21: health for all in the 21st century. Copenhagen: World Health Organization Regional Office for Europe; 1999.

- 3. Stephens KK, Rimal RN. Expanding the reach of health campaigns: community organizations as meta-channels for the dissemination of health information. Journal of Health Communication. 2004;9:97–111. doi: 10.1080/10810730490271557.

- 4. Griffith DM, et al. Community-based organizational capacity building as a strategy to reduce racial health disparities. Journal of Primary Prevention. 2010;31(1–2):31–39. doi: 10.1007/s10935-010-0202-z.

- 5. Van de Ven AH, Johnson PE. Knowledge for theory and practice. Academy of Management Review. 2006;31(4):802–821. doi: 10.5465/AMR.2006.22527385.

- 6. Brownson, R. C., Baker, E. A., Leet, T. L., Gillespie, K. N., & True, W. R. (2011). Evidence-based public health. New York: Oxford University Press.

- 7. Jacobs JA, et al. Capacity building for evidence-based decision making in local health departments: scaling up an effective training approach. Implementation Science. 2014;9(1):124. doi: 10.1186/s13012-014-0124-x.

- 8. Dreisinger M, et al. Improving the public health workforce: evaluation of a training course to enhance evidence-based decision making. Journal of Public Health Management and Practice. 2008;14(2):138–143. doi: 10.1097/01.PHH.0000311891.73078.50.

- 9. Lavis JN, et al. How can research organizations more effectively transfer research knowledge to decision makers? Milbank Quarterly. 2003;81(2):221–248. doi: 10.1111/1468-0009.t01-1-00052.

- 10. Leeman J, et al. What strategies are used to build practitioners’ capacity to implement community-based interventions and are they effective?: a systematic review. Implementation Science. 2015;10(1):80. doi: 10.1186/s13012-015-0272-7.

- 11. Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation research: a synthesis of the literature. Tampa: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network.

- 12. Leeman J, et al. Developing Theory to Guide Building Practitioners’ Capacity to Implement Evidence-Based Interventions. Health Educ Behav. 2015.

- 13. Wandersman A, Chien VH, Katz J. Toward an evidence-based system for innovation support for implementing innovations with quality: tools, training, technical assistance, and quality assurance/quality improvement. American Journal of Community Psychology. 2012;50(3–4):445–459. doi: 10.1007/s10464-012-9509-7.

- 14. Rogers E. Diffusion of innovations. 5th ed. New York: The Free Press; 2003.

- 15. Valente TW, Davis RL. Accelerating the diffusion of innovations using opinion leaders. The Annals of the American Academy of Political and Social Science. 1999;566(1):55–67. doi: 10.1177/000271629956600105.

- 16. Valente TW, Chou CP, Pentz MA. Community coalitions as a system: effects of network change on adoption of evidence-based substance abuse prevention. American Journal of Public Health. 2007;97(5):880–886. doi: 10.2105/AJPH.2005.063644.

- 17. Lave J, Wenger E. Situated learning: legitimate peripheral participation. Cambridge: Cambridge University Press; 1991.

- 18. Wenger E, McDermott RA, Snyder W. Cultivating communities of practice. Boston: Harvard Business School Press; 2001.

- 19. Li LC, et al. Use of communities of practice in business and health care sectors: a systematic review. Implementation Science. 2009;4:27. doi: 10.1186/1748-5908-4-27.

- 20. Crisp BR, Swerissen H, Duckett SJ. Four approaches to capacity building in health: consequences for measurement and accountability. Health Promotion International. 2000;15(2):99–107. doi: 10.1093/heapro/15.2.99.

- 21. Takahashi LM, Smutny G. Collaboration among small, community-based organizations strategies and challenges in turbulent environments. Journal of Planning Education and Research. 2001;21(2):141–153.

- 22. Minkler M, Wallerstein N. Introduction to community-based participatory research. In: Minkler M, Wallerstein N, editors. Community-based participatory research for health: From process to outcomes. San Francisco: Jossey-Bass; 2008. pp. 5–24.

- 23. Israel BA, et al. Review of community-based research: assessing partnership approaches to improve public health. Annual Review of Public Health. 1998;19:173–201. doi: 10.1146/annurev.publhealth.19.1.173.

- 24. Wallerstein N, Duran B. Community-based participatory research contributions to intervention research: the intersection of science and practice to improve health equity. American Journal of Public Health. 2010;100(Suppl 1):S40–S46. doi: 10.2105/AJPH.2009.184036.

- 25. Minkler M, Salvatore AL. Participatory approaches for study design and analysis in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health. New York: Oxford; 2012. pp. 192–212.

- 26. Greenhalgh T, et al. Achieving research impact through co-creation in community-based health services: literature review and case study. The Milbank Quarterly. 2016;94(2):392–429. doi: 10.1111/1468-0009.12197.

- 27. Massachusetts Department of Public Health. Community Health Network Area (CHNA). Available from: http://www.mass.gov/eohhs/gov/departments/dph/programs/admin/comm-office/chna/. Cited June 14 2016.

- 28. U.S. Census Bureau Population Estimates Program (PEP). 2010.

- 29. U.S. Census Bureau. Poverty Status in the Past 12 Months: 2006–2010. American Community Survey 5-year Estimates. Available from: http://factfinder2.census.gov/faces/nav/jsf/pages/index.xhtml. 2010 cited November 20 2012.

- 30. U.S. Census Bureau, 2010 American Community Survey 5-Year Estimates. 2010.

- 31. Ramanadhan, S. and Viswanath, K. (2013). Priority-setting for evidence-based health outreach in community-based organizations: a mixed-methods study in three Massachusetts communities. Transl Behav Med: Practice, Policy and Research, 3(2), 180–188.

- 32. Viswanath K, Randolph Steele W, Finnegan JR Jr. Social capital and health: civic engagement, community size, and recall of health messages. American Journal of Public Health. 2006;96(8):1456–1461. doi: 10.2105/AJPH.2003.029793.

- 33. Tichenor PJ, Donohue GA, Olien CN. Community conflict and the press. Beverly Hills: Sage; 1980.

- 34. Koh HK, et al. Translating research evidence into practice to reduce health disparities: a social determinants approach. American Journal of Public Health. 2010;100(S1):S72–S80. doi: 10.2105/AJPH.2009.167353.

- 35. Minkler M, Wallerstein N, editors. Community based participatory research in health. San Francisco: Jossey-Bass; 2003.

- 36. Ramanadhan S, K Viswanath. Engaging communities to improve health: models, evidence, and the Participatory Knowledge Translation (PaKT) Framework. In: EB Fisher, ed. Principles and Concepts of Behavioral Medicine: A Global Handbook. Springer Science & Business Media; 2017.

- 37. Canadian Institutes of Health Research. Knowledge Translation Strategy 2004–2009. Available from: http://www.cihr-irsc.gc.ca/e/26574.html. 2004 cited October 23 2009.

- 38. Institute for Healthcare Improvement. The breakthrough series: IHI’s collaborative model for achieving breakthrough improvement. Diabetes Spectrum. 2004;17(2):97–101. doi: 10.2337/diaspect.17.2.97.

- 39. Deming WE. The new economics for industry, government, education. 2nd ed. Cambridge: MIT Press; 2000.

- 40. Centers for Disease Control and Prevention. The Community Guide: Cancer Prevention and Control. Available from: http://www.thecommunityguide.org/cancer/index.html. 2011 January 27 cited March 27 2012.

- 41.National Cancer Institute. Cancer Control P.L.A.N.E.T.—About This Site. Available from: http://cancercontrolplanet.cancer.gov/about.html. 2011 cited August 15 2001.

- 42. Wenger E. Communities of practice: learning, meaning, and identity. New York: Cambridge University Press; 1998.

- 43. Hawe P, et al. Multiplying health gains: the critical role of capacity-building within health promotion programs. Health Policy. 1997;39(1):29–42. doi: 10.1016/S0168-8510(96)00847-0.

- 44. Luke DA, Harris JK. Network analysis in public health: history, methods, and applications. Annual Review of Public Health. 2007;28:69–93. doi: 10.1146/annurev.publhealth.28.021406.144132.

- 45. Valente TW. Social networks and health: models, methods, and applications. New York: Oxford University Press; 2010.

- 46. Tichy NM, Tushman ML, Fombrun C. Social network analysis for organizations. Academy of Management Review. 1979;4(4):507–519.

- 47. Hansen MT. The search-transfer problem: the role of weak ties in sharing knowledge across organization subunits. Administrative Science Quarterly. 1999;44(1):82–111. doi: 10.2307/2667032.

- 48. Lakon CM, GC Godette, JR Hipp. Network-based approaches for measuring social capital. In: I Kawachi, SV Subramanian, D Kim, eds. Social Capital and Health. 2008: 63–81.

- 49. Wasserman S, Faust K. Social network analysis: Methods and applications. New York: Cambridge University Press; 1994.

- 50. Freeman LC. Centrality in social networks: conceptual clarification. Social Networks. 1979;1:215–239. doi: 10.1016/0378-8733(78)90021-7.

- 51. Valente TW, et al. Collaboration and competition in a children’s health initiative coalition: a network analysis. Evaluation and Program Planning. 2008;31(4):392–402. doi: 10.1016/j.evalprogplan.2008.06.002.

- 52. Borgatti SP. NetDraw: graph visualization software. Harvard: Analytic Technologies; 2002.

- 53. Borgatti SP, Everett MG, Freeman LC. UCINET for windows: software for social network analysis. Lexington: Analytic Technologies; 2005.

- 54. Hanneman RA, Riddle M. Introduction to social network methods. Riverside: University of California, Riverside; 2005.

- 55. Huisman M. Imputation of missing network data: some simple procedures. Journal of Social Structure. 2009;10(1):1–29.

- 56. Costenbader E, Valente TW. The stability of centrality measures under conditions of imperfect data. Social Networks. 2003;25:283–307. doi: 10.1016/S0378-8733(03)00012-1.

- 57. SAS Institute. Cary, NC: SAS; 2012.

- 58. Ramanadhan S, et al. Extra-team connections for knowledge transfer between staff teams. Health Education Research. 2009;24(6):967–976. doi: 10.1093/her/cyp030.

- 59. Ramanadhan S, et al. Informal training in staff networks to support dissemination of health promotion programs. American Journal of Health Promotion. 2010;25(1):12–18. doi: 10.4278/ajhp.080826-QUAN-163.

- 60. Flaspohler PD, et al. Ready, willing, and able: developing a support system to promote implementation of school-based prevention programs. American Journal of Community Psychology. 2012;50(3–4):428–444. doi: 10.1007/s10464-012-9520-z.

- 61. Liu X, Batt R. The economic pay-offs to informal training: evidence from routine service work. Industrial & Labor Relations Review. 2007;61(1):75–89. doi: 10.1177/001979390706100104.

- 62. Bunger AC, et al. Can learning collaboratives support implementation by rewiring professional networks? Administration and Policy in Mental Health. 2016;43(1):79–92. doi: 10.1007/s10488-014-0621-x.

- 63. Greenhalgh T, et al. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Quarterly. 2004;82(4):581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- 64. Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(1):4–23. doi: 10.1007/s10488-010-0327-7.

- 65. Wallerstein, N., Oetzel, J., Duran, B., Tafoya, G., Belone, L., & Rae, R. (2008). What predicts outcomes in CBPR? In M. Minkler & N. Wallerstein (Eds.), Community-based participatory research for health: from process to outcomes (pp. 371–392). San Francisco: Jossey-Bass.

- 66. Damschroder LJ, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science. 2009;4(1):50. doi: 10.1186/1748-5908-4-50.

- 67. Ramanadhan S, et al. Perceptions of evidence-based programs by staff of community-based organizations tackling health disparities: a qualitative study of consumer perspectives. Health Education Research. 2012;27(4):717–728. doi: 10.1093/her/cyr088.

- 68. Kegeles SM, Rebchook GM, Tebbetts S. Challenges and facilitators to building program evaluation capacity among community-based organizations. AIDS Education and Prevention. 2005;17(4):284. doi: 10.1521/aeap.2005.17.4.284.

- 69. Dolcini MM, et al. Translating HIV interventions into practice: community-based organizations’ experiences with the diffusion of effective behavioral interventions (DEBIs). Social Science & Medicine. 2010;71(10):1839–1846. doi: 10.1016/j.socscimed.2010.08.011.

- 70. Aarons GA, et al. The impact of evidence-based practice implementation and fidelity monitoring on staff turnover: evidence for a protective effect. Journal of Consulting and Clinical Psychology. 2009;77(2):270–280. doi: 10.1037/a0013223.

- 71. Edge K. Powerful public sector knowledge management: a school district example. Journal of Knowledge Management. 2005;9(6):42–52. doi: 10.1108/13673270510629954.

- 72. Parise S. Knowledge management and human resource development: an application in social network analysis methods. Advances in Developing Human Resources. 2007;9(3):359–383. doi: 10.1177/1523422307304106.

- 73. Rubin A, Parrish DE. Development and validation of the evidence-based practice process assessment scale: preliminary findings. Research on Social Work Practice. 2010;20(6):629–640. doi: 10.1177/1049731508329420.

- 74. Fujimoto K, Valente TW, Pentz MA. Network structural influences on the adoption of evidence-based prevention in communities. Journal of Community Psychology. 2009;37(7):830–845. doi: 10.1002/jcop.20333.

- 75. Palinkas LA, et al. Social networks and implementation of evidence-based practices in public youth-serving systems: a mixed-methods study. Implementation Science. 2011;6:113. doi: 10.1186/1748-5908-6-113.

- 76. Valente TW, et al. Social network analysis for program implementation. PloS One. 2015;10(6):e0131712. doi: 10.1371/journal.pone.0131712.