Abstract

Humans generally prefer social over nonsocial stimuli from an early age. Reduced preference for social rewards has been observed in individuals with autism spectrum conditions (ASC). This preference has typically been noted in separate tasks that measure orienting toward and engaging with social stimuli. In this experiment, we used two eye-tracking tasks to index both of these aspects of social preference in 77 typical adults, using two measures: the global effect and preferential looking time. The global effect task measures saccadic deviation toward a social stimulus (related to ‘orienting’), while the preferential looking task records gaze duration bias toward social stimuli (related to ‘engaging’). Social rewards elicited greater saccadic deviation and greater gaze duration bias than nonsocial rewards, suggesting that they have both greater salience and higher value. Trait empathy was positively correlated with the measure of the relative value of social rewards, but not with the measure of their salience. This study thus elucidates the relationship between empathy and social reward processing.

Introduction

We are a social species. From an early stage, humans generally prefer attending to and interacting with conspecifics rather than objects [1,2]. This preference for social stimuli has been termed “social preference” or “social motivation” in different theoretical accounts, and is vital for our engagement with the social world [3]. A lack of preferential processing of social stimuli can lead to deficits in learning from one’s social environment, and consequently to deficits in social behaviour in adulthood. One account of conditions marked by deficits in empathy, such as autism spectrum conditions (ASC) and Psychopathy, suggests that the empathy deficits seen in these conditions can arise from a core deficit in social reward processing [4].

This suggestion is consistent with previous work showing that individuals with high trait empathy have a greater reward-related ventral striatal response to social rewards such as happy faces [5]. Variations in a key gene expressed within the human reward system, the cannabinoid receptor 1 gene (CNR1), were found to be associated with differences in eye-gaze fixation and neural response to happy faces (but not disgusted faces) in three independent samples [6,7,8]. In a recent study, Gossen et al. found that individuals with high trait empathy showed greater accumbens activation in response to social rewards than individuals with low trait empathy [9].

These studies leave two questions unanswered. First, are rewarding social stimuli preferred when contrasted with an alternative, rewarding nonsocial stimulus? The work discussed above used paradigms in which only rewarding social stimuli were presented [6,7,8] or in which social stimuli were presented on their own [8]. Studies that have presented social and nonsocial stimuli simultaneously have typically not used stimuli that were rewarding per se [10,11] (but see Sasson & Touchstone [12]). From early in development, social reward signals play a crucial role in learning about objects and people in the external environment through their reinforcing properties [13]. Reduced sensitivity to social reward signals may therefore lead to atypical social behaviour, as seen in ASC.

Second, does a preference for social rewards manifest through quicker orienting to social rewards, longer engagement with them, or both? Both phenomena have been observed in infants [14] as well as in young children [12]. It is useful to think of the orienting response as more related to the salience of a stimulus, and of the engagement response as more related to its value. Salience, in this context, refers to motivational salience, or “extrinsic salience”, i.e. a measure of how important a given stimulus is to the observer [15,16], rather than a stimulus property. The ‘value’ of a stimulus, on the other hand, refers to how pleasant or unpleasant it is. Salience and value of nonsocial rewards (e.g. food) have been widely studied in primates and shown to be encoded differently in the brain [17,18]. However, these processes, and individual differences in them, have not been systematically delineated in the domain of social reward processing in humans.

In this paper, we report two experiments designed to measure two metrics of social reward processing, and relate individual differences in these metrics to trait empathy. The first experiment is based on the global effect, or centre of gravity effect, paradigm [19,20]. In this paradigm, two stimuli are presented peripherally while the participant makes a saccade either to a pre-specified target or to a target of their own choosing. The saccade tends to deviate (“get pulled”) away from the target toward the distractor stimulus [21,22]. Initial saccade latencies in the global effect task are usually short (~180–230 ms), and such saccades are known to be influenced more by image saliency [23]. This paradigm thus allows for direct attentional competition between social and nonsocial reward targets. The extent to which the saccade is deviated toward social compared to nonsocial images can then be used as a metric of the relative salience of social rewards.

In the second experiment, we used a preferential looking task, widely used in developmental psychology to index preference [24,25]. In a preferential looking task, two images are presented side by side and participants are given no instructions. This provides an unconstrained setting in which participants can fixate wherever they like and switch back and forth between the two pictures over a long duration (usually ≥ 5 s). Gaze duration in tasks of this type correlates strongly with self-reported choices and preference ratings [26], and has been suggested to encode relative value [27,28]. In the current study, we used this paradigm to measure preferential gaze duration for social compared to nonsocial reward images, as a putative index of the relative value of social compared to nonsocial stimuli.

To address the key questions using the paradigms described above, it was necessary to develop a set of stimuli with social and nonsocial rewarding content that were age-appropriate for an adult sample. Scrambled versions of these images were also created to control for the impact of low-level visual features on any comparison between image types (the image-matching parameters are described in the Materials and methods section).

We hypothesised that social rewards would be associated with greater gaze duration bias and saccadic deviation than nonsocial rewards, and that trait empathy would be positively correlated with the measures of social preference. In the absence of prior behavioural data, we did not have a specific hypothesis about whether empathy would be related to the measure of salience or the measure of value of social rewards.

Materials and methods

Stimuli

Forty pairs of positively valenced images were chosen for their social or nonsocial content. Social content was defined as images in which one or more humans were visible (e.g. happy couples, babies), while nonsocial content included objects and food items chosen to appeal to a range of individuals (e.g. cupcakes, cars). A subset of these images (15 social, 21 nonsocial) was drawn from the International Affective Picture System [29], while the rest were drawn from publicly available, Creative Commons-licensed image databases such as Flickr (stimulus set available upon request).

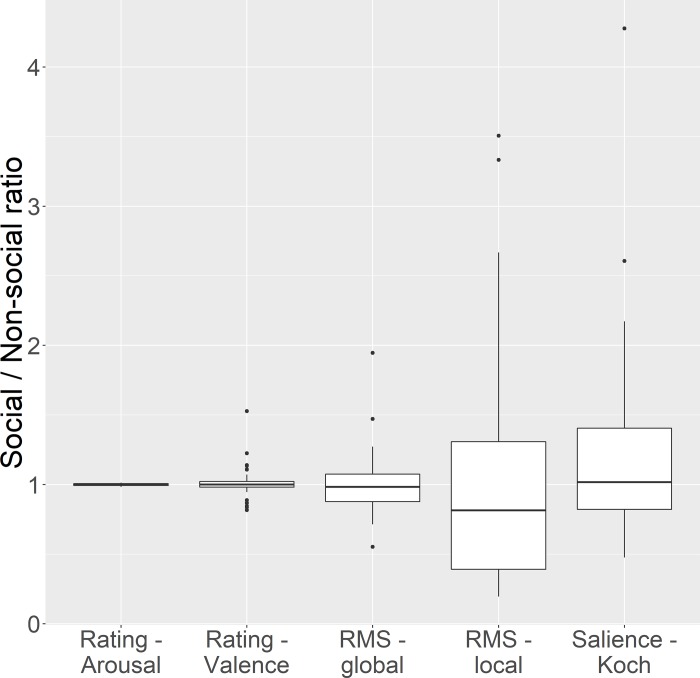

Each social reward image was paired with a specific nonsocial reward image such that the two were closely matched on a number of psychological parameters (arousal, valence) and stimulus parameters (contrast and stimulus saliency). The extent of matching on each of these parameters for each pair of images is depicted in Fig 1. Global and local RMS contrast were computed as described in [30]. Image saliency (a characteristic of the image calculated from its low-level visual features) was calculated using the Koch toolbox [31], which uses a steerable pyramid approach to combine the contrast, spatial frequency, orientation, and colour of the input image into a single map representing total saliency at each image point. Note, however, that “image saliency” as calculated by the Koch toolbox is solely a property of the image, and differs from our use of the term “salience”, which refers to a property of the image in relation to the observer (how important or relevant the image is to the observer). For every matching parameter, a ratio was calculated for each pair of stimuli, and these ratios were tested with a one-sample t-test against a test value of 1 (i.e. no difference in the value of the matching parameter). The confidence intervals for all of these ratios include 1, suggesting that there was no significant difference between social and nonsocial reward images on any of these parameters. Self-reported valence ratings of the same stimuli from a similar but independent sample support the claim that they are rated as significantly positive (these data are available at https://github.com/bhismalab/EyeTracking_PlosOne_2017). On a 9-point Likert scale, where a rating of 5 denotes neutral valence and 6 or higher denotes a rewarding image, the average rating given by 100 participants was 6.4 for social images and 6.32 for nonsocial images. Valence ratings did not differ significantly between social and nonsocial images (t(99) = 1.027, p = .307).
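The matching check described above can be sketched with the Python standard library. This is a minimal illustration, not the authors' analysis script: it assumes each ratio is the social value divided by the nonsocial value (the text does not state the direction), and the function name `ratio_t_statistic` and the example values are hypothetical. Only the t statistic is computed here; a p-value or confidence interval additionally needs the t distribution, for example via `scipy.stats.ttest_1samp`.

```python
import math
import statistics


def ratio_t_statistic(social, nonsocial, test_value=1.0):
    """One-sample t statistic for per-pair ratios tested against `test_value`.

    social[i] and nonsocial[i] hold the same matching parameter (e.g. an
    arousal rating or an RMS contrast value) for the two images of pair i.
    Assumption: the ratio is taken as social / nonsocial.
    Returns (mean ratio, t statistic, degrees of freedom).
    """
    if len(social) != len(nonsocial):
        raise ValueError("every social image needs a matched nonsocial image")
    ratios = [s / n for s, n in zip(social, nonsocial)]
    k = len(ratios)
    mean_ratio = statistics.mean(ratios)
    standard_error = statistics.stdev(ratios) / math.sqrt(k)
    t = (mean_ratio - test_value) / standard_error
    return mean_ratio, t, k - 1


# Hypothetical values for four image pairs (not the study's data).
mean_ratio, t, df = ratio_t_statistic([1.1, 0.9, 1.0, 1.2], [1.0, 1.0, 1.0, 1.0])
```

A mean ratio near 1 with a small t statistic is what "no difference on this parameter" looks like under this test.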

Fig 1. Parameters of matching of social and nonsocial images.

The ratio of a number of parameters was calculated for each pair of images (1 social, 1 nonsocial) used as stimuli in both tasks. These comprised psychological parameters (arousal and valence ratings) as well as image parameters (global root mean square [RMS] contrast, local RMS contrast, and stimulus saliency). Error bars denote 95% confidence intervals.

In addition, all image pairs were converted into grid-scrambled mosaics of 10-pixel blocks to create a control stimulus set, controlling for the effect of low-level stimulus properties such as contrast or colour on the measures of interest. All 40 image pairs were used in both tasks described below.
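Grid scrambling can be sketched as cutting an image into square tiles and shuffling the tiles across grid positions, which preserves every pixel value (and therefore the overall luminance and contrast distribution) while destroying the recognisable layout. This is a minimal sketch assuming 10 × 10-pixel tiles (the block size given in the Discussion), image dimensions that are multiples of the tile size, and an image stored as a list of pixel rows; the function name `grid_scramble` is hypothetical, and the exact scrambling procedure used for the stimuli may differ.

```python
import random


def grid_scramble(image, block=10, seed=None):
    """Return a grid-scrambled copy of `image`.

    `image` is a list of rows (each a list of pixel values) whose height
    and width are multiples of `block`. The image is cut into
    block x block tiles and the tiles are shuffled to new grid positions,
    so every pixel value is preserved but the spatial layout is destroyed.
    """
    h, w = len(image), len(image[0])
    if h % block or w % block:
        raise ValueError("image dimensions must be multiples of the block size")
    rows, cols = h // block, w // block
    positions = [(r, c) for r in range(rows) for c in range(cols)]
    shuffled = positions[:]
    random.Random(seed).shuffle(shuffled)

    out = [[None] * w for _ in range(h)]
    # The tile at destination (dr, dc) receives the contents of source tile (sr, sc).
    for (dr, dc), (sr, sc) in zip(positions, shuffled):
        for y in range(block):
            for x in range(block):
                out[dr * block + y][dc * block + x] = image[sr * block + y][sc * block + x]
    return out
```

Because tiles are moved intact, a 10-pixel tile can still carry local cues such as a patch of skin tone, which is the possibility raised in the Discussion for the above-chance looking at scrambled social images.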

Global effect (GE) task

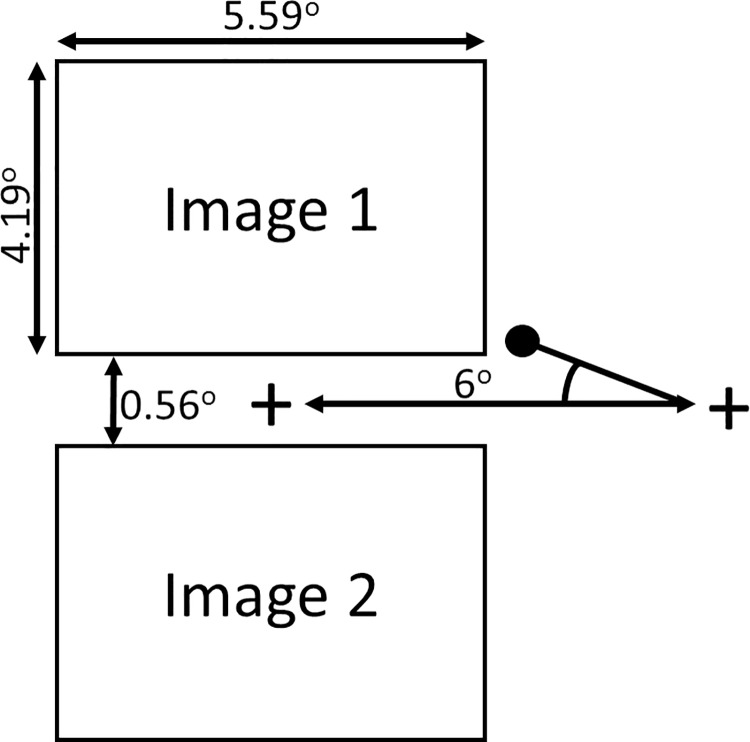

The GE task was based on a modified version of a task that has been used to study responses to emotional stimuli [22]. The stimuli were presented as shown in Fig 2. Each trial began with the presentation of a central fixation cross subtending a visual angle of 1 degree. After 800–1200 ms, the cross disappeared and immediately reappeared 6 degrees of visual angle to the left or right of centre on the horizontal meridian. Participants were instructed to look wherever the fixation cross reappeared, which required a target-directed saccade from the centre to the periphery of the screen.

Fig 2. The layout of a trial in the Global Effect task.

The angle of deviation towards the top or bottom image is calculated as the angle between the participant’s first saccade (represented by a black circle) and the shortest path between the initial location of the fixation cross and the location where it reappears (on the left or right of the screen). Social and nonsocial reward images appeared in vertical pairs as shown, and were presented to the right or the left of the initial fixation cross an equal number of times (40 times each). Participants were instructed to look only at the fixation cross and to ignore the images. The full set of stimuli is available on request from the first author.

Each of the 40 pairs of (social and nonsocial) images was presented in its scrambled and unscrambled form, thus comprising 80 trials. Stimulus type (scrambled/unscrambled, social/nonsocial) and spatial location (left/right, top/bottom) were counterbalanced across participants.
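The deviation measure can be sketched as the signed angle between the vector from the initial fixation position to the first saccade and the straight line from that position to the reappearing cross, with positive values meaning deviation toward the social image. This minimal sketch assumes that the saccade is summarised by its endpoint (the text does not specify this), that coordinates are screen coordinates with y increasing downward, and that the target lies on the horizontal meridian as described; `signed_deviation_deg` and its parameters are hypothetical names.

```python
import math


def signed_deviation_deg(start, target, endpoint, social_on_top, y_down=True):
    """Angle (degrees) between the first saccade and the start->target line.

    Positive values mean the saccade deviated toward the social image,
    negative values toward the nonsocial image. Points are (x, y) tuples
    in any consistent unit (pixels or degrees of visual angle).
    """
    # Vectors from the initial fixation position.
    tx, ty = target[0] - start[0], target[1] - start[1]
    sx, sy = endpoint[0] - start[0], endpoint[1] - start[1]
    if y_down:  # convert screen coordinates so that positive y means "up"
        ty, sy = -ty, -sy
    cross = tx * sy - ty * sx
    dot = tx * sx + ty * sy
    magnitude = math.degrees(math.atan2(abs(cross), dot))
    # The target lies on the horizontal meridian, so the saccade deviated
    # upward exactly when the cross product has the same sign as tx.
    deviated_up = cross * tx > 0
    toward_social = deviated_up == social_on_top
    return magnitude if toward_social else -magnitude
```

For example, with the cross jumping 6 units to the right and a saccade landing 1 unit above it, the magnitude is atan(1/6) ≈ 9.46°, positive when the social image is the top one and negative when it is the bottom one. Averaging these signed angles per participant gives the bias score described in the Data analysis section.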

Freeview (preferential looking) task

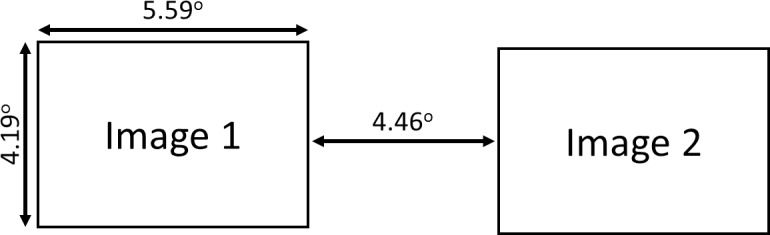

The stimuli were presented as shown in Fig 3. The 40 pairs of social and nonsocial images and 40 control pairs of scrambled images were presented side by side in a pseudorandom sequence. Each trial began with the presentation of a central fixation cross (“+”, 0.28 degrees of visual angle). Once participants fixated on the cross, an automated drift correction procedure using four head cameras corrected for any slight movements of the participant. The fixation cross was then removed and a pair of social and nonsocial images (each 5.59° × 4.19°) was immediately presented to the left and right for 5 seconds. During the trial, participants were free to look wherever they chose. Each trial was followed by a 1500 ms intertrial interval.

Fig 3. The layout for a trial in the Freeview task.

Participants were free to look at paired social and nonsocial images presented side by side. The average time participants spent looking at the social vs. nonsocial images was measured. Images were presented for 5000 ms. The full set of stimuli is available on request.

Procedure

After giving informed consent, participants were briefed about both tasks. Head movements were constrained with a chin rest, which held participants so that their eyes were in line with the horizontal meridian of the screen, at a viewing distance of 1 m. The eye-tracker was calibrated at the beginning of the experiment using a standard 9-point grid. Calibration was accepted only when the overall difference between the initial calibration and a validation retest was less than 0.5 degrees; if validation failed, calibration was repeated. The order of the two tasks was counterbalanced across participants.

Eye movements were recorded using a head-mounted, video-based eye-tracker with a sampling rate of 500 Hz (EyeLink II, SR Research). Viewing of the display was binocular, and we recorded monocularly from the observers’ right eyes. Stimuli were presented in greyscale on a 21-inch colour monitor with a refresh rate of 75 Hz (DiamondPro, Sony) using Experiment Builder (SR Research Ltd.).

Participants completed the Empathy Quotient (EQ) questionnaire [32] online.

Participants

Seventy-seven participants (42 female; mean age = 21 years 1 month, s.d. = 3 years 5 months), drawn from in and around the University of Reading campus, completed the FV task. One participant’s FV data were removed because gaze data were captured on fewer than 75% of scrambled trials. Of the FV participants, 74 also completed the GE task; GE data from 7 of these participants were discarded because fixations were captured on fewer than 75% of scrambled or unscrambled trials. All participants had normal or corrected-to-normal vision. Of the remaining participants, 68 FV (38 female) and 61 GE (33 female) participants completed the online EQ questionnaire. The study was approved by the University of Reading Research Ethics Committee.

Data analysis

The data were analysed and figures generated in R using the ggplot2 [33] and grid [34] packages. The global effect in response to social stimuli was measured as the average deviation towards social vs nonsocial images. On each trial, the angle of the first identified saccade was calculated relative to the straight line between the location where the fixation cross initially appeared and the location where it reappeared, and the deviation was classified as being toward either the social or the nonsocial image (see Fig 2). Saccade start and end points were identified using a velocity threshold of 22°/s and an acceleration threshold of 8000°/s². If the initial saccade on a trial was identified before 70 ms or after 500 ms had elapsed, the trial was excluded. On this basis, 92 of the 5360 trials (1.716%) were removed across participants, i.e. an average of 1.373 trials per participant. A positive average deviation indicates a bias towards social stimuli, whereas a negative value indicates a bias towards nonsocial stimuli. Average deviation was calculated separately for scrambled and unscrambled images.
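The onset criteria and the latency window can be illustrated with a simplified detector. This is a sketch under stated assumptions, not a reproduction of the eye-tracker's own saccade parser: it assumes gaze positions in degrees of visual angle sampled at 500 Hz, with sample 0 at the moment the fixation cross reappears; it uses simple two-point velocity and acceleration estimates; and it treats a sample as saccadic when either threshold is exceeded. The function names are hypothetical. Only the thresholds, sampling rate, and 70–500 ms window come from the text.

```python
import math

SAMPLE_RATE_HZ = 500              # EyeLink II sampling rate reported in the text
VEL_THRESHOLD = 22.0              # deg/s, from the text
ACC_THRESHOLD = 8000.0            # deg/s^2, from the text
MIN_LATENCY_MS, MAX_LATENCY_MS = 70, 500


def first_saccade_latency_ms(gaze_deg, rate_hz=SAMPLE_RATE_HZ):
    """Latency (ms) of the first sample exceeding either threshold, or None.

    gaze_deg: list of (x, y) gaze positions in degrees of visual angle,
    with sample 0 assumed to be the moment the fixation cross reappears.
    """
    dt = 1.0 / rate_hz
    # Two-point speed estimate between consecutive samples.
    speed = [0.0]
    for (x0, y0), (x1, y1) in zip(gaze_deg, gaze_deg[1:]):
        speed.append(math.hypot(x1 - x0, y1 - y0) / dt)
    for i in range(1, len(speed)):
        accel = (speed[i] - speed[i - 1]) / dt
        if speed[i] > VEL_THRESHOLD or abs(accel) > ACC_THRESHOLD:
            return i * 1000.0 / rate_hz
    return None


def keep_trial(latency_ms):
    """Exclude trials with no detected saccade or latency outside 70-500 ms."""
    return latency_ms is not None and MIN_LATENCY_MS <= latency_ms <= MAX_LATENCY_MS
```

For instance, a trace that stays still for 100 samples and then moves at 100°/s yields a latency of 200 ms and is kept, whereas movement starting at sample 20 (40 ms) is excluded as anticipatory. As a check on the reported counts, 67 GE participants × 80 trials = 5360 trials, 92/5360 ≈ 1.716%, and 92/67 ≈ 1.373 trials per participant.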

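Preferential looking for social stimuli in the FV task was calculated as the proportion of gaze duration (dwell time) on social images on each trial using the following formula:

$$\text{Proportion}_{\text{social}} = \frac{\text{dwell time}_{\text{social}}}{\text{dwell time}_{\text{social}} + \text{dwell time}_{\text{nonsocial}}}$$
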
For both tasks, blinks were automatically discounted by the analysis software (SR Research DataViewer). In the FV task, after blinks were processed, the shortest fixation was 32 ms, equivalent to 16 consecutive samples on a 500 Hz eye-tracker. No trials were excluded due to missing data.
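The Freeview measure can be sketched as follows, assuming that the per-trial proportion is dwell time on the social image divided by total dwell time on both images (so that .5 marks equal looking, as used in the Results), that per-trial dwell times in milliseconds have already been exported from the eye-tracking software, and that each participant's score is the mean of their per-trial proportions. The function names are hypothetical, and returning `None` for a trial with no gaze on either image is an assumption of this sketch; the text reports that no trials were excluded for missing data.

```python
import statistics


def social_gaze_proportion(social_ms, nonsocial_ms):
    """Proportion of dwell time on the social image for one trial."""
    total = social_ms + nonsocial_ms
    if total == 0:
        return None  # no gaze on either image: treated as missing here
    return social_ms / total


def participant_mean_proportion(trials):
    """Mean per-trial proportion; `trials` holds (social_ms, nonsocial_ms) pairs."""
    props = [social_gaze_proportion(s, n) for s, n in trials]
    return statistics.mean(p for p in props if p is not None)
```

For example, a trial with 3000 ms on the social image and 2000 ms on the nonsocial image gives a proportion of 0.6.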

All test statistics presented in the following section are 2-tailed.

Results

Global effect task

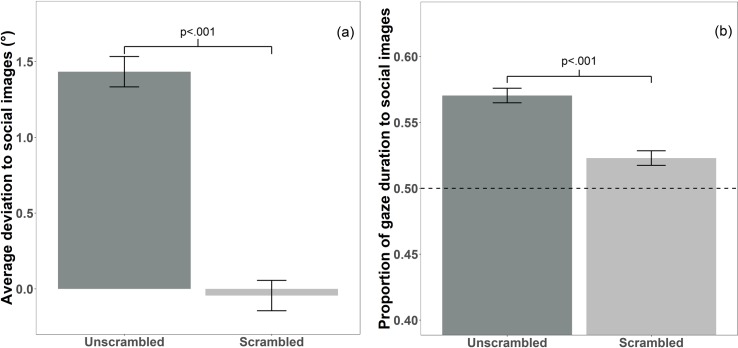

For unscrambled images, a one-sample t-test against a test value of 0 (corresponding to no deviation toward either the social or the nonsocial image) showed that the average deviation was significantly biased toward social rather than nonsocial images (t(66) = 8.409, p < .001, Cohen’s d = 1.027). This was not the case for scrambled images (t(66) = -.392, p = .697, Cohen’s d = .048; see Fig 4A). A direct comparison of the deviation toward social images in the unscrambled and scrambled conditions revealed a significant difference in average deviation (t(66) = 7.371, p < .001, Cohen’s d = 1.253; see Fig 4A).

Fig 4. Bias to social stimuli in the Global Effect and Freeview task.

(a) The average angle of deviation towards social images during the Global Effect task. There was a significant sociality bias in average deviation for unscrambled images (mean = 1.433°, standard deviation = 1.395°, standard error = .17°, p < .001), but not for scrambled images (mean = -.044°, standard deviation = .913°, standard error = .111°, p = .697). Error bars reflect within-subject errors, calculated using the Cousineau method [35]. (b) Bar graph of the proportion of gaze duration to social images during the Freeview task. The proportion of gaze duration was significantly biased toward social images for both unscrambled (mean = .57, standard deviation = .092, standard error = .011, p < .001) and scrambled images (mean = .523, standard deviation = .046, standard error = .005, p < .001). Values above the dotted line at .5 indicate a bias to social images.
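The Cousineau method normalises each participant's scores by subtracting that participant's mean across conditions and adding back the grand mean, so that the resulting error bars reflect within-subject variability rather than overall differences between participants. A minimal sketch follows, assuming a balanced design with every participant contributing one score per condition; the function name is hypothetical. Whether Morey's correction factor, √(J/(J − 1)) for J conditions, was also applied is not stated, so it is omitted here.

```python
import math
import statistics


def cousineau_se(data):
    """Within-subject standard errors using Cousineau normalisation.

    data: dict mapping condition name -> list of per-participant scores,
    with participants in the same order in every condition.
    """
    conditions = list(data)
    n = len(data[conditions[0]])
    subject_means = [statistics.mean(data[c][i] for c in conditions) for i in range(n)]
    grand_mean = statistics.mean(subject_means)
    se = {}
    for c in conditions:
        # Remove each participant's overall level, then restore the grand mean.
        normalised = [data[c][i] - subject_means[i] + grand_mean for i in range(n)]
        se[c] = statistics.stdev(normalised) / math.sqrt(n)
    return se
```

If every participant shows the same difference between conditions, the normalised scores are constant and the within-subject error is zero, however much participants differ from each other overall.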

There was no significant correlation between EQ and the average deviation toward social reward images for either unscrambled (r(59) = .059, p = .652) or scrambled images (r(59) = -.114, p = .382).

Freeview task

One-sample t-tests against a test value of .5 (corresponding to an equal proportion of dwell time on social and nonsocial images) showed that the proportion of gaze duration to social images was significantly higher than .5 for both unscrambled images (mean = .57; t(75) = 6.664, p < .001, Cohen’s d = .764) and scrambled images (mean = .523; t(75) = 4.362, p < .001, Cohen’s d = .5; see Fig 4B). A paired-samples t-test showed that the proportion of gaze duration to social images was greater for unscrambled than for scrambled images (t(75) = 4.285, p < .001, Cohen’s d = .652; see Fig 4B).
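The text does not state which effect-size formulas were used, but the reported values appear consistent with two standard ones. For the one-sample tests, Cohen's d = (M − μ0)/SD, which equals t/√n; for unscrambled Freeview images, 6.664/√76 ≈ 0.764. For the paired Global Effect comparison, dividing the difference between the condition means in Fig 4A by the square root of the average of the two condition variances (often written d_av) gives 1.253, matching the reported value. The sketch below implements these formulas; the function names are hypothetical, and the inference about which formulas were used is ours.

```python
import math
import statistics


def one_sample_d(scores, mu):
    """Cohen's d for a one-sample test: (mean - mu) / SD."""
    return (statistics.mean(scores) - mu) / statistics.stdev(scores)


def one_sample_t(scores, mu):
    """One-sample t statistic, using the identity t = d * sqrt(n)."""
    return one_sample_d(scores, mu) * math.sqrt(len(scores))


def paired_d_av(mean1, mean2, sd1, sd2):
    """Paired-design d: mean difference over the root mean of the two variances."""
    return (mean1 - mean2) / math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)


# Global Effect summary statistics from Fig 4A: unscrambled vs scrambled.
d_ge = paired_d_av(1.433, -0.044, 1.395, 0.913)
```

Note that d_av differs from the paired-samples d based on the standard deviation of the difference scores (t/√n), which would give a smaller value here (7.371/√67 ≈ 0.90).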

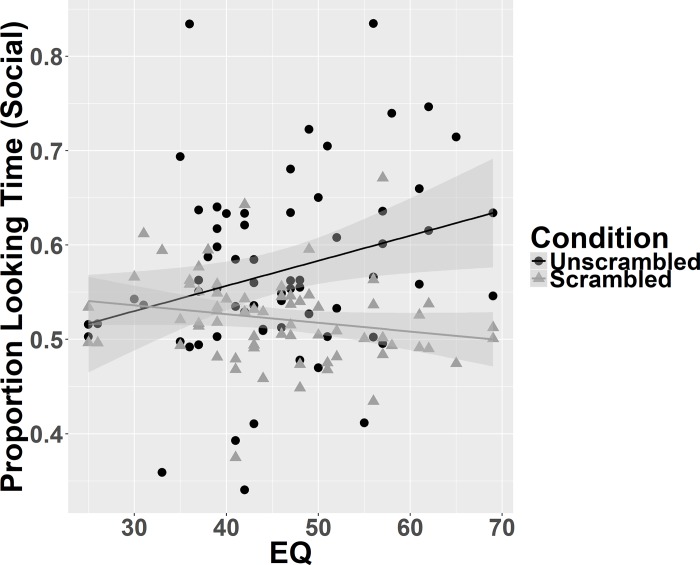

There was a positive correlation between EQ and the proportion of gaze duration to social images for unscrambled images (r(66) = .278, p = .022), but no significant correlation for scrambled images (r(66) = -.198, p = .106; see Fig 5). To directly test the difference between these two dependent correlations, Steiger’s Z was calculated [36], revealing a significant difference between the correlations (Steiger’s Z = 2.95, p = .003).

Fig 5. Correlations between trait empathy and proportion of gaze duration to social images.

Greater empathy was significantly associated with more time spent looking at social than nonsocial images when the images were unscrambled (black line and circles; r(66) = .277, p = .022), but not when they were scrambled (grey line and triangles; r(66) = -.198, p = .106).
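Steiger's Z compares two correlations that share one variable (here EQ, correlated with the unscrambled and with the scrambled gaze proportion) and measured in the same participants. The sketch below follows Steiger's (1980) version that uses the pooled correlation r̄ = (r_jk + r_jh)/2 in the covariance term; which variant the authors used is not stated, and the function name is hypothetical. The test also needs the correlation between the two gaze proportions (r_kh), which is not reported in the text, so the reported Z = 2.95 cannot be reproduced from the numbers above alone.

```python
import math


def steiger_z(r_jk, r_jh, r_kh, n):
    """Steiger's (1980) Z for two dependent correlations sharing variable j.

    r_jk: correlation of EQ with the unscrambled gaze proportion
    r_jh: correlation of EQ with the scrambled gaze proportion
    r_kh: correlation between the two gaze proportions
    n:    number of participants
    """
    z_jk, z_jh = math.atanh(r_jk), math.atanh(r_jh)  # Fisher r-to-z transform
    r_bar = (r_jk + r_jh) / 2                        # pooled correlation
    psi = (r_kh * (1 - 2 * r_bar ** 2)
           - 0.5 * r_bar ** 2 * (1 - 2 * r_bar ** 2 - r_kh ** 2))
    c = psi / (1 - r_bar ** 2) ** 2                  # covariance of z_jk and z_jh
    return (z_jk - z_jh) * math.sqrt(n - 3) / math.sqrt(2 - 2 * c)


# Hypothetical r_kh = 0.2 (not reported); r values and n = 68 are from the text.
z = steiger_z(0.278, -0.198, 0.2, 68)
```

A two-tailed p-value follows from the standard normal distribution, p = 2(1 − Φ(|Z|)).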

Discussion

In this study, we developed a new set of ecologically valid stimuli depicting social and nonsocial rewards. Using two separate tasks with this stimulus set in the general population, we found that social reward images evoked greater saccadic deviation and preferential looking than nonsocial reward images, after controlling for differences in low-level visual properties. This result is consistent with similar work on dynamic scenes, which showed that low-level stimulus features cannot explain the gaze response to social stimuli [37]. Importantly, the preferential gaze bias toward social reward images increased with individual differences in trait empathy. This relationship with empathy was seen only for the measure of engagement (i.e. the preferential looking experiment), and not for the measure of orienting (i.e. the experiment measuring saccadic deviation).

Humans orient quickly to social stimuli from an early age, across sensory modalities [38,39,40]. Typically developing children orient more to social stimuli when these are presented within an array of nonsocial stimuli [41]. Orienting responses are driven primarily by the salience of the target, so these results suggest that social stimuli are generally regarded as more salient than nonsocial stimuli. However, none of these paradigms measured the global effect, which relies on fast saccades that are influenced more by image saliency [23]. Our global effect results are therefore consistent with the literature on infants and young children, and point to a higher salience of social compared to nonsocial reward images in adults.

Relative gaze duration in paradigms where two stimuli are presented simultaneously may index the relative value of the two targets, and two computational models (the gaze cascade and drift diffusion models) have been proposed to relate gaze duration bias to the relative value of targets [27,28]. Preferential looking paradigms have been used widely in developmental psychology, where it has been shown that infants look longer at social than at nonsocial stimuli [40,42,43]. This suggests that, in typically developing infants, social stimuli generally have a higher value than nonsocial stimuli. However, these stimuli are usually not matched for visual properties. Our findings are consistent with these results, and show that social rewards may have a higher value than nonsocial rewards. Importantly, this difference was not driven by differences in stimulus arousal or valence, or by differences in the low-level image properties that we tested.

Empathy was positively correlated with gaze duration for social compared to nonsocial reward images. This suggests that highly empathic individuals may attribute greater value to social rewards relative to nonsocial rewards. This is consistent with experiments that found greater striatal activation to happy faces in individuals with high trait empathy [5]. Janowski et al. [44] showed that empathic choice is influenced by value processing in the medial prefrontal cortex in a choice task. Jones & Klin [42] showed that infants who go on to develop autism show progressively reduced gaze fixation toward faces when these are presented simultaneously with objects in a naturalistic video. Individuals with ASC score low on questionnaire measures of empathy [32]. These converging lines of evidence suggest that individuals low in empathy might show reduced gaze duration for social vs nonsocial stimuli, a suggestion supported by our results. However, this leaves open the question of the direction of the relationship. Is reduced responsivity to social rewards early in life responsible for lower trait empathy in adulthood, or does trait empathy determine the level of relative responsivity to social rewards? From a developmental perspective, responsivity to social rewards should emerge before all the components of empathy have developed (especially the more cognitive components, such as theory of mind). Accordingly, we speculate that the former alternative is more likely, though this question can only be answered by future longitudinal studies. Further work should explore whether these effects are magnified or reduced when the nonsocial reward images are chosen to be of high interest to individual participants, similar to the approach taken by Sasson and colleagues [12,45]. Another potential avenue for exploration would be to test whether such indices of social preference hold when stimuli of neutral or negative valence are used.

Three caveats need to be considered in relation to the results discussed above. First, in paradigms such as those reported in this paper, it is important to match stimuli as closely as possible on both psychological parameters (such as arousal and valence) and stimulus parameters (such as global and local RMS contrast). However, ‘perfect’ matching on all stimulus parameters remains a thorny issue, since it would not be difficult to contrive an image-based metric on which the two image sets differed statistically (e.g. an algorithm that looked for colours corresponding to flesh tones, or a measure based on a simple face detector). Second, although the proportion of gaze duration for social over nonsocial reward images was significantly greater in the unscrambled than in the scrambled condition, the proportion of gaze duration for social reward images was significantly above 50% in both conditions. This unexpected observation could be due to the size (10 × 10 pixels) of the blocks used to scramble the pictures. Although scrambling renders the overall image completely unrecognisable, it might nonetheless be possible to detect a few recognisable features (e.g. flesh-tone colours) even in the scrambled images, so the scrambled social images might have attracted more gaze than the scrambled nonsocial images. Third, although the inferences in this study concern the processing of social vs nonsocial rewards, all the images used were rewarding, so it is not possible to rule out an alternative interpretation based on the social content of the images alone. Future experiments with similarly matched pairs of rewarding and non-rewarding social and nonsocial stimuli will be needed to distinguish between these alternative explanations.

Conclusion

In this set of two experiments, we used a new set of images of social and nonsocial rewards and showed that social rewards are associated with greater saccadic deviation and longer gaze duration than nonsocial rewards. This social advantage persists even after minimising differences in the arousal and valence of these images and in their low-level visual properties. Trait empathy was positively correlated with the gaze duration bias for social rewards, but not with the saccadic deviation toward social rewards. This result points to a potential distinction between two important aspects of social reward processing (i.e. salience and value), and clarifies their relationship with phenotypic dimensions relevant to ASC. Future research should directly test these parameters of social reward processing in individuals with atypical empathy profiles, such as those with ASC and Psychopathy.

Acknowledgments

The authors acknowledge Natalie Kkeli, Violetta Mandreka, Charlotte Whiteford and Kara Dennis for help with data collection. BC was supported by the Philip Leverhulme Prize from the Leverhulme Trust and the MRC New Investigator grant, CPT was supported by the University of Reading Research Endowment Trust Fund, ATH was supported by an ESRC-MRC interdisciplinary doctoral studentship, and LC was supported by Sapienza University of Rome studentship.

Data Availability

Data and the scripts that generated the analyses are available from GitHub (https://github.com/bhismalab/EyeTracking_PlosOne_2017).

Funding Statement

BC was supported by the Philip Leverhulme Prize (PLP-2015-329) from the Leverhulme Trust and the MRC New Investigator grant (G1100359/1), CPT was supported by the University of Reading Research Endowment Trust Fund, ATH was supported by an ESRC-Medical Research Council interdisciplinary doctoral studentship, LC was supported by Sapienza University of Rome studentship. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Bukach CM, Peissig JJ. How faces became special. Perceptual Expertise: Bridging Brain and Behavior: Bridging Brain and Behavior. 2009;11. [Google Scholar]

- 2.Legerstee M. Contingency Effects of People and Objects on Subsequent Cognitive Functioning in Three-month-old Infants. Soc Dev. 1997;6(3):307–21. [Google Scholar]

- 3.Chevallier C, Kohls G, Troiani V, Brodkin ES, Schultz RT. The social motivation theory of autism. Trends Cogn Sci. 2012. April;16(4):231–9. doi: 10.1016/j.tics.2012.02.007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Dawson G, Bernier R, Ring RH. Social attention: a possible early indicator of efficacy in autism clinical trials. J Neurodev Disord. 2012. January;4(1):11 doi: 10.1186/1866-1955-4-11 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Chakrabarti B, Bullmore E, Baron-Cohen S. Empathizing with basic emotions: common and discrete neural substrates. Soc Neurosci. 2006. January;1(3–4):364–84. doi: 10.1080/17470910601041317 [DOI] [PubMed] [Google Scholar]

- 6.Chakrabarti B, Baron-Cohen S. Variation in the human cannabinoid receptor CNR1 gene modulates gaze duration for happy faces. Mol Autism. 2011. January;2(1):10 doi: 10.1186/2040-2392-2-10 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Chakrabarti B, Kent L, Suckling J, Bullmore E, Baron-Cohen S. Variations in the human cannabinoid receptor (CNR1) gene modulate striatal responses to happy faces. Eur J Neurosci. 2006. April;23(7):1944–8. doi: 10.1111/j.1460-9568.2006.04697.x [DOI] [PubMed] [Google Scholar]

- 8.Domschke K, Dannlowski U, Ohrmann P, Lawford B, Bauer J, Kugel H, et al. Cannabinoid receptor 1 (CNR1) gene: impact on antidepressant treatment response and emotion processing in major depression. Eur Neuropsychopharmacol. 2008. October;18(10):751–9. doi: 10.1016/j.euroneuro.2008.05.003 [DOI] [PubMed] [Google Scholar]

- 9.Gossen A, Groppe SE, Winkler L, Kohls G, Herrington J, Schultz RT, et al. Neural evidence for an association between social proficiency and sensitivity to social reward. Soc Cogn Affect Neurosci. 2014. May;9(5):661–70. doi: 10.1093/scan/nst033 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Fletcher-Watson S, Leekam SR, Benson V, Frank MC, Findlay JM. Eye-movements reveal attention to social information in autism spectrum disorder. Neuropsychologia. 2009. January;47(1):248–57. doi: 10.1016/j.neuropsychologia.2008.07.016 [DOI] [PubMed] [Google Scholar]

- 11.Pierce K, Conant D, Hazin R, Stoner R, Desmond J. Preference for geometric patterns early in life as a risk factor for autism. Arch Gen Psychiatry. 2011. January;68(1):101–9. doi: 10.1001/archgenpsychiatry.2010.113 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Sasson NJ, Touchstone EW. Visual attention to competing social and object images by preschool children with autism spectrum disorder. J Autism Dev Disord. 2014. March;44(3):584–92. doi: 10.1007/s10803-013-1910-z [DOI] [PubMed] [Google Scholar]

- 13.Wu R, Gopnik A, Richardson DC, Kirkham NZ. Infants learn about objects from statistics and people. Developmental psychology. 2011. September;47(5):1220 doi: 10.1037/a0024023 [DOI] [PubMed] [Google Scholar]

- 14.Elsabbagh M, Gliga T, Pickles A, Hudry K, Charman T, Johnson MH, BASIS Team. The development of face orienting mechanisms in infants at-risk for autism. Behavioural brain research. 2013. August 15;251:147–54. doi: 10.1016/j.bbr.2012.07.030 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Jensen J, Smith AJ, Willeit M, Crawley AP, Mikulis DJ, Vitcu I, et al. Separate brain regions code for salience vs. valence during reward prediction in humans. Hum Brain Mapp. 2007. April;28(4):294–302. doi: 10.1002/hbm.20274 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Zalla T, Sperduti M. The amygdala and the relevance detection theory of autism: an evolutionary perspective. Front Hum Neurosci. 2013. January;7:894. doi: 10.3389/fnhum.2013.00894

- 17.Leathers ML, Olson CR. In monkeys making value-based decisions, LIP neurons encode cue salience and not action value. Science. 2012. October 5;338(6103):132–5. doi: 10.1126/science.1226405

- 18.Talmi D, Atkinson R, El-Deredy W. The feedback-related negativity signals salience prediction errors, not reward prediction errors. J Neurosci. 2013. May 8;33(19):8264–9. doi: 10.1523/JNEUROSCI.5695-12.2013

- 19.Findlay JM. Global visual processing for saccadic eye movements. Vision Res. 1982;22(8):1033–45.

- 20.He P, Kowler E. The role of location probability in the programming of saccades: Implications for “center-of-gravity” tendencies. Vision Res. 1989;29(9):1165–81.

- 21.Eggert T, Sailer U, Ditterich J, Straube A. Differential effect of a distractor on primary saccades and perceptual localization. Vision Res. 2002. December;42(28):2969–84.

- 22.McSorley E, van Reekum CM. The time course of implicit affective picture processing: an eye movement study. Emotion. 2013. August;13(4):769–73. doi: 10.1037/a0032185

- 23.Schütz AC, Trommershäuser J, Gegenfurtner KR. Dynamic integration of information about salience and value for saccadic eye movements. Proc Natl Acad Sci U S A. 2012. May 8;109(19):7547–52. doi: 10.1073/pnas.1115638109

- 24.Batki A, Baron-Cohen S, Wheelwright S, Connellan J, Ahluwalia J. Is there an innate gaze module? Evidence from human neonates. Infant Behav Dev. 2000. February;23(2):223–9.

- 25.Fantz RL. Pattern vision in young infants. Psychol Rec [Internet]. 1958. [cited 2015 Jul 11]; Available from: http://psycnet.apa.org/psycinfo/1959-07498-001

- 26.Taylor C, Schloss K, Palmer SE, Franklin A. Color preferences in infants and adults are different. Psychon Bull Rev. 2013. October;20(5):916–22. doi: 10.3758/s13423-013-0411-6

- 27.Krajbich I, Armel C, Rangel A. Visual fixations and the computation and comparison of value in simple choice. Nat Neurosci. 2010. October;13(10):1292–8. doi: 10.1038/nn.2635

- 28.Shimojo S, Simion C, Shimojo E, Scheier C. Gaze bias both reflects and influences preference. Nat Neurosci. 2003. December;6(12):1317–22. doi: 10.1038/nn1150

- 29.Lang PJ, Bradley MM, Cuthbert BN. International affective picture system (IAPS): Technical manual and affective ratings. 1999; Available from: http://www.hsp.epm.br/dpsicobio/Nova_versao_pagina_psicobio/adap/instructions.pdf

- 30.Bex PJ, Makous W. Spatial frequency, phase, and the contrast of natural images. J Opt Soc Am A. 2002;19(6):1096.

- 31.Walther D, Koch C. Modeling attention to salient proto-objects. Neural Netw. 2006. November 30;19(9):1395–407. doi: 10.1016/j.neunet.2006.10.001

- 32.Baron-Cohen S, Wheelwright S. The Empathy Quotient: An Investigation of Adults with Asperger Syndrome or High Functioning Autism, and Normal Sex Differences. J Autism Dev Disord. 2004. April;34(2):163–75.

- 33.Wickham H. ggplot2: elegant graphics for data analysis. New York: Springer; 2009.

- 34.R Core Team. R: A language and environment for statistical computing. 2012.

- 35.Cousineau D. Confidence intervals in within-subject designs: A simpler solution to Loftus and Masson’s method. Tutorials in Quantitative Methods for Psychology. 2005;1(1):42–5.

- 36.Steiger JH. Tests for Comparing Elements of a Correlation Matrix. Psychol Bull. 1980;87(2):245–51.

- 37.Coutrot A, Guyader N. How saliency, faces, and sound influence gaze in dynamic social scenes. J Vis. 2014. July 3;14(8):5. doi: 10.1167/14.8.5

- 38.Dawson G, Meltzoff AN, Osterling J, Rinaldi J, Brown E. Children with autism fail to orient to naturally occurring social stimuli. J Autism Dev Disord. 1998;28(6):479–85.

- 39.Mosconi MW, Reznick JS, Mesibov G, Piven J. The Social Orienting Continuum and Response Scale (SOC-RS): a dimensional measure for preschool-aged children. J Autism Dev Disord. 2009. February;39(2):242–50. doi: 10.1007/s10803-008-0620-4

- 40.Johnson MH, Dziurawiec S, Ellis H, Morton J. Newborns’ preferential tracking of face-like stimuli and its subsequent decline. Cognition. 1991. August;40(1–2):1–19.

- 41.Sasson NJ, Turner-Brown LM, Holtzclaw TN, Lam KSL, Bodfish JW. Children with autism demonstrate circumscribed attention during passive viewing of complex social and nonsocial picture arrays. Autism Res. 2008. February;1(1):31–42. doi: 10.1002/aur.4

- 42.Jones W, Klin A. Attention to eyes is present but in decline in 2-6-month-old infants later diagnosed with autism. Nature. 2013. December 19;504(7480):427–31. doi: 10.1038/nature12715

- 43.Simion F, Regolin L, Bulf H. A predisposition for biological motion in the newborn baby. Proc Natl Acad Sci U S A. 2008. January 15;105(2):809–13. doi: 10.1073/pnas.0707021105

- 44.Janowski V, Camerer C, Rangel A. Empathic choice involves vmPFC value signals that are modulated by social processing implemented in IPL. Soc Cogn Affect Neurosci. 2013. February;8(2):201–8. doi: 10.1093/scan/nsr086

- 45.Sasson NJ, Elison JT, Turner-Brown LM, Dichter GS, Bodfish JW. Brief report: Circumscribed attention in young children with autism. J Autism Dev Disord. 2011. February 1;41(2):242–7. doi: 10.1007/s10803-010-1038-3

Data Availability Statement

Data and the scripts that generated the analyses are available from GitHub (https://github.com/bhismalab/EyeTracking_PlosOne_2017).