ABSTRACT

Background

The Association of American Medical Colleges describes 13 core entrustable professional activities (EPAs) that every graduating medical student should be expected to perform proficiently on day 1 of residency, regardless of chosen specialty. Studies have shown wide variability in program director (PD) confidence in interns' abilities to perform these core EPAs. Little is known regarding comparison of United States Medical Licensing Examination (USMLE) scores with proficiency in EPAs.

Objective

We determined if PDs from a large health system felt confident in their postgraduate year 1 residents' abilities to perform the 13 core EPAs, and compared perceived EPA proficiency with USMLE Step 1 and Step 2 scores.

Methods

The PDs were asked to rate their residents' proficiency in each EPA and to provide residents' USMLE scores. Timing coincided with the reporting period for resident milestones.

Results

Surveys were completed on 204 of 328 residents (62%). PDs reported that 69% of residents (140 of 204) were prepared for EPA 4 (orders/prescriptions), 61% (117 of 192) for EPA 7 (form clinical questions), 68% (135 of 198) for EPA 8 (handovers), 63% (116 of 185) for EPA 11 (consent), and 38% (49 of 129) for EPA 13 (patient safety). EPA ratings were negatively correlated with USMLE Step 1 and Step 2 scores (r(101) = −0.23, P = .031).

Conclusions

PDs felt that a significant percentage of residents were not adequately prepared in order writing, forming clinical questions, handoffs, informed consent, and promoting a culture of patient safety. We found no positive association between USMLE scores and EPA ratings.

Introduction

In 2014, the Association of American Medical Colleges (AAMC)1 described 13 core entrustable professional activities (EPAs) every graduating medical student should be expected to perform proficiently, without direct supervision, on day 1 of residency, regardless of chosen specialty (box). The core EPAs evolved from the entry-level residency milestones of pediatrics, surgery, emergency medicine, internal medicine, and psychiatry as defined by the Accreditation Council for Graduate Medical Education (ACGME).2,3 As described by ten Cate,4,5 EPAs are discrete units of work representative of physicians' routine activities. They stand in contrast to competencies, which measure an individual's abilities in a particular domain. As multiple competencies are inherent in each EPA, a learner's mastery of any single EPA can be seen as representing proficiency in several competencies.6

What was known and gap

Core entrustable professional activities (EPAs) have been developed with the expectation that medical school graduates should be able to perform them on their first day of residency.

What is new

A study found program directors reporting a considerable percentage of residents who were not adequately prepared on selected core EPAs.

Limitations

Single institution study may limit generalizability.

Bottom line

Program directors felt that a significant percentage of residents were not prepared to write orders, form clinical questions, engage in handoffs and informed consent, or promote a culture of patient safety.

Medical schools have been surveying directors of the residency programs in which their students matched to evaluate their graduates' level of core EPA proficiency at the start of residency. In 2015, Lindeman et al7 surveyed surgery program directors regarding their confidence in new residents' abilities to perform the core EPAs, comparing those data with data on resident confidence collected via the AAMC Graduation Questionnaire. The comparison showed a sizable gap between graduating medical student confidence and program director confidence in residents' performance of the 13 core EPAs, with program directors reporting less confidence than the residents themselves. More surprising was the finding of wide variability in measures of program director confidence in interns' abilities to perform core EPAs, which ranged from 78.7% for EPA 1 (history and physical) to 13.5% for EPA 13 (patient safety).7

Our study explored whether program directors from 1 large health care system with a diverse resident population were confident in their postgraduate year 1 (PGY-1) residents' abilities to perform the core EPAs 6 months into training; we also compared this information on reported EPA proficiency with scores on the United States Medical Licensing Examination (USMLE) Step 1 and Step 2. Programs use USMLE scores in screening and ranking applicants. Although USMLE scores and EPA ratings measure different skills, we sought to determine whether the two were correlated.

Methods

Northwell Health is a large integrated health care organization with PGY-1 residents from 65 US allopathic, 8 US osteopathic, and 31 international medical schools. We asked program directors from all ACGME-accredited residency programs with PGY-1 residents to complete a survey on how well these residents performed on the 13 core EPAs. Specifically, program directors were asked to rate each PGY-1 resident in all 13 EPAs using discrete categories: requires direct observation, requires general supervision, or not observed. Program directors also were asked to state reasons why an EPA might have been challenging to perform or challenging to observe. Finally, respondents were asked to provide USMLE Step 1 and Step 2 Clinical Knowledge (CK) scores for each PGY-1 resident. We did not ask for the Clinical Skills portion of Step 2, as it is graded pass/fail and all medical students are required to pass the Clinical Skills examination before starting residency at Northwell Health.

Surveys were distributed beginning in January 2016. This timing allowed programs to observe these residents for the first 6 months of their training, and it followed the date by which programs formally submit the ACGME milestones report for each resident. Therefore, program directors had data from their Clinical Competency Committees available when completing the surveys.

All ACGME-accredited programs with PGY-1 residents at Northwell Health were included in the study. Participation was voluntary.

Northwell Health's Institutional Review Board deemed this study exempt from ethical review.

Results

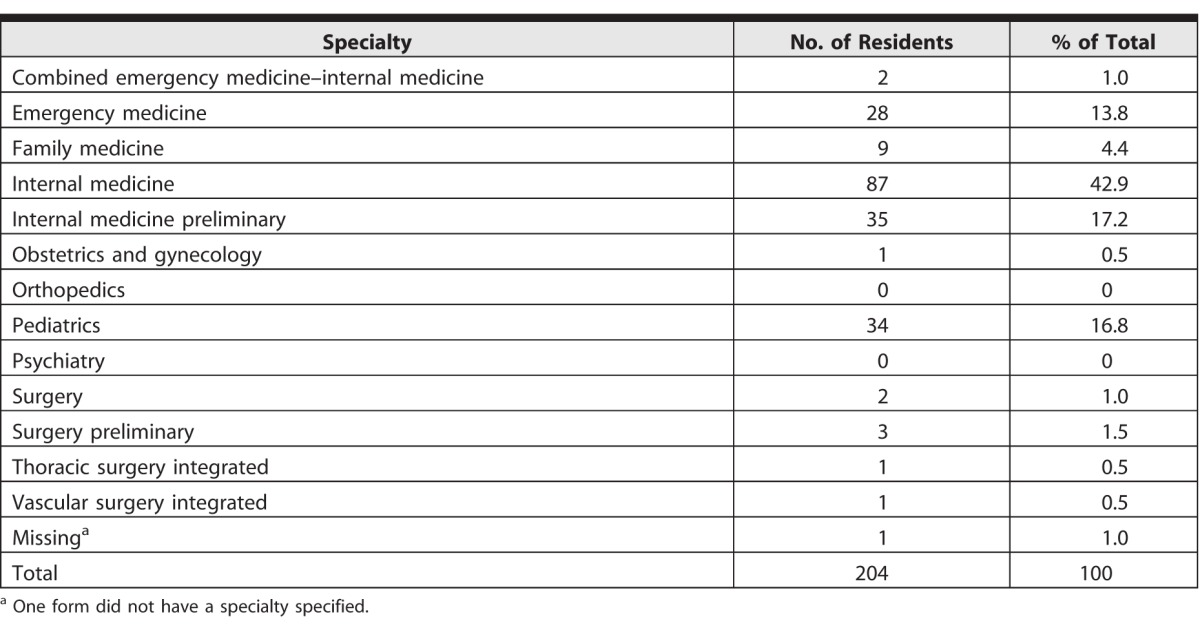

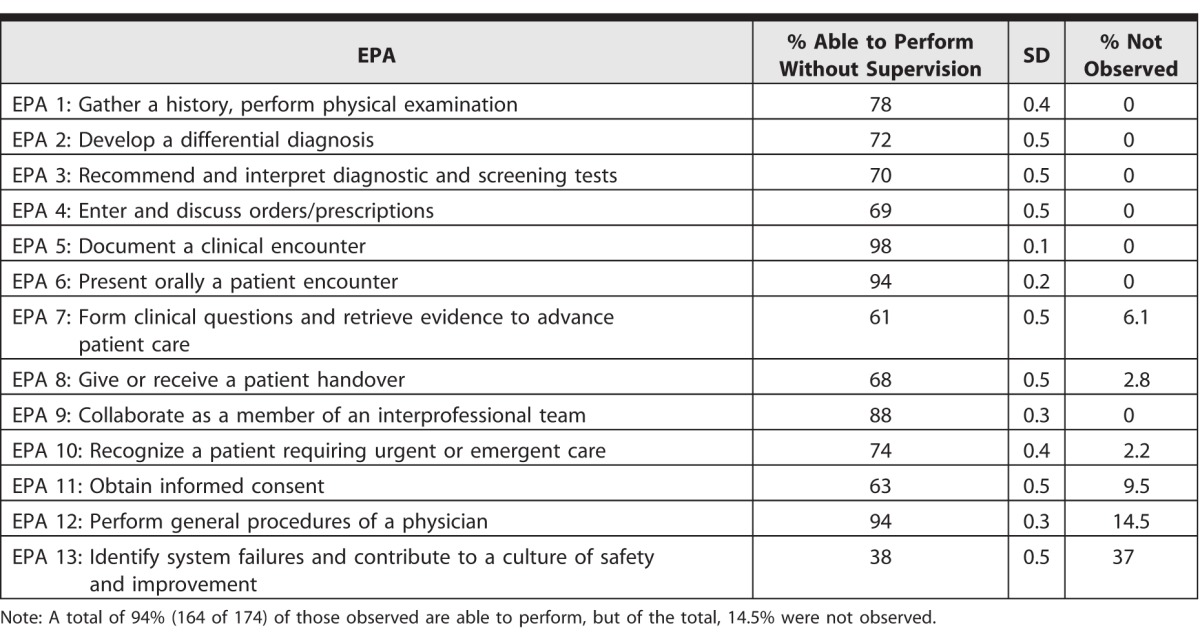

Program directors completed surveys on 204 of 328 PGY-1 residents (62%). Table 1 depicts the number of EPA assessments of residents completed by program directors by specialty. Table 2 depicts the program director ratings of residents in each of the EPAs. The EPAs that program directors felt residents were most adequately prepared for were EPA 5 (documentation), EPA 6 (oral presentation), EPA 9 (teamwork), and EPA 12 (procedures), with program directors reporting 98% (200 of 204), 94% (192 of 204), 88% (180 of 204), and 94% (164 of 174) of residents being adequately prepared, respectively. In addition, program directors reported that at least 70% of residents were prepared in EPA 1 (history and physical), EPA 2 (differential diagnosis), EPA 3 (recommend and interpret tests), and EPA 10 (recognize urgent or emergent care).

Table 1. Number of Entrustable Professional Activity Assessments of Residents by Specialty

Table 2. Program Director Entrustable Professional Activity (EPA) Ratings

The EPAs for which program directors felt residents were least prepared were EPA 4 (orders and prescriptions), EPA 7 (form clinical questions), EPA 8 (handovers), EPA 11 (consent), and EPA 13 (safety). Fewer than 70% of residents were rated as adequately prepared for these EPAs. The lowest rating was given to EPA 13 (patient safety), with program directors reporting that only 38% of PGY-1 residents (49 of 129) were adequately prepared. They also reported that 37% of the residents (75 of 204) had not been observed in this EPA. Furthermore, program directors reported only 61% of residents (117 of 192) as adequately prepared in EPA 7 (form clinical questions), while 94% of residents (192 of 204) had been observed performing this EPA.
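The percentages in this paragraph use two different denominators: the share rated adequately prepared is taken over the residents who were observed, while the share observed (or not observed) is taken over all 204 surveyed residents. The following is a minimal sketch of that arithmetic, using only counts reported above; the function name `epa_summary` and its field names are illustrative and do not come from the study.

```python
def epa_summary(prepared, observed, total):
    """Summarize one EPA from raw counts.

    prepared: residents rated adequately prepared
    observed: residents the program director was able to rate
    total:    residents with a completed survey
    """
    if not 0 <= prepared <= observed <= total or observed == 0:
        raise ValueError("expected 0 <= prepared <= observed <= total, observed > 0")
    not_observed = total - observed
    return {
        # Preparedness is reported among observed residents only.
        "prepared_pct": round(100 * prepared / observed),
        # Observation rates are reported among all surveyed residents.
        "observed_pct": round(100 * observed / total),
        "not_observed": not_observed,
        "not_observed_pct": round(100 * not_observed / total),
    }


# Counts taken from the Results text for EPA 13 and EPA 7.
epa13 = epa_summary(prepared=49, observed=129, total=204)
epa7 = epa_summary(prepared=117, observed=192, total=204)

print("EPA 13:", epa13)  # prepared 38%, not observed 75 (37%)
print("EPA 7:", epa7)    # prepared 61%, observed 94%
```

Keeping the two denominators separate matters when comparing EPAs: a low preparedness percentage for EPA 13 rests on far fewer observed residents than the percentage for EPA 7.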

Program directors' ratings on the 13 EPAs were aggregated and compared with USMLE Step 1 and Step 2 CK scores. The average Step 1 score was 238; the average Step 2 CK score was 248. EPA ratings and USMLE Step 1 scores were slightly negatively correlated (r(101) = −0.23, P = .031), as were EPA ratings and USMLE Step 2 CK scores (r(101) = −0.23, P = .031).
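For readers less familiar with the r(df) notation, the sketch below shows one way an aggregated EPA rating could be correlated with an examination score. It rests on several assumptions that are not stated in the study: the aggregation rule (the share of observed EPAs rated as requiring only general supervision) is hypothetical, the function names are illustrative, the toy records are invented and are not study data, and the degrees of freedom are taken as n − 2, the usual convention for a Pearson correlation.

```python
from math import sqrt


def aggregate_epa(ratings):
    """Share of observed EPAs rated 'general' (hypothetical aggregation rule)."""
    observed = [rating for rating in ratings if rating != "not_observed"]
    if not observed:
        return None  # nothing to aggregate for this resident
    return sum(rating == "general" for rating in observed) / len(observed)


def pearson_r(xs, ys):
    """Return (r, df) for paired samples, with df = n - 2."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need at least 3 paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / sqrt(sxx * syy), n - 2


# Invented toy records (NOT study data): (EPA ratings, examination score).
toy_residents = [
    (["general", "general", "direct", "not_observed"], 225),
    (["general", "direct", "direct", "direct"], 250),
    (["general", "general", "general", "general"], 232),
    (["direct", "direct", "not_observed", "general"], 244),
    (["general", "general", "general", "direct"], 238),
]

pairs = [(aggregate_epa(ratings), score) for ratings, score in toy_residents]
pairs = [(agg, score) for agg, score in pairs if agg is not None]
r, df = pearson_r([agg for agg, _ in pairs], [score for _, score in pairs])
print(f"r({df}) = {r:.2f}")
```

Under the n − 2 convention assumed here, r(101) would correspond to 103 residents with both an aggregated EPA rating and a reported score.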

Discussion

Program directors in our study perceived that a significant percentage of residents were not adequately prepared in several of the EPAs, specifically EPA 4 (orders and prescriptions), EPA 7 (form clinical questions), EPA 8 (handovers), EPA 11 (informed consent), and EPA 13 (patient safety). Interestingly, we found little to no relationship between USMLE scores and EPA ratings.

The findings showed that EPA 13 (patient safety) is one of the most challenging to assess, with 37% of residents not assessed on this EPA. Program directors also reported that most of the residents were not able to recognize a system failure without faculty guidance, underscoring the importance of teaching and assessing this EPA in undergraduate medical education and in residency.

When program directors were asked for possible reasons for residents' lack of preparation for EPA 7 (form a clinical question), responses ranged from residents relying on online reviews and not using primary literature to the lack of modeling from senior residents and faculty. Because this skill is routinely emphasized during medical school, the low program director confidence rate is concerning and may indicate a need for greater emphasis on summative assessment of this EPA in undergraduate medical education.

Program directors also perceived EPA 4 (orders and prescriptions), EPA 8 (handovers), and EPA 11 (informed consent) as relative performance weaknesses in their PGY-1 residents. Entering orders and writing prescriptions have been made more challenging for medical students since the introduction of the electronic health record, which reduces their opportunities for entering orders or writing prescriptions.8 Handovers are difficult to assess in medical students because they may not have had the opportunity to perform them, or they may not have been observed by faculty who could provide instruction and feedback. Finally, obtaining informed consent from a patient is something that is usually not done by a student. In essence, students may not have had in-depth exposure to these EPAs unless they participated in a subinternship with a fair amount of autonomy. We did not ascertain the extent to which residents participated in subinternships during medical school. This information would have provided insight as to the value of subinternships in preparation for residency.

Pereira et al9 surveyed more than 20 000 internal medicine residents and reported that subinternships were the most valuable fourth-year medical school courses for preparing them for internship. If the AAMC EPAs are considered a requirement for entering residency, medical schools need to provide opportunities for medical students to perform and practice them under supervision. If that is not feasible, simulated scenarios or capstone projects should be instituted to allow all students to learn and be assessed on these skills.

Our findings are consistent with Lindeman et al,7 who reported that program directors lacked confidence in surgical residents' performance of EPAs. We noted with interest the slight negative correlation between EPA performance and USMLE Step 1 and Step 2 scores. The USMLE tests knowledge, while EPAs assess skills. Although the two measures capture different components of competency, the slight negative correlation between USMLE scores and EPA attainment should alert programs to use a more holistic selection process for residents that includes skills assessment.

Limitations of this study include that it took place in a single health care organization, reducing generalizability. We also did not identify the type of medical school each resident attended to see whether program director responses differed by school type. Finally, several of the EPAs have multiple components, and it is possible that residents may be competent in selected, but not all, aspects of an EPA.

Based on our findings, we suggest that residency programs may be well served by having fourth-year medical students engage in a capstone EPA assessment prior to graduation. This assessment could be followed by a supplementary, post-Match Medical Student Performance Evaluation addendum with EPA assessment data. Such an assessment would also allow medical schools to evaluate their curricula to ensure students obtain the necessary training and practice of the core EPAs to ensure adequate preparation for residency. Our next study will examine the correlation between student class ranking on the Medical Student Performance Evaluation and competency in the EPAs.

Box. Core Entrustable Professional Activities (EPAs) for Entering Residency

EPA 1: Gather a history and perform a physical examination

EPA 2: Prioritize a differential diagnosis following a clinical encounter

EPA 3: Recommend and interpret common diagnostic and screening tests

EPA 4: Enter and discuss orders and prescriptions

EPA 5: Document a clinical encounter in the patient record

EPA 6: Provide an oral presentation of a clinical encounter

EPA 7: Form clinical questions and retrieve evidence to advance patient care

EPA 8: Give or receive a patient handover to transition care responsibility

EPA 9: Collaborate as a member of an interprofessional team

EPA 10: Recognize a patient requiring urgent or emergent care and initiate evaluation and management

EPA 11: Obtain informed consent for tests and/or procedures

EPA 12: Perform general procedures of a physician

EPA 13: Identify system failures and contribute to a culture of safety and improvement

Conclusion

This study was conducted in a large and diverse health care organization, and demonstrated that residency program directors do not consider PGY-1 residents prepared for multiple AAMC core EPAs on day 1 of residency. These include order writing, forming clinical questions, handoffs, informed consent, and promoting a culture of safety. Most of these EPAs are among the more challenging to observe and assess competence for in medical students. Lastly, we found little to no relationship between USMLE scores and EPA ratings.

References

1. Association of American Medical Colleges. Core entrustable professional activities for entering residency (updated). https://www.mededportal.org/icollaborative/resource/887. Accessed July 31, 2017.

2. Accreditation Council for Graduate Medical Education. Common program requirements. http://www.acgme.org/What-We-Do/Accreditation/Common-Program-Requirements. Accessed July 31, 2017.

3. Accreditation Council for Graduate Medical Education. Milestones. http://www.acgme.org/What-We-Do/Accreditation/Milestones/Overview. Accessed July 31, 2017.

4. ten Cate O. Entrustability of professional activities and competency-based training. Med Educ. 2005;39(12):1176–1177.

5. ten Cate O. Nuts and bolts of entrustable professional activities. J Grad Med Educ. 2013;5(1):157–158.

6. ten Cate O, Scheele F. Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med. 2007;82(6):542–547.

7. Lindeman BM, Sacks BC, Lipsett PA. Graduating students' and surgery program directors' views of the Association of American Medical Colleges Core Entrustable Professional Activities for entering residency: where are the gaps? J Surg Educ. 2015;72(6):e184–e192.

8. Welcher MC, Hersh W, Takesue B, et al. Barriers to medical students' electronic health record access can impede their preparedness for practice. Acad Med. July 25, 2017. Epub ahead of print.

9. Pereira AG, Harrell HE, Weissman A, et al. Important skills for internship and the fourth-year medical school courses to acquire them: a national survey of internal medicine residents. Acad Med. 2016;91(6):821–826.