ABSTRACT

Background

In-service training examinations (ITEs) are used to assess residents across specialties. However, it is not clear how they are integrated with the Accreditation Council for Graduate Medical Education Milestones and competencies.

Objective

This study explored the distribution of specialty-specific milestones and competencies in ITEs for plastic surgery and orthopaedic surgery.

Methods

Complete in-service training examinations were publicly available only for plastic surgery (Plastic Surgery In-service Training Examination [PSITE]) and orthopaedic surgery (Orthopaedic In-training Examination [OITE]). Questions on the PSITE for 2014–2016 and the OITE for 2013–2015 were mapped to the specialty-specific milestones and the 6 competencies.

Results

There was an uneven distribution of milestones and competencies in ITE questions. Nine of the 36 Plastic Surgery Milestones accounted for 52% (341 of 650) of PSITE questions, and 3 were not tested. Of the 41 Orthopaedic Surgery Milestones, 7 accounted for 51% (201 of 394) of coded OITE questions, and 5 were not tested. Among the competencies, patient care was the most common (PSITE = 62% [403 of 650]; OITE = 59% [233 of 394]), followed by medical knowledge (PSITE = 34% [222 of 650]; OITE = 31% [124 of 394]). The remaining 4 competencies together accounted for a smaller share that differed between the 2 specialties (PSITE = 4% [25 of 650]; OITE = 9% [37 of 394]).

Conclusions

In both specialties, a minority of the milestones accounted for slightly more than half of ITE questions, and the ITEs focused predominantly on patient care and medical knowledge competencies.

Introduction

The 6 competencies were implemented by the Accreditation Council for Graduate Medical Education (ACGME) in 1999, followed by the development of the Next Accreditation System and the adoption of competency-based assessments in the Milestone Project in 2013 and 2014.1,2

The milestones for each specialty were developed with input from key stakeholders, including Review Committees, the member boards of the American Board of Medical Specialties, faculty, and residents. The milestones reflect the knowledge and skill competencies expected of trainees in each specialty. Evidence of validity and reliability has been reported for the milestones in some of the core specialties.3,4

An important evaluation tool used across specialties is the annual in-service training examination (ITE). These multiple-choice examinations are advantageous in that they assess specialty content areas, have high reliability, and are graded through an automated system.5–7 Yet, studies have shown variable correlation between ITE performance and both clinical performance and certifying examination results.8–11 Assessment methods such as direct observation, clinical simulation, and multisource assessments such as the milestones provide important complementary information beyond the ITE.12–14

With the implementation of milestones and competencies, ITEs are now accompanied by competency-based systems that consolidate resident assessments and contribute to a more complete picture of resident performance. However, although residents receive formative evaluations from both ITEs and milestone-based assessments, no existing method relates ITE performance to competency-based assessments.

We aimed to determine the distribution of milestones and competencies on ITEs, and to help integrate and align ITEs within the framework of competency-based education.

Methods

We identified ACGME specialties that publish the entire content of their ITEs, including answer keys and explanations. Although several specialties provided partial information, only plastic surgery (the Plastic Surgery In-service Training Examination, PSITE) and orthopaedic surgery (the Orthopaedic In-training Examination, OITE) made their complete examinations available.

We independently reviewed the questions, performed content analysis to define themes in question stems, and used coding rules to assign milestones within this framework.15,16 Because each milestone has an associated competency, the following rules were used to assign a competency: (1) questions that required management of patient disease, procedural complications, decision-making, or interpreting clinical and diagnostic information fell under the competency of patient care; (2) questions that required anatomic knowledge, knowledge of guidelines and classification systems, or knowledge of genetic associations and syndromic constellations were considered medical knowledge; and (3) for the remaining competencies, the milestones were specific enough to determine the appropriate assignment from the question stem. Under these coding rules, no ITE question was assigned more than 1 milestone.

Questions on the PSITE and OITE were mapped to the 36 Plastic Surgery Milestones and the 41 Orthopaedic Surgery Milestones, respectively.17,18 The 2016 PSITE consisted of 250 questions, and the 2014 and 2015 PSITEs each had 200 questions. Ten questions on the 2014 OITE were not published and were excluded from scoring. Because the Orthopaedic Surgery Milestones are disease specific, a number of OITE questions tested a pathology not covered by any milestone; these could not be coded to a milestone and were coded as other.
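The coding scheme described above can be expressed as a small data model. This is a minimal sketch under stated assumptions, not the authors' coding instrument (the coding itself was done by manual review): milestone labels such as "PC-1" and question identifiers are hypothetical, and the sketch assumes, as the Methods state, that each milestone carries exactly 1 competency and that each question receives at most 1 milestone, with unmapped questions coded as other.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# The 6 ACGME core competencies.
COMPETENCIES = frozenset({
    "patient care",
    "medical knowledge",
    "practice-based learning and improvement",
    "interpersonal and communication skills",
    "professionalism",
    "systems-based practice",
})


@dataclass(frozen=True)
class Milestone:
    milestone_id: str  # hypothetical label, e.g. "PC-1"
    competency: str    # every milestone belongs to exactly 1 competency

    def __post_init__(self) -> None:
        if self.competency not in COMPETENCIES:
            raise ValueError(f"unknown competency: {self.competency!r}")


@dataclass(frozen=True)
class CodedQuestion:
    question_id: str  # hypothetical identifier, e.g. "2015-017"
    # A single optional field encodes the "at most 1 milestone" rule;
    # None stands for a question coded as "other".
    milestone: Optional[Milestone]

    @property
    def competency(self) -> Optional[str]:
        # The competency is inherited from the assigned milestone.
        return None if self.milestone is None else self.milestone.competency
```

Storing a single optional milestone, rather than a list, makes a question with 2 milestones impossible to represent, which mirrors the coding outcome reported above.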

This study was determined exempt from the requirement for Institutional Review Board approval.

Results

Milestones

There was an uneven distribution of milestones across both ITEs. Of the 36 Plastic Surgery Milestones, 9 accounted for 52% (341 of 650) of examination questions, and 3 were not represented. On the OITE, 7 of 41 Orthopaedic Surgery Milestones accounted for 51% (201 of 394) of questions, and 5 milestones were not represented. Detailed information for both specialties is provided as online supplemental material.
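A statement such as "9 of 36 milestones accounted for 52% of questions" summarizes how concentrated the question counts are. The sketch below, assuming per-milestone question counts that include zeros for untested milestones, finds the smallest set of most frequently tested milestones that covers more than half of all coded questions. The Methods do not say exactly how these figures were derived, so the greedy top-k rule and the function names are hypothetical.

```python
from __future__ import annotations


def milestones_covering_half(counts: dict[str, int]) -> tuple[int, int, int]:
    """Return (k, covered, total), where k is the smallest number of milestones
    whose combined question count exceeds half of all coded questions."""
    total = sum(counts.values())
    covered = 0
    # Take the most frequently tested milestones first.
    for k, n in enumerate(sorted(counts.values(), reverse=True), start=1):
        covered += n
        if 2 * covered > total:  # strictly more than 50%
            return k, covered, total
    return len(counts), covered, total  # only reached when total == 0


def untested_milestones(counts: dict[str, int]) -> list[str]:
    """Milestones to which no question was coded."""
    return sorted(m for m, n in counts.items() if n == 0)
```

For example, with toy counts {"A": 5, "B": 3, "C": 1, "D": 1, "E": 0} (illustrative values, not study data), `milestones_covering_half` returns (2, 8, 10) and `untested_milestones` returns ["E"].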

Competencies

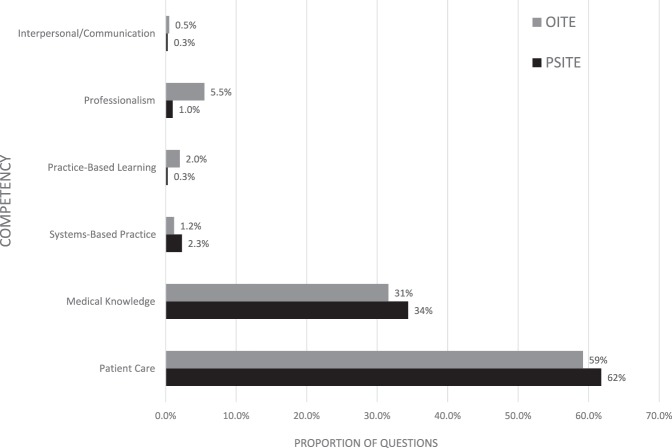

Of the competencies, patient care was tested by 62% (403 of 650) of PSITE and 59% (233 of 394) of OITE questions; medical knowledge was tested by 34% (222 of 650) of PSITE and 31% (124 of 394) of OITE questions. Interpersonal and communication skills had the lowest representation on both the PSITE and the OITE (0.3% and 0.5%, respectively). Patient care and medical knowledge were represented in similar proportions on the 2 examinations, whereas the representation of the other competencies differed more between them (Figure 1).

Figure 1.

Competency Representation on the PSITE and OITE

Note: Representation (%) of competencies on the Plastic Surgery In-service Training Examination (PSITE) and the Orthopaedic In-training Examination (OITE).

Our analysis found that the ITEs for plastic surgery and orthopaedic surgery focus primarily on patient care and medical knowledge. Combined, these 2 competencies represented 96% (625 of 650) of PSITE and 91% (357 of 394) of OITE questions. The OITEs included more than twice the proportion of questions emphasizing the other 4 competencies (OITE = 9%; PSITE = 4%), in part because the 2013 OITE had a dedicated section covering professionalism and systems-based practice.
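As an arithmetic check, the reported counts can be recomputed into the percentages given in the Abstract and Results. The counts are taken directly from this article; the grouping label "other 4" for the remaining competencies and the helper names are assumptions of this sketch.

```python
from __future__ import annotations

# Question counts by competency group, as reported in the Results.
REPORTED = {
    "PSITE": {"patient care": 403, "medical knowledge": 222, "other 4": 25},
    "OITE": {"patient care": 233, "medical knowledge": 124, "other 4": 37},
}


def share(part: int, total: int) -> float:
    """Percentage of total, unrounded."""
    return 100 * part / total


def summarize(counts: dict[str, int]) -> dict[str, int]:
    """Rounded percentage per group, plus patient care + medical knowledge combined."""
    total = sum(counts.values())
    summary = {group: round(share(n, total)) for group, n in counts.items()}
    combined = counts["patient care"] + counts["medical knowledge"]
    summary["PC + MK"] = round(share(combined, total))
    return summary


for exam, counts in REPORTED.items():
    print(exam, sum(counts.values()), summarize(counts))
# PSITE 650 {'patient care': 62, 'medical knowledge': 34, 'other 4': 4, 'PC + MK': 96}
# OITE 394 {'patient care': 59, 'medical knowledge': 31, 'other 4': 9, 'PC + MK': 91}
```

The totals (650 and 394) match the analyzed question counts, and the unrounded share of the other 4 competencies on the OITE (about 9.4%) is roughly 2.4 times that on the PSITE (about 3.8%).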

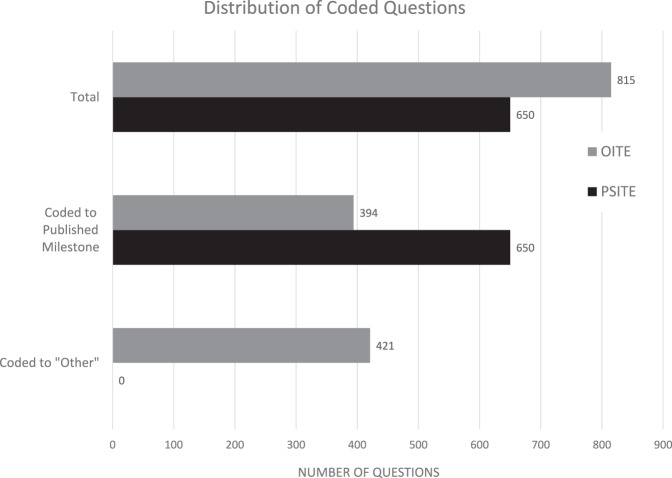

Our analysis also revealed an uneven distribution of milestones in the ITEs: a quarter or fewer of the milestones (9 of 36 in plastic surgery; 7 of 41 in orthopaedic surgery) accounted for more than 50% of examination questions, while some milestones were not tested. Fewer than half (48%, 394 of 815) of OITE questions mapped to any Orthopaedic Surgery Milestone; the remaining 421 were coded as other (Figure 2).

Figure 2.

Coding Questions on In-service Training Examinations

Note: Number of questions available for analysis and number that could be coded to a specialty-specific milestone on the Plastic Surgery In-service Training Examination (PSITE) and the Orthopaedic In-training Examination (OITE).

Discussion

Designing ITEs to represent the 6 competencies may enhance their utility as evaluative metrics, and aligning ITE domains with the milestones may improve integration of these formative tests with competency-based education. Because the milestones were developed and evaluated for evidence of validity through a labor-intensive process, they could serve as a useful blueprint for writing ITEs.19–24 With such a framework, ITE scoring could be better integrated with milestone-based clinical evaluations, making it possible to compare faculty-rated milestone performance with objective ITE results. A step toward this goal would be to crosswalk milestones and competencies with ITE questions.

This study has limitations. First, it reviewed only 3 years of ITEs from the 2 specialties that published complete examinations, so the findings may not generalize to other specialties. Second, because reviewers were not blinded to the study questions, assigning milestones to ITE questions may have involved some subjectivity.

To better define the role of ITEs in competency-based education, future studies could compare resident milestone evaluations with milestone-coded performance on the ITE. Tagging each ITE question with a milestone and competency would help show how ITE performance fits into a competency-based framework.

Conclusion

Despite the shift toward competency-based evaluation in residency training, ITEs for plastic surgery and orthopaedic surgery are not well integrated with ACGME competency-based assessment domains. In both specialties, a minority of the milestones accounted for slightly more than half of ITE questions, and the examinations focused predominantly on patient care and medical knowledge.

Supplementary Material

References

1. Batalden P, Leach D, Swing S, et al. General competencies and accreditation in graduate medical education. Health Aff (Millwood). 2002;21(5):103-111.

2. Nasca TJ, Philibert I, Brigham T, et al. The next GME accreditation system—rationale and benefits. N Engl J Med. 2012;366(11):1051-1056.

3. Beeson MS, Holmboe ES, Korte RC, et al. Initial validity analysis of the emergency medicine milestones. Acad Emerg Med. 2015;22(7):838-844.

4. McGrath MH. The plastic surgery milestone project. J Grad Med Educ. 2014;6(1 suppl 1):222-224.

5. Cantor AD, Eslick AN, Marsh EJ, et al. Multiple-choice tests stabilize access to marginal knowledge. Mem Cognit. 2015;43(2):193-205.

6. Bloch C. TH-F-201-00: writing good multiple choice questions. Med Phys. 2016;43(6):3902.

7. Little JL, Bjork EL. Optimizing multiple-choice tests as tools for learning. Mem Cognit. 2015;43(1):14-26.

8. Ryan JG, Barlas D, Pollack S. The relationship between faculty performance assessment and results on the in-training examination for residents in an emergency medicine training program. J Grad Med Educ. 2013;5(4):582-586.

9. Jones AT, Biester TW, Buyske J, et al. Using the American Board of Surgery In-Training Examination to predict board certification: a cautionary study. J Surg Educ. 2014;71(6):144-148.

10. Withiam-Leitch M, Olawaiye A. Resident performance on the in-training and board examinations in obstetrics and gynecology: implications for the ACGME outcome project. Teach Learn Med. 2008;20(2):136-142.

11. Juul D, Sexson SB, Brooks BA, et al. Relationship between performance on child and adolescent psychiatry in-training and certification examinations. J Grad Med Educ. 2013;5(2):262-266.

12. Epstein RM, Dannefer EF, Nofziger AC, et al. Comprehensive assessment of professional competence: the Rochester experiment. Teach Learn Med. 2004;16(2):186-196.

13. Van Der Vleuten CP. The assessment of professional competence: developments, research and practical implications. Adv Health Sci Educ Theory Pract. 1996;1(1):41-67.

14. Van der Vleuten CP, Norman GR, De Graaff E. Pitfalls in the pursuit of objectivity: issues of reliability. Med Educ. 1991;25(2):110-118.

15. Riff D, Lacy S, Fico F. Analyzing Media Messages: Using Quantitative Content Analysis in Research. 3rd ed. New York, NY: Routledge; 2014.

16. Krippendorff K. Content Analysis: An Introduction to its Methodology. 2nd ed. Thousand Oaks, CA: SAGE Publications Inc; 2004.

17. Accreditation Council for Graduate Medical Education; American Board of Plastic Surgery. The Plastic Surgery Milestone Project. July 2015. https://www.acgme.org/Portals/0/PDFs/Milestones/PlasticSurgeryMilestones.pdf. Accessed August 3, 2017.

18. Accreditation Council for Graduate Medical Education; American Board of Orthopaedic Surgery. The Orthopedic Surgery Milestone Project. July 2015. http://www.acgme.org/Portals/0/PDFs/Milestones/OrthopaedicSurgeryMilestones.pdf. Accessed August 3, 2017.

19. Angus S, Moriarty J, Nardino RJ, et al. Internal medicine residents' perspectives on receiving feedback in milestone format. J Grad Med Educ. 2015;7(2):220-224.

20. Meier AH, Gruessner A, Cooney RN. Using the ACGME milestones for resident self-evaluation and faculty engagement. J Surg Educ. 2016;73(6):e150-e157.

21. Swing SR, Clyman SG, Holmboe ES, et al. Advancing resident assessment in graduate medical education. J Grad Med Educ. 2009;1(2):278-286.

22. Schumacher DJ, Spector ND, Calaman S, et al. Putting the pediatrics milestones into practice: a consensus roadmap and resource analysis. Pediatrics. 2014;133(5):898-906.

23. Rooney DM, Tai BL, Sagher O, et al. Simulator and 2 tools: validation of performance measures from a novel neurosurgery simulation model using the current standards framework. Surgery. 2016;160(3):571-579.

24. Philibert I, Brigham T, Edgar L, et al. Organization of the educational milestones for use in the assessment of educational outcomes. J Grad Med Educ. 2014;6(1):177-182.
