Significance

How the motor system participates in auditory perception is unknown. In a magnetoencephalography experiment involving auditory temporal attention, we show that the left sensorimotor cortex encodes temporal predictions, which drive the precise temporal anticipation of forthcoming sensory inputs. This encoding is associated with bursts of beta (18–24 Hz) neural oscillations that are directed toward auditory regions. Our data also show that the production of overt movements improves the quality of temporal predictions and augments auditory task performance. These behavioral changes are associated with increased signaling of temporal predictions in right-lateralized frontoparietal associative regions. This study points to a covert form of auditory active sensing and emphasizes the fundamental role of motor brain areas and actual motor behavior in sensory processing.

Keywords: auditory perception, sensorimotor, magnetoencephalography, psychophysics, rhythm

Abstract

In behavior, action and perception are inherently interdependent. However, the actual mechanistic contributions of the motor system to sensory processing are unknown. We present neurophysiological evidence that the motor system is involved in predictive timing, a brain function that aligns temporal fluctuations of attention with the timing of events in a task-relevant stream, thus facilitating sensory selection and optimizing behavior. In a magnetoencephalography experiment involving auditory temporal attention, participants had to disentangle two streams of sound solely on the basis of endogenous temporal cues. We show that temporal predictions are encoded by interdependent delta and beta neural oscillations originating from the left sensorimotor cortex and directed toward auditory regions. We also found that overt rhythmic movements improved the quality of temporal predictions and sharpened the temporal selection of relevant auditory information. This behavioral and functional benefit was associated with increased signaling of temporal predictions in right-lateralized frontoparietal associative regions. In sum, this study points to a covert form of auditory active sensing. Our results emphasize the key role of motor brain areas in providing contextual temporal information to sensory regions, driving perceptual and behavioral selection.

Active sensing, the act of gathering perceptual information using motor sampling routines, is well described in all but the auditory sense (1–4). It refers to a dual mechanism of the motor system, which both controls the orienting of sensing organs (e.g., ocular saccades, whisking, sniffing) and provides contextual information to optimize the parsing of sensory signals, via top-down corollary discharges (5). In audition, bottom-up sensory processing is generally disconnected from movements. Moreover, motor acts do not cause inflow of auditory input, except when movements produce sounds (e.g., hand claps, musical instruments). However, top-down motor influences over auditory cortices have been well characterized during self-generated sounds (6, 7). Strikingly, auditory and motor systems strongly interact during speech or music perception (8–11). We recently proposed a theoretical framework, whereby a covert form of active sensing exists in the auditory domain, through which oscillatory influences from motor cortex modulate activity in auditory regions during passive perception (1, 12). This idea implies that the chief information provided by the motor system to sensory processing is contextual temporal information.

Notably, the motor system is the core amodal network supporting timing and time perception (13, 14). It is also involved in beat and rhythm processing during passive listening (9, 15–18). Further, beta (∼20 Hz) activity, the default oscillatory mode of the motor system (19, 20), is also related to the representation of temporal information (21–26). Time estimation could thus rely on the neural recycling of action circuits (27) and be implemented by internal, nonconscious simulation of movements in most ecological situations (28, 29). Following these conceptual lines, one may predict that efferent motor signaling conveys temporal prediction information, with behavioral benefits for anticipating future sensory events and facilitating their processing.

We recently developed a mechanistic behavioral account of auditory active sensing and showed that during temporal attending, overt rhythmic movements engage a top-down modulation that sharpens the temporal selection of auditory information (30). Crucially, we found that participants were able to disentangle two streams of sound solely on the basis of temporal cues. In the present study, we recorded magnetoencephalography (MEG) while participants performed this paradigm, in order to dissociate stimulus-driven from internally driven neural dynamics and to quantify the precision of temporal attention during auditory perception. We addressed three complementary questions. How are temporal predictions encoded? How do temporal predictions modulate auditory processing? Why do overt rhythmic movements improve the temporal precision of auditory attention?

Results

Behavioral Effects of Temporal Attention During Passive Listening.

We asked participants to categorize sequences of pure tones as higher or lower pitched, on average, than a reference frequency (30) (Methods). To drive rhythmic fluctuations in attention, we presented the tones (targets) in phase with a reference beat and in antiphase with irrelevant, yet physically indistinguishable, tones (distractors). Each trial started with the rhythmic presentation of four reference tones indicating both the reference frequency and beat (1.5 Hz), followed by a melody unfolding at 3 Hz (lasting ∼5.5 s), corresponding to the alternation of eight targets and eight distractors of variable frequencies, respectively, presented in quasi-phase and antiphase with the reference beat (Fig. 1A). This interleaved delivery of sensory events forced participants to use the reference beat to discriminate between targets and distractors [i.e., to maximize the integration of relevant sensory cues (targets), while minimizing the interference from irrelevant ones (distractors)]. This protocol (30) ensured that their attentional focus was temporally modulated over an extended time period. Thus, during the 3-Hz melody (our period of interest), while sensory events were delivered at a 3-Hz rate, temporal attention fluctuated at 1.5 Hz, effectively dissociating stimulus-driven and internally driven temporal dynamics (Fig. 1B). The task consisted of two conditions: In the listen condition, participants performed the task while staying completely still for the entire duration of the trial, and in the tracking condition, they performed the task while rhythmically touching a pad with their left index finger, in phase with the reference beat.
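To make the timing structure concrete, here is a minimal Python sketch of one idealized trial. It assumes perfectly isochronous onsets (the actual sequences were quasi-rhythmic, and that jitter is not modeled) and assumes that the first target falls exactly one beat period after the last reference tone. The helpers `trial_onsets` and `beat_phase` are hypothetical illustrations, not the authors' stimulus code. The sketch shows why targets sit at beat phase 0 and distractors at phase π, while tones as a whole arrive every 333 ms (3 Hz).

```python
import math


def trial_onsets(beat_hz=1.5, n_ref=4, n_pairs=8):
    """Return onset times (s) of reference, target and distractor tones.

    Idealized, jitter-free timing; the first target is assumed to fall
    one beat period after the last reference tone.
    """
    period = 1.0 / beat_hz                          # 0.667 s at 1.5 Hz
    refs = [i * period for i in range(n_ref)]       # references mark the beat
    first_target = n_ref * period                   # assumption (see docstring)
    targets = [first_target + i * period for i in range(n_pairs)]  # in phase
    distractors = [t + period / 2.0 for t in targets]              # antiphase
    return refs, targets, distractors


def beat_phase(t, beat_hz=1.5):
    """Phase (rad, in [0, 2*pi)) of time t relative to the reference beat."""
    return (2.0 * math.pi * beat_hz * t) % (2.0 * math.pi)


refs, targets, distractors = trial_onsets()
melody = sorted(targets + distractors)
print(len(refs) + len(melody))                                    # 20
print(round(melody[1] - melody[0], 3))                            # 0.333
print([round(math.cos(beat_phase(t))) for t in targets[:2]])      # [1, 1]
print([round(math.cos(beat_phase(t))) for t in distractors[:2]])  # [-1, -1]
```

Using the cosine of the phase, rather than the raw phase, avoids spurious mismatches when floating-point rounding places an in-phase target just below 2π instead of at 0.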

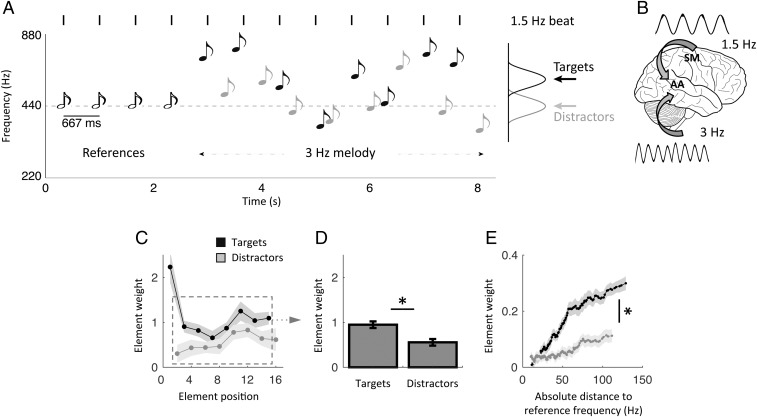

Fig. 1.

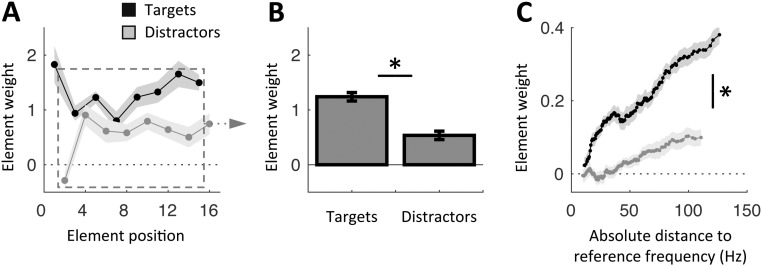

Experimental design and behavioral results in the listen condition. (A) Quasi-rhythmic sequences of 20 pure tones were presented binaurally on each trial. Four reference tones preceded an alternation of eight target and eight distractor tones of variable frequencies. Targets occurred in phase with the preceding references, whereas distractors occurred in antiphase. Participants had to decide whether the mean frequency of targets was higher or lower than the reference frequency. Participants performed the task without moving before the end of the sequence in the listen condition and while expressing the reference beat by moving their left index finger in the (motor) tracking condition. The 3-Hz melody is the period of interest for all analyses. (B) In this design, neural activity dedicated to stimulus or temporal prediction processing is dissociable based on its temporal dynamics (3 Hz vs. 1.5 Hz, respectively). (C–E) Behavioral results in the listen condition. (C) Element-weight profiles for targets (black) and distractors (gray) as a function of element position in the sequence. (D) Averaged contribution of targets and distractors to the decision. Element weights were pooled separately across targets and distractors, excluding the first target and last distractor (dashed square in C). (E) Element-weight profiles for targets (black) and distractors (gray) as a function of tone frequency. Shaded error bars indicate SEM. An asterisk indicates a significant difference (n = 19; paired t tests, P < 0.05).

The task in the listen condition was relatively difficult, with an average categorization performance of 73%. We quantified the relative contribution of each tone to the decision (Methods). We observed that the first target had a considerable influence on the decision, due to its leading position at the beginning of each melody sequence (Fig. 1C). To properly investigate the effects of temporal attention, we therefore excluded this particular event (and the last distractor, for the same reason) from all subsequent analyses. Participants were able to assign greater weight to targets than distractors in their decision (repeated-measures ANOVA of two stimulus categories × seven elements; main effect of stimulus category: F1,18 = 7.1, P = 0.016; Fig. 1 C and D). Moreover, as predicted by the logistic regression model, the influence a given target wielded on the subsequent decision scaled parametrically with its absolute distance from the reference frequency. This effect (i.e., the slope of the function) was significantly reduced for distractors (paired t test: t18 = 5.2, P < 0.001; Fig. 1E). In other words, participants were able to sustain and orient their temporal attention at a 1.5-Hz rate to segregate targets from distractors and maximize the integration of targets.
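The element weights above come from a logistic regression of choices on tone-by-tone evidence. The Methods are not reproduced in this section, so the following is only a minimal sketch under stated assumptions: each melody tone is coded as its frequency offset from the reference (hypothetical units), choices are "higher" (1) or "lower" (0), and the simulated observer and its weights are invented for illustration. The fit uses plain Newton-Raphson maximum likelihood in NumPy; pooling then drops the first target and last distractor, leaving seven weights per category as in the ANOVA.

```python
import numpy as np


def fit_logistic(X, y, n_iter=25):
    """Maximum-likelihood logistic regression (with intercept) via Newton-Raphson."""
    X1 = np.column_stack([np.ones(len(X)), X])
    w = np.zeros(X1.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X1 @ w))               # predicted P("higher")
        grad = X1.T @ (y - p)                            # gradient of log-likelihood
        hess = X1.T @ (X1 * (p * (1.0 - p))[:, None])    # Fisher information
        w += np.linalg.solve(hess, grad)                 # Newton step
    return w


# Hypothetical simulated observer: 16 melody tones per trial, alternating
# target/distractor, each coded as a frequency offset from the reference.
rng = np.random.default_rng(0)
n_trials, n_tones = 2000, 16
offsets = rng.normal(0.0, 1.0, size=(n_trials, n_tones))
true_w = np.tile([1.0, 0.3], n_tones // 2)              # targets weigh more
p_high = 1.0 / (1.0 + np.exp(-offsets @ true_w))
choices = (rng.random(n_trials) < p_high).astype(float)

weights = fit_logistic(offsets, choices)[1:]            # drop the intercept
target_w = weights[0::2][1:]                             # exclude the first target
distractor_w = weights[1::2][:-1]                        # exclude the last distractor
print(target_w.mean(), distractor_w.mean())
```

In a real analysis, one such fit per participant would yield the per-position weights entering the category × element ANOVA.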

Neural Correlates of the 3-Hz Melody.

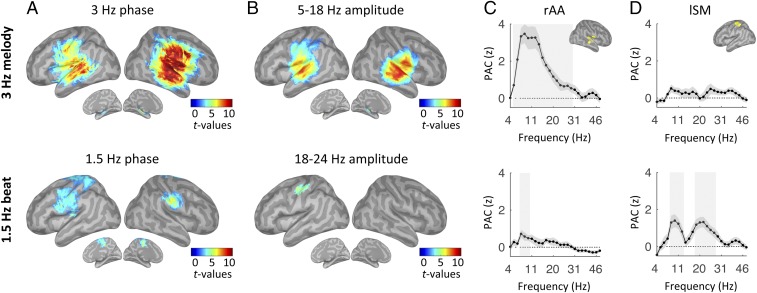

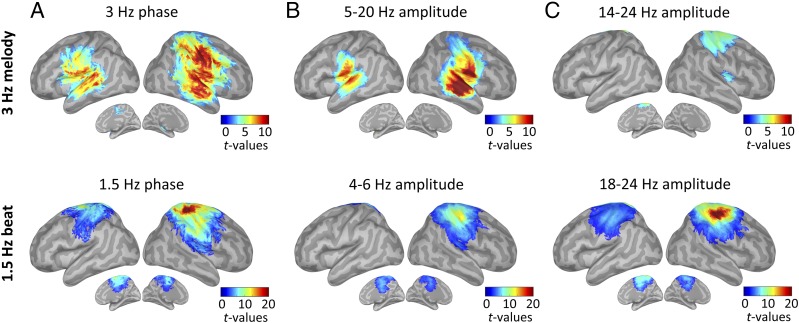

The 3-Hz melody corresponded to a stream of tones presented in quasi-rhythmic fashion (Fig. 1A). We estimated the neural response to this auditory stream by investigating those regions whose oscillatory activity was synchronous to the melody. We relied on two complementary measures: the phase-phase coupling [phase-locking value (PLV)] and phase-amplitude coupling (PAC) between MEG source signals from correct trials and a signal template of the 3-Hz melody (Methods). The dynamics of the 3-Hz melody principally correlated with neural oscillatory activity in bilateral auditory regions, both at 3 Hz (phase coupling) and in the 5- to 18-Hz frequency range (amplitude coupling) [one-tail t tests against zero, P < 0.05, false discovery rate (FDR)-corrected; Fig. 2 A–C, Top].
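Phase-phase coupling here is a phase-locking value (PLV) between a source signal and a stimulus template. The construction of the melody template is described in Methods, which are not shown; the sketch below simply assumes a 3-Hz cosine template and uses simulated "source" data. It band-pass filters with a zero-phase Butterworth filter (`scipy.signal.butter`, `sosfiltfilt`), extracts instantaneous phase with `scipy.signal.hilbert`, and takes the length of the mean unit phasor of the phase difference, pooled over trials and samples.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt


def band_phase(x, fs, f_lo, f_hi, order=3):
    """Instantaneous phase of x (last axis) after zero-phase band-pass filtering."""
    sos = butter(order, [f_lo, f_hi], btype="bandpass", fs=fs, output="sos")
    return np.angle(hilbert(sosfiltfilt(sos, x, axis=-1), axis=-1))


def plv(phase_a, phase_b):
    """Phase-locking value: length of the mean unit phasor of the phase difference."""
    return float(np.abs(np.mean(np.exp(1j * (phase_a - phase_b)))))


fs, n_trials = 200.0, 40
t = np.arange(0.0, 5.5, 1.0 / fs)                  # ~5.5-s melody window
template = np.cos(2 * np.pi * 3.0 * t)             # assumed 3-Hz template
rng = np.random.default_rng(1)
locked = np.cos(2 * np.pi * 3.0 * t + 0.8) + rng.normal(0, 1, (n_trials, t.size))
unrelated = rng.normal(0, 1, (n_trials, t.size))

keep = slice(int(fs), t.size - int(fs))            # discard 1 s of filter edges
ph_tpl = band_phase(template, fs, 2.5, 3.5)[keep]
ph_locked = band_phase(locked, fs, 2.5, 3.5)[:, keep]
ph_noise = band_phase(unrelated, fs, 2.5, 3.5)[:, keep]
print(plv(ph_locked, ph_tpl), plv(ph_noise, ph_tpl))
```

A constant phase offset (0.8 rad here) does not lower the PLV; only trial-to-trial or moment-to-moment variability in the phase difference does.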

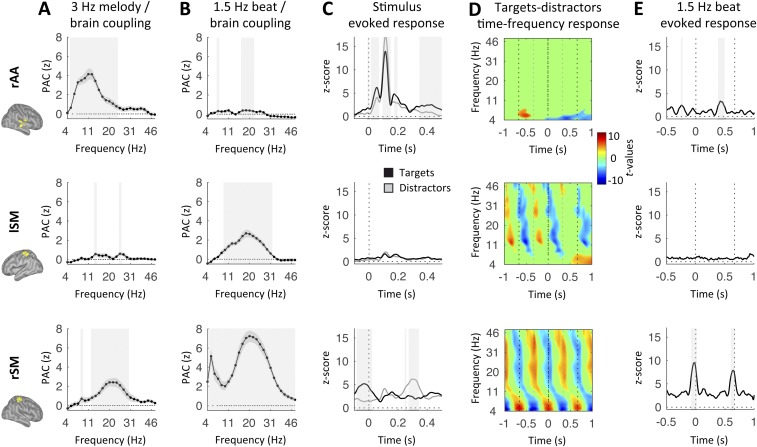

Fig. 2.

Listen: Neural correlates of the 3-Hz melody (Top) and covert temporal (Bottom) predictions. (A and B) Whole-brain statistical maps of phase-phase coupling (A, PLV) and PAC (B) estimates for listen correct trials. Coupling estimates were computed, respectively, between the phase of the 3-Hz melody (Top) or 1.5-Hz beat dynamics (Bottom) and specific brain rhythms. Details of the PAC estimates in rAA (C) and lSM (D) cortex in the range of 4–50 Hz are shown. Shaded error bars indicate SEM. Shaded vertical bars highlight significant effects [n = 19; one-tail t tests against zero (A and B) or paired t tests (C and D); P < 0.05, FDR-corrected].

Core Substrate of Covert Temporal Predictions.

While listening to the 3-Hz melody, participants modulated their attention temporally by relying on covert temporal predictions produced by an internal model approximating the 1.5-Hz beat cued by the four reference tones (Fig. 1 A and B). We estimated the neural correlates of temporal predictions by examining where neural oscillatory activity fluctuated at the beat frequency (i.e., 1.5 Hz) during presentation of the 3-Hz melody. Thus, we computed PLV and PAC between MEG source signals from correct trials and a signal template of the 1.5-Hz reference beat of interest (Methods).

Whole-brain statistical maps of phase-phase coupling revealed that a network of sensorimotor regions, bilateral over the pre- and postcentral areas, tracked internal temporal predictions (one-tail t tests against zero, P < 0.05, FDR-corrected; Fig. 2A, Bottom). Moreover, PAC measures revealed the presence of beta bursting (18–24 Hz) at the delta rate in the left sensorimotor (lSM) cortex (one-tail t tests against zero, P < 0.05, FDR-corrected; Fig. 2B, Bottom).
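"Beta bursting at the delta rate" means that the beta-band amplitude envelope rises and falls with the phase of the 1.5-Hz beat. The exact PAC estimator is defined in Methods, which are not reproduced here; the sketch below uses the normalized mean vector length, one common estimator, with an assumed sinusoidal 1.5-Hz beat phase and simulated 21-Hz activity. It contrasts an envelope modulated at the beat rate with a steady one.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt


def beta_envelope(x, fs, f_lo=18.0, f_hi=24.0, order=4):
    """Amplitude envelope of x in the beta band (zero-phase filter + Hilbert)."""
    sos = butter(order, [f_lo, f_hi], btype="bandpass", fs=fs, output="sos")
    return np.abs(hilbert(sosfiltfilt(sos, x)))


def mean_vector_pac(amp, phase):
    """Normalized mean vector length |<A exp(i*phi)>| / <A>; 0 means no coupling."""
    return float(np.abs(np.mean(amp * np.exp(1j * phase))) / np.mean(amp))


fs = 500.0
t = np.arange(0.0, 20.0, 1.0 / fs)
phi_beat = 2 * np.pi * 1.5 * t                       # assumed 1.5-Hz beat phase
rng = np.random.default_rng(2)
carrier = np.cos(2 * np.pi * 21.0 * t)               # simulated beta rhythm
bursting = (1 + 0.8 * np.cos(phi_beat)) * carrier + rng.normal(0, 0.5, t.size)
steady = carrier + rng.normal(0, 0.5, t.size)

keep = slice(int(0.5 * fs), t.size - int(0.5 * fs))  # discard filter edges
pac_burst = mean_vector_pac(beta_envelope(bursting, fs)[keep], phi_beat[keep])
pac_steady = mean_vector_pac(beta_envelope(steady, fs)[keep], phi_beat[keep])
print(pac_burst, pac_steady)
```

The same function, fed with amplitude envelopes from successive frequency bands, would produce a PAC spectrum like those described for the 4–50 Hz range.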

Response Profile of the Right Associative Auditory and lSM Cortex During Passive Listening.

To further investigate these results, we analyzed the response profiles of two key regions: the right associative auditory (rAA) cortex and the lSM cortex, where the respective PAC values with the 3-Hz melody and the 1.5-Hz reference beat were found to be maximal. We first confirmed that PAC with the 3-Hz melody was significant in rAA cortex (5–30 Hz; t tests against zero, P < 0.05, FDR-corrected) but not lSM cortex (all P > 0.2, FDR-corrected), and peaked in the alpha range (7–14 Hz; Fig. 2 C and D, Top). Second, the 1.5-Hz beat was indeed phase-amplitude coupled to beta oscillations in lSM cortex (17–27 Hz; P < 0.05, FDR-corrected) but not rAA cortex (all P > 0.2, FDR-corrected; Fig. 2 C and D, Bottom). Additional coupling with the 1.5-Hz beat was also observed in the alpha range in both rAA and lSM cortex (at 7–10 Hz and 8–13 Hz, respectively; P < 0.05, FDR-corrected; Fig. 2 C and D, Bottom), but these results did not reach significance at the whole-brain level of analysis.
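Nearly every statistic in this section is "FDR-corrected" across frequencies or brain locations. The specific procedure is not stated in this excerpt; the sketch below assumes the widely used Benjamini-Hochberg step-up procedure and applies it to a hypothetical vector of p-values (for example, one per frequency bin of a PAC spectrum). Recent SciPy releases also offer `scipy.stats.false_discovery_control`, which returns BH-adjusted p-values rather than a mask.

```python
import numpy as np


def fdr_bh(pvals, alpha=0.05):
    """Benjamini-Hochberg step-up procedure; returns a boolean 'significant' mask."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    crit = alpha * np.arange(1, m + 1) / m     # (k / m) * alpha for the k-th smallest p
    passed = p[order] <= crit
    reject = np.zeros(m, dtype=bool)
    if passed.any():
        k = np.nonzero(passed)[0].max()        # largest rank satisfying the bound
        reject[order[: k + 1]] = True          # reject every p-value up to that rank
    return reject


# Hypothetical p-values, e.g., one per frequency bin
pvals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(fdr_bh(pvals).tolist())
# [True, True, False, False, False, False, False, False, False, False]
```

Note that 0.039 is below 0.05 yet not significant after correction, because its rank-specific threshold is 3/10 × 0.05 = 0.015.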

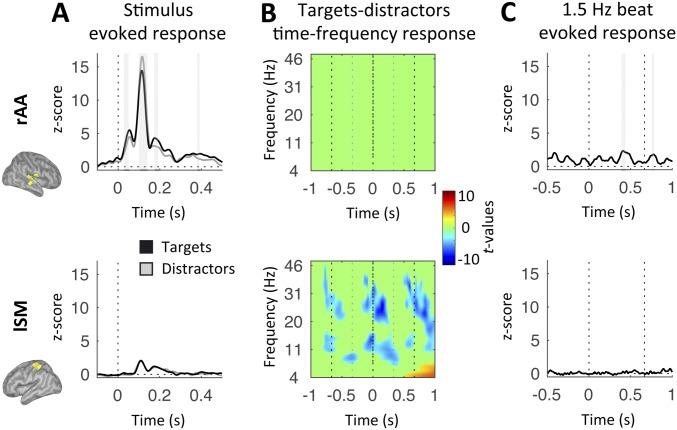

The evoked response to tones was much larger in rAA than in lSM cortex and, importantly, differed significantly between targets and distractors in rAA cortex only (at 30–53 ms, 106–141 ms, 176–195 ms, and 382–396 ms; paired t tests, P < 0.05, FDR-corrected; in lSM cortex, all P > 0.5, FDR-corrected; Fig. S1A), highlighting the impact of temporal attention on auditory processing. Of note, while the M50, M200, and M300 peaks were larger for targets than distractors, the opposite was true for the M100 peak. In contrast, the time-frequency response to tones differed significantly between targets and distractors in lSM cortex only (P < 0.05, FDR-corrected; Fig. S1B). This analysis revealed where 1.5-Hz oscillatory activity originated and confirmed the PAC results (Fig. 2D, Bottom). The 1.5-Hz beat was not associated with evoked activity in lSM cortex (all P > 0.5, FDR-corrected; Fig. S1C, Bottom), confirming that the amplitude modulations at 1.5 Hz observed in the (alpha and) beta range did not reflect unintended motor acts during the listen condition.

Fig. S1.

Listen: Response profiles in rAA (Top) and lSM (Bottom) cortex. (A) Time courses of the evoked responses to tones (black, targets; gray, distractors) for listen correct trials. Dashed lines represent tone onset. (B) Time-frequency statistical maps of the contrast between target and distractor listen correct trials. Dashed lines schematize the alternation of targets and distractors at 3 Hz. (C) Time courses of the evoked response to the 1.5-Hz reference beats for listen correct trials. Dashed lines represent the 1.5-Hz beat. Shaded vertical bars in A and C highlight significant effects. Signals in A and C were low-pass-filtered at 30 Hz for display purposes. Maps were interpolated for display purposes in B [n = 19; paired t tests (A and B) or one-tail t tests against zero (C), P < 0.05, FDR-corrected].

Altogether, these results indicate that covert temporal predictions at 1.5 Hz are represented in the phase of delta oscillations in a distributed sensorimotor network, with phase-coupled amplitude modulations of beta oscillations from the left-lateralized sensorimotor cortex (Fig. 2 A and B, Bottom). We emphasize that this neural substrate is distinct from melody-driven neural dynamics, both in terms of oscillatory frequencies and regions involved (Fig. 2), and could not be explained by the presence of evoked motor components (Fig. S1).

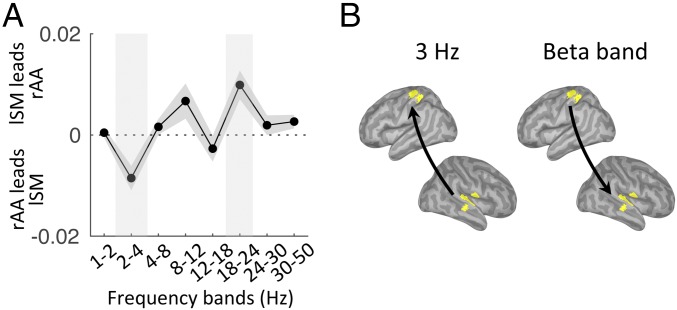

Frequency-Specific Directional Interactions Between rAA and lSM During Passive Listening.

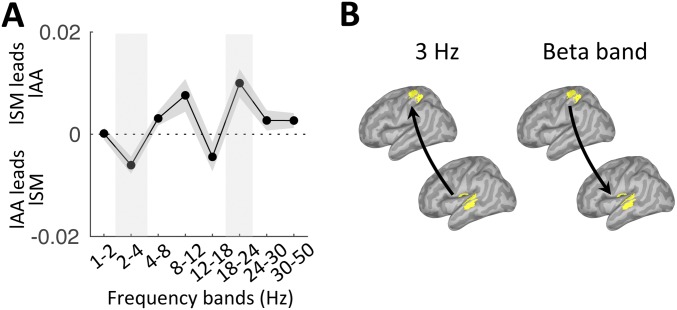

In our paradigm, two complementary dynamics occurred simultaneously: the melody-driven bottom-up response and the top-down internal temporal predictions, expected to operate at 3 Hz and 1.5 Hz, respectively. We investigated the directionality of functional connectivity between two regions of interest (rAA and lSM cortex; Methods) across the frequency spectrum (1–50 Hz). We found bottom-up directed connectivity at 3 Hz (i.e., from rAA to lSM cortex) and top-down directed connectivity in the beta range [18–24 Hz (i.e., from lSM to rAA cortex)] (P < 0.05, FDR-corrected; Fig. 3). These results point to the role of beta-band oscillations in mediating temporal predictions. Of note, using either the left associative auditory (lAA) or rAA cortex as the sensory region of interest led to similar results (Fig. S2).
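The directed-connectivity measure itself is specified in Methods, which are not part of this section. As an illustration of how a frequency-resolved direction of interaction can be estimated at all, the sketch below swaps in a different, well-known index, the phase slope index (PSI), computed from epoch-averaged cross-spectra with NumPy. Its sign tells which signal leads within a band: if one signal is a delayed copy of another, coherency phase grows linearly with frequency, and the slope's sign reveals the leader. The region labels and the 25-ms lag are hypothetical.

```python
import numpy as np


def phase_slope_index(x, y, fs, band):
    """Phase slope index for epoched signals x, y of shape (n_epochs, n_times).

    Positive values mean x leads y (x -> y); negative values mean y leads x.
    """
    n = x.shape[-1]
    win = np.hanning(n)
    X = np.fft.rfft(x * win, axis=-1)
    Y = np.fft.rfft(y * win, axis=-1)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    s_xy = np.mean(X * np.conj(Y), axis=0)          # cross-spectrum over epochs
    s_xx = np.mean(np.abs(X) ** 2, axis=0)
    s_yy = np.mean(np.abs(Y) ** 2, axis=0)
    coh = s_xy / np.sqrt(s_xx * s_yy)               # complex coherency
    idx = np.nonzero((freqs >= band[0]) & (freqs <= band[1]))[0]
    # Imaginary part of the summed products of neighbouring coherency values
    return float(np.imag(np.sum(np.conj(coh[idx[:-1]]) * coh[idx[1:]])))


# Toy data (hypothetical): "lsm" drives "raa" with a 5-sample (25-ms) lag
fs, n_epochs, n_times, lag = 200.0, 100, 200, 5
rng = np.random.default_rng(3)
src = rng.normal(size=(n_epochs, n_times + lag))
lsm = src[:, lag:] + 0.5 * rng.normal(size=(n_epochs, n_times))
raa = src[:, :-lag] + 0.5 * rng.normal(size=(n_epochs, n_times))
psi_fwd = phase_slope_index(lsm, raa, fs, (18, 24))   # expected > 0
psi_rev = phase_slope_index(raa, lsm, fs, (18, 24))   # same size, opposite sign
print(psi_fwd, psi_rev)
```

Sliding the band across 1–50 Hz would give a direction-by-frequency profile comparable in spirit to the connectivity spectra described here, though not necessarily numerically equivalent to the study's measure.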

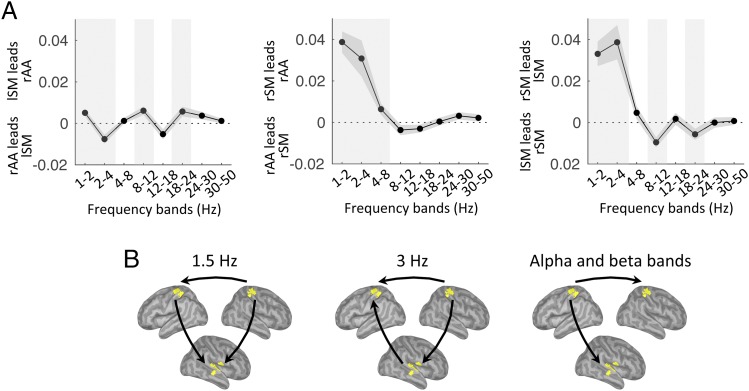

Fig. 3.

Listen: Frequency-specific directional interactions between rAA and lSM cortex. (A) Directed functional connectivity between rAA and lSM in the range of 1–50 Hz for listen correct trials. Shaded vertical bars highlight significant effects. (B) Schematic of the significant results depicted in A (n = 19; paired t tests, P < 0.05, FDR-corrected).

Fig. S2.

Listen: Frequency-specific directional interactions between lAA and lSM cortex. (A) Directed functional connectivity between lAA and lSM in the range of 1–50 Hz for listen correct trials. Shaded vertical bars highlight significant effects. (B) Schematic of the significant results depicted in A (n = 19; paired t tests, P < 0.05, FDR-corrected).

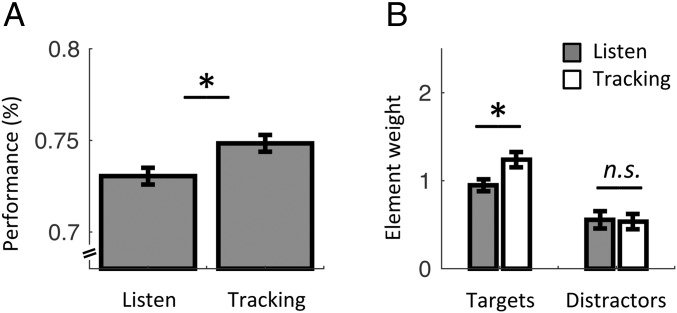

Behavioral Advantage of Overt Motor Tracking.

In sum, we described the role of the lSM cortex in representing and propagating temporal predictions (via beta-band oscillations), which were required to modulate auditory processing in the present study. We further investigated whether overt rhythmic motor activity improved the segmentation of auditory information. At the behavioral level, the comparison between listen and tracking confirmed our previous finding (29) of a significant increase in categorization performance during overt motor tracking (one-tail paired t test: t18 = 2.0, P = 0.033; Fig. 4A). We confirmed that during tracking, participants were able to assign greater weight to targets than distractors in their decision (repeated-measures ANOVA of two stimulus categories × seven elements; main effect of stimulus category: F1,18 = 21.0, P < 0.001; paired t test between target and distractor slopes of element-weight profiles as a function of tone frequency: t18 = 3.3, P = 0.004; Fig. S3). Finally, we observed a significant difference across element weights between listen and tracking (repeated-measures ANOVA of two stimulus categories × two conditions; interaction: F1,18 = 4.6, P = 0.047; Fig. 4B): The contribution of targets was larger during tracking (post hoc paired t test: t18 = 3.5, P = 0.003), whereas the contribution of distractors did not differ significantly across conditions (t18 = 0.2, P = 0.88). These results show that overt motor tracking further enhances perceptual sensitivity to target auditory events.
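For readers reproducing these statistics, a 2 × 2 repeated-measures interaction (stimulus category × condition) has a convenient closed form: its F(1, n − 1) equals the squared one-sample t statistic of each participant's difference of differences. The sketch below illustrates this identity with hypothetical simulated weights and accuracies (not the study's data), together with a one-tailed paired t test via `scipy.stats.ttest_rel(..., alternative="greater")`. The helper name `rm_interaction_2x2` is hypothetical.

```python
import numpy as np
from scipy import stats


def rm_interaction_2x2(y):
    """Interaction F(1, n-1) and p for a 2 x 2 within-subject design.

    y has shape (n_subjects, 2, 2): axis 1 = stimulus category (target, distractor),
    axis 2 = condition (listen, tracking). The interaction F equals the squared
    one-sample t of each subject's difference of differences.
    """
    d = (y[:, 0, 1] - y[:, 0, 0]) - (y[:, 1, 1] - y[:, 1, 0])
    res = stats.ttest_1samp(d, 0.0)
    return float(res.statistic ** 2), float(res.pvalue)


# Hypothetical element weights for 19 participants
rng = np.random.default_rng(4)
y = 0.1 + 0.05 * rng.normal(size=(19, 2, 2))
y[:, 0, 1] += 0.04                       # larger target weights during tracking
F, p = rm_interaction_2x2(y)

# Hypothetical accuracies: one-tailed paired t test (tracking > listen)
acc_listen = 0.7 + 0.05 * rng.normal(size=19)
acc_track = acc_listen + 0.02 + 0.03 * rng.normal(size=19)
res = stats.ttest_rel(acc_track, acc_listen, alternative="greater")
print(F, p, res.statistic, res.pvalue)
```

The identity holds only for two-level factors; with more levels (e.g., the category × seven-element ANOVA), a full repeated-measures ANOVA is needed.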

Fig. S3.

Behavioral results in tracking. (A) Element-weight profiles for targets (black) and distractors (gray) as a function of element position in the sequence. (B) Averaged contribution of targets and distractors to the decision. Element weights were pooled separately across targets and distractors, excluding the first target and last distractor (dashed square in A). (C) Element-weight profiles for targets (black) and distractors (gray) as a function of tone frequency. Shaded error bars indicate SEM. An asterisk indicates a significant difference (n = 19; paired t tests, P < 0.05).

Fig. 4.

Behavioral differences between listen and tracking. (A) Average categorization performance in listen and tracking. (B) Averaged contribution of targets and distractors to the decision in the listen (gray) and tracking (white) conditions. Element weights were pooled separately across targets and distractors, excluding the first target and last distractor. Error bars indicate SEM. An asterisk indicates a significant difference (n = 19; paired t tests, P < 0.05). n.s., nonsignificant.

Neural Correlates of Overt Temporal Predictions.

During tracking, the dynamics of the 3-Hz melody principally correlated with neural 3-Hz oscillatory activity in bilateral auditory regions (phase coupling) and in a broad low-frequency range (5–20 Hz; amplitude coupling; Fig. 5 A and B, Top). In addition, and in contrast to the listen condition, we found PAC between the 3-Hz melody and the right sensorimotor (rSM) cortex in the beta (14–24 Hz) range (one-tail t tests against zero, P < 0.05, FDR-corrected; Fig. 5C, Top). Temporal predictions, which were overtly conveyed during tracking (through left index finger movements), were unsurprisingly maximally represented over the rSM cortex (i.e., contralateral to finger movements), both in 1.5-Hz phase (Fig. 5A, Bottom) and in broadband (4–50 Hz) amplitude modulations, with a main spectral peak in the beta (∼18–24 Hz) range and a secondary peak in the theta (∼4–6 Hz) range (one-tail t tests against zero, P < 0.05, FDR-corrected; Fig. 5 B and C, Bottom). Importantly, coupling was also observed in lSM cortex at 1.5 Hz (phase coupling) and within the beta band (amplitude coupling), but not in the theta band (amplitude coupling; Fig. 5, Bottom). We then detailed the profile of PAC with the 3-Hz melody or the 1.5-Hz beat in rAA, lSM, and rSM cortex, and qualitatively confirmed the above-mentioned results (Fig. S4 A and B). In rAA and lSM cortex, the evoked and time-frequency response profiles were qualitatively similar to those observed in the listen condition (Fig. S4 C–E). In rSM cortex (Fig. S4, Bottom), we observed a strong evoked response to the 1.5-Hz beat that slightly anticipated beat onset [−87 to 14 ms; recurring one beat period later (i.e., after 667 ms); P < 0.05, FDR-corrected]. This pattern was also visible in the evoked response to tones (with a peak slightly before target onset and ∼333 ms after distractor onset). Finally, the time-frequency response profile in rSM cortex confirmed the presence of broadband amplitude modulation at 1.5 Hz (with peaks in the theta and beta ranges).

Fig. 5.

Tracking: Neural correlates of the 3-Hz melody (Top) and overt temporal (Bottom) predictions. Whole-brain statistical maps of phase-phase coupling (A, PLV) and PAC (B and C) estimates for tracking correct trials are shown. Coupling estimates were computed, respectively, between the phase of the 3-Hz melody (Top) or 1.5-Hz beat dynamics (Bottom) and specific brain rhythms (n = 19; one-tail t tests against zero, P < 0.05, FDR-corrected).

Fig. S4.

Tracking: Response profiles in rAA (Top), lSM (Middle), and rSM (Bottom) cortex. PAC estimates were computed between the phase of the 3-Hz melody (A) or 1.5-Hz beat dynamics (B) and brain amplitude in the range of 4–50 Hz for tracking correct trials. Shaded error bars indicate SEM. (C) Time courses of the evoked responses to tones (black, targets; gray, distractors) for tracking correct trials. Dashed lines represent tone onset. (D) Time-frequency statistical maps of the contrast between target and distractor tracking correct trials. Dashed lines schematize the alternation of targets and distractors at 3 Hz. (E) Time courses of the evoked response to the 1.5-Hz reference beats for tracking correct trials. Dashed lines represent the 1.5-Hz beat. Shaded vertical bars highlight significant effects. Signals in C and E were low-pass-filtered at 30 Hz for display purposes. Maps were interpolated for display purposes in D [n = 19; paired t tests (A–D) or one-tail t tests against zero (E), P < 0.05, FDR-corrected].

Frequency-Specific Directional Interactions During Overt Motor Tracking.

We then investigated the directionality of functional connectivity between the three regions of interest (rAA, lSM, and rSM cortex). First, directed connectivity from rAA to lSM cortex was observed only at 3 Hz (P < 0.05, FDR-corrected; Fig. 6A). Second, rSM cortex was a source of low-frequency directed connectivity toward both rAA (1–8 Hz) and lSM (1–4 Hz) cortex (P < 0.05, FDR-corrected). Finally, we also observed directed connectivity from lSM cortex toward both rAA and rSM cortex in the alpha (8–12 Hz) and beta (18–24 Hz) ranges (and additionally at 1.5 Hz toward rAA cortex; P < 0.05, FDR-corrected). Importantly, lSM cortex was neither driven by acoustic stimulation (unlike rAA cortex; Fig. S4C) nor associated with overt movements (unlike rSM cortex; Fig. S4E). Nevertheless, this region was a source of directed functional connectivity during tracking (Fig. 6B).

Fig. 6.

Tracking: Frequency-specific directional interactions between rAA, lSM, and rSM cortex. (A) Directed functional connectivity between rAA and lSM (Left), rAA and rSM (Center), and lSM and rSM (Right), in the range of 1–50 Hz for tracking correct trials. Shaded vertical bars highlight significant effects. (B) Schematic of the main significant results depicted in A (n = 19; paired t tests, P < 0.05, FDR-corrected).

Taken together, our results from both the listen and tracking conditions revealed a key role for lSM cortex in representing temporal predictions and directing them toward sensory regions through beta bursting (18–24 Hz).

Extended Influence of Temporal Predictions During Overt Motor Tracking.

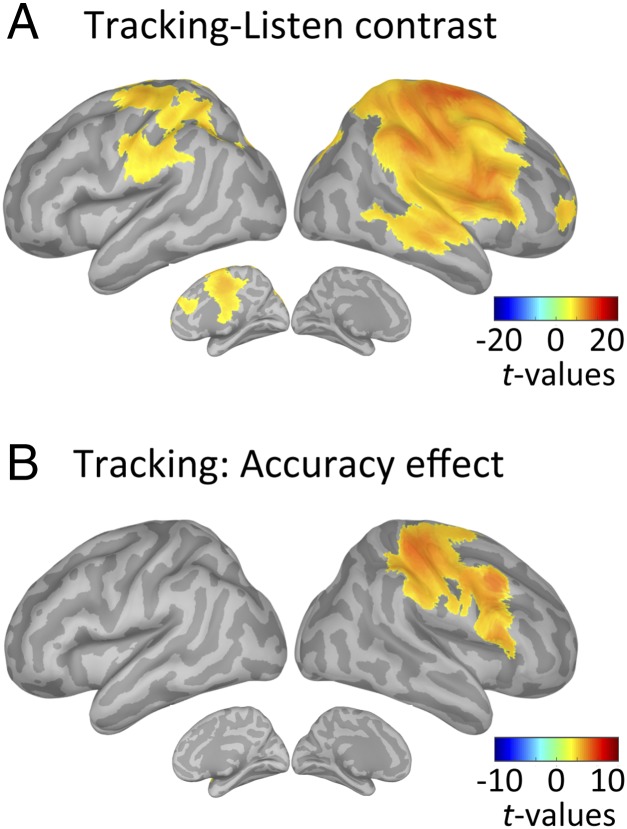

To further understand how temporal predictions modulate sensory processing and drive performance accuracy, we contrasted the neural correlates of temporal predictions, estimated at the 1.5-Hz phase, in tracking vs. listen correct trials. The data confirmed the qualitative difference in neural encoding of temporal predictions between listen and tracking. Specifically, overt motor tracking enhanced phase coupling with the 1.5-Hz beat in a broad set of regions, encompassing the bilateral sensorimotor cortex and right-lateralized temporal, parietal, and frontal regions (paired t tests, P < 0.05, FDR-corrected; Fig. 7A). No significant enhancement was observed in listen relative to tracking (P > 0.5, FDR-corrected).

Fig. 7.

Modulation of the neural correlates of temporal predictions with condition and accuracy. Whole-brain statistical maps of the contrast between 1.5-Hz beat phase-phase coupling estimates in tracking versus listen correct trials (A) and in tracking correct versus incorrect trials (B) are shown (n = 19; paired t tests, P < 0.05, FDR-corrected).

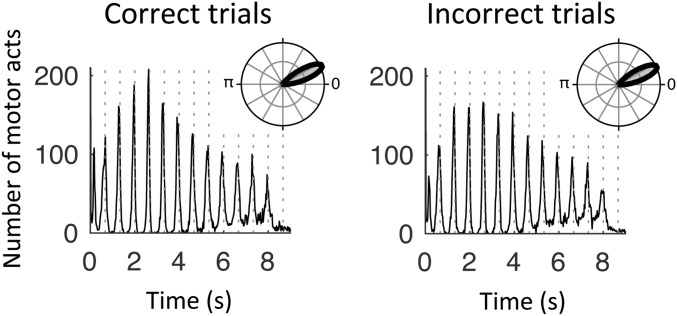

We further investigated possible accuracy effects by contrasting the neural correlates of temporal predictions in correct vs. incorrect trials for both conditions. During tracking, the phase of 1.5-Hz oscillations in the right sensorimotor, inferior parietal, and lateral frontal regions was significantly related to the behavioral outcome, with stronger phase coupling in correct than incorrect trials (paired t tests, P < 0.05, FDR-corrected; Fig. 7B). Importantly, this result could not be explained by a difference in the precision of motor tracking events (i.e., the timing of individual motor acts) between correct and incorrect trials (paired t test: t18 = 0.3, P = 0.29; Fig. S5). In the listen condition, no significant difference between correct and incorrect trials was observed at the whole-brain level of analysis (paired t tests, P > 0.5, FDR-corrected). We note that without correcting for multiple comparisons, the same analysis revealed a single cluster located around lSM cortex (paired t tests, P < 0.05, uncorrected). Finally, no significant difference between correct and incorrect trials was observed at the whole-brain level of analysis in the tracking or listen conditions for PAC estimates of temporal predictions (paired t tests, P > 0.05, FDR-corrected).
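The precision of motor tracking is summarized in Fig. S5 as the phase of motor acts relative to the 1.5-Hz beat. The exact precision index used for the correct vs. incorrect comparison is not given in this section; the sketch below assumes a standard circular summary: the resultant vector length R of tap phases (1 means perfectly consistent timing) and the mean asynchrony in milliseconds. The tap times are simulated and hypothetical. Per-participant values computed separately for correct and incorrect trials could then enter a paired t test.

```python
import numpy as np


def tap_phase_consistency(tap_times, beat_hz=1.5, beat_origin=0.0):
    """Circular summary of tap timing relative to an isochronous beat.

    Returns (R, mean_lag_ms): R is the resultant vector length (1 = perfectly
    consistent phase) and mean_lag_ms is the mean asynchrony in ms
    (negative = taps precede the beat).
    """
    phases = 2 * np.pi * beat_hz * (np.asarray(tap_times) - beat_origin)
    mean_vec = np.mean(np.exp(1j * phases))
    mean_lag_ms = 1000.0 * np.angle(mean_vec) / (2 * np.pi * beat_hz)
    return float(np.abs(mean_vec)), float(mean_lag_ms)


# Hypothetical taps: ~30-ms anticipation with 20-ms jitter around each beat
rng = np.random.default_rng(5)
beats = np.arange(100) / 1.5
taps = beats - 0.030 + rng.normal(0, 0.020, beats.size)
R, lag = tap_phase_consistency(taps)
print(round(R, 2), round(lag))
```

Working on the circle rather than with raw asynchronies keeps the summary well defined even if a few taps drift closer to the preceding or following beat.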

Fig. S5.

Distribution of behavioral motor acts in tracking. Values are aggregated across trials and participants for correct (Left) and incorrect (Right) trials. Dashed lines represent the 1.5-Hz beat. (Insets) Phase orientation of motor acts relative to the 1.5-Hz beat.

Together, these results reveal that in the right sensorimotor, inferior parietal, and lateral frontal regions, the phase of neural oscillations at the beat frequency (1.5 Hz) is relevant to behavioral outcome: Phase coupling was stronger in the condition with the highest categorization score (i.e., tracking; Fig. 4) and in correct trials of this latter condition (Fig. 7B).

Discussion

The present study aimed to identify the neural substrates of temporal predictions and the mechanisms by which they impact auditory perception. We hypothesized that temporal prediction information is encoded in sensorimotor cortex oscillatory activity and that overt movements enhance its representation and extend its influence. We designed a paradigm involving endogenous temporal attention [i.e., attention guided by internal predictions (31)], in which, importantly, temporal attention and stimulus dynamics operated at distinct rates (1.5 vs. 3 Hz, respectively; Fig. 1). Schematically, our paradigm involved the maintenance of temporal predictions and their deployment through temporal attention to modulate sensory processing.

Behaviorally, our results show that participants were able to sustain and orient their temporal attention to segregate targets from distractors in both conditions (Fig. 1 C–E and Fig. S3). At the cortical level, we observed that temporal attention modulated the processing of auditory information down to the auditory cortex (Fig. S1A). This indicates that endogenous temporal attention acts on stimulus-driven event patterns, tuning sensory processing to its own internal temporal dynamics to drive perceptual and behavioral selection (32).

To perform this paradigm, participants had to capitalize on temporal predictions (i.e., an internal model of the 1.5-Hz beat) during presentation of the 3-Hz melody (Fig. 1 A and B). While we observed differences between the listen and tracking conditions, especially in terms of oscillatory frequencies and regions involved (Figs. 2 and 5), a condition-invariant representation emerged as a candidate for the core neural substrate of temporal predictions. First, in both conditions we observed a low-frequency phase reorganization combined with beta bursting at the delta rate, a result compatible with previous findings (21, 28, 33, 34). Second, these delta-beta–coupled oscillations were present in lSM cortex, a region that Park et al. (35, 36) recently showed to play a pivotal role in the top-down modulation of auditory processing. Finally, the functional connectivity conveyed by beta oscillations was directed toward rAA cortex, probably to modulate perceptual and behavioral selection (Figs. 3 and 6). It is striking that even during tracking, when beta bursting was dominant in rSM cortex (due to left index finger movements; Fig. 5C), beta-band connectivity was still directed from lSM cortex toward both rAA and rSM cortex. Altogether, these results bridge recent electrophysiological studies highlighting the role of beta oscillations in representing temporal information (21–26) with studies that emphasized the role of the motor system in timing (9, 13–18, 37). They also support the idea that left-lateralized motor structures are preferentially engaged, relative to their right counterparts, in predicting future events, possibly to optimize information processing (37). While our results highlight the lSM cortex, it is likely that subcortical structures, in particular the striatum, drive the effects we observed at the cortical level with MEG (16–18, 38–42).

Multiple functional MRI studies have reported involvement of the left inferior parietal cortex in temporal attention (43–46). While our analyses highlight regions implicated in the encoding of temporal predictions and sensory dynamics, we have not investigated the neural bases of temporal attention per se. Rather, we suggest that the left inferior parietal cortex is at the interface between internal predictions and sensory processing (i.e., it may be driven by the motor system to modulate auditory processing).

The role of beta oscillations in encoding temporal predictions can be interpreted in two complementary ways. First, temporal predictions might be encoded in beta bursting because the motor system is (among other things) dedicated to the encoding of temporal predictions, and beta (∼20 Hz) activity is the default oscillatory mode of the motor system (19, 20). This would represent an incidental hypothesis. Second, we posit that top-down predictions are ubiquitously encoded through beta oscillations, whereas bottom-up information tends to be reflected in higher-frequency (gamma) oscillations, a perspective that has gained substantial attention in recent years (47–50). This would represent an essential hypothesis. In this framework, beta oscillations could mediate the endogenous reactivation of cortical content-specific representations in the service of current task demands (51). Our results also point to a role of alpha oscillations in both bottom-up and top-down processing (Figs. 2, 5, and 6). However, these alpha-related findings were not consistent across conditions, making them difficult to interpret.

Our behavioral findings also confirm that overt rhythmic movements improve performance accuracy. Rather than interfering with the auditory task, the additional act of tapping favors it. This indicates that motor tracking and temporal attention interact synergistically (29). Further, this beneficial strategy does not capitalize on any additional external information (e.g., light flashes presented at the reference beat), but is purely internally driven. This suggests that embodied representations of rhythms are more stable than internal, cognitive alternatives. This behavioral benefit was driven by an increased modulation of sensory evidence encoding by temporal attention [i.e., an increased differential weighting between targets and distractors (30)] (Fig. 1). However, in our study, motor tracking only enhanced sensitivity to target tones, whereas a previous study (30) found that it also suppressed sensitivity to distractors. This discrepancy is probably due to the present study's setting and instructions, which resulted in enhanced attention to targets (Methods). Overall, our behavioral results confirm that overt tracking sharpens the temporal selection of auditory information. Such a behavioral advantage has broad implications. For example, it could be directly exploited in language rehabilitation to help patients with auditory or language deficits understand speech better. Using an overt motor strategy (in the form of rhythmic movements) may help them focus on the temporal modulations of a speech signal of interest, and thereby facilitate speech perception and segmentation, especially in adverse listening conditions (52–54).

This behavioral advantage is mirrored at the cortical level by a task-specific amplification of the phase reorganization of low-frequency delta oscillations during motor tracking, in an extended network comprising bilateral sensorimotor regions and right-lateralized auditory and frontoparietal regions (Fig. 5A). This set of right-lateralized regions, which extends well beyond the sensorimotor cortex, corresponds to the typical network involved in auditory memory for pitch (55, 56). It plays a major role in the current task, which involves pitch estimation and retention. Importantly, our results thus show that temporal predictions spread selectively toward task-relevant regions and propagate at the network level. Further, in a subset of right-lateralized regions, delta-phase reorganization was significantly related to behavioral outcome (Fig. 7). During overt tracking, additional portions of cortex were therefore recruited through delta oscillations, probably to modulate sensory processing, emphasize target selection, and augment behavioral performance. However, our results do not address whether such a motor-driven benefit requires a sense of agency (i.e., the intention to produce the movement) (57) and/or is mediated by somatosensory (tactile) feedback (58).

Altogether, these results show that the motor system is ubiquitously involved in sensory processing, providing (at least) temporal contextual information. It encodes temporal predictions through delta-beta–coupled oscillations, and its beta-range functional connectivity is directed toward sensory regions. We also show that performing overt rhythmic movements is an efficient strategy to augment the quality of temporal predictions and amplify their influence on sensory processing, enhancing perception.

Methods

Participants.

Twenty-one healthy adult participants were recruited (age range: 20–48 y, 10 female). The experiment followed ethics guidelines from the McGill University Health Centre (Protocol NEU-13-023). Informed consent was obtained from all participants before the experiment. All had normal audition and reported no history of neurological or psychiatric disorders. We did not select participants based on musical training. Two participants were excluded from further analyses, as they did not perform the task properly.

Experimental Design.

Sequences of pure tones (Fig. 1A) were presented binaurally at a comfortable hearing level, using the Psychophysics Toolbox (version 3) (59) and custom scripts written in MATLAB (The MathWorks). Each pure tone was sampled at 44,100 Hz and lasted 100 ms, with a damping length of 10 ms and an attenuation of 40 dB.
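A minimal Python/NumPy sketch of the tone synthesis just described. The text gives the sampling rate, duration, damping length, and attenuation but not the ramp shape, so the envelope below, which rises linearly in decibels from −40 dB to 0 dB over the first 10 ms and mirrors this at offset, is an assumption; `damped_envelope` and `pure_tone` are hypothetical names, not the authors' MATLAB code.

```python
import numpy as np

FS = 44_100          # sampling rate (Hz), as stated in the text
DURATION_S = 0.100   # tone duration (s)
RAMP_S = 0.010       # damping length (s)
ATTEN_DB = 40.0      # attenuation at the tone edges (dB); ramp shape is an assumption

def damped_envelope(n_samples, fs=FS, ramp_s=RAMP_S, atten_db=ATTEN_DB):
    """Gain envelope rising linearly in dB from -atten_db to 0 dB, then falling back at offset."""
    ramp_n = int(round(ramp_s * fs))
    ramp = 10.0 ** (np.linspace(-atten_db, 0.0, ramp_n) / 20.0)
    env = np.ones(n_samples)
    env[:ramp_n] = ramp
    env[-ramp_n:] = ramp[::-1]
    return env

def pure_tone(freq_hz, fs=FS, duration_s=DURATION_S):
    """Sine tone at freq_hz with damped onset and offset."""
    n = int(round(duration_s * fs))
    t = np.arange(n) / fs
    return np.sin(2 * np.pi * freq_hz * t) * damped_envelope(n, fs)

reference = pure_tone(440.0)   # reference tone frequency from the text

# Toy melody tones: frequencies drawn with SD 0.2 in log2 units around 440 Hz.
rng = np.random.default_rng(1)
melody_tones = [pure_tone(f) for f in 440.0 * 2.0 ** rng.normal(0.0, 0.2, size=8)]
```

Working in log2 units makes the 0.2 SD a fixed fraction of an octave regardless of the absolute frequency.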

Each trial (∼15 s) comprised 20 pure tones, categorized as references, targets, and distractors. Four reference tones initiated the sequence, indicating both the reference tone frequency ($f_0$ = 440 Hz) and the beat {1.5 Hz [i.e., interstimulus interval (ISI) = 667 ms]}. They were followed by an alternation of eight target and eight distractor tones of variable frequencies (SD of 0.2 in base-2 logarithmic units), presented in a quasi-rhythmic fashion [at 3 Hz (i.e., ISI = 333 ± 67 ms)]. The distribution of the 67-ms jitter across the tones of each sequence was approximately Gaussian, and the jitter never exceeded 141 ms. This ensured that no overlap between targets and distractors could occur. Importantly, targets and distractors occurred in phase and in antiphase with the preceding references, respectively, so that participants could use the beat provided by the references to distinguish targets from distractors, which were otherwise perceptually indistinguishable.
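The timing logic of a trial can be sketched in the same way (Python/NumPy; all names hypothetical). The text does not specify where the melody starts relative to the last reference, nor how the ±67-ms jitter maps onto individual tones, so the sketch assumes the melody begins half a beat after the last reference and displaces each tone from its nominal slot by Gaussian jitter truncated at 141 ms. It then illustrates why the beat alone suffices to label tones: a 141-ms displacement shifts phase by at most 2π × 1.5 × 0.141 ≈ 1.33 rad, less than a quarter cycle (π/2), so targets stay closer to phase 0 and distractors closer to phase π.

```python
import numpy as np

BEAT_HZ = 1.5
BEAT_S = 1.0 / BEAT_HZ   # ~667-ms reference ISI

def trial_onsets(jitter_sd_s=0.067, jitter_max_s=0.141, seed=0):
    """Onsets (s) of the 4 references and 16 melody tones of one hypothetical trial.

    Assumptions (not specified in the text): the melody starts half a beat after the
    last reference; each melody tone is displaced from its nominal slot by Gaussian
    jitter (hypothetical per-tone SD) truncated at +/- jitter_max_s by resampling.
    """
    rng = np.random.default_rng(seed)
    refs = np.arange(4) * BEAT_S
    slots = 3.5 + 0.5 * np.arange(16)          # positions in beat units: 3.5, 4.0, ..., 11.0
    is_target = slots % 1.0 == 0.0             # whole beats = in phase = targets
    jitter = rng.normal(0.0, jitter_sd_s, size=16)
    out = np.abs(jitter) > jitter_max_s
    while out.any():                           # resample out-of-bound draws (true truncation)
        jitter[out] = rng.normal(0.0, jitter_sd_s, size=out.sum())
        out = np.abs(jitter) > jitter_max_s
    return refs, slots * BEAT_S + jitter, is_target

def beat_phase(onsets_s):
    """Phase (rad) of each onset relative to the 1.5-Hz beat set by the references."""
    return np.angle(np.exp(2j * np.pi * BEAT_HZ * np.asarray(onsets_s)))

refs, melody, is_target = trial_onsets()
# A tone is labeled "in phase" if it falls within a quarter cycle of a beat.
in_phase = np.abs(beat_phase(melody)) < np.pi / 2
```

With the jitter capped at 141 ms, `in_phase` always matches `is_target` in this sketch, whatever the random draw.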

Participants performed a two-alternative pitch categorization task at the end of each trial by deciding whether the mean frequency of targets, $\bar{f}_T$, was lower or higher than the reference frequency, $f_0$. Participants reported their choice with either their right index (lower) or right middle (higher) finger. The mean frequency of distractors, $\bar{f}_D$, was always equal to $f_0$, and hence noninformative. The absolute difference between $\bar{f}_T$ and $f_0$ was titrated for each participant to reach threshold performance (discussed below). The task consisted of two conditions, listen and tracking, with the only difference being that participants tapped in rhythm with the relevant sensory cues in the tracking condition. To do so, participants were required to follow the beat by moving their left index finger from the beginning of the sequence (the second reference tone), so that their rhythm was stabilized when the 3-Hz melody started. In essence, this condition is a variation of the synchronization-continuation paradigm (10). We instructed participants to use their left hand to spatially dissociate the neural correlates of motor acts (in the right motor cortex) from those of temporal predictions, which we hypothesized to be lateralized to the left hemisphere (42–45). Their movements were recorded with an infrared light pad. To ensure that each participant’s finger was properly positioned on the pad and occluded the infrared light when tracking, we added a small fabric patch below the infrared light that participants had to touch. Thus, in addition to the task, participants had to focus on the somatosensory feedback from their finger in the tracking condition, which probably resulted in enhanced attention to targets compared with a previous experiment (30). Participants were asked to stay still in the listen condition, not moving any part of their body, so as to minimize the overt involvement of the motor system. Absence of movement during the listen condition was monitored visually, using online video capture.

For each participant, the experiment was divided into three sessions occurring on different days, each lasting ∼1 h. The first session, purely behavioral, started with a short training period, during which the task was made easier by increasing the relative distance between the mean frequency of targets and the reference frequency, and by decreasing the volume of distractors. Participants then performed a psychophysical staircase with the absolute difference between the mean target frequency and the reference frequency as the varying parameter. The staircase was set to obtain 75% categorization performance in the listen condition. Participants then performed two separate sessions inside the MEG scanner. Two hundred trials per condition (listen or tracking) were acquired using a block-design paradigm, with conditions alternating every 20 trials (∼5 min). Feedback indicating correct/incorrect responses was provided after each trial, and overall feedback on performance, indicating the total number of correct responses, was given after every 20-trial block for motivational purposes.
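The text states the 75% target but not the staircase rule. One standard way to converge on 75% correct is a weighted up-down staircase in the style of Kaernbach: the step after an error is three times the step after a correct response, so the track is stationary where p × step = (1 − p) × 3 × step, i.e., p = 0.75. The Python sketch below uses that rule purely as an assumption, with a hypothetical simulated observer whose accuracy is Φ(level/σ).

```python
import math
import numpy as np

def weighted_staircase(respond, start=0.5, step_down=0.01, n_trials=4000, floor=1e-3):
    """Weighted up-down staircase converging on 75% correct (assumed rule, Kaernbach-style).

    respond(level) -> bool runs one trial at the given target/reference difference.
    Correct: level -= step_down. Error: level += 3 * step_down.
    Equilibrium: p * step_down = (1 - p) * 3 * step_down  =>  p = 0.75.
    """
    level = start
    track = np.empty(n_trials)
    for i in range(n_trials):
        correct = respond(level)
        level = level - step_down if correct else level + 3.0 * step_down
        level = max(level, floor)              # keep the difference positive
        track[i] = level
    return track

def toy_observer(rng, sigma=0.2):
    """Hypothetical observer: P(correct) = Phi(level / sigma), i.e. 50% at level 0."""
    def respond(level):
        p_correct = 0.5 * (1.0 + math.erf(level / (sigma * math.sqrt(2.0))))
        return rng.random() < p_correct
    return respond

rng = np.random.default_rng(0)
track = weighted_staircase(toy_observer(rng))
# Discard the initial descent; for this toy observer the 75% point is 0.2 * 0.674 = 0.135.
estimate = track[1000:].mean()
```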

Data Acquisition.

MEG data were acquired at the McConnell Brain Imaging Centre, Montreal Neurological Institute, McGill University, using a 275-channel MEG system (axial gradiometers, Omega 275; CTF Systems, Inc.) with a sampling rate of 2,400 Hz. Six physiological channels (electrooculogram and electrocardiogram), one audio channel, two response-button channels, and one infrared light pad channel were recorded simultaneously and synchronized with the MEG. The head position of participants was controlled using three coils, whose locations were digitized (Polhemus), together with ∼100 additional scalp points, to facilitate anatomical registration with MRI. Instructions were visually displayed on a gray background (spatial resolution of 1,024 by 768 pixels and vertical refresh rate of 60 Hz) onto a screen at a comfortable distance from the participant’s face. Auditory stimuli were presented through insert earphones (E-A-RTONE 3A; Aero Company). On each trial, participants had to fixate on a cross, located at the center of the screen, to minimize eye movements. Before the first recording session, participants performed a 5-min, eyes-open, resting-state session to be used as baseline activity in ensuing analyses. For cortically constrained MEG source analysis (i.e., the projection of the MEG sensor data onto the cortical surface), a T1-weighted MRI acquisition of the brain was obtained from each participant (1-mm isotropic voxel resolution).

Behavioral Data Processing.

We estimated the “element weight” [i.e., the additive contribution assigned to each target ($w_{T,k}$) and distractor ($w_{D,k}$)] to the subsequent decision (higher or lower pitched than the reference frequency $f_0$). We calculated these parameters across trials via a multivariate logistic regression of choice on a linear combination of the frequencies of the 16 tones (eight targets and eight distractors):

$$P(\text{higher}) = \Phi\left[\sum_{k=1}^{8} w_{T,k}\,x_{T,k} + \sum_{k=1}^{8} w_{D,k}\,x_{D,k} + b\right] \qquad [1]$$

where $P(\text{higher})$ is the probability of judging the target sequence as higher pitched, $\Phi$ is the cumulative normal distribution function, $x_{T,k}$ ($x_{D,k}$) is the frequency of the target (distractor) tone at position $k$ in the sequence (expressed in logarithmic distance to $f_0$), and $b$ is an additive response bias toward one of the two choices. This analysis was based on best-fitting parameter estimates from the logistic regression model, which are not bounded and meet the a priori assumptions of standard parametric tests (30, 60). To estimate the element weight as a function of tone frequency, we binned the data into 100 overlapping quartiles before computing the multivariate logistic regression. We then estimated, per participant and separately for targets and distractors, the slope (linear coefficient) of the resulting element-weight profiles as a function of tone frequency.
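A maximum-likelihood fit of Eq. 1 can be sketched with SciPy. Because Eq. 1 is written with the cumulative normal Φ, the sketch uses a probit link; it fits all 17 parameters (eight target weights, eight distractor weights, one bias) at once. The toy observer, which weights targets only and ignores distractors, the base-2 log frequency units, and the function name are illustrative assumptions; the frequency-binning step is not reproduced.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import norm

def fit_element_weights(x_targets, x_distractors, chose_higher):
    """Probit maximum-likelihood fit of Eq. 1.

    x_targets, x_distractors: (n_trials, 8) tone frequencies as log distance to f0.
    chose_higher: (n_trials,) booleans, True when 'higher' was chosen.
    Returns (w_T, w_D, b).
    """
    X = np.hstack([x_targets, x_distractors, np.ones((len(chose_higher), 1))])
    sign = 2.0 * np.asarray(chose_higher, dtype=float) - 1.0   # +1 'higher', -1 'lower'

    def neg_log_lik(beta):
        # y*log(Phi(eta)) + (1-y)*log(1-Phi(eta)) == log(Phi(sign*eta))
        return -np.sum(norm.logcdf(sign * (X @ beta)))

    def grad(beta):
        eta = sign * (X @ beta)
        ratio = np.exp(norm.logpdf(eta) - norm.logcdf(eta))      # phi/Phi, computed stably
        return -X.T @ (sign * ratio)

    res = minimize(neg_log_lik, np.zeros(X.shape[1]), jac=grad, method="BFGS")
    return res.x[:8], res.x[8:16], res.x[16]

# Toy data: log2 frequency offsets with SD 0.2; observer weights targets only.
rng = np.random.default_rng(0)
n = 4000
xt = rng.normal(0.0, 0.2, size=(n, 8))
xd = rng.normal(0.0, 0.2, size=(n, 8))
chose_higher = rng.random(n) < norm.cdf(3.0 * xt.sum(axis=1))
w_T, w_D, b = fit_element_weights(xt, xd, chose_higher)
```

The recovered target weights should sit near the simulated value of 3 and the distractor weights near 0, which is the kind of differential weighting the element-weight analysis quantifies.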

Timing of Motor Acts in the Tracking Condition.

We extracted the timing of individual motor acts to investigate the precision of motor tracking. This analysis was performed separately for correct and incorrect trials of the tracking condition, after having matched the number of trials in these two groups for each participant. We then computed the preferred phase orientation (in radians) of motor acts relative to the 1.5-Hz beat (with zero corresponding to perfect simultaneity between a motor act and the 1.5-Hz beat). The mean resultant vector length was finally extracted to statistically compare the distribution of tapping events in correct and incorrect trials.
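The two circular statistics named here can be sketched in a few lines of Python/NumPy: each tap time is converted to a phase of the 1.5-Hz beat, the preferred phase is the angle of the mean unit vector, and the mean resultant vector length is its modulus (1 for perfectly consistent tapping, near 0 for uniformly scattered taps). The trial-matching helper randomly subsamples the larger group; that scheme and all function names are assumptions.

```python
import numpy as np

BEAT_HZ = 1.5

def tap_phase_stats(tap_times_s, beat_hz=BEAT_HZ, beat_origin_s=0.0):
    """Preferred phase (rad) and mean resultant vector length of taps relative to the beat.

    Phase 0 = tap exactly on a beat; negative phase = tap ahead of the beat.
    beat_origin_s (time of the first reference tone) is a hypothetical parameter.
    """
    phases = 2.0 * np.pi * beat_hz * (np.asarray(tap_times_s, dtype=float) - beat_origin_s)
    resultant = np.mean(np.exp(1j * phases))
    return np.angle(resultant), np.abs(resultant)

def match_trial_counts(trials_a, trials_b, rng):
    """Randomly subsample the larger group so both groups contain the same number of trials."""
    n = min(len(trials_a), len(trials_b))
    def pick(trials):
        keep = np.sort(rng.choice(len(trials), size=n, replace=False))
        return [trials[i] for i in keep]
    return pick(trials_a), pick(trials_b)

# Toy example: taps anticipating every beat by 50 ms.
taps = np.arange(10) / BEAT_HZ - 0.050
phase, vector_length = tap_phase_stats(taps)   # phase = -0.15*pi rad (about -0.47), length = 1
```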

MEG Data Preprocessing.

Preprocessing was performed with Brainstorm (61), following good-practice guidelines (62). Briefly, we removed electrical artifacts using notch filters (at 60 Hz and its first three harmonics), slow drifts using high-pass filtering (at 0.3 Hz), and eye-blink and heartbeat artifacts using signal-space projections. We detected sound onsets with a custom threshold-based algorithm applied to the simultaneously recorded audio traces. Data were split into 10-s trials and 600-ms epochs (from −100 to 500 ms) time-locked to the onset of each tone. MRI volume data were segmented with FreeSurfer and down-sampled in Brainstorm to 15,002 triangle vertices to serve as image supports for MEG source imaging. Finally, we computed individual MEG forward models using the overlapping-sphere method, and source images using weighted minimum-norm estimates (wMNEs) of the preprocessed data, all using default Brainstorm parameters, and obtained 15,002 source-reconstructed MEG signals (i.e., vertices). The wMNEs also included an empirical estimate of the variance of the noise at each MEG sensor, obtained from a 2-min empty-room recording done at the beginning of each scanning session.
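The filtering and epoching steps can be approximated with SciPy, as a sketch rather than a reproduction of Brainstorm's implementation: the IIR notch (Q = 30), the fourth-order Butterworth high-pass, and zero-phase (forward-backward) application are assumptions chosen for illustration. The toy channels mix a 10-Hz signal with 60-Hz line noise.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt

def clean_sensor_data(data, fs, line_hz=60.0, n_harmonics=3, highpass_hz=0.3, notch_q=30.0):
    """Zero-phase notch filtering of line noise plus high-pass drift removal.

    data: (n_channels, n_samples). Removes line_hz and its first n_harmonics harmonics
    (60, 120, 180, 240 Hz by default), then high-passes at highpass_hz.
    Filter types, orders, and Q are illustrative assumptions, not Brainstorm's settings.
    """
    out = np.asarray(data, dtype=float)
    for k in range(1, n_harmonics + 2):
        b, a = iirnotch(k * line_hz, notch_q, fs=fs)
        out = filtfilt(b, a, out, axis=-1)
    sos = butter(4, highpass_hz, btype="highpass", fs=fs, output="sos")
    return sosfiltfilt(sos, out, axis=-1)

def epoch(data, fs, onsets_s, tmin=-0.1, tmax=0.5):
    """Cut epochs from tmin to tmax (s) around each onset -> (n_events, n_channels, n_times)."""
    first = int(round(tmin * fs))
    n_times = int(round((tmax - tmin) * fs))
    starts = np.round(np.asarray(onsets_s) * fs).astype(int) + first
    return np.stack([data[:, s:s + n_times] for s in starts])

fs = 2400.0                                   # MEG sampling rate from the text
t = np.arange(int(20 * fs)) / fs
raw = np.vstack([np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 60 * t)] * 2)   # 2 toy channels
clean = clean_sensor_data(raw, fs)
epochs = epoch(clean, fs, onsets_s=[5.0, 10.0, 15.0])   # 600-ms epochs: shape (3, 2, 1440)
```

Forward-backward filtering keeps the filtered signals phase-aligned with the raw data, which matters for the phase-based measures that follow.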

Neural Correlates of the 3-Hz Melody.

The 3-Hz melody corresponded to a stream of tones presented in quasi-rhythmic fashion (Fig. 1A). We estimated the neural response to this auditory stream by means of two complementary measures inspired by Peelle et al. (63): phase-phase coupling (indexed by the PLV) and PAC between MEG signals and the 3-Hz melody. For each trial, we first extracted the MEG signals recorded during presentation of the 3-Hz melody (Fig. 1A; ∼2.5–8 s). We approximated the stimulus dynamics with a sinusoid oscillating around 3 Hz, in which each cycle corresponded to the duration between two successive tone onsets (with tone onsets corresponding to a phase of 0). For phase-phase coupling, we band-pass-filtered the MEG source signals at 2–4 Hz [using an even-order, linear-phase finite-impulse-response (FIR) filter], extracted their Hilbert phase, and computed PLV scores (64) between each resulting MEG signal and the 3-Hz acoustic sinusoid over time and trials. For PAC, we first estimated the amplitude of MEG source signals at 26 frequencies between 4 and 50 Hz by applying a time-frequency wavelet transform, using a family of complex Morlet wavelets [central frequency of 1 Hz, time resolution (FWHM) of 3 s; hence, three cycles]. Then, we computed the phase-amplitude cross-frequency coupling (65) between the 3-Hz acoustic sinusoid (phase) and each resulting MEG signal (amplitude) over time and trials. Finally, parameter estimates (PLV or PAC) were contrasted with similarly processed resting-state data (assigned randomly), projected on a standard default brain, spatially smoothed, and z-scored across vertices (to normalize across participants) before performing group-level statistical tests. Note that z-scoring was applied for main-effect analyses (e.g., listen condition) but not for contrast analyses (e.g., tracking vs. listen), as the latter is a normalization procedure in itself.
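The PLV step can be sketched as follows (Python with NumPy and SciPy; function names hypothetical). The stimulus phase is built as the text describes, with phase 0 at each tone onset and one cycle per inter-onset interval. The 401-tap FIR band-pass (odd tap count, hence even order, applied centered so it adds no delay), the 200-Hz toy sampling rate, and the simulated signals are assumptions for illustration, not the study's settings.

```python
import numpy as np
from scipy.signal import fftconvolve, firwin, hilbert

def onset_phase(onsets_s, fs, n_samples):
    """Stimulus phase: 0 at each tone onset, advancing linearly by 2*pi per inter-onset interval.

    Samples before the first or after the last onset are NaN.
    """
    t = np.arange(n_samples) / fs
    cycles = np.interp(t, onsets_s, np.arange(len(onsets_s), dtype=float),
                       left=np.nan, right=np.nan)
    return np.angle(np.exp(2j * np.pi * cycles))

def bandpass_phase(signal, fs, band, numtaps=401):
    """Hilbert phase after linear-phase FIR band-pass filtering (odd numtaps = even order)."""
    taps = firwin(numtaps, band, pass_zero=False, fs=fs)
    filtered = fftconvolve(signal, taps, mode="same")   # symmetric taps, centered: zero delay
    return np.angle(hilbert(filtered))

def plv(signal_phase, stim_phase):
    """Phase-locking value between two phase series, ignoring NaN samples."""
    valid = ~(np.isnan(signal_phase) | np.isnan(stim_phase))
    return np.abs(np.mean(np.exp(1j * (signal_phase[valid] - stim_phase[valid]))))

fs = 200.0
rng = np.random.default_rng(0)
isi = 1.0 / 3.0 + rng.uniform(-0.03, 0.03, size=200)      # toy quasi-rhythmic ~3-Hz onsets
onsets = -1.0 + np.concatenate([[0.0], np.cumsum(isi)])   # span the whole 60-s toy recording
n = int(60 * fs)
stim = onset_phase(onsets, fs, n)
tracking = bandpass_phase(np.cos(stim) + 0.5 * rng.standard_normal(n), fs, (2.0, 4.0))
unrelated = bandpass_phase(rng.standard_normal(n), fs, (2.0, 4.0))
plv_tracking, plv_unrelated = plv(tracking, stim), plv(unrelated, stim)   # high vs. near zero
```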

Neural Correlates of Temporal Predictions.

During the 3-Hz melody, participants modulated their attention in time by relying on an internal model, an approximation of the 1.5-Hz beat initiated by the four reference tones (Fig. 1A). We estimated the neural dynamics of these temporal predictions by means of phase-phase coupling (PLV) and PAC between MEG signals and the internal 1.5-Hz reference beat. We approximated the internal reference beat with a pure sinusoid oscillating at 1.5 Hz (with beat occurrences corresponding to a phase of 0). We then applied the procedure described above, using the 1.5-Hz pure sinusoid as a signal template, and band-pass-filtered the MEG source signals within 1–2 Hz for phase-phase coupling.
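For temporal predictions, the template is a 1.5-Hz sinusoid whose phase is 0 at each (virtual) beat. The sketch below illustrates the PAC side, coupling that template phase to the amplitude of the 18–24 Hz beta band named in the Significance statement. The text cites ref. 65 for the PAC estimator without naming it here, so the normalized mean vector length below, the FIR band-pass with a Hilbert envelope (used in place of the Morlet amplitude), and the toy signals are all assumptions.

```python
import numpy as np
from scipy.signal import fftconvolve, firwin, hilbert

def bandpass(signal, fs, band, numtaps=401):
    """Linear-phase FIR band-pass, applied centered (zero delay)."""
    taps = firwin(numtaps, band, pass_zero=False, fs=fs)
    return fftconvolve(signal, taps, mode="same")

def beat_template_phase(n_samples, fs, beat_hz=1.5, first_beat_s=0.0):
    """Phase of the internal beat template; 0 at every (virtual) beat occurrence."""
    t = np.arange(n_samples) / fs
    return np.angle(np.exp(2j * np.pi * beat_hz * (t - first_beat_s)))

def pac_mvl(template_phase, signal, fs, amp_band=(18.0, 24.0)):
    """Normalized mean vector length: |mean(A * exp(i*phi))| / mean(A)."""
    amp = np.abs(hilbert(bandpass(signal, fs, amp_band)))
    return np.abs(np.mean(amp * np.exp(1j * template_phase))) / np.mean(amp)

fs, n = 200.0, int(120 * 200)
rng = np.random.default_rng(0)
phi = beat_template_phase(n, fs)
carrier = np.cos(2 * np.pi * 21.0 * np.arange(n) / fs)
bursty = (1.0 + 0.8 * np.cos(phi)) * carrier + 0.5 * rng.standard_normal(n)   # beta waxes at the beat
steady = carrier + 0.5 * rng.standard_normal(n)                                 # constant beta amplitude
pac_bursty, pac_steady = pac_mvl(phi, bursty, fs), pac_mvl(phi, steady, fs)
```

For the bursty toy signal the envelope is 1 + 0.8 cos φ, whose normalized mean vector length is 0.4 in the noise-free limit; the steady signal gives a value near 0. This is one way to quantify "beta bursting at the delta rate".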

Regions of Interest.

We defined four regions of interest of 100 vertices each using functional localizers in each individual. We defined the lAA and rAA cortices as the left and right hemispheric vertices, respectively, having the largest M100 (∼110 ms) auditory evoked responses to both target and distractor tones in both listen and tracking conditions. The lSM and rSM cortices were defined as the vertices having the largest M50 (∼40 ms) evoked response, corresponding, respectively, to the right-handed motor responses produced at the end of each trial (to report participants’ choices) and to the left-handed finger movements produced in the tracking condition (to track the reference beat). Region-of-interest analyses were carried out by performing principal component analysis across the 100 vertices composing each region of interest so as to reduce their spatial dimensionality.
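The dimensionality-reduction step can be sketched with a singular value decomposition (Python/NumPy): the first principal component summarizes the 100 vertex time series of a region. Keeping only the first component, and fixing its arbitrary sign so that it correlates positively with the mean vertex signal, are assumptions of this sketch; `roi_time_series` is a hypothetical name.

```python
import numpy as np

def roi_time_series(vertex_data):
    """First principal component of an ROI's vertex time series.

    vertex_data: (n_vertices, n_times), e.g. the 100 vertices of one region.
    Returns the component time course, its explained-variance ratio, and vertex loadings.
    """
    centered = vertex_data - vertex_data.mean(axis=1, keepdims=True)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    # PCA signs are arbitrary; choose the sign that correlates positively with the mean vertex signal.
    sign = np.sign(u[:, 0].sum()) or 1.0
    component = sign * s[0] * vt[0]
    explained = s[0] ** 2 / np.sum(s ** 2)
    return component, explained, sign * u[:, 0]

# Toy ROI: one 5-Hz source mixed into 100 vertices with variable weights plus sensor-like noise.
rng = np.random.default_rng(0)
t = np.arange(1000) / 250.0
source = np.sin(2 * np.pi * 5.0 * t)
loadings = rng.normal(1.0, 0.5, size=100)
vertices = np.outer(loadings, source) + 0.1 * rng.standard_normal((100, 1000))
comp, explained, w = roi_time_series(vertices)
```

Unlike a plain average, the component is not weakened by vertices whose source orientation flips the sign of the signal.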

Evoked Responses.

Different categories of evoked responses were computed by averaging MEG signals across trials for each time sample around targets, distractors, or the 1.5-Hz beat onsets. The resulting signal averages were z-scored, using similarly processed resting-state data as a baseline before group-level statistical tests.
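A sketch of the baseline normalization (Python/NumPy). The text does not spell out which statistics of the resting-state data define the baseline, so this assumes resting-state pseudo-epochs are averaged exactly like the task epochs and their mean and SD over time serve as the reference distribution; the function name is hypothetical.

```python
import numpy as np

def zscored_evoked(epochs, rest_epochs):
    """Trial-averaged response z-scored against a resting-state baseline.

    epochs, rest_epochs: (n_trials, n_times). The resting-state pseudo-epochs are averaged
    the same way; their mean and SD over time define the baseline distribution (assumption).
    """
    evoked = epochs.mean(axis=0)
    rest = rest_epochs.mean(axis=0)
    return (evoked - rest.mean()) / rest.std()

rng = np.random.default_rng(0)
n_trials, n_times = 200, 600
bump = np.exp(-0.5 * ((np.arange(n_times) - 300) / 20.0) ** 2)   # toy evoked component at sample 300
epochs = bump + rng.standard_normal((n_trials, n_times))
rest_epochs = rng.standard_normal((n_trials, n_times))            # toy resting-state pseudo-epochs
z = zscored_evoked(epochs, rest_epochs)
```

Averaging both task and resting-state data in the same way means the baseline SD reflects the residual noise that survives trial averaging, which is what the evoked peak must exceed.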

Time-Frequency Responses.

Time-frequency responses were computed by first estimating the amplitude of MEG signals in 30 frequency bins between 1 and 50 Hz, applying the time-frequency wavelet transform described above around target and distractor onsets (from −1 to 1 s). The resulting signals were averaged across trials. Finally, we contrasted targets and distractors in a normalized manner (across frequencies), by subtracting their respective time-frequency responses and dividing the result by their sum.
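The wavelet amplitude and the normalized target-versus-distractor contrast can be sketched as follows (Python with NumPy and SciPy; names hypothetical). The Morlet family follows the parameterization quoted earlier: a temporal FWHM of 3 s at 1 Hz that scales as 1/f, i.e., three cycles at every frequency. The logarithmic spacing of the 30 bins and the amplitude scaling are assumptions.

```python
import numpy as np
from scipy.signal import fftconvolve

def morlet_amplitude(signal, fs, freqs, fwhm_cycles=3.0):
    """Amplitude at each frequency from complex Morlet wavelets.

    The Gaussian's temporal FWHM is fwhm_cycles / f seconds (3 s at 1 Hz, i.e. three cycles),
    and each wavelet is scaled so that a unit-amplitude sinusoid at f yields ~1.
    Returns an array of shape (len(freqs), len(signal)).
    """
    signal = np.asarray(signal, dtype=float)
    out = np.empty((len(freqs), signal.size))
    for i, f in enumerate(freqs):
        sigma_t = (fwhm_cycles / f) / (2.0 * np.sqrt(2.0 * np.log(2.0)))   # FWHM -> SD
        half = int(np.ceil(4.0 * sigma_t * fs))
        t = np.arange(-half, half + 1) / fs                                  # odd length, centered on 0
        gauss = np.exp(-0.5 * (t / sigma_t) ** 2)
        wavelet = np.exp(2j * np.pi * f * t) * gauss * (2.0 / gauss.sum())
        out[i] = np.abs(fftconvolve(signal, wavelet, mode="same"))
    return out

def normalized_contrast(tfr_targets, tfr_distractors):
    """Element-wise (T - D) / (T + D) of trial-averaged amplitudes; bounded in [-1, 1]."""
    return (tfr_targets - tfr_distractors) / (tfr_targets + tfr_distractors)

fs = 500.0
t = np.arange(int(4 * fs)) / fs
freqs = np.geomspace(1.0, 50.0, 30)        # 30 bins from 1 to 50 Hz; log spacing is an assumption
tfr = morlet_amplitude(2.0 * np.sin(2 * np.pi * 20.0 * t), fs, freqs)
amp20 = morlet_amplitude(2.0 * np.sin(2 * np.pi * 20.0 * t), fs, [20.0])[0]
```

Dividing by the sum removes the steep decline of amplitude with frequency, so contrasts at 5 Hz and 40 Hz end up on the same scale.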

Directed-Phase Transfer Entropy.

Directed functional connectivity between regions of interest was estimated using directed-phase transfer entropy (66). Briefly, it is based on the same principle as Wiener–Granger causality, with time series described in terms of their instantaneous phase (see also ref. 67). Signals were band-pass-filtered in specific frequency bands (covering the 1- to 50-Hz range) before computation of directed-phase transfer entropy. We then normalized the results to range between −0.5 and 0.5, with the sign indicating the dominant direction of functional connectivity.
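A compact sketch of directed-phase transfer entropy (Python/NumPy). Phase transfer entropy from x to y is the conditional mutual information between the present phase of y and the past phase of x, given the past phase of y, estimated here from joint phase histograms. The normalization PTE(x→y)/[PTE(x→y) + PTE(y→x)] − 0.5 is one form consistent with the −0.5 to 0.5 range above. The fixed bin count, the analysis delay, the toy phase processes, and the function names are assumptions; the estimator of ref. 66 may derive bins and delay from the data.

```python
import numpy as np

def _entropy(*columns, n_bins):
    """Plug-in Shannon entropy (nats) of jointly binned phase variables."""
    data = np.column_stack(columns)
    counts, _ = np.histogramdd(data, bins=n_bins, range=[(-np.pi, np.pi)] * data.shape[1])
    p = counts[counts > 0] / data.shape[0]
    return -np.sum(p * np.log(p))

def phase_transfer_entropy(phase_src, phase_tgt, delay, n_bins=8):
    """PTE source -> target: I(target_now; source_past | target_past)."""
    tgt_now, tgt_past, src_past = phase_tgt[delay:], phase_tgt[:-delay], phase_src[:-delay]
    return (_entropy(tgt_now, tgt_past, n_bins=n_bins)
            + _entropy(tgt_past, src_past, n_bins=n_bins)
            - _entropy(tgt_past, n_bins=n_bins)
            - _entropy(tgt_now, tgt_past, src_past, n_bins=n_bins))

def directed_pte(phase_x, phase_y, delay, n_bins=8):
    """Normalized dPTE in [-0.5, 0.5]; positive values mean predominant flow from x to y."""
    pte_xy = phase_transfer_entropy(phase_x, phase_y, delay, n_bins)
    pte_yx = phase_transfer_entropy(phase_y, phase_x, delay, n_bins)
    return pte_xy / (pte_xy + pte_yx) - 0.5

# Toy phases: a noisy ~10-Hz phase process x at 250 Hz; y follows x with a 5-sample lag.
rng = np.random.default_rng(0)
n, lag = 20000, 5
unwrapped = np.cumsum(2 * np.pi * 10 / 250 + 0.3 * rng.standard_normal(n))
phase_x = np.angle(np.exp(1j * unwrapped))
phase_y = np.angle(np.exp(1j * (np.roll(unwrapped, lag) + 0.05 * rng.standard_normal(n))))
d_xy = directed_pte(phase_x, phase_y, delay=lag)   # positive: x drives y
```

Swapping the arguments flips the sign exactly, which is what makes the sign interpretable as the dominant direction.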

Statistical Procedures.

All analyses were performed at the single-subject level and followed by standard parametric tests at the group level (e.g., paired t tests, t tests against zero, repeated-measures ANOVAs). To test for the presence of significant 1.5-Hz beat-evoked responses, data were z-scored over time (i.e., normalized around zero) and t tests against zero were then performed. One-tailed t tests were applied when analyses were constrained by an a priori hypothesis; two-tailed t tests were applied otherwise. In particular, main effects were subjected to one-tailed t tests against zero to investigate the specific hypothesis of a stronger impact of acoustic stimuli on task-related than on resting-state recordings. The opposite hypothesis was not investigated, as it was considered unrealistic. FDR corrections for multiple comparisons were applied over the dimensions of interest (i.e., time, vertices, frequencies), using the Benjamini–Hochberg step-up procedure. For whole-brain analyses, only clusters >60 vertices in size were reported. For time-frequency analyses, only clusters >400 data points were reported.
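The Benjamini–Hochberg step-up procedure is short enough to sketch directly (Python/NumPy; the function name and the q = 0.05 default are assumptions). The p values are sorted, the largest rank i with p₍ᵢ₎ ≤ i·q/m is found, and every hypothesis up to that rank is rejected, including ones whose own p value exceeded their individual threshold. The cluster-size criteria are not part of this sketch.

```python
import numpy as np

def fdr_bh(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure.

    Returns a boolean mask (same shape as p_values) of tests declared significant at FDR q,
    so it can be applied to time x vertex or time x frequency maps directly.
    """
    p = np.asarray(p_values, dtype=float)
    flat = p.ravel()
    m = flat.size
    order = np.argsort(flat)
    below = flat[order] <= q * np.arange(1, m + 1) / m
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()      # largest rank i with p_(i) <= i * q / m
        reject[order[:k + 1]] = True        # step-up: reject every hypothesis up to that rank
    return reject.reshape(p.shape)

example = fdr_bh([0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216])
# Only the first two survive: 0.039 exceeds its rank-3 threshold of 0.015.
```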

Data and Analysis Pipeline Availability.

The data reported in this paper, together with analysis pipelines, can be accessed upon request via dx.doi.org/10.23686/0088165.

Acknowledgments

We thank Philippe Albouy, Beth Bock, François Tadel, and Peter Donhauser for providing comments and suggestions at multiple stages of the study. B.M. was supported by a fellowship from the Montreal Neurological Institute. S.B. was supported by a Senior Researcher Grant from the Fonds de Recherche du Quebec-Santé (Grant 27605), a Discovery Grant from the Natural Sciences and Engineering Research Council of Canada (Grant 436355-13), the NIH (Grant 2R01EB009048-05), and a Platform Support Grant from the Brain Canada Foundation (Grant PSG15-3755).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1705373114/-/DCSupplemental.

References

- 1.Schroeder CE, Wilson DA, Radman T, Scharfman H, Lakatos P. Dynamics of active sensing and perceptual selection. Curr Opin Neurobiol. 2010;20:172–176. doi: 10.1016/j.conb.2010.02.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Kleinfeld D, Ahissar E, Diamond ME. Active sensation: Insights from the rodent vibrissa sensorimotor system. Curr Opin Neurobiol. 2006;16:435–444. doi: 10.1016/j.conb.2006.06.009. [DOI] [PubMed] [Google Scholar]

- 3.Wachowiak M. All in a sniff: Olfaction as a model for active sensing. Neuron. 2011;71:962–973. doi: 10.1016/j.neuron.2011.08.030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Hatsopoulos NG, Suminski AJ. Sensing with the motor cortex. Neuron. 2011;72:477–487. doi: 10.1016/j.neuron.2011.10.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Crapse TB, Sommer MA. Corollary discharge across the animal kingdom. Nat Rev Neurosci. 2008;9:587–600. doi: 10.1038/nrn2457. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Schneider DM, Nelson A, Mooney R. A synaptic and circuit basis for corollary discharge in the auditory cortex. Nature. 2014;513:189–194. doi: 10.1038/nature13724. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Flinker A, et al. Single-trial speech suppression of auditory cortex activity in humans. J Neurosci. 2010;30:16643–16650. doi: 10.1523/JNEUROSCI.1809-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Scott SK, McGettigan C, Eisner F. A little more conversation, a little less action–Candidate roles for the motor cortex in speech perception. Nat Rev Neurosci. 2009;10:295–302. doi: 10.1038/nrn2603. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Zatorre RJ, Chen JL, Penhune VB. When the brain plays music: Auditory-motor interactions in music perception and production. Nat Rev Neurosci. 2007;8:547–558. doi: 10.1038/nrn2152. [DOI] [PubMed] [Google Scholar]

- 10.Repp BH, Su Y-H. Sensorimotor synchronization: A review of recent research (2006-2012) Psychon Bull Rev. 2013;20:403–452. doi: 10.3758/s13423-012-0371-2. [DOI] [PubMed] [Google Scholar]

- 11.Patel AD, Iversen JR. The evolutionary neuroscience of musical beat perception: The action simulation for auditory prediction (ASAP) hypothesis. Front Syst Neurosci. 2014;8:57. doi: 10.3389/fnsys.2014.00057. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Morillon B, Hackett TA, Kajikawa Y, Schroeder CE. Predictive motor control of sensory dynamics in auditory active sensing. Curr Opin Neurobiol. 2015;31:230–238. doi: 10.1016/j.conb.2014.12.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Merchant H, Yarrow K. How the motor system both encodes and influences our sense of time. Curr Opin Behav Sci. 2016;8:22–27. [Google Scholar]

- 14.Coull JT. Brain Mapping. Elsevier; London: 2015. A frontostriatal circuit for timing the duration of events; pp. 565–570. [Google Scholar]

- 15.Chen JL, Penhune VB, Zatorre RJ. Listening to musical rhythms recruits motor regions of the brain. Cereb Cortex. 2008;18:2844–2854. doi: 10.1093/cercor/bhn042. [DOI] [PubMed] [Google Scholar]

- 16.Teki S, Grube M, Kumar S, Griffiths TD. Distinct neural substrates of duration-based and beat-based auditory timing. J Neurosci. 2011;31:3805–3812. doi: 10.1523/JNEUROSCI.5561-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Merchant H, Grahn J, Trainor L, Rohrmeier M, Fitch WT. Finding the beat: A neural perspective across humans and non-human primates. Philos Trans R Soc Lond B Biol Sci. 2015;370:20140093. doi: 10.1098/rstb.2014.0093. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Grahn JA, Rowe JB. Finding and feeling the musical beat: Striatal dissociations between detection and prediction of regularity. Cereb Cortex. 2013;23:913–921. doi: 10.1093/cercor/bhs083. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Murthy VN, Fetz EE. Coherent 25- to 35-Hz oscillations in the sensorimotor cortex of awake behaving monkeys. Proc Natl Acad Sci USA. 1992;89:5670–5674. doi: 10.1073/pnas.89.12.5670. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Niso G, et al. OMEGA: The open MEG archive. Neuroimage. 2016;124:1182–1187. doi: 10.1016/j.neuroimage.2015.04.028. [DOI] [PubMed] [Google Scholar]

- 21.Arnal LH, Doelling KB, Poeppel D. Delta-beta coupled oscillations underlie temporal prediction accuracy. Cereb Cortex. 2014;25:3077–3085. doi: 10.1093/cercor/bhu103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Fujioka T, Trainor LJ, Large EW, Ross B. Internalized timing of isochronous sounds is represented in neuromagnetic β oscillations. J Neurosci. 2012;32:1791–1802. doi: 10.1523/JNEUROSCI.4107-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Fujioka T, Ross B, Trainor LJ. Beta-band oscillations represent auditory beat and its metrical hierarchy in perception and imagery. J Neurosci. 2015;35:15187–15198. doi: 10.1523/JNEUROSCI.2397-15.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Kulashekhar S, Pekkola J, Palva JM, Palva S. The role of cortical beta oscillations in time estimation. Hum Brain Mapp. 2016;37:3262–3281. doi: 10.1002/hbm.23239. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Iversen JR, Repp BH, Patel AD. Top-down control of rhythm perception modulates early auditory responses. Ann N Y Acad Sci. 2009;1169:58–73. doi: 10.1111/j.1749-6632.2009.04579.x. [DOI] [PubMed] [Google Scholar]

- 26.Saleh M, Reimer J, Penn R, Ojakangas CL, Hatsopoulos NG. Fast and slow oscillations in human primary motor cortex predict oncoming behaviorally relevant cues. Neuron. 2010;65:461–471. doi: 10.1016/j.neuron.2010.02.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Coull JT. Space, Time and Number in the Brain. Elsevier; London: 2011. Discrete neuroanatomical substrates for generating and updating temporal expectations; pp. 87–101. [Google Scholar]

- 28.Arnal LH. Predicting “when” using the motor system’s beta-band oscillations. Front Hum Neurosci. 2012;6:225. doi: 10.3389/fnhum.2012.00225. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Schubotz RI. Prediction of external events with our motor system: Towards a new framework. Trends Cogn Sci. 2007;11:211–218. doi: 10.1016/j.tics.2007.02.006. [DOI] [PubMed] [Google Scholar]

- 30.Morillon B, Schroeder CE, Wyart V. Motor contributions to the temporal precision of auditory attention. Nat Commun. 2014;5:5255. doi: 10.1038/ncomms6255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:201–215. doi: 10.1038/nrn755. [DOI] [PubMed] [Google Scholar]

- 32.Besle J, et al. Tuning of the human neocortex to the temporal dynamics of attended events. J Neurosci. 2011;31:3176–3185. doi: 10.1523/JNEUROSCI.4518-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Arnal LH, Giraud AL. Cortical oscillations and sensory predictions. Trends Cogn Sci. 2012;16:390–398. doi: 10.1016/j.tics.2012.05.003. [DOI] [PubMed] [Google Scholar]

- 34.Breska A, Deouell LY. Neural mechanisms of rhythm-based temporal prediction: Delta phase-locking reflects temporal predictability but not rhythmic entrainment. PLoS Biol. 2017;15:e2001665. doi: 10.1371/journal.pbio.2001665. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Park H, Kayser C, Thut G, Gross J. Lip movements entrain the observers’ low-frequency brain oscillations to facilitate speech intelligibility. Elife. 2016;5:e14521. doi: 10.7554/eLife.14521. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Park H, Ince RAA, Schyns PG, Thut G, Gross J. Frontal top-down signals increase coupling of auditory low-frequency oscillations to continuous speech in human listeners. Curr Biol. 2015;25:1649–1653. doi: 10.1016/j.cub.2015.04.049. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Coull JT, Davranche K, Nazarian B, Vidal F. Functional anatomy of timing differs for production versus prediction of time intervals. Neuropsychologia. 2013;51:309–319. doi: 10.1016/j.neuropsychologia.2012.08.017. [DOI] [PubMed] [Google Scholar]

- 38.Grahn JA, Rowe JB. Feeling the beat: Premotor and striatal interactions in musicians and nonmusicians during beat perception. J Neurosci. 2009;29:7540–7548. doi: 10.1523/JNEUROSCI.2018-08.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Gouvêa TS, et al. Striatal dynamics explain duration judgments. Elife. 2015;4:e11386. doi: 10.7554/eLife.11386. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Buhusi CV, Meck WH. What makes us tick? Functional and neural mechanisms of interval timing. Nat Rev Neurosci. 2005;6:755–765. doi: 10.1038/nrn1764. [DOI] [PubMed] [Google Scholar]

- 41.Nozaradan S, Schwartze M, Obermeier C, Kotz SA. Specific contributions of basal ganglia and cerebellum to the neural tracking of rhythm. Cortex. August 19, 2017 doi: 10.1016/j.cortex.2017.08.015. [DOI] [PubMed] [Google Scholar]

- 42.Schwartze M, Kotz SA. A dual-pathway neural architecture for specific temporal prediction. Neurosci Biobehav Rev. 2013;37:2587–2596. doi: 10.1016/j.neubiorev.2013.08.005. [DOI] [PubMed] [Google Scholar]

- 43.Coull JT, Nobre AC. Where and when to pay attention: The neural systems for directing attention to spatial locations and to time intervals as revealed by both PET and fMRI. J Neurosci. 1998;18:7426–7435. doi: 10.1523/JNEUROSCI.18-18-07426.1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Bolger D, Coull JT, Schön D. Metrical rhythm implicitly orients attention in time as indexed by improved target detection and left inferior parietal activation. J Cogn Neurosci. 2014;26:593–605. doi: 10.1162/jocn_a_00511. [DOI] [PubMed] [Google Scholar]

- 45.Wiener M, Turkeltaub PE, Coslett HB. Implicit timing activates the left inferior parietal cortex. Neuropsychologia. 2010;48:3967–3971. doi: 10.1016/j.neuropsychologia.2010.09.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Coull JT. Brain Mapping. Elsevier; London: 2015. Directing attention in time as a function of temporal expectation; pp. 687–693. [Google Scholar]

- 47.Fontolan L, Morillon B, Liegeois-Chauvel C, Giraud A-L. The contribution of frequency-specific activity to hierarchical information processing in the human auditory cortex. Nat Commun. 2014;5:4694. doi: 10.1038/ncomms5694.

- 48.Bastos AM, et al. Visual areas exert feedforward and feedback influences through distinct frequency channels. Neuron. 2015;85:390–401. doi: 10.1016/j.neuron.2014.12.018.

- 49.van Kerkoerle T, et al. Alpha and gamma oscillations characterize feedback and feedforward processing in monkey visual cortex. Proc Natl Acad Sci USA. 2014;111:14332–14341. doi: 10.1073/pnas.1402773111.

- 50.Buschman TJ, Miller EK. Top-down versus bottom-up control of attention in the prefrontal and posterior parietal cortices. Science. 2007;315:1860–1862. doi: 10.1126/science.1138071.

- 51.Spitzer B, Haegens S. Beyond the status quo: A role for beta oscillations in endogenous content (re)activation. eNeuro. 2017;4:0170-17. doi: 10.1523/ENEURO.0170-17.2017.

- 52.Schön D, Tillmann B. Short- and long-term rhythmic interventions: Perspectives for language rehabilitation. Ann N Y Acad Sci. 2015;1337:32–39. doi: 10.1111/nyas.12635.

- 53.Kotz SA, Schwartze M, Schmidt-Kassow M. Non-motor basal ganglia functions: A review and proposal for a model of sensory predictability in auditory language perception. Cortex. 2009;45:982–990. doi: 10.1016/j.cortex.2009.02.010.

- 54.Goswami U. A temporal sampling framework for developmental dyslexia. Trends Cogn Sci. 2011;15:3–10. doi: 10.1016/j.tics.2010.10.001.

- 55.Zatorre RJ, Evans AC, Meyer E. Neural mechanisms underlying melodic perception and memory for pitch. J Neurosci. 1994;14:1908–1919. doi: 10.1523/JNEUROSCI.14-04-01908.1994.

- 56.Peretz I, Zatorre RJ. Brain organization for music processing. Annu Rev Psychol. 2005;56:89–114. doi: 10.1146/annurev.psych.56.091103.070225.

- 57.Timm J, Schönwiesner M, Schröger E, SanMiguel I. Sensory suppression of brain responses to self-generated sounds is observed with and without the perception of agency. Cortex. 2016;80:5–20. doi: 10.1016/j.cortex.2016.03.018.

- 58.Reznik D, Ossmy O, Mukamel R. Enhanced auditory evoked activity to self-generated sounds is mediated by primary and supplementary motor cortices. J Neurosci. 2015;35:2173–2180. doi: 10.1523/JNEUROSCI.3723-14.2015.

- 59.Kleiner M, et al. What’s new in Psychtoolbox-3? Perception. 2007;36:1–16.

- 60.Wyart V, de Gardelle V, Scholl J, Summerfield C. Rhythmic fluctuations in evidence accumulation during decision making in the human brain. Neuron. 2012;76:847–858. doi: 10.1016/j.neuron.2012.09.015.

- 61.Tadel F, Baillet S, Mosher JC, Pantazis D, Leahy RM. Brainstorm: A user-friendly application for MEG/EEG analysis. Comput Intell Neurosci. 2011;2011:879716. doi: 10.1155/2011/879716.

- 62.Gross J, et al. Good practice for conducting and reporting MEG research. Neuroimage. 2013;65:349–363. doi: 10.1016/j.neuroimage.2012.10.001.

- 63.Peelle JE, Gross J, Davis MH. Phase-locked responses to speech in human auditory cortex are enhanced during comprehension. Cereb Cortex. 2013;23:1378–1387. doi: 10.1093/cercor/bhs118.

- 64.Lachaux JP, Rodriguez E, Martinerie J, Varela FJ. Measuring phase synchrony in brain signals. Hum Brain Mapp. 1999;8:194–208. doi: 10.1002/(SICI)1097-0193(1999)8:4<194::AID-HBM4>3.0.CO;2-C.

- 65.Özkurt TE, Schnitzler A. A critical note on the definition of phase-amplitude cross-frequency coupling. J Neurosci Methods. 2011;201:438–443. doi: 10.1016/j.jneumeth.2011.08.014.

- 66.Lobier M, Siebenhühner F, Palva S, Palva JM. Phase transfer entropy: A novel phase-based measure for directed connectivity in networks coupled by oscillatory interactions. Neuroimage. 2014;85:853–872. doi: 10.1016/j.neuroimage.2013.08.056.

- 67.Hillebrand A, et al. Direction of information flow in large-scale resting-state networks is frequency-dependent. Proc Natl Acad Sci USA. 2016;113:3867–3872. doi: 10.1073/pnas.1515657113.