Abstract

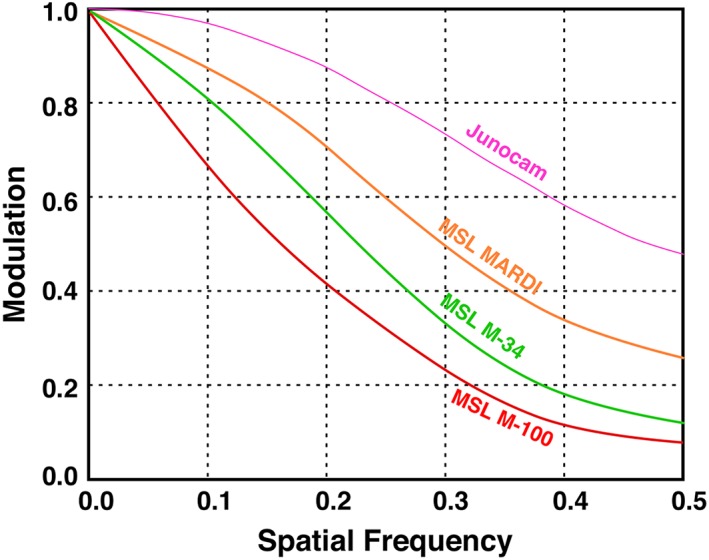

The Mars Science Laboratory Mast camera and Descent Imager investigations were designed, built, and operated by Malin Space Science Systems of San Diego, CA. They share common electronics and focal plane designs but have different optics. There are two Mastcams of dissimilar focal length. The Mastcam‐34 has an f/8, 34 mm focal length lens, and the M‐100 an f/10, 100 mm focal length lens. The M‐34 field of view is about 20° × 15° with an instantaneous field of view (IFOV) of 218 μrad; the M‐100 field of view (FOV) is 6.8° × 5.1° with an IFOV of 74 μrad. The M‐34 can focus from 0.5 m to infinity, and the M‐100 from ~1.6 m to infinity. All three cameras can acquire color images through a Bayer color filter array, and the Mastcams can also acquire images through seven science filters. Images are ≤1600 pixels wide by 1200 pixels tall. The Mastcams, mounted on the ~2 m tall Remote Sensing Mast, have a 360° azimuth and ~180° elevation field of regard. Mars Descent Imager is fixed‐mounted to the bottom left front side of the rover at ~66 cm above the surface. Its fixed focus lens is in focus from ~2 m to infinity, but out of focus at 66 cm. The f/3 lens has a FOV of ~70° by 52° across and along the direction of motion, with an IFOV of 0.76 mrad. All cameras can acquire video at 4 frames/second for full frames or 720p HD at 6 fps. Images can be processed using lossy Joint Photographic Experts Group and predictive lossless compression.

Keywords: Mars, cameras, Mastcam, MARDI, Curiosity, MSL

Key Points

The Mars Descent Imager, an f/3, 9.7 mm, 2 Mpixel color camera, operated autonomously during landing, taking a descent video at 4 frames/second

Mastcam‐34, an f/8, 34 mm camera, takes ≤1600 × 1200 pixel images in broad and narrowband color over a field 20° × 15° at a scale of 218 μrad/pixel

Mastcam‐100, an f/10, 100 mm camera, takes ≤1600 × 1200 pixel images in broad and narrowband color over a field 6.8° × 5.1° at a scale of 74 μrad/pixel

1. Introduction

1.1. Scope

The Mars Science Laboratory (MSL) Curiosity rover landed on Aeolis Palus in northern Gale crater (latitude 4.5895°S, longitude 137.4417°E) on 6 August 2012 [Vasavada et al., 2014]. The mission's main objective is to assess the past and present habitability of Mars, with particular emphasis on exploring environmental records preserved in strata exposed by erosion in western Aeolis Palus and northwestern Aeolis Mons, also known as Mount Sharp [Grotzinger et al., 2012; Vasavada, 2016].

Curiosity has 17 cameras. These include four navigation and eight hazard cameras [Maki et al., 2012] arranged as redundant pairs, the Mars Hand Lens Imager (MAHLI) [Edgett et al., 2012], the ChemCam Remote Microscopic Imager [Le Mouélic et al., 2015], two Mast Cameras (Mastcams), and the Mars Descent Imager (MARDI).

This paper describes MSL Mastcam and MARDI (Figure 1) investigation objectives, instrumentation, and MARDI calibration. Not covered here are the following:

Mastcam calibration, presented by Bell et al. [2017].

Mastcam, MAHLI, and MARDI data and data products; these are archived with the National Aeronautics and Space Administration (NASA) Planetary Data System (PDS), and Malin et al. [2015], Edgett et al. [2015], and Bell et al. [2017] described these products.

Science results from the MARDI Entry, Descent, and Landing (EDL) and surface observations, and the science results from the use of the Mastcam throughout the surface mission.

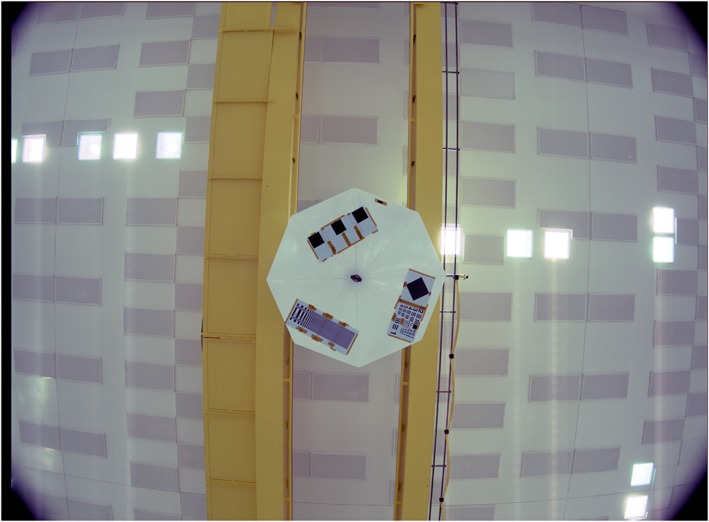

Figure 1.

Curiosity rover in cleanroom facilities at JPL‐Caltech on 4 April 2011 with the locations of the Mastcam and MARDI camera heads and Mastcam calibration target indicated. The digital electronics assembly (DEA) hardware for these cameras is housed inside the rover body in a thermally controlled environment. In this view, support equipment is holding the rover slightly off the floor. When the wheels are on the ground, the top of Curiosity's mast is about 2.2 m above ground level. This is a portion of NASA/JPL‐Caltech image PIA13980.

1.2. Background

Grotzinger et al. [2012] described the MSL mission, hardware, instrumentation, and science investigation. The Curiosity rover is a mobile laboratory that, as of sol 1550 (14 December 2016), had driven 15.01 km (onboard odometry) from its landing site. The rover has tools to extract particulate samples by drilling into rocks and by scooping regolith and eolian fines [Anderson et al., 2012]. Dust can be brushed from rock surfaces using the rover's Dust Removal Tool [Davis et al., 2012]. Images of surface disturbances created by rover wheels also contribute to science [Haggart and Waydo, 2008; Sullivan et al., 2011; Arvidson et al., 2014]: wheels can make trenches to probe eolian deposits, rocks can be accidentally or purposefully broken or scraped, and wheels leave tracks that provide information about regolith properties and eolian transport.

Many of the results of the first 2.3 Mars years of the MSL mission centered on science for which images acquired by 11 of the rover's cameras are central. These include identification of rock types, compositions, facies, and stratigraphic relations; rock diagenetic textures, structures, and metasomatic features; the geomorphic configuration of the landscape as it relates to rock properties, erosion history, and rover traverse planning; studies of regolith and present‐day windblown sediments; and investigations of the Martian atmosphere and meteorology.

Images from Curiosity's cameras and instruments on NASA's orbiting assets have also been vital for enabling the overall scientific success of the MSL mission, including mosaics used to plan where to drive the rover, where to stop and observe geologic materials more closely in the rover's robotic arm workspace, and where to extract samples using the rover's drill or scoop. Images are also used to monitor the condition of rover and instrument hardware, such as wheels, drill bits, instruments on the robotic arm, and sample inlet ports.

2. Science and Science‐Enabling Objectives

Investigation objectives are important, as they, in part, provide science guidance to the engineering effort of designing, building, and calibrating the investigation instrumentation. The “flow‐down” from objectives to functional requirements, from functional requirements to design requirements, and eventually to demonstrated capabilities is, perhaps, the single most important aspect of the investigation objectives.

The Mastcam and MARDI investigations were proposed separately to NASA in July 2004 in response to an April 2004 Announcement of Opportunity that solicited science investigations for the MSL mission. NASA selected Mastcam and MARDI, together with MAHLI, in December 2004. These three camera‐based investigations would share common instrument design elements and labor (staffing).

2.1. Mastcam

The primary objective of the Mastcam investigation is to acquire and use images of the environment in which the MSL rover is operational to characterize and determine details of the history and processes recorded in geologic material at the MSL field site, particularly as these details pertain to the past and present habitability of Mars. More specifically, the Mastcam investigation was designed to address the following objectives:

(1) Observe landscape physiography and processes. Provide data that address the topography, geomorphology, geologic setting, and the nature of past and present geologic processes at the MSL field site.

(2) Examine the properties of rocks. Observe rocks (outcrops and clasts ≥4 mm) and the results of interaction of rover hardware with rocks (e.g., drilling, brushing, and wheel breakage) to help determine morphology, texture, structure, mineralogy, stratigraphy, rock type, history/sequence, and depositional, diagenetic, and weathering processes for these materials.

(3) Study the properties of fines. Examine Martian fines (clasts <4 mm) to determine the processes that acted on these materials and individual grains within them, including physical and mechanical properties, the results of interaction of rover hardware with fines (e.g., scooping, wheel imprints, and trenches), plus stratigraphy, texture, mineralogy, and depositional processes.

(4) Document atmospheric and meteorological events and processes. Observe clouds, dust‐raising events (dust devils, plumes, and storms), properties of suspended aerosols (dust and ice crystals), and eolian transport and deposition of fines. Observations of the Sun through the two solar filters at different wavelengths give the opacity of the atmospheric column at two wavelengths, a function of scattering particle size. Similar observations of other parts of the sky using the normal color filters also provide information on dust particle size and composition. Observations of the distant rim of the crater and the variation of opacity as a function of height of the measurement yield a profile of opacity (dust loading) within the lower portion of the atmospheric boundary layer.

(5) Support and facilitate rover operations, sample extraction and documentation, contact instrument science, and other MSL science. To help enable overall MSL scientific success, acquire images that help determine the location of the Sun and horizon features; provide information pertinent to rover traversability; provide data that help the MSL science team identify materials to be collected for, and characterize samples before delivery to, the MSL analytical instruments; help the team identify and document materials to be examined by the robotic arm‐deployed contact science instruments; and acquire images that support other MSL instruments that may need them.

Finally, the Mastcam investigation has a sixth objective that was formulated in the July 2004 proposal to NASA, a time when the MSL landing site was unknown and could have placed the rover at a latitude as high as 60° north or south of the equator:

(6) View frost, ice, and related landforms and processes. Characterize frost or ice, if present, to determine texture, morphology, thickness, stratigraphic position, and relation to regolith and, if possible, observe changes over time and also examine ice‐related (e.g., periglacial) geomorphic features.

Subsequent to the July 2004 proposal, frost was actually observed on the Mars Exploration Rover (MER), Opportunity, at an equatorial latitude [Landis and MER Athena Science Team, 2007] and has even been observed in images acquired from orbit at a latitude as low as 24°S in terrain north of Hellas in the middle of the afternoon [Schorghofer and Edgett, 2006].

2.2. MARDI

Malin et al. [2001] described the scientific rationale for acquisition of images during a descent to the Martian surface in the context of the Mars Polar Lander (MPL) Mars Descent Imager (MARDI) investigation. Given the importance of geologic context, Malin et al. [2001] said, “among the most important questions to be asked about a spacecraft sitting on a planetary surface is ‘Where is it?’”

At the time the MSL MARDI investigation was proposed, the launch of NASA's newest high‐resolution orbiter camera, the Mars Reconnaissance Orbiter (MRO) High Resolution Imaging Experiment (HiRISE), was still more than a year away and many critical events (launch, cruise, orbit insertion, and aerobraking) had to occur before the start of the November 2006 to December 2008 primary mission. Further, the Mars Global Surveyor Mars Orbiter Camera (MOC), which had just demonstrated its capability to image MER Opportunity and Spirit rover hardware on the Martian surface in January and February of that year [Malin et al., 2010], was in its second extended mission. Would MOC (capable of ~1.4 m/pixel imaging), HiRISE (capable of ~25 cm/pixel imaging), or a similar high‐resolution camera be available when MSL was scheduled to land in 2010? The MSL MARDI investigation was proposed, in part, to guard against the possibility of a negative answer.

Thus, the primary objective for the MSL MARDI investigation was similar to that of the MPL MARDI—to obtain visual, spatial information about the geology and geomorphology of the MSL landing site so as to locate where the spacecraft landed and, in this case, to also assist with initial rover traverse planning. More specifically, the investigation was designed to address the following objectives:

(1) Determine landing site location and geologic context. Investigation of rocks for records of habitable environments requires an understanding of the geologic context and stratigraphic position of the rocks investigated. These determinations are aided by knowledge of spatial relationships between materials of differing texture, structure, color, and tone. Determine the geographic and physiographic location and geologic context of the MSL rover, quickly (nominally, within 1 Mars day, a sol), after it has landed.

(2) Assist initial traverse planning. Use the color image‐based knowledge of rover location, geologic context, and mobility system (wheels) hazards to plan the initial traverse for the MSL surface mission.

(3) Connect geologic observations from images acquired by cameras aboard orbiting and landed platforms. Bridge the spatial resolution gap, providing continuity of geologic and geomorphic knowledge between images acquired by orbiting and landed cameras to improve science understanding of features barely resolved or invisible in the lower‐resolution orbiter images.

(4) Observe dynamic events during descent. Deduce the wind profile encountered during the portion of the descent in which images are acquired, observe the descent and impact of the MSL heat shield, and document the interaction of the MSL terminal descent engines with the landing site surface.

(5) Acquire data for descent imaging technology demonstration. Acquire data to be used to evaluate future landing systems that might incorporate active hazard avoidance capabilities.

Because the investigation is centered on observations acquired during the descent of the MSL rover to the Martian surface, the MARDI design was not required to be operational and acquire in‐focus images after the landing sol (sol 0). However, after more than 2.3 Mars years of surface operations, the camera has remained functional and, thus, a sixth objective applies:

(6) Observe geologic features within the MARDI field of view along the rover traverse. For however long the MARDI camera continues to be operational, acquire occasional images of geologic materials beneath the rover, within the camera field of view. MARDI has the potential to make stratigraphic observations over the course of a traverse upslope or downslope on a rock outcrop of varied lithology, observe eolian sediments and forms created by wind erosion of rock surfaces, and document regolith properties. As a portion of the left (port) front wheel is visible in landed MARDI images, images can also observe wheel interaction with surfaces during trenching activities.

3. Roles and Responsibilities

3.1. Instrument Development

The Mastcam, MAHLI, and MARDI science teams and Malin Space Science Systems (MSSS) developed these instruments under contract to NASA's Jet Propulsion Laboratory (JPL), a division of the California Institute of Technology. JPL manages the MSL Project for NASA's Science Mission Directorate. At the suggestion of the NASA Selecting Authority, and for the ease of contracting, JPL combined the three science teams and development efforts into a single contract. JPL built the Curiosity rover and its cruise and descent stage delivery elements. MSSS developed, integrated, and tested the Mastcam and MARDI hardware and flight software. MSSS's major subcontracted partner for development of the Mastcam lens assembly mechanisms was Alliance Spacesystems, Inc. (ASI), of Pasadena, California. ASI is now MDA U.S. Systems, LLC, a division of MacDonald, Dettwiler, and Associates Ltd.

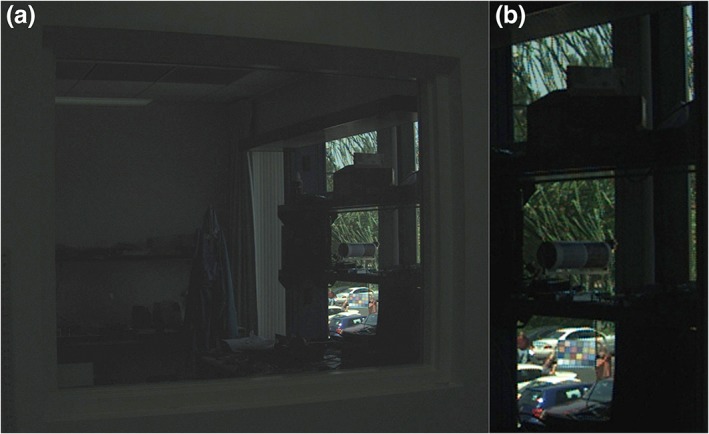

3.2. Assembly, Test, and Launch Operations

The MARDI camera head was delivered to JPL Assembly, Test, and Launch Operations (ATLO) facilities in July 2008 in order to be operated during all Entry, Descent, and Landing (EDL) tests that began in October 2008. The Mastcam hardware was delivered to JPL in March 2010. Working in partnership with MSSS engineers, ATLO tests involving use of the Mastcams and MARDI aboard Curiosity were conducted thereafter in JPL facilities and, starting in July 2011, at NASA's Kennedy Space Center (KSC) in Florida. Major tests involving the cameras included integrated camera geometric calibration in December 2010 [Maki et al., 2012; Bell et al., 2017] and rover system thermal/vacuum testing in a large environment chamber at JPL in March 2011 [Novak et al., 2012]. Final inspection and cleaning of the camera head optics by the MSSS team occurred in August 2011 at KSC.

3.3. Instrument Operations, Data Validation, and Data Archiving

The Mastcams and MARDI are operated in parallel with the MAHLI by a full‐time professional operations staff at MSSS in San Diego, California. Mastcam, MAHLI, and MARDI science team members, working part‐time at venues across the U.S., literally from Maine to Hawaii, assist with camera operations. The operations are conducted in partnership and close consultation with the full MSL science team and rover operations engineers at JPL. Together, these personnel decide which images to obtain and when to acquire them, all on a tactical (daily) basis. Their decision‐making process is based on considerations of scientific and engineering objectives relative to resources available (e.g., power, schedule, onboard data storage, and downlink data volume) on a given Martian day (sol). Section 8 describes operations in somewhat more detail.

MSSS personnel also provide Mastcam and MARDI data and instrument status information to the MSL Project and science team on a tactical (daily) basis. Further, the operations personnel monitor the amount of data stored on board each instrument and work with the Principal Investigator and the MSL science team to make decisions on when to delete data stored therein (deletion requires Principal Investigator authorization).

Working with the Principal Investigator, MSSS personnel also ensure data validation and archiving with the NASA Planetary Data System (PDS). The validated data are placed in the NASA PDS archives on a regular basis per a schedule developed by MSL Project management. As of 05 December 2016, all validated Mastcam and MARDI data and data products received on Earth through sol 1417 (01 August 2016) were available in the archive.

4. Instrument Required Capabilities and Their Motivation

4.1. Mastcam

As proposed in July 2004, the Mastcam camera pair was envisioned to provide the primary geology and geomorphology imaging data set for the MSL mission. The required capabilities built upon operational and science lessons learned from previous landed Mars missions: Viking, Mars Pathfinder, and the Mars Exploration Rovers (MER) Spirit and Opportunity [Huck et al., 1975; Smith et al., 1997; Bell et al., 2003]. Of particular importance were the following requirements: spatial resolution and focus; true color, narrowband multispectral color, and stereo imaging and mosaics; data storage and downlink flexibility; and high‐definition video. Mosaics include any option from two overlapping images of adjacent targets to full 360° landscape panoramas. Camera pointing would be provided by the rover's Remote Sensing Mast (RSM) azimuth and elevation pointing mechanisms. In the following paragraphs, the phrase “The cameras shall” is omitted from the beginning of each subsection.

4.1.1. Spatial Resolution and Focus

Observe, in focus, geologic materials at ≤10 cm per pixel at 1 km distance and 150 μm per pixel at 2 m distance. The requirement at 1 km distance was motivated by geology, in particular to observe stratigraphic relations and rock outcrop erosional expressions at a spatial resolution higher than available from, and a viewing geometry different from, planned high‐resolution orbiter cameras (e.g., HiRISE); such images would also both assist in rover traverse planning (deciding where to go and investigate) and provide images of geology the rover would otherwise never visit (e.g., in a direction that differs from the planned traverse or on a slope too steep or high for driving).

The requirement at 2 m distance was motivated by the need to investigate and plan activities for the robotic arm workspace in front of the rover, roughly 2 m from the cameras mounted on the RSM; the 150 μm per pixel requirement permits detection of objects as small as ~500–600 μm (coarse sand) and covers the spatial resolution gap between Navigation Camera [Maki et al., 2012] and MAHLI [Edgett et al., 2012] capabilities in the workspace. Ultimately, the Mastcam spatial requirements drive a need for focusable cameras with a requirement to be in focus on objects in the robotic arm workspace, elsewhere on and near the rover, and out to infinity. Indeed, infinity focus facilitates viewing of geologic and geomorphic features beyond the 1 km, ≤10 cm per pixel range as well as viewing astronomical objects.
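The two spatial requirements can be checked against the IFOV values quoted in the abstract with a simple small-angle estimate. The sketch below is illustrative only: the function and variable names are assumptions, and it treats the per-pixel footprint as IFOV multiplied by range, ignoring focus, optical blur, and viewing geometry.

```python
# Small-angle estimate: footprint of one pixel = IFOV (rad) * range (m).
# IFOV values are those quoted in the abstract; all names here are illustrative.
IFOV_RAD = {"M-34": 218e-6, "M-100": 74e-6}


def pixel_scale_m(ifov_rad: float, range_m: float) -> float:
    """Return the approximate footprint of one pixel (metres) at a given range."""
    return ifov_rad * range_m


for name, ifov in IFOV_RAD.items():
    far = pixel_scale_m(ifov, 1000.0)  # 1 km requirement distance
    near = pixel_scale_m(ifov, 2.0)    # 2 m robotic arm workspace distance
    print(f"{name}: {far * 100:.1f} cm/pixel at 1 km, {near * 1e6:.0f} um/pixel at 2 m")
```

Under this estimate, the M‐100 gives about 7.4 cm per pixel at 1 km and about 148 μm per pixel at 2 m, consistent with the stated requirements, whereas the M‐34 gives about 21.8 cm and 436 μm per pixel, respectively.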

4.1.2. True Color Images and Mosaics

Obtain red, green, and blue (RGB) “true color” similar to that seen by the typical human eye in single‐frame images. The single‐image color capability minimizes image and mosaic acquisition time (and changes in illumination conditions over time) relative to cameras previously landed on Mars that employed separate red, green, and blue narrowband filters and acquired at least three (RGB) filtered images for each color scene. This requirement permits the color of geological materials to be directly relatable to human field and laboratory experience on Earth and was also designed to provide the public with a sense of what their eyes or their personal digital cameras might “see” if they were transported to Mars.

The main reason one might perform a color or white balance adjustment to an image is to accommodate illumination source “temperatures” (i.e., the light source hue as compared with the temperature of a blackbody radiator matching that hue). Consumer digital cameras, for example, commonly have built‐in capabilities that allow the user to adjust for differences in illumination conditions, such as a bright, sunny day, an overcast day, or indoor illumination by different types of artificial lamps. The solar illumination reaching the Martian surface, through its atmosphere and reflecting off its surface, and with a given opacity at a given moment in time, would differ from the terrestrial experience. One motivation for performing a color adjustment on Mastcam and MARDI images, then, is to ensure that the geologic features observed appear as they would to a human eye working with the materials if they were in a field setting on Earth.

4.1.3. Multispectral Images and Mosaics

Perform the same 13 narrowband filtering for color and near‐infrared spectroscopy (400–1100 nm) and solar observing (for atmospheric aerosol opacity) as were carefully selected and rationalized for the MER Pancam experiment [Bell et al., 2003]. This objective was enabled by the recognition that the out‐of‐band leakage of the Bayer filters was substantial (≤10%) and nearly uniform across the red and near‐infrared (IR) portion of the spectrum. Light passing through the science filters passes through the RGB true color filters of the Bayer filter array. In cases of red, green, and blue monochromatic visible light filters, only one of the Bayer filters would pass the light, so in some cases only a few pixels would record the light from those filters. In the cases of long‐red and near‐IR science filters, the detector receives equal amounts of light at each pixel. Finally, commonality with the Pancam filters was intended to facilitate comparison of results across three rover field sites, Spirit, Opportunity, and Curiosity.
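The interaction between a science filter and the Bayer array can be illustrated by counting which pixels respond. The sketch below is idealized and rests on assumptions not stated in the text: an RGGB 2 × 2 unit cell, a visible narrowband filter that is passed by exactly one Bayer color, and a near‐IR filter that is passed equally by all three. The function names are hypothetical.

```python
import numpy as np


def bayer_mask(height, width, pattern=("R", "G", "G", "B")):
    """Label each pixel with its Bayer color by tiling a 2x2 unit cell (RGGB assumed)."""
    cell = np.array(pattern).reshape(2, 2)
    reps = ((height + 1) // 2, (width + 1) // 2)
    return np.tile(cell, reps)[:height, :width]


def responding_fraction(mask, passing_colors):
    """Fraction of pixels whose Bayer filter passes light from a given science filter."""
    return float(np.isin(mask, list(passing_colors)).mean())


mask = bayer_mask(1200, 1600)
print(responding_fraction(mask, {"B"}))            # idealized narrowband blue filter
print(responding_fraction(mask, {"G"}))            # idealized narrowband green filter
print(responding_fraction(mask, {"R", "G", "B"}))  # idealized near-IR filter
```

In this idealization, one quarter of the pixels respond to a blue or red narrowband filter, one half to a green one, and all pixels to a near‐IR filter, which is the sense in which only some pixels record light through the visible science filters.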

4.1.4. Stereo Images and Mosaics

Acquire stereo imaging to provide both visual qualitative and computed photogrammetric quantitative three‐dimensional information about a scene. In the Mars rover science context, this is useful for interpreting distance, object size, and spatial relations of strata and geomorphic features. Ideally, stereoscopy is accomplished with cameras of the same focal length, as is the case on the MSL rover for the engineering cameras, which are specifically designed for stereo ranging [Maki et al., 2012]. When the Mastcam was descoped in 2007 (see section 5.1 below), both stereoscopy through identical focal lengths and focus capability were removed from the requirements. A nonidentical pair of cameras was deemed capable of satisfying interests to acquire stereo pair images while at the same time preserving both high‐ and low‐resolution options. Stereo observations between cameras of dissimilar focal length are limited by the scale of the shortest focal length. The requirement to mount the two Mastcam cameras with a maximum interocular distance and, if required, toe‐in to improve the stereo overlap closer to the rover was retained.

4.1.5. Data Storage and Downlink Flexibility

Store multiple narrow angle field‐of‐view mosaics in raw form for multiple image planning cycles, in both full resolution and thumbnail versions. Eventually, it became possible to generate thumbnails in real time, so thumbnails were not stored in the camera buffers but were instead transmitted to the rover's nonvolatile memory for storage. The Mastcam team envisioned maximizing flexibility to acquire, store, compress, and downlink images. For example, full 360° panorama mosaics could be acquired in RGB (single color image per mosaic frame, each stored as a gray scale image), small (thumbnail) versions of these images could be downlinked and mosaicked, and then the science team could choose which full‐size images (from one to all of them) to downlink, how to prioritize their downlink relative to other data on board the rover, and how to compress the data to optimize science return. This requirement, coupled with the MARDI descent imaging data storage requirements described in section 4.2, would drive decisions regarding data storage and compression options available on board the instrument.

4.1.6. High‐Definition Video

Employ video to observe dynamic events (e.g., eolian activity or astronomical events like the transit of Phobos across the face of the Sun) and provide options for public engagement opportunities. Thus, the Mastcam instruments were required to be able to acquire high‐definition video (720p; 1280 × 720 pixel format). The video requirement was, in part, a bonus provided by the commonality of design with MARDI, which required a descent video capability.

4.2. MARDI

To meet MARDI science objectives, the camera was required to be able to obtain and store a continuous series of several hundred nested RGB color images of the Martian surface of increasing spatial resolution during the descent to touchdown. It was further required to be fixed‐mounted on the rover body such that its optic axis pointed in the rover coordinate frame +Z direction (downward, the direction of the gravity vector when the rover is on a planar surface perpendicular to that vector), and with the wide dimension of the field of view perpendicular to the direction of flight (fore direction).

There were, in addition, a number of engineering objectives defined by the science team. For example, the descent imaging plan was required to begin shortly after parachute deployment but just prior to heat shield separation (in order to capture that engineering event).

Prakash et al. [2008] described the MSL Entry, Descent, and Landing (EDL) system and sequence of events; Kornfeld et al. [2014] discussed the EDL system and its verification and validation in further detail. The EDL system design was to have heat shield separation occur while the spacecraft was descending on its parachute. As the spacecraft further descended, its radar system would detect the surface and trigger parachute and backshell separation and, after a brief interval, initiate powered descent (firing engines) at an altitude between 1500 and 2000 m above the ground. Powered descent would continue until the rover was about 18.6 m above the surface and descending at a constant rate of 0.75 m/s, at which time the descent stage would release the rover on a set of cables. The system, now a descent stage and rover separated by these cables, would continue to move downward until the rover wheels touched the Martian surface. Then the cables would be cut and the descent stage would fly away and crash >150 m from the rover. MARDI imaging would be completed soon thereafter. A primary requirement on the MARDI was that imaging occur uninterrupted throughout this sequence of events.

Various aspects of the descent sequence affected the science requirements:

4.2.1. Nesting Coverage

Nesting scale impacts the ability to link geological observables in images at one scale with images at another. For descent imaging, nesting scale is a function of altitude, descent rate, and camera frame rate.

A primary requirement for the MARDI was to be able to acquire observations of the eventual landing location at several resolutions (e.g., from different altitudes), with later images “nested” inside earlier images. The descent stage's unknown but modeled large rigid body oscillatory motions while descending under its parachute required a large field of view. The angular rate of attitude variations required a short exposure time (<1 ms) to limit image motion smear to less than 1 pixel. The short exposure time led to the detector readout time being an appreciable fraction of the exposure time, leading to a large component of electronic shutter smear. The short exposure also led to faster optics than would have been ideal.

4.2.1.1. Frame Rate

A slow fixed frame rate (as was required for the Mars Polar Lander MARDI [Malin et al., 2001]) results in a nesting scale ratio that varies during descent. For geologic and geomorphic observing, a 10:1 ratio is extremely difficult to use in comparisons from one image to the next but a ratio of 5:1 is much better. Thus, the MSL MARDI was required to obtain data over a short frame acquisition interval to ensure that the scale ratio at all points during the descent is less than 5:1. This translated to a requirement to obtain full‐frame MARDI images faster than 1 per second. The practical limit was set by the electronics clocking speed at a rate of ~5 frames per second, and the actual in‐flight frame rate was about 4 frames per second.
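The dependence of nesting scale on altitude, descent rate, and frame rate can be sketched with a simple geometric model. The code below is a minimal illustration under stated assumptions, not the analysis used for the flight design: it assumes a nadir‐pointing camera whose image scale is proportional to altitude and a descent rate that is constant over one frame interval. The function names are hypothetical; the numerical inputs (0.75 m/s at 18.6 m, about 4 frames per second, and the 5:1 limit) are taken from the text.

```python
def nesting_ratio(altitude_m, descent_rate_m_s, frame_interval_s):
    """Scale ratio between consecutive frames for a nadir-pointing camera.

    Assumes image scale is proportional to altitude and that the descent
    rate is constant over one frame interval (a simplification).
    """
    next_altitude = altitude_m - descent_rate_m_s * frame_interval_s
    if next_altitude <= 0:
        raise ValueError("camera would reach the surface before the next frame")
    return altitude_m / next_altitude


def max_frame_interval(altitude_m, descent_rate_m_s, max_ratio=5.0):
    """Longest frame interval (s) that keeps the nesting ratio at or below max_ratio."""
    return altitude_m * (1.0 - 1.0 / max_ratio) / descent_rate_m_s


# Values quoted in the text: 0.75 m/s at 18.6 m altitude, ~4 frames per second.
print(nesting_ratio(18.6, 0.75, 1 / 4))
print(max_frame_interval(18.6, 0.75))
```

In this model the ratio grows as altitude decreases or as descent rate increases for a fixed frame interval, which is why a slow fixed frame rate yields a ratio that varies during descent. The faster phases of the descent are not quantified in this section, so they are not evaluated here.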

4.2.1.2. Field of View and Instantaneous Field of View (Resolution)

The rapid MSL descent was envisioned to make full nesting relatively easy to achieve, but solid body oscillations and rotations during parachute descent would reduce some of the image‐to‐image overlap, and actually eliminated it between images widely separated in time. Spatial coverage is achieved through the combined requirements for a 1648 by 1214 (1600 × 1200 active) pixel format detector inscribed in an ~90° circular field of view (FOV) lens (70° × 52° inscribed FOV), and minimal MSL hardware obstruction (except, of course, the heat shield) of the camera field of view during descent. A further, governing requirement on camera lens format was that, as the rover descends, at some point the entire landing site would be visible in an area ≥10 by 10 m at a scale of at least 1 mrad, or better than 2 cm per pixel (accomplished by vertical terminal descent).
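The relation between the active pixel format, the IFOV, and the rectangular FOV can be checked with a short calculation. The sketch below assumes a constant IFOV across the frame (a linear approximation that ignores lens distortion, which may matter for a wide‐angle lens such as that of MARDI); the IFOV values are those quoted in the abstract, and the names are illustrative.

```python
import math

# IFOV values (radians per pixel) as quoted in the abstract; 1600 x 1200 active pixels.
CAMERAS = {"M-34": 218e-6, "M-100": 74e-6, "MARDI": 0.76e-3}
ACTIVE_PIXELS = (1600, 1200)


def fov_deg(ifov_rad, n_pixels):
    """Approximate full field of view in degrees, assuming a constant IFOV across the frame."""
    return math.degrees(ifov_rad * n_pixels)


for name, ifov in CAMERAS.items():
    wide, narrow = (fov_deg(ifov, n) for n in ACTIVE_PIXELS)
    print(f"{name}: {wide:.1f} x {narrow:.1f} deg")
```

Under this approximation the products come out close to the quoted fields of view: about 20° × 15° for the M‐34, 6.8° × 5.1° for the M‐100, and roughly 70° × 52° for MARDI.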

4.2.2. Autonomous Operation

Finally, a most important requirement (a lesson learned from the previous MARDI instruments developed for the Mars Polar Lander [Malin et al., 2001] and Phoenix Mars Scout lander) was for the operation of the instrument to be decoupled, as much as possible, from the mission critical operation of computers controlling the spacecraft descent. Thus, the MARDI was designed to operate autonomously: after an initial turn‐on and warm‐up period, a single command would start the imaging sequence, which would run to completion during the descent and terminate after landing without further spacecraft interaction.

5. Instrument Descriptions

5.1. Introduction

This section describes the instruments as flown. These differ somewhat from the instruments originally proposed and developed, and the differences are often manifested in idiosyncratic attributes noted in the descriptions above and below. These differences resulted from the descope of both the Mastcam (initially required to remove both the zoom and focus capability, with focus capability later reintroduced into the flight design) and the MARDI (initially removed from the flight payload, but later reinstated, although too late to complete a full calibration and meet a delivery date to participate in all EDL testing). The descopes in September 2007 came 4 months after instrument CDR, and some of the attributes resulted from fabrication of flight hardware based on the zoom lens design that could not be refabricated for the final flight design. Among the Mastcam idiosyncrasies are the vignetting of the full field of view, the dissimilar focal lengths and their effect on stereoscopy, and the limited near‐field focus capability of the M‐100; for the MARDI, they are the relatively poor flat field photometry and the nonquantitative assessment of its stray and scattered light sensitivity.

5.2. Brief Overviews

Table 1 summarizes the characteristics of the Mastcam and MARDI instruments. Each of the three cameras has two hardware elements, a camera head and a digital electronics assembly (DEA). The two Mastcam camera heads are mounted on the rover's azimuth‐elevation articulated RSM [Warner et al., 2016]. The MARDI camera head is mounted, pointing downward, behind the front left rover wheel. The DEAs are packaged with the MAHLI DEA inside the rover's environment‐controlled body [Bhandari et al., 2005]. The Mastcams share a third hardware element, the Mastcam calibration target. The MARDI calibration target was affixed on the inside surface of the descent system heat shield. A strict definition of “in focus” is used for these cameras, wherein the optical blur circle is equal to or less than one pixel across.

Table 1.

Mastcam and MARDI Instrument Characteristics

| Hardware Element | Mass (kg) | Power (W) | Dimensions (cm) |
|---|---|---|---|
| Mastcam 34 mm | 0.799 | 1.8 idle, 3.5 imaging | 27 × 12 × 11 |
| Mastcam 100 mm | 0.806 | 1.8 idle, 3.5 imaging | 27 × 12 × 11 |
| MARDI | 0.559 | 1.8 idle, 3.5 imaging | 15 × 11 × 11 |
| Digital electronics assembly (DEA) includes 2 Mastcams, MAHLI, and MARDI | 2.089 | 6.4 idle, 9.0 imaging | 22 × 12 × 10 |
| Mastcam calibration target | 0.079 | None | 8 × 8 × 6 |
| MARDI calibration target | NA | None | 34 × 93 |

| Optics | Mastcam 34 mm | Mastcam 100 mm | MARDI |
|---|---|---|---|
| Field of view (FOV, diagonal) | 20.6° | 7.0° | 90° |
| FOV (horizontal, 1200 pixels)<sup>a</sup> | 15.0° | 5.1° | 70° |
| FOV (vertical, 1200 pixels) | 15.0° | 5.1° | 52° |
| Instantaneous field of view (IFOV) | 218 μrad | 74 μrad | 764 μrad |
| Focal ratio | f/8 | f/10 | f/3.0 |
| Effective focal length | 34.0 mm | 99.9 mm | 9.7 mm |
| Focus range | 0.5 m to infinity | 1.6 m to infinity | 2 m to infinity; ~1 mm resolution at surface |
| Band passes | Figure 3 | Figure 3 | 399–675 nm with Bayer pattern filter |

| Detector | Description | ||

| Product | Kodak KAI‐2020CM interline transfer CCD | ||

| Color | Red, green, and blue microfilters; Bayer pattern | ||

| Array size | 1640 × 1214 pixels, 1600 × 1200 photoactive pixels; typical image size was 1344 × 1200 | ||

| Pixel size | 7.4 μm (square pixels) | ||

| M‐34 gain, read noise, full well | 16.0 e −/DN | 18.0 e − | 26,150 e − |

| M‐100 gain, read noise, full well | 15.8 e −/DN | 15.8 e − | 23,308 e − |

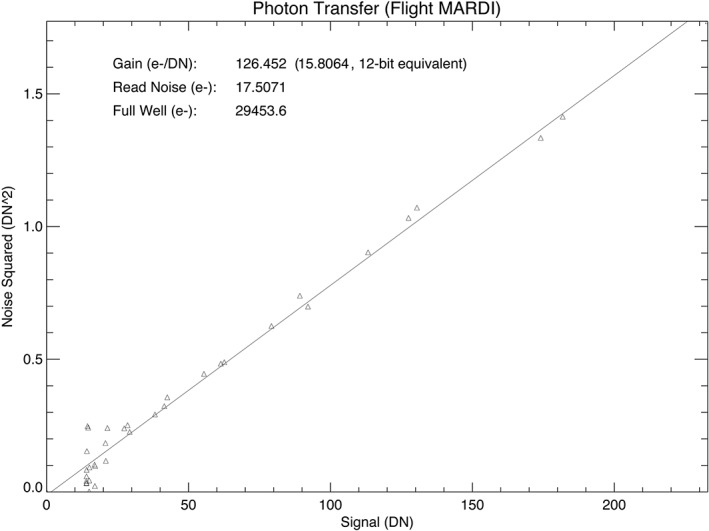

| MARDI gain, read noise, full well | 15.8 e −/DN | 17.5 e − | 29,454 e − |

| Exposure | Description |
|---|---|
| Duration | 0 to 838.8 s; commanded in units of 0.1 ms |
| Auto‐exposure | Based on Mars rover engineering camera approach of Maki et al. [2003] |

| Video acquisition | Description |
|---|---|
| Modes | Acquire and store single‐image frames or acquire and immediately compress into groups of pictures (GOPs) |
| Frame rate | Maximum limited by signal transmission rate between camera head and DEA to <4 Hz |
| Compression | Frames stored individually are treated the same as individual nonvideo images; GOPs are JPEG‐compressed |

| Onboard data compression options | Description |
|---|---|
| Uncompressed | No compression; no color interpolation |
| Lossless | Approximately 1.7:1 compression; no color interpolation |
| Lossy | JPEG; color interpolation or gray scale; commandable color subsampling Y:CR:CB (4:4:4 or 4:2:2); commandable compression quality (1–100) |
| Video GOP | JPEG‐compressed color‐interpolated GOPs, up to 2 Mb file size and up to 16 frames per GOP; commandable color subsampling and compression quality |
| Deferred compression | Image stored in onboard instrument DEA uncompressed; specified compression performed at a later time for transmission to Earth |

| Stereo pair acquisition |
|---|
| The two Mastcam camera heads can be used to acquire stereo image pairs, which requires resampling to match the pixel scales between the two cameras; the cameras have an interocular distance of 24.64 cm and a toe‐in angle of 2.5° |
| The MARDI camera head is fixed‐mounted to the rover body; stereo pairs can only be acquired by motion of the rover: during descent and, after landing, by driving |

| Mosaic acquisition |
|---|
| Mastcam mosaic acquisition requires use of software planning tools and RSM motion to scan the camera head FOVs over the desired scene; typical overlap was 20% (top‐to‐bottom/side‐to‐side) |
| MARDI is fixed‐mounted to the rover body; mosaics are only acquired by motion of the rover: during descent and, after landing, by driving |

| Mastcam onboard focus merge | Description (unavailable on MARDI) |
|---|---|
| Products | Best focus image (color) and range map (gray scale) |
| Maximum input image size | 1600 × 1200 pixels; input images must be same size |
| Maximum number of input images | 8 images of 1600 × 1200 pixels |
| Input image compression | Raw, uncompressed (no color interpolation) |
| Output image compression | JPEG with user‐specified compression quality |
| Processing options | Merge, registration (feature tracking + corner detection), blending (spline‐based) |

| Image thumbnails | Description |
|---|---|
| Size | Approximately 1/8th the size of the parent image, with each dimension rounded down to an integer multiple of 16 (thus, for a 1600 × 1200 pixel parent image, 1600/8 = 200 and 200/16 = 12.5, which rounds down to 12, giving 12 × 16 = 192; 1200/8 = 150 and 150/16 = 9.375, which rounds down to 9, giving 9 × 16 = 144; the thumbnail is therefore 192 × 144 pixels) |
| Purpose | Provides an index of images acquired by and stored onboard instrument; returned to Earth, often in advance of parent image, for evaluation and decision‐making about on‐Mars event success, data downlink compression, and priority |
| Compression | Commandable compressed or uncompressed; typical thumbnails from Mars are 4:4:4 color JPEGs; commandable compression quality |
| Video GOP | One thumbnail per GOP, derived from first image in GOP |

<sup>a</sup>Note that the horizontal FOV is not limited to 1200 active pixels. The full 1600 active pixels are illuminated (though vignetted), so the M‐100 horizontal FOV is 16/12 × 5.1° ≈ 6.8° and the M‐34 horizontal FOV is 16/12 × 15° = 20°.
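The thumbnail sizing rule in Table 1 can be written as a short sketch. The function name and parameter names are hypothetical; only the divide‐by‐8 and multiple‐of‐16 rules come from the table.

```python
def thumbnail_size(width, height, reduction=8, block=16):
    """Thumbnail dimensions: parent size / 8, rounded down to a multiple of 16."""
    def one_axis(n_pixels):
        # Integer-divide by the reduction factor, then truncate to whole blocks.
        return (n_pixels // reduction) // block * block
    return one_axis(width), one_axis(height)


print(thumbnail_size(1600, 1200))  # (192, 144), the full-frame case in Table 1
print(thumbnail_size(1344, 1200))  # (160, 144), the typical 1344 x 1200 image
```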

5.2.1. Mastcams

After the 2007 descope of the zoom and focus capabilities, focus was added back to both Mastcams, because their depths of field would not cover the ranges from the rover deck to the horizon (~0.5 m to several kilometers) owing to their relatively fast optics. The MAHLI focus mechanism design was adapted to the Mastcams, but did not have sufficient capability to focus the M‐100 on the rover deck (thus, there are no in‐focus images of the Mastcam calibration target using the M‐100).

Curiosity's two Mastcams are mounted about 1.97 m above ground level, on the RSM camera bar (Figure 1). Mosaics and stereopair coverage are achieved by RSM azimuth and elevation pointing. The left camera, Mastcam‐34 (M‐34) or Mastcam‐Left (ML) (Figure 2a), is a fixed focal length, focusable, color, and multispectral camera. It has a ~34 mm focal length, f/8 lens that illuminates a 20° × 15° FOV, covering the (active) 1600 wide by 1200 high detector (although a small amount of corner vignetting occurs). The full fields of both cameras are vignetted because an earlier definition of the filter wheel and optics, optimized for the descoped zoom lens, was designed for a square field of view (FOV) inscribed on a 1200 by 1200 pixel subframe without vignetting, and there was insufficient time and money to redesign the filter wheels that had already been manufactured to the original design. The IFOV is 218 μrad, which yields a pixel scale of ~440 μm at 2 m distance and ~22 cm at 1 km.

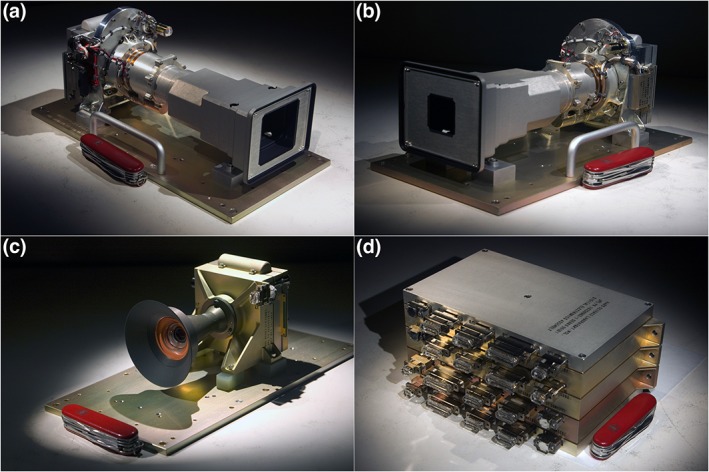

Figure 2.

Mastcam and MARDI flight hardware elements with pocketknife (88.9 mm long) for scale. (a) Mastcam‐34 camera head. (b) Mastcam‐100 camera head. (c) MARDI camera head. (d) Mastcam, MARDI, and MAHLI digital electronics assembly (DEA); each instrument DEA is a separate entity (they do not communicate with each other) packaged together to reduce volume and mass.

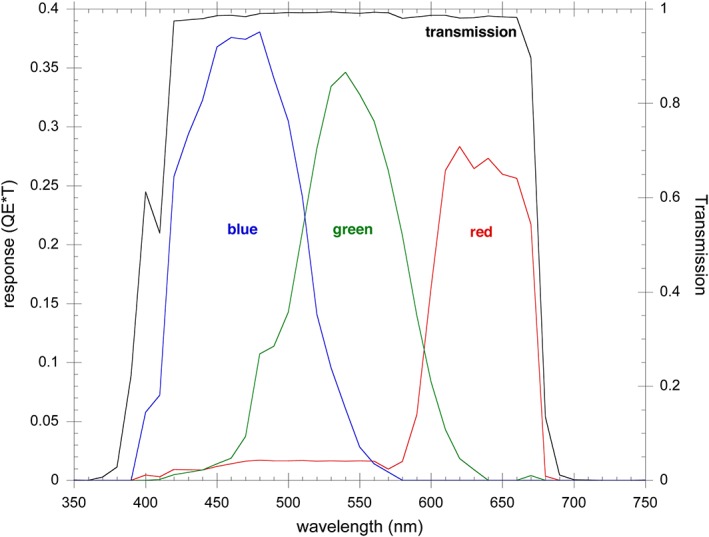

Mastcam‐100 (M‐100) (Figure 2b), also known as Mastcam‐Right (MR), is also a fixed focal length, focusable, color, and multispectral camera. The camera has a ~100 mm focal length (which changes very slightly depending on focus), f/10 lens that illuminates a 6.8° × 5.1° FOV on the (active) 1600 × 1200 pixel detector (again with slight vignetting). The IFOV is 74 μrad, which yields a pixel scale of 7.4 cm at 1 km distance and ~150 μm at 2 m distance. Both cameras use an RGB Bayer pattern color filter array with microlenses integrated with the charge‐coupled device (CCD) detector and have options for imaging a scene via this RGB detector through a broadband filter or imaging through one of the seven additional (per camera) narrowband filters, arranged on a filter wheel, in the 399–1100 nm range (Figure 3). The Bayer filter array has a RG/GB 4 pixel unit cell.
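The IFOV, FOV, and pixel scale values quoted for the two Mastcams follow from the 7.4 μm pixel pitch and the effective focal lengths in Table 1. The sketch below assumes an ideal, distortion‐free lens and ignores vignetting and the small change of focal length with focus; the function names are hypothetical.

```python
import math

PIXEL_PITCH_MM = 7.4e-3  # 7.4 um square pixels (Table 1)


def ifov_urad(focal_length_mm):
    """Angle subtended by one pixel, in microradians (small-angle approximation)."""
    return PIXEL_PITCH_MM / focal_length_mm * 1e6


def fov_deg(focal_length_mm, n_pixels):
    """Full field of view across n_pixels for an ideal, distortion-free lens."""
    half_extent_mm = n_pixels * PIXEL_PITCH_MM / 2.0
    return math.degrees(2.0 * math.atan(half_extent_mm / focal_length_mm))


def pixel_scale_m(ifov_in_urad, range_m):
    """Linear size of one pixel projected to the given range, in meters."""
    return ifov_in_urad * 1e-6 * range_m


for name, focal_length_mm in (("M-34", 34.0), ("M-100", 99.9)):
    ifov = ifov_urad(focal_length_mm)
    print(
        name,
        f"IFOV {ifov:.0f} urad,",
        f"FOV {fov_deg(focal_length_mm, 1600):.1f} x {fov_deg(focal_length_mm, 1200):.1f} deg,",
        f"scale at 1 km {pixel_scale_m(ifov, 1000.0) * 100:.1f} cm",
    )
```

This simple model would not be appropriate for MARDI, whose wide‐angle lens has substantial distortion.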

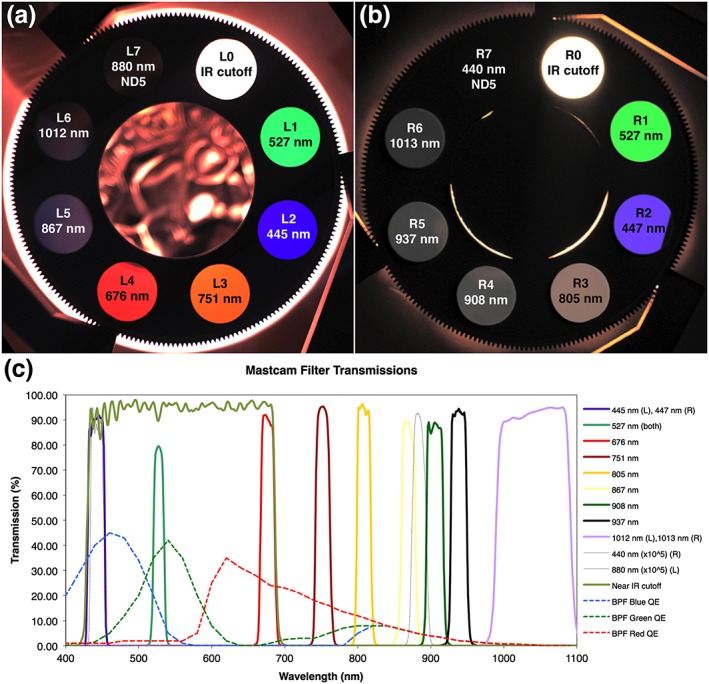

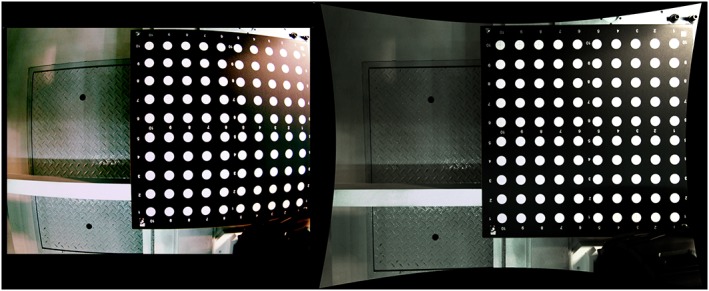

Figure 3.

Mastcam narrowband filters. (a) Photograph of white light transmitted through the Mastcam‐34 (Mastcam‐Left) filter wheel. Labels indicate the filter positions, left zero through seven (L0–L7), and effective center wavelength as determined by Bell et al. [2017]. (b) Photograph of white light transmitted through the Mastcam‐100 (Mastcam‐Right) filter wheel. Labels indicate the filter positions, right zero through seven (R0–R7), and effective center wavelength as determined by Bell et al. [2017]. Filter apertures are 12.6 mm in diameter. (c) Mastcam narrowband filter transmissions and CCD Bayer pattern filter (BPF) response functions. Note that the three BPF dashed lines extend longward of 700 nm wavelength, indicating leakage, and that they converge longward of 850 nm, meaning that they are equally transmissive at longer wavelengths, which permits imaging at these scientifically interesting wavelengths.

5.2.2. MARDI

MARDI (Figure 2c) is a fixed focal length, fixed‐focus, color camera with a 90° circular FOV lens that yields a 70° by 52° view on a 1600 by 1200 (active) pixel RGB Bayer pattern‐microfiltered CCD over a band pass of 399–675 nm. This wide‐angle lens shows a distinct brightness falloff from the center of the field to the edges that can be corrected using calibration flat‐field images.

The lens f/number is f/3.0, this being the slowest practical system that met a requirement to provide sufficient light gathering capability to get a signal‐to‐noise ratio (SNR) of greater than 50:1, even with unfavorable Sun elevation and surface albedo (both dependent on the landing site, which was unknown at the time of design). The f/3 was also the fastest practical aperture stop, because the exposure had to be set to a single fixed value for the entire descent imaging sequence, and exposure was a trade‐off between the desire to minimize blurring from solid body motion during descent and the desire to improve SNR and reduce interline transfer smear (the latter becoming more of an issue with shorter exposure time). The exposure duration also had to provide margin against sensor saturation from high‐albedo, low‐phase angle surfaces in the scene. After extensive deliberation and testing under Earth sunlit conditions using the MARDI engineering model hardware, we settled on an exposure time of 0.9 ms for the descent. Based on the descent design [Prakash et al., 2008], this was anticipated to result in acquisition of 1000–1500 frames, well within the capacity of the MARDI onboard data storage, which was designed to hold about 4000 raw full‐frame 8 bits per pixel images.

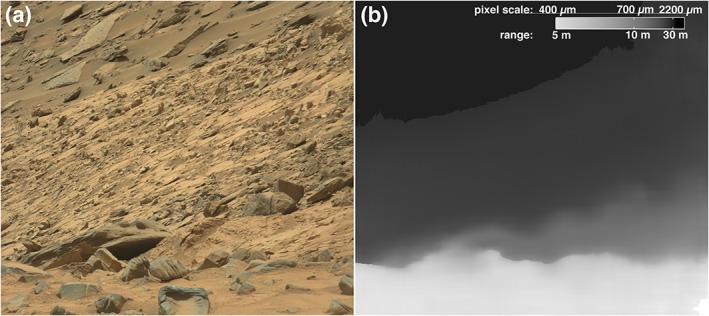

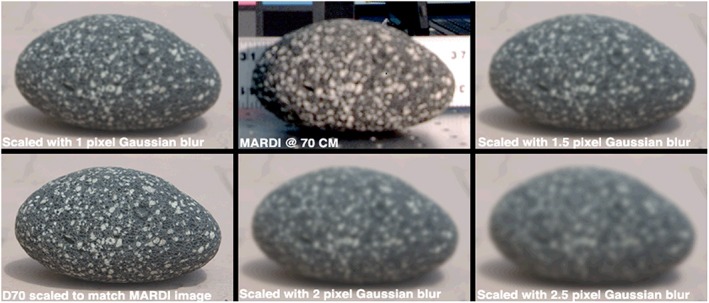

The MARDI IFOV is 0.764 mrad (Table 1), which provides in‐focus pixel scales that range from ~1.5 m at 2 km range to ~1.5 mm at 2 m range, covering surface areas between 2.4 by 1.8 km and 2.4 by 1.8 m, respectively. At distances <2 m, out‐of‐focus blurring increases proportionally as the spatial scale decreases, resulting in a constant spatial sampling of ~1 mm. Once landed, and at a range to the surface of 66 cm (to front of sun shade; the focal plane is about 77 cm above the surface), MARDI images have a pixel scale of ~0.5 mm per pixel over a ~80 by ~60 cm surface, and the out‐of‐focus resolution is about three pixels (1.5 mm). Interpolative resampling reduction of MARDI images by a factor of 2 provides a good approximation to the resolution of the system.
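The pixel scales and surface coverage quoted above can be reproduced to first order by multiplying the IFOV by the range and by the number of active pixels. The sketch below uses the 764 μrad IFOV from Table 1 and deliberately ignores lens distortion, off‐axis geometry, and defocus, so it is only an approximation; the function name is hypothetical.

```python
MARDI_IFOV_RAD = 764e-6          # IFOV from Table 1
ACTIVE_COLS, ACTIVE_ROWS = 1600, 1200


def mardi_footprint(range_m, ifov_rad=MARDI_IFOV_RAD):
    """Return (pixel scale, footprint width, footprint height) in meters.

    First-order estimate: scale = IFOV x range, footprint = scale x pixels.
    """
    scale_m = ifov_rad * range_m
    return scale_m, scale_m * ACTIVE_COLS, scale_m * ACTIVE_ROWS


for range_m in (2000.0, 2.0, 0.66):
    scale_m, width_m, height_m = mardi_footprint(range_m)
    print(f"range {range_m} m: {scale_m * 1000:.2f} mm/pixel, "
          f"{width_m:.2f} x {height_m:.2f} m")
```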

MARDI was provided with an 11.8 W survival heater to maintain its allowable flight temperature (−40 to +40°C) during the mission's cruise phase, if needed. This heater was also used prior to EDL to raise MARDI's temperature to ensure that it would remain within its flight allowable range during the cooling predicted to occur throughout EDL. Since MARDI had no requirement to operate during the landed mission, the heater is not sized for use on the surface. Owing to its descope history, MARDI did not go through the same thermal environmental qualification testing as the other cameras (which nominally includes testing in the expected surface thermal environment), and this raised questions about its survivability without heat on the surface. Since landing, the MARDI camera head has reached temperatures well below its tested allowable flight temperature during diurnal cycling. However, both its electronics and optics were built to the same design rules as Mastcam and MAHLI, and it remains fully operational more than 2.3 Mars years after landing.

5.3. Camera Heads

Each Mastcam and MARDI camera head (Figures 2a–2c) consists of a lens assembly, a focal plane assembly, and a camera head electronics assembly. The latter two share a common design with each other and the MAHLI. The Mastcam lenses are focusable and each Mastcam camera head also includes an eight‐position, rotatable filter wheel.

5.3.1. Focal Plane Assembly and Electronics

Each camera's focal plane assembly is designed around a Kodak (now ON Semiconductor) KAI‐2020CM charge‐coupled device (CCD) without a cover glass (Figure 4, left). The sensor has 1640 by 1214 pixels of 7.4 μm by 7.4 μm size. The photoactive area is a 1600 by 1200 pixel area inside of 16 dark columns and four photoactive buffer pixel columns on either side of the detector, two dark rows at the top and four dark rows at the bottom, plus four photoactive buffer pixel rows at both the top and bottom of the detector. The CCD uses interline transfer to implement electronic shuttering. The sensor has red, green, and blue (RGB) filtered microlenses arranged in a RG/GB Bayer pattern. Microlenses improve detector quantum efficiency, which is about 40% on average for the three color channels. The “fast‐dump” capability of the sensor is used to clear residual charge prior to integration and also allows vertical subframing of the final image.
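As a consistency check, the dark and buffer pixel counts described above can be summed to give the full array size, which comes to 1640 by 1214 pixels and matches the array size listed in Table 1. The sketch also shows how a color might be assigned to each active pixel for an RG/GB unit cell. The constant names are hypothetical, and the choice of red at active pixel (0, 0) is an assumption made only for illustration.

```python
ACTIVE_COLS, ACTIVE_ROWS = 1600, 1200
DARK_COLS_PER_SIDE = 16
BUFFER_COLS_PER_SIDE = 4
DARK_ROWS_TOP, DARK_ROWS_BOTTOM = 2, 4
BUFFER_ROWS_PER_SIDE = 4

total_cols = ACTIVE_COLS + 2 * (DARK_COLS_PER_SIDE + BUFFER_COLS_PER_SIDE)
total_rows = (ACTIVE_ROWS + DARK_ROWS_TOP + DARK_ROWS_BOTTOM
              + 2 * BUFFER_ROWS_PER_SIDE)
print(total_cols, total_rows)  # 1640 1214


def bayer_color(row, col):
    """Color of an active pixel for an RG/GB unit cell.

    Assumes (for illustration only) that active pixel (0, 0) is red.
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


unit_cell = [[bayer_color(r, c) for c in range(2)] for r in range(2)]
print(unit_cell)  # [['R', 'G'], ['G', 'B']]
```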

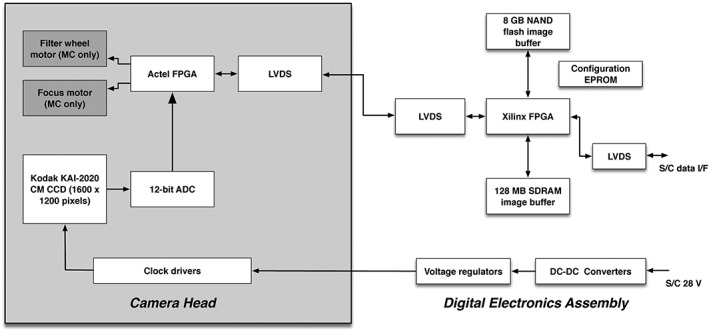

Figure 4.

Mastcam and MARDI electronics block diagram. (top left) The elements common to the Mastcam camera heads but not available in MARDI are shaded dark gray. MC is Mastcam, FPGA is a field‐programmable gate array, LVDS is low‐voltage differential signal, an ADC is an analog to digital converter, the Kodak KAI‐2020CM is a charge‐coupled device (CCD), NAND is a type of nonvolatile (flash) memory, EPROM is an erasable programmable read‐only memory, Gb is gigabyte, Mb is megabyte, DC‐DC converters change a source of direct current from one voltage level to another, SDRAM is synchronous dynamic random access memory, I/F stands for interface, V is voltage, and S/C represents spacecraft, which in this case is the rover.

The output signal from the CCD is AC‐coupled and then amplified. The amplified signal is digitized to 12 bits at a maximum rate of 10 megapixels per second. For each pixel, both reset and video levels are digitized and then subtracted in the digital domain to perform correlated double sampling (CDS), resulting in a typical 11 bits of dynamic range and 1 bit of noise.

The camera head electronics are laid out as a single rigid‐flex printed circuit board (PCB) with three rigid sections. The sections are sandwiched between housings that provide mechanical support and radiation shielding; the interconnecting flexible cables are enclosed in metal covers for radio‐frequency shielding. Camera head functions are supervised by a single Actel RTSX field‐programmable gate array (FPGA). In response to commands from the DEA (Figures 2d and 4, right), the FPGA generates the CCD clocks, reads samples from the analog‐to‐digital converter (ADC) and performs digital CDS, and transmits the pixels to the DEA. The FPGA is also responsible for operating the motors that drive the Mastcam focus and filter wheel rotation mechanisms.

The camera heads operate using regulated 5 V and ±15 V power provided by the DEA. A platinum resistance thermometer (PRT) on the camera focal plane provides temperature knowledge for radiometric calibration. An etched‐foil heater and an additional pair of PRTs are attached to the outside of the camera head and thermostatically controlled by the rover to warm the mechanism for operation when needed. On Mars, the camera head is usually operated at temperatures between −40°C and +40°C and was verified (through testing on a nonflight unit) to be able to survive nearly 3 Mars years of diurnal temperature cycles (down to −130°C) without any heating.

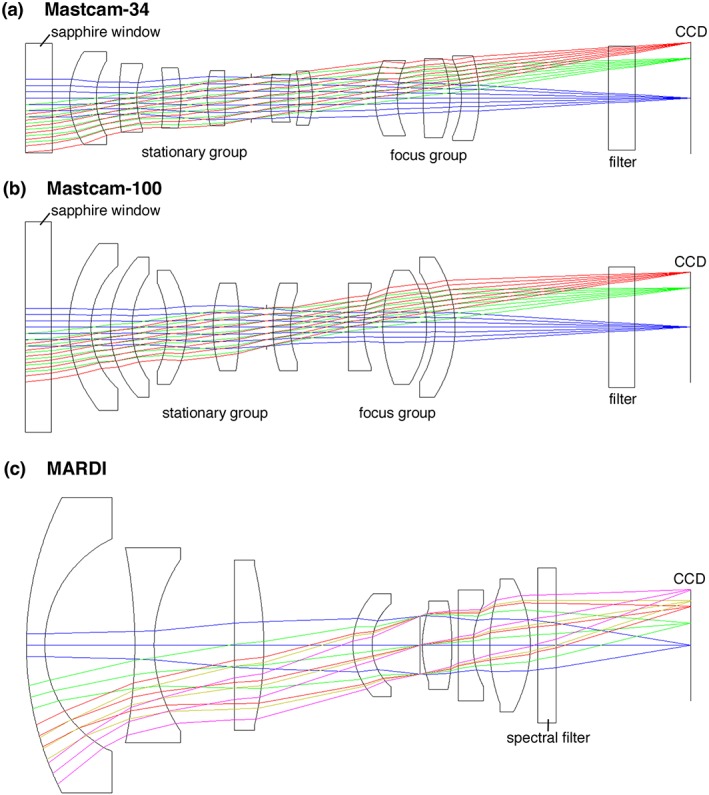

5.3.2. MARDI Lens Assembly

The fixed‐focus MARDI lens has an effective focal length of 9.68 mm, a 90° circular FOV, and its front element has a diameter of 40.0 mm. It has a focal ratio of 3.0 and a distortion of −18.6%. The 74.4 mm long aluminum lens housing contains seven powered elements and a plane‐parallel optical filter (Figure 5c). The filter limits the spectral band pass to 399–675 nm. Ghaemi [2009, 2011] provided details regarding the lens design and fabrication challenges. The MARDI 90° circular FOV, projected onto the 1600 by 1200 CCD, provides an overall 70° by 52° view.

Figure 5.

(a and b) Mastcam and (c) MARDI optics and ray‐trace diagrams (not to scale).

5.3.3. Mastcam Optomechanical Lens Assembly

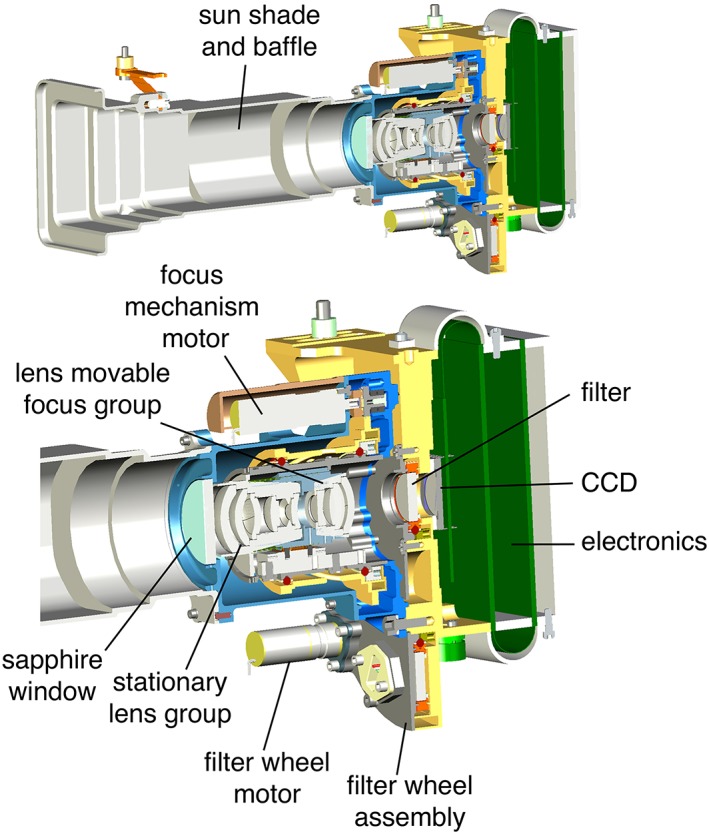

The Mastcam fixed focal length optomechanical lens assemblies include integrated optics and focus mechanisms and a single drive motor to adjust focus (Figure 6). DiBiase and Laramee [2009] described aspects of the optomechanical design and manufacture; Ghaemi [2009, 2011] described the optics and their assembly.

Figure 6.

Representative cross‐sectional views of a Mastcam camera head. Shown here is the Mastcam‐100. The top view shows the entire camera head including its sunshade baffle; the lower view shows the details of the optical and mechanical lens and filter wheel assemblies.

The optics for each Mastcam is an all‐refractive focusable design: Mastcam‐34 has six fixed elements and three in a single movable group, and Mastcam‐100 has five fixed elements and three in a single movable group. The front of each also has a protective plano‐plano sapphire window (Figures 5a and 5b). Attached to the front of each camera is an aluminum sunshade that comprises nearly two thirds of the length of the cameras. This provides stray light rejection, protection against dust accumulation on the front window of each camera, and structure for mounting the front of the cameras to the RSM (the size and shape were dictated by the design of the descoped zoom lens).

The 34 mm lens has a focal ratio of about f/8 and a field of view of about 20.6°. Its angular resolution is about 218 μrad and it can focus on targets at distances of about 0.37 m to infinity. The focal length varies from 34.2 mm at infinity to 34.0 mm at closest focus. The 100 mm lens has a focal ratio of about f/10 and a field of view of about 7.0°. Its angular resolution is about 74 μrad and it can focus on targets at distances of about 1.8 m to infinity. The focal length varies from 99.9 mm at infinity to 94.8 mm at closest focus.

Mechanically, the Mastcams are similar to MAHLI, but with the omission of the MAHLI dust cover mechanism. The lens focus group is positioned using an Aeroflex 10 mm stepper motor with a 256:1 gearbox. The motor drives the integral gear of a cam tube, and the focus group slides on linear bearings under the control of a cam follower with rolling bearing. End‐of‐travel sensing is performed using a Hall‐effect sensor and magnet pairs on the cam. The total useful range of travel of the focus group is about 2 mm. To protect against damage during the vibratory loads of launch, EDL, and driving, the mechanism has a passive launch restraint at one end of its range of travel that loosely captures the cam follower.

The mechanisms are lubricated with polytetrafluoroethylene (PTFE)‐based vacuum‐compatible grease. This grease suffers from viscosity increase at low temperatures, requiring heating to be applied at some times of day for reliable actuation. This is accomplished using an etched‐foil heater wrapped around the lens barrel, thermostatically controlled by the rover. The minimum allowable flight temperature of the mechanism is −40°C, although function has been demonstrated at considerably colder temperatures.

5.3.4. Mastcam Filter Wheel Assemblies

Each Mastcam has an eight‐position filter wheel actuated by an Aeroflex 10 mm stepper motor with a 16:1 gearbox. DiBiase et al. [2012] described the mechanical drive system. The motor drives an integral gear on the outer diameter of the filter wheel; the filter wheel is supported by a bearing on its inner diameter. Position sensing of the wheel is accomplished using a Hall‐effect sensor and magnet pairs at each filter location, with an extra magnet pair for absolute indexing. Each filter has an aperture of 12.6 mm diameter.

Figure 3 shows the arrangement of filters for the Mastcam‐34 and Mastcam‐100 camera heads. In each, one of the eight positions contains a “clear” broadband infrared cutoff filter that is used with the Bayer RGB color capability of the CCD; this is filter position 0. At position 7, each camera has a 10⁻⁵ neutral density filter for solar observations (Mastcam‐34, ~880 nm; Mastcam‐100, ~440 nm). Figure 3 also shows the other visible and near‐infrared narrowband filters and their response functions; the spectral bandwidths are from system‐level monochromator measurements described by Bell et al. [2017]. Note that filter position 0, the infrared cutoff filter, is designed such that the cameras provide “natural color” in a single exposure.

Because the Bayer pattern filters are effectively transparent over the ~800 to ~1050 nm range, the IR filters can be used without regard to the pattern. For those filters that overlap the Bayer pattern wavelengths, the Bayer color interpolation is adjusted to either scale or ignore the pixel positions outside the filter's band pass. Thus, the images can appear to be gray scale or the principal color of the filter.

5.4. Digital Electronics Assembly (DEA)

5.4.1. DEA Hardware

The Mastcam and MARDI DEAs (Figures 2d and 4, right) are packaged with the MAHLI DEA to save mass. In each DEA, the electronics are laid out on a single rectangular PCB. The DEA interfaces the camera head with the rover avionics. All data interfaces are synchronous (dedicated clock and sync signals) and use low‐voltage differential signaling. Each (redundant) rover interface comprises two flow‐controlled serial links, one running at 2 megabits per second from the rover to the DEA and another at 8 megabits per second from the DEA to the rover. The DEA transmits commands to its respective camera head using a 2 megabits per second serial link and receives image data from the camera head on a 30/60/120 megabits per second selectable rate 6‐bit parallel link. The DEA is powered from the rover's 28 V power bus and provides switched regulated power to the camera head. It also contains a PRT for temperature monitoring.
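The link rates above set a lower bound on the time needed to move one frame. The sketch below is a rough estimate under stated assumptions: a full 1640 × 1214 readout at 12 bits per pixel on the camera head link, an uncompressed 1600 × 1200 image at 8 bits per pixel on the rover link, and no protocol or flow‐control overhead. The function name is hypothetical.

```python
def frame_transfer_time_s(n_cols, n_rows, bits_per_pixel, link_mbit_per_s):
    """Time to move one frame over a link, ignoring all protocol overhead."""
    return n_cols * n_rows * bits_per_pixel / (link_mbit_per_s * 1e6)


# Camera head to DEA at the fastest selectable rate (120 megabits per second).
head_to_dea_s = frame_transfer_time_s(1640, 1214, 12, 120)
print(f"{head_to_dea_s:.3f} s per frame, {1.0 / head_to_dea_s:.2f} frames/s")

# DEA to rover at 8 megabits per second, uncompressed 8-bit image.
dea_to_rover_s = frame_transfer_time_s(1600, 1200, 8, 8)
print(f"{dea_to_rover_s:.2f} s per frame")
```

Under these assumptions the camera head link supports roughly 5 frames per second, which is consistent with the practical limit noted in section 4.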

The core functionality of the DEA is implemented in a Xilinx Virtex‐II FPGA. All interface, compression, and timing functions are implemented as logic peripherals of a Microblaze soft‐processor core in the FPGA. The DEA provides an image‐processing pipeline that includes 12‐to‐8‐bit companding of input pixels, horizontal subframing, and optionally lossless predictive or lossy Joint Photographic Experts Group (JPEG) compression. The latter also requires the Bayer pattern raw image to be interpolated and reordered into luminance/chrominance block format. The onboard image‐processing pipeline can run at the full speed of camera head input, writing the processed data stream directly into DEA memory.
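A 12‐to‐8‐bit companding step is commonly implemented as a lookup table. The sketch below uses a square‐root curve, which is an assumption chosen only for illustration because photon shot noise grows as the square root of the signal; the text does not specify the companding function actually used, and the function names are hypothetical.

```python
import math


def build_sqrt_companding_table(in_bits=12, out_bits=8):
    """Lookup table mapping every input DN to an output code (square-root curve).

    The square-root shape is an illustrative assumption, not the flight table.
    """
    in_max = (1 << in_bits) - 1
    out_max = (1 << out_bits) - 1
    return [round(out_max * math.sqrt(dn / in_max)) for dn in range(in_max + 1)]


def compand(pixels, table):
    """Apply the lookup table to a sequence of 12-bit pixel values."""
    return [table[p] for p in pixels]


def expand(codes, in_bits=12, out_bits=8):
    """Approximate inverse of the square-root companding above."""
    in_max = (1 << in_bits) - 1
    out_max = (1 << out_bits) - 1
    return [round(in_max * (c / out_max) ** 2) for c in codes]


table = build_sqrt_companding_table()
codes = compand([0, 100, 1000, 4095], table)
print(codes)
print(expand(codes))
```

A table‐based approach has the practical advantage that the curve can be changed without altering the processing pipeline itself.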

The DEA memory subsystem contains 128 Mb of SDRAM (synchronous dynamic random‐access memory) and 8 Gb of nonvolatile NAND flash memory. The flash is organized as a large image buffer, allowing images to be acquired without use of rover memory resources at the maximum camera data rate. The SDRAM is typically used as scratchpad space and to store file system information, but can also be used as a small image buffer.
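The buffer sizes can be related to the number of raw frames they hold. The sketch below reads “8 Gb” and “128 Mb” as decimal gigabytes and megabytes, following the abbreviations in the Figure 4 caption, and ignores file system overhead and bad flash blocks; these are assumptions, and the function name is hypothetical. The result is consistent with the capacity of about 4000 raw full‐frame 8 bits per pixel images stated in section 5.2.2.

```python
def frames_that_fit(buffer_bytes, n_cols, n_rows, bytes_per_pixel=1):
    """Whole number of raw frames that fit in a buffer, ignoring overhead."""
    return buffer_bytes // (n_cols * n_rows * bytes_per_pixel)


FLASH_BYTES = 8 * 10**9    # "8 Gb" read as 8 gigabytes (assumption)
SDRAM_BYTES = 128 * 10**6  # "128 Mb" read as 128 megabytes (assumption)

print(frames_that_fit(FLASH_BYTES, 1640, 1214))  # 4018 full-array frames
print(frames_that_fit(FLASH_BYTES, 1600, 1200))  # 4166 active-area frames
print(frames_that_fit(SDRAM_BYTES, 1600, 1200))  # 66 active-area frames
```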

5.4.2. Flight Software

DEA hardware functions are coordinated by the DEA flight software (FSW) that runs on the Microblaze. The FSW receives and executes commands that are generated on Earth and relayed by the rover, and transmits any resulting data. The FSW also implements autofocus and auto‐exposure algorithms for image acquisition, performs error correction on the contents of flash memory, implements mechanism control and fault protection, and performs focus stack merges. The FSW consists of about 10,000 lines of ANSI C code (American National Standards Institute C programming language).

The FSW allows considerable flexibility for image acquisition. Images can be acquired in uncompressed form into the flash buffer and read out multiple times using different compression schemes. Alternatively, images can be compressed during acquisition to minimize the amount of buffer space they occupy; the latter capability is useful for video sequences. Reduced resolution “thumbnail” versions of each image can be generated on command. These thumbnails are generated in the FSW with some assistance from the DEA logic; in the case of images that are stored in compressed form, partial software decompression is required to produce the thumbnail. Thumbnails are produced “on‐the‐fly” just prior to their delivery to the rover compute element and queued for downlink; they are not stored by the cameras.

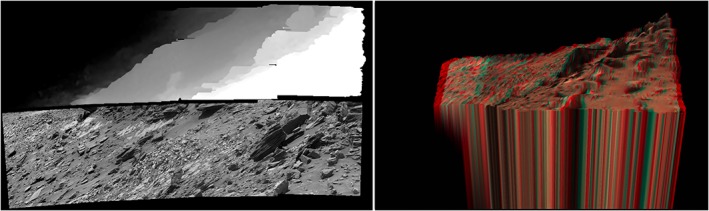

5.4.3. Postlanding Flight Software Modifications

Since arrival at Mars, very few issues have arisen regarding the Mastcam and MARDI flight software and all of those that did arise are managed with operational workarounds. Therefore, the Mastcams are still running on Mars using the same version of the software with which they were launched. MARDI, however, is using a new version of the FSW that adds a requested capability for so‐called “sidewalk mode” imaging. This operational mode, installed on sols 628–629 (13–14 May 2014), yields mosaics of MARDI images that parallel a rover drive. Driving on a layered rock exposure, these data can be used to continuously map stratigraphic relations at subcentimeter scales.

Normally, when the rover is driving it moves a short distance and then stops to evaluate its position. MARDI videos acquired during such drives would contain many redundant frames acquired during the pauses, but there is no way to command the video to start and stop based on rover behavior. For sidewalk mode, images are acquired, stored in MARDI SDRAM, and each is compared to the previous image using a simple mean‐squared error metric. If the error exceeds a fixed value, the image is considered to contain new scene information and is stored in the DEA flash storage. This method discards images acquired during drive pauses. The additional computation limits the maximum frame rate to about 1 frame every 3 s, but this usually provides good overlap between adjacent frames. Generally, the image thumbnails (section 7.9) are downlinked first and science investigators select which full frames to downlink.
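The frame selection logic of sidewalk mode can be sketched as follows. This is an illustrative reconstruction from the description above, not the flight code: frames are represented as flat lists of pixel values, the threshold value is hypothetical, and the decision to always keep the first frame is an assumption.

```python
def mean_squared_error(frame_a, frame_b):
    """Mean of squared pixel differences between two equally sized frames."""
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same number of pixels")
    return sum((a - b) ** 2 for a, b in zip(frame_a, frame_b)) / len(frame_a)


def select_new_frames(frames, threshold):
    """Return indices of frames that differ enough from the previous frame.

    Each frame is compared with the frame acquired immediately before it.
    The first frame is always kept (an assumption for this sketch).
    """
    kept = []
    previous = None
    for index, frame in enumerate(frames):
        if previous is None or mean_squared_error(frame, previous) > threshold:
            kept.append(index)  # new scene content: would be written to flash
        previous = frame
    return kept


# Toy sequence: the rover pauses (frames 0-1), moves (2), pauses (3), moves (4).
frames = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [50, 60, 70, 80],
    [50, 60, 70, 81],
    [0, 0, 0, 0],
]
print(select_new_frames(frames, threshold=4.0))  # [0, 2, 4]
```

A fixed threshold keeps the per‐frame computation simple, at the cost of having to tolerate sensor noise and small illumination changes between frames acquired during a pause.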

5.5. Calibration Targets

5.5.1. MARDI

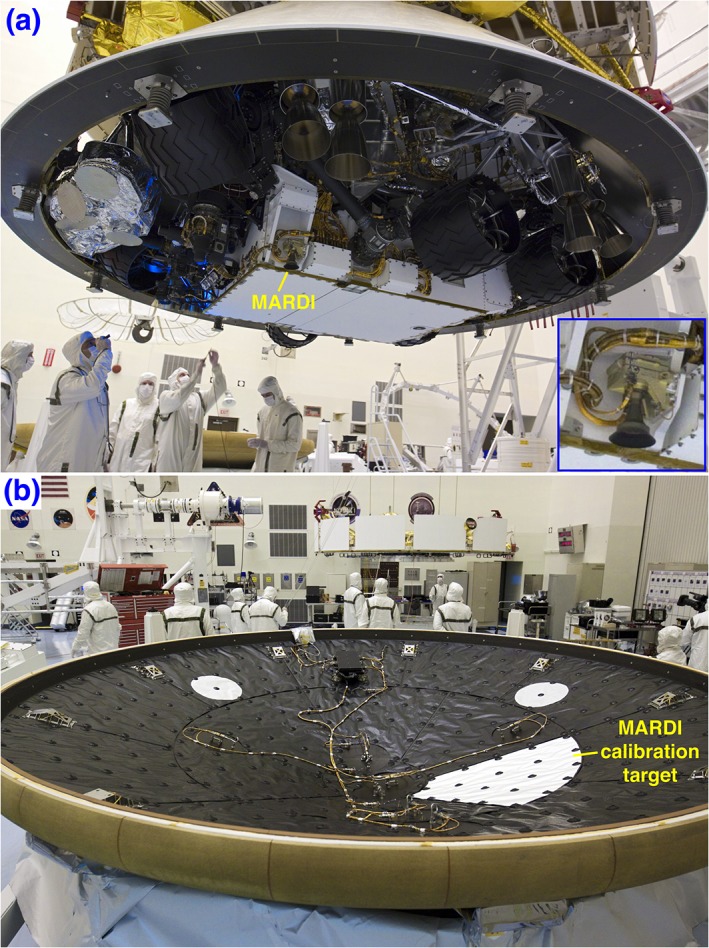

Serving as a white balance target during the MSL rover's descent to the Martian surface, the MARDI calibration target (Figure 7b) was a swatch of beta cloth (PTFE‐coated fiberglass cloth; Bron Aerotech BA 500BC). The target is a truncated 60° pie wedge‐shaped piece with a base of 34 cm and a side of 93 cm. Prelaunch calibration included imaging of a sample of target material with a standard color reference under close to identical illumination conditions.

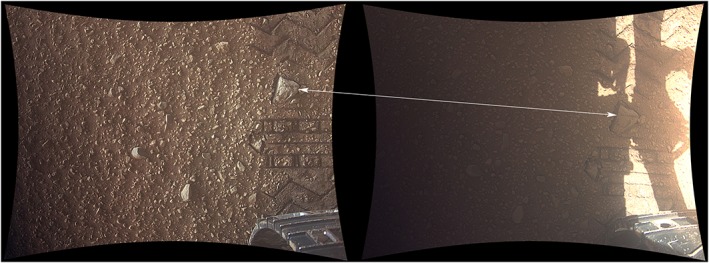

Figure 7.

MARDI hardware elements during final spacecraft assembly in October 2011 at the NASA Kennedy Space Center Payload Hazardous Servicing Facility in Florida, USA. (a) Illustrating the unobstructed view that MARDI would have for most of the descent, this photograph shows the camera head after the rover was packaged with the MSL descent stage inside the spacecraft backshell. The inset shows a close‐up view of the camera head. NASA photograph KSC‐2011‐7344. (b) MARDI calibration target mounted inside the heat shield. NASA photograph KSC‐2011‐7307.

5.5.2. Mastcam

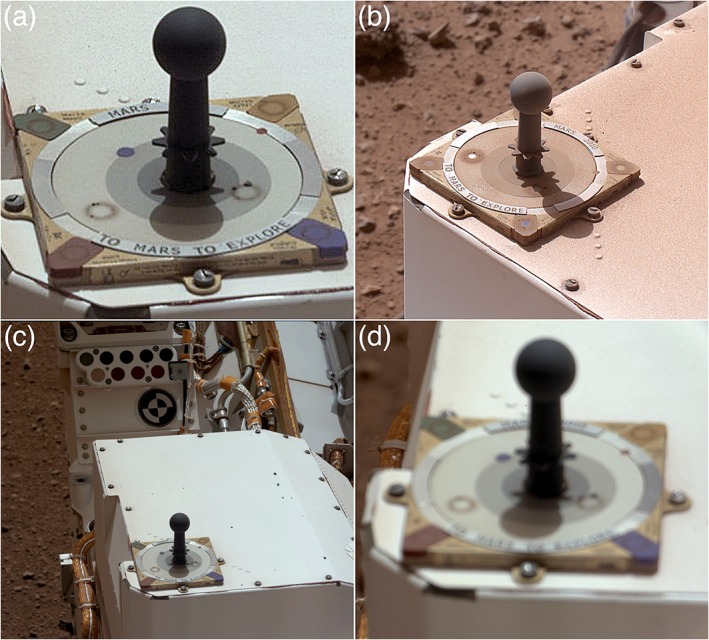

The pair of Mastcams share an 8 by 8 by 6 cm calibration target that provides a suite of reference color and gray swatches of known spectral reflectance and photometric properties (Figure 8). The target provides opportunities for validation of the instrument's radiometric calibration and tactical calibration of radiance images to relative reflectance or Lambert albedo in the Martian environment. The shadow cast by a central post (gnomon) accommodates assessment of the direct versus diffuse components of solar and sky irradiance incident on the target.

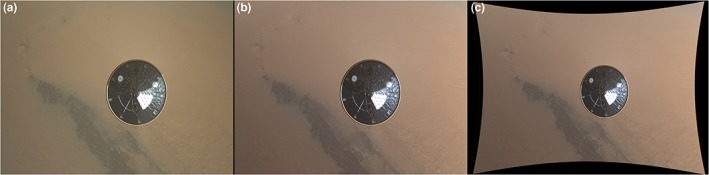

Figure 8.

The Mastcam calibration target on board Curiosity as viewed from three different cameras. The target is a flight spare of the MER Pancam calibration target, described by Bell et al. [2003] to which ring‐shaped “sweep” magnets were added to provide dust‐free areas on the color and gray swatches [see Bell et al., 2017]. (a) Details on the target, including initial sand and dust clinging to the embedded ring magnets, are evident in this sol 14 Mastcam‐34 view (from image 014ML0000210000100207E01). (b) The calibration target as viewed from a different camera position by the MSL MAHLI on sol 544 (portion of image 0544MH0003510000201493C00). (c) For comparison with the scale and focus of the view to the right in Figure 8d, this is the Mastcam‐34 view of the calibration target on sol 14 (image 014ML0000210000100207E01). (d) This is the Mastcam‐100 view on the same sol (image 0014MR0000210020100071E01). The target is too close to the Mastcam‐100 camera head for the camera to focus on it; nevertheless, sufficient pixels cover the red and gray swatches so as to be useful for in‐flight calibration [Bell et al., 2017].

The Mastcam calibration target is a modified flight spare from the MER Pancam investigation. The two preceding flight unit calibration targets were aboard the rovers Spirit and Opportunity [Bell et al., 2003; Bell et al., 2006]. The flight spare was modified to add six ring‐shaped “sweep” magnets [Madsen et al., 2003] approximately 1 mm under the surface of the color swatches and two of the gray scale rings to enable small parts of the target to remain relatively dust‐free throughout the surface mission; a similar strategy was employed for the Phoenix Mars lander Surface Stereo Imager [Leer et al., 2008]. Details of the reflectance and photometric properties of the target materials were described previously by Bell et al. [2003] and Bell et al. [2006]. Operationally, calibration target observations through all filters are acquired either before or after all multispectral and photometric sequences, and on a few other occasions; together these observations make up roughly 5% of the Mastcam sequences transmitted to the rover.

6. Instrument Accommodation

6.1. MARDI Camera Head

The MARDI camera head is mounted rigidly on the bottom front left (fore port) side of the Curiosity rover with the top of the sunshade aligned evenly with the bottom of the rover chassis (Figure 7a). Its optic axis points in the rover coordinate frame +Z axis (toward the ground), and the long dimension of the detector is oriented perpendicular to the direction of flight (in practice, the FOV of the camera is inverted relative to the normal video scan direction). This position was designed to provide a view of the Martian surface, during descent, that is unobstructed by hardware except the descending heat shield and a portion of the front left rover wheel that would drop into view during the rover's cable descent from the hovering “sky crane” descent stage [Prakash et al., 2008]. The shutter speed was selected to address the effects of parachute‐induced solid body rotation rates (large magnitude but moderately low frequency) and descent‐thruster‐induced vibration (small magnitude but higher frequency).

6.2. Mastcam Camera Heads and Pointing

The Mastcam camera heads are mounted on the “camera plate” at the top of Curiosity's RSM, along with the ChemCam mast unit [Maurice et al., 2012] and the rover's four (two primary and two spare) Navigation cameras [Maki et al., 2012]. Each camera head is bolted to the RSM camera plate via two mounting feet on the upper housing/filter wheel assembly, and at a “bipod” support near the front of the camera's sunshade. Viewed from the back, the 34 mm camera is mounted on the left and the 100 mm camera is mounted on the right, and the terms “left eye” and “right eye” or “Mastcam left” and “Mastcam right” or “ML” and “MR” are commonly used in the course of Mastcam operations. The relative position and orientation of the cameras, as measured during calibration, are included in the camera models in the archival (NASA PDS) data product labels.

The RSM provides pointing in azimuth and elevation relative to the rover coordinate system. The RSM can point the Mastcams +91° (up) to −87° (down) in elevation; azimuth pointing permits full 360° views. Because of the orientation of the Mastcams, the pointing directly corresponds to the raw image coordinate system, such that azimuth moves a target left and right in image space and elevation moves it up and down. Mastcam imposed a pointing error requirement of 1/10 of the Mastcam‐100 FOV or about 0.5°; in practice, the control is better and pointing stability during exposures has not been an issue. Operationally, Mastcam pointing is commanded in a variety of coordinate systems, both rover‐fixed and relative to the surface. Pointing can be specified either angularly or as rectangular coordinates, whichever is more appropriate to the imaging use.

An idiosyncrasy of the Curiosity deck layout and the placement and orientation of the RSM coordinate system is that the Mastcam calibration target is positioned on the rover such that the RSM hard stop lies between the optimal pointing angles for the left and right Mastcams. Thus, to view the target with both cameras, the RSM has to travel almost a full 360° between images, a minor operational inefficiency.

A flex cable connects each Mastcam to its DEA, providing power to the camera head and forming the communications link between the two. These cables pass through azimuth and elevation twist capsules, which account for the relatively long total length of the flex cable. This design simplifies cable management, and is why the RSM cannot point below −87° elevation.

6.3. DEAs and Camera Head to DEA Harness

The DEA package, which contains the DEAs for all four cameras (Mastcam‐34, Mastcam‐100, MARDI, and MAHLI), is located inside the rover's environmentally controlled body (the warm electronics box). Each Mastcam camera head is connected to its respective DEA by a multisegment flex and round‐wire cable, approximately 6 m in total length, that is integrated with the rover's RSM. MARDI uses a conventional round‐wire cable harness also provided by JPL. Unlike MAHLI, which is limited in its maximum data rate by the longer harness on the rover's robotic arm [Edgett et al., 2012], neither Mastcam nor MARDI has such a limitation, and both can run at the full signaling rate of 20 MHz.

6.4. Calibration Targets

The MARDI white balance target was mounted on the interior surface of the MSL heat shield (Figure 7b) so that it would be approximately centered in the MARDI FOV at heat shield release.

The Mastcam calibration target was required to be visible to and no closer than 1 m from the Mastcams. In addition, the science team desired the calibration target to be located such that it would typically be fully illuminated by the Sun (e.g., no shadows from hardware) between at least 10:00 and 14:00 local solar time on Mars. There was also a desire to mount the target at a location on the rover at which it would be viewed at an emission angle similar to or the same as the Pancams' views of their calibration targets on the MER rovers (camera pointing elevation −36.5° ± 2.5° relative to a horizontal elevation of 0°), so as to take advantage of the detailed characterization work [Bell et al., 2003; Bell et al., 2006] already performed.

Ultimately, the Mastcam calibration target was mounted on the top of the Rover Pyro Firing Assembly box at the right rear of the rover deck (Figure 8). As described by Bell et al. [2017], pointing the Mastcam‐34 at the calibration target means positioning it at rover‐frame azimuth 189.2°, elevation −32.4°; for the Mastcam‐100, the elevation is the same and the azimuth is 176.2°. The distance from the center of the base of the calibration target gnomon to each Mastcam sapphire window is about 1.2 m. As Bell et al. [2017] discuss, this is too close for acquisition of in‐focus Mastcam‐100 images, but the greater number of Mastcam‐100 pixels (relative to Mastcam‐34 pixels) covering the target calibration surfaces compensates for the blurred view and does not compromise use of the target as a relative calibration source for Mastcam‐100 data.

7. Instrument Capabilities and Onboard Data Processing

7.1. Full‐Frame and Subframe Imaging

Mastcam and MARDI image size (the number of rows and columns of pixels) is commanded in whole number multiples of 16. Full‐frame images are 1600 by 1200 pixels in size or larger (the detector is 1640 by 1214 pixels in size; because dimensions must be multiples of 16, the maximum number of columns is 1648 pixels). Subframes, when desired, are commanded at the time of image acquisition. Mastcam full‐frame images are vignetted, so to avoid the corner darkening, subframes (often operationally called “full frames”) are typically 1344 × 1200 pixels, as these permit fewer images in a mosaic. An alternative Mastcam mosaic format, 1200 × 1200 pixels, was used early in the mission. Mastcam solar tau measurements are typically subframed 256 × 256 pixels, and some engineering images of the drill bit and sample portioner tube are also occasionally subframed 256 × 256 pixels.
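The multiple‐of‐16 rule can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function names are hypothetical and do not correspond to any flight or ground software interface. It confirms that rounding the 1640‐column detector width up to the next multiple of 16 gives the 1648‐column maximum quoted above, and that each image format mentioned in this section satisfies the rule.

```python
def round_up_to_multiple(n: int, step: int = 16) -> int:
    """Return the smallest multiple of `step` that is >= n."""
    return -(-n // step) * step  # ceiling division, then scale back up


def is_commandable(columns: int, rows: int, step: int = 16) -> bool:
    """True if both dimensions are positive whole-number multiples of `step`."""
    return all(v > 0 and v % step == 0 for v in (columns, rows))


# Detector width taken from the text; the helper names are assumptions.
DETECTOR_COLUMNS = 1640
max_columns = round_up_to_multiple(DETECTOR_COLUMNS)

# Image formats (columns, rows) named in this section.
formats = {
    "full frame": (1600, 1200),
    "typical mosaic subframe": (1344, 1200),
    "early-mission mosaic subframe": (1200, 1200),
    "solar tau subframe": (256, 256),
}
all_valid = all(is_commandable(c, r) for c, r in formats.values())
```

Here `max_columns` evaluates to 1648 and `all_valid` to `True`.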

7.2. MARDI Descent Operation

Operation of MARDI was required to be decoupled from, and to occur independently of, the rover during EDL. Normally, the MSL science instrument command process requires a “handshake” from the instrument to the rover. To eliminate any chance of a MARDI problem disrupting EDL events, MARDI was operated in a special “quiet” mode that suppressed the handshake. The rover simply commanded MARDI to begin imaging, and the rest occurred internal to MARDI. Each command was sent twice. The EDL image sequence was commanded as 1504 frames at the maximum frame rate, resulting in a video duration that would be longer than any possible descent time. This command was designed to complete normally instead of being terminated at landing; thus, some number of images were intended to be acquired of the surface beneath the camera after touchdown. A complicated downlink plan was devised to sample the full sequence because uplink commanding of the science instruments was not intended to begin until almost a week after landing. Thus, many images were returned out of order, sampled from the entire descent image data set on the basis of the a priori worst‐case landing sequence rather than with the best frames first. The actual landing took less than half the time of the worst case.

7.3. Mastcam Focus

7.3.1. Manual and Previous Focus

Each Mastcam can be focused “manually” or it can be autofocused. A manual focus is one in which the lens focus group is commanded to move to a specified motor count position and acquire an image. The cameras can also be commanded to use the same focus setting as the immediately previous image (i.e., a command to not change focus before acquiring the next image).

Bell et al. [2017] found that the relationship between focus group stepper motor count and distance to a target is

| (1) |

| (2) |

in which $d$ is the distance from the camera to the focused image target in meters, $T$ is the temperature of the camera in degrees Celsius, and $f_{34}$ and $f_{100}$ are the focus motor counts reported by the Mastcam‐34 and Mastcam‐100 cameras, respectively.

7.3.2. Autofocus

The Mastcam autofocus command instructs the camera focus stepper motor to move the lens focus group to a specified, estimated starting (seed) position closer to the camera than the actual focus position (i.e., far‐field out of focus) and collect an image, move a specified number of steps out from that position and collect another image, and keep doing so until reaching a commanded total number of images, each separated by a specified motor count increment. Each of these images is JPEG compressed (Joint Photographic Experts Group; see CCITT [1993]) with the same compression quality factor applied. The file size of each compressed image is a measure of scene detail that is in turn a function of focus (an in‐focus image shows more detail than a blurry, out‐of‐focus view of the same scene). In practice, assuming that the seed position was on the short side of focus and the final position on the far side of focus, the file sizes would start low, increase to a near maximum, and then decrease. As illustrated by Edgett et al. [2012], the camera determines the relationship between JPEG file size and motor count and fits a parabola to the three neighboring points of maximum file size. The vertex of the parabola provides a computation of the best focus motor count position. Having made this determination, the focus stepper motor moves the lens focus group to that best motor position and acquires an image. This last image is stored, and the earlier images used to determine the autofocus position are not.

Autofocus can be performed over the entire Mastcam field of view, or it can be performed on a subframe that corresponds to the portion of the scene that includes the object(s) intended to be in focus. It should be noted that this technique is not perfect, as a number of factors associated with the scene itself can spoof the approach (e.g., half of the scene is very active, while half is not; the scene is uniformly active but covers a distance greater than the depth of field of the lens). Also, success is highly dependent on the seed estimate and on the number and size of increments (e.g., if the seed is too close and the number and size of increments do not carry the lens past focus, or, in the opposite case, if the seed is already past focus and so the increments never achieve focus).

7.4. Mastcam Filter Wheel

Each Mastcam filter wheel (Figures 3a and 3b) position can be commanded either with an explicit, separate command to rotate to a specific position or implicitly by commanding a filter position when commanding image acquisition. The filter wheel gear ratio is such that it takes 2346.67 stepper motor counts to rotate the wheel by 360°, or 293.33 motor counts to rotate from one filter position to the next. Since it is impossible to move by fractional motor counts, this is rounded up to 294 counts. For a single revolution, this leads to a negligible amount of positioning error, but if left to accumulate, the mismatch would eventually lead to noticeable misalignment of the filters. To avoid this, the wheel is only commanded over a total angle of 315°; that is, if the wheel is at position 7 and position 0 is desired, the wheel is driven seven positions backward instead of one position forward, even though the latter would be faster. The motor is typically driven at a rate of 150 motor counts per second, so each filter step takes slightly less than 2 s to accomplish. The magnet pair at each filter position could be used for positioning drive control. However, in practice, filter wheel operation has always been skip‐free, so positioning is done using dead reckoning. A homing algorithm is implemented in the flight software that can move the wheel to the filter 0 location without any knowledge of its initial position. Periodic filter homing operations can be performed if there is reason to suspect filter wheel slippage.
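The filter wheel arithmetic above can be worked through in a short sketch. The constants come from the text; the function name, the sign convention (negative counts meaning backward rotation), and the assumption of eight equally spaced positions numbered 0 through 7 are illustrative choices rather than a description of the flight software.

```python
from typing import Tuple

COUNTS_PER_REV = 2346.67   # motor counts per 360 degrees (from the text)
N_POSITIONS = 8            # positions 0..7 (assumed equally spaced)
COUNTS_PER_STEP = 294      # 293.33 rounded up to a whole count
DRIVE_RATE = 150.0         # motor counts per second


def filter_move(current: int, target: int) -> Tuple[int, float]:
    """Return (signed motor counts, duration in seconds) for a filter change.

    The wheel never crosses the 7 -> 0 boundary: a move from position 7
    to position 0 is driven seven positions backward rather than one
    position forward, so rounding error cannot accumulate over laps.
    """
    for p in (current, target):
        if not 0 <= p < N_POSITIONS:
            raise ValueError("filter position must be in 0..7")
    steps = target - current          # negative means backward
    counts = steps * COUNTS_PER_STEP
    return counts, abs(counts) / DRIVE_RATE


# Rounding mismatch if the wheel were allowed to complete a full lap.
mismatch_per_rev = N_POSITIONS * COUNTS_PER_STEP - COUNTS_PER_REV

one_step = filter_move(0, 1)
seven_to_zero = filter_move(7, 0)
```

A single step takes 294 / 150 = 1.96 s, and the 7‐to‐0 move is 2058 counts backward, or about 13.7 s. A full forward lap would overshoot by roughly 5.3 counts, which is the error that would build up with each revolution if wrapping were permitted.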

7.5. Exposure

The exposure duration for each Mastcam or MARDI image can be commanded manually or by using the instrument's auto‐exposure capability. The exposure duration can range from 0 to 838.8 s (i.e., up to 13.98 min). Exposure times are commanded in units of 0.1 ms. Manual exposures require the user to know something about the expected illumination conditions.
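As a small illustration of the exposure units, the sketch below converts a desired exposure time in seconds to a count of 0.1 ms units and rejects values outside the stated range. The function name and the choice to round to the nearest unit are assumptions for the example, not part of the documented command interface.

```python
UNITS_PER_SECOND = 10_000   # one unit = 0.1 ms (from the text)
MAX_EXPOSURE_S = 838.8      # upper limit quoted in the text


def exposure_to_units(seconds: float) -> int:
    """Convert an exposure time in seconds to whole 0.1 ms units."""
    if not 0 <= seconds <= MAX_EXPOSURE_S:
        raise ValueError("exposure must be between 0 and 838.8 s")
    return round(seconds * UNITS_PER_SECOND)


ten_ms = exposure_to_units(0.010)            # a 10 ms exposure
longest = exposure_to_units(MAX_EXPOSURE_S)  # the maximum exposure
max_minutes = MAX_EXPOSURE_S / 60            # about 13.98 min
```

A 10 ms exposure corresponds to 100 units, and the 838.8 s maximum to 8,388,000 units.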