Abstract

Tensor factorization models offer an effective approach to convert massive electronic health records into meaningful clinical concepts (phenotypes) for data analysis. These models need a large number of diverse samples to avoid population bias. An open challenge is how to derive phenotypes jointly across multiple hospitals when direct patient-level data sharing is not possible (e.g., due to institutional policies). In this paper, we developed a novel solution to enable federated tensor factorization for computational phenotyping without sharing patient-level data. We developed secure data harmonization and federated computation procedures based on the alternating direction method of multipliers (ADMM). Using this method, the hospitals iteratively update tensors and transfer secure summarized information to a central server, and the server aggregates the information to generate phenotypes. We demonstrated with real medical datasets that our method resembles the centralized training model (based on combined datasets) in terms of accuracy and phenotype discovery while respecting privacy.

Keywords: ADMM, Federated approach, Phenotype

1 INTRODUCTION

Electronic health records (EHRs) have become one of the most important sources of information about patients. They provide insight into diagnoses [19] and prognoses [11] and assist in the development of cost-effective treatment and management programs [1, 12]. However, meaningful use of EHRs comes with many challenges, for example, diversity of populations, heterogeneity of information, and data sparseness. The large degree of missing and erroneous records also complicates the interpretation and analysis of EHRs. Furthermore, clinical scientists are not used to complex and high-dimensional EHR data [8, 21]. Instead, they are more accustomed to reasoning based on accurate and concise clinical concepts (or phenotypes) such as diseases and disease subtypes. Useful phenotypes should capture multiple aspects of the patients (e.g., diagnosis, medication, and lab results) and be both sensitive and specific to the target patient population. Although some phenotypes can be derived easily from EHR data, a wide range of clinically important ones, such as disease subtypes, cannot be obtained in a straightforward manner. The transformation of EHR data into useful phenotypes, or phenotyping, is a fundamental challenge in learning from EHR data. Current approaches for translating EHR data into useful phenotypes are typically slow, manually intensive, and limited in scope [4, 5]. Tensor factorization methods overcome several disadvantages of these approaches and have shown great potential in discovering meaningful phenotypes from complicated and heterogeneous health records [13, 14, 28].

Nevertheless, phenotypes developed from one hospital are often limited by a small sample size and inherent population bias. Ideally, we would like to compute phenotypes on a large population with data combined from multiple hospitals. However, this requires healthcare data sharing and exchange, which are often impeded by policies motivated by privacy concerns. For example, PCORnet data privacy guidance does not allow sharing of record-level research participant information, and it recommends a minimum count threshold (e.g., 10) for aggregate data sharing [25]. The same threshold is used in Informatics for Integrating Biology & the Bedside (I2B2) [24], a well-known system developed by the National Center for Biomedical Computing based at Partners HealthCare. These real-world challenges motivate the development of a federated phenotyping method that learns phenotypes across multiple hospitals with mitigated privacy risks.

In the federated method, the hospitals perform most of the computation, and a semi-trusted server supports the hospitals by aggregating their results. To update their tensors, the hospitals still need some form of summarized patient information (not patient-level data) from one another. A challenge of federated tensor factorization is that the summarized information can disclose the patient-level data. For example, an objective function of tensor factorization is ‖𝒳 − 𝒪‖², where 𝒳 is a tensor to be estimated using an observed tensor 𝒪. Because the objective function is not linearly separable over hospitals, tensor factorization at each hospital inevitably requires the others' patient-level data. Thus, hospitals should share summarized information that does not disclose the patient-level data yet still carries accurate phenotypes derived from it.

However, sharing the summarized information raises another challenge when the data are spread over many hospitals in relatively small portions, or when the data are unevenly distributed. Because of sampling error, noise in the summarized information can increase with small patient populations, and accuracy can then decrease or become unstable. Therefore, we need to ensure the robustness of the summarized information even with small or unevenly distributed samples.

In this paper, we develop federated Tensor factorization for privacy preserving computational phenotyping (Trip), a new federated framework for tensor factorization over horizontally partitioned data (i.e., data are partitioned based on rows or patients). Our major contributions are the following:

Accurate and fast federated method: Trip is as accurate as the centralized training model (based on combined datasets). The accuracy of Trip is robust to the patient population size and distribution. Trip is fast compared to the centralized training model thanks to federated computation.

Rigorous privacy and security analysis: Trip preserves the privacy of patient data by transferring summarized information. We prove that the summarized information does not disclose the patient data.

Phenotype discovery from real datasets: The phenotypes that Trip discovers without sharing patient-level data are the same as the phenotypes derived from the combined data. Trip even discovers some phenotypes that an individual hospital cannot discover because of its biased and limited population.

2 RELATED WORKS

Many privacy-preserving data mining algorithms aim at constructing a global model only from aggregated statistics that participating institutions generate locally on their own data, without seeing others' data at a fine-grained level [22, 27]. More rigorous privacy criteria such as differential privacy [10], which introduces noise, have been applied to several classification models through parameter or objective perturbation [6]. However, this is not desirable for computational phenotyping applications because noise can lead to “ghost” phenotypes, which do not exist in the original databases and might mislead healthcare providers with severe consequences. In this work, we consider privacy protection in the sense of the former privacy-preserving data mining methods and compute phenotypes by exchanging only summary statistics calculated by local participants.

Tensor factorization has emerged as a promising solution for computational phenotyping thanks to its interpretability and flexibility. In the medical context, tensor factorization has been adapted to enforce sparsity constraints [13], model interactions among groups of the same modality [14], and absorb prior medical knowledge via customized regularization terms [28]. Our goal is to develop a federated tensor factorization framework that computes phenotypes in a privacy-preserving way. This is different from distributed tensor factorization models [7, 16] and grid tensor factorization models [9]. Those models assume data spread across different but interconnected computer systems, in which the communication cost is negligible and data and computation can be arbitrarily reallocated to improve parallelization efficiency. In contrast, our Trip framework deals with data stored in separate sources (hospitals at different locations) and must cross policy barriers using accepted practices that respect privacy.

3 PRELIMINARIES

We first describe some preliminaries of tensor factorization, and summarize the notations and symbols in Table 1.

Table 1. Notations and symbols

| Symbol | Description |
| --- | --- |
| ∘ | outer product |
| ⊚ | Khatri-Rao product |
| R | number of ranks |
| N | number of modes (order) |
| K | number of hospitals |
| A, B | matrix |
| 𝒳, 𝒪 | tensor |
| O(n) | matricized tensor of 𝒪 on the nth mode |

Definition 3.1

The outer product of N vectors a(1) ∘ ··· ∘ a(N) forms an N-order rank-one tensor 𝒳.

Definition 3.2

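The Kronecker product of two vectors a ∈ ℝ^(Ia×1) and b ∈ ℝ^(Ib×1) is

$$\mathbf{a} \otimes \mathbf{b} = \begin{bmatrix} a_1 \mathbf{b} \\ \vdots \\ a_{I_a} \mathbf{b} \end{bmatrix} \in \mathbb{R}^{I_a I_b \times 1}.$$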

Definition 3.3

The Khatri-Rao product of two matrices A ∈ ℝ^(IA×R) and B ∈ ℝ^(IB×R) is A ⊚ B = [a1 ⊗ b1 ··· aR ⊗ bR] ∈ ℝ^(IA·IB×R).

Definition 3.4

Matricization reshapes a tensor into a matrix by unfolding the elements of the tensor. The mode-n matricization of tensor 𝒪 is denoted as O(n).
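Definitions 3.1 to 3.4 can be made concrete with a few lines of NumPy. The following is a minimal sketch, not code from the paper: the helper names `khatri_rao` and `unfold` are hypothetical, and the column ordering of the unfolding (earlier modes vary fastest) is an assumed convention.

```python
import numpy as np

def khatri_rao(A, B):
    """Column-wise Kronecker product: column r is kron(A[:, r], B[:, r])."""
    assert A.shape[1] == B.shape[1], "A and B need the same number of columns"
    return np.stack([np.kron(A[:, r], B[:, r]) for r in range(A.shape[1])], axis=1)

def unfold(T, mode):
    """Mode-n matricization. The remaining modes are flattened in
    column-major order, so earlier modes vary fastest (assumed convention)."""
    return np.reshape(np.moveaxis(T, mode, 0), (T.shape[mode], -1), order="F")

a = np.array([1.0, 2.0])
b = np.array([1.0, 0.0, -1.0])
c = np.array([2.0, 3.0])

X = np.einsum("i,j,k->ijk", a, b, c)   # outer product: rank-one 3-order tensor
kron_ab = np.kron(a, b)                # Kronecker product of two vectors
KR = khatri_rao(np.eye(2), np.ones((3, 2)))
X1 = unfold(X, 0)                      # 2 x 6 matrix; every column is a multiple of a
```

Because `X` is rank-one, each of its unfoldings is a rank-one matrix, which is a quick way to sanity-check an unfolding routine.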

Tensor factorization is a dimensionality reduction approach that represents the original tensor with lower-dimensional latent factor matrices. The CANDECOMP/PARAFAC (CP) [3] model is the most common tensor factorization. It approximates the original tensor 𝒪 as 𝒳, a linear combination of R rank-one tensors, each of which is the outer product of N vectors. That is, CP tensor factorization is represented as

$$\mathcal{X} = \sum_{r=1}^{R} A^{(1)}(:, r) \circ \cdots \circ A^{(N)}(:, r),$$

where A(n)(:, r) refers to the rth column of A(n). Here, A(n) is the nth factor matrix, and R is referred to as the rank of 𝒳. The columns of the factor matrices represent latent concepts that describe the data in lower dimensions.

Tensor factorization for phenotyping computes a factorized tensor 𝒳 that contains latent medical concepts from the data (the observed tensor 𝒪). 𝒳 consists of the R most prevalent phenotypes. The nth factor matrix A(n) defines which elements of mode n make up the phenotypes. That is, the rth phenotype consists of the rth columns of the factor matrices [13].
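As an illustration of the CP model described above, the sketch below builds a 3-order tensor from three factor matrices in two equivalent ways: with a single contraction and as an explicit sum of R rank-one terms. The sizes and random factor matrices are hypothetical, and the code is restricted to N = 3 for readability.

```python
import numpy as np

def cp_reconstruct(factors):
    """CP tensor for N = 3: sum over r of the outer products of the r-th columns."""
    A1, A2, A3 = factors
    return np.einsum("ir,jr,kr->ijk", A1, A2, A3)

rng = np.random.default_rng(0)
I1, I2, I3, R = 5, 4, 3, 2                      # hypothetical mode sizes and rank
factors = [rng.random((size, R)) for size in (I1, I2, I3)]

X = cp_reconstruct(factors)

# The same tensor assembled one rank-one term (one "phenotype") at a time
X_terms = sum(
    np.einsum("i,j,k->ijk", *(A[:, r] for A in factors)) for r in range(R)
)
```

In the phenotyping reading, the r-th term of `X_terms` is the contribution of the r-th phenotype, defined by the r-th columns of the feature-mode factor matrices.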

The objective function of the tensor factorization with regularization terms for pairwise distinct constraints [28] is formulated as

| (1) |

It is rewritten with respect to mode-n matricization

| (2) |

where Π(n) = A(N) ⊚ … ⊚ A(n+1) ⊚ A(n−1) ⊚ … ⊚ A(1). This is our decomposition goal in the rest of this paper. Solving the problem (1) while preserving privacy is technically challenging because the tensor residual term 𝒳 − 𝒪 inherently contains other hospitals’ patient data that involve sensitive information.
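The matricized form relies on the identity that the mode-n unfolding of a CP tensor equals A(n)Π(n)ᵀ. The sketch below checks this numerically for N = 3. The helpers `khatri_rao`, `unfold`, and `pi_matrix` are hypothetical names, and the unfolding order (earlier modes vary fastest) is an assumption chosen to match the ordering of the Khatri-Rao factors in Π(n).

```python
import numpy as np
from functools import reduce

def khatri_rao(A, B):
    """Column-wise Kronecker product."""
    return np.stack([np.kron(A[:, r], B[:, r]) for r in range(A.shape[1])], axis=1)

def unfold(T, mode):
    """Mode-n matricization with earlier modes varying fastest."""
    return np.reshape(np.moveaxis(T, mode, 0), (T.shape[mode], -1), order="F")

def pi_matrix(factors, n):
    """Khatri-Rao product of all factor matrices except mode n, highest mode first."""
    others = [factors[m] for m in reversed(range(len(factors))) if m != n]
    return reduce(khatri_rao, others)

rng = np.random.default_rng(1)
factors = [rng.random((size, 3)) for size in (6, 5, 4)]   # hypothetical sizes, R = 3
X = np.einsum("ir,jr,kr->ijk", *factors)

# Largest absolute deviation between unfold(X, n) and A(n) Pi(n)^T over all modes
max_gap = max(
    np.abs(unfold(X, n) - factors[n] @ pi_matrix(factors, n).T).max()
    for n in range(3)
)
```

A `max_gap` at the level of floating-point round-off confirms that the unfolding convention and the factor ordering in Π(n) agree.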

4 FEDERATED TENSOR FACTORIZATION

We first provide a general overview of Trip and then formulate the problem with iterative updating rules for optimization.

4.1 Overview

Trip is a federated tensor factorization method for horizontally partitioned patient data. We assume the data are horizontally partitioned along the patient mode, that is, hospitals have their own patient data on the same medical features (Figs. 1, 2). Let us assume that there are K hospitals and a central server, where the server distributes most of the decomposition computation to the hospitals and aggregates their intermediate results. We assume the Honest-but-Curious adversary model, in which the server and hospitals are curious about the data of others but do not maliciously manipulate intermediate results [18].

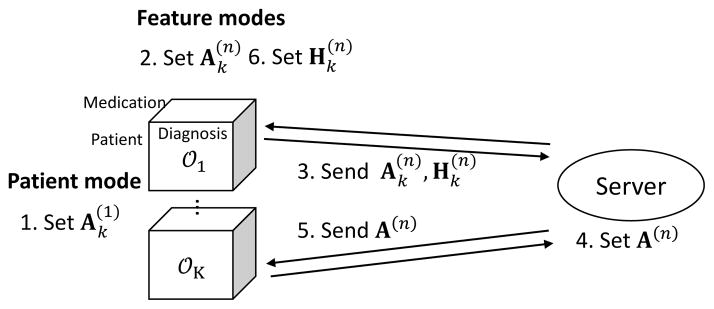

Figure 1. Process of federated tensor factorization.

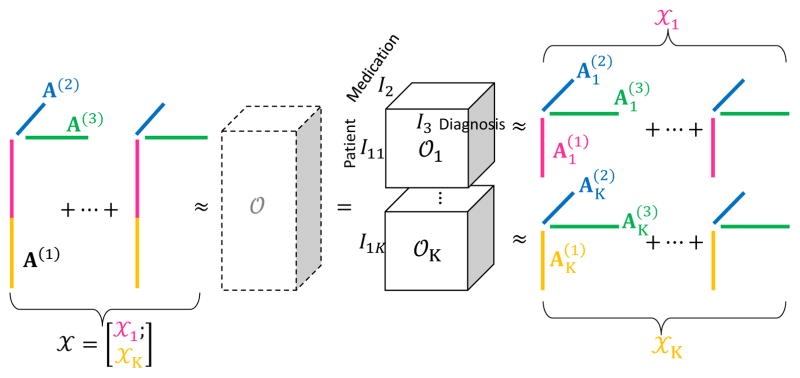

Figure 2. Equivalence between tensor factorization with respect to each local tensor 𝒪k and tensor factorization with respect to global tensor 𝒪. Without 𝒪, tensor factorization that is globally optimal across hospitals can be achieved via local tensor factorization.

A local observed tensor 𝒪k is the local patient data in hospital k (Fig. 2), and a local factorized tensor 𝒳k is the factorized tensor generated from the local observed tensor in hospital k. 𝒳k has N modes for the patients and the medical features (e.g., medication, diagnosis). In this case, N = 3 because we have modes for patient, medication, and diagnosis. The horizontally partitioned patient mode of each 𝒳k is generated from a distinct set of patients whose size is I1k. For simplicity, the first mode (n = 1) always denotes the patient mode. In contrast, the N − 1 feature modes of each 𝒳k are shared by all hospitals and are generated from the same sets of medical features, whose sizes are In (n = 2, …, N). For example, diagnosis and medication can be the feature modes. The size of 𝒳k is I1k × I2 × ··· × IN, ∀k.

We assume that the factor matrices on the feature modes of the local factorized tensor 𝒳k are the same for all hospitals. This assumption forces all hospitals to share the same phenotypes. It also makes the objective function Ψ in Eq. (1) linearly separable over hospitals; consequently, hospitals can update their local factorized tensors using other hospitals' patient data only indirectly, which respects privacy.

The local factorized tensor 𝒳k is computed in the following steps (Fig. 1). First, in the patient mode, hospital k (k = 1, …, K) computes its local factor matrix independently (step 1). For the feature modes, hospital k computes the local factor matrices (step 2) and sends them together with the Lagrangian multipliers to the server (step 3). The server then generates the harmonized factor matrix (global factor matrix) by combining all the local factor matrices with the Lagrangian multipliers (step 4). After receiving the global factor matrix (step 5), hospital k updates the Lagrangian multipliers (step 6). Hospitals and the server repeat this procedure until the local factor matrices converge. Throughout the procedure, the global factor matrices retain phenotypes from the local factor matrices without directly using the local patient data.

4.2 Formulation

We first formulate an objective function for federated tensor factorization that is separable over hospitals. The objective function for tensor factorization, Ψ in Eq. (1), is reformulated with respect to the local factorized tensors.

𝒳k is decomposed into a local factor matrix for the patient mode and local factor matrices for the feature modes (n ≥ 2). We assume that the local factor matrices of the feature modes from all hospitals are equal to the global factor matrix (Fig. 2), i.e.,

| (3) |

This assumption is reasonable because all hospitals aim to have the same phenotypes and share them with others. By assuming Eq. (3), the horizontal concatenation of the local factor matrices of patient mode forms the (global) factor matrix A(1) (Fig. 2):

| (4) |

Accordingly, we represent the global factorized tensor 𝒳 in Eq. (1) with respect to the local factorized tensor 𝒳k (Fig. 2) as

and the objective function Ψ becomes linearly separable over k, that is, a sum of one term per hospital. The optimization problem for tensor factorization is reformulated with respect to local tensors:

| (5) |

Here, the non-convex second term in Eq. (1) is replaced with a convex term using B(n) such that A(n) = B(n). We assume that the pairwise constraint is applied only to the feature modes. This assumption is reasonable because phenotypes are defined solely as combinations of medical features in the feature modes.
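The separability argument can be checked numerically for the squared-error term alone. The sketch below is an illustration under stated assumptions (random hypothetical data, N = 3, no regularization terms): when all hospitals share the feature-mode factor matrices, the global squared error equals the sum of the per-hospital squared errors.

```python
import numpy as np

rng = np.random.default_rng(2)
R, I2, I3 = 2, 4, 3
patients_per_hospital = [5, 3, 6]                   # hypothetical local patient counts

A2 = rng.random((I2, R))                            # shared feature-mode factor matrices
A3 = rng.random((I3, R))
A1_local = [rng.random((n, R)) for n in patients_per_hospital]
O_local = [rng.random((n, I2, I3)) for n in patients_per_hospital]

def cp(A1):
    """CP tensor built from a patient-mode matrix and the shared feature factors."""
    return np.einsum("ir,jr,kr->ijk", A1, A2, A3)

# Each hospital evaluates its own squared error on its own data
local_losses = [np.sum((cp(A1k) - Ok) ** 2) for A1k, Ok in zip(A1_local, O_local)]

# Global view: concatenate local pieces along the patient axis
A1 = np.concatenate(A1_local, axis=0)
O = np.concatenate(O_local, axis=0)
global_loss = np.sum((cp(A1) - O) ** 2)
```

Here `global_loss` and `sum(local_losses)` agree up to round-off, so no hospital needs another hospital's tensor to evaluate its share of this term.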

Augmented Lagrangian function ℒ for the new optimization problem (5) is

where the hospitals' local multipliers and Y(n) are the Lagrangian multipliers. The penalty terms multiplied by the parameters ω and μ improve the convergence properties of ℒ (i.e., the method of multipliers) [23] during the federated optimization in Section 4.3.

4.3 Federated optimization

The optimization problem (5) is then solved via consensus alternating direction method of multipliers (ADMM) [2], which decomposes the original problem into sub-problems using auxiliary variables and ensures convergence to a stationary point even for nonconvex problems [15]. Our problem is decomposed into sub-problems for hospitals with respect to the local factor matrices. Each local factor matrix is updated in turn while the other local factor matrices are fixed. Once all hospitals have updated their local factor matrices, the server updates the global factor matrix and sends it back to every hospital. Hospitals and the server repeat this procedure until the local factor matrices converge or the maximum number of iterations is reached.
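The communication pattern of consensus ADMM can be illustrated on a much simpler problem. The sketch below is not Trip's update rule (Eqs. 7 to 13): it applies the generic scaled-form consensus ADMM to a least-squares problem split over three hypothetical hospitals, with an assumed penalty parameter `rho`. Each hospital keeps its data private and shares only its local estimate and dual variable, while the server averages them.

```python
import numpy as np

rng = np.random.default_rng(3)
K, d, rho = 3, 3, 10.0                              # hospitals, dimension, assumed penalty
M = [rng.normal(size=(20, d)) for _ in range(K)]    # private design matrix per hospital
x_true = np.array([1.0, -2.0, 0.5])
b = [Mk @ x_true + 0.01 * rng.normal(size=20) for Mk in M]

x = [np.zeros(d) for _ in range(K)]   # local variables, one per hospital
u = [np.zeros(d) for _ in range(K)]   # scaled dual variables (multipliers)
z = np.zeros(d)                       # consensus variable kept by the server

for _ in range(500):
    # Hospital step: closed-form minimizer of the local augmented Lagrangian
    x = [
        np.linalg.solve(Mk.T @ Mk + rho * np.eye(d), Mk.T @ bk + rho * (z - uk))
        for Mk, bk, uk in zip(M, b, u)
    ]
    # Server step: aggregate local variables and multipliers
    z = np.mean([xk + uk for xk, uk in zip(x, u)], axis=0)
    # Dual step: each hospital closes the gap between local and global variables
    u = [uk + xk - z for xk, uk in zip(x, u)]

# Reference solution computed on the pooled data (the "central model")
z_central = np.linalg.lstsq(np.vstack(M), np.concatenate(b), rcond=None)[0]
```

The consensus variable `z` approaches the pooled-data solution `z_central` even though the server never sees `M` or `b`, which is the same structural property that Trip exploits.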

4.3.1 Patient mode

Because the factor matrix for patient mode does not need to be shared, each hospital updates the local factor matrix without sharing the intermediate results. The local matricized residual tensor on patient mode is

Horizontal concatenation of the local matricized residual tensors from the K hospitals forms the global matricized residual tensor A(1)Π(1)T − O(1). To compute the local patient-mode factor matrix, we separate the first term of Ψ in Eq. (1) across hospitals as

| (6) |

Setting the derivative of Ψ with respect to the local patient-mode factor matrix to zero yields the closed-form update

| (7) |
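Eq. (7) itself is not reproduced here, so the following sketch shows only the standard unregularized least-squares update that results from setting such a derivative to zero: with the feature-mode factors fixed, the patient-mode factor is O(1)k Π (ΠᵀΠ)⁻¹. Treat this form, the helper names, and the noiseless toy data as assumptions; the paper's update may include additional terms.

```python
import numpy as np

def khatri_rao(A, B):
    """Column-wise Kronecker product."""
    return np.stack([np.kron(A[:, r], B[:, r]) for r in range(A.shape[1])], axis=1)

def unfold(T, mode):
    """Mode-n matricization with earlier modes varying fastest."""
    return np.reshape(np.moveaxis(T, mode, 0), (T.shape[mode], -1), order="F")

rng = np.random.default_rng(4)
R = 2
A1_true = rng.random((7, R))                        # hypothetical local patient factors
A2, A3 = rng.random((5, R)), rng.random((4, R))     # fixed feature-mode factors
O_k = np.einsum("ir,jr,kr->ijk", A1_true, A2, A3)   # noiseless local observed tensor

Pi1 = khatri_rao(A3, A2)                            # Khatri-Rao product of feature factors
O1 = unfold(O_k, 0)                                 # local patient-mode matricization

# Least-squares solution of A1 @ Pi1.T = O1, computed without an explicit inverse
A1_hat = np.linalg.solve(Pi1.T @ Pi1, (O1 @ Pi1).T).T
```

Only local quantities (`O_k` and the hospital's own copy of the feature factors) enter this update, which is why the patient mode needs no communication.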

4.3.2 Feature modes

Hospitals update the local factor matrices using the global factor matrix, and the server in turn builds the global factor matrix by aggregating the intermediate local factor matrices from the hospitals.

Update the local factor matrices

The local matricized residual tensor on feature modes is

where , n ≥ 2. In contrast to the patient mode, vertical concatenation of the local matricized residual tensors forms the global matricized residual tensor A(n)Π(n)T − O(n). The first term in Ψ becomes

| (8) |

with . The closed form solution for is

| (9) |

This closed-form solution updates the local factor matrices using both the local observed tensor O(n)k and the global factor matrix A(n). That is, each hospital uses both its own patient data and the common phenotypes from the others to update its local phenotypes. Hospitals then send their local information (the local factor matrices and Lagrangian multipliers; step 3 in Fig. 1) to the server for the subsequent update of the global factor matrix.

Update the global factor matrix

Server updates the global factor matrix based on the local information. The objective function is

which also uses the pairwise constraint. Through the third term, A(n) is updated to be similar to the local factor matrices. That is, the global phenotypes are made similar to all hospitals' phenotypes. Taking the derivative of this function with respect to A(n) and setting it to zero, we derive the following closed-form solution:

| (10) |

Now, the server sends the global information A(n) to the hospitals for the next iteration. The server updates B(n) by

| (11) |

Algorithm 1. Trip

| Line | Step |
| --- | --- |
| 1 | Input: 𝒪, λ, ω, μ |
| 2 | Initialize the factor matrices and the Lagrangian multipliers (including Y(n)). |
| 3 | repeat |
| 4 | // Update patient mode n = 1 |
| 5 | Hospitals set the local patient-mode factor matrices (Eq. 7). |
| 6 | for n = 2, …, N do // Update feature modes |
| 7 | Hospitals set and send the local feature-mode factor matrices (Eq. 9). |
| 8 | Server sets and sends A(n) (Eq. 10). |
| 9 | Server sets B(n) and Y(n) (Eqs. 11, 12). |
| 10 | Hospitals set and send the local Lagrangian multipliers (Eq. 13). |
| 11 | end for |
| 12 | until converged |

Update Lagrangian multipliers

Finally, the server updates the Lagrangian multipliers as

| (12) |

Hospitals also update their local Lagrangian multipliers as

| (13) |

to adjust the gap between the local and global factor matrices. The entire procedure for updating the tensors is summarized in Algorithm 1.

4.4 Convergence proof

We prove that our federated tensor factorization (5) converges. Due to limited space, the detailed proofs of inequalities (17) and (22) can be found in our technical report [17] or in [2]. For each n = 2, ···, N, let us denote

| (14) |

for vectorized local factor matrices, global factor matrix, Lagrangian multipliers, and residual at iteration t, respectively. Then ℒ is rewritten as

| (15) |

where and . Let (x*, z*, y*) be a saddle point, and define

| (16) |

Vt decreases in each iteration (proof in [17]) because

| (17) |

Adding the inequality (17) through t = 0 to ∞ gives

| (18) |

which implies rt → 0 and zt → zt+1 as t → ∞.

Now, we define pt = f(xt) + g(zt) and show pt converges. Because (x*, z*, y*) is a saddle point,

| (19) |

That is, using x* = z* at the saddle point,

| (20) |

which implies that upper bound of p* − pt+1 is

| (21) |

Lower bound of p* − pt+1 (proof in [17]) is

| (22) |

The upper and lower bounds go to zero because rt → 0 and zt → zt+1 as t → ∞, i.e., limt→∞pt = p*. Thus, the objective function Ψ of our federated optimization converges.

4.5 Privacy analysis

In our Honest-but-Curious adversary scenario, the privacy of patient data is preserved because patient-level data are not disclosed to either the server or the other hospitals. The server and hospitals cannot access unintended fine-grained local information, and the local data are accessible only to the corresponding hospital. The server also cannot indirectly learn patient data from the local factor matrices. After receiving the local feature-mode factor matrices, the server might attempt reverse engineering through Eq. (9). However, the server cannot evaluate the terms of Eq. (9) that involve the local patient-mode factor matrix, because that matrix is never shared. Even if the patient-mode factor matrix were leaked, reverse engineering still could not restore the patient-level data. After all known values in Eq. (9) are removed, the unknown matricized observed tensor O(n)k (the patient data) appears in an equation of the form (unknown O(n)k) × (known matrix) = (known matrix). The size of the unknown O(n)k is In × (I1k ··· In−1In+1 ··· IN), and the sizes of the two known matrices are (I1k ··· In−1In+1 ··· IN) × r and In × r, respectively. Element-wise computation therefore yields only In · r equations for the unknown I1 ··· IN values. The server cannot recover the unknown values because the number of equations, In · r, is smaller than the number of unknowns (r is always selected such that In · r ≪ I1 ··· IN).

Hospitals also cannot learn other hospitals' data from the global factor matrix. Even if hospital k′ somehow knew all the information in Eq. (10) for the global factor matrix, it could not restore the other hospitals' local factor matrices. And even if hospital k′ could access another hospital's local factor matrix, that matrix would still carry insufficient information to recover the data, as shown above for the server.
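The counting argument can be made tangible with the dimensions reported later for the 7-day-window MIMIC-III tensor. The sketch below is illustrative only: it uses the full tensor size rather than a single hospital's share, takes the medication mode as mode n, and uses the rank from the experiments.

```python
# Illustrative dimensions taken from the 7-day-window MIMIC-III tensor (Section 5.1)
I1, I2, I3 = 38_035, 3_229, 304   # patients, medications, lab results
r = 10                            # rank used in the experiments

def equations_vs_unknowns(dims, n, rank):
    """Count the I_n * r equations available to an attacker versus the
    product of all mode sizes, i.e., the number of unknown tensor entries."""
    unknowns = 1
    for size in dims:
        unknowns *= size
    equations = dims[n] * rank
    return equations, unknowns

equations, unknowns = equations_vs_unknowns((I1, I2, I3), n=1, rank=r)
ratio = unknowns / equations      # unknowns per available equation
```

With these sizes there are more than a million unknown entries per equation, so the linear system is heavily underdetermined.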

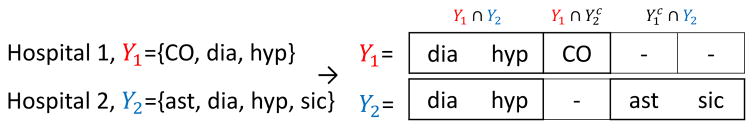

4.6 Secure alignment of feature modes

In Section 4.2, we assumed that hospitals have the same set of elements for each feature mode, but in practice hospitals may have different elements. For example, Hospital 1 and Hospital 2 have diagnosis sets Y1 and Y2 (Fig. 3), but the same index in Y1 and Y2 may refer to different elements. In this case, before the local tensors are concatenated, each index of the feature modes must refer to the same element across hospitals. Thus, we introduce a secure alignment method for feature modes by which hospitals obtain an integrated and sorted view of the elements of the feature modes without revealing the elements they hold. With this secure alignment, each hospital learns only the positions of its own elements without knowing other hospitals' elements; for instance, Y1 and Y2 are aligned so that the same index in the two sets refers to the same element (Fig. 3). For each feature mode, hospital k assigns integer values to the set of elements Yk (e.g., ICD-9 codes). Hospitals use polynomial properties of set intersection [18]:

Figure 3. Example of secure alignment on feature mode.

Lemma 4.1

A polynomial function of y that represents the set of elements at hospital k is fk(y) = ∏ (y − yik), where the product runs over all yik ∈ Yk. A value yik is an element of Yk (yik ∈ Yk) if and only if fk(yik) = 0.

Lemma 4.2

A polynomial function that represents the intersection of Yk and Yk′ (Yk ∩ Yk′) is fk * r + fk′ * s, where r and s are polynomial functions with gcd(r, s) = 1. Given fk * r + fk′ * s, one cannot learn individual elements of Yk and Yk′ other than the elements in Yk ∩ Yk′.

Hospitals express Yk as a polynomial function (in short, a polynomial) fk by Lemma 4.1. To prevent factorization of the polynomial, hospital k multiplies fk by a term r = (y − α), where α is a random prime number chosen so that, with overwhelming probability, it does not represent any element of Yk. For simplicity, fk * r is denoted as fk. Because computing the polynomials with large |Yk| can cause computational overhead, hospitals compute the polynomials modulo a random prime number P (P > yk) instead of the polynomials themselves (the modulus operation is denoted as %), i.e., hospitals compute fk % P using the equivalence properties of the modulus operation and use it instead of fk.

The server then receives fk%P from the hospitals. To find the pairwise intersection between hospitals k and k′, the server computes the pairwise sum of polynomials [fk%P + fk′%P] %P = [fk + fk′] %P, which is the polynomial for the intersection between hospitals k and k′. The server repeats this procedure for every pair of k and k′ (k′ ≠ k) and sends the K − 1 polynomials to each hospital. Hospital k then checks whether its element yk ∈ Yk is in the pairwise intersection of hospital k and another hospital k′, that is, if [fk(yk) + fk′(yk)]%P = 0, then yk ∈ Yk ∩ Yk′ by Lemma 4.2.
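The membership test can be illustrated with plain modular arithmetic. The sketch below is a simplification and not the secure protocol itself: it evaluates both polynomials in one process instead of exchanging coefficients through the server, and the element codes, the blinding roots `alpha1` and `alpha2`, and the modulus `P` are hypothetical values.

```python
P = 2_147_483_647  # the prime 2^31 - 1, larger than every encoded element

def poly_eval(elements, y, blind_root):
    """Evaluate f(y) = (y - blind_root) * prod(y - e for e in elements) modulo P."""
    value = (y - blind_root) % P
    for e in elements:
        value = value * (y - e) % P
    return value

Y1 = {250, 401, 428, 585}   # hypothetical integer-coded diagnoses at hospital 1
Y2 = {401, 585, 714}        # hypothetical integer-coded diagnoses at hospital 2
alpha1, alpha2 = 1_000_003, 1_000_033   # blinding roots, assumed to encode no element

def in_pairwise_intersection(y):
    """Check [f1(y) + f2(y)] % P == 0, the test hospital 1 applies to its own elements."""
    return (poly_eval(Y1, y, alpha1) + poly_eval(Y2, y, alpha2)) % P == 0

shared = sorted(y for y in Y1 if in_pairwise_intersection(y))
print(shared)  # prints [401, 585]
```

For an element of Y1, the first polynomial evaluates to zero, so the sum vanishes modulo the prime P exactly when the second polynomial also has that element as a root.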

By combining all the pairwise intersections with the other K − 1 hospitals, hospital k checks whether the element yk is in the intersection of all K hospitals. For example, combining the pairwise results f1(y1) + f2(y1) = 0 (i.e., y1 ∈ Y1 ∩ Y2) and f1(y1) + f3(y1) ≠ 0 (i.e., y1 ∉ Y1 ∩ Y3) shows that y1 belongs to Y1 and Y2 but not to Y3. After obtaining the 2^(K−1) intersections that involve Yk, hospital k sends the sizes of these 2^(K−1) intersections to the server. The server collects the intersection sizes from all K hospitals and obtains the sizes of all 2^K − 1 combinations of intersections (each Yk is either in or out, which gives 2^K cases, minus the one case in which an element belongs to no hospital). Finally, hospitals receive the sizes of the 2^K − 1 intersections and align their elements according to the size information. All hospitals use the same order of these intersections, such as Y1 ∩ Y2 (Fig. 3), and the elements within each intersection are sorted. Thus, all hospitals have the same size and order of elements for every feature mode.

5 EXPERIMENTS

We evaluate Trip by measuring computational performance (accuracy and time) and deriving meaningful phenotypes. We compare Trip with two baselines:

Central model: The ordinary tensor factorization method for phenotyping. Disregarding the privacy problems of data sharing, this model runs on a central server where all the patient data are combined [28].

Local model: We devise an intuitive local model in which hospitals run the central model on their own data and send the final factor matrices of the feature modes to the server. The server averages the factor matrices and sends the averaged factor matrices back to the hospitals, without the iterative updating that Trip performs. Because a given column of the factor matrices can represent different phenotypes at different hospitals, the server sorts the columns of each hospital's factor matrix before averaging so that all hospitals have the same phenotype in each column. For each feature mode n, the server first chooses a pivot hospital kp and computes the cosine similarity between every pair of the rp-th and r-th columns from the factor matrices of hospital kp and every other hospital k (∀k ≠ kp, ∀r ≠ rp). The server then finds the most similar combination of rp and r over all pairs. Finding the best combination that matches multiple items (columns) to multiple items can be solved in polynomial time by the Hungarian method [20]. Finally, the server reorders the columns of each hospital's factor matrix according to this matching so that corresponding columns of the pivot hospital and the other hospitals refer to the same phenotype.
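The column-matching step of the local model can be sketched as follows. To keep the example dependency-free, it replaces the Hungarian method with a brute-force search over permutations, which is only feasible for small ranks; SciPy's `scipy.optimize.linear_sum_assignment` solves the same assignment problem in polynomial time. The function names and toy matrices are hypothetical.

```python
import numpy as np
from itertools import permutations

def cosine_similarity_matrix(A, B):
    """Entry (rp, r) is the cosine similarity of column rp of A and column r of B."""
    A_n = A / np.linalg.norm(A, axis=0, keepdims=True)
    B_n = B / np.linalg.norm(B, axis=0, keepdims=True)
    return A_n.T @ B_n

def best_column_order(A_pivot, A_other):
    """Brute-force assignment (stand-in for the Hungarian method): find the
    permutation p maximizing the total similarity of pivot column i and column p[i]."""
    S = cosine_similarity_matrix(A_pivot, A_other)
    R = S.shape[0]
    return max(permutations(range(R)), key=lambda p: sum(S[i, p[i]] for i in range(R)))

rng = np.random.default_rng(5)
A_pivot = rng.random((8, 4))                       # pivot hospital's factor matrix
shuffle = [2, 0, 3, 1]                             # hidden column order at another hospital
A_other = A_pivot[:, shuffle] + 0.01 * rng.random((8, 4))

order = best_column_order(A_pivot, A_other)
A_other_aligned = A_other[:, list(order)]          # columns now match the pivot's phenotypes
A_averaged = (A_pivot + A_other_aligned) / 2       # the local model's averaging step
```

After alignment, averaging combines like with like; averaging the unaligned matrices would mix unrelated phenotypes column by column.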

5.1 Accuracy and Time

We use MIMIC-III, a large publicly available dataset containing de-identified health-related data associated with over forty thousand patients who stayed in critical care units of the Beth Israel Deaconess Medical Center between 2001 and 2012 [26]. MIMIC-III includes information such as demographics, laboratory test results, procedures, medications, caregiver notes, imaging reports, and mortality. We construct a 3-order tensor with patients, laboratory test results, and medications. Each tensor value is the number of co-occurrences of an abnormal lab result and a medication for the same patient within a specific time window. We generate four datasets by setting the time window to 3 hours, 6 hours, 1 day, or 7 days, which yields around 15 million (M), 25M, 40M, and 50M nonzero values, respectively. The size of the 7-day-window tensor (MIMIC-III 50M) is 38,035 patients by 3,229 medications by 304 lab results. Because duplicated co-occurrences can be counted with a large time window, we set the maximum count to three, which is the median of the 1-day-window tensor (MIMIC-III 40M). Counts larger than three are truncated to three.
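The tensor construction described above amounts to counting index triples and capping the counts. The sketch below illustrates this on a handful of hypothetical (patient, medication, lab result) co-occurrence events; extracting such events from MIMIC-III within a time window is not shown.

```python
import numpy as np

# Hypothetical (patient, medication, lab result) co-occurrence events in one time window
events = [
    (0, 1, 0), (0, 1, 0), (0, 1, 0), (0, 1, 0), (0, 1, 0),   # five repeats of one triple
    (1, 0, 2), (1, 0, 2),
    (2, 2, 1),
]

counts = np.zeros((3, 3, 3), dtype=np.int64)
# np.add.at accumulates correctly when the same index triple appears several times
np.add.at(counts, tuple(np.array(events).T), 1)

MAX_COUNT = 3                          # counts larger than three are truncated to three
tensor = np.minimum(counts, MAX_COUNT)
nonzeros = int(np.count_nonzero(tensor))
```

A dense array is used only for readability; at the scale reported here (tens of millions of nonzero values), a sparse coordinate representation would be needed.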

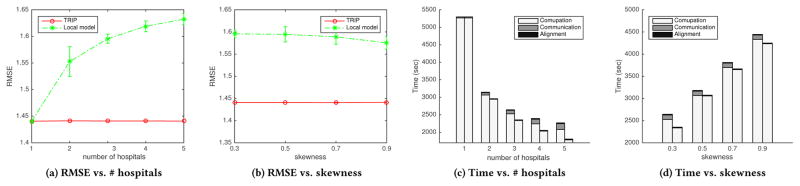

We evaluate the accuracy and time of Trip against the two baseline models by varying the number of nonzero values, the number of hospitals, and the skewness (for unevenly distributed patients). We measure accuracy using the root mean square error (RMSE) between the factorized tensor and the observed tensor. We measure elapsed time as the sum of the time for computation, communication, and alignment. Because Trip and the local model distribute the computation on local tensors to hospitals, we take the computation time on the local tensors to be the largest computation time of any single hospital. The communication time is measured as the total number of bytes communicated between the server and hospitals divided by a data transfer rate of 15 MB/sec. The communication time for the central model is the time for transferring the local patient data to the server. We repeat each evaluation ten times and average the results. We run each model until it converges or reaches the maximum of 100 iterations. The rank is set to ten. λ is set to 10^−2 after trying 10^−3, 10^−2, 10^−1, 1, and 10.
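The two measurements can be written down in a few lines. This is a minimal sketch under stated assumptions: the RMSE is taken over all tensor entries, and 1 MB is taken to be 10^6 bytes; neither detail is specified in the text, and the function names are hypothetical.

```python
import numpy as np

def rmse(factorized, observed):
    """Root mean square error over all tensor entries (assumed averaging scheme)."""
    return float(np.sqrt(np.mean((factorized - observed) ** 2)))

def communication_seconds(total_bytes, rate_mb_per_sec=15.0):
    """Transfer time at a fixed rate, assuming 1 MB = 10^6 bytes."""
    return total_bytes / (rate_mb_per_sec * 1_000_000)

def total_seconds(local_compute_times, comm_bytes, alignment_seconds):
    """Total time: slowest hospital's computation plus communication plus alignment."""
    return max(local_compute_times) + communication_seconds(comm_bytes) + alignment_seconds

example_rmse = rmse(np.zeros((2, 2, 2)), 2.0 * np.ones((2, 2, 2)))
example_total = total_seconds([100.0, 120.0, 90.0], 150_000_000, 5.0)
```

Taking the maximum over hospitals reflects that local computations run in parallel, so the slowest hospital determines the computation time.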

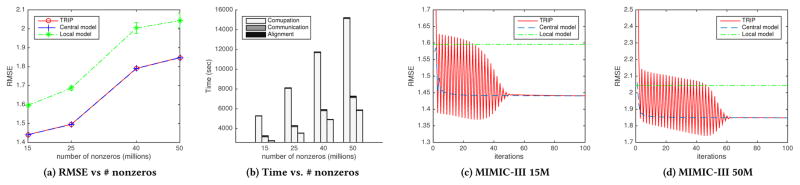

5.1.1 Number of nonzeros

We use the four MIMIC-III datasets that have 15M, 25M, 40M, and 50M nonzero values. We assume that the MIMIC-III datasets are distributed over three hospitals, with patients randomly assigned in equal numbers. Trip achieves an RMSE as low as that of the central model on all four datasets (Fig. 4a). For MIMIC-III 15M, the RMSE of the central model converges to 1.4404. Similarly, the RMSE of Trip stabilizes at around 50 iterations and converges to 1.4409 (Fig. 4c). The RMSE values of both the central model and Trip are substantially smaller than that of the local model (1.5957). The MIMIC-III 50M dataset shows similar convergence: the RMSE of Trip stabilizes at around 60 iterations and converges to 1.8482, which is close to the RMSE of the central model, 1.8479 (Fig. 4d). MIMIC-III 25M shows an RMSE of 1.4955 for Trip, 1.4947 for the central model, and 1.6867 for the local model. MIMIC-III 40M shows an RMSE of 1.7913 for Trip, 1.7903 for the central model, and 2.0037 for the local model. Convergence results on MIMIC-III 25M and 40M can be found in our technical report [17].

Figure 4. RMSE and total time over the number of nonzeros (Fig. 4a, 4b). The first, second, and third stacked bars in Fig. 4b refer to central model, Trip, and local model, respectively. RMSE of Trip, central model, and local model over iteration (Fig. 4c, 4d).

In addition, the total elapsed time for Trip (and the local model) is much shorter than that of the central model on all datasets, down to about half (Fig. 4b). Trip reduces computation time by distributing the updating procedures to decentralized hospitals; consequently, Trip reduces the total elapsed time despite the added communication and alignment time. For the datasets of 15M, 25M, 40M, and 50M, the computation time of Trip is 3,152, 4,183, 5,796, and 7,125 seconds, and the computation time of the central model is 5,266, 8,068, 11,661, and 15,105 seconds; computation accounts for the majority of the total time. The communication time of Trip is around 100.7 seconds in all cases, and the communication time of the central model is 30.1, 53.9, 85.0, and 114.4 seconds. Note that with the largest dataset (MIMIC-III 50M), Trip saves not only computation time but also communication time. The alignment time for Trip is 22.6, 26.1, 29.3, and 59.0 seconds, which is negligible compared to the computation time. Based on these observations, Trip closely matches the central model with no accuracy cost for preserving privacy, and does so in less time owing to distributed computation.

5.1.2 Number of hospitals

Using the MIMIC-III 15M dataset, we partition the patients evenly into one to five hospitals. The RMSE with one hospital refers to the RMSE of the central model. We observed that the RMSE of Trip is stable as the number of hospitals increases and is similar to the RMSE of the central model (1.4404), whereas the RMSE of the local model increases with large variance (Fig. 5a). That is, compared to the local model, in which the local factorized tensors diverge from one another, Trip is robust to finely split data. This means that phenotypes from Trip are accurate and unbiased even when the patient data are split into many small portions.

Figure 5.

RMSE over the number of hospitals (Fig. 5a) and skewness (Fig. 5b). Total time over the number of hospitals (Fig. 5c) and skewness (Fig. 5d). The former and latter stacked bars in Fig. 5c, 5d refer to Trip and local model, respectively.

The total time of Trip and of the local model is substantially shorter than that of the central model. As the number of hospitals increases and the patient data are spread more thinly, the total time of Trip and of the local model decreases (Fig. 5c). Specifically, the computation time of Trip and of the local model decreases because the computation is distributed over more hospitals; the communication time of Trip increases slightly, whereas the communication time of the local model is negligible. Alignment time is negligible for both Trip and the local model.

5.1.3 Skewness

We partition the patients in MIMIC-III 15M unevenly across three hospitals. One hospital takes 1/3 (the even split), 0.5, 0.7, or 0.9 of the patients, and the other two hospitals split the remaining patients evenly. Note that the elements in the feature mode still overlap sufficiently among hospitals. We observed that the RMSE of Trip remains stable even when patients are distributed unevenly, whereas the RMSE of the local model is higher than that of Trip and has large variance (Fig. 5b). The factorized tensor of the local model can be inaccurate because the local factorized tensor from a small hospital can be biased and differ greatly from the other hospitals' results. With Trip, however, such a hospital can overcome this bias and obtain more generalizable results.
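The skewed setting can be simulated in the same spirit. In this sketch, one hospital receives a given fraction of the shuffled patients and the remaining hospitals share the rest evenly. The function name `partition_skewed`, the rounding rule, and the seed are assumptions made for illustration only.

```python
import random


def partition_skewed(patient_ids, big_fraction, n_hospitals=3, seed=0):
    """Give one hospital `big_fraction` of the patients; split the rest evenly."""
    if not 0 < big_fraction < 1:
        raise ValueError("big_fraction must be strictly between 0 and 1")
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)
    n_big = round(big_fraction * len(ids))
    big, rest = ids[:n_big], ids[n_big:]
    n_others = n_hospitals - 1
    # Round-robin over the remainder so the other hospitals differ by at most one patient.
    others = [rest[h::n_others] for h in range(n_others)]
    return [big] + others


# Example with 1,000 hypothetical patients and the fractions used in the text.
for frac in (1 / 3, 0.5, 0.7, 0.9):
    parts = partition_skewed(range(1000), frac)
    print(f"{frac:.2f}", [len(p) for p in parts])
```

At a fraction of 0.9, each of the two smaller hospitals holds only five percent of the patients, which is the regime in which a purely local factorization is most exposed to bias.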

The total time of Trip and of the local model increases as the skewness increases (Fig. 5d). Computation time increases because the hospital with the most data becomes a computational bottleneck, whereas communication and alignment time do not increase significantly.

5.2 Phenotype discovery

We use a de-identified EHR dataset from the University of California, San Diego (UCSD) Medical Center with 8,022 patients, 748 medications, and 299 diagnoses. Specifically, the data come from two hospitals that have 4,703 patients (UCSD1) and 3,319 patients (UCSD2). We construct a third-order tensor with patient, medication, and diagnosis modes and around 1.6 million nonzero elements. Each tensor value is the number of co-occurrences of a medication event and a diagnosis event for the same patient at the same visit.
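The tensor construction described above can be sketched as a sparse count over (patient, medication, diagnosis) triples. The input layout (one tuple per visit), the function name `build_count_tensor`, and the example records are hypothetical; the sketch also assumes that every medication event in a visit is paired with every diagnosis event in that visit, which is one reading of "co-occurrence".

```python
from collections import Counter
from itertools import product


def build_count_tensor(visits):
    """Build a sparse third-order count tensor from per-visit records.

    `visits` is an iterable of (patient, medications, diagnoses) tuples,
    one tuple per visit. The result maps (patient, medication, diagnosis)
    to the number of visits-level co-occurrences of that pair.
    """
    tensor = Counter()
    for patient, medications, diagnoses in visits:
        for med, diag in product(medications, diagnoses):
            tensor[(patient, med, diag)] += 1
    return tensor


# Hypothetical toy records, not taken from the UCSD data.
visits = [
    ("p1", ["metformin", "lisinopril"], ["diabetes"]),
    ("p1", ["metformin"], ["diabetes", "hypertension"]),
    ("p2", ["albuterol"], ["COPD"]),
]
tensor = build_count_tensor(visits)
for key, count in sorted(tensor.items()):
    print(key, count)
```

Only nonzero entries are stored, which matches the way the dataset size is reported in terms of nonzero elements.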

We discover phenotypes with Trip and compare them with phenotypes from the central model on the combined data and from central models run independently at each of the two UCSD hospitals (UCSD1 and UCSD2). We set λ to 1 to derive more distinct phenotypes than those from MIMIC-III. A domain expert summarizes the factorized tensor into clinically meaningful phenotypes. Each phenotype consists of a set of diagnoses and its corresponding medications. Due to limited space, the medication factors of the phenotypes are omitted here and can be found on our project website [17].

Trip discovers unbiased and hidden phenotypes compared to the phenotypes from the two individual central models (UCSD1, UCSD2). The phenotypes from Trip contain top-ranked phenotypes from UCSD1 and UCSD2, and are similar to the phenotypes from the combined central model, UCSD1+UCSD2 (Table 2). The phenotypes from Trip include five top-ranked phenotypes from UCSD1 and four top-ranked phenotypes from UCSD2. The phenotypes from Trip are also the same as those from the central model except for gastrointestinal complaints and neurogenic bladder. Without our federated model, the two individual hospitals could derive biased phenotypes that fit only their local data. This means that Trip can effectively match the central model at no cost for preserving privacy.

Table 2.

Phenotypes from Trip and from the central model on UCSD1+UCSD2, UCSD1, and UCSD2.

| Rank | Trip | UCSD1+UCSD2 | UCSD1 | UCSD2 |
|---|---|---|---|---|
| 1 | Coronary artery disease with diabetes & hypertension | Diabetic with hypertension | Diabetic with hypertension | Cystic fibrosis with pancreatic involvement |
| 2 | Diabetic with hypertension | Cystic fibrosis with pancreatic involvement | Coronary artery disease with diabetes & hypertension | Cystic fibrosis with pulmonary exacerbation |
| 3 | Chronic obstructive pulmonary disease (COPD) exacerbation | Coronary artery disease with diabetes & hypertension | COPD exacerbation | Neurogenic bladder with abdominal pain |
| 4 | Constipation | Hypertension | Decompensated cirrhosis | Non-specific gastrointestinal complaints |
| 5 | Cystic fibrosis with pancreatic involvement | COPD exacerbation | Non-specific gastrointestinal complaints | Diabetes |
| 6 | Decompensated cirrhosis | Constipation | Non-specific complaints | Constipation |
| 7 | Non-specific gastrointestinal complaints | Decompensated cirrhosis | COPD w/o exacerbation | Anxiety with gastrointestinal complaints |
| 8 | Cystic fibrosis with pulmonary exacerbation | Non-specific complaints | Acute on chronic pain | Cystic fibrosis with pneumonia |
| 9 | Sickle cell/chronic pain crisis | Sickle cell/chronic pain crisis | COPD with Pneumonia | Non-specific complaints |
| 10 | Non-specific complaints | Neurogenic bladder with abdominal pain | Anxiety with hypertension | Lymphoma |

In addition, Trip discovers a new phenotype, sickle cell/chronic pain crisis, that appears in neither UCSD1 nor UCSD2. This phenotype consists of diagnoses related to sickle cell disease or chronic pain crisis and the corresponding medications (Table 3). In the physician's judgement, this phenotype is clinically meaningful in that sickle cell disease is usually accompanied by chronic pain and symptoms such as constipation, back/neck pain, headache, pruritic disorder, insomnia, and wheezing. Sickle cell/chronic pain crisis is not dominant in either individual hospital but is dominant overall. Note that the RMSE of Trip is as low as that of the central model while the total time is reduced (Table 4), and that the RMSEs on the two individual UCSD datasets are lower than the others because those two models are fit to separate, smaller local datasets. Also note that the communication time of the central model is due to transferring the data.

Table 3.

Detailed phenotype of sickle cell/chronic pain crisis

| Category | Items |
|---|---|
| Diagnosis | Sickle cell disease NOS, Hb-SS disease with crisis, Constipation NOS, Pruritic disorder NOS, Generalized pain, Headache, Insomnia, Chronic pain syndrome, Wheezing |
| Medication | Hydroxyurea, Deferasirox, Docusate, Diphenhydramine, Hydromorphone, Acetaminophen, Zolpidem, Folic Acid, Baclofen |

Table 4.

RMSE and time elapsed [sec]. The central model is run on UCSD1,2 (combined), UCSD1, and UCSD2.

| | Trip | UCSD1,2 | UCSD1 | UCSD2 |
|---|---|---|---|---|
| RMSE | 1.2304 | 1.2327 | 1.2267 | 1.1778 |
| Computation | 446.5 | 656.9 | 432.8 | 314.6 |
| Communication | 15.1196 | 3.4533 | 0 | 0 |
| Alignment | 1.2052 | 0 | 0 | 0 |

6 CONCLUSIONS

This paper presents Trip, a federated tensor factorization method for computational phenotyping without sharing patient-level data. We developed secure data harmonization and privacy-preserving computation procedures based on ADMM, and showed by analysis that Trip ensures the confidentiality of patient-level data. Experimental results on data from MIMIC-III and the UCSD Medical Center demonstrated that our framework closely matches the central model. Trip is also accurate even with small or skewed patient distributions, and fast compared to the central model. We also showed that Trip discovers the phenotypes that the central model discovers from the combined patient data, including phenotypes that are unbiased or that remain hidden within each individual hospital. As a result, Trip can help derive useful phenotypes from EHR data while overcoming policy barriers that arise from privacy concerns. We plan to apply it to much larger datasets in the future and to facilitate the discovery of novel and important phenotypes to support clinical research and precision medicine.

Acknowledgments

We thank Dr. Robert El-Kareh, MD, from the University of California San Diego for annotating the computed phenotypes. This research was supported by NIH (R01GM118609, R21LM012060), MSIP (No.2014-0-00147), and NRF (NRF-2016R1E1A1A01942642).

Footnotes

CCS CONCEPTS

•Applied computing→Health informatics; •Computing methodologies→Factorization methods; Machine learning algorithms;

References

- 1. Bennett C, Doub T, Selove R. EHRs connect research and practice: Where predictive modeling, artificial intelligence, and clinical decision support intersect. Health Policy and Technology. 2012 Jun;1(2):105–114.

- 2. Boyd S, Parikh N, Chu E, Peleato B, Eckstein J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundations and Trends® in Machine Learning. 2011;3(1):1–122.

- 3. Carroll JD, Chang J-J. Analysis of individual differences in multidimensional scaling via an n-way generalization of "Eckart-Young" decomposition. Psychometrika. 1970;35(3):283–319.

- 4. Carroll RJ, Eyler AE, Denny JC. Naïve electronic health record phenotype identification for rheumatoid arthritis. Proceedings of the American Medical Informatics Association Annual Symposium; Jan. 2011; pp. 189–196.

- 5. Carroll RJ, Thompson WK, Eyler AE, Mandelin AM, Cai T, Zink RM, Pacheco JA, Boomershine CS, Lasko TA, Xu H, Karlson EW, Perez RG, Gainer VS, Murphy SN, Ruderman EM, Pope RM, Plenge RM, Kho AN, Liao KP, Denny JC. Portability of an algorithm to identify rheumatoid arthritis in electronic health records. Journal of the American Medical Informatics Association. 2012 Jun;19(1e):e162–e169. doi: 10.1136/amiajnl-2011-000583.

- 6. Chaudhuri K, Monteleoni C, Sarwate A. Differentially Private Empirical Risk Minimization. Journal of Machine Learning Research (JMLR). 2011 Jul;12:1069–1109.

- 7. Choi JH, Vishwanathan S. Dfacto: Distributed factorization of tensors. Advances in Neural Information Processing Systems. 2014:1296–1304.

- 8. Cimino JJ. From data to knowledge through concept-oriented terminologies: Experience with the Medical Entities Dictionary. Journal of the American Medical Informatics Association. 2000 May;7(3):288–297. doi: 10.1136/jamia.2000.0070288.

- 9. De Almeida AL, Kibangou AY. Distributed large-scale tensor decomposition. Acoustics, Speech and Signal Processing (ICASSP), 2014 IEEE International Conference on; IEEE; 2014. pp. 26–30.

- 10. Dwork C. Differential privacy. International Colloquium on Automata, Languages and Programming. 2006;4052(d):1–12.

- 11. Ebadollahi S, Sun J, Gotz D, Hu J, Sow D, Neti C. Predicting patient’s trajectory of physiological data using temporal trends in similar patients: A system for near-term prognostics. AMIA Annual Symposium Proceedings. 2010;2010:192–196.

- 12. Greengard S. A new model for healthcare. Communications of the ACM. 2013;56(2):17–19.

- 13. Ho JC, Ghosh J, Steinhubl SR, Stewart WF, Denny JC, Malin BA, Sun J. Limestone: High-throughput candidate phenotype generation via tensor factorization. Journal of Biomedical Informatics. 2014;52:199–211. doi: 10.1016/j.jbi.2014.07.001.

- 14. Ho JC, Ghosh J, Sun J. Marble: High-throughput Phenotyping from Electronic Health Records via Sparse Nonnegative Tensor Factorization. Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’14; New York, NY, USA. ACM; 2014. pp. 115–124.

- 15. Hong M, Luo Z-Q, Razaviyayn M. Convergence analysis of alternating direction method of multipliers for a family of nonconvex problems. SIAM Journal on Optimization. 2016;26(1):337–364.

- 16. Kang U, Papalexakis E, Harpale A, Faloutsos C. Gigatensor: scaling tensor analysis up by 100 times-algorithms and discoveries. Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; ACM; 2012. pp. 316–324.

- 17. Kim Y, Sun J, Yu H, Jiang X. Federated Tensor Factorization for Computational Phenotyping. 2016. doi: 10.1145/3097983.3098118. http://dm.postech.ac.kr/trip.

- 18. Kissner L, Song D. Privacy-preserving set operations. Advances in Cryptology–CRYPTO 2005. Springer; 2005. pp. 241–257.

- 19. Kononenko I. Machine learning for medical diagnosis: history, state of the art and perspective. Artificial Intelligence in Medicine. 2001 Aug;23(1):89–109. doi: 10.1016/s0933-3657(01)00077-x.

- 20. Kuhn HW. The Hungarian method for the assignment problem. Naval Research Logistics Quarterly. 1955;2(1–2):83–97.

- 21. Ledley RS, Lusted LB. Reasoning foundations of medical diagnosis. M.D. Computing: Computers in Medical Practice. 1991 Sep;8(5):300–315.

- 22. Li Y, Jiang X, Wang S, Xiong H, Ohno-Machado L. Vertical grid logistic regression (vertigo). Journal of the American Medical Informatics Association. 2015:ocv146. doi: 10.1093/jamia/ocv146.

- 23. Lin Z, Liu R, Su Z. Linearized alternating direction method with adaptive penalty for low-rank representation. Advances in Neural Information Processing Systems. 2011:612–620.

- 24. Murphy SN, Weber G, Mendis M, Gainer V, Chueh HC, Churchill S, Kohane I. Serving the enterprise and beyond with informatics for integrating biology and the bedside (i2b2). Journal of the American Medical Informatics Association. 2010;17(2):124–130. doi: 10.1136/jamia.2009.000893.

- 25. PCORnet Data Privacy Task Force. Technical Approaches for Protecting Privacy in the PCORnet Distributed Research. 2015.

- 26. Saeed M, Villarroel M, Reisner AT, Clifford G, Lehman L-W, Moody G, Heldt T, Kyaw TH, Moody B, Mark RG. Multiparameter intelligent monitoring in intensive care II (MIMIC-II): A public-access intensive care unit database. Critical Care Medicine. 2011 May;39:952–960. doi: 10.1097/CCM.0b013e31820a92c6.

- 27. Vaidya J, Yu H, Jiang X. Privacy-preserving SVM classification. Knowledge and Information Systems. 2008;14(2):161–178.

- 28. Wang Y, Chen R, Ghosh J, Denny JC, Kho A, Chen Y, Malin BA, Sun J. Rubik: Knowledge guided tensor factorization and completion for health data analytics. Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; ACM; 2015. pp. 1265–1274.