Abstract

Background

The capacity for health systems to support the translation of research into clinical practice may be limited. The cluster randomised controlled trial (cluster RCT) design is often employed in evaluating the effectiveness of implementation of evidence-based practices. We aimed to systematically review available evidence to identify and evaluate the components in the implementation process at the facility level using cluster RCT designs.

Methods

All cluster RCTs where the healthcare facility was the unit of randomisation, published or written from 1990 to 2014, were assessed. Included studies were analysed for the components of implementation interventions employed in each. Through iterative mapping and analysis, we synthesised a master list of components used and summarised the effects of different combinations of interventions on practices.

Results

Forty-six studies met the inclusion criteria and covered the specialty groups of obstetrics and gynaecology (n=9), paediatrics and neonatology (n=4), intensive care (n=4), internal medicine (n=20), and anaesthetics and surgery (n=3). Six studies included interventions that were delivered across specialties. Nine components of multifaceted implementation interventions were identified: leadership, barrier identification, tailoring to the context, patient involvement, communication, education, supportive supervision, provision of resources, and audit and feedback. The four main components that were most commonly used were education (n=42, 91%), audit and feedback (n=26, 57%), provision of resources (n=23, 50%) and leadership (n=21, 46%).

Conclusions

Future implementation research should focus on better reporting of multifaceted approaches, incorporating sets of components that facilitate the translation of research into practice, and should employ rigorous monitoring and evaluation.

Keywords: Implementation, effective practice, quality of care, cluster randomised trials

Key questions.

What is already known about this topic?

Implementation of evidence-based medicine is a challenge across settings and medical specialties worldwide. Health systems often fail to ensure that this evidence is used in routine clinical practice.

What are the new findings?

Our systematic review of cluster randomised trials in facility settings identified nine components consistently common to the implementation of evidence-based practices across all disciplines: leadership, barrier identification, tailoring to the context, patient involvement, communication, education, supportive supervision, provision of resources, and audit and feedback.

The same set of components was used in studies that showed a positive effect or no effect on the study outcomes.

Recommendations for policy

As clinicians, researchers and implementers, we are beholden to ensure the application of research to practice is a focus of health systems if we would like to optimise care and outcomes for all.

Our study identifies the components of implementation used in cluster randomised studies in a way that is common across disciplines and suggests that the impact of different components is not consistent across studies, underlining the importance of better reporting of these implementation components.

Introduction

Implementation research (‘the scientific study of methods to promote the systematic uptake of proven clinical treatments, practices, organisational, and management interventions into routine practice, and hence to improve health’1) requires the use of an evidence-based practice from a defined research setting and support of its implementation using a range of behavioural and health system interventions2 so that the practice maintains its proven efficacy in this new setting. Effective implementation of evidence-based practices in healthcare settings remains a challenge within medicine and public health with health systems often failing to ensure that this evidence is used in routine clinical practice,3 4 and maternal and newborn health is a particularly stark example of the failure to adequately implement interventions that are known to be effective.

Globally, an estimated 289 000 maternal deaths, 2.6 million stillbirths and 2.8 million newborn deaths occur each year.5–7 These deaths highlight the critical importance of ensuring that high-quality care is available for every woman and newborn throughout pregnancy, childbirth and the postnatal period.8 However, in many settings, effective interventions are still poorly implemented. In light of these challenges, the WHO has proposed a vision for quality of care for pregnant women and newborns in facilities globally.8 As part of the recently launched Quality of Care Network,9 WHO is currently conducting a number of evidence syntheses and primary research activities in order to develop and provide a roadmap for how evidence-based practices in maternal and newborn health can be effectively and sustainably implemented.10

Implementation studies often employ multifaceted or complex interventions aimed at different levels of the health system. While implementation research can employ a wide variety of designs, the cluster randomised trial design is often employed in evaluating the effectiveness of implementation of evidence-based practices at the facility level.11 Thus, in this first analysis, we aimed to systematically review available evidence to identify and evaluate the components that are considered in the implementation of effective practices at the facility level using cluster (parallel or stepped wedge) randomised controlled trial (RCT) designs across all medical specialties.

Methods

Criteria for considering studies for this review

Types of studies

All cluster RCTs using a parallel or stepped wedge design, irrespective of language, published or written from 1990 to 2014 were assessed.

Types of participants

Health facilities with inpatient care or areas within inpatient care facilities as the unit of randomisation with healthcare staff and/or patients as the participants.

Types of interventions

Healthcare practice or set of practices for which there was previous evidence of efficacy implemented using a complex or multifaceted intervention. Evidence of efficacy was as defined by the study authors; that is where the aim of the trial was to assess the implementation of an intervention known to be beneficial, rather than the aim being the primary assessment of a new intervention. The comparison was either existing health practice or another method of implementation. Studies were excluded if they assessed a health practice with no evidence of its proven efficacy, or where the health practice is not targeted at care recipients (eg, an implementation strategy to address a staff administrative practice), or a single effectiveness intervention (eg, randomised to use of a drug or guideline or not, without any other facet to the implementation).

Types of outcome measures

The primary outcomes as defined by the included studies were reported. It should be noted that given the scope of this review, the outcomes were variable across studies and were not used as part of the inclusion and exclusion criteria. Such outcomes would include, but not be limited to, changes in baseline clinical outcomes, measurements of patient satisfaction, changes in mortality or morbidity measures, and changes in compliance with guidelines.

Electronic searches

EMBASE and Medline were searched from January 1990 to December 2014, using a combination of the following key terms: ‘intervention’, ‘health care planning’, ‘implementation’, ‘cluster analysis’, ‘step-wedge’, ‘community-based participatory research/methods’, ‘organisation’ and ‘administration’. Search terms were conducted in English; however, there were no language restrictions placed on the results identified. Given the issues in reporting of cluster trials (see the Discussion section), a broad search of the Cochrane Central Trials Register using the search term cluster random* of the full text was undertaken. The search strategy is included in online supplementary appendix 1.


Searching other resources

In addition, reference lists from assessed articles were hand searched for any potential additional studies. Given the topic, the journal Implementation Science was hand searched from its inception in 2006 until December 2014.

Data extraction and management

Following initial title and abstract review, the full texts of potential studies were screened independently by two reviewers, with data extraction and risk of bias assessment then undertaken (ERA and DNK). If more than one citation was identified for an included study, information from all the citations was used. Data extraction was undertaken with a form designed by two of the review authors (ERA and DNK). The components and process of implementation and the effect on the primary outcomes were the core focus of data extraction. The steps of the implementation process and components of implementation were extracted as described in each individual manuscript.

Assessment of risk of bias in included studies

Risk of bias was determined using The Cochrane Collaboration's tool for assessing risk of bias12 as well as considering additional forms of bias particular to cluster randomised trials. The criteria for assessing bias were the risk of selection bias (random sequence generation and allocation concealment), performance bias, detection bias, attrition bias and reporting bias. The additional risk of bias assessments unique to cluster trials considered were recruitment bias, comparability bias and analysis bias. As allocation concealment is not generally possible in a cluster trial (as all clusters are usually randomised at once), this was only considered to potentially add bias where clusters were allocated in a time-staggered fashion. In accordance with Cochrane guidance, little weight was given to the absence of blinding as this is not practical in the vast majority of cluster randomised trial designs, parallel or stepped wedge.12 The magnitude and direction of bias was considered in assigning overall risk of bias to each included study. Risk of bias was independently assessed by two authors (ERA and DNK), and where there was divergence of assessment, a third reviewer (OT) was consulted and consensus was reached.

Assessment of the quality of implementation reporting

Currently, there is no standard checklist or tool for assessing the quality of reporting on implementation studies, thus the validity of tools to assess the adequacy of reporting across included studies in this review is somewhat unclear.13

We identified a recent publication related to development of reporting standards for implementation research, which described initial consensus on a list of 35 items to be checked when reporting on an implementation study.14 We used four items from the relevant section of this list (describing the methods of the implementation) to assess quality of reporting by the included studies from this list, namely (1) describe the new service (eg, components/content, frequency, duration, intensity, mode of delivery, materials used); (2) describe the professional backgrounds, roles and training requirements of the personnel involved in delivering the intervention; (3) define the core components of the interventions, and the processes for assessing fidelity to this core content, and what, if any, local adaptation was allowed; and (4) describe the intervention received by control/comparator group not simply stating ‘usual care’.

Data analysis

We anticipated that studies would include a wide range of health practices across different medical specialties, focusing on varying populations and outcomes. Therefore, a meta-analysis was deemed to be of little value. To identify whether there are variations in findings based on context, all studies were categorised according to relevant medical specialty. For each study, we report whether there was a statistically significant change in the hypothesised direction in the primary outcomes. We further reviewed all included studies and analysed the components of implementation interventions employed in each based on the descriptions provided. There is a significant body of literature on the frameworks used for implementation research.15 Through iterative mapping and analysis, we synthesised the list of components used in these studies to nine distinct components. In light of our work on the WHO quality of care framework for pregnant women and newborns,8 we then cross-tabulated identified components with the proposed quality of care improvement strategy in order to identify where such components could be applied in future implementation research.

Ethics

Ethics approval was not applicable.

Results

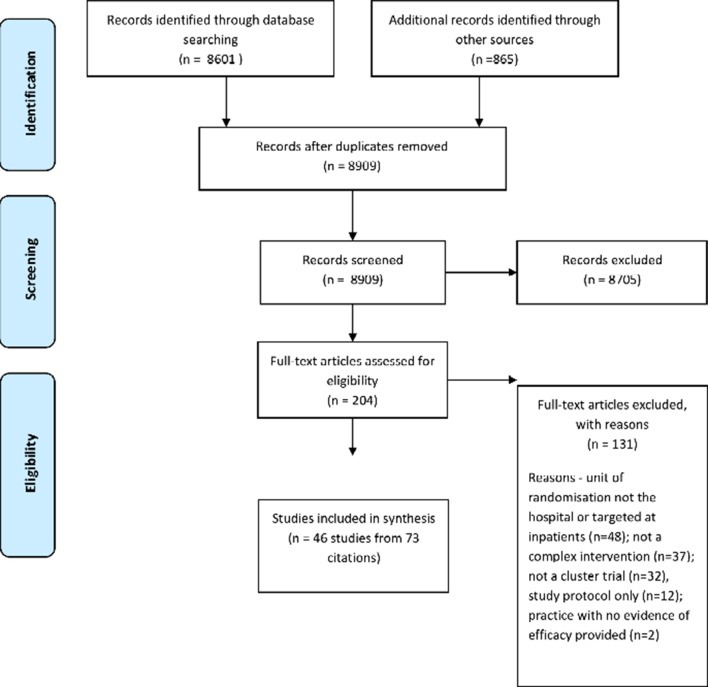

The search strategy identified 8909 citations after 557 duplicate records were removed. Two hundred and four full texts were assessed for eligibility with 46 studies (with a total of 73 citations) meeting inclusion criteria for the review. Figure 1 shows the PRISMA flow diagram.

Figure 1.

PRISMA flowchart.

Characteristics of included studies

The characteristics (location, intervention, component of implementation, primary outcomes and risk of bias) of the included studies are outlined in supplementary table 1. We included 43 parallel and 3 stepped wedge randomised trials. The studies were conducted between 1996 and 2012. Although there were no language restrictions in our search, all included studies were available in English. The study locations were spread across 37 high-income countries, 9 middle-income countries and 3 low-income countries (World Bank data). Three studies included multiple countries: one study was located in Senegal and Mali,16 one was located in Argentina and Uruguay,17 and another one was located in Mexico and Thailand.18 The process of implementation was described in all studies, all in varying degrees of depth. The practices being assessed could be broadly categorised into the specialty groups of obstetrics and gynaecology (n=9), paediatrics and neonatology (n=4), intensive care (n=4), internal medicine specialties (n=20), and anaesthetics and surgery (n=3). Six studies included interventions that went across specialties or the entire facility. The practices were variable, but most often were guideline based or a programme structured around an evidence-based practice(s), as defined by study authors.


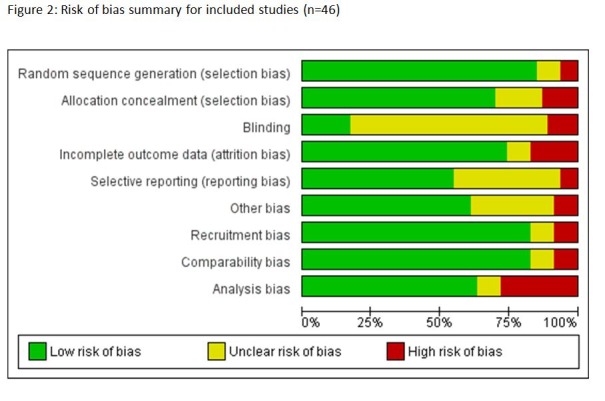

Assessment of risk of bias

A summary of risk of bias is provided in figure 2. Eighteen studies were assessed as having a high risk of bias (39%), 12 as having a low risk of bias (26%) with the remaining 16 (35%) having an unclear risk of bias. The most common sources of high risk of bias were analysis bias (13 studies) and attrition bias (8 studies). Selective reporting was frequently unclear; 18 studies (39%) had no accessible protocol and insufficient evidence to comment on any selective reporting bias. As stated above, blinding was of little consequence as the nature of a cluster RCT renders blinding mostly impractical.

Figure 2.

Risk of bias summary for included studies (n=46).

Assessment of the quality of implementation reporting

Forty trials (87%) described the service; 26 (57%) described the professional backgrounds, role and training requirements of the personnel involved; 28 (61%) defined the core processes and the process for assessing fidelity; and 34 (74%) described the intervention received by the control group. Eleven studies checked all four items (24%), 17 checked three items (37%), 15 checked two items (33%) and 3 studies only checked one of the four items (7%).
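The proportions above follow directly from the reported counts. The short sketch below recomputes the four item-level percentages and checks that the two ways of counting (per item, and per number of items met) agree. The variable and function names are illustrative only and are not part of the review's methods.

```python
# Counts reported in the text for the 46 included trials.
TOTAL_TRIALS = 46

# Number of trials meeting each of the four reporting items.
items_reported = {
    "described the service": 40,
    "described personnel backgrounds, roles and training": 26,
    "defined core components and fidelity assessment": 28,
    "described the control group intervention": 34,
}


def percent(count, total=TOTAL_TRIALS):
    """Return count as a whole-number percentage of total (round half up)."""
    return int(count * 100 / total + 0.5)


item_percentages = {item: percent(n) for item, n in items_reported.items()}

# Number of trials that met four, three, two and one of the items.
items_met_distribution = {4: 11, 3: 17, 2: 15, 1: 3}

# Every trial should appear exactly once in the distribution.
trials_accounted_for = sum(items_met_distribution.values())

# The total number of item checks should be the same whichever way it is counted.
checks_by_item = sum(items_reported.values())
checks_by_distribution = sum(k * n for k, n in items_met_distribution.items())

for item, pct in item_percentages.items():
    print(f"{item}: {items_reported[item]}/{TOTAL_TRIALS} ({pct}%)")
print("trials accounted for:", trials_accounted_for)
print("item checks (by item, by distribution):", checks_by_item, checks_by_distribution)
```

Both tallies give the same total number of item checks, which suggests that the item-level counts and the distribution of items met per study are mutually consistent.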

Components of multifaceted implementation interventions

Our descriptive synthesis identified nine components of multifaceted implementation interventions across included studies: leadership (the process of formally identifying or appointing a leader and/or a driver as a formal part of the implementation process), barrier identification (a formal process of identifying barriers either prior to the implementation, in order to tailor the process, or during the evaluation of the implementation), tailoring to the context (the process of implementation considered or was tailored to the specific needs of a facility setting), patient involvement (any implementation that actively included patients in the intervention design), communication (a process of formal communication undertaken in the implementation process, for example through coordinated collaborative sessions or structured team work), education (any process of educating healthcare providers or patients: oral, written, off-site, on-site), supportive supervision (the provision of in-person or off-site support for staff), provision of resources (the provision of human or physical resources as a formal part of implementation), and audit and feedback (audit of the implementation process or its outcomes and/or of feedback to participants).

The same component of implementation may have been used more than once in a single trial; for example, the Bashour trial19 uses three clearly distinct elements, all of which can be grouped under the component of education. When counting each of these individually, the nine components of implementation were used 192 times. The number of times a component was used (if more than once) is denoted in online supplementary table 1 in parentheses next to the component. Included studies used between two and seven components, with the largest group (n=18) using four components. This was similar between the 24 studies that had a statistically significant improvement in the hypothesised direction for at least one of their primary outcomes (two to six components used, with 10 using four components) and the 22 studies that did not have a statistically significant improvement (two to seven components, with eight using four components). The results are presented in the order of frequency of components as identified across studies.

The four main components that were most commonly implemented in these studies were education, audit and feedback, provision of resources and leadership. Education was identified in 91% (42) of studies.16 19–57 The content and delivery of education were variable and included elements such as training packages, workshops, in-service training, provision of education materials to facility staff and/or patients, educational campaigns and outreach, on-site and distance training, and self-assessment. Audit and feedback (26 studies, 57%)16 21 24–26 28–38 41 43 45 46 48 51 53 57–59 was conducted in a variety of ways: formal feedback and audit sessions, delivery of customised feedback, self-monitoring and reporting of surveillance benchmarks. Provision of resources (23 studies, 50%)23–26 28–30 36 38 40 42–44 46–48 51 52 55–57 60 was study specific, with resources delivered as checklists, equipment, patient information, online materials and guidelines. The process of having leadership (21 studies, 46%)16 23 24 27 30 35 37–39 42–47 50–52 58 60 61 was centred on the identification of champions, the use of opinion leaders and the engagement of senior clinical staff.

Barrier identification was addressed as part of the implementation intervention in 17 (37%) studies.19 21 23 24 26 33 34 36 37 50 52 58–62 This process was done in one of two ways: identification of barriers prior to implementation in order to target the intervention to overcome these, or identification of barriers as part of the implementation process to be able to explain why an intervention either did not achieve a significant outcome or why it may be difficult to sustain. Communication was another identified component of implementation in 14 (30%) studies23 24 26 29–31 35 37 40 43 48 50 53 61 and this was deployed in a variety of ways, including formalised communication strategies and short messaging service-based reminders to staff about an implementation study. Tailoring an implementation to local context was specifically addressed in nine studies (20%)21 32 36 41 45 52 59 62 and included strategies such as a pre-implementation phase to plan for adaptations needed for the local setting and processes during implementation to make site-specific adjustments. Patient involvement was a part of the implementation intervention in seven studies (15%)22 26 34 39 47 54 and was often in the form of educating patients alongside staff involved in the study. A supportive supervision component was outlined in six studies (13%),28 32 34 48 49 53 most often in the form of either on-site visits to support staff participating in the intervention or the provision of access to phone and email support during the intervention.

Effect of implementation interventions on practices

Twenty-four studies (52%) had a statistically significant improvement in the hypothesised direction of effect for at least one of their primary outcomes. The primary outcomes identified a priori and reported by included studies can be found in online supplementary table 1. Due to the heterogeneity of the studies, it was not possible to undertake a meta-analysis of the quantitative outcomes. Furthermore, the aim of this review was to scope the components of implementation and to interrogate the outcomes of different combinations of implementation approaches. The four components most commonly used in the studies with a significant outcome were education (22 studies, 92%), audit and feedback (14 studies, 58%), leadership (10 studies, 42%) and provision of resources (10 studies, 42%). Studies without a significant outcome employed the same four components, that is, education (20 studies, 91%), audit and feedback (12 studies, 55%), provision of resources (13 studies, 59%) and having leadership (11 studies, 50%). The components of implementation by specialty group are outlined in table 1, separated into those with a statistically significant outcome(s) and those with a non-significant outcome(s).

Table 1.

Components of implementation studies, separated by specialty group and primary outcome (statistically significant or non-significant) (N=46)

| Component of implementation | No of studies | Primary outcome | Obstetrics and gynaecology (n=9) | Neonatology and paediatrics (n=4) | Intensive care (n=4) | Internal medicine specialties (n=20) | Anaesthetics and surgery (n=3) | Hospital wide (n=6) |
|---|---|---|---|---|---|---|---|---|
| Education | 42 | Significant outcome(s) | 3 | 4 | 2 | 10 | 1 | 2 |
| | | Non-significant outcome(s) | 6 | − | 2 | 7 | 2 | 3 |
| Audit and feedback | 26 | Significant outcome(s) | 1 | 3 | 2 | 6 | − | 2 |
| | | Non-significant outcome(s) | 2 | − | 2 | 7 | 1 | − |
| Provision of resources | 23 | Significant outcome(s) | 1 | 3 | 1 | 3 | 1 | 1 |
| | | Non-significant outcome(s) | 3 | − | 1 | 6 | − | 3 |
| Leadership | 21 | Significant outcome(s) | 2 | 1 | − | 6 | 1 | − |
| | | Non-significant outcome(s) | 1 | − | 1 | 6 | 2 | 1 |
| Barrier identification | 17 | Significant outcome(s) | 2 | 1 | − | 4 | 1 | − |
| | | Non-significant outcome(s) | 4 | − | − | 3 | 1 | 1 |
| Communication | 14 | Significant outcome(s) | 1 | 1 | 2 | 3 | − | 1 |
| | | Non-significant outcome(s) | 1 | − | 1 | 3 | 1 | − |
| Context | 9 | Significant outcome(s) | 1 | − | − | 3 | 1 | − |
| | | Non-significant outcome(s) | 2 | − | 1 | 1 | − | − |
| Patient involvement | 7 | Significant outcome(s) | − | 1 | − | 2 | − | − |
| | | Non-significant outcome(s) | 2 | − | − | 1 | − | 1 |
| Supportive supervision | 6 | Significant outcome(s) | − | 1 | − | 2 | − | 1 |
| | | Non-significant outcome(s) | − | − | 1 | 1 | − | − |
| Studies with significant outcome(s) | | | 3 | 4 | 2 | 12 | 1 | 2 |
| Studies with non-significant outcome(s) | | | 6 | 0 | 2 | 8 | 2 | 4 |
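As a reading aid for table 1, the sketch below transcribes the table cells (treating "−" as zero) and checks that, for each component, the studies with significant and non-significant outcomes add up to the reported number of studies. The data structure and names are illustrative assumptions, not part of the review's analysis.

```python
# Column order: obstetrics and gynaecology, neonatology and paediatrics,
# intensive care, internal medicine specialties, anaesthetics and surgery,
# hospital wide. A "−" cell in table 1 is entered as 0.
# Each entry: (reported number of studies, significant row, non-significant row).
TABLE_1 = {
    "Education": (42, [3, 4, 2, 10, 1, 2], [6, 0, 2, 7, 2, 3]),
    "Audit and feedback": (26, [1, 3, 2, 6, 0, 2], [2, 0, 2, 7, 1, 0]),
    "Provision of resources": (23, [1, 3, 1, 3, 1, 1], [3, 0, 1, 6, 0, 3]),
    "Leadership": (21, [2, 1, 0, 6, 1, 0], [1, 0, 1, 6, 2, 1]),
    "Barrier identification": (17, [2, 1, 0, 4, 1, 0], [4, 0, 0, 3, 1, 1]),
    "Communication": (14, [1, 1, 2, 3, 0, 1], [1, 0, 1, 3, 1, 0]),
    "Context": (9, [1, 0, 0, 3, 1, 0], [2, 0, 1, 1, 0, 0]),
    "Patient involvement": (7, [0, 1, 0, 2, 0, 0], [2, 0, 0, 1, 0, 1]),
    "Supportive supervision": (6, [0, 1, 0, 2, 0, 1], [0, 0, 1, 1, 0, 0]),
}

# Per-component totals for studies with and without a significant outcome.
row_totals = {
    name: (sum(significant), sum(non_significant))
    for name, (_, significant, non_significant) in TABLE_1.items()
}

# Components whose two rows do not add up to the reported number of studies.
mismatches = [
    name
    for name, (reported, _, _) in TABLE_1.items()
    if sum(row_totals[name]) != reported
]

for name, (sig, non_sig) in row_totals.items():
    print(f"{name}: {sig} significant + {non_sig} non-significant = {sig + non_sig}")
print("components with inconsistent totals:", mismatches)
```

With the values transcribed above, every component's two rows sum to its reported total.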

Discussion

Our systematic review included and characterised a total of 46 cluster randomised trials on implementation of effective healthcare practices from a range of high-income, middle-income and low-income settings. From these, we identified a list of nine components of multifaceted implementation interventions, namely leadership, barrier identification, tailoring to the context, patient involvement, communication, education, supportive supervision, provision of resources, and audit and feedback. The four components most frequently used were education, audit and feedback, leadership and provision of resources. These components were used in both statistically successful and unsuccessful trials as defined by the trial's primary outcomes. The number or combination of components of implementation was not associated with a statistically significant improvement in the primary outcome, as reported by the study. It is worth noting that statistical significance, or lack thereof, does not necessarily translate into clinical significance.63

The formal use of leadership was common to many of the strategies in the included studies. There is little argument against the need for leaders or champions as a crucial part of any complex intervention,64 or as a basic component of a functioning health system.65 A Cochrane review on local opinion leaders as an intervention found that it may have some impact on changing professional practice, but the method of delivery and its role as a stand-alone intervention are difficult to define.66 There is often poor or no reporting on how leaders are identified, recruited and supported, and limited clarity and assessment of what aspects of leadership contribute to its success. This prevents a clear articulation of exactly how leadership components should be developed and implemented. The relationship between leadership and success of implementation may be more complex: are settings that are able to successfully implement an evidence-based practice already benefiting from good leadership, rendering additional efforts during implementation unnecessary? Additionally, the lack of an explicit statement of leadership as a component of a complex intervention does not mean it was not a critical part of the intervention.

The same question applies to other components, such as barrier identification and tailoring to the local context. A situational assessment must involve the identification of contextually specific facilitators and barriers that would need to be considered in the implementation of an intervention,67 yet the critical steps of barrier identification and tailoring to the local context were infrequently identified as implementation components in the studies in this review. It is important to note that these components, as well as others, may need to be repeatedly applied and reviewed as the implementation is rolled out and assessed. Patient involvement was not a commonly reported component of implementation in the studies included in this review, yet clearly all included studies involved patients in some way, usually as part of measurable clinical outcomes. The specifics of patient, care recipient or public involvement will vary widely depending on the practice in question.68 69 In our opinion, all implementation studies should at least consider the need to engage and involve individuals (including patients, care recipients, families, consumer groups or the public) from the perspective of equity and rights, and the importance of the experience of healthcare consumers in how they perceive and use healthcare services.70

There are a multitude of methods for providing education, yet assessing the effectiveness of any of these remains problematic.71 How does one determine which part(s) of the education process (such as attendance, delivery, engagement and assessment) are the critical element(s) that lead to success of the intervention? For example, there is evidence that education delivered as an outreach visit changes professional practice, but the variation in the response (as both an effective and non-effective tool) to this intervention is difficult to explain.72 We saw a vast array of educational methods used in our review, with no clear pattern that separated the successful from the non-successful interventions. As a single intervention, targeted education of health professionals (with various delivery methods) may have a small effect on both practice and patient outcomes in some trials,73 74 but the variation in response is wide. This same phenomenon is evident in the included studies in our review; education as a component of a complex intervention was used in more than 90% of the included studies, yet only slightly more than half found a significant effect of their intervention based on the primary outcomes reported.

Supportive supervision was rarely identified as a component of implementation in the studies included in this review. This process can be considered necessary at multiple points during implementation (considering that all staff members could reasonably benefit from it), and at all times it must be done in such a way that facilitates the delivery of quality care.75 A functioning health system, including one that is adequately resourced, is clearly critical to the success of any new intervention. While the studies in this review refer to resources in the context of those necessary and new to the implementation, it is also worth considering that basic resources, in the form of human resources, financial, capital and material, are critical to the success of both health systems and implementation processes.75

Audit and feedback has been shown in a Cochrane review to improve professional practice,76 but, similar to education, there are multiple factors that have the potential to affect the size of the impact. Having leadership has been shown to be a critical factor in the success of an audit and feedback component;77 however, half of the included studies in this review that identified audit and feedback in their intervention did not specify leadership as a component. This makes it increasingly difficult to evaluate these components in isolation.

Looking at our results, it is evident that these components are not unique to any one step in the implementation process. Moreover, it is clear from this review that the components identified are not unique to a particular specialty, suggesting that these nine components are the most commonly tested in cluster randomised trials across settings and health areas while implementing an evidence-based practice. However, they are also not unique to those studies that one could classify as successful or not based on the reported primary outcomes. This remains one of the significant challenges in taking an evidence-based practice to successful implementation. How does one implement the right mix of components in the right way so that an implementation is successful and a practice change occurs? At the least, we suggest that an explicit consideration of these nine components is necessary as part of a facility-based implementation activity. The limited reporting and/or assessment of the implementation process, combined with a degree of caution in assessing success given the vast differences in primary outcomes, means we were limited in being able to attribute significant outcomes (and successful implementation trials) to specific aspects of the implementation components.

In light of our findings, one of the main lessons learnt centred on the challenges of appropriately reporting and analysing the types of included studies. Implementation studies (of varying trial designs) are inconsistently reported in the literature. This might be, in part, due to a lack of standard reporting guidelines,13 14 78 as well as due to variability and inconsistency in the use of the terms to describe implementation strategies.79 Poor reporting of the methodological process of implementation makes it difficult to assess at what point in the process certain factors were critical to success. Furthermore, various systematic reviews of implementation strategies show that these interventions are effective some of the time in some settings, and not all of the time in all settings, and a clearer framework for the implementation process (and measuring its effect) would improve the robustness of the data generated and its applicability to other implementation studies.80 For example, the Medical Research Council (UK) provides researchers with a framework on developing and evaluating complex interventions,81 and one would argue that this framework should be used in combination with a standardised reporting process in implementation studies.

There are many frameworks to consider when planning an implementation intervention.82 83 We have identified nine components of implementation that are consistent across cluster randomised trials and disciplines. While a multifaceted intervention is not necessarily more effective than a single-component intervention,84 it is possible that some or all of these nine components could be integrated into existing implementation frameworks, and this warrants further consideration.

Strengths and limitations

We undertook a broad search of trials covering all medical specialties. Whereas other reviews have embraced a more generic category of implementation strategies (such as multifaceted interventions),85 our synthesis unpacked these into their individual components.

The search findings are limited by the inconsistent inclusion of the trial design (cluster) in titles and abstracts, as reported in the literature.86 Despite employing a full-text search strategy to overcome this, we might have missed relevant citations. We did not include other study designs, which might have helped to identify components of implementation. Moreover, the quality of implementation reporting was highly variable, limiting detailed analysis of the components and their combinations. Because our review covered all medical specialties, our analysis of effects was limited to the primary outcomes reported by each study.

Conclusions

We have identified and evaluated a set of components of implementation in cluster randomised trials that is common across disciplines. Our review suggests that the impact of different components is not consistent across studies, underlining the importance of better reporting of these implementation components to allow replication and adaptation in different settings. This systematic review will inform the ongoing work at WHO to identify, support the implementation of, and learn from effective intervention strategies to improve quality of care for maternal and newborn health. In this new era of global health with the Sustainable Development Goals, we must focus on implementing proven practices through well-designed and well-reported approaches that incorporate sets of components facilitating the translation of research into practice and that employ rigorous monitoring and evaluation.

Footnotes

Contributors: ERA and OT designed the protocol. ERA, DNK and OT performed data extraction and assessment of bias and quality of reporting. ERA drafted the manuscript. ERA, OT, JPV, DNK, OTO, QL and AMG reviewed the draft, provided critical review and contributed to the revision of the manuscript. ERA, OT, JPV, DNK, OTO, QL and AMG read and approved the final manuscript.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

Correction notice: This article has been corrected since it was first published. The corresponding author's e-mail address has been corrected.

References

- 1. Implementation Science. About implementation science. 2015. http://www.implementationscience.com/about

- 2. Powell BJ, Proctor EK, Glass JE. A systematic review of strategies for implementing empirically supported mental health interventions. Res Soc Work Pract 2014;24:192–212. 10.1177/1049731513505778

- 3. Glasgow RE, Lichtenstein E, Marcus AC. Why don't we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health 2003;93:1261–7. 10.2105/AJPH.93.8.1261

- 4. Straus SE, Tetroe J, Graham I, et al. Canadian Medical Association journal = journal de L'association Medicale Canadienne. CMAJ 2009;181:165–8. 19620273

- 5. Blencowe H, Cousens S, Jassir FB, et al. National, regional, and worldwide estimates of stillbirth rates in 2015, with trends from 2000: a systematic analysis. Lancet Glob Health 2016;4:e98–e108. 10.1016/S2214-109X(15)00275-2

- 6. Liu L, Oza S, Hogan D, et al. Global, regional, and national causes of child mortality in 2000–13, with projections to inform post-2015 priorities: an updated systematic analysis. Lancet 2015;385:430–40. 10.1016/S0140-6736(14)61698-6

- 7. Alkema L, Chou D, Hogan D. Global, regional, and national levels and trends in maternal mortality between 1990 and 2015, with scenario-based projections to 2030: a systematic analysis by the UN Maternal Mortality Estimation Inter-Agency Group.

- 8. Tunçalp Ö, Were WM, MacLennan C, et al. Quality of care for pregnant women and newborns—the WHO vision. BJOG 2015;122:1045–9. 10.1111/1471-0528.13451

- 9. World Health Organization. What is the Quality of Care Network? 2017. http://www.who.int/maternal_child_adolescent/topics/quality-of-care/network/en/

- 10. Tunçalp Ö, Were W, Bahl R, et al. Authors’ reply re: quality of care for pregnant women and newborns—the WHO vision. BJOG 2016;123:145–45. 10.1111/1471-0528.13748

- 11. Mdege ND, Man MS, Taylor Nee Brown CA, et al. Systematic review of stepped wedge cluster randomized trials shows that design is particularly used to evaluate interventions during routine implementation. J Clin Epidemiol 2011;64:936–48. 10.1016/j.jclinepi.2010.12.003

- 12. Higgins J, Green S. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]: The Cochrane Collaboration. 2011. http://www.cochrane-handbook.org

- 13. Kågesten A, Tunçalp Ö, Ali M, et al. A systematic review of Reporting Tools Applicable to Sexual and Reproductive Health Programmes: step 1 in developing Programme Reporting Standards. PLoS One 2015;10:e0138647 10.1371/journal.pone.0138647

- 14. Pinnock H, Epiphaniou E, Sheikh A, et al. Developing standards for reporting implementation studies of complex interventions (StaRI): a systematic review and e-Delphi. Implement Sci 2015;10:42 10.1186/s13012-015-0235-z

- 15. Lokker C, McKibbon KA, Colquhoun H, et al. A scoping review of classification schemes of interventions to promote and integrate evidence into practice in healthcare. Implement Sci 2015;10:1 10.1186/s13012-015-0220-6

- 16. Dumont A, Fournier P, Abrahamowicz M, et al. Quality of care, risk management, and technology in obstetrics to reduce hospital-based maternal mortality in Senegal and Mali (QUARITE): a cluster-randomised trial. Lancet 2013;382:146–57. 10.1016/S0140-6736(13)60593-0

- 17. Althabe F, Buekens P, Bergel E, et al. A cluster randomized controlled trial of a behavioral intervention to facilitate the development and implementation of clinical practice guidelines in Latin American maternity hospitals: The Guidelines Trial: study protocol [ISRCTN82417627]. BMC Womens Health 2005;5:4 10.1186/1472-6874-5-4

- 18. Gülmezoglu AM, Langer A, Piaggio G, et al. Cluster randomised trial of an active, multifaceted educational intervention based on the WHO Reproductive Health Library to improve obstetric practices. BJOG 2007;114:16–23. 10.1111/j.1471-0528.2006.01091.x

- 19. Bashour HN, Kanaan M, Kharouf MH, et al. The effect of training doctors in communication skills on women's satisfaction with doctor–woman relationship during labour and delivery: a stepped wedge cluster randomised trial in Damascus. BMJ Open 2013;3:e002674 10.1136/bmjopen-2013-002674

- 20. Kramer MS, Chalmers B, Hodnett ED, et al. Promotion of breastfeeding intervention trial (PROBIT): a cluster-randomized trial in the republic of Belarus. Design, follow-up, and data validation. Adv Exp Med Biol 2000;478:327–45.

- 21. Foy R, Penney GC, Grimshaw JM, et al. A randomised controlled trial of a tailored multifaceted strategy to promote implementation of a clinical guideline on induced abortion care. BJOG 2004;111:726–33. 10.1111/j.1471-0528.2004.00168.x

- 22. Ismail KM, Kettle C, Macdonald SE, et al. Perineal Assessment and Repair Longitudinal Study (PEARLS): a matched-pair cluster randomized trial. BMC Med 2013;11:209 10.1186/1741-7015-11-209

- 23. Althabe F, Buekens P, Bergel E, et al. A behavioral intervention to improve obstetrical care. N Engl J Med 2008;358:1929–40. 10.1056/NEJMsa071456

- 24. Deneux-Tharaux C, Dupont C, Colin C, et al. Multifaceted intervention to decrease the rate of severe postpartum haemorrhage: the PITHAGORE6 cluster-randomised controlled trial. BJOG 2010;117:1278–87. 10.1111/j.1471-0528.2010.02648.x

- 25. Horbar JD, Bracken M, Buzas J, et al. Cluster randomized trial of a multifaceted intervention to promote evidence-based surfactant therapy. Pediatric research 2004;55:39.

- 26. Lee SK, Aziz K, Singhal N, et al. Improving the quality of care for infants: a cluster randomized controlled trial. Can Med Assoc J 2009;181:469–76. 10.1503/cmaj.081727

- 27. Acolet D, Allen E, Houston R, et al. Improvement in neonatal intensive care unit care: a cluster randomised controlled trial of active dissemination of information. Arch Dis Child Fetal Neonatal Ed 2011;96:F434–F439. 10.1136/adc.2010.207522

- 28. Ayieko P, Ntoburi S, Wagai J, et al. A multifaceted intervention to implement guidelines and improve admission paediatric care in Kenyan district hospitals: a cluster randomised trial. PLoS Med 2011;8:e1001018 10.1371/journal.pmed.1001018

- 29. Scales DC, Dainty K, Hales B, et al. A multifaceted intervention for quality improvement in a network of intensive care units: a cluster randomized trial. JAMA 2011;305:363–72. 10.1001/jama.2010.2000

- 30. Doig GS, Simpson F, Finfer S, et al. Effect of evidence-based feeding guidelines on mortality of critically ill adults: a cluster randomized controlled trial. JAMA 2008;300:2731–41. 10.1001/jama.2008.826

- 31. Martin CM, Doig GS, Heyland DK, et al. Multicentre, cluster-randomized clinical trial of algorithms for critical-care enteral and parenteral therapy (ACCEPT). CMAJ 2004;170:309–204. 10.1177/0115426504019003309

- 32. van der Veer SN, de Vos ML, van der Voort PH, et al. Effect of a multifaceted performance feedback strategy on length of stay compared with benchmark reports alone: a cluster randomized trial in intensive care*. Crit Care Med 2013;41:1893–904. 10.1097/CCM.0b013e31828a31ee

- 33. Fuller C, Michie S, Savage J, et al. The Feedback Intervention Trial (FIT)—improving hand-hygiene compliance in UK healthcare workers: a stepped wedge cluster randomised controlled trial. PLoS One 2012;7:e41617 10.1371/journal.pone.0041617

- 34. Dijkstra RF, Braspenning JC, Huijsmans Z, et al. Introduction of diabetes passports involving both patients and professionals to improve hospital outpatient diabetes care. Diabetes Res Clin Pract 2005;68:126–34. 10.1016/j.diabres.2004.09.020

- 35. Power M, Tyrrell PJ, Rudd AG, et al. Did a quality improvement collaborative make stroke care better? A cluster randomized trial. Implement Sci 2014;9:40 10.1186/1748-5908-9-40

- 36. Barkun AN, Bhat M, Armstrong D, et al. Effectiveness of disseminating consensus management recommendations for ulcer bleeding: a cluster randomized trial. CMAJ 2013;185:E156–66. 10.1503/cmaj.120095

- 37. Scott PA, Meurer WJ, Frederiksen SM, et al. A multilevel intervention to increase community hospital use of alteplase for acute stroke (INSTINCT): a cluster-randomised controlled trial. Lancet Neurol 2013;12:139–48. 10.1016/S1474-4422(12)70311-3

- 38. Pai M, Lloyd NS, Cheng J, et al. Strategies to enhance venous thromboprophylaxis in hospitalized medical patients (SENTRY): a pilot cluster randomized trial. Implement Sci 2013;8:1 10.1186/1748-5908-8-1

- 39. Metlay JP, Camargo CA, MacKenzie T, et al. Cluster-randomized trial to improve antibiotic use for adults with acute respiratory infections treated in emergency departments. Ann Emerg Med 2007;50:221–30. 10.1016/j.annemergmed.2007.03.022

- 40. Berwanger O, Guimarães HP, Laranjeira LN, et al. Effect of a multifaceted intervention on use of evidence-based therapies in patients with acute coronary syndromes in Brazil: the BRIDGE-ACS randomized trial. JAMA 2012;307:2041–9. 10.1001/jama.2012.413

- 41. Romero A, Alonso C, Marín I, et al. [Effectiveness of a multifactorial strategy for implementing clinical guidelines on unstable angina: cluster randomized trial]. Rev Esp Cardiol 2005;58:640–8.

- 42. Panella M, Marchisio S, Demarchi ML, et al. Reduced in-hospital mortality for heart failure with clinical pathways: the results of a cluster randomised controlled trial. Qual Saf Health Care 2009;18:369–73. 10.1136/qshc.2008.026559

- 43. Kinsman LD, Rotter T, Willis J, et al. Do clinical pathways enhance access to evidence-based acute myocardial infarction treatment in rural emergency departments? Aust J Rural Health 2012;20:59–66. 10.1111/j.1440-1584.2012.01262.x

- 44. Thilly N, Briançon S, Juillière Y, et al. Improving ACE inhibitor use in patients hospitalized with systolic heart failure: a cluster randomized controlled trial of clinical practice guideline development and use. J Eval Clin Pract 2003;9:373–82. 10.1046/j.1365-2753.2003.00441.x

- 45. Du X, Gao R, Turnbull F, et al. Hospital quality improvement initiative for patients with acute coronary syndromes in China: a cluster randomized, controlled trial. Circ Cardiovasc Qual Outcomes 2014;7:217–26. 10.1161/CIRCOUTCOMES.113.000526

- 46. Panella M, Marchisio S, Brambilla R, et al. A cluster randomized trial to assess the effect of clinical pathways for patients with stroke: results of the clinical pathways for effective and appropriate care study. BMC Med 2012;10:71 10.1186/1741-7015-10-71

- 47. Cumming RG, Sherrington C, Lord SR, et al. Cluster randomised trial of a targeted multifactorial intervention to prevent falls among older people in hospital. BMJ 2008;336:758–60. 10.1136/bmj.39499.546030.BE

- 48. Costantini M, Romoli V, Leo SD, et al. Liverpool Care Pathway for patients with cancer in hospital: a cluster randomised trial. Lancet 2014;383:226–37. 10.1016/S0140-6736(13)61725-0

- 49. Weaver MR, Burnett SM, Crozier I, et al. Improving facility performance in infectious disease care in Uganda: a mixed design study with pre/post and cluster randomized trial components. PLoS One 2014;9:e103017 10.1371/journal.pone.0103017

- 50. Rycroft-Malone J, Seers K, Crichton N, et al. A pragmatic cluster randomised trial evaluating three implementation interventions. Implement Sci 2012;7:80 10.1186/1748-5908-7-80

- 51. Simunovic M, Coates A, Smith A, et al. Uptake of an innovation in surgery: observations from the cluster-randomized Quality Initiative in Rectal Cancer trial. Canadian Journal of Surgery 2013;56:415–21. 10.1503/cjs.019112

- 52. Wright FC, Gagliardi AR, Law CH, et al. A randomized controlled trial to improve lymph node assessment in stage II colon cancer. Arch Surg 2008;143:1050–5. 10.1001/archsurg.143.11.1050

- 53. Huis A, Schoonhoven L, Grol R, et al. Impact of a team and leaders-directed strategy to improve nurses’ adherence to hand hygiene guidelines: a cluster randomised trial. Int J Nurs Stud 2013;50:464–74. 10.1016/j.ijnurstu.2012.08.004

- 54. Murray RL, Coleman T, Antoniak M, et al. The effect of proactively identifying smokers and offering smoking cessation support in primary care populations: a cluster-randomized trial. Addiction 2008;103:998–1006. 10.1111/j.1360-0443.2008.02206.x

- 55. Haines TP, Bell RA, Varghese PN. Pragmatic, cluster randomized trial of a policy to introduce low-low beds to hospital wards for the prevention of falls and fall injuries. J Am Geriatr Soc 2010;58:435–41. 10.1111/j.1532-5415.2010.02735.x

- 56. Hillman K, Chen J, Cretikos M, et al. Introduction of the medical emergency team (MET) system: a cluster-randomised controlled trial. Lancet 2005;365:2091–7. 10.1016/S0140-6736(05)66733-5

- 57. Stevenson KB, Searle K, Curry G, et al. Infection control interventions in small rural hospitals with limited resources: results of a cluster-randomized feasibility trial. Antimicrob Resist Infect Control 2014;3:10 10.1186/2047-2994-3-10

- 58. Lakshminarayan K, Borbas C, McLaughlin B, et al. A cluster-randomized trial to improve stroke care in hospitals. Neurology 2010;74:1634–42. 10.1212/WNL.0b013e3181df096b

- 59. Schouten JA, Hulscher ME, Trap-Liefers J, et al. Tailored interventions to improve antibiotic use for lower respiratory tract infections in hospitals: a cluster-randomized, controlled trial. Clin Infect Dis 2007;44:931–41. 10.1086/512193

- 60. Schultz TJ, Kitson AL, Soenen S, et al. Does a multidisciplinary nutritional intervention prevent nutritional decline in hospital patients? A stepped wedge randomised cluster trial. e-SPEN Journal 2014;9:e84–e90. 10.1016/j.clnme.2014.01.002

- 61. Middleton S, McElduff P, Ward J, et al. Implementation of evidence-based treatment protocols to manage fever, hyperglycaemia, and swallowing dysfunction in acute stroke (QASC): a cluster randomised controlled trial. Lancet 2011;378:1699–706. 10.1016/S0140-6736(11)61485-2

- 62. Kramer MS, Chalmers B, Hodnett ED, et al. Promotion of Breastfeeding Intervention Trial (PROBIT): a cluster-randomized trial in the republic of Belarus. Pediatric research 2000;47:203A.

- 63. Effective Practice and Organisation of Care (EPOC). EPOC Resources for review authors. 2013. http://epoc.cochrane.org/epoc-specific-resources-review-authors

- 64. Aarons GA, Ehrhart MG, Farahnak LR, et al. Leadership and organizational change for implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implement Sci 2015;10:11 10.1186/s13012-014-0192-y

- 65. Monitoring the building blocks of health systems: a handbook of indicators and their measurement strategies. Geneva: World Health Organization, 2010.

- 66. Flodgren G, Parmelli E, Doumit G, et al. Local opinion leaders: effects on professional practice and health care outcomes. Cochrane Database Syst Rev 2011;8:CD000125 10.1002/14651858.CD000125.pub4

- 67. Straus SE, Moore JE, Uka S, et al. Determinants of implementation of maternal health guidelines in Kosovo: mixed methods study. Implement Sci 2013;8:108 10.1186/1748-5908-8-108

- 68. Brett J, Staniszewska S, Mockford C, et al. Mapping the impact of patient and public involvement on health and social care research: a systematic review. Health Expect 2014;17:637–50. 10.1111/j.1369-7625.2012.00795.x

- 69. Mockford C, Staniszewska S, Griffiths F, et al. The impact of patient and public involvement on UK NHS health care: a systematic review. Int J Qual Health Care 2012;24:28–38. 10.1093/intqhc/mzr066

- 70. Bohren MA, Hunter EC, Munthe-Kaas HM, et al. Facilitators and barriers to facility-based delivery in low- and middle-income countries: a qualitative evidence synthesis. Reprod Health 2014;11:71 10.1186/1742-4755-11-71

- 71. Roland D. Linear rather than vertical analysis of educational initiatives: proposal of the 7is framework. J Educ Eval Health Prof 2015.

- 72. O’Brien MA, Rogers S, Jamtvedt G, et al. Educational outreach visits: effects on professional practice and health care outcomes. Cochrane Database Syst Rev 2007:CD000409 10.1002/14651858.CD000409.pub2

- 73. Forsetlund L, Bjørndal A, Rashidian A, et al. Continuing education meetings and workshops: effects on professional practice and health care outcomes. Cochrane Database Syst Rev 2009:CD003030 10.1002/14651858.CD003030.pub2

- 74. Reeves S, Perrier L, Goldman J, et al. Interprofessional education: effects on professional practice and healthcare outcomes (update). Cochrane Database Syst Rev 2013;3:CD002213.

- 75. Bergh AM, Allanson E, Pattinson RC. What is needed for taking emergency obstetric and neonatal programmes to scale? Best Pract Res Clin Obstet Gynaecol 2015;29:1017–27. 10.1016/j.bpobgyn.2015.03.015

- 76. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev 2012;6:CD000259 10.1002/14651858.CD000259.pub3

- 77. Belizán M, Bergh AM, Cilliers C, et al. Stages of change: a qualitative study on the implementation of a perinatal audit programme in South Africa. BMC Health Serv Res 2011;11:243 10.1186/1472-6963-11-243

- 78. Newhouse RP, White KM. Guiding implementation: frameworks and resources for evidence translation. J Nurs Adm 2011;41:513–6. 10.1097/NNA.0b013e3182378bb0

- 79. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci 2015;10:21 10.1186/s13012-015-0209-1

- 80. Roll C, Hüning B, Käunicke M, et al. Umbilical artery catheter blood sampling volume and velocity: impact on cerebral blood volume and oxygenation in very-low-birthweight infants. Acta Paediatr 2006;95:68–73. 10.1080/08035250500369577

- 81. Craig P, Dieppe P, Macintyre S, et al. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ 2008;337:a1655 10.1136/bmj.a1655

- 82. Michie S, van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci 2011;6:42 10.1186/1748-5908-6-42

- 83. Moullin JC, Sabater-Hernández D, Fernandez-Llimos F, et al. A systematic review of implementation frameworks of innovations in healthcare and resulting generic implementation framework. Health Res Policy Syst 2015;13:16 10.1186/s12961-015-0005-z

- 84. Squires JE, Sullivan K, Eccles MP, et al. Are multifaceted interventions more effective than single-component interventions in changing health-care professionals’ behaviours? An overview of systematic reviews. Implement Sci 2014;9:1 10.1186/s13012-014-0152-6

- 85. Pantoja T, Opiyo N, Ciapponi A, et al. Implementation strategies for health systems in low-income countries: an overview of systematic reviews. Cochrane Database Syst Rev 2014. http://onlinelibrary.wiley.com/store/10.1002/14651858.CD011086/asset/CD011086.pdf?v=1&t=igxi30qi&s=62c51ad6eeaa841f18dd90e052b1a4b18dbd3bfe

- 86. Ivers NM, Taljaard M, Dixon S, et al. Impact of CONSORT extension for cluster randomised trials on quality of reporting and study methodology: review of random sample of 300 trials, 2000–8. BMJ 2011;343:d5886 10.1136/bmj.d5886

Supplementary Materials

bmjgh-2016-000266supp002.pdf (43.5KB, pdf)

bmjgh-2016-000266supp001.pdf (431.8KB, pdf)