Summary

The voice is the most direct link we have to others’ minds, allowing us to communicate using a rich variety of speech cues [1, 2]. This link is particularly critical early in life, as parents draw infants into the structure of their environment using infant-directed speech (IDS), a communicative code with unique pitch and rhythmic characteristics relative to adult-directed speech (ADS) [3, 4]. To begin breaking into language, infants must discern subtle statistical differences about people and voices in order to direct their attention toward the most relevant signals. Here, we uncover a new defining feature of IDS: mothers significantly alter statistical properties of vocal timbre when speaking to their infants. Timbre, the tone color or unique quality of a sound, is a spectral fingerprint that helps us instantly identify and classify sound sources, such as individual people and musical instruments [5, 6, 7]. We recorded 24 mothers’ naturalistic speech while they interacted with their infants and with adult experimenters in their native language. Half of the participants were English speakers, and half were not. Using a support vector machine classifier, we found that mothers consistently shifted their timbre between ADS and IDS. Importantly, this shift was similar across languages, suggesting that such alterations of timbre may be universal. These findings have theoretical implications for understanding how infants tune in to their local communicative environments. Moreover, our classification algorithm for identifying infant-directed timbre has direct translational implications for speech recognition technology.

Keywords: speech, communication, infancy, timbre, auditory perception, audience design, summary statistics

Results

If mothers systematically alter their unique timbre signatures when speaking to their infants, we predicted that we could use freely improvised, naturalistic speech data to discriminate infant-directed from adult-directed speech. Furthermore, if this systematic shift in timbre production during IDS exists, we expected that it would manifest similarly across a wide variety of languages.

Twenty-four mother-infant dyads participated in this study (see STAR Methods). We recorded mothers’ naturalistic speech while they spoke to their infants and to an adult interviewer. During the recorded session, half of the mothers spoke only English and the other half spoke only a non-English language (the language they predominantly used when speaking to their child at home). For each participant, we extracted 20 short utterances from each condition (IDS, ADS) and, for each utterance, computed a single, time-averaged vector of Mel-frequency cepstral coefficients (MFCCs), a concise summary statistic representing the signature tone ‘color’ of that mother’s voice (see STAR Methods). This measure – a limited set of time-averaged values that concisely describe a sound’s unique spectral properties [8, 9] – has been shown to represent human timbre perception quite well [7]. As an initial validation of our method, we first confirmed that support-vector machine (SVM) classification is sensitive enough to replicate previous work distinguishing individual mothers [10, 11] by performing the classification on these MFCC vectors across subjects (see STAR Methods). Then, to test our primary question of interest, we performed a similar SVM classification on these vectors to distinguish IDS from ADS. Our use of MFCCs as a global summary measure of vocal signature across varied, naturalistic speech (see STAR Methods) represents a new approach to discriminating communicative modes in real-life contexts.

Classification of infant- vs. adult- directed speech

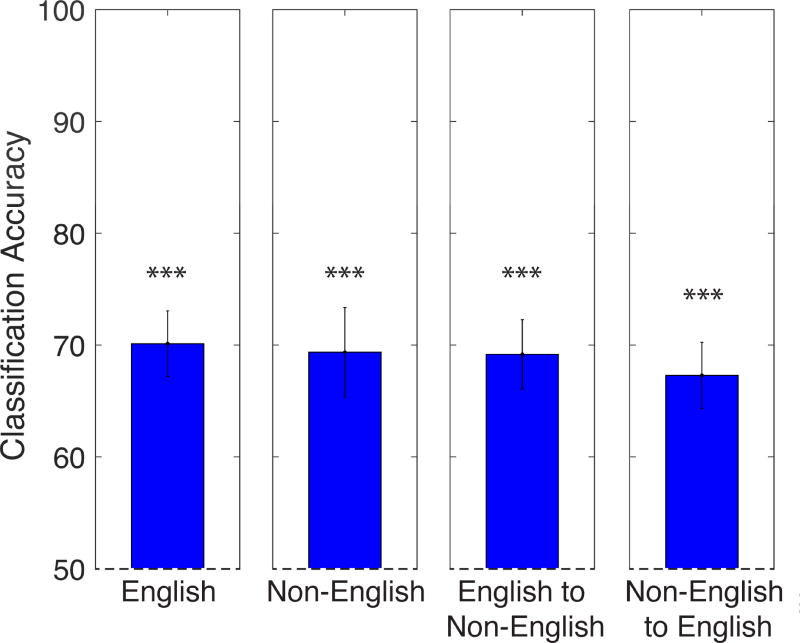

Using a support-vector machine classifier (SVM-RBF, see STAR Methods), we were able to distinguish utterances of infant-directed from adult-directed speech significantly above chance using the MFCC, here used as a summary statistical feature vector that represents the overall timbre fingerprint of someone’s voice (see STAR Methods). Our classification analysis discriminated IDS from ADS for both English speech (Figure 2; two-tailed, one-sample t-test, t(11) = 6.85, p < .0001) and non-English speech (t(11) = 4.84, p < .001). These results indicate that timbre shifts across communicative modes are highly consistent across mothers.

Figure 2. Accuracy rates for classifying mothers’ IDS vs. ADS using MFCC vectors.

The first two bars indicate results from training and testing the classifier on English (first bar) and on all other languages (second bar). The third bar results from training the classifier on English data and testing on non-English data (and vice versa for the fourth bar). Chance (dashed line) is 50%. N = 12. Classification performance is represented as mean percent correct and ± SEM across cross-validation folds (leave-one-subject-out). ***p < .001.
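The one-sample t-tests reported above compare per-fold accuracies (one value per left-out subject, hence 11 degrees of freedom for N = 12) against the 50% chance level. The minimal Python sketch below computes that statistic; the accuracy values are hypothetical placeholders, not the study’s data, and the p-value step is omitted because it requires a Student-t CDF that Python’s standard library does not provide.

```python
import math
import statistics


def one_sample_t(accuracies, chance=50.0):
    """t statistic and degrees of freedom for fold accuracies vs. a chance level.

    accuracies: percent correct for each cross-validation fold (one per
    left-out subject under leave-one-subject-out).
    """
    n = len(accuracies)
    if n < 2:
        raise ValueError("need at least two folds")
    sem = statistics.stdev(accuracies) / math.sqrt(n)  # SEM across folds
    return (statistics.mean(accuracies) - chance) / sem, n - 1


# Hypothetical accuracies for 12 LOSO folds (illustrative only).
folds = [70.0, 65.0, 72.5, 60.0, 67.5, 75.0, 62.5, 70.0, 68.0, 66.0, 71.0, 64.0]
t, df = one_sample_t(folds)
print(f"t({df}) = {t:.2f}")
```

If SciPy is available, `scipy.stats.ttest_1samp(folds, 50.0)` returns the same statistic together with a two-tailed p-value.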

Classification of infant- vs. adult-directed speech generalizes across languages

In a cross-language decoding analysis, we also found that the classifier trained to distinguish English IDS from ADS could discriminate these two modes of speech significantly above chance when tested instead on non-English data (Figure 2, t(11) = 6.16, p < .0001). Conversely, a classifier trained to distinguish non-English IDS from ADS could also successfully discriminate English data (t(11) = 5.84, p < .001). Thus, the timbral transformation used (perhaps automatically) by English speakers when switching from ADS to IDS generalizes robustly to other languages. See STAR Methods for the full list of languages tested.

Classification of infant- vs. adult-directed speech cannot be fully explained by differences in pitch (F0) or formants (F1, F2)

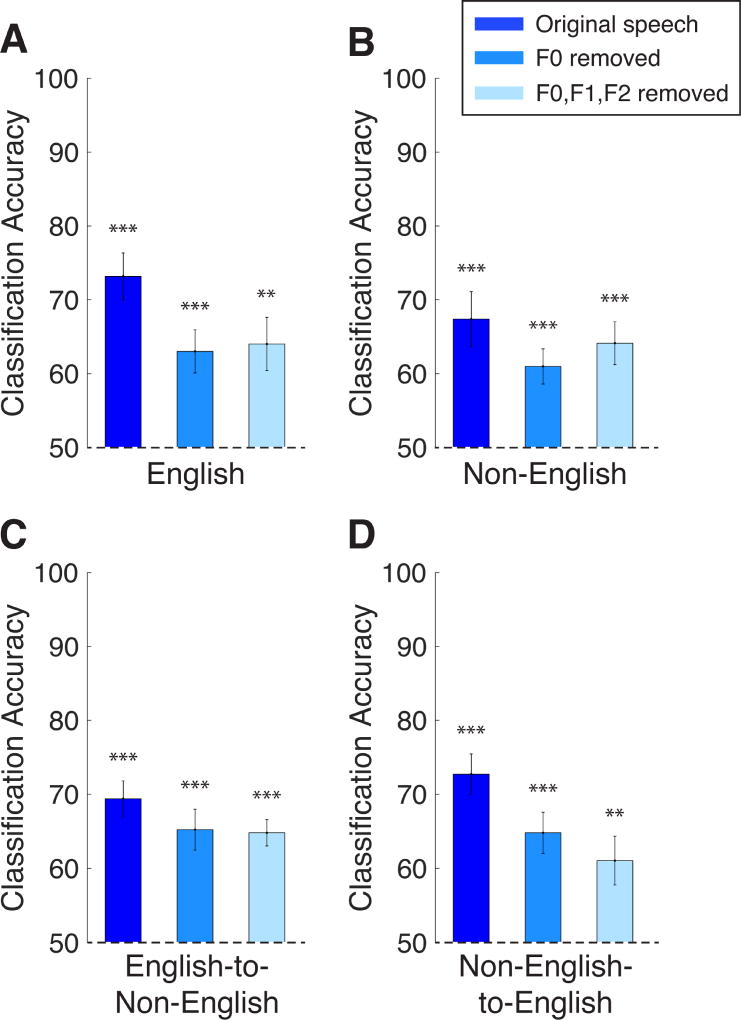

We performed a control analysis to rule out the possibility that our ability to distinguish IDS from ADS was based on differences in pitch that were somehow recoverable in the MFCC feature set. To do this, we regressed out F0 (over time) from each of the 12 MFCC time vectors before computing the single time-averaged vector of MFCC coefficients for each utterance and performing classification based on those time-averaged vectors (see STAR Methods). After removing the dynamic effects of F0, we found that classification between ADS and IDS (using the same algorithm as in previous analyses, SVM-RBF) was still significantly above chance for English data (Figure 3a, second bar; two-tailed, one-sample t-test, t(11) = 4.45, p < .001), non-English data (Figure 3b, second bar; t(11) = 4.61, p < .001), training on English and testing on non-English data (Figure 3c, second bar; t(11) = 5.54, p < .001), and training on non-English and testing on English data (Figure 3d, second bar; t(11) = 5.36, p < .001). Thus, even after the dynamic effects of pitch were removed, the remaining timbre information enabled robust discrimination of ADS and IDS.

Figure 3. Accuracy rates for classifying IDS vs. ADS based on timbre, after controlling for pitch and formants.

The first (darkest blue) bars indicate results for MFCC vectors derived from original speech, the second bars result from speech with F0 regressed out, and the third bars result from speech with F0, F1, and F2 regressed out. Bars corresponding to “original speech” are derived from only the segments of each utterance in which an F0 value was obtained, for direct comparison with the regression results (see STAR Methods). Chance (dashed line) is 50%. N = 12. Classification performance is represented as mean percent correct and ± SEM across cross-validation folds (leave-one-subject-out). **p < .01, ***p < .001.

In a second analysis, we computed the F1 and F2 contours from each utterance and regressed out each of these vectors (in addition to F0) from each MFCC time vector before computing the single time-averaged vector of MFCC coefficients for each utterance. We chose F1 and F2 because they have been identified in previous research as properties of speech that are shifted between IDS and ADS [12]. After removing the dynamic effects of F0 and the first two formants, we found that classification was still significantly above chance for English data (Figure 3a, third bar; two-tailed, one-sample t-test, t(11) = 3.88, p < .01), non-English data (Figure 3b, third bar; t(11) = 4.85, p < .001), training on English and testing on non-English data (Figure 3c, third bar; t(11) = 8.31, p < .0001), and training on non-English and testing on English data (Figure 3d, third bar; t(11) = 3.37, p < .01). Thus, even after removing the dynamic effects of pitch and the first two formants, remaining timbre information present in MFCCs enabled robust discrimination of ADS and IDS.

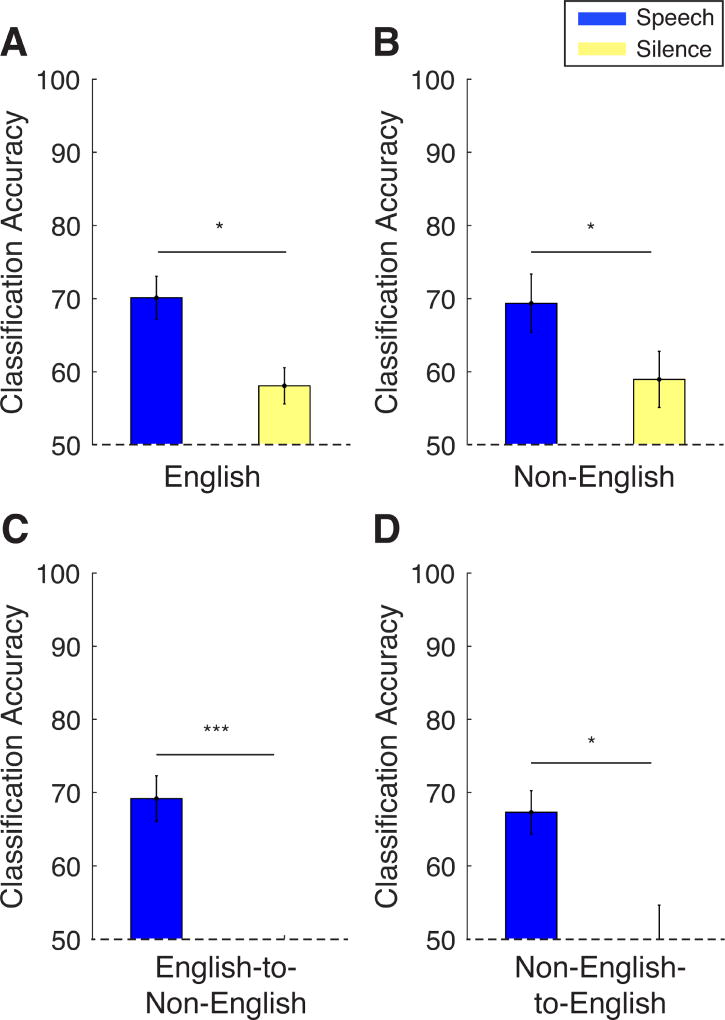

Classification of IDS vs. ADS data cannot be fully explained by differences in background noise

We performed another control analysis to rule out the possibility that our ability to distinguish between IDS and ADS was due to differences between the noise properties of the microphone or room across different conditions. We were able to classify ADS vs. IDS using silence alone for English speakers (Figure 4a, yellow bar; two-tailed, one-sample t-test, t(11) = 3.26, p < .01) and for non-English speakers (Figure 4b, yellow bar; t(11) = 2.33, p < .05). This could result from slight shifts in microphone position when mothers were oriented toward the adult experimenter versus their infant. But importantly, classification of ADS vs. IDS was significantly better for real speech than silences alone both for English (Figure 4a; two-tailed, paired samples t-test, t(11) = 2.92, p < .02) and non-English (Figure 4b; t(11) = 2.31, p < .05) data. Furthermore, both of our cross-language analyses failed completely when we used silence alone [train on English silence, test on non-English silence: t(11) = −1.38, p = .19, see Figure 4c, yellow bar; train on non-English silence, test on English silence: t(11) = −.04, p = .97, see Figure 4d, yellow bar]. And once again, cross-language classification of ADS vs. IDS was significantly better when we trained on real English speech and tested on non-English speech than when we trained and tested on silence alone from those respective groups (Figure 4c; t(11) = 6.46, p < .0001), and was also better when we trained on non-English speech and tested on English speech than when we trained and tested on silence alone (Figure 4d; t(11) = 2.59, p < .05). Collectively, these results demonstrate that differences in background noise across ADS and IDS recordings cannot fully account for our ability to classify those two modes of speech.

Figure 4. Accuracy rates for classifying IDS vs. ADS based on vocal timbre vs. background noise.

All “speech” (blue) bars are duplicated exactly from Figure 2 and appear again here for visual comparison. “Silence” (yellow) bars are derived from cropped segments containing no sounds except for ambient noise from recordings of English speakers and non-English speakers. Chance (dashed line) is 50%. N = 12. Classification performance is represented as mean percent correct and ± SEM across cross-validation folds (leave-one-subject-out). Figure S2 displays accuracy rates for classifying individual speakers based on speech vs. background noise. *p < .05, ***p < .001.
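The speech-versus-silence comparisons above use paired-samples t-tests over the same cross-validation folds. A paired test is simply a one-sample test of the per-fold differences against zero, as this standalone sketch shows; the accuracy lists used to check it are illustrative, not study data.

```python
import math
import statistics


def paired_t(speech_acc, silence_acc):
    """Paired-samples t statistic for two accuracy lists measured on the same folds."""
    if len(speech_acc) != len(silence_acc) or len(speech_acc) < 2:
        raise ValueError("need two equal-length lists with at least two folds")
    diffs = [a - b for a, b in zip(speech_acc, silence_acc)]
    n = len(diffs)
    sem = statistics.stdev(diffs) / math.sqrt(n)
    if sem == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return statistics.mean(diffs) / sem, n - 1
```

Pairing by fold removes between-subject variability that the speech and silence conditions share, which is why a paired test is more sensitive here than comparing the two sets of accuracies independently.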

Discussion

We show for the first time that infant-directed speech is defined by robust shifts in overall timbre, which help differentiate it from adult-directed speech as a distinct communicative mode across multiple languages. This spectral dimension of speakers’ voices differs reliably between the two contexts, i.e., when a mother is speaking to her infant versus engaging in dialogue with an adult. Our findings generalize across a broad set of languages, much as pitch characteristics of IDS manifest similarly in several languages [13]. This research emphasizes and isolates the significant role of timbre (a relatively high-level feature of sounds [14]) in communicative code switching, adding a novel dimension to the well-known adjustments that speakers use in IDS, such as higher pitch, more varied pitch, longer pauses, shorter utterances, and more repetition [3, 4].

Our study complements research showing that adult speakers acoustically exaggerate the formant frequencies of their speech when speaking to infants to maximize differences between vowels [15, 12]. Our results cannot be explained by differences in pitch (F0) or in the first two formants (F1 and F2) between ADS and IDS; even after regressing out these features over time from the MFCCs, significant timbre differences remain that allow for robust classification of these two modes of speech (see Results and Figure 3). This suggests that our timbre effects reflect a shift in the global shape of the spectrum beyond these individual frequency bands. Furthermore, the shifts we report generalize across a broader set of languages than have been tested in previous work. Even after the removal of pitch and formants, our ADS/IDS classification model transfers from English speech to speech sampled from a diverse set of 9 other languages (Figure 3c and 3d, third bars).

Timbre enables us to discriminate, recognize, and enjoy a rich variety of sounds [5, 16], from friends’ voices and animal calls to musical textures. Vocal timbre, a characteristic of the speech spectrum that depends on the resonant properties of the larynx, vocal tract, and articulators, varies widely across people (see Audio S1–S4). Because MFCCs have been shown to provide a strong model of perceptual timbre space as a whole [7], here we focused on this summary statistical measure of the spectral shape of a voice as a proxy for timbre. However, timbre is a complex property that requires the neural integration of multiple spectral and temporal features [17], most notably spectral centroid (the weighted average frequency of a signal’s spectrum, which strongly relates to our MFCC measure and influences perceived brightness [18]), attack time, and temporal modulation (e.g., vibrato) [6]. Future work should explore how these dimensions of timbre might interact in IDS in order to support infants’ learning of relevant units of speech.
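The spectral centroid mentioned above is the amplitude-weighted mean frequency of a spectrum. The sketch below is purely illustrative and was not part of this study’s analysis; note that some definitions weight by power rather than by magnitude.

```python
def spectral_centroid(freqs_hz, magnitudes):
    """Magnitude-weighted mean frequency (Hz) of a single spectrum frame."""
    total = sum(magnitudes)
    if total == 0:
        raise ValueError("spectrum has zero energy")
    return sum(f * m for f, m in zip(freqs_hz, magnitudes)) / total


# Equal energy at 500 Hz and 1500 Hz: the centroid falls midway.
print(spectral_centroid([500.0, 1500.0], [1.0, 1.0]))  # 1000.0
```

Shifting energy toward higher frequencies raises the centroid, which is the sense in which it tracks perceived brightness.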

Compared to most prosodic features of speech (e.g., pitch range, rhythm), which are more “horizontal” and unfold over multiple syllables and even sentences of data, the time-averaged summary statistic we have measured represents a more “vertical” dimension of sound. This spectral fingerprint is detectable and quantifiable with very little data, consistent with listeners’ ability to identify individual speakers even from single, monosyllabic words [19] and to estimate rich information from very brief clips of popular music [20]. These examples are too short to contain pitch variation but do include information about the relationships between harmonics that are linked to perceptual aspects of voice quality. Such cues include amplitude differences between harmonics, harmonics-to-noise ratio, and both jitter and shimmer (which relate to roughness or hoarseness; [21]). Infants’ ability to classify [22] and remember [23] timbre suggests that it could be partly responsible for their early ability to recognize IDS [24] and their own caregivers’ voices [25]. Both identification processes are likely to provide relevant input for further learning about the ambient language and the social environment. Because timbre contributes greatly to the rapid identification of sound sources and the grouping of auditory objects [5], it likely serves as an early and important cue to be associatively bound to other sensory features (e.g., a sibling with her voice, a dog with its bark). The developmental time course of this process invites future investigation.

The timbre shifts we report in IDS are likely part of a broadly adaptive mechanism that quickly draws infants’ attention [26] to the statistical structure of their relevant auditory environment, starting very soon after birth [24], and helps them to segment words [27], learn the meanings of novel words [28], and segment speech into units [29]. IDS may serve as a vehicle for the expression of emotion [30], in part due to its ‘musical’ characteristics [31, 32] and its interaction with a mother’s emotional state [33]. One study [34] reported differences in several timbre-related acoustic features between infant-directed and adult-directed singing in English-speaking mothers, but it is unclear whether these differences are due to performance-related aspects of vocalization (i.e., having someone to interact with or not, which can affect speech behavior through non-verbal feedback; [35]) or code switching between adult and child audiences. Because some timbre features have been shown to influence emotional ratings of speech prosody [36] and affect is thought to mediate the learning benefits of IDS [33], future work might ask how the observed differences between IDS and ADS relate to mothers’ tendencies to smile, gesture, or provide other emotional cues during learning.

Our findings have the potential to stimulate broad research on features of language use in a variety of communicative contexts. Although timbre’s role in music has been widely studied [16], its importance for speech—and in particular, communicative signaling—is still quite poorly understood. However, timbre holds great promise for helping us understand and quantify the frequent register shifts that are important for flexible communication across a wide variety of situations. For instance, performers often manipulate their timbre for emotional or comic effect, and listeners are sensitive to even mild affective changes signaled by these timbral shifts [37]. Future studies could expand existing literature on audience design [38, 39, 40, 41] and code switching [42, 43] by exploring how speakers alter their timbre, or other vocal summary statistics, to flexibly meet the demands of a variety of audiences, such as friends, intimate partners, superiors, students, or political constituents.

Understanding how caregivers naturally alter their vocal timbre to accommodate children’s unique communicative needs could have wide-ranging impact, from improving speech recognition software to education. For instance, our use of summary statistics could enable speech recognition algorithms to quickly and automatically identify infant-directed speech (and in the future, perhaps a diverse range of speech modes) from just a few seconds of data. This would support ongoing efforts to develop software that provides summary measures of natural speech in infants’ and toddlers’ daily lives through automatic vocalization analysis [44, 45]. Moreover, software designed to improve language or communication skills [46] could enhance children’s engagement by adjusting the vocal timbre of virtual speakers or teaching agents to match the variation inherent in the voices of their own caregivers. Finally, this implementation of summary statistics could improve the efficiency of emerging sensory substitution technologies that cue acoustic properties of speech through other modalities [47, 48]. The statistics of timbre in different communicative modes have the potential to enrich our understanding of how infants tune in to important signals and people in their lives and to inform efforts to support children’s language learning.

STAR Methods

Contact for Reagent and Resource Sharing

Further information and requests for resources should be directed to and will be fulfilled by the Lead Contact, Elise Piazza (elise.piazza@gmail.com).

Experimental Model and Subject Details

Twenty-four mother-infant dyads participated in three naturalistic activities (see General Procedure), the order of which was counterbalanced across participants. Infants were 7–12 months old. Informed consent was obtained from all participating mothers, and approval of the study was obtained from the Princeton University Institutional Review Board. Participants were given no information about the experimental hypotheses; that is, they were told that we were broadly interested in “how mothers interact with their infants” and were not aware that we were measuring differences between the acoustic properties of their speech across the conditions. We chose to test only mothers to keep overall pitch range fairly consistent across participants but would expect these results to generalize to fathers as well, which could be explored in future studies.

Twelve of the mother-infant dyads were English speakers. To investigate the possibility that timbral differences between ADS and IDS generalize across languages, we recorded a second group of 12 mothers who speak to their infants using a language other than English at least 50% of the time. We included a wide variety of languages: Spanish (N = 1), Russian (N = 1), Polish (N = 1), German (N = 2), Hungarian (N = 1), French (N = 1), Hebrew (N = 2), Mandarin (N = 2), Cantonese (N = 1). All families were recruited from the central New Jersey area.

Method Details

Equipment

Speech data were recorded continuously using an Apple iPhone and a Miracle Sound Deluxe Lavalier lapel microphone attached to each mother’s shirt. Due to microphone failure, three participants were recorded with a back-up Blue Snowball USB microphone; recording quality did not differ between microphones.

General procedure

In the adult-directed speech (ADS) condition, mothers were interviewed by an adult experimenter about the child’s typical daily routine, feeding and sleeping habits, personality, and amount of time spent with various adults and children in the child’s life. In the two infant-directed speech (IDS) conditions, mothers were instructed to interact freely with their infants, as they naturally would at home, by playing with a constrained set of animal toys and reading from a set of age-appropriate board books, respectively. Each condition lasted approximately 5 minutes. See Table S1 for example utterances from both conditions.

All procedures were identical for the English-speaking and non-English-speaking mothers, except that non-English-speaking mothers were asked to speak only in their non-English language during all experimental conditions (adult interview, reading, and play). In the interview condition, the experimenter asked the questions in English, but the mother was asked to respond in her non-English language, and she was told that a native speaker of her non-English language would later take notes from the recordings. We chose this method (instead of asking the participants to respond to a series of written questions in the appropriate language) to approximate a naturalistic interaction (via gestures and eye contact) as closely as possible.

Quantification and Statistical Analysis

Data pre-processing

Using Adobe Audition, we extracted 20 two-second phrases from each condition (ADS, IDS) for each mother. Phrases were chosen to represent a wide range of semantic and syntactic content; see Table S1 for example phrases. Data were manually inspected to ensure that they did not include any non-mother vocalizations or other extraneous sounds (e.g., blocks being thrown). After excluding these irrelevant sounds, there were typically only 20–30 utterances to choose from, and we simply chose the first 20 in each condition for each mother.

Timbre analysis

To quantify each mother’s timbre signature, we used Mel-frequency cepstral coefficients (MFCCs), a measure of timbre used for automatic recognition of individual speakers [10, 11], phonemes [49] and words [9], and musical genres [8, 50]. The MFCC is a feature set that succinctly describes the shape of the short-term speech spectrum using a small vector of weights. The weights are the coefficients of the discrete cosine transform of the log Mel spectrum, which is designed to approximate the human auditory system’s frequency response. In our analysis, the MFCC serves as a time-averaged summary statistic that describes the global signature of a person’s voice over time. For each phrase, we first computed the MFCCs in 25-ms overlapping windows spaced 10 ms apart, yielding a coefficient × time matrix. We then averaged this matrix over the entire duration of the phrase to obtain a single vector of 12 MFCC coefficients (Figure 1). Figure S1 shows the average ADS and IDS vectors across all 20 phrases for each English-speaking participant. The MFCC features were extracted according to [51] and [9], as implemented in the HTK MFCC analysis package in MATLAB R2016a, using the following default settings: 25-ms overlapping windows, 10-ms shift between windows, pre-emphasis coefficient = 0.97, frequency range = 300 to 3700 Hz, 20 filterbank channels, 13 cepstral coefficients, cepstral sine lifter parameter = 22, Hamming window.

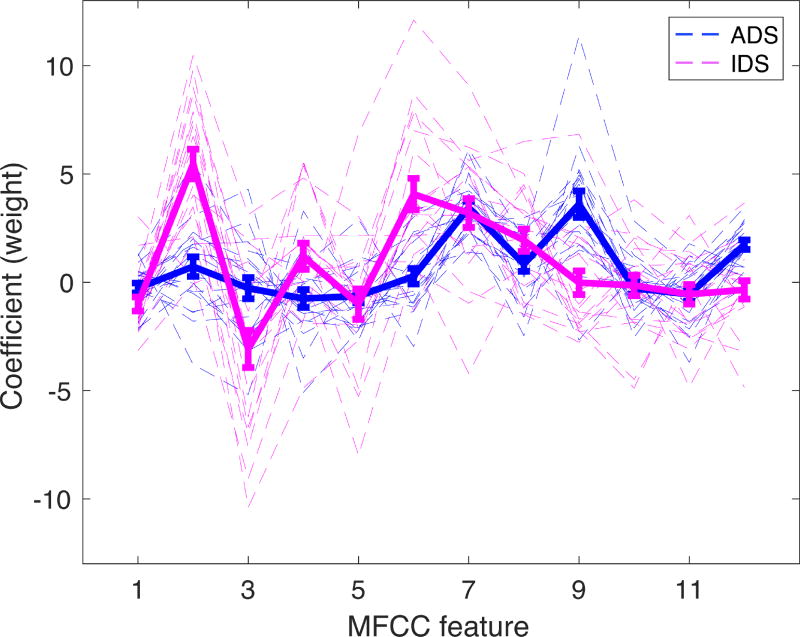

Figure 1. MFCC feature vectors from all utterances for one representative participant.

Each vector (dashed lines) represents the time-averaged set of Mel-frequency cepstral coefficients for a single utterance of either adult-directed speech (ADS, shown in blue) or infant-directed speech (IDS, shown in pink). Each bold line represents the average MFCC vector across all 20 utterances for a given condition. Error bars on the averaged vectors represent ± SEM across 20 utterances. Figure S1 depicts average MFCC vectors for each of the 12 English-speaking participants; the vectors displayed in Figure 1 come from participant s12.
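To make the time-averaged summary statistic concrete, here is a minimal pure-Python sketch of the per-frame cepstral computation and the time average, using the settings listed above (20 Mel filters spanning 300 to 3700 Hz, DCT-II, sine lifter with L = 22). It is not the HTK MFCC MATLAB package: it starts from per-frame power spectra, so framing, pre-emphasis, Hamming windowing, and the FFT are assumed to have been applied already, and keeping coefficients c1 to c12 (dropping c0) is an assumption about which 12 of the 13 coefficients were retained. All function names are hypothetical.

```python
import math


def hz_to_mel(f):
    """HTK-style Mel scale."""
    return 1127.0 * math.log(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (math.exp(m / 1127.0) - 1.0)


def mel_filterbank(bin_freqs, n_filters=20, f_lo=300.0, f_hi=3700.0):
    """Triangular filters (n_filters rows x len(bin_freqs) columns), centres equally spaced in Mel."""
    lo, hi = hz_to_mel(f_lo), hz_to_mel(f_hi)
    edges = [mel_to_hz(lo + i * (hi - lo) / (n_filters + 1)) for i in range(n_filters + 2)]
    bank = []
    for j in range(n_filters):
        left, centre, right = edges[j], edges[j + 1], edges[j + 2]
        row = []
        for f in bin_freqs:
            if left < f <= centre:
                row.append((f - left) / (centre - left))  # rising slope
            elif centre < f < right:
                row.append((right - f) / (right - centre))  # falling slope
            else:
                row.append(0.0)
        bank.append(row)
    return bank


def frame_cepstrum(power_spectrum, bank, n_ceps=12, lifter=22):
    """Liftered cepstral coefficients c1..c_n_ceps for one frame (c0 is dropped)."""
    energies = [sum(w * p for w, p in zip(row, power_spectrum)) for row in bank]
    log_e = [math.log(max(e, 1e-10)) for e in energies]  # floor avoids log(0)
    m = len(log_e)
    ceps = []
    for n in range(1, n_ceps + 1):
        # DCT-II of the log filterbank energies
        c = math.sqrt(2.0 / m) * sum(
            log_e[k] * math.cos(math.pi * n * (k + 0.5) / m) for k in range(m)
        )
        # Sine lifter re-weights the higher-order coefficients
        ceps.append(c * (1.0 + lifter / 2.0 * math.sin(math.pi * n / lifter)))
    return ceps


def time_averaged_mfcc(power_frames, bin_freqs):
    """Collapse a phrase (list of per-frame power spectra) into one 12-value vector."""
    bank = mel_filterbank(bin_freqs)
    per_frame = [frame_cepstrum(p, bank) for p in power_frames]
    return [sum(col) / len(per_frame) for col in zip(*per_frame)]
```

Because the average is taken over all frames, each phrase collapses to one 12-value vector regardless of its exact duration, which is what allows every utterance to enter the classifier as a single fixed-length feature vector.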

Classification

Prior work suggests that timbre as a feature of natural speech is consistent within individuals and distinct between individuals [19], i.e., that timbral signatures possess enough information to distinguish individual mothers from one another. As an initial validation of our method, we first confirmed that support-vector machine (SVM) classification is sensitive enough to replicate previous work distinguishing individual mothers [10, 11] by performing the classification on these MFCC vectors across subjects (see Figure S2). Then, to test our primary question of interest, we performed a similar SVM classification on these vectors to distinguish IDS from ADS.

To this end, we used a support vector machine classifier with radial basis function kernel (SVM-RBF) (LibSVM implementation, [52]) to predict whether utterances belong to the IDS or ADS communicative modes based on MFCC features extracted from natural speech. We employed a standard leave-one-subject-out (LOSO) 12-fold cross-validation, where for each cross-validation fold we trained our classifier to distinguish between IDS and ADS using the data from 11 subjects and then tested how well it generalized to the left-out (twelfth) subject. We note that this is a more stringent test for our classifier than one in which every subject contributes training data, because in our case the classifier is oblivious to idiosyncrasies of the left-out subject’s speech when building a model to discriminate between IDS and ADS.
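The leave-one-subject-out scheme can be sketched in a few lines of Python. To keep the example self-contained, a nearest-centroid classifier stands in for the SVM-RBF used in the study; it is a swapped-in, hypothetical placeholder, and any fit/predict pair could be passed instead.

```python
def loso_folds(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for s in sorted(set(subject_ids)):
        train = [i for i, sid in enumerate(subject_ids) if sid != s]
        test = [i for i, sid in enumerate(subject_ids) if sid == s]
        yield s, train, test


def nearest_centroid_fit(X, y):
    """Hypothetical stand-in for SVM-RBF: store the mean feature vector per label."""
    model = {}
    for label in set(y):
        rows = [x for x, lab in zip(X, y) if lab == label]
        model[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return model


def nearest_centroid_predict(model, x):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(model, key=lambda lab: sum((a - b) ** 2 for a, b in zip(x, model[lab])))


def loso_accuracy(X, y, subject_ids, fit, predict):
    """Percent correct on each left-out subject; returns one value per fold."""
    scores = []
    for _, train, test in loso_folds(subject_ids):
        model = fit([X[i] for i in train], [y[i] for i in train])
        correct = sum(predict(model, X[i]) == y[i] for i in test)
        scores.append(100.0 * correct / len(test))
    return scores
```

Because the classifier never sees the left-out mother’s utterances during training, each fold’s accuracy reflects how well the IDS/ADS distinction learned from the other mothers transfers to a new voice.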

Additionally, within each LOSO cross-validation fold, we also performed a log-space grid search in the (2^−14, 2^14) range on a randomly selected held-out subset of one quarter of the training data (25% × 91.7% = 22.9% of the original data) to select the optimal classifier parameters (C, Gamma). After selecting the optimal cross-validated parameters for that particular fold, we re-trained our classifier on the entire fold’s training set (11 subjects) and tested how well it generalized to the left-out (twelfth) subject from the original data. We repeated this procedure 12 times, iterating over each individual subject for testing (e.g., the first subject is held out for fold #1 testing, the second subject is held out for fold #2 testing, etc.).
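The inner parameter search within each fold can be sketched as follows. The exponent step of the log2 grid is not stated in the text, so `step=2` is an assumption, and `score_fn` is a hypothetical hook that would train an SVM-RBF (for example, with LIBSVM) on the fitting indices and return accuracy on the held-out quarter. After selection, the model would be retrained on the whole training fold, as described above.

```python
import random


def log2_grid(lo_exp=-14, hi_exp=14, step=2):
    """Powers of two from 2**lo_exp to 2**hi_exp (the exponent step is assumed)."""
    return [2.0 ** e for e in range(lo_exp, hi_exp + 1, step)]


def inner_split(train_idx, holdout_frac=0.25, seed=0):
    """Randomly hold out a fraction of the training indices for parameter selection."""
    shuffled = list(train_idx)
    random.Random(seed).shuffle(shuffled)
    n_val = round(holdout_frac * len(shuffled))
    return shuffled[n_val:], shuffled[:n_val]  # (fit_idx, val_idx)


def select_params(train_idx, score_fn, seed=0):
    """Return the (C, gamma) pair with the best held-out score.

    score_fn(fit_idx, val_idx, C, gamma) -> accuracy is a hypothetical hook that
    would fit an SVM-RBF on fit_idx and score it on val_idx.
    """
    fit_idx, val_idx = inner_split(train_idx, seed=seed)
    grid = [(c, g) for c in log2_grid() for g in log2_grid()]
    return max(grid, key=lambda cg: score_fn(fit_idx, val_idx, cg[0], cg[1]))


# Held-out share of the original data when 11 of 12 subjects are in training.
print(f"{0.25 * 11 / 12:.1%}")  # 22.9%
```

Selecting parameters on data split off from the training fold keeps the left-out subject untouched until the final test, so the reported accuracy is not inflated by tuning.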

We performed the same analysis described above (classify IDS vs. ADS) on a second group of mothers who spoke a language other than English during the experimental session. In addition, to test the generalizability of the timbre transformation between ADS and IDS across languages, we performed cross-language classification. Specifically, we first trained the classifier to distinguish between IDS and ADS using data from the English participants only and tested it on data from the non-English cohort. Finally, we performed the reverse analysis, where the classifier was trained on non-English data and was used to predict IDS vs. ADS in the English participant cohort. SVM classification results (mean percent correct and ± SEM across cross-validation folds) are shown in Figure 2.

Pitch and formant control analyses

We performed a control analysis to rule out the possibility that our ability to distinguish IDS from ADS was based on differences in pitch that were somehow recoverable in the MFCC feature set. For every utterance in our dataset, we used Praat [53] to extract a vector corresponding to the entire F0 contour. For time points in which no F0 value was estimated (i.e., non-pitched speech sounds), we removed these samples from the F0 vector and also removed the corresponding time bins from the MFCC matrix (coefficients × time; see Timbre Analysis). This temporal alignment of the MFCC matrices and F0 vectors allowed us to regress out the latter from the former. Classification performance for these time-restricted MFCC matrices, before regressing anything out, is shown in Figure 3 (first bars). Next, we regressed out F0 (over time) from each of the 12 MFCC time vectors before computing the single time-averaged vector of MFCC coefficients for each utterance and performing SVM classification between ADS and IDS based on these residual time-averaged vectors (Figure 3, second bars).

We performed a very similar analysis to additionally control for the impact of the first two formants (F1 and F2) on our classification of IDS and ADS. Specifically, for each utterance, we used Praat [53] to extract two vectors corresponding to F1 and F2; these vectors represented the same time points as the F0 vector described above to ensure temporal alignment for the purposes of regression. We then regressed out F0, F1, and F2 (over time) from each of the 12 MFCC time vectors before computing the single time-averaged vector of MFCC coefficients and performing classification between ADS and IDS based on these residual time-averaged vectors (Figure 3, third bars).
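The regression control described in the two paragraphs above can be sketched as follows: unvoiced frames are dropped so that the MFCC time series and the F0 (and optionally F1 and F2) contours stay aligned, each coefficient’s time series is regressed on the contours, and the residuals are averaged over time. The text does not say how the intercept was handled. With an intercept, ordinary least-squares residuals average to exactly zero, which would make a time-averaged residual vector uninformative, so this sketch regresses through the origin; treat that choice, and all helper names, as assumptions.

```python
def solve(a, b):
    """Solve the small linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def residualize(mfcc, regressors):
    """Remove the no-intercept least-squares fit of the regressors from each MFCC series.

    mfcc: list of coefficient time series (e.g., 12 lists).
    regressors: equally long contours, e.g., [f0] or [f0, f1, f2].
    """
    X = list(zip(*regressors))  # frames x regressors
    k = len(regressors)
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    out = []
    for series in mfcc:
        xty = [sum(row[i] * v for row, v in zip(X, series)) for i in range(k)]
        beta = solve(xtx, xty)
        out.append([v - sum(b * x for b, x in zip(beta, row)) for row, v in zip(X, series)])
    return out


def voiced_only(mfcc, f0, formants=()):
    """Keep only frames with a defined F0 (not None, NaN, or <= 0) in every series."""
    keep = [i for i, v in enumerate(f0) if v is not None and v > 0]

    def pick(series):
        return [series[i] for i in keep]

    return [pick(s) for s in mfcc], [pick(f0)] + [pick(f) for f in formants]


def residual_summary(mfcc, f0, formants=()):
    """Time-averaged MFCC vector after regressing out F0 (and optionally F1, F2)."""
    coeffs, regs = voiced_only(mfcc, f0, formants)
    resid = residualize(coeffs, regs)
    return [sum(s) / len(s) for s in resid]
```

Passing `formants=(f1, f2)` extends the same regression from the F0-only control to the F0 + F1 + F2 control without any other change.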

Background noise control analysis

We performed a control analysis to rule out the possibility that our ability to distinguish between IDS and ADS was due to differences between the noise properties of the microphone or room across different conditions (Figure 4). Here, instead of utterances, we extracted 20 segments of silence (which included only ambient, static background noise and no vocalizations, breathing, or other dynamic sounds) from the ADS and IDS recordings of each participant, of comparable length to the original speech utterances (1–2 seconds). We then performed identical analyses to those described above for speech data (i.e., analyzing the MFCC of each segment of silence, performing cross-validated SVM classification to discriminate IDS from ADS recordings).

Classification of IDS vs. ADS cannot be explained by differences between read and spontaneous speech

We conducted a control analysis to ensure that known prosodic differences between read and spontaneous speech [54] could not account for our ability to distinguish IDS from ADS data. Specifically, for each of the 12 English-speaking mothers, we replaced all IDS utterances that corresponded to the “book” (reading) condition with new utterances from the same mother’s recording that corresponded to the “play” condition only. Thus, all 20 utterances from both IDS and ADS now represented only spontaneous speech. Resulting classification remained significantly above chance (two-tailed, one-sample t-test, t(11) = 9.81, p < .0001), indicating that potential differences between spontaneous and read speech could not account for our results.

Classification of individual speakers

To confirm that our method is sufficiently sensitive to distinguish between different participants, as in previous research [10, 11], we used a classification technique similar to the one used to compare IDS to ADS. More specifically, we employed a standard “leave-two-utterances-out” 10-fold cross-validation procedure for testing, where for each fold we left out 10% of the utterances from each condition and each subject (i.e., two utterances each from IDS and ADS per subject) and trained the classifier on the remaining 90% of the data, before testing on the left-out 10%. Within each fold, we also performed a log-space grid search in the (2^−14, 2^14) range on a held-out subset of one quarter of the training data (25% × 90% = 22.5% of the original data) to select the optimal classifier parameters (C, Gamma). After selecting the optimal cross-validated parameters for that particular fold, we re-trained our classifier on the entire training set (90% of original data) and tested how well it generalized to the left-out 10% of the original data. We repeated this procedure 10 times, iterating over non-overlapping subsets of held-out data for testing (e.g., the first two utterances from IDS and ADS are held out for fold #1 testing, the next two utterances are held out for fold #2 testing, etc.).
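The fold assignment for speaker classification can be sketched as below. Records are hypothetical tuples of (subject, condition, utterance index, features, label); with 20 utterances per condition and 10 folds, each test block holds two consecutive utterances from every subject and condition, i.e., 10% of the data.

```python
def utterance_folds(n_utts=20, n_folds=10):
    """Consecutive, non-overlapping blocks of utterance indices, one block per fold."""
    if n_utts % n_folds:
        raise ValueError("n_utts must be divisible by n_folds")
    size = n_utts // n_folds
    return [list(range(k * size, (k + 1) * size)) for k in range(n_folds)]


def fold_split(records, fold_utts):
    """Split hypothetical (subject, condition, utt_index, features, label) records.

    The test set holds the fold's utterance indices from every subject and
    condition; everything else is training data.
    """
    held = set(fold_utts)
    test = [r for r in records if r[2] in held]
    train = [r for r in records if r[2] not in held]
    return train, test
```

Unlike the leave-one-subject-out scheme, every subject appears in both training and test sets here, which is necessary when the labels to be predicted are the subjects themselves.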

Using this procedure, we were able to reliably distinguish between individual mothers significantly above chance based on MFCCs from English speech data (Figure S2a, blue bar; two-tailed, one-sample t-test, t(11) = 23.26, p < .0001) and from non-English speech data (Figure S2b, blue bar; t(11) = 22.22, p < .0001).
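For speaker identification, each mother's accuracy can be taken as the fraction of her held-out utterances that the classifier attributes to her, and chance depends on how many speakers are being discriminated. The sketch below assumes the classifier chooses among the 12 mothers of one language group, so chance would be 1/12 (about 8.3%); the speaker labels are hypothetical.

```python
from collections import defaultdict


def per_speaker_accuracy(true_labels, predicted_labels):
    """Fraction of each speaker's held-out utterances assigned to that speaker."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for true, pred in zip(true_labels, predicted_labels):
        totals[true] += 1
        hits[true] += int(true == pred)
    return {spk: hits[spk] / totals[spk] for spk in totals}


def chance_level(n_speakers):
    """Chance accuracy for an n-way classification with balanced classes."""
    return 1.0 / n_speakers


# Hypothetical labels for two speakers, two held-out utterances each.
true = ["m1", "m1", "m2", "m2"]
pred = ["m1", "m2", "m2", "m2"]
print(per_speaker_accuracy(true, pred))  # {'m1': 0.5, 'm2': 1.0}
print(chance_level(12))
```

The resulting per-mother accuracies would then be compared with chance using a one-sample t-test, as in the statistics reported above.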

We also performed another control analysis based on background noise (similar to the one above) to rule out the possibility that our ability to distinguish between individuals was due to differences in the noise properties of the microphone or room across days. We could classify individual mothers above chance based on silences in the recordings alone, in both the English-speaking group (Figure S2a, yellow bar; t(11) = 19.42, p < .0001) and the non-English-speaking group (Figure S2b, yellow bar; t(11) = 26.78, p < .0001). This is not entirely surprising: although we maintained a consistent mouth-to-microphone distance (approximately 12 inches) across mothers, the background noise conditions of the room may have varied slightly across days in ways beyond our control (e.g., due to differences in the settings of the heating unit). Importantly, however, discrimination of individual speakers was significantly better for real speech than for silence segments, for both English (Figure S2a; two-tailed, paired-samples t-test, t(11) = 4.52, p < .001) and non-English data (Figure S2b; t(11) = 3.45, p < .01).
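The comparison of real speech with silence segments pairs the two accuracies obtained for each mother. A minimal sketch of the paired-samples t statistic follows: the mean of the per-mother differences divided by its standard error, with n − 1 degrees of freedom. The accuracies are hypothetical, and the p-value is left to a statistics library such as `scipy.stats.ttest_rel`.

```python
import math
import statistics


def paired_t(a, b):
    """Paired-samples t statistic and df for matched per-subject scores a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return statistics.mean(diffs) / se, n - 1


# Hypothetical per-mother accuracies (NOT the study's data).
speech = [0.62, 0.58, 0.71, 0.66, 0.60, 0.69]
silence = [0.40, 0.45, 0.52, 0.38, 0.47, 0.50]
t_ps, df_ps = paired_t(speech, silence)
print(f"t({df_ps}) = {t_ps:.2f}")
```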

Data and Software Availability

Interested readers are encouraged to contact the Lead Contact regarding the availability of data. The MATLAB-based MFCC routines can be found at: http://www.mathworks.com/matlabcentral/fileexchange/32849-htk-mfcc-matlab

Supplementary Material

Recordings of four different women (not participants in the study) pronouncing the syllable “ba”, each with the same pitch, duration, and RMS amplitude. Differences between speakers reflect variation in timbre.
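Of the three properties held constant across these example recordings, RMS amplitude is the simplest to equate: a single gain factor scales each waveform to a common target RMS. A minimal sketch, assuming each recording is a list of floating-point samples; matching pitch and duration is not shown, and the procedure actually used to prepare these recordings is not described here.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def match_rms(samples, target_rms):
    """Scale `samples` by one gain factor so that their RMS equals `target_rms`."""
    current = rms(samples)
    if current == 0:
        raise ValueError("cannot rescale a silent signal")
    gain = target_rms / current
    return [x * gain for x in samples]


# Hypothetical waveforms: rescale the second to the first one's RMS.
a = [0.2, -0.1, 0.3, -0.2]
b = [0.8, -0.6, 0.9, -0.7]
b_matched = match_rms(b, rms(a))
```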

Highlights

Infant-directed speech is an important mode of communication for early learning

Mothers shift the statistics of their vocal timbre when speaking to infants

This systematic shift generalizes robustly across a variety of languages

This research has implications for infant learning and speech recognition technology

Acknowledgments

This research was supported by a C.V. Starr Postdoctoral Fellowship from the Princeton Neuroscience Institute awarded to Elise A. Piazza, and a grant from the National Institute of Child Health and Human Development to Casey Lew-Williams (HD079779). The authors thank Julia Schorn and Eva Fourakis for assistance with data collection.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Author Contributions

E.A.P. developed the study concept. E.A.P., M.C.I., and C.L.W. contributed to the study design. E.A.P. collected data, and E.A.P. and M.C.I. performed data analysis. E.A.P. drafted the manuscript, and M.C.I. and C.L.W. provided critical revisions.

References

1. Ohala JJ. Cross-language use of pitch: An ethological view. Phonetica. 1983;40:1–18. doi: 10.1159/000261678.

2. Auer P, Couper-Kuhlen E, Müller F. Language in time: The rhythm and tempo of spoken interaction. Oxford University Press on Demand; 1999.

3. Fernald A. Human maternal vocalizations to infants as biologically relevant signals. In: Barkow J, Cosmides L, Tooby J, editors. The Adapted Mind: Evolutionary Psychology and the Generation of Culture. Oxford: Oxford University Press; 1992. pp. 391–428.

4. Fernald A, Simon T. Expanded intonation contours in mothers’ speech to newborns. Dev. Psychol. 1984;20:104–113.

5. Bregman AS. Auditory Scene Analysis: The Perceptual Organization of Sound. Cambridge: MIT Press; 1994.

6. Elliott TM, Hamilton LS, Theunissen FE. Acoustic structure of the five perceptual dimensions of timbre in orchestral instrument tones. J. Acoust. Soc. Am. 2013;133(1):389–404. doi: 10.1121/1.4770244.

7. Terasawa H, Slaney M, Berger J. Perceptual distance in timbre space. Georgia Institute of Technology; 2005.

8. McKinney MF, Breebaart J. Features for audio and music classification. Proc. ISMIR. 2003;3:151–158.

9. Davis S, Mermelstein P. Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE T. Acoust. Speech Signal Proc. 1980;28:357–366.

10. Reynolds DA. Experimental evaluation of features for robust speaker identification. IEEE Transactions on Speech and Audio Proc. 1994;2(4):639–643.

11. Tiwari V. MFCC and its applications in speaker recognition. Int. J. Emerg. Technol. 2010;1:19–22.

12. Kuhl PK, Andruski JE, Chistovich IA, Chistovich LA, Kozhevnikova EV, Ryskina VL, Stolyarova EI, Sundberg U, Lacerda F. Cross-language analysis of phonetic units in language addressed to infants. Science. 1997;277(5326):684–686. doi: 10.1126/science.277.5326.684.

13. Grieser DL, Kuhl PK. Maternal speech to infants in a tonal language: Support for universal prosodic features in motherese. Dev. Psychol. 1988;24:14–20.

14. Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cereb. Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

15. Eaves BS, Feldman NH, Griffiths TL, Shafto P. Infant-directed speech is consistent with teaching. Psychol. Rev. 2016;123:758–771. doi: 10.1037/rev0000031.

16. McAdams S, Giordano BL. The perception of musical timbre. In: Hallam S, Cross I, Thaut M, editors. Oxford Handbook of Music Psychology. New York: Oxford University Press; 2009. pp. 72–80.

17. Patil K, Pressnitzer D, Shamma S, Elhilali M. Music in our ears: the biological bases of musical timbre perception. PLoS Comput. Biol. 2012;8:e1002759. doi: 10.1371/journal.pcbi.1002759.

18. Wessel D. Timbre space as a musical control structure. Comput. Music J. 1979;3:45–52.

19. Pollack I, Pickett JM, Sumby WH. On the identification of speakers by voice. J. Acoust. Soc. Am. 1954;26:403–406.

20. Krumhansl C. Plink: "Thin slices" of music. Music Percept. 2010;27:337–354.

21. Kreiman J, Gerratt BR, Precoda K, Berke GS. Individual differences in voice quality perception. J. Speech Hear. Res. 1992;35:512–520. doi: 10.1044/jshr.3503.512.

22. Trehub S, Endman M, Thorpe L. Infants’ perception of timbre: Classification of complex tones by spectral structure. J. Exp. Child Psychol. 1990;49:300–313. doi: 10.1016/0022-0965(90)90060-l.

23. Trainor LJ, Wu L, Tsang CD. Long-term memory for music: Infants remember tempo and timbre. Dev. Sci. 2004;7:289–296. doi: 10.1111/j.1467-7687.2004.00348.x.

24. Cooper R, Aslin R. Preference for infant-directed speech in the first month after birth. Child Dev. 1990;61:1584–1595.

25. DeCasper AJ, Fifer WP. Of human bonding: Newborns prefer their mothers’ voices. Science. 1980;208:1174–1176. doi: 10.1126/science.7375928.

26. Kaplan PS, Goldstein MH, Huckeby ER, Owren MJ, Cooper RP. Dishabituation of visual attention by infant- versus adult-directed speech: Effects of frequency modulation and spectral composition. Infant Behav. Dev. 1995;18(2):209–223.

27. Thiessen ED, Hill EA, Saffran JR. Infant-directed speech facilitates word segmentation. Infancy. 2005;7:53–71. doi: 10.1207/s15327078in0701_5.

28. Graf Estes K, Hurley K. Infant-directed prosody helps infants map sounds to meanings. Infancy. 2013;18:797–824. doi: 10.1111/infa.12006.

29. Nelson DGK, Hirsh-Pasek K, Jusczyk P, Cassidy K. How the prosodic cues in motherese might assist language learning. J. Child Lang. 1989;16:55–68. doi: 10.1017/s030500090001343x.

30. Trainor L, Austin C, Desjardins R. Is infant-directed speech prosody a result of the vocal expression of emotion? Psychol. Sci. 2000;11:188–195. doi: 10.1111/1467-9280.00240.

31. Bergeson TR, Trehub SE. Absolute pitch and tempo in mothers’ songs to infants. Psychol. Sci. 2002;13(1):72–75. doi: 10.1111/1467-9280.00413.

32. Bergeson TR, Trehub SE. Signature tunes in mothers’ speech to infants. Infant Behav. Dev. 2007;30(4):648–654. doi: 10.1016/j.infbeh.2007.03.003.

33. Kaplan P, Bachorowski J, Zarlengo-Strouse P. Child-directed speech produced by mothers with symptoms of depression fails to promote associative learning in 4-month-old infants. Child Dev. 1999;70:560–570. doi: 10.1111/1467-8624.00041.

34. Trainor LJ, Clark ED, Huntley A, Adams BA. The acoustic basis of preferences for infant-directed singing. Infant Behav. Dev. 1997;20(3):383–396.

35. Blubaugh JA. Effects of positive and negative audience feedback on selected variables of speech behavior. Commun. Monog. 1969;36:131–137.

36. Coutinho E, Dibben N. Psychoacoustic cues to emotion in speech prosody and music. Cognition Emotion. 2013;27:658–684. doi: 10.1080/02699931.2012.732559.

37. Gobl C, Ní Chasaide A. The role of voice quality in communicating emotion, mood, and attitude. Speech Commun. 2003;40:189–212.

38. Bell A. Language style as audience design. Lang. Soc. 1984;13:145–204.

39. Lam TQ, Watson DG. Repetition is easy: Why repeated referents have reduced prominence. Mem. Cognition. 2010;38:1137–1146. doi: 10.3758/MC.38.8.1137.

40. Rosa EC, Finch KH, Bergeson M, Arnold JE. The effects of addressee attention on prosodic prominence. Lang. Cogn. Neurosci. 2015;30:48–56.

41. Jürgens R, Grass A, Drolet M, Fischer J. Effect of acting experience on emotion expression and recognition in voice: Non-actors provide better stimuli than expected. J. Nonverbal Behav. 2015;39(3):195–214. doi: 10.1007/s10919-015-0209-5.

42. Koch L, Gross A, Kolts R. Attitudes toward Black English and code switching. J. Black Psychol. 2001;27:29–42.

43. DeBose CE. Codeswitching: Black English and standard English in the African-American linguistic repertoire. J. Multiling. Multicul. 1992;13:157–167.

44. Xu D, Yapanel U, Gray S. Reliability of the LENA language environment analysis system in young children’s natural home environment. 2009. Retrieved from http://www.lenafoundation.org/TechReport.aspx/Reliability/LTR-05-2.

45. Ford M, Baer CT, Xu D, Yapanel U, Gray S. The LENA language environment analysis system: Audio specifications of the DLP-0121. 2009. Retrieved from http://www.lenafoundation.org/TechReport.aspx/Audio_Specifications/LTR-03-2.

46. Bosseler A, Massaro DW. Development and evaluation of a computer-animated tutor for vocabulary and language learning in children with autism. J. Autism Dev. Disord. 2003;33:653–672. doi: 10.1023/b:jadd.0000006002.82367.4f.

47. Saunders FA. Information transmission across the skin: High-resolution tactile sensory aids for the deaf and the blind. Int. J. Neurosci. 1983;19:21–28. doi: 10.3109/00207458309148642.

48. Massaro DW, Carreira-Perpinan MA, Merrill DJ, Sterling C, Bigler S, Piazza E, Perlman M. iGlasses: an automatic wearable speech supplement in face-to-face communication and classroom situations. Proc. ICMI. 2008;10:197–198.

49. Koreman J, Andreeva B, Strik H. Acoustic parameters versus phonetic features in ASR. Proc. Int. Congr. Phonet. Sci. 1999;99:719–722.

50. Van den Oord A, Dieleman S, Schrauwen B. Deep content-based music recommendation. Adv. Neur. In. 2013:2643–2651.

51. Young S, Evermann G, Gales M, Hain T, Kershaw D, Liu X, Moore G, Odell J, Ollason D, Povey D, et al. The HTK Book (HTK Version 3.4.1). Engineering Department, Cambridge University; 2006.

52. Chang CC, Lin CJ. LIBSVM: A library for support vector machines. ACM T. Intell. Syst. Technol. 2011;2:27.

53. Boersma P, Weenink D. Praat: doing phonetics by computer (Version 6.0.14) [Computer program]. 2009. Retrieved January 1, 2016.

54. Howell P, Kadi-Hanifi K. Comparison of prosodic properties between read and spontaneous speech material. Speech Commun. 1991;10:163–169.
