Abstract

While many popular casual games use three-star systems, which give players up to three stars based on their performance in a level, this technique has seen limited application in human computation games (HCGs). This raises the question of what impact, if any, a three-star system will have on the behavior of players in HCGs. In this work, we examined the impact of a three-star system implemented in the protein folding HCG Foldit. We compared the basic game’s introductory levels with two versions using a three-star system, where players were rewarded with more stars for completing levels in fewer moves. In one version, players could continue playing levels for as many moves as they liked, and in the other, players were forced to reset the level if they used more moves than required to achieve at least one star on the level. We observed that the three-star system encouraged players to use fewer moves, take more time per move, and replay completed levels more often. We did not observe an impact on retention. This indicates that three-star systems may be useful for reinforcing concepts introduced by HCG levels, or as a flexible means to encourage desired behaviors.

Keywords: games, human computation, design, analytics

INTRODUCTION

Human computation games (HCGs) hold promise for solving a variety of challenging problems relevant to many fields of science. These range from designing RNA folds [13] to improving cropland coverage maps [24] to building 3D models of neurons [9] to software verification [16].

However, engaging and retaining participants in citizen science projects such as HCGs is difficult, with many participants leaving projects soon after starting [21, 15]. A critical part of any game is the onboarding process, often accomplished through a series of introductory levels that teach players the fundamental concepts of the game. As the first thing a user interacts with in a game, these levels have a profound impact on the user’s experience and lay the foundation for later levels. Well-crafted levels create a positive first impression on the player and help with onboarding. Thus, improving the onboarding process is critical to retaining players long enough for them to make scientific contributions. Even in entertainment games, a positive onboarding experience is necessary to build a large user base and retain player interest.

Three-star systems are used in many of the most popular casual games available, including Angry Birds [20], Candy Crush [10], and Cut the Rope [28]. The educational game DragonBox Algebra [26] also uses such a system. Three-star systems reward players with up to three stars (or some other object) upon completing a level. Better “performance” on a level—such as accumulating more points, finding a more challenging solution, or using fewer moves—results in more stars awarded. Players can replay levels to try for more stars. The stars often have no mechanical use in-game; however, in some games, accumulating more stars is required to unlock later levels or produce other effects.

Even with the popularity of three-star systems in casual games, there is currently little research exploring the impact of three-star systems on player onboarding and behavior. We suspect that adding a three-star system to an HCG could encourage desired player behaviors and positively impact user experience. One attractive feature of three-star systems is that they can be orthogonal to other game mechanics, and thus can potentially be layered on top of an existing game with minimal impact on existing systems. Therefore, three-star systems have the potential to be broadly applicable.

We carried out an experiment to examine the impact of including a three-star system in the onboarding process of the protein-folding HCG Foldit. We found that the three-star system encouraged players to use fewer moves, take more time per move, and replay levels more often, though we did not observe a difference in retention. This work contributes an experimental evaluation of the impact of a three-star system on the onboarding process of a human computation game.

RELATED WORK

Different players may have different motivations and thus respond to different types of rewards. Early work by Bartle [4] divided online game players into four types based on their interests. We believe that our three-star system would appeal to Bartle’s Achiever type (as opposed to Killers, Socializers, or Explorers), since Achievers are interested in “achievement within the game context”. Yee [27] refined Bartle’s model based on motivational survey data, and also included an Achievement component. Tondello et al.’s recent work [25] also identifies Achievers as one of multiple user types in gamified systems, motivated by completing tasks and overcoming challenges (in particular, “competence” from self-determination theory). Thus, even in gamified systems, there are multiple player types, and the three-star system would likely appeal to players motivated by some form of “achievement”, though not necessarily to all players.

Hallford and Hallford [8] took the perspective of categorizing different reward types in role-playing games; Phillips et al. [18] expanded their model with a review of rewards across different genres. In our work, the three-star system itself did not impact gameplay other than the display of stars (only to the player—not shared socially). In this regard, it could be considered what Hallford and Hallford term a “Reward of Glory”.

Other work has examined the impacts of rewards and incentives on both correctness and engagement in HCGs. Early work by von Ahn and Dabbish [1] used a reward based on output-agreement—matching outputs with another player—in The ESP Game to incentivize correct answers. The related input-agreement reward—players use outputs to decide if they have the same input—was later introduced by Law and von Ahn [11] in TagATune. Siu et al. [23] demonstrated that using a reward system based on competition, rather than collaboration (i.e. agreement), improved engagement without negatively impacting accuracy in the HCG Cabbage Quest. Later work by Siu and Riedl [22] examined allowing HCG players to choose their rewards in Cafe Flour Sack, and found that allowing choice (among rewards including leaderboards, avatars, and narrative) improved task completion, while not necessarily impacting engagement. Goh et al. [7] found that adding points and badges as rewards in HCGs improved self-reported enjoyment. In this work, we found that additional rewards encouraged desired behaviors, but we did not observe an impact on user retention.

Much recent work has used online experimentation to evaluate design choices. Lomas et al. [14] used a large-scale online experiment in an educational game to optimize engagement through challenge. Other work has used online experimentation to evaluate the designs of secondary objectives [2] and tutorial systems during onboarding [3]—in this work, we are using online experimentation to evaluate the impact of a different type of objective on onboarding.

SYSTEM OVERVIEW

In order to examine the impact of three-star systems on user onboarding, we implemented a three-star system in the citizen science game Foldit [6]. In Foldit, players are given a three-dimensional semi-folded protein as a puzzle, along with a set of tools to modify the protein’s structure. Their goal is to change the folding of the protein in order to find lower energy configurations, thus attaining a higher score. Foldit is based on the Rosetta molecular modeling package [19, 12]. Players with little to no biochemical knowledge or experience can contribute to biochemistry research by playing science challenges and creating higher quality structures than those found with other methods [5].

To teach new players how to play, the game includes a set of introductory levels. These levels cover the basic concepts and tools that are necessary to complete the science challenges later in the game. Foldit’s introductory levels are completed by reaching a target score. Each level teaches or reinforces new skills with a simple example protein and context-sensitive popup tutorial text bubbles that help guide the user. There are 31 possible levels. The first 16 must be completed in order; after that, the player can choose among a few different branches.

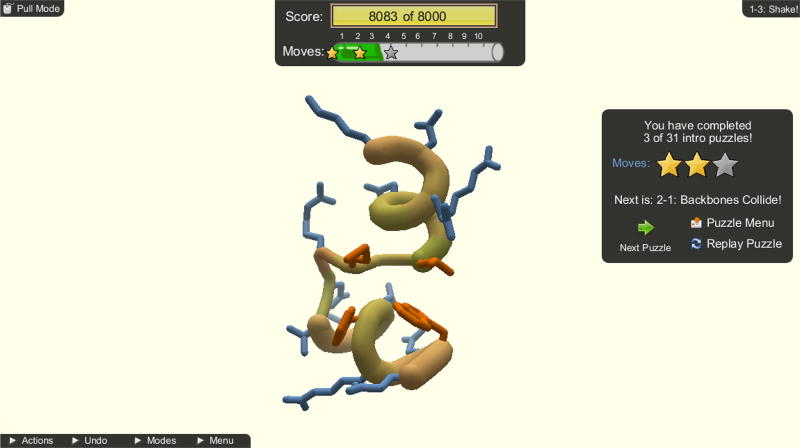

Each level has been designed to be completed in only a few moves, usually one to four, if the moves are made thoughtfully. Because of the game’s complexity, players may pursue an incorrect way of folding the protein. Many of the solutions lie within a few moves of the starting position, and making more moves can often take the player further from the correct solution, so players would often succeed more readily by making fewer moves. Therefore, the three-star system we implemented rewards players for completing levels in fewer moves. A screenshot of the game with the three-star system is shown in Figure 1.

Figure 1.

Screenshot of Foldit gameplay screen with three-star system, after the completion of a level. The player’s remaining moves are shown in the panel at the top center, and the summary of stars earned is shown in the panel on the right side.

Each level was evaluated to determine the ideal number of moves needed to complete it. If a user completed the level in this number of moves or fewer, they received all three stars; if they completed it in one or two moves beyond the ideal number, they received two stars; otherwise, they received one star for completing the level. We also implemented an optional forced reset as part of the three-star system: when it was active and players had not completed the level within four moves beyond the ideal number, a popup message informed them that they had run out of moves and forced them to restart the level. Because some casual games have a failure mode, the forced reset was a way of adding one to Foldit while also requiring players to complete levels in fewer moves.
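The star thresholds and the forced reset described above can be summarized as a small rating function. This is a hypothetical sketch, not Foldit’s code: the function and constant names are illustrative, and it assumes the move count passed in already excludes undone moves.

```python
# Hypothetical sketch of the three-star rating rules; names are illustrative.

TWO_STAR_MAX_EXTRA = 2  # one or two moves beyond ideal still earns two stars
RESET_MARGIN = 4        # forced reset after four moves beyond ideal (3-STAR-R)


def stars_earned(moves_used: int, ideal_moves: int) -> int:
    """Stars for completing a level using `moves_used` counted (non-undone) moves."""
    extra = max(0, moves_used - ideal_moves)
    if extra == 0:
        return 3  # at or under the ideal move count
    if extra <= TWO_STAR_MAX_EXTRA:
        return 2
    return 1  # any completion earns at least one star


def must_reset(moves_used: int, ideal_moves: int, completed: bool,
               reset_enabled: bool) -> bool:
    """True when the forced-reset variant should restart an uncompleted level."""
    return (reset_enabled and not completed
            and moves_used >= ideal_moves + RESET_MARGIN)
```

In this sketch, the reset triggers once the player reaches four moves beyond ideal without completing the level. That is one reading of the rule, and it is consistent with the maximum of four Extra Moves possible in the forced-reset condition.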

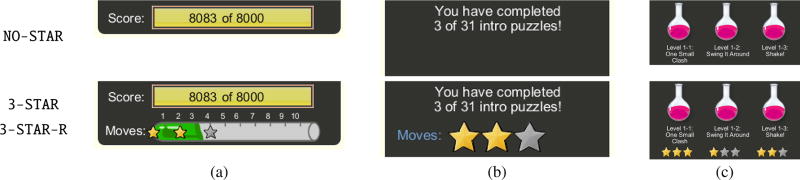

During each level, a gauge in the shape of a test tube was shown near the top of the user interface, under the score (Figure 2a). The amount of liquid in the tube decreased as the user took more moves, and the tube emptied once the player had made four more moves than ideal. If the player used the undo or load features, their move count was restored to the move count of the structure they returned to, so moves were always counted from the level’s starting structure. Three stars were overlaid on the tube to show which stars could still be earned; they started gold and faded to gray as they were lost. When a level was completed, the number of stars earned was shown in a summary panel (Figure 2b), and on the level select screen, the highest number of stars obtained on each level was displayed under the level’s title (Figure 2c). The popup tutorial text was not modified to mention the three-star system, so players had to figure out how it worked themselves.
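The move counting and test tube gauge can be sketched as a per-attempt tracker in which each structure remembers the move count used to reach it, so undoing or loading restores that count. The class and method names are hypothetical, and the linear draining of the tube is our assumption; the text only states that the liquid decreased with each move and emptied four moves past ideal.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MoveTracker:
    """Hypothetical move counter for a single level attempt."""
    ideal_moves: int
    reset_margin: int = 4  # tube is empty at ideal_moves + reset_margin
    moves: int = 0
    history: List[int] = field(default_factory=list, repr=False)  # undo stack

    def make_move(self) -> None:
        self.history.append(self.moves)
        self.moves += 1

    def undo(self) -> None:
        # Undoing restores the move count of the previous structure.
        if self.history:
            self.moves = self.history.pop()

    def load(self, saved_moves: int) -> None:
        # Loading a saved structure restores the move count stored with it.
        self.history.append(self.moves)
        self.moves = saved_moves

    def gauge_level(self) -> float:
        """Fraction of liquid left in the tube (assumed to drain linearly)."""
        capacity = self.ideal_moves + self.reset_margin
        return max(0, capacity - self.moves) / capacity
```

For a level with an ideal of two moves, three moves leave the tube half full, and loading the starting structure (saved with zero moves) refills it.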

Figure 2.

Interface elements from (top) the NO-STAR condition of Foldit and (bottom) the 3-STAR and 3-STAR-R conditions using a three-star system. (a) The score panel, which has a test tube gauge added in the experimental version, as well as three stars overlaid on it. (b) The level complete panel, in which the number of stars obtained on that level was added in the experimental version. (c) The level select screen, with the addition of stars underneath the name of each level showing how many stars were earned on that level.

To determine the ideal number of moves for each level, four members of the Foldit development team, with differing degrees of familiarity with Foldit, played through all the levels and each decided on the lowest number of moves in which they thought the level could be completed using the appropriate tools. We resolved disagreements through discussion, and where there was more than one desirable approach, we sometimes selected a number of moves above the minimum any team member found. We therefore use the term “ideal” rather than “minimum”, since some levels may be completed in fewer moves than ideal. Of the 32 levels, 10 had an ideal move count one more than the minimum found, and the rest used the minimum.

EXPERIMENT

We ran an experiment in Foldit and gathered data to examine the following hypotheses:

H1: The three-star system will cause players to complete levels in fewer moves and take more time per move. This is the primary behavior the three-star system is designed to encourage. As Foldit’s levels are designed to be completed in a few moves given the available tools, we believe that completing a level in fewer moves indicates a better understanding of the tools and concepts needed to complete that level, or at least a more thoughtful process of making moves.

H2: The three-star system will encourage players to replay levels that they have already completed. As a secondary effect, the three-star system will encourage players to play and complete levels more than once to increase the number of stars awarded. If so, this may help players learn the concepts introduced in the level: replaying levels may reinforce those concepts through practice and help players improve [17].

H3: The three-star system will improve retention, in terms of number of unique levels completed, time played, and likelihood of returning to the game. Ideally, as a tertiary effect, the three-star system will improve player retention. Since players are playing of their own free will and can stop at any time, we expect that if they make more progress, spend more time, and return to the game, they find it worthwhile in comparison to other possible activities.

We released an update to the version of Foldit available at https://fold.it/ that contained the three-star system and gathered data for roughly one week in September 2016. During this time, a portion of new users were randomly assigned to one of the following conditions:

NO-STAR: The basic game, without the three-star system. Players could complete levels in any number of moves.

3-STAR: Players were rewarded using the three-star system, and could complete levels in any number of moves.

3-STAR-R: Players were rewarded using the three-star system, and forced to reset the level when the test tube emptied.

In all experimental conditions, chat was disabled, to reduce any confusion that might be caused by players with different conditions communicating. Data was analyzed for 626 players: 212 in NO-STAR, 215 in 3-STAR, and 199 in 3-STAR-R. Looking only at introductory levels (and not science challenges), we analyzed log data for the following variables:

Extra Moves: The mean, across the levels the player completed, of the fewest extra moves the player used to complete each level. Only moves beyond the ideal number that were not undone were counted, since these were the moves that counted toward the player’s star rating for a level; if a level was completed multiple times, the minimum number of such moves was used. Thus, for conditions with stars, zero Extra Moves corresponds to three stars, one or two corresponds to two stars, and three or more corresponds to one star. Note that in the 3-STAR-R condition a player can have at most four Extra Moves on a level because of the forced reset. Only players who completed at least one level were included.

Time per Move: The average time (in seconds) a player took per move. Unlike Extra Moves, every move a player made in a level was counted, even if it was undone or the level was not completed. Only players who made at least one move were included.

Recompleted: Whether the player completed a level that they had already completed (i.e., they completed at least one level twice).

Levels: The number of unique levels completed by the player (not re-counting the same level completed multiple times).

Total Time: The total time (in minutes) spent playing levels by the player. Time was calculated by looking at sequential event pairs during levels and summing the time between them. If there were more than 2 minutes between a pair of events, we assumed the player was idle and that time was not counted.

Returned: Whether the player returned (i.e., they exited the game and started it again at least once).
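The log-derived variables above can be computed per player roughly as follows. This is a sketch under assumed log shapes: completions as (level, counted moves) pairs with undone moves already excluded, level attempts as lists of event timestamps in seconds, and a session count per player. All names are hypothetical. Time per Move is omitted because the exact timing rule is not specified.

```python
from typing import Dict, List, Optional, Sequence, Tuple

IDLE_CUTOFF_S = 120.0  # gaps longer than 2 minutes are treated as idle time

# Hypothetical record: (level_id, counted_moves), counted_moves excluding undone moves.
Completion = Tuple[str, int]


def extra_moves(completions: Sequence[Completion],
                ideal: Dict[str, int]) -> Optional[float]:
    """Mean over completed levels of the fewest moves beyond ideal."""
    best: Dict[str, int] = {}
    for level, moves in completions:
        extra = max(0, moves - ideal[level])
        best[level] = min(extra, best.get(level, extra))
    if not best:
        return None  # player completed no levels and is excluded
    return sum(best.values()) / len(best)


def recompleted(completions: Sequence[Completion]) -> bool:
    """True if any level appears more than once among the completions."""
    levels = [level for level, _ in completions]
    return len(levels) != len(set(levels))


def unique_levels(completions: Sequence[Completion]) -> int:
    return len({level for level, _ in completions})


def total_time_minutes(attempts: Sequence[List[float]]) -> float:
    """Sum gaps between sequential in-level events, skipping idle gaps."""
    seconds = 0.0
    for times in attempts:
        for earlier, later in zip(times, times[1:]):
            gap = later - earlier
            if gap <= IDLE_CUTOFF_S:
                seconds += gap
    return seconds / 60.0


def returned(session_count: int) -> bool:
    return session_count >= 2
```

For example, completing a level with an ideal of two moves once in five moves and once in two, plus a second level (ideal three) in four moves, gives Extra Moves of (0 + 1) / 2 = 0.5 and counts as Recompleted.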

As the numerical variables (Extra Moves, Time per Move, Levels, and Total Time) were not normally distributed, we first performed a Kruskal-Wallis test to check for any differences among the conditions; if so, we performed three post-hoc Wilcoxon Rank-Sum tests with the Bonferroni correction (scaling p-values by 3) to examine pairwise differences between conditions and used rank-biserial correlation (r) for effect sizes. For categorical Boolean variables (Returned and Recompleted), we first performed a chi-squared test to check for any differences among the conditions; if so, we performed three post-hoc chi-squared tests with the Bonferroni correction to examine pairwise differences and used ϕ for effect sizes.
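The post-hoc machinery above (chi-squared tests, ϕ, Bonferroni scaling, and rank-biserial correlation from U) can be sketched in plain Python. This is an illustrative re-implementation, not the analysis code used here. In practice a statistics library such as SciPy would also supply the Kruskal-Wallis and rank-sum p-values, which are omitted below. Applying Yates’ continuity correction to 2 × 2 tables, as SciPy’s `chi2_contingency` does by default, is an assumption. With that correction and ϕ = √(χ²/N), the sketch appears to reproduce the Recompleted row of Table 1 from counts back-calculated from the reported percentages and group sizes (33 of 212, 76 of 215, and 56 of 199 players).

```python
import math
from typing import List, Sequence, Tuple


def chi2_test(table: List[List[int]]) -> Tuple[float, int, float]:
    """Pearson chi-squared test of independence on an r x 2 table of counts.

    Applies Yates' continuity correction when df == 1 (2 x 2 tables).
    Returns (chi2, degrees of freedom, p-value).
    """
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            diff = abs(observed - expected)
            if df == 1:
                diff = max(diff - 0.5, 0.0)  # Yates' continuity correction
            chi2 += diff * diff / expected
    # Closed-form chi-squared survival functions for the df values used here.
    if df == 1:
        p = math.erfc(math.sqrt(chi2 / 2))
    elif df == 2:
        p = math.exp(-chi2 / 2)
    else:
        raise ValueError("p-value implemented only for df = 1 or 2")
    return chi2, df, p


def phi(chi2: float, n: int) -> float:
    """Effect size for a 2 x 2 chi-squared test."""
    return math.sqrt(chi2 / n)


def bonferroni(p: float, comparisons: int = 3) -> float:
    """Scale a p-value by the number of post-hoc comparisons, capped at 1."""
    return min(1.0, p * comparisons)


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> float:
    """U for sample x: number of pairs with x > y, counting ties as one half."""
    return sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)


def rank_biserial(u: float, n1: int, n2: int) -> float:
    """Magnitude of the rank-biserial correlation, |2U / (n1 * n2) - 1|."""
    return abs(2.0 * u / (n1 * n2) - 1.0)


def yes_no_table(groups: Sequence[Tuple[int, int]]) -> List[List[int]]:
    """Turn (yes_count, group_size) pairs into [yes, no] rows."""
    return [[yes, size - yes] for yes, size in groups]


if __name__ == "__main__":
    # Recompleted counts back-calculated from Table 1 percentages and group sizes.
    no_star, three_star, three_star_r = (33, 212), (76, 215), (56, 199)
    chi2, df, p = chi2_test(yes_no_table([no_star, three_star, three_star_r]))
    print(f"omnibus: chi2 = {chi2:.2f}, df = {df}, p = {p:.2g}")
    pairs = [(no_star, three_star), (no_star, three_star_r), (three_star, three_star_r)]
    for a, b in pairs:
        chi2, _, p = chi2_test(yes_no_table([a, b]))
        n = a[1] + b[1]
        print(f"chi2 = {chi2:.2f}, p_bonf = {bonferroni(p):.3f}, phi = {phi(chi2, n):.2f}")
```

The pairwise U computation is quadratic in group size, which is fine for a few hundred players per condition and keeps the tie handling explicit.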

A summary of the data and significant comparisons is given in Table 1. For the hypotheses, this indicates:

H1: Supported. There were significant differences between all three conditions in Extra Moves and Time per Move. The three-star system encouraged players to use fewer extra moves (median 1.6 in 3-STAR vs. 2.69 in NO-STAR), and the addition of the forced reset reduced the number of extra moves even further (median 0.67). This is expected, as the forced reset only allows levels to be completed within a few moves beyond ideal. The three-star system also encouraged players to take slightly longer per move (median 16 s vs. 14 s), with the forced reset increasing that time further (median 20 s).

H2: Supported. There were significant differences in Recompleted between NO-STAR and both 3-STAR and 3-STAR-R, with the three-star system encouraging players to recomplete levels around twice as often. However, there was no significant difference in recompletion rate between 3-STAR and 3-STAR-R.

H3: Not supported. There was no significant difference in Levels, Total Time, or Returned.

Table 1.

Summary of data and statistical analysis. Medians are given for Extra Moves, Time per Move, Levels, and Total Time; percentages for Recompleted and Returned.

| Variable | Omnibus | NO-STAR / 3-STAR | NO-STAR / 3-STAR-R | 3-STAR / 3-STAR-R |
|---|---|---|---|---|
| Extra Moves | H(2) = 141.66, p < .001 | **2.69 / 1.6** (U = 26255.5, p = .001; r = 0.20) | **2.69 / 0.67** (U = 33105.5, p < .001; r = 0.69) | **1.6 / 0.67** (U = 10698, p < .001; r = 0.45) |
| Time per Move (s) | H(2) = 48.65, p < .001 | **14 / 16** (U = 18971.5, p = .032; r = 0.14) | **14 / 20** (U = 11975, p < .001; r = 0.40) | **16 / 20** (U = 24936.5, p < .001; r = 0.26) |
| Recompleted (%) | χ² = 22.00, p < .001 | **15.57 / 35.35** (χ² = 20.95, p < .001; ϕ = 0.22) | **15.57 / 28.14** (χ² = 8.84, p = .009; ϕ = 0.15) | 35.35 / 28.14 (χ² = 2.15, n.s.) |
| Levels | H(2) = 1.04, n.s. | 9 / 8 | 9 / 8 | 8 / 8 |
| Total Time (min) | H(2) = 2.65, n.s. | 17.04 / 18.48 | 17.04 / 14.2 | 18.48 / 14.2 |
| Returned (%) | χ² = 2.30, n.s. | 33.96 / 27.44 | 33.96 / 29.15 | 27.44 / 29.15 |

Statistically significant post-hoc comparisons are shown in bold.

CONCLUSION AND FUTURE WORK

This work has explored the impact of a three-star system on the human computation game Foldit. We found that the introduction of a three-star system that rewarded players with stars for using fewer moves to complete levels did, in fact, encourage players to use fewer extra moves, take more time per move, and recomplete levels more often, even when the system had no further impact on gameplay. Adding a forced reset further reduced the number of extra moves used and increased the time taken per move; however, as the forced reset limited the extra moves possible, the further reduction in extra moves is not especially surprising. This indicates that three-star systems may be useful as an orthogonal mechanic that can encourage desired player behaviors. It remains to be confirmed that the behaviors encouraged by the three-star system have lasting impact on player skill.

We did not observe differences in retention among conditions. This may be because Foldit levels are challenging enough that adding new rewards alone is not enough to help players make further progress. Thus, focusing on other game mechanics, such as the tools or tutorial systems, may be more fruitful.

Although this work examined three-star systems in the context of HCGs, such systems are more commonly found in casual games. Future work can examine whether a three-star system can improve retention in less challenging games or entertainment games, where such rewards may have a better chance of impacting retention. It would also be illuminating to examine the impact of three-star systems on other behaviors that game designers may wish to encourage (such as correct answers in educational games).

Acknowledgments

The authors thank Robert Kleffner, Qi Wang, the Center for Game Science, and all of the Foldit players. This work was supported by the National Institutes of Health grant 1UH2CA203780, RosettaCommons, and Amazon. This material is based upon work supported by the National Science Foundation under Grant Nos. 1541278 and 1629879.

Contributor Information

Jacqueline Gaston, Carnegie Mellon University.

Seth Cooper, Northeastern University.

References

- 1. von Ahn Luis, Dabbish Laura. Labeling Images with a Computer Game. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2004:319–326.

- 2. Andersen Erik, Liu Yun-En, Snider Richard, Szeto Roy, Cooper Seth, Popović Zoran. On the Harmfulness of Secondary Game Objectives. Proceedings of the 6th International Conference on the Foundations of Digital Games. 2011:30–37.

- 3. Andersen Erik, O’Rourke Eleanor, Liu Yun-En, Snider Rich, Lowdermilk Jeff, Truong David, Cooper Seth, Popović Zoran. The Impact of Tutorials on Games of Varying Complexity. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2012:59–68.

- 4. Bartle Richard. Hearts, Clubs, Diamonds, Spades: Players who Suit MUDs. The Journal of Virtual Environments. 1996;1:1.

- 5. Cooper Seth, Khatib Firas, Treuille Adrien, Barbero Janos, Lee Jeehyung, Beenen Michael, Leaver-Fay Andrew, Baker David, Popović Zoran, Foldit Players. Predicting Protein Structures with a Multiplayer Online Game. Nature. 2010a;466(7307):756–760. doi: 10.1038/nature09304.

- 6. Cooper Seth, Treuille Adrien, Barbero Janos, Leaver-Fay Andrew, Tuite Kathleen, Khatib Firas, Snyder Alex Cho, Beenen Michael, Salesin David, Baker David, Popović Zoran, Foldit Players. The Challenge of Designing Scientific Discovery Games. Proceedings of the 5th International Conference on the Foundations of Digital Games. 2010b:40–47.

- 7. Goh Dion Hoe-Lian, Pe-Than Ei Pa Pa, Lee Chei Sian. An Investigation of Reward Systems in Human Computation Games. In: Kurosu Masaaki, editor. Human-Computer Interaction: Interaction Technologies. Lecture Notes in Computer Science, vol. 9170. 2015. pp. 596–607.

- 8. Hallford Neal, Hallford Jana. Swords and Circuitry: A Designer’s Guide to Computer Role-Playing Games. Premier Press, Incorporated; 2001.

- 9. Kim Jinseop S, Greene Matthew J, Zlateski Aleksandar, Lee Kisuk, Richardson Mark, Turaga Srinivas C, Purcaro Michael, Balkam Matthew, Robinson Amy, Behabadi Bardia F, Campos Michael, Denk Winfried, Seung H Sebastian, EyeWirers. Space-Time Wiring Specificity Supports Direction Selectivity in the Retina. Nature. 2014;509(7500):331–336. doi: 10.1038/nature13240.

- 10. King. Candy Crush. Game. 2012.

- 11. Law Edith, von Ahn Luis. Input-Agreement: A New Mechanism for Collecting Data Using Human Computation Games. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2009:1197–1206.

- 12. Leaver-Fay Andrew, Tyka Michael, Lewis Steven M, Lange Oliver F, Thompson James, Jacak Ron, Kaufman Kristian, Renfrew P Douglas, Smith Colin A, Sheffler Will, Davis Ian W, Cooper Seth, Treuille Adrien, Mandell Daniel J, Richter Florian, Ban Yih-En Andrew, Fleishman Sarel J, Corn Jacob E, Kim David E, Lyskov Sergey, Berrondo Monica, Mentzer Stuart, Popović Zoran, Havranek James J, Karanicolas John, Das Rhiju, Meiler Jens, Kortemme Tanja, Gray Jeffrey J, Kuhlman Brian, Baker David, Bradley Philip. ROSETTA3: An Object-Oriented Software Suite for the Simulation and Design of Macromolecules. Methods in Enzymology. 2011;487:545–574. doi: 10.1016/B978-0-12-381270-4.00019-6.

- 13. Lee Jeehyung, Kladwang Wipapat, Lee Minjae, Cantu Daniel, Azizyan Martin, Kim Hanjoo, Limpaecher Alex, Yoon Sungroh, Treuille Adrien, Das Rhiju, EteRNA Participants. RNA Design Rules from a Massive Open Laboratory. Proceedings of the National Academy of Sciences. 2014;111(6):2122–2127. doi: 10.1073/pnas.1313039111.

- 14. Lomas Derek, Patel Kishan, Forlizzi Jodi L, Koedinger Kenneth R. Optimizing Challenge in an Educational Game using Large-Scale Design Experiments. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2013:89–98.

- 15. Mao Andrew, Kamar Ece, Horvitz Eric. Why Stop Now? Predicting Worker Engagement in Online Crowdsourcing. Proceedings of the 1st Conference on Human Computation and Crowdsourcing. 2013.

- 16. Moffitt Kerry, Ostwald John, Watro Ron, Church Eric. Making Hard Fun in Crowdsourced Model Checking: Balancing Crowd Engagement and Efficiency to Maximize Output in Proof by Games. Proceedings of the Second International Workshop on CrowdSourcing in Software Engineering. 2015:30–31.

- 17. Newell Allen, Rosenbloom Paul S. Mechanisms of Skill Acquisition and the Law of Practice. Cognitive Skills and their Acquisition. 1981;1:1–55.

- 18. Phillips Cody, Johnson Daniel, Wyeth Peta. Videogame Reward Types. Proceedings of the First International Conference on Gameful Design, Research, and Applications. 2013:103–106.

- 19. Rohl Carol A, Strauss Charlie EM, Misura Kira MS, Baker David. Protein Structure Prediction using Rosetta. Methods in Enzymology. 2004;383:66–93. doi: 10.1016/S0076-6879(04)83004-0.

- 20. Rovio. Angry Birds. Game. 2009.

- 21. Sauermann Henry, Franzoni Chiara. Crowd Science User Contribution Patterns and their Implications. Proceedings of the National Academy of Sciences. 2015;112(3):679–684. doi: 10.1073/pnas.1408907112.

- 22. Siu Kristin, Riedl Mark O. Reward Systems in Human Computation Games. Proceedings of the SIGCHI Annual Symposium on Computer-Human Interaction in Play. 2016.

- 23. Siu Kristin, Zook Alexander, Riedl Mark O. Collaboration versus Competition: Design and Evaluation of Mechanics for Games with a Purpose. Proceedings of the 9th International Conference on the Foundations of Digital Games. 2014.

- 24. Sturn Tobias, Wimmer Michael, Salk Carl, Perger Christoph, See Linda, Fritz Steffen. Cropland Capture – A Game for Improving Global Cropland Maps. Proceedings of the 10th International Conference on the Foundations of Digital Games. 2015.

- 25. Tondello Gustavo F, Wehbe Rina R, Diamond Lisa, Busch Marc, Marczewski Andrzej, Nacke Lennart E. The Gamification User Types Hexad Scale. Proceedings of the 2016 Annual Symposium on Computer-Human Interaction in Play. 2016:229–243.

- 26. WeWantToKnow. DragonBox Algebra. Game. 2012.

- 27. Yee Nick. Motivations for Play in Online Games. Cyberpsychology and Behavior. 2006;9(6):772–775. doi: 10.1089/cpb.2006.9.772.

- 28. ZeptoLab. Cut the Rope. Game. 2010.