Abstract

As modern scientific image datasets typically consist of a large number of images of high resolution, devising methods for their accurate and efficient processing is a central research task. In this paper, we consider the problem of obtaining the steerable principal components of a dataset, a procedure termed “steerable PCA” (steerable principal component analysis). The output of the procedure is the set of orthonormal basis functions which best approximate the images in the dataset and all of their planar rotations. To derive such basis functions, we first expand the images in an appropriate basis, for which the steerable PCA reduces to the eigen-decomposition of a block-diagonal matrix. If we assume that the images are well localized in space and frequency, then such an appropriate basis is the prolate spheroidal wave functions (PSWFs). We derive a fast method for computing the PSWFs expansion coefficients from the images' equally spaced samples, via a specialized quadrature integration scheme, and show that the number of required quadrature nodes is similar to the number of pixels in each image. We then establish that our PSWF-based steerable PCA is both faster and more accurate than existing methods, and more importantly, provides us with rigorous error bounds on the entire procedure.

Keywords: principal component analysis, prolate spheroidal wave functions, steerable filters, group invariance, band limited functions

1. Introduction

Principal component analysis (PCA), also known as the Karhunen–Loève transform, is a ubiquitous method for dimensionality reduction which is often utilized for compression, denoising, and feature extraction from datasets. Given a dataset, the basic idea behind classical PCA is to find the best linear approximation (in the least-squares sense) to the dataset using a set of orthonormal basis functions, thus allowing for processing methods adaptive to the dataset at hand. Due to increasing improvements in image acquisition and storage techniques, we often encounter the need to process very large datasets, which consist of many thousands of images of ever-growing resolutions. In addition, there exist image acquisition techniques that introduce known deformation types into the images, thus increasing the variability in the data.

In this work, we focus on the rotation deformation, and specifically, on the setting where each image was acquired through an unknown planar rotation. Therefore, it is only natural to include all planar rotations of all images when performing the PCA procedure. It is important to mention that, when handling large datasets, the naive approach of introducing a large number of rotated versions of all images into the dataset, and then performing standard PCA, is computationally prohibitive, and, moreover, is less accurate than considering the continuum of all rotations. In the literature, one can find numerous works concerning the study of deformations (such as rotations, translations, dilations, etc.) and their connections to invariant-feature extraction, through the theory of Lie groups and compact group theory [20, 4, 21, 22, 11]. In this context, we aim to incorporate the action of the group SO(2) into the framework of PCA of image datasets. We will refer to such a procedure as steerable PCA. By the theory of Lie groups, and specifically the action of the group SO(2), it is known that the resulting principal components (which best approximate all rotations of a set of images) are described by tensor products of radial functions and angular Fourier modes. Such functions are referred to as “steerable” [7], since they can be rotated (or “steered”) by a simple multiplication with a complex-valued constant, and hence the term “steerable” in steerable PCA.

An early approach for computing the steerable PCA was proposed in [25], which used the SVD to obtain the principal components of a single template and its set of deformations. In [9], the authors presented an efficient steerable-PCA algorithm based on angular Fourier decomposition of the images, after they have been resampled to a polar grid. While this method considers the continuum of all planar rotations, it requires a nonobvious discretization of the images in the radial direction. Efficient methods for steerable PCA were also introduced in [27] and [10]. These methods considered a finite set of equiangular rotations of each image on a polar grid, which allows for efficient circulant matrix decompositions to be carried out when computing the principal components. Recently, [38] and [37] utilized Fourier–Bessel basis functions to expand the images, followed by applying PCA in the domain of the expansion coefficients, thus accounting for all (infinitely many) rotations. We also mention [35], where the authors present an accurate algorithm to obtain the steerable principal components of templates whose analytical form is known in advance.

As digital images are typically specified by their samples on a Cartesian grid, considering their rotations implicitly assumes that they were sampled from some underlying bivariate function. Rotation of a sampled image essentially interpolates this underlying function from the available Cartesian samples. We remark that while previous works provide algorithms for steerable PCA of discretized input images, they do not describe rigorous connections between the results of the procedure and the images prior to discretization, i.e., the underlying bivariate functions. In this work, we assume that the digital images were sampled from bivariate functions that are essentially bandlimited and are sufficiently concentrated in an area of interest in space. These assumptions guarantee that if an image was sampled with a sufficiently high sampling rate, it can be reconstructed from its samples with high precision [26]. Such assumptions are very common in various areas of engineering and physics, and as all acquisition devices are essentially bandlimited and restricted in space/time, they are expected to hold for a wide range of image datasets. Since we are interested in processing images with arbitrary orientations, it is only natural to consider a circular support area, both in space and frequency, instead of the (classical) square. We note that this model was implicitly assumed to hold in [38] and [37] for single-particle cryo-electron microscopy (cryo-EM) images.

Under the model assumptions mentioned above, our goal is to develop a fast and accurate steerable-PCA procedure which considers the continuum of all planar rotations of all images in our dataset. Particularly appealing basis functions, for expanding bandlimited functions which are also concentrated in space, are the prolate spheroidal wave functions (PSWFs) [33, 16, 17, 32, 24], defined as the strictly bandlimited set of orthogonal functions, which maximize the ratio between their ℒ2 norm inside some finite region of interest and their ℒ2 norm over the entire Euclidean space. Recently, [13] described an approximation scheme for functions localized to disks in space and frequency, using a series of two-dimensional PSWFs. We therefore incorporate the methods introduced in [13] into the framework of steerable PCA, providing accurate, scalable, and efficient algorithms. Our approach is in the spirit of [37], resulting in a similar block-diagonal covariance matrix. However, replacing the Fourier–Bessel functions with PSWFs turns out to be advantageous in terms of accuracy, available error bounds, speed, and statistical properties.

The contributions of this paper are the following. By utilizing theoretical and computational tools related to PSWFs, we are able to provide accuracy guarantees for our steerable-PCA algorithm, under the assumptions of space-frequency localization. This accuracy is in part related to a rigorous truncation rule we provide for the PSWFs series expansion, in contrast to the series truncation rules used in [38] and [37]. In addition, using a quadrature integration scheme optimized for integrating bandlimited functions on a disk [31], we present an algorithm which is in theory (and in practice) faster than [37] by a factor between 2 and 4. Finally, we also show that under some conditions on the space-frequency concentration of the images at hand, the transformation to the PSWFs expansion coefficients is nearly orthogonal.

The organization of this paper is as follows. In section 2 we introduce the PSWFs and their usage in expanding space-frequency localized images specified by their equally spaced Cartesian samples. In particular, we review the results of [13] on expanding a function into a series of PSWFs, evaluating the expansion coefficients, and bounding the overall approximation error. In section 3 we present a fast algorithm for approximating the expansion coefficients from section 2 up to an arbitrary precision. In section 4 we formalize the procedure of steerable PCA for the continuous setting (similarly to [35]), and combine it with our PSWFs-based approximation scheme. Section 5 then summarizes all relevant algorithms, and analyses in detail the computational complexities involved. In section 6 we provide some numerical results on the spectrum of the transformation to the PSWFs expansion coefficients, as this spectrum is of particular interest for noisy datasets, and in section 7 we compare our algorithm to that of [37] in terms of running time and accuracy. Finally, in section 8 we provide some concluding remarks and some possible future research directions.

2. Image approximation based on PSWFs expansion

Let f : ℝ2 → ℝ be a square integrable function on ℝ2. We define a function f(x) as c-bandlimited if its two-dimensional Fourier transform, denoted by F(ω), vanishes outside a disk of radius c. Specifically, if we denote D ≜ {x ∈ ℝ2, |x| ≤ 1}, then f is bandlimited with bandlimit c if

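$$f(x) = \left(\frac{1}{2\pi}\right)^{2} \int_{cD} F(\omega)\, e^{i\, \omega \cdot x}\, d\omega, \qquad x \in \mathbb{R}^{2}. \tag{1}$$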

Among all c-bandlimited functions, the prolate spheroidal wave functions (PSWFs) on D (the unit disk) are the most energy concentrated in D; that is, they satisfy

| (2) |

while constituting an orthonormal system over ℒ2(D). The two-dimensional PSWFs were derived and analyzed in [32], and were shown to be the solutions to the integral equation

$$\alpha\, \psi(x) = \int_{D} \psi(\omega)\, e^{i c\, \omega \cdot x}\, d\omega, \qquad x \in D. \tag{3}$$

We denote the PSWFs with bandlimit c as $\psi^c_{N,n}(x)$, and their corresponding eigenvalues as $\alpha^c_{N,n}$, which together form the eigenfunctions and eigenvalues of (3), with N ∈ ℤ and n ∈ ℕ ∪ {0}. In addition, it turns out that the functions $\psi^c_{N,n}(x)$ are orthogonal on both D and ℝ2 using the standard ℒ2 inner products on D and ℝ2, respectively, and are dense in both the class of ℒ2(D) functions and in the class of c-bandlimited functions on ℝ2. In polar coordinates, the functions $\psi^c_{N,n}(x)$ have a separation of variables and can be written in complex form as

$$\psi^c_{N,n}(r, \theta) = \frac{1}{\sqrt{2\pi}}\, R_{N,n}(r)\, e^{i N \theta}, \tag{4}$$

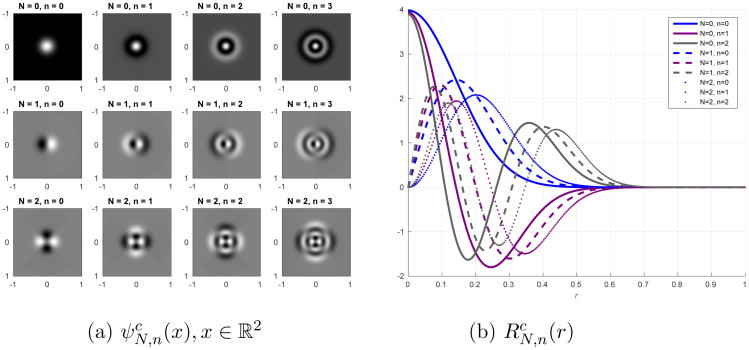

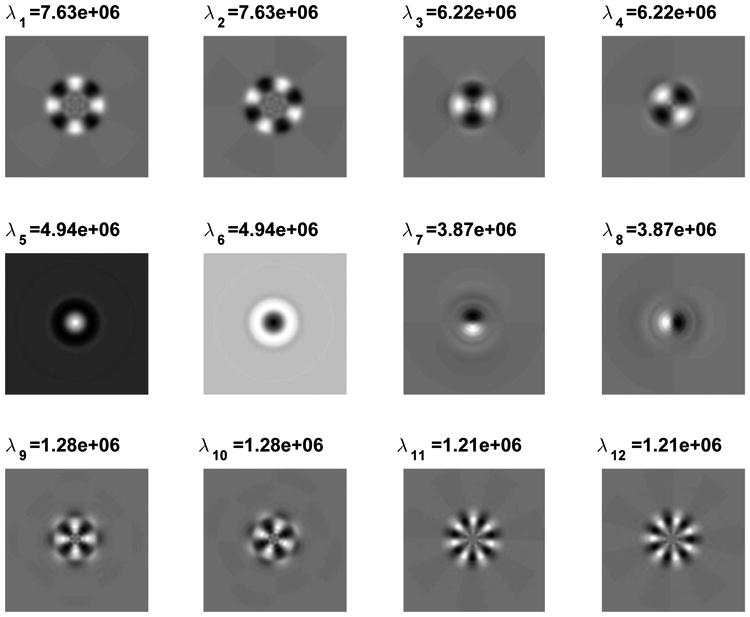

where RN,n(r) (defined explicitly in (66) in Appendix C) satisfies RN,n(r) = R|N|,n(r), and the eigenfunctions are normalized to have an ℒ2(D) norm of 1. The indices N and n are often referred to as the angular index and the radial index, respectively. Equation (4) also tells us that the PSWFs are steerable [7]; that is, rotating $\psi^c_{N,n}(x)$ is equivalent to multiplying it by a complex constant. This property is important for handling datasets which include rotations and in particular for the steerable-PCA procedure. A detailed numerical evaluation procedure for the two-dimensional PSWFs can be found in [31], and an illustration of the PSWFs for the first several index pairs (N, n) can be seen in Figure 1.
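The steerability property can be checked numerically. The following is a minimal sketch, assuming only that a basis function separates into a radial profile times the angular Fourier mode e^{iNθ}, as described for (4). The radial profile used here is a hypothetical stand-in chosen for illustration, not an actual PSWF radial function, and the helper name `steerable_function` is likewise an assumption.

```python
import numpy as np

def steerable_function(r, theta, N, radial_profile):
    """Evaluate (1/sqrt(2*pi)) * R(r) * exp(i*N*theta) at polar points (r, theta)."""
    return radial_profile(r) * np.exp(1j * N * theta) / np.sqrt(2 * np.pi)

# Hypothetical stand-in radial profile (NOT a true PSWF radial function).
def radial_profile(r):
    return r**2 * np.exp(-3.0 * r**2)

rng = np.random.default_rng(0)
r = rng.uniform(0.0, 1.0, size=1000)
theta = rng.uniform(0.0, 2 * np.pi, size=1000)
N, phi = 3, 0.7  # angular index and rotation angle (arbitrary test values)

# Rotating a function by phi amounts to evaluating it at the angle theta - phi.
rotated = steerable_function(r, theta - phi, N, radial_profile)

# Steering: multiply the unrotated function by the complex constant exp(-i*N*phi).
steered = np.exp(-1j * N * phi) * steerable_function(r, theta, N, radial_profile)

max_diff = np.max(np.abs(rotated - steered))
print(f"max |rotated - steered| = {max_diff:.2e}")
```

The two evaluations agree up to floating-point rounding for any radial profile, which is why the rotation angle only ever enters through a phase factor on the expansion coefficients.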

Figure 1. Illustration of the first few PSWFs (real part) with bandlimit c = 16π, ordered according to their eigenvalues (for every index N, the eigenvalues are ordered from largest to smallest as a function of the index n).

Since our images are assumed to be essentially bandlimited and sufficiently concentrated in a disk, the properties of the PSWFs mentioned above (and especially their optimal concentration property) make them suitable basis functions for expanding such images, as we consider next. Let us define a function f(x) as (ν, μ)-concentrated if its ℒ2 norm outside a disk of radius ν is upper bounded by μ, that is

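$$\left\| f \right\|_{\mathcal{L}^2(\mathbb{R}^2 \setminus \nu D)} = \left( \int_{|x| > \nu} \left| f(x) \right|^{2} dx \right)^{1/2} \le \mu. \tag{5}$$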

Using this definition, the class of c-bandlimited functions is a subclass of (c, δc)-concentrated functions in the Fourier domain (for any δc ≥ 0). We consider the two-dimensional functions from which our images were sampled to be (1, ε)-concentrated in space, with their Fourier transforms being (c, δc)-concentrated. This assumption always holds for some set of parameters c, δc, ε, and the general notion is that δc and ε are “small.” Since our images are given in their sampled form, we define the unit square Q ≜ [−1, 1] × [−1, 1], and assume that we are given the samples $\{f(k/L)\}_{k/L \in Q}$, where k is a two-dimensional integer index. These samples correspond to a Cartesian grid of (2L + 1) × (2L + 1) equally spaced samples, with sampling frequency of L in each dimension. We mention that in this setup, the Nyquist frequency corresponds to a bandlimit c of at most πL.

Given an image I(x) ∈ ℒ2(D), we can expand it as

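$$I(x) = \sum_{N=-\infty}^{\infty} \sum_{n=0}^{\infty} a_{N,n}\, \psi^c_{N,n}(x), \qquad a_{N,n} = \int_{D} I(x) \left( \psi^c_{N,n}(x) \right)^{*} dx, \tag{6}$$

where (·)* denotes complex conjugation. As numerical algorithms cannot use infinite expansions, the image I(x) needs to be approximated by a finite sequence of PSWFs, while its expansion coefficients {aN,n} are approximated using only the available samples of I(x). To this end, we follow [13], and define the truncated PSWFs expansion that approximates I(x) as

$$\hat{I}(x) = \sum_{(N,n) \in \Omega_T} \hat{a}_{N,n}\, \psi^c_{N,n}(x), \tag{7}$$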

where âN,n are the approximated coefficients (to be described shortly), and ΩT is a finite set of indices that is determined by a truncation parameter T, and is defined by

| (8) |

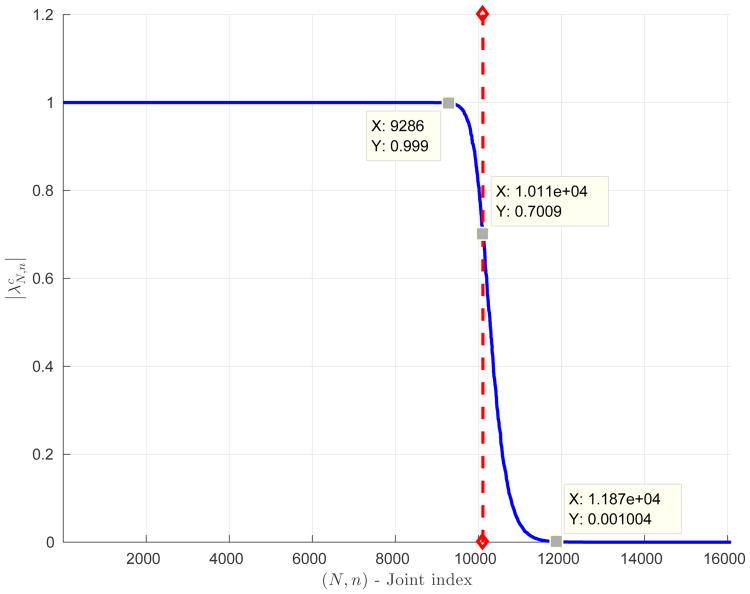

where is the eigenvalue from (3) corresponding to the eigenfunction . The properties of the index set ΩT are determined by the “normalized” eigenvalues , whose behavior is exemplified in Figure 2. The coefficients âN,n of (7) are defined by

Figure 2.

Illustration of the normalized eigenvalues for L = 64, c = πL, sorted in a nonincreasing order with a joint index k enumerating over (N, n). It is evident that for T ≪ 1 the set ΩT consists of indices up to the right of the vertical dashed line, where the normalized eigenvalues rapidly decay to zero. On the other hand, for T ≫ 1 the set ΩT consists of indices up to the left of the vertical line, where the normalized eigenvalues are extremely close to 1. The dashed vertical line corresponds to k = c2/4, which also approximately agrees with (or equivalently with the rule T = 1).

| (9) |

where both I and stand for the appropriate vectors of samples of I(x) and inside the unit disk, respectively. We note that for real-valued images we have that aN,n = (a−N,n)*, and thus it is sufficient to compute the coefficients âN,n only for N ≥ 0.

Using our definitions in (7), (8), (9), and assuming that I(x) is (1, ε)-concentrated in space and (c, δc)-concentrated in Fourier domain (as defined earlier), we show in Appendix A that

| (10) |

It is evident that the bound ℰ(ε, δc, T) in (10) depends only on the images' concentration properties and on the truncation parameter T, all of which can be determined a priori.

Lastly, it is also mentioned in [13] that the cardinality of the index set ΩT of (8) is expected to be

| (11) |

The first term on the right-hand side of (11), which depends quadratically on c, is in fact (up to a constant of 1/π) what is known as the Landau rate [14] for stable sampling and reconstruction, and it is the term which asymptotically dominates the required number of basis functions for the approximation (see also Figure 2). A table listing the values of |ΩT| for various L and T can be found in [13]. We remark that for c = πL (Nyquist sampling) and T = 1, which essentially means that the approximation error is of the order of the space-frequency localization, we have that |ΩT| is about π²L²/4, which is smaller than the number of samples (pixels) in the unit disk.
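The last remark can be verified with a few lines of arithmetic. The sketch below counts the Cartesian samples that fall inside the unit disk for a given L and compares this count with the leading term c²/4 at Nyquist sampling, c = πL. It deliberately ignores the lower-order terms of (11), so it only illustrates the asymptotic ratio; the function names are hypothetical.

```python
import numpy as np

def pixels_in_unit_disk(L):
    """Count grid points k/L, k in {-L..L}^2, that lie inside the unit disk."""
    k = np.arange(-L, L + 1)
    kx, ky = np.meshgrid(k, k, indexing="ij")
    return int(np.count_nonzero(kx**2 + ky**2 <= L**2))

def leading_term_cardinality(c):
    """Leading (quadratic) term of the number of retained basis functions."""
    return c**2 / 4.0

L = 64
c = np.pi * L                      # Nyquist sampling
n_pixels = pixels_in_unit_disk(L)  # roughly pi * L^2
n_basis = leading_term_cardinality(c)
ratio = n_basis / n_pixels         # approaches pi / 4 as L grows

print(f"pixels in disk: {n_pixels}, leading term of |Omega_T|: {n_basis:.0f}")
print(f"ratio: {ratio:.3f} (pi/4 = {np.pi / 4:.3f})")
```

Since the disk contains about πL² samples, the ratio tends to π/4, i.e., the expansion uses roughly 79% as many coefficients as there are pixels in the disk.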

3. Fast PSWFs coefficients approximation

Computing the expansion coefficients âN,n of (9) directly results in a computational complexity of O(L⁴) operations. This is due to the fact that each image contains O(L²) samples, while there are O(L²) different basis functions in the expansion. Since we aim to process large datasets of high resolution images, in what follows we describe an asymptotically more efficient method for evaluating the expansion coefficients in O(L³) operations.

Using (3), we can rewrite the PSWFs expansion coefficients (9) as

| (12) |

| (13) |

where

| (14) |

Since both $\psi^c_{N,n}$ and ϕc are c-bandlimited functions, their product is a 2c-bandlimited function. Therefore, we can compute the integral in (13) using a quadrature formula for 2c-bandlimited functions on a disk. Such an integration scheme was proposed in [31] using specialized quadrature nodes and weights. Thus, by using such a quadrature formula, we can approximate the expansion coefficients of (13) by

| (15) |

where the sum runs over the quadrature nodes and their associated weights, 𝒩r is the number of different radii of the quadrature nodes, and the number of quadrature nodes per radius may vary from one radius to another. We use the quadrature nodes and weights of [31] corresponding to bandlimit 2c. These quadrature nodes are equally spaced in the angular direction and nonequally spaced in the radial direction. In polar coordinates, the nodes and weights are given by

| (16) |

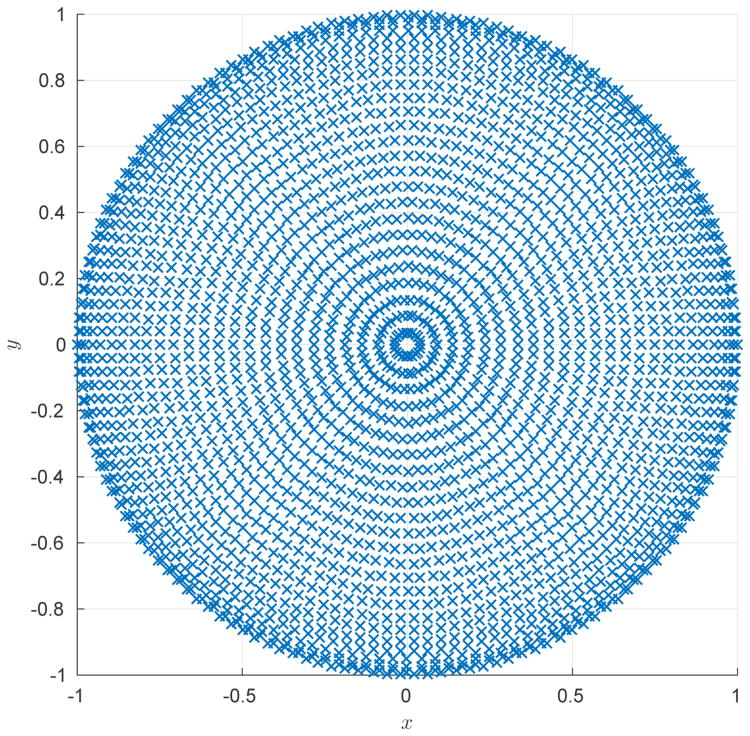

and the derivation of is detailed in [31]. A typical array of quadrature nodes is plotted in Figure 3.

Figure 3.

Quadrature nodes for L = 16, c = πL, and accuracy of the order of machine precision. The nodes are arranged to be equally spaced in the angular direction (the specific phase of the allocation is arbitrary) with different numbers of nodes for each radius (rings closer to the origin require fewer angular nodes). The number of radial nodes is about c/π, and the number of angular nodes per radius is about , where e is the base of the natural logarithm.

By employing (15), (16), and the analytical expression of the PSWFs (4), we finally arrive at

| (17) |

which is particularly appealing for efficient numerical evaluation, as we explain later in this section.

Clearly, replacing the integral in (13) with a quadrature formula results in an approximation error. In this respect, it is desirable to choose 𝒩r and the number of angular nodes per radius such that this error is smaller than some prescribed accuracy ϑq (usually chosen as the machine precision). To this end, and according to [31], it is sufficient to satisfy the condition

| (18) |

for all 1 ≤ ℓ ≤ 𝒩r and ρ ∈ [0, 1], where Jj is the Bessel function of the first kind and order j, as well as the condition

| (19) |

where was defined in (8), ‖·‖∞ stands for the max-norm in [0, 1], and both C1 and C2 are constants which depend on the bandlimit c. We mention that are ordered in a nonincreasing order with respect to the index k. In order to determine appropriate values for 𝒩r and , one can proceed to solve the inequalities in conditions (18) and (19) numerically by directly evaluating these expressions. However, in order to analyze the computational complexity of the procedure described in this section, and to offer the reader simpler analytic expressions and some insight concerning conditions (18) and (19), we provide some further analysis of these conditions and the resulting number of quadrature nodes. In Appendix B, we prove that, in order to satisfy condition (18) for every 1 ≤ ℓ ≤ 𝒩r, it is sufficient to choose the integers as

| (20) |

where are the radial quadrature nodes from (16), e is the base of the natural logarithm, and ⎾·⏋ is the rounding up operation. Then, it is evident that the term dominates condition (20), i.e., , since all other factors (prescribed error ϑq and the constant C1) affect it only logarithmically. Therefore, we expect the overall number of quadrature nodes to be

| (21) |

assuming that the quadrature points are approximately symmetric about 0.5. We remark that numerical experiments reveal a small difference between conditions (18) and (20): choosing the numbers of angular nodes directly via the numerical evaluation of condition (18) results in about 20% fewer angular nodes compared to choosing them according to (20).

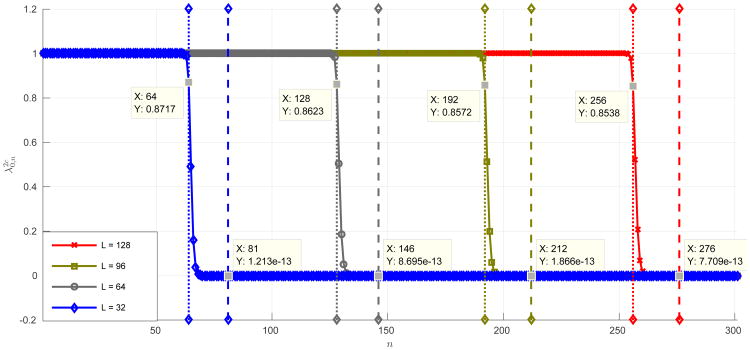

With regard to condition (19), although it may seem somewhat daunting, the sum on the left-hand side is dominated by the values of and their decay properties. We argue in Appendix C that the number of non-negligible (relative to machine precision) values of is only about 2c/π, that is

| (22) |

for some small ε and a sufficiently large c. This observation stems from the fact that these values become arbitrarily small (and decay at a superexponential rate) once k reaches 2c/π + O(log c). We provide numerical evidence for this claim in Figure 4.

Figure 4.

Behavior of for c = πL (Nyquist sampling) and several values of L. For each value of L, the dotted vertical line represents n = 2c/π, and the dashed vertical line represents the first index n for which , which we denote by . First, we observe that 2c/π is the exact index for which begins its rapid decay, and, second, it is evident that the difference between and the estimate 2c/π grows extremely slowly with L, making the asymptotic estimate sufficiently accurate even for moderate values of L.

Therefore, it is evident that condition (19) can be satisfied if is sufficiently small for k > 2𝒩r, which together with (22) implies that for c = πL (Nyquist sampling)

| (23) |

In total, by (21) and (23), the number of quadrature nodes for c = πL is

| (24) |

which is only slightly larger than the number of sampling points in the unit square.
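As a rough sanity check of this node count, the sketch below tallies quadrature nodes under three explicitly assumed simplifications that are made here for illustration only: about c/π radial nodes, about e·c·r angular nodes on the ring of radius r, and stand-in radii placed at equally spaced midpoints so that they are symmetric about 0.5. The true radial nodes of [31] are not equally spaced, so the numbers are indicative only.

```python
import math

def estimated_node_count(L):
    """Estimate the total number of quadrature nodes at Nyquist sampling (c = pi * L).

    Assumptions (illustrative, not taken verbatim from the text):
      * about c / pi = L radial nodes,
      * about e * c * r angular nodes on the ring of radius r,
      * stand-in radii at equally spaced midpoints, symmetric about 0.5.
    """
    c = math.pi * L
    n_radial = L
    radii = [(ell + 0.5) / n_radial for ell in range(n_radial)]
    angular_counts = [math.ceil(math.e * c * r) for r in radii]
    return sum(angular_counts)

L = 64
total_nodes = estimated_node_count(L)
square_samples = (2 * L + 1) ** 2   # samples in the square [-1, 1] x [-1, 1]
ratio = total_nodes / square_samples

print(f"estimated quadrature nodes: {total_nodes}")
print(f"samples in the square:      {square_samples}")
print(f"ratio:                      {ratio:.3f}")
```

Under these assumptions the total behaves like eπL²/2 ≈ 4.27L², compared with about 4L² samples in the square, which is consistent with the statement that the node count is only slightly larger.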

Let us now turn our attention to the computational complexity of computing ãN,n of (17). The first step is to compute ϕc of (14) at all quadrature nodes, which can be implemented efficiently using the nonequispaced fast Fourier transform (NFFT) [3, 8, 28, 5]. The computational complexity of such algorithms is

| (25) |

where L is the sampling rate, ε is the required accuracy of the transform, and P is the number of evaluation points, which is equal to the number of quadrature nodes (24). Next, we notice that, for every value of ℓ the inner sum in (17)

| (26) |

can be computed for multiple values of N using the FFT, for all required values of ℓ. By (24), this results in a computational complexity of O(L² log L) for c = πL. Lastly, the approximated expansion coefficients (17) are given by the remaining outer sum

| (27) |

which can be computed for all indices (N, n) ∈ ΩT in 𝒩r |ΩT| operations, where |ΩT| denotes the cardinality of the index set ΩT. From (11) and (23), the computational complexity of the last step is essentially O(L³), which thus governs the computational complexity of the entire procedure described in this section. We end this discussion with the observation that the complexity of evaluating (27) can be further reduced by exploiting the rapid decay of the radial functions RN,n(r) with the radius r (see Figure 1b for an illustration), due to which a substantial number of the terms involving them can be discarded for certain sets of the radii and indices {(N, n)}. To exemplify this point, for T = 1, L = 150, and c = πL, about 30% of these values are below 10⁻¹², and therefore can be safely discarded from (27).
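The role of the FFT in the angular sums can be illustrated as follows. This is a minimal sketch, assuming the inner sum on each ring has the form Σ_j v_j e^{−iNθ_j} over equally spaced angles θ_j = 2πj/J; the sign convention, the absence of weights, and the function names are assumptions made for illustration rather than a transcription of (26).

```python
import numpy as np

def angular_sums_direct(values, n_max):
    """Direct evaluation: O(J * n_max) operations for one ring with J samples."""
    J = len(values)
    theta = 2 * np.pi * np.arange(J) / J
    return np.array([np.sum(values * np.exp(-1j * N * theta))
                     for N in range(n_max + 1)])

def angular_sums_fft(values, n_max):
    """FFT evaluation: O(J log J) operations for all angular indices at once."""
    J = len(values)
    if n_max >= J:
        raise ValueError("n_max must be smaller than the number of angular samples")
    # numpy's forward FFT computes sum_j v_j * exp(-2*pi*i*j*N/J), i.e. the same sum.
    return np.fft.fft(values)[: n_max + 1]

rng = np.random.default_rng(0)
ring_values = rng.normal(size=128) + 1j * rng.normal(size=128)  # samples on one ring
n_max = 40

direct = angular_sums_direct(ring_values, n_max)
fast = angular_sums_fft(ring_values, n_max)
max_err = np.max(np.abs(direct - fast))
print(f"max difference between direct and FFT sums: {max_err:.2e}")
```

Because the angular nodes on each ring are equally spaced, one FFT per ring yields the sums for every angular index N simultaneously, which is what keeps this step cheaper than the final outer sum over the radii.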

4. Steerable-PCA procedure

As discussed in the introduction, steerable PCA extends the classical PCA by artificially including in the analyzed dataset all (infinitely many) planar rotations of each image. In the previous sections, we have established a framework for expanding images using PSWFs, under the assumption that the images to be approximated are sufficiently concentrated in space and frequency. Since PSWFs constitute an excellent basis for expanding images which are localized in space and frequency (see (50), (10) and [13]), we use them in this section to construct optimized basis functions for a given set of images and their rotations.

Let us suppose that our dataset consists of M (sampled) images, where the mth image is given by the samples of some function Im(x) ∈ ℒ2(D). We denote by $I_m^{\varphi}(x)$ the planar rotation of Im(x) by an angle φ ∈ [0, 2π). Now, ideally, we would like to obtain basis functions that best approximate (in the sense of ℒ2(D)) the images for all rotation angles φ ∈ [0, 2π). However, we do not have access to the underlying images Im(x), nor their rotations $I_m^{\varphi}(x)$. Therefore, we first replace them with their PSWFs-based approximations, denoted by Îm(x) (see (7)) and $\hat{I}_m^{\varphi}(x)$. Then, we derive a steerable-PCA procedure for the set of approximated images, and finally we make the connection between the resulting steerable principal components of the approximated images and the original images.

The kth principal component gk(x) ∈ ℒ2(D) for the set of approximated images $\{\hat{I}_m^{\varphi}(x)\}$, m = 0, …, M − 1, is defined as

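$$g_k(x) = \underset{g}{\arg\max}\ \frac{1}{2\pi M} \sum_{m=0}^{M-1} \int_{0}^{2\pi} \left| \left\langle \hat{I}_m^{\varphi}(x) - \hat{\mu}(x),\, g(x) \right\rangle_{\mathcal{L}^2(D)} \right|^{2} d\varphi, \quad \text{subject to } \|g\|_{\mathcal{L}^2(D)} = 1,\ \left\langle g, g_j \right\rangle_{\mathcal{L}^2(D)} = 0 \text{ for } j < k, \tag{28}$$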

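where 〈·, ·〉ℒ2(D) denotes the standard inner product on ℒ2(D), and μ̂(x) is the average of all approximated images and their planar rotations, given by

$$\hat{\mu}(x) = \frac{1}{2\pi M} \sum_{m=0}^{M-1} \int_{0}^{2\pi} \hat{I}_m^{\varphi}(x)\, d\varphi. \tag{29}$$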

In other words, the function gk(x) is expected to maximize the average projection norm (defined over all images and their rotations), such that {gk(x)} forms a set of orthonormal functions over ℒ2(D). The functions {gk(x)} are named the steerable principal components of our dataset. The formulation (28) differs from the classical formulation of PCA in the additional integration over the rotation angle φ, which has the interpretation of including all (infinitely many) rotations of all images in our data set.

After substituting (7) in (28) and exploiting the orthonormality and steerable structure of the PSWFs, the optimization problem (28) is reduced to a problem in the domain of the PSWFs expansion coefficients âN,n, for which the derivation in [38] reveals that the solution is given by the eigenvectors of the |ΩT| × |ΩT| Hermitian positive semidefinite matrix, whose entries are

| (30) |

where

| (31) |

and $\hat{a}^m_{N,n}$, given by (9) (or (17)), are the approximated expansion coefficients for the mth image in our dataset, i.e., the coefficients of Îm. We refer to C of (30) as our rotationally invariant covariance matrix (replacing the standard covariance matrix used in classical PCA). Since the matrix C enjoys a block-diagonal structure, we obtain its eigen-decomposition through the eigen-decomposition of its blocks. Let λ̂1 ≥ λ̂2 ≥ … ≥ λ̂|ΩT| ≥ 0 be the eigenvalues of the matrix C and let ĝk be the eigenvector corresponding to eigenvalue λ̂k. For each pair (λ̂k, ĝk), the entries of ĝk are nonzero only on some block of the matrix C corresponding to an angular index Nk, and it can be shown that the functions gk(x) of (28) are recovered as

| (32) |

where nk stands for the largest index n such that (Nk, n) ∈ ΩT. Equation (32), together with (4), confirms that each gk(x) is a steerable function (as in [7]).
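The block-diagonal structure can be sketched in code. The example below is a simplified illustration, not a transcription of (30) and (31): it assumes the coefficients are grouped by angular index N into hypothetical arrays of shape (number of radial indices, M), forms one covariance block per angular index, and subtracts the mean only for N = 0, since averaging e^{−iNφ} over all rotation angles gives zero whenever N ≠ 0. It also confirms that rotating each image by an arbitrary angle, which multiplies its coefficients by a unit-modulus phase, leaves every block unchanged.

```python
import numpy as np

def invariant_covariance_blocks(coeffs):
    """Form one covariance block per angular index.

    coeffs: dict mapping angular index N -> complex array (num_radial, M),
            column m holding the coefficients of image m (hypothetical layout).
    """
    blocks = {}
    for N, A in coeffs.items():
        M = A.shape[1]
        if N == 0:
            # Only the N = 0 coefficients have a nonzero mean over all rotations.
            A = A - A.mean(axis=1, keepdims=True)
        blocks[N] = (A @ A.conj().T) / M
    return blocks

def steerable_pca(coeffs):
    """Eigen-decompose each Hermitian block; eigenvalues sorted in descending order."""
    result = {}
    for N, C in invariant_covariance_blocks(coeffs).items():
        eigvals, eigvecs = np.linalg.eigh(C)
        order = np.argsort(eigvals)[::-1]
        result[N] = (eigvals[order], eigvecs[:, order])
    return result

rng = np.random.default_rng(0)
M = 50
block_sizes = {0: 4, 1: 3, 2: 2}   # radial indices kept per angular index (toy values)
coeffs = {N: rng.normal(size=(k, M)) + 1j * rng.normal(size=(k, M))
          for N, k in block_sizes.items()}

# Rotate image m by a random angle phi_m: coefficient (N, n) picks up exp(-i*N*phi_m).
phis = rng.uniform(0.0, 2 * np.pi, size=M)
rotated = {N: A * np.exp(-1j * N * phis)[None, :] for N, A in coeffs.items()}

blocks = invariant_covariance_blocks(coeffs)
blocks_rotated = invariant_covariance_blocks(rotated)
components = steerable_pca(coeffs)
for N in blocks:
    print(N, np.allclose(blocks[N], blocks_rotated[N]), components[N][0].round(3))
```

Working block by block means that each eigen-decomposition involves a matrix whose size is the number of radial indices for one angular index, rather than the full |ΩT| × |ΩT| matrix.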

By the formulation of (28), it follows that the functions gk(x) form the optimal basis (in ℒ2(D)) for expanding the approximated images {Îm(x)} and their rotations, such that if we define

| (33) |

then we have that

| (34) |

where λ̂k is the kth eigenvalue of C.

Since the steerable principal components were computed for the set of approximated images {Îm(x)} and not for the set of underlying images {Im(x)}, the bound in (34) holds only for the set of the approximated images. Nonetheless, the PSWFs approximation scheme from section 2 provides us with strict error bounds when expanding images localized in space and frequency. Therefore, when using (33) to approximate our images Im(x), the error norm (averaged over all images and rotations) can be bounded by joining (34) and (10). In particular, for a truncation parameter T, we have that

Algorithm 1. Evaluating PSWFs expansion coefficients (direct method).

| (35) |

where the approximating function is the expansion via the steerable principal components (33), and ℰ(ε, δc, T) is the approximation error term of (10) for the truncated series of PSWFs. In essence, (35) asserts that if the images are sufficiently localized in space and frequency, then for an appropriate truncation parameter T the error in expanding Im(x) using the steerable principal components computed from Îm(x) is close to the smallest possible error given by (34).

5. Algorithm summary and computational cost

We summarize the algorithms for evaluating the expansion coefficients âN,n and ãN,n (corresponding to the direct method from section 2 and the efficient method of section 3) in Algorithms 1 and 2, respectively. The steerable-PCA procedure described in section 4 is summarized in Algorithm 3.

We now turn our attention to the computational complexity of Algorithms 1, 2, and 3. We omit the precomputation steps from the complexity analysis, as they need to be performed only once per setup and do not depend on the specific images.

Since we have O(L²) PSWFs in our expansions (corresponding to the indices in the set ΩT) and O(L²) equally spaced Cartesian samples in the unit disk, computing the expansion coefficients of M images using the direct approach of Algorithm 1 requires O(ML⁴) operations. On the other hand, Algorithm 2 allows us to obtain the expansion coefficients in O(ML³) operations, since the NFFT in step 3 requires O(L² log L) operations and step 4 can be implemented using O(L² log L + L³) operations, for each image.

Although Algorithm 2 and the method described in [37] (based on Fourier–Bessel basis functions) have the same order of computational complexity, our method enjoys a twofold asymptotic speedup in the coefficients' evaluation, and often it runs about three to four times faster. The twofold asymptotic speedup is because we need asymptotically only half the number of radial nodes for the numerical integration (see analysis in section 3 and Appendix C) compared to the Gaussian quadratures used in [37], which dictates the constant in the leading term of the computational complexity. The greater speedup observed in practice stems from the fact that the most time-consuming operation in both methods is the NFFT, which depends heavily on the total number of quadrature nodes. In our integration scheme, the total number of quadrature nodes is about a quarter of the number of nodes in [37].

Algorithm 2. Evaluating PSWFs expansion coefficients (efficient method).

Algorithm 3. PSWFs-based steerable PCA.

As for the computational complexity of the steerable PCA (Algorithm 3), forming the blocks of the matrix C requires O(ML³) operations, and the eigen-decomposition of each block requires O(L³) operations, where there are O(L) different blocks in the matrix. Therefore, obtaining the eigenvalues and eigenvectors in step 5 of Algorithm 3 requires O(ML³ + L⁴) operations. As pointed out in [38] and [37], the eigenvalues and eigenvectors can also be obtained from the SVD of the matrices of coefficients from which the blocks of C are obtained.
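The equivalence between the two routes can be checked on a toy block. The sketch below assumes a block of the form (1/M)·A·A*, where A is a hypothetical matrix holding the coefficients of one angular index (one column per image); under that assumption, the eigenvalues of the block are the squared singular values of A/√M, and the left singular vectors are its eigenvectors.

```python
import numpy as np

rng = np.random.default_rng(1)
num_radial, M = 5, 40   # toy sizes: radial indices in the block, number of images
A = rng.normal(size=(num_radial, M)) + 1j * rng.normal(size=(num_radial, M))

# Route 1: form the Hermitian block explicitly and eigen-decompose it.
C = (A @ A.conj().T) / M
eigvals = np.sort(np.linalg.eigvalsh(C))[::-1]

# Route 2: SVD of the scaled coefficient matrix, without forming C.
U, s, _ = np.linalg.svd(A / np.sqrt(M), full_matrices=False)

print("eigenvalues of C:        ", eigvals.round(4))
print("squared singular values: ", (s**2).round(4))
```

Avoiding the explicit product A·A* can be numerically preferable, since forming it squares the condition number of the problem.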

Following (32), if we have O(L²) basis functions gk(x), their evaluation on the Cartesian grid requires O(L⁵) operations. Sometimes it may be more convenient to evaluate these basis functions on a polar grid instead, in which case the computational complexity reduces to O(L⁴).

Lastly, computing the expansion coefficients of the images in the steerable basis via (33) requires O(ML³) operations, since O(L) operations are required to compute a single expansion coefficient for every image.

We point out though that often only a small fraction of the basis functions are chosen for subsequent processing (via their eigenvalues), so the contribution of steps 6 and 7 in Algorithm 3 to the overall running time of the steerable PCA procedure is usually negligible.

To summarize, the computational complexity of the entire procedure (computing PSWFs expansion coefficients + steerable PCA), when using Algorithm 2 to compute the expansion coefficients, is O(ML³ + L⁵) when sampling the steerable principal components on the Cartesian grid and O(ML³ + L⁴) when sampling them on a polar grid. It is important to note that, although Algorithm 1 for evaluating PSWFs expansion coefficients suffers from an inferior order of computational complexity (compared to Algorithm 2), it is simpler to implement and may still run faster (due to optimized implementations of the scalar product on CPUs and GPUs), particularly for small values of L.

6. Steerable PCA in the presence of noise

Up to this point, we have presented a method for computing the steerable PCA of a set of images localized in space and frequency, sampled on a Cartesian grid. In many practical settings, however, the images are corrupted by noise. It is therefore beneficial to understand the impact of this noise on the PSWFs expansion coefficients; in particular, it is convenient if the transformation to the expansion coefficients does not alter the spectrum of the noise. In this section, we demonstrate numerically that for images sufficiently localized in space and frequency, for which we can choose a truncation parameter T ≫ 1 (see (8)), the transformation to the PSWFs expansion coefficients is essentially orthogonal. In particular, the higher the truncation parameter T, the closer the transformation is to being orthogonal.

Let us denote by I a column vector consisting of the clean (Cartesian) samples of an input image. Suppose that our image is corrupted by additive noise such that

| (36) |

where ξ is a zero-mean noise vector with covariance matrix Rξ. From (9), our approximated expansion coefficients are given (up to a constant) by

| (37) |

where the operator (·)* stands for the conjugate transpose, and ψ̂c denotes the matrix whose columns contain samples of the PSWFs inside the unit disk, with different columns corresponding to different pairs of indices (N, n). If we define the vector of additive noise in the expansion coefficients as

| (38) |

then its covariance matrix is provided by

| (39) |
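The covariance above follows from the standard rule for linear transformations of random vectors. As a sketch in assumed notation (writing $A$ for the sampling matrix denoted ψ̂c in the text and $\xi_a$ for the noise in the expansion coefficients; both symbols are chosen here for illustration, and constant factors are ignored):

```latex
\xi_a = A^{*}\xi, \qquad
R_{\xi_a} = \mathbb{E}\left[\xi_a \xi_a^{*}\right]
          = A^{*}\,\mathbb{E}\left[\xi \xi^{*}\right] A
          = A^{*} R_{\xi} A .
```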

Now, if the noise in (36) is white (Rξ = σ²I), then in order to preserve the covariance of the noise we would require the matrix

| (40) |

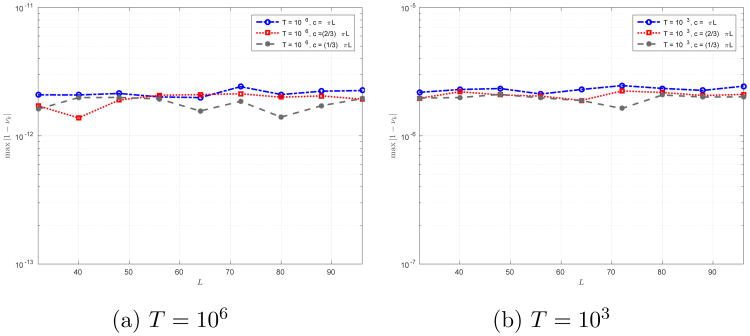

to be as close as possible to the identity matrix, or equivalently that the eigenvalues of the matrix Hc be as close as possible to 1. As the matrix Hc is Hermitian and positive semidefinite, it has non-negative real-valued eigenvalues ν1, ν2, …, νΩT. To determine how close Hc is to the identity matrix, we evaluated numerically the maximal distance (in absolute value) between the eigenvalues {νk} and 1, and the results are plotted in Figure 5 for various values of L, T, and the bandlimit c.
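The deviation measure described above can be sketched as follows. The matrix `Q` is a hypothetical stand-in for the matrix of sampled basis functions (here simply a random matrix with orthonormal columns), so the numbers it produces say nothing about the PSWFs themselves; the sketch only shows how the maximal distance between the eigenvalues of A*A and 1 may be computed.

```python
import numpy as np

def max_eig_deviation(A):
    """Return max_k |1 - nu_k| for the eigenvalues nu_k of H = A^* A."""
    H = A.conj().T @ A
    nu = np.linalg.eigvalsh(H)  # H is Hermitian, so its eigenvalues are real
    return float(np.max(np.abs(1.0 - nu)))

rng = np.random.default_rng(1)
# Stand-in for the sampled basis: orthonormal columns from a QR factorization.
Q, _ = np.linalg.qr(rng.standard_normal((400, 30)))
dev_orthonormal = max_eig_deviation(Q)

# Perturbing the columns moves the eigenvalues of H away from 1.
dev_perturbed = max_eig_deviation(Q + 1e-3 * rng.standard_normal(Q.shape))
```

For a matrix with exactly orthonormal columns the deviation is at the level of rounding errors, and it grows as the columns depart from orthonormality.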

Figure 5.

Measured deviation of the eigenvalues of Hc from 1 for different values of the truncation parameter T, the bandlimit c, and the sampling resolution L. We notice that the deviation from orthogonality remains approximately constant for different values of L and c. Specifically, for T = 10⁶ we notice that Hc is practically orthogonal.

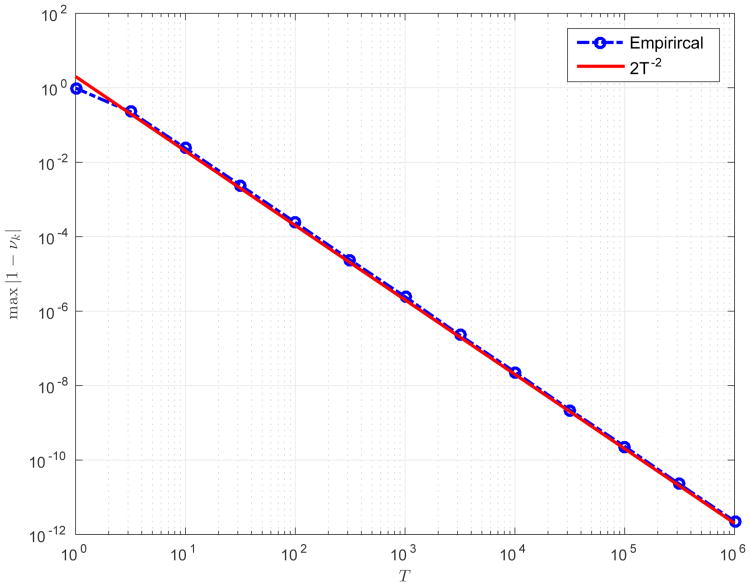

It is noteworthy that for T = 10⁶ the eigenvalues of Hc differ from 1 by about 10⁻¹². We have also observed that the spectrum of the matrix Hc is related to the truncation parameter T by

| $\max_{k} \lvert 1 - \nu_k \rvert \approx 2T^{-2}$ | (41) |

for T ≥ 10. This observation is exemplified numerically in Figure 6. As the matrix Hc in (40) is essentially orthogonal for sufficiently large values of T, it is important to mention that, if the images are sufficiently localized in space and frequency, it is often possible to choose the value of T so as to enjoy the orthogonality of the transform while keeping the bound in (10) sufficiently small.
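As a quick arithmetic check of this empirical rule (the maximal deviation of the eigenvalues from 1 behaving like 2T⁻² for T ≥ 10, as stated in the caption of Figure 6), the following sketch tabulates the predicted deviation for a few values of T. The function name is hypothetical.

```python
def predicted_deviation(T):
    """Empirical rule: max_k |1 - nu_k| is approximately 2 * T**(-2), observed for T >= 10."""
    if T < 10:
        raise ValueError("the rule was observed only for T >= 10")
    return 2.0 / T**2

for T in (10, 1e3, 1e6):
    print(f"T = {T:g}: predicted deviation ~ {predicted_deviation(T):.1e}")
```

For T = 10⁶ the rule gives 2 · 10⁻¹², in line with the deviation of about 10⁻¹² reported above.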

Figure 6.

Measured deviation of the eigenvalues of Hc from 1 as a function of T, versus the function 2T⁻², for L = 96 and c = πL. We observe that the truncation parameter T gives us direct control over the spectrum of Hc, with max_k |1 − ν_k| ≈ 2T⁻² for T ≥ 10.

7. Numerical experiments

We begin by demonstrating the running times of our algorithm for large datasets. We generated several sets of 20,000 white noise images, each set with a different image size L, and compared the running time of our algorithm with that of the FFBsPCA algorithm of [37], where the coefficients' evaluation for our method was carried out using both Algorithm 1 (direct method) and Algorithm 2 (efficient method). All of the algorithms were implemented in Matlab and were executed on a dual Intel Xeon X5560 CPU (8 cores in total) with 96 GB of RAM, running Linux. Whenever possible, all 8 cores were used simultaneously, either explicitly using Matlab's parfor, or implicitly, by employing Matlab's implementation of BLAS, which takes advantage of multicore computing. As for the NFFT implementation, we used the software package [12], with an oversampling of 2 and a truncation parameter m = 6 (which provides accuracy close to machine precision). The running times (in seconds) are shown in Table 1. As anticipated, Algorithm 1 runs faster than Algorithm 2 for small image sizes, but becomes significantly slower for larger values of L. As for the running time of our algorithm versus FFBsPCA [37], we have mentioned in section 5 that our algorithm is asymptotically two times faster, since the number of radial nodes in our quadrature scheme is asymptotically half that of FFBsPCA. In addition, the total number of quadrature nodes in our scheme is about a quarter of that of FFBsPCA, and since the NFFT procedure for evaluating the Fourier transform of the sampled images on a polar grid (see (14)) is the most time-consuming step of both algorithms, it is expected that our algorithm will be faster than FFBsPCA by a factor between 2 and 4, which indeed agrees with the results in Table 1.

In all scenarios tested, most of the computation time was spent on evaluating the PSWFs expansion coefficients (Algorithm 2), and only a small fraction of the time on the eigen-decomposition of the rotationally invariant covariance matrix. Table 2 summarizes the time spent on the evaluation of the PSWFs expansion coefficients (using Algorithm 2) versus the time spent on the eigen-decomposition of the rotationally invariant covariance matrix.

Table 1.

Comparison of algorithms' running times, for 20,000 images consisting of white noise, for T = 10 and several values of L. All timings are given in seconds.

| L | PSWFs direct (Algs. 1+3) | PSWFs efficient (Algs. 2+3) | FFBsPCA [37] |
|---|---|---|---|
| 32 | 6 | 25 | 47 |
| 64 | 95 | 59 | 151 |
| 96 | 491 | 117 | 363 |
| 128 | 1625 | 204 | 697 |

Table 2.

Running times of coefficients' evaluation and eigen-decomposition for the efficient PSWFs-based method. All timings are given in seconds.

| L | PSWFs coefficients evaluation (Alg. 2) | Eigen-decomposition (Alg. 3 steps 3-6) |
|---|---|---|
| 32 | 24.5 | 0.5 |
| 64 | 56.5 | 2.5 |
| 96 | 111 | 6 |
| 128 | 193 | 11 |
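The speedup over FFBsPCA and the share of time spent on the eigen-decomposition can be read off Tables 1 and 2 with a few lines of arithmetic. The sketch below uses only the timings reported in the tables; the variable names are chosen here for illustration.

```python
# Timings in seconds, copied from Tables 1 and 2.
L_values = [32, 64, 96, 128]
efficient = [25, 59, 117, 204]      # PSWFs efficient (Algs. 2+3), Table 1
ffbspca = [47, 151, 363, 697]       # FFBsPCA [37], Table 1
eig_decomp = [0.5, 2.5, 6, 11]      # eigen-decomposition, Table 2

speedup = [f / e for f, e in zip(ffbspca, efficient)]
eig_share = [d / e for d, e in zip(eig_decomp, efficient)]

for L, s, share in zip(L_values, speedup, eig_share):
    print(f"L = {L:3d}: speedup over FFBsPCA = {s:.2f}, "
          f"eigen-decomposition share = {100 * share:.1f}%")
```

The measured speedup grows with L, from roughly 1.9 at L = 32 to roughly 3.4 at L = 128, while the eigen-decomposition accounts for less than 6% of the total time in every case.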

Next, we demonstrate our algorithms on simulated single-particle cryo-electron microscopy (cryo-EM) projection images. In single-particle cryo-EM, one is interested in reconstructing a three-dimensional model of a macromolecule (such as a protein) from its two-dimensional projection images taken by an electron microscope. The procedure begins by embedding many copies of the macromolecule in a thin layer of ice (hence the “cryo” in the name of the procedure), where due to the experimental setup the different copies are frozen at random unknown orientations. Then, an electron microscope acquires two-dimensional projection images of the electron densities of these macromolecules. This procedure of image acquisition can be modelled mathematically as the Radon transform of a volume function evaluated at random viewing directions. Due to the properties of the imaging procedure, each projection image generated by the electron microscope undergoes a convolution with a kernel, referred to as the “contrast transfer function” (CTF), which is known to have a Gaussian envelope [6]. Since the unknown volume is essentially limited in space, and since the behavior of the CTF dictates that all images are localized in Fourier domain, we conclude that projection images obtained by single-particle cryo-EM are essentially limited to circular domains in both space and frequency. We emphasize that although the goal in single particle cryo-EM is to reconstruct the three-dimensional structure of the macromolecule, the input to the reconstruction process is only the set of two-dimensional images. Now, since the in-plane rotation of each single-particle cryo-EM image in the detector plane is arbitrary and irrelevant for the reconstruction, the image processing methods applied to the input image dataset, such as denoising and classification, should be invariant to these in-plane rotations. This observation explains why these images are suitable for exemplifying our steerable-PCA algorithm.
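The role of in-plane rotations can be illustrated on a single ring of polar samples. The sketch below assumes that basis functions have an angular dependence of the form e^{iNθ}, so that rotating an image by an angle α multiplies its coefficient of angular index N by e^{−iNα} and leaves its magnitude unchanged. The sampled function `g` and the helper name are hypothetical.

```python
import numpy as np

def angular_coefficient(samples, N):
    """Discrete angular Fourier coefficient of index N from uniform samples on a circle."""
    K = len(samples)
    theta = 2 * np.pi * np.arange(K) / K
    return np.sum(samples * np.exp(-1j * N * theta)) / K

K = 64
theta = 2 * np.pi * np.arange(K) / K
g = 1.0 + np.cos(2 * theta) + 0.5 * np.sin(3 * theta)   # samples at a fixed radius

shift = 5                       # rotate by 5 angular steps
alpha = 2 * np.pi * shift / K
g_rot = np.roll(g, shift)       # g_rot(theta) = g(theta - alpha)

N = 3
c = angular_coefficient(g, N)
c_rot = angular_coefficient(g_rot, N)
```

Since rotations act on such coefficients only through a phase factor, quantities built from coefficient magnitudes, or from products of coefficients sharing the same angular index, are unaffected by the arbitrary in-plane rotation of each image.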

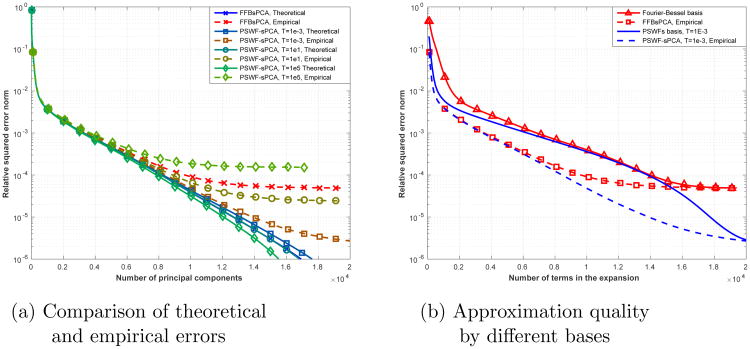

To demonstrate the accuracy of our method, we simulated 10,000 clean projection images from a noiseless three-dimensional density map (EMD-5578 from The Electron Microscopy Data Bank (EMDB) [19]) using the ASPIRE package [1], and obtained steerable principal components using both our fast algorithm and FFBsPCA [37]. Then, we used different numbers of principal components to reconstruct the images, and compared the theoretical error predicted by the residual eigenvalues (see (34)) with the empirical error obtained by comparing the reconstructions to the original images. Obviously, the difference between the two errors is due to the error incurred by the images' expansion scheme (PSWFs or Fourier–Bessel functions). Typical projection images from this dataset can be seen in Figure 7. To simplify the setting and the exposition, we used the projection images as they were obtained from the given volume, and we did not crop, filter, or process them beforehand. Therefore, we assumed a bandlimit c = πL throughout the experiment. We note that in general it is possible to crop and filter the images (i.e., choose a smaller bandlimit c) without significantly degrading the quality of the images (up to some prescribed accuracy), thus reducing the computational burden of the algorithms. This can be accomplished either by power density estimation (as demonstrated in [37]), or by employing more sophisticated and dataset-specific estimation techniques for the B-factor (which governs the Gaussian envelope decay of the CTF); see for example [23]. Figure 8 depicts the first 12 eigenfunctions obtained by our steerable-PCA algorithm for this dataset. In Figure 9(a) we show the relative error norms for the FFBsPCA algorithm and our PSWFs-based algorithm, using several values of T and with different numbers of eigenfunctions in the reconstruction. 
As expected, the theoretical and empirical relative error norms coincide when a small number of principal components is used in the reconstruction, yet when a large number of principal components is used, the error incurred by the expansion of the images (either using PSWFs or Fourier–Bessel) comes into play and dominates the overall error. As guaranteed by (35) and (10) for space-frequency localized images, smaller values of T lead to smaller approximation errors, and we notice that our PSWFs-based method outperforms the FFBsPCA algorithm in terms of accuracy for T = 10 and T = 10⁻³. It is also important to mention that the number of PSWFs taking part in the approximation of each image does not increase significantly when lowering T (see (11)). On the other hand, if one allows the approximation error to be of the order of 10⁻³ to 10⁻⁴, then it is also possible to use a very large truncation parameter, such as T = 10⁵, for which the PSWFs-based transform enjoys superior orthogonality properties (see Figure 5), as well as shorter expansions. Often, such scenarios arise when handling noisy datasets. In a way, the truncation parameter provides us with flexibility to adapt the approximation scheme to the specific setting. In Figure 9(b) we show the approximation errors obtained by expanding the images in the PSWFs basis and in the Fourier–Bessel basis (without the rotationally invariant orthogonalization), where we sorted the basis functions according to their contribution to the expansion. It is evident that PSWFs are more adapted to expanding our image dataset than Fourier–Bessel, which is reasonable due to the underlying model behind these images, whereas both bases provide lower accuracy than the steerable principal components obtained from our PSWFs-based steerable PCA.
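The relation between residual eigenvalues and reconstruction error can be illustrated with ordinary (non-steerable) PCA on synthetic data. This is only an analogue of the experiment above: the data, dimensions, and function name are hypothetical, and there is no expansion step, so the theoretical and empirical errors coincide up to rounding.

```python
import numpy as np

rng = np.random.default_rng(2)
# Synthetic data: 500 samples in 20 dimensions with decaying variances.
X = rng.standard_normal((500, 20)) * np.linspace(3.0, 0.1, 20)

# Eigen-decomposition of the (uncentered) sample second-moment matrix.
C = X.T @ X / X.shape[0]
eigvals, eigvecs = np.linalg.eigh(C)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

def relative_errors(k):
    """Theoretical and empirical relative squared errors when keeping k components."""
    theoretical = eigvals[k:].sum() / eigvals.sum()
    V = eigvecs[:, :k]
    X_hat = X @ V @ V.T                      # projection onto the leading k components
    empirical = np.sum((X - X_hat) ** 2) / np.sum(X ** 2)
    return theoretical, empirical

theo, emp = relative_errors(5)
```

In the experiment described above, any gap between the two curves is attributed to the error of the images' expansion scheme, a step that this simplified sketch does not include.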

Figure 7. Sample of 3 simulated noiseless projection images at a resolution of L = 127 pixels.

Figure 8. The first 12 eigenfunctions with largest eigenvalues, obtained for T = 10⁻³.

Figure 9.

The ratio between the squared error norm and the squared norm of the images, when expanding the images with different numbers of basis functions. Panel (a) compares theoretical errors with empirical errors for our PSWFs-based algorithm (for several values of T) and the FFBsPCA algorithm of [37]. Curves which correspond to theoretical errors are obtained from the residual eigenvalues of the steerable-PCA procedure (see (34)), and empirical errors correspond to measured errors between the reconstructed and original projection images. Panel (b) illustrates the errors due to steerable-PCA expansions, PSWFs expansions, and Fourier–Bessel expansions. Note that the x axis, which counts the number of basis functions used in each expansion, counts only basis functions with non-negative angular indices (since these are sufficient for encoding real-valued images).

8. Summary and discussion

In this paper, we utilized PSWFs-based computational tools to construct fast and accurate algorithms for obtaining steerable principal components of large image datasets. The accuracy of our algorithms is guaranteed under the assumptions of localization of the images in space and frequency, which are natural assumptions for many datasets, particularly in the field of tomography. For M images, each sampled on a Cartesian grid of size (2L + 1) × (2L + 1), the computational complexity for obtaining the steerable principal components is O(ML³) operations, and their accuracy is of the order of the localization of the images in space and frequency, i.e., the norm of the images outside the unit disk in space, and the norm of their Fourier transform outside a disk of radius c, where c is the chosen bandlimit. We have compared our method with the FFBsPCA algorithm [37], which is considered state of the art for performing steerable PCA on single-particle cryo-EM projection images, and have shown that our method is both faster and more accurate (for sufficiently small values of T). In addition, our method enjoys rigorous error bounds throughout its various steps, whereas the FFBsPCA algorithm provides no analytic guarantees on its accuracy. We mention that classical operations of windowing and filtering can be subsumed in our procedure to ensure that the images fulfil the requirements of space-frequency localization.

As image resolutions get higher, investigating more efficient methods for processing image datasets is an important ongoing research task. As the running times of our algorithms are mostly dominated by the task of computing the PSWFs expansion coefficients, reducing the computational complexity of this step from O(L³) to O(L² log L) for each image, resulting in the same asymptotic complexity as the two-dimensional FFT, would be significant progress. Since the radial part of the PSWFs is evaluated to a prescribed accuracy using a finite series of special polynomials which admit a recurrence relation (see [31]), and since the expansion coefficients in terms of these polynomials are obtained from the eigenvectors of a tridiagonal matrix, it is possible to employ the methods described in [34] to derive an O(L² log L) algorithm for computing the PSWFs expansion coefficients of a function. In addition, as mentioned earlier, the NFFT, which was employed to map the Cartesian grid samples to a polar grid, is a major time-consuming component. In this context, we mention that gridding methods (see for example [29]), when applied carefully, may accelerate such a mapping, and thus reduce the overall running time of our algorithms.

Acknowledgments

Funding: This research was supported by THE ISRAEL SCIENCE FOUNDATION grant No. 578/14, and by Award Number R01GM090200 from the NIGMS.

Appendix A. PSWFs expansion error bound

Let us define the restriction of an image I(x) to the unit disk by

| (42) |

and denote the Fourier transform of Ī(x) by

| (43) |

Since the Fourier transform is a linear operator, we can write

| (44) |

where we defined the Fourier transform of I(x) by Iℱ(ω). Now, it is clear that

| (45) |

| (46) |

Next, by employing Parseval's identity we obtain

| (47) |

and thus, by using our space-frequency concentration assumptions on the image I(x), we have that

| (48) |

Therefore, we conclude that Ī(x) is (1, ε̄)-concentrated in space, and its Fourier transform Īℱ(ω) is (c, δ̄c)-concentrated, where

| (49) |

Now, it follows from [13] that the approximation error of Ī satisfies

| (50) |

where T is the truncation parameter defined in (8), and η is a constant no bigger than 2π2L/c. Finally, since Im(x) and Īm(x) coincide on D, we get using (50)

| (51) |

Appendix B. A bound for

Recall that we choose the number of angular nodes per radius, denoted , such that it satisfies

| (52) |

for every ρ ∈ [0, 1]. Using a bound for the Bessel functions [2] together with the fact that |ρ| ≤ 1, we get

| (53) |

and by using Stirling's approximation [2] (for n > 1)

| (54) |

we obtain (for j > 0)

| (55) |

Then, using the fact that the Bessel functions of the first kind satisfy

| (56) |

we can write

| (57) |

We define

| (58) |

and choose the number of quadrature nodes per radius as

| (59) |

where d is some positive integer (to be determined shortly) and ⌈·⌉ denotes the rounding up operation. It can be easily verified that

| (60) |

whenever . Using (57) and (60) we get that

| (61) |

where we have used the inequality

| (62) |

from [2], with and b = γℓ + d. Finally, we can see that in order to satisfy (52) it is sufficient to choose

| (63) |

and thus

| (64) |

Appendix C. Behavior and decay properties of

We start by reviewing some known results on the behavior of the eigenvalues of the PSWFs in the one-dimensional setting, where they have been thoroughly investigated (see [24] and references therein). The most well-known characterization of these eigenvalues is that they can be divided into three distinct regions of behavior (as a function of their index n): a flat region, where the (normalized) eigenvalues are essentially 1, a transitional region, where they decay from values close to 1 to values close to 0, and a superexponential decay region, where they are very close to 0 and decay as ∼ e^{−n log n}. In addition, it is known that if we choose all eigenvalues that are greater than some small ε, then there are about 2c/π eigenvalues from the flat region, eigenvalues from the transitional region, and o(log c) eigenvalues from the decay region (see [18] for a precise formulation). Thus, the number of eigenvalues greater than ε is dominated by the number of eigenvalues in the flat region, which is 2c/π. As for the eigenvalues of the two-dimensional PSWFs, results in [30] indicate that, as in the one-dimensional setting, the eigenvalues can be similarly divided into three distinct regions: flat, transitional, and superexponential decay regions. Correspondingly, the number of significant eigenvalues is dominated by the number of eigenvalues in the flat region. Since (see (8)), it is clear that in order to satisfy condition (19) we need to determine the number of terms in the flat region of . To this end, we follow [15], which provides similar results for general non-Hermitian Toeplitz integral operators (see also [18] and [36]), and consider the sum

| (65) |

which is approximately equal to the number of values of which are close to 1 (denoted as the flat region). For simplicity of the presentation, we evaluate this sum for a bandlimit of c, and eventually, replace c with 2c. From [32], the radial functions in (4) are real-valued, and are obtained as the solutions to the integral equation

| (66) |

where JN(x) is the Bessel function of the first kind of order N. The eigenvalues and of (3) and (66) are related by

| (67) |

By substituting φ(r) = R(r)√r and γ = β√c into (66), we obtain the integral equation

| (68) |

whose eigenvalues and eigenfunctions are and , respectively. Equation (68) was analyzed in [32], where it is established that the eigenfunctions constitute a complete orthonormal system in ℒ2 [0, 1]. Therefore, it follows that we have the identity

| (69) |

for r and ρ both in [0, 1]. If we notice that , and take N = 0, we have

| (70) |

Next, we take the squared absolute value of both sides of the equation above, followed by double integration (in r and ρ) to obtain

| (71) |

By evaluating the left-hand side of (71) using known integral identities of the Bessel functions [2], and after some manipulation, one can verify that

| (72) |

Now, using the asymptotic approximation (see [2])

| $J_m(x) \approx \sqrt{\frac{2}{\pi x}} \cos\left(x - \frac{m\pi}{2} - \frac{\pi}{4}\right)$ | (73) |

valid for x ≫ |m² − 1/4|, we can write

| (74) |

Therefore, the expression in (74) establishes that for large values of c, the number of terms in the set which are close to 1 is about 2c/π (see also Figure 4).
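The 2c/π count can be checked numerically in the one-dimensional setting reviewed at the beginning of this appendix. The sketch below is a one-dimensional analogue only, not the two-dimensional computation carried out above: it discretizes the time-and-band-limiting kernel sin(c(x − y))/(π(x − y)) on [−1, 1] with Gauss–Legendre quadrature and counts the eigenvalues above 1/2. The threshold, the number of nodes, and the function name are assumptions made for illustration.

```python
import numpy as np

def flat_region_count(c, n_nodes=200, threshold=0.5):
    """Count eigenvalues above `threshold` for the kernel sin(c(x-y)) / (pi (x-y)) on [-1, 1]."""
    x, w = np.polynomial.legendre.leggauss(n_nodes)   # Gauss-Legendre nodes and weights
    diff = x[:, None] - x[None, :]
    K = (c / np.pi) * np.sinc(c * diff / np.pi)        # np.sinc(t) = sin(pi t) / (pi t)
    sw = np.sqrt(w)
    S = sw[:, None] * K * sw[None, :]                  # symmetrized quadrature discretization
    eigvals = np.linalg.eigvalsh(S)
    return int(np.sum(eigvals > threshold)), eigvals

c = 20.0
count, eigvals = flat_region_count(c)
print(count, 2 * c / np.pi)
```

For c = 20 the printed count should be close to 2c/π ≈ 12.7, and the computed eigenvalues lie between 0 and 1 up to rounding.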

Footnotes

Received by the editors July 20, 2016; accepted for publication (in revised form) December 7, 2016; published electronically April 13, 2017.

References

- 1. Algorithms for single particle reconstruction. http://spr.math.princeton.edu/

- 2. Abramowitz M, Stegun IA. Handbook of Mathematical Functions: With Formulas, Graphs, and Mathematical Tables. Courier Corporation; Chelmsford, MA: 1964.

- 3. Dutt A, Rokhlin V. Fast Fourier transforms for nonequispaced data. SIAM J Sci Comput. 1993;14:1368–1393.

- 4. Ferraro M, Caelli TM. Relationship between integral transform invariances and Lie group theory. J Opt Soc Am A. 1988;5:738–742.

- 5. Fessler JA, Sutton BP. Nonuniform fast Fourier transforms using min-max interpolation. IEEE Trans Signal Process. 2003;51:560–574.

- 6. Frank J. Three-Dimensional Electron Microscopy of Macromolecular Assemblies: Visualization of Biological Molecules in Their Native State. Oxford University Press; Oxford: 2006.

- 7. Freeman WT, Adelson EH. The design and use of steerable filters. IEEE Trans Pattern Anal Mach Intell. 1991;13:891–906.

- 8. Greengard L, Lee JY. Accelerating the nonuniform fast Fourier transform. SIAM Rev. 2004;46:443–454.

- 9. Hilai R, Rubinstein J. Recognition of rotated images by invariant Karhunen–Loéve expansion. J Opt Soc Am A. 1994;11:1610–1618.

- 10. Jogan M, Zagar E, Leonardis A. Karhunen–Loeve expansion of a set of rotated templates. IEEE Trans Image Process. 2002;12:817–825. doi: 10.1109/TIP.2003.813141.

- 11. Kanatani K. Springer Series in Information Sciences. Vol. 20. Springer; New York: 2012. Group-Theoretical Methods in Image Understanding.

- 12. Keiner J, Kunis S, Potts D. Using NFFT 3 – a software library for various nonequispaced fast Fourier transforms. ACM Trans Math Softw. 2009;36:19.

- 13. Landa B, Shkolnisky Y. Approximation scheme for essentially bandlimited and space-concentrated functions on a disk. Appl Comput Harmon Anal. 2016 accepted.

- 14. Landau HJ. Necessary density conditions for sampling and interpolation of certain entire functions. Acta Math. 1967;117:37–52.

- 15. Landau HJ. On Szegö's eigenvalue distribution theorem and non-hermitian kernels. Journal d'Analyse Mathématique. 1975;28:335–357.

- 16. Landau HJ, Pollak HO. Prolate spheroidal wave functions, Fourier analysis and uncertainty - II. Bell Syst Tech J. 1961;40:65–84.

- 17. Landau HJ, Pollak HO. Prolate spheroidal wave functions, Fourier analysis and uncertainty - III: The dimension of the space of essentially time-and band-limited signals. Bell Syst Tech J. 1962;41:1295–1336.

- 18. Landau HJ, Widom H. Eigenvalue distribution of time and frequency limiting. J Math Anal Appl. 1980;77:469–481.

- 19. Lawson CL, Patwardhan A, Baker ML, Hryc C, Garcia ES, Hudson BP, Lagerstedt I, Ludtke SJ, Pintilie G, Sala R, Westbrook JD, Berman HM, Kleywegt GJ, Chiu W. EMDataBank unified data resource for 3DEM. Nucleic Acids Res. 2016;44:D396–D403. doi: 10.1093/nar/gkv1126.

- 20. Lenz R. Group-theoretical model of feature extraction. J Opt Soc Am A. 1989;6:827–834.

- 21. Lenz R. Group invariant pattern recognition. Pattern Recognition. 1990;23:199–217.

- 22. Lenz R. Group Theoretical Methods in Image Processing. Springer; New York: 1990.

- 23. Mallick SP, Carragher B, Potter CS, Kriegman DJ. ACE: automated CTF estimation. Ultramicroscopy. 2005;104:8–29. doi: 10.1016/j.ultramic.2005.02.004.

- 24. Osipov A, Rokhlin V, Xiao H. Applied Mathematical Sciences. Vol. 187. Springer; New York: 2013. Prolate Spheroidal Wave Functions of Order Zero.

- 25. Perona P. Deformable kernels for early vision. IEEE Trans Pattern Anal Mach Intell. 1995;17:488–499.

- 26. Petersen DP, Middleton D. Sampling and reconstruction of wave-number-limited functions in n-dimensional Euclidean spaces. Inf Control. 1962;5:279–323.

- 27. Ponce C, Singer A. Computing steerable principal components of a large set of images and their rotations. IEEE Trans Image Process. 2011;20:3051–3062. doi: 10.1109/TIP.2011.2147323.

- 28. Potts D, Steidl G, Tasche M. Modern Sampling Theory. Springer; New York: 2001. Fast Fourier transforms for nonequispaced data: A tutorial; pp. 247–270.

- 29. Rosenfeld D. An optimal and efficient new gridding algorithm using singular value decomposition. Magn Reson Med. 1998;40:14–23. doi: 10.1002/mrm.1910400103.

- 30. Serkh K. Technical Report TR-1519. Department of Mathematics, Yale University; New Haven, CT: 2015. On generalized prolate spheroidal functions.

- 31. Shkolnisky Y. Prolate spheroidal wave functions on a disc - integration and approximation of two-dimensional bandlimited functions. Appl Comput Harmon Anal. 2007;22:235–256.

- 32. Slepian D. Prolate spheroidal wave functions, Fourier analysis and uncertainty - IV: Extensions to many dimensions; generalized prolate spheroidal functions. Bell Syst Tech J. 1964;43:3009–3057.

- 33. Slepian D, Pollak HO. Prolate spheroidal wave functions, Fourier analysis and uncertainty - I. Bell Syst Tech J. 1961;40:43–63.

- 34. Tygert M. Recurrence relations and fast algorithms. Appl Comput Harmon Anal. 2010;28:121–128.

- 35. Vonesch C, Stauber F, Unser M. Steerable PCA for rotation-invariant image recognition. SIAM J Imaging Sci. 2015;8:1857–1873.

- 36. Xiao H, Rokhlin V, Yarvin N. Prolate spheroidal wavefunctions, quadrature and interpolation. Inverse Probl. 2001;17:805.

- 37. Zhao Z, Shkolnisky Y, Singer A. Fast steerable principal component analysis. IEEE Trans Comput Imaging. 2016;2:1–12. doi: 10.1109/TCI.2016.2514700.

- 38. Zhao Z, Singer A. Fourier–Bessel rotational invariant eigenimages. J Opt Soc Am A. 2013;30:871–877. doi: 10.1364/JOSAA.30.000871.