Summary

An emerging view suggests that spatial position is an integral component of working memory (WM), such that non-spatial features are bound to locations regardless of whether space is relevant [1,2]. For instance, past work has shown that stimulus position is spontaneously remembered when non-spatial features are stored. Item recognition is enhanced when memoranda appear at the same location where they were encoded [3–5], and accessing non-spatial information elicits shifts of spatial attention to the original position of the stimulus [6,7]. However, these findings do not establish that a persistent, active representation of stimulus position is maintained in WM because similar effects have also been documented following storage in long-term memory [8,9]. Here, we show that the spatial position of the memorandum is actively coded by persistent neural activity during a non-spatial WM task. We used a spatial encoding model in conjunction with EEG measurements of oscillatory alpha-band (8–12 Hz) activity to track active representations of spatial position. The position of the stimulus varied trial-to-trial but was wholly irrelevant to the tasks. We nevertheless observed active neural representations of the original stimulus position that persisted throughout the retention interval. Further experiments established that these spatial representations are dependent on the volitional storage of non-spatial features rather than being a lingering effect of sensory energy or initial encoding demands. These findings provide strong evidence that online spatial representations are spontaneously maintained in WM – regardless of task relevance – during the storage of non-spatial features.

Keywords: working memory, location, space, alpha, oscillations, inverted encoding model

Results

In Experiment 1, human observers performed a single-item color WM task (Figure 1A). Observers reported the color of a sample stimulus by clicking on a color wheel following a 1200-ms retention interval. Although the spatial position of the memorandum varied trial-to-trial, the position of the stimulus was wholly irrelevant to the task: observers never reported stimulus position, and the position of the test probe did not co-vary with the position of the memorandum. This design provided a strong test of whether observers spontaneously maintained an active representation of the irrelevant spatial position in WM. We assessed memory performance using the angular difference between the reported and studied color on the color wheel. We modeled these response errors as a mixture of a von Mises (circular normal) distribution and a uniform distribution [10]. The model fits established that observers rarely guessed and precisely reported the color of the sample stimulus (Table S1).
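The mixture model can be sketched as follows. This is a minimal illustration, not the authors' fitting code: it assumes errors in degrees on the 360° color wheel, fixes the von Mises mean at zero, and finds maximum-likelihood values of the guess rate `g` (weight on the uniform component) and the concentration `kappa` by brute-force grid search. The function names and grid ranges are hypothetical.

```python
import numpy as np

def wrap_deg(x):
    """Wrap angular differences in degrees into [-180, 180)."""
    return (np.asarray(x, dtype=float) + 180.0) % 360.0 - 180.0

def fit_mixture(errors_deg, g_grid=None, kappa_grid=None):
    """Grid-search ML fit of a von Mises + uniform mixture to response errors.

    Returns (g, kappa): g is the guess rate (uniform weight) and kappa is the
    von Mises concentration (larger values mean more precise reports).
    """
    if g_grid is None:
        g_grid = np.linspace(0.0, 0.99, 100)
    if kappa_grid is None:
        kappa_grid = np.linspace(0.5, 50.0, 200)
    err = np.deg2rad(wrap_deg(errors_deg))
    # von Mises density (mean 0) for every (kappa, trial) pair
    vm = np.exp(kappa_grid[:, None] * np.cos(err)[None, :])
    vm /= 2.0 * np.pi * np.i0(kappa_grid)[:, None]
    best_ll, best = -np.inf, (None, None)
    for g in g_grid:
        # summed log-likelihood over trials, one value per kappa
        ll = np.log((1.0 - g) * vm + g / (2.0 * np.pi)).sum(axis=1)
        k = int(np.argmax(ll))
        if ll[k] > best_ll:
            best_ll, best = ll[k], (float(g), float(kappa_grid[k]))
    return best
```

A grid search is slow but transparent; a gradient-based optimizer over the same log-likelihood would be the usual choice when speed matters.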

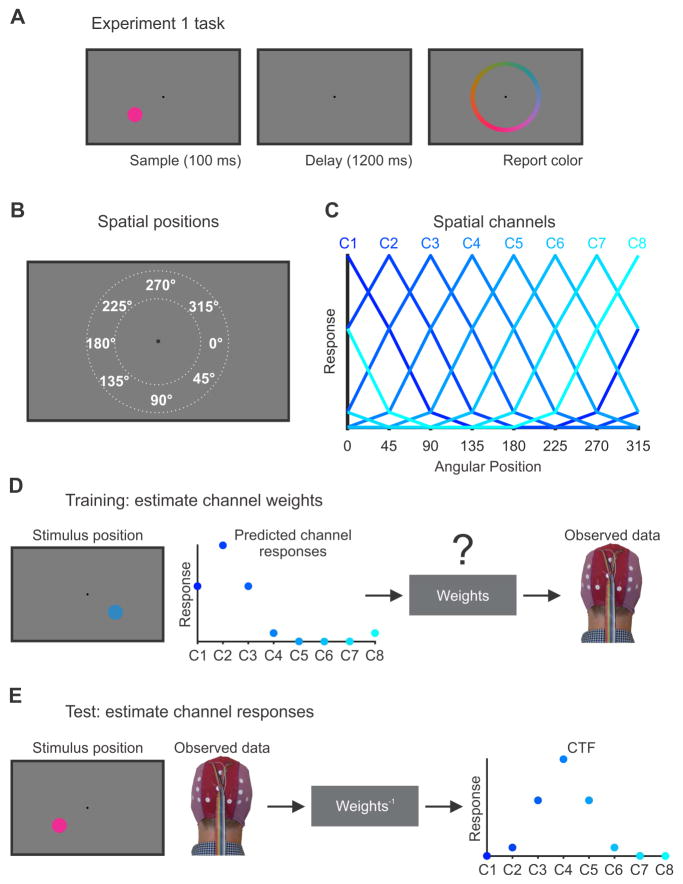

Figure 1. Experiment 1 task and the inverted encoding model (IEM) for reconstructing spatial channel-tuning functions (CTFs).

(A) Observers in Experiment 1 performed a color WM task. Observers saw a brief sample stimulus (100 ms). After a 1200-ms retention interval, observers reported the color of the sample stimulus as precisely as possible by clicking on a color wheel. The position of the sample stimulus varied trial-to-trial but was wholly irrelevant to the task.

(B) The sample stimulus could appear anywhere along an isoeccentric band around fixation (dotted white lines). We categorized stimuli as belonging to one of eight position bins centered at 0°, 45°, 90°, and so forth. Each position bin spanned a 45° wedge of positions (e.g., 22.5° to 67.5° for the bin centered at 45°).

(C) We modeled oscillatory power at each electrode as the weighted sum of eight spatially selective channels (C1–C8), each tuned for the center of one of the eight position bins shown in (B). Each curve shows the predicted response of one of the channels across the eight position bins (i.e., the basis function).

(D) In the training phase, we used the predicted channel responses, determined by the basis functions shown in (C), to estimate a set of channel weights that specified the contribution of each spatial channel to the response measured at each electrode. The example shown here is for a stimulus presented at 45°.

(E) In the test phase, using an independent set of data, we used the channel weights obtained in the training phase to estimate the profile of channel responses given the observed pattern of activity across the scalp. The resulting CTF reflects the spatial selectivity of population-level oscillatory activity, as measured using EEG. The example shown here is for a stimulus presented at 135°. For more details, see STAR Methods.
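The binning scheme in (B) and the channel basis set in (C) can be sketched as follows. The tuning shape used here (a half-sinusoid raised to the seventh power) is an assumption, a common choice in alpha-band IEM work but not stated in this legend; the function names are hypothetical.

```python
import numpy as np

N_CHANNELS = 8  # one channel per 45-degree position bin

def position_bin(angle_deg):
    """Index (0-7) of the 45-degree bin containing an angular position.

    Bin k is centered on k * 45 degrees and spans +/-22.5 degrees around it,
    e.g., bin 1 covers 22.5 to 67.5 degrees.
    """
    shifted = (np.asarray(angle_deg, dtype=float) + 22.5) % 360.0
    return np.floor(shifted / 45.0).astype(int)

def basis_set(power=7, n_points=360):
    """Idealized channel tuning curves, returned as (angles, curves).

    ASSUMPTION: each channel is a half-sinusoid raised to `power`, peaking at
    its preferred angle and falling to zero 180 degrees away.
    curves has shape (N_CHANNELS, n_points).
    """
    angles = np.arange(n_points) * 360.0 / n_points
    centers = np.arange(N_CHANNELS) * 360.0 / N_CHANNELS
    # angular offset of every sampled angle from every channel center (radians)
    d = np.deg2rad(angles[None, :] - centers[:, None])
    return angles, np.abs(np.cos(d / 2.0)) ** power
```

Sampling the curves at the bin centers (`curves[:, ::45]` with the default 360-point grid) gives an 8 × 8 matrix of predicted channel responses of the kind used in the training phase.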

An emerging view suggests that spatial position is an integral component of WM representations [1,2]. Consistent with this view, behavioral studies have shown that observers spontaneously remember stimulus position when it is not task relevant [3–7]. For example, item recognition is faster and more accurate when memoranda appear at the same location where they were encoded [3–5]. However, behavioral signatures of spatial storage do not establish that a persistent, active representation of stimulus position is maintained in WM because they could instead reflect spatial representations that are stored in other memory systems (e.g., latent representations in episodic memory). Indeed, these behavioral signatures of storage of an irrelevant position are also seen following storage in long-term memory [8,9]. Therefore, it has remained unclear whether active representations of spatial position are spontaneously maintained in WM when position is irrelevant to the task. Here, we reasoned that persistent spatially selective neural activity tracking the position of the memorandum could provide unambiguous evidence for online representations of space. Thus, we used ongoing oscillatory activity to test for active spatial representations during the delay period of non-spatial WM tasks.

We focused on oscillatory activity in the alpha-band (8–12 Hz) because past work has established that alpha-band activity encodes spatial positions that are actively maintained in WM [11]. We used an inverted encoding model (IEM) [11–13] to test for active alpha-band representations of the spatial position of the stimulus throughout the delay period (see Figure 1B–E and STAR Methods). Our spatial encoding model assumed that the pattern of alpha-band power across the scalp reflects the activity of a number of spatially tuned channels (or neuronal populations; Figure 1B and 1C). In a training phase (Figure 1D), we used a subset of the EEG data during the color WM task to estimate the relative contribution of these channels to each electrode on the scalp (the channel weights). Then, in a test phase (Figure 1E), using an independent subset of data, we inverted the model to estimate the response of the spatial channels from the pattern of alpha power across the scalp. This procedure produces a profile of responses across the spatial channels (termed channel-tuning functions or CTFs), which reflects the spatial selectivity of the large-scale neuronal populations that are measured by scalp EEG [11,14,15]. We performed this analysis at each time point throughout the trial, which allowed us to test whether active spatial representations were maintained throughout the retention interval.

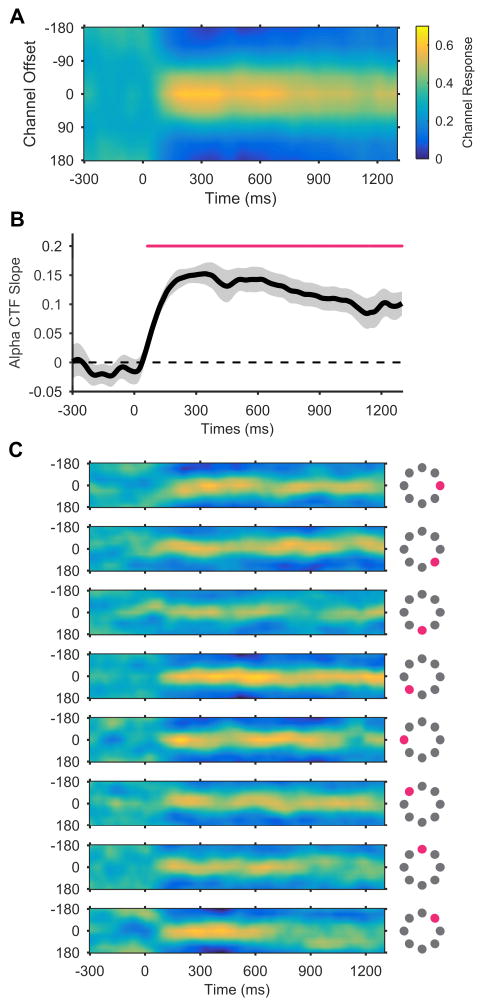

Our analysis revealed that precise representations of stimulus position were maintained in WM. Alpha-band CTFs showed robust spatial selectivity, which persisted throughout the retention interval (Figure 2A). A permutation testing procedure (see STAR Methods) confirmed that this spatial selectivity was reliably above chance throughout the retention interval (Figure 2B). In line with past work [11], we found that this spatially selective delay activity was restricted to the alpha-band (Figure S1). To examine the precision of the spatial representations encoded by alpha-band activity, we inspected the time-resolved channel-response profiles for each of the eight position bins separately (Figure 2C). For each position bin, the peak response was seen in the channel tuned for that position (i.e., a channel offset of 0°), which established that the recovered alpha-band CTFs tracked which of the eight positions the stimulus had appeared in. Thus, stimulus position was precisely represented in alpha-band activity. In summary, Experiment 1 established that observers spontaneously maintained an active representation of stimulus position that persisted throughout the delay period of a color WM task, even though spatial position was completely irrelevant.
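CTF selectivity (Figure 2B) can be illustrated in two steps: circularly shift each trial's channel responses so that the channel tuned to the stimulus bin sits at offset 0°, then collapse channels equidistant from that channel and fit a line. This is a sketch under stated assumptions: the sign convention (a positive slope means a peaked CTF), the per-degree units, and the function names are our choices, and the exact definition is given in STAR Methods.

```python
import numpy as np

def recenter(channel_resp, bins):
    """Circularly shift each trial's channel responses (channels x trials) so the
    channel tuned to that trial's bin lands at index 4, i.e., offset 0.
    Indices 0..7 then correspond to offsets -180, -135, ..., 135 degrees."""
    n_ch = channel_resp.shape[0]
    shifted = np.empty_like(channel_resp)
    for t, b in enumerate(bins):
        shifted[:, t] = np.roll(channel_resp[:, t], n_ch // 2 - b)
    return shifted

def ctf_slope(ctf):
    """Selectivity of a recentered 8-channel CTF as a regression slope.

    Channels equidistant from offset 0 are averaged, then the mean response
    is regressed on distance (degrees). The slope is negative for a peaked
    CTF, so its sign is flipped: larger values mean sharper selectivity.
    """
    ctf = np.asarray(ctf, dtype=float)
    dist = np.abs(np.arange(8) - 4)  # steps from the tuned channel: 4,3,2,1,0,1,2,3
    levels = np.arange(5)
    collapsed = np.array([ctf[dist == k].mean() for k in levels])
    return -np.polyfit(levels * 45.0, collapsed, 1)[0]
```

A flat CTF yields a slope near zero, which is why a slope reliably above zero (assessed against a permutation null) indicates spatial selectivity.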

Figure 2. Spatial alpha-band CTFs during the storage of a color stimulus in working memory.

(A) Average alpha-band CTF in Experiment 1. Although the position of the stimulus was irrelevant to the task, we observed a robust spatially selective alpha-band CTF throughout the delay period.

(B) The selectivity of the alpha-band CTF across time (measured as CTF slope, see STAR Methods). The magenta marker shows the period of reliable spatial selectivity. The shaded error bar reflects ±1 bootstrapped SEM across subjects.

(C) Alpha-band CTFs for each position separately. The magenta markers show which of the eight stimulus position bins each subplot corresponds to.

Another possibility is that the decoded spatial activity reflects lingering sensory activity that was evoked by the sample stimulus, rather than the online maintenance of the stimulus color in WM. In experiments 2a and 2b, we tested whether spatial alpha-band CTFs depend on the volitional storage goals of the observer. Observers performed a selective storage task in which they were instructed to store a non-spatial feature of a target that was presented alongside a distractor. In Experiment 2a, observers performed a color version of this task (Figure 3A): observers saw two shapes (a circle and a triangle) and were instructed to remember the color of the target shape, which remained the same throughout the experiment (see STAR Methods). In Experiment 2b, observers performed an orientation version of the selective storage task (Figure 3B): observers saw two oriented gratings (one blue and one green) and were required to remember the orientation of the grating in the target color. Modeling of response errors revealed that observers rarely guessed or misreported the distractor item instead of the target (Table S1).

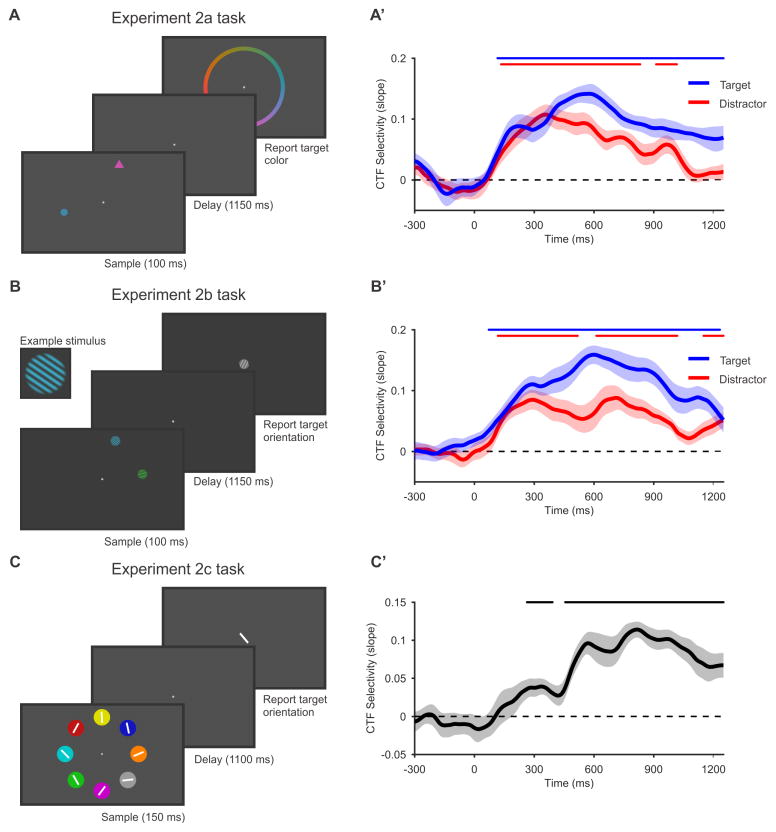

Figure 3. Spatial alpha-band CTFs reveal persistent spatial representations that are related to the storage of non-spatial content in working memory.

(A–C) Tasks in experiments 2a–c. (A) In Experiment 2a, observers performed a selective storage task. The sample display contained two colored shapes (a circle and a triangle). One shape served as a target, and the other as a distractor. We instructed observers to remember and report the color of the target, and to ignore the distractor. The target shape did not change throughout the session, and was counterbalanced across observers. (B) In Experiment 2b, observers performed an orientation version of the selective storage task used in Experiment 2a. In this experiment, observers saw two oriented gratings (one green and one blue), and were instructed to remember and report the orientation of the grating in the target color (counterbalanced across observers). (C) In Experiment 2c, observers saw a balanced array of stimuli and were instructed to remember and report the orientation of the line in the target-colored circle. The target color did not change throughout the experiment, and was varied across observers.

(A′–C′) Spatial selectivity of alpha-band CTFs in experiments 2a–c. In Experiments 2a (A′) and 2b (B′), we examined the spatial selectivity (measured as CTF slope) for the target position (blue) and distractor position (red). In both experiments, spatial selectivity was higher for the target-related CTF than for the distractor-related CTF. This modulation of CTF selectivity by storage goals reveals a component of spatial selectivity that is related to the volitional storage of non-spatial features in working memory. In Experiment 2c (C′), we saw a robust alpha-band CTF that tracked the target position throughout the retention interval. In this experiment, we completely eliminated spatially specific stimulus-driven activity by presenting the target stimulus in a balanced visual display. Thus, the alpha-band CTF in this experiment must be related to the storage of the target orientation in WM. The markers at the top of each plot mark the periods of reliable spatial selectivity. All shaded error bars reflect ±1 SEM.

See also Figures S2 and S4, and Table S1.

In both experiments, we varied the spatial positions of the target and distractor independently, which allowed us to compare target- and distractor-related alpha-band CTFs. By comparing target- and distractor-related CTFs, we were able to isolate spatially selective activity that must be related to the volitional storage of the target in WM. If sustained alpha-band CTFs are an automatic consequence of sensory activity evoked by the onset of a visual stimulus, then we should see identical alpha-band CTFs for the target and distractor items. Instead, we found that CTF selectivity was higher for the target location than for the distractor location (Figures 3A′ and 3B′). Bootstrap resampling tests confirmed that delay-period CTF selectivity (averaged from 100–1250 ms after stimulus onset) was reliably greater for the target location than for the distractor location in both experiments (Experiment 2a: p < 0.001; Experiment 2b: p < 0.001). While it is likely that there is a sensory contribution to the alpha-band CTFs, the amplified CTF selectivity observed for the target shows that these spatial representations cannot be wholly explained by stimulus-driven activity. Instead, they are strongly shaped by the observer’s volitional storage goals.
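The window-averaged comparison can be sketched as a paired bootstrap over subjects. This assumes one selectivity time course per subject and condition, averages it over the analysis window, resamples subjects with replacement, and reports a two-tailed p value from the proportion of resampled mean differences falling on the far side of zero. The authors' exact test is described in STAR Methods; the function names here are hypothetical.

```python
import numpy as np

def window_mean(slopes, times, start_ms, stop_ms):
    """Average selectivity (subjects x timepoints) within [start_ms, stop_ms]."""
    mask = (times >= start_ms) & (times <= stop_ms)
    return slopes[:, mask].mean(axis=1)

def bootstrap_diff_test(target, distractor, n_boot=10000, seed=0):
    """Paired bootstrap over subjects for target minus distractor selectivity.

    target, distractor: one window-averaged value per subject, same order.
    Returns (observed mean difference, two-tailed p).
    """
    rng = np.random.default_rng(seed)
    diff = np.asarray(target, dtype=float) - np.asarray(distractor, dtype=float)
    n = diff.size
    # resample subjects with replacement; each row is one bootstrap sample
    idx = rng.integers(0, n, size=(n_boot, n))
    boot_means = diff[idx].mean(axis=1)
    if diff.mean() > 0:
        p_one = np.mean(boot_means <= 0)
    else:
        p_one = np.mean(boot_means >= 0)
    return diff.mean(), min(1.0, 2.0 * p_one)
```

For the Experiment 2a and 2b analysis described above, the window would be `window_mean(slopes, times, 100, 1250)` applied separately to the target- and distractor-related slopes.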

Although we saw clear modulation of CTFs by the relevance of the stimulus, we also saw a spatial CTF for the distractor location that lasted for most of the retention interval (Figures 3A′ and 3B′). In a further analysis, we found that the representation of the distractor position resembled that of the target position (Figure S2). We think it is unlikely that the sustained distractor-related CTF reflects lingering sensory activity alone. While observers have top-down control over the stimuli that are stored in WM, this control is imperfect, resulting in the unnecessary storage of irrelevant items [16,17]. Therefore, the spatially specific distractor-related activity may in part reflect the unnecessary storage of the distractor in WM.

In Experiment 2c, we used a different approach to eliminate stimulus-driven activity as a source of spontaneous spatial representations during the delay period. We used a balanced visual display in which eight items were equally spaced around the fixation point (Figure 3C). We instructed observers to remember the orientation of the line in the target item (defined by color, with the relevant color varied across observers), and to reproduce the target orientation following the retention interval. Because the sample displays were visually balanced, stimulus-driven activity was not spatially selective for the target position. Therefore, any spatially selective activity must be related to storage of the target item in WM. We observed a robust, target-related alpha-band CTF (Figure 3C′), which emerged between 200 and 300 ms after onset of the stimulus array and was sustained throughout the retention interval. This observation provides a clean look at the spatial alpha-band representation when spatially selective stimulus-driven activity is eliminated. Taken together, experiments 2a–c provide clear evidence for a persistent active representation of the spatial position of memoranda stored in WM, even when stimulus-driven activity is controlled for.

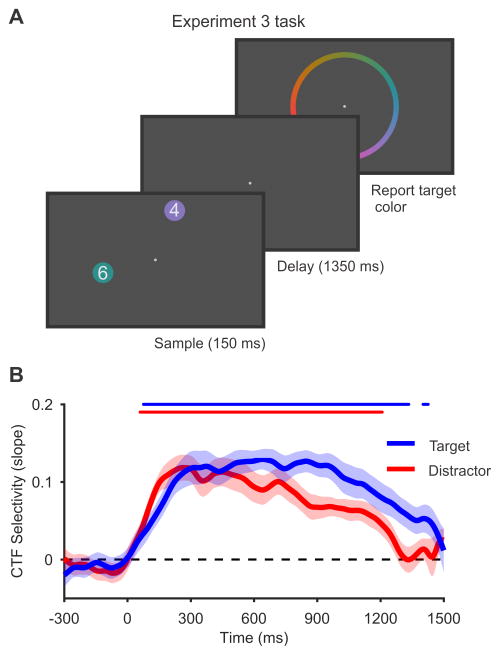

Finally, we considered whether biased attention at the time of encoding could explain the stronger spatial selectivity observed for the target relative to the distractor items in experiments 2a–c. Given that the target-defining feature was constant during experiments 2a and 2b, it is possible that observers could have encoded the relevant feature of the target without fully directing attention towards the distractor; this may have yielded a lingering advantage for the target during the subsequent delay period. Experiment 3 tested this possibility with a task that required full attention towards both the target and distractor items. Observers saw two colored circles, each containing a digit, and the target was the circle that contained the larger digit (Figure 4A). Again, we instructed observers to remember the color of the target, and to disregard the distractor. Critically, this task forced subjects to attend both items to identify the target [18]. Thus, any observed difference in alpha-band CTFs for the target and distractor cannot be attributed to biased attention toward the target during encoding. Indeed, we did not see any difference in CTF selectivity in the 500-ms period following onset of the sample display (0–500 ms, p = 0.42, bootstrap resampling test; Figure 4B), a window that far exceeds the time required to encode simple visual objects [19]. In contrast, across the entire delay period (150–1500 ms), alpha-band CTF selectivity was higher for the target than for the distractor (p < 0.01, bootstrap resampling test). This finding shows that the need to store an item in WM modulates spatial alpha-band representations, even when attention to the target and distractor items during encoding is equated (also see Figure S3). Together, our results provide clear evidence that alpha-band activity tracks active spatial representations that persist during the storage of non-spatial features in WM.

Figure 4. Persistent alpha-band CTFs do not reflect a lingering consequence of biased attention during encoding.

(A) In Experiment 3, observers performed a variant of the selective storage task used in experiments 2a and 2b. Observers saw two colored circles, each containing a digit (between 0 and 7). The target was the circle that contained the larger digit. Observers were instructed to remember and report the color of the target, and to disregard the distractor. Critically, this task forced observers to attend and encode both items in order to determine which one was the target.

(B) Spatial selectivity (measured as CTF slope) for the target position (blue) and distractor position (red). Spatial selectivity was higher for the target-related CTF than for the distractor-related CTF. This modulation of CTF selectivity by storage goals cannot be explained by differential attention to the target during encoding because our task forced subjects to attend and encode both items. Therefore, this modulation of CTF selectivity must reflect the continued maintenance of the target item in WM. The markers at the top of the plot mark the periods of reliable spatial selectivity. All shaded error bars reflect ±1 SEM.

See also Figures S3 and S4, and Table S1.

Discussion

Past work has established that human observers can voluntarily control which visual features are stored in WM [20–22]. For example, Serences and colleagues [20] instructed observers to remember the color or orientation of a grating during a retention interval. Voxel-wise patterns of activity in visual cortex were measured with functional magnetic resonance imaging (fMRI) and revealed active coding of the relevant dimension but not the irrelevant dimension. Likewise, Woodman and Vogel [21] showed that contralateral delay activity, an electrophysiological marker of WM maintenance, was higher in amplitude for orientation stimuli than for color stimuli. Critically, this “orientation bump” was only seen when observers voluntarily stored the orientation dimension of conjunction stimuli. These studies demonstrated that observers can control which aspects of the stimuli are held in visual WM, such that specific stimulus dimensions are excluded when they are behaviorally irrelevant. Here, we showed that stimulus position is a striking exception to this rule. We observed active representations of stimulus position that were spontaneously maintained throughout the delay period, even though location was never relevant to the task.

This finding lends support to models that posit a central role for space in the storage of non-spatial information in WM [1,2]. It has been a longstanding hypothesis that space is an integral component of an observer’s representation of non-spatial information [23–25]. However, evidence for spontaneous representation of stimulus position in WM has been elusive. In non-human primates, there is evidence that spatial position is spontaneously represented when irrelevant [26,27]. However, these studies found that non-spatial dimensions (e.g., shape) were also spontaneously represented when irrelevant [26], raising the possibility that these findings might reflect a general failure of non-human primates to exclude any behaviorally irrelevant feature – spatial or non-spatial alike – from WM. In contrast, it has been well-established that human observers exclude irrelevant non-spatial features from storage in visual WM [20–22]. Therefore, our finding that human observers spontaneously maintain spatial position in WM provides clear evidence that spatial position holds a special status in WM.

Our IEM approach also sheds light on the nature of spontaneous spatial representations in WM. By presenting stimuli at many spatial positions in conjunction with an IEM, we established that alpha-band activity precisely tracked the position of the stimulus (Figure 2C). This result rules out the possibility that spatially selective activity reflects an imprecise spatial signal that does not track the specific location of the stimulus (e.g., a signal that tracks hemifield). Furthermore, because scalp EEG activity reflects the synchronous activity of large neuronal populations [14,15], our findings show that spatial position was robustly represented in a large-scale population code rather than an isolated group of spatially selective neuronal units.

Do these spontaneous spatial representations play a functional role in the online maintenance of non-spatial features? Past work has shown that observers recognize an item faster and more accurately when it appears at the location where it was encoded than when it appears elsewhere [3–5], thereby suggesting that access to a non-spatial feature is intertwined with spatial memory. Furthermore, Williams and colleagues [28] showed that color WM performance was impaired when observers were prevented from fixating or covertly attending the positions where the memoranda were presented. Thus, spatial attention toward the original locations of the items improved performance in a color WM task. That said, recognition memory in Williams and colleagues’ study required knowledge of stimulus position. Thus, more work is needed to determine whether sustained spatial focus on an item’s initial position will enhance the maintenance of non-spatial features alone.

A substantial body of work has linked spatially specific alpha-band activity with covert spatial attention [29–32], which raises the possibility that the spatial representations that we observed reflect sustained attention to the original location of the stimulus. If this activity does reflect covert spatial attention, does it qualify as a working memory for spatial position? Persistent stimulus-specific neural activity is an unambiguous signature of active maintenance in WM [33], and the sustained spatially selective activity that we observed satisfies this criterion. Furthermore, a broad array of evidence has shown that there is considerable overlap between attention and WM [34–36], such that spatial attention supports maintenance of both locations and non-spatial features in WM [28,37,38]. Thus, while it is difficult to draw a precise line between spatial attention and spatial WM, our findings provide clear evidence that storing non-spatial features in WM elicits the spontaneous and sustained maintenance of an online spatial representation. Indeed, if the observed spatial representations are best interpreted as sustained covert attention, it is striking that this spatial focus was maintained throughout the delay period even though space was completely irrelevant to the task. This empirical pattern contrasts with findings from explicit studies of spatial attention, where behavioral relevance determines whether attention is sustained at a given location [39,40]. Therefore, this perspective on our findings also highlights the special status of spatial position in visual WM.

In summary, we found that human observers spontaneously maintained active neural representations of stimulus position that persisted during the storage of non-spatial features in WM. These spatial representations were precise and robustly coded by population-level alpha-band activity. Although human observers can exclude non-spatial features (e.g., orientation or color) from WM when they are irrelevant [20–22], our results show robust and sustained spatial representations despite hundreds of trials in which spatial position was behaviorally irrelevant.

STAR Methods

CONTACT FOR REAGENT AND RESOURCE SHARING

Further information and requests for resources should be directed to and will be fulfilled by the Lead Contact, Joshua Foster (joshuafoster@uchicago.edu).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Subjects were human adults between 18 and 35 years old, who reported normal or corrected-to-normal vision. Twelve subjects (7 female, 5 male) participated in Experiment 1, 21 subjects (5 female, 16 male) participated in Experiment 2a, 20 subjects (14 female, 6 male) participated in Experiment 2b, 18 subjects (8 female, 10 male) participated in Experiment 2c, and 19 subjects (8 female, 11 male) participated in Experiment 3. Subjects in Experiment 1 provided informed consent according to procedures approved by the University of Oregon Review board. Subjects in all other experiments provided informed consent according to procedures approved by the University of Chicago Institutional Review Board.

METHOD DETAILS

Apparatus and stimuli

We tested subjects in a dimly lit, electrically shielded chamber. Stimuli were generated using Matlab (The Mathworks, Natick, MA) and the Psychophysics Toolbox [41,42]. In Experiment 1, stimuli were presented on a 17-in CRT monitor (refresh rate = 60 Hz) at a viewing distance of 100 cm. In experiments 2a and 2b, stimuli were presented on a 24-in LCD monitor (refresh rate = 120 Hz) at a viewing distance of 100 cm. In experiments 2c and 3, stimuli were presented on a 24-in LCD monitor (refresh rate = 120 Hz) at a viewing distance of 77 cm.
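Stimulus sizes are specified in degrees of visual angle, but the viewing distance differed across setups (100 cm vs. 77 cm), so the physical size needed for a given angle differs too: size = 2 · d · tan(θ/2). A minimal sketch; `px_per_cm` is a hypothetical monitor parameter, since screen resolutions are not given here.

```python
import math

def deg_to_cm(size_deg, distance_cm):
    """On-screen extent (cm) that subtends size_deg of visual angle at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(size_deg) / 2.0)

def deg_to_px(size_deg, distance_cm, px_per_cm):
    """Convert visual angle to pixels; px_per_cm is a property of the monitor."""
    return deg_to_cm(size_deg, distance_cm) * px_per_cm
```

For example, 1° spans about 1.75 cm at 100 cm but about 1.34 cm at 77 cm, so on the same monitor a stimulus of fixed angular size needs fewer pixels at the shorter distance.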

Task procedures

Experiment 1

Subjects performed a delayed color-estimation task (Figure 1A). On each trial, subjects saw a sample stimulus and reported its color as precisely as possible following a retention interval. Subjects initiated each trial with a spacebar press. A fixation point (0.24° of visual angle) appeared for 800–1500 ms before a sample stimulus appeared for 100 ms. The sample stimulus was a circle (1.6° in diameter), centered 3.8° of visual angle from the fixation point. The angular position of the stimulus varied trial-to-trial, and was sampled from one of eight position bins around fixation (each bin spanned a 45° wedge of angular positions, bins were centered at 0°, 45°, 90°, and so forth). Critically, the position of the stimulus was irrelevant to the task, and subjects were told that this was the case at the start of the session. The color of the sample stimulus was chosen from a continuous color wheel that included 360 different colors. Following a 1200-ms retention interval during which only the fixation point remained on screen, a color wheel (8.0° in diameter, 0.5° thick) appeared around fixation, and subjects reported the color of the sample stimulus by clicking on the color wheel with a mouse. The orientation of the color wheel was randomized on each trial to ensure that subjects could not plan their response until the color wheel appeared. Before starting the task, subjects completed a brief set of practice trials to ensure that they understood the instructions. Subjects then completed 15 blocks of 64 trials (960 trials in total), or as many blocks as time permitted (all subjects completed at least 13 blocks; one subject completed 2 extra blocks and thus completed 17 blocks in total).
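One block of Experiment 1 trial parameters might be generated as below. This is a hypothetical sketch: it assumes each of the eight position bins appears equally often within a 64-trial block (8 trials per bin), which the text does not state, and draws the exact angle uniformly within the bin's 45° wedge. The 3.8° eccentricity, the 360-color wheel, and the 800–1500 ms fixation range come from the procedure above.

```python
import numpy as np

def make_block_exp1(rng, n_trials=64, n_bins=8, ecc_deg=3.8):
    """Generate one hypothetical block of Experiment 1 trial parameters."""
    # ASSUMPTION: bins balanced within the block, then shuffled
    bins = rng.permutation(np.repeat(np.arange(n_bins), n_trials // n_bins))
    # jitter uniformly within each 45-degree wedge centered on the bin
    angle = (bins * 45.0 + rng.uniform(-22.5, 22.5, n_trials)) % 360.0
    return {
        "bin": bins,
        "angle": angle,
        "x_deg": ecc_deg * np.cos(np.deg2rad(angle)),  # position relative to fixation
        "y_deg": ecc_deg * np.sin(np.deg2rad(angle)),
        "color": rng.integers(0, 360, n_trials),           # index on the 360-color wheel
        "wheel_rotation": rng.integers(0, 360, n_trials),  # random wheel orientation
        "fixation_ms": rng.uniform(800, 1500, n_trials),
    }
```

Randomizing the wheel rotation independently of the sample color is what prevents subjects from planning a motor response during the delay.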

Experiments 2a and 2b

Subjects in Experiments 2a and 2b performed a selective storage version of the delayed-estimation task (Figure 3A and 3B). In this procedure, two sample stimuli were presented on each trial (a target and a distractor). Subjects were instructed to remember the color (Experiment 2a) or orientation (Experiment 2b) of the target, and to ignore the distractor. As in Experiment 1, the angular positions of each stimulus around the fixation point were drawn from eight position bins, each spanning a 45° wedge of angular positions. The position bins that the target and distractor stimuli occupied were fully counterbalanced across trials for each subject. Thus, the position of one stimulus was random with respect to the other, allowing us to reconstruct spatial CTFs for the target and distractor positions independently of each other. When the target and distractor stimuli occupied the same position bin, their exact position within the bin was constrained so that they did not overlap.
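With 64 trials per block and 8 × 8 possible (target bin, distractor bin) combinations, one natural way to implement full counterbalancing is to present every combination once per block. The sketch below does this and enforces non-overlap by rejection sampling: two items at eccentricity r with diameter s do not overlap when their angular separation Δ satisfies 2r · sin(Δ/2) ≥ s. Counterbalancing within each block, the rejection scheme, and the function name are assumptions; the check is applied to every pair, which also covers items near the boundary of adjacent bins.

```python
import numpy as np

def make_block_exp2(rng, ecc=4.0, item_size=1.0):
    """One hypothetical 64-trial block: each (target bin, distractor bin) pair once.

    Returns a list of (target_bin, distractor_bin, target_angle, distractor_angle).
    """
    pairs = np.array([(t, d) for t in range(8) for d in range(8)])
    pairs = pairs[rng.permutation(len(pairs))]
    # smallest angular separation (deg) at which two items of this size don't overlap
    min_sep = np.rad2deg(2.0 * np.arcsin(item_size / (2.0 * ecc)))
    trials = []
    for t_bin, d_bin in pairs:
        while True:  # redraw exact positions until the items are far enough apart
            t_ang = t_bin * 45.0 + rng.uniform(-22.5, 22.5)
            d_ang = d_bin * 45.0 + rng.uniform(-22.5, 22.5)
            sep = abs((t_ang - d_ang + 180.0) % 360.0 - 180.0)
            if sep >= min_sep:
                break
        trials.append((int(t_bin), int(d_bin), t_ang % 360.0, d_ang % 360.0))
    return trials
```

Because each target bin is paired with every distractor bin equally often, distractor position carries no information about target position, which is what allows separate target- and distractor-related CTFs.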

In Experiment 2a, subjects performed a color version of the selective storage task (Figure 3A). Subjects initiated each trial with a spacebar press. A fixation point (0.2°) appeared for 500–800 ms. Next, the sample display was presented for 100 ms. The sample display contained two stimuli: a circle (0.7° in diameter) and an equilateral triangle (edge length 0.94°, such that the circle and triangle were equated for area). The target shape was counterbalanced across subjects. Each shape was presented 4° of visual angle from the fixation point. Subjects were instructed to remember the color of the target shape (circle or triangle) as precisely as possible, and to ignore the distractor shape. Following an 1150-ms retention interval, during which only the fixation point remained on the screen, a color wheel (8° in diameter, 0.4° thick) appeared centered around the fixation point, and subjects reported the color of the sample stimulus by clicking on the color wheel with a mouse. As in Experiment 1, the orientation of the color wheel was randomized on each trial to ensure that subjects could not plan their response until the color wheel appeared. Before starting the task, subjects completed a brief set of practice trials to ensure that they understood the instructions. Subjects then completed 15 blocks of 64 trials (960 trials in total), or as many blocks as time permitted (all subjects completed at least 11 blocks; one subject completed 2 extra blocks and thus completed 17 blocks in total).

In Experiment 2b, subjects performed an orientation version of the selective storage task (Figure 3B). The timing of the task was identical to Experiment 2a. Here, the sample display contained two oriented gratings (diameter, 1°; spatial frequency, 7 cycles/°), one green and one blue (equated for luminance), each presented 4° of visual angle from the fixation point. The spatial phase of each grating was randomized on each trial. Subjects were instructed to remember the orientation of the grating in the target color (blue or green) as precisely as possible, and to ignore the grating in the distractor color. The target color was counterbalanced across subjects. Following the retention interval, a white grating appeared at fixation, and subjects adjusted the orientation of this probe grating to match the remembered orientation of the target using a response dial (PowerMate USB Multimedia Controller, Griffin Technology, USA). The initial orientation of the probe grating was randomized on each trial to ensure that subjects could not plan their response until the probe appeared. Once subjects had finished adjusting the orientation of the probe grating, they registered their response by pressing the spacebar. Before starting the task, subjects completed a brief set of practice trials to ensure that they understood the instructions. Subjects then completed 15 blocks of 64 trials (960 trials in total).

Experiment 2c

In Experiment 2c, a single target item was presented among seven distractors (Figure 3C). Subjects were instructed to remember the orientation of the line inside the circle in the target color (which was varied across subjects), and to ignore the distractor items. Subjects initiated each trial with a spacebar press. A fixation point (0.2°) appeared for 500–800 ms. Next, the sample display was presented for 150 ms. This display contained eight circles (1.8° in diameter), equally spaced around the fixation point (each item was centered 4° of visual angle from the fixation point). In this experiment, the eight stimuli always occupied fixed locations at 0°, 45°, 90°, and so forth. Each circle contained a white line (1.2° long, 0.2° wide) presented at a randomly selected orientation. Each of the eight circles was presented in a different color (red, pink, yellow, green, light blue, dark blue, orange, and grey). These colors were chosen because they were easily discriminated. The target (i.e., the circle in the target color) appeared in each of the eight positions equally often, and the positions of the remaining seven colors were randomized. Following an 1100-ms retention interval, a white line appeared at fixation, and subjects adjusted its orientation to match the remembered target orientation using a response dial. The initial orientation of the probe was randomized on each trial to ensure that subjects could not plan their response until the probe appeared. Once subjects had finished adjusting the orientation of the probe, they registered their response by pressing the spacebar. Immediately following their response, subjects were shown their response error (i.e., the angular difference between the target orientation and reported orientation) for 500 ms. Before starting the task, subjects completed a practice block of 64 trials to ensure that they understood the instructions. Subjects then completed 16 blocks of 64 trials (1024 trials in total).
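The counterbalancing rule for the Experiment 2c display (target equally often at each of the eight fixed positions, remaining seven colors placed at random) can be sketched as follows. This is a hypothetical helper written for illustration. Balancing target positions within each 64-trial block and drawing line orientations uniformly between 0° and 180° are assumptions: the text states only that each position was used equally often and that orientations were randomly selected.

```python
import random

# Colors and fixed positions (deg) as listed in the text.
COLORS = ["red", "pink", "yellow", "green", "light blue", "dark blue", "orange", "grey"]
POSITIONS_DEG = [0, 45, 90, 135, 180, 225, 270, 315]


def make_block(target_color, n_trials=64, rng=None):
    """Hypothetical trial list for one block of the eight-item display."""
    rng = rng if rng is not None else random.Random()
    if n_trials % len(POSITIONS_DEG):
        raise ValueError("n_trials must be a multiple of 8 to balance target positions")
    # Assumption: target positions are balanced within each block.
    target_positions = POSITIONS_DEG * (n_trials // len(POSITIONS_DEG))
    rng.shuffle(target_positions)
    other_colors = [c for c in COLORS if c != target_color]
    trials = []
    for target_pos in target_positions:
        shuffled = rng.sample(other_colors, k=len(other_colors))  # random placement
        free_positions = [p for p in POSITIONS_DEG if p != target_pos]
        layout = {target_pos: target_color, **dict(zip(free_positions, shuffled))}
        # Assumption: line orientations drawn uniformly between 0 and 180 deg.
        orientations = {p: rng.uniform(0.0, 180.0) for p in POSITIONS_DEG}
        trials.append({"target_pos": target_pos, "layout": layout,
                       "orientations": orientations})
    return trials
```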

Experiment 3

Subjects in Experiment 3 performed a variant of the two-item selective storage task used in experiments 2a and 2b (Figure 4A). In this experiment, both stimuli (the target and distractor) were colored circles (1.8° in diameter) containing a digit (1.6° tall and 0.8° wide) between 0 and 7. Subjects were instructed to remember and report the color of the circle that contained the larger digit (i.e., the target). On each trial, the target digit was randomly sampled from the digits 1–7, and the distractor digit was randomly sampled from the subset of digits that were less than the target digit. Critically, this task ensured that subjects had to attend to and encode both stimuli well enough to identify the digit in each item, in order to determine which item was the target [18]. The sample display was presented for 150 ms, and was followed by a 1350-ms retention interval. The trial timing was otherwise identical to that in experiments 2a and 2b. In this experiment, subjects were shown their response error for 500 ms immediately following their response. Before starting the task, subjects completed a practice block of 64 trials to ensure that they understood the instructions. Subjects then completed 16 blocks of 64 trials (1024 trials in total).
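The digit rule guarantees that the target always carries the strictly larger digit. A minimal sketch, assuming uniform sampling at both steps (the text says only "randomly sampled"); the function name is hypothetical:

```python
import random


def sample_digits(rng):
    """Return (target_digit, distractor_digit) under the rule described above."""
    target = rng.randint(1, 7)              # uniform over 1..7 (inclusive)
    distractor = rng.randrange(0, target)   # uniform over 0..target-1
    return target, distractor


rng = random.Random(2024)
pairs = [sample_digits(rng) for _ in range(5)]
```

Under this assumption the distractor is drawn conditionally on the target, so small distractor digits are more common than large ones (a distractor of 6 can occur only when the target is 7).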

EEG recording

Experiment 1

We recorded EEG using 20 tin electrodes mounted in an elastic cap (Electro-Cap International, Eaton, OH). We recorded from International 10/20 sites: F3, Fz, F4, T3, C3, Cz, C4, T4, P3, Pz, P4, T5, T6, O1, and O2, along with five nonstandard sites: OL midway between T5 and O1, OR midway between T6 and O2, PO3 midway between P3 and OL, PO4 midway between P4 and OR, and POz midway between PO3 and PO4. All sites were recorded with a left-mastoid reference, and were re-referenced offline to the algebraic average of the left and right mastoids. Eye movements and blinks were monitored using electrooculogram (EOG). To detect horizontal eye movements, horizontal EOG was recorded from a bipolar pair of electrodes placed ~1 cm from the external canthus of each eye. To detect blinks and vertical eye movements, vertical EOG was recorded from an electrode placed below the right eye and referenced to the left mastoid. The EEG and EOG were amplified with an SA Instrumentation amplifier with a bandpass of 0.01 to 80 Hz and were digitized at 250 Hz using LabVIEW 6.1 running on a PC. Impedances were kept below 5 kΩ.
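Re-referencing to the average of the two mastoids only requires the mastoid that was not the online reference. If a scalp channel is recorded as x − LM (left-mastoid reference) and the right mastoid as RM − LM, then x − (LM + RM)/2 = (x − LM) − (RM − LM)/2, i.e., subtract half of the recorded right-mastoid channel; the same algebra applies with the roles swapped for a right-mastoid reference. The NumPy sketch below assumes the non-reference mastoid was recorded as its own channel; the function name is hypothetical.

```python
import numpy as np


def rereference_to_mastoid_average(eeg, other_mastoid):
    """Convert single-mastoid-referenced EEG to the average-mastoid reference.

    eeg           : channels x samples, recorded against one mastoid (e.g. left)
    other_mastoid : samples, the opposite mastoid recorded against the same reference
    Returns eeg - other_mastoid / 2, i.e. x - (LM + RM) / 2 in true potentials.
    """
    eeg = np.asarray(eeg, dtype=float)
    other_mastoid = np.asarray(other_mastoid, dtype=float)
    return eeg - other_mastoid / 2.0
```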

Experiments 2a-c and 3

We recorded EEG activity using 30 active Ag/AgCl electrodes mounted in an elastic cap (Brain Products actiCHamp, Munich, Germany). We recorded from International 10–20 sites: FP1, FP2, F7, F3, Fz, F4, F8, FC5, FC1, FC2, FC6, C3, Cz, C4, CP5, CP1, CP2, CP6, P7, P3, Pz, P4, P8, PO7, PO3, PO4, PO8, O1, Oz, and O2. Two additional electrodes were placed on the left and right mastoids, and a ground electrode was placed at position FPz. All sites were recorded with a right-mastoid reference, and were re-referenced offline to the algebraic average of the left and right mastoids. Eye movements and blinks were monitored using EOG, recorded with passive electrodes. Horizontal EOG was recorded from a bipolar pair of electrodes placed ~1 cm from the external canthus of each eye. Vertical EOG was recorded from a bipolar pair of electrodes placed above and below the right eye. Data were filtered online (low cut-off = .01 Hz, high cut-off = 80 Hz, slope from low- to high-cutoff = 12 dB/octave), and were digitized at 500 Hz using BrainVision Recorder (Brain Products, Munich, Germany) running on a PC. For three subjects in Experiment 2b, data were digitized at 1000 Hz because of an experimenter error; the data for these subjects were down-sampled to 500 Hz offline. Impedances were kept below 10 kΩ. For one subject in Experiment 2c, data from one electrode (F8) were discarded because of excessive noise.

Eye tracking

In experiments 2a-c and 3, we monitored gaze position using a desk-mounted infrared eye tracking system (EyeLink 1000 Plus, SR Research, Ontario, Canada). According to the manufacturer, this system provides spatial resolution of 0.01° of visual angle, and average accuracy of 0.25–0.50° of visual angle. In Experiments 2a and 2b, gaze position was sampled at 500 Hz, and data were obtained in remote mode (without a chin rest). In Experiments 2c and 3, gaze position was sampled at 1000 Hz, and head position was stabilized with a chin rest. We obtained usable eye-tracking data for 11, 11, 13, and 15 subjects in experiments 2a, 2b, 2c, and 3, respectively.

Artifact rejection

We visually inspected EEG for recording artifacts (amplifier saturation, excessive muscle noise, and skin potentials), and EOG for ocular artifacts (blinks and eye movements). For subjects with usable eye tracking data, we also inspected the gaze data for ocular artifacts. We discarded trials contaminated by artifacts. Subjects were excluded from the final samples if fewer than 600 trials remained after discarding trials contaminated by recording or ocular artifacts. For the analyses of gaze position, we further excluded any trials in which the eye tracker was unable to detect the pupil, operationalized as any trial in which gaze position was more than 15° of visual angle from the fixation point.
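The two automatic criteria in this section (pupil loss and the 600-trial minimum) can be sketched in NumPy. This is an illustrative version with hypothetical function names; treating missing (NaN) gaze samples as pupil loss is an added assumption, since the text operationalizes pupil loss only as gaze more than 15° from fixation.

```python
import numpy as np


def pupil_loss_trials(gaze_x, gaze_y, max_dev_deg=15.0):
    """Flag trials in which gaze is ever more than max_dev_deg from fixation.

    gaze_x, gaze_y : trials x samples, in degrees relative to the fixation point.
    NaN samples fail the <= test and are therefore also flagged (an assumption).
    """
    distance = np.hypot(np.asarray(gaze_x, float), np.asarray(gaze_y, float))
    return np.any(~(distance <= max_dev_deg), axis=1)


def keep_subject(n_artifact_free_trials, min_trials=600):
    """A subject is retained only if at least min_trials clean trials remain."""
    return n_artifact_free_trials >= min_trials
```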

Subject exclusions

Experiment 1

Two subjects were excluded because of excessive artifacts (see Artifact Rejection). The final sample included 10 subjects with an average of 785 (SD = 92) artifact-free trials.

Experiment 2a

Four subjects were excluded because of excessive artifacts, and one subject was excluded due to poor task performance (prevalence of guesses and swaps ~14%, estimated by fitting a mixture model to response errors). The final sample included 16 subjects with an average of 762 (SD = 99) artifact-free trials.

Experiment 2b

One subject was excluded because of excessive artifacts. Data collection was terminated early for two subjects because of excessive artifacts. Finally, one subject was excluded because of poor task performance (prevalence of guesses and swaps ~23%). The final sample included 16 subjects with an average of 775 (SD = 92) artifact-free trials.

Experiment 2c

One subject was excluded because of excessive artifacts. Data collection was terminated for two subjects because of excessive eye movements. Data collection was terminated for one subject because of an equipment failure. The final sample included 14 subjects with an average of 823 (SD = 92) artifact-free trials.

Experiment 3

Two subjects were excluded because of excessive artifacts. The final sample included 17 subjects with an average of 835 (SD = 103) artifact-free trials.

QUANTIFICATION AND STATISTICAL ANALYSIS

Modeling response error distributions

In all experiments, response errors were calculated as the angular difference between the reported and presented color or orientation. Response errors could range between −180° and 180° for the 360° color space, and between −90° and 90° for the 180° orientation space. To quantify performance in experiments 1 and 2c, we fitted a mixture model to the distribution of response errors for each subject using MemToolbox [43]. We modeled the distribution of response errors as the mixture of a von Mises distribution centered on the correct value (i.e., a response error of 0°), corresponding to trials in which the sample color or orientation was remembered, and a uniform distribution, corresponding to guesses in which the reported value was random with respect to the sample stimulus [10]. We obtained maximum likelihood estimates for two parameters: (1) the dispersion of the von Mises distribution (SD), which reflects response precision; and (2) the height of the uniform distribution (Pg), which reflects the probability of guessing. For experiments 2a, 2b, and 3 (the experiments with one target and one distractor), we fitted a mixture model that also included an additional von Mises component centered on the color or orientation value of the distractor, corresponding to trials in which subjects mistakenly reported the value of the distractor instead of the target (i.e., 'swaps' [44]). We obtained maximum likelihood estimates for the same parameters as in the previous model, plus one additional parameter (Ps), which reflects the probability of swaps. The parameter estimates for each experiment are summarized in Table S1.
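Both mixture models can be written as a likelihood over response errors. The NumPy sketch below is not MemToolbox: it is an illustrative maximum-likelihood fit by coarse grid search (an assumption made for transparency; MemToolbox uses its own fitting routines), it reports the von Mises concentration κ rather than converting it to SD, and it maps 180° orientation errors onto the full circle by doubling them. Passing per-trial distractor offsets adds the swap component.

```python
import numpy as np


def to_circle(err_deg, space_deg=360.0):
    """Map errors from a 360-deg (color) or 180-deg (orientation) space to radians."""
    return np.deg2rad(np.asarray(err_deg, dtype=float) * (360.0 / space_deg))


def von_mises_pdf(x, kappa):
    """Von Mises density centred on 0 (x in radians, kappa = concentration)."""
    return np.exp(kappa * np.cos(x)) / (2.0 * np.pi * np.i0(kappa))


def fit_mixture(err, dist_offset=None, n_kappa=60, n_prob=41):
    """Grid-search MLE of target + guess (+ swap) mixture; returns (kappa, p_guess, p_swap).

    err         : response errors in radians (target value subtracted)
    dist_offset : per-trial distractor minus target value (radians); None = no swap term
    """
    err = np.asarray(err, dtype=float)
    kappas = np.logspace(-1, 2, n_kappa)          # 0.1 .. 100
    probs = np.linspace(0.0, 0.5, n_prob)         # candidate Pg (and Ps) values
    swaps = probs if dist_offset is not None else np.zeros(1)
    pg = probs[:, None, None]                     # guess-rate axis
    ps = swaps[None, :, None]                     # swap-rate axis
    best_nll, best = np.inf, None
    for kappa in kappas:
        vm_target = von_mises_pdf(err, kappa)
        vm_swap = (von_mises_pdf(err - np.asarray(dist_offset, dtype=float), kappa)
                   if dist_offset is not None else np.zeros_like(err))
        like = (1.0 - pg - ps) * vm_target + ps * vm_swap + pg / (2.0 * np.pi)
        nll = -np.log(np.maximum(like, 1e-300)).sum(axis=-1)
        i, j = np.unravel_index(np.argmin(nll), nll.shape)
        if nll[i, j] < best_nll:
            best_nll, best = nll[i, j], (kappa, probs[i], swaps[j])
    return best
```

For orientation data, errors would first be passed through to_circle(errors_deg, 180.0), so the fitted κ refers to the doubled-angle space.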

Time-frequency analysis

Time-frequency analyses were performed using the Signal Processing toolbox and EEGLAB toolbox [45] for MATLAB (The Mathworks, Natick, MA). To isolate frequency-specific activity, we band-pass-filtered the raw EEG signal using a two-way least-squares finite-impulse-response filter (“eegfilt.m” from EEGLAB Toolbox [45]). This filtering method used a zero-phase forward and reverse operation, which ensured that phase values were not distorted, as can occur with forward-only filtering methods. A Hilbert transform (MATLAB Signal Processing Toolbox) was applied to the band-pass-filtered data, producing the complex analytic signal, z(t), of the filtered EEG, f(t):

$$z(t) = f(t) + i\tilde{f}(t)$$

where f̃(t) is the Hilbert transform of f(t), and i = √−1. The complex analytic signal was extracted for each electrode using the following MATLAB syntax:

hilbert(eegfilt(data,F,f1,f2)')'

In this syntax, data is a 2-D matrix of raw EEG (number of trials × number of samples), F is the sampling frequency (250 Hz in Experiment 1, 500 Hz in all other experiments), f1 is the lower bound of the filtered frequency band, and f2 is the upper bound of the filtered frequency band. For alpha-band analyses, we used an 8- to 12-Hz band-pass filter; thus, f1 and f2 were 8 and 12, respectively. For the time-frequency analysis (Figure S1), we searched a broad range of frequencies (4–50 Hz, in increments of 1 Hz with a 1-Hz band pass). For these analyses, f1 and f2 were 4 and 5 to isolate 4- to 5-Hz activity, 5 and 6 to isolate 5- to 6-Hz activity, and so forth. Instantaneous power was computed by squaring the complex magnitude of the complex analytic signal.
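The filter-Hilbert steps can be sketched in NumPy. To keep the example dependency-free, it swaps in an ideal (brick-wall) FFT band-pass for eegfilt's two-way least-squares FIR filter; like the forward-reverse FIR filter it is zero-phase, but its frequency response differs, so values will not match the MATLAB pipeline exactly. Zeroing negative frequencies and doubling positive ones yields the analytic signal of the band-limited signal in one step, and power is the squared magnitude. Function names are hypothetical.

```python
import numpy as np


def band_analytic_signal(data, fs, f1, f2):
    """Analytic signal of the f1-f2 Hz band for each row of data (trials x samples).

    Ideal FFT band-pass (an assumption standing in for eegfilt) combined with the
    FFT construction of the analytic signal. Requires 0 < f1 <= f2 < fs / 2.
    """
    data = np.asarray(data, dtype=float)
    n = data.shape[-1]
    spectrum = np.fft.fft(data, axis=-1)
    freqs = np.fft.fftfreq(n, d=1.0 / fs)
    # Keep positive frequencies inside [f1, f2] and double them; zero everything else.
    gain = np.where((freqs >= f1) & (freqs <= f2), 2.0, 0.0)
    return np.fft.ifft(spectrum * gain, axis=-1)


def instantaneous_power(data, fs, f1=8.0, f2=12.0):
    """Squared magnitude of the band-limited analytic signal (default: alpha band)."""
    return np.abs(band_analytic_signal(data, fs, f1, f2)) ** 2


# Example: 2 s of a 10-Hz (alpha) plus 30-Hz signal sampled at 500 Hz.
fs = 500
t = np.arange(2 * fs) / fs
x = 2.0 * np.cos(2 * np.pi * 10 * t) + 1.5 * np.sin(2 * np.pi * 30 * t)
alpha_power = instantaneous_power(x[np.newaxis, :], fs)  # 4.0 (10-Hz amplitude squared)
```

For the time-frequency search, the same function would be called with (f1, f2) = (4, 5), (5, 6), and so forth.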

Inverted encoding model

In keeping with our previous work on spatial working memory and spatial attention [11,32], we used an IEM to reconstruct spatially selective CTFs from the topographic distribution of oscillatory power across electrodes (Figure 1B–E). We assumed that power measured at each electrode reflected the weighted sum of eight spatial channels (i.e., neuronal populations), each tuned for a different angular position (Figure 1C). We modeled the response profile of each spatial channel across angular positions as a half sinusoid raised to the seventh power:

$$R = \sin^{7}(0.5\theta)$$

where θ is the angular position (ranging from 0° to 359°) and R is the response of the spatial channel in arbitrary units. This response profile was shifted circularly for each channel so that the peak response of each spatial channel was centered over one of the eight positions (i.e., the centers of the eight position bins: 0°, 45°, 90°, etc.; see Figure 1C).
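The basis set can be built directly from the half-sinusoid equation. The NumPy sketch below (1° sampling of θ is an assumption) shifts one profile circularly so that each of the eight channels peaks at a bin center, then reads off the predicted channel responses for a stimulus at each bin center, which is one way the columns of C1 could be filled.

```python
import numpy as np


def make_basis_set(n_channels=8):
    """Basis functions R = sin^7(0.5*theta), circularly shifted to each channel center.

    Returns (centers_deg, basis) where basis[k, theta] is channel k's response at
    angular position theta (1-degree steps, 0..359).
    """
    theta = np.arange(360)                                 # angular position, deg
    base = np.sin(np.deg2rad(0.5 * theta)) ** 7            # half sinusoid, peak at 180 deg
    centers = np.arange(n_channels) * (360 // n_channels)  # 0, 45, 90, ...
    basis = np.stack([np.roll(base, c - 180) for c in centers])
    return centers, basis


centers, basis = make_basis_set()
# Predicted channel responses for a stimulus at each bin center (8 channels x 8 bins).
C_bins = basis[:, centers]
```

Because every channel is a shifted copy of the same profile, C_bins is circulant: a stimulus drives its own channel at 1, the channels ±45° away at about 0.57, and the channel 180° away at 0.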

An IEM routine was applied to each time point in the alpha-band analyses and each time-frequency point in the time-frequency analysis. We partitioned our data into independent sets of training data and test data (for details, see the Training and Test Data section). The routine proceeded in two stages (training and test). In the training stage (Figure 1D), the training data (B1) were used to estimate weights that approximated the relative contributions of the eight spatial channels to the observed response (i.e., oscillatory power) measured at each electrode. We define B1 (m electrodes × n1 measurements) as a matrix of the power at each electrode for each measurement in the training set, C1 (k channels × n1 measurements) as a matrix of the predicted response of each spatial channel (specified by the basis function for that channel; in experiments 2a, 2b, and 3, the predicted channel responses are determined by the target position bin when reconstructing target-related CTFs, and by the distractor position bin when reconstructing distractor-related CTFs) for each measurement, and W (m electrodes × k channels) as a weight matrix that characterizes a linear mapping from channel space to electrode space. The relationships among B1, C1, and W can be described by a general linear model of the following form:

$$B_1 = W C_1$$

The weight matrix was obtained via least squares estimation as follows:

$$\hat{W} = B_1 C_1^{T} \left(C_1 C_1^{T}\right)^{-1}$$

In the test stage (Figure 1E), we inverted the model to transform the test data, B2 (m electrodes × n2 measurements), into estimated channel responses, Ĉ2 (k channels × n2 measurements), using the estimated weight matrix, Ŵ, that we obtained in the training phase:

$$\hat{C}_2 = \left(\hat{W}^{T} \hat{W}\right)^{-1} \hat{W}^{T} B_2$$

Each estimated channel-response function was circularly shifted to a common center, so that the center channel was the channel tuned for the position of the stimulus of interest (i.e., 0° on the channel offset axes of Figure 2A and 2C). We then averaged these shifted channel-response functions to obtain the CTF averaged across the eight stimulus position bins. The IEM routine was performed separately for each time point.
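The training and test equations, together with the re-centering step, amount to two least-squares solves and a circular shift. A minimal NumPy sketch, assuming measurements are the columns of B1, B2, and C1, and that the stimulus bin of each test measurement is known (bin_idx); np.linalg.solve replaces the explicit inverses for numerical stability, which is algebraically equivalent. Function names are hypothetical.

```python
import numpy as np


def iem_train_test(B1, C1, B2):
    """Estimate W from training data, then invert it on test data.

    B1 : m electrodes x n1 training measurements
    C1 : k channels  x n1 predicted channel responses
    B2 : m electrodes x n2 test measurements
    Returns C2_hat : k channels x n2 estimated channel responses.
    """
    # W_hat = B1 C1^T (C1 C1^T)^-1, computed as a solve on the transposed system.
    W_hat = np.linalg.solve(C1 @ C1.T, C1 @ B1.T).T
    # C2_hat = (W_hat^T W_hat)^-1 W_hat^T B2
    return np.linalg.solve(W_hat.T @ W_hat, W_hat.T @ B2)


def center_and_average(C2_hat, bin_idx):
    """Shift each column so its stimulus channel sits at index k // 2 (0-deg offset), then average."""
    k = C2_hat.shape[0]
    shifted = [np.roll(C2_hat[:, j], k // 2 - b) for j, b in enumerate(bin_idx)]
    return np.mean(shifted, axis=0)
```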

Finally, because the exact contributions of the spatial channels to electrode responses (i.e., the channel weights, W) were expected to vary by subject, we applied the IEM routine to each subject separately. This approach allowed us to disregard differences in how spatially selective activity was mapped to scalp-distributed patterns of power across subjects and instead focus on the profile of activity in the common stimulus, or information, space [11,46].

Training and test data

For the IEM procedure, we partitioned artifact-free trials for each subject into independent sets of training data and test data. Specifically, we divided the trials into three sets. Within each set, we averaged power across trials in which the relevant stimulus (see below) appeared in the same position bin. For example, trials in which the sample stimulus was presented at 32° and 60° were averaged together because both positions belonged to the position bin centered at 45° (which spanned 22.5°–67.5°). This yielded one i (electrodes) × 8 (position bins) matrix of power values per set (note that the number of electrodes i differed across experiments). We used a leave-one-out cross-validation routine in which two of these matrices served as the training data (B1, i electrodes × 16 measurements) and the remaining matrix served as the test data (B2, i electrodes × 8 measurements). Because no trial belonged to more than one of the three sets, the training and test data were always independent. We applied the IEM routine using each of the three matrices in turn as the test data, with the remaining two matrices as the training data. The resulting CTFs were averaged across the three test sets.

When we partitioned the trials into three sets, we constrained the assignment of trials to the sets so that the number of trials was equal for all eight position bins within each set. To achieve this, we calculated the minimum number of trials per subject for a given position bin, n, and assigned ⌊n/3⌋ trials for that position bin to each set. For example, if n was 100, we assigned 33 trials for each position bin to each set. Because of this constraint, some excess trials were not assigned to any set.

We used an iterative approach to make use of all available trials. On each iteration, we randomly partitioned the trials into three sets (as just described) and performed the IEM routine on the resulting training and test data. We repeated this partitioning 10 times for the alpha-band analyses and 5 times for the full time-frequency analysis. Because the trials assigned to sets were randomly selected on each iteration, the excess trials left out of every set differed across iterations. We averaged the resulting channel-response profiles across iterations. This iterative approach reduced noise in the resulting CTFs by minimizing the influence of idiosyncrasies specific to any single assignment of trials to sets.
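The set-assignment scheme can be sketched as follows. This illustrative NumPy version (function names are hypothetical) takes the minimum trial count across bins, assigns ⌊n/3⌋ randomly chosen trials per bin to each set, averages power within each set and bin, and yields the three leave-one-set-out train/test pairs. Calling it again with fresh random draws (10 times for the alpha-band analyses) and averaging the resulting channel responses would mirror the iterative scheme.

```python
import numpy as np


def assign_sets(bin_labels, n_sets=3, n_bins=8, rng=None):
    """Return sets[s][b] = trial indices from bin b assigned to set s (equal counts per bin)."""
    rng = np.random.default_rng() if rng is None else rng
    bin_labels = np.asarray(bin_labels)
    n_min = min(int(np.sum(bin_labels == b)) for b in range(n_bins))
    per_set = n_min // n_sets            # e.g. n_min = 100 -> 33 trials per bin per set
    sets = [[None] * n_bins for _ in range(n_sets)]
    for b in range(n_bins):
        idx = rng.permutation(np.flatnonzero(bin_labels == b))
        for s in range(n_sets):
            sets[s][b] = idx[s * per_set:(s + 1) * per_set]   # leftovers are unused
    return sets


def set_matrices(power, sets):
    """power: electrodes x trials -> one (electrodes x bins) matrix of mean power per set."""
    return [np.stack([power[:, trials].mean(axis=1) for trials in s], axis=1) for s in sets]


def leave_one_set_out(mats):
    """Yield (B1, B2): the other sets concatenated for training, the held-out set for testing."""
    for s, B2 in enumerate(mats):
        B1 = np.hstack([m for t, m in enumerate(mats) if t != s])
        yield B1, B2
```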

Note that when reconstructing target-related CTFs, we organized trials by the position bin in which the target stimulus appeared, and when reconstructing distractor-related CTFs, we organized trials by the position bin in which the distractor stimulus appeared. Thus, we trained and tested on the target’s position bin to obtain target-related CTFs, and trained and tested on the distractor’s position bin to obtain distractor-related CTFs. In a supplemental analysis, we trained on the target position and tested on the distractor position (Figure S3). In this analysis, we assigned training sets as in our standard analysis and assigned all remaining trials to the test set.

CTF selectivity

To quantify the spatial selectivity of alpha-band CTFs, we used linear regression to estimate CTF slope. Specifically, we calculated the slope of the channel responses as a function of spatial channels after collapsing across channels that were equidistant from the channel tuned for the position of the stimulus. Higher CTF slope indicates greater spatial selectivity.
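A minimal sketch of the slope measure, assuming the CTF is ordered by channel offset from −180° to 135° (so the 0° channel sits at index 4) and that the collapsed responses are regressed on position ordered from the farthest (180°) to the nearest (0°) channel, so that a more peaked CTF gives a more positive slope. The ordering convention is an assumption; the text specifies only that equidistant channels were collapsed before the regression.

```python
import numpy as np


def ctf_slope(ctf):
    """Slope of a CTF after collapsing channels equidistant from the 0-deg channel.

    ctf : 8 channel responses at offsets -180, -135, ..., 0, ..., 135 deg (index 4 = 0 deg).
    """
    ctf = np.asarray(ctf, dtype=float)
    folded = [ctf[0],                    # 180 deg (no partner)
              (ctf[1] + ctf[7]) / 2.0,   # +/-135 deg
              (ctf[2] + ctf[6]) / 2.0,   # +/-90 deg
              (ctf[3] + ctf[5]) / 2.0,   # +/-45 deg
              ctf[4]]                    # 0 deg
    x = np.arange(5)                     # ordered far -> near
    slope, _intercept = np.polyfit(x, folded, 1)
    return float(slope)
```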

Permutation tests

To determine whether CTF selectivity was reliably above chance, we tested whether CTF slope was greater than zero using a one-sample t test. Because mean CTF slope may not be normally distributed under the null hypothesis, we employed a Monte Carlo randomization procedure to empirically approximate the null distribution of the t statistic. Specifically, we implemented the IEM as described above but randomized the position labels within each set so that the labels were random with respect to the observed responses in each electrode. This randomization procedure was repeated 1000 times to obtain a null distribution of t statistics. To test whether the observed CTF selectivity was reliably above chance, we calculated the probability of obtaining a t statistic from the surrogate null distribution greater than or equal to the observed t statistic (i.e., the probability of a Type 1 Error). This permutation test was therefore one-tailed. CTF selectivity was deemed reliably above chance if the probability of a Type 1 Error was less than .01.
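The decision rule can be sketched in NumPy. The surrogate t statistics would come from re-running the full IEM with position labels shuffled within each set; here that step is represented by a hypothetical callable (run_iem_slopes) in the commented usage, while the label shuffle, the t statistic, and the one-tailed p value are shown explicitly.

```python
import numpy as np


def one_sample_t(x):
    """t statistic for the mean of x against zero."""
    x = np.asarray(x, dtype=float)
    return x.mean() / (x.std(ddof=1) / np.sqrt(x.size))


def shuffle_labels_within_sets(labels, set_ids, rng):
    """Permute position labels separately within each set, breaking the label-response link."""
    labels = np.asarray(labels).copy()
    set_ids = np.asarray(set_ids)
    for s in np.unique(set_ids):
        members = np.flatnonzero(set_ids == s)
        labels[members] = rng.permutation(labels[members])
    return labels


def permutation_p(observed_t, null_t):
    """One-tailed p: share of surrogate t values >= the observed t."""
    return float(np.mean(np.asarray(null_t, dtype=float) >= observed_t))


# Hypothetical usage, where run_iem_slopes(labels) returns one CTF slope per subject:
# null_t = [one_sample_t(run_iem_slopes(shuffle_labels_within_sets(labels, set_ids, rng)))
#           for _ in range(1000)]
# above_chance = permutation_p(one_sample_t(observed_slopes), null_t) < .01
```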

Bootstrap resampling tests

We used a subject-level bootstrap resampling procedure [47] to test for differences in spatial selectivity (measured as CTF slope) between target- and distractor-related CTFs. We drew 10,000 bootstrap samples, each containing N subjects sampled with replacement, where N is the sample size. For each bootstrap sample, we calculated the mean difference in CTF slope (target – distractor), yielding a distribution of 10,000 mean differences. We tested whether this distribution differed significantly from zero in either direction by calculating the proportions of values greater than and less than 0, and doubled the smaller proportion to obtain a two-sided p value.
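Because the same subjects contribute both target and distractor CTFs, the bootstrap can resample per-subject difference scores. A NumPy sketch of the procedure as described (the function name is hypothetical; capping p at 1 is a safeguard added here):

```python
import numpy as np


def bootstrap_slope_difference(target_slopes, distractor_slopes, n_boot=10000, rng=None):
    """Subject-level bootstrap of mean(target - distractor) CTF slope; returns (means, p)."""
    rng = np.random.default_rng() if rng is None else rng
    diff = np.asarray(target_slopes, float) - np.asarray(distractor_slopes, float)
    n = diff.size
    # Each row is one bootstrap sample of n subjects drawn with replacement.
    idx = rng.integers(0, n, size=(n_boot, n))
    boot_means = diff[idx].mean(axis=1)
    # Two-sided p: double the smaller tail proportion.
    p = 2.0 * min(np.mean(boot_means > 0), np.mean(boot_means < 0))
    return boot_means, min(p, 1.0)
```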

Eye movement controls

To check that removal of ocular artifacts was effective, we examined baselined HEOG (baseline period: −300 to 0 ms, relative to onset of the sample display). Variation in the grand-averaged HEOG waveforms as a function of stimulus position was < 3 μV in all experiments. Given that eye movements of about 1° of visual angle produce a deflection in the HEOG of ~16 μV [48], the residual variation in the average HEOG corresponds to variations in eye position of < 0.2° of visual angle.
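The bound on residual eye position follows from scaling the HEOG deflection by the cited conversion factor. As a worked check, assuming the HEOG-to-angle relation is linear over this small range:

```python
# Conversion factor cited in the text: ~16 µV of HEOG per 1° of horizontal eye rotation [48].
HEOG_UV_PER_DEG = 16.0


def heog_to_degrees(heog_uv):
    """Approximate horizontal eye position (deg) implied by an HEOG deflection (µV)."""
    return heog_uv / HEOG_UV_PER_DEG


print(heog_to_degrees(3.0))  # 0.1875, i.e. < 0.2 deg
```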

In experiments 2a-c and 3, we also inspected gaze position (averaged from stimulus onset to the end of the delay period) as a function of the target’s position bin. We drift-corrected gaze position data by subtracting the mean gaze position measured during a pre-stimulus window (−300 to −100 ms, relative to onset of the sample display) to achieve optimal sensitivity to changes in eye position relative to the pre-stimulus period [49]. This analysis revealed remarkably little variation in gaze position (< 0.05° of visual angle) as a function of target position in all four experiments with eye tracking data (Figure S4A–D), showing that we achieved an extremely high standard of fixation compliance once trials with artifacts were discarded.
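The drift correction and the per-bin gaze summary can be sketched for one gaze coordinate at a time. This NumPy sketch uses hypothetical function names; the end of the averaging window is passed in (t_end_ms) because the delay length differed across experiments, and treating the baseline window as inclusive at both ends is an assumption.

```python
import numpy as np


def drift_correct(gaze, times_ms, window=(-300.0, -100.0)):
    """Subtract each trial's mean gaze in the pre-stimulus window.

    gaze     : trials x samples (one coordinate, in degrees)
    times_ms : samples, time relative to sample-display onset
    """
    gaze = np.asarray(gaze, dtype=float)
    times_ms = np.asarray(times_ms, dtype=float)
    in_window = (times_ms >= window[0]) & (times_ms <= window[1])
    return gaze - gaze[:, in_window].mean(axis=1, keepdims=True)


def mean_gaze_by_bin(gaze_corrected, times_ms, bin_labels, t_end_ms, n_bins=8):
    """Average drift-corrected gaze from stimulus onset to t_end_ms, separately per target bin."""
    times_ms = np.asarray(times_ms, dtype=float)
    post = (times_ms >= 0) & (times_ms <= t_end_ms)
    per_trial = gaze_corrected[:, post].mean(axis=1)
    bin_labels = np.asarray(bin_labels)
    return np.array([per_trial[bin_labels == b].mean() for b in range(n_bins)])
```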

Although residual bias in gaze position was very small, it is possible that alpha-band activity tracks small biases in gaze position rather than spatial representations in working memory. We ran a control analysis to test this possibility. We reasoned that if eye movements drive spatially specific alpha-band activity, then we should observe more robust alpha-band CTFs when we reconstruct CTFs on the basis of gaze position than on the basis of stimulus position. We sorted trials for each subject in Experiment 2c into eight “gaze position bins” on the basis of the mean gaze position (drift corrected) during the post-stimulus period (0–1250 ms; see Figure S4E). We then used these gaze position bins to perform the CTF analysis. The number of trials available in each position bin limits the number of trials that can be assigned to the training and test sets, so two subjects who had fewer than 30 trials in at least one gaze position bin were excluded from this analysis. We also re-ran the target-related CTF analysis, this time equating the number of trials included in the training and test sets with the gaze position analysis. We did not see clear evidence for a gaze-position-related CTF (Figure S4F). However, we did observe a clear target-related CTF, confirming that we had enough trials to detect spatially selective alpha-band activity. This analysis shows that alpha-band activity tracks the target position rather than small variations in gaze position.
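Sorting trials into gaze position bins can reuse the same ±22.5° angular rule that places stimuli at 32° and 60° in the 45° bin. A NumPy sketch with hypothetical names, assuming gaze coordinates are in degrees with y pointing upward (with screen coordinates where y points down, the sign of y would need flipping):

```python
import numpy as np


def angular_bins(x, y, n_bins=8):
    """Assign points to n_bins angular bins centred on 0, 45, 90, ... deg (each spans +/-22.5 deg).

    x, y : mean (drift-corrected) gaze coordinates in degrees; y is assumed to point upward.
    """
    angle = np.degrees(np.arctan2(np.asarray(y, float), np.asarray(x, float))) % 360.0
    width = 360.0 / n_bins
    return (np.floor((angle + width / 2.0) / width) % n_bins).astype(int)


def enough_trials(bins, min_per_bin=30, n_bins=8):
    """True if every bin holds at least min_per_bin trials (subjects below 30 were excluded)."""
    counts = np.bincount(bins, minlength=n_bins)
    return bool(np.all(counts >= min_per_bin))
```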

DATA AND SOFTWARE AVAILABILITY

All data and code for data analysis are available on Open Science Framework at https://osf.io/vw4uc/. Questions should be directed to the Lead Contact (joshuafoster@uchicago.edu).

Supplementary Material

Acknowledgments

This work was supported by NIMH grant 2R01MH087214-06A1. We thank Brendan Colson and Ariana Gale for assisting with data collection, David Sutterer for helpful discussions, and Megan deBettencourt for helpful feedback on the manuscript.

Footnotes

Conflicts of Interest: None

Author contributions: J.J.F. and E.A. conceived of and designed the experiments; J.J.F. managed the project; E.M.B. contributed to writing experiment code; E.M.B. and R.J.J. assisted with data collection; J.J.F. analyzed the data; J.J.F. and E.A. wrote the manuscript; all authors edited the manuscript and approved the final version for submission.


References

1. Schneegans S, Bays PM. Neural architecture for binding in visual working memory. J Neurosci. 2017;37:3913–3925. doi: 10.1523/JNEUROSCI.3493-16.2017.

2. Rajsic J, Wilson DE. Asymmetrical access to color and location in visual working memory. Atten Percept Psychophys. 2014;76:1902–1913. doi: 10.3758/s13414-014-0723-2.

3. Dill M, Fahle M. Limited translation invariance of human visual pattern recognition. Percept Psychophys. 1998;60:65–81. doi: 10.3758/bf03211918.

4. Foster DH, Kahn JI. Internal representations and operations in the visual comparison of transformed patterns: Effects of pattern point-inversion, positional symmetry, and separation. Biol Cybern. 1985;51:305–312. doi: 10.1007/BF00336917.

5. Zaksas D, Bisley JW, Pasternak T. Motion information is spatially localized in a visual working-memory task. J Neurophysiol. 2001;86:912–921. doi: 10.1152/jn.2001.86.2.912.

6. Theeuwes J, Kramer AF, Irwin DE. Attention on our mind: The role of spatial attention in visual working memory. Acta Psychol (Amst). 2011;137:248–251. doi: 10.1016/j.actpsy.2010.06.011.

7. Kuo BCC, Rao A, Lepsien J, Nobre AC. Searching for targets within the spatial layout of visual short-term memory. J Neurosci. 2009;29:8032–8038. doi: 10.1523/JNEUROSCI.0952-09.2009.

8. Nazir TA, O’Regan JK. Some results on translation invariance in the human visual system. Spat Vis. 1990;5:81–100. doi: 10.1163/156856890x00011.

9. Martarelli CS, Mast FW. Eye movements during long-term pictorial recall. Psychol Res. 2013;77:303–309. doi: 10.1007/s00426-012-0439-7.

10. Zhang W, Luck SJ. Discrete fixed-resolution representations in visual working memory. Nature. 2008;453:233–235. doi: 10.1038/nature06860.

11. Foster JJ, Sutterer DW, Serences JT, Vogel EK, Awh E. The topography of alpha-band activity tracks the content of spatial working memory. J Neurophysiol. 2016;115:168–177. doi: 10.1152/jn.00860.2015.

12. Sprague TC, Serences JT. Attention modulates spatial priority maps in the human occipital, parietal and frontal cortices. Nat Neurosci. 2013;16:1879–87. doi: 10.1038/nn.3574.

13. Sprague TC, Ester EF, Serences JT. Reconstructions of information in visual spatial working memory degrade with memory load. Curr Biol. 2014;24:1–7. doi: 10.1016/j.cub.2014.07.066.

14. Lopes da Silva F. EEG and MEG: Relevance to neuroscience. Neuron. 2013;80:1112–1128. doi: 10.1016/j.neuron.2013.10.017.

15. Nunez PL, Srinivasan R. Electric fields of the brain: The neurophysics of EEG. New York, NY: Oxford University Press; 2006.

16. Vogel EK, McCollough AW, Machizawa MG. Neural measures reveal individual differences in controlling access to working memory. Nature. 2005;438:500–503. doi: 10.1038/nature04171.

17. McNab F, Klingberg T. Prefrontal cortex and basal ganglia control access to working memory. Nat Neurosci. 2008;11:103–107. doi: 10.1038/nn2024.

18. Pashler H, Badgio PC. Visual attention and stimulus identification. J Exp Psychol Hum Percept Perform. 1985;11:105–121. doi: 10.1037//0096-1523.11.2.105.

19. Vogel EK, Woodman GF, Luck SJ. The time course of consolidation in visual working memory. J Exp Psychol Hum Percept Perform. 2006;32:1436–1451. doi: 10.1037/0096-1523.32.6.1436.

20. Serences JT, Ester EF, Vogel EK, Awh E. Stimulus-specific delay activity in human primary visual cortex. Psychol Sci. 2009;20:207–214. doi: 10.1111/j.1467-9280.2009.02276.x.

21. Woodman GF, Vogel EK. Selective storage and maintenance of an object’s features in visual working memory. Psychon Bull Rev. 2008;15:223–229. doi: 10.3758/pbr.15.1.223.

- 22.Yu Q, Shim WM. Occipital, parietal, and frontal cortices selectively maintain task-relevant features of multi-feature objects in visual working memory. Neuroimage. 2017;157:97–107. doi: 10.1016/j.neuroimage.2017.05.055. [DOI] [PubMed] [Google Scholar]

- 23.Nissen MJ. Accessing features and objects: Is location special? In: Posner MI, Marin OSM, editors. Attention and Performance XI. Hillsdale, NJ: Erlbaum; 1985. pp. 205–219. [Google Scholar]

- 24.Johnston JC, Pashler H. Close binding of identity and location in visual feature perception. J Exp Psychol Hum Percept Perform. 1990;16:843–856. doi: 10.1037//0096-1523.16.4.843. [DOI] [PubMed] [Google Scholar]

- 25.Tsal Y, Lavie N. Location dominance in attending to color and shape. J Exp Psychol Hum Percept Perform. 1993;19:131–139. doi: 10.1037//0096-1523.19.1.131. [DOI] [PubMed] [Google Scholar]

- 26.Sereno AB, Amador SC. Attention and memory-related responses of neurons in the lateral intraparietal area during spatial and shape-delayed match-to-sample tasks. J Neurophysiol. 2006;95:1078–1098. doi: 10.1152/jn.00431.2005. [DOI] [PubMed] [Google Scholar]

- 27.Salazar RF, Dotson NM, Bressler SL, Gray CM. Content-specific fronto-parietal synchronization during visual working memory. Science. 2012;338:1097–1100. doi: 10.1126/science.1224000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Williams M, Pouget P, Boucher L, Woodman GF. Visual–spatial attention aids the maintenance of object representations in visual working memory. Mem Cognit. 2013;41:698–715. doi: 10.3758/s13421-013-0296-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Thut G, Nietzel A, Brandt SA, Pascual-Leone A. Alpha-band electroencephalographic activity over occipital cortex indexes visuospatial attention bias and predicts visual target detection. J Neurosci. 2006;26:9494–9502. doi: 10.1523/JNEUROSCI.0875-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Foxe JJ, Snyder AC. The role of alpha-band brain oscillations as a sensory suppression mechanism during selective attention. Front Psychol. 2011;2:154. doi: 10.3389/fpsyg.2011.00154. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Jensen O, Mazaheri A. Shaping functional architecture by oscillatory alpha activity: Gating by inhibition. Front Hum Neurosci. 2010;4:186. doi: 10.3389/fnhum.2010.00186. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Foster JJ, Sutterer DW, Serences JT, Vogel EK, Awh E. Alpha-band oscillations enable spatially and temporally resolved tracking of covert spatial attention. Psychol Sci. 2017;28:929–941. doi: 10.1177/0956797617699167. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Sreenivasan KK, Curtis CE, D’Esposito M. Revisiting the role of persistent neural activity during working memory. Trends Cogn Sci. 2014;18:82–89. doi: 10.1016/j.tics.2013.12.001.

- 34.Awh E, Jonides J. Overlapping mechanisms of attention and spatial working memory. Trends Cogn Sci. 2001;5:119–126. doi: 10.1016/s1364-6613(00)01593-x.

- 35.Awh E, Vogel EK, Oh SH. Interactions between attention and working memory. Neuroscience. 2006;139:201–208. doi: 10.1016/j.neuroscience.2005.08.023.

- 36.Gazzaley A, Nobre AC. Top-down modulation: Bridging selective attention and working memory. Trends Cogn Sci. 2012;16:129–135. doi: 10.1016/j.tics.2011.11.014.

- 37.Awh E, Jonides J, Reuter-Lorenz PA. Rehearsal in spatial working memory. J Exp Psychol Hum Percept Perform. 1998;24:780–790. doi: 10.1037//0096-1523.24.3.780.

- 38.Awh E, Anllo-Vento L, Hillyard SA. The role of spatial selective attention in working memory for locations: Evidence from event-related potentials. J Cogn Neurosci. 2000;12:840–847. doi: 10.1162/089892900562444.

- 39.Egeth HE, Yantis S. Visual attention: Control, representation, and time course. Annu Rev Psychol. 1997;48:269–297. doi: 10.1146/annurev.psych.48.1.269.

- 40.Carrasco M. Visual attention: The past 25 years. Vision Res. 2011;51:1484–1525. doi: 10.1016/j.visres.2011.04.012.

- 41.Brainard DH. The Psychophysics Toolbox. Spat Vis. 1997;10:433–436.

- 42.Pelli DG. The VideoToolbox software for psychophysics: transforming numbers into movies. Spat Vis. 1997;10:437–442.

- 43.Suchow JW, Brady TF, Fougnie D, Alvarez GA. Modeling visual working memory with the MemToolbox. J Vis. 2013;13:1–8. doi: 10.1167/13.10.9.

- 44.Bays PM, Catalao RFG, Husain M. The precision of visual working memory is set by allocation of a shared resource. J Vis. 2009;9:1–11. doi: 10.1167/9.10.7.

- 45.Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- 46.Sprague TC, Saproo S, Serences JT. Visual attention mitigates information loss in small- and large-scale neural codes. Trends Cogn Sci. 2015;19:215–226. doi: 10.1016/j.tics.2015.02.005.

- 47.Efron B, Tibshirani RJ. An introduction to the bootstrap. New York, NY: Chapman and Hall; 1993.

- 48.Lins OG, Picton TW, Berg P, Scherg M. Ocular artifacts in EEG and event-related potentials. I: Scalp topography. Brain Topogr. 1993;6:51–63. doi: 10.1007/BF01234127.

- 49.Cornelissen FW, Peters EM, Palmer J. The Eyelink Toolbox: eye tracking with MATLAB and the Psychophysics Toolbox. Behav Res Methods Instrum Comput. 2002;34:613–617. doi: 10.3758/bf03195489.
