Abstract

This paper explores robust recovery of a superposition of R distinct complex exponential functions, with or without damping factors, from a few random Gaussian projections. We assume that the signal of interest has dimension 2N − 1 and that R < 2N − 1. This framework covers a large class of signals arising in real applications in biology, automation, imaging science, etc. To reconstruct such a signal, our algorithm seeks a low-rank Hankel matrix of the signal by minimizing its nuclear norm subject to consistency with the sampled data. Our theoretical results show that robust recovery is possible as long as the number of projections exceeds O(R ln² N). No incoherence or separation condition is required in our proof. Our method can be applied to spectral compressed sensing, where the signal of interest is a superposition of R complex sinusoids. Compared to existing results, our result does not need any separation condition on the frequencies, while achieving better or comparable bounds on the number of measurements. Furthermore, our method provides theoretical guidance on how many samples are required in state-of-the-art non-uniform sampling for NMR spectroscopy. The performance of our algorithm is further demonstrated by numerical experiments.

Keywords: Complex sinusoids, Random Gaussian projection, Low-rank Hankel matrix

1. Introduction

Many practical problems involve signals that can be modeled or approximated by a superposition of a few complex exponential functions. In particular, if we choose the exponential functions to be complex sinusoids, this model covers signals in accelerated medical imaging [16], analog-to-digital conversion [25], inverse scattering in seismic imaging [1], etc. Time-domain signals in nuclear magnetic resonance (NMR) spectroscopy, which is widely used to analyze compounds in chemistry and protein structures in biology, are another type of signal that can be modeled or approximated by a superposition of complex exponential functions [19]. How to recover such superpositions of complex exponential functions is of primary importance in these applications.

In this paper, we consider how to recover these complex exponentials from linear measurements of their superposition. More specifically, let x̂ ∈ ℂ^{2N−1} be a vector satisfying

$$\hat{x}_j = \sum_{k=1}^{R} c_k z_k^{j}, \qquad j = 0, 1, \ldots, 2N-2, \qquad (1)$$

where c_k ∈ ℂ are nonzero amplitudes and z_k ∈ ℂ, k = 1, …, R, are distinct unknown complex numbers. In other words, x̂ is a superposition of R exponential functions. We assume R < 2N − 1. When |z_k| = 1, k = 1, …, R, x̂ is a superposition of complex sinusoids. When z_k = e^{−τ_k} e^{2πι f_k}, k = 1, …, R, with damping factors τ_k, x̂ models the signal in NMR spectroscopy.

Since R < 2N − 1 (and typically R ≪ 2N − 1), the number of degrees of freedom needed to determine x̂ is much smaller than the ambient dimension 2N − 1. Therefore, it is possible to recover x̂ from undersampled data [3,5,8,12]. In particular, we consider recovering x̂ from its linear measurements

$$b = A\hat{x}, \qquad (2)$$

where A ∈ ℂ^{M×(2N−1)} with M < 2N − 1.

We will use a Hankel structure to reconstruct the signal of interest x̂. The Hankel structure originates from the matrix pencil method [15] for harmonic retrieval of complex sinusoids. The conventional matrix pencil method assumes that x̂ is fully observed and that the model order R is known; neither is available here. Following the ideas of the matrix pencil method in [15] and enhanced matrix completion (EMaC) in [10], we construct a Hankel matrix from the signal x̂. More specifically, define the Hankel matrix Ĥ ∈ ℂ^{N×N} by

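$$\hat{H} = \begin{bmatrix} \hat{x}_0 & \hat{x}_1 & \cdots & \hat{x}_{N-1} \\ \hat{x}_1 & \hat{x}_2 & \cdots & \hat{x}_{N} \\ \vdots & \vdots & \ddots & \vdots \\ \hat{x}_{N-1} & \hat{x}_{N} & \cdots & \hat{x}_{2N-2} \end{bmatrix}, \qquad (3)$$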

Throughout this paper, indices of all vectors and matrices start from 0, instead of 1 in conventional notations. It can be shown that Ĥ is a matrix with rank R. Instead of reconstructing x̂ directly, we reconstruct the rank-R Hankel matrix Ĥ, subject to the constraint that (2) is satisfied.
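As a quick numerical sanity check of the rank claim, the following minimal NumPy sketch builds x̂ from the model (1) and forms Ĥ with [Ĥ]_{jk} = x̂_{j+k}, using 0-based indices as in the text. The helper names `exponential_sum` and `hankel_from_signal`, the frequencies, damping factors and amplitudes are all illustrative assumptions, not values from this paper. Two of the frequencies are deliberately closer than 1/(2N − 1), which illustrates that the rank of Ĥ itself does not depend on frequency separation.

```python
import numpy as np

def exponential_sum(z, c, length):
    """Samples x_j = sum_k c_k * z_k**j for j = 0, ..., length - 1 (model (1))."""
    j = np.arange(length)[:, None]                 # column of time indices
    return (np.asarray(c)[None, :] * np.asarray(z)[None, :] ** j).sum(axis=1)

def hankel_from_signal(x):
    """N x N Hankel matrix H(x) with [H(x)]_{jk} = x_{j+k}; requires len(x) = 2N - 1."""
    x = np.asarray(x)
    n = (len(x) + 1) // 2
    if len(x) != 2 * n - 1:
        raise ValueError("x must have odd length 2N - 1")
    j, k = np.indices((n, n))
    return x[j + k]

# Illustrative parameters (assumptions): two undamped, closely spaced sinusoids
# plus one damped, NMR-like component.
N = 32
freqs = np.array([0.100, 0.102, 0.370])
damping = np.array([0.0, 0.0, 0.05])
z = np.exp(-damping + 2j * np.pi * freqs)
c = np.array([1.0, -0.5 + 0.2j, 2.0])

x_hat = exponential_sum(z, c, 2 * N - 1)
H_hat = hankel_from_signal(x_hat)
print(np.linalg.matrix_rank(H_hat))  # numerical rank of the 32 x 32 Hankel matrix
```

`np.linalg.matrix_rank` uses an SVD with a default floating-point tolerance, so this checks the rank only up to rounding; it is not a substitute for the algebraic argument in Section 2.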

Low-rank matrix recovery has been widely studied [2,5,6,20]. It is well known that minimizing the nuclear norm tends to produce low-rank solutions. We therefore propose a nuclear norm minimization problem subject to the constraint (2). More specifically, for any given x ∈ ℂ^{2N−1}, let H(x) ∈ ℂ^{N×N} be the Hankel matrix whose first row and last column are formed by x, i.e., [H(x)]_{jk} = x_{j+k}. We propose to solve

$$\min_{x \in \mathbb{C}^{2N-1}} \|H(x)\|_* \quad \text{subject to} \quad Ax = b, \qquad (4)$$

where ||·||_* is the nuclear norm (the sum of all singular values), and A and b are from the linear measurement (2). When the observation contains noise, i.e., b = Ax̂ + η, we solve

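$$\min_{x \in \mathbb{C}^{2N-1}} \|H(x)\|_* \quad \text{subject to} \quad \|Ax - b\|_2 \le \delta, \qquad (5)$$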

where δ = ||η||_2 is the noise level. Reconstruction of low-rank Hankel matrices via nuclear norm minimization was also proposed in [13] for system identification and realization.

An important theoretical question is how many measurements are required for a robust reconstruction of Ĥ via (4) or (5). For a generic unstructured N × N matrix of rank R, standard theory [6,7,9,20] indicates that O(NR · poly(log N)) measurements are needed for robust reconstruction by nuclear norm minimization. This bound, however, is far too pessimistic here, since Ĥ is determined by only 2N − 1 parameters and its actual number of degrees of freedom is of order R. The main contribution of this paper is to prove that (4) and (5) give a robust recovery of Ĥ (hence of x̂) as soon as the number of projections exceeds O(R ln² N), provided the linear operator A consists of suitably scaled random Gaussian projections. This result is further extended to the robust reconstruction of low-rank Hankel or Toeplitz matrices from a few Gaussian random projections.

Our result can be applied to various signals that are superpositions of complex exponentials, including, but not limited to, complex sinusoids and signals in accelerated NMR spectroscopy. When applied to complex sinusoids, our result does not need any separation condition on the frequencies, while requiring only O(R ln² N) measurements instead of the O(R ln⁴ N) in [10]. Furthermore, our theoretical result provides guidance on how many samples to acquire for the model proposed in [19] for NMR spectroscopy.


Complex sinusoids. When |z_k| = 1 for k = 1, …, R, we must have z_k = e^{2πι f_k} for some frequency f_k. In this case, x̂ is a superposition of complex sinusoids, as arises, for example, in the analog-to-digital conversion of radio signals [25]. The problem of recovering a superposition of complex sinusoids from compressed linear measurements appears in various applications. For example, in compressed sensing of spectrally sparse bandlimited signals [25], the random demodulator obtains linear mixing measurements of such signals through matching filters; we refer the reader to Sections III and IV of [25] for details. In array signal processing for direction-of-arrival (DoA) estimation of electromagnetic waves [26], the signals received at the antennas of an antenna array are a superposition of complex sinusoids with different frequencies. If a battery-powered antenna array needs to save energy when sending measurements to the fusion center where the DoAs are calculated, it can send linear projections of the signals received across the array. Moreover, if the antenna array is non-uniform, the observations across it are also linear non-uniform compressive samples of a uniform antenna array.

The problem of recovering x̂ from as few linear measurements (2) as possible may be approached with compressed sensing (CS) [8]. One can discretize the domain of the frequencies f_k by a uniform grid. When the frequencies f_k indeed fall on the grid, x̂ is sparse in the discrete Fourier transform domain, and CS theory [8,12] suggests that it is possible to reconstruct x̂ from very few samples via ℓ1-norm minimization, provided that R ≪ 2N − 1. In our setting, however, the frequencies f_k usually do not fall exactly on a grid. The basis mismatch between the true parameters and the discretization grid degrades the performance of conventional compressed sensing [11].

To overcome this, the authors of [4,23] proposed to recover off-the-grid complex sinusoid frequencies using total variation minimization or atomic norm [9] minimization. They proved that total variation minimization or atomic norm minimization robustly reconstructs x̂ from a non-uniform sampling of very few entries of x̂, provided that the frequencies f_k, k = 1, …, R, are well separated. Another method for recovering off-the-grid frequencies is enhanced matrix completion (EMaC), proposed by Chen et al. [10], in which the Hankel structure plays a central role, as in our model. The main result in [10] is that the complex sinusoids x̂ can be robustly reconstructed via EMaC from very few non-uniformly sampled entries. Again, EMaC requires a separation of the frequencies, expressed implicitly through an incoherence condition.

When applied to complex sinusoids, compared to the aforementioned existing results, our result in this paper does not need any separation condition on the frequencies, while achieving better or comparable bounds on the number of measurements.

Accelerated NMR spectroscopy. When z_k = e^{−τ_k} e^{2πι f_k}, k = 1, …, R, x̂ models the signal in NMR spectroscopy, which arises frequently in studying short-lived molecular systems, monitoring chemical reactions in real time, high-throughput applications, etc. Recently, Qu et al. [19] proposed an algorithm based on low-rank Hankel matrices. In this application, A is a matrix that represents undersampling of the NMR signal in the time domain. We remark that linear non-uniform subsampling of the signal can greatly speed up NMR spectroscopy [19]. The numerical results in [19] demonstrate the efficiency of this approach, but a theoretical guarantee is still lacking. Such a guarantee is vital, since it tells us how many samples should be acquired to ensure robust recovery. Although the result in [10] applies to this problem, it needs an incoherence condition, which remains uncertain for diverse chemical and biological samples. Our result in this paper does not require any incoherence condition. Moreover, our bound is better than that in [10].

The rest of this paper is organized as follows. We begin with our model and our main results in Section 2. Proofs for the main result are given in Section 3. Then, in Section 4, we extend the main result to the reconstruction of generic low-rank Hankel or Toeplitz matrices. The performance of our algorithm is demonstrated by numerical experiments in Section 5. Finally, in Section 6, we conclude the paper and point out some possible future works.

2. Model and main results

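Our approach is based on the observation that the Hankel matrix whose first row and last column consist of the entries of x̂ has rank R. Let Ĥ be the Hankel matrix defined by (3). Eq. (1) leads to the decomposition

$$\hat{H} = Z C Z^{T}, \qquad Z = \big[z_k^{\,j}\big]_{0 \le j \le N-1,\ 1 \le k \le R} \in \mathbb{C}^{N \times R}, \qquad C = \operatorname{diag}(c_1, \ldots, c_R),$$

where C contains the coefficients of the exponentials in (1). Since the z_k are distinct, the Vandermonde matrix Z has full column rank (for R ≤ N), and therefore the rank of Ĥ is R. Similar to Enhanced Matrix Completion (EMaC) in [10], in order to reconstruct x̂, we first reconstruct the rank-R Hankel matrix Ĥ subject to the constraint that (2) is satisfied. Then x̂ is read off from the first row and last column of Ĥ. More specifically, for any given x ∈ ℂ^{2N−1}, let H(x) ∈ ℂ^{N×N} be the Hankel matrix whose first row and last column are formed by x, i.e., [H(x)]_{jk} = x_{j+k}. We propose to solve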

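$$\min_{x \in \mathbb{C}^{2N-1}} \operatorname{rank}(H(x)) \quad \text{subject to} \quad Ax = b, \qquad (6)$$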

where rank(H(x)) denotes the rank of H(x), and A and b are from the linear measurement (2). When there is noise contained in the observation, i.e., b = Ax̂ + η, we correspondingly solve

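$$\min_{x \in \mathbb{C}^{2N-1}} \operatorname{rank}(H(x)) \quad \text{subject to} \quad \|Ax - b\|_2 \le \delta, \qquad (7)$$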

where δ = ||η||2 is the noise level.

Both problems are NP-hard in general and hence difficult to solve. Following the ideas of matrix completion and low-rank matrix recovery [6,7,9,20], a low-rank matrix can often be recovered exactly via nuclear norm minimization. It is therefore reasonable to use nuclear norm minimization for our problem, which leads to the models (4) and (5).

Theoretical results are desirable to guarantee the success of this Hankel matrix completion method. The results in [6,7,9,20] do not exploit the Hankel structure. For a generic N × N rank-R matrix, they require O(NR · poly(log N)) measurements for robust recovery, which is far too many, since H(x) has only 2N − 1 degrees of freedom. The theorems in [23] apply only to the special case where the signal of interest is a superposition of complex sinusoids, which excludes, e.g., signals in NMR spectroscopy. While the results of [10] extend to complex exponentials, the performance guarantees in [4,10,23] require incoherence conditions, which implicitly assume knowledge of the frequency spacing in the spectrum; such knowledge is not available before sampling diverse chemical or biological samples. This limits the applicability of these theories.

It is challenging to provide a theorem guaranteeing exact recovery for model (4) with arbitrary linear measurements A. In this paper, we provide a theoretical result ensuring exact recovery when A is a scaled random Gaussian matrix. Our result does not assume any incoherence condition on the original signal.

Theorem 1

Let A = BD ∈ ℂ^{M×(2N−1)}, where B ∈ ℂ^{M×(2N−1)} is a random matrix whose real and imaginary parts have i.i.d. Gaussian entries with mean 0 and variance 1, and D ∈ ℝ^{(2N−1)×(2N−1)} is a diagonal matrix whose j-th diagonal entry is √(j + 1) if j ≤ N − 1 and √(2N − 1 − j) otherwise. Then there exists a universal constant C1 > 0 such that, for an arbitrary ε > 0, if

then, with probability at least , we have

(a) x̃ = x̂, where x̃ is the unique solution of (4) with b = Ax̂;

(b) ||D(x̃ − x̂)||_2 ≤ 2δ/ε, where x̃ is the unique solution of (5) with ||b − Ax̂||_2 ≤ δ.

The scaling matrix D is introduced to preserve energy. In particular, we will introduce the variable y = Dx; the operator 𝒢 defined by 𝒢y = H(x) then satisfies ||y||_2 = ||𝒢y||_F (see (9) for details). This energy-preserving property is critical in our estimates, as will be seen later.
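The energy-preserving property can be checked numerically. In H(x), the entry x_j appears K_j times (once per element of the j-th antidiagonal), so ||H(x)||_F² = Σ_j K_j |x_j|² = ||Dx||_2². The minimal NumPy sketch below also forms a measurement matrix A = BD as in Theorem 1 and confirms that Ax = By with y = Dx. The helper names and the sizes N = 8, M = 5 are illustrative assumptions.

```python
import numpy as np

def scaling_diagonal(n):
    """Diagonal of D: sqrt(K_j), with K_j = j + 1 for j <= N - 1 and 2N - 1 - j otherwise."""
    j = np.arange(2 * n - 1)
    return np.sqrt(np.where(j <= n - 1, j + 1, 2 * n - 1 - j))

def hankel_from_signal(x):
    """N x N Hankel matrix with [H(x)]_{jk} = x_{j+k}."""
    n = (len(x) + 1) // 2
    j, k = np.indices((n, n))
    return x[j + k]

rng = np.random.default_rng(0)
N, M = 8, 5
x = rng.standard_normal(2 * N - 1) + 1j * rng.standard_normal(2 * N - 1)
d = scaling_diagonal(N)
y = d * x                                   # y = D x

# Energy preservation: ||D x||_2 equals ||H(x)||_F.
energy_y = np.linalg.norm(y)
energy_H = np.linalg.norm(hankel_from_signal(x), "fro")

# Measurement matrix of Theorem 1: A = B D, with B having unit-variance real and imaginary parts.
B = rng.standard_normal((M, 2 * N - 1)) + 1j * rng.standard_normal((M, 2 * N - 1))
A = B * d[None, :]                          # right-multiplying by diagonal D scales the columns
print(np.isclose(energy_y, energy_H), np.allclose(A @ x, B @ y))
```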

The number of measurements required is O(R ln² N), which is reasonably small compared with the number of parameters in H(x). Theorem 1 also contains a parameter ε. For the noise-free case (a), the best choice of ε is obviously a number very close to 0. For the noisy case (b), we can balance the error bound against the number of measurements to obtain an optimal ε. On the one hand, according to (b), to make the error in the noisy case as small as possible, we would like ε to be as large as possible. On the other hand, we would like to keep the number of measurements M of the order R ln² N. Therefore, a seemingly optimal choice of ε is . With this choice of ε, the number of measurements is M = O(R ln² N) and the error .

Compared to the results in [10,23], our theorem does not require any incoherence condition on the matrix Ĥ. In particular, our approach for complex sinusoids does not need any separation condition on the frequencies f_k, k = 1, 2, …, R. The absence of a separation condition in the noiseless case may be due to the Hankel matrix reconstruction approach. Our proof also relies on the assumption of Gaussian measurements, for which the Gaussian width analysis framework is available. For generic low-rank matrix reconstruction, it is well known that an incoherence condition is necessary for successful reconstruction if only some entries of the underlying matrix are sampled [6,7]; however, incoherence is not required if Gaussian random projections are used [9,20]. We are in the same situation, except for the additional Hankel structure: our approach uses Gaussian random projections of Ĥ, while the methods in [10,23] sample some of the entries of Ĥ. Empirically, however, even for non-uniform time-domain samples, we observe that Hankel matrix completion does not seem to require a separation condition between frequencies. We thus conjecture that Hankel matrix completion does not require a frequency separation condition to recover missing data from noiseless, non-uniform time-domain samples; we currently do not have a proof of this.

2.1. Hankel matrix completion for recovering off-the-grid frequencies

Our results also apply to recovering the frequencies of a superposition of complex sinusoids, not only the superposition itself. We divide our discussion into two cases.

The first case is the noise-free case, where the observations are not contaminated by additive noise. In this case, since we can recover the full superposition signal, we can use the single-snapshot MUSIC algorithm [27] to recover the underlying frequencies precisely.
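To make the noise-free step concrete, the following is a minimal NumPy sketch of a MUSIC-style frequency estimator applied to a fully recovered, undamped signal. It is not the exact procedure or normalization of [27]. It assumes R < N, scans a uniform grid of candidate frequencies in [0, 1), and uses the imaging function ||U_noise* φ(ω)||_2 / ||φ(ω)||_2 with φ(ω) = (1, e^{2πιω}, …, e^{2πι(N−1)ω})ᵀ. This function vanishes at the true frequencies because the column space of Ĥ is spanned by φ(f_1), …, φ(f_R). The function name `music_frequencies`, the `grid_size` parameter, and the test frequencies are hypothetical.

```python
import numpy as np

def music_frequencies(x, R, grid_size=10_000):
    """Estimate R frequencies in [0, 1) from x (length 2N - 1) via a MUSIC-style pseudo-spectrum."""
    x = np.asarray(x)
    n = (len(x) + 1) // 2
    j, k = np.indices((n, n))
    H = x[j + k]                                    # N x N Hankel matrix of the signal
    U, _, _ = np.linalg.svd(H)
    U_noise = U[:, R:]                              # orthogonal complement of the signal subspace
    grid = np.arange(grid_size) / grid_size
    steering = np.exp(2j * np.pi * np.outer(np.arange(n), grid))   # columns phi(omega)
    J = np.linalg.norm(U_noise.conj().T @ steering, axis=0) / np.sqrt(n)
    # Local minima on the circular frequency grid; keep the R smallest ones.
    is_min = (J <= np.roll(J, 1)) & (J <= np.roll(J, -1))
    candidates = np.flatnonzero(is_min)
    best = candidates[np.argsort(J[candidates])[:R]]
    return np.sort(grid[best])

N = 32
true_freqs = np.array([0.1, 0.25, 0.7])
t = np.arange(2 * N - 1)
x = np.exp(2j * np.pi * np.outer(t, true_freqs)) @ np.array([1.0, 0.8, 1.5])
print(music_frequencies(x, R=3))
```

With off-grid frequencies the estimates are accurate only up to the grid spacing; a local refinement around each minimum would be a natural extension.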

The second case is the noisy case, where the observation is contaminated by additive noise. For this case, Theorem 1 bounds the recovery error of the superposition signal. We can then recover the frequencies using the single-snapshot MUSIC algorithm by choosing the R smallest local minima of the surrogate criterion function R(ω) in [27]. In [27], the authors provided a stability result for recovering frequencies with the single-snapshot MUSIC algorithm (Theorem 3 of [27]). Specifically, the error in the surrogate criterion function R(ω) is upper bounded by the Euclidean norm of the observation noise multiplied by a constant C, where C depends on the largest and smallest nonzero singular values of the involved Hankel matrix. Moreover, when the noise is small, the recovered frequency deviates from the true frequency by an amount of the order of the noise standard deviation (Remark 9 of [27]). We remark that this stability result from [27] applies without any separation condition on the frequencies.

For frequencies satisfying a certain separation condition (Equation (23) of [27]), the authors of [27] further provide stronger and more explicit bounds on the stability of recovering frequencies from noisy data (by explicitly bounding the singular values of the involved Hankel matrix).

Moitra [28] proved that the stability of recovering frequencies from noisy observations depends on the separation of the frequencies. In particular, [28] shows a sharp phase transition in the relationship between the cutoff time observation index m (namely 2N − 1 in this paper) and the frequency separation δ (not to be confused with the noise level δ in (5)). If m > 1/δ + 1, there is a polynomial-time estimator converging to the true frequencies at an inverse polynomial rate in terms of the magnitude of the noise. Conversely, when m < (1 − ε)/δ, no estimator can distinguish between a particular pair of δ-separated signals unless the magnitude of the noise is exponentially small.

However, the converse results in [28] concern worst-case frequencies and worst-case coefficients. Namely, if the separation condition is not satisfied, one can always find a worst-case pair of signals x and x′ such that telling them apart requires exponentially small noise. Thus Moitra's result in [28] is not an average-case result for a fixed signal x. Moreover, Moitra's results do not mean that single-snapshot MUSIC cannot tolerate small noise when recovering frequencies. By comparison, our stability result in this paper is an average-case result: the spectrally sparse signal is a fixed superposition of complex exponentials, and the stability holds over the ensemble of random Gaussian measurements. Our results are especially useful when the observations are noiseless or have high SNR.

3. Proof of Theorem 1

In this section, we prove the main result Theorem 1. The most crucial factors are that i) one has an explicit formula for the subdifferential of the objective function, and ii) the Gaussian width under the current measurement model is computable.

3.1. Orthonormal basis of the N × N Hankel matrices subspace

In this subsection, we introduce an orthonormal basis of the subspace of N × N Hankel matrices and use it to define a projection from ℂN×N to the subspace of all N × N Hankel matrices.

Let E_j ∈ ℂ^{N×N}, j = 0, 1, …, 2N − 2, be the Hankel matrix satisfying

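$$[E_j]_{kl} = \begin{cases} 1/\sqrt{K_j}, & k + l = j, \\ 0, & \text{otherwise}, \end{cases} \qquad 0 \le k, l \le N-1, \qquad (8)$$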

where K_j = j + 1 for j ≤ N − 1 and K_j = 2N − 1 − j for j ≥ N − 1 is the number of nonzero entries of E_j. Then it is easy to check that {E_j}_{j=0}^{2N−2} forms an orthonormal basis of the subspace of all N × N Hankel matrices under the standard inner product in ℂ^{N×N}.

Define a linear operator

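$$\mathcal{G}: \mathbb{C}^{2N-1} \to \mathbb{C}^{N \times N}, \qquad \mathcal{G}y = \sum_{j=0}^{2N-2} y_j E_j. \qquad (9)$$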

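The adjoint 𝒢* of 𝒢 is

$$\mathcal{G}^* X = \big(\langle E_j, X \rangle\big)_{j=0}^{2N-2} \in \mathbb{C}^{2N-1}, \qquad X \in \mathbb{C}^{N \times N}.$$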

Obviously, 𝒢*𝒢 is the identity operator in ℂ2N − 1, and 𝒢𝒢* is the orthogonal projector onto the subspace of all Hankel matrices.
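The two identities can be checked numerically. In the minimal NumPy sketch below (helper names are illustrative), 𝒢y places y_j/√K_j on the j-th antidiagonal, and 𝒢*X sums the j-th antidiagonal of X and divides by √K_j. Consequently 𝒢𝒢*X replaces each antidiagonal of X by its average, which is the orthogonal projection onto Hankel matrices.

```python
import numpy as np

def antidiagonal_counts(n):
    """K_j = number of entries (k, l) of an N x N matrix with k + l = j."""
    j = np.arange(2 * n - 1)
    return np.where(j <= n - 1, j + 1, 2 * n - 1 - j)

def G(y, n):
    """G y = sum_j y_j E_j: entry (k, l) equals y_{k+l} / sqrt(K_{k+l})."""
    k, l = np.indices((n, n))
    return y[k + l] / np.sqrt(antidiagonal_counts(n))[k + l]

def G_adj(X):
    """G* X = (<E_j, X>)_j: sum of the j-th antidiagonal of X divided by sqrt(K_j)."""
    n = X.shape[0]
    k, l = np.indices((n, n))
    sums = np.zeros(2 * n - 1, dtype=complex)
    np.add.at(sums, (k + l).ravel(), X.ravel())     # accumulate each antidiagonal
    return sums / np.sqrt(antidiagonal_counts(n))

rng = np.random.default_rng(0)
n = 6
y = rng.standard_normal(2 * n - 1) + 1j * rng.standard_normal(2 * n - 1)
X = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))

print(np.allclose(G_adj(G(y, n)), y))                          # G*G is the identity
P = G(G_adj(X), n)                                              # GG* X: antidiagonal averaging
print(np.allclose(G(G_adj(P), n), P))                           # GG* is idempotent
print(np.isclose(np.vdot(G(y, n), X), np.vdot(y, G_adj(X))))    # <G y, X> = <y, G* X>
```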

3.2. Recovery condition based on restricted minimum gain condition

First of all, let us simplify the minimization problem (4) by introducing D ∈ ℝ^{(2N−1)×(2N−1)}, the diagonal matrix with j-th diagonal entry √K_j. Then, letting y = Dx, (4) can be rewritten as

$$\min_{y \in \mathbb{C}^{2N-1}} \|\mathcal{G}y\|_* \quad \text{subject to} \quad By = b, \qquad (10)$$

where B = AD^{−1}. Recall that 𝒢 satisfies 𝒢*𝒢 = ℐ, which is crucial in the subsequent analysis. Similarly, for the noisy case, (5) becomes

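$$\min_{y \in \mathbb{C}^{2N-1}} \|\mathcal{G}y\|_* \quad \text{subject to} \quad \|By - b\|_2 \le \delta. \qquad (11)$$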

By the assumption in Theorem 1, B ∈ ℂ^{M×(2N−1)} is a random matrix whose real and imaginary parts are real-valued random matrices with i.i.d. Gaussian entries of mean 0 and variance 1. Writing ŷ = Dx̂, we will prove that ỹ = ŷ (respectively ||ỹ − ŷ||_2 ≤ 2δ/ε) with high probability, where ỹ solves (10) in the noise-free case (respectively (11) in the noisy case).

Let the descent cone of ||𝒢 ·||* at ŷ be

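$$\mathfrak{T}(\hat{y}) = \big\{ t z : \ z \in \mathbb{C}^{2N-1},\ \|\mathcal{G}(\hat{y} + z)\|_* \le \|\mathcal{G}\hat{y}\|_*,\ t \ge 0 \big\}. \qquad (12)$$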

To characterize the recovery condition, we use the minimum value of ||Bz||_2/||z||_2 over nonzero z ∈ 𝔗(ŷ). This quantity is commonly called the minimum gain of the measurement operator B restricted to 𝔗(ŷ) [9]. In particular, if the minimum gain is bounded away from zero, then exact recovery (respectively approximate recovery) holds for problem (10) (respectively (11)).

Lemma 1

Let 𝔗(ŷ) be defined by (12). Assume

$$\min_{z \in \mathfrak{T}(\hat{y}),\ z \ne 0} \frac{\|Bz\|_2}{\|z\|_2} \ge \varepsilon. \qquad (13)$$

(a) Let ỹ be the solution of (10) with b = Bŷ. Then ỹ = ŷ.

(b) Let ỹ be the solution of (11) with ||b − Bŷ||_2 ≤ δ. Then ||ỹ − ŷ||_2 ≤ 2δ/ε.

Proof

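Since (a) is a special case of (b) with δ = 0, we prove (b) only. Since ŷ is feasible for (11), the optimality of ỹ gives ||𝒢ỹ||_* ≤ ||𝒢ŷ||_*, which implies ỹ − ŷ ∈ 𝔗(ŷ). By (13), we have

$$\varepsilon \|\tilde{y} - \hat{y}\|_2 \le \|B(\tilde{y} - \hat{y})\|_2 \le \|B\tilde{y} - b\|_2 + \|b - B\hat{y}\|_2 \le 2\delta.$$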

The minimum gain condition is a powerful concept and has been employed in recent recovery results for ℓ1 norm minimization, block-sparse vector recovery, low-rank matrix reconstruction, and other atomic norms [9].

3.3. Bound of minimum gain via Gaussian width

Lemma 1 requires a lower bound on the minimum gain min_{z ∈ 𝔗(ŷ), z ≠ 0} ||Bz||_2/||z||_2. Gordon [14] gave such a bound in terms of the Gaussian width of a set (see also [9]).

Definition 1

The Gaussian width of a set S ⊂ ℝ^p is defined as

$$w(S) = \mathbb{E}\Big[\sup_{v \in S} \langle v, \xi \rangle\Big],$$

where ξ ∈ ℝ^p is a random vector of independent zero-mean unit-variance Gaussians.

Let λ_n denote the expected length of an n-dimensional standard Gaussian random vector. Then λ_n = √2 Γ((n + 1)/2)/Γ(n/2), and it can be tightly bounded as n/√(n + 1) ≤ λ_n ≤ √n [9]. The following theorem, Corollary 1.2 in [14], gives a bound on the minimum gain of a random map Π: ℝ^p → ℝ^n.
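The closed form for λ_n and its bounds are easy to check numerically. The minimal sketch below (standard library only) evaluates λ_n through `math.lgamma` to avoid overflow of the Gamma function, compares it with a Monte Carlo average of ||ξ||_2, and checks n/√(n + 1) ≤ λ_n ≤ √n. It also illustrates the definition of Gaussian width: for the whole unit sphere in ℝ^n, sup_{||v||=1} ⟨v, ξ⟩ = ||ξ||_2, so its Gaussian width is exactly λ_n. The function names, trial count and seed are illustrative choices.

```python
import math
import random

def lambda_n(n):
    """E||xi||_2 for xi ~ N(0, I_n), i.e. sqrt(2) * Gamma((n + 1) / 2) / Gamma(n / 2)."""
    return math.sqrt(2.0) * math.exp(math.lgamma((n + 1) / 2) - math.lgamma(n / 2))

def monte_carlo_norm(n, trials=20_000, seed=0):
    """Empirical mean of ||xi||_2 over independent standard Gaussian vectors in R^n."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += math.sqrt(sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(n)))
    return total / trials

for n in (1, 10, 100):
    lam = lambda_n(n)
    # Bounds from the text: n / sqrt(n + 1) <= lambda_n <= sqrt(n).
    print(n, n / math.sqrt(n + 1) <= lam <= math.sqrt(n), round(lam, 4))
```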

Theorem 2. (See Corollary 1.2 in [14].)

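Let Ω be a closed subset of {x ∈ ℝ^p : ||x||_2 = 1}. Let Π ∈ ℝ^{n×p} be a random matrix with i.i.d. Gaussian entries with mean 0 and variance 1. Then, for any ε > 0,

$$\mathbb{P}\Big[\min_{z \in \Omega} \|\Pi z\|_2 \ge \varepsilon\Big] \ge 1 - \exp\Big(-\tfrac{1}{2}\big(\lambda_n - w(\Omega) - \varepsilon\big)^2\Big),$$

provided λ_n − w(Ω) − ε ≥ 0. Here λ_n is the expected length of an n-dimensional standard Gaussian random vector, and w(Ω) is the Gaussian width of Ω.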

By converting the complex setting of our problem to the real setting and applying Theorem 2, we can bound the left-hand side of (13) in terms of the Gaussian width of 𝔗ℝ(ŷ) intersected with the unit sphere of ℝ^{4N−2}, where 𝔗ℝ(ŷ) is the cone in ℝ^{4N−2} defined by

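$$\mathfrak{T}_{\mathbb{R}}(\hat{y}) = \left\{ \begin{bmatrix} \alpha \\ \beta \end{bmatrix} \in \mathbb{R}^{4N-2} : \ \alpha + \iota\beta \in \mathfrak{T}(\hat{y}) \right\}. \qquad (14)$$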

Lemma 2

Let the real and imaginary parts of entries of B ∈ ℂM×(2N − 1) be i.i.d. Gaussian with mean 0 and variance 1. Let 𝔗ℝ(ŷ) be defined by (14) and be the unit sphere in ℂ2N − 1. Then for any ε > 0,

where is the unit sphere in ℝ4N−2.

Proof

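In order to use Theorem 2, we convert the complex setting of our problem to the real setting of Theorem 2. We use Roman letters for vectors and matrices in complex-valued spaces and Greek letters for real-valued ones. Let B = Φ + ιΨ ∈ ℂ^{M×(2N−1)}, where Φ ∈ ℝ^{M×(2N−1)} and Ψ ∈ ℝ^{M×(2N−1)} are real-valued random matrices whose entries are i.i.d. Gaussian with mean 0 and variance 1. Then, for any z = α + ιβ ∈ ℂ^{2N−1} with α, β ∈ ℝ^{2N−1},

$$Bz = (\Phi\alpha - \Psi\beta) + \iota(\Psi\alpha + \Phi\beta) = [\Phi\ \ {-\Psi}]\begin{bmatrix}\alpha\\ \beta\end{bmatrix} + \iota\,[\Psi\ \ \Phi]\begin{bmatrix}\alpha\\ \beta\end{bmatrix}.$$

Then

$$\|Bz\|_2^2 = \Big\|[\Phi\ \ {-\Psi}]\begin{bmatrix}\alpha\\ \beta\end{bmatrix}\Big\|_2^2 + \Big\|[\Psi\ \ \Phi]\begin{bmatrix}\alpha\\ \beta\end{bmatrix}\Big\|_2^2 \qquad (15)$$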

implies

Therefore,

It is easy to see that both [Φ −Ψ] and [Ψ Φ] are real-valued random matrices with i.i.d. Gaussian entries of mean 0 and variance 1. By Theorem 2,

and therefore we get the desired result.
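The real-imaginary splitting used in this proof can be verified on random data. The minimal NumPy sketch below (sizes and seed are illustrative) checks that Bz equals [Φ −Ψ]ζ + ι[Ψ Φ]ζ with ζ = [α; β], and that ||Bz||_2² is the sum of the squared norms of these two real parts. Each of the two real matrices [Φ −Ψ] and [Ψ Φ] has i.i.d. N(0, 1) entries, which is what allows Theorem 2 to be applied to them.

```python
import numpy as np

rng = np.random.default_rng(1)
M, n = 4, 7                                    # n plays the role of 2N - 1
Phi = rng.standard_normal((M, n))
Psi = rng.standard_normal((M, n))
B = Phi + 1j * Psi                             # complex Gaussian measurement matrix

alpha, beta = rng.standard_normal(n), rng.standard_normal(n)
z = alpha + 1j * beta
zeta = np.concatenate([alpha, beta])           # real vector in R^{2n}

real_part = np.hstack([Phi, -Psi]) @ zeta      # [Phi  -Psi] zeta = Re(Bz)
imag_part = np.hstack([Psi, Phi]) @ zeta       # [Psi   Phi] zeta = Im(Bz)

print(np.allclose(B @ z, real_part + 1j * imag_part))
print(np.isclose(np.linalg.norm(B @ z) ** 2,
                 np.linalg.norm(real_part) ** 2 + np.linalg.norm(imag_part) ** 2))
```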

3.4. Estimation of Gaussian width

Denote by 𝔗ℝ(ŷ)° the polar cone of 𝔗ℝ(ŷ) ⊂ ℝ^{4N−2}, i.e.,

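$$\mathfrak{T}_{\mathbb{R}}(\hat{y})^{\circ} = \big\{ \gamma \in \mathbb{R}^{4N-2} : \langle \gamma, \zeta \rangle \le 0 \ \ \text{for all } \zeta \in \mathfrak{T}_{\mathbb{R}}(\hat{y}) \big\}. \qquad (16)$$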

Following the arguments in Proposition 3.6 in [9], we obtain

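$$w\big(\mathfrak{T}_{\mathbb{R}}(\hat{y}) \cap \mathbb{S}^{4N-3}\big) \le \mathbb{E}_{\xi}\Big[\operatorname{dist}\big(\xi, \mathfrak{T}_{\mathbb{R}}(\hat{y})^{\circ}\big)\Big], \qquad (17)$$

where 𝕊^{4N−3} denotes the unit sphere in ℝ^{4N−2} and ξ ∈ ℝ^{4N−2} is a random vector of i.i.d. Gaussian entries of mean 0 and variance 1. Hence, instead of estimating the Gaussian width w(𝔗ℝ(ŷ) ∩ 𝕊^{4N−3}) directly, we bound E[dist(ξ, 𝔗ℝ(ŷ)°)]. For this purpose, let ℱ: ℝ^{4N−2} → ℝ be defined by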

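$$\mathcal{F}(\omega) = \big\|\mathcal{G}(\omega_1 + \iota\,\omega_2)\big\|_*, \qquad \omega = \begin{bmatrix}\omega_1\\ \omega_2\end{bmatrix},\ \ \omega_1, \omega_2 \in \mathbb{R}^{2N-1}. \qquad (18)$$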

The following lemma characterizes 𝔗ℝ(ŷ)° in terms of the subdifferential ∂ℱ of ℱ.

Lemma 3

Let 𝔗ℝ(ŷ)° and ℱ be defined by (16) and (18), respectively. Let ω̂1, ω̂2 ∈ ℝ^{2N−1} be the real and imaginary parts of ŷ, respectively, and denote ω̂ = [ω̂1; ω̂2]. Then

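$$\mathfrak{T}_{\mathbb{R}}(\hat{y})^{\circ} = \operatorname{cone}\big(\partial\mathcal{F}(\hat{\omega})\big) = \big\{ t\gamma : \gamma \in \partial\mathcal{F}(\hat{\omega}),\ t \ge 0 \big\}. \qquad (19)$$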

Proof

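It is observed that 𝔗ℝ(ŷ) in (14) is the descent cone of the function ℱ at ω̂:

$$\mathfrak{T}_{\mathbb{R}}(\hat{y}) = \big\{ t\zeta : \mathcal{F}(\hat{\omega} + \zeta) \le \mathcal{F}(\hat{\omega}),\ t \ge 0 \big\}.$$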

According to Theorem 23.4 in [21], the polar of the descent cone is the conic hull of the subdifferential, which is exactly (19).

The following lemma gives us an estimation of Gaussian width in terms of E(||𝒢g||2).

Lemma 4

Let 𝔗ℝ(ŷ) and 𝒢 be defined by (14) and (9) respectively. Then

where E(||𝒢g||2) is the expectation with respect to g ∈ ℂ2N−1. Here g is a random vector whose real and imaginary parts are i.i.d. mean-0 and variance-1 Gaussian entries.

Proof

By (17) and Lemma 3, we need to find ∂ℱ(ω̂) and thus 𝔗ℝ(ŷ)°. Let Ω̂1 = 𝒢ω̂1 and Ω̂2 = 𝒢ω̂2. Then 𝒢ŷ = Ω̂1 + ιΩ̂2. Let a singular value decomposition of the rank-R matrix 𝒢ŷ be

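$$\mathcal{G}\hat{y} = U\Sigma V^*, \qquad U = \Theta_1 + \iota\Theta_2, \quad V = \Xi_1 + \iota\Xi_2, \qquad (20)$$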

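where Θ1, Θ2, Ξ1, Ξ2 ∈ ℝ^{N×R} and Σ ∈ ℝ^{R×R}, and U ∈ ℂ^{N×R} and V ∈ ℂ^{N×R} satisfy U*U = V*V = I. Then, by direct calculation, the real matrices

$$\Theta = \begin{bmatrix}\Theta_1 & -\Theta_2\\ \Theta_2 & \Theta_1\end{bmatrix}, \qquad \Xi = \begin{bmatrix}\Xi_1 & -\Xi_2\\ \Xi_2 & \Xi_1\end{bmatrix} \in \mathbb{R}^{2N \times 2R} \qquad (21)$$

satisfy ΘᵀΘ = ΞᵀΞ = I. Moreover, if we define

$$\hat{\Omega} = \begin{bmatrix}\hat{\Omega}_1 & -\hat{\Omega}_2\\ \hat{\Omega}_2 & \hat{\Omega}_1\end{bmatrix},$$

then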

$$\hat{\Omega} = \Theta \begin{bmatrix}\Sigma & 0\\ 0 & \Sigma\end{bmatrix} \Xi^{T} \qquad (22)$$

is a singular value decomposition of the real matrix Ω̂, and the singular values of Ω̂ are those of 𝒢ŷ, each repeated twice. Therefore,

$$\|\hat{\Omega}\|_* = 2\|\mathcal{G}\hat{y}\|_* = 2\mathcal{F}(\hat{\omega}). \qquad (23)$$
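The real-embedding facts behind (21), (22) and (23) hold for any complex matrix, so they can be checked on a random rank-r example. The minimal NumPy sketch below uses a hypothetical helper `real_embedding(X)` = [[Re X, −Im X], [Im X, Re X]]. It builds Θ and Ξ from a compact SVD, checks that their columns are orthonormal, checks that Ω̂ = Θ diag(Σ, Σ) Ξᵀ, and checks that the nuclear norm doubles. The random rank-r matrix merely stands in for 𝒢ŷ.

```python
import numpy as np

def real_embedding(X):
    """[[Re X, -Im X], [Im X, Re X]]: real representation of a complex matrix."""
    return np.block([[X.real, -X.imag], [X.imag, X.real]])

rng = np.random.default_rng(2)
n, r = 8, 2
left = rng.standard_normal((n, r)) + 1j * rng.standard_normal((n, r))
right = rng.standard_normal((r, n)) + 1j * rng.standard_normal((r, n))
Hc = left @ right                                  # rank-r complex matrix (stand-in for G y_hat)

U, s, Vh = np.linalg.svd(Hc)
U, s, V = U[:, :r], s[:r], Vh[:r].conj().T          # compact SVD: Hc = U diag(s) V*
Theta = real_embedding(U)                           # [[Theta1, -Theta2], [Theta2, Theta1]]
Xi = real_embedding(V)                              # [[Xi1, -Xi2], [Xi2, Xi1]]
Sigma2 = np.kron(np.eye(2), np.diag(s))             # block diag(Sigma, Sigma)
Omega = real_embedding(Hc)

print(np.allclose(Theta.T @ Theta, np.eye(2 * r)), np.allclose(Xi.T @ Xi, np.eye(2 * r)))
print(np.allclose(Omega, Theta @ Sigma2 @ Xi.T))
print(np.isclose(np.linalg.norm(Omega, "nuc"), 2 * np.linalg.norm(Hc, "nuc")))
```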

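Define a linear operator ℰ: ℝ^{4N−2} → ℝ^{2N×2N} by

$$\mathcal{E}\omega = \begin{bmatrix}\mathcal{G}\omega_1 & -\mathcal{G}\omega_2\\ \mathcal{G}\omega_2 & \mathcal{G}\omega_1\end{bmatrix}, \qquad \omega = \begin{bmatrix}\omega_1\\ \omega_2\end{bmatrix}.$$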

By (23) and the definition of Ω̂, we obtain ℱ(ω) = ½||ℰω||_* for all ω ∈ ℝ^{4N−2}. From convex analysis and Ω̂ = ℰω̂, the subdifferential of ℱ is given by

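$$\partial\mathcal{F}(\hat{\omega}) = \tfrac{1}{2}\,\mathcal{E}^*\big(\partial\|\cdot\|_*(\hat{\Omega})\big). \qquad (24)$$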

On the one hand, the adjoint ℰ* is given, for any Δ = [Δ11 Δ12; Δ21 Δ22] ∈ ℝ^{2N×2N} with each block in ℝ^{N×N}, by

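$$\mathcal{E}^*\Delta = \begin{bmatrix}\mathcal{G}^*(\Delta_{11} + \Delta_{22})\\ \mathcal{G}^*(\Delta_{21} - \Delta_{12})\end{bmatrix}. \qquad (25)$$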

On the other hand, since (22) provides a singular value decomposition of Ω̂,

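$$\partial\|\cdot\|_*(\hat{\Omega}) = \big\{ \Theta\Xi^{T} + \Delta : \ \Theta^{T}\Delta = 0,\ \Delta\Xi = 0,\ \|\Delta\|_2 \le 1 \big\}. \qquad (26)$$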

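Combining (24), (25), (26) and (21) yields the subdifferential of ℱ at ω̂:

$$\partial\mathcal{F}(\hat{\omega}) = \left\{ \begin{bmatrix}\mathcal{G}^*\operatorname{Re}(UV^*)\\ \mathcal{G}^*\operatorname{Im}(UV^*)\end{bmatrix} + \tfrac{1}{2}\mathcal{E}^*\Delta : \ \Delta \in \mathbb{R}^{2N \times 2N},\ \Theta^{T}\Delta = 0,\ \Delta\Xi = 0,\ \|\Delta\|_2 \le 1 \right\}.$$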
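The block formula for the adjoint of ℰ can be sanity-checked with a dot-product test, ⟨ℰω, Δ⟩ = ⟨ω, ℰ*Δ⟩, on random real data. The minimal NumPy sketch below assumes that ℰ maps ω = [ω1; ω2] to the real embedding [[𝒢ω1, −𝒢ω2], [𝒢ω2, 𝒢ω1]] (the map that produces Ω̂ from ω̂) and that ℰ* takes the block form (𝒢*(Δ11 + Δ22), 𝒢*(Δ21 − Δ12)). It also checks ||ℰω||_* = 2||𝒢(ω1 + ιω2)||_*. All helper names are illustrative.

```python
import numpy as np

def antidiagonal_counts(n):
    j = np.arange(2 * n - 1)
    return np.where(j <= n - 1, j + 1, 2 * n - 1 - j)

def G_real(w, n):
    """G w for a real vector w: entry (k, l) is w_{k+l} / sqrt(K_{k+l})."""
    k, l = np.indices((n, n))
    return w[k + l] / np.sqrt(antidiagonal_counts(n))[k + l]

def G_adj_real(X):
    """G* X for a real matrix X: antidiagonal sums divided by sqrt(K_j)."""
    n = X.shape[0]
    k, l = np.indices((n, n))
    sums = np.zeros(2 * n - 1)
    np.add.at(sums, (k + l).ravel(), X.ravel())
    return sums / np.sqrt(antidiagonal_counts(n))

def E_op(w, n):
    """E w = [[G w1, -G w2], [G w2, G w1]] with w = [w1; w2]."""
    m = 2 * n - 1
    G1, G2 = G_real(w[:m], n), G_real(w[m:], n)
    return np.block([[G1, -G2], [G2, G1]])

def E_adj(Delta):
    """E* Delta = [G*(D11 + D22); G*(D21 - D12)]."""
    n = Delta.shape[0] // 2
    D11, D12, D21, D22 = Delta[:n, :n], Delta[:n, n:], Delta[n:, :n], Delta[n:, n:]
    return np.concatenate([G_adj_real(D11 + D22), G_adj_real(D21 - D12)])

rng = np.random.default_rng(3)
n = 5
m = 2 * n - 1
w = rng.standard_normal(2 * m)
Delta = rng.standard_normal((2 * n, 2 * n))
print(np.isclose(np.sum(E_op(w, n) * Delta), w @ E_adj(Delta)))     # adjoint identity
Gc = G_real(w[:m], n) + 1j * G_real(w[m:], n)                      # G(w1 + i w2)
print(np.isclose(np.linalg.norm(E_op(w, n), "nuc"), 2 * np.linalg.norm(Gc, "nuc")))
```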

We are now ready to estimate the Gaussian width. Let 𝔖 be the following set of complex-valued vectors:

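$$\mathfrak{S} = \big\{ \mathcal{G}^*(UV^* + W) : \ W \in \mathbb{C}^{N \times N},\ U^*W = 0,\ WV = 0,\ \|W\|_2 \le 1 \big\}, \qquad (27)$$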

where U, V are in (20). Then, it can be checked that

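$$\left\{ \begin{bmatrix}\operatorname{Re} c\\ \operatorname{Im} c\end{bmatrix} : c \in \mathfrak{S} \right\} \subseteq \partial\mathcal{F}(\hat{\omega}). \qquad (28)$$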

Indeed, for any W = Δ1 + ιΔ2 satisfying U*W = 0, WV = 0 and ||W||_2 ≤ 1, we choose Δ = [Δ1 −Δ2; Δ2 Δ1]. This choice of Δ obviously satisfies the constraints on Δ in ∂ℱ(ω̂). Furthermore, ½ℰ*Δ = [𝒢*Δ1; 𝒢*Δ2] = [Re 𝒢*W; Im 𝒢*W]. Therefore, (28) holds.

With the help of (28), we get

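$$\mathbb{E}\Big[\operatorname{dist}\big(\xi, \mathfrak{T}_{\mathbb{R}}(\hat{y})^{\circ}\big)\Big] \le \mathbb{E}\Big[\min_{\lambda \ge 0,\ \gamma_1 + \iota\gamma_2 \in \mathfrak{S}} \Big\|\xi - \lambda\begin{bmatrix}\gamma_1\\ \gamma_2\end{bmatrix}\Big\|_2\Big]. \qquad (29)$$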

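We then convert the real-valued vectors to complex-valued vectors by letting g = ξ1 + ιξ2 and c = γ1 + ιγ2, where ξ1 and ξ2 are the first and second halves of ξ, and similarly for γ1 and γ2. This leads to

$$\mathbb{E}\Big[\operatorname{dist}\big(\xi, \mathfrak{T}_{\mathbb{R}}(\hat{y})^{\circ}\big)\Big] \le \mathbb{E}\Big[\min_{\lambda \ge 0,\ c \in \mathfrak{S}} \|g - \lambda c\|_2\Big].$$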

Since 𝒢*𝒢 is the identity operator and 𝒢𝒢* is an orthogonal projector, for any λ ≥ 0 and c ∈ 𝔖,

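$$\|g - \lambda c\|_2 = \|\mathcal{G}(g - \lambda c)\|_F = \big\|\mathcal{G}\mathcal{G}^*\big(\mathcal{G}g - \lambda(UV^* + W)\big)\big\|_F \le \|\mathcal{G}g - \lambda(UV^* + W)\|_F, \qquad (30)$$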

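where W satisfies the conditions in the definition of 𝔖 in (27). Define two orthogonal projectors ℘1 and ℘2 on ℂ^{N×N} by

$$\wp_2 X = (I - UU^*)\,X\,(I - VV^*), \qquad \wp_1 X = X - \wp_2 X = UU^*X + XVV^* - UU^*XVV^*.$$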

Then, it can be easily checked that: ℘1X and ℘2X are orthogonal, X = ℘1X + ℘2X, and

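$$\|X - \lambda(UV^* + W)\|_F^2 = \|\wp_1 X - \lambda UV^*\|_F^2 + \|\wp_2 X - \lambda W\|_F^2, \qquad (31)$$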

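where U, V, W are the same as those in (27). We choose

$$\lambda = \|\wp_2(\mathcal{G}g)\|_2, \qquad W = \wp_2(\mathcal{G}g) / \|\wp_2(\mathcal{G}g)\|_2.$$

Then W satisfies the constraints in (27). This, together with (29), (30) and (31), implies

$$\mathbb{E}\Big[\operatorname{dist}\big(\xi, \mathfrak{T}_{\mathbb{R}}(\hat{y})^{\circ}\big)\Big] \le \mathbb{E}\big\|\wp_1(\mathcal{G}g) - \|\wp_2(\mathcal{G}g)\|_2\, UV^*\big\|_F \le \mathbb{E}\|\wp_1(\mathcal{G}g)\|_F + \sqrt{R}\,\mathbb{E}\|\wp_2(\mathcal{G}g)\|_2.$$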
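The projector facts used in this step can be checked numerically. The minimal NumPy sketch below uses random orthonormal U and V (obtained from QR factorizations) in place of the singular vectors in (20). It checks that ℘1X and ℘2X are orthogonal and sum to X, that W = ℘2X/||℘2X||_2 satisfies U*W = 0, WV = 0 and ||W||_2 = 1, and that the splitting (31) holds for an arbitrary λ ≥ 0. The names `wp1`, `wp2`, `cplx` and the value λ = 0.7 are illustrative.

```python
import numpy as np

rng = np.random.default_rng(4)
n, r = 7, 2

def cplx(*shape):
    """Random complex Gaussian array of the given shape."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

U, _ = np.linalg.qr(cplx(n, r))                # orthonormal columns: U* U = I
V, _ = np.linalg.qr(cplx(n, r))
PU, PV, I = U @ U.conj().T, V @ V.conj().T, np.eye(n)

def wp2(X):
    """(I - UU*) X (I - VV*)."""
    return (I - PU) @ X @ (I - PV)

def wp1(X):
    """X - wp2(X) = UU*X + XVV* - UU*XVV*."""
    return X - wp2(X)

X = cplx(n, n)
W = wp2(X) / np.linalg.norm(wp2(X), 2)          # spectral norm 1
lam = 0.7                                        # any lambda >= 0
UV = U @ V.conj().T
lhs = np.linalg.norm(X - lam * (UV + W)) ** 2    # Frobenius norms
rhs = np.linalg.norm(wp1(X) - lam * UV) ** 2 + np.linalg.norm(wp2(X) - lam * W) ** 2
print(abs(np.vdot(wp1(X), wp2(X))) < 1e-10, np.isclose(lhs, rhs))
```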

We will estimate both ||℘1(𝒢g)||F and ||℘2(𝒢g)||2. For ||℘1(𝒢g)||F, we have

where in the last line we have used the inequality

and similarly . For ||℘2(𝒢g)||2,

Altogether, we obtain

which together with (17) gives

3.5. Bound of E(||𝒢g||2)

The estimate of E(||𝒢g||_2) plays an important role in proving Theorem 1, since it is needed for a tight bound on the Gaussian width of 𝔗ℝ(ŷ) intersected with the unit sphere. The following theorem gives a bound on E(||𝒢g||_2).

Theorem 3

Let g ∈ ℝ2N−1 be a random vector whose entries are i.i.d. Gaussian random variables with mean 0 and variance 1, or g ∈ ℂ2N−1 a random vector whose real part and imaginary part have i.i.d. Gaussian random entries with mean 0 and variance 1. Then,

where C1 are some positive universal constants.

We use the moment method (see Chapter 2.3 in [24] for more details) to prove Theorem 3. To help the reader follow the proof, we begin with the real case and first introduce some ideas and lemmas. Assume g ∈ ℝ^{2N−1} has i.i.d. standard Gaussian entries (mean 0, variance 1). Notice that 𝒢g is real symmetric. Therefore, for any even integer k, (tr((𝒢g)^k))^{1/k} is the ℓ_k-norm of the vector of singular values of 𝒢g, which implies ||𝒢g||_2 ≤ (tr((𝒢g)^k))^{1/k}. This, together with Jensen's inequality, gives

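$$\mathbb{E}\|\mathcal{G}g\|_2 \le \mathbb{E}\Big[\big(\operatorname{tr}(\mathcal{G}g)^k\big)^{1/k}\Big] \le \Big(\mathbb{E}\operatorname{tr}(\mathcal{G}g)^k\Big)^{1/k}. \qquad (32)$$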

Thus, to obtain an upper bound on E(||𝒢g||_2), we estimate E(tr((𝒢g)^k)). Denote M = 𝒢g (not to be confused with the number of measurements). It is easy to see that

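$$\mathbb{E}\operatorname{tr}(M^k) = \sum_{0 \le i_1, i_2, \ldots, i_k \le N-1} \mathbb{E}\big(M_{i_1 i_2} M_{i_2 i_3} \cdots M_{i_{k-1} i_k} M_{i_k i_1}\big). \qquad (33)$$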

Therefore, we only need to estimate Σ0≤i1, i2,..., ik≤N−1 E(Mi1i2Mi2i3... Mik−1ikMiki1).
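The chain (32) can be illustrated by a small Monte Carlo experiment. The minimal NumPy sketch below draws real Gaussian g, forms M = 𝒢g, and compares the empirical mean of ||M||_2 with the empirical (mean of tr M^k)^{1/k} for an even k. The inequality holds sample by sample, and also after averaging by concavity of t ↦ t^{1/k}, so the comparison is deterministic. The sizes N = 16, k = 6 and 200 trials are illustrative, and the printed numbers are not evidence for any particular constant in Theorem 3.

```python
import numpy as np

def hankel_G(g):
    """Real symmetric Hankel matrix G g with entries g_{i+j} / sqrt(K_{i+j})."""
    n = (len(g) + 1) // 2
    j = np.arange(2 * n - 1)
    K = np.where(j <= n - 1, j + 1, 2 * n - 1 - j)
    a, b = np.indices((n, n))
    return (g / np.sqrt(K))[a + b]

rng = np.random.default_rng(5)
N, k, trials = 16, 6, 200                        # k must be even
spectral, traces = [], []
for _ in range(trials):
    Mg = hankel_G(rng.standard_normal(2 * N - 1))
    spectral.append(np.linalg.norm(Mg, 2))       # spectral norm ||G g||_2
    traces.append(np.trace(np.linalg.matrix_power(Mg, k)))

mean_norm = np.mean(spectral)                    # estimate of E||G g||_2
moment_bound = np.mean(traces) ** (1.0 / k)      # estimate of (E tr (G g)^k)^{1/k}
print(mean_norm, moment_bound, np.log(N))
```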

To simplify the notation, we denote i_{k+1} = i_1. Notice that M_{ij} = g_{i+j}/√K_{i+j}, where g_{i+j} is a Gaussian random variable and K_j is defined after (8). Hence, M_{i_ℓ i_{ℓ+1}} = M_{i_{ℓ′} i_{ℓ′+1}} if and only if i_ℓ + i_{ℓ+1} = i_{ℓ′} + i_{ℓ′+1}. To exploit this property, we introduce a graph for any given indices i_1, i_2, …, i_k and define equivalent edges on it. More specifically, we construct the graph 𝔉_{i_1 i_2 … i_k} with nodes i_1, i_2, …, i_k and edges (i_1, i_2), (i_2, i_3), …, (i_{k−1}, i_k), (i_k, i_1). Let the weight of the edge (i_ℓ, i_{ℓ+1}) be i_ℓ + i_{ℓ+1}. Edges with the same weight form an equivalence class. Obviously, M_{i_ℓ i_{ℓ+1}} = M_{i_{ℓ′} i_{ℓ′+1}} if and only if (i_ℓ, i_{ℓ+1}) and (i_{ℓ′}, i_{ℓ′+1}) are in the same equivalence class. Assume there are p equivalence classes of edges of 𝔉_{i_1 i_2 … i_k}. These classes are indexed by 1, 2, …, p according to their order of first appearance in the traversal i_1 → i_2 → … → i_k → i_1. We associate with the graph 𝔉_{i_1 i_2 … i_k} a sequence c_1 c_2 … c_k, where c_j is the index of the equivalence class of the edge (i_j, i_{j+1}). We call c_1 c_2 … c_k the label for the equivalence classes of the graph 𝔉_{i_1 i_2 … i_k}.

The label for the equivalence classes of the graph 𝔉_{i_1 i_2 … i_k} plays an important role in bounding E(||𝒢g||_2). To help the reader understand this concept, we give two specific examples. For N = 6, k = 6 and (i_1, …, i_6) = (1, 4, 1, 3, 1, 4), the edge weights are 5, 5, 4, 4, 5, 5, so the label for the equivalence classes of the corresponding graph is 112211. For N = 6, k = 6 and (i_1, …, i_6) = (2, 3, 2, 4, 2, 3), the edge weights are 5, 5, 6, 6, 5, 5, so the label is again 112211. Therefore, several different index sequences i_1 i_2 … i_k may correspond to the same label. Let 𝔄_{c_1 c_2 … c_k} be the set of index sequences whose graph has label c_1 c_2 … c_k, i.e.,

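$$\mathfrak{A}_{c_1 c_2 \cdots c_k} = \big\{ i_1 i_2 \cdots i_k : \ i_j \in \{0, 1, \ldots, N-1\},\ \text{the label of } \mathfrak{F}_{i_1 i_2 \cdots i_k} \text{ is } c_1 c_2 \cdots c_k \big\}. \qquad (34)$$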

For given c1c2 ... ck, 𝔄c1c2...ck is a subset of {i1i2 ... ik |ij ∈ {0, 1, ..., N − 1}, ∀ j = 1, ..., k}. The following lemma gives us an estimate for the bound Σi1,i2...ik∈𝔄c1c2...ck E(Mi1i2Mi2i3 ...Mik−1ikMiki1).
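The labelling rule can be written as a short function, which also reproduces the two examples above. In this minimal sketch (standard library only, hypothetical function names), each edge weight is mapped to a class number the first time that weight appears along the cyclic traversal, and `class_sizes` returns the multiplicities s_1, …, s_p that appear in Lemma 5. Labels are returned as tuples, so they stay unambiguous even when p ≥ 10.

```python
def equivalence_label(indices):
    """Label c_1 ... c_k of the cyclic graph i_1 -> i_2 -> ... -> i_k -> i_1.

    Edge (i_l, i_{l+1}) has weight i_l + i_{l+1}; edges with equal weight form an
    equivalence class, and classes are numbered 1, 2, ... in order of first appearance.
    """
    k = len(indices)
    class_of_weight = {}
    label = []
    for l in range(k):
        weight = indices[l] + indices[(l + 1) % k]    # i_{k+1} = i_1
        if weight not in class_of_weight:
            class_of_weight[weight] = len(class_of_weight) + 1
        label.append(class_of_weight[weight])
    return tuple(label)

def class_sizes(label):
    """s_1, ..., s_p: number of occurrences of each class index in the label."""
    return [label.count(c) for c in range(1, max(label) + 1)]

print(equivalence_label([1, 4, 1, 3, 1, 4]))   # (1, 1, 2, 2, 1, 1)
print(equivalence_label([2, 3, 2, 4, 2, 3]))   # (1, 1, 2, 2, 1, 1)
print(class_sizes((1, 1, 2, 2, 1, 1)))         # [4, 2]
```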

Lemma 5

Let ζ be the Riemann zeta function and 𝔄c1c2...ck be defined in (34). Define B(s) = ln(N + 1) if s = 2 and B(s) = ζ (s/2) ≤ π2/6 for s ≥ 4. Then

| (35) |

where p is the number of equivalence classes appearing in c_1 c_2 … c_k, and s_ℓ, ℓ = 1, …, p, is the number of occurrences of ℓ in c_1 c_2 … c_k.

Proof

We begin by identifying the free indices of any i_1 i_2 … i_k in the set 𝔄_{c_1 c_2 … c_k}. Let (j_1, j_2) be the first edge of class 1. Then the weight of the first class is j_1 + j_2. For convenience, we define k_1(j_1) = j_1. The first edge of class 2 must have one vertex k_2(j_1, j_2), determined by j_1 and j_2, and one free vertex, denoted by j_3. The weight of the second class is k_2(j_1, j_2) + j_3. Similarly, the first edge of class 3 has a vertex k_3(j_1, j_2, j_3) and a free vertex j_4, with weight k_3(j_1, j_2, j_3) + j_4, and so on. Finally, the first edge of class p has a vertex k_p(j_1, j_2, …, j_p) and a free vertex j_{p+1}, with weight k_p(j_1, j_2, …, j_p) + j_{p+1}. Recall that the entry M_{ij} is g_{i+j}/√K_{i+j}, where g_{i+j} is a Gaussian random variable. Therefore, for any i_1 i_2 … i_k ∈ 𝔄_{c_1 c_2 … c_k},

| (36) |

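where $m_\ell = k_\ell(j_1, j_2, \ldots, j_\ell) + j_{\ell+1}$. Distinct classes have distinct weights $m_\ell$, so $g_{m_1}, \ldots, g_{m_p}$ are independent, and the odd moments of a centered Gaussian vanish. Therefore, the expectation in (36) is nonzero if and only if $s_1, s_2, \ldots, s_p$ are all even. In this case,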

| (37) |

Summing (37) over $\mathfrak{A}_{c_1c_2\ldots c_k}$, we obtain

Since, for any 0 ≤ c ≤ N − 1,

where $\zeta$ is the Riemann zeta function. With $B(s) = \ln(N+1)$ for $s = 2$ and $B(s) = \zeta(s/2) \le \pi^2/6$ for $s \ge 4$, the desired result follows.
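The evenness step in the proof of Lemma 5 rests on two standard facts: the classes have distinct weights, so the corresponding Gaussian variables are independent and their joint moment factorizes, and a standard Gaussian $g$ satisfies $E(g^s) = 0$ for odd $s$ and $E(g^s) = (s-1)!! = 1 \cdot 3 \cdots (s-1)$ for even $s$. The Python sketch below checks these moments exactly (up to rounding) with NumPy's probabilists' Gauss-Hermite rule; it illustrates the standard identity and is not a reconstruction of (37). The helper names are ours.

```python
import math

import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Probabilists' Gauss-Hermite rule: integrates p(x) * exp(-x**2 / 2) exactly for
# polynomials p of degree <= 2 * 20 - 1; the weights sum to sqrt(2 * pi).
NODES, WEIGHTS = hermegauss(20)


def gaussian_moment(s):
    """E[g**s] for g ~ N(0, 1), exact up to rounding for 0 <= s <= 39."""
    return float(np.sum(WEIGHTS * NODES**s) / math.sqrt(2 * math.pi))


def odd_double_factorial(s):
    """(s - 1)!! = 1 * 3 * ... * (s - 1) for even s >= 2."""
    return math.prod(range(1, s, 2))


for s in range(1, 9):
    expected = odd_double_factorial(s) if s % 2 == 0 else 0
    print(s, round(gaussian_moment(s), 10), expected)
```

For independent classes the moment is a product, e.g. $E(g_a^2 g_b^3) = E(g^2)\,E(g^3) = 0$, so a single odd $s_\ell$ kills the whole term.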

The desired bound for $E(\|\mathcal{G}g\|_2)$ can be obtained once we know how many different sets $\mathfrak{A}_{c_1c_2\ldots c_k}$ make up the set $\{i_1i_2\ldots i_k : i_j \in \{0, 1, \ldots, N-1\},\ j = 1, \ldots, k\}$. Let $\mathfrak{B}_{s_1s_2\ldots s_p}$ be the set of all labels with $p$ equivalence classes in which the $\ell$-th class contains $s_\ell$ equivalent edges, i.e.,

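$$\mathfrak{B}_{s_1s_2\ldots s_p} = \left\{ c_1c_2\ldots c_k \,:\, c_1c_2\ldots c_k \text{ is a label with } p \text{ equivalence classes in which } \ell \text{ occurs exactly } s_\ell \text{ times},\ \ell = 1, \ldots, p \right\}. \qquad (38)$$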

Let $\mathfrak{C}_p$ be the set of all possible choices of $p$ positive even numbers $s_1, \ldots, s_p$ satisfying $s_1 + s_2 + \cdots + s_p = k$. Then

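$$E(\operatorname{tr}(M^k)) = \sum_{p=1}^{k/2} \sum_{s_1s_2\ldots s_p \in \mathfrak{C}_p} \sum_{c_1c_2\ldots c_k \in \mathfrak{B}_{s_1s_2\ldots s_p}} \sum_{i_1i_2\ldots i_k \in \mathfrak{A}_{c_1c_2\ldots c_k}} E(M_{i_1i_2}M_{i_2i_3}\cdots M_{i_{k-1}i_k}M_{i_ki_1}). \qquad (39)$$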
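Dividing each $s_\ell$ by 2 turns an element of $\mathfrak{C}_p$ into a composition of $k/2$ into $p$ positive parts, so, by the standard stars-and-bars count, $|\mathfrak{C}_p| = \binom{k/2-1}{p-1}$ for even $k$. This closed form is our addition for orientation; the proof below bounds $\mathfrak{C}_p$ through its own argument. A small Python enumeration (the helper name is hypothetical) confirms it:

```python
from math import comb


def even_compositions(k, p):
    """All tuples (s_1, ..., s_p) of positive even integers with sum k (the set C_p)."""
    if p == 0:
        return [()] if k == 0 else []
    result = []
    # s_1 takes even values that leave at least 2 for each of the other p - 1 parts.
    for first in range(2, k - 2 * (p - 1) + 1, 2):
        for rest in even_compositions(k - first, p - 1):
            result.append((first,) + rest)
    return result


k = 8
for p in range(1, k // 2 + 1):
    C_p = even_compositions(k, p)
    print(p, len(C_p), comb(k // 2 - 1, p - 1), C_p)
```

For $k = 8$ and $p = 2$, for example, the enumeration returns $(2, 6)$, $(4, 4)$ and $(6, 2)$, matching $\binom{3}{1} = 3$.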

By bounding the cardinalities of $\mathfrak{B}_{s_1s_2\ldots s_p}$ and $\mathfrak{C}_p$, we can bound $E(\operatorname{tr}(M^k))$, and hence $E(\|\mathcal{G}g\|_2)$, in the real case. The complex case then follows directly from the real case. We are now in a position to prove Theorem 3.

Proof of Theorem 3

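Following (39), we need to count the elements of $\mathfrak{B}_{s_1s_2\ldots s_p}$. For any $c_1c_2\ldots c_k \in \mathfrak{B}_{s_1s_2\ldots s_p}$, we must have $c_1 = 1$. Therefore, there are $\binom{k-1}{s_1-1}$ choices for the positions of the remaining 1's in $c_1c_2\ldots c_k$. Once the positions of the 1's are fixed, the first 2 must occupy the first available slot, so there are $\binom{k-s_1-1}{s_2-1}$ choices for the positions of the remaining 2's, and so on. Thus,

$$|\mathfrak{B}_{s_1s_2\ldots s_p}| \le \prod_{\ell=1}^{p} \binom{k - s_1 - \cdots - s_{\ell-1} - 1}{s_\ell - 1},$$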

which, together with (35), implies that, for any $s_1s_2\ldots s_p \in \mathfrak{C}_p$,

| (40) |
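The counting argument for $\mathfrak{B}_{s_1s_2\ldots s_p}$ can be checked by brute force for small $k$. The Python sketch below enumerates all strings over $\{1, \ldots, p\}$ in which the labels first appear in the order $1, 2, \ldots, p$ and label $\ell$ occurs exactly $s_\ell$ times, and compares their number with the product $\prod_{\ell=1}^{p}\binom{k - s_1 - \cdots - s_{\ell-1} - 1}{s_\ell - 1}$ that the argument produces (the first free slot is forced for each new label). If some of these strings are not realized by any graph, the count is only an upper bound on $|\mathfrak{B}_{s_1s_2\ldots s_p}|$. The function names are illustrative.

```python
from itertools import product
from math import comb


def first_appearance_ordered(c):
    """True if the labels 1, 2, 3, ... first occur in increasing order (so c[0] == 1)."""
    largest = 0
    for x in c:
        if x > largest + 1:
            return False
        largest = max(largest, x)
    return True


def count_labels_brute_force(sizes):
    """Number of label strings in which label l + 1 occurs exactly sizes[l] times."""
    p, k = len(sizes), sum(sizes)
    return sum(
        1
        for c in product(range(1, p + 1), repeat=k)
        if first_appearance_ordered(c)
        and all(c.count(l + 1) == s for l, s in enumerate(sizes))
    )


def count_labels_binomial(sizes):
    """Product of binom(k - s_1 - ... - s_{l-1} - 1, s_l - 1) over l = 1, ..., p."""
    remaining, total = sum(sizes), 1
    for s in sizes:
        # The first free slot is forced; choose slots for the other s - 1 copies.
        total *= comb(remaining - 1, s - 1)
        remaining -= s
    return total


for sizes in [(4, 2), (2, 4), (2, 2, 2), (4, 2, 2)]:
    print(sizes, count_labels_brute_force(sizes), count_labels_binomial(sizes))
```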

Summing (40) over $\mathfrak{C}_p$ yields

| (41) |

Let us estimate the sum in the last line of (41). Let $s$ be the number of 2's in $s_1s_2\ldots s_p$. Then,

| (42) |

Since each of $s_1, \ldots, s_p$ is at least 2, and the remaining $p - s$ of them, being even and different from 2, are at least 4, we have

| (43) |

and $k - s_1 - \cdots - s_\ell = s_{\ell+1} + \cdots + s_p \ge 2(p - \ell)$, which implies

| (44) |

There are $\binom{p}{s}$ choices for the positions of the $s$ 2's. Moreover, once the positions of the $s$ 2's in $s_1s_2\ldots s_p$ are chosen, there are at most

choices for the remaining $p - s$ values $s_j$. Altogether,

| (45) |
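As a sanity check on this split by the number $s$ of 2's, the classes can be enumerated directly. By stars and bars (our own derivation, added for illustration), the number of $s_1s_2\ldots s_p \in \mathfrak{C}_p$ with exactly $s < p$ entries equal to 2 is $\binom{p}{s}\binom{k/2-p-1}{p-s-1}$, because the other $p - s$ entries are even, at least 4, and sum to $k - 2s$; when $s = p$ the count is 1 if $k = 2p$ and 0 otherwise. Any upper bound on the number of choices in this step must dominate this exact count. The Python sketch below, with hypothetical helper names, compares the formula with brute force:

```python
from itertools import product
from math import comb


def count_with_s_twos_brute(k, p, s):
    """Brute force: tuples of p positive even integers summing to k with exactly s twos."""
    values = range(2, k + 1, 2)
    return sum(1 for t in product(values, repeat=p) if sum(t) == k and t.count(2) == s)


def count_with_s_twos_formula(k, p, s):
    """binom(p, s) placements of the 2's times compositions of the other p - s entries."""
    rest = p - s          # entries that are even and at least 4
    excess = k // 2 - p   # sum of (s_j / 2 - 1) over all entries; the 2's contribute 0
    if rest == 0:
        return 1 if excess == 0 else 0
    if excess < rest:     # each remaining entry needs s_j / 2 - 1 >= 1
        return 0
    return comb(p, s) * comb(excess - 1, rest - 1)


k, p = 12, 4
for s in range(p + 1):
    print(s, count_with_s_twos_brute(k, p, s), count_with_s_twos_formula(k, p, s))
```

Summing the formula over $s$ recovers $|\mathfrak{C}_p| = \binom{k/2-1}{p-1}$.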

Finally, summing (45) over all possible $p$, we obtain

| (46) |

By using the fact that, for any A > 0,

(46) is rearranged into

Let $k$ be the smallest even integer greater than . Then the inequality $\|M\|_2 \le (\operatorname{tr}(M^k))^{1/k}$, valid for symmetric $M$ and even $k$, leads to

where $C_1$ is a universal constant.
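The inequality $\|M\|_2 \le (\operatorname{tr}(M^k))^{1/k}$ holds for a real symmetric $M$ and even $k$: if $\lambda_1, \ldots, \lambda_N$ are the eigenvalues of $M$, then $\operatorname{tr}(M^k) = \sum_i \lambda_i^k$ lies between $\max_i|\lambda_i|^k$ and $N\max_i|\lambda_i|^k$. The bound therefore loses at most a factor $N^{1/k}$, which is at most $e$ once $k \ge \ln N$. The Python sketch below checks both sides on a random real Hankel matrix $M_{ij} = g_{i+j}$; it omits whatever normalization $\mathcal{G}$ applies to the entries, which does not affect the inequality.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 64
g = rng.standard_normal(2 * N - 1)
i, j = np.indices((N, N))
M = g[i + j]  # real Hankel matrix, hence symmetric

eigenvalues = np.linalg.eigvalsh(M)
spectral_norm = np.max(np.abs(eigenvalues))
for k in (2, 4, 8, 16, 32):
    # tr(M^k) = sum_i lambda_i^k, computed from the eigenvalues to avoid forming M^k
    trace_root = np.sum(eigenvalues**k) ** (1.0 / k)
    print(k, spectral_norm, trace_root, N ** (1.0 / k) * spectral_norm)
```

The printed columns show the lower bound $\|M\|_2$, the trace bound, and the worst-case value $N^{1/k}\|M\|_2$; the gap between the first two shrinks as $k$ grows.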

Next, we consider the complex case, in which $g \in \mathbb{C}^{2N-1}$ and both its real and imaginary parts have i.i.d. Gaussian entries. Write $g = \xi + \imath\eta$, where $\xi, \eta \in \mathbb{R}^{2N-1}$ are real Gaussian random vectors. From the real case above, we derive

Therefore,

3.6. Proof of Theorem 1

With Lemmas 1, 2 and 4 and Theorem 3 in hand, we are now in a position to prove Theorem 1.

Proof of Theorem 1

Since (10) is equivalent to (4) via the relation $y = Dx$, we only need to prove that, with high probability, $\hat{y} = \tilde{y}$ for noise-free data and $\|\hat{y} - \tilde{y}\|_2 \le 2\delta/\varepsilon$ for noisy data. By Lemma 1, it suffices to prove (13). By Lemma 2,

Lemma 4, Theorem 3, and the inequality imply that

When , we easily obtain , which completes the proof.

4. Extension to structured low-rank matrix reconstruction

In this section, we extend our results to low-rank Hankel matrix reconstruction and low-rank Toeplitz matrix reconstruction from their Gaussian measurements.

Since the proof of Theorem 1 does not use the property that $\hat{y}$ comes from an exponential signal, Theorem 1 holds for any low-rank Hankel matrix. This yields the following corollary: any $N \times N$ Hankel matrix of rank $R$ can be recovered exactly from $O(R\ln^2 N)$ Gaussian measurements, and the reconstruction is robust to noise.

Corollary 1 (Low-rank Hankel matrix reconstruction)

Let $\hat{H} \in \mathbb{C}^{N \times N}$ be a Hankel matrix of rank $R$, and let $\hat{x} \in \mathbb{C}^{2N-1}$ satisfy $\hat{x}_{i+j} = \hat{H}_{ij}$ for $0 \le i, j \le N-1$. Let $A = BD \in \mathbb{C}^{M \times (2N-1)}$, where $B \in \mathbb{C}^{M \times (2N-1)}$ is a random matrix whose real and imaginary parts have i.i.d. Gaussian entries with mean 0 and variance 1, and $D \in \mathbb{R}^{(2N-1) \times (2N-1)}$ is as defined in Theorem 1. Then there exists a universal constant $C_1 > 0$ such that, for any $\varepsilon > 0$, if

then, with probability at least , we have

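- $H(\tilde{x}) = \hat{H}$, where $\tilde{x}$ is the unique solution of
$$\min_{x \in \mathbb{C}^{2N-1}} \|H(x)\|_* \quad \text{subject to} \quad Ax = b$$
with $b = A\hat{x}$;
- $\|H(\tilde{x}) - \hat{H}\|_F \le 2\delta/\varepsilon$, where $\tilde{x}$ is the unique solution of
$$\min_{x \in \mathbb{C}^{2N-1}} \|H(x)\|_* \quad \text{subject to} \quad \|Ax - b\|_2 \le \delta$$
with $\|b - A\hat{x}\|_2 \le \delta$.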
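The objects in Corollary 1 are easy to build explicitly. The Python sketch below (helper names are ours) forms $H(x)$ with $H(x)_{ij} = x_{i+j}$, recovers $\hat{x}$ from $\hat{H}$ via $\hat{x}_{i+j} = \hat{H}_{ij}$, and checks that a sum of $R$ damped exponentials gives a rank-$R$ Hankel matrix. It also checks the identity $\|H(x)\|_F = \|\operatorname{diag}(\sqrt{w})\,x\|_2$, where $w_a$ counts the entries on the $a$-th anti-diagonal. We assume, without confirming it against Theorem 1, that this is the role of $D$; under that assumption the Frobenius error in the corollary equals the $\ell_2$ error $\|\hat{y} - \tilde{y}\|_2$ of Theorem 1.

```python
import numpy as np


def hankel_op(x):
    """H(x): the N x N Hankel matrix with H[i, j] = x[i + j], for len(x) = 2N - 1."""
    N = (len(x) + 1) // 2
    i, j = np.indices((N, N))
    return x[i + j]


def hankel_to_vector(H):
    """Inverse of hankel_op: x[i + j] = H[i, j] (first column, then rest of last row)."""
    return np.concatenate([H[:, 0], H[-1, 1:]])


def antidiagonal_lengths(N):
    """w[a] = number of pairs (i, j) in {0, ..., N-1}^2 with i + j = a."""
    a = np.arange(2 * N - 1)
    return np.minimum(a + 1, 2 * N - 1 - a)


rng = np.random.default_rng(0)
N, R = 16, 3
a = np.arange(2 * N - 1)
z = np.exp(-0.05 * rng.random(R) + 2j * np.pi * rng.random(R))  # damped modes
c = rng.standard_normal(R) + 1j * rng.standard_normal(R)
x_hat = (z[None, :] ** a[:, None]) @ c  # x_hat[a] = sum_k c_k * z_k**a
H_hat = hankel_op(x_hat)

print(np.linalg.matrix_rank(H_hat))  # equals R when the modes z_k are distinct
print(np.allclose(hankel_to_vector(H_hat), x_hat))
print(np.isclose(np.linalg.norm(H_hat, "fro"),
                 np.linalg.norm(np.sqrt(antidiagonal_lengths(N)) * x_hat)))
```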

Moreover, Theorem 1 can be extended to the reconstruction of a low-rank Toeplitz matrix from its Gaussian measurements. Let $\hat{T} \in \mathbb{C}^{N \times N}$ be a Toeplitz matrix, and let $\hat{x} \in \mathbb{C}^{2N-1}$ be the vector satisfying $\hat{x}_{N-1+(i-j)} = \hat{T}_{i,j}$ for $0 \le i, j \le N-1$. Let $P \in \mathbb{R}^{N \times N}$ be the exchange matrix, which has ones on the anti-diagonal and zeros elsewhere. Then it is easy to check that $\hat{T} = H(\hat{x})P$. Thus, we define a linear operator $T$ that maps a vector in $\mathbb{C}^{2N-1}$ to an $N \times N$ Toeplitz matrix by $T(x) = H(x)P$. Since $P$ is unitary, $\|T(x)\|_* = \|H(x)P\|_* = \|H(x)\|_*$. Therefore, the above corollary can be adapted to low-rank Toeplitz matrices.
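The identity $\hat{T} = H(\hat{x})P$ and the invariance of the nuclear norm can be checked numerically. In this Python sketch (helper names are ours), $P$ is the exchange matrix built with `np.fliplr(np.eye(N))`, so $(H(x)P)_{ij} = H(x)_{i,N-1-j} = x_{N-1+(i-j)}$:

```python
import numpy as np


def hankel_op(x):
    """H(x) with H[i, j] = x[i + j] for len(x) = 2N - 1."""
    N = (len(x) + 1) // 2
    i, j = np.indices((N, N))
    return x[i + j]


def toeplitz_op(x):
    """T(x) = H(x) P, with P the N x N exchange (anti-identity) matrix."""
    N = (len(x) + 1) // 2
    P = np.fliplr(np.eye(N))
    return hankel_op(x) @ P


rng = np.random.default_rng(0)
N = 8
x = rng.standard_normal(2 * N - 1) + 1j * rng.standard_normal(2 * N - 1)
T = toeplitz_op(x)
i, j = np.indices((N, N))

print(np.allclose(T, x[N - 1 + i - j]))  # T[i, j] = x[N - 1 + (i - j)]
print(np.isclose(np.linalg.norm(T, "nuc"), np.linalg.norm(hankel_op(x), "nuc")))
```

Because right-multiplication by $P$ only permutes columns, every singular value of $H(x)$ is preserved, which is why the nuclear norms agree.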

5. Numerical experiments

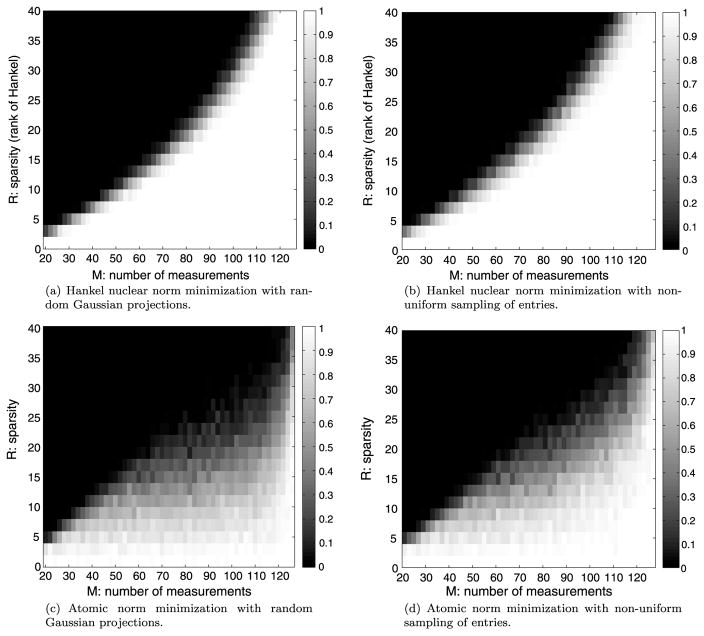

In this section, we use numerical experiments to demonstrate the empirical performance of our proposed approach and compare it with other methods, including those in [10,23]. We use superpositions of complex sinusoids as test signals. Note that our approach is not limited to such signals: it applies to any superposition of complex exponentials. We consider two sampling schemes, namely, the random Gaussian sampling model in Theorem 1 and the non-uniform sampling of entries studied in [10,23]. The latter scheme observes $M$ entries of $\hat{x}$ whose locations are drawn uniformly at random from all $M$-subsets of $\{0, 1, \ldots, 2N-2\}$. We also consider two algorithms for reconstructing the signal from the given samples: Hankel nuclear norm minimization and atomic norm minimization. This gives four approaches to compare: Hankel nuclear norm minimization with random Gaussian sampling (our proposed approach), Hankel nuclear norm minimization with non-uniform sampling of entries (EMaC in [10]), atomic norm minimization with random Gaussian sampling, and atomic norm minimization with non-uniform sampling of entries (off-the-grid CS in [23]).
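The two sampling schemes can be sketched in a few lines of Python. The entry-sampling function draws the index set uniformly from all $M$-subsets of $\{0, 1, \ldots, 2N-2\}$, as described above. For the Gaussian scheme we form $A = BD$ with $B$ having i.i.d. standard Gaussian real and imaginary parts, as in Corollary 1. Since $D$ is defined in Theorem 1 and not restated here, we assume $D = \operatorname{diag}(\sqrt{w_0}, \ldots, \sqrt{w_{2N-2}})$, with $w_a$ the length of the $a$-th anti-diagonal of $H(x)$; that choice and the function names are ours.

```python
import numpy as np


def gaussian_measurements(x, M, rng):
    """Return (b, A) with b = A x and A = B D.

    B has i.i.d. N(0, 1) real and imaginary parts. ASSUMPTION: D = diag(sqrt(w)),
    where w[a] is the number of entries on the a-th anti-diagonal of H(x).
    """
    n = len(x)  # n = 2N - 1
    a = np.arange(n)
    w = np.minimum(a + 1, n - a)
    B = rng.standard_normal((M, n)) + 1j * rng.standard_normal((M, n))
    A = B * np.sqrt(w)  # scales column a by sqrt(w[a]), i.e. B @ diag(sqrt(w))
    return A @ x, A


def entry_samples(x, M, rng):
    """Observe M entries of x at an index set drawn uniformly from all M-subsets."""
    omega = np.sort(rng.choice(len(x), size=M, replace=False))
    return x[omega], omega


rng = np.random.default_rng(0)
x = rng.standard_normal(127) + 1j * rng.standard_normal(127)  # N = 64
b, A = gaussian_measurements(x, 30, rng)
values, omega = entry_samples(x, 30, rng)
print(b.shape, A.shape, omega[:5])
```

Both functions return $M$ numbers, so the two schemes can be compared at the same measurement budget.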

We fix $N = 64$, i.e., the true signal $\hat{x}$ has dimension 127. We run experiments for a range of values of $M$ and $R$ for each approach. For each approach and each fixed pair $(M, R)$, we perform 100 runs, each executed as follows. We first generate the true signal $\hat{x} = [\hat{x}(0), \hat{x}(1), \ldots, \hat{x}(126)]^T$ with $\hat{x}(t) = \sum_{k=1}^{R} c_k e^{i2\pi f_k t}$ for $t = 0, 1, \ldots, 126$, where the frequencies $f_k$ are drawn uniformly at random from the interval $[0, 1]$, and the complex coefficients are $c_k = (1 + 10^{0.5 m_k})e^{i2\pi\theta_k}$, with $m_k$ and $\theta_k$ drawn uniformly at random from $[0, 1]$. We then take $M$ samples of $\hat{x}$ according to the corresponding sampling scheme. Finally, a reconstruction $\tilde{x}$ is obtained by solving the corresponding reconstruction problem, which is implemented numerically with the alternating direction method of multipliers (ADMM). If , then we regard it as a successful reconstruction.
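A minimal Python sketch of the test-signal generator described above, assuming the undamped model $\hat{x}(t) = \sum_{k=1}^{R} c_k e^{i2\pi f_k t}$ with $c_k = (1 + 10^{0.5 m_k})e^{i2\pi\theta_k}$; the ADMM solvers and the success threshold are not reproduced, and the function name is hypothetical. As a quick check of the low-rank structure the method relies on, the Hankel matrix with entries $\hat{x}(i+j)$ has rank $R$ when the frequencies are distinct.

```python
import numpy as np


def random_test_signal(N, R, rng):
    """x(t) = sum_k c_k exp(i 2 pi f_k t), t = 0, ..., 2N - 2."""
    t = np.arange(2 * N - 1)
    f = rng.random(R)      # frequencies drawn uniformly from [0, 1]
    m = rng.random(R)      # magnitude parameters m_k in [0, 1]
    theta = rng.random(R)  # phase parameters theta_k in [0, 1]
    c = (1 + 10 ** (0.5 * m)) * np.exp(2j * np.pi * theta)
    return np.exp(2j * np.pi * np.outer(t, f)) @ c


rng = np.random.default_rng(0)
N, R = 64, 5
x_hat = random_test_signal(N, R, rng)
i, j = np.indices((N, N))
H = x_hat[i + j]  # Hankel matrix H[i, j] = x_hat(i + j)
print(x_hat.shape, np.linalg.matrix_rank(H))
```

The coefficient moduli $|c_k| = 1 + 10^{0.5 m_k}$ lie in $[2, 1 + \sqrt{10}]$, so the dynamic range between components stays moderate.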

Fig. 1 plots the rate of successful reconstruction for different $M$ and $R$ and for each approach. Black and white regions indicate success rates of 0% and 100%, respectively, and grey regions indicate rates in between. The figure shows that atomic norm minimization performs similarly under random Gaussian sampling and non-uniform sampling of entries. Likewise, Hankel nuclear norm minimization performs similarly under the two sampling schemes. Compared with atomic norm minimization, Hankel nuclear norm minimization is more robust when neighboring frequencies are close, regardless of the sampling scheme.

Fig. 1.

Numerical results.

6. Conclusion and future work

In this paper, we study compressed sensing of signals that are weighted sums of $R$ complex exponential functions, with or without damping factors. The measurements are obtained by random Gaussian projections. We prove that, as long as the number of measurements exceeds $O(R\ln^2 N)$, where $2N - 1$ is the dimension of the signal, minimization (4) yields a robust reconstruction of the underlying signal with high probability. Compared to the results in [10,23], where a subset of the entries of the underlying signal is observed, our approach does not require any incoherence condition (i.e., it requires no separation condition on the frequencies for signals without damping).

The bound $O(R\ln^2 N)$ we obtained is not optimal, and there are several possible directions for improving it. First, we may improve the estimate of $E(\|\mathcal{G}g\|_2)$, a key step in our proof. We empirically observed that , which is better than the bound in Theorem 3; we would like to prove this bound theoretically. Second, we may borrow techniques from compressed sensing to obtain the optimal bound under Gaussian measurements. Indeed, recent work yields precise bounds [17,18,22]. These results assume Gaussian measurements, but all quantities involved are real-valued. It would be interesting to explore whether they can be extended to our complex-valued setting.

Acknowledgments

Sponsors:

- NSF (United States), grant DMS-1418737
- National Natural Science Foundation of China (China), grants 61571380 and 61201045
- Simons Foundation (United States)
- NIH (United States), grant 1R01EB020665-01

References

- 1. Borcea L, Papanicolaou G, Tsogka C, Berryman J. Imaging and time reversal in random media. Inverse Probl. 2002;18:1247–1279.

- 2. Cai JF, Candès EJ, Shen Z. A singular value thresholding algorithm for matrix completion. SIAM J Optim. 2010;20:1956–1982.

- 3. Candès E, Li X, Ma Y, Wright J. Robust principal component analysis? J ACM. 2011:1–37.

- 4. Candès E, Fernandez-Granda C. Towards a mathematical theory of super-resolution. Comm Pure Appl Math. 2014;67(6):906–956.

- 5. Candès E, Plan Y. Matrix completion with noise. Proc IEEE. 2009;98:925–936.

- 6. Candès E, Recht B. Exact matrix completion via convex optimization. Found Comput Math. 2009;9:717–772.

- 7. Candès E, Tao T. The power of convex relaxation: near-optimal matrix completion. IEEE Trans Inform Theory. 2010;56:2053–2080.

- 8. Candès EJ, Romberg J, Tao T. Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans Inform Theory. 2006;52:489–509.

- 9. Chandrasekaran V, Recht B, Parrilo PA, Willsky AS. The convex geometry of linear inverse problems. Found Comput Math. 2012;12:805–849.

- 10. Chen Y, Chi Y. Robust spectral compressed sensing via structured matrix completion. IEEE Trans Inform Theory. 2014;60:6576–6601.

- 11. Chi Y, Scharf LL, Pezeshki A, Calderbank AR. Sensitivity to basis mismatch in compressed sensing. IEEE Trans Signal Process. 2011;59:2182–2195.

- 12. Donoho DL. Compressed sensing. IEEE Trans Inform Theory. 2006;52:1289–1306.

- 13. Fazel M, Pong TK, Sun D, Tseng P. Hankel matrix rank minimization with applications to system identification and realization. SIAM J Matrix Anal Appl. 2013;34:946–977.

- 14. Gordon Y. On Milman's inequality and random subspaces which escape through a mesh in ℝ^n. In: Geometric Aspects of Functional Analysis (1986/87). Lecture Notes in Math, vol. 1317. Berlin: Springer; 1988. pp. 84–106.

- 15. Hua Y, Sarkar TK. Matrix pencil method for estimating parameters of exponentially damped/undamped sinusoids in noise. IEEE Trans Acoust Speech Signal Process. 1990;38:814–824.

- 16. Lustig M, Donoho D, Pauly JM. Sparse MRI: the application of compressed sensing for rapid MR imaging. Magn Reson Med. 2007;58:1182–1195. doi:10.1002/mrm.21391.

- 17. Oymak S, Thrampoulidis C, Hassibi B. The squared-error of generalized LASSO: a precise analysis. In: 51st Annual Allerton Conference on Communication, Control, and Computing (Allerton). IEEE; 2013. pp. 1002–1009.

- 18. Thrampoulidis C, Oymak S, Hassibi B. Simple error bounds for regularized noisy linear inverse problems. In: IEEE International Symposium on Information Theory. IEEE; 2014. pp. 3007–3011.

- 19. Qu X, Mayzel M, Cai JF, Chen Z, Orekhov V. Accelerated NMR spectroscopy with low-rank reconstruction. Angew Chem Int Ed. 2015;54:852–854. doi:10.1002/anie.201409291.

- 20. Recht B, Fazel M, Parrilo P. Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev. 2010;52:471–501.

- 21. Rockafellar RT. Convex Analysis. Princeton Landmarks in Mathematics. Princeton, NJ: Princeton University Press; 1997. Reprint of the 1970 original.

- 22. Stojnic M. A framework to characterize performance of lasso algorithms. arXiv:1303.7291; 2013.

- 23. Tang G, Bhaskar BN, Shah P, Recht B. Compressive sensing off the grid. IEEE Trans Inform Theory. 2013;59:7465–7490.

- 24. Tao T. Topics in Random Matrix Theory. Providence, RI: American Mathematical Society; 2012.

- 25. Tropp JA, Laska JN, Duarte MF, Romberg JK, Baraniuk RG. Beyond Nyquist: efficient sampling of sparse bandlimited signals. IEEE Trans Inform Theory. 2010;56:520–544.

- 26. Roy R, Kailath T. ESPRIT-estimation of signal parameters via rotational invariance techniques. IEEE Trans Acoust Speech Signal Process. 1989;37(7):984–995.

- 27. Liao W, Fannjiang A. MUSIC for single-snapshot spectral estimation: stability and super-resolution. arXiv:1404.1484.

- 28. Moitra A. Super-resolution, extremal functions and the condition number of Vandermonde matrices. In: Proceedings of the 47th Annual ACM Symposium on Theory of Computing (STOC); 2015.