Abstract

Objective. To examine the extent of financial and faculty resources dedicated to preparing students for NAPLEX and PCOA examinations, and how these investments compare with NAPLEX pass rates.

Methods. A 23-item survey was administered to assessment professionals in U.S. colleges and schools of pharmacy (C/SOPs). Institutions were compared by type, age, and student cohort size. Institutional differences were explored according to the costs and types of NAPLEX and PCOA preparation provided, if any, and mean NAPLEX pass rates.

Results. Of 134 C/SOPs that received the survey invitation, 91 responded. Nearly 80% of these respondents reported providing some form of NAPLEX preparation. Significantly higher 2015 mean NAPLEX pass rates were found in public institutions, schools that do not provide NAPLEX prep, and schools spending less than $10,000 annually on NAPLEX prep. Only 18 schools reported providing PCOA preparation.

Conclusion. Investment in NAPLEX and PCOA preparation resources varies widely across C/SOPs but may increase in the next few years because of dropping NAPLEX pass rates and depending on how PCOA data are used.

Keywords: NAPLEX, PCOA, licensing exam preparation

Introduction

A standardized national examination is a common requirement for entry into practice in most health care professions. All doctor of pharmacy (PharmD) graduates are required to take the North American Pharmacist Licensure Examination (NAPLEX),1 which was developed by the National Association of Boards of Pharmacy (NABP) as part of the state licensure process. The stated objectives of the NAPLEX are to evaluate whether students are able to identify practice standards for safe and effective pharmacotherapy, optimize therapeutic outcomes in patients, identify and determine safe and accurate methods to prepare and dispense medications, and provide and apply health care information to promote optimal health care. The NAPLEX has been used for decades as a valid measure of minimum competence for the practice of pharmacy, although Newton and colleagues cautioned that the “NAPLEX is not and cannot be validated to solely assess the vast and variable quantity of instruction provided in pharmacy education programs that prepare pharmacists for lifelong careers of learning.”2

Performance on licensing exams is of concern to both students and colleges/schools of pharmacy (C/SOPs). A PharmD graduate needs a minimum scaled score of 75 (range 0-150) to pass the NAPLEX. Candidates who fail the NAPLEX must wait 45 days to try again, with a maximum of three attempts in a 12-month period allowed.3 A study of nursing graduates found that failure to pass a licensing exam can cause the graduate to suffer delayed employment, loss of income, and harm to self-esteem.4 C/SOPs are also concerned about their graduates’ performance on licensing exams because a high pass rate is perceived as an indicator of the quality and effectiveness of the school’s PharmD curriculum. Three years of C/SOP NAPLEX pass rates are publicly available on the NABP website.5 The Accreditation Council for Pharmacy Education (ACPE) annually monitors program data for negative trends or changes in outcomes, and cautionary letters are sent to C/SOPs with NAPLEX pass rates and/or mean scaled scores for first-time candidates below two standard deviations from the national averages.6 ACPE also requires NAPLEX pass rates to be publicly disclosed on school websites. The public disclosure data may influence future applicants regarding school choice, and poor NAPLEX pass rates could have a negative impact on student recruitment.

NABP developed another standardized examination product, the Pharmacy Curriculum Outcomes Assessment (PCOA) and first launched it in April 2008 for use by C/SOPs in evaluating their PharmD curricula.7 ACPE now requires all C/SOPs to provide the annual PCOA performance of students nearing the end of their didactic curriculum. Although the large majority of C/SOPs were not using the PCOA prior to the 2016 mandate,8 ACPE asserts in Standards 2016, Guidance 24g that the PCOA “provides a valid and reliable assessment of student competence in the four broad science domains of the didactic curriculum.”9 The PCOA is not currently included in ACPE’s annual program data monitoring or public disclosure requirement and there is no mandated national passing score. However, given that Standards 2016 require C/SOPs to report annual PCOA performance along with other indicators such as NAPLEX pass rate and mean scaled scores, progression rates, and attrition rates, one must wonder if the PCOA will eventually become pharmacy’s version of the US Medical Licensing Exam Step 1 (USMLE Step 1).10

Given the high-stakes nature of licensure exams, both students and schools have been willing to invest time and money in a multitude of preparation products and activities such as books, commercial review courses, websites, and internally developed programs.11-15 Most of the existing literature regarding use of preparation products and activities for licensure exams comes from health disciplines other than pharmacy, including medicine and dentistry. Studies in the medical literature have found conflicting results on whether students who participate in commercial preparation courses score significantly higher on licensing exams than their counterparts. An early study by Scott and colleagues16 conducted in 1979 found that students who participated in a commercial test preparation course scored significantly higher on the USMLE Step 1 than students who did not participate in the course. However, more recent studies have not reached similar conclusions.

In 2000, Thadani and colleagues conducted a survey on how medical students prepared for the USMLE Step 1 examination and investigated the relationship between preparation and test scores. Ninety-eight percent of the 1650 respondents had used a commercial preparation program. Other preparations included lecture notes (39%), note-taking services (6%), textbooks (44%), course syllabi (21%), school preparation materials (25%), and group studying (25%). Preparations that significantly correlated with better performance were use of the USMLE general instructions book, textbooks, course syllabi, and study materials provided by the school.15 Werner and Bull found that students who participated in a 3-4 week live commercial coaching course did not achieve higher scores on the USMLE Step 1 than those who studied on their own.17 In 2004 Zhang and colleagues found that student performance on the USMLE Step 1 is related to academic performance in medical school and not the type of preparation methods.18

Medical schools have tried other methods of preparation besides commercial products to help their students prepare for the USMLE Step 1. In 2010, one medical school developed an optional student-led review course.11 Their study found that students who participated in the review course had a higher average score on the USMLE Step 1 than those who did not attend the review. Those who attended also felt the course was a valuable use of their time.

Other studies have surveyed medical students to explore relationships between particular study habits and higher USMLE Step 1 examination scores. A 2014 study found the only predictor of Step 1 scores was the Concentration score on the Learning and Study Strategy Inventory.19 A 2015 study found that medical students who studied 8-11 hours per day had higher USMLE Step 1 scores but that studying longer than 11 hours per day added no further benefit.20 Other behaviors that led to higher scores included studying for <40 days and completing >2000 practice questions. Behaviors that did not lead to higher scores were studying in groups, spending a majority of study time on practice questions, or taking longer than 40 days to prepare for the exam.

A longitudinal study (1996-2003) at a college of dentistry found that an internally constructed mock board exam on performance and clinical productivity correlated with passage of the Florida Dental Licensure Exam.21 In 2009, Hawley and colleagues found no correlation between the National Board Dental Examination (NBDE) Part I scores and various types of study aids, although they did find a weak correlation between NBDE Part I score and the number of hours studied per week (but not the number of months).13 They also found a significant relationship between NBDE Part I score and the length of dedicated curricular “release” time for students to study for the exam.

In pharmacy education, there is limited literature on how C/SOPs prepare their students for the NAPLEX and PCOA examinations. A 2004 study reviewed NAPLEX preparation tools that were most commonly used by graduates of two Indiana pharmacy schools and surveyed graduates regarding which tools they felt were most valuable.22 Respondents (N=60) reported the most commonly used tools were a law review conducted by Purdue University and three commercial preparation products. Students felt these products were all valuable and were representative of the content of the licensure exam; however, none of the tools were found to be associated with a higher examination pass rate.

In 2014 a college of pharmacy studied an elective activity in which 38 third-year PharmD students (3-year program) generated and presented posters on pharmacotherapeutic topics to their peers.14 Students who participated in the activity performed better on both a commercial review book’s practice examination and the NAPLEX. Investigators found that scores on the commercial practice examination predicted 34% of the variance seen in the students’ NAPLEX scores. Furthermore, students perceived that the activity assisted them in their NAPLEX preparation. However, the small sample size may limit the predictive value of this study. There may also have been self-selection bias as academically stronger and/or residency-seeking students may have had greater motivation to participate in the poster presentations.

The literature on the PCOA is also limited, likely due to the lack of guidance on its use from ACPE prior to its required use by all C/SOPs in the 2016 standards. Scott and colleagues studied the relationship between GPA and PCOA scale score among P1, P2, and P3 students at one C/SOP from 2008 to 2010 and noted a positive correlation, which suggests that students who test well in courses can also succeed on comprehensive examinations.23 The authors raised the salient point that minimal national participation in the PCOA limited the conclusions that can be drawn by comparing school results to the reference sample. Moreover, without either incentives or consequences, students may lack motivation to do their best on the exam, which skews both their performance and the school results.

In 2015, Gortney and colleagues surveyed C/SOPs that have used or are using the PCOA.8 Forty-one of the 52 (79%) respondents reported using it for programmatic assessment and benchmarking, and nearly 90% were using PCOA with no or low stakes for students. Schools reported encouraging student performance by conveying (“messaging”) that their PCOA performance is reflective of program quality and effectiveness, and providing the PCOA results to student advisors. Use of the PCOA for evidence-based curricular improvement continues to be unclear at best.

C/SOPs may dedicate substantial financial resources and faculty time to prepare students to pass the NAPLEX upon graduation. With the recent mandate from ACPE requiring use of the PCOA, some may be delivering PCOA preparation as well. The objectives of this study were to examine the extent of financial and faculty resources dedicated to preparing students for NAPLEX and PCOA examinations, and to explore how these investments compare with NAPLEX pass rates.

METHODS

A survey was designed and administered to assessment professionals in US colleges and schools of pharmacy (C/SOPs). The survey contained 23 items structured as follows: items 1-10 pertained to NAPLEX review, 11-19 to PCOA review, and 20-23 to C/SOP characteristics. For the NAPLEX section, item 1 asked whether or not the respondent’s C/SOP provided any form of NAPLEX review. Those who answered yes were directed to items 2-9, which asked what type(s) of NAPLEX review was provided, whether student participation was required, the approximate cost of all resources used, and the respondent’s observations of why the C/SOP chose to do a review as well as faculty and student perceptions regarding the effectiveness of each resource. Those who answered no were directed to item 10 (items 2-9 were bypassed), which asked why their institutions did not provide students with NAPLEX review.

Similarly, the second block of items, which addressed the PCOA, began by asking the respondent whether or not his or her C/SOP provided PCOA preparation (item 11). Those who answered yes were asked what type(s) of PCOA review were used, their total annual cost, and the respondent’s observations of faculty and student perceptions of their effectiveness (items 12-18). Respondents whose institutions did not provide PCOA preparation bypassed items 12-18 and were asked why their C/SOPs chose not to provide PCOA review (item 19). Lastly, items 20-23 were intended to gather basic demographic information about the C/SOP: PharmD program length, number of faculty, committees or individuals responsible for NAPLEX and PCOA review, and name of the institution. The Virginia Commonwealth University Institutional Review Board approved this study. Data from the perception questions (items 7-8 and 18-19) were not reported due to concerns over validity and reliability.

The target population for the study was faculty and/or staff who were primarily responsible for assessment within a U.S. C/SOP at the time of the survey. A list of prospective participants and their contact information was compiled from the American Association of Colleges of Pharmacy (AACP) Roster of Faculty and Professional Staff.24 Individuals whose records indicated responsibility for assessment were initially included in our list. Each individual’s name, title, institution, and contact information were then verified and updated using his or her C/SOP’s website. Where possible, an individual who had left his or her institution was replaced with another individual from that C/SOP whose title or job description suggested responsibilities in assessment. In some cases, multiple faculty and staff were included for the same C/SOP in order to increase the likelihood of receiving a response from that institution. In total, the survey was administered to 147 individuals from 134 C/SOPs.

The survey was administered electronically using Qualtrics (Provo, UT). An email invitation containing a link to the consent form and survey instrument was sent to each participant in January 2016. Each respondent’s participation was tracked in Qualtrics to target reminders to non-respondents and later combine institutional data with survey data. Two automated email reminders were sent to non-respondents approximately 2 and 4 weeks following the initial survey invitation. Those who still had not responded within 6 weeks of the original invitation were contacted directly by the principal or a co-investigator to provide one final reminder before the survey closed in March.

Once compiled, survey data were downloaded from Qualtrics into Microsoft Excel 2013 v. 15.0 (Redmond, WA) for analysis. As a first step, institutions having more than one respondent were identified. Five institutions had two respondents each, so the more complete response for that C/SOP was retained while the second was removed from the dataset. Second, institutional data for the C/SOP age, 2015 NAPLEX pass rates and size of first-time candidate cohort, and accreditation status were compiled from web sources including the National Association of Boards of Pharmacy, American Association of Colleges of Pharmacy, and the Accreditation Council for Pharmacy Education and then merged with the survey dataset.5,25,26
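The de-duplication step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' Excel procedure: the field names (`institution`, `answers`), the sample records, and the rule that "more complete" means "more non-empty answers" are all hypothetical.

```python
def completeness(response: dict) -> int:
    """Count answered survey items; a hypothetical stand-in for 'more complete'."""
    return sum(1 for value in response["answers"].values() if value not in (None, ""))


def keep_most_complete(responses: list) -> list:
    """Retain one response per institution, preferring the one with more answers."""
    best = {}
    for response in responses:
        name = response["institution"]
        if name not in best or completeness(response) > completeness(best[name]):
            best[name] = response
    return list(best.values())


# Hypothetical data: two respondents from one C/SOP, one from another.
responses = [
    {"institution": "School A", "answers": {"item1": "yes", "item2": "", "item5": None}},
    {"institution": "School A", "answers": {"item1": "yes", "item2": "vendor review", "item5": "$5,000-$9,999"}},
    {"institution": "School B", "answers": {"item1": "no", "item10": "high pass rate"}},
]
deduplicated = keep_most_complete(responses)
print(len(deduplicated))  # number of institutions retained
```

When two responses are equally complete, this sketch keeps the first one encountered; the source does not say how such ties were handled.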

A series of dummy variables was created to allow for comparisons between different types of C/SOPs. These included: institutional type (private or public); C/SOP founding date (“recent”=post-1995 or “legacy”=pre-1995); NAPLEX review provided; live NAPLEX review provided; PharmD cohort size (< 100 or ≥ 100); annual cost of the NAPLEX review (< $10,000 or ≥ $10,000); and PCOA review provided. Medians were used for the cohort size and NAPLEX review cost dummy variables to create two groups of approximately equal size. The decision was made to create a dummy variable for NAPLEX review cost, originally set on an ordinal scale, so that group differences in NAPLEX pass rates could be examined in a similar manner to the other demographic variables (ie, public versus private). Enrollment data were not available through AACP for two of the 91 institutions; NAPLEX pass rates were not available for five of the 91 institutions because these PharmD programs had not graduated students as of 2015. The final dataset was uploaded to SPSS v.21 for analysis.
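The median-split coding described above might look like the following sketch. The cut points (100 students, $10,000) come from the text; the sample values, the helper name `dummy_at_or_above`, and the idea of representing each ordinal cost category by its lower bound are assumptions made for illustration.

```python
from statistics import median


def dummy_at_or_above(values, cut_point):
    """Code each value as 1 (>= cut_point) or 0 (< cut_point); keep None as missing."""
    return [None if value is None else int(value >= cut_point) for value in values]


# Hypothetical cohort sizes; None marks a school with no enrollment data.
cohort_sizes = [62, 85, 98, 104, 131, None]
observed = [size for size in cohort_sizes if size is not None]
print(median(observed))  # the median guides where the cut point is placed

# Cut point taken from the text: cohorts of < 100 versus >= 100 students.
large_cohort = dummy_at_or_above(cohort_sizes, 100)

# Hypothetical lower bounds (in dollars) of the ordinal cost categories.
cost_lower_bounds = [0, 5000, 10000, 20000, None]
high_cost = dummy_at_or_above(cost_lower_bounds, 10000)
print(large_cohort, high_cost)
```

Keeping missing values as `None` rather than coding them 0 mirrors the text's note that some institutions lacked enrollment or pass-rate data and so could not be placed in either group.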

Descriptive statistics were prepared for all variables in the dataset. Median costs of NAPLEX and PCOA preparation were compared between different groups of institutions on the basis of institutional type, size, and age. Mann-Whitney tests were used to determine whether any differences in NAPLEX cost between these groups reached statistical significance. PCOA cost data were not analyzed due to small sample size. Finally, mean NAPLEX pass rates were compared between groups of institutions on the basis of program size, type, and age; whether any NAPLEX preparation was provided; whether live NAPLEX review was provided; and the cost of NAPLEX preparation, if any. Independent samples t-tests were conducted to determine whether statistically significant differences existed between each pair of groups. An alpha level of .05 was used to determine significance for all statistical tests in the analysis.
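For readers unfamiliar with the two tests named above, the following sketch computes their test statistics using only the Python standard library. It is not the SPSS procedure used in the study: the pooled-variance form of the t statistic is an assumption, the pass-rate values are invented, and p values are omitted because they would normally come from a statistics package.

```python
from math import sqrt
from statistics import mean, variance


def average_ranks(values):
    """Map each distinct value to its average 1-based rank in the sorted sample."""
    ordered = sorted(values)
    ranks = {}
    i = 0
    while i < len(ordered):
        j = i
        while j < len(ordered) and ordered[j] == ordered[i]:
            j += 1
        ranks[ordered[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    return ranks


def mann_whitney_u(x, y):
    """Return (U_x, U_y), with tied observations sharing their average rank."""
    ranks = average_ranks(list(x) + list(y))
    u_x = sum(ranks[v] for v in x) - len(x) * (len(x) + 1) / 2
    return u_x, len(x) * len(y) - u_x


def pooled_t(x, y):
    """Return (t, degrees of freedom) for an equal-variance independent samples t-test."""
    n_x, n_y = len(x), len(y)
    pooled_var = ((n_x - 1) * variance(x) + (n_y - 1) * variance(y)) / (n_x + n_y - 2)
    return (mean(x) - mean(y)) / sqrt(pooled_var * (1 / n_x + 1 / n_y)), n_x + n_y - 2


# Hypothetical pass rates (%) for two small groups of schools.
public = [95.0, 93.5, 96.2, 91.8]
private = [90.1, 92.4, 88.7, 91.0]
print(mann_whitney_u(public, private))
print(pooled_t(public, private))
```

The rank-based Mann-Whitney statistic suits the cost data, which were collected on an ordinal scale, while the t statistic compares group means of the continuous pass rates.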

RESULTS

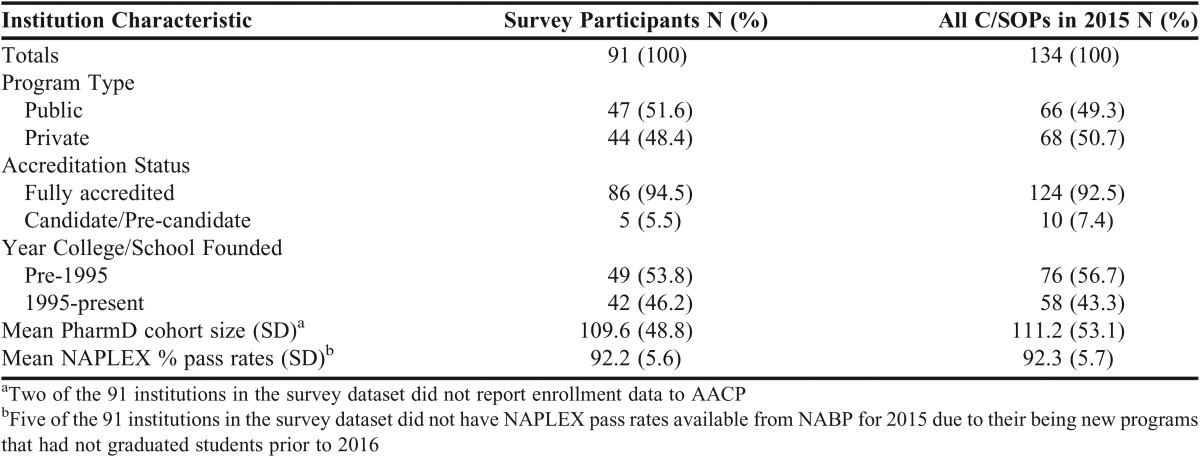

Ninety-one of 134 C/SOPs responded to the survey (68%). As noted, where responses from multiple campuses for the same institution were duplicative, only one response from each institution was retained for analysis. School demographic information is reported in Table 1.

Table 1.

Demographic Information for Colleges/Schools of Pharmacy

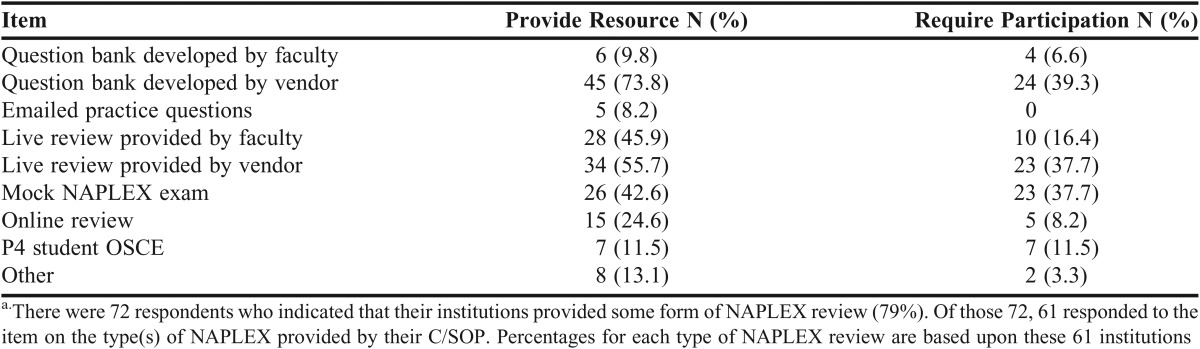

Seventy-two of the 91 (79.1%) responding schools provided a NAPLEX review for their graduating students. Of those 72, 61 responded to the item on the type(s) of NAPLEX review provided by their C/SOP. Table 2 reflects the most common types of NAPLEX review at those 61 institutions. Nearly 40% of schools that provided NAPLEX review required student participation in live review by a vendor, question bank by a vendor, or a mock NAPLEX exam, while participation in live review by the faculty or online review was rarely required (16.4% and 8.2%, respectively). There were 13 C/SOPs that identified use of only one resource, 13 that used two resources, 15 that used three resources, and 20 that used four or more NAPLEX prep resources. Most programs reported that the resources had been provided for the past three to six years. Among the 25 C/SOPs providing a live NAPLEX review that specified the time and structure of the review, the mean (SD) length of the review was 3.1 (1.4) days and involved an average (SD) of 2.8 (5) faculty members who spent an average (SD) of 7 (9.8) contact hours (collectively).

Table 2.

Survey Results for Type of NAPLEX Resources Provided (allowed for multiple selections)a

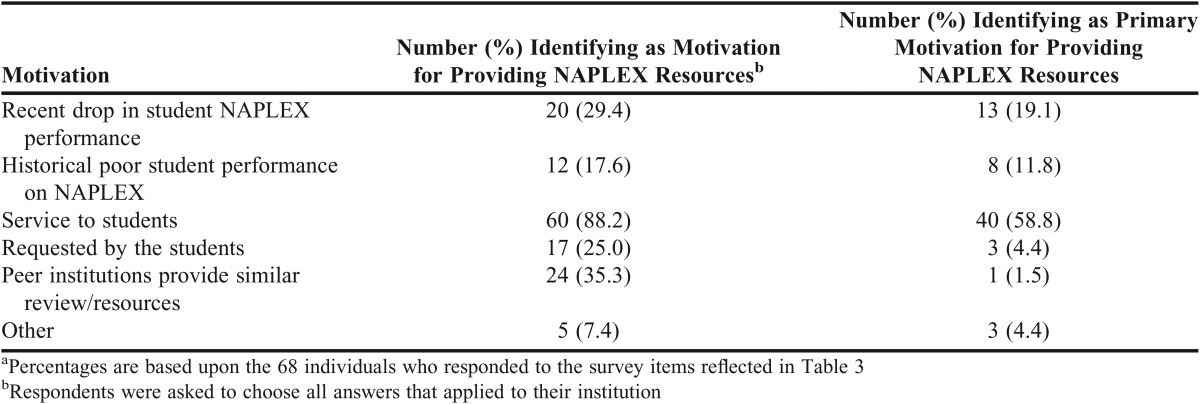

Sixty-eight C/SOPs responded to the question regarding institutional motivation for providing NAPLEX resources for students. Table 3 indicates that while service to the students was the most commonly cited and primary motivating factor, approximately 30% of schools listed a recent drop in student NAPLEX performance or historically poor NAPLEX performance as the primary reason to provide the review. While one-third of respondents reported a motivation to provide NAPLEX review because peer institutions provide similar review/resources, only one respondent identified this as the primary motivation for his/her institution. It was also found that private C/SOPs were more likely than public C/SOPs to identify poor historical performance on the NAPLEX as a motivation for providing review (χ2=8.15, p=.004). On the other hand, public C/SOPs were more likely to report NAPLEX preparation by peer institutions as a motivation for providing review (χ2=6.34, p=.012). The biggest reason schools cited for not conducting a review was having a high pass rate (>95%) without a review. Other reasons cited included perceptions that the school’s curriculum already prepared students, a review program is financially cost prohibitive for the C/SOP, or the C/SOP had provided reviews in the past that were not well attended.
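The chi-square results reported above can be related to their p values with a short sketch. It assumes each comparison came from a 2×2 table (public/private by motivation cited/not cited), giving one degree of freedom and no continuity correction; the source does not state this, and the counts passed to `chi_square_2x2` are hypothetical, not the survey's actual table.

```python
from math import erfc, sqrt


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square for the 2x2 table [[a, b], [c, d]], no continuity correction."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


def p_value_df1(chi_square):
    """Upper-tail p value of a chi-square statistic with 1 degree of freedom."""
    return erfc(sqrt(chi_square / 2))


# Reported statistics from the text, assuming each came from a 2x2 table (df = 1).
for statistic in (8.15, 6.34):
    print(statistic, round(p_value_df1(statistic), 3))

# Hypothetical counts, for illustration only.
print(round(chi_square_2x2(10, 20, 20, 10), 2))
```

Under the one-degree-of-freedom assumption, the two reported statistics convert to p values that round to .004 and .012, which agrees with the values given in the text.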

Table 3.

Survey Results for College/School Motivations for Providing NAPLEX Resources to Studentsa

A total of 64 schools responded to the question about the annual costs incurred by the C/SOP for providing NAPLEX resources. Eleven schools (17.2%) reported spending no money, 28.1% reported spending less than $10,000, and 18.1% reported spending between $10,000 and $20,000 per year. Fourteen schools (21.9%) reported spending between $20,000 and $60,000, while 10 schools (15.6%) did not know their C/SOP’s expenditures on NAPLEX resources. The median spending range was $5,000-$9,999.

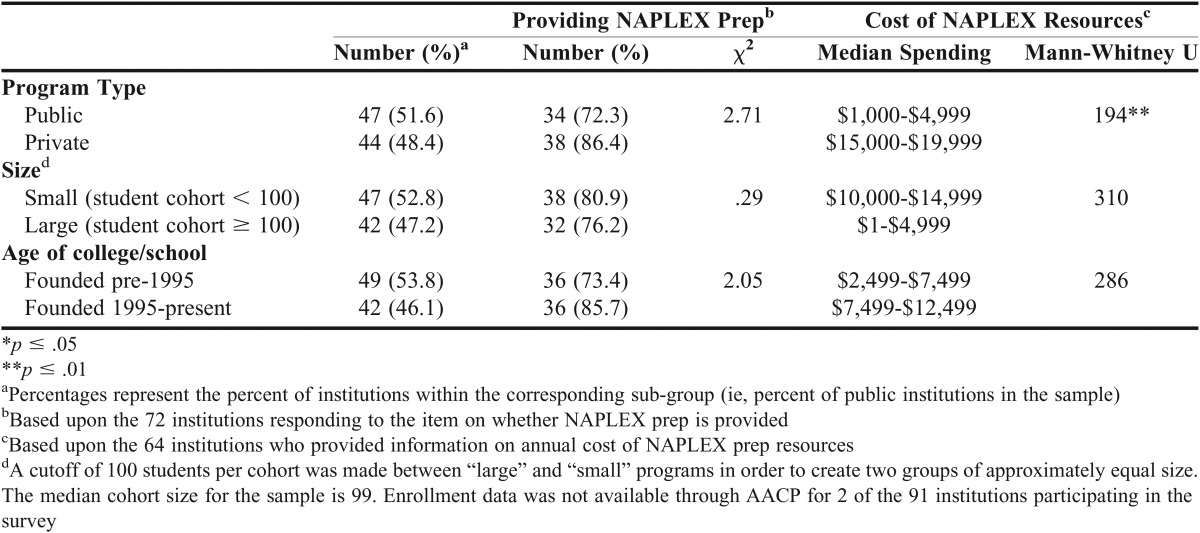

When the characteristics of C/SOPs were compared, private schools were more likely than public institutions to provide their students with NAPLEX preparation (86.4% vs 72.3%). Median spending among private institutions providing NAPLEX preparation resources was significantly higher than among public institutions. Although not statistically significant, higher median spending was also observed among small as opposed to large programs, and among recent (post-1995) C/SOPs compared to legacy (pre-1995) programs (Table 4).

Table 4.

Comparison of NAPLEX Preparation Resources Based Upon Type of Institution

Among the 86 schools responding to the survey that had a mean NAPLEX pass rate for 2015, the mean pass rate was significantly higher for public institutions than for private institutions (93.8% vs 90.5%, p=.005). Interestingly, the group of 19 schools that do not provide NAPLEX preparation had a significantly higher mean NAPLEX pass rate than the C/SOPs that provide preparation resources (95.9% vs 91.2%, p=.001). Among the 51 institutions that specified how much they spend annually on NAPLEX preparation and had a 2015 NAPLEX pass rate, the 28 schools spending less than $10,000 annually had a significantly higher mean NAPLEX pass rate than the 23 C/SOPs spending $10,000 or more annually (93.1% vs 88.9%, p=.006).

Only 18 of 91 C/SOPs reported that they provide PCOA preparation to students. Five schools provide live review by faculty, four provide question banks developed by a vendor, and three provide internally developed question banks. Nine of 15 respondents reported spending $0 on PCOA preparation; five schools are spending $1-$4,999, and one is spending $30,000-$34,999. Schools providing PCOA preparation are evenly representative of public and private (10 vs 8), and small and large cohorts (8 vs 10). Notably, twice as many legacy schools reported providing PCOA preparation as newer schools (12 vs 6). Schools that do not provide PCOA preparation cited a desire to obtain unbiased estimates of student content knowledge, use of the exam as a low-stakes assessment, and lack of experience with the exam.

DISCUSSION

Our study found that of the 91 C/SOPs responding to our survey, 79.1% provided some type of NAPLEX review, with median spending of $5,000-$9,999. C/SOPs cited service to students and a drop in pass rates as the two biggest reasons for providing the preparation. Our study also found that only a handful of C/SOPs currently provide preparation for the PCOA examination. This may be because the examination was not mandatory until Standards 2016. Because Standards 2016 added the PCOA as a required data set, more schools may begin to consider providing students with preparation resources for this standardized examination. This may be especially true if a national passing score is established and ACPE begins to monitor PCOA results in the same manner as the NAPLEX.

The use of commercial preparations by C/SOPs in our study (73.8% for question banks and 55.7% for live reviews) was similar to the findings from the medical and pharmacy literature. The medical literature has shown that 23%-33% of students studied have used some type of commercial coaching course to prepare for the USMLE Step 1 examination and 98% have used some type of commercial guide.15,17,18 A study of pharmacy students in Indiana found that 15%-48% of students used some type of commercial preparation product to prepare for the NAPLEX, depending on the product used.22

Our study found that schools that do not provide NAPLEX preparation had higher pass rates than those that provided preparation materials. This may be related to the finding that two of the primary motivating factors for C/SOPs to provide NAPLEX preparation resources were a recent decline in NAPLEX performance and historically poor NAPLEX performance. Institutions with high-performing students may see little reason to invest in preparation resources for students.

Legacy C/SOPs had significantly higher NAPLEX pass rates than recent schools (93.3% vs 90.7%, p=.031), and public C/SOPs had higher pass rates than private (93.8% vs 90.5%, p=.005). These results are not surprising because the majority of public institutions were established prior to 1995 (78%) while the majority of private institutions were founded after 1995 (73%). C/SOPs that were founded more recently may consider providing NAPLEX review because they are under heightened scrutiny for initial ACPE accreditation, and a high NAPLEX pass rate is an indicator of program success.

C/SOPs that spent <$10,000 annually on NAPLEX preparation were more likely to have higher pass rates than those that spent $10,000 or more. This finding may also be related to program characteristics: legacy programs may have more faculty available to provide internal reviews, and public institutions might not have resources for external vendors.

We did not find the same results regarding the PCOA; only 18 of the 91 respondents currently provide PCOA preparation. First, while both exams are required documentation for ACPE, the PCOA does not have a pass/fail score cutpoint, and overall C/SOP exam results are not monitored by ACPE to the same extent as the NAPLEX. Second, as many schools noted, there are limited data on how to use and interpret the results from this exam, making provision of preparation materials difficult.

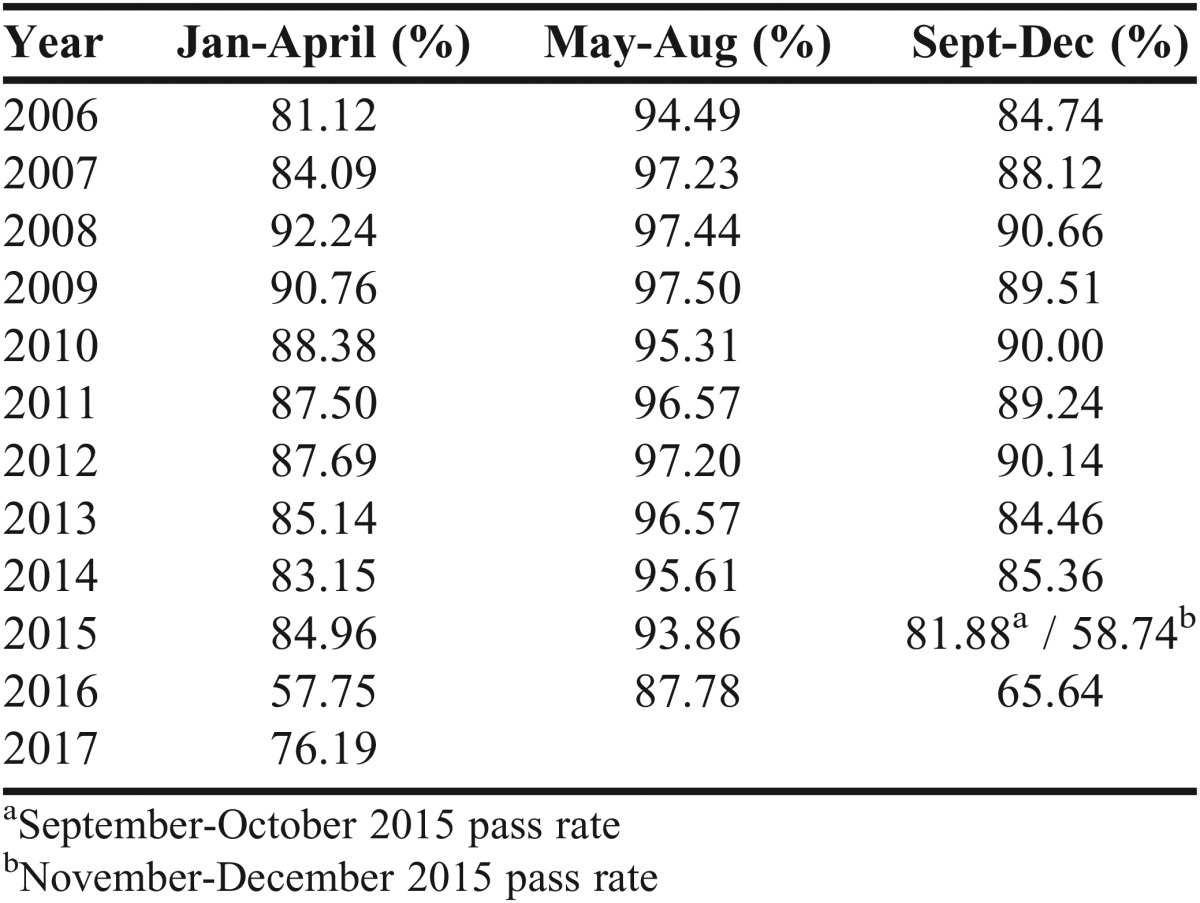

There are limitations to our study. One is that our study is a snapshot in time, and C/SOP motivation to invest in preparation resources may change in the next few years, due not only to the new requirement for use of the PCOA but also to the changes to the NAPLEX that led to a decrease in the overall pass rate in late 2015-early 2016. NABP provides national data on NAPLEX pass rates and scores to all C/SOPs every four months (‘trimester’), including school, state, and national pass rate information as well as total and area score means for each NAPLEX content domain. The most recent reports reveal a dramatic plunge in national pass rates for first-time candidates of ACPE-accredited programs: 58.74% in November-December 2015 and 57.75% in January-April 2016, compared to 81.88% in September-October 2015 and 84.96% in January-April 2015 under the old blueprint. In the May-August trimester, which represents the overwhelming majority of first-time recent graduate candidates, the pass rate dropped from 93.86% in 2015 to 87.78% in 2016 (Table 5). In its March 2016 newsletter, NABP announced a 4.7% increase in NAPLEX administrations between 2014 and 2015 (from 15,031 to 16,661), and 86% were first-time examinees. In its March 2017 newsletter, NABP announced an 8.8% increase in NAPLEX administrations between 2015 and 2016 (from 16,661 to 18,127), and fewer than 84% were first-time examinees. If the trend of a couple thousand first-time examinees failing the NAPLEX each year continues, the resources devoted by C/SOPs looking to provide students with NAPLEX preparation may continue to increase.

Table 5.

NAPLEX National Pass Rates by Trimester for First-Time Candidates, ACPE-Accredited Programs Only

It is also worth noting that the statistical power was limited for several of the t-tests to compare the NAPLEX pass rates for different types of institutions due to small group membership. The combination of small groups and small effect sizes meant that the statistical power was less than .80 for three of the six t-tests.27 In particular, the two t-tests where no statistically significant differences were detected – small versus large institutions and live versus other type of NAPLEX review – had power estimates that were .39 and .42, respectively. Lastly, we included survey items designed to capture faculty and student perceived effectiveness of the various NAPLEX preparation resources. Because the survey was delivered to the assessment professionals and not to faculty and students, these items were removed from the analysis due to the flawed design. Studies of faculty- and student-perceived effectiveness of NAPLEX resources represent one important area for future research.
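To illustrate why small groups and small effects limit power, the sketch below uses a normal approximation to the power of a two-sided comparison of two group means. It is not the method behind the power estimates reported above (the source cites its own reference for those), the approximation runs slightly higher than an exact calculation based on the noncentral t distribution, and the effect size and group sizes shown are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def approximate_power(effect_size, n_1, n_2, alpha=0.05):
    """Normal-approximation power of a two-sided, two-sample comparison of means.

    effect_size is Cohen's d (mean difference divided by the pooled SD).
    """
    standard_normal = NormalDist()
    critical_z = standard_normal.inv_cdf(1 - alpha / 2)
    shift = abs(effect_size) * sqrt(n_1 * n_2 / (n_1 + n_2))
    return standard_normal.cdf(shift - critical_z) + standard_normal.cdf(-shift - critical_z)


# Hypothetical inputs: a medium effect (d = 0.5) with different group sizes.
for n_per_group in (20, 40, 64):
    print(n_per_group, round(approximate_power(0.5, n_per_group, n_per_group), 2))
```

With the effect size held fixed, the approximate power rises with group size, which is the relationship the paragraph above relies on when it attributes low power to small group membership.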

Another area of future research is to examine the relationship between NAPLEX preparation resources and student NAPLEX performance while controlling for potential confounding factors such as student background and prior achievement variables. Are test preparation resources effective? Does focusing on test scores as quality indicators encourage an arms race? Should schools continue to allocate an enormous amount of faculty effort for internal reviews or spend thousands of dollars on external vendors if their NAPLEX pass rate and other data are already competitive with peers and nationally?

Such studies would necessitate the compilation of a large, student-level dataset from multiple institutions. While our study calls into question the appropriateness of school-provided NAPLEX preparation, it does not fully address whether preparation resources are successful in improving individual student performance.

CONCLUSION

Nearly 80% of the 91 C/SOPs in our study provide resources for NAPLEX review but the type(s) and expenditures vary considerably across institutions. Investment in PCOA preparation may increase depending on how the data are used in the future by C/SOPs and ACPE.

REFERENCES

- 1.National Association of Boards of Pharmacy. NAPLEX. http://www.nabp.net/programs/examination/naplex accessed 08/19 /2016. Accessed September 2, 2016.

- 2.Newton DW, Boyle M, Catizone CA. The NAPLEX: evolution, purpose, scope, and educational implications. Am J Pharm Educ. 2008;72(2):33. doi: 10.5688/aj720233. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.National Association of Boards of Pharmacy. NAPLEX and MPJE 2016 Candidate Registration Bulletin. http://www.nabp.net/system/rich/rich_files/rich_files/000/001/480/original/naplex-mpje-bulletin-081716.pdf. Accessed September 2, 2016.

- 4.Frith KH, Sewell JP, Clark DJ. Best practices in NCLEX-RN readiness preparation for baccalaureate student success. Comput Inform Nurs. 2008;26(5 Suppl):46S–53S. doi: 10.1097/01.NCN.0000336443.39789.55. doi:10.1097/01.NCN.0000336443.39789.55. [DOI] [PubMed] [Google Scholar]

- 5.National Association of Boards of Pharmacy. NAPLEX passing rates for 2013-15 graduates per pharmacy school. http://www.aacp.org/about/membership/Pages/roster.aspx. Accessed September 2, 2016.

- 6. Accreditation Council for Pharmacy Education. Policies and Procedures for Accreditation of Professional Degree Programs. https://acpe-accredit.org/pdf/PoliciesProceduresJune2016.pdf. Published 2016. Accessed September 2, 2016.

- 7. National Association of Boards of Pharmacy. PCOA for Schools. http://www.nabp.net/programs/assessment/pcoa/pcoa-for-schools. Accessed September 2, 2016.

- 8. Gortney JS, Bray BS, Pharm BS, Salinitri FD. Implementation and Use of the Pharmacy Curriculum Outcomes Assessment at US Schools of Pharmacy. Am J Pharm Educ. 2015;79(9). doi: 10.5688/ajpe799137.

- 9. Accreditation Council for Pharmacy Education. Accreditation Standards and Key Elements for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree. 2015. https://www.acpe-accredit.org/pdf/Standards2016FINAL.pdf.

- 10. United States Medical Licensing Examination. Step 1. http://www.usmle.org/step-1/#overview. Accessed September 2, 2016.

- 11. Alcamo AM, Davids AR, Way DP, Lynn DJ, Vandre DD. The impact of a peer-designed and -led USMLE Step 1 review course: improvement in preparation and scores. Acad Med. 2010;85(10 Suppl):S45–S48. doi: 10.1097/ACM.0b013e3181ed1cb9.

- 12. Dadian T, Guerink K, Olney C, Littlefield J. The effectiveness of a Mock Board experience in coaching students for the Dental Hygiene National Board Examination. J Dent Educ. 2002;66(5):643–648. http://www.ncbi.nlm.nih.gov/pubmed/12056769.

- 13. Hawley N, Johnson D, Packer K, Ditmyer M, Kingsley K. Dental students’ preparation and study habits for the National Board Dental Examination Part I. J Dent Educ. 2009;73(11):1274–1278.

- 14. Karimi R, Meyer D, Fujisaki B, Stein S. Implementation of an Integrated Longitudinal Curricular Activity for Graduating Pharmacy Students. Am J Pharm Educ. 2014;78(6):1–8. doi: 10.5688/ajpe786124.

- 15. Thadani R, Swanson D, Galbraith R. A preliminary analysis of different approaches to preparing for the USMLE Step 1. Acad Med. 2000;75:S40–S42. doi: 10.1097/00001888-200010001-00013.

- 16. Scott LK, Scott CW, Palmisano PA, Cunningham RD, Cannon NJ, Brown S. The Effects of Commercial Coaching for the NBME Part I examination. Med Educ. 1980;55(9):733–742. doi: 10.1097/00001888-198009000-00001.

- 17. Werner LS, Bull BS. The effect of three commercial coaching courses on Step One USMLE performance. Med Educ. 2003;37(6):527–531. doi: 10.1046/j.1365-2923.2003.01534.x.

- 18. Zhang C, Rauchwarger A, Toth C, O’Connell M. Student USMLE step 1 preparation and performance. Adv Health Sci Educ. 2004;9(4):291–297. doi: 10.1007/s10459-004-3925-x.

- 19. West C, Kurz T, Smith S, Graham L. Are study strategies related to medical licensing exam performance? Int J Med Educ. 2014;5:199–204. doi: 10.5116/ijme.5439.6491.

- 20. Kumar A, Shah M, Maley J, Evron J, Gyftopoulos A, Miller C. Preparing to take the USMLE Step 1: a survey on medical students’ self-reported study habits. Postgrad Med J. 2015;91(1075):257–261. doi: 10.1136/postgradmedj-2014-133081.

- 21. Stewart CM, Bates RE, Smith GE. Does performance on school-administered mock boards predict performance on a dental licensure exam? J Dent Educ. 2004;68(4):426–432. http://www.ncbi.nlm.nih.gov/pubmed/15112919.

- 22. Peak AS, Sheehan AH, Arnett S. Perceived Utility of Pharmacy Licensure Examination Preparation Tools. Am J Pharm Educ. 2006;70(2):25.

- 23. Scott DM, Bennett LL, Ferrill MJ, Brown DL. Pharmacy curriculum outcomes assessment for individual student assessment and curricular evaluation. Am J Pharm Educ. 2010;74(10):183. doi: 10.5688/aj7410183. http://www.ncbi.nlm.nih.gov/pubmed/21436924.

- 24. American Association of Colleges of Pharmacy. Roster of Faculty and Professional Staff. 2016. http://www.aacp.org/about/membership/Pages/roster.aspx.

- 25. Accreditation Council for Pharmacy Education. Preaccredited and accredited professional programs of colleges and schools of pharmacy. 2016. https://www.acpe-accredit.org/shared_info/programsSecure.asp.

- 26. American Association of Colleges of Pharmacy. Student applications, enrollments and degrees conferred: Fall 2015 profile of pharmacy students. 2016. http://www.aacp.org/resources/research/institutionalresearch/Pages/StudentApplications,EnrollmentsandDegreesConferred.aspx.

- 27. Cohen J. Statistical Power Analysis for the Behavioral Sciences. New York, NY: Academic Press; 1977.