Abstract

Background

Accurately counting maize tassels is important for monitoring the growth status of maize plants. This tedious task, however, is still mainly done manually. In the context of modern plant phenotyping, automating this task is required to meet the need for large-scale analysis of genotype and phenotype. In recent years, computer vision technologies have experienced a significant breakthrough due to the emergence of large-scale datasets and increased computational resources. Naturally, image-based approaches have also received much attention in plant-related studies. Yet most image-based systems for plant phenotyping are deployed in controlled laboratory environments. When the application scenario is transferred to unconstrained in-field conditions, intrinsic and extrinsic variations in the wild pose great challenges for accurate counting of maize tassels, which go beyond the ability of conventional image processing techniques. This calls for more robust computer vision approaches to address in-field variations.

Results

This paper studies the in-field counting problem of maize tassels. To our knowledge, this is the first time that a plant-related counting problem is considered using computer vision technologies under unconstrained field-based environment. With 361 field images collected in four experimental fields across China between 2010 and 2015 and corresponding manually-labelled dotted annotations, a novel Maize Tassels Counting (MTC) dataset is created and will be released with this paper. To alleviate the in-field challenges, a deep convolutional neural network-based approach termed TasselNet is proposed. TasselNet can achieve good adaptability to in-field variations via modelling the local visual characteristics of field images and regressing the local counts of maize tassels. Extensive results on the MTC dataset demonstrate that TasselNet outperforms other state-of-the-art approaches by large margins and achieves the overall best counting performance, with a mean absolute error of 6.6 and a mean squared error of 9.6 averaged over 8 test sequences.

Conclusions

TasselNet can achieve robust in-field counting of maize tassels with a relatively high degree of accuracy. Our experimental evaluations also suggest several good practices for practitioners working on maize-tassel-like counting problems. It is worth noting that, though the counting errors have been greatly reduced by TasselNet, in-field counting of maize tassels remains an open and unsolved problem.

Keywords: Maize tassels, Object counting, Computer vision, Deep learning, Convolutional neural networks

Background

We consider the problem of counting maize tassels from images captured in the field using computer vision. Maize tassels are the male flowers of maize plants. The emergence of tassels indicates the arrival of the reproductive stage. During this stage, the total tassel number is an important cue to monitor the growth status of maize plants. It is closely related to the growth stage [1] and yield potential [2]. In practice, counting maize tassels still mainly depends on human efforts, which is inefficient and fallible. Such a tedious task should be replaced by machines in modern plant phenotyping.

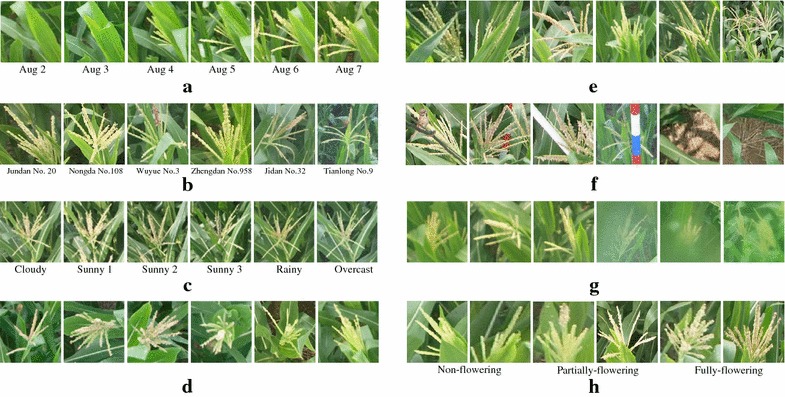

To meet the need for large-scale and high-throughput analysis in plant phenotyping, image-based techniques provide a feasible, low-end, and efficient solution and have thus received much attention recently [2–5]. However, most practitioners and researchers still conduct experiments in controlled artificial environments. Although indoor experiments do simplify the process of image processing and advance our knowledge regarding the link between genotype and phenotype, ultimately plant phenotyping must be transferred to real-world scenarios, such as the field or the greenhouse [6]. Unfortunately, intrinsic and extrinsic variations in the field make the understanding and processing of field-based images a challenging task. Such challenges become more serious in the problem of in-field counting of maize tassels. As shown in Fig. 1, these challenges largely boil down to the variations in the field-based environment:

Maize tassels emerge suddenly and vary significantly in shape and size as plants grow over time;

Different cultivars of maize plants exhibit different appearance variations, such as colour and texture;

Illumination changes dramatically due to different weather conditions, especially on sunny days;

The wind, imaging angle and perspective distortions cause various pose variations;

Occlusions occur frequently, which makes counting difficult even for a human expert;

The cluttered background makes visual patterns of maize tassels diverse and misleading;

The quality of images degrades because of dust or rain drops on the camera lens;

Textural patterns also change substantially due to different flowering status.

It is worth noting that these challenges are not specific to maize tassels but apply to a wide range of plant species. These in-field variations must be faced before plant phenotyping systems can be deployed in the wild.

Fig. 1.

Intrinsic and extrinsic variations in the maize field. These variations pose significant challenges for in-field counting of maize tassels. a Shape and size vary significantly as plants grow over time. b Appearance variations due to different cultivars. c Illumination variations due to different weather conditions. d Pose variations due to wind, imaging and perspective distortions. e Occlusions between leaves and tassels or different tassels. f Cluttered background caused by wires, poles and weeds. g Image degradation due to dust or rain drops on the camera lens. h Texture variations due to different flowering status

Though efforts have been made to tackle the above problems and have achieved a moderate degree of success, the precision of the state-of-the-art tassel detection method is still below 50% [2]. This may be largely due to the inherent limitation of the non-maximum suppression mechanism within object detection [7]: it cannot appropriately distinguish overlapping objects. Such a mechanism poses problems for accurate maize tassel detection because overlaps between different tassels are common in the field. We have to ask: is object detection the best way to count maize tassels? From the point of view of Computer Vision, the objective of object detection is to localise individual instances and output their corresponding bounding boxes. Once the locations of objects are identified, it is easy to derive the number of instances. However, estimating the number of instances does not actually require their locations. If one only cares about the total number of instances, the problem becomes another important research topic in Computer Vision: object counting. In this paper, we show that it is better to formulate the task of maize tassels counting as a typical counting problem, rather than a detection one. In fact, object detection is generally more difficult to solve than object counting.

Nevertheless, object counting remains a known challenging task [8, 9] in both the Plant Science and Computer Vision communities. Three sessions of the Leaf Counting Challenge have been held in conjunction with the Computer Vision Problems in Plant Phenotyping workshops (CVPPP2014 [10]/CVPPP2015 [11]/CVPPP2017 [12]) to showcase visual challenges for plant phenotyping. Many efforts have also been made in recent years in Computer Vision to improve the counting precision of crowds [13, 14], cells [15, 16], cars [17, 18], and animals [19]. However, little attention has been paid to plant-related counting tasks. To our knowledge, only two published papers have considered counting problems relating to plants. Giuffrida et al. [20] proposed a learning-based approach to count leaves in rosette plants. Rahnemoonfar and Sheppard [21] presented a deep simulated learning approach to count tomatoes in images. A limitation is that both papers only report their results on potted plants, which is far different from field-based scenarios. In contrast, our experiments use images captured in unconstrained in-field environments, leading to a more challenging situation and a more reasonable experimental evaluation.

According to the taxonomy of [22], existing object counting approaches can be classified into three categories: counting by clustering, counting by detection, and counting by regression. The counting-by-clustering approaches often rely on the extraction of motion features (see [23] for example), which is not applicable to plants because the motion of plants is almost unobservable within a limited time. In addition, the counting-by-detection approaches [24, 25] tend to suffer in crowded scenes with significant occlusions, so this type of method is also not a good choice for our problem. In fact, the transductive principle suggests never solving a harder problem than the target application necessitates [26]. In line with this principle, recent counting-by-regression models [13, 15, 17] have demonstrated that it is indeed unnecessary to detect or segment individual instances when estimating their counts. In particular, the key component of modern counting-by-regression approaches is the density map introduced by Lempitsky and Zisserman [15]. Objects in an image are described by a density map given dot annotations. Each object is assigned a density that sums to 1, so the total number of objects can be obtained by summing over the whole density map. Overlapping objects are naturally taken into account in this paradigm.

Further, counting-by-regression approaches can be divided into two sub-categories: global regression [13, 20, 27] and local regression [14, 15, 19, 28]. Some early attempts try to regress the global image count directly via either Gaussian regression [13] or regression forest [27]. Chen et al. [29] estimate the local image count using a multi-output ridge regression model. Lempitsky and Zisserman [15], however, choose to regress the local density map, which is found to be more effective than regressing just the global/local image count. At that time, although a moderate degree of counting accuracy was achieved, the performance was limited by the power of the feature representation. This limitation eases in the era of deep learning, when features can be learnt and adjusted for a specific problem. The first deep counting approach can be found in [14], where the problem is addressed by regressing a local density map with deep networks. In fact, most subsequent deep counting approaches also follow this paradigm [18, 19, 30]. More recently, Cohen et al. [28] present a somewhat different idea that regresses the local sub-image count with deep networks. We also take inspiration from Cohen et al. [28]. Readers can refer to Sindagi and Patel [31] for a comprehensive survey of recent advances of deep networks in counting problems.

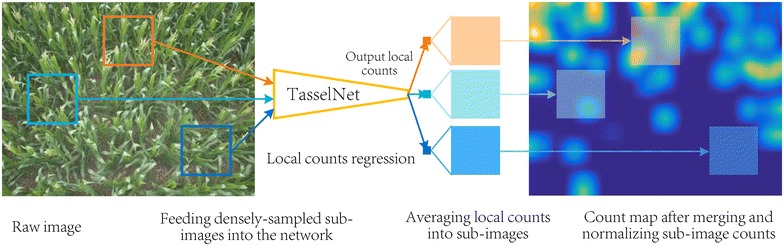

To better address the aforementioned challenges, we follow the idea of counting by regression and present in this paper a deep convolutional neural network (CNN) [32]-based approach for maize tassels counting, referred to as TasselNet. Deep networks are known for their excellent non-linear modelling ability and large model capacity, which are important for capturing diverse and complex visual patterns in the field. Because plants are self-changing systems, the physical size of maize tassels in images varies significantly over time. This is what makes the problem of maize tassels counting different from other conventional counting problems in Computer Vision (the physical size of pedestrians, cells or cars in images remains unchanged or at least similar), and consequently makes it difficult to describe the density map of maize tassels. To address this, in contrast to [15, 18], which regress either the global density map or the local density map, we propose to regress the local count computed from the density map. After merging and normalizing all local counts, our model outputs a count map similar to the ground-truth density map. The final count of maize tassels is computed by summing over the whole count map. Figure 2 illustrates our main technical pipeline.

Fig. 2.

The main technical pipeline of in-field counting of maize tassels. Sub-images are first densely sampled from a raw field image. Each sub-image will be fed into our TasselNet to regress a local count associating with the sub-image. After merging and normalizing all local counts, a count map for the field image can be acquired. The raw image count can thus be computed by integrating the count map

To validate the effectiveness of the proposed approach, a novel Maize Tassel Counting (MTC) dataset is constructed and will be released together with this paper. Our MTC dataset contains 361 images chosen from 16 image sequences. These sequences were collected from 2010 to 2015, covering 4 different experimental fields across China. All challenges described in Fig. 1 are involved in this dataset. The number of maize tassels in images varies between 0 and around 100. Following the standard annotation used in object counting problems [15], a single dot is manually assigned to each maize tassel. We hope such a dataset can be used as a benchmark for evaluating in-field counting approaches and can draw the attention of practitioners working in this area to these in-field challenges.

Extensive evaluations are performed on the MTC dataset. Experimental results demonstrate that TasselNet outperforms other state-of-the-art methods and reduces the counting errors by large margins. Moreover, based on the experimental results, we also suggest several good practices for in-field counting problems.

The contributions of this paper are multi-fold:

A novel counting problem of maize tassels whose sizes are self-changing over time. To the best of our knowledge, this is the first time that a plant-related counting problem is considered under unconstrained field conditions;

A challenging MTC dataset with 361 field images and corresponding manually-labelled dotted annotations;

TasselNet: an effective deep CNN-based solution for in-field counting of maize tassels via local counts regression.

Methods

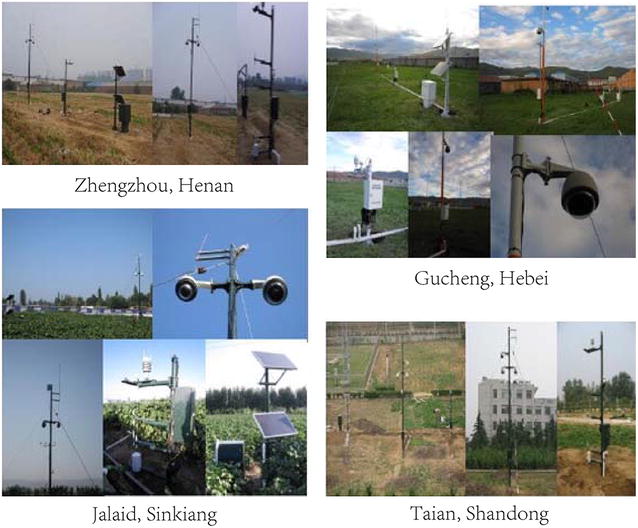

Experimental fields and imaging devices

Sixteen independent time-series image sequences are collected from four different experimental fields across China between 2010 and 2015. The four experimental fields are located in Zhengzhou, Henan Province; Taian, Shandong Province; Gucheng, Hebei Province; and Jalaid, Sinkiang Autonomous Region, respectively. Six cultivars of maize plants are involved: Jundan No. 20, Nongda No. 108, Wuyue No. 3, Zhengdan No. 958, Jidan No. 32, and Tianlong No. 9 (see Table 1). Figure 3 shows the experimental fields and imaging devices. The main components of the imaging device include a high-resolution CCD digital camera (E450 Olympus), a low-resolution monitoring device, a 3G wireless data transmission system, as well as several solar panels used for power supply. When an image is captured, it is transmitted to a remote server, where users can access the image data. Readers can refer to [33] for a detailed introduction of our imaging device. The focal length of the camera is fixed to 16 mm. Images were taken every hour from 9:00 to 16:00 from a vertical view at a height of five meters (four meters for the Gucheng sequences). The original image resolutions are 3648 × 2736 pixels for the Zhengzhou and Taian sequences, 4272 × 2848 pixels for the Gucheng sequences, and 3456 × 2304 pixels for the Jalaid sequences.

Fig. 3.

Image acquisition devices in the maize field. Our devices are currently set up in four different places

Maize tassels counting dataset

Given the 16 independent time-series image sequences, images captured from the tasselling stage to the flowering stage are considered in our MTC dataset. In particular, according to the variability each sequence presents, 8–45 images are manually chosen from each sequence. If extrinsic conditions, such as weather or wind, change dramatically, more images are chosen from one day; otherwise only 1 or 2 images are chosen. This sampling strategy is intended to avoid repetitive samples as much as possible, because images captured in one day usually do not exhibit many variations. Indeed, for an effective computer vision approach, the ability to model various data variabilities is much more important than blindly fitting a large number of repetitive samples. In all, 361 field images are chosen to construct the MTC dataset. The MTC dataset is divided into a training set, a validation set and a test set. The training set and validation set share the same image sequences, while the test set uses different image sequences to enable a reasonable evaluation. This setting is motivated by the fact that images in one sequence are often highly correlated, so it is inappropriate to use them in both the training and test stages. Table 1 summarises the information of the MTC dataset. Overall, we have 186 images for training and validation and 175 images for test.

Table 1.

Training set (train), validation set (val) and test set (test) settings of the MTC dataset

| Sequence | Num | Cultivar | train | val | test |
|---|---|---|---|---|---|
| Zhengzhou2010 | 37 | Jundan No. 20 | ✓ | ✓ | |
| Zhengzhou2011 | 24 | Jundan No. 20 | | | ✓ |
| Zhengzhou2012 | 22 | Zhengdan No. 958 | ✓ | ✓ | |
| Taian2010_1 | 30 | Wuyue No. 3 | ✓ | ✓ | |
| Taian2010_2 | 32 | Wuyue No. 3 | | | ✓ |
| Taian2011_1 | 21 | Nongda No. 108 | ✓ | ✓ | |
| Taian2011_2 | 19 | Nongda No. 108 | | | ✓ |
| Taian2012_1 | 41 | Zhengdan No. 958 | ✓ | ✓ | |
| Taian2012_2 | 23 | Zhengdan No. 958 | | | ✓ |
| Taian2013_1 | 8 | Zhengdan No. 958 | ✓ | ✓ | |
| Taian2013_2 | 8 | Zhengdan No. 958 | | | ✓ |
| Gucheng2012 | 15 | Jidan No. 32 | ✓ | ✓ | |
| Gucheng2014 | 45 | Zhengdan No. 958 | | | ✓ |
| Jalaid2015_1 | 12 | Tianlong No. 9 | ✓ | ✓ | |
| Jalaid2015_2 | 12 | Tianlong No. 9 | | | ✓ |
| Jalaid2015_3 | 12 | Tianlong No. 9 | | | ✓ |

Num refers to the number of images in each sequence
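The sequence-level split can be checked with a few lines of Python. This is only an illustrative sketch: the image counts are copied from Table 1, and the set of test sequences is assumed to be the eight sequences named in the header of Table 3. The helper name `split_by_sequence` is hypothetical.

```python
# Image counts per sequence, copied from Table 1.
SEQUENCES = {
    "Zhengzhou2010": 37, "Zhengzhou2011": 24, "Zhengzhou2012": 22,
    "Taian2010_1": 30, "Taian2010_2": 32, "Taian2011_1": 21,
    "Taian2011_2": 19, "Taian2012_1": 41, "Taian2012_2": 23,
    "Taian2013_1": 8, "Taian2013_2": 8, "Gucheng2012": 15,
    "Gucheng2014": 45, "Jalaid2015_1": 12, "Jalaid2015_2": 12,
    "Jalaid2015_3": 12,
}

# Assumption: the test split is exactly the sequences listed in Table 3.
TEST_SEQUENCES = {
    "Zhengzhou2011", "Taian2010_2", "Taian2011_2", "Taian2012_2",
    "Taian2013_2", "Gucheng2014", "Jalaid2015_2", "Jalaid2015_3",
}


def split_by_sequence(sequences, test_names):
    """Return (train_val, test) dicts so that no sequence appears on both sides."""
    train_val = {k: v for k, v in sequences.items() if k not in test_names}
    test = {k: v for k, v in sequences.items() if k in test_names}
    return train_val, test


train_val, test = split_by_sequence(SEQUENCES, TEST_SEQUENCES)
print(sum(train_val.values()), sum(test.values()))  # prints "186 175"
```

Splitting at the level of whole sequences, rather than individual images, is what keeps highly correlated frames from leaking between the training and test stages.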

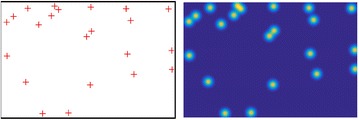

We also follow the standard annotation paradigm that manually provides each tassel with a dot annotation [15]. Indeed, dotting is regarded as a natural way for humans to count. It not only gives the raw counts of the image but also provides information on how objects are spatially distributed. Figure 4 shows four example images with the dotted annotations.

Fig. 4.

Example images in the MTC dataset with dotted annotations. Images are from the a Zhengzhou2010, b Gucheng2012, c Taian2011_1 and d Jalaid2015_1 sequences, respectively

Local counts regression network

In this section we describe our proposed local counts regression network and show how to use it to address effectively the in-field counting problem of maize tassels.

The high-level idea of counting by regression is simple: given an image I and a regression target T, the goal is to seek some kind of regression function F so that $T = F(I)$. Standard solutions are to regress explicitly the raw counts in an image [13] (T is the global count) or to regress implicitly the density map of an image [15] (T becomes a density map, and the counts can be acquired by integrating over the entire density map). However, as we will show in our experiments, neither solution is effective for maize tassels counting. The reason may boil down to the heterogeneity of maize tassels. As shown in Fig. 4, maize tassels exhibit uncertain poses and varying sizes, making them hard to describe with only a global image representation or with a density map given only dotted annotations. Indeed, this is what makes maize tassels counting different from other standard counting problems.

Inspired by a recent idea of redundant counting [28], we propose to regress the local counts $T_l$ to address the counting problem of maize tassels. $T_l$ refers to the object count within a small sub-image $I_s$. The proposed local regression has several benefits: (1) local characteristics are easier to model than global ones; (2) by regressing the local counts, we avoid the hard problem of dense per-pixel learning (compared to estimating the local density map); (3) by sampling small image patches, we gain access to a large amount of training data, allowing us to train a high-capacity model. In particular, the regression function F should be powerful enough to capture the heterogeneous in-field variations. Inspired by the recent success of deep convolutional neural networks (CNNs) in visual recognition [32, 34], we choose to formulate F in a deep CNN-based framework. The goal is thus to recover $T_l$ with a set of non-linear transformations F given $I_s$, i.e., $T_l = F(I_s)$. Figure 5 compares the conceptual differences between regression goals. During the prediction, sub-images are densely sampled from a test image, and F assigns a local count to each sub-image patch. The final count of the image is recovered by aggregating all sub-image counts into a count map with the same size as the test image, followed by a per-pixel normalisation step that divides each pixel by the number of sub-images that cast a prediction on it.

Fig. 5.

Conceptual differences of different regression targets. The global count regression directly regresses the number of image counts in an image. (Local) density map regression treats the two-dimensional (local) density map as the regression target. Our proposed local count regression regresses the local count computed from the local density map (best viewed in colour)

Regression target

Different regression targets imply different regression strategies, so defining the regression target is the first and most important step. In this paper, we first follow the standard way of generating the ground truth density by placing a Gaussian at each dot annotation [15]. Formally, given a ground-truth dot image Y, a density map D can be defined as $D = G_\sigma * Y$, where $G_\sigma$ denotes a two-dimensional Gaussian kernel parametrised by $\sigma$, and $*$ indicates the convolution operation. In other words, D is generated by performing Gaussian smoothing on Y. Figure 6 shows an example of D given the corresponding dotted annotations. It is worth noting that the sum of the density map is not necessarily an integer. The reason is that, when dots are close to the image boundary, part of their Gaussian mass falls outside the image; this definition thus naturally takes a fraction of an object into account.

Fig. 6.

An example of manually-annotated dot image (left) and its corresponding ground truth density map (right)
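As a minimal sketch of the density-map construction described above, the following pure-Python code places one unit-mass Gaussian at each dot and drops whatever mass falls outside the image. The function names, the truncation of the kernel at three standard deviations, and the toy image size are assumptions made for illustration; they are not taken from the authors' MatConvNet implementation.

```python
import math


def gaussian_kernel(sigma, radius):
    """Return a (2*radius+1) x (2*radius+1) Gaussian kernel normalised to sum to 1."""
    size = 2 * radius + 1
    kernel = [[math.exp(-((x - radius) ** 2 + (y - radius) ** 2) / (2.0 * sigma ** 2))
               for x in range(size)] for y in range(size)]
    total = sum(map(sum, kernel))
    return [[v / total for v in row] for row in kernel]


def density_map(dots, height, width, sigma=8.0):
    """Build a density map from (x, y) dot annotations.

    Each dot contributes a unit-mass Gaussian; mass outside the image is lost,
    so the map may sum to a non-integer value.
    """
    radius = int(3 * sigma)  # assumed truncation radius
    kernel = gaussian_kernel(sigma, radius)
    D = [[0.0] * width for _ in range(height)]
    for cx, cy in dots:
        for ky, row in enumerate(kernel):
            y = cy + ky - radius
            if not 0 <= y < height:
                continue
            for kx, v in enumerate(row):
                x = cx + kx - radius
                if 0 <= x < width:
                    D[y][x] += v
    return D


def total_count(D):
    """Integrate (sum) the density map to obtain the image-level count."""
    return sum(map(sum, D))


# Two interior dots and one dot on the left border of an 80 x 80 toy image.
D = density_map([(40, 40), (45, 42), (0, 30)], height=80, width=80, sigma=4.0)
print(round(total_count(D), 3))
```

The two interior dots each contribute a full count of 1, while the border dot contributes only slightly more than half of its mass, which is why the total is a fractional number.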

However, in contrast to [15, 18], we do not regard D as our regression target (we will show later in our experiments that the density map is too demanding as a regression target) but use the local counts integrated from the density map. In other words, some approaches produce a 2D network output, e.g., [18] outputs an 18 × 18 result, while TasselNet produces a 1 × 1 output. The final count map is generated by scanning the image and combining the predictions at each location. Let D(x, y) be the pixel-level count of D at the location (x, y); then the regression target of local counts for the i-th sub-image can be defined as

$$T_l^i = \sum_{(x, y) \in S_i} D(x, y), \tag{1}$$

where $S_i$ denotes the set of pixel locations of the i-th sub-image.
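The local-count target defined above amounts to summing the density map over the window of one sub-image. A minimal sketch follows, using a hand-written 4 × 4 toy density map; the function name `local_count` and the toy values are illustrative assumptions.

```python
def local_count(D, x0, y0, r):
    """Sum the density map D over the r x r window whose top-left corner is (x0, y0)."""
    return sum(D[y][x] for y in range(y0, y0 + r) for x in range(x0, x0 + r))


# Toy 4 x 4 density map that integrates to 2 objects in total.
D = [
    [0.0, 0.1, 0.0, 0.0],
    [0.2, 0.4, 0.1, 0.0],
    [0.0, 0.1, 0.3, 0.2],
    [0.0, 0.0, 0.2, 0.4],
]

# Local targets for the four non-overlapping 2 x 2 sub-images.
targets = [local_count(D, x0, y0, 2) for y0 in (0, 2) for x0 in (0, 2)]
print([round(t, 2) for t in targets])  # prints "[0.7, 0.1, 0.1, 1.1]"
```

For non-overlapping windows the local targets add up to the image-level count; with overlapping windows the same pixel contributes to several targets, which is why a normalisation step is needed at prediction time.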

Network architecture

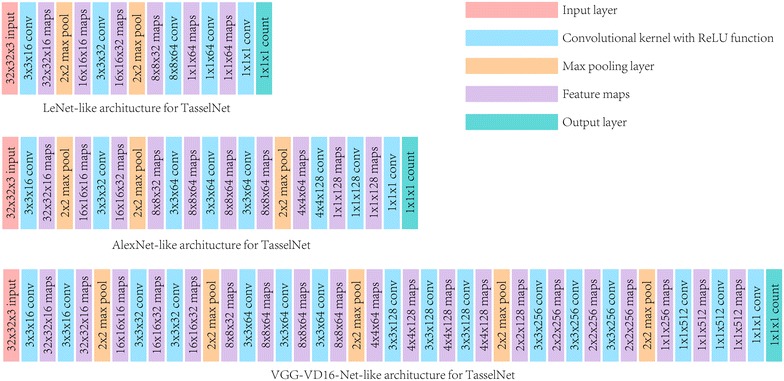

The network architecture is closely related to the model capacity, and the model capacity is a key factor that affects the counting performance. Motivated by the leading role of CNNs in Computer Vision, in this paper we evaluate three typical network architectures: a low-capacity 4-layer model similar to the seminal LeNet architecture [35], a medium-capacity 7-layer model similar to the AlexNet architecture [32], as well as a high-capacity 16-layer model sharing the same spirit as the VGG-VD16-Net [34].

We follow the modern CNN design principle used in [34]: adopting only small 3 × 3 convolution kernels with 1-pixel padding to preserve the size of the tensor, doubling the number of feature maps in the higher layers to compensate for the loss of spatial information after the max pooling operation, synthesizing learnt features with two extra fully-connected layers, and using the ReLU function after each convolutional/fully-connected layer. Figure 7 shows the three architectures with a basic input sub-image size of 32 × 32. The number of parameters within the LeNet-like, AlexNet-like and VGG-VD16-Net-like architectures are about , and , respectively.

Fig. 7.

Three typical CNN architectures used in TasselNet

Loss function

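The learning of the regression network should be driven by a loss function. In this paper, we evaluate three typical loss functions used in regression problems: the $\ell_1$ loss, the $\ell_2$ loss, and the Huber loss. The $\ell_1$ and $\ell_2$ losses take the form

$$L_1 = \frac{1}{M}\sum_{i=1}^{M} |a_i|, \tag{2}$$

$$L_2 = \frac{1}{2M}\sum_{i=1}^{M} a_i^2, \tag{3}$$

where $L_1$ and $L_2$ denote the $\ell_1$ and $\ell_2$ loss functions, respectively, M is the number of training sub-images, and $a_i$ is the residual that measures the difference between the regressed count and the ground truth count for the i-th sub-image, i.e., $a_i = F(I_s^i) - T_l^i$, where $I_s^i$ is the i-th sub-image and $T_l^i$ its ground truth local count. Empirically, the $\ell_1$ loss is considered more robust to noise than the $\ell_2$ loss. Apart from these two standard choices, another widely-used loss function in robust regression is the Huber loss, which is defined as

$$L_\delta = \frac{1}{M}\sum_{i=1}^{M} l_\delta(a_i), \tag{4}$$

where

$$l_\delta(a_i) = \begin{cases} \frac{1}{2}a_i^2, & |a_i| \le \delta, \\ \delta\left(|a_i| - \frac{1}{2}\delta\right), & \text{otherwise}, \end{cases} \tag{5}$$

and $\delta$ is a user-defined constant. The Huber loss can be viewed as an integration of the $\ell_1$ and $\ell_2$ losses. We will show later in our experiments that the $\ell_1$ loss is the most effective one for maize tassels counting.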
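The three losses can be written compactly in Python. This is a sketch under stated assumptions: the residuals are passed in as a plain list, the $\ell_1$ loss is averaged over the M sub-images, and the $\ell_2$ loss carries the conventional factor of one half so that it coincides with the quadratic branch of the Huber loss. The exact normalisation constants used in the original implementation may differ.

```python
def l1_loss(residuals):
    """Mean absolute residual over the M training sub-images."""
    return sum(abs(a) for a in residuals) / len(residuals)


def l2_loss(residuals):
    """Mean squared residual with the conventional 1/2 factor (assumed)."""
    return sum(a * a for a in residuals) / (2.0 * len(residuals))


def huber_loss(residuals, delta=1.0):
    """Quadratic for |a| <= delta, linear beyond it; averaged over sub-images."""
    def per_sample(a):
        if abs(a) <= delta:
            return 0.5 * a * a
        return delta * (abs(a) - 0.5 * delta)
    return sum(per_sample(a) for a in residuals) / len(residuals)


# Residuals = regressed local count minus ground truth local count.
residuals = [0.5, -2.0, 0.0, 1.5]
print(l1_loss(residuals), l2_loss(residuals), huber_loss(residuals))
# prints "1.0 0.8125 0.65625"
```

With a very large `delta` the Huber loss reduces to the $\ell_2$ form, and for residuals far beyond `delta` it grows linearly like the $\ell_1$ loss, which is the sense in which it integrates the two.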

Merging and normalizing sub-image counts

During the prediction, TasselNet scans the image in a sliding-window manner with a fixed stride (see Table 2). Each window corresponds to a sub-image of size $r \times r$. For each sub-image, TasselNet regresses a local count indicating the number of tassels within the sub-image. Assume that K sub-images in all are processed. Since each image region may be counted multiple times due to the dense sampling, the final count of maize tassels cannot be computed by simply summing over all K local counts. To address this, we develop a merging strategy to map the K local counts back to the original test image. The normalization strategy is similar to the procedures introduced in [18, 28]. Assume that the k-th sub-image count is $c_k$; we distribute $c_k$ evenly over every pixel of the k-th sub-image, so each pixel receives $c_k / r^2$ (the sum of pixel-level counts still equals $c_k$). In this way, a count map C with the same resolution as the test image can be constructed by accumulating each local count at the location where its sub-image was sampled. Figure 2 illustrates this process. Finally, by constructing a normalisation image P that records how many times each pixel is counted, the final count of the image c can be computed as

$$c = \sum_{x, y} \frac{C(x, y)}{P(x, y)}, \tag{6}$$

where C(x, y) and P(x, y) denote the value of C and P at the location (x, y).
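The merging and normalisation step can be sketched as follows. The code assumes that predictions arrive as pairs of a window origin and a local count; the function names `window_origins` and `merge_and_normalise` are hypothetical, and pixels that no window covers are simply skipped.

```python
def window_origins(height, width, r, stride):
    """Top-left (x0, y0) corners of all r x r windows that fit inside the image."""
    return [(x0, y0)
            for y0 in range(0, height - r + 1, stride)
            for x0 in range(0, width - r + 1, stride)]


def merge_and_normalise(local_counts, height, width, r):
    """Merge ((x0, y0), count) predictions into a count map and return the image count.

    C accumulates count / r^2 at every pixel of each window, P records how many
    windows covered each pixel, and the final count is the sum of C / P.
    """
    C = [[0.0] * width for _ in range(height)]
    P = [[0] * width for _ in range(height)]
    for (x0, y0), count in local_counts:
        share = count / (r * r)
        for y in range(y0, y0 + r):
            for x in range(x0, x0 + r):
                C[y][x] += share
                P[y][x] += 1
    return sum(C[y][x] / P[y][x]
               for y in range(height) for x in range(width) if P[y][x] > 0)


# Toy example: a 4 x 4 image with uniform density 0.125 per pixel (2 objects),
# scanned with overlapping 2 x 2 windows at stride 1; each window holds 0.5.
origins = window_origins(4, 4, r=2, stride=1)
predictions = [(origin, 0.5) for origin in origins]
print(len(origins), merge_and_normalise(predictions, 4, 4, r=2))  # prints "9 2.0"
```

Simply summing the nine overlapping local counts would give 4.5 here, more than twice the true count of 2, which is the over-counting that the normalisation image P corrects.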

Implementation and learning details

We implement TasselNet based on MatConvNet [36]. Original high-resolution images are resized to 1/8 of their original size to reduce the computational burden. During training, we densely crop sub-images with a fixed stride (see Table 2) from the 186 images belonging to the training and validation sequences of the MTC dataset. We randomly shuffle these sub-images; 90% of them are used for training, and the rest for validation. Before feeding the image samples into the network, each sub-image is preprocessed by mean subtraction (the mean is computed from the training subset).
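A rough sketch of the dense cropping and the shuffled 90/10 split is given below for a single image. It assumes a 3648 × 2736 image resized to 1/8 (456 × 342), a sub-image size of 32 and a stride of 8 (r/4, as in Table 2). The helper names and the fixed random seed are illustrative assumptions, and the actual pipeline pools sub-images from all training and validation images before shuffling.

```python
import random


def window_origins(height, width, r, stride):
    """Top-left (x0, y0) corners of all r x r windows that fit inside the image."""
    return [(x0, y0)
            for y0 in range(0, height - r + 1, stride)
            for x0 in range(0, width - r + 1, stride)]


def train_val_split(items, train_fraction=0.9, seed=0):
    """Shuffle the items and split them into training and validation subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(train_fraction * len(items))
    return items[:n_train], items[n_train:]


# One Zhengzhou/Taian image after resizing to 1/8: 456 x 342 pixels.
origins = window_origins(height=342, width=456, r=32, stride=8)
train, val = train_val_split(origins)
print(len(origins), len(train), len(val))  # prints "2106 1895 211"
```

A single resized image already yields about two thousand sub-images, which illustrates how dense sampling turns a few hundred field images into a training set of several hundred thousand samples.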

Note that no data augmentation is performed when reporting the results, because we consider that field-based conditions already cover various scenarios (the diversity of training data can be guaranteed). One may further improve the network performance with random rotation, flipping and cropping of training images. It should be kept in mind, however, that the ground truth counts may change accordingly.

The parameters of the convolution kernels are initialised with the improved Xavier method [37]. The standard stochastic gradient descent is used to optimise the network parameters. The learning rate is initially set to 0.01, and is decreased by a factor of 10 after 5 epochs and further decreased by a factor of 10 after another 10 epochs. Thus, we train TasselNet for 25 epochs in all. To allow the gradient to back-propagate easily from the output layer to the input layer, we add a batch normalisation layer [38] after each convolutional layer before ReLU. The training time of TasselNet varies from half a day to 2 days depending on the number of training samples and the network architecture used. The prediction time for each image takes about 2.5 seconds (Matlab 2016a, OS: Ubuntu 14.04 64-bit, CPU: Intel E5-2630 2.40GHz, GPU: Nvidia GeForce GTX TITAN X, RAM: 64 GB).
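The step-wise learning rate schedule described above can be written as a small function. This sketch assumes 1-indexed epochs and that the two drops take effect after epochs 5 and 15, so that the three rates are used for 5, 10 and 10 epochs respectively; the function name and argument names are illustrative.

```python
def learning_rate(epoch, base_lr=0.01, drops=(5, 15), factor=10.0):
    """Learning rate for a 1-indexed epoch.

    The rate is divided by `factor` once for every drop point already passed.
    """
    n_drops = sum(1 for d in drops if epoch > d)
    return base_lr / (factor ** n_drops)


schedule = [learning_rate(epoch) for epoch in range(1, 26)]
for epoch in (1, 5, 6, 15, 16, 25):
    print(epoch, learning_rate(epoch))
```

Under these assumptions, epochs 1 to 5 use 0.01, epochs 6 to 15 use 0.001, and epochs 16 to 25 use 0.0001.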

Table 2 summarises the default parameters used in our experiments. With the default training stride of r/4 (8 pixels for r = 32), 355,473 and 31,167 sub-images are densely sampled and ready for training and validation, respectively.

Table 2.

Default parameters setting used in our experiments

| Parameter | Remark | Value |
|---|---|---|
| | Network architecture | AlexNet-like |
| | Loss function | $\ell_1$ |
| r | Sub-image size | 32 |
| | Sampling stride during training | r/4 |
| | Sampling stride during prediction | r/4 |
| $\sigma$ | Gaussian kernel parameter | 8 |

Results and discussion

We evaluate the effectiveness of TasselNet on the test sequences of the MTC dataset. It is worth noting that Jalaid2015_2 and Jalaid2015_3 are two very challenging sequences. As shown in Fig. 8, images in the Jalaid2015_2 sequence suffer from dramatic illumination variations (Jalaid is located in a high-latitude area), and maize tassels in the Jalaid2015_3 sequence exhibit extremely crowded distributions. Extensive experiments are conducted to investigate key factors that affect the counting performance and to compare TasselNet against other state-of-the-art approaches. Based on the experimental results, we also suggest several good practices for practitioners working on in-field counting problems.

Fig. 8.

Two example images from the Jalaid2015_2 (a) and Jalaid2015_3 (b) sequences. Images in two sequences exhibit dramatic illumination variations, dazzling visual characteristics, as well as extremely crowded distributions, which renders great difficulties for counting even for a human expert

Evaluation metric

The mean absolute error (MAE) and the mean squared error (MSE) are used as the evaluation metrics to assess the counting performance. They take the form

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N} |t_i - c_i|, \tag{7}$$

$$\mathrm{MSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} (t_i - c_i)^2}, \tag{8}$$

where N denotes the number of test images, $t_i$ is the ground truth count for the i-th image (computed by summing over the whole density map), and $c_i$ is the inferred image count for the i-th image (computed as per Eq. (6)). MAE quantifies the accuracy of the estimates, and MSE assesses the robustness of the estimates. The lower these two measures are, the better the counting performance is.
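Both metrics are straightforward to compute. The sketch below assumes, as is common in the counting literature, that the reported MSE is the square root of the mean squared error; this reading is consistent with the reported values, since a mean squared error without the root could not be smaller than the square of the MAE (6.6² ≈ 43.6 > 9.6). The function names and toy counts are illustrative.

```python
import math


def mae(ground_truth, predicted):
    """Mean absolute error between ground truth and inferred image counts."""
    return sum(abs(t - c) for t, c in zip(ground_truth, predicted)) / len(ground_truth)


def mse(ground_truth, predicted):
    """Root of the mean squared error (the 'MSE' convention assumed here)."""
    n = len(ground_truth)
    return math.sqrt(sum((t - c) ** 2 for t, c in zip(ground_truth, predicted)) / n)


# Toy counts for three test images.
truth = [10.0, 20.0, 30.0]
inferred = [12.0, 17.0, 30.0]
print(round(mae(truth, inferred), 3), round(mse(truth, inferred), 3))
# prints "1.667 2.082"
```

Because squaring emphasises large residuals, the second metric is always at least as large as the MAE and reacts more strongly to occasional large counting errors.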

Choices of different network architectures, number of training samples, loss functions, Gaussian kernel parameters, and sub-image sizes

Here we perform extensive evaluations to justify our design choices. In principle, the inclusion of specific design choices should be justified on the validation set. However, since we enforce the test set to come from different sequences, the validation set exhibits a substantially different data distribution from the test set, and validating our design choices on the validation set therefore seems suboptimal. Instead, as a preliminary study, we directly report the counting performance on the test set to see how variations in these design choices affect the final counting performance. Although this is a slight abuse of the standard protocol, we demonstrate later that TasselNet with any design choice improves over the other baseline approaches by large margins. Below we follow the default parameter settings when reporting experimental results unless a specific design choice is declared.

Network architecture

We first evaluate how the model capacity influences the results. As mentioned above, three TasselNet architectures are considered: LeNet-like, AlexNet-like and VGG-VD16-Net-like. The learning curves of the three models are shown in Fig. 9. Clearly, the deeper the model, the lower the training error it achieves. However, a lower training error does not imply a lower validation error for our problem: the validation error of the VGG-VD16-Net-like model fluctuates noticeably and is also higher than that of the AlexNet-like model. Numerical results on the test set are shown in Table 3. The AlexNet-like architecture achieves the overall best counting performance, with an MAE of 6.6 and an MSE of 9.6. The inferiority of the LeNet-like and VGG-VD16-Net-like models may be attributed to low model capacity and to over-fitting on the training data, respectively, as can be observed in Fig. 9. The LeNet-like architecture, with its low capacity, shows a relatively high training error, which implies that the model may be under-fitting the data. Figure 9 also shows that the VGG-VD16-Net-like architecture fits the training data well but exhibits a higher validation error than the AlexNet-like architecture, which suggests that the model may not generalize well. Based on these results, a moderately complex model seems sufficient for maize tassels counting. In the following evaluations, the AlexNet-like architecture is used in TasselNet.

Fig. 9.

Training (train) and validation (val) errors in terms of MAE versus the number of epochs on LeNet-like, AlexNet-like and VGG-VD16-like TasselNet architectures

Table 3.

Comparison of different network architectures for maize tassels counting on the test set of MTC dataset

| Network | Zhengzhou2011 MAE | Zhengzhou2011 MSE | Taian2010_2 MAE | Taian2010_2 MSE | Taian2011_2 MAE | Taian2011_2 MSE | Taian2012_2 MAE | Taian2012_2 MSE | Taian2013_2 MAE | Taian2013_2 MSE | Gucheng2014 MAE | Gucheng2014 MSE | Jalaid2015_2 MAE | Jalaid2015_2 MSE | Jalaid2015_3 MAE | Jalaid2015_3 MSE | Overall MAE | Overall MSE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LeNet | 4.4 | 5.4 | 6.3 | 8.0 | 2.9 | 3.7 | 6.4 | 7.9 | 4.9 | 5.8 | *3.8* | *5.0* | 16.3 | 17.0 | 28.7 | 33.0 | 7.2 | 11.3 |
| AlexNet | 4.9 | 6.1 | *5.2* | *6.6* | *2.5* | *2.9* | *4.8* | *5.8* | *4.0* | *5.0* | 5.3 | 6.5 | 16.0 | 16.6 | *20.7* | 25.2 | *6.6* | *9.6* |
| VGG-VD16-Net | *2.1* | *2.7* | 10.6 | 12.4 | 13.1 | 15.9 | 5.5 | 10.0 | 4.3 | 5.4 | 10.0 | 11.3 | *10.7* | *11.2* | 20.8 | *24.9* | 9.3 | 12.4 |

The lowest error is italicised

Further, it is worth noting that there exist some recent network architectures, such as ResNets [39] and DenseNets [40], that exhibit more powerful modelling ability than the three baseline architectures presented in this paper. One may obtain better counting performance using these advanced architectures. We leave these explorations open at present.

Number of training samples

Here we investigate how the number of training samples affects the counting performance. We vary the sampling stride using the range of values , leading to , , , and training sub-images, respectively. Experimental results are listed in Table 4. We observe that the number of training samples indeed plays a vital role: MAE decreases from 9.5 to 6.5 as the number of training sub-images increases. In addition, the overall performance between and is almost identical, implying that a moderate number of training sub-images can already capture the in-field variations well.

Table 4.

Counting performance with different number of training samples () on the MTC dataset

| | MAE | MSE |
|---|---|---|
| | 9.5 | 14.2 |
| | 8.5 | 13.4 |
| | 6.6 | *9.6* |
| | *6.5* | 10.8 |

The performance is averaged over 8 test sequences, and the lowest error is italicised
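To illustrate why the sampling stride controls the number of training samples, the sketch below counts how many square sub-images a sliding window yields from one image. The image size, window size, and stride values are hypothetical and chosen only for illustration; they are not the settings used in the experiments.

```python
def num_sub_images(height, width, size, stride):
    """Number of size x size windows obtained by sliding with a given stride."""
    if height < size or width < size:
        return 0
    rows = (height - size) // stride + 1
    cols = (width - size) // stride + 1
    return rows * cols


# Hypothetical 256 x 384 image and 32 x 32 window (illustration only).
for stride in (32, 16, 8, 4):
    print("stride", stride, "->", num_sub_images(256, 384, 32, stride), "sub-images")
```

Halving the stride roughly quadruples the number of sub-images, but neighbouring windows overlap more and more, which is one plausible reason why the gain in accuracy saturates.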

Loss function

Here we compare the effect of different loss functions. As mentioned above, $\ell_1$ loss, $\ell_2$ loss, and Huber loss are evaluated. Huber loss contains a free parameter , so we further add three variants of Huber loss when , , and . The lower this parameter is, the more Huber loss looks like $\ell_1$ loss. The higher it is, the more it looks like $\ell_2$ loss. Results are shown in Table 5. We observe that no single loss achieves consistently better results than its competitors over all test sequences. This may be related to the nature of the maize tassels counting problem and to the specific data distributions of the test sequences. Huber loss with shows better performance on the two challenging sequences, which suggests that Huber loss is indeed robust to noise, but at the cost of sacrificing the ability to fit normal data samples (poor performance on the Taian2013_2 and Gucheng2014 sequences). Huber loss also has the problem that there is no principled way to choose an appropriate (the performance degrades when ). The counting performance of $\ell_1$ loss and $\ell_2$ loss is comparable, but is generally more stable.

Table 5.

Comparison of different loss functions for maize tassels counting on the MTC dataset

| Loss | MAE | MSE |
|---|---|---|
| Huber () | 8.5 | 12.2 |
| Huber () | 7.5 | 10.5 |
| Huber () | 7.3 | 10.0 |
| | 7.3 | 10.3 |
| | *6.6* | *9.6* |

The performance is averaged over 8 test sequences, and the lowest error is italicised
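The relationship between the three losses can be made concrete with a short sketch. It uses the standard textbook definition of the Huber loss, quadratic for small residuals and linear for large ones; the threshold name `delta` and the residual values are assumptions made for illustration, and the exact form used in the experiments may differ.

```python
def l1_loss(residual):
    """Absolute error."""
    return abs(residual)


def l2_loss(residual):
    """Half squared error."""
    return 0.5 * residual * residual


def huber_loss(residual, delta):
    """Standard Huber loss: quadratic within delta, linear beyond it."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * residual * residual
    return delta * (a - 0.5 * delta)


# Hypothetical residuals between predicted and ground-truth local counts.
for r in (0.5, 3.0):
    print(r, l1_loss(r), l2_loss(r), huber_loss(r, delta=1.0))
```

With a very large threshold every residual falls in the quadratic branch, so the Huber loss coincides with the half squared error; with a very small threshold it is almost everywhere linear, that is, a scaled absolute error.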

Gaussian kernel parameter

The sensitivity to the Gaussian kernel parameter is further evaluated. Concretely, we set , , and , respectively. The results are listed in Table 6. We observe that the optimal for each test sequence is different. The reason is perhaps that a fixed cannot appropriately describe maize tassels of different sizes (due to different cultivars). Although the optimal counting performance cannot be achieved with a single , the counting performance with different does not vary significantly, which suggests that the mechanism of local counts regression is not very sensitive to specific choices of . Empirically, one can set an appropriate by observing the Gaussian smoothing responses on the training set. The responses should fit the median size of maize tassels.

Table 6.

Comparison of different Gaussian kernel parameter for maize tassels counting on the MTC dataset

| | MAE | MSE |
|---|---|---|
| | 7.0 | 11.3 |
| | *6.6* | *9.6* |
| | 7.6 | 10.9 |

The performance is averaged over 8 test sequences, and the lowest error is italicised
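The role of the Gaussian kernel parameter can be illustrated with a small sketch that turns dotted annotations into a density map by placing a normalised Gaussian at every dot. The parameter is called `sigma` here, the kernel is truncated at three times `sigma`, and the image size and dot positions are hypothetical; none of these details is claimed to match the original implementation.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalised 2-D Gaussian kernel of side 2 * radius + 1 that sums to 1."""
    kernel = [[math.exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma))
               for dx in range(-radius, radius + 1)]
              for dy in range(-radius, radius + 1)]
    total = sum(map(sum, kernel))
    return [[v / total for v in row] for row in kernel]


def density_map(height, width, dots, sigma):
    """Place one unit-mass Gaussian at each (row, col) dot annotation."""
    radius = int(3 * sigma)  # assumed truncation radius
    kernel = gaussian_kernel(sigma, radius)
    dmap = [[0.0] * width for _ in range(height)]
    for (y, x) in dots:
        for ky, row in enumerate(kernel):
            for kx, v in enumerate(row):
                yy, xx = y + ky - radius, x + kx - radius
                if 0 <= yy < height and 0 <= xx < width:
                    dmap[yy][xx] += v
    return dmap


# Hypothetical 64 x 64 image with two annotated tassels.
dots = [(20, 20), (40, 45)]
dmap = density_map(64, 64, dots, sigma=4.0)
total_count = sum(map(sum, dmap))
print("count recovered from density map:", round(total_count, 6))
```

Because each kernel sums to one, the density map integrates to the number of dots as long as the kernels stay inside the image; a larger `sigma` spreads each tassel over more pixels without changing the total count.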

Sub-image sizes

The influence of different sub-image sizes is also analysed. We compare the performance of four settings, including , , , and . Table 7 lists the results. We again observe that the optimal performance for each test sequence does not correlate well with the sub-image size. We think this is related to the specific tassel sizes in each sequence. In practice, a relatively small (but not too small) sub-image size is preferable, not only because one can densely sample a sufficient number of training samples, but also because the variations within a small receptive field are more easily modelled.

Table 7.

Comparison of different sub-image sizes for maize tassels counting on the MTC dataset

| | MAE | MSE |
|---|---|---|
| | 9.9 | 13.4 |
| | *6.6* | *9.6* |
| | 6.8 | 10.8 |
| | 6.9 | 11.5 |

The performance is averaged over 8 test sequences, and the lowest error is italicised
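To show how the sub-image size changes the regression targets, the sketch below computes local counts on a toy density map. It assumes that the local count of a sub-image is the sum of the density values inside the window, in the same way that the image count is the sum over the whole density map; the map, the unit masses, and the tile sizes are hypothetical.

```python
def local_count(dmap, top, left, size):
    """Sum of the density values inside a size x size window."""
    return sum(sum(row[left:left + size]) for row in dmap[top:top + size])


def tile_counts(dmap, size):
    """Local counts of non-overlapping tiles that cover the whole map."""
    height, width = len(dmap), len(dmap[0])
    return [[local_count(dmap, top, left, size)
             for left in range(0, width, size)]
            for top in range(0, height, size)]


# Toy 8 x 8 density map with three unit masses (illustration only).
dmap = [[0.0] * 8 for _ in range(8)]
for (y, x) in [(1, 1), (2, 6), (6, 3)]:
    dmap[y][x] += 1.0

for size in (2, 4, 8):
    tiles = tile_counts(dmap, size)
    print(size, tiles, "total:", sum(map(sum, tiles)))
```

Smaller tiles give more training targets with simpler content, while larger tiles give fewer targets that each summarise more of the scene; the total count is the same in every case.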

Comparison with the state of the art

To place TasselNet in the context of the state of the art, several well-established baseline approaches are chosen for comparison:

JointSeg [41]: JointSeg is the state-of-the-art tassel segmentation method. The object count can be easily inferred from the segmentation results. We further apply some morphological operations as post-correction to reduce segmentation noise. This approach can be viewed as a counting-by-segmentation baseline. It is not specially designed for counting, but the comparison helps to verify whether our problem could be addressed by a simple image processing technique.

mTASSEL [2]: mTASSEL is the state-of-the-art tassel detection approach designed specifically for maize tassels. mTASSEL uses multi-view representations to characterise the visual characteristics of tassels to achieve robust detection. This is a counting-by-detection baseline.

GlobalReg [42]: GlobalReg directly regresses the global count of images. Off-the-shelf fully-connected deep activations extracted from a pre-trained model are used as a holistic image representation. Then the global image feature is linearly mapped into a global object count by ridge regression. This is a global counting-by-regression baseline.

DensityReg [15]: DensityReg is the seminal work that proposes the idea of density map regression. It predicts a count density for every pixel by optimising a so-called MESA distance. This is a global density-based counting-by-regression baseline.

Counting-CNN (CCNN) [18]: CCNN is a state-of-the-art object counting approach. It treats the local density map as the regression target and also uses an AlexNet-like CNN architecture. This is a local density-based counting-by-regression baseline.
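Of the baselines listed above, the global regression idea is the simplest to sketch: a holistic feature vector per image is mapped linearly to a count by ridge regression. The snippet below is a minimal closed-form version on toy data, not the GlobalReg implementation; the three-dimensional features (two values plus a constant for the bias, which is regularised here for simplicity), the counts, and the regularisation strengths are hypothetical.

```python
def ridge_fit(X, y, lam):
    """Solve (X^T X + lam * I) w = X^T y by Gauss-Jordan elimination."""
    d = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(row[i] * t for row, t in zip(X, y)) for i in range(d)]
    M = [A[i] + [b[i]] for i in range(d)]  # augmented matrix [A | b]
    for col in range(d):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, d), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(d):
            if r != col:
                f = M[r][col]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[col])]
    return [M[i][d] for i in range(d)]


def ridge_predict(X, w):
    """Linear map from image features to a global count."""
    return [sum(xi * wi for xi, wi in zip(row, w)) for row in X]


# Toy features and counts generated as 3 * f0 + 5 * f1 + 2 (illustration only).
X = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0], [1.0, 1.0, 1.0], [2.0, 1.0, 1.0]]
y = [5.0, 7.0, 10.0, 13.0]

w_weak = ridge_fit(X, y, lam=1e-8)   # almost ordinary least squares
w_strong = ridge_fit(X, y, lam=10.0)  # heavily shrunk weights
print(w_weak, ridge_predict(X, w_weak))
print(w_strong)
```

In practice the feature vectors would be deep activations with thousands of dimensions, where the regularisation term is what keeps the linear system well conditioned.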

Qualitative and quantitative results are shown in Fig. 10 and Table 8, respectively. Results of TasselNet are reported using the default parameters setting, i.e., with the AlexNet-like architecture and suggested parameters. According to the results, we can make the following observations:

TasselNet outperforms the other baseline approaches on 7 out of 8 test sequences and achieves the overall best counting performance: its MAE and MSE are significantly lower than those of the other competitors.

The poor performance of JointSeg and mTASSEL implies that the problem of in-field counting of maize tassels cannot be solved by simple colour-cue-based image segmentation or standard object detection.

Even a simple global regression can achieve comparable counting performance against mTASSEL in which the bounding-box-level annotations are utilized. This suggests it is better to formulate the problem of maize tassels counting in a counting-by-regression manner.

Regressing the global density map can also reduce the counting error effectively. However, it is hard to extend this idea to the deep CNN-based paradigm, because there is currently no dataset with thousands of labelled image samples to make the learning of deep networks tractable, especially in plant-related scenarios. Hence, DensityReg cannot benefit from deep CNNs, and its performance may be limited by the power of the feature representation.

The performance of CCNN even falls behind the global regression baseline. In experiments we observe that CCNN performs poorly when given an image with just a few tassels of different types. Compared to regressing local counts as in TasselNet, CCNN needs to fit a harsher pixel-level ground truth density, so it likely suffers from the vague definition of the density map caused by different tassel sizes. This may explain why local density regression does not work well for objects of varying sizes such as maize tassels.

Qualitative results in Fig. 10 show that TasselNet can give reasonable approximations to the ground truth density maps. In most cases, the estimated counts are similar to the ground truth counts. However, there also exist some circumstances in which TasselNet cannot give an accurate prediction. The last row in Fig. 10 shows three failure cases: (1) when the image is captured under extremely strong illumination, highlight regions of leaves contribute several fake responses; (2) if maize tassels present long-tailed shapes in images, the long-tailed parts only receive partial local counts, resulting in an under-estimate; (3) extremely crowded scenes are also beyond the ability of TasselNet. To alleviate these issues, one may consider adding extra training data that contain extremely crowded scenarios. Alternatively, since the training sequences and test sequences exhibit more or less different data distributions, it may be possible to use domain adaptation [43] to close the last few percent of difference between sequences. We leave these as future explorations of this work.

As a summary of our evaluations, we suggest the following good practices for maize-tassel-like in-field counting problems:

Try the idea of counting by regression if the objects exhibit significant occlusions.

Try local counts regression if the physical size of objects varies dramatically.

Use a relatively small sub-image size so that a sufficient number of training samples could be sampled.

It is safe to use a moderately complex deep model.

Try loss first to achieve a robust regression.

Fig. 10.

Qualitative results of ground truth density maps overlaid on original images and counting maps predicted by TasselNet. The number shown below each sub-figure denotes the tassel count integrated over the density/count map. The last row shows three unsuccessful predictions

Table 8.

Mean absolute errors (MAE) and mean squared errors (MSE) for maize tassels counting on the test set of MTC dataset

| Method | Zhengzhou2011 MAE | Zhengzhou2011 MSE | Taian2010_2 MAE | Taian2010_2 MSE | Taian2011_2 MAE | Taian2011_2 MSE | Taian2012_2 MAE | Taian2012_2 MSE | Taian2013_2 MAE | Taian2013_2 MSE | Gucheng2014 MAE | Gucheng2014 MSE | Jalaid2015_2 MAE | Jalaid2015_2 MSE | Jalaid2015_3 MAE | Jalaid2015_3 MSE | Overall MAE | Overall MSE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| JointSeg | 20.9 | 23.2 | 46.6 | 47.9 | 16.4 | 19.7 | 25.1 | 29.8 | 6.5 | 8.0 | 7.3 | 10.5 | 27.8 | 29.1 | 53.2 | 61.3 | 24.2 | 31.6 |
| mTASSEL | 9.8 | 14.9 | 18.6 | 22.1 | 11.6 | 12.7 | 5.3 | 7.8 | 13.1 | 16.6 | 31.1 | 35.3 | 16.2 | 18.0 | 46.6 | 51.0 | 19.6 | 26.1 |
| GlobalReg | 19.0 | 21.5 | 23.0 | 24.7 | 14.1 | 16.8 | 13.5 | 15.7 | 19.6 | 25.2 | 19.5 | 21.7 | 11.2 | 13.7 | 42.1 | 45.4 | 19.7 | 23.3 |
| DensityReg | 16.1 | 20.2 | 9.9 | 10.7 | 9.2 | 11.7 | 10.8 | 12.7 | 20.2 | 23.7 | 9.4 | 10.5 | *7.2* | *7.9* | 23.5 | 26.9 | 11.9 | 14.8 |
| CCNN | 21.3 | 23.3 | 28.9 | 31.6 | 12.4 | 16.0 | 12.6 | 15.3 | 18.9 | 23.7 | 21.6 | 24.1 | 9.6 | 12.4 | 39.5 | 46.4 | 21.0 | 25.5 |
| TasselNet | *4.9* | *6.1* | *5.2* | *6.6* | *2.5* | *2.9* | *4.8* | *5.8* | *4.0* | *5.0* | *5.3* | *6.5* | 16.0 | 16.6 | *20.7* | *25.2* | *6.6* | *9.6* |

The lowest error is italicised
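The per-sequence comparison in Table 8 can be checked with a few lines of code. The sketch below copies the MAE values from the table and finds the best method for each test sequence; the variable names are illustrative only.

```python
sequences = ["Zhengzhou2011", "Taian2010_2", "Taian2011_2", "Taian2012_2",
             "Taian2013_2", "Gucheng2014", "Jalaid2015_2", "Jalaid2015_3"]

# Per-sequence MAE values transcribed from Table 8.
mae_table = {
    "JointSeg":   [20.9, 46.6, 16.4, 25.1, 6.5, 7.3, 27.8, 53.2],
    "mTASSEL":    [9.8, 18.6, 11.6, 5.3, 13.1, 31.1, 16.2, 46.6],
    "GlobalReg":  [19.0, 23.0, 14.1, 13.5, 19.6, 19.5, 11.2, 42.1],
    "DensityReg": [16.1, 9.9, 9.2, 10.8, 20.2, 9.4, 7.2, 23.5],
    "CCNN":       [21.3, 28.9, 12.4, 12.6, 18.9, 21.6, 9.6, 39.5],
    "TasselNet":  [4.9, 5.2, 2.5, 4.8, 4.0, 5.3, 16.0, 20.7],
}

# Method with the lowest MAE on each sequence.
best = {}
for i, seq in enumerate(sequences):
    best[seq] = min(mae_table, key=lambda m: mae_table[m][i])

wins = [s for s in sequences if best[s] == "TasselNet"]
lost = [s for s in sequences if best[s] != "TasselNet"]

print("TasselNet is best on", len(wins), "of", len(sequences), "sequences")
print("exceptions:", [(s, best[s]) for s in lost])
```

The only sequence on which TasselNet does not have the lowest MAE is Jalaid2015_2, where DensityReg performs best.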

Conclusions

In this paper, we rethink the problem nature of in-field counting of maize tassels and formulate the problem as an object counting task. A tailored MTC dataset with 361 field images captured over 6 years, together with the corresponding manually-labelled dotted annotations, is constructed. An effective deep CNN-based solution, TasselNet, is also presented to count maize tassels effectively via local counts regression. We show that local counts regression is particularly suitable for counting problems whose ground truth density maps cannot be precisely defined. Extensive experiments are conducted to justify the effectiveness of our proposition. Results show that TasselNet achieves state-of-the-art performance and outperforms previous baseline approaches by large margins.

For future work, we will continue to enrich the MTC dataset, because training data, and especially their diversity, are always the key to good performance. In addition, we will explore the feasibility of improving the counting performance in the context of domain adaptation, because the adaptation of object counting problems still remains an open question. In-field counting of maize tassels is a challenging problem, not only because of the unconstrained natural environment but also because of the self-changing rule of plant growth. We hope this paper will attract the interest of both the Plant Science and Computer Vision communities and inspire further studies to advance our knowledge and understanding of the problem.

Authors' contributions

HL proposed the idea of counting maize tassels via local counts regression, implemented the technical pipeline, conducted the experiments, analysed the results, and drafted the manuscript. ZC, YX and CS co-supervised the study and contributed to writing the manuscript. BZ helped to design the experiments and provided technical support and computational resources for efficient model training. All authors read and approved the final manuscript.

Acknowledgements

The authors would like to thank Xiu-Shen Wei for useful discussion.

Competing interests

The authors declare that they have no competing interests.

Availability of data and materials

The MTC dataset and other supporting materials are available online at: https://sites.google.com/site/poppinace/.

Consent for publication

Not applicable.

Ethics approval and consent to participate

Not applicable.

Funding

This work was supported in part by the Special Scientific Research Fund of Meteorological Public Welfare Profession of China under Grant GYHY200906033 and in part by the National Natural Science Foundation of China under Grant 61502187.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Hao Lu, Email: poppinace@hust.edu.cn.

Zhiguo Cao, Email: zgcao@hust.edu.cn.

Yang Xiao, Email: Yang_Xiao@hust.edu.cn.

Bohan Zhuang, Email: bohan.zhuang@adelaide.edu.au.

Chunhua Shen, Email: chunhua.shen@adelaide.edu.au.

References

- 1. Ye M, Cao Z, Yu Z. An image-based approach for automatic detecting tasseling stage of maize using spatio-temporal saliency. In: Proceedings of eighth international symposium on multispectral image processing and pattern recognition; 2013. p. 89210. International Society for Optics and Photonics. doi:10.1117/12.2031024.

- 2. Lu H, Cao Z, Xiao Y, Fang Z, Zhu Y, Xian K. Fine-grained maize tassel trait characterization with multi-view representations. Comput Electron Agric. 2015;118:143–158. doi:10.1016/j.compag.2015.08.027.

- 3. Guo W, Fukatsu T, Ninomiya S. Automated characterization of flowering dynamics in rice using field-acquired time-series RGB images. Plant Methods. 2015;11(1):7. doi:10.1186/s13007-015-0047-9.

- 4. Yang W, Guo Z, Huang C, Duan L, Chen G, Jiang N, Fang W, Feng H, Xie W, Lian X, et al. Combining high-throughput phenotyping and genome-wide association studies to reveal natural genetic variation in rice. Nat Commun. 2014. doi:10.1038/ncomms6087.

- 5. Gage JL, Miller ND, Spalding EP, Kaeppler SM, de Leon N. TIPS: a system for automated image-based phenotyping of maize tassels. Plant Methods. 2017;13(1):21. doi:10.1186/s13007-017-0172-8.

- 6. Fiorani F, Schurr U. Future scenarios for plant phenotyping. Annu Rev Plant Biol. 2013;64:267–291. doi:10.1146/annurev-arplant-050312-120137.

- 7. Felzenszwalb PF, Girshick RB, McAllester D, Ramanan D. Object detection with discriminatively trained part-based models. IEEE Trans Pattern Anal Mach Intell. 2010;32(9):1627–1645. doi:10.1109/TPAMI.2009.167.

- 8. Minervini M, Scharr H, Tsaftaris SA. Image analysis: the new bottleneck in plant phenotyping [applications corner]. IEEE Signal Process Mag. 2015;32(4):126–131. doi:10.1109/MSP.2015.2405111.

- 9. Ali S, Nishino K, Manocha D, Shah M. Modeling, simulation and visual analysis of crowds: a multidisciplinary perspective. In: Ali S, Nishino K, Manocha D, Shah M, editors. Modeling, simulation and visual analysis of crowds. New York: Springer; 2013.

- 10. Tsaftaris SA, Scharr H (2014) Computer vision problems in plant phenotyping (CVPPP). https://www.plant-phenotyping.org/CVPPP2014. Accessed 25 Sept 2017.

- 11. Tsaftaris SA, Scharr H, Pridmore T (2015) Computer vision problems in plant phenotyping (CVPPP). https://www.plant-phenotyping.org/CVPPP2015. Accessed 25 Sept 2017.

- 12. Tsaftaris SA, Scharr H, Pridmore T (2017) Computer vision problems in plant phenotyping (CVPPP). https://www.plant-phenotyping.org/CVPPP2017. Accessed 25 Sept 2017.

- 13. Chan AB, Liang Z-SJ, Vasconcelos N. Privacy preserving crowd monitoring: counting people without people models or tracking. In: Proceedings of IEEE conference on computer vision and pattern recognition (CVPR). IEEE; 2008. p. 1–7. doi:10.1109/CVPR.2008.4587569.

- 14. Zhang C, Li H, Wang X, Yang X. Cross-scene crowd counting via deep convolutional neural networks. In: Proceedings of IEEE conference on computer vision and pattern recognition (CVPR); 2015. p. 833–841. doi:10.1109/cvpr.2015.7298684.

- 15. Lempitsky V, Zisserman A. Learning to count objects in images. In: Advances in neural information processing systems (NIPS); 2010. p. 1324–1332. http://papers.nips.cc/paper/4043-learning-to-count-objects-in-images.

- 16. Xie W, Noble JA, Zisserman A. Microscopy cell counting and detection with fully convolutional regression networks. Comput Methods Biomech Biomed Eng Imaging Vis. 2016.

- 17. Arteta C, Lempitsky V, Noble JA, Zisserman A. Interactive object counting. In: Proceedings of European conference on computer vision (ECCV). Springer; 2014. p. 504–518. doi:10.1007/978-3-319-10578-9_33.

- 18. Onoro-Rubio D, López-Sastre RJ. Towards perspective-free object counting with deep learning. In: Proceedings of European conference on computer vision (ECCV). Springer; 2016. p. 615–629. doi:10.1007/978-3-319-46478-7_38.

- 19. Arteta C, Lempitsky V, Zisserman A. Counting in the wild. In: Proceedings of European conference on computer vision (ECCV). Springer; 2016. p. 483–498. doi:10.1007/978-3-319-46478-7_30.

- 20. Giuffrida MV, Minervini M, Tsaftaris SA. Learning to count leaves in rosette plants. In: Proceedings of British Machine Vision Conference Workshops (BMVCW); 2015. doi:10.5244/c.29.cvppp.1.

- 21. Rahnemoonfar M, Sheppard C. Deep count: fruit counting based on deep simulated learning. Sensors. 2017;17(4):905. doi:10.3390/s17040905.

- 22. Loy CC, Chen K, Gong S, Xiang T. Crowd counting and profiling: methodology and evaluation. In: Modeling, simulation and visual analysis of crowds. New York: Springer; 2013. p. 347–382. doi:10.1007/978-1-4614-8483-7_14.

- 23. Rabaud V, Belongie S. Counting crowded moving objects. In: Proceedings of IEEE conference on computer vision and pattern recognition (CVPR), vol. 1. IEEE; 2006. p. 705–711. doi:10.1109/cvpr.2006.92.

- 24. Li M, Zhang Z, Huang K, Tan T. Estimating the number of people in crowded scenes by mid based foreground segmentation and head-shoulder detection. In: Proceedings of international conference on pattern recognition; 2008. p. 1–4. doi:10.1109/icpr.2008.4761705.

- 25. Dollar P, Wojek C, Schiele B, Perona P. Pedestrian detection: an evaluation of the state of the art. IEEE Trans Pattern Anal Mach Intell. 2012;34(4):743–761. doi:10.1109/TPAMI.2011.155.

- 26. Vapnik VN, Vapnik V. Statistical learning theory. New York: Wiley; 1998.

- 27. Fiaschi L, Köthe U, Nair R, Hamprecht FA. Learning to count with regression forest and structured labels. In: Proceedings of international conference on pattern recognition (ICPR). IEEE; 2012. p. 2685–2688.

- 28. Cohen JP, Lo HZ, Bengio Y. Count-ception: counting by fully convolutional redundant counting. arXiv 2017.

- 29. Chen K, Loy CC, Gong S, Xiang T. Feature mining for localised crowd counting. In: Proceedings of British Machine Vision Conference (BMVC), vol. 1; 2012. p. 3. doi:10.5244/c.26.21.

- 30. Zhang Y, Zhou D, Chen S, Gao S, Ma Y. Single-image crowd counting via multi-column convolutional neural network. In: Proceedings of IEEE conference on computer vision and pattern recognition (CVPR); 2016. p. 589–597. doi:10.1109/cvpr.2016.70.

- 31. Sindagi VA, Patel VM. A survey of recent advances in cnn-based single image crowd counting and density estimation. Pattern Recognit Lett. 2017.

- 32. Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. In: Advances in neural information processing systems (NIPS); 2012. p. 1097–1105.

- 33. Lu H, Cao Z, Xiao Y, Fang Z, Zhu Y. Toward good practices for fine-grained maize cultivar identification with filter-specific convolutional activations. IEEE Trans Autom Sci Eng. 2016.

- 34. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. CoRR abs/1409.1556 2014.

- 35. LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86(11):2278–2324. doi:10.1109/5.726791.

- 36. Vedaldi A, Lenc K. MatConvNet: convolutional neural networks for matlab. In: Proceedings of ACM international conference on multimedia; 2015. p. 689–692. doi:10.1145/2733373.2807412.

- 37. He K, Zhang X, Ren S, Sun J. Delving deep into rectifiers: surpassing human-level performance on imagenet classification. In: Proceedings of IEEE international conference on computer vision (ICCV); 2015. p. 1026–1034. doi:10.1109/iccv.2015.123.

- 38. Ioffe S, Szegedy C. Batch normalization: accelerating deep network training by reducing internal covariate shift. In: Proceedings of international conference on machine learning (ICML); 2015.

- 39. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of IEEE conference on computer vision and pattern recognition (CVPR); 2016. doi:10.1109/cvpr.2016.90.

- 40. Huang G, Liu Z, Weinberger KQ, van der Maaten L. Densely connected convolutional networks. In: IEEE conference on computer vision and pattern recognition (CVPR); 2016.

- 41. Lu H, Cao Z, Xiao Y, Li Y, Zhu Y. Region-based colour modelling for joint crop and maize tassel segmentation. Biosyst Eng. 2016;147:139–150. doi:10.1016/j.biosystemseng.2016.04.007.

- 42. Tota K, Idrees H. Counting in dense crowds using deep features. In: CRCV; 2015.

- 43. Lu H, Cao Z, Xiao Y, Zhu Y. Two-dimensional subspace alignment for convolutional activations adaptation. Pattern Recognit. 2017;71:320–336. doi:10.1016/j.patcog.2017.06.010.
