Abstract

Background

Assessment of competence in basic critical care echocardiography is complex. Competence relies not only on imaging accuracy but also on interpretation and appropriate management decisions. The experience needed to achieve these skills in real time is likely greater than that required for imaging accuracy alone. We aimed to assess the feasibility of using simulation to estimate the number of studies required to attain competence in basic critical care echocardiography.

Methods

This prospective pilot study recruited trainees with varying levels of experience in basic critical care echocardiography, using experts as the reference standard. We used high-fidelity simulation to assess speed and accuracy, measured as total time taken, total position difference and total angle difference across the basic acoustic windows. Interpretation and clinical application skills were assessed using a clinical scenario. ‘Cut-off’ values for the number of studies required for competence were estimated.

Results

Twenty-seven trainees and eight experts were included. Trainees acquired the subcostal view most quickly (median 23 s, IQR 19–37). Eighty-nine percent of trainees did not achieve accuracy across all views; 81% achieved accuracy with the parasternal long axis, and the least accurate view was the parasternal short axis (44% of trainees). Fewer studies were required for competence in image acquisition than for competence in correct interpretation or in integration into management (15 vs. 40 vs. 50 studies, respectively).

Discussion

The use of echocardiography simulation to determine competence in basic critical care echocardiography is feasible. Competence in image acquisition appears to be achieved with less experience than correct interpretation and correct management decisions. Further studies are required.

Keywords: Critical care echocardiography, basic echo, echo training, competency, simulation

Introduction

The use of basic critical care echocardiography (CCE) is rapidly expanding and now forms a mandatory part of the Australasian College of Intensive Care Medicine training curriculum. To enable its successful implementation into mainstream intensive care practice, it is imperative that basic training methods are robust and carefully evaluated.

The difficulty in defining and assessing competence in basic CCE is well recognised.1,2 Current minimum training requirements are based mainly on expert opinion and round-table consensus, with 30 performed and interpreted studies often quoted as the minimum.3 A handful of single-centre studies have sought to evaluate this.4,5 These studies used small samples, focused principally on image quality as judged by expert opinion, had limited reproducibility because many different patients were scanned, and often failed to take into account the real-time translation of echocardiography (echo) findings into clinical practice. Overall, robust evidence is lacking, and this is reflected in the variability in the minimum number of scans and the supervision required for basic entry-level accreditation, depending on where training is undertaken (Table 1).

Table 1.

Minimum number of scans for basic accreditation by region.

We suggest that competence in basic CCE depends not only on imaging accuracy but also on the ability to interpret the images appropriately, in real time at the bedside, and, most importantly, to integrate these findings into the clinical situation to decide on appropriate management. The aim of this pilot study was to use high-fidelity echo simulation and a standardised clinical scenario to objectively evaluate trainee competence in basic CCE in relation to prior echo experience. We analysed competence in three domains: imaging accuracy, real-time interpretation and management decisions based on the study. We hypothesised that the minimum number of scans required for competence in basic CCE is greater than the number required for competence in image acquisition alone.

Methods

Study design and setting

We conducted a prospective, single-centre pilot study at Nepean Hospital, Sydney, Australia. Ethical approval was granted by the Nepean Blue Mountains Human Research and Ethics Committee (LNR/14/NEPEAN/2), and written consent was obtained from all participants.

Inclusion criteria were (1) adult age (>18 years), (2) completion of a basic CCE course (including more than 4 h of hands-on training) accredited by the Australasian College of Intensive Care Medicine and (3) written consent. Candidates who met the inclusion criteria and had performed more than 100 studies were recorded as having performed 100 studies for data analysis purposes. Candidates who had not undertaken a suitable training course were excluded.

A group of ‘experts’ was recruited to provide a reference standard for imaging accuracy. Expert status was defined as (1) having performed more than 1000 comprehensive studies, (2) holding the Diploma in Diagnostic Ultrasound, an advanced echocardiography qualification from the Australasian Society of Ultrasound in Medicine, and (3) having extensive experience in imaging critically ill patients.

Echocardiography

A high-fidelity Vimedix echo simulator (CAE Healthcare, Montreal, Canada), which records probe position (in terms of distance and angle) and the time taken for image acquisition, was used for imaging. Specific pathology can be loaded, along with normal anatomical structures such as ribs and lung, to improve fidelity. Candidates were given a clinical scenario (see Supplemental Data 1) and then asked to perform a basic CCE study. The scenario described a middle-aged woman who was tachycardic, hypotensive and mildly hypoxic, with a vague history of heart disease. An ECG and chest X-ray (CXR) were provided. The pathology chosen on the simulator was severe systolic dysfunction, regional wall motion akinesia in the left anterior descending coronary artery territory and a small pericardial effusion without cardiac chamber compromise. All soft-tissue and bony structures (e.g. ribcage, lungs and liver) were present to provide a life-like level of difficulty.

Candidates were then asked to complete a structured questionnaire on their findings (see Supplemental Data 2). Before completing the questionnaire, they could review their images on a computer screen provided. Finally, candidates had to choose the most appropriate management option based on their interpretation of the images. This process aimed to reflect normal clinical practice. Competence was assessed across three domains: imaging accuracy, image interpretation and management choice.

Image acquisition

Reference images for each standard basic CCE imaging window, known as ‘target cut planes’, were defined by SO and set on the simulator. Each candidate watched a video explaining the premise of the study (i.e. assessment of imaging and interpretation accuracy) and its ‘rules’ (e.g. that acquisition was timed), and was then asked to perform all five standard basic CCE views on the simulator: parasternal long axis (PSL), parasternal short axis at the level of the papillary muscles (PSS), apical four chamber (A4C), subcostal (SC) and inferior vena cava (IVC) views. Candidates were observed and timed for each view and signalled to the observer when they were satisfied with it; the timer was then stopped and the image stored. This process was repeated for all five views. For each image, the simulator reported the total acquisition time, the total position difference and the total angle difference from the ‘reference image’ (see Figure 1). An image was considered accurate if both the total position difference and the total angle difference were below the upper limit of the expert group’s 95% confidence interval relative to the stored reference image.
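The accuracy rule above can be sketched in Python. This is a minimal illustration, not the authors' analysis (which used JMP): it assumes the threshold is the upper bound of a two-sided, t-based 95% confidence interval for the expert mean, computed separately for total position difference and total angle difference. All input values are invented for illustration.

```python
import math
import statistics

# Two-sided 95% critical value of Student's t for 7 degrees of freedom,
# i.e. an expert group of n = 8. Hard-coded (assumption) to avoid a SciPy
# dependency; a different group size needs a different value.
T_CRIT_95_DF7 = 2.365


def upper_95ci(values, t_crit=T_CRIT_95_DF7):
    """Upper bound of the 95% CI for the mean of `values` (assumed definition)."""
    if len(values) < 2:
        raise ValueError("need at least two expert measurements")
    sem = statistics.stdev(values) / math.sqrt(len(values))
    return statistics.mean(values) + t_crit * sem


def is_accurate(trainee_pos, trainee_angle, expert_pos, expert_angle):
    """Accurate only if BOTH differences fall below the expert-derived thresholds."""
    return (trainee_pos < upper_95ci(expert_pos)
            and trainee_angle < upper_95ci(expert_angle))


# Hypothetical expert differences from the reference image (invented values,
# in whatever units the simulator reports).
expert_pos = [4.0, 5.0, 6.0, 5.5, 4.5, 5.0, 6.5, 3.5]
expert_angle = [8.0, 10.0, 12.0, 9.0, 11.0, 10.0, 10.0, 10.0]

print(round(upper_95ci(expert_pos), 2))                  # 5.84
print(is_accurate(5.0, 10.5, expert_pos, expert_angle))  # True
print(is_accurate(6.0, 9.0, expert_pos, expert_angle))   # False: position too far
```

Requiring both conditions mirrors the text: a well-angled image acquired from the wrong position (or vice versa) is not counted as accurate.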

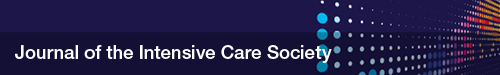

Figure 1.

Imaging on the echocardiography simulator: (a) reference probe position (red) vs. trainee image acquisition (grey); (b) reference vs. trainee probe position difference, measured in terms of total angle difference (pitch + roll + yaw) and distance difference (x + y + z); (c) example of a reference image; (d) example of a trainee image.
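Panel (b) describes the two simulator metrics as sums over axes. A minimal Python sketch, assuming each metric is the sum of absolute per-axis differences between the trainee and reference probe poses, with angles compared on a 360° circle. The simulator's internal calculation is not described here, and the `ProbePose` type and example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProbePose:
    """Hypothetical probe pose: position (x, y, z) and orientation in degrees."""
    x: float
    y: float
    z: float
    pitch: float
    roll: float
    yaw: float


def angle_gap(a, b):
    """Smallest absolute difference between two angles in degrees (wraps at 360)."""
    d = (a - b) % 360.0
    return min(d, 360.0 - d)


def total_position_difference(ref, probe):
    """Sum of absolute per-axis distance differences (x + y + z)."""
    return abs(ref.x - probe.x) + abs(ref.y - probe.y) + abs(ref.z - probe.z)


def total_angle_difference(ref, probe):
    """Sum of absolute per-axis angle differences (pitch + roll + yaw)."""
    return (angle_gap(ref.pitch, probe.pitch)
            + angle_gap(ref.roll, probe.roll)
            + angle_gap(ref.yaw, probe.yaw))


reference = ProbePose(x=0.0, y=0.0, z=0.0, pitch=10.0, roll=0.0, yaw=350.0)
trainee = ProbePose(x=3.0, y=-4.0, z=1.0, pitch=15.0, roll=-5.0, yaw=5.0)
print(total_position_difference(reference, trainee))  # 8.0
print(total_angle_difference(reference, trainee))     # 25.0
```

The wrap-around handling means a yaw of 350° and 5° differ by 15°, not 345°; whether the simulator does the same is an assumption.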

Interpretation of focused cardiac ultrasound (FCU)

Eight findings had to be defined by checking the appropriate boxes: left ventricle (LV) function, LV size, right ventricle (RV) function, RV size, presence of pericardial fluid, presence of echocardiographic signs of tamponade, IVC size and IVC collapsibility. Interpretation of the basic CCE study was considered appropriate if six or more of the eight findings were correct.
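The scoring rule can be expressed as a short Python sketch. The finding names and answer-key values below are hypothetical labels: the LV, pericardial and IVC entries follow the scenario described in this paper, but the RV entries are placeholders because the text does not state them. An unanswered finding is assumed to count as incorrect.

```python
# The eight findings recorded on the structured questionnaire (labels assumed).
FINDINGS = (
    "lv_function", "lv_size", "rv_function", "rv_size",
    "pericardial_fluid", "tamponade_signs", "ivc_size", "ivc_collapsibility",
)
PASS_MARK = 6  # six or more correct out of eight


def interpretation_score(answers, key):
    """Number of findings where the candidate's answer matches the key.

    A missing (unanswered) finding counts as incorrect.
    """
    return sum(answers.get(finding) == key[finding] for finding in FINDINGS)


def interpretation_correct(answers, key):
    return interpretation_score(answers, key) >= PASS_MARK


# Hypothetical answer key; RV entries are placeholders.
key = {
    "lv_function": "severely_reduced", "lv_size": "normal",
    "rv_function": "normal", "rv_size": "normal",
    "pericardial_fluid": "present", "tamponade_signs": "absent",
    "ivc_size": "normal", "ivc_collapsibility": "not_collapsing",
}

# A candidate who gets two findings wrong still passes (6/8).
candidate = dict(key, rv_function="reduced", tamponade_signs="present")
print(interpretation_score(candidate, key), interpretation_correct(candidate, key))  # 6 True
```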

Management of clinical scenario

A list of five possible management options was given: further fluid resuscitation, diuresis, inotropic support, pericardial drain, or none of the above. Inotropic support was considered the correct response, given the significant hypotension, a normal-sized LV with severe systolic dysfunction, and a normal-sized IVC with no collapsibility.

Statistical analysis

Statistical analysis was performed with JMP version 11.2.0 (SAS Institute Inc., Cary, NC, USA). Continuous variables are expressed as mean ± standard deviation (SD), or as median with interquartile range (IQR) if not normally distributed. Differences between groups were analysed using analysis of variance (ANOVA); where a significant difference was found, Tukey HSD pairwise comparisons were performed to identify which pairs differed. Categorical variables are expressed as number and percentage and were compared using Fisher’s exact test, because cells often contained fewer than 5 values. Probability values are two-sided, and p < 0.05 was considered significant. Receiver operating characteristic (ROC) curves were generated to determine the optimal cut-off point (best combination of sensitivity and specificity) for the number of scans required to achieve competence in imaging accuracy, correct image interpretation and correct management choice.
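The text does not state how the ‘optimal point’ on each ROC curve was chosen. One common choice is the cut-off that maximises Youden's J (sensitivity + specificity - 1). A minimal Python sketch under that assumption, treating ‘predicted competent’ as having performed at least the cut-off number of studies; the data are hypothetical.

```python
def confusion_at(cutoff, studies, competent):
    """Counts when 'predicted competent' means having performed >= cutoff studies."""
    tp = fp = tn = fn = 0
    for n, ok in zip(studies, competent):
        predicted = n >= cutoff
        if predicted and ok:
            tp += 1
        elif predicted:
            fp += 1
        elif ok:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def youden_cutoff(studies, competent):
    """Cut-off maximising Youden's J = sensitivity + specificity - 1.

    Ties keep the lowest cut-off. Assumes both outcomes are present in the data.
    """
    best = None
    for cutoff in sorted(set(studies)):
        tp, fp, tn, fn = confusion_at(cutoff, studies, competent)
        sens = tp / (tp + fn)
        spec = tn / (tn + fp)
        j = sens + spec - 1
        if best is None or j > best[1]:
            best = (cutoff, j, sens, spec)
    return best


# Hypothetical data: studies performed and whether the outcome was achieved.
studies = [2, 5, 8, 12, 20, 30, 45, 60]
competent = [False, False, True, False, True, True, True, True]
cutoff, j, sens, spec = youden_cutoff(studies, competent)
print(cutoff, round(sens, 2), round(spec, 2))  # 20 0.8 1.0
```

Other criteria (for example, the point closest to the top-left corner of the ROC plot) can give different cut-offs on small samples such as this one.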

Results

Thirty-five subjects were included in this pilot study: 27 trainees and 8 experts. Data were collected over a six-month period from September 2015 to February 2016. Of the trainees, 13 had completed 0–9 FCU studies, 6 had completed 10–19, 3 had completed 20–39, 2 had completed 40–50 and 3 had completed more than 50 (see Table 2 for demographic data). There were no significant differences between the trainee groups in age, sex, level of training, handedness or previous use of ultrasound. When the expert group was included in the analysis, significant differences emerged: the experts were older, a greater proportion were left-handed and a higher proportion were specialists compared with the trainees.
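The experience bands used in Tables 2 and 3, together with the 100-study cap from the inclusion criteria, can be written as a small Python helper. This is an illustrative sketch with hypothetical function names; experts are grouped separately and passed in directly. Applied to the group sizes above, it reproduces the percentages of the 35 participants shown in Table 2.

```python
ANALYSIS_CAP = 100  # >100 studies recorded as 100 for analysis (per Methods)


def studies_for_analysis(n_studies):
    """Apply the cap described in the inclusion criteria."""
    return min(n_studies, ANALYSIS_CAP)


def experience_group(n_studies):
    """Trainee experience band used in Tables 2 and 3 (experts handled separately)."""
    if n_studies < 0:
        raise ValueError("number of studies cannot be negative")
    for upper, label in ((9, "0-9"), (19, "10-19"), (39, "20-39"), (50, "40-50")):
        if n_studies <= upper:
            return label
    return ">50"


def percent_of_total(counts):
    """Whole-number percentages of the total, as reported in Table 2."""
    total = sum(counts.values())
    return {group: round(100 * n / total) for group, n in counts.items()}


counts = {"0-9": 13, "10-19": 6, "20-39": 3, "40-50": 2, ">50": 3, "expert": 8}
print(percent_of_total(counts))
# {'0-9': 37, '10-19': 17, '20-39': 9, '40-50': 6, '>50': 9, 'expert': 23}
```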

Table 2.

Demographic data based on experience with number of focused cardiac ultrasound studies performed.

| Characteristic | 0–9 studies | 10–19 studies | 20–39 studies | 40–50 studies | >50 studies | Expert (>1000 studies) | p (ANOVA/Fisher’s exact test) |
|---|---|---|---|---|---|---|---|
| Number of candidates (n, %) | 13 (37%) | 6 (17%) | 3 (9%) | 2 (6%) | 3 (9%) | 8 (23%) | – |
| Female gender (n, % in group) | 4/13 (31%) | 3/6 (50%) | 0/3 (0%) | 2/2 (100%) | 1/3 (33%) | 1/8 (13%) | 0.2 |
| Age (years, mean ± SD) | 33 ± 9ᵃ | 32 ± 5ᵃ | 41 ± 9 | 29 ± 4 | 38 ± 3 | 47 ± 9ᵃ | 0.02ᵃ,ᵇ |
| Right handedness (n, % in group) | 12/13 (92%) | 6/6 (100%) | 1/3 (33%) | 2/2 (100%) | 3/3 (100%) | 6/8 (75%) | 0.03ᵇ |
| Ultrasound use for vascular access (n, % in group) | 10/13 (77%) | 2/6 (33%) | 1/3 (33%) | 2/2 (100%) | 3/3 (100%) | 7/8 (87%) | 0.2 |
| Use of ultrasound for other organ analysis (n, % in group) | 8/13 (62%) | 6/6 (100%) | 2/3 (67%) | 0/2 (0%) | 3/3 (100%) | 5/8 (63%) | 0.1 |
| Level of training: JMO/Registrar (n, % in group) | 9 (69%) | 2 (33%) | 1 (33%) | 2 (100%) | 0 | 0 | 0.01ᵇ |
| Level of training: Senior Registrar (n, % in group) | 3 (23%) | 3 (50%) | 0 | 0 | 2 (67%) | 0 | |
| Level of training: Specialist (n, % in group) | 1 (8%) | 1 (17%) | 2 (67%) | 0 | 1 (33%) | 8/8 (100%) | |

JMO = junior medical officer; N = number; SD = standard deviation.

ᵃ Significant difference in age between the expert group and the 0–9 and 10–19 study groups.

ᵇ Analysis of covariance and Fisher’s exact test were not significant when the ‘expert’ group was excluded (age p = 0.3, right handedness p = 0.1, level of training p = 0.1).

Imaging speed and accuracy

Among trainees, the SC view was acquired quickest (median 23 s; IQR 19–37 s) and the PSL view slowest (median 53 s; IQR 28–73 s). The median (IQR) times for the PSS, A4C and IVC views were 44 s (27–69 s), 42 s (31–78 s) and 32 s (15–75 s), respectively. The median total time to complete the study was 253 s (176–357 s) for trainees, compared with 107 s (95–118 s) for experts. Overall, only 3/27 trainees (11%) achieved imaging accuracy in all five views; 6/27 (22%) achieved accuracy in 4/5 views and 9/27 (33%) in 3/5 views. The remaining 33% achieved accuracy in one or two views. All trainees who had performed more than 40 studies achieved accuracy in at least 3/5 views. The PSL view yielded the greatest accuracy, with 22 trainees (81%) obtaining accurate images. The least accurate view was the PSS, with only 12 trainees (44%) obtaining accurate images. For the A4C, SC and IVC views, accurate images were obtained by 13 (48%), 15 (56%) and 18 (67%) trainees, respectively (see Table 3). ROC analysis showed that accurate imaging of the PSL and IVC views was achieved after the least experience (six scans), whereas the PSS, A4C and SC views required more practice (an estimated 15 scans; see Table 4).

Table 3.

Accuracy and time taken for image acquisition, correct interpretation and management based on number of CCE studies performed.

| Outcome | Measure | 0–9 studies | 10–19 studies | 20–39 studies | 40–50 studies | >50 studies | Overall (all trainees) |
|---|---|---|---|---|---|---|---|
| Parasternal long-axis view | Accuracy (n, %) | 10/13 (77%) | 5/6 (83%) | 2/3 (67%) | 2/2 (100%) | 3/3 (100%) | 22/27 (81%) |
| | Median time taken; IQR (s) | 50; 27–81 | 69; 38–103 | 53; 20–69 | 76; 16–135 | 43; 20–65 | 53; 28–73 |
| Parasternal short axis view | Accuracy (n, %) | 6/13 (46%) | 2/6 (33%) | 1/3 (33%) | 2/2 (100%) | 1/3 (33%) | 12/27 (44%) |
| | Median time taken; IQR (s) | 47; 19–109 | 49; 43–71 | 31; 8.9–243 | 38; 34–42 | 42; 22–67 | 44; 27–69 |
| Apical four chamber view | Accuracy (n, %) | 6/13 (46%) | 2/6 (33%) | 1/3 (33%) | 2/2 (100%) | 2/3 (67%) | 13/27 (48%) |
| | Median time taken; IQR (s) | 40; 33–105 | 58; 39–95 | 18; 13–44 | 39; 36–42 | 17; 17–78 | 42; 31–78 |
| Subcostal view | Accuracy (n, %) | 7/13 (54%) | 2/6 (33%) | 2/3 (67%) | 1/2 (50%) | 3/3 (100%) | 15/27 (56%) |
| | Median time taken; IQR (s) | 23; 16–69 | 33; 23–41 | 13; 8–19 | 83; 20–146 | 26; 21–29 | 23; 19–37 |
| Inferior vena cava view | Accuracy (n, %) | 8/13 (62%) | 4/6 (67%) | 2/3 (67%) | 1/2 (50%) | 3/3 (100%) | 18/27 (67%) |
| | Median time taken; IQR (s) | 30; 15–55 | 53; 23–104 | 11.2; 9–76 | 145; 138–151 | 33; 10–47 | 32; 15–75 |
| Correct interpretation | n (%) | 7/13 (54%) | 3/6 (50%) | 1/3 (33%) | 2/2 (100%) | 3/3 (100%) | |
| Correct management | n (%) | 6/13 (46%) | 3/6 (50%) | 2/3 (67%) | 0/2 (0%) | 3/3 (100%) | |

CCE: critical care echocardiography; IQR: interquartile range.

Table 4.

Number of studies required for competency based on accurate imaging, correct image interpretation and correct choice of management.

| Competency | ‘Cut-off’ number of studies | Specificity | Sensitivity | True positive | True negative | False positive | False negative | ROC area under curve |
|---|---|---|---|---|---|---|---|---|
| Imaging accuracy: parasternal long axis view | 6 | 0.6 | 0.7 | 15 | 3 | 2 | 7 | 0.6 |
| Imaging accuracy: parasternal short axis view | 15 | 0.7 | 0.4 | 5 | 11 | 4 | 7 | 0.5 |
| Imaging accuracy: apical four chamber view | 15 | 0.8 | 0.5 | 6 | 11 | 3 | 7 | 0.6 |
| Imaging accuracy: subcostal view | 15 | 0.8 | 0.5 | 7 | 10 | 2 | 8 | 0.6 |
| Imaging accuracy: inferior vena cava view | 6 | 0.6 | 0.7 | 13 | 5 | 4 | 5 | 0.6 |
| Correct interpretation | 40 | 1.0 | 0.3 | 5 | 12 | 0 | 10 | 0.6 |
| Correct management | >50 | 1.0 | 0.4 | 6 | 13 | 0 | 11 | 0.7 |

ROC: receiver operating characteristic. Data are based on the ‘cut-off’ values derived from the ROC curves. Imaging was considered accurate if the recorded probe position and orientation differences were less than the mean + 1 standard deviation of the ‘experts’ (those who had completed >1000 focused studies). Interpretation was considered correct if 6 or more of the 8 findings from the focused cardiac study were correctly identified. Management was considered correct if the appropriate choice was selected from a list of 5 options, based on interpretation of the images taken during simulation.
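The sensitivity and specificity columns follow directly from the confusion counts in the same row: sensitivity = TP/(TP + FN) and specificity = TN/(TN + FP). A short Python check of two rows, using only the counts reported in Table 4:

```python
def sensitivity(tp, fn):
    """Proportion of competent trainees classified as competent by the cut-off."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Proportion of non-competent trainees classified as non-competent by the cut-off."""
    return tn / (tn + fp)


# (true positive, true negative, false positive, false negative) from Table 4.
rows = {
    "Parasternal long axis view": (15, 3, 2, 7),
    "Correct interpretation": (5, 12, 0, 10),
}

for name, (tp, tn, fp, fn) in rows.items():
    sens, spec = sensitivity(tp, fn), specificity(tn, fp)
    print(f"{name}: sensitivity {sens:.2f}, specificity {spec:.2f}")
# Parasternal long axis view: sensitivity 0.68, specificity 0.60
# Correct interpretation: sensitivity 0.33, specificity 1.00
```

Rounded to one decimal place, these give the 0.7/0.6 and 0.3/1.0 values in the table.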

Image interpretation

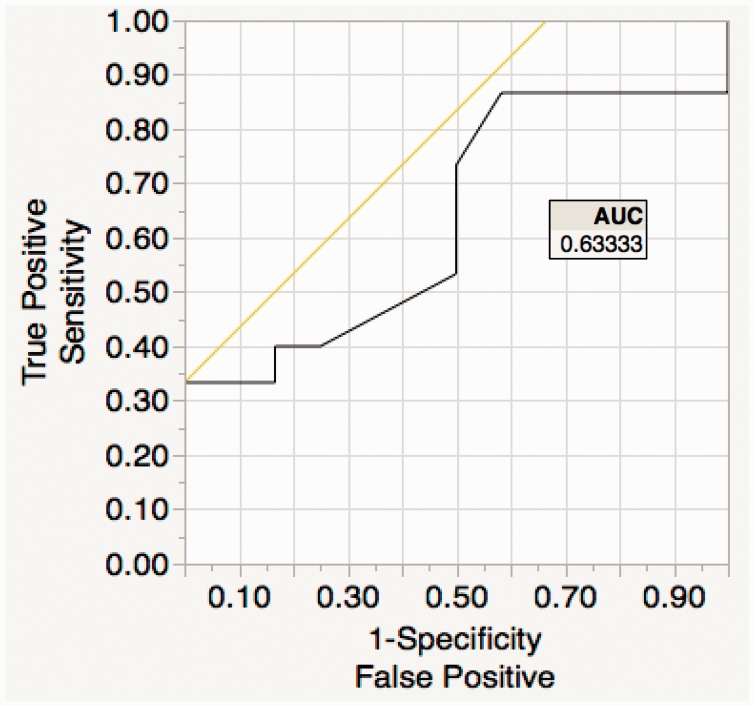

Trainees had a median of 6 correct findings (IQR 5–7), whereas all experts scored 8. Overall, 15 trainees (56%) scored 6 or more, which was considered accurate image interpretation. ROC analysis gave a cut-off of 40 studies for correct image interpretation, with 100% specificity and 33% sensitivity (see Figure 2).

Figure 2.

Image interpretation receiver operating characteristic (ROC) curve. Trainee imaging was considered accurate if the image was within the 95% confidence interval of the expert group for both position and orientation relative to a reference standard. From these data, ROC curves were generated to determine the number of focused cardiac ultrasound studies giving the greatest specificity and sensitivity for achieving ‘accurate imaging’.

Choice of management

Trainees chose the correct management 52% of the time, compared with 100% of experts. ROC analysis gave a cut-off of 50 studies, with a specificity of 100% and a sensitivity of 40%. The median trainee confidence score on a Likert scale was 6 (IQR 5.5–7.5). Trainees who had performed >50 studies reported greater confidence in their interpretation and clinical management choices on the Likert scale (6 ± 2 vs. 9 ± 1, p < 0.001).

Discussion

The results of our pilot study demonstrate that fewer studies were required to gain competence in imaging than to interpret findings correctly in real time and integrate them into an appropriate management plan. This study also highlighted that most trainees did not perform a full basic study with accuracy. Based on ROC analysis, a cut-off of 15 studies was suggested for suitable imaging accuracy, as defined by a group of experts. However, this study was small, the majority of candidates had performed fewer than 20 studies, and the accuracy of this value needs further evaluation. Simulation offers a potentially robust method for accurate and reproducible examination and may identify trainees in need of further training. A larger study is warranted, particularly as intensive care colleges are including basic CCE as a mandatory part of training and current recommendations suggest 30 studies as the minimum standard for competence in basic CCE.3 Our findings suggest that 30 studies may be sufficient to gain minimal proficiency in imaging but perhaps not enough to integrate basic findings accurately into the real-time management of a patient. Trainees found some views harder to obtain than others. Based on ROC analysis, the PSS, A4C and SC views were the hardest in which to achieve accuracy, whereas accuracy in the PSL and IVC views was achieved with less scanning experience. This is in keeping with other studies suggesting that image acquisition skills can be gained with relatively little training,9 although accuracy with the A4C and SC windows may be harder to achieve.10

Competence in basic CCE is challenging both to define and to measure, as evidenced by varying practice worldwide.2,7,8 Proof of competency usually consists of a set of requirements that provide some evidence that physicians have gained the skills needed to perform according to recognised standards.11 For basic CCE, a minimum of 30 fully supervised studies has been suggested as a reasonable target for competency in image acquisition.12 The main premise of this study was to evaluate the minimum standard for trainees to practise safely in an appropriate environment, where they are able to scan but are aware of their limitations and seek expert advice when necessary. Whilst competency in image acquisition may be relatively easy to assess, cognitive competency in integration and clinical application is inherently more challenging to assess. Although our pilot study attempted to address this by including a clinical scenario, it does not take the place of real-time clinical encounters. The number of studies required to progress from competency in image acquisition to competency in image interpretation and application is likely to be large, but the exact figure is unknown.3,13 Our pilot study suggests that around 50 studies may be appropriate. Again, larger studies are needed to evaluate this figure, and these could include multiple clinical scenarios rather than a single one, to avoid bias.

In contrast to cardiology echo training, assessment and training in critical care echo is in its infancy. It is a skill often learned on critically unwell patients, who are challenging to image and have rapidly changing haemodynamics and complex pathology.2 As highlighted in the 2009 international consensus statement,14 competence in basic CCE is less straightforward than in other forms of critical care ultrasonography, such as lung ultrasound, because it requires higher-level cognitive training to translate findings into bedside management. CCE competence is divided into basic and advanced levels, with further subdivision into technical and cognitive domains. Basic CCE technical competency includes understanding the basic physical principles of ultrasound and obtaining accurate images across the five standard views, without the use of comprehensive Doppler or two-dimensional measurements. The cognitive aspects consist of goal-directed assessments focused on ruling in common clinical pathologies, including severe hypovolaemia, LV failure, RV failure, tamponade and massive left-sided valvular regurgitation, and do not include the ability to evaluate or manage more complex haemodynamic problems.14

Simulator-based training with derived motion analysis has recently been evaluated in cardiology fellows and shown to be useful in assessing the transition to clinical practice.15 Skills gained on a simulator have not been proven to transfer to live patients, and simulation does not take the place of actual bedside scanning. However, simulation has been shown to improve knowledge of cardiac anatomy and image acquisition skills, and the quantitative nature of the simulator, with no clinical consequences of failure, may make it a useful addition to current teaching and training methods.16 Detailed kinematic analysis of probe motion may help identify those who require more instruction and training, so that additional knowledge and skills can be targeted to this subset.17 In addition, if echo simulation could be incorporated into high-fidelity real-time simulation training, it may be a useful way to improve the cognitive aspects of basic CCE training, where exposure to a wide variety of common clinical syndromes enables progression of skills in integration and clinical management.

Limitations of our study include the relatively small sample size, which makes it prone to type II (beta) error,18 and the uneven distribution of groups, with over half of candidates having performed fewer than 20 scans. The results from this single-centre cohort may not be reproducible in other trainee and expert groups. In addition, differences in cognitive ability within the groups were difficult to account for. Although we found no significant difference in level of training between the trainee groups, the ability to integrate echo findings intuitively into a clinical scenario should be greater in those who have done more training. Our small sample size precluded analysis of this potential confounding factor. Although no participant had undergone formal Vimedix simulator training, any prior exposure to the simulator may have biased the results, which is a recognised limitation of this study. We did not record previous exposure to echo simulation, and learned simulator competence may explain some of the results for the metrics and timing; further studies would benefit from assessing this previous experience. The strengths of the study include the unbiased, reproducible and accurate assessment of imaging, as well as its emphasis on the clinical application of findings to a patient’s care.

Conclusions

In summary, whilst all trainees were able to perform a basic CCE study, the majority were unable to perform a full study with accuracy. Although the exact number of basic CCE studies required for competency in image interpretation and appropriate management decisions remains unknown, this pilot study suggests that more training is needed in these two domains, possibly around 50 studies. Echo simulation has yet to find its niche in current training programs, but as these evolve it may find a role as an adjunctive training tool and provide an objective way to assess competence in basic CCE. If basic CCE training is to be successfully implemented into intensive care curricula, further studies with larger numbers are required to inform and improve practice.

Acknowledgements

The authors thank Stephen Huang for help with statistical analysis.

Declaration of conflicting interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The author(s) received no financial support for the research, authorship, and/or publication of this article.

References

1. Poelaert J, Mayo P. Education and evaluation of knowledge and skills in echocardiography: how should we organize? Intensive Care Med 2007; 33: 1684–1686.

2. Price S, Via G, Sloth E, et al. Echocardiography practice, training, accreditation in the intensive care: document for the World Interactive Network Focused on Critical Ultrasound (WINFOCUS). Cardiovasc Ultrasound 2008; 6: 49.

3. International expert statement on training standards for critical care ultrasonography. Intensive Care Med 2011; 37: 1077–1083.

4. Labbé V, Ederhy S, Pasquet B, et al. Can we improve transthoracic echocardiography training in non-cardiologist residents? Experience of two training programs in the intensive care unit. Ann Intensive Care 2016; 6: 44.

5. Tanzola RC, Walsh S, Hopman WM, et al. Brief report: focused transthoracic echocardiography training in a cohort of Canadian anesthesiology residents: a pilot study. Can J Anaesth 2013; 60: 32–37.

6. College of Intensive Care Medicine of Australia and New Zealand, http://cicm.org.au/Trainees/Training-Courses/Focused-Cardiac-Ultrasound#30Cases (accessed 27 August 2016).

7. Intensive Care Society, British Society of Echocardiography. FICE Accreditation Pack Final v6, www.ics.ac.uk/ics-homepage/accreditation-modules/focused-intensive-care-echo-fice/ (accessed 27 August 2016).

8. Canadian Society of Echocardiography. 2010 CCS/CSE guidelines for physician training and maintenance of competence in adult echocardiography, www.csecho.ca/pdf/CCS-CSE-Echo-ExecSum.pdf (accessed 27 August 2016).

9. Beraud AS, Rizk NW, Pearl RG, et al. Focused transthoracic echocardiography during critical care medicine training: curriculum implementation and evaluation of proficiency. Crit Care Med 2013; 41: 179–181.

10. Chisholm CB, Dodge WR, Balise RR, et al. Focused cardiac ultrasound training: how much is enough? J Emerg Med 2013; 44: 818–822.

11. Thys D. Clinical competence in echocardiography. Anesth Analg 2003; 97: 313–322.

12. Mayo P. Training in critical care echocardiography. Ann Intensive Care 2011; 1: 36.

13. Focused Cardiac Ultrasound: Recommendations from the American Society of Echocardiography. Expert consensus statement. J Am Soc Echocardiogr 2013; 26: 567–581.

14. Mayo PH, Beaulieu Y, Doelken P, et al. American College of Chest Physicians/La Société de Réanimation de Langue Française Statement on Competence in Critical Care Ultrasonography. Consensus statement. Chest 2009; 135: 1050–1060.

15. Montealegre-Gallegos M, Mahmood F, Kim H, et al. Imaging skills for transthoracic echocardiography in cardiology fellows: The value of motion metrics. Ann Card Anaesth 2016; 19: 245–250.

16. Neelankavil J, Howard-Quijano K, Hsieh TC, et al. Transthoracic echocardiography simulation is an efficient method to train anesthesiologists in basic transthoracic echocardiography skills. Anesth Analg 2012; 115: 1042–1051.

17. Matyal R, Mitchell JD, Hess PE, et al. Simulator-based transesophageal echocardiographic training with motion analysis. A curriculum-based approach. Anesthesiology 2014; 121: 389–399.

18. Freiman JA, Chalmers TC, Smith H, et al. The importance of beta, the type II error and sample size in the design and interpretation of the randomized control trial. Survey of 71 negative trials. N Engl J Med 1978; 299: 690–694.