Abstract

Background: The appearance of early Kienböck disease on radiographs and magnetic resonance imaging (MRI) may be difficult to distinguish from other conditions that affect the lunate. We aimed to assess the interobserver agreement in the diagnosis of early Kienböck disease when evaluated on different imaging modalities. Methods: Forty-three hand surgeon members of the Science of Variation Group were randomized to evaluate radiographs and 35 hand surgeons to evaluate radiographs and MRI scans of 26 patients for the presence of Kienböck disease, the lunate type, and the ulnar variance. We used Fleiss’ kappa analysis to assess the interobserver agreement for categorical variables and compared the κ values between the 2 groups. Results: We found that agreement on the diagnosis of early Kienböck disease was fair (κ, 0.36) among observers who evaluated radiographs alone and moderate (κ, 0.58) among observers who evaluated MRI scans in addition to radiographs, and that the difference in κ values was not statistically significant (P = .057). Agreement did not differ between observers based on imaging modality with regard to the assessment of the lunate type (P = .75) and ulnar variance (P = .15). Conclusions: We found, with the numbers evaluated, a notable but nonsignificant difference in agreement in favor of observers who evaluated MRI scans in addition to radiographs compared with radiographs alone. Surgeons should be aware that the diagnosis of Kienböck disease in the precollapse stages is not well defined, as evidenced by the substantial interobserver variability.

Keywords: Kienböck disease, agreement, radiographs, magnetic resonance imaging, ulnar variance

Introduction

Kienböck disease is idiopathic osteonecrosis of the lunate. Radiographs and magnetic resonance imaging (MRI) scans are used in the evaluation of Kienböck disease.3,16 MRI demonstrates decreased T1 signal in the lunate of patients with Kienböck disease when wrist radiographs are normal or when they show diffuse increased bone density and attenuation of the lunate, but no evidence of collapse.16,19,23 However, increased marrow signal in the lunate is not specific for Kienböck disease.8,21 Other conditions can cause bone marrow edema within the lunate and may be misinterpreted as Kienböck disease,9 such as ulnolunate impaction syndrome,6 intraosseous ganglion cysts, or intercarpal osteoarthritis.1,3,4 The distribution and margins of signal intensity within the lunate may be helpful to discriminate these conditions from Kienböck disease.4 Moreover, negative ulnar variance has been classically associated with Kienböck disease,24 whereas positive ulnar variance has been associated with ulnolunate impaction syndrome,6 but lack of ulnar variance should not rule out either condition and can lead to misdiagnosis.18 Be that as it may, ulnar variance is currently an important consideration in the treatment planning for Kienböck disease.16

In addition, Rhee et al22 demonstrated that a type II lunate (the presence of a medial articulation with the hamate) may protect patients with Kienböck disease against the development of coronal fractures and scaphoid flexion, and may impede disease progression. The authors suggested that patients with an intact type II lunate may potentially benefit most from disease modifying procedures in the early stages of Kienböck disease.

A study by Huellner et al10 has demonstrated that agreement on the diagnosis of various hand and wrist conditions (including Kienböck disease and ulnolunate impaction syndrome) is greater with MRI than radiographs among experienced readers, but less with inexperienced readers. The purpose of our study is to determine the interobserver agreement on the diagnosis of Kienböck disease among hand surgeons of the Science of Variation Group (SOVG)7 who evaluate different imaging modalities, and to determine whether MRI along with radiographs increases the reliability of the diagnosis of Kienböck disease compared with radiographs alone. We hypothesized that the interobserver agreement on the diagnosis of Kienböck disease with no radiographic signs of lunate collapse does not differ for radiographs alone compared with radiographs and MRI scans among the subgroup of hand surgeons of the Science of Variation Group. Our secondary null hypothesis was that the level of surgeon confidence with regard to the diagnosis of Kienböck disease does not vary based on imaging modality. In addition, we hypothesized that the interobserver agreement in the assessment of lunate type according to Viegas et al25 and ulnar variance does not vary based on imaging modality.

Patients and Methods

Study Design and Participants

Our institutional review board approved this cross-sectional survey study and granted a waiver of informed consent. We sent email invitations to the subset of 305 hand and wrist surgeons of the Science of Variation Group (SOVG). Eighty-four responded and participated in the study. One hundred forty-two invitees were excluded because—although their email addresses are registered in the SOVG database—they have never (n = 110) or only once (n = 32) participated in any of the previous 8 surveys over the past 12 months. We therefore considered them to have withdrawn from the SOVG. The demographics of the 84 out of the 163 active or new members (52%) who participated in the present study did not differ from the remaining 79 active members who did not participate in the present study with regard to sex (P = .60; by Fisher exact test), practice location (P = .74), years in practice (P = .78), or supervision of trainees (P = .28). Given the use of randomization in this study, the study invitees and response rate can be considered important only with respect to external validity.

We created an online survey using SurveyMonkey (Palo Alto, California) that included 28 patient descriptions. Out of 84 participants, 45 (54%) were randomized to view posteroanterior and lateral wrist radiographs for all patients (group 1) and 39 (46%) were randomized to view videos scrolling through coronal and sagittal T1 weighted images and coronal T2 or short-tau inversion recovery (STIR) images of MRI scans in addition to the radiographs for all patients (group 2). Two participants in group 1 (4.4%) and 4 participants in group 2 (10%) did not complete the survey and were excluded. We observed no imbalance in the number of participants that were randomized to (P = .43; by Fisher exact test) or excluded from (P = .41) both groups. The patient descriptions that we provided in addition to the imaging studies were the same for both study groups and included the patient’s age, sex, and affected limb, as well as the description “with wrist pain.” For each patient, respondents were asked to answer the following 4 questions: (1) Is this Kienböck disease? (Yes/No); (2) On a scale from 0 to 10, how confident are you about this decision? (0, not at all confident; 10, very confident); (3) What is the lunate type? (type I/type II); and (4) What is the ulnar variance? (Positive/Neutral/Negative). Invitations were sent to participants in January 2016, and reminders were sent after 1 and 3 weeks.

We text-searched the reports of all upper extremity MRI scans that were obtained between January 2000 and December 2015 at 1 of 2 institutions for “Kienböck disease” or “avascular necrosis of the lunate,” “ulnolunate impaction syndrome,” “intraosseous cysts of the lunate,” and “intercarpal arthritis involving the lunate.” We included common misspellings and synonyms and reviewed the flagged MRI scans and radiographs in consecutive order by date from newest to oldest. We included only MRI scans of adult patients for whom radiographs of the ipsilateral wrist were available, obtained a maximum of 3 months before or after the MRI and without interval surgical changes. We selected consecutive patients in reverse chronological order with changes diagnosed as Kienböck disease without lunate collapse (n = 13), ulnolunate impaction (n = 5), lunate intraosseous ganglion cysts (n = 5), and intercarpal arthritis affecting the lunate (n = 5). All MRI scans and radiographs were reviewed for eligibility by the principal investigator (D.R.).

Two patient descriptions were excluded from the study before analysis, because in the survey for group 1 (radiographs only), the lateral radiograph of patient 4 was erroneously shown with patient 1 in addition to the correct anteroposterior and lateral radiographs for patient 1, and, as a result thereof, patient 4 had only an anteroposterior radiograph. Final analyses were therefore performed on 26 (of the 28) patients who were rated by 78 observers (Table 1).

Table 1.

Baseline Characteristics of Participating Surgeons Per Group (N = 78).

| | Radiographs (n = 43) | Radiographs + MRI (n = 35) | P value |
|---|---|---|---|
| | n (%) | n (%) | |
| Sex | | | |
| Male | 36 (84) | 35 (100) | .015* |
| Female | 7 (16) | 0 (0) | |
| Location of practice | | | |
| North America | 31 (72) | 31 (89) | .18 |
| Europe | 7 (16) | 4 (11) | |
| South America | 4 (9.3) | 0 (0) | |
| Asia | 1 (2.3) | 0 (0) | |
| Years in practice | | | |
| 0-5 years | 16 (37) | 7 (20) | .07 |
| 5-10 years | 7 (16) | 8 (23) | |
| 11-20 years | 11 (26) | 17 (49) | |
| 21-30 years | 9 (21) | 3 (8.6) | |
| Supervising trainees | | | |
| Yes | 39 (91) | 28 (80) | .21 |
| No | 4 (9.3) | 7 (20) | |

Note. MRI = magnetic resonance imaging.
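The Fisher exact comparisons reported above can be checked from the published counts alone. The sketch below is a minimal illustration and not the analysis code used in the study; the function name `fisher_exact_two_sided` is hypothetical, and the two-sided rule it applies (summing all tables that are no more probable than the observed one) is an assumption about how the P values were computed.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact P value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more probable than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability that x of the col1 items fall in the first row.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_observed = prob(a)
    # The small relative tolerance guards against floating-point ties.
    return sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )

# Sex by group (Table 1): 7 women and 36 men viewed radiographs alone;
# 0 women and 35 men viewed radiographs plus MRI.
p_sex = fisher_exact_two_sided(7, 36, 0, 35)

# Incomplete surveys: 2 of 45 in group 1 and 4 of 39 in group 2.
p_excluded = fisher_exact_two_sided(2, 43, 4, 35)

print(f"sex: P = {p_sex:.3f}; excluded: P = {p_excluded:.2f}")
```

Under these assumptions, both values should land close to the reported P = .015 for sex and P = .41 for exclusions.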

Statistical Analysis

Categorical variables are presented as frequencies with percentages and continuous variables as mean with standard deviation (SD). We used Fleiss’ kappa analysis to assess the interobserver agreement for categorical data and reported the κ values for both study groups. Fleiss’ kappa is a quantitative measure of agreement between 2 or more observers that factors in that agreement or disagreement between observers could simply occur due to chance. A κ value of 1 indicates perfect agreement, whereas a κ value of 0 indicates agreement equivalent to that expected by chance alone.26 We labeled the κ values according to the guidelines of Landis and Koch: values between 0.01 and 0.20 indicate slight agreement; 0.21 to 0.40, fair agreement; 0.41 to 0.60, moderate agreement; 0.61 to 0.80, substantial agreement; and 0.81 to 0.99, almost perfect agreement.14 We used bootstrapping (number of resamples, 1000) to calculate standard errors, z statistics, and 95% confidence intervals (CIs) for all κ values, and we calculated P values for the comparison.15 We used a 2-sample unpaired Student t test to compare the mean level of confidence in the surgeon diagnosis of Kienböck disease between the imaging modalities. A 2-tailed P value of less than .05 is considered statistically significant.
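As an illustration of the analysis described above, the following sketch computes Fleiss' kappa from a table of counts, labels it with the Landis and Koch bands quoted in the text, and estimates a standard error by bootstrap resampling. It is not the study's code. The function names and the toy data are hypothetical, and resampling patients (rather than observers) is an assumption, because the text does not state the resampling unit.

```python
import random

def fleiss_kappa(counts):
    """Fleiss' kappa; counts[i][j] = observers placing subject i in category j.

    Every row must sum to the same number of observers.
    """
    n_subjects = len(counts)
    n_raters = sum(counts[0])
    # Observed agreement: mean share of agreeing observer pairs per subject.
    p_obs = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ) / n_subjects
    # Chance agreement from the overall share of ratings in each category.
    shares = [
        sum(row[j] for row in counts) / (n_subjects * n_raters)
        for j in range(len(counts[0]))
    ]
    p_exp = sum(s * s for s in shares)
    return (p_obs - p_exp) / (1 - p_exp)

def landis_koch_label(kappa):
    """Label kappa using the Landis and Koch bands quoted in the text."""
    if kappa < 0:
        return "poor"  # band from the original scale, not quoted in the text
    bands = [(0.20, "slight"), (0.40, "fair"),
             (0.60, "moderate"), (0.80, "substantial")]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"

def bootstrap_se(counts, resamples=1000, seed=0):
    """Bootstrap SE of kappa, resampling subjects with replacement (assumed unit)."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(resamples):
        sample = [rng.choice(counts) for _ in counts]
        try:
            estimates.append(fleiss_kappa(sample))
        except ZeroDivisionError:
            continue  # degenerate resample: all ratings in a single category
    mean = sum(estimates) / len(estimates)
    variance = sum((k - mean) ** 2 for k in estimates) / (len(estimates) - 1)
    return variance ** 0.5

# Hypothetical data: 6 patients rated by 5 observers, columns = [yes, no].
toy_counts = [[5, 0], [4, 1], [1, 4], [0, 5], [3, 2], [5, 0]]
kappa = fleiss_kappa(toy_counts)
se = bootstrap_se(toy_counts)
print(f"kappa = {kappa:.2f} ({landis_koch_label(kappa)}), bootstrap SE = {se:.2f}")
```

A 95% CI then follows as κ ± 1.96 × SE if a normal approximation is assumed; a percentile interval from the bootstrap estimates would be an equally plausible choice.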

Power Analysis

A post hoc power analysis demonstrated that we had 47% statistical power (2-tailed alpha, 0.05) to detect the observed effect size of 0.43 for the difference in reliability with 43 respondents who evaluated radiographs alone and 35 respondents who evaluated radiographs and MRI scans. We had 85% statistical power (2-tailed alpha, 0.05) to detect the observed 0.69 effect size for the difference in level of confidence.
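The reported power figures can be approximated with a standard two-sample calculation. The sketch below assumes a normal approximation to the test statistic and treats the effect sizes as standardized differences; the function name `two_sample_power` is hypothetical, and the software actually used for the power analysis is not stated in the text.

```python
from math import sqrt
from statistics import NormalDist

def two_sample_power(effect_size, n1, n2, alpha=0.05):
    """Approximate two-tailed power for a standardized difference.

    Uses a normal approximation, so results can differ slightly from a
    calculation based on the noncentral t distribution.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic under the alternative.
    shift = effect_size * sqrt(n1 * n2 / (n1 + n2))
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)

power_agreement = two_sample_power(0.43, 43, 35)
power_confidence = two_sample_power(0.69, 43, 35)
print(f"agreement: {power_agreement:.2f}; confidence: {power_confidence:.2f}")
```

With 43 and 35 respondents, this approximation gives about 47% power for the 0.43 effect size and about 86% for the 0.69 effect size, close to the reported values.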

Results

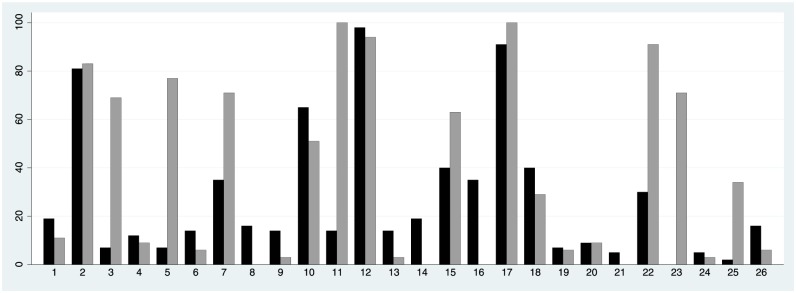

We found that agreement on the diagnosis of Kienböck disease was fair (κ, 0.36; SE, 0.094; 95% CI, 0.18-0.54) among observers who evaluated radiographs alone and moderate (κ, 0.58; SE, 0.069; 95% CI, 0.45-0.72) among those who evaluated MRI scans in addition to radiographs. With the numbers evaluated, there was a notable difference in agreement on the diagnosis of Kienböck disease between the 2 groups of observers, but the 95% CI overlapped and P = .057 (Table 2; Figure 1). The agreement did not differ between experienced (>10 years in practice) and less experienced (<10 years in practice) observers among the hand surgeons who evaluated radiographs alone (P = .50) or radiographs and MRI scans (P = .92).
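The comparison of the 2 κ values can be sketched from the reported estimates and standard errors. The code below assumes that the P value comes from a z statistic on the difference between 2 independent κ values and that the CIs are κ ± 1.96 × SE; both are assumptions, and the function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def compare_kappas(kappa1, se1, kappa2, se2):
    """z statistic and two-tailed P value for the difference of 2 independent kappas."""
    z = (kappa2 - kappa1) / sqrt(se1 ** 2 + se2 ** 2)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p

def normal_ci(kappa, se, level=0.95):
    """Normal-approximation confidence interval around a kappa estimate."""
    half_width = NormalDist().inv_cdf(0.5 + level / 2) * se
    return kappa - half_width, kappa + half_width

# Reported values: radiographs alone vs radiographs plus MRI.
z, p = compare_kappas(0.36, 0.094, 0.58, 0.069)
low, high = normal_ci(0.36, 0.094)
print(f"z = {z:.2f}, P = {p:.3f}, 95% CI for radiographs = {low:.2f} to {high:.2f}")
```

Because the inputs are rounded to 2 or 3 digits, the result (P of roughly .06) agrees with the reported P = .057 only approximately.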

Table 2.

Kappa Values for Agreement (N = 78).

| | Radiographs only (n = 43) | | | | Radiographs + MRI (n = 35) | | | | P value |
|---|---|---|---|---|---|---|---|---|---|
| Question | Agreement | κ | SE | 95% CI | Agreement | κ | SE | 95% CI | |
| 1. Is this Kienböck disease? | Fair | 0.36 | 0.094 | 0.18-0.54 | Moderate | 0.58 | 0.068 | 0.45-0.71 | .057 |
| 3. What is the lunate type? | Fair | 0.22 | 0.044 | 0.14-0.31 | Slight | 0.20 | 0.053 | 0.10-0.31 | .75 |
| 4. What is the ulnar variance? | Substantial | 0.61 | 0.051 | 0.51-0.71 | Moderate | 0.52 | 0.047 | 0.42-0.61 | .15 |
| | | Mean | SD | 95% CI | | Mean | SD | 95% CI | P value |
| 2. Surgeon confidence in 1 | | 6.8 | 1.4 | 6.4-7.3 | | 7.7 | 1.0 | 7.4-8.1 | .004* |

Note. MRI = magnetic resonance imaging; CI = confidence interval.

Figure 1.

The bar graph indicates the proportion of surgeons that diagnosed each case as Kienböck disease for the group that evaluated radiographs alone (black bars) and the group that evaluated radiographs and magnetic resonance imaging scans (grey bars).

Hand surgeons who evaluated radiographs alone were less confident about their decision than surgeons who evaluated radiographs and MRI scans (P = .004; Table 2), but not after controlling for potential confounding by sex, location of practice, years in practice, and supervision of trainees (β, 0.51; SE, 0.32; 95% CI, −0.14-1.2; P = .12).
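The unadjusted comparison of confidence can be approximated from the summary statistics in Table 2. The sketch below assumes a pooled-variance Student t test and computes the standardized difference as the mean difference divided by the pooled SD; the function name is hypothetical, and because the published means and SDs are rounded, the output only approximates the reported effect size of 0.69.

```python
from math import sqrt

def pooled_t_and_effect_size(mean1, sd1, n1, mean2, sd2, n2):
    """Student t statistic, standardized difference, and degrees of freedom
    computed from group summary statistics with a pooled SD."""
    df = n1 + n2 - 2
    pooled_sd = sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    difference = mean2 - mean1
    effect_size = difference / pooled_sd
    t = difference / (pooled_sd * sqrt(1 / n1 + 1 / n2))
    return t, effect_size, df

# Table 2: radiographs alone (6.8, SD 1.4, n = 43) vs radiographs plus MRI (7.7, SD 1.0, n = 35).
t, effect_size, df = pooled_t_and_effect_size(6.8, 1.4, 43, 7.7, 1.0, 35)
print(f"t = {t:.2f} on {df} df, standardized difference = {effect_size:.2f}")
```

This check covers only the unadjusted comparison; the adjusted estimate (β, 0.51) comes from a regression model that cannot be reconstructed from the published summary data.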

In addition, agreement did not differ between observers based on imaging modality with regard to the assessment of the lunate type (P = .75) and ulnar variance (P = .15) (Table 2).

Discussion

Ulnolunate impaction syndrome, intraosseous ganglion cysts, or intercarpal osteoarthritis may mimic the appearance of early-stage Kienböck disease—when there is no radiographic evidence of lunate collapse. MRI is widely used in the evaluation of Kienböck disease. We assessed the interobserver agreement on the diagnosis of Kienböck disease among hand surgeons of the SOVG who were randomized to evaluate different imaging modalities, and we assessed whether the addition of more advanced imaging (MRI) leads to a difference in agreement among observers with regard to the diagnosis of early (precollapse) Kienböck disease. Although we found a notably higher agreement among observers who evaluated MRI scans in addition to radiographs compared with radiographs alone, the difference in agreement was not statistically significant with the numbers of observers available, emphasizing that there is substantial variability in agreement with regard to the diagnosis of Kienböck disease, even with the addition of more advanced imaging in the form of MRI scans.

This study had some limitations. First, we provided respondents with jpeg images of radiographs and embedded YouTube videos scrolling through MRI scans in lieu of professional Digital Imaging and Communications in Medicine (DICOM) files with a dedicated viewer, and therefore respondents were unable to perform measurements of ulnar variance, zoom in, or adjust image contrast. As this held true for both groups, we think this is a minor limitation, because we were able to evaluate the added value of MRI images to conventional radiographs to the interobserver agreement. In addition, we compared the use of a viewer or videos in a prior study and noted little advantage to the viewer.17 Second, as musculoskeletal radiologists were not part of the SOVG at the time of the study, only hand surgeons evaluated the imaging studies. In addition, most SOVG members are academically oriented—86% of the respondents supervise surgical trainees—and may therefore not represent the average hand surgeon. Third, we used only a short patient description in addition to radiographs and MRI scans. Additional information from interview or physical examination might influence interobserver agreement. We consider this a minor limitation because descriptions were similar for both groups. Fourth, although both study groups received the same survey, it is possible that results of the evaluation of the radiological tests might have been different if the questions were phrased differently and the respondents were unaware that the study was about Kienböck disease. Fifth, this study can only address the reliability and not the accuracy of diagnosis of Kienböck disease because we did not have a reference standard for true Kienböck. Sixth, the number of patients with Kienböck disease in the study was much larger than the relative prevalence in the population, which introduces a spectrum bias that might influence the measures of reliability. 
Finally, there were variations in the quality of wrist radiographs, which may affect the ability to identify early changes of Kienböck disease with radiographs alone. In addition, as this was an online survey, conditions under which the imaging studies were evaluated (eg, lighting, time of day, monitor contrast) may not have been similar for all participants. This could be seen as a strength as these are the same variations that affect daily practice.

Our study showed that agreement on the diagnosis of precollapse Kienböck disease was fair among observers who evaluated radiographs alone and moderate among observers who evaluated radiographs and MRI scans, and that the difference in κ values was not statistically significant (P = .057) with the number of observers and observations available. The 0.43 effect size difference between the 2 κ values reflects the substantial variability (high standard deviation), which left us with limited (48%) statistical power. To achieve 80% statistical power, we would have needed 93 respondents in the group that viewed radiographs and 76 in the group that viewed radiographs and MRI scans. Nonetheless, our study suggests a trend toward higher agreement in favor of additional MRI scans.
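The sample size needed for 80% power can be estimated with the usual closed-form expression for 2 groups of unequal size. The sketch below assumes a normal approximation and keeps the observed 43:35 allocation ratio; the function name `required_group_sizes` is hypothetical and this is not necessarily the method used for the figures above.

```python
from statistics import NormalDist

def required_group_sizes(effect_size, ratio, power=0.80, alpha=0.05):
    """Unrounded group sizes (n1, n2) with n1 = ratio * n2 needed to detect a
    standardized difference with a two-tailed test (normal approximation)."""
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    n2 = (1 + 1 / ratio) * (z_sum / effect_size) ** 2
    return ratio * n2, n2

n_radiographs, n_mri = required_group_sizes(0.43, ratio=43 / 35)
print(f"radiographs: {n_radiographs:.1f}; radiographs + MRI: {n_mri:.1f}")
```

Under these assumptions, the estimate is about 95 and 77 respondents, in the same range as the 93 and 76 stated above; small differences are expected from rounding and from the choice of approximation.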

Few authors have studied the interobserver agreement in wrist pathology based on imaging modality. In patients with nonspecific wrist pain, Huellner et al10 found that agreement on etiology of a wide variety of wrist lesions—including Kienböck disease and ulnolunate impaction—is higher among experienced readers for both radiographs (κ, 0.47; 95% CI, 0.15-0.78) and MRI scans (κ, 0.74; 95% CI, 0.51-0.97), compared with experienced and inexperienced readers combined for radiographs (mean κ, 0.42) and MRI scans (mean κ, −0.01). In our study, there was no difference in agreement by surgeon experience for both imaging modalities. Several authors report a correlation between histopathology of osteonecrotic lunates and MRI signal changes,8,21,23 but Hashizume et al8 and Reinus et al21 note that decreased marrow signal in the lunate is not specific to osteonecrosis. The location and confinement of signal changes within the lunate may be useful to distinguish between different pathology.1,6 Interobserver agreement in evaluation of radiographs and/or MRI scans varies among other wrist conditions.5,20 For example, De Zwart et al5 report moderate agreement (κ, 0.44) among 5 radiologists in diagnosing scaphoid fractures on 64 MRI scans of healthy volunteers, whereas Ostergaard et al20 report fair and moderate agreement in classification of erosions (κ, 0.34) and bone lesions (κ, 0.48) in the lunate on MRI scans of patients with rheumatoid arthritis. Aoki et al,2 on the contrary, report almost perfect agreement for MRI scans (κ, 0.85), as well as substantial agreement for radiographs (κ, 0.78), among 2 experienced radiologists in the evaluation of bone erosions in the lunate, also in patients with rheumatoid arthritis.

After controlling for confounding by surgeon demographics, we found that surgeons who evaluated Kienböck disease on MRI scans in addition to radiographs were not more confident on average about their decision than surgeons who evaluated radiographs alone. This is in contrast with other studies, where advanced imaging increases the confidence in diagnosis11 or surgical decision making.12 However, this lack of confidence may be related to the diagnosis of Kienböck disease itself. It is an enigmatic disease for which diagnosis and treatment are not well defined.

In recent research, Rhee et al22 demonstrated that a type II lunate may have a protective effect against the development of scaphoid flexion in patients with Kienböck disease and may impede disease progression, and the authors suggested that patients with an intact type II lunate may potentially benefit most from disease modifying procedures in the early stages of Kienböck disease. We studied the interobserver agreement and found it to be equally low among observers who evaluated radiographs alone and those who evaluated radiographs and MRI scans. This may be partially explained by the fact that we did not train observers on the difference between the type I (absence of a medial hamate articulation) and type II lunate according to Viegas et al25 and some surgeons may not have been familiar with this classification. However, the purpose of the SOVG is to study observer variability among a large group of fully trained, practicing, and experienced surgeons without additional training.

The agreement on ulnar variance was lower in our study (radiographs: κ, 0.61; radiographs and MRI scans: κ, 0.52) than in the study of Laino et al13 who report almost perfect agreement for quantitative measurements of ulnar variance on both radiographs (intraclass correlation coefficient, 0.87) and MRI scans (intraclass correlation coefficient, 0.90 and 0.92). This should be interpreted in the light of the limitation that in our study, respondents were asked to categorize ulnar variance into positive, neutral, or negative, without performing any measurements, which is in contrast to the methodology of Laino et al.13

In conclusion, we found, with the numbers evaluated, a notable but nonsignificant difference in agreement in favor of observers who evaluated MRI scans in addition to radiographs compared with observers who evaluated radiographs alone. Our results therefore suggest that MRI may have additional value in the diagnosis of precollapse Kienböck disease over radiographs alone, but even with the addition of more advanced imaging, agreement was only moderate. Surgeons should be aware that the diagnosis of Kienböck disease in the precollapse stages is not well defined, as evidenced by the substantial interobserver variability. Future research could study agreement among hand surgeons and radiologists.

Acknowledgments

The members of the Science of Variation Group: J. Adams; N. M. Akabudike; T. Apard; T. Bafus; H. B. Bamberger; C. J. R. Barreto; M. Baskies; T. Baxamusa; R. de Bedout; P. Benhaim; P. Blazar; J. G. Boretto; L. A. Buendia; M. Calcagni; R. P. Calfee; J. T. Capo; K. Chivers; R. M. Costanzo; G. DeSilva; S. F. Duncan; P. J. Evans; J. Fischer; T. J. Fischer; W. E. Floyd III; R. S. Gilbert; C. A. Goldfarb; M. W. Grafe; R. R. L. Gray; J. A. Greenberg; P. Guidera; W. C. Hammert; R. Hauck; E. Hofmeister; R. L. Hutchison; J. A. Izzi, Jr.; P. Jebson; S. Kakar; R. N. Kamal; F. T. D. Kaplan; S. A. Kennedy; M. W. Kessler; E. Konul; G. A. Kraan; S. Kronlage; K. Lee; C. Lomita; C. Manke; J. McAuliffe; D. M. McKee; C. L. Moreno-Serrano; P. Muhl; M. Nancollas; J. F. Nappi; D. L. Nelson; A. L. Osterman; P. W. Owens; M. J. Palmer; J. M. Patiño; L. Paz; G. M. Pess; D. Polatsch; K. J. Prommersberger; M. Rizzo; C. Rodner; J. Sandoval; R. Shatford; S. Spruijt; T. G. Stackhouse; C. Swigart; J. Taras; D. E. Tate, Jr.; E. T. Walbeehm; C. J. Walsh; F. L. Walter; L. Weiss; B. P. D. Wills; T. Wyrick; J. Yao.

Footnotes

Authors’ Note: This work was performed at the Massachusetts General Hospital in Boston, MA, USA.

Ethical Approval: This study was approved by our institutional review board: Massachusetts General Hospital; Data Repository Protocol 2009P001019.

Statement of Human and Animal Rights: All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008.

Statement of Informed Consent: Informed consent was waived for this study.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

References

- 1. Allan CH, Joshi A, Lichtman DM. Kienböck’s disease: diagnosis and treatment. J Am Acad Orthop Surg. 2001;9(2):128-136.

- 2. Aoki T, Fujii M, Yamashita Y, et al. Tomosynthesis of the wrist and hand in patients with rheumatoid arthritis: comparison with radiography and MRI. AJR Am J Roentgenol. 2014;202(2):386-390.

- 3. Arnaiz J, Piedra T, Cerezal L, et al. Imaging of Kienböck disease. AJR Am J Roentgenol. 2014;203(1):131-139.

- 4. Cerezal L, del Pinal F, Abascal F. MR imaging findings in ulnar-sided wrist impaction syndromes. Magn Reson Imaging Clin N Am. 2004;12(2):281-299, vi.

- 5. De Zwart AD, Beeres FJ, Ring D, et al. MRI as a reference standard for suspected scaphoid fractures. Br J Radiol. 2012;85(1016):1098-1101.

- 6. Escobedo EM, Bergman AG, Hunter JC. MR imaging of ulnar impaction. Skeletal Radiol. 1995;24(2):85-90.

- 7. Guitton TG, Ring D; Science of Variation Group. Interobserver reliability of radial head fracture classification: two-dimensional compared with three-dimensional CT. J Bone Joint Surg Am. 2011;93(21):2015-2021.

- 8. Hashizume H, Asahara H, Nishida K, et al. Histopathology of Kienböck’s disease. Correlation with magnetic resonance and other imaging techniques. J Hand Surg Br. 1996;21(1):89-93.

- 9. Hayter CL, Gold SL, Potter HG. Magnetic resonance imaging of the wrist: bone and cartilage injury. J Magn Reson Imaging. 2013;37(5):1005-1019.

- 10. Huellner MW, Burkert A, Strobel K, et al. Imaging non-specific wrist pain: interobserver agreement and diagnostic accuracy of SPECT/CT, MRI, CT, bone scan and plain radiographs. PLoS One. 2013;8(12):e85359.

- 11. Hughes CM, Kramer E, Colamonico J, et al. Perspectives on the value of advanced medical imaging: a national survey of primary care physicians. J Am Coll Radiol. 2015;12(5):458-462.

- 12. Janssen SJ, Hermanussen HH, Guitton TG, et al. Greater tuberosity fractures: does fracture assessment and treatment recommendation vary based on imaging modality? Clin Orthop Relat Res. 2016;474(5):1257-1265.

- 13. Laino DK, Petchprapa CN, Lee SK. Ulnar variance: correlation of plain radiographs, computed tomography, and magnetic resonance imaging with anatomic dissection. J Hand Surg Am. 2012;37(1):90-97.

- 14. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159-174.

- 15. Lee J, Fung KP. Confidence interval of the kappa coefficient by bootstrap resampling. Psychiatry Res. 1993;49(1):97-98.

- 16. Lichtman DM, Lesley NE, Simmons SP. The classification and treatment of Kienböck’s disease: the state of the art and a look at the future. J Hand Surg Eur Vol. 2010;35(7):549-554.

- 17. Mallee WH, Mellema JJ, Guitton TG, et al. 6-week radiographs unsuitable for diagnosis of suspected scaphoid fractures. Arch Orthop Trauma Surg. 2016;136(6):771-778.

- 18. Malone WJ, Snowden R, Alvi F, et al. Pitfalls of wrist MR imaging. Magn Reson Imaging Clin N Am. 2010;18(4):643-662.

- 19. Ogawa T, Nishiura Y, Hara Y, et al. Correlation of histopathology with magnetic resonance imaging in Kienböck disease. J Hand Surg Am. 2012;37(1):83-89.

- 20. Ostergaard M, Klarlund M, Lassere M, et al. Interreader agreement in the assessment of magnetic resonance images of rheumatoid arthritis wrist and finger joints—an international multicenter study. J Rheumatol. 2001;28(5):1143-1150.

- 21. Reinus WR, Conway WF, Totty WG, et al. Carpal avascular necrosis: MR imaging. Radiology. 1986;160(3):689-693.

- 22. Rhee PC, Jones DB, Moran SL, et al. The effect of lunate morphology in Kienböck disease. J Hand Surg Am. 2015;40(4):738-744.

- 23. Trumble TE, Irving J. Histologic and magnetic resonance imaging correlations in Kienböck’s disease. J Hand Surg Am. 1990;15(6):879-884.

- 24. van Leeuwen WF, Oflazoglu K, Menendez ME, et al. Negative ulnar variance and Kienböck disease. J Hand Surg Am. 2016;41(2):214-218.

- 25. Viegas SF, Wagner K, Patterson R, et al. Medial (hamate) facet of the lunate. J Hand Surg Am. 1990;15(4):564-571.

- 26. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005;37(5):360-363.