Abstract

The discrepancy between spatial orientations of an endoscopic image and a physician’s working environment can make it difficult to interpret endoscopic images. In this study, we developed and evaluated a device that corrects the endoscopic image orientation using an accelerometer and gyrosensor. The acceleration of gravity and angular velocity were retrieved from the accelerometer and gyrosensor attached to the handle of the endoscope. The rotational angle of the endoscope handle was calculated using a Kalman filter with transmission delay compensation. Technical evaluation of the orientation correction system was performed using a camera by comparing the optical rotational angle from the captured image with the rotational angle calculated from the sensor outputs. For the clinical utility test, fifteen anesthesiology residents performed a video endoscopic examination of an airway model with and without using the orientation correction system. The participants reported numbers written on papers placed at the left main, right main, and right upper bronchi of the airway model. The correctness and the total time it took participants to report the numbers were recorded. During the technical evaluation, errors in the calculated rotational angle were less than 5 degrees. In the clinical utility test, there was a significant time reduction when using the orientation correction system compared with not using the system (median, 52 vs. 76 seconds; P = .012). In this study, we developed a real-time endoscopic image orientation correction system, which significantly improved physician performance during a video endoscopic exam.

Introduction

Video endoscopes, such as laparoscopes, gastroenteroscopes, and bronchoscopes, are used in many fields of medicine to help clinicians diagnose and treat patients less invasively with shorter hospital stays. However, video endoscopes are difficult to operate, and it takes time for clinicians to learn how to interpret endoscopic images. One of the difficulties is the discrepancy between the spatial orientation of the endoscopic images and the endoscopist’s working environment [1, 2]. For example, it has been reported that a surgeon’s performance decreases when the optical axis of the endoscope equipment does not match the direction of gravity [3, 4]. Attempts to solve this problem have included pattern recognition [5], electromagnetic field sensors [6], and accelerometers [7, 8]. With the pattern recognition method, various computer algorithms were used to extract the features from fiducial markers or anatomical structures on the endoscopic image [5, 9]; however, this method cannot be used when there is no target in the line of sight. The electromagnetic method uses a current induced by a coil moving through a magnetic field. This technique is widely used as a tracking system for image-guided interventions [10], but it can also be used for image orientation correction [6]. However, conductive surgical instruments or electromagnetic interference, from x-ray fluoroscopy for example, can distort the magnetic field and influence the measurements [11]. The accelerometer method uses a low-cost, small inertial sensor to correct the direction of endoscopic images [7, 8]; however, it cannot distinguish between translational acceleration and gravity. Therefore, an accelerometer should be used in combination with a gyrosensor to correct the direction of a rapidly rotating endoscope.

The gyrosensor has a fast response time and is not influenced by translational acceleration. Because the accelerometer cannot differentiate translational acceleration from gravity, the gyrosensor measures the rotational angle more accurately than the accelerometer while the endoscope is rotating. Conversely, the integration error (drift) that accumulates over time in the rotational angle obtained from the gyrosensor can easily be corrected using the accelerometer signal. Therefore, these two sensors are often used to complement each other.

In this study, we developed and evaluated a real-time system for correcting endoscopic image orientation using an accelerometer and gyrosensor.

Materials and methods

Development of the system

To obtain the direction of gravity and the angular velocity, an accelerometer and a gyrosensor were attached to the handle of the endoscope. We assumed that the endoscope handle was a rigid body and that there was no twisting between the handle and the tip of the endoscope. Quaternions, direction cosine matrices, Euler angles, and the axis-angle representation can all be used to describe the orientation of a rigid body. Because of its simplicity and clear physical meaning, we used the axis-angle representation for the orientation of the endoscope handle. The axis was defined as a unit vector pointing in the direction in which the endoscope advances. The rotational angle θ was defined as 0 when the sensor pointed upward, opposite to the direction of gravity, and increased as the endoscope rotated clockwise. Because a singularity occurs when the operator holds the endoscope handle perpendicular to the ground (gravity then has almost no component perpendicular to the endoscope axis, so θ cannot be determined), this position was prohibited during the study.

Calculation of the rotational angle

The sensor was attached so that the x axis pointed to the right as seen by the operator, the y axis pointed toward the head of the endoscope, and the z axis pointed in the direction in which the endoscope advances (Fig 1).

Fig 1. Axes definitions.

A. Sensor attachment and the viewpoint of the observer. B. Axes for the accelerometer: ax and ay are the accelerations measured by the accelerometer, and θaccel is the rotational angle calculated from the accelerometer data. C. Axes for the gyrosensor: rz is the angular velocity measured by the gyrosensor, and θgyro is the rotational angle calculated from the gyrosensor data.

The rotational angle with respect to the direction of gravity was calculated as:

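$$\theta_{\mathrm{accel}} = \operatorname{atan2}(a_x,\ a_y) \tag{1}$$

$$\theta_{\mathrm{gyro}} = \int r_z \, dt \tag{2}$$

where θaccel and θgyro are the rotational angles from the accelerometer and gyrosensor, respectively; ax and ay are the accelerations measured along the x and y axes of the accelerometer; rz is the angular velocity about the z axis measured by the gyrosensor; and atan2(y, x) is a function that returns the arc tangent of y/x in the range −π to π, with the quadrant determined by the signs of x and y.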
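To make Eqs (1) and (2) concrete, the following Python sketch computes θaccel and θgyro from raw samples. It assumes accelerations in m/s², angular velocities in °/s, a fixed sampling interval, and the sign convention θaccel = atan2(ax, ay) (whether clockwise comes out positive depends on how the sensor is mounted). The function names and the `min_norm` threshold used to flag the near-vertical singularity are illustrative choices, not part of the authors' C++ software.

```python
import math

G = 9.81  # standard gravity, m/s^2


def accel_angle_deg(ax, ay, min_norm=1.0):
    """Eq (1): rotational angle of the handle from the gravity components in the x-y plane.

    Returns None near the singularity (handle held vertically), where the projection
    of gravity onto the x-y plane is too small to define an angle.
    min_norm (m/s^2) is an illustrative threshold, not a value from the study.
    """
    if math.hypot(ax, ay) < min_norm:
        return None
    return math.degrees(math.atan2(ax, ay))


def integrate_gyro_deg(rates_dps, dt, theta0_deg=0.0):
    """Eq (2), discretized with rectangular (forward Euler) integration of r_z."""
    angles = []
    theta = theta0_deg
    for r in rates_dps:
        theta += r * dt
        angles.append(theta)
    return angles


if __name__ == "__main__":
    tilt = math.radians(30.0)
    ax, ay = G * math.sin(tilt), G * math.cos(tilt)
    print(accel_angle_deg(ax, ay))        # static handle: about 30 degrees
    print(accel_angle_deg(ax + 2.0, ay))  # extra 2 m/s^2 sideways push: about 39 degrees
    # A hypothetical constant 0.5 deg/s gyro bias integrated for 60 s drifts by about 30 degrees.
    print(integrate_gyro_deg([0.5] * 6000, 0.01)[-1])
```

The second call shows why the accelerometer alone is unreliable during motion: a 2 m/s² sideways acceleration shifts the computed angle by roughly 9° although the handle has not rotated. The third shows how even a small gyrosensor bias accumulates without an absolute reference.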

The rotational angles from the accelerometer and gyrosensor were combined using a Kalman filter [12, 13]. The process model of the filter, driven by the gyrosensor output, is given by Eq (3):

$$\theta_{\mathrm{Kalman},k} = \theta_{\mathrm{Kalman},k-1} + r_k T + w_k \tag{3}$$

where θKalman,k is the state variable, the rotational angle after the Kalman filter (θKalman,0 = θaccel,0); rk is the angular velocity from the gyrosensor; T is the time since the last measurement; wk is the process noise; and θaccel is the rotational angle from the accelerometer.

Eq (4) represents the measurement equation of the Kalman filter using the accelerometer output.

$$\theta_{\mathrm{accel},k} = \theta_{\mathrm{Kalman},k} + z_k \tag{4}$$

where θaccel,k is the measurement variable, the rotational angle from the accelerometer; θKalman,k is the state variable; and zk is the measurement noise.

In the Kalman filter algorithm, wk and zk were assumed to be zero-mean Gaussian white noise with covariances Qk and Rk, respectively. The smaller the Qk value, the greater the weight given to the gyrosensor output; the smaller the Rk value, the greater the weight given to the accelerometer output. We set Rk as a function of the measured acceleration so that the accelerometer output was given less weight when acceleration in addition to gravity was observed:

| (5) |

where ak is the acceleration from the accelerometer and g is the acceleration of gravity.

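The predicted rotational angle and its covariance were calculated using Eq (6):

$$\hat{\theta}^{-}_{\mathrm{Kalman},k} = \hat{\theta}_{\mathrm{Kalman},k-1} + r_k T, \qquad P^{-}_{k} = P_{k-1} + Q_k \tag{6}$$

where θ̂Kalman,k is the estimated rotational angle from the Kalman filter (the superscript − marks the predicted value before the accelerometer measurement is incorporated), rk is the angular velocity from the gyrosensor, and Pk is the estimated error covariance (P0 = 1).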

Finally, the states and the estimated covariance were updated for the next iteration as shown in Eq (7).

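$$\begin{aligned} K_k &= \frac{P^{-}_{k}}{P^{-}_{k} + R_k} \\ \hat{\theta}_{\mathrm{Kalman},k} &= \hat{\theta}^{-}_{\mathrm{Kalman},k} + K_k\left(\theta_{\mathrm{accel},k} - \hat{\theta}^{-}_{\mathrm{Kalman},k}\right) \\ P_k &= \left(1 - K_k\right) P^{-}_{k} \end{aligned} \tag{7}$$

where Kk is the Kalman gain.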
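The filter in Eqs (3)–(7) is a one-dimensional Kalman filter whose prediction step is driven by the gyrosensor and whose correction step uses the accelerometer angle. Below is a minimal Python sketch of that loop, assuming angles in degrees. Because Eq (5) is not reproduced here, `measurement_variance` is a hypothetical placeholder that only inflates Rk when the acceleration magnitude departs from g; its form and constants, like the value of Q, are assumptions rather than the authors' settings. Wrapping the innovation to ±180° is also an added detail that keeps the correction well behaved when the angle crosses ±180°.

```python
import math


def wrap_deg(angle_deg):
    """Wrap an angle to the interval [-180, 180) degrees."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def measurement_variance(ax, ay, az, g=9.81, r0=0.5, gain=5.0):
    """HYPOTHETICAL placeholder for Eq (5); not the authors' formula.

    Grows R_k as the magnitude of the measured acceleration departs from g,
    so the accelerometer angle is trusted less while the handle is accelerated.
    r0 and gain are illustrative values.
    """
    extra = abs(math.sqrt(ax * ax + ay * ay + az * az) - g)
    return r0 + gain * extra ** 2


class RollKalman:
    """Scalar Kalman filter for the handle's rotational angle (Eqs 3-7)."""

    def __init__(self, theta0_deg, q=0.01, p0=1.0):
        self.theta = theta0_deg  # initialized with the first accelerometer angle
        self.p = p0              # P0 = 1, as in the text
        self.q = q               # process-noise variance Q (illustrative value)

    def step(self, rate_dps, dt, theta_accel_deg, r):
        # Eq (6): predict the angle and covariance from the gyrosensor rate.
        theta_pred = self.theta + rate_dps * dt
        p_pred = self.p + self.q
        # Eq (7): Kalman gain, then correct with the accelerometer angle.
        k = p_pred / (p_pred + r)
        innovation = wrap_deg(theta_accel_deg - theta_pred)
        self.theta = theta_pred + k * innovation
        self.p = (1.0 - k) * p_pred
        return self.theta
```

With a biased gyrosensor signal, the gyrosensor term supplies the short-term motion while the accelerometer term continually pulls the estimate back, so the bias does not accumulate as it would with Eq (2) alone.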

Subsequently, the rotational angle from the Kalman filter was postprocessed to correct for the delay between the image acquisition and sensor measurement (Fig 2). Because the imaging device and the sensor have different processing and data transfer times, when the image arrives at the processor, the last measurement data from the sensor should not be used without correction. If we assume that the time difference between the last available sensor output and image frame does not change during the exam, the correction should be as shown in Eq (8):

$$\theta_{\mathrm{postproc},k} = \hat{\theta}_{\mathrm{Kalman},k} - r_k T_d \tag{8}$$

where θpostproc,k is the final rotational angle after postprocessing, θ̂Kalman,k is the Kalman filter output, rk is the angular velocity from the gyrosensor, and Td is the time difference between the last available sensor output and the image frame. Td was determined by the least-squares method from pre-examination data, as shown in Eq (9).

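$$T_d = \arg\min_{\tau} \left\lVert \boldsymbol{\theta}_{\mathrm{Kalman}} - \tau\,\mathbf{r} - \boldsymbol{\theta}_{\mathrm{optical}} \right\rVert^{2} = \frac{\mathbf{r}^{\top}\left(\boldsymbol{\theta}_{\mathrm{Kalman}} - \boldsymbol{\theta}_{\mathrm{optical}}\right)}{\mathbf{r}^{\top}\mathbf{r}} \tag{9}$$

where r is the vector of the measured angular velocities, θKalman is the vector of rotational angles from the Kalman filter, and θoptical is the vector of optical rotational angles (the measurement method is described in the Technical evaluation section).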
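Eqs (8) and (9) reduce to a few lines of array arithmetic. The NumPy sketch below assumes that the Kalman output, the angular velocity, and the optical angle from the pre-examination recording have already been resampled to the image-frame times (the alignment step is not described in the text); the function names are hypothetical.

```python
import numpy as np


def postprocess(theta_kalman_deg, rate_dps, td_s):
    """Eq (8): shift the filtered angle back by the sensor-to-image delay."""
    return np.asarray(theta_kalman_deg, float) - np.asarray(rate_dps, float) * td_s


def estimate_td(rate_dps, theta_kalman_deg, theta_optical_deg):
    """Eq (9): closed-form least-squares delay from pre-examination recordings.

    Minimizes ||theta_kalman - td * rate - theta_optical||^2 over td, giving
    td = r.(theta_kalman - theta_optical) / (r.r). All arrays must be sampled
    at the image-frame times.
    """
    r = np.asarray(rate_dps, float)
    resid = np.asarray(theta_kalman_deg, float) - np.asarray(theta_optical_deg, float)
    return float(r @ resid / (r @ r))
```

Because Eq (9) is a one-parameter linear least-squares problem, no iterative optimizer is needed: the estimate is the projection of the angle residual onto the angular velocity vector.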

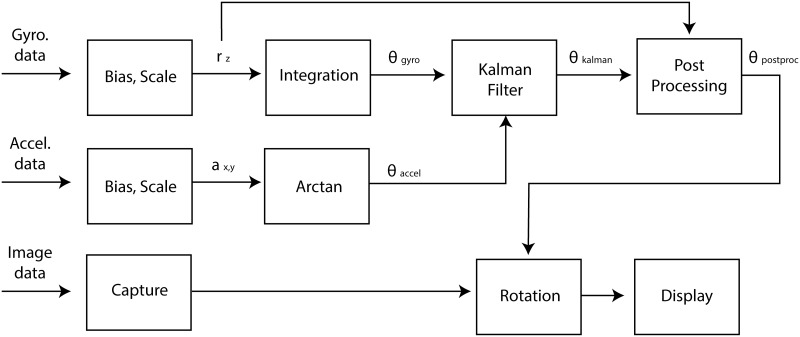

Fig 2. Schematic diagram of the image orientation correction system.

rz is the angular velocity measured by the gyrosensor; ax,y is the acceleration measured by the accelerometer; θgyro and θaccel are the rotational angles calculated from the gyrosensor and accelerometer data, respectively; θKalman is the rotational angle after the Kalman filter; and θ postproc is the final rotational angle used for image rotation. Gyro., gyrosensor; Accel., accelerometer.

Devices used for the system

A 3-Space Sensor™ Bluetooth (Yost Engineering Inc, Portsmouth, OH, USA) was used for the system. According to the manufacturer’s specifications, the accelerometer and gyrosensor precisions are 0.0024 m/s² and 0.070°/s, respectively. The sensor device also has a magnetometer, although it was not used in this study. The video was captured by a charge-coupled device (CCD) camera attached to the eyepiece of the endoscope and transferred to a PC at 30 frames per second using a USB-ECPT video capture device (Sabrent, Los Angeles, CA, USA).

The image correction software was developed in C++. The sensor data were acquired by a polling method.

Technical evaluation

To evaluate the performance of the image orientation correction system, a board with a black upper half and a white lower half was placed 30 cm from a PC camera (S101 Web Cam; Kodak, Rochester, NY, USA), and the sensors were attached to the camera. The rotational angle (θoptical) was calculated automatically from each captured image by detecting the boundary between the black and white halves.
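The text does not specify how the black and white boundary was detected. One simple approach, sketched below as an assumption rather than the authors' method, uses only the pixels inside a circle centered on the image: the dark part of that disc is a half-disc whose centroid lies along the normal to the boundary, so the centroid's direction gives θoptical (here measured clockwise from image-up). The sketch assumes the boundary passes through the image center, so any offset between the camera center and the board center would bias the estimate. `synthetic_board` is a hypothetical helper that generates a test image.

```python
import numpy as np


def synthetic_board(theta_deg, size=201):
    """Test image: dark half on the side whose normal is theta_deg clockwise from image-up."""
    t = np.deg2rad(theta_deg)
    c = (size - 1) / 2.0
    rows, cols = np.mgrid[0:size, 0:size]
    x, y = cols - c, c - rows  # x to the right, y upward (image rows grow downward)
    dark = x * np.sin(t) + y * np.cos(t) > 0
    return np.where(dark, 0, 255).astype(np.uint8)


def optical_angle_deg(img, threshold=128):
    """Angle of the dark half, measured clockwise from image-up, in degrees.

    Only pixels inside the centered inscribed circle are used: the dark part of
    that disc is a half-disc whose centroid lies along the boundary normal.
    """
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    rows, cols = np.mgrid[0:h, 0:w]
    x, y = cols - cx, cy - rows
    inside = x ** 2 + y ** 2 <= min(cx, cy) ** 2
    dark = (img < threshold) & inside
    return float(np.degrees(np.arctan2(x[dark].mean(), y[dark].mean())))
```

Restricting the computation to a disc avoids the bias that the square image corners would otherwise introduce into the centroid direction.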

While the camera was rotated randomly for 30 seconds, the rotational angles from the accelerometer (θaccel), gyrosensor (θgyro), and Kalman filter (θKalman), the postprocessed rotational angle (θpostproc), and the optical rotational angle (θoptical) were recorded. Each measurement was performed with both the wired and the wireless sensor connections.

Clinical utility test

A prospective, randomized observational study using an airway model was conducted to test the clinical utility of the image orientation correction system. Because the experiment was performed on the airway model and the participants were enrolled as testers, written informed consent was waived. The model simulated human tracheal rings and the angles of the carina and right upper bronchus. Fifteen residents from the Department of Anesthesiology and Pain Medicine at Seoul National University Hospital, each of whom had performed more than 30 bronchoscopic exams, participated in this study. Before the experiment, each participant was given 5 minutes to learn how to use the equipment and to practice with the device. For the experiment, each participant performed two simulated endoscopic exams using two airway tree models in random order, one with and one without the image orientation correction system (Fig 3). The order of exams was determined by a computer-generated random number. A wired connection was used between the sensor and the computer during the exam with the image orientation correction system.

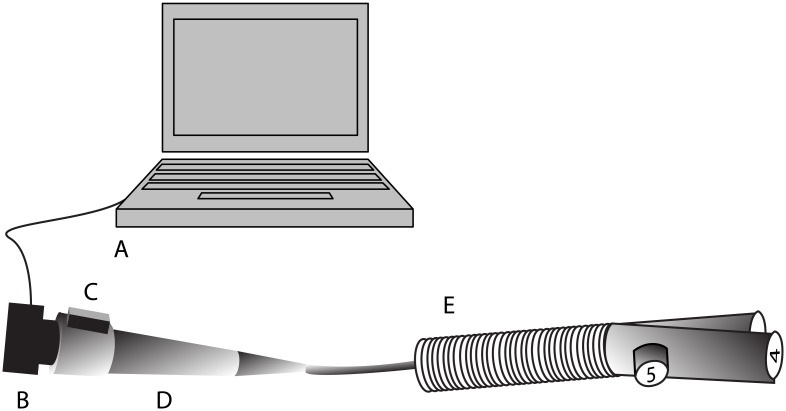

Fig 3. Experimental design of the clinical utility test.

Participants reported the numbers printed on papers at the left main, right main, and right upper bronchi of the airway model in both exams, with and without the image orientation correction system. The numbers inside the airway model were reset for each test. A, computer; B, charge-coupled device (CCD) camera; C, sensor; D, handle of the endoscope; and E, airway model with numbered papers at the left main, right main, and right upper bronchi.

During each exam, participants verbally reported the printed numbers in the order of left main, right main, and right upper bronchi of the airway model. The correctness and the total time taken to report the numbers were recorded. The numbers inside the airway model were reset for each exam.

For a statistical power of 0.8 and a significance level of 0.05, the estimated sample size was 12, assuming a mean difference of 20 seconds and a standard deviation of 25 seconds between the two measurements. Fifteen participants were enrolled to compensate for possible dropouts. The times required to finish the exam with and without the image orientation correction system were compared using the Mann-Whitney U test. All statistical analyses were performed using SPSS software (version 21; SPSS, Inc., Chicago, IL, USA). P < .05 was considered statistically significant. Data are expressed as median (interquartile range).
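The reported sample size can be cross-checked with the common normal-approximation formula n = ((z1−α/2 + z1−β)·σ/δ)², where δ = 20 s is the expected mean difference and σ = 25 s its standard deviation. The sketch assumes a two-sided paired comparison; the text does not state which formula or software produced the estimate of 12, so this is a plausibility check only, and the function name is hypothetical.

```python
from statistics import NormalDist


def paired_sample_size(mean_diff, sd_diff, alpha=0.05, power=0.8):
    """Normal-approximation sample size for a two-sided paired comparison.

    n = ((z_{1-alpha/2} + z_{power}) * sd_diff / mean_diff) ** 2
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1.0 - alpha / 2.0)
    z_power = z.inv_cdf(power)
    return ((z_alpha + z_power) * sd_diff / mean_diff) ** 2


if __name__ == "__main__":
    n = paired_sample_size(mean_diff=20.0, sd_diff=25.0)
    print(f"n = {n:.2f}")  # n = 12.26
```

With α = 0.05 and a power of 0.8, the formula gives n ≈ 12.3, close to the reported estimate.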

Results

Technical evaluation

The optical rotational angle measured from the captured image during the random rotation of the camera ranged from −83.1° to 88.0° with the wired sensor connection and from −108.0° to 90.9° with the wireless connection. The optical angular velocity of the camera ranged from −184°/s to 182°/s with the wired sensor connection and from −251°/s to 201°/s with the wireless connection. The mean sampling rate of the sensor was 333.4 Hz and 27.7 Hz for the wired and wireless connection, respectively.

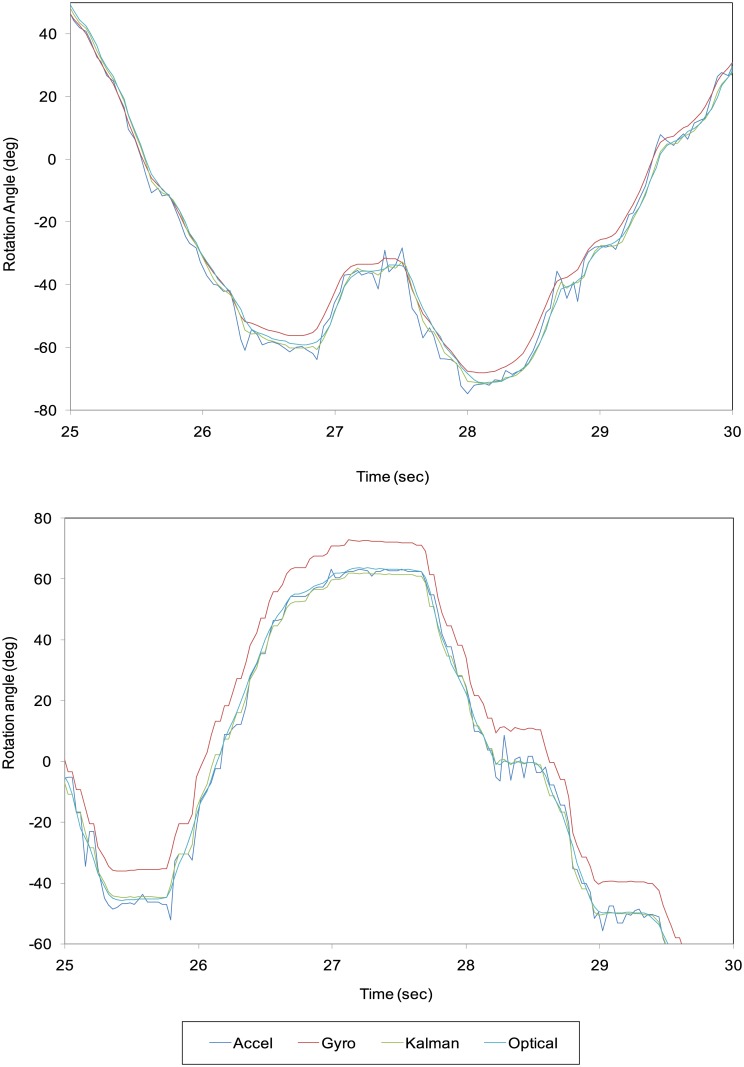

Using only the accelerometer output, the maximum difference between θoptical and θaccel was 12.79° with the wired connection and 15.08° with the wireless connection (Table 1; Fig 4). Using only the gyrosensor output, the maximum difference between θoptical and θgyro was 8.87° with the wired connection and 14.18° with the wireless connection. After Kalman filtering and postprocessing, the maximum difference between θoptical and θpostproc was 5.00° with the wired connection and 7.48° with the wireless connection.

Table 1. Error of rotational angle with different combinations of sensors, connections, and processing.

Error was defined as the difference between the measured rotational angle and the optical rotational angle. RMS, root mean square; Kalman, Kalman filtering.

| Configuration | Error range (°) | Error RMS (°) |
|---|---|---|
| Wired + accelerometer | −10.82 to 12.79 | 3.55 |
| Wired + gyrosensor | −7.17 to 8.87 | 3.01 |
| Wired + Kalman | −4.59 to 5.15 | 2.42 |
| Wired + Kalman + postprocessing | −5.00 to 3.75 | 1.21 |
| Wireless + accelerometer | −14.92 to 15.08 | 3.48 |
| Wireless + gyrosensor | −5.07 to 14.19 | 5.09 |
| Wireless + Kalman | −7.38 to 7.82 | 2.72 |
| Wireless + Kalman + postprocessing | −7.53 to 7.48 | 2.70 |

Fig 4. Results of the measured and optical rotational angles from 25 to 30 seconds after beginning the experiments.

A, wired connection; B, wireless connection.

As shown in Table 1, the smallest root mean square (RMS) error between the calculated and optical rotational angles (1.21°) was obtained with the wired connection when the accelerometer and gyrosensor outputs were combined by Kalman filtering and then postprocessed to compensate for the time delay. The wired connection provided better results than the wireless connection in most configurations.
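The error statistics in Table 1 (range and RMS of the difference between each calculated angle and θoptical) can be computed as in the sketch below. Wrapping the difference to [−180°, 180°) is an added safeguard assumed here, not a step described in the text; for errors as small as those in Table 1 it has no effect. The function name is hypothetical.

```python
import numpy as np


def angle_error_stats(theta_measured_deg, theta_optical_deg):
    """Error range and RMS error (error = measured - optical), in degrees.

    Differences are wrapped to [-180, 180) so that, e.g., 179 vs -179
    counts as a 2-degree error rather than 358 degrees.
    """
    err = np.asarray(theta_measured_deg, float) - np.asarray(theta_optical_deg, float)
    err = (err + 180.0) % 360.0 - 180.0
    rms = float(np.sqrt(np.mean(err ** 2)))
    return float(err.min()), float(err.max()), rms
```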

The time differences between the last available sensor output and the image frame, estimated with Eq (9), were 0.025 and 0.002 seconds for the wired and wireless connections, respectively.

Clinical utility test

All participants successfully completed the experiments. The time required to finish the bronchoscopic exam decreased significantly using the image orientation correction system (median, 52 seconds; interquartile range, 32–74 seconds) compared with not using the system (median, 76 seconds; interquartile range, 59–128 seconds; P = .012).

In one exam performed without the image orientation correction system, the participant reported the number printed in the opposite bronchus.

Discussion

In the present study, we developed and evaluated an endoscopic image orientation correction system using an accelerometer and a gyrosensor. Using the system significantly shortened the time participants needed to complete a simulated bronchoscopic exam.

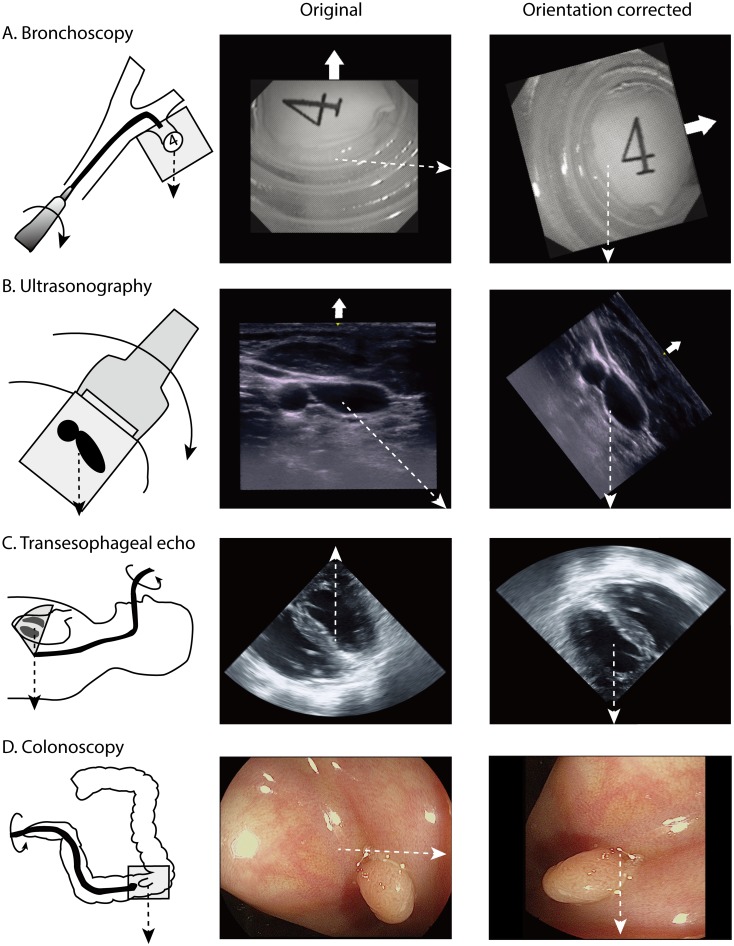

A flexible endoscope must be rotated to change its direction, because its tip can bend only up or down. During ultrasonography, the probe must often be positioned obliquely to direct the beam toward the target. These maneuvers cause unwanted rotation of the image orientation (Fig 5). By correcting the image orientation relative to the direction of gravity, the image can be interpreted more intuitively. Video clips of the airway model bronchoscopic exam with and without image orientation correction are provided as supplementary files (S1 and S2 Video Files). The orientation-corrected image was much easier to interpret because the direction of the image did not change during the exam.

Fig 5. Images before and after orientation correction in various clinical situations.

A. With flexible endoscopy, changing the direction requires axial rotation of the endoscope. The resulting rotation of the image relative to gravity can be corrected by the orientation correction system. The procedure is also easier because the direction of the endoscope tip coincides with the spatial orientation of the endoscopist. This image was captured using the orientation correction system used in this study. B. With ultrasound-guided catheter insertion, the image orientation correction system can help to match the spatial orientation of the image and the operator. This image was captured using the orientation correction system used in this study. C. With conventional transesophageal echocardiography, the direction of gravity is upward. Therefore, air bubbles come down from the top of the image. D. In colonoscopy, the location of the mass can be accurately described even when there is no structural landmark. The upper direction of the raw image is indicated by a thick white arrow, and the direction of gravity is indicated by a dashed line.

To correct the endoscopic image orientation, some researchers have used an accelerometer alone [7, 14]. Although the reported accuracy of such a device was within 1°, this accuracy was achieved only in a stationary state. When we used the accelerometer alone in our study, the error was much greater than 1° while the endoscope was rotating. This error decreased substantially when the gyrosensor and accelerometer were used together. The use of accelerometers and gyrosensors for endoscopic guidance has been reported previously [15, 16]. Behrens and colleagues used an accelerometer and a gyrosensor in an endoscope navigation system and reported a mean measurement error between 1 and 4 degrees [15]. Ren et al. reported that an accelerometer and a gyrosensor can improve the accuracy of an existing electromagnetic tracking system [16]. However, relatively little effort has been made to evaluate the clinical usefulness of this technology.

A novel postprocessing method was also proposed in this study, in which the time difference between the last available sensor output and the image frame, multiplied by the angular velocity, was subtracted from the Kalman filter output. With the devices used in this study, this time difference was 0.025 and 0.002 seconds for the wired and wireless connections, respectively. Not compensating for it can result in a large error, especially with the wired connection; for example, at an angular velocity of about 180°/s, which was reached during the technical evaluation, a 0.025-second offset corresponds to an angular error of about 4.5°. The possibility of postprocessing using the angular velocity is another benefit of using the gyrosensor.

The main advantage of the system developed in this study is that the sensor module can be attached to the handle of the endoscope. Although this can cause an error when the tip and handle of a flexible endoscope twist relative to each other, such twisting is uncommon in most clinical situations. In contrast, sensors attached to the tip may increase the tip size and the risk of aspiration if the equipment is damaged. Additionally, attaching the sensor to a conventional endoscope handle is more cost-effective than purchasing new equipment.

This endoscopic image orientation correction system can also help clinicians to objectively analyze the endoscopic images after the procedure. This is particularly useful when the endoscopist and the operator are not the same person (e.g., laparoscopic surgery or transesophageal echocardiography-guided valvuloplasty), when there is no information about the image orientation (e.g., intravascular sonography or epiduroscopy), or when communicating with another specialist for consultation or education.

This study has some limitations. First, a camera was used instead of an endoscope during the technical evaluation. However, measuring the optical rotational angle with an actual endoscope might have introduced additional error because of its lower image resolution. Second, an error may have occurred during the technical evaluation if the center of the camera did not align exactly with the center of the target board. Third, an airway model was used instead of an animal or human airway for the clinical utility test. During a bronchoscopic exam of a human patient, the shape of the tracheal rings and muscle stripes can help orient the operator; however, the airway model used in this study did not have such detailed structures.

In conclusion, the endoscopic image orientation correction system using both an accelerometer and a gyrosensor was more accurate than a system using an accelerometer alone. The system also significantly decreased the time needed to perform a bronchoscopic exam, which would be valuable in the clinical setting. Although these results were obtained in a limited situation, this orientation correction system will likely help clinicians interpret and analyze endoscopic images in clinical practice.

Supporting information

As the endoscope rotates, the image also rotates, making it difficult to interpret the direction.

(AVI)

The orientation of the image is fixed to the direction of gravity while the endoscope is rotating. A wired connection was used for capturing the video.

(AVI)

Data Availability

The minimal underlying data set necessary for replication, including the raw data for the clinical evaluation, is available at https://osf.io/7ngdq/.

Funding Statement

The authors received no specific funding for this work.

References

- 1. Cao CGL, Milgram P. Disorientation in Minimal Access Surgery: A Case Study. Proceedings of the Human Factors and Ergonomics Society Annual Meeting. 2000;44(26):169–72. doi: 10.1177/154193120004402610

- 2. Holden JG, Flach JM, Donchin Y. Perceptual-motor coordination in an endoscopic surgery simulation. Surgical endoscopy. 1999;13(2):127–32. Epub 1999/01/26.

- 3. Patil PV, Hanna GB, Cuschieri A. Effect of the angle between the optical axis of the endoscope and the instruments’ plane on monitor image and surgical performance. Surgical Endoscopy And Other Interventional Techniques. 2004;18(1):111–4. doi: 10.1007/s00464-002-8769-y

- 4. Zhang L, Cao CG. Effect of automatic image realignment on visuomotor coordination in simulated laparoscopic surgery. Appl Ergon. 2012;43(6):993–1001. Epub 2012/03/01. doi: 10.1016/j.apergo.2012.02.001

- 5. Koppel D, Wang Y-F, Lee H. Automated Image Rectification in Video-Endoscopy. In: Niessen WJ, Viergever MA, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2001: 4th International Conference Utrecht, The Netherlands, October 14–17, 2001 Proceedings. Berlin, Heidelberg: Springer Berlin Heidelberg; 2001. p. 1412–4.

- 6. Salomon O, Kósa G, Shoham M, Stefanini C, Ascari L, Dario P, et al. Enhancing endoscopic image perception using a magnetic localization system. 2006.

- 7. Höller K, Penne J, Schneider A, Jahn J, Guttiérrez Boronat J, Wittenberg T, et al. Endoscopic Orientation Correction. In: Yang G-Z, Hawkes D, Rueckert D, Noble A, Taylor C, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2009: 12th International Conference, London, UK, September 20–24, 2009, Proceedings, Part I. Berlin, Heidelberg: Springer Berlin Heidelberg; 2009. p. 459–66.

- 8. Warren A, Mountney P, Noonan D, Yang G-Z. Horizon Stabilized – Dynamic View Expansion for Robotic Assisted Surgery (HS-DVE). International Journal of Computer Assisted Radiology and Surgery. 2012;7(2):281–8. doi: 10.1007/s11548-011-0603-3

- 9. Turan M, Almalioglu Y, Konukoglu E, Sitti M. A Deep Learning Based 6 Degree-of-Freedom Localization Method for Endoscopic Capsule Robots. arXiv preprint arXiv:1705.05435. 2017.

- 10. Cleary K, Peters TM. Image-guided interventions: technology review and clinical applications. Annual review of biomedical engineering. 2010;12:119–42. doi: 10.1146/annurev-bioeng-070909-105249

- 11. Hummel J, Figl M, Kollmann C, Bergmann H, Birkfellner W. Evaluation of a miniature electromagnetic position tracker. Medical physics. 2002;29(10):2205–12. doi: 10.1118/1.1508377

- 12. Kalman RE. A new approach to linear filtering and prediction problems. Journal of basic Engineering. 1960;82(1):35–45.

- 13. Simon D. Optimal state estimation: Kalman, H∞, and nonlinear approaches. Hoboken, N.J.: Wiley-Interscience; 2006. xxvi, 526 p.

- 14. Holler K, Penne J, Hornegger J, Schneider A, Gillen S, Feußner H, et al., editors. Clinical evaluation of Endorientation: Gravity related rectification for endoscopic images. Image and Signal Processing and Analysis, 2009 ISPA 2009 Proceedings of 6th International Symposium on; 2009: IEEE.

- 15. Behrens A, Grimm J, Gross S, Aach T, editors. Inertial navigation system for bladder endoscopy. Engineering in Medicine and Biology Society, EMBC, 2011 Annual International Conference of the IEEE; 2011: IEEE.

- 16. Ren H, Rank D, Merdes M, Stallkamp J, Kazanzides P. Multisensor data fusion in an integrated tracking system for endoscopic surgery. IEEE Transactions on Information Technology in Biomedicine. 2012;16(1):106–11. doi: 10.1109/TITB.2011.2164088
