Abstract

The variational principle for conformational dynamics has enabled the systematic construction of Markov state models through the optimization of hyperparameters by approximating the transfer operator. In this note, we discuss why the lag time of the operator being approximated must be held constant in the variational approach.

Markov state models (MSMs) are a powerful master equation framework for the analysis of molecular dynamics (MD) datasets that involve a complete partition of the conformational space into disjoint states.1 By representing each frame of an MD dataset as its state label, the populations of the states and the conditional pairwise transition probabilities between them can be counted, yielding thermodynamic and kinetic information about the system, respectively. This information is represented by a transition matrix, which contains all the information necessary to propagate the system forward in time. The transition matrix is the discrete-time approximation to the transfer operator $\mathcal{T}(\tau)$, which is characterized by its lag time τ. The transfer operator propagates the system, represented by a normalized probability density $u_t(x)$, forward by a time step of τ and admits a decomposition into eigenfunctions and eigenvalues (see Ref. 1, Ch. 3),

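$$
\begin{aligned}
u_{t+\tau}(x) &= \mathcal{T}(\tau) \circ u_t(x) && \text{(1a)} \\
&= \sum_{i=1}^{\infty} \lambda_i \langle u_t, \psi_i \rangle \, \psi_i(x). && \text{(1b)}
\end{aligned}
$$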

The eigenvalues λi are real and numbered in decreasing order. The unique highest eigenvalue λ1 = 1 corresponds to the stationary distribution, and the subsequent eigenvalue/eigenfunction pairs represent dynamical processes in the time series. Importantly, the timescale of each process can only be retrieved with knowledge of the lag time at which the operator was defined using the equation

$$t_i = -\frac{\tau}{\ln \lambda_i}. \tag{2}$$
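As a brief worked illustration of the timescale relation (2), with numbers chosen purely for illustration rather than taken from any dataset, consider a single eigenvalue of 0.98 and two candidate lag times at which it might have been obtained:

$$
t_2 = -\frac{1\ \mathrm{ns}}{\ln 0.98} \approx 49.5\ \mathrm{ns} \quad (\tau = 1\ \mathrm{ns}),
\qquad
t_2 = -\frac{10\ \mathrm{ns}}{\ln 0.98} \approx 495\ \mathrm{ns} \quad (\tau = 10\ \mathrm{ns}).
$$

The same eigenvalue corresponds to processes an order of magnitude apart, so an eigenvalue reported without its lag time carries no kinetic meaning.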

Choosing a lag time at which the system is Markovian depends on the type of system being modeled. At a lag time long enough for the system to be approximated as a Markov process, intrastate transitions occur much more quickly than interstate transitions. For protein folding, 50 ns might be appropriate; for electron dynamics, a suitable lag time might be on the order of femtoseconds. If a system is Markovian at a lag time τ (i.e., if the intrastate transitions occur more quickly than τ), then it will be Markovian at all lag times greater than τ, and the timescales of the subprocesses will be constant across all Markovian lag times. This idea has motivated the use of implied timescale plots to choose a lag time.2 Lag times beyond which the timescales “level out” are assumed to be Markovian, and the shortest such lag time is usually chosen to retain the most temporal resolution.
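The constancy of the timescales across Markovian lag times can be checked numerically. The following is a minimal sketch, assuming an exactly Markovian three-state chain whose transition matrix `T1` is invented for illustration; the function name `implied_timescales` is likewise hypothetical. For such a chain, the transition matrix at a lag of k steps is the k-th matrix power of the one-step matrix.

```python
import numpy as np

def implied_timescales(T, lag):
    """Timescales t_i = -lag / ln(lambda_i) for all non-stationary eigenvalues."""
    evals = np.sort(np.real(np.linalg.eigvals(T)))[::-1]
    return -lag / np.log(evals[1:])  # skip the stationary eigenvalue (equal to 1)

# Hypothetical one-step transition matrix (rows sum to 1).
T1 = np.array([[0.98, 0.02, 0.00],
               [0.01, 0.98, 0.01],
               [0.00, 0.02, 0.98]])

lags = [1, 2, 5, 10, 50]
# For an exactly Markovian chain, T(k * tau) = T(tau)^k.
scan = {k: implied_timescales(np.linalg.matrix_power(T1, k), lag=k) for k in lags}

for k, ts in scan.items():
    print(f"lag = {k:3d} steps  ->  timescales = {np.round(ts, 3)}")
```

Every lag time returns the same two timescales (about 49.5 and 24.5 steps), which is the flat region one looks for in an implied timescale plot. With real data the transition matrix is estimated separately at each lag time, and the curves flatten only once the lag time is long enough.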

In practice, we usually do not know the true eigenfunctions ψ in (1b) and instead need to guess them. For an MSM, this means choosing how to divide phase space into disjoint states. Until recently, choosing how to define the states occupied by a dynamic system represented a bottleneck in the development of MSM methods, and heuristic, hand-selected states were common. However, the derivation of a variational principle for conformational dynamics by Noé and Nüske3 in 2013 opened the door for a systematic approach to choosing the states of a system. Our guess, or ansatz, eigenfunctions $\hat{\psi}_i$ will admit corresponding eigenvalues $\hat{\lambda}_i$. Using our ansatz, we can state the variational principle derived by Noé and Nüske,3

$$\mathrm{GMRQ} \equiv \sum_{i=1}^{m} \hat{\lambda}_i \le \sum_{i=1}^{m} \lambda_i, \tag{3}$$

where GMRQ stands for the generalized matrix Rayleigh quotient, which is the form of the approximator when the first m eigenfunctions are estimated simultaneously. Recalling from (2) how the eigenvalues and the operator lag time τ determine the system timescales, we see that the variational principle places an upper bound on the estimated timescales of the slowest m processes in the dynamical system, so that at a fixed lag time a higher GMRQ indicates a better ansatz. In practical cases, the variational bound can be exceeded when processes are statistically undersampled; therefore, the GMRQ must be evaluated under cross-validation as described in Ref. 4.
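The bound in (3) can be illustrated with a small sketch. It assumes a hypothetical three-state "true" chain (the matrix `T_true` is invented), treats two different two-state lumpings of it as competing ansatzes at the same lag time, and scores each by the sum of its m largest eigenvalues. The helper names `lump` and `gmrq` are illustrative only, and no cross-validation is performed here because the matrices are exact rather than estimated from data.

```python
import numpy as np

def stationary_distribution(T):
    """Left eigenvector of T with eigenvalue 1, normalized to sum to 1."""
    evals, evecs = np.linalg.eig(T.T)
    pi = np.real(evecs[:, np.argmax(np.real(evals))])
    return pi / pi.sum()

def lump(T, assignment):
    """Coarse-grain T by merging microstates that share a macrostate label."""
    assignment = np.asarray(assignment)
    pi = stationary_distribution(T)
    M = np.zeros((T.shape[0], assignment.max() + 1))
    M[np.arange(T.shape[0]), assignment] = 1.0   # membership matrix
    flux = M.T @ (pi[:, None] * T) @ M           # equilibrium flux between macrostates
    return flux / flux.sum(axis=1, keepdims=True)

def gmrq(T, m):
    """Sum of the m largest eigenvalues of a transition matrix."""
    evals = np.sort(np.real(np.linalg.eigvals(T)))[::-1]
    return float(evals[:m].sum())

# Hypothetical "true" dynamics at one fixed lag time.
T_true = np.array([[0.98, 0.02, 0.00],
                   [0.01, 0.98, 0.01],
                   [0.00, 0.02, 0.98]])
m = 2
bound = gmrq(T_true, m)                       # right-hand side of the bound
score_a = gmrq(lump(T_true, [0, 0, 1]), m)    # merge states 0 and 1
score_b = gmrq(lump(T_true, [0, 1, 0]), m)    # merge states 0 and 2

print(f"bound = {bound:.4f}, lumping A = {score_a:.4f}, lumping B = {score_b:.4f}")
```

Both lumpings score below the bound of 1.98, and lumping A (about 1.973) outranks lumping B (1.96), so the GMRQ prefers the decomposition that better preserves the slowest process. The comparison is meaningful only because both lumpings approximate the same operator at the same lag time.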

When we variationally choose a set of eigenfunctions, we can only compare them if we are trying to approximate the same transfer operator. Therefore, the lag time τ must not be changed when the ansatz is changed, and it cannot be variationally optimized using the GMRQ—instead, it must be determined using such techniques as implied timescale plots. In contrast, all transformation and dimensionality reduction choices leading up to the state decomposition are ideal hyperparameters to optimize using the GMRQ. This might include the following:

- RMSD cutoffs for geometric clustering;
- internal coordinate choices such as dihedral angles or contact pairs, including which angles and pairs to include, and any transformations thereof;
- internal parameters for time-structure based independent component analysis (tICA) such as tICA lag time, number of components retained, and any transformations of these components;
- clustering algorithm and number of clusters;

but, as discussed above, cannot include

- the operator lag time or
- the number of timescales scored.
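One way to see why the operator lag time and the number of timescales scored cannot be optimized is to write out how the bound itself depends on them. The following is a short sketch, assuming ideal Markovian dynamics with fixed timescales $t_i$; inverting the timescale relation (2) gives the true eigenvalues as a function of lag time:

$$
\lambda_i(\tau) = e^{-\tau / t_i}
\quad \Longrightarrow \quad
\sum_{i=1}^{m} \lambda_i(\tau) = 1 + \sum_{i=2}^{m} e^{-\tau / t_i}.
$$

Every term with $i \ge 2$ decays monotonically in τ, so the quantity that bounds the GMRQ shrinks as the lag time grows. For illustration, with m = 2 and a hypothetical $t_2 = 49.5$ ns, the bound is about 1.98 at τ = 1 ns but about 1.82 at τ = 10 ns. Scores obtained at different lag times are therefore measured against different ceilings, and a search that included τ would tend to favor short lag times regardless of model quality. Similarly, each additional scored process adds a further term to the sum, so scores computed with different m are not comparable either.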

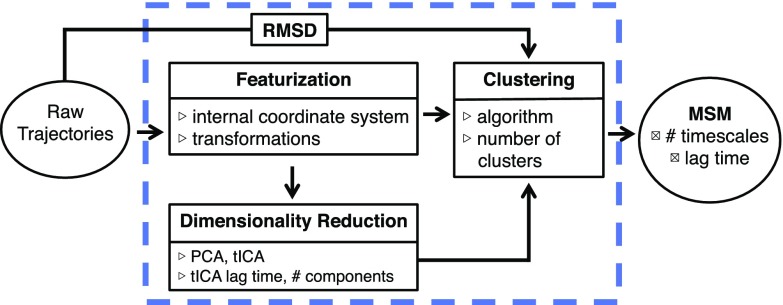

These choices are illustrated in Fig. 1. For protein folding, we refer the reader to Ref. 5 for a systematic study of these choices in the context of the variational approach to conformational dynamics (VAC).

FIG. 1. The flow chart shows several ways to create an MSM from raw simulation data. The blue box indicates which of the enumerated parameters can be optimized using the GMRQ. The MSM lag time and the number of timescales scored must be held constant. This figure is adapted with permission from Husic et al., J. Chem. Phys. 145, 194103 (2016). Copyright 2016 AIP Publishing LLC.

In practice, we recommend starting with reasonable parameters for the system of study and choosing a valid lag time. Then, at the chosen lag time, perform a hyperparameter search for any of the state decomposition choices listed above. This two-step process can then be repeated, alternating lag time validation using fixed hyperparameters with hyperparameter searches for a fixed lag time.
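The hyperparameter search step at a fixed lag time can be sketched as follows. This is a toy illustration under several stated assumptions, not the Osprey API: the data come from a simulated one-dimensional double-well potential, the only hyperparameter searched is the number of equal-width bins, and all function names are hypothetical. The score is our reading of the cross-validated GMRQ of Ref. 4, Tr[(AᵀSA)⁻¹ AᵀCA], with the ansatz eigenvectors A fit on the training half and the matrices C and S counted on the test half. Restricting the test counts to states seen in training is a simplification made here for brevity.

```python
import numpy as np

def simulate_double_well(n_steps, dt=0.01, kT=0.5, seed=0):
    """Overdamped Langevin dynamics in V(x) = (x^2 - 1)^2 (toy data only)."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(scale=np.sqrt(2.0 * kT * dt), size=n_steps)
    x = np.empty(n_steps)
    x[0] = -1.0
    for t in range(1, n_steps):
        force = -4.0 * x[t - 1] * (x[t - 1] ** 2 - 1.0)
        x[t] = x[t - 1] + dt * force + noise[t]
    return x

def discretize(x, n_bins):
    """Assign each frame to one of n_bins equal-width bins on [-2.5, 2.5]."""
    edges = np.linspace(-2.5, 2.5, n_bins + 1)[1:-1]
    return np.digitize(x, edges)

def count_matrices(dtraj, n_states, lag):
    """Symmetrized lagged counts C and diagonal population matrix S."""
    C = np.zeros((n_states, n_states))
    np.add.at(C, (dtraj[:-lag], dtraj[lag:]), 1.0)
    C = 0.5 * (C + C.T)  # symmetrize counts to enforce reversibility
    return C, np.diag(C.sum(axis=1))

def cv_gmrq(train, test, n_states, lag, m):
    """Fit m ansatz eigenvectors on `train`, then score their GMRQ on `test`."""
    C_tr, S_tr = count_matrices(train, n_states, lag)
    keep = np.flatnonzero(np.diag(S_tr) > 0)   # states seen in training
    sub = np.ix_(keep, keep)
    d = np.sqrt(np.diag(S_tr)[keep])
    # Solve C v = lambda S v through the symmetric matrix S^(-1/2) C S^(-1/2).
    _, U = np.linalg.eigh(C_tr[sub] / np.outer(d, d))
    A = U[:, -m:] / d[:, None]                 # top-m generalized eigenvectors
    C_te, S_te = count_matrices(test, n_states, lag)
    return float(np.trace(np.linalg.solve(A.T @ S_te[sub] @ A,
                                          A.T @ C_te[sub] @ A)))

LAG, M = 10, 2                 # held constant throughout the search
candidates = [2, 5, 10, 50, 200]
x = simulate_double_well(40000)
half = len(x) // 2

scores = {}
for n_bins in candidates:
    dtraj = discretize(x, n_bins)
    folds = [(dtraj[:half], dtraj[half:]), (dtraj[half:], dtraj[:half])]
    scores[n_bins] = float(np.mean([cv_gmrq(tr, te, n_bins, LAG, M)
                                    for tr, te in folds]))

best = max(scores, key=scores.get)
print(scores)
print("selected number of bins:", best)
```

Note that `LAG` and `M` never enter the search loop; only the state decomposition varies. After a decomposition has been selected, the lag time would be revalidated with that decomposition held fixed, and the two steps alternated as described above.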

Perhaps the most natural way to understand the separate treatment of the lag time is to consider its true role in kinetic model building. While previous MSM approaches have effectively treated it as a hyperparameter (e.g., choosing a lag time based on the flattening of implied timescales), this approach is philosophically incorrect. The lag time must be chosen a priori by the researcher, as it directly reflects the temporal resolution of interest. Given a method that can directly identify the relevant degrees of freedom, choosing a lag time of picoseconds would bring water dynamics into the state space, whereas nanoseconds would capture backbone and side chain dynamics and microseconds would capture slower collective rearrangements. For this reason, it does not make sense to let the model choose the lag time; instead, the protocol must choose the best model given a preselected lag time.

MSMs are just one example of the general set of models to which the VAC applies. The popular tICA framework6,7 can also be variationally optimized. When using tICA as an intermediate step in MSM construction, the tICA lag time may be varied and optimized. However, in the case where the tICA model is the entity being evaluated, the tICA lag time and number of components scored must be held constant in order to ensure that the same operator is being approximated. Additional extensions of the VAC can be found in Ref. 8. We also refer the interested reader to Ref. 9, which presents continuous-time Markov processes that do not have lag times. Finally, we would like to note that the VAC is not a panacea: the slowest dynamical processes are often assumed, but not guaranteed, to be the processes of interest, and it is important to verify this for each analysis. We anticipate that this note will help guide hyperparameter optimization when using VAC.

Acknowledgments

The authors are grateful to Matt Harrigan, Carlos Hernández, Jade Shi, Anton Sinitskiy, Nate Stanely, and Muneeb Sultan for discussion and manuscript feedback. We acknowledge the National Institutes of Health under No. NIH R01-GM62868 for funding. V.S.P. is a consultant and SAB member of Schrodinger, LLC and Globavir, sits on the Board of Directors of Apeel Inc, Freenome, Inc, Omada Health, PatientPing, Rigetti Computing, and is a General Partner at Andreessen Horowitz.

The open-source software Osprey10 has been designed for variational hyperparameter optimization and is available on msmbuilder.org/osprey.

REFERENCES

- 1. Bowman G. R., Pande V. S., and Noé F., An Introduction to Markov State Models and Their Application to Long Timescale Molecular Simulation, Advances in Experimental Medicine and Biology Vol. 797 (Springer, 2014).

- 2. Swope W. C., Pitera J. W., and Suits F., J. Phys. Chem. B 108, 6571 (2004). doi:10.1021/jp037421y

- 3. Noé F. and Nüske F., Multiscale Model. Simul. 11, 635 (2013). doi:10.1137/110858616

- 4. McGibbon R. T. and Pande V. S., J. Chem. Phys. 142, 124105 (2015). doi:10.1063/1.4916292

- 5. Husic B. E., McGibbon R. T., Sultan M. M., and Pande V. S., J. Chem. Phys. 145, 194103 (2016). doi:10.1063/1.4967809

- 6. Pérez-Hernández G., Paul F., Giorgino T., De Fabritiis G., and Noé F., J. Chem. Phys. 139, 015102 (2013). doi:10.1063/1.4811489

- 7. Schwantes C. R. and Pande V. S., J. Chem. Theory Comput. 11, 600 (2015). doi:10.1021/ct5007357

- 8. Wu H. and Noé F., preprint arXiv:1707.04659 (2017).

- 9. McGibbon R. T. and Pande V. S., J. Chem. Phys. 143, 034109 (2015). doi:10.1063/1.4926516

- 10. McGibbon R. T., Hernández C. X., Harrigan M. P., Kearnes S., Sultan M. M., Jastrzebski S., Husic B. E., and Pande V. S., J. Open Source Software 1, 34 (2016). doi:10.21105/joss.00034