Abstract

Tactual exploration of objects produces specific patterns in the human brain, and hence objects can be recognized by analyzing brain signals during tactile exploration. The present work aims at analyzing EEG signals online for recognition of embossed texts by tactual exploration. EEG signals are acquired from the parietal region over the somatosensory cortex of blindfolded healthy subjects while they tactually explore embossed texts, including symbols, numbers, and alphabets. Classifiers based on the principle of supervised learning are trained on the extracted EEG feature space, comprising three features, namely, adaptive autoregressive parameters, Hurst exponents, and power spectral density, to recognize the respective texts. The pre-trained classifiers are used to classify the EEG data to identify the texts online, and the recognized text is displayed on the computer screen for communication. Online classifications of two, four, and six classes of embossed texts are achieved with overall average recognition rates of 76.62, 72.31, and 67.62%, respectively, and the computational time is less than 2 s in each case. The maximum information transfer rate and utility of the system performance over all experiments are 0.7187 and 2.0529 bits/s, respectively. This work presents a study that shows the possibility of classifying 3D letters using tactually evoked EEG. In future, it will help the BCI community to design stimuli for better tactile augmentation and also opens new directions of research to facilitate 3D letters for visually impaired persons. Further, 3D maps can be generated for aiding tactual BCI in teleoperation.

Keywords: Text recognition, Tactile perception, Electroencephalography, Brain-computer interface, Rehabilitation

Introduction

The sense of touch, among the different sensory abilities, occupies a very important place in object recognition. Though objects can be recognized to a large extent by vision alone, the sense of touch, independently or in combination with vision, can aid in foolproof and complete discrimination of objects.

Different objects produce different patterns of responses in the human brain when explored by touch. Hence objects can be recognized by analyzing electroencephalogram (EEG) signals (Dornhege 2007; Sanei and Chambers 2008) during tactile perception (James et al. 2007; Martinovic et al. 2012; Pal et al. 2014; Gohel et al. 2016). Researchers have shown that objects can be identified from brain responses to visual and/or tactile stimuli depending on different parameters like shape, size, texture, etc. (Amedi et al. 2005; Datta et al. 2013; Khasnobish et al. 2013; Reed et al. 2004; Grunwald 2008; Gohel et al. 2016; Hori and Okada 2017; Kono et al. 2013; Wang et al. 2015; Taghizadeh-Sarabi et al. 2015; Kodama et al. 2016; Ursino et al. 2011). Along with tactile-based object classification, classification of tactile location has also been performed (Wang et al. 2015). Kodama et al. (2016) classified responses obtained from the full body. Tactile classification has also been done using magnetoencephalogram (MEG) signals (Gohel et al. 2016). On the basis of the literature survey, the present work proposes and validates the hypothesis that 3-dimensional (embossed) texts can be recognized by tactually evoked EEG signal analysis. Printed texts are usually 2-dimensional, whereas in this work texts are embossed on plain paper surfaces using acrylic paints with a height of approximately 1 mm; these are henceforth referred to as 3-D texts. This work can find applications in brain-computer interfaces (BCI), which utilize brain responses for control and communication in rehabilitation, especially in patients with neuro-motor diseases (Aloise et al. 2010; Curran and Stokes 2003; Fabiani et al. 2004; Neuper et al. 2005; McCane et al. 2015).

This is a preliminary study to classify 3-D texts from tactually evoked EEG signals. The main goal here is to evaluate the feasibility of embossed text classification from tactually evoked EEG signals. This is followed by the communication of EEG analysis results on a computer screen to discriminate between the tactile perceptions of different classes of embossed alphabets, digits, or symbols as an alternative to verbal communication of the same, and hence this study can be extended in future to develop a rehabilitative communication platform for the paralyzed. In addition, this study can facilitate the development of a BCI-driven aid for the visually challenged as well as for the enhancement of multitasking in individuals with normal vision. For example, when a pilot/driver is visually engaged, he/she can still communicate through EEG signals while tactually reading 3-D texts or navigating through embossed maps. In these cases, a tactile feedback channel can be provided to improve the perceptual understanding of the embossed objects while the visual or other channels are engaged in other activities or are unavailable due to physical ailments. The existing solution for text recognition for visually challenged people is Braille (Jiménez et al. 2009; Beisteiner et al. 2015). The use of Braille has been prevalent among the visually challenged because of the easy tactile discrimination of the ‘raised dots’ in varying numbers and arrangements to represent different characters. However, using a Braille system requires large amounts of space and time to convert normal texts into Braille, in addition to the need for extensive user training. Also, Braille is usually not convenient for a person with normal vision, or for a person who has lost the ability of visual perception due to accident or trauma.

The main goal of this paper is to present a proof of concept for classification of alphabets, numbers and symbols from tactually evoked EEG signals in real time. This work will be further extended to provide a common platform for individuals visually impaired by birth or by accident, as well as for healthy individuals, to recognize 3-D texts and communicate without any verbal or gesture medium, through an efficient BCI-driven system.

In this work, EEG signals have been acquired from the parietal region over the somatosensory cortex of blindfolded healthy subjects while they tactually explore the 3-D texts, including symbols, numbers, and alphabets. The acquired signals are preprocessed and three features are extracted, on which classifiers are trained to recognize the respective 3-D texts from EEG. The pre-trained classifiers are then used for online classification of EEG data to identify the texts, which are displayed on the computer screen for communication. Assessment of the subjects’ verbal responses following the presentation of the tactile stimuli validates their perceptual understanding of the explored texts.

The rest of the paper is structured as follows. In the second section, the principles used and the methods undertaken are explained. The experiments and results follow in the third section. The fourth section provides a discussion on the experimental results. In the fifth and final section, conclusions are drawn and the future scope of work is stated.

Principles and methods

The present work proposes to communicate the brain responses during tactile exploration of 3-D texts without any verbal communication or body gestures, with the aim of developing an efficient BCI-based rehabilitative aid for visually/neuro-motor impaired patients.

Experimental design

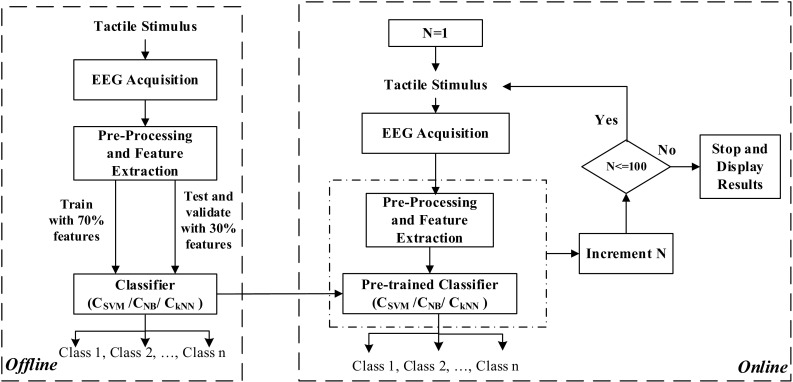

The entire course of work is illustrated by the flowchart in Fig. 1. There are two modules or phases, offline and online. In the offline module, classifiers are trained to recognize 3-D texts from tactually evoked EEG features. In this phase, an acquired EEG database is used to train classifiers to discriminate between different classes of embossed alphabets, digits or symbols. Testing is done to validate the efficacy of the classifiers. In the online module, the pre-trained classifiers are used for online recognition of the embossed texts from EEG and subsequent communication of the results for 100 test samples. Here N denotes a test stimulus or sample. In this phase, unknown EEG data is classified as belonging to a particular class of embossed text and the result of classification is displayed on the computer screen in each case. The experiment operator keeps track of the actual embossed text given to the subject and of the EEG classification result displayed on the screen, and uses these statistics over time to determine the performance. The process is ‘online’, that is, there is a stream of stimulus presentation, EEG analysis and result display, followed by the next stimulus presentation, EEG analysis and so on. While the computer processes the EEG and displays the result for a particular stimulus, the subject is given the next stimulus for exploration. In each of the two phases of experimentation, subjects’ verbal responses regarding the presented stimuli are noted to evaluate the correctness of their perceptual understanding of the stimuli.

Fig. 1.

Flowchart depicting the course of work

EEG acquisition principles

EEG signal acquisition requires the selection of scalp locations and signal components according to the application.

Electrode selection

The primary somatosensory cortex of the parietal lobe is responsible for encoding the sense of touch (James et al. 2007; Amedi et al. 2005; Grunwald 2008). Hence, in this work the EEG signals from the parietal region are of interest; they are acquired from the two scalp electrodes P7 and P8, placed according to the International 10–20 system of electrode placement (Dornhege 2007).

EEG component selection

Through a series of experiments, it is observed that tactile stimulus presentation is followed by a desynchronization of the EEG signals, which is in turn followed by their synchronization. Thus event-related desynchronization/synchronization (ERD/S) (Dornhege 2007; Sanei and Chambers 2008; Zhang et al. 2016a) is considered as the EEG component in the present work. ERD/S refers to the relative decrease/increase in the EEG signal power in a certain frequency range during dynamic cognitive processes upon excitation with stimuli.

EEG pre-processing and feature extraction

Pre-processing

EEG signals for somatosensory perception are predominant in the theta band (Grunwald et al. 2001). On performing a Fourier transform on the tactually evoked EEG signals, it is observed that maximum signal power is contained in the frequency range 4–15 Hz, which includes the theta as well as the alpha bands. An elliptic band-pass filter of order 6 with a pass band of 4–15 Hz is implemented to extract the relevant denoised EEG signals, eliminating undesirable high-frequency components including power line noise and motor movement related artifacts. Spatial filtering is necessary to eliminate interference among the channels, and it is implemented with common average referencing (Dornhege 2007). Here, the equally weighted average of the data from all channels is subtracted from the data of each EEG channel, to eliminate the commonality of that channel with the rest and preserve its specific temporal features. This is described by (1), where the signals at the channel under consideration and at the other channels are $x_i(t)$ and $x_j(t)$ respectively, for i, j = 1 to ch, and ch denotes the total number of channels taken. It thus reduces the influence of the surrounding electrode channels/sources on each individual electrode. In our case, the total number of channels includes all channels available in the acquisition apparatus used. Only the data from P7 and P8 are then processed further.

$$x_i^{CAR}(t) = x_i(t) - \frac{1}{ch}\sum_{j=1}^{ch} x_j(t) \tag{1}$$
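Common average referencing as described for (1) can be sketched in a few lines. This is a minimal illustration on made-up numbers, not the authors' MATLAB implementation; the function name `common_average_reference` and the toy data are assumptions introduced here.

```python
def common_average_reference(channels):
    """Subtract the equally weighted mean of all channels from each channel.

    channels: list of equal-length lists, one list of samples per EEG channel.
    Returns a new list of re-referenced channels.
    """
    n_channels = len(channels)
    n_samples = len(channels[0])
    # Mean across channels at every time point t.
    mean_per_sample = [
        sum(ch[t] for ch in channels) / n_channels for t in range(n_samples)
    ]
    return [
        [ch[t] - mean_per_sample[t] for t in range(n_samples)] for ch in channels
    ]


# Toy data: 3 channels, 4 samples each (values are made up for illustration).
eeg = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 2.0, 2.0, 2.0],
    [3.0, 2.0, 1.0, 0.0],
]
car = common_average_reference(eeg)
```

After re-referencing, the channels sum to zero at every time point, which is the property that removes activity common to all electrodes.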

Feature extraction

In this work EEG signals are represented through three features: adaptive autoregressive parameters (AAR) (Nai-Jen and Palaniappan 2004; Schlögl et al. 1997; Schlögl 2000; Datta et al. 2015; Bhaduri et al. 2016) and Hurst exponents (HE) (Kanounikov et al. 1999), both as time-domain features, and power spectral density (PSD) (Cona et al. 2009) as a frequency-domain feature. Though other standard bio-signal features like wavelet transform features, empirical mode decomposition features, Hjorth parameters, etc. have been previously studied, these three features have been selected because they performed better in the present set of experiments. The final feature vector representing each EEG instance is obtained by combining these features.

An autoregressive (AR) model is a parametric method for describing the stochastic behavior of a time series. EEG signals are time-varying or non-stationary in nature, and hence the AR parameters representing EEG signals should be estimated in a time-varying manner, which is what AAR parameters do. Such an AAR model is described by (2) and (3), where the index k is an integer denoting discrete, equidistant time points, $y_{k-i}$ with i = 1 to p are the p previous sample values, p is the order of the AR model, $a_{i,k}$ are the time-varying AAR model parameters, and $x_k$ is a zero-mean Gaussian noise process with time-varying variance $\sigma^2_{x,k}$. There are various methods for estimating the AAR parameters, such as the least-mean-square (LMS) method, the recursive-least-square (RLS) method, the recursive AR (RAR) method, Kalman filtering, etc. In this work, after several trials to obtain the best performance, the AAR model is chosen to be of order 6 and AAR estimation is done using Kalman filtering. The rate of adaptation of the AAR parameters, determined by the update coefficient, is heuristically taken as 0.0085.

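$$y_k = a_{1,k}\,y_{k-1} + \dots + a_{p,k}\,y_{k-p} + x_k \tag{2}$$

$$x_k \sim N\left(0, \sigma^2_{x,k}\right) \tag{3}$$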
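To make the idea of time-varying AR parameters concrete, the sketch below tracks them with a normalized LMS update, one of the alternative estimators listed above. It is deliberately not the Kalman-filter estimator used in this work; the function name `aar_lms`, the normalization by the signal variance and the synthetic AR(1) test signal are assumptions made for illustration only.

```python
import random


def aar_lms(y, order=6, update_coeff=0.0085):
    """Track time-varying AR parameters with a normalized LMS update.

    Returns one parameter vector [a_1k, ..., a_pk] per sample k.
    """
    n = len(y)
    mean = sum(y) / n
    variance = sum((v - mean) ** 2 for v in y) / n or 1.0  # guard a flat signal
    step = update_coeff / variance  # adaptation rate scaled by signal power
    a = [0.0] * order
    history = []
    for k in range(n):
        # Previous samples y_{k-1}, ..., y_{k-p}; zeros before the signal starts.
        past = [y[k - i] if k - i >= 0 else 0.0 for i in range(1, order + 1)]
        prediction = sum(ai * yi for ai, yi in zip(a, past))
        error = y[k] - prediction  # one-step prediction error, an estimate of x_k
        a = [ai + step * error * yi for ai, yi in zip(a, past)]
        history.append(list(a))
    return history


# Synthetic AR(1) signal y_k = 0.6 * y_{k-1} + x_k (illustrative, not EEG).
rng = random.Random(0)
signal = [0.0]
for _ in range(5000):
    signal.append(0.6 * signal[-1] + rng.gauss(0.0, 1.0))

params = aar_lms(signal, order=1)
tail = [p[0] for p in params[-1000:]]
a1_estimate = sum(tail) / len(tail)  # should settle near the true value 0.6
```

With `order=6`, the parameter vector at the end of a trial would give the six AAR features per channel mentioned in the text; how the authors summarize the parameter trajectory into features is not stated here, so that step is left out.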

EEG responses at different excitations may have random variations and can be described as chaotic. Hence the Hurst exponent (Kanounikov et al. 1999; Acharya et al. 2005; Gschwind et al. 2016), a non-linear parameter, is used as an EEG feature. It is computed statistically using rescaled range analysis and estimates the occurrence of long-range dependence and its degree in a time series by evaluating the probability of an event being followed by a similar event. It is described by (4), where T denotes the sample duration and R/S denotes the corresponding rescaled range value.

$$H = \frac{\log(R/S)}{\log(T)} \tag{4}$$
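A single-window rescaled range estimate of the kind described for (4) might look as follows. This is a sketch under the assumption that T is taken as the number of samples in the window; the function name and the two toy series are introduced here for illustration, and a constant series (S = 0) is not handled.

```python
import math


def hurst_rescaled_range(series):
    """Single-window estimate H = log(R/S) / log(T) for a series of T samples."""
    t = len(series)
    mean = sum(series) / t
    # Cumulative sum of deviations from the mean.
    cumulative, running = [], 0.0
    for value in series:
        running += value - mean
        cumulative.append(running)
    r = max(cumulative) - min(cumulative)  # range R of the cumulative deviations
    s = math.sqrt(sum((v - mean) ** 2 for v in series) / t)  # standard deviation S
    return math.log(r / s) / math.log(t)


# An alternating series (anti-persistent) and a steadily rising one (persistent).
h_alternating = hurst_rescaled_range([1.0, -1.0, 1.0, -1.0])
h_trending = hurst_rescaled_range([float(i) for i in range(1, 65)])
```

The alternating series yields a value below 0.5 and the rising series a value above 0.5, in line with the interpretation of H given in the text.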

A value of H = 0.5 indicates that the time series is similar to an independent random process; 0 ≤ H < 0.5 indicates that a present decreasing trend in the process implies a future increasing trend and vice versa; whereas 0.5 < H ≤ 1 indicates that a present increasing/decreasing trend in the process implies a future increasing/decreasing trend.

Experiments reveal that the EEG signals corresponding to exploring different embossed letters have different amounts of power at different frequencies and hence the power spectral density (PSD) can be used as an EEG feature. PSD of a wide sense stationary signal x(t) is the Fourier transform of its autocorrelation function, given by S(w) in (5), where E denotes the expected value and T denotes the time interval.

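$$S(w) = \lim_{T\to\infty} E\left[\frac{1}{T}\left|\int_{0}^{T} x(t)\,e^{-jwt}\,dt\right|^{2}\right] \tag{5}$$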

Thus, for a time-varying signal such as EEG, the complete time series should be divided into segments to determine its PSD. In the present work the PSD is evaluated using Welch's method (Alkan and Yilmaz 2007), which splits the signal into overlapping segments, computes the periodogram of each segment from its Fourier transform, and averages the resulting periodograms to produce the power spectral density estimate. The estimate is taken at the integer frequency points in the range of 4–15 Hz, using a Hamming window with 50% overlap between the segments.
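The Welch procedure described above can be sketched without any signal-processing library. The segment length of 128 samples (1 s at 128 Hz, giving 1 Hz resolution and hence 12 integer frequencies from 4 to 15 Hz) is an assumption, as the text does not state it; the direct DFT at the twelve frequencies of interest and the two-sided density scaling are likewise choices made for this illustration.

```python
import cmath
import math


def welch_psd(signal, fs=128, segment_len=128, freqs=range(4, 16)):
    """Average Hamming-windowed periodograms over 50%-overlapping segments."""
    window = [
        0.54 - 0.46 * math.cos(2 * math.pi * n / (segment_len - 1))
        for n in range(segment_len)
    ]
    window_power = sum(w * w for w in window)
    hop = segment_len // 2  # 50% overlap between consecutive segments
    starts = range(0, len(signal) - segment_len + 1, hop)
    psd = [0.0] * len(freqs)
    for start in starts:
        segment = signal[start:start + segment_len]
        for idx, f in enumerate(freqs):
            # Discrete Fourier transform of the windowed segment at frequency f (Hz).
            coeff = sum(
                w * x * cmath.exp(-2j * math.pi * f * n / fs)
                for n, (w, x) in enumerate(zip(window, segment))
            )
            psd[idx] += abs(coeff) ** 2 / (fs * window_power)
    return [p / len(starts) for p in psd]


# Illustrative 5 s trial at 128 Hz (640 samples): a pure 10 Hz sinusoid.
trial = [math.sin(2 * math.pi * 10 * n / 128) for n in range(640)]
psd_features = welch_psd(trial)
peak_frequency = list(range(4, 16))[psd_features.index(max(psd_features))]
```

For a 640-sample trial this averages nine segments and returns 12 PSD values per channel, matching the 12 × 2 = 24 PSD features quoted for the two channels.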

For the two channels of EEG data, the AAR, PSD and HE features yield feature vectors of length 6 × 2 = 12, 12 × 2 = 24, and 1 × 2 = 2, respectively. Therefore, the final concatenated feature vector has a dimension of 38. Since the feature dimension is small, there is no need for a feature selection stage to reduce it. During each set of experiments, each of the three features is normalized with respect to its maximum value, and the features are combined to produce the normalized feature space for classification. Normalization scales the feature values to the range [−1, 1]. This is done to minimize the differences arising between different trials of the same experiment. For the feature space arranged as a two-dimensional matrix where rows represent trials and columns represent features, normalization is effected according to (6), where the feature space is the matrix composed of elements $f_{i,j}$, the ith instance of the jth feature, $f^{norm}_{i,j}$ is the normalized value of $f_{i,j}$, and ‘max’ computes the maximum value.

$$f^{norm}_{i,j} = \frac{f_{i,j}}{\max_{i}\left|f_{i,j}\right|} \tag{6}$$
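The feature bookkeeping and the normalization step can be illustrated as below. The sketch assumes that each feature column is divided by its maximum absolute value, which is one way to guarantee the stated range [−1, 1]; the function name and the toy matrix are hypothetical.

```python
def normalize_features(feature_matrix):
    """Scale every column by its maximum absolute value, giving values in [-1, 1]."""
    n_features = len(feature_matrix[0])
    column_max = [
        max(abs(row[j]) for row in feature_matrix) or 1.0  # guard all-zero columns
        for j in range(n_features)
    ]
    return [
        [row[j] / column_max[j] for j in range(n_features)] for row in feature_matrix
    ]


# Per-trial feature layout described in the text: 2 channels x (6 AAR + 12 PSD + 1 HE).
feature_length = 2 * (6 + 12 + 1)

# Toy feature space: 3 trials (rows) x 2 features (columns), values made up.
toy = [[2.0, -4.0], [1.0, 2.0], [-0.5, 1.0]]
normalized = normalize_features(toy)
```

Rows correspond to trials and columns to features, so each column of the result has at least one entry equal to 1 or −1.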

Classification

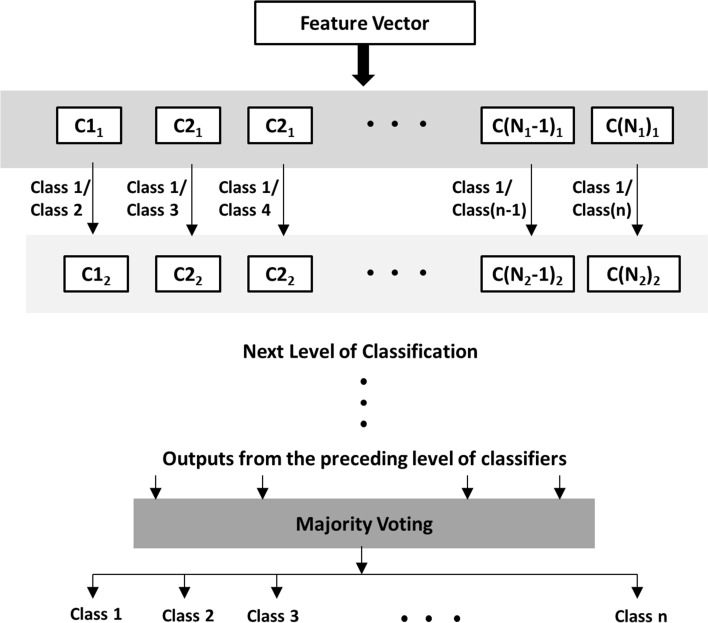

The extracted features are classified using three standard supervised classifiers, namely, support vector machine (SVM) (Mitchell 1997; Webb 2003; Zhang et al. 2016), naive Bayes (NB) (Mitchell 1997; Leung 2007; Bhaduri et al. 2016; Chan et al. 2015) and k-nearest neighbor (kNN) (Mitchell 1997; Page et al. 2015), independently of each other. Classification is performed in a hierarchical one-vs.-one (OVO) approach and majority voting (Paul et al. 2006) is used to decide the final outcome. The scheme of classification is illustrated in Fig. 2.

Fig. 2.

Classification Scheme

Suppose there are n possible classes. In the first stage a sample is classified by a number of OVO classifiers, each classifying the sample as belonging to class 1 or class 2, class 1 or class 3, …, class (n−1) or class n. Therefore, for classifications against class 1, (n−1) classifiers would be required, for classifications against class 2, (n−2) further classifiers would be required, and so on. Hence a total of $N_1 = n(n-1)/2$ OVO classifiers will be required in the first stage, identified as $C_i^1$ for i = 1 to $N_1$. The outcomes of the classifiers are combined using majority voting to determine the class with the highest number of votes for the sample. A second stage of OVO classifiers prior to the final majority vote is necessary when the first stage yields an inconclusive result, say when the sample is assigned to k different classes with an equal number of votes. In that case, arguing in a similar manner, the second level will require $N_2 = k(k-1)/2$ OVO classifiers, $C_i^2$ for i = 1 to $N_2$. This process can be continued if the tie persists, thereby producing a hierarchical classification pattern.
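The voting scheme can be sketched as follows. The pairwise rule `closer_label` is a hypothetical stand-in for a trained SVM, NB or kNN classifier, and the fallback used when a tie cannot be narrowed is an assumption of this sketch, since the text does not specify one.

```python
from collections import Counter
from itertools import combinations


def hierarchical_ovo(sample, classes, pairwise_classify, max_stages=10):
    """Repeat one-vs.-one majority voting among tied classes until one class wins."""
    candidates = list(classes)
    for _ in range(max_stages):
        votes = Counter(
            pairwise_classify(sample, a, b) for a, b in combinations(candidates, 2)
        )
        top = max(votes.values())
        tied = [c for c in candidates if votes[c] == top]
        if len(tied) == 1:
            return tied[0]
        if len(tied) == len(candidates):
            break  # the tie cannot be narrowed any further
        candidates = tied  # next stage: k(k-1)/2 classifiers among the k tied classes
    return candidates[0]  # fallback choice, an assumption of this sketch


def n_ovo_classifiers(n):
    """Number of pairwise classifiers for n classes: n(n-1)/2."""
    return n * (n - 1) // 2


# Hypothetical pairwise rule standing in for a trained classifier:
# pick whichever class label is numerically closer to the sample.
def closer_label(sample, a, b):
    return a if abs(sample - a) <= abs(sample - b) else b


predicted = hierarchical_ovo(3.2, [1, 2, 3, 4, 5, 6], closer_label)
```

For the two, four and six class experiments the first stage would need 1, 6 and 15 pairwise classifiers respectively.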

The SVM used in this work has been tuned with a cost value of 100 and a margin of 1, both determined experimentally. The NB classifier is used with the assumption that the features have a normal distribution whose mean and covariance are learned during training. For kNN, the distance metric and the value of ‘k’ are experimentally selected to be the city block distance and k = 5 on the basis of best performance.
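As an illustration of the kNN configuration just described (city block distance, k = 5), a minimal pure-Python classifier is sketched below. The two-dimensional toy features and the function name are made up for the example and are not EEG features from this study.

```python
from collections import Counter


def knn_predict(train_features, train_labels, query, k=5):
    """Classify `query` by majority vote among its k nearest training points."""
    def city_block(u, v):
        # City block (Manhattan, L1) distance.
        return sum(abs(a - b) for a, b in zip(u, v))

    ranked = sorted(
        zip(train_features, train_labels), key=lambda pair: city_block(pair[0], query)
    )
    nearest_labels = [label for _, label in ranked[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Toy 2-D features for two classes (made-up values).
features = [[0.0, 0.1], [0.1, 0.0], [0.2, 0.2], [0.9, 1.0], [1.0, 0.9], [0.8, 0.8]]
labels = ["+", "+", "+", "-", "-", "-"]
label = knn_predict(features, labels, [0.15, 0.1], k=5)
```

With k = 5 the three nearby ‘+’ points outvote the two ‘−’ points that complete the neighborhood.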

Experiments were conducted in two phases, i.e. for training the classifiers and online classifications using the pre-trained classifiers. Experiments were performed to recognize only numbers, only alphabets, only symbols as well as combinations of alphabets, numbers, and symbols.

EEG acquisition and processing

Data acquisition

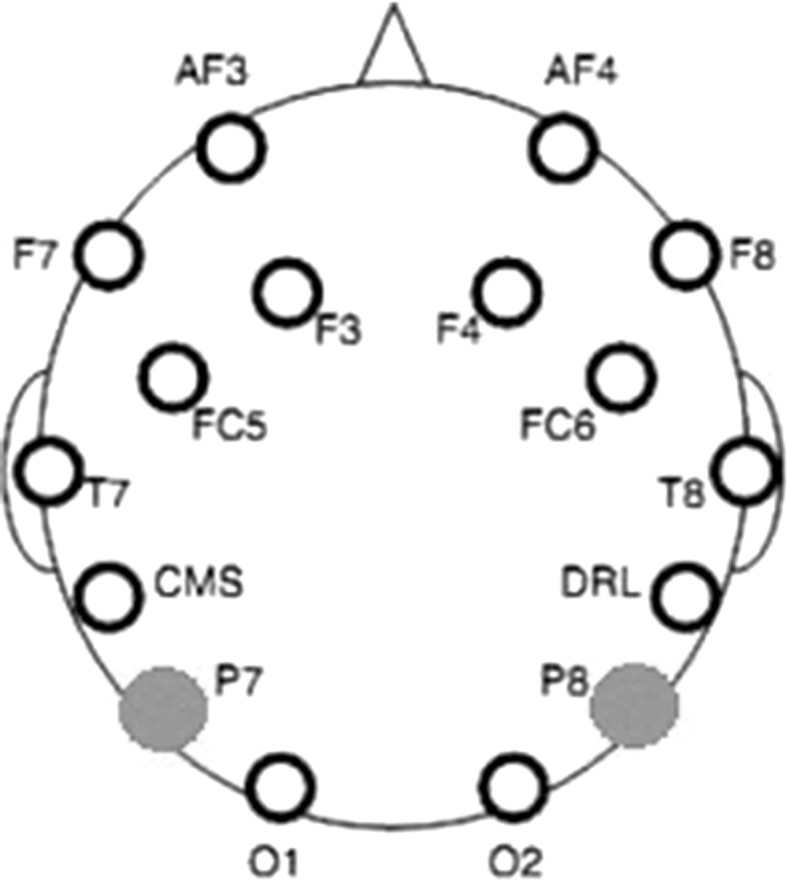

The electrode placement (P7 and P8), according to the International 10–20 system (Dornhege 2007; Teplan 2002), is shown in Fig. 3, where the selected electrodes are marked in green. EEG is acquired using a 14-channel Emotiv headset (www.emotiv.com/eeg) at a sampling rate of 128 Hz.

Fig. 3.

Electrode placement

Material preparation and subjects

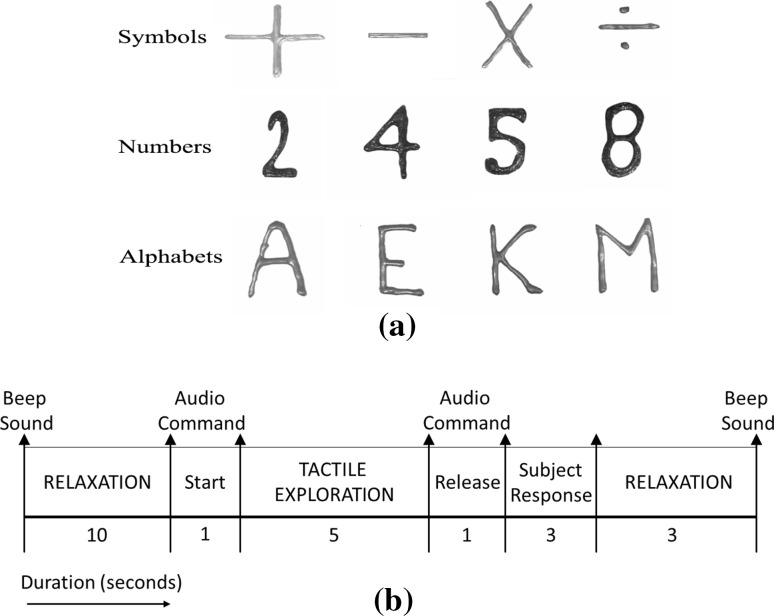

In order to recognize texts from touch without visual observation, plain surfaces were embossed with hard acrylic paint that produces texts with heights of about 1 mm. Since this is a preliminary study to classify texts from tactually evoked EEG signals, texts were randomly selected to test the proposition that brain waves can be decoded to identify embossed texts during tactile exploration. At first only two classes of texts were considered for classification, followed by four and six text classes, so as to validate the feasibility of 3-D text recognition from EEG signals even as complexity increases with a larger number of classes. The study is to be extended in future to all the alphabets/numbers, based on the present results. 3-D texts representing four numbers, namely 2, 4, 5, and 8, four uppercase alphabets, namely A, E, K and M, and four symbols, namely + (plus), − (minus), × (multiplication) and ÷ (division), were selected randomly from the set of all numbers (0–9), the 26 alphabets, and various mathematical symbols (Fig. 4a).

Fig. 4.

a Embossed texts for experiments, b tactile stimulus presentation for offline EEG acquisition

Both online and offline experiments were conducted on 15 subjects, 7 male and 8 female (25 ± 5 years). The subjects were blindfolded and instructed to explore the embossed surfaces containing the 3-D texts in accordance with an audio cue to time the sequential grasping and release of each text. All ethical issues relating to human subject experiments were considered. The subjects voluntarily participated in the experiments, after signing consent forms. The subjects’ identities were not disclosed and they were provided with refreshments after their participation. The institutional ethical committee was informed and its agreement obtained regarding the experimental objective and procedure. All data acquisition procedures were non-invasive and all safety norms were followed for the protection of the subjects. The Helsinki Declaration of 1975, as revised in 2000 (Carlson et al. 2004), was followed for dealing with experiments on human subjects.

Offline procedure

In each set of experiments, during classifier training, EEG is acquired on presenting an audio stimulus (Fig. 4b) to the blindfolded subjects for tactual exploration, starting with a beep sound followed by a 10 s rest period. An audio cue then directs the subject to start tactual exploration for 5 s, during which the 3-D text is presented in front of him/her to explore. During this period, EEG signals are acquired for analysis. Hence each trial comprises 5 × 128 (= 640) samples of data. At the end of these 5 s, another audio cue instructs the subject to stop the exploration, which is followed by assessment of the subject’s verbal response to evaluate his/her understanding of the stimulus and another relaxation period of 5 s. If the verbal response is incorrect, that particular text is presented again. The 3-D text objects in the stimuli are arranged in a random order. The subjects have no prior knowledge of which text is going to be provided to them during the experiments.

The offline classifiers are trained to recognize numbers, alphabets, symbols, or combinations of these using EEG data taken over a period of 5 days per experiment per subject, to include the possible variations in EEG signals over different days. For each subject and each experiment, 10 instances of EEG per day, each of 5 s duration, are acquired for each class by repeating the audio stimulus; taken over 5 days, this gives the 50 instances of each class used for the offline phase. Experiment I assesses the performance of binary classification to separately recognize symbols, alphabets or numbers. Experiments are also conducted to observe whether multiple classes of symbols, numbers, and alphabets can be classified separately as well as in combinations, in Exp. II and Exp. III.

Experiment I: Two classes

mathematical symbols ‘+’ and ‘−’

numbers ‘2’ and ‘4’

alphabets ‘A’ and ‘M’

Experiment II: Four classes

mathematical symbols ‘+’, ‘−’, ‘×’ and ‘÷’

numbers ‘2’, ‘4’, ‘5’ and ‘8’

alphabets ‘A’, ‘E’, ‘K’ and ‘M’

Experiment III: Six classes

Two symbols, two numbers and two alphabets, ‘+’, ‘−’, ‘5’, ‘8’, ‘A’ and ‘E’

During offline classification, the feature space is fivefold cross-validated to create test and train instances from the 50 instances of each class, i.e. in each case the data is first divided into 5 disjoint partitions, and four (= 5 − 1) folds are used for training while the remaining fold is used for evaluation. This process is repeated 5 times, with different partitions generated randomly each time.
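One pass of the fivefold partitioning can be sketched as below. The function name and the use of a seeded shuffle are assumptions for illustration; calling it with different seeds would give the differently randomized partitions mentioned above.

```python
import random


def five_fold_splits(n_instances=50, n_folds=5, seed=0):
    """Yield (train_indices, test_indices) for each fold of a random partition."""
    indices = list(range(n_instances))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::n_folds] for i in range(n_folds)]  # disjoint partitions
    for held_out in range(n_folds):
        test = folds[held_out]
        train = [i for f, fold in enumerate(folds) if f != held_out for i in fold]
        yield train, test


splits = list(five_fold_splits())
```

With 50 instances per class, every fold trains on 40 instances and evaluates on the remaining 10.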

Online classification and communication

Each of experiments I, II and III, performed offline, is also carried out online, using the pre-trained classifiers independently. The test samples are provided to the blindfolded subjects in a random stream, without the subjects knowing about them or their order. Online EEG acquisition for each test text instance is done for 5 s. In the next 5 s, the subject’s response is noted (this takes a maximum of 2 s) and the acquired EEG signal is processed and classified, with the resulting class displayed on the computer screen, while the subject relaxes. The offline classification results (Table 1) confirm that the computation time for processing and classification of EEG of 5 s duration is less than 5 s in all experiments. Hence the loop comprising 5 s of EEG acquisition and 5 s of parallel relaxation and processing is not disturbed. In case a sample is incorrectly interpreted, as evaluated from the subject’s response, the same sample is presented again. EEG recordings of incorrect responses have not been used for classifier training in this work. The loop is repeated for a total of 100 test instances in each experiment. The displayed result in each iteration is matched with the actual class of the text, known to the experiment operator. From the number of correct hits, the correct rate (CR) is evaluated for each class of text, and the average computation time per test sample (T) is also noted.

Table 1.

Results of offline experiments

Experiment I

| Mᵃ | Symbols ‘+’ | Symbols ‘−’ | Numbers ‘2’ | Numbers ‘4’ | Alphabets ‘A’ | Alphabets ‘M’ |
|---|---|---|---|---|---|---|
| CAᵇ | 0.8245 (0.0435) | 0.8435 (0.0476) | 0.8329 (0.0245) | 0.8145 (0.0306) | 0.8180 (0.0540) | 0.7805 (0.0462) |
| CTᶜ (s) | 0.4119 (0.0030) | | 0.4714 (0.0025) | | 0.4396 (0.0015) | |
| pᵈ | 0.9880 (0.0110) | 0.9920 (0.0110) | 0.9860 (0.0167) | 0.9940 (0.0110) | 0.9880 (0.0415) | 0.9860 (0.0261) |

Experiment II

| M | Symbols ‘+’ | Symbols ‘−’ | Symbols ‘×’ | Symbols ‘÷’ | Numbers ‘2’ | Numbers ‘4’ | Numbers ‘5’ | Numbers ‘8’ | Alphabets ‘A’ | Alphabets ‘E’ | Alphabets ‘K’ | Alphabets ‘M’ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CA | 0.8220 (0.0560) | 0.8017 (0.0328) | 0.7570 (0.0776) | 0.7633 (0.0544) | 0.7867 (0.06065) | 0.7650 (0.0902) | 0.8073 (0.0450) | 0.7870 (0.0438) | 0.7625 (0.0635) | 0.8050 (0.0854) | 0.7558 (0.0460) | 0.7750 (0.0674) |
| CT (s) | 0.8758 (0.0012) | | | | 0.8379 (0.0056) | | | | 0.7384 (0.0034) | | | |
| p | 0.9860 (0.0300) | 0.9820 (0.0261) | 0.9820 (0.0120) | 0.9680 (0.0250) | 0.9750 (0.0112) | 0.9750 (0.0140) | 0.9880 (0.0261) | 0.9910 (0.0110) | 0.9820 (0.0240) | 0.9920 (0.0110) | 0.9650 (0.0120) | 0.9810 (0.0415) |

Experiment III (symbols + numbers + alphabets)

| M | ‘+’ | ‘−’ | ‘5’ | ‘8’ | ‘A’ | ‘E’ |
|---|---|---|---|---|---|---|
| CA | 0.7870 (0.0350) | 0.8018 (0.0566) | 0.7672 (0.0880) | 0.7780 (0.0672) | 0.7520 (0.0476) | 0.7572 (0.0635) |
| CT (s) | 0.9485 (0.0052) | | | | | |
| p | 0.9880 (0.0415) | 0.9780 (0.0200) | 0.9760 (0.0261) | 0.9760 (0.0300) | 0.9780 (0.011) | 0.9740 (0.0261) |

ᵃ Metric

ᵇ Classification accuracy

ᶜ Computation time in seconds, reported once per group of texts classified together

ᵈ Ratio of correct to total number of verbal responses

Performance evaluation

Classification accuracy/correct rate and computation time

For offline classifications, the EEG classification accuracy (CA) of each class $C_i$ is computed from the confusion matrix (Dornhege 2007). The computation time (CT) for each experiment is the total EEG processing and classification time.

During each of the online experiments, at the end of the presentation of all 100 test samples, the number of correctly classified samples ($C_i^{correct}$) is counted for each class $C_i$ and, using the total number of samples presented for that class ($C_i^{total}$), the correct rate (CR) of that class for EEG classification is computed using (7). The average processing and classification time (T) per test sample per class is also noted.

$$CR_i = \frac{C_i^{correct}}{C_i^{total}} \tag{7}$$

Information transfer rate

The information transfer rate (ITR) (Yuan et al. 2013) during online experiments is also noted. ITR measures the performance of EEG classification in the online condition and is calculated by (8), where B represents ITR in bits/sample, N represents the number of choices per sample (possible outcome classes) and P represents the probability of correct classification, lying in the range from 0 to 1. The evaluation of ITR assumes that all possible outcomes of classification convey the same amount of information. In our case, the value of CR over all classes is a measure of the number of samples correctly classified out of the total number of samples and is used to represent P in (8). ITR can also be represented in bits/s according to (9), where T denotes the processing and classification time per sample in seconds.

$$B = \log_2 N + P\log_2 P + (1-P)\log_2\frac{1-P}{N-1} \tag{8}$$

$$B_t = \frac{B}{T} \tag{9}$$
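The correct rate and the two ITR expressions referred to as (7) to (9) can be evaluated as in the sketch below, which uses the standard Wolpaw form of ITR. The function names and the input values are illustrative assumptions and are not taken from the result tables.

```python
import math


def correct_rate(n_correct, n_total):
    """Fraction of presented samples of a class that were classified correctly."""
    return n_correct / n_total


def itr_bits_per_sample(n_classes, p):
    """Information transfer per sample for accuracy p, with 0 <= p <= 1."""
    bits = math.log2(n_classes)
    if 0.0 < p < 1.0:
        bits += p * math.log2(p) + (1 - p) * math.log2((1 - p) / (n_classes - 1))
    elif p == 0.0:
        bits += math.log2(1 / (n_classes - 1))  # limit of the formula as p -> 0
    return bits


def itr_bits_per_second(n_classes, p, seconds_per_sample):
    """ITR in bits/s: bits per sample divided by the time T per sample."""
    return itr_bits_per_sample(n_classes, p) / seconds_per_sample


# Illustrative inputs only (not values taken from the tables).
cr = correct_rate(18, 25)              # 0.72
b = itr_bits_per_sample(4, cr)         # bits per sample
b_t = itr_bits_per_second(4, cr, 1.0)  # bits per second with T = 1 s
```

At chance level (for example P = 0.5 with two classes) the formula gives zero bits, and at P = 1 it gives the full log2 N bits per sample.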

Utility

While ITR is a measure of the classifier performance in a BCI system, the overall performance of the system cannot be estimated by it. The utility metric (Dal Seno et al. 2010; Thompson et al. 2013) takes the total system into consideration while evaluating the ‘amount of quantifiable benefits by the user when using a BCI system’. Utility is a more ‘user centric’ metric for BCI performance evaluation that takes into account not only the classifier performance but also the control interface. The parameters used to evaluate the utility metric in the present context are the accuracy of user perception p (as evaluated from the subject’s verbal response) and the average system time taken per sample $T_S$, which is equal to T (processing and classification time per sample) + $T_c$ (communication/result display time per sample). The total time per sample for evaluating the system’s overall performance, $T_L$, consists only of $T_S$ when the subject’s perception is correct; however, if the subject’s perception is incorrect, he/she is given the same sample again and hence $T_L$ then also includes the time for interpreting the sample again. Thus $T_L$ is given by (10), where $T_L^{(1)}$ is the time for interpreting the misinterpreted sample again.

$$T_L = p\,T_S + (1-p)\left(T_S + T_L^{(1)}\right) \tag{10}$$

Assuming no time dependency of the user’s response and a memoryless BCI system where each sample is classified independently of the others, $T_L^{(1)} = T_L$, and (10) can be rewritten as a series that converges to give $T_L = T_S/p$, as shown in (11).

$$T_L = T_S + (1-p)\,T_L = T_S\sum_{k=0}^{\infty}(1-p)^k = \frac{T_S}{p} \tag{11}$$

According to the definition, utility is computed as the average benefit $b_L$ per sample divided by the time $T_L$ required to achieve it at the system output. Assuming equal probability among all N classes, the conveyed information is $b_L = \log_2 N$ and the utility U is given by (12).

$$U = \frac{b_L}{T_L} = \frac{p\,\log_2 N}{T_S} \tag{12}$$
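The retry argument behind the utility metric can be checked numerically. The sketch below sums the series of repeated presentations, compares it with the closed form $T_S/p$, and evaluates the utility as benefit per unit time; the function names and the inputs (p = 0.98, $T_S$ = 1 s, N = 4) are illustrative assumptions, not values from the tables.

```python
import math


def expected_time_per_sample(t_s, p, n_terms=200):
    """Sum the retry series T_S * (1 - p)^k, which converges to T_S / p."""
    return sum(t_s * (1 - p) ** k for k in range(n_terms))


def utility(n_classes, p, t_s):
    """Utility in bits/s: benefit log2(N) per sample divided by T_L = T_S / p."""
    return math.log2(n_classes) * p / t_s


# Illustrative inputs only (not values taken from the tables).
t_l_series = expected_time_per_sample(t_s=1.0, p=0.98)
u = utility(n_classes=4, p=0.98, t_s=1.0)
```

Because each misperceived sample is simply presented again, a lower perception accuracy p lengthens the effective time per sample and lowers the utility proportionally.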

All computations are done using MATLAB R2012b on a Windows 7, 64-bit OS with an Intel Core i3 2.20 GHz processor.

Results and discussion

From the offline classification results it is found that the performance over the different classifiers is variable and it is not possible to identify a single best classifier on average over all these particular experiments. This result follows the well-known concept of ‘No Free Lunch’ (Ho 1999) in machine learning. All experiments are performed subject-wise, i.e. classifiers are trained and tested offline separately for each subject’s data, and then the particular pre-trained classifier for a subject is used during his/her online testing phase. The results of offline classification in terms of the metrics (M) of CA and CT, along with the ratio of the correct/total number of verbal responses (p), given as average and standard deviation (in parentheses) over 15 subjects, are tabulated in Table 1. These results are shown for the best-case classification (from the different classifiers used) on the datasets acquired over 5 days for each subject (50 instances of each class), with training and testing instances created through fivefold cross-validation.

The best results of online EEG classification over all the pre-trained classifiers are tabulated in Table 2, with the average values of the metrics (M) and their standard deviations (in parentheses) over all 15 subjects. In each experiment, sets of 2, 4, or 6 texts are supplied to the subject in random order, with a total of 100 text samples divided equally over the classes of the respective experiment. The correct rate (CR) is computed as the fraction of samples of a particular class that are classified correctly.

Table 2.

Results of online experiments

Experiment I

| Mᵃ | (1) Symbols ‘+’ | (1) Symbols ‘−’ | (2) Numbers ‘2’ | (2) Numbers ‘4’ | (3) Alphabets ‘A’ | (3) Alphabets ‘M’ |
|---|---|---|---|---|---|---|
| CRᵇ | 0.7750 (0.0672) | 0.7850 (0.0650) | 0.7760 (0.0854) | 0.7550 (0.0432) | 0.7652 (0.0560) | 0.7410 (0.0230) |
| Tᶜ (s) | 0.5576 (0.0040) | 0.5872 (0.0032) | 0.5659 (0.0072) | 0.5988 (0.0025) | 0.5160 (0.0065) | 0.5875 (0.0450) |

Experiment II

| M | (1) Symbols ‘+’ | (1) Symbols ‘−’ | (1) Symbols ‘×’ | (1) Symbols ‘÷’ | (2) Numbers ‘2’ | (2) Numbers ‘4’ | (2) Numbers ‘5’ | (2) Numbers ‘8’ | (3) Alphabets ‘A’ | (3) Alphabets ‘E’ | (3) Alphabets ‘K’ | (3) Alphabets ‘M’ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CR | 0.7060 (0.0305) | 0.7232 (0.0610) | 0.7465 (0.0750) | 0.7035 (0.0345) | 0.7022 (0.0260) | 0.7570 (0.0450) | 0.7610 (0.0632) | 0.7060 (0.0532) | 0.7435 (0.0260) | 0.6850 (0.0450) | 0.7225 (0.0250) | 0.7212 (0.0620) |
| T (s) | 0.9788 (0.0030) | 0.9664 (0.0050) | 0.9545 (0.0028) | 0.9971 (0.0052) | 1.5245 (0.0036) | 1.5574 (0.0078) | 1.5781 (0.0056) | 1.5654 (0.0036) | 1.5832 (0.0020) | 1.5543 (0.0042) | 1.5871 (0.0026) | 1.5882 (0.0038) |

Experiment III (symbols + numbers + alphabets)

| M | ‘+’ | ‘−’ | ‘5’ | ‘8’ | ‘A’ | ‘E’ |
|---|---|---|---|---|---|---|
| CR | 0.6875 (0.0532) | 0.7040 (0.0564) | 0.6575 (0.0750) | 0.6225 (0.0800) | 0.6812 (0.0612) | 0.7045 (0.0854) |
| T (s) | 1.9781 (0.0087) | 1.8991 (0.0102) | 1.9112 (0.0075) | 1.9891 (0.0060) | 1.9882 (0.0082) | 1.8978 (0.0072) |

ᵃ Metric

ᵇ Correct rate

ᶜ Computation time per sample in seconds
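The per-class correct rate reported in Table 2 can be sketched as follows. This is a minimal Python illustration, not the authors' code: the function name and the short label sequences are hypothetical, and CR is taken to be the fraction of presented samples of each class that the classifier labels correctly.

```python
from collections import Counter


def correct_rate_per_class(true_labels, predicted_labels):
    """Return {class: fraction of that class's samples classified correctly}."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    totals = Counter(true_labels)
    # Count only the samples whose predicted label matches the true label.
    correct = Counter(
        true for true, pred in zip(true_labels, predicted_labels) if true == pred
    )
    return {label: correct[label] / totals[label] for label in totals}


# Hypothetical two-class run with four presentations of each symbol.
true_labels = ["+", "+", "+", "+", "-", "-", "-", "-"]
predicted = ["+", "+", "+", "-", "-", "-", "+", "+"]
rates = correct_rate_per_class(true_labels, predicted)
print(rates)  # '+' is correct in 3 of 4 samples, '-' in 2 of 4
```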

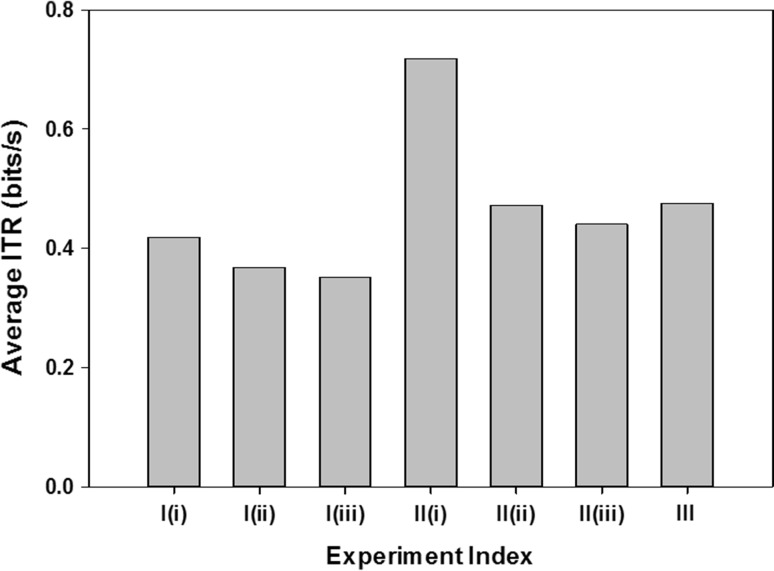

Table 3 reports the mean ITR over all classes (in bits/sample as well as bits/s), along with the correctness of the participants’ verbal responses (p) and the corresponding computed utility (U), as average and standard deviation (in parentheses) over all subjects.

Table 3.

Online performance metrics

| Experiment | ITRᵃ (bits/sample) | ITR (bits/s) | pᵇ | Uᶜ (bits/s) |
|---|---|---|---|---|
| I (1) | 0.2398 (0.0055) | 0.4190 (0.0078) | 1 (0.0000) | 1.7469 (0.0455) |
| I (2) | 0.2142 (0.0082) | 0.3679 (0.0091) | 1 (0.0000) | 1.7170 (0.0302) |
| I (3) | 0.1937 (0.0063) | 0.3510 (0.0032) | 1 (0.0000) | 1.8123 (0.0142) |
| II (1) | 0.7002 (0.0044) | 0.7187 (0.0106) | 1 (0.0000) | 2.0529 (0.0635) |
| II (2) | 0.7353 (0.0068) | 0.4724 (0.0112) | 0.9333 (0.0258) | 1.1993 (0.0338) |
| II (3) | 0.6950 (0.0037) | 0.4404 (0.0075) | 1 (0.0000) | 1.2672 (0.0422) |
| III | 0.9247 (0.0042) | 0.4757 (0.0302) | 0.8667 (0.0352) | 1.1525 (0.0458) |
| Mean | 0.5290 | 0.4636 | 0.9714 | 1.5640 |

ᵃ Information transfer rate

ᵇ Ratio of correct to total number of verbal responses

ᶜ Utility
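The two ITR columns of Table 3 are related through the time per sample. The sketch below assumes the commonly used Wolpaw definition of ITR, $B = \log_2 N + P\log_2 P + (1-P)\log_2\frac{1-P}{N-1}$ bits/sample. That definition is not restated in this section, so treat it as an assumption, and the function names are illustrative. With N = 2 and P = 0.78 (the mean correct rate for the two symbols) it gives about 0.24 bits/sample, in line with row I (1).

```python
import math


def itr_bits_per_sample(n_classes: int, accuracy: float) -> float:
    """Wolpaw ITR in bits/sample for N equiprobable classes and accuracy P."""
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    bits = math.log2(n_classes)
    # Each x * log2(x) term tends to 0 as x tends to 0, so skip it at the boundary.
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_classes - 1))
    return bits


def itr_bits_per_second(n_classes: int, accuracy: float, seconds_per_sample: float) -> float:
    """Convert bits/sample to bits/s by dividing by the time spent per sample."""
    if seconds_per_sample <= 0.0:
        raise ValueError("seconds_per_sample must be positive")
    return itr_bits_per_sample(n_classes, accuracy) / seconds_per_sample


# Two-class example: mean CR of 0.78 and roughly 0.5724 s per sample.
print(f"{itr_bits_per_sample(2, 0.78):.4f} bits/sample")
print(f"{itr_bits_per_second(2, 0.78, 0.5724):.4f} bits/s")
```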

The variation in average ITR over all classes, averaged over all subjects, is shown as a bar graph in Fig. 5.

Fig. 5.

Average ITR values over all subjects for the different experiments

For further analysis of the observed features, a one-way ANOVA test (Taghizadeh-Sarabi et al. 2015) has been applied to the obtained feature set. Table 4 shows the corresponding p values for all three experiments.

Table 4.

One way ANOVA test

| Features | Experiment | P value |
|---|---|---|
| AAR | I | <0.02 |
| AAR | II | <0.25 |
| AAR | III | <0.04 |
| PSD | I | <0.01 |
| PSD | II | <0.01 |
| PSD | III | <0.02 |
| HE | I | <0.03 |
| HE | II | <0.03 |
| HE | III | <0.05 |

It can be observed from Table 4 that the PSD features have the lowest p values compared to AAR and HE in all three experiments. These low p values justify the choice of these three features, which yielded such high classification accuracy in this work.
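For readers unfamiliar with the test, the F statistic behind a one-way ANOVA such as the one summarized in Table 4 can be sketched in a few lines of dependency-free Python. The function name and the feature values are hypothetical and are not data from this study. The p value is then read from the F distribution with the returned degrees of freedom, for instance via `scipy.stats.f_oneway`, which is not used here so that the sketch runs without extra packages.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over the given groups."""
    k = len(groups)
    if k < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    n_total = sum(len(g) for g in groups)
    if n_total <= k:
        raise ValueError("need more observations than groups")

    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of the observations around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical values of one feature for three classes of embossed text.
feature_by_class = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_stat, df_b, df_w = one_way_anova_f(feature_by_class)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F value means that the feature differs more between classes than within them, which is what a low p value in Table 4 reflects.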

As observed from Table 1, the best performance in two-class classification is achieved for 2 symbols, with a maximum average accuracy of 83.40%. Classification of 2 classes of alphabets or numbers performs comparably well, the CAs being greater than 78% in all cases. Text recognition performance deteriorates slightly as the number of classes increases, though the minimum individual accuracy does not fall below 75% in any case. Four numbers are classified with an average classification accuracy of 78.65%, while four symbols and four alphabets achieve maximum average accuracies of 78.60 and 77.45% respectively. Six texts, a combination of 2 symbols, 2 numbers, and 2 alphabets, are classified with an average accuracy of 77.38%. Throughout the experiments, the maximum CT is below 1 s. From the results of offline EEG classification, it can be concluded that tactually evoked EEG can indeed be classified to recognize embossed symbols, numbers, or alphabets with above 75% accuracy. The values of p indicate that the subjects’ perceptual understanding of the tactile stimuli is high. During the offline phase, the classifiers are trained and tested on separate sets of instances; these results therefore show the CA during testing in offline classification, supporting the use of this approach in an online environment with pre-trained classifiers.

From Table 2 it is observed that during online classification, the average CR is highest when recognizing only two classes of texts, the correct rates being greater than 70% in all cases and reaching a maximum average of 78% for recognizing two symbols. For four classes of texts, the highest CR of 73.15% is obtained for four numbers. The average recognition rate for six classes of texts is 67.62%, with a considerable increase in time T, which is expected given the hierarchical one-vs.-one (OVO) classification process. In the online experiments, the minimum individual CR is not below 60% and the maximum T is less than 2 s, thereby validating the proposed hypothesis.

All sub-experiments under experiment I deal with 2 classes, those under experiment II with 4 classes, and experiment III with 6 classes. Fig. 5 therefore also shows how ITR varies with the number of classes, and the performance is found to be sustained for larger numbers of classes. A higher ITR value for more classes indicates that CR does not degrade significantly as the number of classes increases.

From Table 3 it is observed that the maximum value of U reaches 2.0529 bits/s. The mean ITR and mean U over all sub-experiments and subjects are found to be 0.4636 and 1.5640 bits/s respectively.

In both phases of the experiment, before the actual experiments start, the subjects are given a blank page with nothing embossed to explore, and EEG signals are acquired and analyzed. The subjects are then provided with the embossed texts and asked to explore them tactually, and EEG signals are again acquired and analyzed during that period. It is found that EEG signals for motor movements alone lie in the 8–32 Hz band. When tactually evoked, however, the ERD/ERS patterns and their durations are different, and the band is 4–15 Hz. Thus, the motor-related artifacts are found to be higher-frequency components, which are eliminated to a large extent by band-pass filtering in the pre-processing stage. However, the human brain is multisensory, and the tactile system is intricately linked to the motor and even the auditory system, making purely tactile brain responses exceptionally unlikely to obtain (Ro et al. 2012).

Similar work on tactile classification using EEG signals has been reported in the literature. In Hori and Okada (2017), tactile sensation of different patterns has been classified, yielding 85 and 60% classification accuracy for 2- and 4-class classification problems. Tactile stimuli provided by four pressure directions using a joystick were used in Kono et al. (2013) to classify EEG signals online with 82.1% accuracy. The location of a tactile stimulus can also be classified from EEG signals, with a high offline classification accuracy of 96.76% (Wang et al. 2015). In this work, a different approach to EEG-based tactile classification has been presented. Instead of simple pattern stimuli, different alphabets, numbers and symbols have been classified both offline and online. As shown in Tables 1 and 2, the highest average classification accuracies of 81.90% for Exp-I, 78.24% for Exp-II and 77.39% for Exp-III are obtained for offline classification. These results are comparable with those of other EEG-based tactile classification works. Even for online classification, the accuracy is above 60%. Thus, even with 2, 4 and 6 classes, a high classification accuracy is obtained for both offline and online classification.

Conclusion and future directions

The present work efficiently recognizes 3-D texts during tactile exploration by EEG signal analysis. The results of the experiments have been validated over 15 healthy blindfolded subjects. Extracted EEG features are classified independently by three different classifiers in a hierarchical one-vs.-one approach, and online analysis has been performed with the pre-trained classifiers, showing promising results in each case. Experiments have been performed to recognize up to six classes of texts, separately for each of symbols, numbers, and alphabets as well as in combination. As the number of classes of texts increases, the recognition rate falls slightly and the computation time increases considerably, but remains below 2 s. The results thus show that 3-D texts can be efficiently classified from tactually evoked EEG signals. The classification accuracy decreases as the number of classes to recognize increases, which is expected because the mental load increases with the number of classes. Even then, the classification accuracy is never less than 60% in online analysis, with a maximum ITR and utility of 0.7187 and 2.0529 bits/s respectively. Thus, the presented technique can be further improved in future to include all the numbers, alphabets and more symbols, which will lead to the development of a BCI-based communication system using tactile channel stimulation.

In this work, we have studied the feasibility of online tactually evoked EEG classification to recognize up to six classes of 3D texts in a laboratory environment. This work will be extended to the recognition of all ten digits as well as the twenty-six alphabets from tactually evoked EEG analysis. The future scope of work comprises using better recognition algorithms to incorporate online exploration of words and groups of words based on the proposed methods. Several other classification algorithms will be explored. Recent cognitive studies use neural network models (Oyedotun and Khashman 2017; Yamada and Kashimori 2013; Mizraji and Lin 2017), which show better performance. Therefore, neural networks will be implemented in our future work. We also intend to study the performance of EEG classification in a multitasking environment in which the subjects are asked to perform other cognitive tasks while tactually exploring 3D texts. This study is intended to provide a BCI-based alternative to existing techniques such as Braille that will provide control and communication capabilities to paralyzed and visually impaired patients by virtue of tactile exploration alone. Another interesting avenue is the study of EEG patterns of Braille reading in visually challenged and normally sighted individuals.

Acknowledgements

This study has been supported by University Grants Commission, India, University of Potential Excellence Program (UGC-UPE) (Phase II) in Cognitive Science, Jadavpur University and Council of Scientific and Industrial Research (CSIR), India.

Contributor Information

A. Khasnobish, Email: anweshakhasno@gmail.com

S. Datta, Email: shreyasidatta@gmail.com

R. Bose, Email: rohitbose94@gmail.com

D. N. Tibarewala, Email: biomed.ju@gmail.com

A. Konar, Email: konaramit@yahoo.co.in

References

- Acharya UR, Faust O, Kannathal N, Chua T, Laxminarayan S. Non-linear analysis of EEG signals at various sleep stages. Comput Methods Programs Biomed. 2005;80(1):37–45. doi: 10.1016/j.cmpb.2005.06.011.

- Alkan A, Yilmaz AS. Frequency domain analysis of power system transients using Welch and Yule–Walker AR methods. Energy Convers Manag. 2007;48(7):2129–2135. doi: 10.1016/j.enconman.2006.12.017.

- Aloise F, Schettini F, Aricò P, Bianchi L, Riccio A, Mecella M, Cincotti F (2010) Advanced brain computer interface for communication and control. In Proceedings of the International Conference on Advanced Visual Interfaces. ACM, pp 399–400

- Amedi A, Kriegstein KV, Atteveldt NMV, Beauchamp MS, Naumer MJ. Functional imaging of human crossmodal identification and object recognition. Exp Brain Res. 2005;166(3–4):559–571. doi: 10.1007/s00221-005-2396-5.

- Beisteiner R, Windischberger C, Geißler A, Gartus A, Uhl F, Moser E, Deecke L, Lanzenberger R. FMRI correlates of different components of Braille reading by the blind. Neurol Psychiatry Brain Res. 2015;21(4):137–145. doi: 10.1016/j.npbr.2015.10.002.

- Bhaduri S, Khasnobish A, Bose R, Tibarewala DN (2016, March) Classification of lower limb motor imagery using K nearest neighbor and Naïve-Bayesian classifier. In: 2016 3rd international conference on recent advances in information technology (RAIT). IEEE, pp 499–504

- Carlson RV, Boyd KM, Webb DJ. The revision of the Declaration of Helsinki: past, present and future. Br J Clin Pharmacol. 2004;57(6):695–713. doi: 10.1111/j.1365-2125.2004.02103.x.

- Chan A, Early CE, Subedi S, Li Y, Lin H (2015, November) Systematic analysis of machine learning algorithms on EEG data for brain state intelligence. In: 2015 IEEE international conference on bioinformatics and biomedicine (BIBM). IEEE, pp 793–799

- Cona F, Zavaglia M, Astolfi L, Babiloni F, Ursino M. Changes in EEG power spectral density and cortical connectivity in healthy and tetraplegic patients during a motor imagery task. Comput Intell Neurosci. 2009 doi: 10.1155/2009/279515.

- Curran EA, Stokes MJ. Learning to control brain activity: a review of the production and control of EEG components for driving brain–computer interface (BCI) systems. Brain Cogn. 2003;51(3):326–336. doi: 10.1016/S0278-2626(03)00036-8.

- Dal Seno B, Matteucci M, Mainardi LT. The utility metric: a novel method to assess the overall performance of discrete brain–computer interfaces. IEEE Trans Neural Syst Rehabil Eng. 2010;18(1):20–28. doi: 10.1109/TNSRE.2009.2032642.

- Datta S, Saha A, Konar A (2013) Perceptual basis of texture classification from tactile stimulus by EEG analysis. Proc Nat Conf Brain Consci, pp 38–45

- Datta S, Khasnobish A, Konar A, Tibarewala DN (2015) Cognitive activity classification from EEG signals with an interval type-2 fuzzy system. In Advancements of Medical Electronics. Springer, New Delhi, pp 235–247

- Dornhege G. Towards brain–computer interfacing. Cambridge: MIT Press; 2007.

- Fabiani GE, McFarland DJ, Wolpaw JR, Pfurtscheller G. Conversion of EEG activity into cursor movement by a brain–computer interface (BCI) IEEE Trans Neural Syst Rehabil Eng. 2004;12(3):331–338. doi: 10.1109/TNSRE.2004.834627.

- Gohel B, Lee P, Jeong Y. Modality-specific spectral dynamics in response to visual and tactile sequential shape information processing tasks: an MEG study using multivariate pattern classification analysis. Brain Res. 2016;1644:39–52. doi: 10.1016/j.brainres.2016.04.068.

- Grunwald M. Human haptic perception. Berlin: Verlag; 2008.

- Grunwald M, et al. Theta power in the EEG of humans during ongoing processing in a haptic object recognition task. J Cogn Brain Res. 2001;11:33–37. doi: 10.1016/S0926-6410(00)00061-6.

- Gschwind M, Van De Ville D, Hardmeier M, Fuhr P, Michel C, Seeck M. ID 249—corrupted fractal organization of EEG topographical fluctuations predict disease state in minimally disabled multiple sclerosis patients. Clin Neurophysiol. 2016;127(3):e72. doi: 10.1016/j.clinph.2015.11.241.

- Ho YC. The no free lunch theorem and the human-machine interface. IEEE Control Syst. 1999;19(3):8–10. doi: 10.1109/37.768535.

- Hori J, Okada N. Classification of tactile event-related potential elicited by Braille display for brain–computer interface. Biocybern Biomed Eng. 2017;37(1):135–142. doi: 10.1016/j.bbe.2016.10.007.

- James TW, Kim S, Fisher JS. The neural basis of haptic object processing. Can J Exp Psychol Rev. 2007;61(3):219. doi: 10.1037/cjep2007023.

- Jiménez J, Olea J, Torres J, Alonso I, Harder D, Fischer K. Biography of Louis Braille and invention of the braille alphabet. Surv Ophthal. 2009;54(1):142–149. doi: 10.1016/j.survophthal.2008.10.006.

- Kanounikov IE, Antonova EV, Kiselev BV, Belov DR. Dependence of one of the fractal characteristics (Hurst exponent) of the human electroencephalogram on the cortical area and type of activity. Proc IEEE Int Jt Conf Neural Netw IJCNN. 1999;1:243–246.

- Khasnobish A, Konar A, Tibarewala DN, Bhattacharyya S, Janarthanan R (2013) Object shape recognition from EEG signals during tactile and visual exploration. In: Maji P et al (eds) Pat Recog Mach Intell. Springer, Berlin 8251: 459–464

- Kodama T, Makino S, Rutkowski TM (2016, December) Tactile brain-computer interface using classification of P300 responses evoked by full body spatial vibrotactile stimuli. In: Signal and information processing association annual summit and conference (APSIPA), 2016 Asia-Pacific. IEEE, pp 1–8

- Kono S, Aminaka D, Makino S, Rutkowski TM (2013, December) EEG signal processing and classification for the novel tactile-force brain-computer interface paradigm. In: 2013 international conference on signal-image technology & internet-based systems (SITIS). IEEE, pp 812–817

- Leung KM (2007) Naive Bayesian classifier, technical report, Department of Computer Science/Finance and Risk Engineering, Polytechnic University, Brooklyn, New York, USA

- Martinovic J, Lawson R, Craddock M. Time course of information processing in visual and haptic object classification. Front Hum Neurosci. 2012 doi: 10.3389/fnhum.2012.00049.

- McCane LM, Heckman SM, McFarland DJ, Townsend G, Mak JN, Sellers EW, et al. P300-based brain-computer interface (BCI) event-related potentials (ERPs): people with amyotrophic lateral sclerosis (ALS) vs. age-matched controls. Clin Neurophysiol. 2015;126(11):2124–2131. doi: 10.1016/j.clinph.2015.01.013.

- Mitchell TM. Machine learning. New York City: McGraw Hill; 1997.

- Mizraji E, Lin J. The feeling of understanding: an exploration with neural models. Cogn Neurodyn. 2017;11(2):135–146. doi: 10.1007/s11571-016-9414-0.

- Nai-Jen H, Palaniappan R (2004) Classification of mental tasks using fixed and adaptive autoregressive models of EEG signals. In: Proceedings of 26th annual international conference of the IEEE engineering in medicine and biology society, vol 1, pp 507–510

- Neuper C, Scherer R, Reiner M, Pfurtscheller G. Imagery of motor actions: differential effects of kinesthetic and visual–motor mode of imagery in single-trial EEG. Cogn Brain Res. 2005;25(3):668–677. doi: 10.1016/j.cogbrainres.2005.08.014.

- Oyedotun OK, Khashman A. Banknote recognition: investigating processing and cognition framework using competitive neural network. Cogn Neurodyn. 2017;11(1):67–79. doi: 10.1007/s11571-016-9404-2.

- Page A, Sagedy C, Smith E, Attaran N, Oates T, Mohsenin T. A flexible multichannel EEG feature extractor and classifier for seizure detection. IEEE Trans Circuits Syst II Express Briefs. 2015;62(2):109–113. doi: 10.1109/TCSII.2014.2385211.

- Pal M, Khasnobish A, Konar A, Tibarewala DN, Janarthanan R (2014, January) Performance enhancement of object shape classification by coupling tactile sensing with EEG. In: 2014 international conference on electronics, communication and instrumentation (ICECI). IEEE, pp 1–4

- Paul TK, Hasegawa Y, Iba H (2006) Classification of gene expression data by majority voting genetic programming classifier. In: Proceedings of IEEE congress on evolutionary computation, pp 2521–2528

- Reed CL, Shoham S, Halgren E. Neural substrates of tactile object recognition: an fMRI study. Hum Brain Mapp. 2004;21(4):236–246. doi: 10.1002/hbm.10162.

- Ro T, Ellmore TM, Beauchamp MS. A neural link between feeling and hearing. Cereb Cortex. 2012;23(7):1724–1730. doi: 10.1093/cercor/bhs166.

- Sanei S, Chambers JA. EEG signal processing. Hoboken: Wiley; 2008.

- Schlögl A. The electroencephalogram and the adaptive autoregressive model: theory and applications. Germany: Shaker; 2000.

- Schlögl A, Lugger K, Pfurtscheller G (1997) Using adaptive autoregressive parameters for a brain–computer-interface experiment. In: Proceedings of IEEE 19th annual international conference engineering in medicine and biology society vol 4, pp 1533–1535

- Taghizadeh-Sarabi M, Daliri MR, Niksirat KS. Decoding objects of basic categories from electroencephalographic signals using wavelet transform and support vector machines. Brain Topogr. 2015;28(1):33–46. doi: 10.1007/s10548-014-0371-9.

- Teplan M. Fundamentals of EEG measurement. Meas Sci Rev. 2002;2(2):1–11.

- Thompson DE, Blain-Moraes S, Huggins JE. Performance assessment in brain–computer interface-based augmentative and alternative communication. Biomed Eng Online. 2013;12(1):43. doi: 10.1186/1475-925X-12-43.

- Ursino M, Cuppini C, Magosso E. An integrated neural model of semantic memory, lexical retrieval and category formation, based on a distributed feature representation. Cogn Neurodyn. 2011;5(2):183–207. doi: 10.1007/s11571-011-9154-0.

- Wang D, Liu Y, Hu D, Blohm G. EEG-based perceived tactile location prediction. IEEE Trans Auton Ment Dev. 2015;7(4):342–348. doi: 10.1109/TAMD.2015.2427581.

- Webb AR. Statistical pattern recognition. Hoboken: Wiley; 2003.

- Yamada Y, Kashimori Y. Neural mechanism of dynamic responses of neurons in inferior temporal cortex in face perception. Cogn Neurodyn. 2013;7(1):23–38. doi: 10.1007/s11571-012-9212-2.

- Yuan P, Gao X, Allison B, Wang Y, Bin G, Gao S. A study of the existing problems of estimating the information transfer rate in online brain–computer interfaces. J Neural Eng. 2013;10(2):1–11. doi: 10.1088/1741-2560/10/2/026014.

- Zhang D, Xu F, Xu H, Shull PB, Zhu X. Quantifying different tactile sensations evoked by cutaneous electrical stimulation using electroencephalography features. Int J Neural Syst. 2016;26(02):1650006. doi: 10.1142/S0129065716500064.

- Zhang Y, Liu B, Ji X, Huang D. Classification of EEG signals based on autoregressive model and wavelet packet decomposition. Neural Process Lett. 2016;2(45):365–378.