Abstract

Permutation testing is a non-parametric method for obtaining the max null distribution used to compute corrected p-values that provide strong control of false positives. In neuroimaging, however, the computational burden of running such an algorithm can be significant. We find that by viewing the permutation testing procedure as the construction of a very large permutation testing matrix, T, one can exploit structural properties derived from the data and the test statistics to reduce the runtime under certain conditions. In particular, we see that T is low-rank plus a low-variance residual. This makes T a good candidate for low-rank matrix completion, where only a very small number of entries of T (~ 0.35% of all entries in our experiments) have to be computed to obtain a good estimate. Based on this observation, we present RapidPT, an algorithm that efficiently recovers the max null distribution commonly obtained through regular permutation testing in voxel-wise analysis. We present an extensive validation on a synthetic dataset and four varying sized datasets against two baselines: Statistical NonParametric Mapping (SnPM13) and a standard permutation testing implementation (referred as NaivePT). We find that RapidPT achieves its best runtime performance on medium sized datasets (50 ≤ n ≤ 200), with speedups of 1.5× – 38× (vs. SnPM13) and 20×–1000× (vs. NaivePT). For larger datasets (n ≥ 200) RapidPT outperforms NaivePT (6× – 200×) on all datasets, and provides large speedups over SnPM13 when more than 10000 permutations (2× – 15×) are needed. The implementation is a standalone toolbox and also integrated within SnPM13, able to leverage multi-core architectures when available.

Keywords: Voxel-wise analysis, Hypothesis test, Permutation test, Matrix completion

1. Introduction

Nonparametric voxel-wise analysis, e.g., via permutation tests, are widely used in the brain image analysis literature. Permutation tests are often utilized to control the family-wise error rate (FWER) in voxel-wise hypothesis testing. As opposed to parametric hypothesis testing schemes Friston et al. (1994); Worsley et al. (1992, 1996), nonparametric permutation tests Holmes et al. (1996); Nichols and Holmes (2002) can provide exact control of false positives while making minimal assumptions on the data. Further, despite the additional computational cost, permutation tests have been widely adopted in image analysis Arndt et al. (1996); Halber et al. (1997); Holmes et al. (1996); Nichols and Holmes (2002); Nichols and Hayasaka (2003) via implementations in broadly used software libraries available in the community SnPM (2013); FSL (2012); Winkler et al. (2014).

Running time aspects of permutation testing

Despite the varied advantages of permutation tests, there is a general consensus that the computational cost of performing permutation tests in neuroimaging analysis can often be quite high. As we will describe in more detail shortly, high dimensional imaging datasets essentially mean that for each permutation, hundreds of thousands of test statistics need to be computed. Further, as imaging technologies continue to get better (leading to higher resolution imaging data) and the concurrent slowdown in the predicted increase of processor speeds (Moore's law), it is reasonable to assume that the associated runtime will continue to be a problem in the short to medium term. To alleviate these runtime costs, ideas that rely on code optimization and parallel computing have been explored Eklund et al. (2011); Eklund (2012, 2013). These are interesting strategies but any hardware-based approach will be limited by the amount of resources at hand. Clearly, significant gains may be possible if more efficient schemes that exploit the underlying structure of the imaging data were available. It seems likely that such algorithms can better exploit the resources (e.g., cloud or compute cluster) one has available as part of a study and may also gain from hardware/code improvements that are being reported in the literature.

Data acquired in many scientific studies, especially imaging and genomic data, are highly structured. Individual genes and/or individual voxels share a great deal of commonality with other genes and voxels. It seems reasonable that such correlation can be exploited towards better (or more efficient) statistical algorithms. For example, in genomics, Cheverud (2001) and Li and Ji (2005) used correlations in the data to estimate the effective number of independent tests in a genome sequence to appropriately threshold the test statistics. Also motivated by bioinformatics problems, Knijnenburg et al. (2009) approached the question of estimating the tail of the distribution of permutation values via an approximation by a generalized Pareto distribution (using fewer permutations). In the context of more general statistical analysis, the authors in Subramanian et al. (2005) proposed Gene Set Enrichment Analysis (GSEA) which exploits the underlying structure among the genes, to analyze gene-sets (e.g., where sets were obtained from biological pathways) instead of individual genes. If the genes within a gene-set have similar expression pattern, one may see improvements in statistical power. This idea of exploiting the “structure” towards efficiency (broadly construed) was more rigorously studied in Efron and Tibshirani (2007) and a nice non-parametric Bayesian perspective was offered in Dahl and Newton (2007). Within neuroimaging, a similar intuition drives Random Field theory based analysis Taylor and Worsley (2008), albeit the objective there is to obtain a less conservative correction, rather than computational efficiency. Recently, motivated by neuroimaging applications and computational issues, Gaonkar and Davatzikos (2013) derived an analytical approximation of statistical significance maps to reduce the computational burden imposed by permutation tests commonly used to identify which brain regions contribute to a Support Vector Machines (SVM) model. In summary, exploiting the structure of the data to obtain alternative efficient solutions is not new, but we find that in the context of permutation testing on imaging data, there is a great deal of voxel-to-voxel correlations that if leveraged properly can, in principle, yield interesting new algorithms.

For permutation testing tasks in neuroimaging in particular, several groups have recently investigated ideas to make use of the underlying structure of the data to accelerate the procedure. In a preliminary conference paper (Hinrichs et al. (2013)), we introduced the notion of exploiting correlations in neuroimaging data via the underlying low-rank structure of the permutation testing procedure. A few years later, Winkler et al. (2016) presented the first thorough evaluation of the accuracy and runtime gains of six approaches that leverage the problem structure to accelerate permutation testing for neuroimage analysis. Among these approaches Winkler et al. (2016) presented an algorithm which relied on some of the ideas introduced by Hinrichs et al. (2013) to accelerate permutation testing through low-rank matrix completion (LRMC). Overall, algorithms that exploit the underlying structure of permutation testing in neuroimaging have provided substantial computational speedups.

1.1. Main idea and contributions

The starting point of our formulation is to analyze the entire permutation testing procedure via numerical linear algebra. In particular, the object of interest is the permutation testing matrix, T. Each row of T corresponds to the voxel-wise statistics, and each column is a specific permutation of the labels of the data. This perspective is not commonly used because a typical permutation test in neuroimaging rarely instantiates or operates on this matrix of statistics. Apart from the fact that T, in neuroimaging, contains millions of entries, the reason for not working directly with it is because the goal is to derive the maximum null distribution. The central aspect of this work is to exploit the structure in T – the spatial correlation across different voxel-statistics. Such correlations are not atypical because the statistics are computed from anatomically correlated regions in the brain. Even far apart voxel neighbourhoods are inherently correlated because of the underlying biological structures. This idea drives the proposed novel permutation testing procedure. We describe the contributions of this paper based on the observation that the permutation testing matrix is filled with related entries.

Theoretical Guarantees. The basic premise of this paper is that permutation testing in high-dimensions (especially, imaging data) is extremely redundant. We show how we can model T as a low-rank plus a low-variance residual. We provide two theorems that support this claim and demonstrate its practical implications. Our first result justifies this modeling assumption and several of the components involved in recovering T. The second result shows that the error in the global maximum null distribution obtained from the estimate of T is quite small.

A novel, fast and robust, multiple-hypothesis testing procedure. Building upon the theoretical development, we propose a fast and accurate algorithm for permutation testing involving high-dimensional imaging data. The algorithm achieves state of the art runtime performance by estimating (or recovering) the statistics in T rather than “explicitly” computing them. We refer to the algorithm as RapidPT, and we show that compared to existing state-of-the-art libraries for non-parametric testing, the proposed model achieves approximately 20× speed up over existing procedures. We further identify regimes where the speed up is even higher. RapidPT also is able to leverage serial and parallel computing environments seamlessly.

A plugin in SnPM (with stand-alone libraries). Given the importance and the wide usage of permutation testing in neuroimaging (and other studies involving high-dimensional and multimodal data), we introduce a heavily tested implementation of RapidPT integrated as a plugin within the current development version of SnPM — a widely used non-parametric testing toolbox. Users can invoke RapidPT directly from within the SnPM graphical user interface and benefit from SnPM's familiar pre-processing and post-processing capabilities. This final contribution, without a separate installation, brings the performance promised by the theorems to the neuroimaging community. Our documentation Gutierrez-Barragan and Ithapu (2016) gives an overview of how to use RapidPT within SnPM.

Although the present work shares some of the goals and motivation of Winkler et al. (2016) – specifically, utilizing the algebraic structure of T – there are substantial technical differences in the present approach, which we outline further below. First, unlike Winkler et al. (2016), we directly study permutation testing for images at a more fundamental level and seek to characterize mathematical properties of relabeling (i.e., permutation) procedures operating on high-dimensional imaging data. This is different from assessing whether the underlying operations of classical statistical testing procedures can be reformulated (based on the correlations) to reduce computational burden as in Winkler et al. (2016). Second, by exploiting celebrated technical results in random matrix theory, we provide theoretical guarantees for estimation and recovery of T. Few such results were known. Note that empirically, our machinery avoids a large majority of the operations performed in Winkler et al. (2016). Third, some speed-up strategies proposed in Winkler et al. (2016) can be considered as special cases of our proposed algorithm — interestingly, if we were to increase the number ‘actual’ operations performed by RapidPT (from ≈1%, suggested by our experiments, to 50%), the computational workload begins approaching what is described in Winkler et al. (2016).

2. Permutation testing in neuroimaging

In this section, we first introduce some notations and basic concepts. Then, we give additional background on permutation testing for hypothesis testing in neuroimaging to motivate our formulation. Matrices and vectors will be denoted by bold upper-case and lower-case letters respectively, and scalars will be represented using non-bold letters. For a matrix X, X[i, : ] denotes the ith row and X[i, j] denotes the element in ith row and jth column.

Permutation testing is a nonparametric procedure for estimating the empirical distribution of the global null Edgington (1969b, a); Edgington and Onghena (2007). For a two-sample univariate statistical test, a permutation test computes an unbiased estimate of the null distribution of the relevant univariate statistic (e.g., t or χ2). Although univariate null distributions are, in general, well characterized, the sample maximum of the voxel-wise statistics usually does not have an analytical form due to strong correlations across voxels, as discussed in Section 1. Permutation testing is appropriate in this high-dimensional setting because it is nonparametric and does not impose any restriction on the correlations across the voxel-wise statistics. Indeed, when the test corresponds to group differences between samples based on a stratification variable, under the null hypothesis ℋ0, the grouping labels given to the samples are artificial, i.e., they correspond to the set of all possible relabellings of the samples. In neuroimaging studies, typically the groups correspond to the presense or absence of an underlying disease condition (e.g., controls and diseased). Whenever ℋ0 is true, the data sample representing a healthy subject is, roughly speaking, ‘similar’ to a diseased subject. Under this setting, in principle, interchanging the labels of the two instances will have no effect on the distribution of the resulting voxel-wise statistics across all the dimensions (or covariates or features). So, if we randomly permute the labels of the data instances from both groups, under ℋ0 the recomputed sets of voxel-wise statistics correspond to the same global null distribution. We denote the number of such relabellings (or permutations) by L. The histogram of all L maximum statistics i.e., the maximum across all voxel-wise statistics for a given permutation, is the empirical estimate of the exact maximum null distribution under ℋ0. When given the true/real labeling, to test for significant group-wise differences, one can simply compute the fraction of the max null that is more extreme than the maximum statistic computed across all voxels for this real labeling.

The case for strong null

Observe that when testing multiple sets of hypotheses there are two different types of control for the Type 1 error rate (FWER): weak and strong control Hochberg (1987). A test is referred to as weak control for FWER whenever the Type 1 error rate is controlled only when all the hypotheses involved (here, the number of voxels being tested) are true. That is, ℋ0 is true for (all) voxels. On the other hand, a test provides strong control for FWER whenever Type 1 error rate is controlled under any combination/proportion of the true hypotheses. It is known that the procedure described above (i.e., using the max null distribution calculated across all voxel-wise statistics) provides strong control of FWER Holmes et al. (1996). This is easy to verify because the maximum of all voxel-wise statistics is used to compute the corrected p-value, and so, the exact proportion of which hypotheses are true does not matter. Further, testing based on strong control will classify non-activated voxels as activated with a probability upper bounded by α, i.e., it has localizing power Holmes et al. (1996), a desirable property in neuroimaging studies in particular. For the remainder of this paper, we will focus on such strong control and restrict our presentation to the case of group difference analysis for two groups.

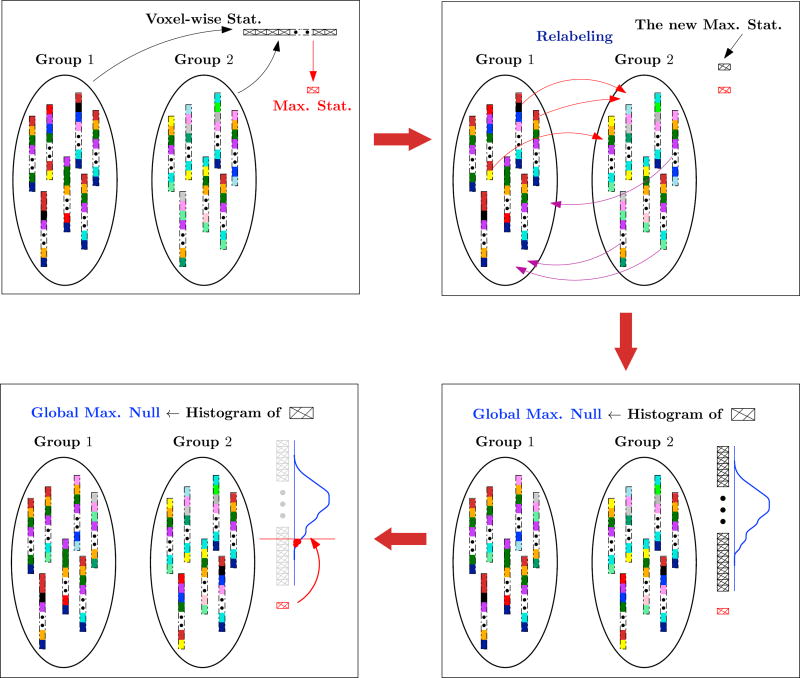

2.1. NaivePT: the exhaustive permutation testing procedure

Fig. 1 and algorithm 1 illustrate the permutation testing procedure. Given the data instances from two groups, X1 ∈ ℝv×n1 and X2 ∈ ℝv×n2, where n1 and n2 denote the number of subjects from each group respectively. Also, v denotes the number of voxels in the brain image. Let n = n1 + n2 and X = [X1; X2] give the (row-wise) stacked data matrix (X ∈ ℝv×n). Note that a permutation of the columns of X corresponds to a group relabeling. The v distinct voxel-wise statistics are then computed for L such permutations, and used to construct the permutation testing matrix T ∈ ℝv×L. The empirical estimate of the max null is simply the histogram of the maximum of each of the columns of T – denoted by hL. Algorithm 1 is occasionally referred to as Monte Carlo permutation tests in the literature because of the random sampling involved in generating the statistics. This standard description of a permutation test will be used in the following sections to describe our proposed testing algorithm.

Algorithm 1.

NaivePT The exhaustive permutation testing procedure.

| Input: X1, X2, L |

| Output: T, hL |

| X = [X1; X2], n = n1 + n2 |

| m1 …, mL ← [∅] |

| for i ∈ 1, …, L do |

| j1 …, jn ~ Permute[1, n] |

| X̃1 ← X[:, j1, …, jn1], X̃2 ← X[:, jn1+1, …, jn] |

| T[:, i] ← test(X̃1, X̃2) |

| mi ← Max(T[:, i]) |

| end for |

| hL ← Histogram(m1, …, mL) |

Fig. 1.

Flow diagram of the permutation testing procedure described in algorithm 1. Group 1 and Group 2 correspond to X1 and X2, each new max stat corresponds to each mi, and the global max null corresponds to hL.

2.2. The situation when L is large

Evidently as the number of permutations (L) increases Algorithm 1 becomes extremely expensive. Nonetheless, performing a large number of permutations is essential in neuroimaging for various reasons. We discuss these reasons in some detail next.

Random sampling methods draw many samples from near the mode(s) of the distribution of interest, but fewer samples from the tail. To characterize the threshold for a small portion of the tail of a distribution, we must invariably draw a very large number of samples just so that the estimate converges. So, if we want an α = 0.01 threshold from the max null distribution, we require many thousands of permutation samples — a computationally expensive procedure in neuroimaging as previously discussed.

Ideally we want to obtain a precise threshold for any α, in particular small α. However, the smallest possible p-value that can be obtained from the empirical null is . Therefore, to calculate very low p-values (essential in many applications), L must be very large.

A typical characteristic of brain imaging disorders, for instance in the early (e.g., preclinical) stages of Alzheimer's disease (AD) and other forms of dementia, is that the disease signature is subtle — for instance, in AD, the deposition of Amyloid load estimated via positron emission tomographic (PET) images or atrophy captured in longitudinal structural magnetic resonance images (MRI) image scans in the asymptomatic stage of the disease. The signal is weak in this setting and requires large sample size studies (i.e., large n) and a need for estimating the Type 1 error threshold with high confidence. The necessity for high confidence tail estimation implies that we need to sample many more relabelings, requiring a large number of permutations L.

3. A convex formulation to characterize the structure of T

It turns out that the computational burden of algorithm 1 can be mitigated by exploiting the structural properties of the permutation testing matrix T. Our strategy uses ideas from LRMC, subspace tracking, and random matrix theory, to exploit the correlated structure of T and model it in an alternative form. In this section, we first introduce LRMC, followed by the overview of the eigen-spectrum properties of T, which then leads to our proposed model of T.

3.1. Low-rank matrix completion

Given only a small fraction of the entries in a matrix, the problem of low-rank matrix completion (LRMC) Candès and Tao (2010) seeks to recover the missing entries of the entire matrix. Clearly, with no assumption on the properties of the matrix, such a recovery is ill-posed. Instead, if we assume that the column space of the matrix is low-rank and the observed entries are randomly sampled, then the authors of Candès and Tao (2010) and others have shown that, with sufficiently small number of entries, one can recover the orthogonal basis of the row space as well as the expansion coefficients for each column — that is, fully recover the missing entries of the matrix. Specifically, the number of entries required is roughly r log(d) where r is the column space's rank and d is the ambient dimension. By placing an ℓ1-norm penalty on the eigenvalues of the recovered matrix, i.e., the nuclear norm Fazel et al. (2004); Recht et al. (2010), one optimizes a convex relaxation of an (nonconvex) objective function which explicitly minimizes the rank. Alternatively, we can specify a rank r ahead of time, and estimate an orthogonal basis of that rank by following a gradient along the Grassmannian manifold Balzano et al. (2010); He et al. (2012). The LRMC problem has received a great deal of attention in the period after the Netflix Prize Bennett and Lanning (2007), and numerous applications in machine learning and computer vision have been investigated Ji et al. (2010). Details regarding existing algorithms and their analyses including strong recovery guarantees are available in Candès and Recht (2009, 2011).

LRMC Formulation

Let us consider a matrix T ∈ ℝv×L. Denote the set of randomly subsampled entries of this matrix as Ω. This means that we have access to TΩ, and our recovery task corresponds to estimating TΩC, where ΩC corresponds to the complement of the set Ω. Let us denote the estimate of the complete matrix be T̂. The completion problem can be written as the following optimization task,

| (1) |

| (2) |

| (3) |

where U ∈ ℝv×r is the low-rank basis of T, i.e., the columns of U correspond to the orthogonal basis vectors of the column space of T. Here, Ω gives the measured entries and W is the matrix of coefficients that lets us reconstruct T̂.

3.2. Low-rank plus a long tail in T

Most datasets encountered in the real world (and especially in neuroimaging) have a dominant low-rank component. While the data may not be exactly characterized by a low-rank basis, the residual will not significantly alter the eigen-spectrum of the sample covariance in general. Strong correlations nearly always imply a skewed eigen-spectrum, because as the eigen-spectrum becomes flat, the resulting covariance matrix tends to become sparser (the “uncertainty principle” between low-rank and sparse matrices Chandrasekaran et al. (2011)). Low-rank structure in the data is encountered even more frequently in neuroimaging — unlike natural images in computer vision, there is much stronger voxel-to-voxel homogeneity in a brain image.

While performing statistical hypothesis testing on these images, the low-rank structure described above carries through to T for purely linear statistics such as sample means, mean differences and so on. However, non-linearities in the test statistic, e.g., normalizing by pooled variances, will perturb the eigen-spectrum of the original data, contributing a long tail of eigenvalues (see Fig. 2). This large number of significant singular values needs to be accounted for, if one intends to model T using low-rank structure. Ideally, we require that this long tail should either decay rapidly, or that it does not overlap with the dominant eigenvalues. This is equivalent to asking that the resulting non-linearities do not decorrelate the test statistics, to the point that the matrix T cannot be approximated by a low-rank matrix with high fidelity. For t-statistics, the non-linearities come from normalization by pooled variances, see for example a two-sample t-test shown in (4). Here (μ1, σ1) and (μ2, σ2) are the mean and standard deviations for the two groups respectively. Since the pooled variances calculated from correlated data X are unlikely to change very much from one permutation sample to another (except outliers), we expect that the spectrum of T will resemble that of the data (or sample) covariance, with the addition of a long, exponentially decaying tail. More generally, if the non-linearity does not decorrelate the test statistics too much, it will almost certainly preserve the low-rank structure.

| (4) |

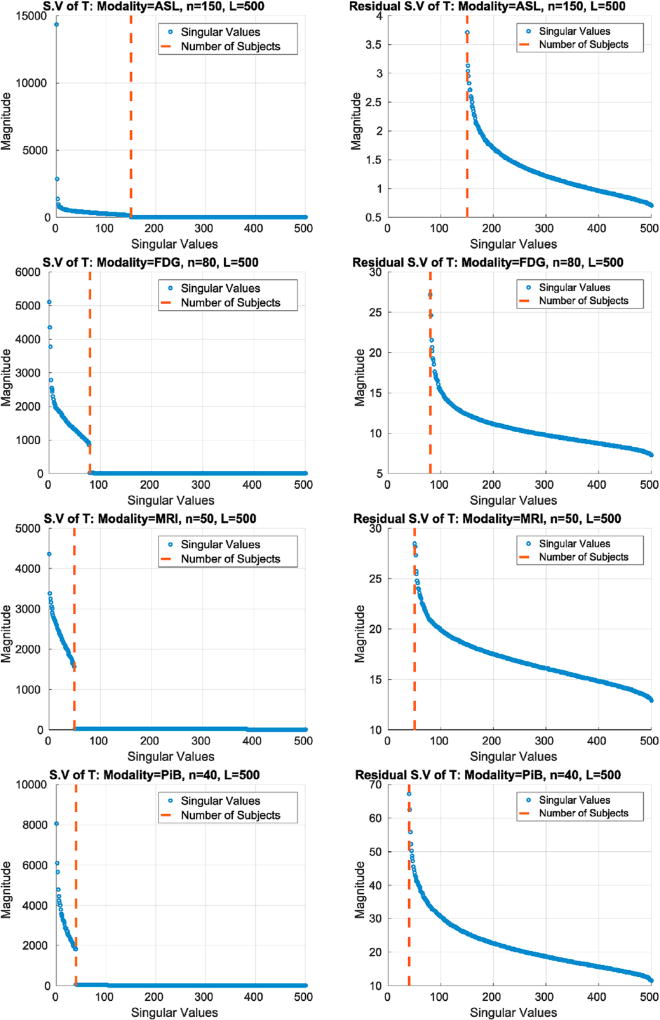

Fig. 2.

Singular value spectrums for permutation testing matrices with dimensions generated from the imaging modalities: ASL, FDG PET, MRI, and PiB PET. Left: Full spectrum of T with L rows and v columns. Right: Residual singular values of T.

3.2.1. Does low-rank plus a long tail assumption hold for other image modalities?

The underlying thesis of our proposed framework is that the permutation testing matrix T, in general, has this low-rank plus long tail structure. In Fig. 2, we show evidence that this is in fact the case for a variety of imaging modalities that are commonly used in medical studies – Arterial Spin Labeling (ASL), Magnetic Resonance Imaging (MRI), and two Positron Emission Tomography (PET) modalities including fluorodeoxyglucose (FDG) and Pittsburgh compound B (PiB). Using several images coming from each of these modalities four different Ts are constructed. Each row in Fig. 2 shows the decay of the singular value spectrum of these four different Ts. The low-rank (left column) and the remaining long tail (right column) is clearly seen in these spectrum plots which suggests that the core modeling assumptions are satisfied. We note that the MRI data that was used in Fig. 2 was in fact the de facto dataset for our evaluation presented in section 6. The underlying construct of such high correlations across multiple covariates or predictors is common to most biological datasets beyond brain scans, like genetic datasets.

3.3. Overview of proposed method

If the low-rank structure dominates the long tail described above, then its contribution to T can be modeled as a low variance Gaussian I.I.D. residual. A Central Limit argument appeals to the number of independent eigenfunctions that contribute to this residual, and, the orthogonality of eigenfunctions implies that as more of them meaningfully contribute to each entry in the residual, the more independent those entries become. In other words, if this long tail begins at a low magnitude and decays slowly, then we can treat it as a Gaussian i.i.d. residual; and if it decays rapidly, then the residual will perhaps be less Gaussian, but also more negligible. Thus, our algorithm makes no direct assumption about these eigenvalues themselves, but rather that the residual corresponds to a low-variance i.i.d. Gaussian random matrix – its contribution to the covariance of test statistics will be Wishart distributed, and from this property, we can characterize its eigenvalues.

Why should we expect runtime improvements?

The low-rank + long tail structure of the permutation testing matrix then translates to the following identity,

| (5) |

where S is the unknown i.i.d. Gaussian random matrix. We do not restrict ourselves to one-sided tests here, and so, S is modeled to be zero-mean. Later in Section 4, we show that this apparent zero-mean assumption is addressed because of a post-processing step. The low-rank portion of T can be reconstructed by sub-sampling the matrix at Ω using the LRMC optimization from (1). Recall from the discussion in Section 3.1 that Ω corresponds to a subset of indices of the entries in T, i.e., instead of computing all voxel-wise statistics for a given relabeling (a column of T), only a small fraction η, referred to as the sub-sampling rate, are computed. Later in Sections 5 and 6, we will show that η is very small (on the orders of <1%). Therefore, the overall number of entries in Ω — the number of statistics actually calculated to recover T – is ηvL as opposed to vL for η≪1.

Since the core of the proposed method is to model T by accessing only a small subset of its entries Ω, we refer to it as a rapid permutation testing procedure – RapidPT. Observe that a large contributor to the running time of online subspace tracking algorithms, including the LRMC optimization from (1), is the module which updates the basis set U; but once a good estimate for U has been found, this additional calculation is no longer needed. Second, the eventual goal of the testing procedure is to recover the max null as discussed earlier in Section 2, which then implies that the residual S should also be recovered with high fidelity. Exact recovery of S is not possible. Although, for our purposes, we only need its effect on the distribution of the maximum per permutation test. An estimate of the mean and variance of S then provides reasonably good estimates of the max null. We therefore divide the entire process into two steps: training, and recovery which is described in detail in the next section.

4. Rapid permutation testing – RapidPT

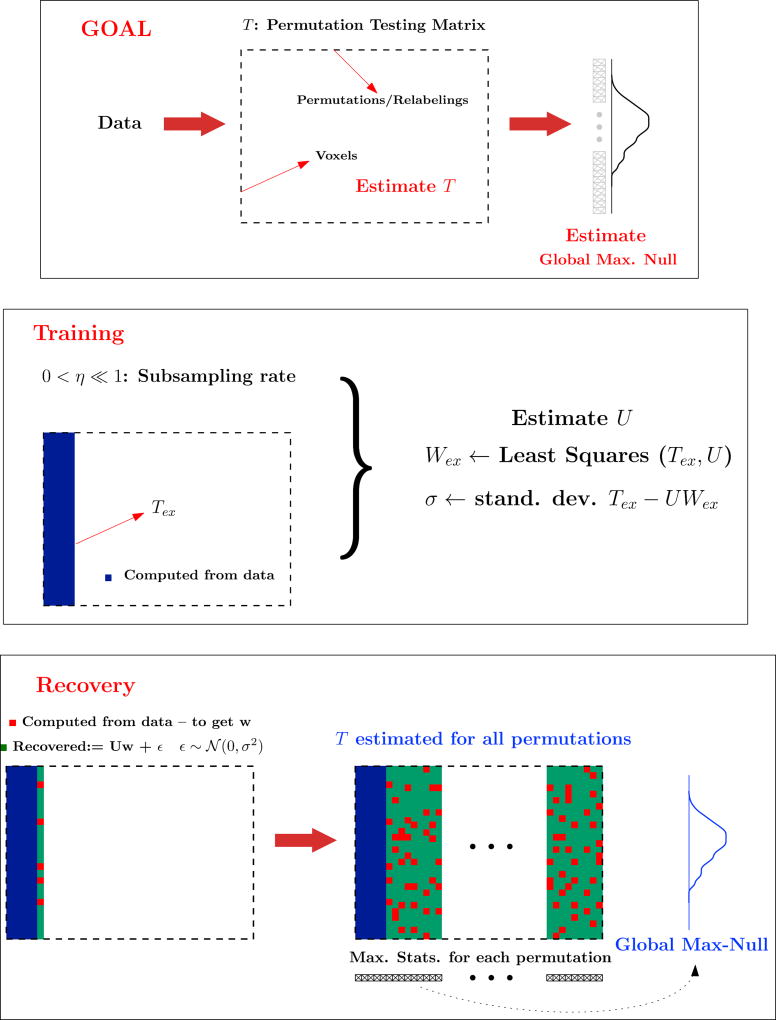

In this section, we discuss the specifics of the training and recovery stages of RapidPT, and then present the complete algorithm, followed by some theoretical guarantees regarding consistency and recovery of T. Fig. 3 shows a graphical representation of the RapidPT algorithm 2.

Fig. 3.

Flow diagram of the training and recovery steps in RapidPT. The number of training samples (l) and the rank of U(r) is the number of columns computed in the training phase (the blue area). The sub-sampling rate, η, is the fraction of red over green entries computed per column. The global max null is hL in the algorithm.

4.1. The training phase

The goal of the training phase is to estimate the basis U. Here, we perform a small number of fully sampled permutation tests, i.e., for approximately a few hundred of the columns of T (each of which corresponds to a permutation) denoted by ℓ, all the v voxel-wise statistics are computed. This v × ℓ “sub-matrix” of T is referred to as the training set, denoted by Tex. In our experiments, ℓ was selected to be either a fraction or a multiple of the total number of subjects n as described in section 5.4. From Tex, we estimate the basis U using sub-sampled matrix completion methods Balzano et al. (2010); He et al. (2012), making multiple passes over the training set with the (given) sub-sampling rate η, until convergence. This corresponds to initializing U as a random orthogonal matrix of a pre-determined rank r, and using the columns of Tex repeatedly to iteratively update it until convergence (see Balzano et al. (2010); He et al. (2012) for details regarding subspace tracking). Once U is obtained in this manner, Wex is obtained by running a simple least-squares procedure on Tex and U. The histogram of Tex − UWex will then be an estimate of the empirical distribution of the residual S over the training set. We denote the standard deviation of these ‘left over’ entries as σ. We now discuss a few relevant aspects of this training phase.

Notice that in principle, one can estimate U directly from Tex by simply computing the leading r principal components. This involves a brute-force approximation of U by computing the singular-value decomposition of a dense v × ℓ matrix. Even for reasonably small v, this is a costly operation. Second, T̂, by definition, contains a non-trivial residual. We have no direct control on the structure of S except that it is i.i.d Gaussian. Clearly, the variance of entries of S will depend on the fidelity of the approximation provided by U. Since the sub-sampling rate η (the size of the set Ω compared to vL) is known ahead of time, estimating U via a subspace-tracking procedure using η fraction of the entries of Tex (where each column of Tex modifies an existing estimate of U, one-by-one, without requiring to store all the entries of Tex) directly provides an estimate of S.

Bias-Variance Trade-off

When using a very sparse subsampling method i.e., sampling with small η, there is a bias-variance trade-off in estimating S. Clearly, if we use the entire matrix T to estimate U, W and S, we will obtain reliable estimates of S. But, there is an overfitting problem: the least-squares objective used in fittingW (in getting a good estimate of the max null) to such a small sample of entries is likely to grossly underestimate the variance of S compared to when we use the entire matrix; the sub-sampling problem is not nearly as over-constrained as it is for the full matrix. This sampling artifact reduces the apparent variance of S, and induces a bias in the distribution of the sample maximum, because extreme values are found less frequently. This sampling artifact has the effect of “shifting” the distribution of the sample maximum towards zero. We refer to this as a bias-variance trade-off because, we can think of the need for shift as an outcome of the sub-optimality of the estimate of σ versus the deviation of the true max null from the estimated max null, We correct for this bias by estimating the amount of the shift during the training phase, and then shifting the recovered sample max distribution by this estimated amount. This shift is denoted by μ.

4.2. The recovery phase

In the recovery phase, we sub-sample a small fraction of the entries of each column of T successively, i.e., for each new relabeling, the voxel-wise statistics are computed over a small fraction of all the voxels to populate TΩ. Using this TΩ, and the pre-estimated U, the reconstruction coefficients for this column w ∈ ℝr×1 are computed. After adding the random residuals – i.i.d Gaussian with mean μ and standard deviation σ, to this Uw, we have our estimate T̂ for this specific relabeling/permutation. Recall that S was originally modeled to be zero-mean (see (5)), but the presence of the shift μ suggests a 𝒩 (μ, σ2) distribution instead. Overall, this entails recovering a total of v voxel-wise statistics from ηv of such entries where η≪1. This process repeats for all the remaining L − ℓ columns of T, eventually providing T̂. Once T̂ has been estimated, we proceed exactly as in the NaivePT, to compute the max null and test for the significance of the true labeling.

4.3. The algorithm

Algorithm 2 and Fig. 3 summarizes RapidPT. The algorithm takes in the input data X, the rank of the basis r, the sub-sampling rate η, the number of training columns ℓ and the total number of columns L as inputs, and returns the estimated permutation testing matrix T and the max null distribution hL. As described earlier in Sections 4.1 and 4.2, Algorithm 2 first estimates U, σ and the shift μ, which are then used to compute W and S for the L number of permutations.

Algorithm 2.

The RapidPT algorithm for permutation testing.

| Input: X1, X2, r, η, L, ℓ, stat |

| Output: T̂, hL |

| X = [X1; X2], n = n1 + n2 |

| TRAINING |

| U ← Rand. Orth., Wex = [∅] |

| for i ∈ 1, …, ℓ do |

| j1 …, jn ~ Permute[1, n] |

| X̃1 ← X[:, j1, …, jn1] |

| X̃2 ← X[:, jn1+1, …, jn] |

| Tex[:, i] ← test(X̃1, X̃2) |

| k1, …, k⌈ηυ⌉ ~ UNIF[1, υ] |

| T̃ ← Tex[k1, …, k⌈ηυ⌉, i] |

| U, Wex[:, i] ← Subspace-Tracking(r) |

| end for |

| σ ← Standard Deviation{Tex − UWex}Ω |

| μ ← supi Max{Tex[:, i] − UWex[:, i]} |

| for i ∈ 1, …, ℓ do |

| T̂[:, i] ← T[:, i] |

| end for |

| RECOVERY |

| for i ∈ ℓ + 1, …, L do |

| k1, …, k⌈ηυ⌉ ~ UNIF[1, υ] |

| j1 …, jn ~ Permute[1, n] |

| X̃1 ← X[k1, …, k⌈ηυ⌉, j1, …, jn1] |

| X̃2 ← X[k1, …, k⌈ηυ⌉, jn1+1, …, jn] |

| T̃ ← test(X̃1, X̃2) |

| W[:, i] ← Complete(U, T̃, k1, …, k⌈ηυ⌉) |

| s ← i.i.d𝒩υ(0, σ2) |

| T̂[:, i] ← UW[:, i] + s |

| end for |

| for i ∈ 1, …, L do |

| if i ≤ ℓ then |

| mi ← Max(T̂)[:, i]) |

| else |

| mi ← Max(T̂)[:, i] + μ |

| end if |

| end for |

| hL ← Histogram(m1, …, mL) |

4.4. Summary of theoretical guarantees

Algorithm 2 shows an efficient way to recover the max null by modeling T as a low-rank (UW) plus a low-variance residual (S). While this is useful, one may ask if this modeling assumption is reasonable and whether such a procedure will, in fact, recover the true statistics. Our two technical results answer these questions, and for brevity, we present them in the supplementary material accompanying the main paper. The informal summary of the results is (1) the basic model (i.e., low-rank and low-variance residual) is indeed meaningful for the setting we are interested in, and (2) Recovering the low-rank and the residual by Algorithm 2 guarantees a high fidelity estimate of the max null, and shows that the error is small.

5. Experimental setup

We evaluate RapidPT in multiple phases. First we perform a simulation study where the goal is to empirically demonstrate the requirements on the input hyperparameters that will guarantee an accurate recovery of the max null. These empirical results are compared to the analytical bounds governed by the theory (and further discussed in the supplement). The purpose of these evaluations is to walk the reader through the choices of hyperparameters, and how the algorithm is very robust to them. Next, we perform another simulation study where our goal is to evaluate the performance of RapidPT on multiple synthetic datasets generated by changing the strength of group-wise differences and the sparsity of the signal (e.g., how many voxels are different). We then conduct an extensive experiments to evaluate RapidPT against competitive methods on real brain imaging datasets. These include comparisons of RapidPT's accuracy, runtime speedups and overall performance gains against two baselines. The first baseline we used was the latest release of the widely used MATLAB toolbox for nonparametric permutation testing in neuroimaging, Statistical NonParametric Mapping (SnPM) (SnPM (2013); Nichols and Holmes (2002)). The second baseline was a standard MATLAB implementation of algorithm 1, which we will call NaivePT. Both baselines serve to evaluate RapidPT's accuracy. Further, the very small differences between the results provided by SnPM and NaivePT offer a secondary reference point that tells us an acceptable range for RapidPT's results (in terms of differences). For runtime performance, SnPM acts as a state of the art baseline. On the other hand, NaivePT is used to evaluate how an unoptimized permutation testing implementation will perform on the datasets we use in our experiments. In the next section, we describe the experimental data, the hyperparameters space evaluated, the methods used to quantify accuracy, and the environment where all experiments were run.

5.1. Simulation Data I

The dataset consisted of n = 30 synthetic images composed of v = 20000 voxels. The signal in each voxel is derived from one of the following two normal distributions: N(μ = 0, σ2 = 1) and N(μ = 1, σ2 = 1). Two groups were then constructed with 15 images in each and letting 1% (200 voxels) exhibit voxel-wise group differences. The signal in the remaining 99% of the voxels was assumed to come from N(μ = 0, σ2 = 1).

5.2. Simulation Data II

The dataset consisted of a total of 48 synthetically generated datasets, each with v = 20000 voxels. The datasets were generated by varying: the number of images (n) in the dataset, the strength of the signal (i.e., deviation of μ in N(μ, 1) from N(0, 1)) and the sparsity of the signal (percentage of voxels showing group differences). The dataset sizes were n = 60, n = 150, n = 600. Each dataset was split into two equally sized groups for which various degrees of signal and sparsity of the signal were used to generate the different datasets. The first “group” (for group-difference analysis) in all datasets was generated from a standard normal distribution, N(μ = 0, σ2 = 1). In the second “group”, we chose {1%, 5%, 10%, 25%} of the voxels from one of four normal distributions (N(μ = 1, σ2 = 1), N(μ = 5, σ2 = 1), N(μ = 10, σ2 = 1), N(μ = 25, σ2 = 1)). The signal in the remaining voxels in the second group was also obtained from N(μ = 0, σ2 = 1).

To summarize the simulation setup, Simulation Data I fixes the dataset and changes the algorithmic hyperparameters whereas Simulation Data II fixes the algorithmic hyperparameters and generates different datasets.

5.3. Data

The data used to evaluate RapidPT comes from the Alzheimer's disease Neuroimaging Initiative-II (ADNI2) dataset. The ADNI project was launched in 2003 by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, the Food and Drug Administration, private pharmaceutical companies, and nonprofit organizations, as a $60 million, 5-year public-private partnership. The overall goal of ADNI is to test whether serial MRI, positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of MCI and early AD. Determination of sensitive and specific markers of very early AD progression is intended to aid researchers and clinicians to develop new treatments and monitor their effectiveness, as well as lessen the time and cost of clinical trials. The principal investigator of this initiative is Michael W. Weiner, M.D., VA Medical Center and University of California – San Francisco. ADNI is the result of the efforts of many co-investigators from a broad range of academic institutions and private corporations, and subjects have been recruited from over 50 sites across the U.S. and Canada. The initial aim of ADNI was to recruit 800 adults, ages 55 to 90, to participate in the research – approximately 200 cognitively normal older individuals to be followed for 3 years, 400 people with MCI to be followed for 3 years, and 200 people with early AD to be followed for 2 years.

For the experiments presented in this paper, we used gray matter tissue probability maps derived from T1-weighted magnetic resonance imaging (MRI) data. From this data, we constructed four varying sized datasets. We sampled n1 and n2 subjects from the CN and AD groups in the cohort, respectively. Table 1 shows a summary of the datasets used for our evaluations.

Table 1.

Dataset sizes used in our experiments. The table lists the total number of subjects (n) and how many of the participants were sampled from the CN (n1) and AD groups (n2).

| Dataset Size: n (n1,n2) | |||

|---|---|---|---|

| 50 (25,25) | 100 (50,50) | 200 (100,100) | 400 (200,200) |

5.3.1. Data pre-processing

All images were pre-processed using voxel-based morphometry (VBM) toolbox in Statistical Parametric Mapping software (SPM, http://www.fil.ion.ucl.ac.uk/spm). After pre-processing, we obtain a data matrix X composed of n rows and v columns for each dataset shown in Table 1. Each row in X corresponds to a subject and each column is associated to a voxel that denotes approximately the same anatomical location across subjects (since the images are already co-registered). This pre-processing is commonly used in the literature and not specialized to our experiments.

5.4. Hyperparameters

As outlined in Algorithm 2, there are three high-level input parameters that will impact the performance of the procedure: the number of training samples (l), the sub-sampling rate (η), and the number of permutations (L). To demonstrate the robustness of the algorithm to these parameter settings, we explored and report on hundreds of combinations of these hyperparameters on each dataset. This also helps us identify the general scenarios under which RapidPT will be a much superior alternative to regular permutation testing. The baselines for a given combination of these hyperparameters are given by the max null distribution constructed by SnPM and NaivePT. The number of permutations used for the max null distributions of the baselines is the same as the number of permutations used by RapidPT for a given combination of hyperparameters.

Number of Training Samples: The number of training samples, l, determines how many columns of T are calculated to estimate the basis of the subspace, and also how many training passes are performed to estimate the shift that corrects for the bias-variance tradeoff discussed in Section 4.1. We decided to use the total number of subjects n as a guide to pick a sensible l, the rationale is that the maximum possible rank of T is n (as discussed in Section 3). Further, l is also used to determine the number of passes performed to calculate the shift of the max null distribution. Calculating the shift is a cheap step, therefore it makes sense to use all the information available in Tex to calculate the shift. Table 2 shows a summary of the different values for l used in our evaluations.

Sub-sampling rate: The sub-sampling rate, η, is the percentage of all the entries of T that we will calculate (i.e., sample) when recovering the max null distribution. In the recovery phase, η determines how many voxel-wise test statistics will be calculated at each permutation to recover a column of T̄. For instance, if the data matrix has v columns (number of voxels) then instead of calculating v test statistics, we will sample only ηv (where η≪1) random columns and calculate test statistics only for those columns. Table 3 shows a summary of the different values of η used in our evaluations.

Number of Permutations The number of permutations, L, determines the total number of columns in T. By varying L we are able to see how the size of T affects the accuracy of the algorithm and also how it scales compared to a standard permutation testing implementation with the same number of permutations (e.g., NaivePT or SnPM). Table 4 shows a summary of the different values for L used in our evaluations.

Table 2.

Number of training samples used to evaluate RapidPT. n corresponds to the total number of subjects in the dataset. For instance, for the 400 subject dataset the values for l used were 100, 200, 400, and 800.

| Number of Training Samples: l | ||||||

|---|---|---|---|---|---|---|

| Simulations |

|

n | 2n | |||

| Experiments |

|

|

n | 2n | ||

Table 3.

Sub-sampling rates used to evaluate RapidPT. η is the percentage of the total number of entries in T that will be calculated during the recovery phase.

| Sub-sampling rate: η | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Simulations | 0.5% | 1% | 1.6% | 2% | 4% | 8% | 16% | 32% | 64% |

| Experiments | 0.1% | 0.35% | 0.5% | 0.7% | 1.5% | 5% | |||

Table 4.

Number of permutations done to evaluate RapidPT.

| Number of Permutations: L | |||||||

|---|---|---|---|---|---|---|---|

| Simulations | 5, 000 | 10, 000 | 20, 000 | 40, 000 | 100, 000 | ||

| Experiments | 2, 000 | 5, 000 | 10, 000 | 20, 000 | 40, 000 | 80, 000 | 160, 000 |

5.5. Accuracy benchmarks

In order to assess the accuracy and overall usefulness of the recovered max null distribution by RapidPT we used three different measures: Kullback-Leibler Divergence (KL-Divergence), t-thresholds/p-values and the resampling risk.

Kullback-Leibler Divergence

The KL-Divergence provides a measure of the difference between two probability distributions. One of the distributions represents the ground truth (SnPM or NaivePT) and the other an “approximation” (obtained via RapidPT). In this case, the distributions are the max null distributions (hL). We use the KL-Divergence to identify under which circumstances (i.e., hyperparameters) RapidPT provides a good estimate of the overall max null distribution and if there are cases where the results are unsatisfactory.

T-thresholds/p-values

Once we have evaluated whether all methods recover a similar max null distribution, we analyze if t-thresholds associated to a given p-value calculated from each max null distribution are also similar.

Resampling risk

Two methods can recover a similar max null distribution and p-values, and yet partially disagree in which voxels should be classified as statistically significant (e.g., within a group difference analysis). The resampling risk is the probability that the decision of accepting/rejecting the null hypothesis differs between two methods (Jockel (1984)). Let v1 and v2 be the number of voxels whose null hypothesis was rejected according to the max null derived from Method 1 and 2, respectively. Further, let vc be the number of voxels that are the (set) intersection of v1 and v2. The resampling risk can then be calculated as shown in (6).

| (6) |

5.6. Implementation environment and other details

All evaluation runs reported in this paper were performed on multiple machines with the same hardware configuration. The setup consisted of multiple 16 core machine with two Intel(R) Xeon(R) CPU E5-2670, each with 8 cores. This means that any MATLAB application will be able to run a maximum of 16 threads. To evaluate the runtime performance of RapidPT, we performed all experiments on two different setups which forced MATLAB to use a specific number of threads. First, we forced MATLAB to only use a single thread (single core) when running SnPM, RapidPT, and NaivePT. The performance results on a single threaded environment attempt to emulate a scenario where the application was running on an older laptop/workstation serially. In the second setup, we allow MATLAB to use all 16 threads available to demonstrate how RapidPT is also able to leverage a parallel computing environment to reduce its overall runtime.

Although all machines had the same hardware setup, to further ensure that we were making a fair runtime performance comparison, all measurements of a given Figure shown in Section 6 were obtained from the same machine.

6. Results

The hyperparameter space explored through hundreds of runs allowed identifying specific scenarios where RapidPT is most effective. To demonstrate accuracy, we first show the impact of the hyperparameters on the recovery of the max null distribution by analyzing KL-Divergence. Then we focus on the comparison of the corrected p-values across methods and the resampling risk associated with those p-values. To demonstrate the runtime performance gains of RapidPT, we first calculate the speedup results across hyperparameters. We then focus on the hyperparameters that a user would use and look at how RapidPT, SnPM, and NaivePT scale with respect to the dataset and the number of permutations. Overall, the large hyperparameter space that was explored in these experiments produced hundreds of figures. In this section, we summarize the results of all figures within each subsection, but only present the figures that we believe will convey the most important information about RapidPT. An extended results section is presented in the supplementary materials. We point out that following the results and the corresponding discussion of the plots and figures, we discuss the open-source toolbox version of RapidPT that is mde available online.

6.1. Accuracy

6.1.1. Simulations

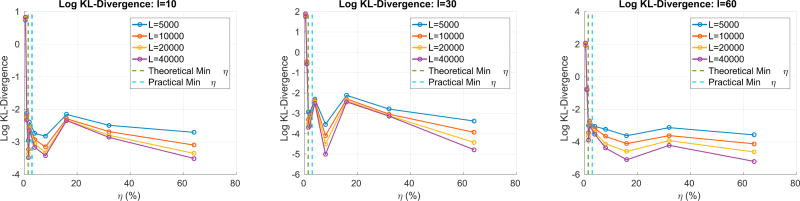

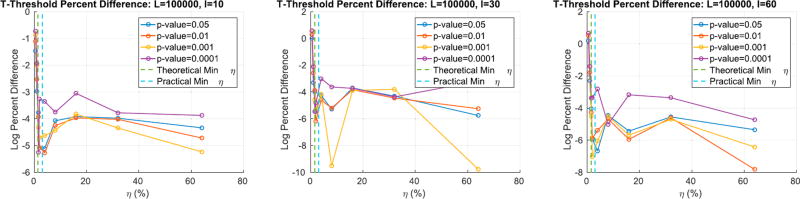

Fig. 4 shows the log KL-Divergence between the max null recovered by regular permutation testing and the max null recovered by RapidPT. We can observe that once the sub-sampling rate, η, exceeds the minimum value established by LRMC theory, RapidPT is able to accurately recover the max null with a KL-Divergence < 10−2. Furthermore, increasing l can lead to slightly lower KL-Divergence as seen in the middle and right most plots. Finally, increasing the number of permutation improves the accuracy. A through discussion of how to choose the important hyperparameters is in Section 7.3.

Fig. 4.

KL-Divergence between the true max null and the one recovered by RapidPT. Each line corresponds to a different number of permutations. The dotted lines are the theoretical minimum sub-sampling rate and the ”practical” one, i.e., the one the toolbox will set it to automatically if none is specified.

Fig. 5 shows the log percent difference between the t-thresholds for different p-values obtained from the true max null and the one recovered by RapidPT. Similar to Fig. 4, it is evident that once the strict requirement on the minimum value of η is achieved, we obtain a reliable t-threshold i.e., percent difference <10−3. Additionally, increasing the number of training samples (progression of plots from left to right) gives an improvement in the accuracy, however, not incredibly significant since we are already at negligible percent differences. Overall we see that RapidPT is able to estimate accurate thresholds even at extremely low p-value regimes.

Fig. 5.

Percent difference between the t-threshold (for different p-values) obtained from the true max null and the one recovered by RapidPT. The dotted lines are the theoretical minimum sub-sampling rate and the ”practical” one, i.e., the one the toolbox will set it to automatically if none is specified.

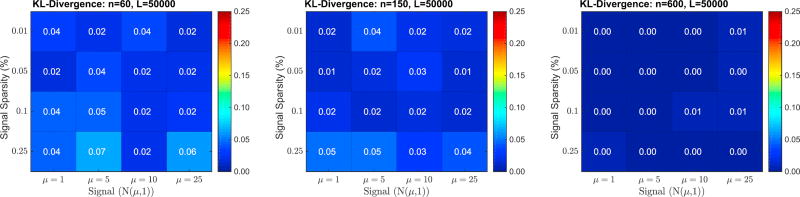

Fig. 6 shows the KL-Divergence between the max null recovered by regular permutation testing and the max null recovered by RapidPT on 48 synthetically generated datasets (16 in each column). The input hyperparameters used were fixed to L = 50000, η = 2ηmin, and l = n, where ηmin refers to the theoretical minimum sub-sampling rate and n is the dataset size. As expected, the strength or sparsity of the signal does not have an impact on the performance of RapidPT. The dataset size, however, does have a slight impact on the accuracy but we still find that the recovered t-thresholds for the smaller datasets are within 2% of the true threshold.

Fig. 6.

KL-Divergence between the true max null and the one recovered by RapidPT on 48 datasets. The sub-sampling rate, η, used for each run was 2ηmin. The number of training samples, l, used for each run was n (i.e., the same as the number of images in the dataset).

6.1.2. Results on the ADNI dataset

6.1.2.1. Can we recover the max null distribution?

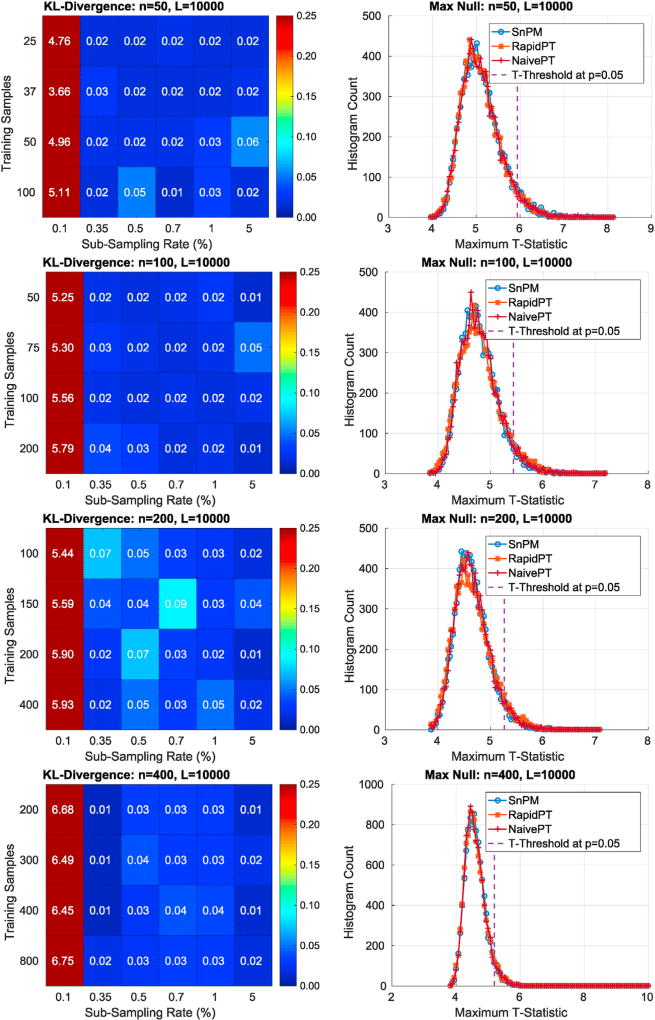

The left column of Fig. 7 uses a colormap to summarize the KL-Divergence results obtained from comparing the max null distributions of a single run of SnPM versus multiple RapidPT runs with various hyperparameters. The right column of Fig. 7 puts the numbers displayed in the colormaps into context by showing the actual max null distributions for a single combination of hyperparameters. Each row corresponds to each of the four datasets used in our evaluations.

Fig. 7.

Left: Colormap of the KL-Divergence between the max null distributions of RapidPT and SnPM. Each colormap is associated to a run on one of the datasets and a fixed number of permutations. The resulting KL-Divergence from 24 hyperparameter combinations is displayed on each colormap. Rows 1, 2, 3, and 4 of this figure are associated to the 50, 100, 200, and 400 subject datasets respectively. Right: The max null distributions given by RapidPT, SnPM, and NaivePT for the hyperparameters: L = 10000, l = n, and η = 0.35%.

The sub-sampling rate was the hyperparameter that had the most significant impact on the KL-Divergence. As shown in Fig. 7, a sub-sampling rate of 0.1% led to high KL-Divergence, i.e., the max null distribution was not recovered in this case. For every other combination of hyperparameters RapidPT was able to sample at rates as low as 0.35% and still recover an accurate max null distribution. Most KL-Divergence values were in the 0.01 – 0.05 range with some occasional values between 0.05 – 0.15. However, using the max null distributions derived from only 2000 permutations leads to the resulting KL-Divergence to range mainly between 0.05 – 0.15, as shown in the supplementary results.

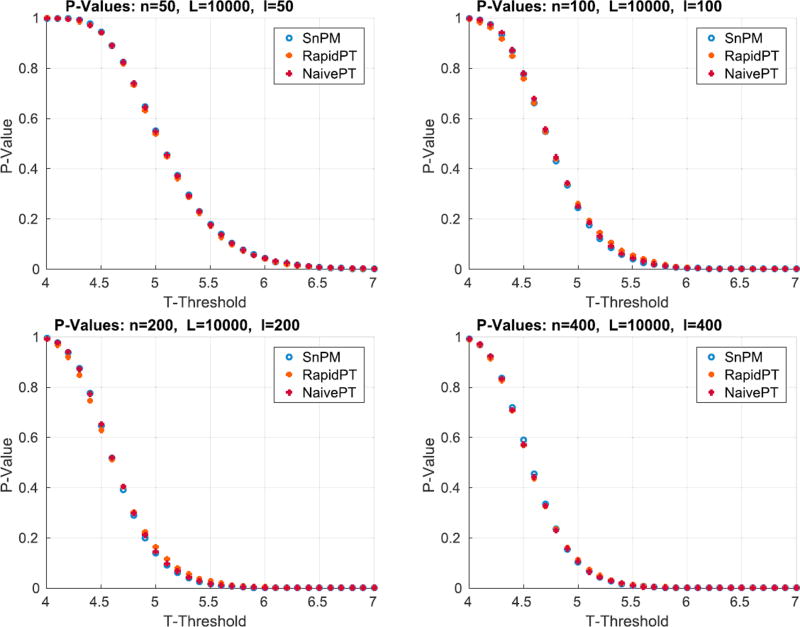

6.1.2.2. Are we rejecting the correct null hypotheses?

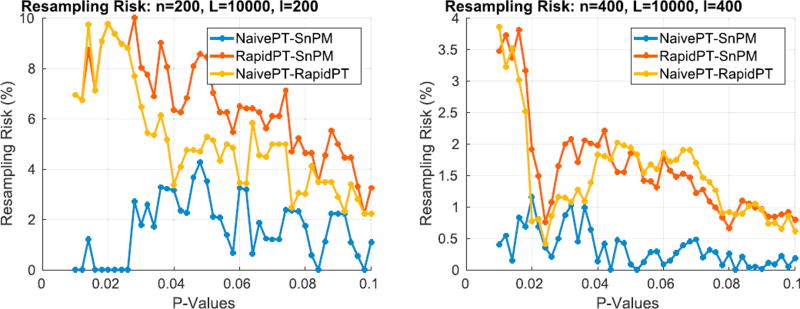

The test statistics obtained using the original data labels whose value exceed the t-threshold associated to a given p-value will correspond to the null hypothesis rejected. Fig. 8 shows the resultant mapping between t-threshold and p-values for the max null distribution for a given set of hyperparameters. It is evident that the difference across methods is minimal. Moreover, Fig. 8 shows that low p-values (p < 0.1), which are the main object of interest, show the lowest differences. However, despite the low percent differences between the p-values, in the larger datasets (100, 200, and 400 subjects) RapidPT consistently yields slightly more conservative p-values near the tails of the distribution. Nonetheless, Fig. 11 shows that the resampling risk between RapidPT and the two baselines remains very close to the resampling risk between both baselines. In practice, these plots show that RapidPT will reject the null hypothesis for a slightly lower number of voxels than SnPM or NaivePT.

Fig. 8.

p-values for SnPM, RapidPT, and NaivePT. The hyperparameters used were: η = 0.35%, L = 10000, and l = n. The results in this plot were obtained from the max null distributions shown in the right hand side of Fig. 7.

Fig. 11.

Resampling risk of NaivePT-SnPM, RapidPT-SnPM, and NaivePT-RapidPT. The hyperparameters used were: η = 0.35%, L = 10000, and l = n.

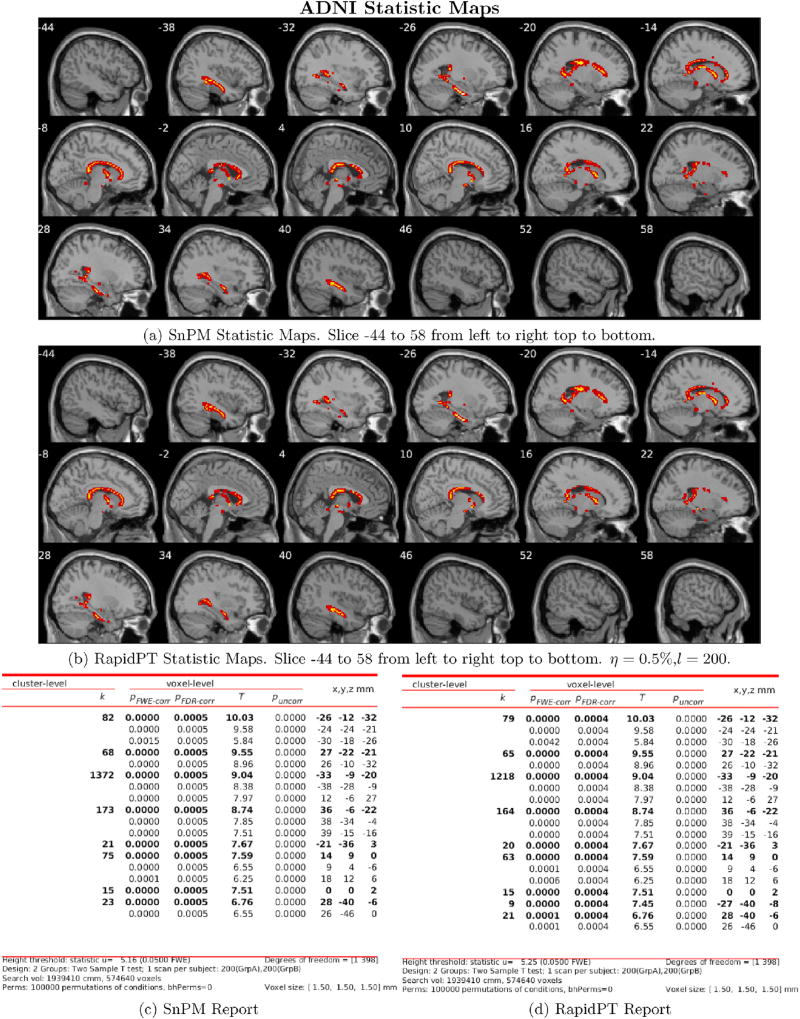

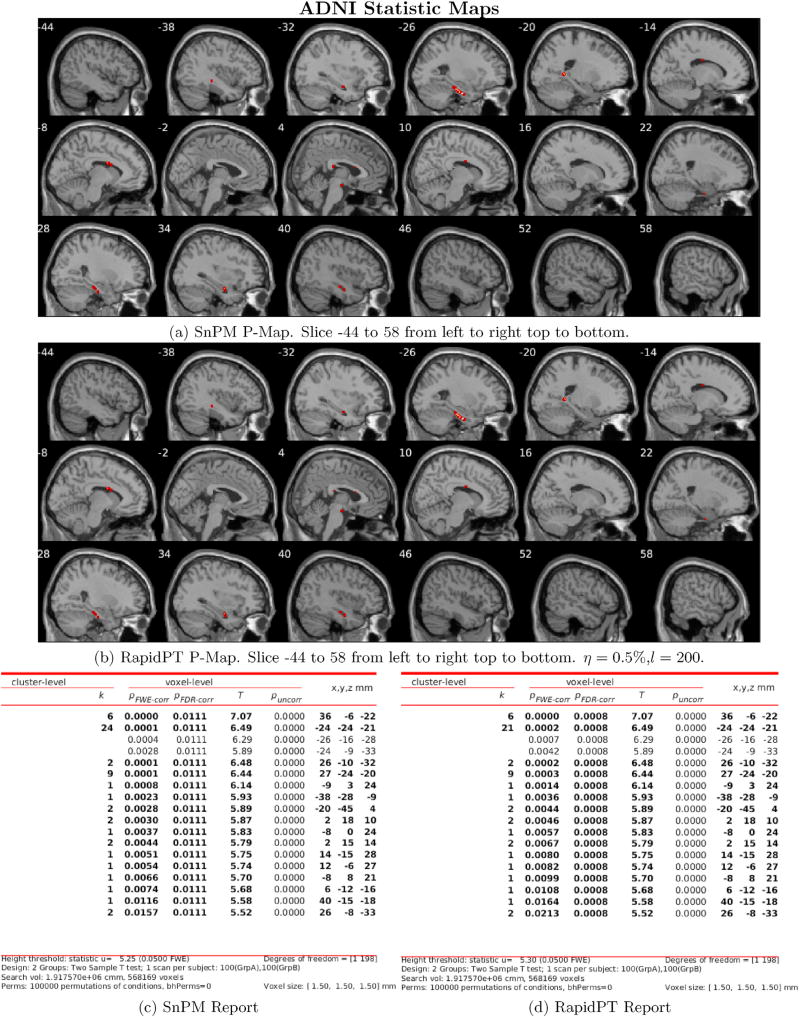

Despite the slight difference in thresholds, the actual brain regions whose null hypotheses were rejected consistently match between both methods as shown in Figs. 9 and 10. Additionally, the regions picked up by both RapidPT and SnPM in Fig. 9 correspond to the Hippocampus – which is one of the primary structural brain imaging region that corresponds to the signature of cognitive decay at the onset of Alzheimer's disease. The regions in Fig. 10 contain a subset of the brain regions in Fig. 9 which is expected from the thresholds shown in the right column of Fig. 7.

Fig. 9.

Thresholded FWER corrected statistical maps at (α = 0.05) with the n = 400 dataset. The hyperparameters used were: η = 0.5%, l = n, and L = 100000. The images show the test statistics for which the null was rejected in SnPM (top) and RapidPT (bottom). The tables show a numerical summary of the images. The columns refer to: k - cluster size, pFWE–corr -

Fig. 10.

Thresholded FWER corrected statistical maps at (α = 0.05) with the n = 200 dataset. The hyperparameters used were: η = 0.5%, l = n, and L = 100000. The images show the test statistics for which the null was rejected in SnPM (top) and RapidPT (bottom). The tables show a numerical summary of the images. The columns refer to: k - cluster size, pFWE–corr -

6.2. Runtime performance

6.2.1. Effect of hyperparameters on the speed of RapidPT

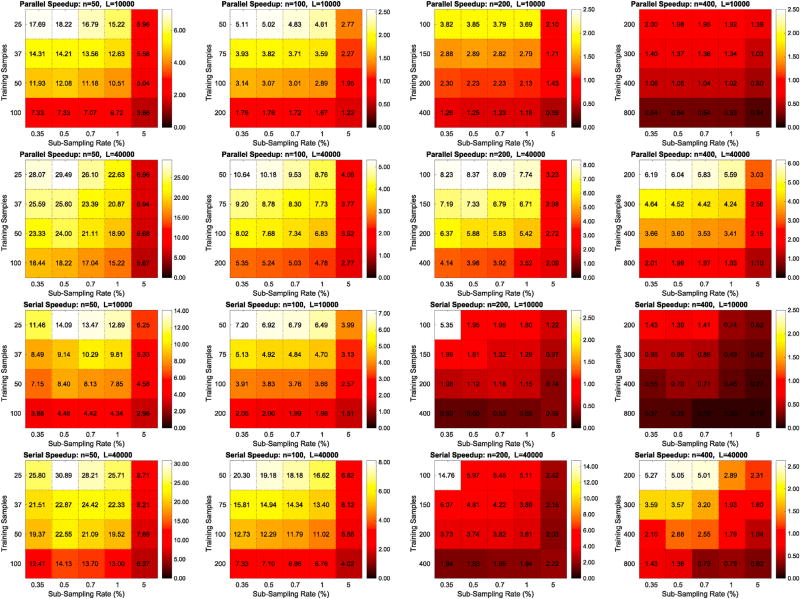

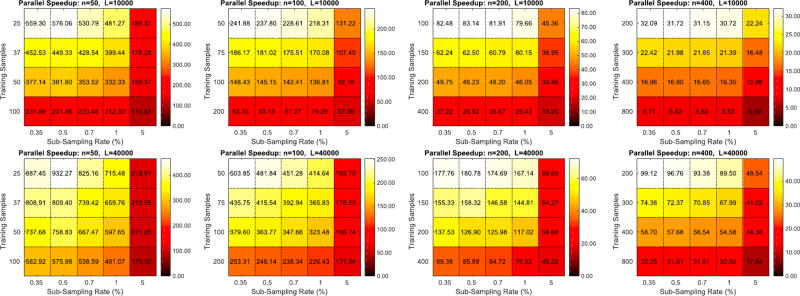

Figs. 12 and 13 show the speedup gains of RapidPT over SnPM and NaivePT, respectively. Each column corresponds to a single dataset, and each row corresponds to a different number of permutations. The supplementary results include an exhaustive version of these results that show the speedup gains of RapidPT for many additional number of permutations.

Fig. 12.

Colormaps of the speedup gains of RapidPT over SnPM in a serial and parallel computing environment. Each colormap corresponds to a run with a given dataset and a fixed number of permutations, and displays 20 different speedups resulting from different hyperparameter combinations. The first two rows correspond to the speedups obtained from the runs on 16 cores, and the last two columns from runs on a single core. Columns 1, 2, 3, and 4 of this figure are correspond to the 50, 100, 200, and 400 subject datasets, respectively.

Fig. 13.

Colormap of the speedup gains of RapidPT over NaivePT. The organization of the colormaps and the information they display is the same as Fig. 12. These speedups correspond to both programs running on a MATLAB instance that could use all 16 available cores.

As shown in Fig. 12, RapidPT outperforms SnPM in most scenarios. With the exception of the L = 2000 and L = 5000 runs on the larger datasets (n = 200 and n = 400), the colormaps show that RapidPT is 1.5–30× faster than SnPM. As expected, a low η (0.35%, 0.5%) and leads to the best runtime performance without a noticeable accuracy tradeoff, as can be seen also in Fig. 7 earlier.

Fig. 13 shows how RapidPT performs against a non-optimized permutation testing implementation. In this setup, RapidPT outperforms NaivePT in every single combination of the hyperparameters. The same speedup trends that were seen when comparing RapidPT and SnPM are seen between RapidPT and NaivePT but with a much larger magnitude. For the remainder of this section, the runtime results of NaivePT are no longer compared because the difference is too large.

6.2.2. Scaling of RapidPT vs. SnPM

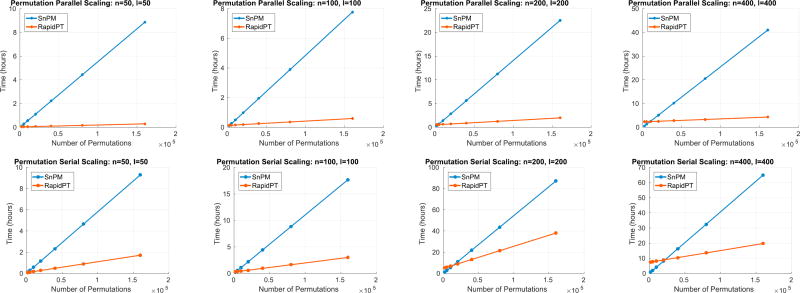

As opposed to η and l which only seem to have an impact on the runtime performance of RapidPT, the number of permutations and the size of the dataset have an impact on the runtime of both RapidPT and SnPM. In this section, we compare how RapidPT and SnPM scale as we vary these two parameters. Figs. 14 and 15 show the runtime performance of RapidPT for η = 0.35% and l = n.

Fig. 14.

Effect of the number of permutations on the runtime performance of RapidPT and SnPM on 16 cores (first row) and on a single core (second row). The hyperparameters used were: η = 0.35% and l = n.

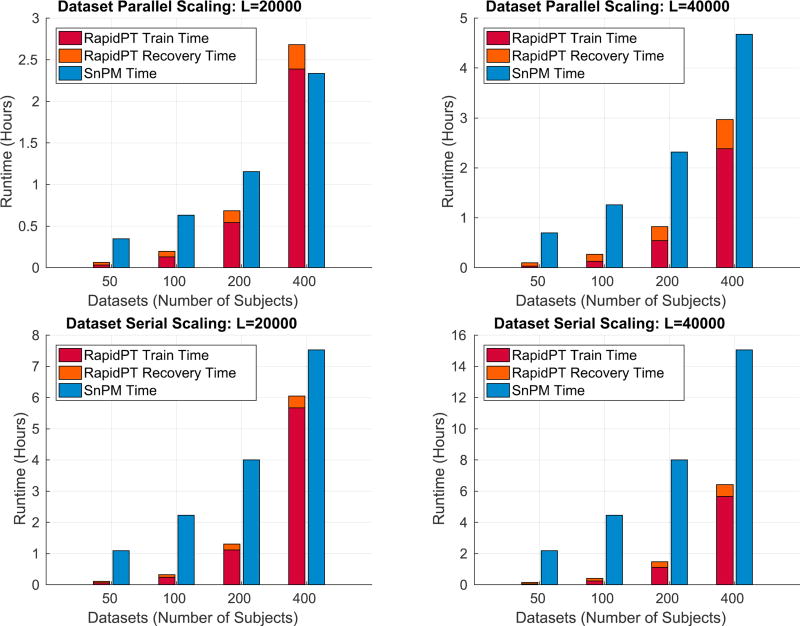

Fig. 15.

Effect of the dataset size on the performance of RapidPT and SnPM on 16 cores (first row) and on a single core (second row). The overall measured time of RapidPT is the result of the total time spent on the training phase and the recovery phase. The hyperparameters used were: η = 0.35%, L = 10000, and l = n.

Number of Permutations

Fig. 14 shows the super linear scaling of SnPM compared to RapidPT for all datasets as the number of permutation increases. Doubling the number of permutations in SnPM leads to an increase in the runtime by a factor of about two. On the other hand, doubling the number of permutations for RapidPT only affects the runtime of the recovery phase leading to small increases in the timing performance. The performance of both implementations is only comparable if we focus on the lower range of the number of permutations (5000) across datasets. As this number increases, as was shown in Fig. 12, RapidPT outperforms the permutation testing implementation within SnPM.

Dataset Size

Fig. 15 shows the effect of the dataset size on the runtime of RapidPT and SnPM. In SnPM, as expected, increasing the dataset by a factor of two leads to an increase on the runtime by a factor of two. In RapidPT, however, increasing the dataset size has a variable effect on the runtime. The training phase ends up contributing more to the runtime as the dataset size increases, while the recovery phase runtime increases at much slower rate.

Overall, scaling the number of permutations have a stronger impact on the runtime performance of SnPM than RapidPT. On the other hand, scaling the dataset size has a more negative effect on the timing of RapidPT than in SnPM. Furthermore, if both parameters are increased at the same time the runtime of SnPM increases at a much faster rate than the runtime of RapidPT.

7. Discussion

7.1. Accuracy

7.1.1. Recovered Max null distribution

Monte carlo permutation tests perform a randomization step where a random subset of the total number of permutations is chosen. This means that the constructed max null distribution from one run might slightly differ from another run, and as the number of permutations increases, this difference will decrease. In terms of KL-Divergence, this means that the KL-Divergence between two permutation testing runs on the same data will be small, but not exactly zero. The results show that given a good set of hyperparameters the KL-Divergence between a run of RapidPT versus a regular permutation testing run leads to a very low KL-Divergence which is the expected result, even if we run the same permutation testing program twice. The evaluated scenarios show that a sensible set of hyperparameters can be easily defined as long as the subsampling rate is sufficient for the recovery of the permutation testing matrix. The minimum number of sub-sampled entries needed to accurately recover T depends on the rank and dimensions of T as discussed in section 3.1 and in the supplement. For the simulation study with n = 30, the minimum η required was 1.6% of all entries as shown in Figs. 4 and 5. The experiments on the other 48 synthetic datasets (Fig. 6) used 2ηmin as the sub-sampling rate. In our experiments on the ADNI dataset, η ≥ 0.35% led to a large enough set of sub-sampled entries to obtain an accurate estimate of the max null distribution. For a brief discussion on how to pick a sensible η and the minimum sub-sampling rate (ηmin), please refer to section 3 of the supplementary material. Overall, once we have a sensible η, the resulting max null distribution constructed by RapidPT is consistent to the one recovered by regular permutation testing.

The number of permutations also has a significant impact on the KL-Divergence. As L increases, the KL-Divergence decreases. However, this is also true between any two permutation testing runs on the same dataset.

7.1.2. Evaluating p-values

As seen in the p-value spectrum plots, the p-values drawn from each max null distribution agree (given a good set of hyperparameters as discussed above). This follows from the low KL-Divergence, since the KL-Divergence is a measure of the overall difference between the distributions. The lowest differences, however, are located in the tails of the distributions. This means that the derived thresholds are expected to accept/reject almost the same null hypotheses.

The extremely low p-value regime

As we will discuss in section 7.2 the largest speedups from RapidPT are obtained as the number of permutations increases. The main reason to increase L is to obtain smaller p-values. Figs. 5 and 8 show that RapidPT recovers extremely accurate t-threshold (percent differences <0.1%). Figs. 9 and 10 demonstrate this in a practical scenario where a large number of permutation (L = 100, 000) and a high percentage of the brain regions that rejected the null had a p-value <0.001. Therefore, a user interested in using small p-values can benefit from large computational speedups when using RapidPT, with a negligible loss in accuracy.

7.1.3. Resampling risk

Small signal datasets

Although the p-values agree across methods, a small difference may lead to slightly more or less null hypotheses to be rejected by a given method. This has a direct impact on the resampling risk between RapidPT and regular permutation testing. In datasets where there is a small signal difference between groups, we may see an elevated resampling risk. The reason is because a very small number of null hypotheses will be rejected. Therefore, at a given p-value, p, one of the methods will reject a small number of null hypotheses which the second method will not reject until the p-value is p + δ, where δ≪1. This slight difference in the number of rejected null hypotheses may have a significant impact in the resampling risk. For instance in Fig. 11 (N = 200 at p = 0.05), RapidPT rejected 59 (out of ~ 568k statistics) null hypotheses and SnPM rejected 71: this led to a resampling risk of 8.45%. On the other hand, for N = 400 at p = 0.05 RapidPT rejected 2158 (out of ~ 570k statistics) and SnPM rejected 2241 null hypotheses, resulting in a lower resampling risk of 1.85% even when there is a larger difference in the number of rejected hypotheses. Nonetheless, as we can see in the brain maps in Figs. 9 and 10 that this difference of RapidPT's and SnPM's rejections were among the boundary voxels of the rejection region (i.e., the mismatch is not at the center of the rejection/significant region). Note that once a reasonable small smoothing filter is applied to nullify noise (e.g., stand-alone voxels) this apparent small difference will vanish visually. A similar situation is encountered in the 50 and 100 subject dataset (see supplement), where the number of null hypotheses rejected was extremely low (< 10) and single mismatch led to an elevated resampling risk. Therefore, the resampling risk is a useful measure if it is presented together with the number of null hypothesis being rejected. Hence, RapidPT yields a slightly more conservative test, primarily because it is based on sub-sampling. Nevertheless, this sub-sampling is robust to locating the same voxel clusters that displayed group differences as the other methods.

7.2. Runtime performance

Our results show that under certain scenarios RapidPT provides substantial speedups over SnPM (state of the art) and NaivePT (simple implementation). From the runtime performance of NaivePT, it is evident that in practice it is more beneficial for the user to rely on a permutation testing toolbox such as SnPM. In the remainder of the discussion we will just consider the runtime performance of RapidPT and SnPM.

Dataset Size

Overall, the largest speedups in both the serial and parallel setups were obtained in the runs for the smallest dataset (N = 50). The number of training samples used to estimate the basis U is smaller and consequently the training phase time decreases. As the dataset size increases, the training time introduces a considerable overhead which negatively impacts the speedup of RapidPT over SnPM when less than 20000 permutations are being performed.

Number of Permutations

The number of permutations have a linear impact on the runtime of both RapidPT and SnPM. But as shown in Fig. 14, RapidPT runtime increases at a lower rate than SnPM's. This is expected since the number of permutations only impacts the runtime of the recovery phase. Therefore, the training phase time for a given setup is constant as the number of permutations changes. The results show that for datasets with less than or equal to 400 subjects between 5000 – 10000 permutations is the threshold where despite the training overhead in RapidPT, the expected speedup is considerable to justify its use. In the larger datasets, when performing less than 5000 permutations, the training overhead becomes too large, as shown in the supplementary material.

7.2.1. Serial vs. parallel performance

The serial and parallel runs show very similar speedup trends across hyperparameters as shown in the results and supplementary material. However, in terms of actual runtime, both RapidPT and SnPM benefit from being able to run on a parallel environment. This is an essential feature for any software toolbox that will be running on modern workstations because multiple cores are available in nearly all computers shipped today.

7.3. Hyperparameter recommendations

Sub-sampling Rate

The KL-Divergence results, Figs. 4 and 7, show that as long as the sub-sampling rate is greater than or equal to a certain threshold, RapidPT is able to accurately recover the max null distribution. For the simulation study this threshold was 1.6% and for the real data experiments 0.35% was a high enough sub-sampling rate. A minimum ηmin can be calculated using LRMC theory as shown in Section 3 of the supplementary material. This minimum value is simply a function of the number of voxels and the number of data instances. The simulation results in Figs. 4 and 5 explicitly show this ηmin. The toolbox itself sets it to a default conservative value of 2∗ηmin, which is also shown in Figs. 4 and 5. This slightly larger choice ensures the error in recovering the null is almost negligible. Overall, the accuracy of RapidPT will not significantly improve as the sub-sampling rate increases (pass ηmin). On the other hand, a low sub-sampling rate can significantly reduce the runtime, in particular in the large L regime, and is therefore preferable. A user does not need to change this rate in practice (beyond what is given by the toolbox). However, if he/she is willing to be even more flexible by sacrificing some accuracy to achieve an even higher speed-up, it can be reduced appropriately.

Number of Training Samples

The number of training samples will ideally be the exact rank of T. This is usually not known, however, we know that the rank is bounded by the number of subjects in the data matrix used to generate T. Therefore, in our evaluations, we are able to accurately recover the max null distribution even when using as low as training samples. The recommended number of training samples in practice is be n (which follows from rank structure of the testing matrix) and is the default setting within RapidPT toolbox.

Number of Permutations

When a large number of permutations is desired, RapidPT should be strongly considered due to its runtime gains. Not only RapidPT provides considerable runtime gains in the large L regime, but also its accuracy will improve as the KL-Divergence results show. Note, however, that the user should also take into account the dataset size and look at the speedup colormaps to see the expected speedups.

Dataset size

Although not a hyperparameter, as discussed above, the dataset size should play a role when the user selects which method to use. Large datasets (n ≥ 200), RapidPT will be a good option if the user is planning to perform a large number of permutations. For medium-sized datasets (50 ≤ n ≤ 200), RapidPT will most likely lead to good speedup gains. For small datasets (n ≤ 50), although RapidPT might lead to speedup gains over regular permutation testing, the total runtime will be on the order of minutes anyway.

As we briefly discussed in Section 1, Winkler et al. (2016) provides several strategies for reducing the runtime of permutation testing. But the authors do not report significant speedup gains against regular permutation testing on a 50 subject dataset with ≈200000 voxels. On the other hand, RapidPT is able to consistently outperform a state of the art permutation testing implementation (SnPM) on a 50 subject dataset with ≈540k voxels. This boost in performance is, in large part, due to our low sub-sampling rates. Our subspace tracking algorithm is, nonetheless, able to perform recovery in this sparse sampling setting.

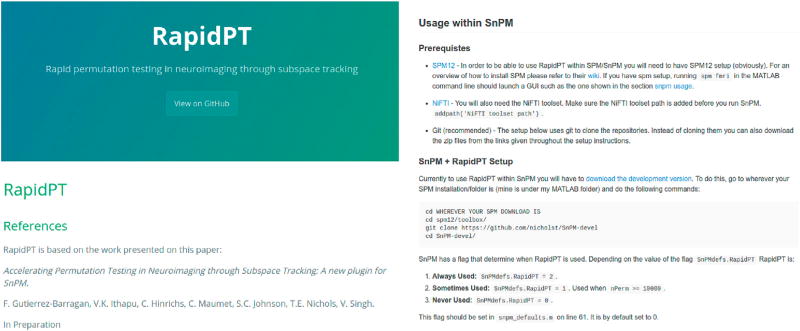

7.4. SnPM integration

SnPM is a toolbox that can be used within the software Statistical Parametric Mapping (SPM) SPM (2012). RapidPT has been integrated into the development version of SnPM SnPM (2013). This enables users to leverage the pre and post processing capabilities of SnPM. Through the graphical user interface (GUI) of SnPM the user can simply specify if they want to use RapidPT or not. Alternatively, the experienced user can also toggle a flag called RapidPT inside the snpm_defaults.m. Once this flag is set the user can simply proceed with their normal SnPM workflow. The SnPM GUI does not allow the user to set RapidPT's hyperparameters (η, l), however, the online documentation walks the user through the process of setting them manually. A preview of the online documentation can be seen in Fig. 16. Further discussion and walkthroughs of how to use SnPM and RapidPT within SnPM can be found in the documentation of both toolboxes Gutierrez-Barragan and Ithapu (2016); SnPM (2013). The RapidPT library webpage is at http://felipegb94.github.io/RapidPT/.

Fig. 16.

Screenshots of the RapidPT website (left) and SnPM integration documentation (right).

8. Conclusion

In this paper, we have presented a new algorithmic framework that is able to efficiently approximate the max null distribution commonly obtained through permutation testing. By exploiting the structure of the permutation testing matrix, T, and applying recent ideas from online matrix completion we show through our theoretical analysis and experimental evaluations that one can subsample entries of T at extremely low-rates and still construct a good estimate of T. The algorithm first goes through a training phase where the basis and the distribution of the residual of T are estimated. Then it continues into the recovery phase where a small number of entries of each column of T are calculated and the rest are estimated through matrix completion. Finally, we obtain the max null distribution from the maximum value of each column in T. Experiments on four varying sized datasets derived from the ADNI2 dataset showed that if we sub-sample at a high enough rate we can accurately recover the max null distribution at a fraction of the time in many scenarios. The implementation is available as a stand-alone open-source toolbox as well as a plugin for SnPM13 SnPM (2013), and is able to leverage multi-core architectures.

Supplementary Material

Acknowledgments

The authors thank Jia Xu for helping out with a preliminary implementation of the model. This work was supported in part by NIH R01 AG040396; NIH R01 EB022883; NIH R01 AG021155; NSF CAREER grant 1252725; NSF RI 1116584; UW ADRC P50 AG033514 and UW ICTR 1UL1RR025011. Hinrichs was supported by a CIBM post-doctoral fellowship at Wisconsin via NLM grant 2T15LM007359. Nichols and Maumet are supported by the Wellcome Trust (100309/Z/12/Z). The contents do not represent views of the Department of Veterans Affairs or the United States Government.

Footnotes

Data used in preparation of this article was obtained from the Alzheimer's Disease Neuroimaging Initiative (ADNI) database (adni.loni.ucla.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.ucla.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf.

Appendix A. Supplementary data

Supplementary data related to this article can be found at http://dx.doi.org/10.1016/j.neuroimage.2017.07.025.

References

- Arndt S, Cizadlo T, Andreasen N, Heckel D, Gold S, O'Leary D. Tests for comparing images based on randomization and permutation methods. J. Cereb. Blood Flow Metaholism. 1996;16:1271–1279. doi: 10.1097/00004647-199611000-00023. [DOI] [PubMed] [Google Scholar]

- Balzano L, Nowak R, Recht B. Online identification and tracking of subspaces from highly incomplete information; Communication, Control, and Computing (Allerton), 2010 48th Annual Allerton Conference on; Sep, 2010. pp. 704–711. [Google Scholar]

- Bennett J, Lanning S. The netflix prize. Proceedings of KDD Cup and Workshop. 2007;2007:35. [Google Scholar]

- Candès EJ, Recht B. Exact matrix completion via convex optimization. Found. Comput. Math. 2009;9(6):717–772. [Google Scholar]

- Candès E, Tao T. The power of convex relaxation: near-optimal matrix completion. IEEE Trans. Inf. Theor. 2010;56(5):2053–2080. http://dx.doi.org/10.1109/TIT.2010.2044061. [Google Scholar]

- Chandrasekaran V, Sanghavi S, Parrilo PA, Willsky AS. Rank-sparsity incoherence for matrix decomposition. SIAM J. Optim. 2011;21(2):572–596. [Google Scholar]