Abstract

Objective

To estimate the agreement of self-reported heart failure (HF) with physician-diagnosed HF and to compare the prevalence of HF by method of ascertainment.

Study Design and Setting

ARIC cohort members (60–83 years of age) were asked annually whether a physician had indicated that they had HF. For those self-reporting HF, physicians were asked to confirm their patient’s HF status. Physician-diagnosed HF was also ascertained from surveillance of HF hospitalizations and from hospitalized and outpatient HF identified in administrative claims databases.

We estimated sensitivity, specificity, positive predictive value, kappa, prevalence- and bias-adjusted kappa (PABAK), and prevalence.

Results

Compared to physician-diagnosed HF, sensitivity of self-report was low (28–38%) and specificity was high (96–97%). Agreement was poor (kappa 0.32–0.39) and increased when adjusted for prevalence and bias (PABAK 0.73–0.83). The prevalence of HF measured by self-report (9.0%), ARIC-classified hospitalizations (11.2%), or administrative hospitalization claims (12.7%) was similar. When outpatient HF claims were included, the prevalence of HF increased to 18.6%.

Conclusions

For accurate estimates of HF burden, self-reports of HF are best confirmed by appropriate diagnostic tests or medical records. Our results highlight the need for improved awareness and understanding of HF by patients, as accurate patient awareness of this diagnosis may enhance management of this common condition.

Keywords: heart failure, administrative claims, medical records, self-report

Background

Patient report of physician-diagnosed heart failure (HF) has been used clinically and to quantify the burden of HF in the community. In the National Health and Nutrition Examination Survey (NHANES), self-report of a physician diagnosis of HF is used to estimate the prevalence of HF in the United States (US). However, HF is difficult to diagnose and to identify in population research. Although this difficulty is not limited to self-reported HF, it may affect estimates comparing self-reported HF with clinically diagnosed HF [1], medical records [2–8], and health administrative data [4, 9]. Given the complexity of diagnosing and classifying HF, it may be difficult for health professionals to accurately inform patients of this diagnosis, which may limit the accuracy of self-reported physician-diagnosed HF and thus the practical advantage of using self-report to estimate the prevalence of HF.

Self-reports of coronary heart disease and myocardial infarction have greater validity than self-reports of HF. However, most prior studies compared self-reported HF with only a single benchmark. Because no consensus exists on a single HF classification scheme, examining the agreement and validity of self-reported HF against different benchmark definitions of HF is desirable. Thus, in the Atherosclerosis Risk in Communities (ARIC) study cohort, we examined individuals’ ability to convey prior diagnoses of HF by estimating the agreement of self-reported HF with confirmation of HF by the participant’s health care provider, hospital medical record abstraction, and the presence of HF International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) codes in administrative claims. We also compared estimates of HF prevalence based on these methods of ascertainment.

Methods

Study population

The ARIC study is an ongoing prospective cohort of 15,792 men and women aged 45–64 years at baseline (1987–1989) recruited from the following four US communities: Forsyth County, North Carolina; Jackson, Mississippi; Washington County, Maryland; and suburban Minneapolis, Minnesota [10]. Standardized physical examinations and interviewer-administered questionnaires were conducted at baseline and at four follow-up visits through 2012. Participants were additionally followed up annually (from 1987) and semi-annually (beginning in 2012) through telephone interviews and review of hospitalization and vital status records. The Institutional Review Board at each participating institution approved the study, and all participants provided written informed consent at each examination.

Self-reported heart failure

Starting in 2005, participants were asked about their HF status during annual follow-up telephone interviews. Participants who reported a diagnosis of HF, or reported that their heart was weak, that it did not pump as strongly as it should, or that they had fluid in their lungs, prior to 2005 were classified as having prevalent HF. Participants free of self-reported HF prior to 2005 were asked at the initial (2005) and subsequent annual telephone interviews whether, since the last time they were contacted, a doctor had said that they had HF or that their heart was weak or did not pump as strongly as it should. The approximate date of diagnosis and whether the participant reported a HF-related hospitalization were also collected. Participants were classified as having new self-reported HF if they answered “yes” to either of these questions.

Physician confirmed heart failure

In parallel with ascertainment of ARIC participants’ self-reported HF status, confirmation of HF was sought from each participant’s physician. If a participant reported being diagnosed with HF, or being told by a physician that his or her heart was pumping weakly, the participant was asked to authorize release of medical information from that physician. Once a signed authorization was obtained, the participant’s medical care provider was sent a survey to confirm the patient’s HF status, HF characteristics, and treatment status.

ARIC-classified heart failure

Prior to 2005, ARIC recorded ICD-9-CM codes but did not abstract HF records; we therefore excluded participants who had a heart failure-related ICD-9-CM discharge code of 428.x in any position. Starting in 2005, the ARIC study conducted continuous surveillance of hospitalized HF events, including acute decompensated HF (ADHF) and chronic stable HF (CSHF), among cohort participants. The medical records of all cohort hospitalizations are abstracted by trained study staff adhering to a common protocol [11]. Each record is reviewed for any evidence of relevant HF symptoms or mention of HF by a physician in the hospital record. If the hospital record contains such evidence, a detailed abstraction is completed. Abstracted data include the elements required by four commonly used diagnostic criteria (Framingham, modified Boston, NHANES, Gothenburg) and ICD-9-CM codes. Each hospitalization eligible for full abstraction is independently reviewed by two physicians, who are provided portions of the medical record and a report of the abstracted data. Reviewers then classify hospitalizations as definite ADHF, possible ADHF, chronic stable HF, HF unlikely, or unclassifiable. Hospitalizations classified as definite or possible ADHF or as chronic stable HF were considered confirmation of HF for our study.

Heart failure identified from administrative claims

ARIC cohort participants’ identifiers were linked with Centers for Medicare and Medicaid Services (CMS) Medicare claims for the years 1991–2013 using a finder file that included participants’ social security numbers, gender, and date of birth. Of the study participants with available social security numbers (n=15,744), 238 died before 1991 and 607 died after 1991 but before reaching the Medicare eligibility age of 65 years, leaving 14,899 eligible ARIC study participants. A crosswalk file was used to identify ARIC cohort participants eligible for Medicare coverage. The crosswalk between the ARIC study finder file and the Medicare Beneficiary Summary file yielded 14,702 ARIC cohort IDs for analyses (98.7% match).

Information concerning ARIC study participant enrollment in fee-for-service (FFS) Medicare was obtained from monthly indicators of enrollment in Part A, Part B, and Medicaid buy-in available from annual Medicare Beneficiary Summary files. Continuous enrollment periods were created to indicate uninterrupted FFS Medicare coverage, defined as enrollment in Medicare Part A and Part B as well as lack of enrollment in a Medicare Advantage (HMO) plan. All inpatient and outpatient claims were linked.
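The continuous-enrollment rule above (Part A and Part B, no Medicare Advantage plan, uninterrupted months) can be sketched as a run-length scan over monthly indicators. This is a minimal Python illustration, assuming a hypothetical `MonthlyEnrollment` record with one row per beneficiary-month; the field names are illustrative and do not correspond to actual Medicare Beneficiary Summary File variables, and the study itself was analyzed in SAS.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple


@dataclass
class MonthlyEnrollment:
    """One beneficiary-month of enrollment flags (hypothetical field names)."""
    year: int
    month: int      # 1-12
    part_a: bool    # enrolled in Medicare Part A
    part_b: bool    # enrolled in Medicare Part B
    hmo: bool       # enrolled in a Medicare Advantage (HMO) plan


def is_ffs(m: MonthlyEnrollment) -> bool:
    # FFS coverage as defined in the text: Part A and Part B, and no HMO plan.
    return m.part_a and m.part_b and not m.hmo


def month_index(m: MonthlyEnrollment) -> int:
    # Map each calendar month to a consecutive integer so gaps are easy to detect.
    return m.year * 12 + (m.month - 1)


def continuous_ffs_periods(months: List[MonthlyEnrollment]) -> List[Tuple[date, date]]:
    """Return (first month, last month) of each uninterrupted run of FFS months.

    Assumes at most one record per beneficiary-month.
    """
    periods = []
    run_start = run_end = None
    for m in sorted(months, key=month_index):
        if not is_ffs(m):
            continue  # a non-FFS month breaks the run; detected at the next FFS month
        if run_end is not None and month_index(m) == month_index(run_end) + 1:
            run_end = m  # next consecutive month: extend the current run
        else:
            if run_start is not None:
                periods.append((run_start, run_end))
            run_start = run_end = m  # start a new run
    if run_start is not None:
        periods.append((run_start, run_end))
    return [(date(s.year, s.month, 1), date(e.year, e.month, 1)) for s, e in periods]
```

For example, twelve months of 2005 coverage with a Medicare Advantage month in June yield two periods, January to May and July to December. Under the prevalence-cohort rule described later (at least one calendar month of FFS enrollment), any non-empty result would qualify a participant.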

Hospitalized HF was identified from Medicare Provider Annual Review (MedPAR) records using ICD-9-CM code 428.x in any position. Outpatient HF was identified from claims with Evaluation and Management service codes for new and established outpatient visits, consultations, and established preventive medicine visits matched with date of service found in the Carrier (Part B) claims. Similar to hospitalized HF, outpatient HF events were identified using HF-specific ICD-9-CM codes 428.0–428.9.
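The diagnosis-code rule amounts to a prefix match on every diagnosis position of a claim. The sketch below assumes each claim is a dictionary with hypothetical keys `source` ("medpar" or "carrier"), `dx` (all diagnosis codes on the claim), and `em_visit` (whether a Carrier claim has already been matched to a qualifying Evaluation and Management outpatient visit). It does not reproduce CMS file layouts or the specific service codes used in the study.

```python
from typing import Dict, Iterable, List

HF_ICD9_CATEGORY = "428"  # ICD-9-CM 428.x (428.0-428.9), per the text


def normalize_icd9(code: str) -> str:
    # Claims data often store ICD-9-CM codes without the decimal point
    # (e.g. "4280"), so strip it to treat "428.0" and "4280" identically.
    return code.replace(".", "").strip().upper()


def has_hf_code(diagnosis_codes: Iterable[str]) -> bool:
    """True if any diagnosis position carries an ICD-9-CM 428.x code."""
    return any(normalize_icd9(c).startswith(HF_ICD9_CATEGORY) for c in diagnosis_codes if c)


def classify_claims(claims: List[Dict]) -> Dict[str, bool]:
    """Flag hospitalized HF and hospitalized-or-outpatient HF for one participant."""
    hospitalized = any(c["source"] == "medpar" and has_hf_code(c["dx"]) for c in claims)
    outpatient = any(
        c["source"] == "carrier" and c.get("em_visit", False) and has_hf_code(c["dx"])
        for c in claims
    )
    return {"hospitalized_hf": hospitalized, "hosp_or_outpatient_hf": hospitalized or outpatient}
```

Keeping the hospitalized flag separate from the combined flag mirrors the two claims-based definitions compared in the Results.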

Eligibility

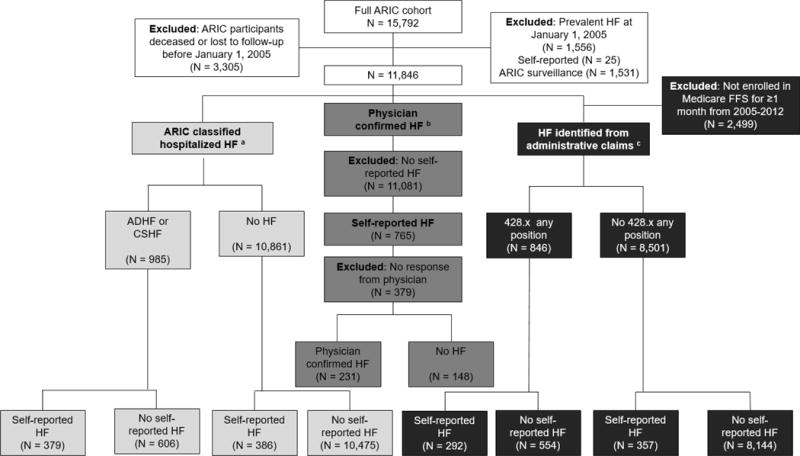

To assess measurement properties of self-reported HF, participants were excluded if deceased or lost to follow-up before January 1, 2005 (Figure 1). Participants were also excluded if they were hospitalized with a HF code (defined by an ICD-9-CM discharge code of 428.x in any position) or self-reported having HF prior to January 1, 2005.

Figure 1. Study Design, The Atherosclerosis Risk in Communities (ARIC) Study (2005–2012).

Abbreviations: Heart failure (HF), fee-for-service (FFS), acute decompensated heart failure (ADHF), chronic stable heart failure (CSHF)

^a Definite and probable acute decompensated heart failure and chronic stable heart failure ascertained from reviewed abstracted medical records

^b Ascertained from heart failure surveys sent to participants’ physicians for confirmation of heart failure diagnosis

^c Centers for Medicare and Medicaid Services Medicare claims

International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) code 428.x in any position present in the hospital record

ICD-9-CM 428.x in any position present in the hospital record or ICD-9-CM 428.x present in the outpatient record

Self-reported heart failure ascertained from ARIC annual follow-up telephone interview

To estimate and compare the prevalence of self-reported HF, ARIC-classified HF hospitalizations, and HF identified from Medicare administrative claims among participants alive during 2005–2012, we constructed a cohort of participants with information available for all methods of HF ascertainment. Participants were included if they responded to any telephone interview questions regarding HF and were continuously enrolled in Medicare fee-for-service for at least one calendar month from 2005–2012. No exclusions were made based on prevalent heart failure prior to 2005.

Statistical analysis

To verify self-reported HF, we calculated the proportion of self-reported HF confirmed by physicians (verification). Because only ARIC participants who self-reported having HF (yes) were followed up for physician confirmed HF (yes/no), other measures of validity were not possible using physician confirmation (Table 1). To directly compare self-reported HF to other methods of HF ascertainment, we also calculated verification for ARIC-classified HF and HF ascertained from administrative claims.

Table 1.

Agreement estimates of self-reported heart failure vs. objective measures of heart failure, The Atherosclerosis Risk in Communities (ARIC) Study

| Estimate | Physician Confirmed Heart Failure | ARIC-Classified Heart Failure^a | Heart Failure Identified from Administrative Claims^b |
|---|---|---|---|
| Verification^c | ✓ | ✓ | ✓ |
| Kappa | | ✓ | ✓ |
| PABAK | | ✓ | ✓ |
| Sensitivity | | ✓ | ✓ |
| Specificity | | ✓ | ✓ |
| PPV | | ✓ | ✓ |

Abbreviations: prevalence- and bias-adjusted kappa (PABAK); positive predictive value (PPV)

✓ Estimate calculated for objective measure of heart failure

^a Definite and probable acute decompensated heart failure and chronic stable heart failure ascertained from reviewed abstracted medical records

^b Centers for Medicare and Medicaid Services Medicare claims

^c Confirmed heart failure among self-reporters of heart failure

To compare self-reported HF (yes/no) with ARIC-classified HF (yes/no) or administrative claims (yes/no), participants were classified as true positives, true negatives, false positives, or false negatives for each comparison. We evaluated agreement (the number of true positives and true negatives divided by all participants), positive predictive value (PPV; the probability that a participant has recorded HF given that he or she self-reported HF), sensitivity (the probability of self-reporting HF among participants with recorded HF), specificity (the probability of not self-reporting HF among participants without recorded HF), and kappa (Table 1). Lastly, we computed the prevalence- and bias-adjusted kappa (PABAK) [12]. Because both prevalence and bias influence the magnitude of the kappa coefficient, and the prevalence of HF is relatively low (~10%), PABAK is considered an appropriate complement to the traditional kappa statistic. PABAK is calculated as 2I_o − 1, where I_o is the observed agreement.
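The measures defined above all follow from the four cells of each 2 × 2 comparison. The following Python sketch assumes the standard two-rater (Cohen’s) kappa, with chance agreement taken from the marginal totals; the text does not name the kappa variant, and the counts in the example are hypothetical, not ARIC data.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class TwoByTwo:
    tp: int  # self-reported HF, recorded HF
    fp: int  # self-reported HF, no recorded HF
    fn: int  # no self-reported HF, recorded HF
    tn: int  # no self-reported HF, no recorded HF

    @property
    def n(self) -> int:
        return self.tp + self.fp + self.fn + self.tn


def agreement_measures(t: TwoByTwo) -> Dict[str, float]:
    n = t.n
    observed = (t.tp + t.tn) / n  # I_o: proportion of concordant classifications
    # Chance agreement: product of the marginal "yes" proportions
    # plus product of the marginal "no" proportions.
    expected = ((t.tp + t.fp) * (t.tp + t.fn) + (t.fn + t.tn) * (t.fp + t.tn)) / n ** 2
    return {
        "agreement": observed,
        "sensitivity": t.tp / (t.tp + t.fn),
        "specificity": t.tn / (t.tn + t.fp),
        "ppv": t.tp / (t.tp + t.fp),
        "kappa": (observed - expected) / (1 - expected),
        "pabak": 2 * observed - 1,
    }


# Hypothetical counts for illustration only (n = 1,000)
example = agreement_measures(TwoByTwo(tp=40, fp=10, fn=20, tn=930))
for name, value in example.items():
    print(f"{name}: {value:.3f}")
```

With these illustrative counts, observed agreement is 0.97 and PABAK is 0.94, while kappa is about 0.71, because expected chance agreement (0.896) is already high when nearly all participants are negative on both measures.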

Prevalence and 95% confidence intervals (CI) for self-reported HF, ARIC-classified HF hospitalizations, and HF identified from Medicare administrative claims diagnoses were estimated for 2005 through 2012. We made no attempt to align the timing of HF self-report with HF hospitalizations or administrative claims; rather, affirmative responses at any time were counted as agreement. All analyses were conducted using SAS version 9.4 (SAS Institute Inc., Cary, North Carolina).
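The text does not state how the 95% CIs for prevalence were computed. The sketch below assumes a normal-approximation (Wald) interval for a binomial proportion. Applied to counts reported in the Results (for example, 649 of 9,347 participants self-reporting HF, or 231 of 379 physician-verified self-reports), it gives values matching the published intervals to one decimal place, but it is a reconstruction, not the authors’ SAS code.

```python
import math
from typing import Tuple


def wald_ci(events: int, n: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Proportion with a normal-approximation (Wald) confidence interval."""
    p = events / n
    se = math.sqrt(p * (1 - p) / n)
    return p, p - z * se, p + z * se


def format_percent(events: int, n: int) -> str:
    p, lower, upper = wald_ci(events, n)
    return f"{100 * p:.1f}% (95% CI: {100 * lower:.1f}, {100 * upper:.1f})"


# Counts from the Results; denominator is the 9,347 Medicare FFS participants
for label, events in [
    ("Self-reported HF", 649),
    ("ARIC-classified HF", 824),
    ("Hospitalized HF, claims", 846),
    ("Hospitalized or outpatient HF, claims", 1391),
]:
    print(label, format_percent(events, 9347))
```

A Wald interval can fall below zero for very rare outcomes; at the prevalences here (roughly 7% to 15% with n = 9,347) that is not a practical concern.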

Results

Of the 15,792 members of the ARIC cohort, 3,305 were deceased (n=2,884) or lost to follow-up before January 1, 2005, and 1,556 participants were classified as having prevalent HF, leaving 11,846 participants for these analyses (Figure 1). Among eligible ARIC participants, 59% were female and 25% were African American (Table 2), and ages ranged from 60 to 83 years in 2005. Over 40% of participants had hypertension and 12% had diabetes at baseline (2005). ARIC participants with Medicare fee-for-service coverage were similar in age, gender, race, and comorbidity composition to all eligible ARIC participants. Participants who self-reported HF were older, more likely to be male, and had a higher prevalence of diabetes, hypertension, myocardial infarction, stroke, and coronary heart disease at baseline (Table 2).

Table 2.

Descriptive characteristics by heart failure ascertainment, The Atherosclerosis Risk in Communities (ARIC) Study, 2005–2012

ARIC participants who may be classified as having heart failure:

| Characteristic | Physician confirmed heart failure (N = 379) | ARIC-classified heart failure^a (N = 11,846) | Heart failure identified from administrative claims^b (N = 9,347) |
|---|---|---|---|
| Age (years), mean (standard deviation)^c | 72 (5.6) | 71 (5.7) | 71 (5.5) |
| N (%) | | | |
| Female | 181 (48) | 6,960 (59) | 5,584 (60) |
| African American | 100 (26) | 2,912 (25) | 2,492 (27) |
| Center | | | |
| Forsyth County | 58 (15) | 3,104 (26) | 2,049 (22) |
| Jackson | 93 (24) | 2,532 (21) | 2,219 (24) |
| Minneapolis | 104 (27) | 3,236 (27) | 2,266 (24) |
| Washington County | 124 (33) | 2,974 (25) | 2,813 (30) |
| Comorbidities | | | |
| Diabetes^d | 82 (22) | 1,386 (12) | 1,161 (12) |
| Hypertension^e | 236 (62) | 5,270 (44) | 4,340 (46) |
| Myocardial infarction^f | 67 (18) | 551 (5) | 452 (5) |
| Stroke^g | 24 (6) | 331 (3) | 288 (3) |
| Coronary heart disease^h | 90 (24) | 654 (6) | 536 (6) |

^a Definite and probable acute decompensated heart failure and chronic stable heart failure ascertained from reviewed abstracted medical records

^b Centers for Medicare and Medicaid Services (CMS) Medicare fee-for-service inpatient and outpatient claims

^c Age at start of follow-up (January 1, 2005)

^d Fasting glucose ≥126 mg/dL, non-fasting glucose ≥200 mg/dL, use of medication for diabetes, or self-reported physician diagnosis of diabetes prior to start of follow-up (January 1, 2005)

^e Systolic blood pressure ≥140 mmHg, diastolic blood pressure ≥90 mmHg, or use of medication for high blood pressure prior to start of follow-up (January 1, 2005)

^f Prevalent myocardial infarction ascertained from ARIC surveillance

^g Prevalent stroke ascertained from ARIC surveillance

^h Prevalent coronary heart disease ascertained from ARIC surveillance

Prevalence

Of the 9,347 eligible participants enrolled in Medicare fee-for-service, 649 self-reported HF during at least one telephone interview (6.9%, 95% CI: 6.4, 7.5), 824 had hospitalizations with a diagnosis of HF confirmed by ARIC (8.8%, 95% CI: 8.2, 9.4), 846 were hospitalized with a HF-related ICD-9-CM discharge code of 428.x identified from administrative claims (9.1%, 95% CI: 8.5, 9.6), and 1,391 were hospitalized or seen in the outpatient setting for HF identified from administrative claims (14.9%, 95% CI: 14.2, 15.6; Supplemental Table 2).

Verification of heart failure

Between January 1, 2005 and December 31, 2012, 379 of the 765 participants who self-reported HF (49.5%; Supplemental Table 1) had information on HF status from their physician. For 231 of these, the physician confirmed that the patient had HF (verification = 60.9%, 95% CI: 56.0, 65.9; Table 3).

Table 3.

Agreement between self-report of heart failure and objective measures of heart failure, The Atherosclerosis Risk in Communities (ARIC) Study, 2005–2012

| Estimate | Physician confirmed heart failure (N = 379) | ARIC-classified heart failure^a (N = 11,846) | Administrative claims^b: heart failure hospitalizations^c (N = 9,347) | Administrative claims^b: heart failure hospitalizations and outpatient visits^d (N = 9,347) |
|---|---|---|---|---|
| Verification^e, % (95% CI) | 60.9 (56.0, 65.9) | 49.5 (46.0, 53.1) | 45.0 (41.1, 48.8) | 60.9 (57.1, 64.6) |
| Sensitivity, % (95% CI) | – | 38.5 (35.4, 41.6) | 34.5 (31.3, 37.8) | 28.4 (26.1, 30.9) |
| Specificity, % (95% CI) | – | 96.4 (96.1, 96.8) | 95.8 (95.3, 96.2) | 96.8 (96.4, 97.2) |
| Positive predictive value, % (95% CI) | – | 49.5 (45.9, 53.1) | 45.0 (41.3, 48.9) | 60.9 (57.0, 64.6) |
| Kappa (95% CI) | – | 0.39 (0.35, 0.42) | 0.34 (0.31, 0.37) | 0.32 (0.29, 0.35) |
| PABAK (95% CI) | – | 0.83 (0.82, 0.85) | 0.81 (0.79, 0.82) | 0.73 (0.71, 0.75) |

Abbreviations: confidence interval (CI); prevalence- and bias-adjusted kappa (PABAK)

Confirmed heart failure: physician confirmed heart failure N = 231; ARIC-classified heart failure N = 379; hospitalized heart failure identified from administrative claims N = 292; hospitalized and outpatient heart failure identified from administrative claims N = 395

Prevalence: self-reported heart failure (6.9%); ARIC-classified heart failure (8.8%); hospitalized heart failure identified from administrative claims (9.1%); hospitalized and outpatient heart failure identified from administrative claims (14.9%)

^a Definite and probable acute decompensated heart failure and chronic stable heart failure ascertained from reviewed abstracted medical records

^b Centers for Medicare and Medicaid Services Medicare claims

^c International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) code 428.x in any position present in the hospital record

^d ICD-9-CM 428.x in any position present in the hospital record or ICD-9-CM 428.x present in the outpatient record

^e Confirmed heart failure among self-reporters of heart failure

Comparison of self-reported and ARIC-classified heart failure

Of the 765 ARIC participants who self-reported HF, 379 (50%) were classified as having HF by ARIC study criteria (Supplemental Table 1). The probability that a participant was classified by ARIC as having HF given that he or she self-reported HF (PPV) was 49.5% (95% CI: 45.9, 53.1; Table 3). The sensitivity of self-reported HF vs. ARIC-classified HF was low (38.5%, 95% CI: 35.4, 41.6) and specificity was high (96.4%, 95% CI: 96.1, 96.8). Agreement of self-reported HF with ARIC-classified HF was kappa 0.39 (95% CI: 0.35, 0.42) and PABAK 0.83 (95% CI: 0.82, 0.85).

Comparison of self-reported heart failure and heart failure identified from administrative claims

Of the 11,846 eligible ARIC participants, 9,347 were enrolled in Medicare fee-for-service for at least one calendar month from January 1, 2005 to December 31, 2012. Among them, 45% (95% CI: 41.2, 48.8) of those who self-reported HF had been hospitalized with a HF code according to administrative claims, and 60.9% (95% CI: 57.1, 64.6) had been hospitalized or seen in the outpatient setting with a HF code (Table 3). Agreement between self-reported HF and hospitalized HF identified from administrative claims, measured by kappa, was 0.34 (95% CI: 0.31, 0.37), and agreement with HF identified from administrative hospitalization and outpatient claims decreased to 0.32 (95% CI: 0.29, 0.34). Similarly, sensitivity and PABAK decreased when outpatient claims were included in the comparison definition, partly because participants shifted from true negatives to false negatives (Supplemental Table 1). In contrast, PPV increased (from 45.0% to 60.9%), because some participants who self-reported HF shifted from false positives to true positives.
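Why sensitivity and PABAK fall while PPV rises when outpatient claims are added can be seen by tracking the 2 × 2 cells. Broadening the reference definition leaves self-report unchanged, so participants can only move from false positive to true positive (self-reporters who gain a claims diagnosis) or from true negative to false negative (non-reporters who gain one). The counts below are hypothetical and chosen only to show the direction of change.

```python
from typing import Dict


def summarize(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    n = tp + fp + fn + tn
    return {
        "sensitivity": tp / (tp + fn),
        "ppv": tp / (tp + fp),
        "pabak": 2 * (tp + tn) / n - 1,  # 2 * observed agreement - 1
    }


def broaden_reference(cells: Dict[str, int], fp_to_tp: int, tn_to_fn: int) -> Dict[str, int]:
    """Reclassify cells when more participants count as having HF in the reference source."""
    return {
        "tp": cells["tp"] + fp_to_tp,  # self-reporters newly confirmed
        "fp": cells["fp"] - fp_to_tp,
        "fn": cells["fn"] + tn_to_fn,  # non-reporters newly found to have HF
        "tn": cells["tn"] - tn_to_fn,
    }


hospital_only = {"tp": 40, "fp": 60, "fn": 60, "tn": 840}  # hypothetical counts
with_outpatient = broaden_reference(hospital_only, fp_to_tp=20, tn_to_fn=100)

before, after = summarize(**hospital_only), summarize(**with_outpatient)
for key in before:
    print(f"{key}: {before[key]:.3f} -> {after[key]:.3f}")
```

PABAK depends only on observed agreement, which gains one concordant participant for each FP→TP move and loses one for each TN→FN move. It therefore declines whenever more non-reporters than self-reporters gain a claims diagnosis, as in this example (0.76 to 0.60).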

Discussion

Because health-status questionnaires remain important tools in clinical settings and in public health research, we assessed the accuracy of self-reported HF compared with physician-diagnosed HF, evaluating the agreement between self-reported HF and a diagnosis of HF by the individual’s health care provider, prior indications of HF in the individual’s hospital records, and HF diagnostic codes in administrative claims. We observed low agreement (kappa 0.32–0.39) between self-reported and physician-diagnosed HF, and self-reports of HF were characterized by frequent false positives and false negatives. Adjusting kappa for prevalence and bias increased agreement to 0.73–0.83. Sensitivity was low (28–38%) and specificity was high (96–97%) for self-reported HF compared with all measures of physician-diagnosed HF. The prevalence of ARIC-classified HF and of hospitalized HF ascertained from administrative claims was similar. However, the prevalence of self-reported HF was lower, and the prevalence of pooled hospitalized and outpatient HF ascertained from administrative claims was higher, than the prevalence of hospitalized HF.

Multiple benchmarks

Previous studies have compared self-reported HF with a single validation benchmark, making comparisons across studies difficult. Our study was therefore designed to directly compare the agreement between self-reported HF and physician-diagnosed HF ascertained by in-depth medical record review, administrative claims, and confirmation from a physician. Among previous reports estimating the agreement of self-reports of physician-diagnosed HF with medical records, kappa ranged from 0.30 to 0.48, with low to fair sensitivity (31–69%) and high specificity (91–97%) [2–8]. Our study yielded similar results, with low sensitivity (39%) and agreement (kappa 0.32–0.39) and high specificity (96%).

Baumeister et al. compared self-reported HF with physical examinations and laboratory data [1] and reported higher agreement (kappa=0.74) and sensitivity (89%) than other studies [2–8]. Although our data do not allow a direct comparison with Baumeister et al., verification of self-reported HF in our study was highest against physician confirmation of HF (61%). Although we chose confirmation of HF by the participant’s physician as a benchmark, we sought physician confirmation only for study participants who self-reported HF for the first time during an annual telephone interview. Approximately 50% of physicians returned the HF survey, and as a result, 379 participant self-reports of HF were available for verification by a physician. Although the characteristics of study participants did not differ according to their physician’s response to the survey, this low response rate limits the generalizability of the observed rate of physician confirmation of self-reported HF.

As with medical record review, studies comparing self-report with HF identified from hospital administrative claims reported low agreement (kappa 0.19–0.33) and sensitivity (26%) and high specificity (99%) [4, 9]. Among ARIC participants with CMS Medicare fee-for-service coverage, sensitivity (28–35%), specificity (96–97%), and agreement (kappa 0.32–0.34) were comparable. Although our results can be compared with those of other studies, the validity of administrative data for recording HF varies widely [13], and hospitalizations are recorded in administrative claims in significantly different ways than in medical records [14, 15]. Despite such differences, hospital records and Medicare administrative claims agree closely in identifying individuals discharged from hospital with HF diagnostic codes.

Patients’ Awareness and Understanding of HF

Previous studies indicated that self-reported conditions characterized by complex, nonspecific symptoms, such as HF, agree poorly with objective measures of the condition, in contrast to conditions that are better characterized and more easily diagnosed, such as myocardial infarction, stroke, and diabetes [2–6, 9, 16]. The low agreement of self-reported HF with physician-diagnosed HF may thus reflect the complexity of HF as a syndrome and its varied presentations. The current lack of consensus on the combination of signs and symptoms used to classify HF, which has resulted in several classification schemes for clinical and research settings [17], may lead clinicians to be cautious when conveying a diagnosis of HF to their patients. The increasing use of functional tests and biomarkers to diagnose and manage HF in primary care should help clinicians make more accurate diagnoses of HF, convey this information to patients, and engage them in an evidence-informed management plan. Just as practitioners face difficulties in conveying an accurate diagnosis of HF, patients face challenges in distinguishing a diagnosis of HF from other medical conditions with similar symptoms and characteristics. As a case in point, the chronic morbidity that characterized many of the study participants who self-reported HF may have contributed to the lack of agreement with practitioners’ diagnoses: among the 148 individuals whose physicians indicated that they did not have the HF they had self-reported, 69.6% had a diagnosis of atrial fibrillation (n=103), angina pectoris (n=37), a previous myocardial infarction (n=20), or another form of ischemic heart disease (n=60). Conditions such as these may have led to self-reports of HF.

Prevalence and bias adjusted kappa (PABAK)

Because agreement measures may be influenced by the low prevalence of HF (<10%), kappa coefficients may be decomposed into factors that reflect observed agreement, bias, and prevalence. Particularly for comparisons across studies, it is informative to report kappa values together with the effects of bias and prevalence on agreement. In contrast to the low kappa estimates, PABAK estimates were considerably higher (0.73–0.83), although PABAK, while adjusting for prevalence, may overestimate agreement. The effects of bias and prevalence on the magnitude of kappa are therefore of interest, and although it has been argued that kappa should not be adjusted for them [18], we provided such measures of agreement [19] alongside the value of kappa [20].
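The decomposition mentioned above can be made concrete with the identity given by Byrt, Bishop and Carlin when they introduced PABAK. With cells a (true positives), b (false positives), c (false negatives), d (true negatives) and n participants, the prevalence index is PI = (a − d)/n, the bias index is BI = (b − c)/n, and kappa = (PABAK − PI² + BI²)/(1 − PI² + BI²). The Python sketch below checks that identity against kappa computed directly from observed and chance agreement; the counts are hypothetical.

```python
from typing import Dict


def kappa_components(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    n = tp + fp + fn + tn
    observed = (tp + tn) / n
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    pabak = 2 * observed - 1
    # Prevalence index: large in magnitude when nearly everyone is negative (or positive).
    prevalence_index = (tp - tn) / n
    # Bias index: difference between the proportions classified as HF
    # by self-report (tp + fp) and by the reference source (tp + fn).
    bias_index = (fp - fn) / n
    kappa_direct = (observed - expected) / (1 - expected)
    kappa_from_parts = (pabak - prevalence_index ** 2 + bias_index ** 2) / (
        1 - prevalence_index ** 2 + bias_index ** 2
    )
    return {
        "pabak": pabak,
        "prevalence_index": prevalence_index,
        "bias_index": bias_index,
        "kappa_direct": kappa_direct,
        "kappa_from_parts": kappa_from_parts,
    }


# Hypothetical counts for illustration only (n = 1,000)
parts = kappa_components(tp=40, fp=10, fn=20, tn=930)
for name, value in parts.items():
    print(f"{name}: {value:.4f}")
```

With these counts the prevalence index is −0.89, so an observed agreement of 0.97 (PABAK 0.94) corresponds to a kappa of only about 0.71. The rarer the condition, the larger PI² becomes and the further kappa falls below PABAK at the same observed agreement.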

Prevalence of HF

We estimated the prevalence of HF by ascertainment method in the study population and observed that 6.9% of ARIC participants, whose average age was 71 years, self-reported HF during annual follow-up telephone interviews. This is somewhat lower than the 8–10% reported by other population-based surveys [21–23] and higher than the 4.6% self-reported by a population of comparable age in the Health and Retirement Study [23]. Although the prevalence of ARIC-classified HF hospitalizations (8.8%) and of hospitalized HF identified from administrative claims (9.1%) was similar, only 562 of the 1,108 participants with either type of HF hospitalization were identified in both sources (Supplemental Table 3). In turn, only 244 (17.6%) participants who self-reported HF were identified both by ARIC adjudication of medical records and by a HF hospitalization in administrative claims.

As hypothesized, including outpatient claims substantially increased the prevalence of HF ascertained from administrative claims. Although approximately half of HF patients are managed in the outpatient setting (without an associated hospitalization) and HF is increasingly diagnosed and treated in outpatient clinics [24, 25], reported population estimates of HF rarely include outpatient HF. These temporal trends in the medical care of HF, coupled with the variability in prevalence estimates noted above, underscore the importance of specifying the definition and source of HF events when reporting frequency estimates.

Conclusion

Our results suggest that agreement of self-reported HF with physician confirmed HF, prior indications of HF in the patient’s health record, and with HF identified from administrative claims is low to fair, and self-reported HF is insensitive. While prevalence estimates of self-reported HF are comparable to those from hospitalizations with HF discharge diagnoses, the agreement between these sources in the identification of “cases” of HF is low to poor. For accurate population estimates of HF, self-reported HF data should be coupled with other sources such as diagnostic tests or medical records. The observed low accuracy of self-reported HF suggests that complexities in the diagnosis of HF make it challenging for health professionals to consistently and accurately convey this diagnosis to patients. These results highlight the need for improved awareness and understanding of HF by patients to enable their participation in the management of HF toward improved clinical outcomes.

Supplementary Material

Highlights.

Sensitivity of self-reported HF was low

Specificity of self-reported HF was high

Agreement of self-reported HF with physician diagnosed HF was poor

Self-reports of HF are best confirmed by diagnostic tests or medical records

There is a need for improved awareness and understanding of HF by patients

Acknowledgments

The authors thank the staff and participants of the ARIC study for their important contributions. The Atherosclerosis Risk in Communities Study is carried out as a collaborative study supported by National Heart, Lung, and Blood Institute contracts (HHSN268201100005C, HHSN268201100006C, HHSN268201100007C, HHSN268201100008C, HHSN268201100009C, HHSN268201100010C, HHSN268201100011C, and HHSN268201100012C).

RC was supported by the National Heart, Lung, and Blood Institute GRS Diversity Supplement (HHSN268201100007C).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Ricky Camplain, Northern Arizona University, Center for Health Equity; The University of North Carolina at Chapel Hill, Department of Epidemiology, PO Box 4065, Northern Arizona University, Flagstaff, AZ 86011-4065.

Anna Kucharska-Newton, The University of North Carolina at Chapel Hill, Department of Epidemiology.

Laura Loehr, The University of North Carolina at Chapel Hill, Department of Epidemiology.

Thomas C. Keyserling, The University of North Carolina at Chapel Hill, Department of Medicine

J. Bradley Layton, The University of North Carolina at Chapel Hill, Department of Epidemiology.

Lisa Wruck, Center for Predictive Medicine, Duke Clinical Research Institute.

Aaron R. Folsom, University of Minnesota School of Public Health, Division of Epidemiology and Community Health.

Alain G. Bertoni, Wake Forest School of Medicine, Department of Epidemiology and Prevention.

Gerardo Heiss, The University of North Carolina at Chapel Hill, Department of Epidemiology.

References

1. Baumeister H, et al. High agreement of self-report and physician-diagnosed somatic conditions yields limited bias in examining mental-physical comorbidity. J Clin Epidemiol. 2010;63(5):558–65. doi: 10.1016/j.jclinepi.2009.08.009.

2. Okura Y, et al. Agreement between self-report questionnaires and medical record data was substantial for diabetes, hypertension, myocardial infarction and stroke but not for heart failure. J Clin Epidemiol. 2004;57(10):1096–103. doi: 10.1016/j.jclinepi.2004.04.005.

3. Merkin SS, et al. Agreement of self-reported comorbid conditions with medical and physician reports varied by disease among end-stage renal disease patients. J Clin Epidemiol. 2007;60(6):634–42. doi: 10.1016/j.jclinepi.2006.09.003.

4. Teh R, et al. Agreement between self-reports and medical records of cardiovascular disease in octogenarians. J Clin Epidemiol. 2013;66(10):1135–43. doi: 10.1016/j.jclinepi.2013.05.001.

5. Prevalence of chronic diseases in older Italians: comparing self-reported and clinical diagnoses. The Italian Longitudinal Study on Aging Working Group. Int J Epidemiol. 1997;26(5):995–1002. doi: 10.1093/ije/26.5.995.

6. Simpson CF, et al. Agreement between self-report of disease diagnoses and medical record validation in disabled older women: factors that modify agreement. J Am Geriatr Soc. 2004;52(1):123–7. doi: 10.1111/j.1532-5415.2004.52021.x.

7. Tisnado DM, et al. What is the concordance between the medical record and patient self-report as data sources for ambulatory care? Med Care. 2006;44(2):132–40. doi: 10.1097/01.mlr.0000196952.15921.bf.

8. Heckbert SR, et al. Comparison of self-report, hospital discharge codes, and adjudication of cardiovascular events in the Women’s Health Initiative. Am J Epidemiol. 2004;160(12):1152–8. doi: 10.1093/aje/kwh314.

9. Muggah E, et al. Ascertainment of chronic diseases using population health data: a comparison of health administrative data and patient self-report. BMC Public Health. 2013;13:16. doi: 10.1186/1471-2458-13-16.

10. The Atherosclerosis Risk in Communities (ARIC) Study: design and objectives. The ARIC investigators. Am J Epidemiol. 1989;129(4):687–702.

11. Rosamond WD, et al. Classification of heart failure in the atherosclerosis risk in communities (ARIC) study: a comparison of diagnostic criteria. Circ Heart Fail. 2012;5(2):152–9. doi: 10.1161/CIRCHEARTFAILURE.111.963199.

12. Byrt T, Bishop J, Carlin JB. Bias, prevalence and kappa. J Clin Epidemiol. 1993;46(5):423–9. doi: 10.1016/0895-4356(93)90018-v.

13. Quach S, Blais C, Quan H. Administrative data have high variation in validity for recording heart failure. Can J Cardiol. 2010;26(8):306–12. doi: 10.1016/s0828-282x(10)70438-4.

14. Kucharska-Newton AM, et al. Identification of Heart Failure Events in Medicare Claims: The Atherosclerosis Risk in Communities (ARIC) Study. J Card Fail. 2016;22(1):48–55. doi: 10.1016/j.cardfail.2015.07.013.

15. Saczynski JS, et al. A systematic review of validated methods for identifying heart failure using administrative data. Pharmacoepidemiol Drug Saf. 2012;21(Suppl 1):129–40. doi: 10.1002/pds.2313.

16. Colditz GA, et al. Validation of questionnaire information on risk factors and disease outcomes in a prospective cohort study of women. Am J Epidemiol. 1986;123(5):894–900. doi: 10.1093/oxfordjournals.aje.a114319.

17. Loehr LR, et al. Classification of acute decompensated heart failure: an automated algorithm compared with a physician reviewer panel: the Atherosclerosis Risk in Communities study. Circ Heart Fail. 2013;6(4):719–26. doi: 10.1161/CIRCHEARTFAILURE.112.000195.

18. Hoehler FK. Bias and prevalence effects on kappa viewed in terms of sensitivity and specificity. J Clin Epidemiol. 2000;53(5):499–503. doi: 10.1016/s0895-4356(99)00174-2.

19. Chen G, et al. Measuring agreement of administrative data with chart data using prevalence unadjusted and adjusted kappa. BMC Med Res Methodol. 2009;9:5. doi: 10.1186/1471-2288-9-5.

20. Sim J, Wright CC. The kappa statistic in reliability studies: use, interpretation, and sample size requirements. Phys Ther. 2005;85(3):257–68.

21. Kitzman DW, et al. Importance of heart failure with preserved systolic function in patients ≥65 years of age. CHS Research Group. Cardiovascular Health Study. Am J Cardiol. 2001;87(4):413–9. doi: 10.1016/s0002-9149(00)01393-x.

22. Gillum RF. Epidemiology of heart failure in the United States. Am Heart J. 1993;126(4):1042–7. doi: 10.1016/0002-8703(93)90738-u.

23. Gure TR, et al. Degree of disability and patterns of caregiving among older Americans with congestive heart failure. J Gen Intern Med. 2008;23(1):70–6. doi: 10.1007/s11606-007-0456-1.

24. Ezekowitz JA, et al. Trends in heart failure care: has the incident diagnosis of heart failure shifted from the hospital to the emergency department and outpatient clinics? Eur J Heart Fail. 2011;13(2):142–7. doi: 10.1093/eurjhf/hfq185.

25. Yeung DF, et al. Trends in the incidence and outcomes of heart failure in Ontario, Canada: 1997 to 2007. CMAJ. 2012;184(14):E765–73. doi: 10.1503/cmaj.111958.
