Abstract

We present a full analysis of data from our preliminary report (Lafer-Sousa, Hermann, & Conway, 2015) and test whether #TheDress image is multistable. A multistable image must give rise to more than one mutually exclusive percept, typically within single individuals. Clustering algorithms of color-matching data showed that the dress was seen categorically, as white/gold (W/G) or blue/black (B/K), with a blue/brown transition state. Multinomial regression predicted categorical labels. Consistent with our prior hypothesis, W/G observers inferred a cool illuminant, whereas B/K observers inferred a warm illuminant; moreover, subjects could use skin color alone to infer the illuminant. The data provide some, albeit weak, support for our hypothesis that day larks see the dress as W/G and night owls see it as B/K. About half of observers who were previously familiar with the image reported switching categories at least once. Switching probability increased with professional art experience. Priming with an image that disambiguated the dress as B/K biased reports toward B/K (priming with W/G had negligible impact); furthermore, knowledge of the dress's true colors and any prior exposure to the image shifted the population toward B/K. These results show that some people have switched their perception of the dress. Finally, consistent with a role of attention and local image statistics in determining how multistable images are seen, we found that observers tended to discount as achromatic the dress component that they did not attend to: B/K reporters focused on a blue region, whereas W/G reporters focused on a golden region.

Keywords: color categorization, color constancy, bistable illusion

Introduction

Most visual stimuli are underdetermined: A given pattern of light can be evidence for many different surfaces or objects. Despite being underdetermined, most retinal images are resolved unequivocally. It is not known how the brain resolves such ambiguity, yet this process is fundamental to normal brain function (Brainard et al., 2006; Conway, 2016). Multistable images are useful tools for investigating the underlying neural mechanisms. The two defining properties of multistable stimuli are that they give rise to more than one plausible, stable, percept within single individuals and that the alternative percepts are mutually exclusive (Leopold & Logothetis, 1999; Long & Toppino, 2004; Schwartz, Grimault, Hupe, Moore, & Pressnitzer, 2012; Scocchia, Valsecchi, & Triesch, 2014). Multistable images are similar to binocular rivalrous stimuli, although in binocular rivalry the competition is between two different images rather than alternative interpretations of a single image. Although the first account of binocular rivalry involved color (Dutour, 1760), to date there are no striking examples of multistable color images. Of course, not all colored stimuli are unambiguous: Consider turquoise, which might be called blue or green by different people. Such ambiguous color stimuli typically retain their ambiguity even when labeled categorically, unlike multistable shape images (Klink, van Wezel, & van Ee, 2012). To date, the best example of something approximating a multistable color phenomenon is the colored Mach card, in which the color of a bicolored card folded along the color interface and viewed monocularly can vary depending on whether one perceives the card receding or protruding (Bloj, Kersten, & Hurlbert, 1999). But it is not clear that the color perceptions of the Mach card are categorical. Moreover, the phenomenon is primarily an illusion of 3-D geometry: Without stereopsis, the perspective cues are ambiguous; the way the colors are perceived is contingent on how these cues are resolved.

Could #TheDress be an elusive multistable color image? Initial reports on social media raised the possibility that the image was seen in one of two mutually exclusive ways, as white and gold (W/G) or blue and black (B/K). But color-matching data (not color names) reported by Gegenfurtner, Bloj, and Toscani (2015) concluded that there were many different ways in which the dress's colors could be seen. The tentative conclusion was that reports of two categories arose as an artifact of the two-alternative forced-choice question posed by social media (“Do you see the dress as W/G or B/K?”). The implication was that the true population distribution is unimodal, which is inconsistent with the idea that the image is multistable. The Gegenfurtner et al. study measured perceptions of 15 people. It is not known how many subjects would be required to reject the hypothesis that the population distribution is unimodal. We addressed these issues through a full, quantitative analysis of the results that we presented in preliminary form shortly after the image was discovered, in which we argued that the dress was seen categorically (Lafer-Sousa, Hermann, & Conway, 2015). A side goal was to evaluate the extent to which tests conducted online replicate results obtained under laboratory conditions. Many studies of perception and cognition are being conducted through online surveys; it remains unclear whether results obtained in a lab and online are comparable.

Popular accounts suggest that people are fixed by “one-shot learning” in the way they see the dress image (Drissi Daoudi, Doerig, Parkosadze, Kunchulia, & Herzog, 2017). These observations have been taken to imply that the dress is not like a typical multistable image, because it is widely thought that most people experience frequent perceptual reversals of multistable images. But frequent reversals might not be a necessary property of multistability (see Discussion). The perception of multi-stable shape images at any given instant was initially thought to depend only on low-level factors, such as where in the image one looked (Long & Toppino, 2004): since we move our eyes frequently, it was assumed that the perception of a multi-stable shape image would necessarily reverse frequently. It is now recognized that high-level factors, including familiarity with the image, prior knowledge, personality, mood, attention, decision making, and learning, also play a role in how multi-stable shape images are seen (Kosegarten & Kose, 2014; Leopold & Logothetis, 1999; Podvigina & Chernigovskaya, 2015). These factors are often modulated over a longer time frame than eye movements, which could explain why some multi-stable images do not reverse very often. Data in our initial report suggested that some observers experience perceptual reversals of the dress, raising the possibility that the image is not unlike other multistable images. Here we determined the extent to which the individual differences in perception of the dress image are fixed. We characterized the conditions that promote perceptual reversals of the dress, and tested five factors known to influence how multistable images are perceived: prior knowledge about the image (Rock & Mitchener, 1992); exposure to disambiguated versions (Fisher, 1967; Long & Toppino, 2004); low-level stimulus properties (e.g., stimulus size; Chastain & Burnham, 1975); where subjects look (or attend; Ellis & Stark, 1978; Kawabata & Mori, 1992; Kawabata, Yamagami, & Noaki, 1978); and priors encoded in genes or through lifetime experience (Scocchia et al., 2014). These experiments were afforded because we tested people who varied in terms of both prior exposure to the image and knowledge about the color of the dress in the real world.

By examining the factors that influence perception of the dress image, we hoped to shed light on how the brain resolves underdetermined chromatic signals. While low-level sensory mechanisms like adaptation in the retina can account for color constancy under simple viewing conditions (Chichilnisky & Wandell, 1995; D'Zmura & Lennie, 1986; Foster & Nascimento, 1994; Land, 1986; Stiles, 1959; von Kries, 1878; Webster & Mollon, 1995), they fail to explain constancy of natural surfaces (Brainard & Wandell, 1986; Webster & Mollon, 1997) and real scenes (Hedrich, Bloj, & Ruppertsberg, 2009; Khang & Zaidi, 2002; Kraft & Brainard, 1999). We have argued that the competing percepts of the dress are the result of ambiguous lighting information. The colors of the pixels viewed in isolation align with the colors associated with daylight (Brainard & Hurlbert, 2015; Conway, 2015; Lafer-Sousa et al., 2015). The visual system must contend with two plausible interpretations—that the dress is either in cool shadow or in warm light. In our prior report, we tested the idea that illumination assumptions underlie the individual differences in color perception of the dress, by digitally embedding the dress in scenes containing unambiguous illumination cues to either warm or cool illumination. Most observers conformed to a single categorical percept consistent with the illumination cued (Lafer-Sousa et al., 2015). Here we directly tested the hypothesis by analyzing subjects' judgments about the light shining on the dress. We also tested our hypothesis that the way the dress is seen can be explained by one's chronotype: Night owls spend much of their awake time under incandescent light, and we hypothesized they would therefore be more likely to discount the orange component of the dress and see the dress's colors as B/K; day larks spend more of their time under blue daylight, and we surmised that they would discount the blue component of the dress and see the colors as W/G (Rogers, 2015).

Finally, we used the dress image as a tool to examine the role of memory colors in color constancy. The spectral bias of the illuminant could, in theory, be determined by comparing the chromatic signals entering the visual system with the object colors stored in memory. The gamut of human skin occupies a distinctive profile in cone-contrast space that is surprisingly stable across skin types and shifts predictably under varying illuminations (Crichton, Pichat, Mackiewicz, Tian, & Hurlbert, 2012). These statistics, coupled with the fact that skin is viewable in almost every natural glance, make skin a potentially good cue to estimate the spectral bias of the illuminant (Bianco & Schettini, 2012). We used our disambiguation paradigm (Lafer-Sousa et al., 2015), digitally embedding the dress in scenes in which we systematically introduced different cues to the illuminant. As far as we are aware, the results provide the first behavioral evidence that skin color is sufficient to recover information about the illuminant for color constancy.

Methods and materials

Experimental setup

Detailed methods are provided in the supplementary material of our previous report (Lafer-Sousa et al., 2015). Raw materials and sample analyses are provided here: https://github.com/rlaferso/-TheDress. The majority of participants (N = 2,200) were recruited and tested online through Amazon's Mechanical Turk using a combination of template (Morris Alper's Turk Suite Template Generator 2014, available online at http://mturk.mit.edu/template.php) and custom HTML and JavaScript code. A smaller number of subjects (N = 53) were recruited from the Massachusetts Institute of Technology (MIT) and Wellesley College campus through word of mouth and social media, and tested using the M-Turk platform on a calibrated display in the laboratory. We adhered to the policies of the MIT Committee on the Use of Humans as Experimental Subjects in using Amazon's Mechanical Turk for research purposes. Informed consent was obtained from those subjects who performed the study in the laboratory study. Procedures were approved by the institutional review board of Wellesley College. Subjects were between 18 and 69 years of age. To control for subject quality among the Mechanical Turk participants, we required that subjects have Mechanical Turk approval ratings of 95% or higher and have previously completed at least 1,000 human intelligence tasks on Mechanical Turk.

In-laboratory subjects viewed the display at 40 cm. Subjects used a chin rest to control viewing angle and distance. The experiment was performed on a calibrated 21.5-in. iMac computer with a pixel resolution of 1,920 × 1,080 in a windowless room with LED overhead lighting (CIExyY: 0.4814, 0.4290, 4.3587 cd/m2), measured off the Macbeth color checker's standard white, held at the same location and viewing angle on the monitor at which we presented the dress image). Normal color vision was confirmed with Ishihara plates (Ishihara, 1977).

To ensure that stimuli were the same size across displays for online subjects, we specified the sizes of stimulus images in absolute pixels in the HTML experiment code. There is some variability from display to display in terms of the actual physical size of a pixel. We measured the images on a typical monitor in the laboratory to provide a reasonable estimate for how the pixel values correspond to degrees of visual angle. We estimate that among different displays the variance in actual display size was ∼±10% of the size measured in lab.

Three experiments were conducted: 1, a main experiment; 2, a follow-up experiment to assess the role of image size in determining what colors people report; and 3, an in-laboratory, controlled experiment. Data were pooled from the various experiments depending on the analysis performed.

In each experiment, dress color percepts were queried using two tasks: a free-response color-naming task and a color-matching task. In Experiments 1 and 3 subjects were also asked to report on their impressions of the lighting conditions in the image by providing temperature ratings and verbal descriptors, and to estimate where in the image they thought they spent most of their time looking. In addition, Experiments 1 and 3 queried color percepts for a set of digitally synthesized test stimuli featuring the dress (cropped from the original image) embedded in scenes with unambiguous simulated lighting conditions, and a version of the original image that had been spatially blurred. Experiment 3 included an additional set of synthetic test images not presented in Experiment 1. Each experiment contained questions about subjects' demographics, viewing conditions, and past viewing experiences, which were distributed throughout the experiment (the full questionnaire is reproduced in Lafer-Sousa et al., 2015).

Stimuli

Full-scale stimuli reproductions are provided in the Supplementary Image Appendix; they can also be viewed online at https://youtu.be/U6c4au-Wu-E.

Original dress image (used in Experiments 1, 2, and 3)

The original dress image circulated on the Internet (courtesy of Cecilia Bleasdale; Figure 1). In Experiments 1 and 3, the original dress photograph was presented at 36% of its original size so that the entire image would be visible on the display. The image was presented at an absolute size of 226 × 337 pixels. This corresponded to 7.2° of visual-angle width. In Experiment 2, the original dress photograph was presented at one of four sizes, defined as a percentage of the original: 10% (63 × 94 pixels, or 2.0° of visual angle on a 21.5-in. iMac), 36% (the image size presented in Experiment 1; 226 × 337 pixels, or 7.2° of visual angle), 100% (628 × 937 pixels, or 20.0° of visual angle), and 150% (942 × 1,405 pixels, or 30.0° of visual angle). The stimuli in the 10% and 36% conditions fit fully in all browser windows. For the 100% and 150% images, only part of the image was visible at once in the height direction, but the full width of the image was completely visible in the horizontal dimension. As a result, subjects had to scroll over the stimulus image, from top to bottom, to view it. Scrolling to see the entire image was required in order for subjects to access the buttons to move through the study, ensuring that all subjects saw the entire image even in the 100% and 150% displays. Subjects were randomly assigned to one of the four scale conditions.

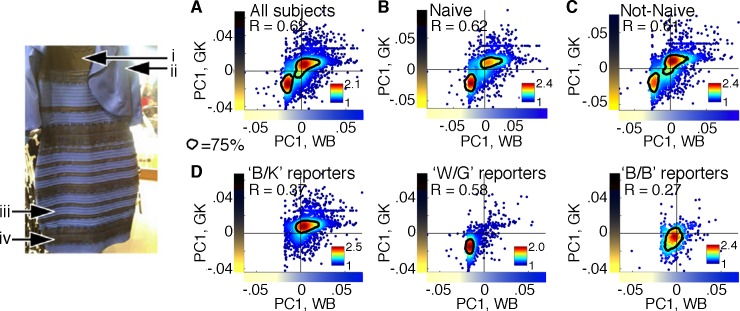

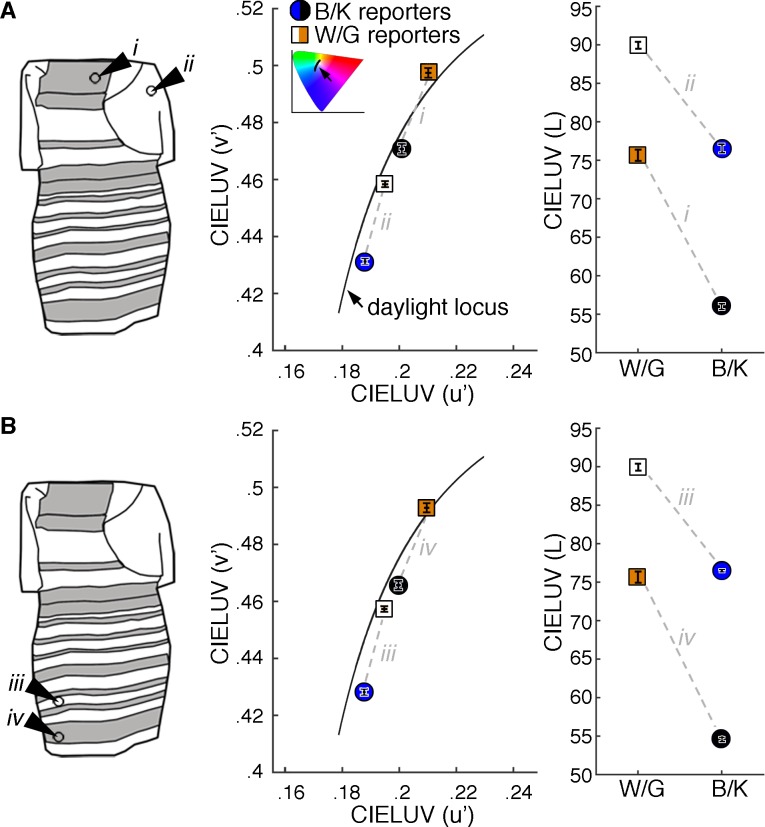

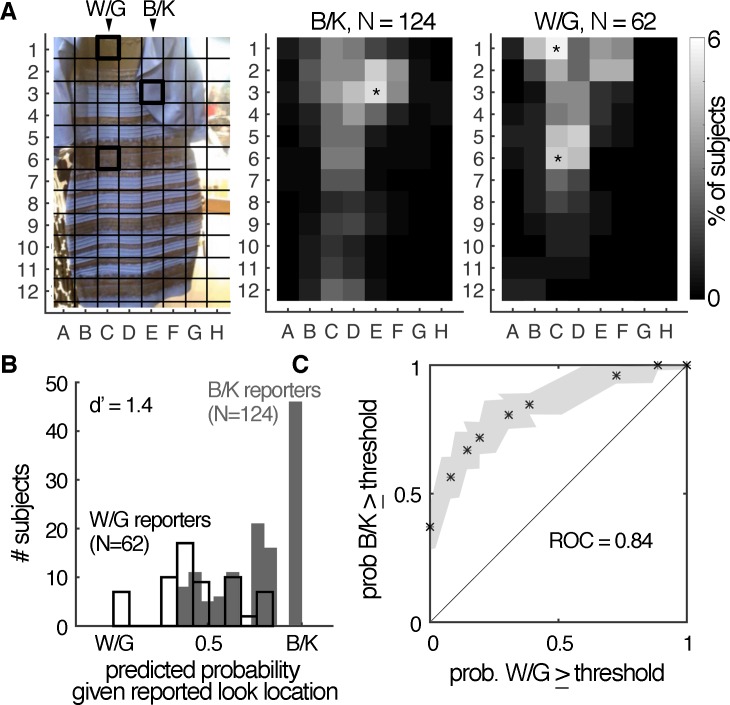

Figure 1.

Population distributions of subjects' color matches show categorical perception of the dress. Subjects used a digital color picker to match their perception of four regions of the dress (i, ii, iii, iv); the dress image was shown throughout the color-matching procedure. (A) Matches for regions i and iv of the dress plotted against matches for regions ii and iii, for all online subjects (N = 2,200; R = 0.62, p < 0.001). Contours contain the highest density (75%) of matches. The first principal component of the population matches (computed from CIELUV values) to (i, iv) defined the y-axis (gold/black: GK); the first principal component of the population matches to (ii, iii) defined the x-axis (white/blue: WB). Each subject's (x, y) values are the principal-component weights for their matches; each has two (x, y) pairs, corresponding to (i, ii) and (iii, iv). Color scale is number of matches (smoothed). (B) Color matches for regions (i, iii) of the dress plotted against matches for regions (ii, iv) for subjects who had never seen the dress before the experiment (Naïve; N = 1,017; R = 0.62, p < 0.001). Axes and contours were defined using data from only those subjects. (C) Color matches for regions (i, iv) of the dress plotted against matches for regions (ii, iii) for subjects who had seen the dress before the experiment (N = 1,183; R = 0.61, p < 0.001). Axes and contours were defined using data from only those subjects. (D) Color matches for all subjects (from A) were sorted by subjects' verbal color descriptions (“blue/black” = B/K, N = 1,184; “white/gold” = W/G, N = 686; “blue/brown” = B/B, N = 272) and plotted separately. Axes defined as in (A). In all panels, contours contain the highest density (75%) of the matches shown in each plot. Dress image reproduced with permission from Cecilia Bleasdale.

Blurry dress stimulus (used in Experiments 1 and 3)

A blurry version of the original image was presented at 41% of the source size, with a Gaussian blur radius of 3.3 pixels (0.11°). The image was 8.3° of visual angle along the horizontal axis.

Disambiguating stimuli (warm and cool illumination simulations)

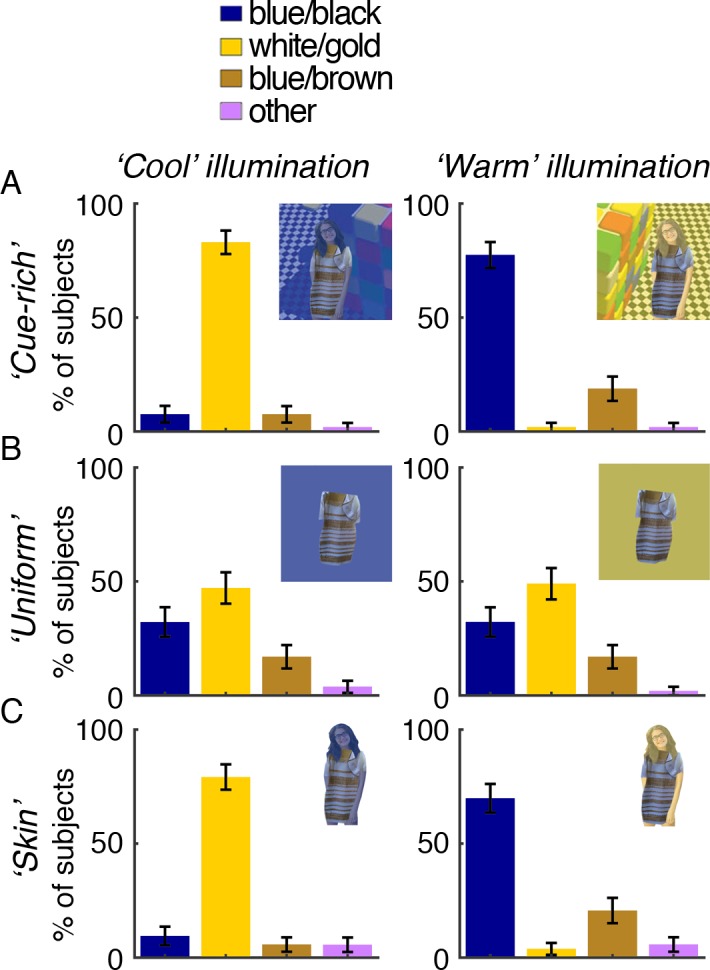

Cue-rich test stimuli (used in Experiments 1 and 3)

To create the cue-rich test stimuli (see Supplementary Image Appendix for full-size reproductions), we digitally dressed a White female model in the garment and embedded her in a scene depicting a Rubik's cube under either a simulated warm (yellowish) or cool (bluish) illuminant (cube reproduced with permission from Beau Lotto; Lotto & Purves, 2002). In the cool-illumination scene, the woman was positioned in the shadow cast by the cube. The colors of her skin and hair were tinted to reflect the color bias of the simulated illuminant using a semitransparent color overlay. The chromaticity of the overlay was defined on the basis of the chromaticity of the white component of the scene's checkered floor, which provides a quantitative white point for the scene (for the cool-illumination scene we used the white checkers that were cast in shadow, corresponding to our placement of the model in shadow). In the warm scene, the white point was 0.352, 0.394, 66 cd/m2 (CIExyY 1931); in the cool scene it was 0.249, 0.271, 23 cd/m2. Note that the pixels making up the dress were never manipulated. The dress portion of the stimulus was presented at 76% of the size of the dress in the original image; the complete picture was 518 × 429 pixels (∼16.5° of visual angle on the horizontal axis). Throughout this article, figures showing the model are for illustration purposes only: Copyright for the photograph of the model we used to create the stimuli could not be secured for reproduction.

Uniform-surround test stimuli (used in Experiment 3)

To test whether a low-level sensory mechanism like receptor adaptation or local color contrast is sufficient to resolve the dress's colors, we superimposed the isolated dress on uniform fields matched to the mean chromaticity of the cue-rich scenes (CIExyY warm field: 0.363, 0.414, 51 cd/m2; cool field: 0.276, 0.293, 29 cd/m2). The dress portion of the stimulus was presented at 76% of the size of the dress in the original image; the complete picture was 518 × 429 pixels (∼16.5° of visual angle on the horizontal axis). The pixels that make up the dress were not manipulated.

Skin-only test stimuli (Experiment 3)

To test whether skin chromaticity is by itself a sufficient cue to achieve good color constancy, we presented the dress superimposed on the woman on a white (achromatic) background (CIExyY: 0.322, 0.352, 75 cd/m2) and tinted her skin according to the spectral bias of the illuminants simulated in the cue-rich scenes. The dress portion of the stimulus was presented at 76% of the size of the dress in the original image; the complete picture was 518 × 429 pixels (∼16.5° of visual angle on the horizontal axis). The pixels that make up the dress were not manipulated.

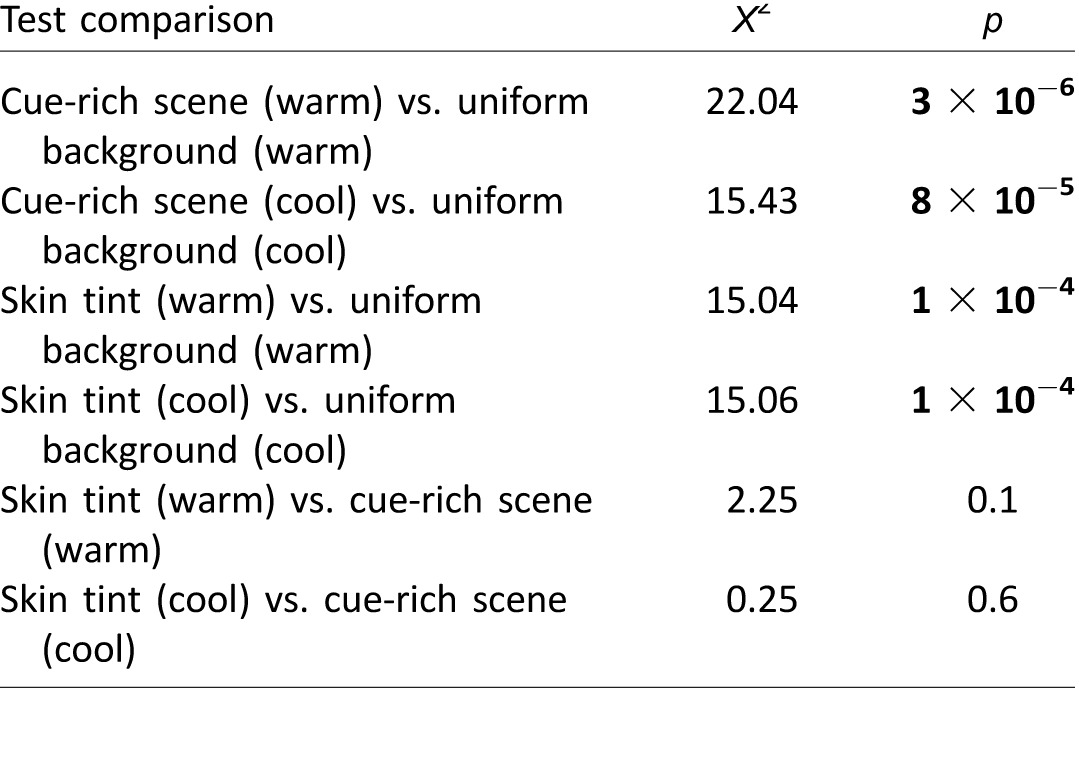

We considered color constancy good if the majority of subjects conformed to the percept predicted by the lighting cues in each condition, and bad when individual subjects' perceptions were unaffected by the changes in simulated lighting conditions: Under the cool illuminant, good color constancy predicts that subjects should discount a cool (blue) component and see the dress as W/G, while under the warm illuminant they should discount a warm (yellow) component and see the dress as B/K. Note that we use the terms blue, yellow, white, warm, and cool as shorthand for accurate colorimetric descriptions. McNemar's chi-square tests were used to compare goodness of constancy achieved in different stimulus conditions. McNemar's test is a within-subject z test of equality of proportions for repeated measures. Each test compares the proportion of subjects that did or did not conform to the percept cued in stimulus condition X versus the proportion that did or did not conform to the percept cued in stimulus condition Y. Six tests were performed: cue-rich scene (warm) versus uniform background (warm); skin tint (warm) versus uniform background (warm); skin tint (warm) versus cue-rich scene (warm); cue-rich scene (cool) versus uniform background (cool); skin tint (cool) versus uniform background (cool); and skin tint (cool) versus cue-rich scene (cool).

Tasks

Color naming

Each image was shown for 15 s, and then subjects were prompted to report the apparent color of the dress via a free-response verbal task (two text boxes were provided): “Please look carefully at the dress. A ‘continue' button will appear just below this text after 15 seconds. This is a picture of a ____ and ____ dress. (Fill in the colors that you see).” The image was on the screen continuously while the subjects responded (this was not designed as a test of color memory). Color descriptions of the dress were binned into categories: blue/black, white/gold, blue/brown, other (following the methods outlined in Lafer-Sousa et al., 2015).

Lighting judgments

After performing the color-naming task, subjects were prompted to rate the apparent quality of the light illuminating the background of the image (“On a scale from 1 to 5, where 1 is cool and 5 is warm, please rank the lighting conditions in the background”) and the light illuminating the dress (“On a scale from 1 to 5, where 1 is cool and 5 is warm, please rank the light illuminating the dress”). They were then asked to characterize the light in the background, and the light illuminating the dress, by checking off any of a number of possible verbal descriptors from a list (“The lighting in the background is... Check all that apply/The light illuminating the dress is... Check all that apply”: dim, dark, cool, blueish, bright, warm, yellowish, glaring, blown out, washed out, reddish, greenish, purplish, iridescent).

Color matching

Each image was presented a second time (again, for 15 s), and this time subjects were prompted to make color matches to four regions of the dress (Figure 1 inset, arrows i, ii, iii, iv), using a color-picker tool comprising a complete color gamut: “Please adjust the hue (color circle) and brightness (slider bar) to match the pixels you see in the image.”

For Experiments 1 and 3, in the first half of the experiment each image was shown for 15 s and then subjects were prompted to perform the color-naming task and the lighting-judgment task. In Experiment 2, subjects were not asked to perform the lighting judgment task. The image was on the screen continuously while the subjects responded (this was not designed as a test of color memory). In the second half of the experiment, each image was shown a second time, and after 15 s, subjects were prompted to perform the color-matching task (the image remained on the screen continuously while the subjects performed the task). Between the presentation of the first and second images, we collected basic demographic information. Between the presentations of the last two images, we asked subjects about the environment in which they were completing the study. We also asked whether they had viewed the original dress image prior to this study, and if so, whether they had experienced multiple percepts of the dress (i.e., switching).

The first image shown was always the original dress photograph (courtesy of Cecilia Bleasdale), but the order of the subsequent two images differed between the two conditions. In addition, all subjects were queried on their perception of a blurry version of the original image.

Experiment 1:

Order A: Report colors and lighting for original dress image, cue-rich scene (cool), cue-rich scene (warm); make color matches for original dress image, cue-rich scene (cool), cue-rich scene (warm); report colors of the blurry dress.

Order B: Report colors and lighting for original dress image, cue-rich scene (warm), cue-rich scene (cool); make color matches for original dress image, cue-rich scene (warm), cue-rich scene (cool); report colors of the blurry dress.

Experiment 2:

Order: Report colors for original dress image; make color matches for original dress image.

Experiment 3:

Order A: Report colors and lighting for original dress image, cue-rich scene (cool), cue-rich scene (warm), uniform surround (cool), uniform surround (warm), skin-tint only (cool), skin-tint only surround (warm); make color matches for original dress image, cue-rich scene (cool), cue-rich scene (warm), uniform surround (cool), uniform surround (warm), skin-tint only (cool), skin-tint only (warm); report colors of the blurry dress.

Order B: Report colors and lighting for original dress image, cue-rich scene (warm), cue-rich scene (cool), uniform surround (warm), uniform surround (cool), skin-tint only (warm), skin-tint only surround (cool); make color matches for original dress image, cue-rich scene (warm), cue-rich scene (cool), uniform surround (warm), uniform surround (cool), skin-tint only (warm), skin-tint only surround (cool); report colors of the blurry dress.

Data analysis

Analyses are described in the legends and Results section. All statistical analyses were conducted using MATLAB. A value of p < 0.05 was considered statistically significant.

Results

#TheDress (Figure 1, left) is a rare image that elicits striking individual differences in color perception (Gegenfurtner et al., 2015; Lafer-Sousa et al., 2015). Although the pixels that make up the dress are (in isolation) light blue and brown, most observers queried through social media reported seeing the dress as either blue/black (B/K) or white/gold (W/G; Rogers, 2015). A minority of subjects (∼10%) reported seeing the dress as blue/brown (B/B) (Lafer-Sousa et al., 2015).

Categorical perception of the dress: True or false?

Subjects were asked to identify the colors of four regions of the dress (i–iv; Figure 1, left). The three-dimensional (CIE L*,u*,v*) coordinates of the color-matching data were compressed to one dimension using principal-component analysis (Lafer-Sousa et al., 2015). Subjects' color matches for the brown regions of the dress (i, iv) are plotted against their matches for the blue regions of the dress (ii, iii), and are highly correlated (density plots and contours were created in MATLAB using scatplot1). Moreover, the correlation shows two peaks, suggestive of two underlying categories. This pattern of results was consistent for both subjects who had and had not seen the image previously (Figure 1B, 1C). The peaks in the population density plots corresponded well with the categorical color descriptions provided by the participants: Figure 1D shows the color matches made by subjects who reported a B/K percept (left panel) or a W/G percept (middle panel). Some subjects reported B/B. These subjects made color matches that were intermediate to the two main categories (Figure 1D, right panel). To quantitatively test the hypothesis that the dress is viewed categorically, we performed a k-means clustering assessment on the color-matching data.

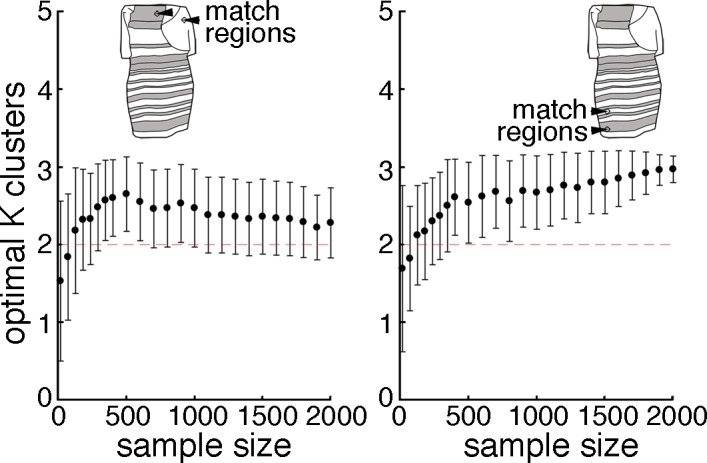

There are several methods for estimating the optimal number of k clusters (groups) in a distribution, but most are constrained to assessing solutions of two or more clusters. To test our hypothesis, we need some way of assessing the relative goodness of clustering for a single-component (k = 1 cluster) versus a k > 1 component model. To do so, we clustered the data, varying the number of clusters (k = 1, 2, …, 13), then used the gap method to assess the outcomes and identify the optimal K clusters (Tibshirani, Walther, & Hastie, 2001). The gap statistic is estimated by comparing the within-cluster dispersion of a k-component model to its expectation under a single-component null, and seeks to identify the smallest k satisfying Gap(k) ≥ Gap(k + 1) − SE(Gap(k + 1)). For color matches made to both upper (Figure 2A–2C) and lower (Figure 2D–2F) regions of the dress, the single-component solution was rejected in favor of two or three clusters, confirming the suspected nonunimodality of the underlying population distribution.

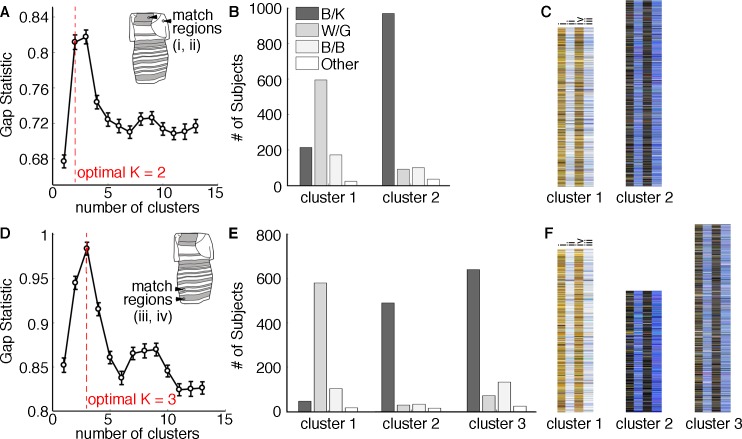

Figure 2.

K-means clustering of color-matching data favor a two- or three-component model over a single-component distribution. Plots summarize the results from k-means clustering assessment (via the gap method; Tibshirani et al., 2001) of the color-matching data presented in Figure 1A (N = 2,200 subjects). (A) The gap statistic computed as a function of the number of clusters, for color-matching data (principal-component analysis weights) obtained for the upper regions of the dress, using all the data from the online population. Dashed line indicates the optimal k clusters. (B) Bar plot showing the cluster assignments of the color matches, binned by the color terms used by the subjects to describe the dress. (C) RGB values of the color matches, sorted by cluster assignment from (B); each thin horizontal bar shows the color matches for a single subject. (D–F) As for (A–C), but for color matches made to the bottom regions of the dress. The gap analysis compares the within-cluster dispersion of a k-component model to its expectation under a null model of a single component. The algorithm seeks to identify the smallest number of clusters satisfying Gap(k) ≥ GAPMAX − SE(GAPMAX), where k is the number of clusters, Gap(k) is the gap value for the clustering solution with k clusters, GAPMAX is the largest gap value, and SE(GAPMAX) is the standard error corresponding to the largest gap value. The optimal k solution for the distribution of upper dress-region color matches is two clusters, and for the lower dress regions it is three, confirming the suspected nonunimodality of the underlying population distribution.

The bar plots (Figure 2B, 2E) show the distribution of the different color terms assigned to each cluster, for the optimal k solution returned by the gap analysis. The clustering algorithm assigned the majority of W/G reporters' matches to Cluster 1 (in both the upper- and lower-region analyses) and the majority of B/K reporters' matches to Cluster 2 (upper-region analysis) or Clusters 2 and 3 (lower-region analysis). Matches made by subjects who described the dress as B/B or other colors outside of the main categories (other) were distributed more evenly across the clusters, even when three clusters were returned. Each thin band in the tapestries (Figure 2C, 2F) corresponds to the color matches made by a single subject, providing a visual snapshot of the success of the clustering algorithms in separating W/G and B/K reporters. These results show that perception of the dress in the population is categorical, not continuous, and reject the idea that reports of categorical perception are an artifact caused by a forced choice.

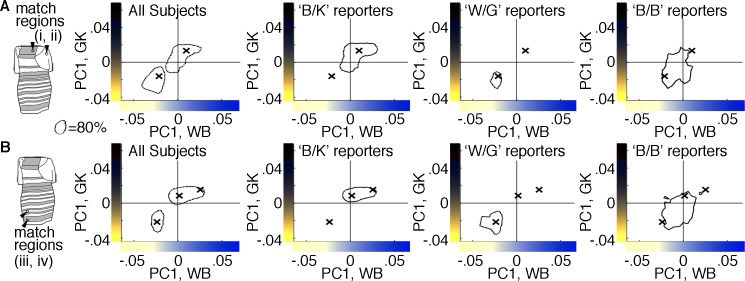

Categorical perception of the dress: How many categories?

The results of Figure 2 suggest that the underlying population may comprise two or three distinct categories. In our initial report, we argued that subjects who report the dress as B/B constituted a distinct third category, intermediate between the two main categories. Figure 3 (top row) shows the spatial relationship of the optimal k cluster centroids (x) and the color-matching distributions (contours) for subjects grouped by their verbal report, for an analysis of the upper match regions. The cluster centroids coincide with the center of the color-matching data for W/G and B/K subjects (xs fall inside the contours; contours and centroids obtained with different halves of the data). Color matches made by subjects who reported B/B fell between these centroids (Figure 3, top right panel). These results suggest that the B/B report does not reflect a distinct category. Figure 3 (bottom row) shows the relationship between the optimal k cluster centroids for the bottom match regions, which returned three clusters. But none of the centroids fell within the contour capturing color matches made by B/B subjects (Figure 3, bottom right panel). These results show that the third category, when evident, is a subgroup of the population of observers who describe the dress as B/K. In addition to the gap analysis, we applied the silhouette clustering criterion and the Calinski–Harabasz clustering criterion; these methods do not allow for a single-component solution. They returned an optimal solution of two clusters, for both the upper and lower match regions.

Figure 3.

Comparison of color-matching data (contours) with predictions from the k-means clustering solutions (x), sorted by subjects' verbal reports. Color-matching distribution contours and k-means cluster centroids derived from independent data sets for (A) the color matches made to upper regions of the dress (region i is plotted against region ii; principal-component analysis weights and principal-component axes from Figure 1A) and (B) lower regions of the dress (iv, iii). Distribution contours were determined using one half of the data set (randomly sampled from the online subject pool); cluster centroids were determined using the left-out data (clustered using the optimal k identified in the gap analysis from Figure 2). Individual plots show the contours encompassing the top 80% of color matches for half the data from each group (left to right): all subjects; subjects who described the dress as blue and black (B/K); subjects who described the dress as white and gold (W/G); and subjects who described the dress as blue and brown (B/B). Within each row, the same cluster centroids are replotted across the panels, reflecting the outcome of clustering the unsorted data set (i.e., independent of verbal reports).

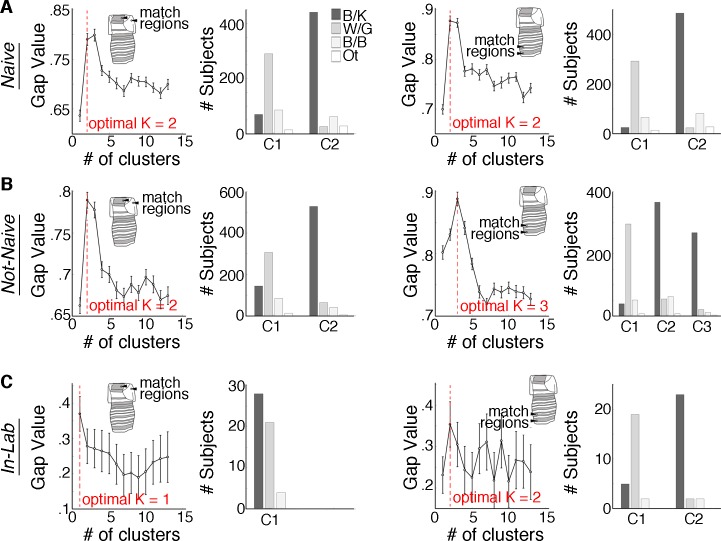

Does prior exposure to the image change the number of categories manifest in the population? The color-matching data for subjects with versus without prior exposure were similar (Figure 1), suggesting that prior exposure had no impact on the number of categories in the population. Figure 4 shows gap-statistical tests of the color-matching data to establish this conclusion. The optimal number of clusters for subjects with no prior exposure (N = 1,017), for either the top or bottom regions of the dress, produced an optimal cluster number of two (Figure 4A); the only color-matching data that produced more than two optimal clusters were those obtained on subjects with prior exposure tasked with matching the lower part of the dress (N = 1,183, three clusters, Figure 4B). The results of the analyses carried out using the silhouette clustering criterion and the Calinski–Harabasz clustering criterion returned two clusters, regardless of whether the matches came from subjects with prior experience.

Figure 4.

K-means clustering solutions for naïve, non-naïve, and in-lab subjects. The k-means clustering assessments were performed for the subsets of subjects who (A) had never seen the dress before participating in the study (N = 1,017; top row), (B) had seen the dress before (N = 1,183; middle row), and (C) who were tested under controlled conditions in the laboratory (N = 53; bottom row). Conventions are as in Figure 2.

Together with the qualitative evaluation of the color-matching data we reported previously (Lafer-Sousa et al., 2015), these results show that the categories reported in social media reflect true categories in the population and are not a result of the way the question was posed.

Categorical perception of the dress: Power analysis

The categorical nature of the population distribution appears, on first inspection, to contradict reports that the true population distribution is continuous. The discrepancy may be resolved by considering the large differences in the number of subjects in the different studies: Conclusions of a continuous distribution were made using data from less than two dozen subjects, whereas the present analysis depends on data from several thousand subjects. How many subjects are necessary to uncover the true population distribution? To address this question, we performed a power analysis by computing the optimal k using subsamples of the data we collected, and then bootstrapping (Figure 5). The variance around the predicted k decreases and the predicted k itself increases with increasing numbers of samples. That the optimal k is 2, and not 1, becomes significant with about 125–180 subjects.

Figure 5.

Power analysis. Plots show the results of k-means clustering (as in Figure 2) for a range of sample sizes (color-matching data randomly sampled from the online subject pool). For each sample size tested, the average gap value derived from 100 bootstraps is plotted; error bars show the standard deviation. Left panel corresponds to upper dress-region matches; right panel, to lower dress-region matches. The variance around the predicted k decreases and the predicted k increases with increasing numbers of samples. That the optimal k = 2 or 3, and not 1, becomes significant with about 125–180 subjects, and plateaus around 500 subjects.

The gap statistic obtained for all subjects (N = 2,200), for the upper match regions, yielded comparable values for two and three clusters (Figure 2); in this case, the optimal gap value is considered to be 2, because the gap method favors the smallest number of clusters satisfying the method's criterion. The gap statistic for the lower match regions was clearly distinguished as three clusters. This difference between the upper and lower match regions accounts for the difference in the results of the power analysis: For the upper regions, the optimal k converges between two and three clusters (and the error bars remain large even at large sample sizes); for the lower regions, it converges on three clusters and the error bars get very small at large sample sizes. The additional cluster identified using color matches for the bottom region of the dress correspond not to a discrete B/B category but rather to a subdivision of the B/K category (Figure 3B; two centroids fall within the B/K reporters).

Our estimate of the number of subjects required to adequately assess the underlying population distribution was made using data obtained online; there is much higher variability in the viewing conditions and subject pool for experiments conducted online versus in a lab. It is possible that the number of subjects needed to determine the true underlying population distribution would be lower for data obtained in a lab, where the viewing conditions can be better controlled. In our prior study, we collected data from 53 subjects under controlled lab conditions. A k-means clustering analysis of these data neither rejected nor confirmed a single-component model (Figure 4C). These results suggest that conducting the experiments in a lab confers no benefit in uncovering the population distribution.

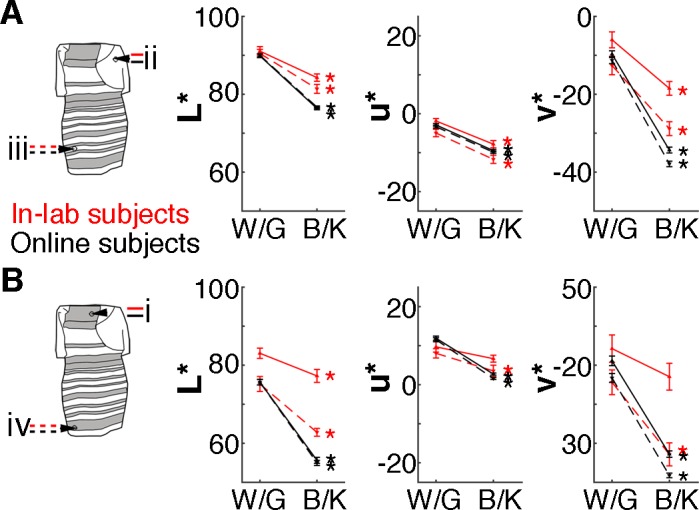

Categorical perception of the dress: Comparing results obtained online and in a lab

The results in Figure 4C show that even under controlled viewing conditions, samples of more than 53 participants are needed to reliably uncover the true population distribution. Nonetheless, the data collected in the lab showed trends consistent with two underlying categories of observers. First, qualitative assessment of the color-matching plots (Lafer-Sousa et al., 2015) shows evidence of two clumps. Second, the optimal k for one of the two sets of regions (iii, iv) was 2, even if this optimal value is not strongly distinguished from other values of k (Figure 4C, left panel). That the optimal k is 2 becomes clearer when the analysis is run on data combining color matches for all regions tested, essentially doubling the data set, which returns an optimal k of 2 (data not shown). Third, the relative distribution of W/G to B/K observers among participants without prior exposure was about the same for subjects tested online versus in a lab (Lafer-Sousa et al., 2015). And fourth, the average chromaticity of the color matches made by subjects in the lab was consistent with those made by subjects online (Figure 6). Regardless of whether the data were obtained online or in a lab, the results showed the same pattern: Compared to B/K subjects, W/G subjects reported not only higher luminance but also higher values of u* (redness) and v* (yellowness), for all four regions tested. The strongest changes were in the luminance and v* dimensions. The comparability of data collected online versus in a lab is consistent with the idea that the factors that determine how one sees the dress are relatively high level, divorced from the specific low-level conditions of viewing (such as the white balance, mean luminance, and size of the display).

Figure 6.

Color matches made by B/K reporters and W/G reporters differ in lightness and hue, under controlled conditions and online. (A) Matches for the blue/white regions. (B) Matches for the black/gold regions. Plots show the average lightness and hue components (CIELUV 1976: L*, u*, v*) of color matches made by subjects reporting B/K or W/G, tested online (black lines; N = 1,174; 770 B/K, 404 W/G) and under controlled viewing conditions (red lines; N = 49; 28 B/K, 21 W'/G). Solid lines show data from the upper regions of the dress, and dashed lines show data from the lower regions; error bars show 95% confidence intervals. Asterisks show cases for which B/K and W/G matches differed significantly (paired t tests): In the online data, matches differed in all three color dimensions (u*, or “red–greenness”; v*, or “blue–yellowness”; and L*, or Luminance; p values < 0.001); and in the in-lab data, matches differed in all but the u* and v* dimensions of one region (i; p values < 0.001 for all paired t tests, except u* and v* of region i: p = 0.06, 0.2).

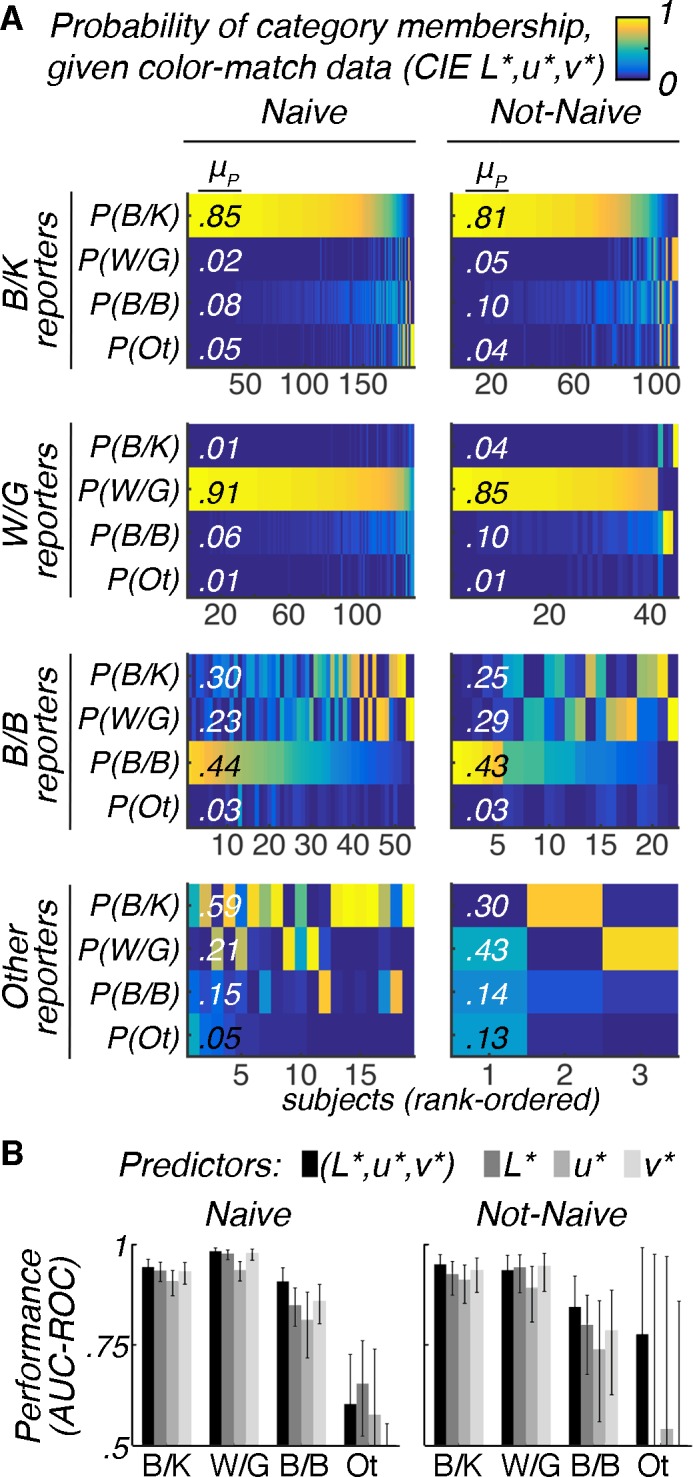

Categorical perception of the dress: Predicting color terms from color matches

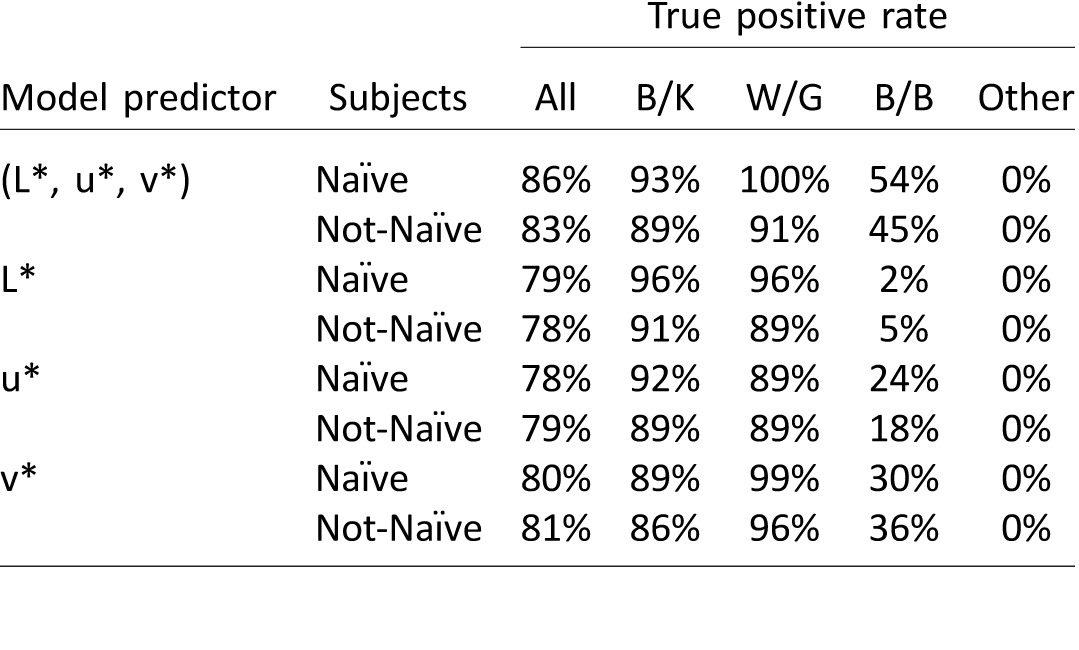

The results in Figures 1–6 support the conclusion that the color of the dress photograph is resolved as one of two dominant categories, consistent with the initial social-media reports. As an additional test of the hypothesis, we performed a multinomial logistic regression analysis to test the extent to which we could predict the colors a person would use to describe the dress, given the color matches the person makes. If, for example, B/B reporters represent a distinct or stable perceptual category, then a classifier (trained on independent data) should be able to distinguish B/B reporters from B/K and W/G on the basis of color matches alone. We generated four models: a full model, which used the L*, u*, and v* components of subjects' matches as the predictors, and three additional models which used either the L*, u*, or v* component of subjects' matches as the predictor. The models were trained and tested with independent data (Ntrain = 549, Ntest = 547). Figure 7A shows the prediction outcomes for the full model. Panels show the results grouped by prior experience with the image (Naïve vs. Not-Naïve) and ground-truth verbal label (B/K, W/G, B/B, Other). Among participants who reported B/K, the average predicted probability of B/K category membership was 0.85 for subjects with no prior experience (Figure 7A, top left) and 0.81 for subjects with prior experience (Figure 7A, top right). These probabilities were such that 92% of B/K reporters were classified B/K (93% of those without prior experience, 89% of those with prior experience); and 98% of W/G reporters were classified W/G (100% of those without prior experience, 91% of those with prior experience; Table 1). The model was less successful in its classification of B/B reporters, but above chance: 51% of B/B reporters were classified B/B (54% of those without prior experience, 45% of those with prior experience). The classifier failed to classify all Other reporters as Other. Overall, the true positive rate was 85%. We further quantified model performance by computing the area under the receiver operating characteristic (ROC) curve for each categorical label. This quantity reflects both the true and false positive rates (Figure 7B). Values greater than 0.90 indicate excellent performance (which we observed for B/K and W/G reporters), while values between 0.75 and 0.90 represent fair to good (observed for B/B reporters) and values between 0.5 and 0.75 represent poor performance (observed for Other reporters). Values below 0.5 represent failure.

Figure 7.

Categorical verbal reports can be predicted from color matches. Multinomial logistic regression was used to build nominal-response models (classifiers). Four models were generated: The full model was fitted using the L*, u*, and v* components of subjects' color matches (to all four match regions) as predictors; three additional models were fitted using either the L*, u*, or v* component of subjects' matches as the predictors. Models were fitted with responses from a subset of the online subjects (half the subjects from Experiment 2, Ntrain = 549) and tested on responses from the left-out subjects (Ntest = 547). (A) Predicted probability of category membership for the full model. Each panel contains the results for data from individual (left-out) subjects, grouped by the verbal label they used to describe the dress (ground truth) and whether they had seen the dress prior to the study (Naïve vs. Not-Naïve). Each thin vertical column within a panel shows the results for a single subject: The colors of each row in the column represent the predicted probability that the subject used each of the categorical labels (B/K, W/G, B/B, Other); each column sums to 1. Subjects are rank-ordered by the predicted probability for the ground-truth class. The average predicted probabilities for each response category are denoted μP. (B) Bar plots quantifying classification performance (the area under the receiver operating characteristic curves, computed using the true and false positive rates), by category, for each of the four models. Error bars indicate 95% confidence intervals. Values greater than 0.90 indicate excellent performance; values between 0.75 and 0.90 indicate fair to good performance; values between 0.5 and 0.75 indicate poor performance. We compared the accuracy of the various models against each other using MATLAB's testcholdout function: The L*-only model performed no better than the u*-only model (Naïve: p = 0.5; Not-Naïve: p = 0.8) or the v*-only model (Naïve: p = 0.5; Not-Naïve: p = 0.3). The full model was more accurate than the L*-only model, but only among Naïve subjects (Naïve: p < 0.001; Not-Naïve: p = 0.09). True positive rates (sensitivity) for all four models are provided in Table 1.

Table 1.

True positive rate of multinomial classifiers trained to predict verbal color reports from color matches (see Figure 7). Four models were generated: The full model was fitted using the L*, u*, and v* components of subjects' color matches as predictors; three additional models were fitted using either the L*, u*, or v* component of subjects' matches as predictors. Models were fitted with responses from a subset of the online subjects (half the subjects from Experiment 2, Ntrain = 549) and tested on responses from the left-out subjects (Ntest = 547). The table shows the true positive rates—(# of true positives)/(# of true positives + # of false negatives)—for each model, broken down by subjects' prior experience with the image (Naïve, Not-Naïve) and their verbal label (B/K, W/G, B/B, and Other).

Do the model results contradict the conclusions drawn in Figures 2 and 3 by providing evidence for three categories? While the true positive rates for the B/B observers (51%) are above chance (chance = 25%) and the ROC analysis shows fair to good performance, only ∼25% of the correctly classified B/B reporters were classified accurately with high predicted probability. Furthermore, among those misclassified, most were classified with strong confidence as being either B/K or W/G (Figure 7A, third row). There was a delay of a few minutes between when subjects provided verbal reports about the dress color and when they did the color-matching experiment; the perception of the dress could have switched during this time, as spontaneously reported by some subjects. That many B/B people were confidently classified as either B/K or W/G is consistent with the hypothesis that these subjects may have switched percepts between when they gave their verbal report and when they gave their color match. Together with the results in Figures 2 and 3, the classifier results suggest that the B/B designation is not a distinct category. Instead, we interpret the results to indicate the B/B category as a transient state between B/K and W/G, which would be consistent with the properties of a bistable phenomenon.

When the model was fitted using only the L*, u*, or v* components of subjects' color matches as the predictors, the classification performance remained high (Table 1; Figure 7B) and did not differ as a function of which component was used as the predictor: The L*-only model performed no better than the u*-only model (no prior experience: p = 0.5; prior experience: p = 0.8) or the v*-only model (no prior experience: p = 0.5; prior experience: p = 0.3). The full model was more accurate than the L*-only model, but only among subjects with no prior experience (p < 0.001; prior experience: p = 0.09). This provides additional support for the contention that B/K and W/G reporters are differentiated by both the lightness and the hue of the matches they select.

Categorical perception of the dress: Switching perception from one category to another

The evidence presented in Figures 1–7 strongly suggests that the dress image is an ambiguous image that the visual system can interpret as one of two mutually exclusive categorical percepts. When viewing ambiguous shape images such as the Necker cube, subjects often report a change in their perception of the image from one stable state to the other. But it has also been shown that knowledge of the fact that the image can be perceived in different ways can have a profound impact on whether subjects see the image flip. The online data we obtained came from a diverse subject pool. Unlike most other online surveys of the phenomenon, participants were not recruited with links attached to media reports describing the dress. As a result, the image was entirely new to many of the participants in our study. Moreover, of those who had previously encountered the image, many had no knowledge of the actual color of the real dress, enabling us to test the extent to which subjects can change their perception of the dress and, if so, the impact on flip rates conferred by knowledge of the image's multistability.

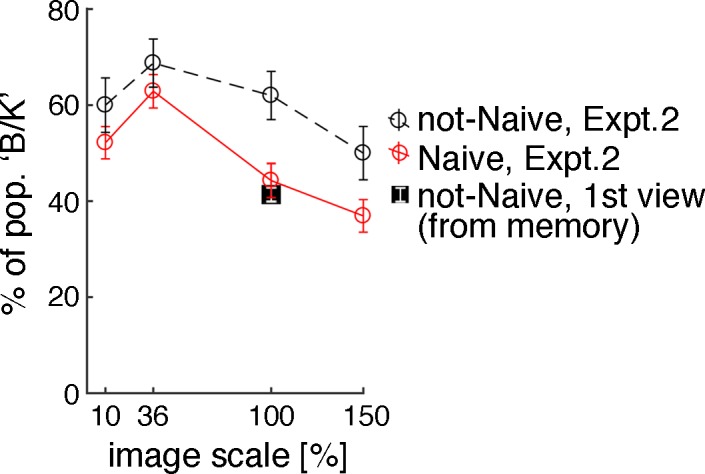

We asked subjects who had previous experience with the image about their first encounter with it. The distribution of verbal color reports corresponding to the first time that these subjects viewed the dress (recalled from memory; Figure 8, filled symbol) showed a greater proportion of W/G than was reported by the same subjects in response to our presentation of the image (X2 = 731, p < 0.001). Some of these subjects must have switched their perception of the dress's colors since their first viewing; the results show that experience with the image biased the population toward the B/K percept. The relative proportion of B/K first-encounter reports is comparable to findings from other surveys and matches the proportion recovered in experiments of subjects without experience of the image who were shown it at 100% size (the solid symbol overlaps the open red circle; Figure 8). As we showed previously, reducing the size of the image from its native size on the Internet also biased the population toward the B/K percept. These two factors (image size and experience) interact (ANOVA performed with the data from the Figure 8; open circles confirmed main effects of scale, p < 0.001, experience, p < 0.001, and their interaction, p < 0.001).

Figure 8.

Image scale and prior exposure affect perception of the dress's colors. In Experiment 2 we varied the presentation scale of the image (different subjects saw different scales; 10%, 36%, 100%, 150%). Unlike in Experiment 1, subjects were never shown an image in which the lighting cues were disambiguated. Open circles show the percentage of subjects who reported B/K at each scale. The red line shows data from subjects who had not seen the dress prior to participating in the study (10%, N = 207; 36%, N = 194; 100%, N = 192; 150%, N = 195). The black line shows data from subjects who had seen the dress prior to the experiment (10%, N = 80; 36%, N = 80; 100%, N = 100; 150%, N = 78). Viewing the image at a reduced scale and having prior experience with the image both increased the proportion of subjects who reported B/K, and the two factors interacted (ANOVA performed with bootstrapped data from the open circles showed main effects of scale, p < 0.001, experience, p < 0.001, and their interaction, p < 0.001). Subjects who had seen the image before also reported on the colors they perceived when they first saw it, recalled from memory (solid black square; data from Not-Naïve subjects in Experiments 1 and 2, N = 1,037). The distribution of verbal color reports corresponding to the first time that Not-Naïve subjects viewed the image differed from the distribution of reports the same subjects provided in response to our presentation of the image, confirming that many had flipped (chi-square test of independence: X2 = 731, p < 0.001). Error bars show standard errors (bootstrapping, sampling with replacement).

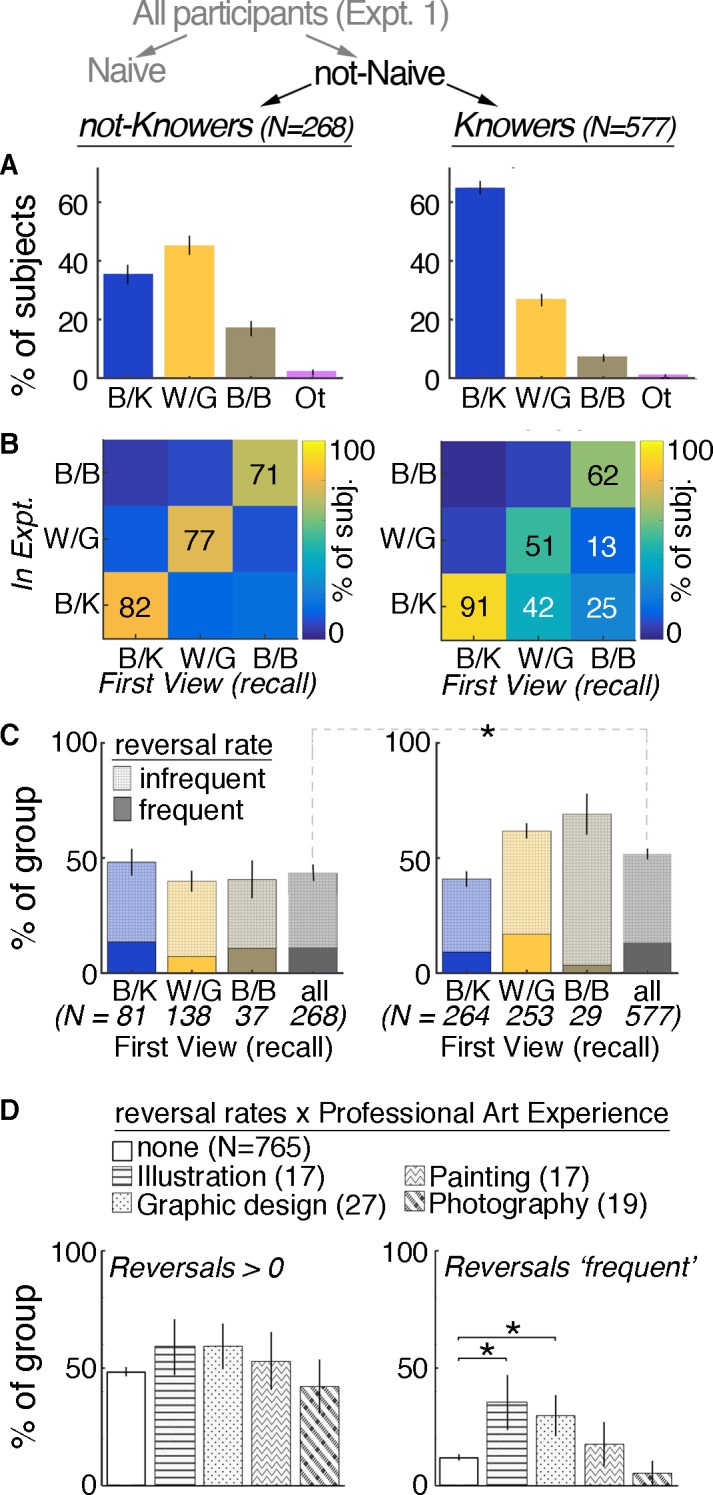

Among the 845 subjects with prior experience, 577 knew that the true dress color was B/K, allowing us to ask how knowledge alters perception. Knowledge of the dress's color in real life dramatically altered the ratio of B/K to W/G responses in the population in a direction that favored B/K (Figure 9A, 9B). Moreover, knowledge of the true colors increased reports of flipping between B/K and W/G (Figure 9C). Among people who first saw W/G yet reported knowing the true colors of the dress, 42% had switched to B/K (we confirmed that these individuals were not merely reporting the dress's true colors while continuing to perceive W/G by running their color matches through our classifier: Only 9% of them were classified as W/G; 76% were classified as B/K, 13% as B/B). Among people who first saw W/G and reported not knowing the true colors of the dress, only 14% had switched to B/K. These results show that knowledge of the dress's true colors affected whether people could see it flip.

Figure 9.

Familiarity with the dress image affects subsequent viewings. (A) The distribution of color reports (Experiment 1; image scale always 36%) from subjects who had seen the image before (N = 845) and had knowledge of the dress's colors in real life (“Knowers,” N = 577) differed from the distribution of reports from subjects who had seen the image and did not know the dress's real colors (“Not-knowers,” N = 268; chi-square test for difference of proportions: p < 0.001, X2 = 66). (B) Color reports of the dress during the experiment as a function of how subjects first perceived the dress (recalled from memory). Results for Not-knowers are predominantly along the x = y diagonal, reflecting dominance of initial stable state (though the presence of deviations was still significant: chi-square test of independence: X2 = 277, p < 0.001). Results for Knowers showed more substantial deviations from the diagonal, particularly for subjects who first saw W/G or B/B, reflecting a weakening of initial state and an increased dominance of B/K state (the color of the dress in real life; chi-square test of independence: X2 = 394, p < 0.001). (C) Quantification of self- reported reversal rates for Knowers and Not-knowers; percentages computed within class (B/K, W/G, B/B, Other; class corresponded to first-view percepts from memory). Subjects were asked: “In your viewings prior to this study, did your perception of the dress colors ever change? Y/N” and “How often did you see it change? Frequently/Infrequently/Never.” The proportion of subjects who reported changes was higher among Knowers than Not-knowers (gray asterisk; chi-square test for difference of proportions: p = 0.036, X2 = 4.4). Error bars indicate standard errors (bootstrapping, sampling with replacement). (D) Quantification of self-reported reversals as a function of professional art experience. Left panel shows the proportion of subjects who reported at least one reversal, sorted by professional art experience. Right panel shows the proportion of those subjects who reported that reversals were frequent. Compared to non-artists, reports of frequent reversals were significantly higher among subjects who indicated having professional art experience in the fields of illustration (p = 0.004, two-proportion z test) and graphic design (p = 0.005). Error bars indicate standard errors (bootstrapping, sampling with replacement).

The distribution of observers across categories was different between participants with and without knowledge of the true dress color (compare figure 1 of Lafer-Sousa et al., 2015, with Figure 9A, right panel; X2 = 21, p < 0.001; all data obtained with the 36% image). Specifically, the proportion of B/K among participants with knowledge of the true colors (65%) was higher than the proportion of B/K among participants with no previous experience (54%). Curiously, among the population who had seen the dress previously but did not know its colors, we found roughly the same proportion of B/K as W/G observers (Figure 9B, left panel); this distribution was different than observed for participants with no prior experience, who were much more likely to see B/K (X2 = 23, p < 0.001). Individuals with prior exposure but no knowledge of the dress's colors likely first saw the image in social media, where it was shown at a larger scale than we used in this set of experiments. We attribute the relatively higher levels of W/G reports among this group—even though the image we showed was at the smaller size—to the fact that their first view likely established a prior about the colors of the dress that had not been updated with any subsequent knowledge of its true colors.

On average, half of subjects reported experiencing the dress reverse at least once, while only 12% reported frequent reversals (Figure 9C). Given that reversal rates in multistable perception can be influenced by cognitive factors like personality, creativity, and attention, we examined the proportion of subjects reporting reversals as a function of their professional art experience (subjects could indicate professional experience with graphic design, illustration, photography, painting, and art history; Figure 9D). Although the proportion of people who reported having seen the dress switch at least once did not differ as a function of art experience (Figure 9D, left), the proportion who reported frequent reversals was different across different art experience (Figure 9D, right). Compared to nonartists, reports of frequent reversals were 3 times higher among subjects who indicated having professional illustration experience (p = 0.004; two-proportion z test) and 2.5 times higher among those with professional graphic-design experience (p = 0.005).

Together, the analysis of the population responses of different categories of observers shows that (a) how you first saw the dress establishes a prior; (b) knowledge of the colors of the dress in real life updates this prior, biasing it toward B/K; (c) varying the image size systematically biases the percept (increasing image size increases W/G reporting; reducing image size increases B/K reporting); (d) experience with the image over time, independent of knowledge of the dress's true colors, biases the population toward B/K; and (e) reversal frequencies vary with professional art experience. These results uncover the important role played by both low-level perceptual features (such as image size) and high-level features (such as knowledge) in shaping how people perceive the colors of #TheDress, and add to a growing body of evidence that exposure to social media can change the colors we see.

What accounts for the different ways in which the dress colors are seen?

We have argued that the multistability of the image derives specifically from the fact that colors of the image align with the daylight locus (Brainard & Hurlbert, 2015; Conway, 2015; Lafer-Sousa et al., 2015). We hypothesized that in this context, multiple percepts become possible because the illumination cues in the image are ambiguous: Subjects may infer either a warm or a cool illuminant, and discount it accordingly. Consistent with this notion, color matches made by B/K and W/G reporters systematically shifted along the daylight locus, with B/K matches shifting away from the warm end of the locus (consistent with discounting a warm illuminant) and W/G matches shifting away from the cool end (consistent with discounting a cool illuminant; Figure 10A, 10B, left plots). W/G matches were also lighter on average than B/K matches, consistent with the idea that subjects are discounting not only chromatic biases in the illuminant but also lightness biases expected if they thought the dress was in shadow (Figure 10A, 10B, right plots). In our prior report, we tested the idea that illumination assumptions underlie the individual differences in color perception of the dress by determining how perception changes when the dress is embedded in a scene with disambiguated lighting (Lafer-Sousa et al., 2015). The results support the idea that observers who see B/K assume the dress is illuminated by a warm illuminant, while observers who see W/G assume that it is illuminated by a cool light source. Further evidence for this idea has been provided by others (Toscani, Gegenfurtner, & Doerschner, 2017; Witzel, Racey, & O'Regan, 2017).

Figure 10.

Color matches made by B/K and W/G reporters track the daylight locus. Chromaticity matches for (A) the top regions of the dress and (B) the bottom regions of the dress. Left graphs: Mean hue (CIE u′, v′) of color-matches made by online subjects (N = 1,174; B/K = 770; W/G = 404). Right plots: Mean lightness of matches (CIE L). Error bars show the 95% confidence interval of the mean. Data were grouped by the verbal report made by the subjects: squares for W/G and circles for B/K. The color of the symbol corresponds to the color term used by the subject (blue, black, white, or gold). Black line shows the daylight locus. Inset = CIE 1976 u′v′ color space with the daylight locus (arrow).

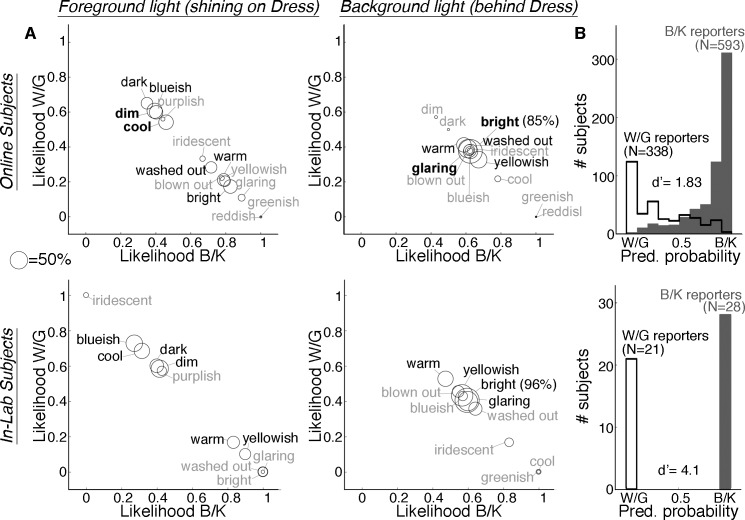

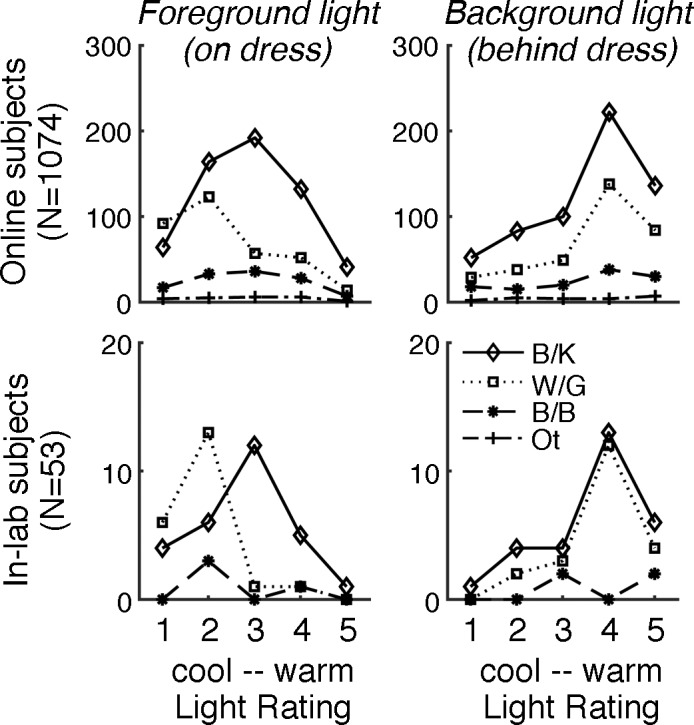

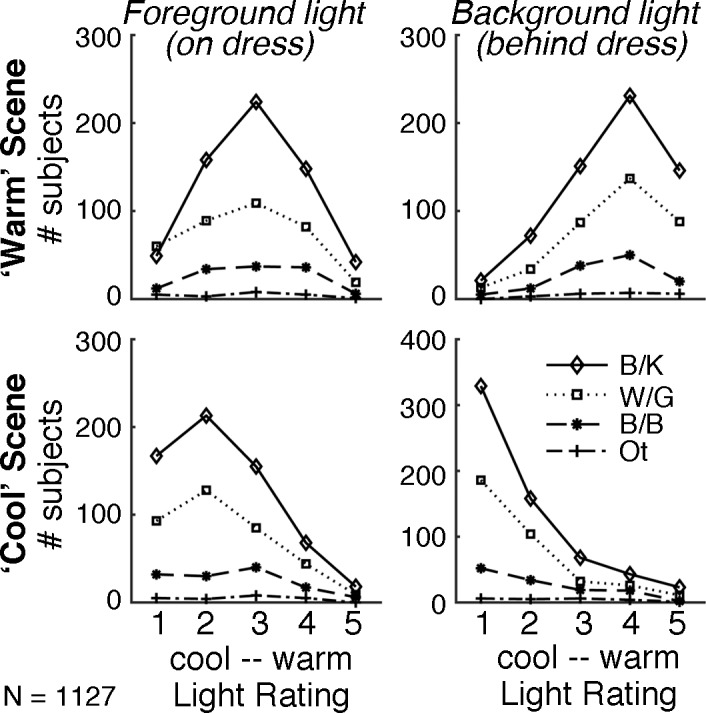

To further test the hypothesis, we applied a classification algorithm to data collected in our initial survey (1,074 subjects online, 53 subjects in the lab), in which we asked people to explicitly report on the lighting conditions in the image (for the full questionnaire, see the supplementary material of Lafer-Sousa et al., 2015). We ran two experiments to assess subjective experience of the lighting conditions. First, we asked subjects to rate the illumination temperature on a scale of 1 to 5 for cool versus warm; second, we asked them to check off any of a number of possible verbal descriptors, including “dim,” “dark,” “bright,” “warm,” “cool,” “blueish,” and “yellowish” (see Methods and materials). Most subjects, regardless of their perception of the dress, reported the background illumination in the image to be warm (Figure 11, right panels; B/K and W/G reporter ratings did not differ, according to a t test—online subjects: p = 0.2; in-lab subjects: p = 0.5). But subjects who saw W/G differed from those who saw B/K in terms of their inference about the light on the dress itself: W/G percepts were associated with cool illumination, as if the dress were backlit and cast in shadow, while B/K percepts were associated with a warmer illumination, as if the dress were lit by the same global light as the rest of the room (Figure 11, left panels; t tests—online subjects: p < 0.001; in-lab subjects: p < 0.001). These analyses quantify results in our initial report and are consistent with other findings (Chetverikov & Ivanchei, 2016; Toscani et al., 2017; Wallisch, 2017; Witzel et al., 2017).

Figure 11.

Temperature ratings of the light shining on the dress systematically differ as a function of percept. Subjects (top row: 1,074 online, bottom row: 53 in-lab) were asked to rate their impression of the light shining on the dress and the light illuminating the background, on a scale of 1–5 (cool to warm). Plots show illumination ratings for the light on the dress (left panels) and the light in the background (right panels), grouped by verbal report of the dress's colors (B/K, W/G, B/B, Other). Subjects' ratings of the light on the dress systematically differed as a function of percept (two-sample t test comparing the ratings provided by subjects who saw the dress as B/K vs. those who saw W/G; online: p < 0.001; in-lab: p = 0.002). Subjects' ratings of the background light did not differ as a function of percept (online: p = 0.2; in-lab: p = 0.5).

The results obtained using data on the warm/cool ratings were confirmed by an analysis of the descriptors that subjects used to characterize the lighting. W/G and B/K subjects were indistinguishable in the words they used to report on the illumination of the background (in Figure 12A the descriptors form one cluster in the right-hand bubble plots, where the most common descriptor was “bright”) but showed strikingly different word choices when reporting on the illumination over the dress itself (descriptors form two clusters: Figure 12A, left-hand bubble plots), with words like “dim” and “cool” corresponding to higher likelihoods of W/G reporting and words like “warm” and “bright” to higher likelihoods of B/K reporting. The binary logistic regression using the lighting descriptors as predictors reliably classified B/K and W/G reporters (Figure 12B, classification histograms; online subjects: correct rate = 84%, d′ = 1.83, R2 = 0.52; in-lab subjects: correct rate = 100%, d′ = +∞, R2 = 1) and outperformed a constant-only model (online subjects: X2 = 583, p < 0.001; in-lab subjects: X2 = 66.9, p = 0.04). Note that, once again, the results obtained online were consistent with those obtained in the lab.

Figure 12.

Subjects' color percepts of the dress are predicted by their inference of the lighting conditions. Subjects (top row: 1,074 online; bottom row: 49 in-lab; only subjects who reported either W/G or B/K are included in this analysis) were asked to characterize the light shining on the dress and the light illuminating the background, by checking off any of a number of possible verbal descriptors from a list (dim, dark, cool, blueish, bright, warm, yellowish, glaring, blown out, washed out, reddish, greenish, purplish, iridescent). (A) For each word in the list, the likelihood of being B/K = (# of B/K reporters who used the term)/(# of B/K + # of W/G who used the term). The diameter of the bubble reflects the proportion of people in the population who used the term: (# of B/K who used it + # of W/G who used it)/(# of B/K reporters + # of W/G reporters). Inset key = 50%. Bubble plots for the light shining on the dress (foreground; left panels) and the light in the background (right panels; top row: online subjects; bottom row: in-lab subjects). (B) Classification histograms for a binary logistic regression where the lighting descriptors were used as predictors to distinguish B/K from W/G reporters—online subjects: correct rate = 84% (85% for B/K, 80% for W/G), d′ = 1.83, R2 = 0.52; in-lab subjects: correct rate 100%, d′ = +∞, R2 = 1. A test of the full model against a constant-only model was statistically significant, indicating that the predictors (the verbal descriptors of the lighting conditions) as a set reliably distinguish between B/K reporters and W/G reporters (online subjects: X2 = 583, p < 0.001; in-lab subjects: X2 = 66.9, p = 0.037).

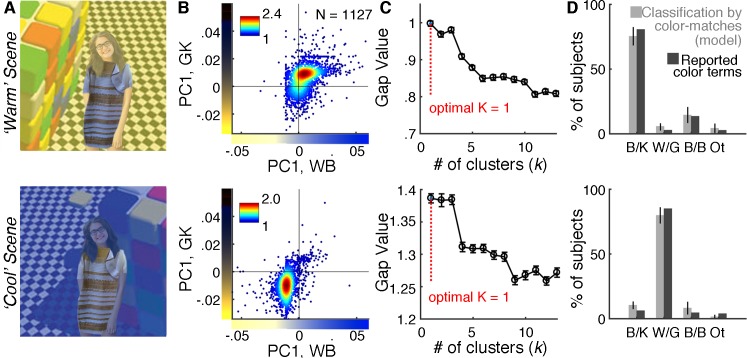

Quantification of color-matching data obtained from 1,127 people mostly online using cue-rich disambiguating stimuli show that for both of the disambiguating scenes (cool and warm illumination simulations; Figure 13A), the optimal k-means clustering solution is a single-component model (one cluster), consistent with the unimodal distribution of color terms reported under these conditions (Figure 13B–13D). When asked to rate the lighting conditions in the simulated scenes, subjects also conformed to ratings consistent with the lighting conditions cued (Figure 14). The results confirm that embedding the dress in scenes with unambiguous illumination cues resolves the individual differences in perception of the dress. The results also prove that the individual differences measured online, for the original dress image, are not simply the result of variability in viewing conditions.

Figure 13.

When the dress is embedded in scenes with overt cues to the illumination, it is perceived in a way that can be predicted from the overt cues: W/G when a cool illuminant is cued, B/K when a warm illuminant is cued. (A) The dress was digitally embedded in simulated contexts designed to convey warm illumination (top row) or cool illumination (bottom). Cues to the illuminant are provided by a global color tint applied to the whole scene, including the skin of the model, but not the pixels of the dress. (B) Distribution of subjects' (N = 1,127; 1,074 online, 53 in-lab) color matches. Conventions as in Figure 1. (C) Results of k-means clustering assessments of the matching data from (B). Conventions as in Figure 2. The analysis favored a single-component model (optimal k = 1 cluster) for both the warm and cool scene distributions. (D) Distribution of categorical percepts observed (dark-gray bars) and the distribution predicted from the multinomial classifier (light-gray bars; see Figure 7). Error bars show 95% confidence intervals. Photograph of the dress used with permission; copyright Cecilia Bleasdale.

Figure 14.

When the dress is embedded in scenes with overt cues to the illumination, the lighting is perceived in a predictable way. Subjects' ratings of the illumination in the simulated scenes from Figure 13 (N = 1,127; online = 1,074; in-lab = 53): warm illumination (top row) and cool illumination (bottom row). Conventions are as in Figure 11; data are grouped by the color terms that subjects used to describe the original dress image. Subjects' ratings of the foreground light did not differ as a function of initial percept (p = 0.9), nor did their ratings of the background light (p = 0.8; two-sample t tests comparing the ratings provided by subjects who originally reported B/K vs. those who originally reported W/G). Rating variance was higher for the original image (Figure 11) than for either test (cool: p < 0.001; warm: p < 0.001; F test), but similar for the tests (p = 0.08).

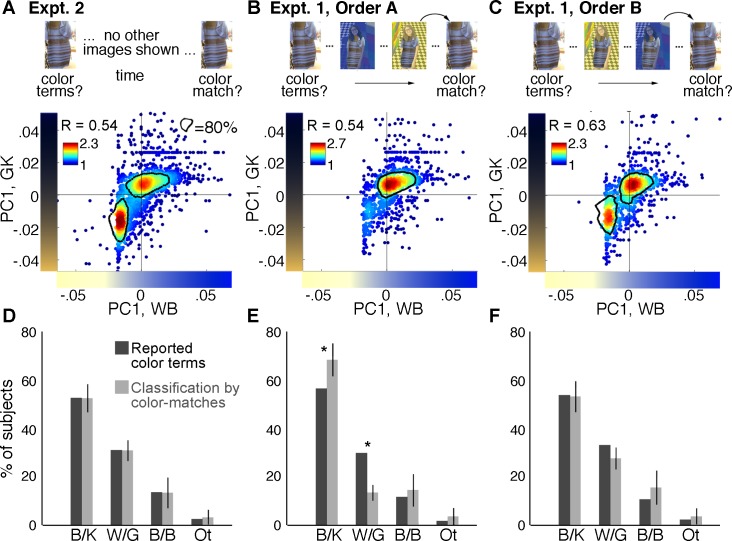

What informs people's priors? Immediate prior exposure

Our results show that experience acquired over the medium term (knowledge of the true colors of the dress) affects perception. What about experience in the very short term? Presumably priors on lighting are updating constantly, weighted by the reliability of the data. We sought to test whether exposure to a disambiguated version of the image that was digitally manipulated to provide clear information about the illuminant affects how subjects see the original image.

We were able to address this hypothesis because we carried out two versions of Experiment 1 (with different participants; Figure 15). In one version, subjects provided color matches for the original dress image after being exposed to the image simulating a warm illuminant; in the second version, subjects provided color matches for the original dress image after being exposed to the image simulating a cool illuminant. We also conducted a separate experiment (Experiment 2) in which subjects were never exposed to the disambiguating stimuli. At the beginning of all experiments, subjects provided color terms for the original image. In our analysis we leveraged the discovery described previously (Figure 7), that color matches reliably predict verbal reports. We compared the verbal reports made by subjects at the beginning of the experiment with the verbal reports we predicted they would make, given their color matches, at the end of the experiment. If exposure to a disambiguated stimulus updates a prior about the lighting condition, the predicted verbal reports made on the basis of color-matching data should differ from the verbal reports made by the subjects for Experiment 1 but not Experiment 2; specifically, the predicted reports in Experiment 2 should be biased toward B/K when subjects were exposed to the warm scene and to W/G when exposed to the cool scene.

Figure 15.

Priming with disambiguated scenes of the dress affects subsequent viewings, providing evidence that priors on lighting conditions can be updated by short-term experience. Two experiments were conducted with separate groups of subjects. In Experiment 1, subjects gave color matches for the original dress image immediately after viewing the disambiguating stimuli from Figure 13; in Experiment 2, subjects were never exposed to the disambiguating stimuli. In Experiment 1, two groups of subjects saw the disambiguating stimuli in one of two orders that differed depending on which disambiguating stimulus immediately preceded the color-matching task. All subjects provided verbal reports prior to viewing the disambiguating stimuli. (A) Analysis of data from Experiment 2 (online subjects: N = 1,126). The scatterplot (conventions as in Figure 1A) shows two peaks. (B) Analysis of color-matching data from Experiment 1, Order A (N = 553; subjects viewed the simulated warm scene—the B/K primer—directly before performing the color-matching task on the original image). K-means clustering returns two clusters, but there is one dominant peak. (C) Analysis of color-matching data from Experiment 1, Order B (N = 523; subjects viewed the simulated cool scene—the W/G primer—directly before the color-matching task on the original image). The scatterplot has two strong peaks. (D) Histograms comparing the distribution of categorical percepts recovered in Experiment 2 (control, no-primer) during the verbal task and the color-matching task. Dark-gray bars show data from the verbal reporting task; light-gray bars show the distribution of verbal reports predicted from the color matches using the category-response model (see Figure 7A; the classifier was trained on half the data and the plot shows classification for the other half). Error bars show 95% confidence intervals. Distributions do not differ. (E) Bar plots showing the distribution of categorical percepts for Experiment 1, Order A. Dark bars show verbal reports collected prior to priming; light bars show verbal labels predicted from color matches made following exposure to the B/K primer. Asterisks indicate a significant shift from W/G to B/K reporting after B/K priming (dark bar falls outside of the 95% confidence interval of the light bar). (F) Histograms comparing distributions for WG primer (Experiment 1, Order B). Photograph of the dress used with permission; copyright Cecilia Bleasdale.

Figure 15A shows the results for the control case—no exposure to a disambiguating stimulus—and replicates the findings in Figure 1A: The density plot shows two strong peaks, corresponding to B/K and W/G reporters (Figure 15A is a subset of the data shown in Figure 1A). We deployed our classifier trained on independent data (Figure 7, full model) to categorize observers on the basis of the color-matching data they provided. The distribution of verbal reports predicted by the classifier (light-gray bars) is almost identical to the distribution of verbal reports that subjects provided (dark-gray bars; Figure 15D). Figure 15B shows the results of Experiment 1, Order A, in which a separate set of subjects (N = 553) viewed the simulated warmly lit scene immediately before they gave color matches for the original image. The distribution of verbal reports (obtained prior to color matching) is essentially indistinguishable from the distribution obtained in the control experiment (compare dark bars in Figure 15E with dark bars in Figure 15D), providing reassurance that we sampled a sufficient number of subjects to recover an accurate estimate of the population distribution. But compared to Figure 15A, the density plot in Figure 15B shows only one strong peak, which aligns with the color matches made by B/K subjects in the control experiment (the contour contains 80% of the data). These results show that more subjects reported B/K, and fewer reported W/G, than expected on the basis of the verbal reports that they provided. K-means clustering returned two optimal clusters, showing that the W/G peak was still present, albeit diminished. The bar plots (Figure 15E) quantify the shift. Figure 15C and 15F show the results for an independent set of subjects (N = 523) who participated in Experiment 1, Order B. Unlike with Order A, the data look similar to the control case—the density plot shows two strong peaks, and the distribution of verbal reports predicted from the color matches is not different from the distribution of verbal reports that subjects actually provided. These results show that priming subjects to see B/K influences them to see B/K, whereas priming subjects to see W/G has no effect.

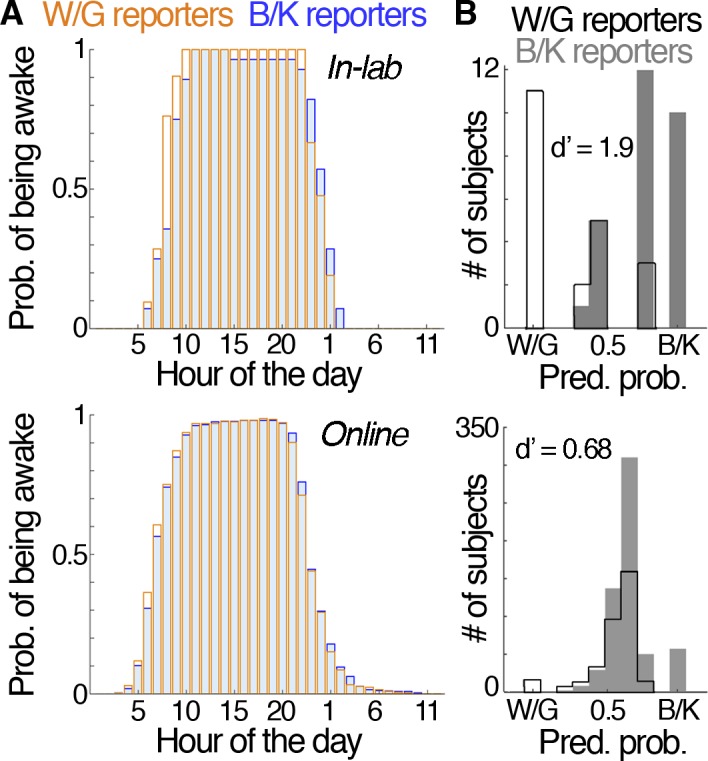

What informs people's priors? Long-term exposure (chronotype)