Abstract

Objective

This systematic review applied meta-analytic procedures to synthesize medication adherence (also termed compliance) interventions that focus on health care providers.

Design

Comprehensive searching located studies testing interventions that targeted health care providers and reported patient medication adherence behavior outcomes. Search strategies included 13 computerized databases, hand searches of 57 journals, and both author and ancestry searches. Study sample, intervention characteristics, design, and outcomes were reliably coded. Standardized mean difference effect sizes were calculated using random-effects models. Heterogeneity was examined with Q and I² statistics. Exploratory moderator analyses used meta-analytic analogues of ANOVA and regression.

Results

Codable data were extracted from 218 reports of 151,182 subjects. The overall standardized mean difference effect size was 0.233. Effect sizes for individual intervention types ranged from 0.076 to 0.301. Interventions were more effective when they included multiple strategies. Risk of bias assessment documented larger effect sizes in studies with larger samples, studies that used true control groups (as compared to attention control), and studies without intention-to-treat analyses.

Conclusion

Overall, this meta-analysis documented that interventions targeted to health care providers significantly improved patient medication adherence. The modest overall effect size suggests that interventions addressing multiple levels of influence on medication adherence may be necessary to achieve therapeutic outcomes.

Keywords: Health care provider, medication adherence, patient compliance, meta-analysis, systematic review

Introduction

Although scientific advances have produced medications for many chronic diseases that slow progression and prevent complications, inadequate medication adherence limits success in achieving therapeutic goals (1). Poor medication adherence has many negative consequences. Patients may experience avoidable morbidity or mortality (1, 2). When drugs’ intended therapeutic effects do not occur, additional health care is necessary and health care costs increase. Diminished worker productivity may occur as diseases and symptoms are poorly controlled (3). Health care providers experience frustration when intended treatments appear to be ineffective in improving clinical outcomes (4).

Health care providers’ (hereafter “providers”) actions and relationships with patients have long been recognized as determinants of medication adherence (hereafter “adherence”) (5–9). Patients’ trust in providers may influence adherence decisions (10–12). Effective patient-provider communication has been linked to adherence (13–15). Adherence directly correlates with patients’ satisfaction with communication from providers (16, 17), ratings of collaborative communication (18, 19), perception of providers as trustworthy (20), and evaluations of providers’ cultural competence (11).

Despite the importance of adherence for achieving therapeutic goals, providers rarely ask patients about adherence, and they do not accurately predict patients’ adherence (21). Tarn et al. found that only 4% of encounters directly addressed non-adherence (22). Patients often do not understand providers’ questions about non-adherence (22). Lack of communication between providers and patients about barriers to adherence is common (23). Providers are reluctant to confront patients about non-adherence, and patients do not spontaneously reveal lack of adherence (22).

Few reviews have synthesized the effects of provider-targeted interventions on medication adherence behavior. Some existing reviews of links between providers and patient behavior offer limited information about medication adherence outcomes because they combined medication adherence with other health behaviors such as appointment-keeping (5, 24–30). Other reviews cover related constructs such as patient-centered care (26). The few relevant meta-analyses of patient health behavior outcomes (not limited to medication adherence) report mean difference effect sizes of 0.145 for provider-targeted interventions (24), 0.24 for physician training to increase communication (30), and 0.24 to 0.33 for provider information-giving and provider questions (27). No previous meta-analysis has examined provider-targeted interventions to increase medication adherence.

Purpose

This systematic review and meta-analysis fills knowledge gaps in the important and growing research area of adherence outcomes of interventions that target providers. Primary study participants included adults taking prescription medications. Eligible interventions targeted providers such as physicians, nurses, physician assistants, pharmacists, case managers, social workers, and respiratory therapists. This review focuses on comparisons of adherence behavior outcomes between treatment and control groups. The research questions were: 1) What is the overall effect of interventions that target providers on adherence behavior outcomes? 2) Do effects of provider-targeted interventions vary depending on intervention characteristics, patient attributes, or design features of primary studies?

Methods

Standard systematic review and meta-analysis methods in accordance with PRISMA guidelines were used to conduct and report this project (31, 32).

Eligibility Criteria

Studies with interventions targeting providers and reporting adherence behavior outcomes were eligible. Adherence refers to the extent to which patient medication-consumption behavior is consistent with provider recommendations (33). Studies including adherence measured as dose timing, dose administration, persistence, and the like were included. Primary studies with varied adherence measures (e.g., pharmacy refills, electronic bottle cap devices) were included because meta-analysis methods convert primary study outcomes to unitless standardized indices (31).

Eligible provider-targeted interventions are those attempting to change either individual providers’ behavior with patients or the organization of providers’ care to increase adherence. Studies of adult subjects were included regardless of their health status. Exclusions included studies with samples of institutionalized persons or those taking the following medications: substance abuse or smoking cessation treatments, vitamins/supplements/nutraceuticals not prescribed by a provider, and reproductive or sexual function medications.

Intervention trials with data adequate to calculate adherence effect sizes in the reported study or available from corresponding authors were included. Because the single most consistent difference between published and unpublished studies is the statistical significance of findings, both published and unpublished studies were included (34). The project focused on treatment-versus-control comparisons. Pre-experimental studies were included in exploratory analyses; they are reported as ancillary information to the more valid two-group comparisons. Non-English studies were eligible for inclusion if research specialists or investigators were fluent in that language.

Information Sources and Search Strategy

An expert health sciences librarian conducted searches in MEDLINE, PUBMED, PsycINFO, CINAHL, Cochrane Central Trials Register, EBSCO, Cochrane Database of Systematic Reviews, EBM Reviews, PDQT, ERIC, IndMed, International Pharmaceutical Abstracts, and Communication and Mass Media. The primary MeSH term used to construct searches was patient compliance for studies published before 2009 and medication adherence for studies published after 2008, when the latter term was introduced. Other MeSH terms used in constructing search strategies were prescription drugs, pharmaceutical preparations, drugs, dosage forms, or generic. Text word searches used: compliant, compliance, adherent, adherence, noncompliant, noncompliance, nonadherent, nonadherence, improve, promote, enhance, encourage, foster, advocate, influence, incentive, ensure, remind, optimize, increase, impact, prevent, address, decrease, prescription(s), prescribed, drug(s), medication(s), pill(s), tablet(s), regimen(s), chemotherapy, agent(s), antihypertensive(s), antituberculosis, antiretrovirals, and HAART. Search terms specific to provider-targeted interventions were not used because search engines lack structured, appropriate terms for these interventions.

Journal hand searches were completed for 57 journals. Searches were conducted in 19 grant databases and clinical trials registers (e.g., Research Portfolio Online Reporting Tool). Abstracts from 48 conferences were retrieved and reviewed. Computerized database searches were conducted on the names of individuals who had authored multiple eligible studies. These authors were also contacted to solicit additional studies.

Study Selection

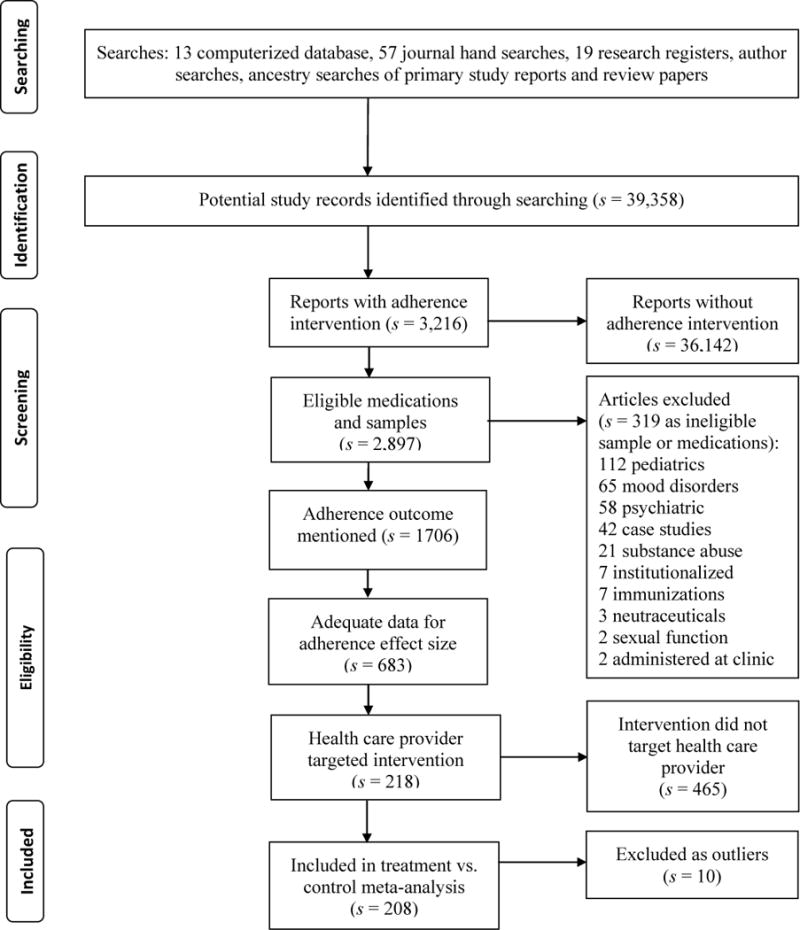

Figure 1 shows how potentially eligible primary studies flowed through the project. These studies were imported into bibliographic software and then tracked with study-specific customized fields. Eligibility criteria were applied: presence of a provider-targeted intervention, eligible sample (including medications), and adherence outcome data. Corresponding authors were contacted to provide this information when necessary.

Figure 1.

Flow diagram for health care provider targeted intervention primary studies

Note: s denotes the number of research reports

Data Collection

The research team’s prior projects, existing meta-analyses on related topics, and experts’ opinions were used to develop a coding frame to extract primary study characteristics and outcomes. Before implementation, the coding frame was pilot-tested with 20 studies. In order to code all targeted interventions in the primary studies, the initial set of coding items about provider-targeted interventions was expanded as new studies revealed additional interventions. Report features were coded (year of distribution, publication status). Patient attributes (gender, age, ethnicity, health) and medication regimen characteristics were coded. Coded methodological features included assignment of subjects to groups, allocation concealment, control group management (attention control vs. true control), masking of data collectors, sample size, attrition rates, and intention-to-treat analyses. Data for effect size calculations such as means, measures of variability, and sample sizes were extracted from primary reports.

Two extensively trained staff members independently coded all study variables; their codes were compared for every variable, and discrepancies were resolved to achieve 100% agreement (35). Data used for effect size calculations were further verified by a third, doctorally prepared coder. To ensure sample independence, the author list of each study was compared with those of other studies to identify potentially overlapping samples; when overlap was unclear, authors were contacted to clarify sample uniqueness. Multiple reports about the same subjects were used as ancillary information to enhance coding.

Risk of Bias

Comprehensive search strategies helped avoid the bias that arises from including only easy-to-locate primary studies, which often have larger effect sizes; meta-analyses limited to such studies, including those limited to published studies, may overestimate effect sizes (36). Both published and unpublished studies were included to avoid publication bias (34). Publication bias was explored with funnel plots and Begg’s and Egger’s tests (37). A priori decisions about which adherence measures to code were used to avoid potential reporting bias within primary studies.
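Egger's test, one of the two publication-bias tests named above, can be sketched as an ordinary least-squares regression of each study's standard normal deviate (effect size divided by its standard error) on its precision (the reciprocal of the standard error); an intercept that departs from zero signals funnel-plot asymmetry. This is a minimal sketch assuming the classic unweighted form of the test; the variant and software used in this review are not stated, and the function name `eggers_test` is hypothetical.

```python
import math

def eggers_test(effects, standard_errors):
    """Egger's regression test for funnel-plot asymmetry (classic OLS form).

    Regresses each study's standard normal deviate (effect / SE) on its
    precision (1 / SE). Returns (intercept, intercept SE, t statistic);
    the t statistic has k - 2 degrees of freedom.
    """
    k = len(effects)
    if k < 3:
        raise ValueError("Egger's test needs at least three studies")
    y = [d / se for d, se in zip(effects, standard_errors)]  # standard normal deviates
    x = [1.0 / se for se in standard_errors]                 # precision
    x_bar = sum(x) / k
    y_bar = sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance gives the standard error of the intercept
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sigma2 = rss / (k - 2)
    intercept_se = math.sqrt(sigma2 * (1.0 / k + x_bar ** 2 / sxx))
    return intercept, intercept_se, intercept / intercept_se
```

The t statistic would be compared with a t distribution on k - 2 degrees of freedom; a small p value indicates that smaller, less precise studies report systematically different effects, which is the pattern the funnel plots are meant to reveal.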

Variation in primary study sample sizes was managed statistically by weighting effect size estimates so that more precise studies (e.g., those with larger samples) had proportionally more influence on effect size findings. Small-sample studies were included because they contribute to effect size estimates; such underpowered studies can be included in meta-analyses because effect sizes do not rely on p values from primary research.

Moderator analyses were used to examine the influence of common aspects of methodological strength (random assignment of subjects, allocation concealment, masking of data collectors, control group management, sample size, attrition rates, intention-to-treat analyses) on effect size. Moderator analyses also examined the type of adherence measurement (i.e., medication event monitoring systems, pharmacy refills, pill counts, and self-report) as a potential source of bias in this area of science. These analyses are a form of sensitivity analysis that addresses risk of bias (32). Quality rating scale scores were not used to weight effect sizes because such scales lack validity and combine distinct aspects of conduct and report quality into single scores that may obscure meaningful differences.

To partially address design bias, effect sizes were reported separately for treatment-versus-control comparisons and for treatment pre-post comparisons. Control group pre-post comparisons were calculated to explore potential bias due to study participation.

Statistical Analysis: Summary Measure and Method of Analysis

For each treatment versus control comparison, a standardized mean difference (d) was calculated (31). The unitless standardized mean difference is the difference between the treatment and control group means divided by the pooled standard deviation. A positive effect size indicates better adherence outcomes for treatment than for control subjects. Supplementary analyses examined treatment group pre- versus post-intervention effect sizes. A similar single-group analysis was conducted for control groups. Single-group findings should be considered ancillary information to the more valid two-group comparisons.
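The standardized mean difference described above can be computed from each comparison's group means, standard deviations, and sample sizes. The sketch below is illustrative: the function name is hypothetical, the sampling-variance formula is the common large-sample approximation, and the optional Hedges small-sample correction is included as an assumption because the text does not say whether it was applied.

```python
import math

def standardized_mean_difference(mean_t, sd_t, n_t, mean_c, sd_c, n_c,
                                 small_sample_correction=False):
    """Return (d, sampling variance of d) for one treatment-vs-control comparison.

    Positive d means the treatment group had higher (better) adherence.
    """
    # Pooled standard deviation across treatment and control groups
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2)
                          / (n_t + n_c - 2))
    d = (mean_t - mean_c) / pooled_sd
    # Large-sample approximation to the sampling variance of d
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))
    if small_sample_correction:
        # Hedges' correction factor J shrinks d slightly in small samples
        j = 1 - 3 / (4 * (n_t + n_c) - 9)
        d, var_d = j * d, j ** 2 * var_d
    return d, var_d
```

For example, hypothetical adherence means of 73% and 68%, a common standard deviation of 20 percentage points, and 50 patients per group give d = 0.25 with a sampling variance of about 0.040.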

Using the effect sizes calculated from individual comparisons, an estimate of overall effect size was determined and associated confidence intervals were constructed. To provide comparisons with larger samples more influence in overall effect size estimates, effect sizes were weighted by the inverse of the within-study sampling variance (38). Random-effects models were used for effect size calculations to acknowledge that effect sizes vary not only from subject-level sampling error but also from other sources of study-level error such as methodological variations (39). Potential outliers were identified by examining externally standardized residuals of effect sizes. Funnel plots of effect sizes against sampling variance were used to explore potential publication bias. To facilitate interpretation, effect sizes were converted to an original metric by multiplying the effect size by the standard deviation and then adding that value to the mean of the control group for that metric.
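The inverse-variance, random-effects pooling described above can be sketched with the DerSimonian-Laird estimator of the between-study variance (tau²). That estimator is an assumption made for illustration; the text does not name the estimator or software used, and the function name is hypothetical.

```python
import math

def random_effects_pool(effects, variances):
    """Pool effect sizes with inverse-variance weights under a random-effects model.

    Between-study variance (tau^2) is estimated with the DerSimonian-Laird
    method of moments. Returns (pooled effect, standard error, tau^2, Q).
    """
    k = len(effects)
    if k < 2:
        raise ValueError("need at least two effect sizes")
    w = [1.0 / v for v in variances]  # fixed-effect weights: 1 / within-study variance
    sum_w = sum(w)
    d_fixed = sum(wi * di for wi, di in zip(w, effects)) / sum_w
    q = sum(wi * (di - d_fixed) ** 2 for wi, di in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c)  # between-study variance, truncated at zero
    w_re = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    d_re = sum(wi * di for wi, di in zip(w_re, effects)) / sum(w_re)
    se_re = math.sqrt(1.0 / sum(w_re))
    return d_re, se_re, tau2, q
```

Adding tau² to every within-study variance flattens the weights, so large studies still count more but no longer dominate the summary as they would under a fixed-effect model.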

Homogeneity was assessed using Q, a conventional heterogeneity statistic, and by computing I², an index of heterogeneity beyond within-study sampling error (39). Heterogeneity was expected because it is common in behavioral research. The expected heterogeneity was handled in four ways: 1) both location and measures of heterogeneity were reported, 2) random-effects analysis models were used because they take into account heterogeneity beyond that explained by moderator analyses, 3) moderator analyses were used to explore heterogeneity, and 4) findings were interpreted in light of discovered heterogeneity (40).
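I² can be derived from Q and the number of comparisons k as I² = 100 × (Q - (k - 1)) / Q, truncated at zero. Applying this standard formula to the Q and k values reported in Table 2 reproduces the tabled I² values; the function name below is illustrative.

```python
def i_squared(q, k):
    """I^2 (percent): share of total variation attributable to heterogeneity."""
    df = k - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0

# Q and k from Table 2 (treatment vs. control; treatment pre-post; control pre-post)
for q, k in [(1324.631, 192), (1372.063, 132), (316.281, 74)]:
    print(round(i_squared(q, k), 3))
# prints 85.581, 90.452, and 76.919 on separate lines
```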

Moderator analyses examined associations between study characteristics and effect sizes (39). Dichotomous moderators were tested with the between-groups heterogeneity statistic (Qbetween), a meta-analytic analogue of ANOVA. Continuous moderators were tested with an unstandardized regression slope and its associated Qmodel statistic, a meta-analytic analogue of regression. Moderator analyses should be considered exploratory.
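For a dichotomous moderator, Qbetween compares the subgroup summary effects, each weighted by the inverse of its squared standard error, with their weighted grand mean; with two subgroups it has 1 degree of freedom, so its p value can be obtained from the complementary error function. This is a simplified sketch with hypothetical function names: it starts from already-pooled subgroup estimates rather than refitting the random-effects model, so it only approximates the values in Table 3.

```python
import math

def q_between(group_effects, group_ses):
    """Between-groups heterogeneity statistic for subgroup summary effects.

    Weighted sum of squared deviations of each subgroup effect from the
    weighted grand mean; degrees of freedom = number of subgroups - 1.
    """
    w = [1.0 / se ** 2 for se in group_ses]
    grand = sum(wi * di for wi, di in zip(w, group_effects)) / sum(w)
    return sum(wi * (di - grand) ** 2 for wi, di in zip(w, group_effects))

def chi2_sf_df1(q):
    """Upper-tail p value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(q / 2.0))
```

With the rounded Table 3 estimates for information about patient adherence given to providers (0.088, SE 0.032, versus 0.260, SE 0.030), `q_between` returns about 15.38, close to the reported 15.194; the gap reflects rounding of the tabled inputs. Similarly, `chi2_sf_df1(5.301)` gives p ≈ .021, matching the distance-reduction row.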

Results

Comprehensive searching located 218 eligible primary study reports with a total of 151,182 subjects (list of primary studies is in the supporting information file). Thirty-two additional papers were located that reported on the same studies; these were used as companion papers to enhance coded data. Two studies reported in Spanish were included. The primary study reports provided information for 196 treatment versus control comparisons at outcome, 139 treatment pre- versus post-intervention comparisons, and 75 control baseline versus outcome comparisons. Eighteen reports included two comparisons (such as two treatment groups compared to control groups), four studies included three comparisons, and one report included four comparisons.

Primary Study Characteristics

Primary studies were most often disseminated as journal articles (s = 203; s denotes the number of reports and k the number of comparisons). Dissertations (s = 12), presentations (s = 2), and unpublished reports (s = 1) were less common. Adherence interventions targeting providers have become increasingly frequent: 15 reports (7%) were disseminated before 1990 and 182 (83%) in 2000 or later. Most primary studies reported funding (s = 163).

Descriptive statistics for primary studies are reported in Table 1. The median sample size was 75 participants. Attrition rates were modest (median 6.79% for two-group comparisons). Across studies, the median percentage of female participants was 53%. The median of the studies’ mean participant ages was 60.70 years. Among the 96 studies reporting ethnicity, the median percentage of ethnic minority participants was 36%. Among the 29 studies that reported the mean number of prescribed medications, the median of these means was 6 medications. The most common diseases among patients were diabetes (k = 89), cardiac diseases (k = 89), hypertension (k = 87), hyperlipidemia (k = 39), HIV (k = 29), renal disease (k = 21), asthma (k = 19), lung disease (k = 16), stroke (k = 15), and gastrointestinal diseases (k = 12). Fewer than 10 studies reported including patients with acute illness, allergies, autoimmune disease, blood disorders, cancer, eye problems, gynecological disorders, liver disease, malaria, nervous system problems, osteoarthritis, osteoporosis, rheumatoid arthritis, seizures, skin diseases, organ transplant, tuberculosis, and vascular disease.

Table 1.

Characteristics of Primary Studies Included in Medication Adherence Meta-analyses

| Characteristic | s | Min | Q1 | Mdn | Q3 | Max |
|---|---|---|---|---|---|---|
| Mean age (years) | 247 | 27.7 | 51 | 60.70 | 66.8 | 84 |
| Total post-test sample size per study | 247 | 5 | 30 | 75 | 148 | 30,073 |
| Percentage attrition in 2-group comparisons | 160 | 0 | 0 | 6.79 | 22.01 | 82.18 |
| Percentage female | 211 | 0 | 42.05 | 53.30 | 66.45 | 100 |
| Percentage ethnic minority | 96 | 0 | 13.50 | 36.10 | 58.95 | 100 |
| Mean number of prescribed medications | 29 | 2.04 | 5.1 | 6 | 7.9 | 15.1 |

Note. Includes all studies that contributed to primary analyses at least one effect size for any type of comparison. s=number of reports providing data on characteristic; Q1=first quartile, Q3=third quartile.

Health Care Provider Targeted Interventions

Several interventions targeting providers were described in primary studies reporting treatment and control group outcomes. In 100 comparisons, research staff trained providers in skills to enhance patient adherence; for example, providers were taught how to uncover patients’ barriers to adherence and find solutions to them, teach patients about adherence, and use standardized adherence treatment checklists. Some interventions (k = 85) increased integration of care, aiming to improve coordination of care across health care professionals such as physicians, pharmacists, nurses, and therapists. In 28 studies, researchers improved providers’ communication skills with patients; typical communication skill interventions focused on listening skills, question-asking strategies to elicit adherence issues, motivational interviewing, and supportive communication. In 18 studies, providers received information about patients’ adherence, for example from medication event monitoring system devices or pharmacy refill data. In 15 studies, researchers directed providers to monitor patients’ adherence behavior, for example with reminder systems that prompted providers to ask intervention patients about adherence and a field for recording adherence information in patients’ medical records. Provider-patient medication concordance, which emphasized negotiated agreement between patients and providers about appropriate medication prescriptions, was used to increase adherence in 13 comparisons. Increased provider time with intervention patients was used in 11 comparisons. Ten studies were designed to reduce the geographical distance between providers and patients; for example, some interventions moved clinics closer to patients, while others provided telehealth services so patients did not need to be physically present at clinics. Three studies attempted to increase continuity of care by ensuring patients saw the same provider over multiple visits or across care sites such as hospitalization and ambulatory care. One study described the intervention as “activating” providers.

The professional preparation of targeted providers was reported in all but seven studies. The most common were physicians who prescribed medications (k = 121), pharmacists (k = 85), and nurses (k = 60). Social workers, advanced practice nurses, and case managers were each targeted in four comparisons. No studies reported targeting physician assistants or respiratory therapists.

Overall Effects of Interventions on Medication Adherence Outcomes

Overall adherence outcome effect sizes are presented in Table 2. Effect sizes were calculated for 196 treatment-versus-control outcome comparisons involving 139,392 subjects. The overall effect size was based on 192 comparisons, after four effect sizes were excluded as outliers. The overall standardized mean difference was 0.233, and it was similar (0.239) when the one extremely large-sample study was excluded. The positive effect size indicates that treatment subjects had significantly better adherence outcomes than control subjects. The overall effect size was significantly heterogeneous (based on the Q statistic), with an I² of 85.581. Converted to an original metric, the 0.233 effect size corresponds to a 73% adherence rate among treatment subjects compared with 68% among control subjects.
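The back-conversion described in the Methods (control-group mean plus effect size multiplied by a standard deviation) is simple arithmetic. The text does not report which standard deviation was used; the 21.5 percentage points below is back-calculated from the reported 73% and 68% rates (5 / 0.233 ≈ 21.5) and is a hypothetical value for illustration only.

```python
def to_original_metric(effect_size, sd, control_mean):
    """Express a standardized mean difference on the outcome's original scale."""
    return control_mean + effect_size * sd

ASSUMED_SD = 21.5  # hypothetical SD (percentage points) implied by 73% vs. 68%
treated = to_original_metric(0.233, ASSUMED_SD, 68.0)
print(round(treated, 1))  # 73.0
```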

Table 2.

Random-Effects Medication Adherence and Health Outcome Estimates and Tests

| Comparison | k | Effect size | p (ES) | 95% confidence interval | Standard error | I² | Q | p (Q) |
|---|---|---|---|---|---|---|---|---|
| Medication Adherence Outcomes | | | | | | | | |
| Treatment vs. control studiesᵃ | 192 | 0.233 | <.001 | 0.186, 0.280 | 0.024 | 85.581 | 1324.631 | <.001 |
| Treatment subjects pre- vs. post-comparisonsᵇ | 132 | 0.311 | <.001 | 0.241, 0.382 | 0.036 | 90.452 | 1372.063 | <.001 |
| Control subjects pre- vs. post-comparisonsᶜ | 74 | 0.024 | .414 | −0.033, 0.081 | 0.029 | 76.919 | 316.281 | <.001 |

k denotes the number of comparisons; ES, effect size; Q is a conventional heterogeneity statistic; I² is the percentage of total variation among studies’ observed effect sizes due to heterogeneity.

ᵃ Four comparisons were excluded as outliers.

ᵇ Seven comparisons were excluded as outliers.

ᶜ One comparison was excluded as an outlier.

Single-group pre-post effect sizes were calculated for 139 treatment comparisons (24,142 subjects) and 75 control comparisons (12,814 subjects). Seven treatment comparisons and one control comparison were excluded as outliers. The overall effect size for treatment pre- versus post-intervention comparisons was 0.311. In contrast, the overall effect size for control group pre-post comparisons was 0.024, indicating that control subjects did not improve their adherence merely through study participation. Both treatment and control single-group comparisons were significantly heterogeneous.
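The confidence intervals and effect-size p values in Table 2 are consistent with a normal approximation: effect ± 1.96 × SE, and a two-sided z test of effect / SE. The sketch below, with an illustrative function name, recomputes them from the tabled effect sizes and standard errors.

```python
import math

def normal_ci_and_p(effect, se, z_crit=1.96):
    """95% confidence interval and two-sided p value from a normal approximation."""
    lower = effect - z_crit * se
    upper = effect + z_crit * se
    p = math.erfc(abs(effect / se) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return lower, upper, p

# Effect sizes and standard errors from Table 2
for label, es, se in [("treatment vs. control", 0.233, 0.024),
                      ("treatment pre-post", 0.311, 0.036),
                      ("control pre-post", 0.024, 0.029)]:
    lower, upper, p = normal_ci_and_p(es, se)
    print(f"{label}: [{lower:.3f}, {upper:.3f}], p = {p:.3f}")
```

Because the tabled standard errors are rounded, the recomputed values differ slightly in places: the treatment pre-post lower bound comes out as 0.240 rather than 0.241, and the control p value as about .408 rather than .414.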

When at least 10 primary studies were available, effect sizes were calculated for disease subgroups consisting of studies in which all subjects had that disease. The effect size for asthma patients was 0.092 (k = 11, 95% CI [−0.013, 0.198]). An effect size of 0.237 (k = 15, 95% CI [−0.016, 0.489]) was calculated for heart failure patient studies. Among primary studies composed entirely of subjects with diabetes, the effect size was 0.219 (k = 17, 95% CI [0.052, 0.395]). An effect size of 0.355 (k = 24, 95% CI [0.243, 0.468]) was found for studies of samples of HIV patients. For studies of subjects with hypertension, the overall effect size was 0.235 (k = 42, 95% CI [0.098, 0.370]).

Synthesis of Specific Provider-targeted Interventions

Table 3 includes results for specific kinds of interventions that were reported by at least five comparisons. An effect size of 0.242 was calculated for studies in which research staff trained providers in skills that enhance adherence. An effect size of 0.235 was found among studies with interventions to increase integration of care across providers. Studies with interventions that improved provider communication skills with patients had an effect size of 0.172. The mean difference effect size for interventions that provided patient adherence information to providers was 0.088. Studies with interventions that directed providers to monitor patients’ adherence behavior had an effect size of 0.207. An effect size of 0.301 was found for studies with interventions that fostered patient-provider concordance. Studies with interventions to increase provider time with patients had an effect size of 0.293. Studies with interventions designed to reduce the geographic distance between providers and patients had an effect size of 0.076. Comparisons between studies with and without each intervention revealed only two statistically significant differences. Studies with interventions to reduce the distance between providers and patients were less effective than studies without this intervention, and studies providing information to providers about patients’ medication adherence were less effective than studies without such information.

Table 3.

Analyses of Specific Provider Interventions

| Moderator | k | Effect size | Standard error | Qbetween | p (Qbetween) |
|---|---|---|---|---|---|
| Improve provider medication adherence intervention skills | | | | 0.403 | .525 |
| Present | 100 | 0.242 | 0.039 | | |
| Absent | 92 | 0.211 | 0.028 | | |
| Integrate health care | | | | 0.008 | .927 |
| Present | 85 | 0.235 | 0.047 | | |
| Absent | 107 | 0.230 | 0.028 | | |
| Improve provider communication skills | | | | 1.392 | .238 |
| Present | 28 | 0.172 | 0.054 | | |
| Absent | 164 | 0.243 | 0.027 | | |
| Information about patient medication adherence given to provider | | | | 15.194 | <.001 |
| Present | 18 | 0.088 | 0.032 | | |
| Absent | 174 | 0.260 | 0.030 | | |
| Provider monitored patient medication adherence | | | | 0.122 | .726 |
| Present | 15 | 0.207 | 0.078 | | |
| Absent | 177 | 0.236 | 0.025 | | |
| Concordance about medications between patient and provider | | | | 0.399 | .528 |
| Present | 13 | 0.301 | 0.110 | | |
| Absent | 179 | 0.230 | 0.025 | | |
| Increased patient time with provider | | | | 1.083 | .298 |
| Present | 11 | 0.293 | 0.056 | | |
| Absent | 181 | 0.229 | 0.025 | | |
| Reduce distance between patient and provider | | | | 5.301 | .021 |
| Present | 10 | 0.076 | 0.067 | | |
| Absent | 182 | 0.240 | 0.025 | | |

k denotes number of comparisons, effect size is standardized mean difference, Q is a conventional heterogeneity statistic.

Further analyses examined the number of provider-targeted interventions in studies and specific combinations of interventions. In a continuous moderator analysis, studies with more provider-targeted interventions had larger effect sizes than studies with fewer (Qmodel = 25.479, p < .001). Only two specific combinations of provider-targeted interventions were reported by at least five studies. Studies combining training to improve providers’ adherence-promoting skills with increased integration of care reported a standardized mean difference of 0.282 (k = 16). Studies that trained providers both to improve their communication skills with patients and to increase their skills to promote adherence reported an overall effect size of 0.118 (k = 16).
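The continuous moderator analysis reported above (Qmodel for the number of provider-targeted interventions) can be sketched as a weighted least-squares meta-regression: effect sizes are regressed on the moderator with weights 1 / (v + tau²), and Qmodel is the squared slope divided by its variance, with 1 degree of freedom. In this sketch tau² is assumed to be supplied from a separate random-effects fit, the inputs are hypothetical, and the function name is illustrative; the review's exact estimation procedure is not described in the text.

```python
import math

def meta_regression(effects, variances, moderator, tau2=0.0):
    """Weighted least-squares meta-regression on one continuous moderator.

    Weights are 1 / (v_i + tau^2). Returns (slope, slope SE, Q_model), where
    Q_model = slope^2 / Var(slope) is referred to chi-square with 1 df.
    """
    w = [1.0 / (v + tau2) for v in variances]
    sum_w = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, moderator)) / sum_w
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, moderator))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar)
              for wi, xi, yi in zip(w, moderator, effects))
    slope = sxy / sxx
    var_slope = 1.0 / sxx  # weights treated as known inverse variances
    q_model = slope ** 2 / var_slope
    return slope, math.sqrt(var_slope), q_model
```

In the review, the moderator would be each comparison's count of provider-targeted interventions; the reported Qmodel cannot be reproduced here without the study-level data.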

Provider interventions were also grouped by whether they focused on patient-provider interaction (increase provider skills to facilitate adherence, improve provider communication skills, provider monitoring of adherence, provider activation, increase provider time with individual patients, increase concordance) or on system-level changes (increase integration of care, improve continuity of care, system total quality improvement, reduce distance between providers and patients). Effect sizes were similar for interaction-focused (0.255) and system-level change (0.300) interventions. Effect sizes also did not differ among studies that targeted physicians (0.227), registered nurses (excluding advanced practice nurses) (0.230), or pharmacists (0.249).

Risk of Bias Analysis

Possible associations between adherence effect sizes and indicators of potential bias were investigated using exploratory moderator analyses. Results of dichotomous moderator analyses are presented in Table 4; results of continuous moderator analyses are reported in the text. Effect sizes were similar for studies with random assignment (0.234) and studies without random assignment (0.226). Studies with allocation concealment (0.229) had effect sizes similar to those of studies without concealment (0.235). Studies with attention control groups (0.030) reported significantly smaller effect sizes than studies with true control groups (0.284). Effect size was unrelated to masking of data collectors. Effect sizes were significantly smaller among studies with intention-to-treat analyses (0.154) than among studies without this approach (0.256). Effect sizes were larger among studies with larger sample sizes (Qmodel = 14.863, p < .001). Attrition rates were unrelated to effect sizes (Qmodel = 0.004, p = .951).

Table 4.

Risk of Bias Sensitivity Analyses: Treatment vs. Control at Outcome

| Moderator | k | Effect size | Standard error | Qbetween | p (Qbetween) |
|---|---|---|---|---|---|
| Allocation to treatment groups | | | | 0.027 | .868 |
| Randomization of individual subjects | 107 | 0.234 | 0.029 | | |
| Subjects not individually randomized | 85 | 0.226 | 0.040 | | |
| Allocation concealment | | | | 0.010 | .922 |
| Allocation concealed | 39 | 0.229 | 0.053 | | |
| Did not report allocation concealed | 153 | 0.235 | 0.027 | | |
| Nature of control group | | | | 47.261 | <.001 |
| Attention control | 45 | 0.030 | 0.023 | | |
| True control | 147 | 0.284 | 0.029 | | |
| Data collector masking | | | | 0.016 | .898 |
| Data collectors masked to group assignment | 43 | 0.228 | 0.051 | | |
| Did not report data collectors masked to group assignment | 149 | 0.235 | 0.028 | | |
| Intention-to-treat approach | | | | 4.969 | .026 |
| Reported intention-to-treat approach | 42 | 0.154 | 0.035 | | |
| Did not report intention-to-treat approach | 150 | 0.256 | 0.030 | | |
| Publication status | | | | 0.039 | .844 |
| Published articles | 176 | 0.232 | 0.025 | | |
| Dissertations, presentations, unpublished reports | 16 | 0.248 | 0.081 | | |
| Adherence measured with medication event monitoring system | | | | 0.720 | .396 |
| Medication event monitoring system | 17 | 0.302 | 0.085 | | |
| Did not use medication event monitoring system | 175 | 0.227 | 0.025 | | |
| Adherence measured with pharmacy refill data | | | | 1.475 | .225 |
| Pharmacy refill data | 42 | 0.183 | 0.050 | | |
| Did not use pharmacy refill data | 150 | 0.253 | 0.029 | | |
| Adherence measured with pill counts | | | | 1.624 | .202 |
| Pill counts | 14 | 0.351 | 0.096 | | |
| Did not use pill counts | 178 | 0.225 | 0.025 | | |
| Adherence measured by self-report | | | | 0.010 | .919 |
| Self-report adherence data | 100 | 0.231 | 0.033 | | |
| Did not use self-report data | 92 | 0.236 | 0.036 | | |

k = number of comparisons; effect size = standardized mean difference; Qbetween = between-groups heterogeneity statistic testing whether the moderator categories differ; p (Qbetween) = p value for Qbetween.
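Each Qbetween in Table 4 tests whether two moderator categories differ. For a dichotomous moderator, a fixed-effect version of this test can be sketched directly from the subgroup summary effect sizes and standard errors. The function name `q_between_two_groups` is hypothetical, and the sketch assumes independent subgroup estimates; the authors' software and any random-effects adjustments are not described in this section, so recomputed values should match the table only approximately.

```python
import math


def q_between_two_groups(es1, se1, es2, se2):
    """Between-groups Q (df = 1) for a dichotomous moderator (hypothetical helper).

    Uses inverse-variance weights built from each subgroup's summary
    effect size and standard error. Returns (Q, p value)."""
    w1, w2 = 1.0 / se1 ** 2, 1.0 / se2 ** 2
    pooled = (w1 * es1 + w2 * es2) / (w1 + w2)  # weighted mean across both subgroups
    q = w1 * (es1 - pooled) ** 2 + w2 * (es2 - pooled) ** 2
    # Upper-tail chi-square probability with 1 df: P(X > q) = erfc(sqrt(q / 2)).
    p = math.erfc(math.sqrt(q / 2.0))
    return q, p


# Nature of control group, values from Table 4: attention vs. true control.
q, p = q_between_two_groups(0.030, 0.023, 0.284, 0.029)
print(f"Q_between = {q:.2f}, p = {p:.1e}")
```

With the tabled values for attention versus true control groups this gives Q of about 47.1 against the reported 47.261; the small gap presumably reflects rounding of the published estimates or details of the authors' procedure.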

The method used to measure adherence is a potential source of bias in adherence science. Of the 192 comparisons, 14 used pill counts, 17 used medication event monitoring systems, 42 used pharmacy refill data, and 100 used patient self-report. When effect sizes for each method were compared with those for all other methods, no statistically significant differences were found (Table 4).

The impact of publication status on effect size was also investigated. Effect sizes were similar for published (0.232) and unpublished (0.248) studies. The funnel plot for the treatment versus control two-group comparisons suggested that studies with nonsignificant results are under-reported; both Begg’s (p < .001) and Egger’s (p = .009) tests were consistent with potential publication bias. Funnel plots and Begg’s (p = .007) and Egger’s (p = .005) tests suggested similar publication bias for the treatment baseline versus treatment outcome comparisons. In contrast, the funnel plot for control group effect sizes showed no evidence of publication bias, consistent with Begg’s (p = .641) and Egger’s (p = .275) tests. (Funnel plots are available from the senior author.)
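Begg’s and Egger’s tests are standard checks for funnel-plot asymmetry. The sketch below implements textbook versions of both in plain Python so the logic is visible: Egger’s test regresses each standardized effect (effect/SE) on its precision (1/SE) and examines the intercept, and Begg and Mazumdar’s test computes Kendall’s tau between variance-standardized deviates and the variances. The function names and toy data are hypothetical, the Begg sketch applies no correction for ties, and this section does not state which software or variants the authors used. To stay dependency-free, the Egger sketch returns the t statistic and its degrees of freedom rather than a p value.

```python
import math


def egger_test(effects, ses):
    """Egger's regression test for funnel-plot asymmetry (hypothetical helper).

    Regresses the standard normal deviate (effect / SE) on precision (1 / SE)
    by ordinary least squares; an intercept far from zero suggests asymmetry.
    Returns (intercept, t statistic, degrees of freedom)."""
    y = [e / s for e, s in zip(effects, ses)]  # standard normal deviates
    x = [1.0 / s for s in ses]                 # precisions
    n = len(y)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = rss / (n - 2)  # residual variance
    se_intercept = math.sqrt(s2 * (1.0 / n + x_bar ** 2 / sxx))
    return intercept, intercept / se_intercept, n - 2


def begg_test(effects, ses):
    """Begg and Mazumdar rank correlation test, no tie correction (hypothetical helper).

    Computes Kendall's tau between variance-standardized deviates and the
    variances. Returns (tau, z, two-sided p from the normal approximation)."""
    v = [s ** 2 for s in ses]
    w = [1.0 / vi for vi in v]
    pooled = sum(wi * ei for wi, ei in zip(w, effects)) / sum(w)  # fixed-effect mean
    v_pooled = 1.0 / sum(w)
    t_star = [(e - pooled) / math.sqrt(vi - v_pooled) for e, vi in zip(effects, v)]
    k = len(effects)
    concordant = discordant = 0
    for i in range(k):
        for j in range(i + 1, k):
            product = (t_star[i] - t_star[j]) * (v[i] - v[j])
            if product > 0:
                concordant += 1
            elif product < 0:
                discordant += 1
    s = concordant - discordant
    tau = s / (k * (k - 1) / 2)
    z = s / math.sqrt(k * (k - 1) * (2 * k + 5) / 18.0)
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return tau, z, p


# Hypothetical data in which smaller studies (larger SEs) report larger effects.
ses = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30]
effects = [0.26, 0.29, 0.37, 0.38, 0.46, 0.49]
print(egger_test(effects, ses))
print(begg_test(effects, ses))
```

Because the toy effects grow with standard error, both the Egger intercept and Kendall’s tau come out positive, the pattern a funnel plot would show as missing small null studies.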

Discussion

This project is the first comprehensive systematic review and meta-analysis of interventions targeted at providers to increase adherence. The significant positive overall mean difference indicates that these interventions are effective in changing patients’ adherence behavior. The overall mean difference effect size of 0.233 was similar to the 0.24 effect size of a meta-analysis of provider-targeted interventions for other health behaviors (30) and smaller than the 0.58 effect size for pharmacist interventions to increase adherence (41). The modest effect size may reflect the difficulty of changing both patients’ adherence behavior and health care providers’ behavior.

Although the interventions targeted providers, the extent to which they changed providers’ behavior with patients is unknown. Provider interactions with patients must address many issues besides adherence (30), and time spent on adherence may compete with gathering information for accurate diagnoses and planning treatments other than medication. Because providers develop habits for managing patients efficiently, long-established provider behaviors may be difficult to modify. This could be reflected in the low effect size for interventions designed to change provider communication skills. Future research that targets providers should measure provider behavior with patients to verify that interventions actually change provider actions. Research that links documented provider actions to patient adherence outcomes would specify more precisely which provider behaviors improve patient adherence in clinical practice settings.

Medication adherence is important, and strategies to enhance and sustain it should be integrated into clinical practice. However, providers (e.g., physicians) have limited time with patients and may not be able to deliver adherence services themselves. Individuals with expertise in adherence assessment and management could be incorporated into health care teams to provide such services. Further, if third-party payers reimbursed adherence support services, health care systems would be more likely to offer comprehensive adherence services.

Increasing medication adherence from 68% to 73% may be clinically meaningful for some diseases. The adherence required to achieve therapeutic goals is unknown for many medications (42). The mean adherence scores reflect aggregate changes; many patients likely achieved much higher adherence, while others experienced no improvement. Thus, some patients probably attained adherence sufficient for clinical improvement, while others received limited therapeutic benefit because of poor adherence. Future research should identify which strategies are most useful for which patients. Providers need to understand the difficulty of changing adherence behavior and be willing to broaden interventions beyond their own interactions with patients to help patients attain clinical improvements. We found that interventions using multiple strategies to change provider behavior were associated with greater improvement in adherence. Efforts to change provider behavior regarding adherence in clinical practice settings will therefore likely need multiple approaches to achieve the greatest impact on patient outcomes.
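The absolute and relative size of this change can be made explicit, using the control and treatment means given in the Message for the Clinic:

$$
\Delta_{\text{abs}} = 73\% - 68\% = 5 \text{ percentage points}, \qquad
\Delta_{\text{rel}} = \frac{73 - 68}{68} \approx 0.074 = 7.4\%
$$

Purely as an illustration, and assuming the 5-point gap corresponds to the pooled standardized mean difference of 0.233 (this section does not state how the percentages were derived), the implied between-person standard deviation of adherence would be roughly $5 / 0.233 \approx 21.5$ percentage points.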

We found no evidence that either individual-level or systems-level interventions are superior. Interventions targeting individual provider interactions with patients had effect sizes of 0.088 to 0.301, and interventions focused on systems of providers’ care had standardized mean difference effect sizes of 0.076 to 0.293. Given the difficulty of changing adherence behavior, interventions that focus on providers may be only a partial solution. With such modest effect sizes, it is unclear whether the cost and difficulty of changing provider behavior, as the sole intervention to increase adherence, are warranted. In practice, interventions beyond provider behavior should be used to increase adherence enough to achieve therapeutic goals.

This project focused on interventions to change providers’ interactions with patients or their delivery of care. Patient perceptions of providers are rarely reported in intervention studies and thus could not be synthesized. This is an important limitation of the extant literature, given evidence that patients’ perceptions of their providers may influence adherence (10–20). Future research that examines patient perceptions of provider interventions to increase adherence would be useful.

Data were analyzed by the presence or absence of specific provider-targeted interventions, because the primary study reports on which meta-analyses rely describe whether an intervention was present but not the extent of its implementation. Providers’ actions and relationships with patients lie on a continuum rather than being dichotomous (24), so this is a limitation of the meta-analysis. Future research should examine the dose of provider interventions in relation to adherence outcomes. Despite comprehensive searching, individual relevant studies may have been missed. Some potentially informative variables, such as the number of prescribed medications patients were taking, were excluded from moderator analyses because they were poorly reported in primary studies; new adherence research should report medication information in more detail. In studies with multiple provider-targeted interventions, the dominant component could not be determined because research reports did not specify it. Finally, some primary studies lacked random assignment, allocation concealment, data collector masking, adherence measures with documented validity, or intention-to-treat analyses, all potential sources of bias.

This is the first systematic review and meta-analysis to examine provider-targeted interventions designed to improve adherence. The overall effect size confirmed that these interventions improve adherence behavior, but the effect size was modest. Primary research comparing specific provider-linked intervention components in individual trials is necessary to move knowledge forward. Complex multi-level interventions that target systems of care, providers’ actions and relationships with patients, family and larger social context, and individual patient determinants of adherence may be necessary to dramatically improve adherence to achieve therapeutic outcomes (33).

Review Criteria

Eligible studies tested interventions that targeted health care providers to increase patient medication adherence and reported patient medication adherence behavior outcomes.

Comprehensive searching was conducted in 13 databases and 57 journals; ancestry and author searches were completed.

Standardized mean difference effect sizes between treatment and control subjects were calculated. Effect sizes were calculated separately for different characteristics of health care provider targeted interventions.
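The effect-size computation summarized above can be sketched as follows. This is a minimal, textbook sketch under stated assumptions: it computes Hedges’ g (treatment minus control, pooled SD, small-sample correction J) from group means, SDs, and sizes, and pools effects with the DerSimonian-Laird random-effects estimator, also reporting Q and I². The function names are hypothetical, and the choice of DerSimonian-Laird for the between-study variance is an assumption; this section does not specify the estimator or how baseline or change scores were handled.

```python
import math


def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Standardized mean difference (treatment minus control) with Hedges'
    small-sample correction (hypothetical helper). Returns (g, variance of g)."""
    df = n_t + n_c - 2
    sd_pooled = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / sd_pooled
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))
    j = 1 - 3 / (4 * df - 1)  # correction factor J
    return j * d, j ** 2 * var_d


def random_effects_dl(effects, variances):
    """DerSimonian-Laird random-effects pooling (hypothetical helper).
    Returns (pooled effect, its SE, tau^2, Q, I^2 in percent)."""
    w = [1 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)  # fixed-effect mean
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)  # between-study variance, truncated at zero
    w_star = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, se, tau2, q, i2


# Hypothetical trial: adherence means of 73 vs. 68 (SD 20), 100 per group.
g, v = hedges_g(73, 68, 20, 20, 100, 100)
print(round(g, 3))  # 0.249
```

In this hypothetical trial d is 0.25, and the correction J (about 0.996) shrinks it slightly to g of about 0.249; each study's g and variance would then be passed to `random_effects_dl`.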

Message for the Clinic

Overall, interventions that target health care providers significantly improve patient adherence, but the magnitude of improvement is modest (68% adherence among control subjects vs. 73% among treatment subjects).

Although interventions were more effective when they included multiple strategies, there was little evidence supporting specific strategies.

Successful interventions to dramatically improve medication adherence may need to address multiple levels of influence beyond health care providers.

Acknowledgments

The project was supported by Award Number R01NR011990 (Conn-principal investigator) from the National Institutes of Health. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The funding agency had no role in study design, data collection, data analysis, manuscript preparation, or publication decisions.

Footnotes

Author Contributions: Dr. Conn conceptualized and designed the study, secured funding, analyzed and interpreted data, and wrote the first draft of the manuscript. Dr. Ruppar participated in conceptualizing the study, designing provider-specific coding items, interpreting findings, and critically revising the manuscript. Dr. Enriquez provided primary health care provider practice and research expertise in designing coding items, interpreting moderator analyses, and reviewing the manuscript. Dr. Cooper provided expertise in designing coding items for descriptive information and primary study results, interpreting findings, and extensive manuscript revision. Dr. Chan participated in conceptualizing health care provider-specific variables, acquiring data, analyzing data, and manuscript revision.

Disclosures: The authors disclose no actual or potential conflicts of interest.

Conflict of Interest

The authors declare that there are no conflicts of interest.

Contributor Information

V. S. Conn, School of Nursing, S317 Sinclair Building, University of Missouri, Columbia MO 65211, United States of America

T. M. Ruppar, School of Nursing, S413 Sinclair Building, University of Missouri, Columbia MO 65211, United States of America

M. Enriquez, School of Nursing, S328 Sinclair Building, University of Missouri, Columbia MO 65211, United States of America

P. S. Cooper, School of Nursing, S303 Sinclair Building, University of Missouri, Columbia MO 65211, United States of America

K. C. Chan, School of Nursing, S319 Sinclair Building, University of Missouri, Columbia MO 65211, United States of America

References

1. DiMatteo MR, Giordani PJ, Lepper HS, Croghan TW. Patient adherence and medical treatment outcomes: a meta-analysis. Med Care. 2002;40:794–811. doi: 10.1097/00005650-200209000-00009.

2. Simpson SH, Eurich DT, Majumdar SR, et al. A meta-analysis of the association between adherence to drug therapy and mortality. BMJ. 2006;333:15. doi: 10.1136/bmj.38875.675486.55.

3. Christensen A. Patient adherence to medical treatment regimens: bridging the gap between behavior science and biomedicine. New York: Yale University Press; 2004.

4. Rosenbaum L, Shrank WH. Taking our medicine–improving adherence in the accountability era. N Engl J Med. 2013;369:694–5. doi: 10.1056/NEJMp1307084.

5. Becker MH, Maiman LA. Sociobehavioral determinants of compliance with health and medical care recommendations. Med Care. 1975;13:10–24. doi: 10.1097/00005650-197501000-00002.

6. Bender B. Physician-patient communication as a tool that can change adherence. Ann Allergy Asthma Immunol. 2009;103:1–2. doi: 10.1016/S1081-1206(10)60134-2.

7. Bosworth HB, Dudley T, Olsen MK, et al. Racial differences in blood pressure control: potential explanatory factors. Am J Med. 2006;119:70.e9–15. doi: 10.1016/j.amjmed.2005.08.019.

8. Gillum RF, Barsky AJ. Diagnosis and management of patient noncompliance. JAMA. 1974;228:1563–7.

9. Laws MB, Beach MC, Lee Y, et al. Provider-patient adherence dialogue in HIV care: results of a multisite study. AIDS Behav. 2013;17:148–59. doi: 10.1007/s10461-012-0143-z.

10. Blackstock OJ, Addison DN, Brennan JS, Alao OA. Trust in primary care providers and antiretroviral adherence in an urban HIV clinic. J Health Care Poor Underserved. 2012;23:88–98. doi: 10.1353/hpu.2012.0006.

11. Gaston GB. African-Americans’ perceptions of health care provider cultural competence that promote HIV medical self-care and antiretroviral medication adherence. AIDS Care. 2013;25:1159–65. doi: 10.1080/09540121.2012.752783.

12. Sale JE, Gignac MA, Hawker G, et al. Decision to take osteoporosis medication in patients who have had a fracture and are ‘high’ risk for future fracture: a qualitative study. BMC Musculoskelet Disord. 2011;12:92. doi: 10.1186/1471-2474-12-92.

13. Harrington J, Noble LM, Newman SP. Improving patients’ communication with doctors: a systematic review of intervention studies. Patient Educ Couns. 2004;52:7–16. doi: 10.1016/s0738-3991(03)00017-x.

14. Jahng KH, Martin LR, Golin CE, DiMatteo MR. Preferences for medical collaboration: patient-physician congruence and patient outcomes. Patient Educ Couns. 2005;57:308–14. doi: 10.1016/j.pec.2004.08.006.

15. Stavropoulou C. Non-adherence to medication and doctor-patient relationship: Evidence from a European survey. Patient Educ Couns. 2011;83:7–13. doi: 10.1016/j.pec.2010.04.039.

16. Bushnell CD, Olson DM, Zhao X, et al. Secondary preventive medication persistence and adherence 1 year after stroke. Neurology. 2011;77:1182–90. doi: 10.1212/WNL.0b013e31822f0423.

17. Thames AD, Moizel J, Panos SE, et al. Differential predictors of medication adherence in HIV: findings from a sample of African American and Caucasian HIV-positive drug-using adults. AIDS Patient Care STDS. 2012;26:621–30. doi: 10.1089/apc.2012.0157.

18. Schoenthaler A, Chaplin WF, Allegrante JP, et al. Provider communication effects medication adherence in hypertensive African Americans. Patient Educ Couns. 2009;75:185–91. doi: 10.1016/j.pec.2008.09.018.

19. Schoenthaler A, Allegrante JP, Chaplin W, Ogedegbe G. The effect of patient-provider communication on medication adherence in hypertensive black patients: does race concordance matter? Ann Behav Med. 2012;43:372–82. doi: 10.1007/s12160-011-9342-5.

20. Ledford CJ, Villagran MM, Kreps GL, et al. “Practicing medicine”: patient perceptions of physician communication and the process of prescription. Patient Educ Couns. 2010;80:384–92. doi: 10.1016/j.pec.2010.06.033.

21. Zeller A, Taegtmeyer A, Martina B, Battegay E, Tschudi P. Physicians’ ability to predict patients’ adherence to antihypertensive medication in primary care. Hypertension Research - Clinical & Experimental. 2008;31:1765–71. doi: 10.1291/hypres.31.1765.

22. Tarn DM, Mattimore TJ, Bell DS, Kravitz RL, Wenger NS. Provider views about responsibility for medication adherence and content of physician-older patient discussions. J Am Geriatr Soc. 2012;60:1019–26. doi: 10.1111/j.1532-5415.2012.03969.x.

23. Wilson IB, Schoen C, Neuman P, et al. Physician-patient communication about prescription medication nonadherence: a 50-state study of America’s seniors. J Gen Intern Med. 2007;22:6–12. doi: 10.1007/s11606-006-0093-0.

24. Arbuthnott A, Sharpe D. The effect of physician-patient collaboration on patient adherence in non-psychiatric medicine. Patient Educ Couns. 2009;77:60–7. doi: 10.1016/j.pec.2009.03.022.

25. Beck RS, Daughtridge R, Sloane PD. Physician-patient communication in the primary care office: a systematic review. J Am Board Fam Pract. 2002;15:25–38.

26. Dwamena F, Holmes-Rovner M, Gaulden CM, et al. Interventions for providers to promote a patient-centred approach in clinical consultations. Cochrane Database of Systematic Reviews. 2012;12:1–178. doi: 10.1002/14651858.CD003267.pub2.

27. Hall JA, Roter DL, Katz NR. Meta-analysis of Correlates of Provider Behavior in Medical Encounters. Med Care. 1988;26:657–75. doi: 10.1097/00005650-198807000-00002.

28. Krueger KP, Berger BA, Felkey B. Medication adherence and persistence: a comprehensive review. Adv Ther. 2005;22:313–56. doi: 10.1007/BF02850081.

29. Mead N, Bower P. Patient-centred consultations and outcomes in primary care: a review of the literature. Patient Educ Couns. 2002;48:51–61. doi: 10.1016/s0738-3991(02)00099-x.

30. Zolnierek KB, Dimatteo MR. Physician communication and patient adherence to treatment: a meta-analysis. Med Care. 2009;47:826–34. doi: 10.1097/MLR.0b013e31819a5acc.

31. Cooper H, Hedges L, Valentine J, editors. The Handbook of Research Synthesis and Meta-Analysis. 2nd ed. New York: Russell Sage Foundation; 2009.

32. Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700. doi: 10.1136/bmj.b2700.

33. World Health Organization. Adherence to long-term therapies: evidence for action. Geneva, Switzerland: World Health Organization; 2003.

34. Rothstein HR, Hopewell S. Grey literature. In: Cooper H, Hedges L, Valentine J, editors. The Handbook of Research Synthesis and Meta-Analysis. 2nd ed. New York: Russell Sage Foundation; 2009. pp. 103–25.

35. Wilson D. Systematic coding. In: Cooper H, Hedges L, Valentine J, editors. The Handbook of Research Synthesis and Meta-Analysis. 2nd ed. New York: Russell Sage Foundation; 2009. pp. 159–76.

36. White H. Scientific communication and literature retrieval. In: Cooper H, Hedges L, Valentine J, editors. The Handbook of Research Synthesis and Meta-Analysis. 2nd ed. New York: Russell Sage Foundation; 2009. pp. 51–71.

37. Sutton AJ. Publication bias. In: Cooper H, Hedges L, Valentine J, editors. The Handbook of Research Synthesis and Meta-Analysis. 2nd ed. New York: Russell Sage Foundation; 2009. pp. 435–52.

38. Hedges L, Olkin I. Statistical Methods for Meta-Analysis. Orlando, FL: Academic Press; 1985.

39. Borenstein M, Hedges L, Higgins JPT, Rothstein H. Introduction to Meta-Analysis. West Sussex, England: John Wiley & Sons, Ltd; 2009.

40. Conn VS, Hafdahl AR, Mehr DR, LeMaster JW, Brown SA, Nielsen PJ. Metabolic effects of interventions to increase exercise in adults with type 2 diabetes. Diabetologia. 2007;50:913–21. doi: 10.1007/s00125-007-0625-0.

41. Chisholm-Burns MA, Kim Lee J, Spivey CA, et al. US Pharmacists’ Effect as Team Members on Patient Care: Systematic Review and Meta-Analyses. Med Care. 2010;48:923–33. doi: 10.1097/MLR.0b013e3181e57962.

42. Peeters B, Van Tongelen I, Boussery K, Mehuys E, Remon JP, Willems S. Factors associated with medication adherence to oral hypoglycaemic agents in different ethnic groups suffering from type 2 diabetes: a systematic literature review and suggestions for further research. Diabet Med. 2011;28:262–75. doi: 10.1111/j.1464-5491.2010.03133.x.