Abstract

While Virtual Reality (VR) has emerged as a viable method for training new medical residents, it has not yet reached all areas of training. One area lacking such development is surgical residency programs, where skill development involves steep learning curves. To address this gap, a Dynamic Haptic Robotic Trainer (DHRT) was developed to help train surgical residents in the placement of ultrasound-guided Internal Jugular Central Venous Catheters and to incorporate personalized learning. To accomplish this, a two-part study was conducted to: (1) systematically analyze the feedback given to 18 third-year medical students by trained professionals to identify the items necessary for a personalized learning system and (2) develop and experimentally test the usability of the personalized learning interface within the DHRT system. The results can be used to inform the design of VR and personalized learning systems within the medical community.

INTRODUCTION

Virtual reality (VR) is emerging in the medical field as a revolutionary training method for surgical procedures (Ruthenbeck & Reynolds, 2015) because it provides a realistic environment to learn and practice surgical skills without compromising patient safety (Frank et al., 2010). Although studies show that surgical complication rates are reduced when training is provided on a simulator (Evans et al., 2010), there are still large skill gaps between an individual’s performance on a simulator and their first performance of a surgical procedure on a patient (Ericsson, 2004). In addition, while VR simulators may have an advantage over standard simulators for improving learning gains through adaptive and real-time feedback, research has shown that the timing and type of feedback (task-specific, process-specific, or self-regulatory) provided to an individual can change what and how they learn (Hattie & Timperley, 2007). Specifically, researchers have shown that providing detailed and appropriate feedback is a critical part of the learning process and that the opportunity for deliberate practice to incorporate that feedback is crucial to the development of expertise in surgery (Ericsson, 2006). This prior work points to the need to improve VR training through more personalized learning feedback platforms in an effort to reduce the skills gap between simulator and actual performance.

Within the medical field, most training feedback has trended towards the use of checklists that ensure critical points are being met. However, these checklists can result in observers simply checking off boxes rather than providing helpful feedback (Dong et al., 2010). In contrast, an advantage of training and providing feedback through VR is that students can receive detailed, personalized feedback on skills rather than a simple ‘yes’ or ‘no’ checkbox. Importantly, the effectiveness of this feedback relies heavily on the system’s graphical user interface (GUI) design. For example, a study by Magner, Schwonke, Aleven, Popescu, and Renkl (2014) suggested that learning can be increased through motivation and situational interest and that these factors could be influenced through the aesthetics of the feedback system. Additionally, user testing and good aesthetics of a GUI for a learning system are important because perceptions of usability can influence the actual usability of a system (Kurosu & Kashimura, 1995). In other words, if a poorly designed GUI makes a system appear difficult to operate, users may conclude that the system itself is difficult to use, therefore reducing learning. The format in which information, in this case learning feedback, is presented is also important. For example, one study found that providing both textual and graphical feedback to participants who were learning a new skill improved their retention of the information compared to textual or graphical feedback alone (Rieber, Tzeng, & Tribble, 2004). Thus, if designed well, VR simulators can have a major advantage over standard “dummy” simulators because they can present multiple types of feedback to users in both text and graphical formats.
In addition, VR systems can provide real-time tactile and visual feedback that corresponds to variations in anatomy and can therefore present realistically difficult situations (Grantcharov et al., 2004).

While VR simulators show great promise in medical education, they have yet to be fully extended to all areas of medical education, including central venous catheterization (CVC). Developing surgical trainers for CVC is important because up to 39% of CVC patients experience adverse effects (McGee & Gould, 2003) and there is a high rate of morbidity in hospitalized patients (Leape et al., 1991). The current state of the art in CVC training involves “realistic” manikins featuring an arterial pulse (controlled through a hand-pump) and self-sealing veins. While these types of trainers provide a low-stress, no-risk method to learn surgical procedures (Kunkler, 2006), they are static in nature, representing only a single patient type and anatomy. In addition, they require feedback from an individual who is trained in the field. Importantly, the current evaluation method for CVC training uses a 10-question binary checklist (see the Phase 1 “Data Analysis” section for details).

In order to overcome the deficits of current trainers, a Dynamic Haptic Robotic Trainer (DHRT) (see Figure 1) was developed to expose medical residents who have not yet performed the procedure to accurate haptic feedback and variations in patient anatomy before they place a line in a clinical setting (see Pepley et al., 2016, for full details on the system). This is important because research has suggested that many of the complications associated with CVC placement are due to variations in patient anatomy (Kirkpatrick et al., 2008). Thus, the DHRT system was developed to overcome these issues. The DHRT system includes a simulated and interactive ultrasound probe, an ultrasound screen, a needle, and a variety of patient cases. Importantly, an initial short-term study with medical students comparing the DHRT system to standard manikin training found no differences in learning outcomes or self-efficacy between the two systems (Yovanoff et al., 2016). Although that study scored performance with the internal jugular (IJ) CVC checklist, the verbal feedback provided to participants often corrected items that had been marked as ‘pass’ on the binary form. This suggests that the checklist may not be an effective method for providing feedback. While the DHRT system can provide personalized feedback, no effective feedback method had yet been developed for it.

Figure 1.

The Dynamic Haptic Robotic System

Thus, the current two-phase study was developed to: (1) systematically analyze the feedback given to individuals being trained on IJ CVC procedures to develop a personalized learning system and (2) develop and experimentally test the usability of a personalized learning interface within the DHRT system. Importantly, the work presented here uniquely addresses two of the “Grand Challenges of Engineering for the 21st Century” proposed by the National Academy of Engineering: “Enhancing Virtual Reality” and “Personalized Learning” (“NAE Grand Challenges for Engineering,” 2017). These results can be used to inform the design of VR and personalized learning systems within the medical community.

PHASE 1: LEARNING SYSTEM DEVELOPMENT

The purpose of phase 1 of the study was to determine the content of the feedback provided to trainees during the IJ-CVC procedure through an experimental study with 18 medical students (11 male, 7 female) between the ages of 23 and 35 with a mean age of 26. The details of the study follow.

Procedure

At the start of the study, the purposes and procedures were explained to participants and informed consent was obtained. Next, participants were given a demonstration of the CVC procedure on a manikin. Individually, each participant then completed a pre-test and received feedback on the same manikin. Participants then completed a practice session with 8 insertions where they received verbal feedback. Finally, a post-test was completed on a different manikin and feedback was provided. For a detailed description of this experimental procedure see (Yovanoff et al., 2016).

To aid readers who are unfamiliar with the CVC procedure, the needle insertion is summarized here following Graham, Ozment, Tegtmeyer, and Braner (2013). During the procedure, an ultrasound is used to identify two vessels. The vein is typically on the right but should always be identified by its compressibility. Once the vessels are identified, the ultrasound probe is held in place and the needle is inserted at a 30° to 45° angle while aspirating (pulling back on the syringe plunger while continuously advancing the needle). Individuals should watch the ultrasound screen to track their needle position. They should feel when the needle enters a vessel because they will be able to aspirate easily and will see “flash,” which is blood filling the syringe.

Data Analysis

In order to identify the feedback being provided during CVC training, a qualitative analysis was conducted. This content analysis focused only on feedback given to participants about their performance on the pre- and post-tests. The feedback provided was video recorded and then transcribed. The transcripts were then analyzed by two independent raters using combined principles of inductive and deductive content analysis (Mayring, 2015) in NVivo v11.4.0. This combined approach was used because no pre-existing coding scheme existed, but an Internal Jugular Catheterization (IJ CVC) evaluation form used to evaluate resident performance was available. The IJ CVC evaluation form is a 10-item checklist focusing exclusively on the needle insertion portion of the procedure and includes items such as “continuously aspirating the entire time”, “conducting the entire procedure without any mistakes”, and “selecting the appropriate site for venipuncture”. This checklist was filled out during the pre- and post-tests to compare performance between the DHRT system and manikin training groups (see Yovanoff et al., 2016, for results). Thus, this checklist was used as a starting point to analyze the verbal feedback provided to participants on these items.

During transcript coding, each item on the checklist was made into a node in NVivo. Next, two researchers discussed each item on the checklist until both were satisfied that they shared an understanding of it. For example, “inserting needle at a 30–45 degree angle” was coded any time the specific angle of the needle was mentioned or any time “shallow” or “steep” was used to describe the needle. Each rater then coded 3–5 different transcripts and was encouraged to add nodes for any feedback they felt was frequently mentioned, such as “hand position on the syringe.” Together, the raters then re-evaluated the existing nodes to reach consensus on which nodes were a good representation of the feedback being provided. The resulting coding handbook is available at http://www.engr.psu.edu/britelab/projects_cvc.html.

After thoroughly discussing the handbook, the raters coded all eighteen transcripts. An example portion of a transcript and its accompanying codes is shown below: the evaluator’s remark about aspirating the entire time was coded as “aspirating the entire time,” while the remark about identifying the vessel was coded as “identifying the vessels on the ultrasound.”

Participant: “Do I need to inject this?”

Evaluator: “No you’re fine. You can just leave that right there. That’s fine. Um, Excellent job. I’ll give you feedback through pre- and post- tests. Uh, you did something that most beginners struggle with and that is aspirating the entire time which is very important. If you’re stabbing any needle you’re aspirating the entire time. So you did a great job of that. You did a great job of identifying the vessel. Excellent job, any questions?”

Once the coding scheme was set, the raters individually coded the transcripts, achieving an interrater reliability (weighted Cohen’s Kappa) of 0.7.
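The paper reports a weighted Cohen’s kappa but not the weighting scheme or how coded passages were paired into categories. The sketch below shows the standard weighted-kappa computation, assuming each rater’s codes for the same list of passages have been mapped to ordinal integers 0 to k−1. The function name and the choice between linear and quadratic weights are assumptions for illustration, not the authors’ procedure.

```python
def weighted_kappa(rater_a, rater_b, n_categories, weighting="linear"):
    """Weighted Cohen's kappa for two raters who each assign one ordinal
    category (an int from 0 to n_categories - 1) to the same set of items."""
    if weighting not in ("linear", "quadratic"):
        raise ValueError("weighting must be 'linear' or 'quadratic'")
    if n_categories < 2:
        raise ValueError("need at least two categories")
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")

    k, n = n_categories, len(rater_a)
    # Joint counts: counts[i][j] = items rater A put in i and rater B put in j
    counts = [[0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        counts[a][b] += 1
    # Marginal totals for each rater
    row_totals = [sum(counts[i]) for i in range(k)]
    col_totals = [sum(counts[i][j] for i in range(k)) for j in range(k)]

    def disagreement_weight(i, j):
        # 0 on the diagonal, 1 for the most distant pair of categories
        d = abs(i - j) / (k - 1)
        return d if weighting == "linear" else d * d

    # Observed vs. chance-expected weighted disagreement (as proportions)
    observed = sum(disagreement_weight(i, j) * counts[i][j]
                   for i in range(k) for j in range(k)) / n
    expected = sum(disagreement_weight(i, j) * row_totals[i] * col_totals[j]
                   for i in range(k) for j in range(k)) / (n * n)
    if expected == 0:
        raise ValueError("kappa is undefined when chance disagreement is zero")
    return 1.0 - observed / expected
```

With only two categories, both weightings give every disagreement a weight of 1, so the result equals unweighted Cohen’s kappa; weighting matters only when categories are ordered and partial disagreements should count less.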

Results

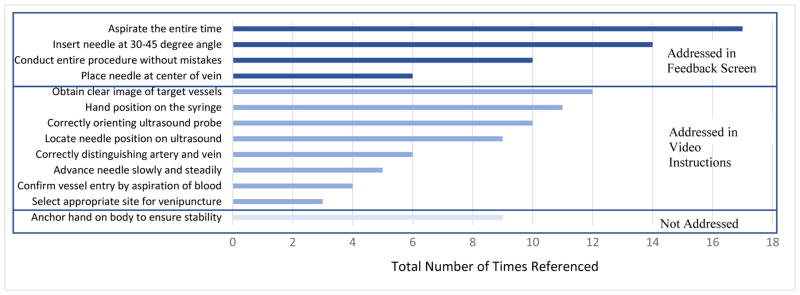

Based on the content analysis, the most frequently mentioned feedback items during the pre- and post-tests were “Aspirating the entire time” (referenced 17 times), “Inserting the needle at a 30–45 degree angle” (referenced 14 times), and “Obtaining a clear image of the target vessels” (referenced 12 times). The total reference count for every item is shown in Figure 2. Based on this information and the capabilities of the system, the authors focused further development of the learning system on two areas: (1) personalized learning feedback through the DHRT system and (2) an introductory training video presented at the start of the DHRT system. The details of these items are presented in Phase 2.
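The counts in Figure 2 are tallies of coded passages per node. As a minimal sketch, assuming the coded passages have been exported as hypothetical `(transcript_id, node)` pairs (the export format is an assumption, not an NVivo API), the tally and ranking can be computed as below. Because the paper does not say whether a node mentioned twice in one transcript counts twice, the sketch also shows a per-transcript count for comparison.

```python
from collections import Counter

def tally_references(coded_segments):
    """Return (node, reference count) pairs, most frequently referenced first.

    coded_segments: iterable of (transcript_id, node) pairs, one per coded passage.
    """
    counts = Counter(node for _transcript_id, node in coded_segments)
    return counts.most_common()

def transcripts_per_node(coded_segments):
    """Number of distinct transcripts in which each node was referenced at least once."""
    seen = {(transcript_id, node) for transcript_id, node in coded_segments}
    return Counter(node for _transcript_id, node in seen)
```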

Figure 2.

Number and type of feedback provided to participants during the pre- and post-tests and information addressed in the DHRT personalized learning system.

PHASE 2: LEARNING INTERFACE DESIGN AND DEVELOPMENT

The purpose of Phase 2 of the study was to incorporate the information gathered in Phase 1 into a personalized learning interface for the DHRT system and conduct a usability study on the effectiveness and interpretation of the information presented through the system.

DHRT Personalized Learning System Development

Based on the results from Phase 1 and the capabilities of the system, each item was addressed either in a feedback screen or in an instructional video shown before the system is used (see Figure 2). Specifically, the results were used to create a personalized learning system with four stages. First, users log into the system using a unique (de-identified) code and are presented with a video they are required to view. This video was developed to address the eight feedback items not covered by the personalized learning interface. Specifically, the video provides an introduction to the system and highlights the skills on the CVC checklist that were identified in Phase 1 as being most frequently referenced. It includes a full demonstration of an IJ CVC placement, as well as demonstrations of how to hold the syringe, how to identify vessels on the ultrasound screen, and how to confirm vessel entry by aspiration. During these demonstrations, particular emphasis is placed on correctly identifying the vessels, advancing the needle slowly and steadily, and selecting the appropriate site for venipuncture.

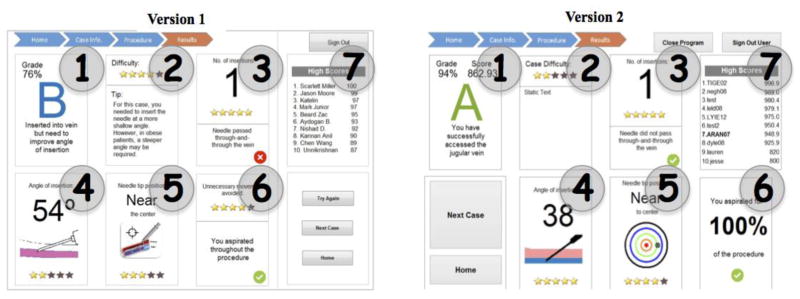

Once the video has been viewed, participants are directed to a ‘home screen’ showing performance over time, general tips, and navigation buttons to re-watch the training video or start simulation practice. When users start a simulation, they are first shown a hypothetical patient profile containing relevant clinical information such as height and weight. After viewing this information, users may start a trial: an ultrasound image appears and they complete a needle insertion. Once the insertion is complete, a personalized screen is shown that captures the feedback identified in Phase 1 of the study (see Figure 3).
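The screen flow described above can be read as a small state machine in which the training video is mandatory after login and the home screen is the hub. The sketch below is illustrative only: the `Screen` and `Session` names are hypothetical, and the transition out of the feedback screen (back to the home screen) is an assumption, since the text does not say where users go after viewing feedback.

```python
from enum import Enum, auto

class Screen(Enum):
    LOGIN = auto()
    TRAINING_VIDEO = auto()
    HOME = auto()
    PATIENT_PROFILE = auto()
    TRIAL = auto()
    FEEDBACK = auto()

# Allowed transitions. LOGIN leads only to the video, so the video cannot be skipped.
# FEEDBACK -> HOME is an assumption; the source does not describe this step.
TRANSITIONS = {
    Screen.LOGIN: {Screen.TRAINING_VIDEO},
    Screen.TRAINING_VIDEO: {Screen.HOME},
    Screen.HOME: {Screen.TRAINING_VIDEO, Screen.PATIENT_PROFILE},
    Screen.PATIENT_PROFILE: {Screen.TRIAL},
    Screen.TRIAL: {Screen.FEEDBACK},
    Screen.FEEDBACK: {Screen.HOME},
}

class Session:
    """Tracks which screen a (de-identified) user is on."""

    def __init__(self, user_code):
        self.user_code = user_code
        self.screen = Screen.LOGIN

    def go_to(self, target):
        # Reject any navigation the flow does not allow
        if target not in TRANSITIONS[self.screen]:
            raise ValueError(f"cannot go from {self.screen.name} to {target.name}")
        self.screen = target
```

Encoding the flow as an explicit transition table makes the “required video” rule enforceable in one place rather than scattered across button handlers.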

Figure 3.

Learning Interface for the Dynamic Haptic Robotic Trainer. Version 1, developed prior to user testing, is shown left. Version 2, with modifications based on user testing, is shown right.

The initial design of the personalized learning screen contains 7 unique boxes to address the items identified in Phase 1 as most commonly referenced. To address ‘conduct entire procedure without any mistakes’ (referenced 10 times), Box 1 shows an overall grade. This grade is calculated with a weighted grading system in which each item on the CVC checklist is weighted by its frequency of reference in Phase 1. Box 2 shows the overall difficulty of the scenario, as determined by a team of surgical experts, and a personalized tip. Box 3 shows the number of insertion attempts and alerts the user if the needle went through-and-through the vein. Although the number of insertions was not analyzed as part of the content analysis, it was an item on the CVC checklist. An insertion was counted as a new attempt if the user had retracted the needle from the skin. Box 4 shows the average angle of insertion (referenced 14 times), calculated from the overall path created by the needle tip. Box 5 shows how close the needle tip was to the center of the vein (referenced 6 times), based on the coordinates of the needle tip at the end of the trial relative to the center of the circular vessel. Box 6 indicates whether the user made any unnecessary movement and whether they aspirated the entire time (referenced 17 times). At the top right of the screen, a high score board keeps a history of top scores from Box 1. After the system was developed, a usability study was conducted to evaluate its effectiveness.
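The Box 3, 4, and 5 metrics can be sketched as follows. This is one plausible reading, not the DHRT implementation: it assumes needle-tip samples as `(x, y, z)` in millimetres with the skin at `z = 0` and depth increasing as `z` becomes more negative, takes the “overall path” as the straight line from the first below-skin sample to the final sample of a single insertion, and treats the vessel as a circle in the ultrasound cross-section. All function names are hypothetical.

```python
import math

def insertion_angle_deg(tip_path):
    """Angle (degrees) between the skin plane and the straight line from the
    first below-skin tip sample to the final tip sample.
    Assumes tip_path covers a single insertion attempt."""
    inside = [p for p in tip_path if p[2] < 0]
    if len(inside) < 2:
        raise ValueError("need at least two below-skin samples")
    (x0, y0, z0), (x1, y1, z1) = inside[0], inside[-1]
    horizontal = math.hypot(x1 - x0, y1 - y0)  # travel parallel to the skin
    depth = z0 - z1                            # travel into the tissue
    return math.degrees(math.atan2(depth, horizontal))

def count_insertion_attempts(tip_path):
    """Count skin punctures: each outside-to-inside transition starts a new
    attempt, so retracting the needle from the skin ends the current one."""
    attempts = 0
    was_inside = False
    for _x, _y, z in tip_path:
        is_inside = z < 0
        if is_inside and not was_inside:
            attempts += 1
        was_inside = is_inside
    return attempts

def centering_offset(tip_xy, vessel_center_xy, vessel_radius):
    """Distance from the final tip position to the vessel center in the
    ultrasound cross-section, as a fraction of the vessel radius
    (0 = centered, 1 = on the vessel wall, > 1 = outside the vessel)."""
    dx = tip_xy[0] - vessel_center_xy[0]
    dy = tip_xy[1] - vessel_center_xy[1]
    return math.hypot(dx, dy) / vessel_radius
```

Normalizing the centering offset by the vessel radius keeps the value comparable across patient cases with different vessel sizes; whether the DHRT normalizes this way is not stated in the text.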

Usability Study Participants and Procedures

The initial design of the learning interface was evaluated through user testing and interviews with 8 surgical residents from Penn State Hershey Medical Center (HMC). To qualify, participants were required to have placed a central line in a clinical setting. Participants received a $15 gift card as remuneration. The purposes and procedures were explained to the participants and informed consent was obtained.

During the study, participants were asked to perform specific tasks using the interface, including signing in, watching the tutorial video, starting a simulation, performing a simulated CVC insertion, and verbally reporting their interpretation of the results presented on the feedback screen. The results presented were not from any simulation the participant had completed; they were static and were created solely for the purposes of this study. After completing these tasks, participants completed a brief survey asking them to rate the various feedback items on a scale of 1 to 5, with 1 being “least useful” and 5 being “most useful.” Finally, participants were invited to provide suggestions on the system design.

Usability Study Data Analysis, Results and System Modifications

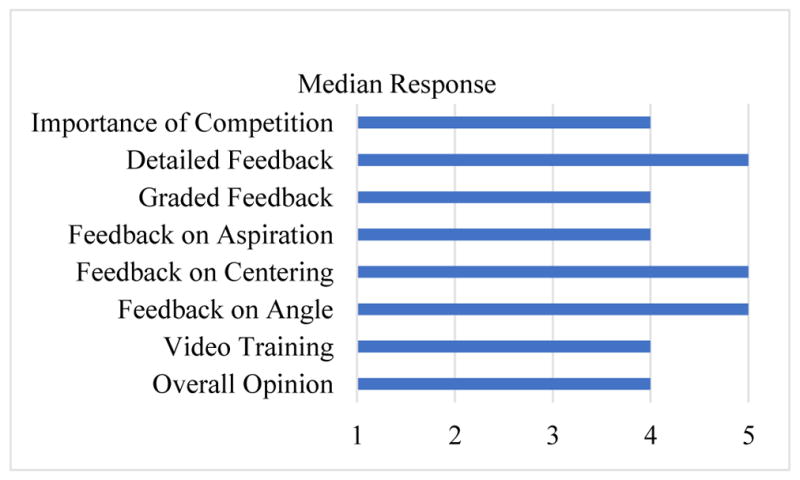

The survey results showed that the median response for each of the 8 items on the usability survey was above 3 (neutral), with feedback on needle angle (Mdn = 5), centering of the needle in the vessel (Mdn = 5), and detailed feedback (Mdn = 5) receiving the highest ratings (see Figure 4). Participants provided the following suggestions during the study: (1) present methods for improving performance parameters in addition to scoring, (2) use letter grades with specific performance parameters, and (3) provide a personalized tip for each user on which parameter(s) to improve based on their past performance.
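Because the survey ratings are ordinal (1 to 5), the median is the appropriate per-item summary, as reported in Figure 4. The sketch below computes medians with Python’s standard `statistics.median` and checks whether every item’s median lies above the neutral point. The function names are hypothetical, and the ratings in any usage are illustrative values, not the study’s data.

```python
from statistics import median

def median_ratings(responses):
    """Median 1-5 rating per survey item.
    responses maps each item name to the list of participants' ratings."""
    for item, ratings in responses.items():
        if not ratings or any(r not in range(1, 6) for r in ratings):
            raise ValueError(f"{item}: ratings must be integers from 1 to 5")
    return {item: median(ratings) for item, ratings in responses.items()}

def all_above_neutral(medians, neutral=3):
    """True if every item's median rating is strictly above the neutral point."""
    return all(m > neutral for m in medians.values())
```

With an even number of participants (8 here), `statistics.median` averages the two middle ratings, so a median such as 3.5 is possible.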

Figure 4.

Median responses on the usability survey.

Based on this feedback, several modifications were made to the interface, as shown in Figure 3. Specifically, the grading system in Box 1 was changed from a maximum score of 100 to a maximum score of 1000 to capture finer differences in performance. In addition, to provide more detailed feedback, the image in Box 5 was changed from a static image to a dartboard-like image showing the exact position of the needle tip relative to the center of the vein. Box 6 was also changed to show the percentage of time spent aspirating rather than a binary indicator. Finally, because participants indicated that “unnecessary movement” was vague and confusing, this feedback item was removed.
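The Box 1 grade and the revised Box 6 percentage can be sketched as below. This is a minimal illustration under stated assumptions: each item is scored in [0, 1], weights are the raw Phase 1 reference counts, and the grade is scaled to a configurable maximum (100 in Version 1, 1000 in Version 2). The item names and the use of three of the reported counts (aspiration 17, angle 14, centering 6) are illustrative only; the DHRT’s actual item list and weights are not given here. The aspiration percentage assumes equally spaced samples.

```python
def overall_score(item_scores, reference_counts, max_score=1000):
    """Frequency-weighted overall grade.
    item_scores: item -> performance in [0, 1]
    reference_counts: item -> number of Phase 1 references (used as weights)"""
    if set(item_scores) != set(reference_counts):
        raise ValueError("item_scores and reference_counts must cover the same items")
    if any(not 0.0 <= s <= 1.0 for s in item_scores.values()):
        raise ValueError("item scores must lie in [0, 1]")
    total_weight = sum(reference_counts.values())
    weighted = sum(reference_counts[item] * item_scores[item] for item in item_scores)
    return round(max_score * weighted / total_weight)

def percent_time_aspirating(aspirating_samples):
    """Percentage of equally spaced samples during which the user was aspirating."""
    if not aspirating_samples:
        raise ValueError("no samples recorded")
    return 100.0 * sum(aspirating_samples) / len(aspirating_samples)
```

For example, with weights {aspiration: 17, angle: 14, centering: 6}, a trial scoring 1.0, 0.5, and 0.0 on those items earns 649 of 1000 (65 of 100 on the original scale), which shows how the larger maximum preserves finer differences after rounding.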

Conclusions and Future Work

The goal of this study was to develop a robust personalized learning interface for the DHRT system. While the results identified a methodology for creating a learning interface based on verbal feedback for a surgical procedure, there were several limitations to this study. First, although residents conducted all training in CVC procedures at HMC, the content of the feedback provided during the trials may differ between individuals. Additionally, although participants were able to verbalize an understanding of the feedback being provided, no analysis of their actual performance and learning gains was conducted. In order to overcome these limitations, a study is currently in progress to determine if the DHRT system, including the personalized learning interface, is an effective method for training new medical residents in CVC placement. Preliminary results of this study indicate that users are significantly improving their performance in CVC skills. While the ongoing study focuses on understanding the learning outcomes for CVC skills while using the learning interface of the DHRT system, future studies should seek to identify how these learning outcomes transfer to clinical settings.

Acknowledgments

Research reported in this publication was supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under Award Number R01HL127316. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We would also like to thank CAE Healthcare for loaning the Blue-Phantom trainer. Finally, we would like to thank Beytullah Aydogan, Zachary Beard, and Kannan Kumar for their help in designing the system and the user study.

Contributor Information

Mary Yovanoff, Industrial Engineering, Penn State, University Park, PA.

David Pepley, Mechanical and Nuclear Engineering, Penn State, University Park, PA.

Katelin Mirkin, Penn State Hershey Medical Center, Hershey, PA.

Jason Moore, Mechanical and Nuclear Engineering, Penn State, University Park, PA.

David Han, Penn State Hershey Medical Center, Hershey, PA.

Scarlett Miller, Engineering Design and Industrial Engineering, Penn State, University Park, PA.

References

- Dong Y, Suri HS, Cook DA, Kashani KB, Mullon JJ, Enders FT, … Dunn WF. Simulation-based objective assessment discerns clinical proficiency in central line placement: a construct validation. CHEST Journal. 2010;137(5):1050–1056. doi: 10.1378/chest.09-1451.

- Ericsson KA. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Academic Medicine. 2004;79(10):S70–S81. doi: 10.1097/00001888-200410001-00022.

- Ericsson KA. The influence of experience and deliberate practice on the development of superior expert performance. In: The Cambridge handbook of expertise and expert performance. 2006:683–703.

- Evans LV, Dodge KL, Shah TD, Kaplan LJ, Siegel MD, Moore CL, … D’Onofrio G. Simulation training in central venous catheter insertion: improved performance in clinical practice. Academic Medicine. 2010;85(9):1462–1469. doi: 10.1097/ACM.0b013e3181eac9a3.

- Frank JR, Snell LS, Cate OT, Holmboe ES, Carraccio C, Swing SR, … Dath D. Competency-based medical education: theory to practice. Medical Teacher. 2010;32(8):638–645. doi: 10.3109/0142159X.2010.501190.

- Graham A, Ozment C, Tegtmeyer K, Braner D (Producers). Central Venous Catheterization [Video]. 2013. doi: 10.1056/NEJMvcm055053. Retrieved from http://www.youtube.com/watch?v=L_Z87iEwjbE

- Grantcharov TP, Kristiansen V, Bendix J, Bardram L, Rosenberg J, Funch-Jensen P. Randomized clinical trial of virtual reality simulation for laparoscopic skills training. British Journal of Surgery. 2004;91(2):146–150. doi: 10.1002/bjs.4407.

- Hattie J, Timperley H. The power of feedback. Review of Educational Research. 2007;77(1):81–112.

- Kirkpatrick AW, Blaivas M, Sustic A, Chun R, Beaulieu Y, Breitkreutz R. Focused application of ultrasound in critical care medicine. Critical Care Medicine. 2008;36(2):654–655. doi: 10.1097/01.CCM.0000300544.63192.2B.

- Kunkler K. The role of medical simulation: an overview. The International Journal of Medical Robotics and Computer Assisted Surgery. 2006;2(3):203–210. doi: 10.1002/rcs.101.

- Kurosu M, Kashimura K. Apparent usability vs. inherent usability: experimental analysis on the determinants of the apparent usability. Paper presented at the Conference Companion on Human Factors in Computing Systems; 1995.

- Leape LL, Brennan TA, Laird N, Lawthers AG, Localio AR, Barnes BA, … Hiatt H. The nature of adverse events in hospitalized patients: results of the Harvard Medical Practice Study II. New England Journal of Medicine. 1991;324(6):377–384. doi: 10.1056/NEJM199102073240605.

- Magner UI, Schwonke R, Aleven V, Popescu O, Renkl A. Triggering situational interest by decorative illustrations both fosters and hinders learning in computer-based learning environments. Learning and Instruction. 2014;29:141–152.

- Mayring P. Qualitative content analysis: theoretical background and procedures. In: Approaches to qualitative research in mathematics education. Springer; 2015. pp. 365–380.

- McGee DC, Gould MK. Preventing complications of central venous catheterization. New England Journal of Medicine. 2003;348(12):1123–1133. doi: 10.1056/NEJMra011883.

- NAE Grand Challenges for Engineering. 2017. Retrieved from http://www.engineeringchallenges.org/

- Pepley D, Yovanoff M, Mirkin K, Miller S, Han D, Moore J. A Virtual Reality Haptic Robotic Simulator for Central Venous Catheterization Training. Journal of Medical Devices. 2016;10(3):030937. doi: 10.1115/1.4033867.

- Rieber LP, Tzeng SC, Tribble K. Discovery learning, representation, and explanation within a computer-based simulation: Finding the right mix. Learning and Instruction. 2004;14(3):307–323.

- Ruthenbeck GS, Reynolds KJ. Virtual reality for medical training: the state-of-the-art. Journal of Simulation. 2015;9(1):16–26.

- Yovanoff M, Pepley D, Mirkin K, Moore J, Han D, Miller S. Improving Medical Education: Simulating Changes in Patient Anatomy Using Dynamic Haptic Feedback. Paper presented at the Proceedings of the Human Factors and Ergonomics Society Annual Meeting; 2016.