Abstract

Purpose

Cochlear implantation has recently become available as an intervention strategy for young children with profound hearing impairment. In fact, infants as young as 6 months are now receiving cochlear implants (CIs), and even younger infants are being fitted with hearing aids (HAs). Because early audiovisual experience may be important for normal development of speech perception, it is important to investigate the effects of a period of auditory deprivation and amplification type on multimodal perceptual processes of infants and children. The purpose of this study was to investigate audiovisual perception skills in normal-hearing (NH) infants and children and deaf infants and children with CIs and HAs of similar chronological ages.

Methods

We used an Intermodal Preferential Looking Paradigm to present the same woman’s face articulating two words (“judge” and “back”) in temporal synchrony on two sides of a TV monitor, along with an auditory presentation of one of the words.

Results

The results showed that NH infants and children spontaneously matched auditory and visual information in spoken words; deaf infants and children with HAs did not integrate the audiovisual information; and deaf infants and children with CIs initially did not integrate the audiovisual information but gradually matched the auditory and visual information in spoken words.

Conclusions

These results suggest that a period of auditory deprivation affects multimodal perceptual processes that may begin to develop normally after several months of auditory experience.

Keywords: Audiovisual speech perception, cochlear implants, hearing aids, hearing loss, infants, children

1. Introduction

In typically developing infants, the auditory system is well developed at birth whereas the visual system takes several months to fully develop (Bahrick and Lickliter, 2000; Dobson and Teller, 1978; Gottlieb, 1976). Nevertheless, infants are capable of integrating auditory and visual speech information at a very young age (Kuhl and Meltzoff, 1982; Patterson and Werker, 2003). There is debate as to what role experience plays in acquiring early audiovisual integration skills for speech. Some researchers have proposed that acquiring complete representations of audiovisual speech gestures requires extensive experience listening to, observing, and perhaps even producing speech. One way of measuring the effects of such experience is to compare audiovisual speech perception skills in normal-hearing infants and deaf infants who receive hearing aids or cochlear implants to restore maximal hearing capabilities. The purpose of the present study is to investigate the development of audiovisual perception of spoken words in infants with normal hearing and hearing loss who vary in chronological age, duration of deafness, and duration of audiological device use.

Young infants are capable of matching auditory and visual information that is naturally coupled in the environment (Bahrick and Lickliter, 2000; Lewkowicz and Kraebel, 2004). In one of the first studies of infants’ perception of audiovisual synchrony, for example, Spelke (1976) simultaneously presented two films, one portraying a woman playing peek-a-boo and the other portraying a hand playing percussion instruments, to 4-month-old infants. She then measured infants’ looking time to each of the films while a soundtrack corresponding to only one of the films was played, and found that the infants preferred to watch the film that matched the soundtrack. Several studies have more specifically explored infants’ perception and integration of auditory and visual information in speech (Aldridge et al., 1999; Dodd, 1979; Lewkowicz, 2000; Walton and Bower, 1993). In a seminal study of infant audiovisual speech perception, Kuhl and Meltzoff (1982) presented 18- to 20-week-old infants with two faces visually articulating the vowels /a/ and /i/ and one soundtrack synchronized with one of the articulating faces. They found that the infants looked longer at the matching face than the nonmatching face. More recent studies have also shown that infants as young as 2.5 months of age successfully integrate audiovisual steady-state vowels (Patterson and Werker, 1999, 2003). Finally, even newborns prefer audiovisually matched presentations of nonnative vowels (Aldridge et al., 1999; Walton and Bower, 1993).

Although infants show remarkable audiovisual matching skills with simple speech stimuli such as steady-state vowels, other research has revealed limitations in their matching of more complex stimuli. When presented with consonants or consonant-vowel combinations, infants must correlate visual and auditory signals that change rapidly over time as the talker articulates them. Mugitani, Hirai, Shimada, and Hiraki (2002) found that 8-month-olds had difficulty matching audiovisual information in consonants. On the other hand, MacKain, Studdert-Kennedy, Spieker, and Stern (1983) found that 5- to 6-month-old infants looked longer at matching CVCV displays, but only when the matching display appeared on their right side. Although MacKain et al. interpreted these results as indicative of left-hemisphere speech processing, the results could also suggest that infants do not integrate audiovisual information in complex stimuli as easily as in steady-state vowels.

Although infants are capable of matching audiovisual speech information, their representations of the auditory and visual components of speech may still be incomplete. Lewkowicz (2000) presented 4-, 6-, and 8-month-old infants with audiovisual syllables (/ba/ and /sha/) and measured their detection of auditory, visual, or audiovisual changes to these syllables. All age groups detected auditory and audiovisual changes, but only the 8-month-olds detected visual changes unless the syllables were presented in an infant-directed speech style. These results suggest that infants’ perception of the visual components of audiovisual speech may develop more slowly than their perception of the auditory components. Indeed, even preschool children show less visual influence on their perception of audiovisual speech than adults do (Desjardins et al., 1997; McGurk and MacDonald, 1976; van Linden and Vroomen, 2008).

Several researchers have related infants’ uneven development of auditory and visual perception to their early experiences with listening to, observing, and producing speech (e.g., Desjardins et al., 1997; Mugitani et al., 2008). In a study of preschoolers’ perception of congruent and incongruent audiovisual syllables, Desjardins et al. (1997) found that the perception of visual speech gestures was more adult-like in children who had more experience correctly producing consonants such as “th” than in children who had difficulty producing such consonants. The authors further suggest that the representation of visible articulation is built up not only by producing consonants correctly but also by the length of time over which they have been produced correctly. This notion has important implications for infants and children with congenital profound hearing loss who receive cochlear implants: they have no auditory experience prior to cochlear implantation, and they typically do not produce consonants correctly until several months or years after implantation.

One factor that is extremely important for early auditory experience in deaf children is age at implantation: infants and children who are implanted earlier have a shorter duration of deafness and a longer duration of experience with spoken language. In recent analyses of spoken word recognition and sentence comprehension in children with cochlear implants enrolled in a longitudinal study of speech perception and language development, we found that prelingually deaf children showed more improvement in audiovisual and auditory-alone comprehension skills than in visual-alone skills over the five years following cochlear implantation (Bergeson et al., 2003, 2005). We also found that children who were implanted under the age of 5 years performed better in the auditory-alone and audiovisual conditions than children implanted over the age of 5 years, whereas children who were implanted later had better visual-alone scores than children who were implanted earlier. Finally, pre-implantation performance in the visual-alone and audiovisual conditions was strongly correlated with performance 3 years post-implantation on a variety of clinical outcome measures of speech and language skills.

These results suggest that infants and children with hearing loss learn to utilize any speech information they receive, regardless of the modality. That is, children with less early auditory experience (i.e., implanted after the age of 5 years) actually appear to be more influenced by the visual component of spoken language than children with more early auditory experience. Similarly, in a study of McGurk consonant perception in deaf children with cochlear implants, Schorr, Fox, van Wassenhove, and Knudsen (2008) found that children implanted after the age of 2.5 years were more influenced by the visual component of incongruent syllables than children implanted before the age of 2.5 years. Thus, early auditory and audiovisual experience seems to delay processing of the visual components of audiovisual information, whereas early visual-only experience serves to increase dependence upon the visual components of audiovisual information.

One main goal of the present study is to investigate audiovisual speech perception in normal-hearing infants and children and hearing-impaired infants and children who use hearing aids or cochlear implants. Recent studies have shown that hearing-impaired infants may be able to perceive and integrate audiovisual speech stimuli after approximately 12 months of cochlear implant experience, but audibility plays a role in successful audiovisual integration (Barker and Bass-Ringdahl, 2004; Barker and Tomblin, 2004). Thus, we hypothesize that infants and children with severe-to-profound hearing loss prior to receiving hearing aids and infants and children with profound hearing loss prior to receiving cochlear implants will have difficulty matching auditory and visual signals in a replication and extension of Kuhl and Meltzoff’s (1982) audiovisual speech perception task.

Another goal of this study is to investigate the effects of duration of severe-to-profound hearing loss on audiovisual speech perception. If longer durations of early auditory deprivation lead to increased difficulty acquiring audiovisual speech integration skills, then earlier implanted infants and children should perform better on audiovisual speech perception tasks than later implanted infants and children.

The majority of previous studies of infants’ perception of audiovisual speech have used isolated steady-state vowels as test stimuli, even though those sounds rarely occur in everyday speech to infants and children. It is important to measure perception of the kinds of audiovisual speech input that infants and children experience in their natural environment. Compared to isolated steady-state vowels, spoken words encode highly distinctive auditory and visual phonetic information such as rapid spectral changes and dynamic movements of the articulators over time. Therefore, a third goal of the present study is to measure the development of audiovisual perception of words in normal-hearing infants and hearing-impaired infants with hearing aids or cochlear implants across a variety of ages.

2. Method

2.1. Subjects

Normal-hearing infants and children (n = 20; 11 females) ages 11.5–39.5 months (m = 23.9 months) were recruited from the local community. Any infant with three or more ear infections per year was given a tympanogram and otoacoustic emission testing to ensure normal hearing.

Infants and children with bilateral hearing loss were recruited from Indiana University School of Medicine (see Table 1). Hearing Aids: Twenty children (9 females) received hearing aids between the ages of 2–19 months (m = 6.2 months) and were 8–28 months of age (m = 15.6 months) at time of testing. Their pre-amplification unaided pure tone averages ranged from 38–120 dB (m = 61.5 dB). An additional three children with hearing aids were excluded because they did not complete testing. Cochlear Implants: Nineteen children (5 females) received a cochlear implant between the ages of 10–24 months (m = 15.6 months) and were 16–39 months of age (m = 26.6 months) at time of testing. Their pre-amplification unaided pure tone averages ranged from 67–120 dB (m = 112.0 dB). An additional eight children with cochlear implants were excluded because they did not complete testing. Hearing-impaired subjects were tested at 3–20 months post-amplification; some were tested at more than one post-amplification interval.

Table 1.

Participant demographics

| Participant | Age at amplification (mos) | Pre-amplification unaided PTA (dB) | Device |
|---|---|---|---|
| *Cochlear Implant Group* | | | |
| CI15 | 13.8 | 102 | Nucleus 24 Contour |
| CI19 | 10.3 | 67 | Med-El C 40+ |
| CI22 | 22.1 | 97 | Nucleus 24 Contour |
| CI25 | 16.1 | 118 | Nucleus 24 K |
| CI28 | 16.8 | 118 | Nucleus 24 Contour |
| CI29 | 16.5 | 118 | Med-El C 40+ [L]; Advanced Bionics HiRes 90K [R] |
| CI34 | 10.4 | 112 | Nucleus 24 Contour |
| CI35 | 16.7 | 120 | Nucleus Freedom–Contour Advance |
| CI39 | 17.9 | 97 | Nucleus Freedom–Straight |
| CI40 | 13.2 | 118 | Nucleus Freedom–Contour Advance |
| CI42 | 12.8 | 117 | Nucleus Freedom–Contour Advance |
| CI48 | 20.5 | 118 | Nucleus Freedom–Contour Advance |
| CI49 | 20.5 | 118 | Nucleus Freedom–Contour Advance |
| CI51 | 10.2 | 118 | Nucleus Freedom–Contour Advance |
| CI53 | 11.9 | 118 | Nucleus Freedom–Contour Advance |
| CI3029 | 14.5 | 118 | Advanced Bionics HiRes 90K |
| CI3058 | 24.2 | 112 | Nucleus Freedom–Contour Advance |
| CI3307 | 9.9 | 118 | Advanced Bionics HiRes 90k focus |
| CI3374 | 13.6 | 107 | Nucleus Freedom–Contour Advance |
| *Hearing Aid Group* | | | |
| HA03 | 2.2 | n/a | Phonak Naida 111 UP |
| HA07 | 4.6 | 41 | Oticon Gaia BTEs |
| HA08 | 6.2 | 48 | Phonak Maxx 311 BTE |
| HA09 | 19.6 | 46 | Phonak Maxx 311 BTEs |
| HA10 | 10.6 | 64 | Oticon Gaia BTEs |
| HA11 | 6.6 | 53 | Phonak Maxx 211 BTE |
| HA12 | 8.4 | 43 | Unison 6 BTEs |
| HA13 | 2.0 | 44 | Unitron Unison 6 BTE |
| HA14 | 4.7 | 47 | Oticon Gaia BTEs |
| HA16 | 14.1 | 118 | Phonak Power Maxx 411 BTEs |
| HA17 | 3.4 | 120 | Phonak Maxx 311 BTEs |
| HA18 | 4.1 | 47 | Phonak Maxx 311 BTEs |
| HA20 | 1.4 | 120 | Phonak Maxx 311 BTE |
| HA22 | 8.8 | 45 | Phonak Maxx 311 BTEs |
| HA24 | 5.2 | 38 | Oticon Gaia VC BTEs |
| HA25 | 6.4 | 80 | Oticon Sumo BTE [L]; Oticon Tego Pro BTE [R] |
| HA3029 | 3.9 | 120 | Oticon Tego Pro BTEs |
| HA3551 | 2.3 | 104 | Oticon Sumo DM |
| HA3664 | 7.1 | 39 | Oticon Safran BTEs |
| HA3699 | 2.5 | 76 | Oticon Tego Pro BTEs |

All subjects had normal vision, as reported by their parents. The families were paid $10/hour for their participation. Families of hearing-impaired infants were also reimbursed for transportation and lodging costs when traveling long distances.

2.2. Stimulus materials

Audiovisual test stimuli were drawn from the Hoosier Audiovisual Multitalker Database of spoken words, in which a female talker produced CVC monosyllabic words in a natural adult-directed manner using neutral facial expressions (Lachs and Hernández, 1998; Sheffert et al., 1996). The words “judge” and “back” were used in this study. These two words were selected because their articulations are visually distinctive and the durations of the audiovisual clips are closely matched (“judge” = 0.595 s; “back” = 0.512 s). The auditory stimuli were presented at 65–70 dB HL, well within the audible range for all groups of infants.

2.3. Apparatus and procedure

Testing was conducted in a custom-made, double-walled IAC sound booth. Infants sat on their caregiver’s lap in front of a large 55-inch wide-aspect TV monitor. The experiment was conducted using HABIT software (Cohen et al., 2004). Video clips of the two test words (“judge” and “back”) were presented simultaneously on the left and right sides of the TV monitor. Visual presentation of the test words was counterbalanced across testing sessions (judge-left, back-right versus judge-right, back-left). During the pre-test phase, two silent trials were presented to determine whether individual infants exhibited a response bias for the visual articulation of one word over the other. During the test phase, the same video clips were presented in each of 16 trials (8 repetitions of the words per trial). Half of the trials were also accompanied by the sound track from one of the spoken words (e.g., “judge”) and half of the trials used the other spoken word (e.g., “back”), in random order. Prior to each trial the infant’s attention was drawn to the TV monitor using an “attention getter” (i.e., a video of a laughing baby’s face).
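The test-phase structure described above can be sketched as a short schedule generator. This is a hypothetical illustration, not the HABIT configuration used in the study: the `Trial` record and the `build_session` and `matching_side` functions are invented here, and the rule that the side assignment simply alternates between successive sessions is our assumption. The text states only that side assignment was counterbalanced across sessions and that the two auditory words occurred on 8 trials each in random order.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical sketch; not the HABIT configuration used in the study.
WORDS = ("judge", "back")
TRIALS_PER_WORD = 8  # 16 test trials, half with each soundtrack


@dataclass(frozen=True)
class Trial:
    number: int       # 1-based position in the test phase
    audio_word: str   # word heard on the soundtrack for this trial
    left_video: str   # word articulated by the face on the left
    right_video: str  # word articulated by the face on the right


def build_session(session_index: int, seed: Optional[int] = None) -> List[Trial]:
    """Return a hypothetical 16-trial test schedule for one session."""
    rng = random.Random(seed)
    # Assumed counterbalancing rule: even-numbered sessions show "judge"
    # on the left, odd-numbered sessions show it on the right.
    if session_index % 2 == 0:
        left, right = WORDS[0], WORDS[1]
    else:
        left, right = WORDS[1], WORDS[0]
    # Eight trials per auditory word, shuffled into a random order.
    audio = [WORDS[0]] * TRIALS_PER_WORD + [WORDS[1]] * TRIALS_PER_WORD
    rng.shuffle(audio)
    return [Trial(i + 1, word, left, right) for i, word in enumerate(audio)]


def matching_side(trial: Trial) -> str:
    """Side of the screen whose face articulates the word being heard."""
    return "left" if trial.left_video == trial.audio_word else "right"
```

Keeping the side assignment fixed within a session and shuffling only the soundtrack mirrors the description above: the visual display is identical on every trial, and only the auditory word determines which face is the matching one.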

Each trial was initiated when the infant looked at the attention getter and continued until all 8 repetitions of the word were completed. To assess the direction and duration of the infants’ looking behavior during the test phase, we coded the infants’ looking responses offline using digital video recordings of the testing sessions. All coding was performed by trained research assistants who were blind to the stimulus conditions and experimental hypotheses. All coders were trained on a subset of previously coded videos until they consistently achieved more than 95% agreement with the previous codings.
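The reliability criterion can be illustrated with simple percentage agreement. The text does not say how agreement was computed, so this sketch assumes that each coder assigns one code per video frame (the hypothetical codes "L", "R", and "A" for looking left, looking right, and looking away) and counts the proportion of frames on which two codings agree; `percent_agreement` and `meets_criterion` are invented names.

```python
from typing import Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Percentage of frames on which two frame-by-frame codings agree.

    Assumed format: one label per video frame, e.g. "L" (left face),
    "R" (right face), or "A" (looking away).
    """
    if len(coder_a) != len(coder_b):
        raise ValueError("codings must cover the same number of frames")
    if not coder_a:
        raise ValueError("codings must not be empty")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


def meets_criterion(coder_a: Sequence[str], coder_b: Sequence[str],
                    threshold: float = 95.0) -> bool:
    """True when agreement exceeds the threshold (95% in the text)."""
    return percent_agreement(coder_a, coder_b) > threshold
```

Raw percentage agreement does not correct for chance; a chance-corrected index such as Cohen's kappa would be a stricter alternative if the same frame-level codes were available.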

3. Results

None of the groups of infants and children showed a looking time preference for either word (“judge” or “back”) during the visual-only pre-test presentations. Because infants and young children often have difficulty maintaining attention over extended periods, we analyzed the results for the first block of trials (trials 1–8) and the second block of trials (trials 9–16) separately to track children’s attention and interest over the course of the experimental session. Moreover, infants and children with hearing loss might not immediately detect the audiovisual correspondence and instead need extra time to learn that the auditory signal matches only one of the visual signals. Total looking times (s), averaged across trials in each condition for each block and for each group of infants and children, are presented below.
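The block analysis amounts to splitting the 16 test trials into trials 1–8 and 9–16 and averaging, within each block, the per-trial looking time to the matching face and to the nonmatching face. The sketch below assumes a hypothetical per-trial record of (trial number, seconds on the matching face, seconds on the nonmatching face) for one child; the function names are illustrative. It also returns the matching-minus-nonmatching difference score plotted in Figs. 2 and 3.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical record: (trial number, looking time to matching face in s,
# looking time to nonmatching face in s)
TrialRecord = Tuple[int, float, float]


def block_of(trial_number: int) -> int:
    """Block 1 = trials 1-8, block 2 = trials 9-16."""
    if not 1 <= trial_number <= 16:
        raise ValueError(f"trial number out of range: {trial_number}")
    return 1 if trial_number <= 8 else 2


def block_summary(records: List[TrialRecord]) -> Dict[int, Tuple[float, float, float]]:
    """Per block: (mean matching, mean nonmatching, matching minus nonmatching)."""
    summary = {}
    for block in (1, 2):
        rows = [r for r in records if block_of(r[0]) == block]
        if not rows:
            continue  # skip a block with no coded trials
        matching = mean(r[1] for r in rows)
        nonmatching = mean(r[2] for r in rows)
        summary[block] = (matching, nonmatching, matching - nonmatching)
    return summary
```

A positive difference score indicates a preference for the matching face; a score near zero, as in the normal-hearing group’s second block, indicates no preference.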

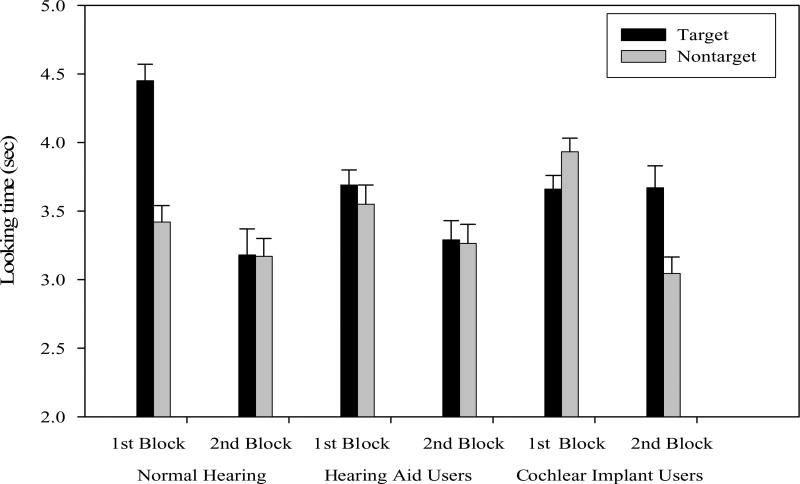

3.1. Normal hearing infants and children

As shown in Fig. 1, normal-hearing infants preferred to look longer at the matching face (m = 3.78, s.d. = 0.55) than the nonmatching face (m = 3.42, s.d. = 0.55) in the first block of trials, t(19) = 2.15, p = 0.045. In the second block of trials, normal-hearing infants did not show a looking time preference for either the matching face (m = 3.18, s.d. = 0.87) or the nonmatching face (m = 3.17, s.d. = 0.57), t(19) = 0.03, p = 0.973.
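For the normal-hearing group, each child contributes one matching and one nonmatching mean per block, so the comparison is a paired-samples t-test with n - 1 degrees of freedom (hence t(19) for 20 children). A minimal standard-library sketch of the statistic follows; `paired_t` is an illustrative name, the looking times in the usage line are toy values rather than study data, and the p-value step is omitted (a library routine such as `scipy.stats.ttest_rel` returns both t and p). The hearing aid and cochlear implant groups were analyzed with linear mixed models instead, because some children contributed more than one session.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(matching: Sequence[float], nonmatching: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom.

    Each position holds one child's mean looking time (s) to the matching
    and to the nonmatching face within a block.
    """
    if len(matching) != len(nonmatching):
        raise ValueError("need one matching and one nonmatching value per child")
    if len(matching) < 2:
        raise ValueError("need at least two children")
    diffs = [m - n for m, n in zip(matching, nonmatching)]
    # Standard error of the mean difference: sample SD / sqrt(n)
    standard_error = stdev(diffs) / sqrt(len(diffs))
    if standard_error == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean(diffs) / standard_error, len(diffs) - 1


# Toy example (not study data): per-child differences of 1, 2 and 3 s.
t, df = paired_t([4.0, 5.0, 6.0], [3.0, 3.0, 3.0])
```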

Fig. 1.

Total looking time at the matching and nonmatching faces in the first and second blocks of the experiment across hearing status. Error bars indicate standard error.

3.2. Deaf infants and children with hearing aids

Because hearing-impaired subjects with hearing aids were tested at more than one post-amplification interval, we completed linear mixed-model analyses (SPSS 16). Figure 1 shows that hearing-impaired infants with hearing aids did not prefer to look longer at the matching face (m = 3.69, s.d. = 0.62) than the nonmatching face (m = 3.55, s.d. = 0.78) in the first block, F(1, 30) = 0.554, p = 0.467. In the second block, hearing-impaired infants with hearing aids again did not show a looking time preference for either the matching face (m = 3.29, s.d. = 0.76) or the nonmatching face (m = 3.26, s.d. = 0.79), F(1, 30) = 0.014, p = 0.906.

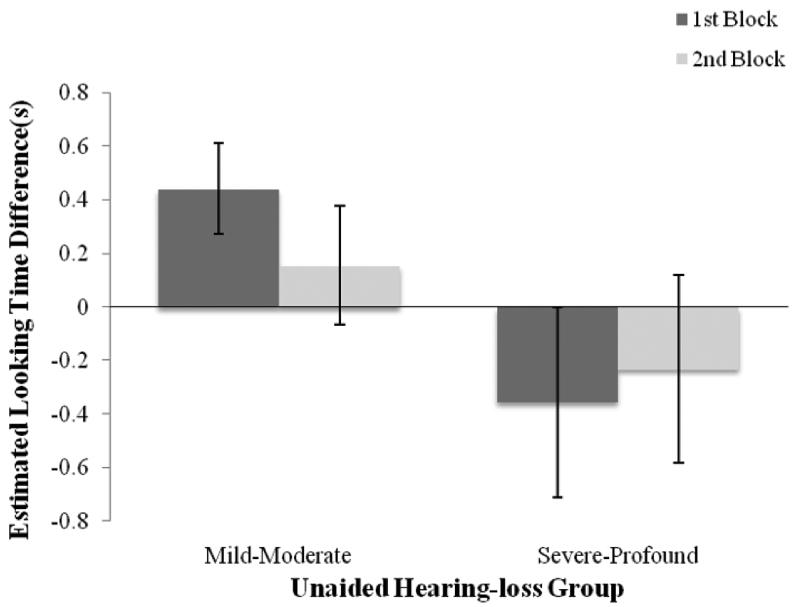

To investigate the effects of pre-amplification unaided pure tone averages on audiovisual speech perception, we compared looking time preferences across children with mild-to-moderate hearing loss (hearing thresholds of 25–70 dB, n = 12) versus those with severe-to-profound hearing loss (hearing thresholds over 70 dB, n = 7) for each block of test trials (see Fig. 2). Linear mixed-model analyses revealed that looking preferences in children with mild-to-moderate hearing loss and in children with severe-to-profound hearing loss differed significantly in Block 1, F(1, 16.4) = 4.87, p = 0.04, but not in Block 2. Further analyses revealed that only the children with mild-to-moderate hearing loss looked significantly longer at the matching than the nonmatching face in Block 1, F(1, 8.1) = 10.90, p = 0.01, whereas those with severe-to-profound hearing loss did not show any statistically significant looking preferences in Block 1 or Block 2. These results suggest that, like NH infants and children, children with mild-to-moderate hearing loss are able to match auditory and visual speech information.
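The two subgroups can be reproduced from the pre-amplification PTAs listed in Table 1 using the cut-offs given above (25–70 dB versus over 70 dB). HA03 has no PTA in the table and is left unclassified; the dictionary and function names are illustrative only.

```python
from collections import Counter
from typing import Dict, Optional

# Pre-amplification unaided PTA (dB) for the hearing aid group, from Table 1.
# None marks the missing value for HA03.
HA_PTA_DB: Dict[str, Optional[int]] = {
    "HA03": None, "HA07": 41, "HA08": 48, "HA09": 46, "HA10": 64,
    "HA11": 53, "HA12": 43, "HA13": 44, "HA14": 47, "HA16": 118,
    "HA17": 120, "HA18": 47, "HA20": 120, "HA22": 45, "HA24": 38,
    "HA25": 80, "HA3029": 120, "HA3551": 104, "HA3664": 39, "HA3699": 76,
}


def hearing_loss_category(pta_db: Optional[int]) -> Optional[str]:
    """Map a PTA to the two categories used in the text (illustrative helper)."""
    if pta_db is None:
        return None  # no PTA reported
    if pta_db > 70:
        return "severe-to-profound"
    if pta_db >= 25:
        return "mild-to-moderate"
    raise ValueError(f"{pta_db} dB is below the 25 dB lower bound used in the text")


category_counts = Counter(
    c for c in map(hearing_loss_category, HA_PTA_DB.values()) if c is not None
)
# mild-to-moderate: 12, severe-to-profound: 7
```

The counts match the subgroup sizes reported above (n = 12 and n = 7), which account for the 19 hearing aid users with a recorded PTA.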

Fig. 2.

Looking time differences (looking time to matching face minus looking time to nonmatching face) across levels of pre-amplification unaided hearing thresholds (below and above 70 dB) in infants who use hearing aids. Error bars indicate standard error.

3.3. Deaf infants and children with cochlear implants

Because hearing-impaired subjects with cochlear implants were tested at more than one post-amplification interval, we completed linear mixed-model analyses (SPSS 16). Figure 1 shows a pattern of preferences across the two experimental blocks that is in direct contrast to the pattern of preferences in the normal-hearing infants and children. In the first block of trials, linear mixed-model analyses revealed that hearing-impaired infants with cochlear implants actually looked slightly longer at the nonmatching face (m = 3.93, s.d. = 0.54) than the matching face (m = 3.66, s.d. = 0.55), although the difference was not statistically significant, F(1, 27) = 2.46, p = 0.128. In the second block of trials, however, hearing-impaired infants with cochlear implants looked significantly longer at the matching face (m = 3.67, s.d. = 0.85) than the nonmatching face (m = 3.05, s.d. = 0.62), F(1, 27) = 13.56, p = 0.001.

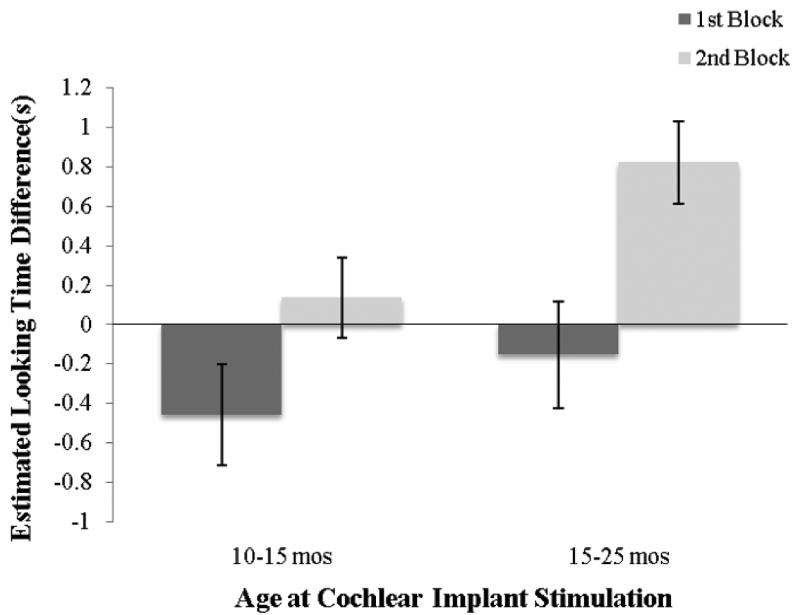

An ANCOVA with face type (matching vs. nonmatching) as the independent variable, looking time (s) as the dependent variable, and pre-amplification PTA (dB) as a covariate revealed no effects of, or interactions with, pre-amplification hearing level. To investigate the effects of age at cochlear implant stimulation and duration of cochlear implant use on audiovisual speech perception, we compared looking time preferences across the first and second experimental blocks in infants and children who received cochlear implant stimulation before the age of 15 months (Early, n = 10) and after the age of 15 months (Late, n = 9) at 3, 6, 12, 18, and 20 months after implantation. Fig. 3 shows that the children in both groups initially looked longer at the nonmatching than the matching face, but then switched preferences to look longer at the matching than the nonmatching face in the second block of trials. Linear mixed-model analyses revealed that performance did not differ significantly between groups in Block 1 but did differ significantly in Block 2, F(1, 12.7) = 7.40, p = 0.02; only the Late group looked significantly longer at the matching than the nonmatching face, F(1, 6.5) = 11.41, p = 0.01. There was also a significant effect of post-implantation interval in Block 2, F(4, 8.3) = 6.80, p = 0.01. Post-hoc analyses revealed significantly worse performance at the 3-month post-implantation interval than at the 6-month post-implantation interval (p = 0.04, Bonferroni adjustment for multiple comparisons). These findings suggest that performance was influenced by both age at implantation and duration of cochlear implant experience. However, the effect of age at implantation was opposite to that predicted: earlier implanted children performed worse than later implanted children.
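The Early and Late groups can be recovered from Table 1 with the 15-month cut-off stated above. This sketch assumes that the table’s “age at amplification” column corresponds to age at cochlear implant stimulation, which the text does not state explicitly; the names are illustrative only.

```python
from typing import Dict, List, Tuple

# Age at amplification (months) for the cochlear implant group, from Table 1.
# Assumption: this equals age at cochlear implant stimulation.
CI_AGE_MONTHS: Dict[str, float] = {
    "CI15": 13.8, "CI19": 10.3, "CI22": 22.1, "CI25": 16.1, "CI28": 16.8,
    "CI29": 16.5, "CI34": 10.4, "CI35": 16.7, "CI39": 17.9, "CI40": 13.2,
    "CI42": 12.8, "CI48": 20.5, "CI49": 20.5, "CI51": 10.2, "CI53": 11.9,
    "CI3029": 14.5, "CI3058": 24.2, "CI3307": 9.9, "CI3374": 13.6,
}


def split_by_age(ages: Dict[str, float],
                 cutoff_months: float = 15.0) -> Tuple[List[str], List[str]]:
    """Return (early, late) participant IDs; early = stimulated before the cutoff."""
    early = sorted(pid for pid, age in ages.items() if age < cutoff_months)
    late = sorted(pid for pid, age in ages.items() if age >= cutoff_months)
    return early, late


early, late = split_by_age(CI_AGE_MONTHS)
# len(early) == 10, len(late) == 9
```

With these ages the 15-month cut-off reproduces the reported group sizes (Early, n = 10; Late, n = 9).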

Fig. 3.

Looking time differences (looking time to matching face minus looking time to nonmatching face) for infants who received cochlear implants before 15 months of age (Early) and after 15 months of age (Late). Error bars indicate standard error.

4. Discussion

Based on previous studies of audiovisual speech perception in normal-hearing infants and hearing-impaired infants and children with cochlear implants (Barker and Tomblin, 2004; Bergeson et al., 2003, 2005; Kuhl and Meltzoff, 1982; Patterson and Werker, 2003), we predicted that audiovisual speech perception skills would be influenced by hearing impairment. Across the experiment as a whole, however, infants and children in all three groups (those with normal hearing, hearing aids, or cochlear implants) did not look significantly longer at the matching than at the nonmatching face while listening to the words “judge” or “back.”

Nevertheless, interesting patterns of performance emerged when comparing looking time preferences across the first and second blocks of the experiment. Normal-hearing infants and children, as well as those with mild-to-moderate hearing loss, initially preferred to look longer at the matching face than the nonmatching face. During the second block of the experiment, however, they looked at the matching and nonmatching faces for approximately the same amount of time. It is possible that once they had successfully matched the auditory and visual signals, they became equally bored with both faces. In fact, their looking times did decrease somewhat across the two blocks of trials. Interestingly, infants and children with greater hearing loss prior to receiving their hearing aids did not show the ability to match auditory and visual speech information during either block of trials. Thus, it appears that auditory experience plays a role in audiovisual speech perception.

Additional evidence for this notion is that deaf infants with cochlear implants could not successfully match the auditory and visual information in the spoken words until the second block of trials, and that performance was worse at the earliest post-implantation interval. An ANCOVA also revealed that the amount of pre-implantation hearing loss did not affect these results, likely because there was little variance in the levels of hearing loss. Interestingly, infants and children who were implanted earlier did not do as well on the audiovisual speech perception task as those who were implanted later. Recall that Bergeson et al. (2003, 2005) found that children implanted later performed better on the visual-only portion of the speech comprehension measures, whereas children implanted earlier performed better on the auditory-only and audiovisual portions. Moreover, Schorr et al. (2008) found similar effects of age at implantation in a replication of the McGurk audiovisual speech perception test (McGurk and MacDonald, 1976). They suggest a sensitive period of approximately 2.5 years for bimodal fusion, after which deaf children with cochlear implants are influenced more by the visual input than by the auditory input. In the present study, it is possible that the children implanted later process the visual components but must learn the correspondence between the visual and auditory signals, as evidenced by their preference for matching audiovisual stimuli only in Block 2. Moreover, the present results on duration of device use suggest that early-implanted infants and children might eventually show the same bimodal fusion in Block 1 as normal-hearing infants and infants with mild-to-moderate hearing loss after a sufficient period of cochlear implant experience.

Evidence from studies with animals and studies of human neural responses suggests that the absence of sound during the first several months of life affects neural development at several points along the peripheral auditory pathway and other higher-level cortical areas (Kral et al., 2000; Leake and Hradek, 1988; Neville and Bruer, 2001; Ponton et al., 1996; Ponton and Eggermont, 2001; Ponton et al., 2000; Ponton et al., 1999; Sharma et al., 2002). Connections between the auditory cortex and other brain structures may not develop normally in congenitally deaf infants, and, as a result, their visual, attentional, and cognitive neural networks may not be strongly linked to their auditory processing skills after receiving a cochlear implant. Moreover, early experience and activities with multimodal stimulus events appear to be necessary for the development of auditory and visual sensory systems and the integration of common “amodal” information from each modality (Lewkowicz and Kraebel, 2004). It is possible, then, that children who have been deprived of auditory sensory input before and immediately following birth because of a hearing loss may not acquire spoken language through normal auditory-visual sensory means.

In summary, the results of the present study reveal that level of hearing loss and age at cochlear implantation do in fact affect the development of audiovisual speech perception. Normal-hearing children, children with more hearing prior to receiving hearing aids, and children who received a cochlear implant later rather than earlier were the most successful at matching auditory and visual components of spoken words. These findings suggest that early auditory experience is very important for developing normal audiovisual speech perception abilities. However, infants and children with hearing loss may learn to rely on the visual modality to aid audiovisual speech perception.

Acknowledgments

This research was supported by the American Hearing Research Foundation, NIH/NIDCD Research Grant R21DC06682 and NIH/NIDCD Training Grant T32DC00012. We gratefully acknowledge Carrie Hansel, Kabreea York and the Babytalk Research research assistants for their help testing the participants and coding the data. Finally, we thank the participants and their families, some of whom traveled great distances, for their participation.

References

- Aldridge MA, Braga ES, Walton GE, Bower TGR. The intermodal representation of speech in newborns. Developmental Science. 1999;2(1):42–46. [Google Scholar]

- Bahrick LE, Lickliter R. Intersensory redundancy guides attentional selectivity and perceptual learning in infancy. Developmental Psychology. 2000;36(2):190–201. doi: 10.1037//0012-1649.36.2.190. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barker BA, Bass-Ringdahl SM. The effect of audibility on audio-visual speech perception in very young cochlear implant recipients. In: Miyamoto RT, editor. Cochlear Implants: Proceedings of the VI-II International Cochlear Implant Conference: International Congress Series. Vol. 1273. San Diego: Elsevier Inc; 2004. pp. 316–319. [Google Scholar]

- Barker BA, Tomblin JB. Bimodal speech perception in infant hearing aid and cochlear implant users. Archives of Otolaryngology-Head & Neck Surgery. 2004;130:582–586. doi: 10.1001/archotol.130.5.582. [DOI] [PubMed] [Google Scholar]

- Bergeson TR, Pisoni DB, Davis RAO. A longitudinal study of audiovisual speech perception by children with hearing loss who have cochlear implants. The Volta Review. 2003;103:347–370. [PMC free article] [PubMed] [Google Scholar]

- Bergeson TR, Pisoni DB, Davis RAO. Development of audiovisual comprehension skills in prelingually deaf children with cochlear implants. Ear and Hearing. 2005;26:149–164. doi: 10.1097/00003446-200504000-00004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen LB, Atkinson DJ, Chaput HH. Habit X: Anew program for obtaining and organizing data in infant perception and cognition studies (Version 1.0) Austin: University of Texas; 2004. [Google Scholar]

- Desjardins RN, Rogers J, Werker JF. An exploration of why preschoolers perform differently than do adults in audiovisual speech perception tasks. Journal of Experimental Child Psychology. 1997;66:85–110. doi: 10.1006/jecp.1997.2379. [DOI] [PubMed] [Google Scholar]

- Dobson V, Teller DY. Visual acuity in human infants: A review and comparison of behavioral and electrophysiological studies. Vision Research. 1978;18:1469–1483. doi: 10.1016/0042-6989(78)90001-9.

- Dodd B. Lip reading in infants: Attention to speech presented in- and out-of-synchrony. Cognitive Psychology. 1979;11:478–484. doi: 10.1016/0010-0285(79)90021-5.

- Gottlieb G. Conceptions of prenatal development: Behavioral embryology. Psychological Review. 1976;83(3):215–234.

- Kral A, Hartmann R, Tillein J, Held S, Klinke R. Congenital auditory deprivation reduces synaptic activity within the auditory cortex in a layer-specific manner. Cerebral Cortex. 2000;10:714–726. doi: 10.1093/cercor/10.7.714.

- Kuhl PK, Meltzoff AN. The bimodal perception of speech in infancy. Science. 1982;218(4577):1138–1141. doi: 10.1126/science.7146899.

- Lachs L, Hernández LR. Research on Spoken Language Processing Progress Report No. 22. Bloomington, IN: Speech Research Laboratory, Indiana University; 1998. Update: The Hoosier Audiovisual Multitalker Database; pp. 377–388.

- Leake PA, Hradek GT. Cochlear pathology of long term neomycin induced deafness in cats. Hearing Research. 1988;33:11–34. doi: 10.1016/0378-5955(88)90018-4.

- Lewkowicz DJ. Infants’ perception of the audible, visible, and bimodal attributes of multimodal syllables. Child Development. 2000;71:1241–1257. doi: 10.1111/1467-8624.00226.

- Lewkowicz DJ, Kraebel KS. The value of multisensory redundancy in the development of intersensory perception. In: Calvert GA, Spence C, Stein BE, editors. The Handbook of Multisensory Processes. Cambridge, Massachusetts: The MIT Press; 2004. pp. 655–678.

- MacKain K, Studdert-Kennedy M, Spieker S, Stern D. Infant intermodal speech perception is a left-hemisphere function. Science. 1983;219(4590):1347–1349. doi: 10.1126/science.6828865.

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi: 10.1038/264746a0.

- Mugitani R, Hirai M, Shimada S, Hiraki K. The audiovisual speech perception of consonants in infants. Paper presented at the 13th Biennial International Conference on Infant Studies; Toronto, Canada. 2002.

- Mugitani R, Kobayashi T, Hiraki K. Audiovisual matching of lips and non-canonical sounds in 8-month-old infants. Infant Behavior and Development. 2008;31:307–310. doi: 10.1016/j.infbeh.2007.12.002.

- Neville HJ, Bruer JT. Language Processing: How experience affects brain organization. In: Bailey JDB, Bruer JT, editors. Critical Thinking about Critical Periods. Baltimore: Paul H. Brookes; 2001. pp. 151–172.

- Patterson ML, Werker JF. Matching phonetic information in lips and voice is robust in 4.5-month-old infants. Infant Behavior and Development. 1999;22(2):237–247.

- Patterson ML, Werker JF. Two-month-old infants match phonetic information in lips and voice. Developmental Science. 2003;6(2):191–196.

- Ponton CW, Don M, Eggermont JJ, Waring MD, Kwong B, Masuda A. Auditory system plasticity in children after long periods of complete deafness. Neuroreport. 1996;8:61–65. doi: 10.1097/00001756-199612200-00013.

- Ponton CW, Eggermont JJ. Of kittens and kids: Altered cortical maturation following profound deafness and cochlear implant use. Audiology & Neuro-Otology. 2001;6:363–380. doi: 10.1159/000046846.

- Ponton CW, Eggermont JJ, Don M, Waring MD, Kwong B, Cunningham J, et al. Maturation of the mismatch negativity: Effects of profound deafness and cochlear implant use. Audiology & Neuro-Otology. 2000;5:167–185. doi: 10.1159/000013878.

- Ponton CW, Moore JK, Eggermont JJ. Prolonged deafness limits auditory system developmental plasticity: Evidence from an evoked potentials study in children with cochlear implants. Scandinavian Audiology. 1999;28(Suppl 51):13–22.

- Schorr EA, Fox NA, van Wassenhove V, Knudsen EI. Auditory-visual fusion in speech perception in children with cochlear implants. Proceedings of the National Academy of Sciences. 2005;102:18748–18750. doi: 10.1073/pnas.0508862102.

- Sharma A, Dorman MF, Spahr AJ. A sensitive period for the development of the central auditory system in children with cochlear implants: Implications for age of implantation. Ear and Hearing. 2002;23:532–539. doi: 10.1097/00003446-200212000-00004.

- Sheffert SM, Lachs L, Hernández LR. Research on Spoken Language Processing Progress Report No. 21. Bloomington, IN: Speech Research Laboratory, Indiana University; 1996. The Hoosier Audiovisual Multitalker Database; pp. 578–583.

- Spelke ES. Infants’ intermodal perception of events. Cognitive Psychology. 1976;8:553–560.

- van Linden S, Vroomen J. Audiovisual speech calibration in children. Journal of Child Language. 2008;35:809–822. doi: 10.1017/S0305000908008817.

- Walton GE, Bower TGR. Amodal representations of speech in infants. Infant Behavior and Development. 1993;16(2):233–243.