Abstract

The purpose of this study was to test the hypothesis that listeners with frequent exposure to loud music exhibit deficits in suprathreshold auditory performance consistent with cochlear synaptopathy. Young adults with normal audiograms were recruited who either did (n = 31) or did not (n = 30) have a history of frequent attendance at loud music venues where the typical sound levels could be expected to result in temporary threshold shifts. A test battery was administered that comprised three sets of procedures: (a) electrophysiological tests including distortion product otoacoustic emissions, auditory brainstem responses, envelope following responses, and the acoustic change complex evoked by an interaural phase inversion; (b) psychoacoustic tests including temporal modulation detection, spectral modulation detection, and sensitivity to interaural phase; and (c) speech tests including filtered phoneme recognition and speech-in-noise recognition. The results demonstrated that a history of loud music exposure can lead to a profile of peripheral auditory function that is consistent with an interpretation of cochlear synaptopathy in humans, namely, modestly abnormal auditory brainstem response Wave I/Wave V ratios in the presence of normal distortion product otoacoustic emissions and normal audiometric thresholds. However, there were no other electrophysiological, psychophysical, or speech perception effects. The absence of any behavioral effects in suprathreshold sound processing indicated that, even if cochlear synaptopathy is a valid pathophysiological condition in humans, its perceptual sequelae are either too diffuse or too inconsequential to permit a simple differential diagnosis of hidden hearing loss.

Keywords: cochlear synaptopathy, loud music exposure, hidden hearing loss, auditory brainstem response

Introduction

This study probed for the existence and perceptual sequelae of cochlear synaptopathy in humans. The expected auditory profile of cochlear synaptopathy—namely, suprathreshold deficits in the presence of audiometrically normal hearing—is a general profile that has long been recognized. Therefore, to place the current focus in context, it is appropriate to consider how the interpretation of this presentation has varied over the years. In the mid-20th century, it was viewed from the perspective of psychogenic hearing loss, wherein hearing complaints that could not be obviously linked to a sensory—or organic—dysfunction were assumed to be psychological in origin (e.g., P. F. King, 1954). Doerfler and Stewart (1946), in seeking to construct a test to differentiate psychogenic and organic hearing loss, made the insightful observation that listeners with psychogenic hearing loss were often particularly poor at speech-in-noise measures. Kopetzky (1948) applied the acronym LCDL (Loss of the Capacity for Discriminative Listening) to such patients. In recognition of the pioneering work of these early investigators, Hinchcliffe (1992) coined the term King-Kopetzky Syndrome to refer to the profile of auditory disabilities in the presence of essentially normal pure-tone audiograms. This attribution is perhaps a misnomer since only a minority of King’s psychogenic hearing loss cases were noted to have normal hearing acuity (P. F. King, 1954), and Kopetzky (1948) made no stipulation that cases of psychogenic hearing loss should necessarily be associated with normal audiometric sensitivity, yet this term has found some continued usage (e.g., Stephens & Zhao, 2000; Zhao & Stephens, 1996). Concurrent with Hinchcliffe’s work, the terms Obscure Auditory Dysfunction (Saunders & Haggard, 1989; Saunders, Haggard, & Field, 1989) and Auditory Disability with Normal Hearing (K. 
King & Stephens, 1992; Rendell & Stephens, 1988) were also introduced to describe the condition of hearing difficulties in the presence of a clinically normal audiogram.

Whereas such designations were intended to encompass a wide spectrum of contributing factors—including psychological factors—other investigators focused specifically on a cochlear, or peripheral, basis for this profile. The premise for this focus was that cochlear dysfunction could result in broadened auditory filters—leading to hearing difficulties due to spectral smearing—without elevating audiometric thresholds (e.g., Pick & Evans, 1983). Perceptual deficits associated with poor frequency resolution in the presence of normal audiometric thresholds generated yet another term for this profile, namely, selective disacusis (Narula & Mason, 1988). Continued work in this area has reinforced the hypothesis that deficits in cochlear function that manifest as poor frequency resolution can lead to suprathreshold deficits despite normal audiometric hearing (Badri, Siegel, & Wright, 2011). Importantly, reduced frequency resolution is generally attributed to impairment of the active mechanism in the cochlea associated with the outer hair cells (e.g., Ruggero, 1992).

More recently, another cochlear pathophysiology has been investigated that also results in suprathreshold deficits but one that is not associated with outer hair cells. This pathophysiology has been termed cochlear synaptopathy because it involves synaptic disruption of the ribbon synapses between the inner hair cells and the primary auditory neurons (for review, see Kujawa & Liberman, 2015; M. C. Liberman, Epstein, Cleveland, Wang, & Maison, 2016). Cochlear synaptopathy is most commonly a result of noise exposure that is sufficient to cause temporary, but not permanent, elevations in detection threshold (Kujawa & Liberman, 2009; Lin, Furman, Kujawa, & Liberman, 2011). The synaptopathy appears to preferentially affect connections to low-spontaneous rate, high-threshold fibers possibly because of the location of these particular synapses on the modiolar face of the hair cell where their susceptibility to noise trauma might be exacerbated (Furman, Kujawa, & Liberman, 2013; L. D. Liberman, Suzuki, & Liberman, 2015). Low-spontaneous rate, high-threshold fibers presumably feature prominently in conveying information at higher sound levels because of their ability to maintain synchrony at these levels as well as their greater resilience to background noise. In contrast, the information bearing capacity of low-threshold fibers is more limited at higher levels because of the saturation of their firing rates (Costalupes, 1985; Young & Barta, 1986). Cochlear synaptopathy leads to deafferentation and an eventual atrophication of eighth nerve fibers that result in a permanent depopulation of the auditory nerve (Jensen, Lysaght, Liberman, Qvortrup, & Stankovic, 2015). The functional consequence of cochlear synaptopathy is that, although the performance of outer hair cells remains unaffected—and sufficient eighth nerve fibers remain to support normal detection thresholds—the response capacity of the eighth nerve becomes limited at suprathreshold levels. 
This is classically demonstrated in the animal model with the triplex of normal otoacoustic emissions (OAEs), normal auditory brainstem response (ABR) thresholds, and reduced amplitude of the ABR Wave I at high levels (e.g., Lin et al., 2011).

Cochlear synaptopathy has been demonstrated in several mammalian species including the mouse (Kujawa & Liberman, 2009), guinea pig (Lin et al., 2011), chinchilla (cf. Kujawa & Liberman, 2015), and rhesus macaques (Valero et al., 2017). This has generated keen interest in determining whether the analogous condition exists in humans. Putative cochlear synaptopathy in humans is now commonly referred to as an example of hidden hearing loss (HHL; e.g., Schaette & McAlpine, 2011) although, given the historical context provided earlier, this phrase should be seen as describing an auditory profile rather than a mechanism. To date, the findings on human cochlear synaptopathy have been mixed. Several studies have reported findings that are consistent with this condition. An age-related cochlear synaptopathy has been reported in a postmortem anatomical study (Viana et al., 2015). In the electrophysiological domain, findings include an association between noise exposure history and ABR Wave I amplitude in listeners who have normal audiograms (Bramhall, Konrad-Martin, McMillan, & Griest, 2017; Stamper & Johnson, 2015). If the presence of tinnitus in listeners with normal audiograms is accepted as a proxy of noise-related cochlear synaptopathy, then supportive evidence can also be taken from a study demonstrating a linkage between ABR Wave I amplitude and the presence of tinnitus (Schaette & McAlpine, 2011). The shift of ABR Wave V latency in background noise has also been hypothesized to be associated with cochlear synaptopathy, and this has been supported by a cross-species study of audiometrically normal human listeners and noise-exposed mice (Mehraei et al., 2016). Liberman et al. 
(2016) undertook electrocochleography on young adults with clinically normal audiograms and found that the ratio of the amplitudes of the summating potential and the compound action potential (equivalent to ABR Wave I) was increased in normal hearing young adults at high risk for noise-induced trauma based on noise exposure history. Interestingly, these same high-risk listeners also exhibited reduced recognition of 35-dB HL speech in challenging conditions such as reverberation. Deficits in speech-in-noise recognition, as well as some measures of temporal processing, have also been found in a study of Indian train drivers with high occupational noise levels but relatively normal audiometric hearing (Kumar, Ameenudin, & Sangamanatha, 2012); these findings have been interpreted as compatible with cochlear synaptopathy. Rather than pregroup participants on the basis of noise exposure history, Bharadwaj, Masud, Mehraei, Verhulst, and Shinn-Cunningham (2015) used an alternative approach wherein individual differences in auditory performance were probed among a group of young adults with normal audiograms. They found a range of performance in measures of temporal processing—both psychophysical and electrophysiological—that included amplitude modulation (AM) detection, interaural time difference (ITD) sensitivity, and envelope following responses (EFR). These individual differences did not appear to be associated with measures of cochlear function but, rather, were interpreted as depending on the integrity of the neural periphery. When the listeners were then stratified into two groups based on self-reported noise exposure, the group with more exposure had generally poorer temporal processing measures than the less exposed group, supporting the hypothesis of cochlear synaptopathy in humans. The study of Mehraei et al. (2016) also used a psychophysical ITD task as a behavioral manifestation of cochlear synaptopathy to support their masked Wave V latency functions. 
In summary, several studies have reported anatomical, electrophysiological, and behavioral findings that are supportive of cochlear synaptopathy in humans.

However, other studies have failed to find evidence supporting human cochlear synaptopathy. Foremost among them is the extensive study by Prendergast et al. (2017), which found no association between noise exposure history and a number of electrophysiological measures in young adults with clinically normal hearing. These metrics included the amplitude of ABR Wave I and the amplitude of the EFR. Also in terms of ABR Wave I amplitude, and in contrast to the findings of Schaette and McAlpine (2011), Guest, Munro, Prendergast, Howe, and Plack (2017) found no association between tinnitus and Wave I amplitude in listeners with normal audiograms, bringing into question whether the Schaette and McAlpine findings actually reflect cochlear synaptopathy. In terms of psychoacoustic findings, the extensive study of Prendergast et al. (2016) also found no association between noise exposure history and measures of intensity discrimination, AM detection, AM frequency discrimination, and interaural phase discrimination (IPD). Relevant to the IPD measure, Bernstein and Trahiotis (2016) found a relationship between binaural temporal processing and audiometric threshold at 4 kHz, even among listeners whose audiometric thresholds would be considered normal. Although they conclude that deficiencies in binaural temporal processing performance can therefore manifest prior to any deficiencies in monaural measures of auditory function, they make no claims that such binaural temporal deficiencies necessarily point to cochlear synaptopathy as an underlying mechanism. This caveat is relevant to the findings of Bharadwaj et al. (2015) and Mehraei et al. (2016), both of which interpreted poor ITD measures in terms of cochlear synaptopathy. Finally, in terms of speech measures, Prendergast et al. (2016) found no association between noise exposure history and speech-in-noise performance. 
Le Prell and Lobarinas (2016) also found no relationship between speech-in-noise recognition and recreational noise exposure history in normal hearing young adults. In summary, a number of studies that have sought to identify cochlear synaptopathy in humans using electrophysiological, psychoacoustic, and speech measures have failed to do so.

The lack of consensus across studies concerning the existence and functional consequences of human cochlear synaptopathy invites further critical examination of this issue. The purpose of this study was to undertake a comprehensive examination of auditory performance in listeners who were either likely or unlikely to have been exposed to conditions conducive to cochlear synaptopathy. The approach was to test young adults with normal audiograms who either did or did not have a history of frequent attendance at loud music venues or events where the typical sound levels could be expected to result in temporary threshold shifts (TTS). The hypothesis was that listeners with frequent exposure to loud sound would exhibit deficits in suprathreshold auditory performance in comparison to control listeners, compatible with an interpretation in terms of cochlear synaptopathy. The general finding of this study was that, although the two groups showed differences in ABR characteristics but not in OAE characteristics—consistent with expectations based on cochlear synaptopathy—the two groups did not differ across a wide range of suprathreshold behavioral measures. This suggests that even if cochlear synaptopathy is a valid pathophysiological condition in humans, its behavioral consequences are too diffuse in the general population to permit a straightforward differential diagnosis.

Materials and Methods

Participants

Two groups of young adults with normal audiometric hearing were recruited: one with a history of frequent attendance at loud music events or venues (Experimental [EXP] group), and an age-matched group without such a history (Control [CON] group). All subjects met the following criteria: (a) normal audiometric hearing at the time of test, defined as pure-tone thresholds ≤ 20 dB HL at the octave frequencies 0.25 to 8 kHz; (b) aged between 18 and 35 years; (c) native speakers of English; and (d) no contraindications such as history of ear disease or regular use of ototoxic medications, including ibuprofen and aspirin. The critical criterion that differentiated the two groups was the history of loud music exposure. For inclusion in the EXP group, the subject must have attended at least 25 loud music events/venues in the preceding year and at least 40 such events/venues in the preceding two years. Dosimeter recordings at a sampling of venues (typically local rock clubs) and events (typically rock concerts) indicated a median maximum Leq of 104 dBA over the event duration. Such sound levels are usually sufficient to result in a TTS in the average human listener (for review, see Quaranta, Portalatini, & Henderson, 1998).

During enrollment, additional information pertinent to hearing history was collected via questionnaire. Questions probed information about (a) ringing or fullness in the ears following attendance at a loud music event; (b) subjective assessment of the loudness of the attended event(s); (c) the use of hearing protection at the loud music events; (d) musical training or experience; (e) proportion of time spent listening to music on personal systems, and at what rated loudness level; and (f) participation in other noisy work or recreational activities. Enrollment did not include an estimated measure of lifetime noise exposure.

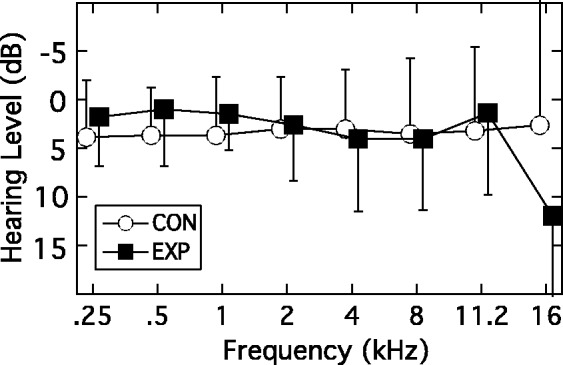

EXP group

A total of 31 participants were included in the EXP group (mean age = 25 years; 21 males). The median number of loud music events attended over the preceding 2 years was 90 (range = 40–500), and the EXP participants estimated that they had ringing in their ears following 38% of these concerts. Although some occasionally used ear protection (typically foam earplugs), they did not for approximately 76% of the concerts they attended. In addition, 81% of the EXP participants reported listening to music daily for several hours. It is relevant to note that 71% of the EXP group identified themselves as musicians; indeed, several were either members of rock bands or were involved in staging musical events. In terms of pure-tone audiometric thresholds, all EXP participants were tested over the octave frequencies 0.25 to 8 kHz; however, several months into the project, test frequencies of 11.2 kHz and 16 kHz were added. The audiometric thresholds in the test ear for the EXP group (Figure 1, filled squares) therefore reflect all 31 participants for the frequencies 0.25 to 8 kHz, but only 26 participants for the additional frequencies of 11.2 kHz and 16 kHz.

Figure 1.

Mean audiograms for the test ear for the EXP group (filled squares) and CON group (unfilled circles). Error bars are 1 standard deviation (SD). See text for participant numbers for the two highest frequencies.

EXP = experimental; CON = control.

CON group

A total of 30 participants were included in the CON group (mean age = 23 years; 11 males). The median number of loud music events attended over the preceding 2 years was 4 (range = 0–30). Of these few events, the CON participants estimated that about a quarter resulted in ringing in their ears. No hearing protection was used. In addition, 67% of these participants reported listening to music daily for several hours, although only 33% described themselves as musicians. The audiometric thresholds in the test ear for the CON group (Figure 1, unfilled circles) reflect all 30 participants for the frequencies 0.25 to 8 kHz, but only 25 participants for the additional frequencies of 11.2 kHz and 16 kHz.

In summary, the two groups of participants were clearly differentiated in terms of history of loud music exposure in the preceding 2 years. Compared with CON participants, the EXP participants attended a far greater number of concerts (median of 90 vs. 4) and, while some EXP participants did occasionally wear hearing protection, they also reported that more of the concerts they attended caused ringing in their ears afterwards (an estimated 34 vs. 1 concerts, based on the median attendance). In addition, more of the EXP group participants listened to music daily on a stereo or with headphones (81% vs. 67%), and more of these subjects self-reported as musicians (71% vs. 33%). All participants provided written informed consent and were reimbursed for their participation. The study was approved by the Institutional Review Board of the University of North Carolina at Chapel Hill (IRB# 14-2204).

Test Battery

The test battery was selected to assess auditory performance at suprathreshold levels using tasks that are thought to rely on the fidelity of neural coding of information in both the spectral and temporal domains. The battery comprised three sets of procedures: electrophysiology, psychoacoustics, and speech. Electrophysiological tests included distortion product otoacoustic emissions (DPOAEs), ABR, EFR, and the acoustic change complex (ACC) evoked by an IPD. Psychoacoustic tests included temporal modulation detection, spectral modulation detection, and sensitivity to IPD. Speech tests included phoneme recognition and speech-in-noise recognition. Several of these tests were spectrally constrained to one-octave band regions geometrically centered at either 1414 Hz (1000–2000 Hz) or 4243 Hz (3000–6000 Hz). The rationale for this was that any effects of noise exposure might be expected to emerge first in a higher frequency region before a lower frequency region (for review, see Fausti, Wilmington, Helt, Helt, & Konrad-Martin, 2005). Except for the binaural tasks involving IPDs, all testing was monaural using the left ear (with the exception of one CON participant who was tested in the right ear). Procedural details, and associated hypotheses, for each test in the battery are provided later. All testing occurred in double-walled, sound-attenuating booths and, for the electrophysiological testing, the booth was electromagnetically shielded.

DPOAEs

A DPGram was obtained by measuring the DPOAE evoked by a pair of primary tones, ƒ1 and ƒ2, where ƒ2/ƒ1 = 1.2. The level of ƒ1 (L1) was 65 dB SPL, and the level of ƒ2 (L2) was 55 dB SPL. The geometric mean of the pair was swept at two frequencies per octave across the range from 500 to 8000 Hz. In addition, DPOAE input/output (I/O) functions were measured in two frequency regions: 1414 Hz and 4243 Hz. For each of these two center frequencies, ƒ1 and ƒ2 were selected to have a geometric mean equal to the given octave-band center frequency, and a ƒ2/ƒ1 ratio of 1.22. For the center frequency of 1414 Hz, ƒ1 = 1280 Hz and ƒ2 = 1562 Hz, yielding a 2ƒ1 − ƒ2 DPOAE of 998 Hz. For the center frequency of 4243 Hz, ƒ1 = 3841 Hz and ƒ2 = 4687 Hz, yielding a 2ƒ1 − ƒ2 DPOAE of 2996 Hz. The I/O function was obtained by adjusting the levels of both primary tones in 10 level pairings that were related according to the equation derived by Kummer, Janssen, and Arnold (1998): L1 = 0.4L2 + 39. The 10 pairings were selected such that L2 decreased in 5-dB steps from 65 to 20 dB SPL. The actual pairings for L1 and L2 in dB SPL were therefore: 65:65, 63:60, 61:55, 59:50, 57:45, 55:40, 53:35, 51:30, 49:25, and 47:20. For each L1:L2 pairing, a DPOAE was accepted as present if the signal-to-noise ratio (SNR) at the expected DPOAE frequency was ≥6 dB. The hypothesis was that EXP and CON groups would not differ in their DPOAE functions since animal work on cochlear synaptopathy has shown that, following recovery from TTS, the DPOAE functions of noise-exposed animals also return to normal (e.g., Lin et al., 2011). DPOAE testing was implemented using an Intelligent Hearing System SmartDPOAE platform (Glenvar Heights, FL).
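The primary-tone frequencies and the ten level pairings described above follow from two simple rules, which the following minimal Python sketch reproduces. The function names are hypothetical and chosen only for illustration; the sketch assumes that the primaries are placed symmetrically (on a log scale) around the band center and that levels are rounded to whole decibels. The distortion-product frequency it prints can differ from the values quoted in the text by about 1 Hz, depending on whether the primaries are rounded before 2ƒ1 − ƒ2 is computed.

```python
import math

def primaries(center_hz, ratio=1.22):
    """Return (f1, f2) with geometric mean center_hz and f2/f1 == ratio."""
    f1 = center_hz / math.sqrt(ratio)
    f2 = center_hz * math.sqrt(ratio)
    return f1, f2

def level_pairs(l2_start=65, l2_stop=20, step=5):
    """L1:L2 pairings in dB SPL, using L1 = 0.4 * L2 + 39."""
    pairs = []
    for l2 in range(l2_start, l2_stop - 1, -step):
        l1 = 0.4 * l2 + 39
        pairs.append((round(l1), l2))  # rounding to whole dB is an assumption
    return pairs

for center in (1414, 4243):
    f1, f2 = primaries(center)
    # Columns: band center, f1, f2, and the 2f1 - f2 distortion product (Hz)
    print(center, round(f1), round(f2), round(2 * f1 - f2))

print(level_pairs())
```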

ABR

ABRs were measured for 100-µs click stimuli presented in alternating phase at levels of 95 and 105 dB peak-to-peak equivalent SPL (ppeSPL). To optimize the Wave I recording, the electrode montage included an ear-canal electrode (Tiptrode™) as the inverting electrode for the test ear; the noninverting electrode was placed midline on the high forehead and the ground electrode between the eyebrows. Electrode impedance was maintained below 3 kΩ. Wave I recording was also optimized by using a relatively slow click rate of 7.7 Hz. The recording bandwidth was 100 to 3000 Hz, and artifact rejection was set at ±15 µV. Following electrode placement, the subject relaxed in a reclining chair and was instructed to remain still and try to sleep. For each presentation level, at least two runs of 2,048 sweeps per average were collected. The hypothesis was that the amplitude of Wave I would be lower in the EXP group than the CON group. This expectation was based on animal work showing that, following recovery from TTS, the Wave I I/O functions of the noise-exposed animals remain suppressed relative to unexposed animals at higher levels (e.g., Lin et al., 2011). ABR testing was implemented using an Intelligent Hearing System SmartEP platform, which uses 3A insert phones.

EFR

The EFR was measured for a sinusoidally amplitude modulated (SAM) tone having a carrier frequency (ƒc) of 4240 Hz which is the approximate center frequency of the 3000 to 6000 Hz octave band. This ƒc was modulated by a raised sinusoid with a frequency of 80 Hz (ƒm), and the modulator amplitude was varied to yield three modulation depths of 40%, 60%, and 80%. The 80-Hz EFR, or auditory steady state response, is usually associated with brainstem-level generators (Herdman et al., 2002). Two overall presentation levels of 70 and 80 dB SPL were tested. Each stimulus was approximately 1 s in duration, including 10-ms rise/fall ramps, and the interstimulus interval was 122 ms. The participant was seated in a reclining chair and electrodes placed at high forehead (noninverting), nape of neck (inverting), and between the eyebrows (ground). Electrode impedance was maintained below 3 kΩ. The participant was instructed to remain quiet and still, and to sleep if possible. The recording bandwidth was 30 to 300 Hz, and artifact rejection was set at ±31 µV. The averaging procedure continued until the noise floor of the averaged response had dropped below a criterion level or until a maximum of 400 sweeps had been collected. The SNR of the 80-Hz component was designated as significant if it was ≥6 dB. If this criterion was met, the absolute amplitude of the component was tabulated. Two replications per condition were collected, with the order of testing being: (1,2,3) 80 dB SPL at 80%, 60%, 40% depths; (4,5,6) 70 dB SPL at 80%, 60%, 40% depths; (7,8,9) repeat of the 80-dB series; and (10,11,12) repeat of the 70-dB series. 
The hypothesis was that the EXP group would exhibit less robust EFRs than the CON group, particularly at high levels and shallow modulation depths, since animal work on cochlear synaptopathy has shown that the low-spontaneous rate, high-threshold fibers are most vulnerable, and that these are presumably important for coding modulation at high levels (Furman et al., 2013). EFR testing was implemented using the Intelligent Hearing System SmartASSR platform, which uses 3A insert phones.
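The 6-dB SNR criterion for the 80-Hz component can be illustrated with a minimal sketch. The text does not state how the SmartASSR platform estimates the noise, so the approach below (comparing the amplitude at ƒm with the mean amplitude of neighboring frequency bins) is an assumption, as are the function names, the sampling rate, and the synthetic test signal.

```python
import math

def dft_amplitude(x, fs, freq):
    """Amplitude of the component at freq (Hz) from a single-bin DFT."""
    n = len(x)
    re = sum(v * math.cos(2 * math.pi * freq * i / fs) for i, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * freq * i / fs) for i, v in enumerate(x))
    return 2 * math.hypot(re, im) / n

def efr_snr_db(x, fs, fm=80.0, n_side=5):
    """SNR in dB of the fm component re: the mean of n_side bins on each side."""
    df = fs / len(x)  # frequency spacing between DFT bins, in Hz
    signal = dft_amplitude(x, fs, fm)
    offsets = [k for k in range(-n_side, n_side + 1) if k != 0]
    noise = sum(dft_amplitude(x, fs, fm + k * df) for k in offsets) / len(offsets)
    return 20 * math.log10(signal / noise)

def synth(resp_amp, fs=1000, n=1000, noise_amp=0.1):
    """Toy averaged response: an 80-Hz component plus equal-amplitude neighbors."""
    neighbors = [f for f in range(75, 86) if f != 80]
    return [resp_amp * math.sin(2 * math.pi * 80 * i / fs)
            + sum(noise_amp * math.sin(2 * math.pi * f * i / fs) for f in neighbors)
            for i in range(n)]

strong = efr_snr_db(synth(1.0), fs=1000)    # response well above the noise
weak = efr_snr_db(synth(0.15), fs=1000)     # response close to the noise
for label, snr in (("strong", strong), ("weak", weak)):
    print(label, round(snr, 2), "significant" if snr >= 6 else "not significant")
```

Only responses that pass the criterion would have their absolute amplitude tabulated, as described in the text.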

ACC

The stimuli for this test were 200-Hz wide bands of noise centered at 500, 1000, 1250, and 1500 Hz. The total duration of each complete stimulus was 800 ms, including 10-ms rise/fall ramps, but each stimulus consisted of two sequential segments of equal duration. The two segments overlapped at their abutment such that the 10-ms onset ramp for the lagging segment was coincident with the 10-ms offset ramp for the leading segment. This made for a perceptually seamless transition that, in the case of monaural or diotic presentation, resulted in the percept of an uninterrupted band of noise. However, a perceptual discontinuity could be introduced by inverting the lagging segment to one ear. The salience of this interaural difference imposed at the midway point in the stimulus depended upon the frequency of the noise band. For low-frequency noise bands, the IPD resulted in a noticeable change in “image diffuseness” within the head as the stimulus transitioned from diotic to dichotic modes. Note that, irrespective of the interaural phase relation, each monaural stimulus was heard as an uninterrupted noise. For each of the four center frequencies, the cortical P1-N1-P2 complex elicited by the transition from diotic to dichotic modes was measured. For the 500-Hz center frequency, a standard condition was also run wherein the stimulus remained diotic for its entire 800-ms duration. The stimuli were presented repetitively to the subject at a level of 75 dB SPL through electromagnetically shielded ER2 insert phones (Etymotic Research, Elk Grove Village, IL) with an interstimulus interval of 1.2 s. Generation of each stimulus segment was refreshed for each presentation.

For recording, a midline electrode montage was employed with a noninverting electrode placed at high forehead, an inverting electrode at the nape of neck, and a ground electrode between the eyebrows. Electrode impedance was maintained ≤5 kΩ. The subject was seated in a comfortable recliner chair and allowed to read or watch a silent movie with captions. The stimulating system sent time-event markers to the recording system that were coincident with both the stimulus onset and the 400-ms midpoint where, in the ACC conditions, the interaural phase of the lagging segment was inverted. The continuously recorded EEG was segmented into epochs referenced to either the onset of the stimulus or to the midpoint where IPD changed. Each epoch was 400 ms in duration, including a prestimulus baseline of 100 ms. Approximately 200 epochs were averaged per condition. The EEG was recorded using a Compumed Neuroscan system (SynAmpRT, Victoria, Australia), and the stimulating system was a Tucker-Davis-Technologies RZ6 (Alachua, FL) controlled by custom Matlab code (Mathworks, Natick, MA). Because the magnitude of the response to the IPD is presumed to reflect the fidelity of neural phase locking at the locus of binaural interaction, the hypothesis for this test was that the robustness of the response should diminish with increasing ƒc more rapidly for the EXP group than the CON group.
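The epoching step described above can be sketched as follows. This is an illustration under stated assumptions rather than the processing actually used: the function names, the plain-list representation of the EEG, the sampling rate, and the subtraction of the prestimulus mean as a baseline correction are all hypothetical.

```python
def extract_epoch(eeg, fs, marker_s, pre_s=0.1, total_s=0.4):
    """Cut a 400-ms epoch (100 ms prestimulus) around a marker time in seconds.

    The mean of the prestimulus portion is subtracted (assumed baseline correction).
    """
    start = round((marker_s - pre_s) * fs)
    if start < 0:
        raise ValueError("marker is too close to the start of the recording")
    n_pre = round(pre_s * fs)
    n_total = round(total_s * fs)
    segment = eeg[start:start + n_total]
    baseline = sum(segment[:n_pre]) / n_pre
    return [v - baseline for v in segment]

def average_epochs(epochs):
    """Point-by-point mean across equal-length epochs."""
    return [sum(col) / len(col) for col in zip(*epochs)]

fs = 1000                               # Hz, hypothetical sampling rate
eeg = [1.0] * 1000 + [3.0] * 1000       # toy trace with a step at t = 1.0 s
epoch = extract_epoch(eeg, fs, marker_s=1.0)
print(len(epoch), epoch[0], epoch[-1])
```

In the study, markers at stimulus onset and at the 400-ms midpoint would each yield their own set of epochs, with approximately 200 epochs averaged per condition.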

Temporal modulation detection

Temporal modulation detection was measured for ƒm = 80 Hz and ƒc = 4243 Hz both in quiet and in background noise. The stimulus was 400 ms in duration including 50-ms rise/fall ramps. To generate the SAM tone, the ƒc was multiplied by a DC-shifted ƒm using the equation:

$$s(t) = \left[1 + m \sin\left(2\pi f_m t + \tfrac{3\pi}{2}\right)\right] \sin\left(2\pi f_c t + \phi\right)$$

where m is the proportional modulation depth (0–1). The starting phase for the modulator was fixed at 3π/2, whereas the starting phase of the carrier (φ) was randomly selected for each stimulus interval. To maintain a constant output level prior to final stage attenuation, the amplitude of the signal waveform was scaled as a function of modulation depth by the factor:

$$\frac{1}{\sqrt{1 + m^{2}/2}}$$

Two SAM tone levels were tested: 70 dB SPL and 85 dB SPL. For conditions with a background noise present, a one-octave wide Gaussian noise was generated that was geometrically centered at 4243 Hz (3000–6000 Hz). The level of this noise was set such that the subband that fell within the estimated auditory filter centered at 4243 Hz had an overall level 10 dB below the signal level. The bandwidth of this auditory filter was computed to be nominally 482 Hz (Moore & Glasberg, 1983). The stimuli were presented to the test ear through a Sennheiser HD380 Pro headphone (Wedemark, Germany). Modulation detection thresholds were measured with a three-alternative, forced-choice procedure (3AFC) that incorporated a three-down, one-up stepping rule to converge on the 79.4% correct point. The initial step size for modulation depth adjustment was 4 dB (in units of 20 log m), and this was halved after the second and fourth track reversal to result in a final step size of 1 dB. A threshold estimation track was terminated after 10 reversals, and the mean of the modulation depths in dB at the final six reversal points was taken as the threshold estimate. At least three estimates of threshold were obtained for each modulation rate, with a fourth obtained if the range of the first three thresholds exceeded 3 dB. All threshold estimates were averaged to obtain the final threshold value. The hypothesis was that the EXP group would exhibit higher detection thresholds than the CON group, particularly at the higher level and in the presence of background noise. This was based on the observation from animal work that cochlear synaptopathy associated with noise exposure is especially manifest for the low-spontaneous rate, high-threshold fibers that retain some dynamic range for high-level input (Furman et al., 2013). Presumably, depletion of this fiber population would undermine the coding of temporal envelopes at higher levels, especially in noise.
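The stimulus construction for this task can be illustrated with a short sketch. It assumes the conventional SAM form, [1 + m sin(2πƒm t + 3π/2)] sin(2πƒc t + φ), and the conventional constant-power scale factor 1/√(1 + m²/2); the function names and the sampling rate are hypothetical, and the 50-ms rise/fall ramps are omitted for brevity. The masker calculation assumes a flat noise spectrum across the 3000-Hz-wide band and takes the 482-Hz filter bandwidth directly from the text.

```python
import math
import random

def sam_tone(m, fc=4243.0, fm=80.0, dur=0.4, fs=44100, phi=None):
    """SAM tone scaled so that its long-term power is independent of depth m."""
    if phi is None:
        phi = random.uniform(0, 2 * math.pi)   # random carrier starting phase
    scale = 1 / math.sqrt(1 + m * m / 2)       # assumed constant-power factor
    n = round(dur * fs)
    return [scale
            * (1 + m * math.sin(2 * math.pi * fm * i / fs + 1.5 * math.pi))
            * math.sin(2 * math.pi * fc * i / fs + phi)
            for i in range(n)]

def rms(x):
    """Root-mean-square amplitude of a waveform."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def masker_overall_level(signal_db, band_hz=3000.0, filter_hz=482.0, down_db=10.0):
    """Overall level (dB SPL) of a flat masker whose in-filter level is
    down_db below the signal level."""
    return signal_db - down_db + 10 * math.log10(band_hz / filter_hz)

for m in (0.0, 0.5, 1.0):
    # The RMS should be essentially the same at every modulation depth
    print(m, round(rms(sam_tone(m, phi=0.0)), 3))

print(round(masker_overall_level(70.0), 2), round(masker_overall_level(85.0), 2))
```

Under these assumptions the overall masker level sits roughly 2 dB below the signal level, because the full octave band is about 8 dB wider (in 10 log bandwidth terms) than the auditory filter.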

Spectral modulation detection

The stimulus for this test was a one-octave band of noise centered at either 1414 Hz (1000–2000 Hz) or 4243 Hz (3000–6000 Hz). A spectral modulation was imposed on this band, wherein the spectrum level in dB varied sinusoidally across log-frequency at a rate of two cycles per octave. The starting phase of the sinusoidal modulator was selected randomly for each target presentation to undermine the reliability of level cues at any particular frequency, especially the edge frequencies. The band was presented at a nominal level of either 65 dB SPL or 85 dB SPL, but the actual output varied randomly over a 5-dB range relative to this nominal level for every presentation to further undermine the effectiveness of level changes at the spectral edges of a noise band as the detection cue. The stimuli were presented to the test ear through a Sennheiser HD380 Pro headphone.
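The spectral profile of this stimulus can be written compactly. The sketch below is illustrative only: it assumes the modulation depth is the peak-to-dip excursion in dB (as in the note to Table 1), and the function and parameter names are hypothetical. It computes the level offset in dB at a given frequency for a sinusoidal ripple on a log-frequency axis, along with the geometric center of a band.

```python
import math


def geometric_center(f_low_hz: float, f_high_hz: float) -> float:
    """Geometric center frequency of a band."""
    return math.sqrt(f_low_hz * f_high_hz)


def ripple_db(freq_hz: float, depth_db: float, phase_rad: float,
              f_low_hz: float = 1000.0,
              cycles_per_octave: float = 2.0) -> float:
    """Level offset (dB) at freq_hz for a sinusoidal spectral ripple.

    depth_db is the peak-to-dip excursion, so the sinusoid has an
    amplitude of depth_db / 2 around the mean spectrum level.
    """
    octaves_above_edge = math.log2(freq_hz / f_low_hz)
    return 0.5 * depth_db * math.sin(
        2.0 * math.pi * cycles_per_octave * octaves_above_edge + phase_rad)


# At two cycles per octave, a peak falls one-eighth octave above a zero crossing.
peak_offset = ripple_db(1000.0 * 2 ** 0.125, depth_db=10.0, phase_rad=0.0)
```

Because the starting phase is randomized on each presentation, the offset at any single frequency (including the band edges) is unpredictable, which is the rationale given above for undermining level cues.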

Spectral modulation detection thresholds were measured with the 3AFC procedure that estimated the 79.4% correct point. The initial step size for modulation depth adjustment was 4 dB, and this was reduced to 1 dB after the second reversal in depth direction, and to 0.4 dB after the fourth reversal. A threshold estimation track was terminated after 10 reversals, and the mean of the modulation depths at the final six reversal points was taken as the threshold estimate. At least three estimates of threshold were obtained for each condition, with a fourth obtained if the range of the first three thresholds exceeded 3 dB. All threshold estimates were averaged to obtain the final threshold value. The hypothesis was that the EXP group would exhibit poorer detection thresholds than the CON group, particularly at the higher level and frequency region, for the same reasons noted for temporal modulation detection.

IPD sensitivity

The stimulus for this test was a SAM tone, 800 ms in duration, that was 100% modulated at ƒm = 5 Hz. The modulator starting phase was fixed at 3π/2, whereas the starting phase of the carrier was randomly selected for each stimulus interval. For the standard diotic presentation, the SAM tone was interaurally in phase (S0) throughout its entire duration for both carrier and modulator. For the target dichotic presentation, the modulator remained interaurally in-phase throughout the stimulus, but the carrier phase was inverted (i.e., π radian IPD or Sπ) to one ear during every other cycle of modulation. Because the phase discontinuity occurred during an envelope minimum, the transition was inaudible. The task measured the upper ƒc limit at which the S0 standard could be differentiated from the Sπ signal. This was measured using the 3AFC procedure that estimated the 79.4% correct point. The step size for frequency change was one-quarter octave (a factor of ∼1.19). Following three correct responses in a row ƒc was increased; following an incorrect response ƒc was decreased. A threshold estimation track was terminated after eight reversals in frequency direction, and the geometric mean of the frequencies at the final six reversal points was taken as the threshold estimate. At least three estimates of threshold were obtained, and all threshold estimates were averaged to obtain the final threshold value. The binaural stimuli were presented at a level of 65 dB SPL through Etymotic ER2 insert phones. Because IPD sensitivity is presumed to rely on neural phase locking, this test is a psychophysical measure of the fidelity of neural synchrony in the auditory periphery (cf. Grose & Mamo, 2010). The hypothesis was that the upper frequency limit would be lower for the EXP group than the CON group because of cochlear synaptopathy associated with noise exposure.
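Because the carrier frequency is stepped in ratio units, the threshold is averaged geometrically rather than arithmetically. The following sketch, with hypothetical helper names and made-up reversal frequencies, shows the quarter-octave step factor and the geometric mean over reversal points.

```python
import math

QUARTER_OCTAVE = 2.0 ** 0.25   # step factor, approximately 1.19


def step_frequency(fc_hz: float, n_steps: int) -> float:
    """Move the carrier frequency by n_steps quarter-octave steps (+ up, - down)."""
    return fc_hz * QUARTER_OCTAVE ** n_steps


def geometric_mean(values):
    """Geometric mean, i.e. the arithmetic mean taken on a log-frequency axis."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Illustrative reversal frequencies only (not data from the study):
# a track alternating between 1000 Hz and one step above it.
final_six = [1000.0, step_frequency(1000.0, 1)] * 3
threshold_hz = geometric_mean(final_six)
```

For a track that alternates between two adjacent frequencies, the geometric mean lies exactly half a step (one-eighth octave) above the lower one.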

Consonant-nucleus-consonant phoneme recognition

The consonant-nucleus-consonant (CNC) test consists of a corpus of 500 monosyllabic words divided into 10 lists (Peterson & Lehiste, 1962). This test was selected because it allows for the recognition accuracy of initial consonant, intermediate vowel, and final consonant to be assessed separately. Depending on condition, the CNC token was filtered into one of two one-octave wide frequency bands; one band was centered at 1414 Hz (1000–2000 Hz) and the other band centered at 4243 Hz (3000–6000 Hz). The filtered speech was presented at two overall levels of 60 and 80 dB SPL. Each subject therefore listened to four conditions: two octave-band regions at each of two overall levels. For each condition, a list of 50 CNC words was presented monaurally through a Sennheiser HD265 headphone, and the participant was instructed to repeat aloud as much of the speech token as they perceived without regard to whether it made semantic sense. Concurrent with the acoustic presentation of the stimulus, the text of the presented speech token was displayed on a monitor screen in front of the experimenter outside the booth with each of the three phonemes highlighted in a position-sensitive box. The experimenter mouse-clicked on each phoneme that was incorrect. At the end of each condition, the percent correct score was computed for the initial consonants, intermediate vowels, and final consonants. There were three replications per condition. The hypothesis was that the EXP group would exhibit reduced speech recognition performance at high levels since cochlear synaptopathy would be expected to result in poor encoding of complex signals such as speech at high levels.

Speech-in-noise recognition

The target speech used in this test was the Bamford-Kowal-Bench (BKB) sentence corpus which consists of 21 lists of 16 sentences per list, with each sentence containing between three and five key words (Bench, Kowal, & Bamford, 1979). The BKB corpus was selected because it provides a sufficiently large stimulus set that a participant could complete all the conditions of the experiment without being presented with the same sentence more than once. Depending on condition, the speech was filtered into one of two one-octave wide frequency bands; one band centered at 1414 Hz (1000–2000 Hz) and the other band centered at 4243 Hz (3000–6000 Hz). Each filtered speech band was masked by a corresponding band of noise filtered from a speech-shaped noise whose spectral envelope was equivalent to the long-term average spectrum of the BKB sentences. The experimental manipulation adjusted the speech-to-masker ratio to determine the recognition threshold for the filtered speech while keeping the overall level constant. Two overall levels were tested: 60 and 80 dB SPL. Each subject therefore listened to four conditions: two octave-band regions at each of two overall levels. The stimulus was presented monaurally through a Sennheiser HD265 headphone.

The participant was instructed to repeat aloud as much of the sentence as they perceived without regard to whether it made grammatical or semantic sense. Concurrent with the acoustic presentation of the stimulus, the text of the presented sentence was displayed on a monitor screen in front of the experimenter outside the booth with each key word highlighted in a position-sensitive box. The experimenter mouse-clicked on every key word that was omitted or incorrect. A one-up, one-down tracking procedure was implemented wherein the sentence-level response was scored as correct if two or more of the key words were identified correctly; otherwise, the sentence-level response was scored as incorrect. The step size was 2 dB, and a track continued until eight reversals in level direction had occurred. The mean of the final six reversals was taken as the threshold estimate of speech recognition, and there were three replications per condition. If the range of thresholds exceeded 3 dB, a fourth run was collected and included in the average threshold estimate. The hypothesis was that the EXP group would show reduced speech-in-noise performance at high levels due to cochlear synaptopathy.
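The sentence-level scoring rule and the one-up, one-down track can be illustrated as follows. This is a sketch under stated assumptions rather than the software used in the study: the starting speech-to-masker ratio, the trial cap, and the simulated listener are hypothetical. A one-up, one-down rule converges on the ratio at which a sentence is scored correct on half of the trials.

```python
def sentence_correct(n_keywords_correct: int) -> bool:
    """Sentence-level score: correct if two or more key words were repeated."""
    return n_keywords_correct >= 2


def run_snr_track(keywords_correct_at, start_snr_db=10.0, step_db=2.0,
                  n_reversals=8, n_average=6, max_trials=500):
    """One-up, one-down track on speech-to-masker ratio (dB).

    keywords_correct_at(snr_db) -> int stands in for one scored sentence.
    """
    snr_db = start_snr_db
    last_direction = 0
    reversals = []
    for _ in range(max_trials):  # assumed safety cap, not from the source
        correct = sentence_correct(keywords_correct_at(snr_db))
        direction = -1 if correct else +1   # harder after correct, easier after error
        if last_direction != 0 and direction != last_direction:
            reversals.append(snr_db)
            if len(reversals) == n_reversals:
                return sum(reversals[-n_average:]) / n_average
        last_direction = direction
        snr_db += direction * step_db
    raise RuntimeError("track did not reach the required number of reversals")


# Idealized listener: three key words correct at or above 3 dB, one below.
srt_db = run_snr_track(lambda snr_db: 3 if snr_db >= 3.0 else 1)
```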

Statistical Analysis

Group comparisons were undertaken using repeated-measures analyses of variance (RMANOVA) where data sets were complete, and linear mixed-model analyses where data sets had missing data—typically because a participant did not generate a response in a particular condition. In one group comparison (high-frequency audiometric threshold, see later), a nonparametric test was used because the data approached a nonnormal distribution.

Results

The results of each test in the battery are described below. Note that not all participants completed every test, primarily because of scheduling issues (e.g., lost to follow-up) or technical issues (e.g., inability to maintain probe tip seal for DPOAE test), so the number of participants per group in each test is indicated in parentheses after each heading. By way of overview, note that a group difference was found only for a derived ABR measure.

Audiograms (EXP: 31, CON: 30)

The group mean audiograms are shown in Figure 1 (EXP: filled squares; CON: unfilled circles). As defined by the inclusion criteria, both the EXP and CON groups had thresholds within clinically normal limits (≤20 dB HL) for the octave frequencies 250 to 8000 Hz. At the highest frequency of 16,000 Hz, there was increased variability and the suggestion of a trend for higher thresholds in the EXP group. Recall that data for this frequency were limited to 26 EXP and 25 CON participants. Shapiro–Wilk statistics on the 16,000-Hz thresholds for the two groups indicated that the distribution of thresholds for the EXP group approached nonnormality (p = .054) and so, to be conservative, a group comparison of these 16,000-Hz thresholds was undertaken using a nonparametric Mann–Whitney U test. This indicated no difference between the two groups (U = 233.5; p = .084).

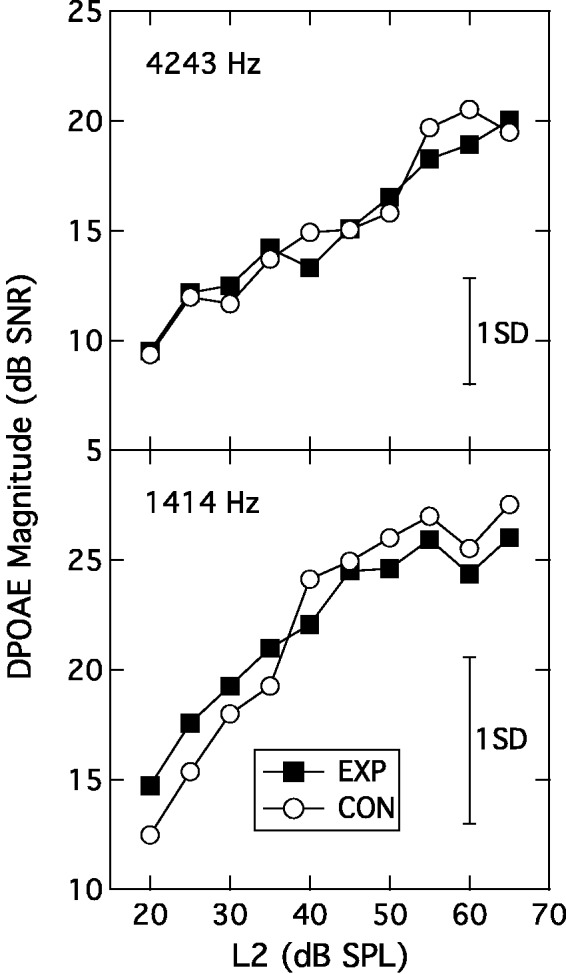

DPOAEs (EXP: 28, CON: 30)

The DPGrams from the two groups overlapped completely (data not shown). The DPOAE growth functions measured in the 1414-Hz and 4243-Hz regions were also highly similar across groups as shown in the two panels of Figure 2, which show DPOAE levels plotted against L2 in dB SPL (EXP: filled squares; CON: unfilled circles). Following the procedure of Boege and Janssen (2002), the DPOAE amplitudes for each participant/frequency were also converted into pressure units, deriving an I/O function expressed as µPa (DPOAE) per dB (L2). A regression line was then fitted to each function and, for those fits that accounted for at least 80% of the variance, the intersection of the regression line with the abscissa was taken as an estimate of behavioral threshold at that frequency (cf. Boege & Janssen, 2002). The variance criterion was most commonly met in both groups for the 1414-Hz frequency (EXP: 19; CON: 22), and so the behavioral estimates of threshold were compared only for this frequency. The mean estimated behavioral thresholds of 14 dB SPL (SD = 8 dB SPL) for the EXP group and 16 dB SPL (SD = 7 dB SPL) for the CON group were not significantly different (t(39) = 0.80; p = .431).
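The extrapolation step can be sketched as follows. This is an illustrative reading of the procedure described above, not the authors' analysis code: the function names are hypothetical, the 20-µPa reference is the standard dB SPL reference, and the positive-slope check is an added safeguard that the text does not mention. DPOAE levels are converted to pressure, a least-squares line is fitted against L2, and the x-intercept is returned only if the fit explains at least 80% of the variance.

```python
import math

P_REF_UPA = 20.0  # reference pressure for dB SPL, in micropascals


def db_spl_to_upa(level_db: float) -> float:
    """Convert a level in dB SPL to sound pressure in micropascals."""
    return P_REF_UPA * 10.0 ** (level_db / 20.0)


def linear_fit(x, y):
    """Ordinary least squares; returns (slope, intercept, r_squared)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = 0.0 if syy == 0 else sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


def estimated_threshold(l2_db, dpoae_db, min_r_squared=0.8):
    """L2 (dB SPL) at which the fitted pressure I/O line crosses zero.

    Returns None when the variance criterion is not met or the slope is
    not positive (the latter is an assumed safeguard).
    """
    pressures = [db_spl_to_upa(level) for level in dpoae_db]
    slope, intercept, r_squared = linear_fit(l2_db, pressures)
    if r_squared < min_r_squared or slope <= 0:
        return None
    return -intercept / slope
```

As a check on the logic, synthetic DPOAE levels whose pressure grows by exactly 2 µPa per dB above an L2 of 15 dB SPL should yield an estimate of 15 dB SPL.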

Figure 2.

Input-output functions at 4243 Hz (upper panel) and 1414 Hz (lower panel) plotting signal-to-noise ratio as a function of L2. The parameter is participant group. Error bars are 1 SD.

EXP = experimental; CON = control; DPOAE = distortion product otoacoustic emission.

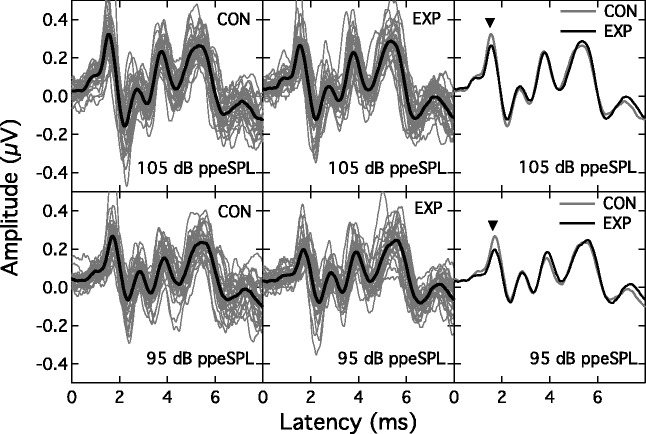

ABR (EXP: 29, CON: 28)

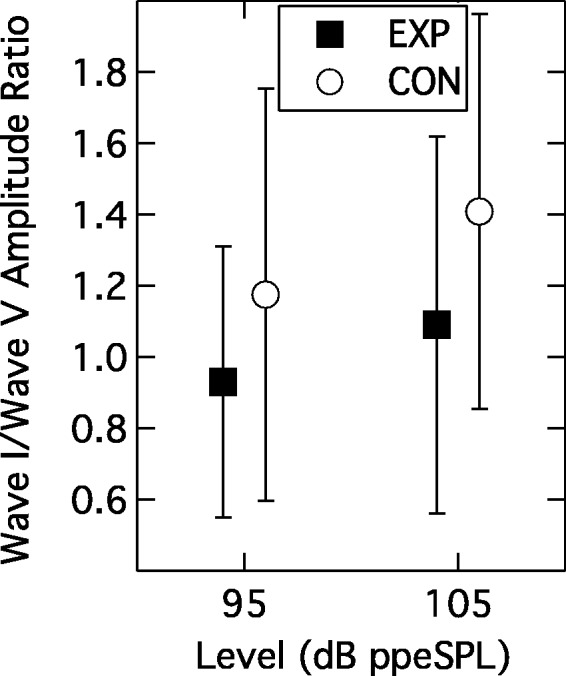

Figure 3 shows the individual and group mean ABRs at the two presentation levels for the CON group (left columns) and EXP group (middle columns). The mean ABRs are also overlaid in the right column (EXP: black line; CON: gray line). An inverted triangle points to Wave I. These superimposed group-average ABRs suggest a higher Wave I amplitude, but not Wave V amplitude, in the CON group at each level. To assess this impression, a RMANOVA was undertaken with one between-subjects factor of group (EXP, CON) and two within-subject factors of wave number (Wave I, Wave V) and presentation level (95- and 105-dB ppeSPL). The analysis indicated no effect of group (F(1, 55) = 0.18; p = .67) or wave number (F(1, 55) = 1.77; p = .189) but a significant interaction between these two factors (F(1, 55) = 4.64; p = .036). The main effect of presentation level was also significant (F(1, 55) = 214.73; p < .001), as was its interaction with wave number (F(1, 55) = 29.56; p < .001). The three-way interaction term was not significant. Although the interaction between group and wave number was significant, post hoc analysis showed that neither Wave I nor Wave V actually differed in amplitude across the two groups (F(1, 55) = 1.96; p = .167 and F(1, 55) = 1.95; p = .169, respectively). The presentation level effects simply indicate that wave amplitude increased with stimulus level but more so for Wave I than Wave V. Comparison of absolute amplitudes, however, may not be the most appropriate metric since absolute amplitude can vary across individuals due to incidental factors such as head size, tissue impedance, etc. (Nikiforidis, Koutsojannis, Varakis, & Goumas, 1993; Trune, Mitchell, & Phillips, 1988; Yamaguchi, Yagi, Baba, Aoki, & Yamanobe, 1991). To compensate for individual variations, a derived measure was employed wherein the ratio of the Wave I amplitude to Wave V amplitude was computed for each individual ABR (cf. Musiek, Kibbe, Rackliffe, & Weider, 1984).
This metric has been modeled to be sensitive to cochlear synaptopathy (Verhulst, Jagadeesh, Mauermann, & Ernst, 2016).¹ Figure 4 shows the mean Wave I/Wave V ratios for each group at the two presentation levels. Analysis of this data pattern with a RMANOVA again indicated a significant within-subjects effect of presentation level (F(1, 55) = 18.39; p < .001) but now the between-subjects effect of group was also significant (F(1, 55) = 4.80; p = .033). The interaction of presentation level and group was not significant (F(1, 55) = 0.63; p = .431). These results indicate that the amplitude of Wave I relative to Wave V was significantly smaller in the EXP group than in the CON group.

Figure 3.

ABR traces for the CON group (left panels) and EXP group (middle panels) for the 95- and 105-dB ppeSPL presentation levels (lower and upper rows, respectively). Light traces are individual ABRs, heavy trace is group mean. The group mean traces are overlaid in the right panels, with an inverted triangle indicating Wave I.

EXP = experimental; CON = control.

Figure 4.

Group mean Wave I/Wave V ratios as a function of presentation level. Parameter is participant group. Error bars are 1 SD.

EXP = experimental; CON = control.

EFR (EXP: 28, CON: 28)

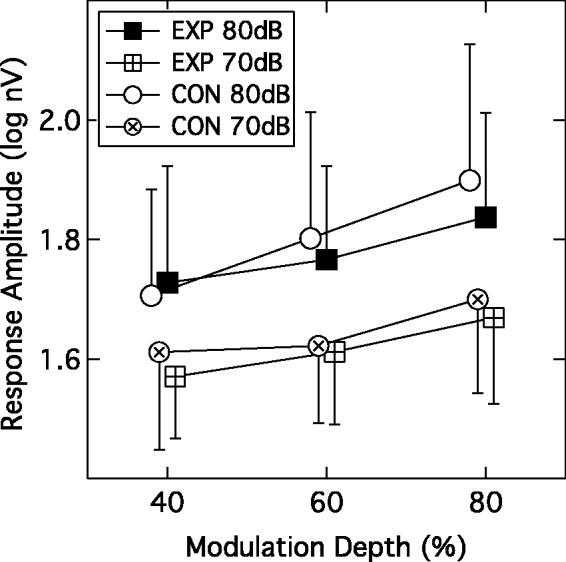

The group mean EFR amplitudes as a function of modulation depth are shown in Figure 5. Squares indicate data from the EXP group and circles data from the CON group; crossed symbols denote the lower presentation level of 70 dB SPL and filled or unfilled symbols denote the 80-dB SPL level. Recall that EFRs were accepted as valid only if the SNR was ≥ 6 dB, so not all participants contributed data to every condition. Indeed, there was a general decline in the number of valid data points as the modulation depth and presentation level decreased; for the most challenging condition (40% modulation depth, 70 dB SPL), only 19 EXP and 23 CON subjects contributed data. Following log transformation of the amplitudes to normalize variance, a linear mixed-model analysis was undertaken and this indicated a significant within-subjects effect of presentation level (F(1, 219.4) = 47.02; p < .001) and a significant within-subjects effect of modulation depth (F(2, 204.24) = 21.17; p < .001) but no between-subjects effect of group (F(1, 69.14) = 0.19; p = .664). None of the interaction terms were significant (F ranging from 1.0 to 0.01; p ranging from 0.371 to 0.927). This data pattern indicates that the EFR amplitudes were larger at 80 dB SPL than 70 dB SPL, and that amplitude declined as the modulation depth was reduced. However, the EFR behavior of the two participant groups was not significantly different. Bharadwaj et al. (2015) and Bharadwaj, Verhulst, Shaheen, Liberman, and Shinn-Cunningham (2014) have recommended using the slope of the function relating EFR amplitude to modulation depth as a gauge of cochlear synaptopathy. To assess this, slopes were computed for data where the EFR amplitude values were valid for all three modulation depths. At the 80-dB SPL level, this criterion was met by 26 of the EXP group and 22 of the CON group; at the 70-dB SPL level, this criterion was met by 18 from each group. 
A linear mixed-model analysis indicated that the slopes of the EFR functions did not differ significantly across group (F(1, 80) = 2.80; p = .098) or presentation level (F(1, 80) = 1.80; p = .18).

Figure 5.

Group mean EFR amplitudes plotted as a function of modulation depth. Parameters are participant group and presentation level. Error bars are 1 SD.

EXP = experimental; CON = control.

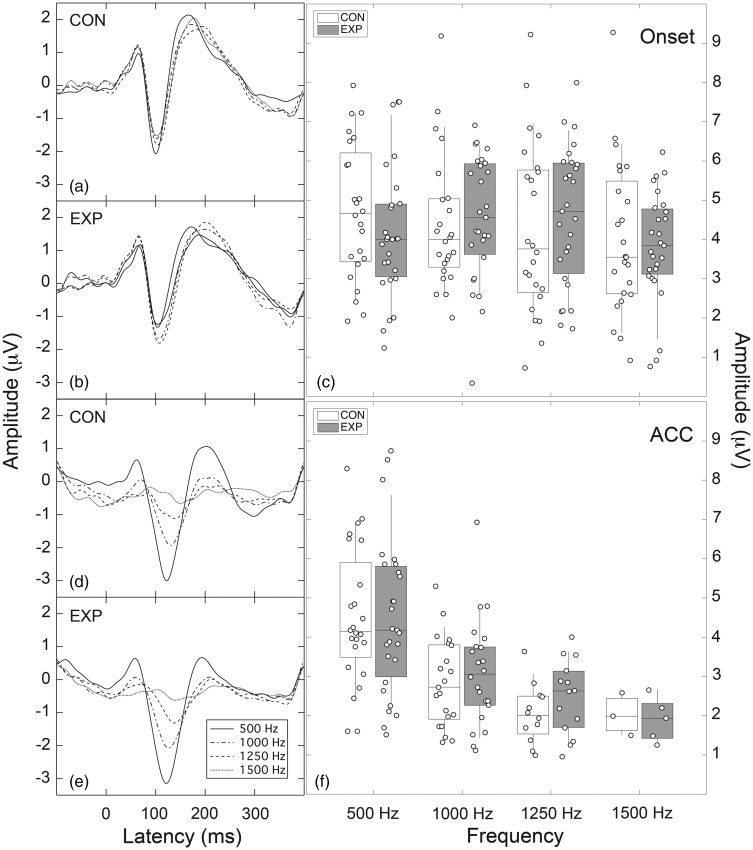

ACC (EXP: 27, CON: 24)

Figure 6, Panels a, b, d, and e, display the grand average P1-N1-P2 responses evoked by the four noise bands of differing center frequency. Panels a and b show the onset responses overlaid for the CON group and EXP group, respectively. Panels d and e show the ACC responses overlaid for the CON group and EXP group, respectively. These traces show that, whereas the onset responses are relatively similar across the four noise bands, the ACC responses display a marked reduction in response amplitude and increase in latency as the frequency of the noise band increased. This behavior was similar for both participant groups. However, these grand average waveforms do not convey the fact that progressively fewer subjects generated ACC responses as noise-band frequency increased.

Figure 6.

Left panels: Panels a and b show grand average P1-N1-P2 onset responses for the CON and EXP groups, respectively; Panels d and e show grand average P1-N1-P2 ACC responses for the CON and EXP groups, respectively. Parameter is noise-band center frequency. Right panels: Panel c shows distribution of N1-P2 amplitudes as a function of frequency for onset responses; Panel f shows complementary distribution for ACC responses. Individual data points (circles) are superimposed on boxplots where rectangles denote the 25th to 75th percentiles (CON: unfilled; EXP: filled); the horizontal bar within each rectangle is the median, and the vertical lines extend out to the 10th to 90th percentiles.

EXP = experimental; CON = control; ACC = acoustic change complex.

The distribution of individual data is shown in the right-hand panels of Figure 6 which plot individual N1-P2 amplitudes in boxplot format (Panel c: Onset; Panel f: ACC). The individual data points (circles) are superimposed on rectangles that denote the 25th to 75th percentiles (CON: unfilled; EXP: filled); the horizontal bar within each rectangle is the median, and the vertical lines extend out to the 10th to 90th percentiles. The first general observation is that amplitudes of individual participants varied considerably, but the overall amplitude of the onset response appeared relatively stable across noise-band frequency. However, a RMANOVA with one within-subjects factor (frequency) and one between-subjects factor (group) indicated that the effect of frequency was significant (F(3, 147) = 3.71; p = .013), although group was not (F(1, 49) = 0.03; p = .87). Post hoc contrasts indicated that the effect of frequency was due to the onset amplitudes for the 1500-Hz noise band being lower than for the other noise bands.

With respect to the ACC response, two features stand out in Figure 6. First, whereas the amplitude of the 500-Hz ACC appears comparable to that of the 500-Hz onset response, the ACC amplitude thereafter diminishes with increasing noise-band frequency. Second, as the frequency of the noise band increases, progressively fewer subjects generate an ACC in both groups; for the highest center frequency of 1500 Hz, a total of three EXP and five CON subjects exhibited an ACC response. The equivalence of the 500-Hz response amplitudes was confirmed with a RMANOVA that indicated no effect of response type (onset, ACC) (F(1, 49) = 0.04; p = .852) or group (F(1, 49) = 0.57; p = .452). Given that progressively fewer subjects contributed data to the ACC as frequency increased, caution is required in interpreting the data pattern in Panels d and e of Figure 6 wherein response latency increases with increasing frequency. The key result is that the overall pattern of results was similar for the two participant groups.

Temporal Modulation Detection (EXP: 30, CON: 30)

The group mean temporal modulation detection thresholds are shown in Table 1, which compiles data from four tests in the battery. Mean thresholds are tabulated for both the quiet and the noise-background presentation modes at the two presentation levels. The performance of one CON participant was inexplicably poor, being almost 4 standard deviations above the mean, and so this participant’s data were omitted as outliers (this omission did not change the outcome of the statistical analysis). A RMANOVA indicated significant within-subjects effects of presentation level (F(1, 58) = 39.45; p < .001) and presentation mode (F(1, 58) = 1003.27; p < .001), and a significant interaction between these within-subjects factors (F(1, 58) = 34.77; p < .001). The between-subjects factor of group was not significant (F(1, 58) = 0.81; p = .372), nor were any of its two- and three-way interactions with the within-subjects factors (F(1, 58) ranging from 3.24 to 0.001; p ranging from .08 to .98). This data pattern indicates that modulation detection thresholds were better in quiet than in noise, and better at the higher presentation level, but that this dependence of threshold on stimulus level was more pronounced in quiet than in a background noise. The two participant groups did not differ significantly in their temporal modulation detection performance.

Table 1.

Group Mean Data for Four Tasks, With Standard Deviations in Parentheses.

Column groups: (a) Temporal modulation detection (dB); (b) Spectral modulation detection (dB); (c) Interaural phase discrimination (Hz); (d) BKB threshold (dB).

| Group | (a) Quiet, 70 dB | (a) Quiet, 85 dB | (a) Noise, 70 dB | (a) Noise, 85 dB | (b) 1414 Hz, 65 dB | (b) 1414 Hz, 85 dB | (b) 4243 Hz, 65 dB | (b) 4243 Hz, 85 dB | (c) Upper limit | (d) 1414 Hz, 60 dB | (d) 1414 Hz, 80 dB | (d) 4243 Hz, 60 dB | (d) 4243 Hz, 80 dB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EXP | −21.86 (3.67) | −24.73 (3.92) | −8.94 (2.81) | −9.71 (2.45) | 14.69 (3.08) | 14.65 (4.04) | 14.71 (4.10) | 16.24 (3.79) | 1106 (170) | 6.41 (2.83) | 2.53 (2.28) | 14.92 (5.65) | 6.35 (4.58) |
| CON | −20.51 (5.44) | −23.45 (4.50) | −9.09 (2.85) | −9.61 (2.29) | 14.06 (3.30) | 14.75 (3.46) | 15.68 (4.39) | 16.63 (4.00) | 1068 (163) | 7.41 (3.16) | 3.20 (2.11) | 14.29 (4.15) | 5.52 (3.47) |

Note. EXP = experimental; CON = control; BKB = Bamford-Kowal-Bench. (a) Temporal modulation detection thresholds measured in quiet and in noise at presentation levels of 70 and 85 dB SPL. Thresholds are expressed in units of 20 log(m). (b) Spectral modulation detection thresholds measured for octave-band noises centered at 1414 Hz and 4243 Hz, and presented at 65 and 85 dB SPL. Thresholds are spectral peak-dip excursions in dB. (c) Upper frequency limit for discrimination of S0 from Sπ in Hz. (d) BKB thresholds for octave-band speech centered at 1414 Hz and 4243 Hz and presented at overall levels of 60 and 80 dB SPL. Thresholds are expressed as signal-to-noise ratios in dB.
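To make the 20 log(m) units in column group (a) concrete, the short sketch below converts between threshold in dB and proportional modulation depth m. The function names are hypothetical; the example values are the EXP group means for the 70-dB SPL condition in quiet and in noise from Table 1.

```python
import math


def depth_from_db(threshold_db: float) -> float:
    """Proportional modulation depth m for a threshold given as 20*log10(m)."""
    return 10.0 ** (threshold_db / 20.0)


def db_from_depth(m: float) -> float:
    """Threshold in dB, 20*log10(m), for a proportional depth m (0 < m <= 1)."""
    return 20.0 * math.log10(m)


m_quiet = depth_from_db(-21.86)   # EXP mean, quiet, 70 dB SPL
m_noise = depth_from_db(-8.94)    # EXP mean, noise, 70 dB SPL
```

On this reading, the quiet threshold corresponds to a modulation depth of roughly 8% and the noise threshold to roughly 36%, so more negative values indicate better sensitivity.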

Spectral Modulation Detection (EXP: 30, CON: 30)

The group mean spectral modulation detection thresholds are shown in Table 1 for the two frequency regions and presentation levels. A RMANOVA with two within-subjects factors (presentation level and band frequency) and one between-subjects factor (group) indicated significant effects of presentation level (F(1, 58) = 7.69; p = .007) and band frequency (F(1, 58) = 14.87; p < .001), but no effect of group (F(1, 58) = 0.034; p = .86). None of the interaction terms were significant (F ranging from 2.95 to 0.03; p ranging from .091 to .863). This pattern of results indicates that spectral modulation detection was poorer in the higher frequency band and at the higher level, but that this pattern held for both the EXP and CON groups, who were indistinguishable from each other.

IPD Sensitivity (EXP: 29, CON: 28)

The average upper frequency limit at which an S0 signal could be differentiated from an Sπ signal is shown in Table 1. A t test indicated that the EXP group limit of 1106 Hz did not differ from the CON group limit of 1068 Hz (t(55) = 0.9; p = .37).

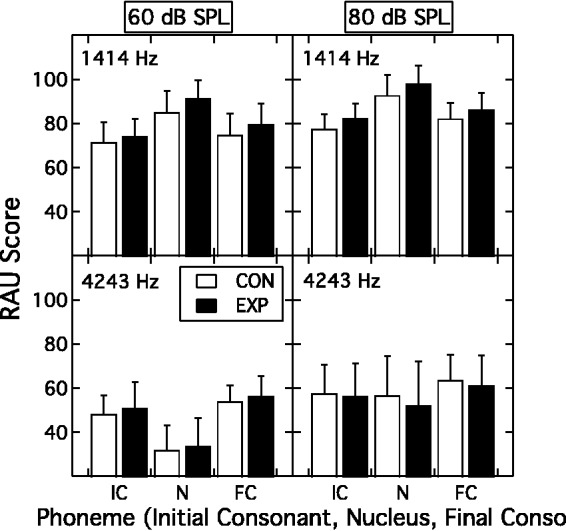

CNC Phoneme Recognition (EXP: 29, CON: 28)

The phoneme recognition data were converted into rationalized arcsine units (Studebaker, 1985), and the group mean results are shown in Figure 7. Each of the four panels displays a bar-graph showing performance for the three phoneme positions (initial consonant, nucleus vowel, and final consonant) for the CON group (unfilled bars) and EXP group (filled bars). The upper and lower rows of panels are for the 1414-Hz and 4243-Hz center frequencies, respectively; the left and right columns of panels are for the 60-dB SPL and 80-dB SPL presentation levels, respectively. A RMANOVA with three within-subjects factors (phoneme position, band frequency, and presentation level) and one between-subjects factor (group) indicated a number of main effects and interactions but the key outcome was that the main effect of group was not significant (F(1, 55) = 1.20; p = .279), nor were any of its two-, three-, or four-way interactions with the within-subjects factors (F ranging from 3.16 to 0.01; p ranging from .081 to .986). In terms of the within-subjects factors, the main effects and all two- and three-way interactions of the three factors were significant (F ranging from 498.61 to 12.83; p ≤ .001). The primary features of this within-subjects pattern are that presentation level had a greater impact for the higher band frequency than the lower band frequency, and that this was particularly striking for the nucleus vowel phoneme position.
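For readers unfamiliar with the transform, the rationalized arcsine unit can be computed as below. The sketch follows the published formula of Studebaker (1985); the function name is hypothetical, and the number of scored items per condition (50 in the example) is an assumption for illustration, since the text does not state the value used in the conversion.

```python
import math


def rau(n_correct: int, n_items: int) -> float:
    """Rationalized arcsine units for n_correct out of n_items.

    theta = asin(sqrt(X / (N + 1))) + asin(sqrt((X + 1) / (N + 1)))
    RAU   = (146 / pi) * theta - 23
    """
    theta = (math.asin(math.sqrt(n_correct / (n_items + 1)))
             + math.asin(math.sqrt((n_correct + 1) / (n_items + 1))))
    return (146.0 / math.pi) * theta - 23.0


midpoint = rau(25, 50)   # 50% correct maps to 50 RAU
floor_score = rau(0, 50)
ceiling_score = rau(50, 50)
```

The transform is close to percent correct in the middle of the range but stretches the extremes, which stabilizes the variance of scores near floor and ceiling before they are entered into an analysis of variance.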

Figure 7.

Group mean phoneme recognition (RAU score) as a function of phoneme position (initial consonant, nucleus vowel, final consonant) for the EXP group (filled bars) and the CON group (unfilled bars). The upper and lower rows of panels are for the 1414-Hz center frequency and 4243-Hz center frequency, respectively; the left and right columns of panels are for the 60-dB SPL and 80-dB SPL presentation levels, respectively. Error bars are 1 SD.

EXP = experimental; CON = control.

Speech-in-Noise Recognition (EXP: 29, CON: 26)

Table 1 tabulates the speech-to-masker ratio at threshold for sentence recognition as a function of presentation level and octave-band center frequency. A RMANOVA with two within-subjects factors (presentation level and band frequency) indicated a significant effect of both presentation level (F(1, 53) = 371.09; p < .001) and band frequency (F(1, 53) = 163.94; p < .001), as well as a significant interaction between these two factors (F(1, 53) = 81.36; p < .001). The between-subjects effect of group was not significant (F(1, 53) = 0.004; p = .951), nor were any of its two- or three-way interactions with the within-subjects factors (F(1, 53) ranging from 3.46 to 0.016; p ranging from 0.07 to 0.90). The level-by-frequency interaction underscores the markedly larger drop in threshold SNR with level for the octave-band speech centered at 4243 Hz relative to that centered at 1414 Hz. The two participant groups did not differ in their band-limited masked speech recognition.

Discussion

The results of this study can best be summarized as demonstrating that, in humans, defining a profile of behavioral measures that can be unequivocally associated with the pathophysiology of cochlear synaptopathy remains an elusive goal. In the animal model, the signature of cochlear synaptopathy consists of the triple features of normal OAEs, normal ABR thresholds, and reduced amplitude of the ABR Wave I at high levels (Kujawa & Liberman, 2015; M. C. Liberman et al., 2016). Findings suggestive of this signature were observed here in humans with a history of loud music exposure, namely, normal audiometric thresholds, normal OAE behavior, and an abnormally reduced Wave I/Wave V amplitude ratio. This pattern could be interpreted as supporting the existence of cochlear synaptopathy in humans. However, this interpretation entails the corollary that suprathreshold behavioral performance as well as other electrophysiological indices such as EFR appear generally insensitive to this pathophysiological condition—at least for the tasks selected here.

Several factors must be considered before this somewhat stark interpretation can be adopted, including (a) whether the EXP and CON groups were actually differentiated on the basis of cochlear synaptopathy, (b) whether other participant characteristics counteracted any effects of cochlear synaptopathy, and (c) whether the battery of suprathreshold tests was sensitive to the sequelae of cochlear synaptopathy.

Dealing first with group differentiation on the basis of cochlear synaptopathy, two possibilities must be considered. On the one hand, it is possible that neither group exhibited cochlear synaptopathy; on the other hand, it is possible that both groups exhibited the condition. In the former case, it must be presumed that—if cochlear synaptopathy does exist in humans—then the selection criteria implemented here involving a history of loud music exposure were insufficient to target this population. Conversely, if both groups actually exhibited the condition, then it must be presumed that the accumulated noise exposure history of even the CON listeners was sufficient to result in cochlear synaptopathy, and the screening process failed to exclude this. In either of these cases, failure to observe behavioral differences between groups would be due to the simple fact that the groups were not, in fact, differentiated on the basis of cochlear synaptopathy.

This conjecture, however, raises the question of why the two groups then differed on the key measurement of Wave I/Wave V amplitude ratio. One possibility is that a gender difference underlay the effect, since the EXP group contained almost twice as many males as the CON group. Gender differences are known to exist in the ABR; for example, Wave V is larger in females than males (Jerger & Hall, 1980). However, this possibility seems remote for two reasons. First, the association between gender and ABR amplitude extends to both Waves I and V (Trune et al., 1988), and therefore a gender effect on the Wave I/Wave V amplitude ratio would not be expected. Indeed, this is evident in the current data set as summarized in Table 2 which tabulates key ABR metrics categorized by group and gender. Collapsed across the two listener groups, there were approximately the same number of males (n = 29) as females (n = 28). A RMANOVA on the amplitudes of Waves I and V, examining the between-subjects factor of gender and the within-subjects factors of wave number and presentation level, indicated a significant effect of gender (F(1, 55) = 4.74; p = .034) but no interaction of gender with either wave number (F(1, 55) = 1.53; p = .221) or presentation level (F(1, 55) = 2.46; p = .122). In other words, wave amplitudes were overall larger in females than males. However, a complementary RMANOVA on the Wave I/Wave V amplitude ratio indicated no effect of gender (F(1, 55) = 0.99; p = .324) and no interaction of gender with presentation level (F(1, 55) = 0.35; p = .555). Thus, although wave amplitudes were larger in females than males, this gender difference was neutralized by deriving the Wave I/Wave V amplitude ratio. The relevance of this is that the significant difference found between the EXP and CON groups for the Wave I/Wave V amplitude ratio was therefore unlikely to reflect a gender effect.

Table 2.

Mean ABR Measures, With Standard Deviations in Parentheses, for the CON and EXP Groups Subdivided by Gender. Units for Presentation Level are dB ppeSPL.

| Group | Gender | Wave I latency (ms), 95 dB | Wave I latency (ms), 105 dB | Wave V latency (ms), 95 dB | Wave V latency (ms), 105 dB | Wave I amplitude (µV), 95 dB | Wave I amplitude (µV), 105 dB | Wave V amplitude (µV), 95 dB | Wave V amplitude (µV), 105 dB | Wave I/Wave V ratio, 95 dB | Wave I/Wave V ratio, 105 dB |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CON | Female (n = 18) | 1.72 (0.06) | 1.55 (0.07) | 5.67 (0.18) | 5.49 (0.17) | 0.41 (0.13) | 0.59 (0.15) | 0.38 (0.11) | 0.44 (0.12) | 1.18 (0.49) | 1.44 (0.44) |
| CON | Male (n = 10) | 1.83 (0.12) | 1.62 (0.12) | 5.85 (0.23) | 5.69 (0.22) | 0.35 (0.26) | 0.46 (0.32) | 0.32 (0.11) | 0.37 (0.14) | 1.17 (0.74) | 1.35 (0.74) |
| EXP | Female (n = 10) | 1.72 (0.13) | 1.55 (0.11) | 5.59 (0.24) | 5.42 (0.23) | 0.38 (0.17) | 0.52 (0.24) | 0.39 (0.06) | 0.50 (0.11) | 0.97 (0.40) | 1.12 (0.68) |
| EXP | Male (n = 19) | 1.80 (0.14) | 1.60 (0.11) | 5.75 (0.14) | 5.56 (0.16) | 0.31 (0.08) | 0.45 (0.11) | 0.37 (0.10) | 0.45 (0.11) | 0.91 (0.38) | 1.07 (0.45) |

Note. EXP = experimental; CON = control.
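
The within-gender pattern in Table 2 can be checked with a few lines of arithmetic. The following is a minimal sketch that only re-uses the tabulated cell means for the Wave I/Wave V ratio; the n-weighted pooling and the helper names (`pooled_mean`, `group_gap`) are illustrative assumptions, and the calculation is descriptive rather than a substitute for the RMANOVA reported in the text.

```python
# Mean Wave I/Wave V amplitude ratios copied from Table 2.
# Keys: (group, gender) -> (n, ratio at 95 dB ppeSPL, ratio at 105 dB ppeSPL)
TABLE2_RATIO = {
    ("CON", "F"): (18, 1.18, 1.44),
    ("CON", "M"): (10, 1.17, 1.35),
    ("EXP", "F"): (10, 0.97, 1.12),
    ("EXP", "M"): (19, 0.91, 1.07),
}


def pooled_mean(group, level_index):
    """n-weighted mean ratio for one group, pooled over gender.

    level_index is 0 for 95 dB and 1 for 105 dB.
    Weighting by cell size is an assumption made for this illustration.
    """
    cells = [v for (g, _), v in TABLE2_RATIO.items() if g == group]
    total_n = sum(c[0] for c in cells)
    return sum(c[0] * c[1 + level_index] for c in cells) / total_n


def group_gap(gender, level_index):
    """CON minus EXP mean ratio within a single gender."""
    con = TABLE2_RATIO[("CON", gender)][1 + level_index]
    exp = TABLE2_RATIO[("EXP", gender)][1 + level_index]
    return con - exp


if __name__ == "__main__":
    for i, level in enumerate((95, 105)):
        print(
            f"{level} dB: pooled CON = {pooled_mean('CON', i):.2f}, "
            f"pooled EXP = {pooled_mean('EXP', i):.2f}, "
            f"gap (F) = {group_gap('F', i):.2f}, gap (M) = {group_gap('M', i):.2f}"
        )
```

With the tabulated means, the CON minus EXP difference in the Wave I/Wave V ratio is positive within both females and males at both presentation levels, which is consistent with the argument that the group effect does not simply track the gender imbalance.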

The second reason that gender differences are unlikely to underlie the group effect of Wave I/Wave V amplitude ratio is that gender is also associated with differences in ABR wave latency (Nikiforidis et al., 1993; Trune et al., 1988). This was also observed in the present data set where a RMANOVA on the wave latencies, having a between-subjects factor of gender and two within-subjects factors of wave number and presentation level, indicated a significant effect of gender (F(1, 55) = 12.83; p = .001), wave number (F(1, 55) = 24522.21; p < .001), and presentation level (F(1, 55) = 446.82; p < .001), but no interaction between any of these factors (F(1, 55) ranging from 1.86 to 0.31; p ranging from .582 to .293). The fact that the females had uniformly shorter latencies than the males, however, did not translate to a listener group effect; that is, there was no significant difference between the EXP and CON groups for the latencies of Wave I or V at either presentation level. This was confirmed by a RMANOVA having two within-subjects factors of wave number and presentation level and one between-subjects factor of group. The pertinent result is that the effect of group was not significant (F(1, 55) = 0.248; p = .621), nor were any of the two- or three-way interactions of group with wave number and presentation level (F(1, 55) ranging from 1.349 to 0.00; p ranging from .251 to .99). Thus, in summary, it is unlikely that the difference in Wave I/Wave V amplitude ratio between the EXP and CON groups reflected a gender effect.

Assuming that cochlear synaptopathy does exist in humans, and that the EXP and CON groups were successfully differentiated on the basis of this condition, a second issue that must be considered is whether some other participant characteristics counteracted any effects of cochlear synaptopathy. One such possible characteristic is musical training or experience. There were more self-reported musicians in the EXP group than the CON group—indeed several participants with the most extensive loud-music exposure were members of rock bands who performed regularly. In addition, by self-report more EXP participants listened to music regularly than did CON participants. There are several studies indicating that auditory performance is better in musicians than nonmusicians (Oxenham, Fligor, Mason, & Kidd, 2003; Swaminathan et al., 2015). Thus, it is possible that any deficits in suprathreshold behavioral performance that resulted from cochlear synaptopathy in the EXP participants were offset by benefits associated with musical training or experience. Indeed, this possibility was specifically raised in a report from Australia studying musicians and HHL (Yeend et al., 2016).

A final factor that must be considered is that, irrespective of cochlear synaptopathy status, the behavioral measures selected to test for this in the present study were insensitive to the condition. Whereas this possibility can never be entirely dismissed, the measures were selected to rely on the cues thought to be undermined by synaptopathy and carefully designed to test the hypothetical sequelae of cochlear synaptopathy. That is, the depletion of auditory nerve fibers—particularly those with low-spontaneous rates and high thresholds—is hypothesized to play a prominent role in information coding for complex sounds at high levels, the conditions under which low-threshold fibers are saturated (Furman et al., 2013). In addition, effects of noise exposure are likely to be observed first at the higher frequencies. The battery of behavioral tasks employed here arguably relied on processing complex sounds at high levels and therefore should have been susceptible to effects of cochlear synaptopathy, particularly as both a lower and a higher frequency region were probed. However, it is possible that effects associated with the depletion of low-spontaneous rate, high-threshold fibers were compensated for in these tasks by contributions from unaffected high-spontaneous rate, low-threshold fibers recruited through upward spread of excitation. That is, the absence of high-pass maskers to restrict off-frequency listening might have enabled high-spontaneous rate, low-threshold fibers to convey sufficient information in the EXP group. It is relevant to note, however, that simple modeling work by Oxenham (2016) has demonstrated that behavioral tasks involving suprathreshold discrimination may be resilient to relatively massive loss of auditory nerve fibers.
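
One way to see why suprathreshold discrimination might be resilient to fiber loss is a simple signal-detection calculation. The sketch below assumes, purely for illustration, that auditory nerve fibers contribute independent and equally informative samples, so that sensitivity (d') grows with the square root of the number of fibers. This assumption and the function name `dprime_factor` are illustrative and are not claimed to reproduce the cited modeling work.

```python
import math


def dprime_factor(fraction_surviving):
    """Relative d' when only `fraction_surviving` of the fibers remain.

    Assumes independent, equally informative fibers, so that d' is
    proportional to sqrt(N). This is an illustrative simplification.
    """
    if not 0.0 < fraction_surviving <= 1.0:
        raise ValueError("fraction_surviving must be in (0, 1]")
    return math.sqrt(fraction_surviving)


if __name__ == "__main__":
    for fraction in (1.0, 0.5, 0.1):
        print(f"{fraction:.0%} of fibers surviving -> d' x {dprime_factor(fraction):.2f}")
```

Under this assumption, losing half of the fibers reduces d' by a factor of about 0.71, and even a 90% loss leaves roughly a third of the original sensitivity.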

In summary, this study demonstrated that a history of loud music exposure can lead to a profile of peripheral auditory function that is consistent with an interpretation of cochlear synaptopathy in humans. However, the absence of any widespread behavioral effects in suprathreshold sound processing suggests that the perceptual sequelae of this condition in humans are either too diffuse or too inconsequential to permit a simple differential diagnosis. A meaningful and sensitive assay of HHL associated with cochlear synaptopathy therefore remains an elusive goal.

Acknowledgments

The authors discussed the results and implications and commented on the manuscript at all stages. The assistance of Joseph W. Hall IV and JP Hyzy in data collection is gratefully acknowledged.

Note

Note also that the Wave V/Wave I ratio has been used as a metric of central gain in electrophysiological studies of tinnitus (Gu, Herrmann, Levine, & Melcher, 2012).

Authors' Note

Joseph W. Hall III is an emeritus professor at University of North Carolina at Chapel Hill, NC, USA.

Author Contributions

All authors contributed equally to this work. J. H. G., E. B., and J. W. H. formulated the investigation and designed the experiments; J. H. G. implemented the experiment and along with E. B. analyzed and interpreted the results.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the Hearing Industry Research Consortium (IRC), with additional funding from the NIH NIDCD R01DC001507 (JHG).

References

- Badri R., Siegel J. H., Wright B. A. (2011) Auditory filter shapes and high-frequency hearing in adults who have impaired speech in noise performance despite clinically normal audiograms. Journal of the Acoustical Society of America 129(2): 852–863. doi: 10.1121/1.3523476.

- Bench J., Kowal A., Bamford J. (1979) The BKB (Bamford-Kowal-Bench) sentence lists for partially-hearing children. British Journal of Audiology 13(3): 108–112.

- Bernstein L. R., Trahiotis C. (2016) Behavioral manifestations of audiometrically-defined “slight” or “hidden” hearing loss revealed by measures of binaural detection. Journal of the Acoustical Society of America 140(5): 3540–3548. doi: 10.1121/1.4966113.

- Bharadwaj H. M., Masud S., Mehraei G., Verhulst S., Shinn-Cunningham B. G. (2015) Individual differences reveal correlates of hidden hearing deficits. Journal of Neuroscience 35(5): 2161–2172. doi: 10.1523/JNEUROSCI.3915-14.2015.

- Bharadwaj H. M., Verhulst S., Shaheen L., Liberman M. C., Shinn-Cunningham B. G. (2014) Cochlear neuropathy and the coding of supra-threshold sound. Frontiers in Systems Neuroscience 8(26): 1–18. doi: 10.3389/fnsys.2014.00026.

- Boege P., Janssen T. (2002) Pure-tone threshold estimation from extrapolated distortion product otoacoustic emission I/O-functions in normal and cochlear hearing loss ears. Journal of the Acoustical Society of America 111(4): 1810–1818.

- Bramhall N. F., Konrad-Martin D., McMillan G. P., Griest S. E. (2017) Auditory brainstem response altered in humans with noise exposure despite normal outer hair cell function. Ear and Hearing 38(1): e1–e12. doi: 10.1097/AUD.0000000000000370.

- Costalupes J. A. (1985) Representation of tones in noise in the responses of auditory nerve fibers in cats. I. Comparison with detection thresholds. Journal of Neuroscience 5(12): 3261–3269.

- Doerfler L., Stewart K. (1946) Malingering and psychogenic deafness. Journal of Speech Disorders 11(3): 181–186.

- Fausti S. A., Wilmington D. J., Helt P. V., Helt W. J., Konrad-Martin D. (2005) Hearing health and care: The need for improved hearing loss prevention and hearing conservation practices. Journal of Rehabilitation Research and Development 42(4 Suppl 2): 45–62.

- Furman A. C., Kujawa S. G., Liberman M. C. (2013) Noise-induced cochlear neuropathy is selective for fibers with low spontaneous rates. Journal of Neurophysiology 110(3): 577–586. doi: 10.1152/jn.00164.2013.

- Grose J. H., Mamo S. K. (2010) Processing of temporal fine structure as a function of age. Ear and Hearing 31: 755–760. doi: 10.1097/AUD.0b013e3181e627e7.

- Gu J. W., Herrmann B. S., Levine R. A., Melcher J. R. (2012) Brainstem auditory evoked potentials suggest a role for the ventral cochlear nucleus in tinnitus. Journal of the Association for Research in Otolaryngology 13(6): 819–833. doi: 10.1007/s10162-012-0344-1.

- Guest H., Munro K. J., Prendergast G., Howe S., Plack C. J. (2017) Tinnitus with a normal audiogram: Relation to noise exposure but no evidence for cochlear synaptopathy. Hearing Research 344: 265–274. doi: 10.1016/j.heares.2016.12.002.

- Herdman A. T., Lins O., Van Roon P., Stapells D. R., Scherg M., Picton T. W. (2002) Intracerebral sources of human auditory steady-state responses. Brain Topography 15(2): 69–86.

- Hinchcliffe R. (1992) King-Kopetzky syndrome: An auditory stress disorder? Journal of Audiological Medicine 1: 89–98.

- Jensen J. B., Lysaght A. C., Liberman M. C., Qvortrup K., Stankovic K. M. (2015) Immediate and delayed cochlear neuropathy after noise exposure in pubescent mice. PLoS One 10(5): e0125160. doi: 10.1371/journal.pone.0125160.

- Jerger J., Hall J. (1980) Effects of age and sex on auditory brainstem response. Archives of Otolaryngology 106(7): 387–391.

- King K., Stephens D. (1992) Auditory and psychological factors in “auditory disability with normal hearing”. Scandinavian Audiology 21(2): 109–114.

- King P. F. (1954) Psychogenic deafness. Journal of Laryngology and Otology 68(9): 623–635.

- Kopetzky S. J. (1948) Findings indicating rationally applied therapy—Part X. Deafness, tinnitus, and vertigo, New York, NY: Nelson, pp. 279–285.

- Kujawa S. G., Liberman M. C. (2009) Adding insult to injury: Cochlear nerve degeneration after “temporary” noise-induced hearing loss. Journal of Neuroscience 29(45): 14077–14085. doi: 10.1523/JNEUROSCI.2845-09.2009.

- Kujawa S. G., Liberman M. C. (2015) Synaptopathy in the noise-exposed and aging cochlea: Primary neural degeneration in acquired sensorineural hearing loss. Hearing Research 330(Pt B): 191–199. doi: 10.1016/j.heares.2015.02.009.

- Kumar U. A., Ameenudin S., Sangamanatha A. V. (2012) Temporal and speech processing skills in normal hearing individuals exposed to occupational noise. Noise Health 14(58): 100–105. doi: 10.4103/1463-1741.97252.

- Kummer P., Janssen T., Arnold W. (1998) The level and growth behavior of the 2 f1-f2 distortion product otoacoustic emission and its relationship to auditory sensitivity in normal hearing and cochlear hearing loss. Journal of the Acoustical Society of America 103(6): 3431–3444.

- Le Prell C. G., Lobarinas E. (2016) Lack of correlation between recreational noise history and performance on the Words-In-Noise (WIN) Test among normal hearing young adults. Paper presented at the Association for Research in Otolaryngology 39th MidWinter Meeting, San Diego, CA.

- Liberman L. D., Suzuki J., Liberman M. C. (2015) Dynamics of cochlear synaptopathy after acoustic overexposure. Journal of the Association for Research in Otolaryngology 16(2): 205–219. doi: 10.1007/s10162-015-0510-3.

- Liberman M. C., Epstein M. J., Cleveland S. S., Wang H., Maison S. F. (2016) Toward a differential diagnosis of hidden hearing loss in humans. PLoS One 11(9): e0162726. doi: 10.1371/journal.pone.0162726.

- Lin H. W., Furman A. C., Kujawa S. G., Liberman M. C. (2011) Primary neural degeneration in the Guinea pig cochlea after reversible noise-induced threshold shift. Journal of the Association for Research in Otolaryngology 12(5): 605–616. doi: 10.1007/s10162-011-0277-0.

- Mehraei G., Hickox A. E., Bharadwaj H. M., Goldberg H., Verhulst S., Liberman M. C., Shinn-Cunningham B. G. (2016) Auditory brainstem response latency in noise as a marker of cochlear synaptopathy. Journal of Neuroscience 36(13): 3755–3764. doi: 10.1523/JNEUROSCI.4460-15.2016.

- Moore B. C. J., Glasberg B. R. (1983) Suggested formulae for calculating auditory filter bandwidths and excitation patterns. Journal of the Acoustical Society of America 74: 750–753.