Significance

Neurons and networks in the cerebral cortex must operate reliably despite multiple sources of noise. Using a mathematical analysis and model simulations, we show that noise robustness requires synaptic connections to be in a balanced regime in which excitation and inhibition are strong and largely cancel each other. Our theory predicts an optimal ratio for the number of excitatory and inhibitory synapses that depends on the statistics of afferent activity and is consistent with data. This distinct form of excitation–inhibition balance is essential for robust neuronal selectivity and crucial for stability in associative memory networks, and it emerges automatically from learning in the presence of noise.

Keywords: E/I balance, synaptic learning, associative memory

Abstract

Neurons and networks in the cerebral cortex must operate reliably despite multiple sources of noise. To evaluate the impact of both input and output noise, we determine the robustness of single-neuron stimulus selective responses, as well as the robustness of attractor states of networks of neurons performing memory tasks. We find that robustness to output noise requires synaptic connections to be in a balanced regime in which excitation and inhibition are strong and largely cancel each other. We evaluate the conditions required for this regime to exist and determine the properties of networks operating within it. A plausible synaptic plasticity rule for learning that balances weight configurations is presented. Our theory predicts an optimal ratio of the number of excitatory and inhibitory synapses for maximizing the encoding capacity of balanced networks for given statistics of afferent activations. Previous work has shown that balanced networks amplify spatiotemporal variability and account for observed asynchronous irregular states. Here we present a distinct type of balanced network that amplifies small changes in the impinging signals and emerges automatically from learning to perform neuronal and network functions robustly.

The response properties of neurons in many brain areas, including cerebral cortex, are shaped by the balance between coactivated inhibitory and excitatory synaptic inputs (1–5) (for a review see ref. 6). Excitation–inhibition balance may take different forms in different brain areas or species, and it likely arises from multiple mechanisms. Theoretical work has shown that, when externally driven, circuits of recurrently connected excitatory and inhibitory neurons with strong synapses settle rapidly into a state in which population activity levels ensure a balance of excitatory and inhibitory currents (7, 8). Experimental evidence in some systems indicates that synaptic plasticity plays a role in maintaining this balance (9–12). Here we ask what computational benefits excitation–inhibition balance confers by comparing balanced and unbalanced neuronal circuits. Networks in the balanced state have been shown to generate fast and linear responses to changing stimuli (7, 8, 13, 14). However, the advantages and disadvantages of excitation–inhibition balance for general information processing have not been elucidated [except in special architectures (15–17)]. Here we compare the computational properties of neurons operating with and without excitation–inhibition balance and present a constructive computational reason for strong, balanced excitation and inhibition: It is needed for neurons to generate selective responses that are robust to output noise, and it is crucial for the stability of memory states in associative memory networks. The distinct balanced networks we present here emerge naturally and automatically from synaptic learning that endows neurons and networks with robust functionality.

We begin our analysis by considering a single neuron receiving input from a large number of afferents. We characterize its basic task as discriminating between two kinds of input activation patterns: those to which it should respond by firing action potentials, and those that should leave it quiescent. Neurons implement this form of response selectivity by applying a threshold to the sum of inputs from their presynaptic afferents. The most parsimonious model that captures these basic elements is the binary model neuron (18, 19), which has been studied extensively (20–23) and used to model a variety of neuronal circuits (24–28). Our work analyzes the implications of four fundamental neuronal features that have not previously been considered together: (i) nonnegative input, corresponding to the fact that neuronal activity is characterized by firing rates; (ii) a membrane potential threshold for neuronal firing above the resting potential (and hence a silent resting state); (iii) sign-constrained and bounded synaptic weights, meaning that individual synapses are either excitatory or inhibitory and the total synaptic strength is limited; and (iv) two sources of noise, input and output noise, representing fluctuations arising from variable stimuli and inputs and from processes within the neuron. As will be shown, these features imply that, when the number of input afferents is large, synaptic input must be strong and balanced if the neuron’s response selectivity is to be robust. We extend our analysis to recurrently connected networks storing long-term memory and find that similar balanced synaptic patterns are required for the stability of the memory states against noise. In addition, maximizing the performance of neurons and networks in the balanced state yields a prediction for the optimal ratio of excitatory to inhibitory inputs in cortical circuits.

Results

Our model neuron is a binary unit that is either active or quiescent, depending on whether its membrane potential is above or below a firing threshold. The potential, labeled $V$, is a weighted sum of inputs $x_i$, $i = 1, \ldots, N$. These inputs represent afferent firing rates and are therefore nonnegative:

$$V = \sum_{i=1}^{N} w_i x_i + V_{\mathrm{rest}} = \mathbf{w}\cdot\mathbf{x} + V_{\mathrm{rest}}, \tag{1}$$

where $V_{\mathrm{rest}}$ is the resting potential of the neuron and $\mathbf{x}$ and $\mathbf{w}$ are $N$-component vectors with elements $x_i$ and $w_i$, respectively. The weight $w_i$ represents the synaptic efficacy of the $i$th input. If $V > \theta$, the neuron is in an active state; otherwise, it is in a quiescent state. To implement the segregation of excitatory and inhibitory inputs, each weight is constrained so that $w_i \ge 0$ if input $i$ is excitatory and $w_i \le 0$ if input $i$ is inhibitory.
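Eq. 1 and the sign constraints translate directly into code. The following is a minimal Python sketch, not code from this work. The function names are hypothetical. The defaults $\theta = 1$ and $V_{\mathrm{rest}} = 0$ are an assumption that matches measuring weights in units of $\theta - V_{\mathrm{rest}}$, so that the threshold equals 1.

```python
def membrane_potential(w, x, v_rest=0.0):
    """Eq. 1: V = w . x + V_rest, a weighted sum of nonnegative input rates."""
    if any(xi < 0 for xi in x):
        raise ValueError("inputs are firing rates and must be nonnegative")
    return sum(wi * xi for wi, xi in zip(w, x)) + v_rest


def is_active(w, x, theta=1.0, v_rest=0.0):
    """Binary output: True (active) if V exceeds theta, else False (quiescent)."""
    return membrane_potential(w, x, v_rest) > theta


def respects_sign_constraints(w, is_excitatory):
    """Excitatory weights must be >= 0 and inhibitory weights <= 0."""
    return all(wi >= 0 if exc else wi <= 0 for wi, exc in zip(w, is_excitatory))


# Hypothetical example: two excitatory synapses and one inhibitory synapse.
w = [0.8, 0.6, -0.5]
exc = [True, True, False]
x = [1.0, 1.0, 0.5]
print(membrane_potential(w, x))           # 0.8 + 0.6 - 0.25, about 1.15
print(is_active(w, x))                    # True
print(respects_sign_constraints(w, exc))  # True
```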

To function properly in a circuit, a neuron must respond selectively to an appropriate set of inputs. To characterize selectivity, we define a set of $P$ exemplar input vectors $\mathbf{x}^{\mu}$, with $\mu = 1, \ldots, P$, and randomly assign them to two classes, denoted as “plus” and “minus.” The neuron must respond to inputs belonging to the plus class by firing (active state) and to the minus class by remaining quiescent. This means that the neuron is acting as a perceptron (18–22, 25, 27, 29). We assume the input activations, $x_i^{\mu}$, are drawn independently and identically from a distribution with nonnegative means, $\bar{x}_i$, and covariance matrix, $C$. When $N$ is large, higher moments of the distribution of $\mathbf{x}$ have negligible effect. For simplicity we assume that the stimulus-averaged activities are the same for all input neurons within a population, so that $\bar{x}_i = \bar{x}_E$ for excitatory and $\bar{x}_i = \bar{x}_I$ for inhibitory inputs. We also assume that $C$ is diagonal with equal variances within a population, $\sigma_E^2$ and $\sigma_I^2$. Note that synaptic weights are in units of membrane potential over input activity level (firing rate) and hence will be measured in units of $\theta - V_{\mathrm{rest}}$ per unit of input activity.
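One way to generate such exemplar patterns for experimentation is sketched below. The text fixes only the means and variances of the inputs. The gamma distribution used here is an assumption, with shape $1/\mathrm{CV}^2$ and scale $\bar{x}\,\mathrm{CV}^2$. It keeps rates nonnegative while matching a prescribed mean and coefficient of variation. `sample_patterns` is a hypothetical helper.

```python
import random


def sample_patterns(n_exc, n_inh, n_patterns, mean_e, cv_e, mean_i, cv_i, seed=0):
    """Draw P patterns of i.i.d. nonnegative rates and an equal plus/minus split.

    Assumption (not from the text): each rate is gamma distributed with
    shape 1/CV**2 and scale mean*CV**2, giving the requested mean and CV.
    """
    rng = random.Random(seed)

    def draw(mean, cv):
        return rng.gammavariate(1.0 / cv**2, mean * cv**2)

    patterns = []
    for _ in range(n_patterns):
        x = [draw(mean_e, cv_e) for _ in range(n_exc)]   # excitatory afferents first
        x += [draw(mean_i, cv_i) for _ in range(n_inh)]  # then inhibitory afferents
        patterns.append(x)
    # Random assignment to "plus" (+1) and "minus" (-1) classes of equal size.
    labels = [1] * (n_patterns // 2) + [-1] * (n_patterns - n_patterns // 2)
    rng.shuffle(labels)
    return patterns, labels


patterns, labels = sample_patterns(80, 20, 50, mean_e=1.0, cv_e=0.5, mean_i=2.0, cv_i=0.3)
print(len(patterns), len(patterns[0]))  # 50 100
```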

We call weight vectors that correctly categorize all exemplar input patterns $\mathbf{x}^{\mu}$, $\mu = 1, \ldots, P$, solutions of the categorization task presented to the neuron. Before describing the properties of the solutions in detail, we outline a broad distinction between two types of possible solutions.

One type is characterized by weak synapses, i.e., individual synaptic weights that are inversely proportional to the total number of synaptic inputs, $|w_i| \sim 1/N$. Weights weaker than $1/N$ will not enable the neuron to cross the threshold. For this solution type, the total excitatory and inhibitory parts of the membrane potential are of the same order as the neuron’s threshold. An alternative scenario is a solution in which individual synaptic weights are relatively strong, $|w_i| \sim 1/\sqrt{N}$. In this case, both the total excitatory and inhibitory parts of the potential are, individually, much greater than the threshold. However, they make approximately equal contributions, so that excitation and inhibition tend to cancel and the mean $V$ is close to threshold. We call the first type of solution unbalanced and the second type balanced.

Importantly, both balanced and unbalanced solutions solve the categorization task with the same value of $\theta$. The two solution types are therefore not related to each other by a global scaling of the weights but represent different patterns of $\mathbf{w}$. Note that the norm of the weight vector, $\|\mathbf{w}\| = \big(\sum_i w_i^2\big)^{1/2}$, serves to distinguish the two types of solutions. This norm is of order $1/\sqrt{N}$ for unbalanced solutions and of order 1 in the balanced case. Weights with norms larger than $O(1)$ lead to membrane potential values that are much larger in magnitude than the neuron’s threshold. For biological neurons, postsynaptic potentials of such magnitude can result in very high, unreasonable firing rates (although see ref. 30). We therefore impose an upper bound on the weight norm, $\|\mathbf{w}\| \le \Gamma$, where $\Gamma$ is of order 1.
We now argue that the differences between unbalanced and balanced solutions have important consequences for the way the system copes with noise.
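The scaling argument above can be checked numerically. The sketch below is illustrative only. The helper names (`ei_parts`, `norm`, `example_weights`) are hypothetical. All inputs are set to the same value, 1, for simplicity. The weights are hand-built rather than learned, chosen so that the net input equals the threshold 1. Weak weights ($|w_i| \sim 1/N$) give excitatory and inhibitory parts of order 1 and a norm that shrinks as $1/\sqrt{N}$. Strong weights ($|w_i| \sim 1/\sqrt{N}$) give parts that grow like $\sqrt{N}$ and cancel, while the norm stays of order 1.

```python
import math


def ei_parts(w, x, is_exc):
    """Split w . x into its excitatory part, inhibitory part, and net sum."""
    e = sum(wi * xi for wi, xi, ex in zip(w, x, is_exc) if ex)
    i = sum(wi * xi for wi, xi, ex in zip(w, x, is_exc) if not ex)
    return e, i, e + i


def norm(w):
    return math.sqrt(sum(wi * wi for wi in w))


def example_weights(n, f=0.8, balanced=True, xbar=1.0):
    """Hypothetical weights whose net input at x_i = xbar equals the threshold 1."""
    n_e = round(f * n)
    n_i = n - n_e
    if balanced:
        # Strong synapses, |w_i| ~ 1/sqrt(N): E and I parts are each O(sqrt(N)).
        w_e = 1.0 / math.sqrt(n)
        w_i = -(n_e * w_e * xbar - 1.0) / (n_i * xbar)
    else:
        # Weak synapses, |w_i| ~ 1/N: E and I parts are each O(1).
        w_e = 2.0 / (n_e * xbar)
        w_i = -1.0 / (n_i * xbar)
    return [w_e] * n_e + [w_i] * n_i, [True] * n_e + [False] * n_i


for n in (100, 10_000):
    for balanced in (False, True):
        w, exc = example_weights(n, balanced=balanced)
        e, i, net = ei_parts(w, [1.0] * n, exc)
        label = "balanced" if balanced else "unbalanced"
        print(f"N={n:>6} {label:>10}: E={e:7.2f} I={i:7.2f} net={net:.2f} |w|={norm(w):.3f}")
```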

As mentioned above, neurons in the central nervous system are subject to multiple sources of noise, and their performance must be robust to its effects. We distinguish two biologically relevant types of noise:

- Input noise results from fluctuations of the stimuli and of the sensory processes that generate the stimulus-related input $\mathbf{x}$.
- Output noise arises from afferents unrelated to a particular task or from biophysical processes internal to the neuron. These include fluctuations in the effective threshold due to spiking history and adaptation (31–33) (for theoretical modeling see ref. 34).

Both sources of noise result in trial-by-trial fluctuations of the membrane potential. For a robust solution, the probability of changing the state of the output neuron relative to the noise-free condition must be low. The two sources of noise differ in their dependence on the magnitude of the synaptic weights. Because input noise is filtered through the same set of synaptic weights as the signal, its effect on the membrane potential is sensitive to the magnitude of those weights. Specifically, suppose the trial-to-trial variability of each input is independent across inputs and characterized by SD $\sigma_{\mathrm{in}}$. Then the fluctuations it generates in the membrane potential have SD $\sigma_{\mathrm{in}}\|\mathbf{w}\|$ (Fig. 1, Top Left and Top Right). The effect of output noise, on the other hand, is independent of the synaptic weights $\mathbf{w}$. Output noise characterized by SD $\sigma_{\mathrm{out}}$ induces membrane potential fluctuations with the same SD for both types of solutions (Fig. 1, Bottom Left and Bottom Right).
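The contrasting weight dependence of the two noise sources can be checked with a short Monte Carlo sketch. It assumes independent Gaussian input noise of SD $\sigma_{\mathrm{in}}$ on every input and a single additive Gaussian output-noise term of SD $\sigma_{\mathrm{out}}$. The helper name and all parameter values are hypothetical.

```python
import math
import random
import statistics


def noise_induced_sd(w, sigma_in, sigma_out, n_trials=5000, seed=1):
    """Monte Carlo SD of the fluctuations in V caused by each noise source.

    Assumptions: independent Gaussian input noise (SD sigma_in) on every input;
    one additive Gaussian output-noise term (SD sigma_out) on V.
    Returns (empirical input-noise SD, predicted sigma_in * ||w||,
             empirical output-noise SD, predicted sigma_out).
    """
    rng = random.Random(seed)
    norm_w = math.sqrt(sum(wi * wi for wi in w))
    dv_in = [sum(wi * rng.gauss(0.0, sigma_in) for wi in w) for _ in range(n_trials)]
    dv_out = [rng.gauss(0.0, sigma_out) for _ in range(n_trials)]  # ignores w entirely
    return (statistics.pstdev(dv_in), sigma_in * norm_w,
            statistics.pstdev(dv_out), sigma_out)


n = 100
weak = [1.0 / n] * n               # ||w|| = 1/sqrt(N) = 0.1
strong = [1.0 / math.sqrt(n)] * n  # ||w|| = 1
for name, w in (("weak", weak), ("strong", strong)):
    print(name, [round(v, 3) for v in noise_induced_sd(w, sigma_in=0.5, sigma_out=0.5)])
```

With weak weights, the input-noise term shrinks in proportion to $\|\mathbf{w}\|$, whereas the output-noise term does not.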

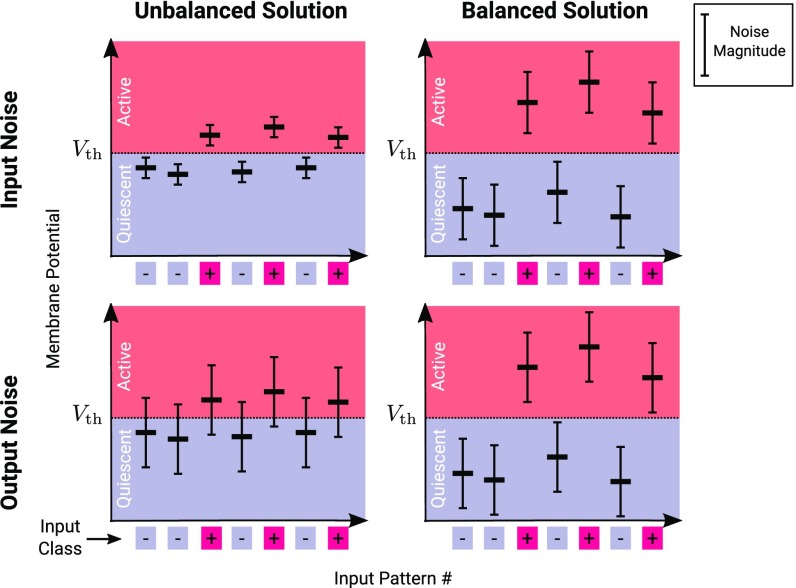

Fig. 1.

Only balanced solutions can be robust to both input and output noise. Each panel depicts membrane potentials resulting from different input patterns in a classification task. Weights are unbalanced [$\|\mathbf{w}\| = O(1/\sqrt{N})$, Top Left and Bottom Left] or balanced [$\|\mathbf{w}\| = O(1)$, Top Right and Bottom Right]. The neuron is in an active state only if the membrane potential is greater than the threshold $\theta$. The input pattern class (plus or minus) is specified by the squares underneath the horizontal axis. Each input pattern determines a membrane potential $V$ (mean, horizontal bars). This potential fluctuates from one presentation to another due to input noise (Top Left and Top Right) and output noise (Bottom Left and Bottom Right). Vertical bars depict the magnitude of the noise in each case. The variability of the mean $V$ across input patterns (which is the signal differentiating input pattern classes) is proportional to $\|\mathbf{w}\|$. As a result, the mean $V$s for unbalanced solutions (Top Left and Bottom Left) cluster close to the threshold [difference from threshold $O(1/\sqrt{N})$]. For balanced solutions (Top Right and Bottom Right), the mean $V$s have a larger spread [potential difference $O(1)$]. Input noise (fluctuations of $\mathbf{x}$, Top Left and Top Right) produces membrane potential fluctuations with SD proportional to $\|\mathbf{w}\|$. This SD is $O(1/\sqrt{N})$ for unbalanced solutions (Top Left) and $O(1)$ for balanced solutions (Top Right). Output noise (Bottom Left and Bottom Right) produces membrane potential fluctuations that are independent of $\|\mathbf{w}\|$, so they are of the same magnitude for both solution types. Thus, while both balanced and unbalanced solutions can be robust to input noise, only balanced solutions can also be robust to substantial output noise.

We can now appreciate the basis for the difference in the noise robustness of the two types of solutions. For unbalanced solutions, the difference between the potentials induced by typical plus and minus noise-free inputs (the signal) is of order $1/\sqrt{N}$ (Fig. 1, Top Left and Bottom Left). The fluctuations induced by input noise are of this same order (Fig. 1, Top Left). Output noise, however, yields membrane potential fluctuations of order 1, which is much larger than the magnitude of the weak signal (Fig. 1, Bottom Left). In contrast, for balanced solutions, the signal differentiating plus and minus patterns is of order 1, the same order as the fluctuations induced by both types of noise (Fig. 1, Top Right and Bottom Right). Thus, we are led to the important observation that the balanced solution provides the only hope for producing selectivity that is robust against both types of noise. However, there is no guarantee that robust, balanced solutions exist or that they can be found and maintained in a manner that can be implemented by a biological system. Key questions, therefore, are, Under what conditions does a balanced solution to the selectivity task exist? And what are, in detail, its robustness properties? Below, we derive conditions for the existence of a balanced solution, analyze its properties, and study the implications for single-neuron and network computation. We show that, subject to a small reduction of the total information stored in the network, robust and balanced solutions exist and can emerge naturally when learning occurs in the presence of output noise.
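To make the comparison concrete, assume that output noise is Gaussian with SD $\sigma_{\mathrm{out}}$; this is an illustrative assumption, not a result from this work. A pattern whose noise-free potential lies a distance $d$ from threshold is then misclassified with probability $\Phi(-d/\sigma_{\mathrm{out}})$, where $\Phi$ is the standard normal cumulative distribution function. The sketch evaluates this for a margin of order $1/\sqrt{N}$ and for a hypothetical order-1 margin of 0.3.

```python
import math


def flip_probability(distance_to_threshold, sigma_out):
    """P(Gaussian output noise with SD sigma_out pushes V across the threshold).

    P = Phi(-d / sigma) = 0.5 * erfc(d / (sigma * sqrt(2))).
    """
    return 0.5 * math.erfc(distance_to_threshold / (sigma_out * math.sqrt(2.0)))


n = 10_000
sigma_out = 0.1
p_unbalanced = flip_probability(1.0 / math.sqrt(n), sigma_out)  # margin 0.01 ~ 1/sqrt(N)
p_balanced = flip_probability(0.3, sigma_out)                   # hypothetical O(1) margin
print(p_unbalanced, p_balanced)
```

The unbalanced margin is flipped almost half the time, while the order-1 margin is flipped in roughly 0.1% of trials.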

Balanced and Unbalanced Solutions.

We begin by presenting the results of an analytic approach (20–22) for determining existence conditions and analyzing properties of weights that generate a specified selectivity, independent of the particular method or learning algorithm used to find the weights (SI Replica Theory for Sign- and Norm-Constrained Perceptron). We validate the theoretical results by using numerical methods that can determine the existence of such weights and find them if they exist (SI Materials and Methods).
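The numerical method described in the SI is not reproduced here. As a rough illustration of searching numerically for sign-constrained weights, the following sketch uses a different and simpler technique: a projected perceptron heuristic. It applies ordinary perceptron updates and then clips any weight that violates its sign constraint. The function name is hypothetical, and unlike the SI method, this heuristic cannot certify that no solution exists when it fails.

```python
import random


def train_sign_constrained(patterns, labels, is_exc, lr=0.01, epochs=200, seed=0):
    """Projected perceptron heuristic for sign-constrained weights (hypothetical helper).

    labels[mu] is +1 (plus, should fire) or -1 (minus, should stay quiescent).
    The threshold is 1, i.e. weights are in units of theta - V_rest.
    Returns (weights, number of misclassified patterns after training).
    """
    rng = random.Random(seed)
    w = [0.0] * len(is_exc)
    order = list(range(len(patterns)))

    def dot(a, b):
        return sum(ai * bi for ai, bi in zip(a, b))

    for _ in range(epochs):
        rng.shuffle(order)
        updates = 0
        for mu in order:
            x, y = patterns[mu], labels[mu]
            if y * (dot(w, x) - 1.0) <= 0.0:  # on the wrong side of (or at) threshold
                updates += 1
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                # Projection: excitatory weights >= 0, inhibitory weights <= 0.
                w = [max(wi, 0.0) if exc else min(wi, 0.0) for wi, exc in zip(w, is_exc)]
        if updates == 0:
            break
    errors = sum(1 for x, y in zip(patterns, labels) if y * (dot(w, x) - 1.0) <= 0.0)
    return w, errors


# Toy task: fire for x = [1, 1], stay quiescent for x = [0.2, 1]; input 0 is E, input 1 is I.
w, errors = train_sign_constrained([[1.0, 1.0], [0.2, 1.0]], [1, -1], [True, False])
print(w, errors)
```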

When the number of patterns $P$ is too large, solutions may not exist. The maximal value of $P$ that permits solutions is proportional to the number of synapses, $N$, so a useful measure is the ratio $\alpha = P/N$, which we call the load. The capacity, denoted as $\alpha_c$, is the maximal load that permits solutions to the task. The capacity depends on the relative number of plus and minus input patterns. For simplicity we assume throughout that the two classes are equal in size (but see SI Capacity for Noneven Split of Plus and Minus Patterns). A classic result for the perceptron with weights that are not sign constrained is that the capacity is $\alpha_c = 2$ (20, 35, 36). For the “constrained perceptron” considered here, we find that $\alpha_c$ also depends on the fraction of excitatory afferents, denoted by $f$. This fraction is an important architectural feature of neuronal circuits and varies across brain systems. For $f = 0$, namely a purely inhibitory circuit, the capacity vanishes. When all of the input to the neuron is inhibitory, $V$ cannot reach threshold and the neuron is quiescent for all stimuli. When the circuit includes excitatory synapses, the task can be solved by appropriately shaping the strengths of the excitatory and inhibitory synapses, and this ability grows with the fraction of excitatory synapses. Therefore, for $f > 0$, $\alpha_c$ increases with $f$ up to a maximum of $\alpha_c = 1$ (half the capacity of an unconstrained perceptron). This maximum is reached for fractions equal to or greater than a critical fraction $f_0$. The dependence is summarized by the capacity curve $\alpha_c(f)$ (Fig. 2A, solid line), which bounds the range of loads that admit solutions for the different excitatory/inhibitory ratios.

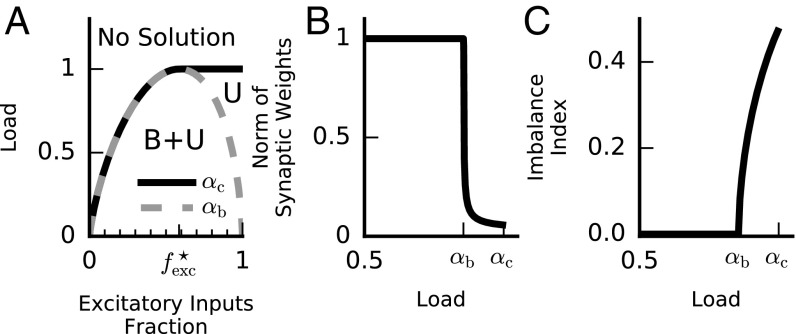

Fig. 2.

Balanced and unbalanced solutions. (A) Perceptron solutions as a function of load $\alpha$ and fraction of excitatory weights $f$. Above the capacity line [$\alpha_c(f)$, solid line] no solution exists. Balanced solutions exist only below the balanced capacity line [$\alpha_B(f)$, dashed shaded line]. Between the balanced capacity and maximum capacity lines, only unbalanced solutions exist (U). Below the balanced capacity line, unbalanced solutions coexist with balanced ones (B+U). (B) The norm of the synaptic weight vector of typical solutions as a function of the load. Below $\alpha_B$ the norm is clipped at its upper bound $\Gamma$. Above $\alpha_B$ the norm collapses and is of order $1/\sqrt{N}$. (C) The input imbalance index (IB, Eq. 3) of typical solutions as a function of the load. Note the sharp onset of imbalance above $\alpha_B$. In B and C, $f > f_0$, so that $\alpha_B < \alpha_c$. See SI Materials and Methods for other parameters used. For simulation results see Fig. S1.

Interestingly, $f_0$ depends on the statistics of the inputs (SI Replica Theory for Sign- and Norm-Constrained Perceptron). We denote the coefficients of variation (CV) of the excitatory and inhibitory input activities by $\mathrm{CV}_E$ and $\mathrm{CV}_I$, respectively. These measure the degree of stimulus tuning of the two afferent populations. In terms of these quantities, the critical excitatory fraction is

$$f_0 = \frac{\mathrm{CV}_E}{\mathrm{CV}_E + \mathrm{CV}_I}. \tag{2}$$

In other words, the critical ratio between the numbers of excitatory and inhibitory afferents, $f_0/(1 - f_0)$, equals the ratio of their degrees of tuning, $\mathrm{CV}_E/\mathrm{CV}_I$. To understand the origin of this result, note that maximizing the encoding capacity requires the relative strength of the weights to be inversely proportional to the SD of their afferents, $|w_i| \propto 1/\sigma_i$. This implies that the mean total synaptic input is proportional to $f/\mathrm{CV}_E - (1 - f)/\mathrm{CV}_I$, where $\mathrm{CV}_{E,I} = \sigma_{E,I}/\bar{x}_{E,I}$. For excitatory fraction $f > f_0$ this mean total synaptic input is positive. The voltage can then reach the threshold, and the neuron can implement the required selectivity task with optimally scaled weights. Thus, the capacity of the neuron is unaffected by changes in $f$ in the range $f_0 \le f \le 1$. For excitatory fraction $f < f_0$ the neuron cannot remain responsive (reach threshold) with optimally scaled weights, and thus the capacity is reduced.
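Eq. 2 and the sign argument behind it fit in a few lines. In this sketch the function names are hypothetical, and the example values $\mathrm{CV}_E = 0.8$ and $\mathrm{CV}_I = 0.2$ are illustrative assumptions chosen to mimic more broadly tuned inhibition. The mean drive is computed only up to the positive factor $N$.

```python
def critical_excitatory_fraction(cv_e, cv_i):
    """Eq. 2: f0 = CV_E / (CV_E + CV_I), i.e. f0 / (1 - f0) = CV_E / CV_I."""
    return cv_e / (cv_e + cv_i)


def mean_drive(f, cv_e, cv_i):
    """Mean total input per afferent with |w_i| proportional to 1/sigma_i:
    f * xbar_E / sigma_E - (1 - f) * xbar_I / sigma_I = f / CV_E - (1 - f) / CV_I."""
    return f / cv_e - (1.0 - f) / cv_i


cv_e, cv_i = 0.8, 0.2  # hypothetical: inhibitory inputs more broadly tuned
f0 = critical_excitatory_fraction(cv_e, cv_i)
print(f0)  # 0.8
for f in (0.5, f0, 0.9):
    # Negative below f0 (threshold unreachable), zero at f0, positive above.
    print(f, mean_drive(f, cv_e, cv_i))
```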

In cortical circuits, inhibitory neurons tend to fire at higher rates and are thought to be more broadly tuned than excitatory neurons (4, 37, 38). This implies $\mathrm{CV}_I < \mathrm{CV}_E$ and hence $f_0 > 1/2$ (SI Effects of E and I Input Statistics), which is consistent with the abundance of excitatory synapses in cortex. However, input statistics that make $f_0 < 1/2$ do not change the qualitative behavior we discuss (SI Effects of E and I Input Statistics and Fig. S2A).

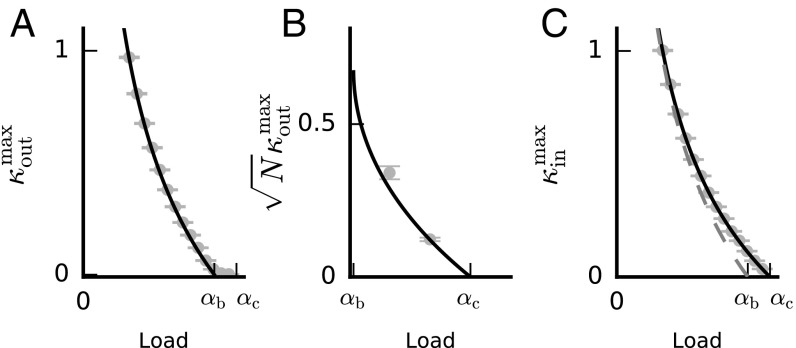

Fig. S2.

Effects of input statistics. (A) Solution type vs. and (as a fraction of ) (Capacity for Noneven Split of Plus and Minus Patterns) for different values of . From Left to Right . Lines are as in Fig. 2A. (B) Type of maximal solutions vs. and for different values of . For a wide range of and these solutions are unbalanced for all values of . Here and from Left to Right. (C) Fraction of silent weights for maximal solutions vs. the load for different values of . Fraction of E silent weights is shown in blue and fraction of I silent weights is depicted in red. Here , , and from Left to Right. Notably, for unbalanced, maximal solutions the fraction of silent weights is constant and equals 0.5 for both E and I inputs (Saddle-point equations for the maximal in solution and Distribution of synaptic weights).

For load levels below the capacity, many synaptic weight vectors solve the selectivity task, and we now describe the properties of the different solutions. In particular, we investigate the parameter regimes where balanced or unbalanced solutions exist. We find that unbalanced solutions with weight vector norms of order $1/\sqrt{N}$ exist for all load values below $\alpha_c$. Balanced solutions, with weight vector norms of order 1, exist below a critical value $\alpha_B$, which may be smaller than $\alpha_c$. Specifically, for $f \le f_0$ balanced solutions exist for all load values below capacity; i.e., $\alpha_B = \alpha_c$. For $f > f_0$, $\alpha_B$ is smaller than $\alpha_c$ and decreases with $f$ until it vanishes at $f = 1$ (Fig. 2A, dashed shaded line). The absence of balanced solutions for $f = 1$ is clear, as there is no inhibition to balance the excitatory inputs. The excitatory synaptic weights must then be weak (scaling as $1/N$) to ensure that $V$ remains close to threshold, slightly above it for plus patterns and slightly below it for minus ones. For $f_0 < f < 1$, the predominance of excitatory afferents precludes a balanced solution if the load is high, i.e., $\alpha > \alpha_B$. As argued above and shown below, balanced solutions are more robust than unbalanced solutions. Hence, we can identify $f_0$ as the optimal fraction of excitatory input, because it is the fraction of excitatory afferents for which the capacity of balanced solutions is maximal.

For loads below $\alpha_B$, both balanced and unbalanced solutions exist. This raises the question, What would be the character of a weight vector that is sampled randomly from the space of all possible solutions? Our theory predicts that, whenever balanced solutions exist, the vast majority of the solutions are balanced and furthermore have a weight vector norm that is saturated at the upper bound $\Gamma$. This is a consequence of the geometry of high-dimensional spaces, in which volumes are dominated by the volume elements with the largest radii (see SI Replica Theory for Sign- and Norm-Constrained Perceptron for details). Thus, for $f > f_0$, the typical solution undergoes a transition from balanced to unbalanced weights as $\alpha$ crosses the balanced capacity line $\alpha_B(f)$. At this point the norm of the solution collapses from $\Gamma$ to $O(1/\sqrt{N})$ (Fig. 2B).
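The high-dimensional geometric fact invoked here is easy to quantify. The volume of a $d$-dimensional ball scales as $R^d$, so the fraction of its volume inside radius $(1-\epsilon)R$ is $(1-\epsilon)^d$, which becomes tiny for large $d$. This sketch illustrates only that general fact; it is not the replica calculation of the SI, and the function name is hypothetical.

```python
def fraction_of_ball_volume_inside(shrink, dim):
    """Fraction of a dim-dimensional ball's volume within radius (1 - shrink) * R.

    Ball volume scales as R**dim, so the fraction is (1 - shrink)**dim.
    """
    return (1.0 - shrink) ** dim


for dim in (10, 100, 1000):
    # Shrinking the radius by only 1% removes almost all volume in high dimensions.
    print(dim, fraction_of_ball_volume_inside(0.01, dim))
```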

As explained above, for balanced solutions we expect to find a near cancellation of the total excitatory (E) and inhibitory (I) inputs. Our theory confirms this expectation. To measure the degree of E-I cancellation for any solution, we introduce the imbalance index,

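$$\mathrm{IB} = \frac{\left|\,\overline{\mathbf{w}_E\cdot\mathbf{x}_E} + \overline{\mathbf{w}_I\cdot\mathbf{x}_I}\,\right|}{\overline{\mathbf{w}_E\cdot\mathbf{x}_E} - \overline{\mathbf{w}_I\cdot\mathbf{x}_I}}, \tag{3}$$

where the overbar denotes an average over all of the input patterns ($\mu = 1, \ldots, P$). As mentioned above, E weights are nonnegative ($\mathbf{w}_E \ge 0$) and I weights are nonpositive ($\mathbf{w}_I \le 0$). For unbalanced solutions IB is of order 1, whereas for balanced solutions it is small, of order $1/\sqrt{N}$. Thus, the typical solution below $\alpha_B$ has zero imbalance (to leading order in $1/\sqrt{N}$), but the imbalance increases sharply as $\alpha$ increases beyond $\alpha_B$ (Fig. 2C).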
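The imbalance index can be computed directly from the weights and the patterns. This sketch follows Eq. 3 as written above; the function name and the two toy weight vectors are hypothetical. A small parts case (E = 2, I = −1) gives an index of order 1. A large-parts case (E = 80, I = −79) gives a small index, even though both have the same net input.

```python
def imbalance_index(w, patterns, is_exc):
    """Eq. 3: |mean(E) + mean(I)| / (mean(E) - mean(I)), averaged over patterns.

    E = w_E . x_E >= 0 and I = w_I . x_I <= 0, so the denominator is the mean
    total magnitude of excitation plus inhibition.
    """
    p = len(patterns)
    mean_e = sum(sum(wi * xi for wi, xi, ex in zip(w, x, is_exc) if ex)
                 for x in patterns) / p
    mean_i = sum(sum(wi * xi for wi, xi, ex in zip(w, x, is_exc) if not ex)
                 for x in patterns) / p
    return abs(mean_e + mean_i) / (mean_e - mean_i)


exc = [True, False]
pats = [[1.0, 1.0]]
print(imbalance_index([2.0, -1.0], pats, exc))    # E=2,  I=-1:  1/3
print(imbalance_index([80.0, -79.0], pats, exc))  # E=80, I=-79: 1/159
```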

Noise Robustness of Balanced and Unbalanced Solutions.

To characterize the effect of noise on the different solutions, we introduce two measures, input robustness $\kappa_{\mathrm{in}}$ and output robustness $\kappa_{\mathrm{out}}$. They characterize the robustness of the noise-free solutions to the addition of the two types of noise. To ensure robustness to output noise, the noise-free membrane potential that is closest to the threshold must be sufficiently far from it. Thus, we define

$$\kappa_{\mathrm{out}} = \min_{\mu} \left|\, \mathbf{w}\cdot\mathbf{x}^{\mu} - 1 \,\right|, \tag{4}$$

where the minimum is taken over all of the input patterns in the task. The threshold is 1 because we measure the weights in units of $\theta - V_{\mathrm{rest}}$ per unit of input activity. The second measure, which characterizes robustness to input noise, must take into account that the fluctuations in the membrane potential induced by this form of noise scale with the size of the synaptic weights. Hence, $\kappa_{\mathrm{in}} = \kappa_{\mathrm{out}}/\|\mathbf{w}\|$ [$\kappa_{\mathrm{in}}$ corresponds to the notion of margin in machine learning (39)]. Efficient algorithms for finding the solution with the maximum possible value of $\kappa_{\mathrm{in}}$ have been studied extensively (39, 40). We have developed an efficient algorithm for finding solutions with maximal $\kappa_{\mathrm{out}}$ (SI Materials and Methods).
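Both robustness measures are simple to evaluate for a given solution. The sketch below assumes the weights already solve the task, since it does not check labels. It uses the threshold of 1 from Eq. 4, and the function names are hypothetical. The two toy weight vectors produce the same potentials and hence the same $\kappa_{\mathrm{out}}$. The second has a five times larger norm, so its $\kappa_{\mathrm{in}}$ is five times smaller.

```python
import math


def output_robustness(w, patterns):
    """Eq. 4: kappa_out = min over patterns of |w . x - 1| (threshold = 1)."""
    return min(abs(sum(wi * xi for wi, xi in zip(w, x)) - 1.0) for x in patterns)


def input_robustness(w, patterns):
    """kappa_in = kappa_out / ||w||, the margin normalized by the weight norm."""
    return output_robustness(w, patterns) / math.sqrt(sum(wi * wi for wi in w))


w_a = [0.6, 0.8]                    # ||w|| = 1
pats_a = [[2.0, 0.0], [0.0, 1.0]]   # V = 1.2 (plus) and 0.8 (minus)
w_b = [3.0, 4.0]                    # ||w|| = 5
pats_b = [[0.4, 0.0], [0.0, 0.2]]   # the same potentials, 1.2 and 0.8
for w, pats in ((w_a, pats_a), (w_b, pats_b)):
    print(output_robustness(w, pats), input_robustness(w, pats))
```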

We now ask, What are the possible values of the input and output robustness of unbalanced and balanced solutions? Our theory predicts that the majority of both balanced and unbalanced solutions have vanishingly small values of $\kappa_{\mathrm{in}}$ and $\kappa_{\mathrm{out}}$ and are thus very sensitive to noise. However, for a given load below capacity, robust solutions do exist, with a spectrum of robustness values up to maximal values, $\kappa_{\mathrm{in}}^{\max}$ and $\kappa_{\mathrm{out}}^{\max}$. Since the magnitude of $\mathbf{w}$ scales both signal and noise in the inputs, $\kappa_{\mathrm{in}}$ is not sensitive to $\|\mathbf{w}\|$ and hence is $O(1)$ for both unbalanced and balanced solutions. On the other hand, $\kappa_{\mathrm{out}}$ is proportional to $\|\mathbf{w}\|$. Thus, we expect $\kappa_{\mathrm{out}}^{\max}$ to be $O(1)$ when balanced solutions exist and $O(1/\sqrt{N})$ when only unbalanced solutions exist. In addition, we expect increasing the load to reduce $\kappa_{\mathrm{in}}^{\max}$ and $\kappa_{\mathrm{out}}^{\max}$, because the synaptic weights must then satisfy more constraints.

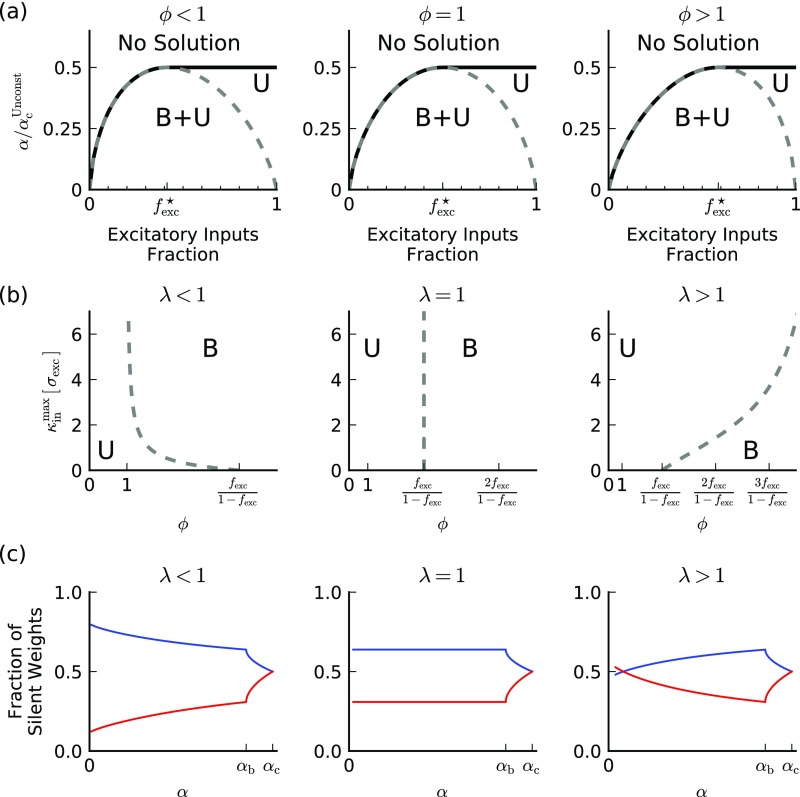

In Fig. 3 we present the values of $\kappa_{\mathrm{out}}^{\max}$ and $\kappa_{\mathrm{in}}^{\max}$ vs. the load. As expected, both reach zero as the load approaches the capacity, $\alpha_c$, and diverge for vanishingly small loads. However, $\kappa_{\mathrm{out}}^{\max}$ is substantial (of order 1) and proportional to $\Gamma$ only below $\alpha_B$, where balanced solutions exist (Fig. 3 A and B). In contrast, $\kappa_{\mathrm{in}}^{\max}$ remains of order 1 up to the full capacity, $\alpha_c$ (Fig. 3C). What are the properties of “optimal” solutions that achieve the maximal robustness to either input or output noise? We find that the solutions that achieve the maximal output robustness, $\kappa_{\mathrm{out}}^{\max}$, are balanced for all $\alpha < \alpha_B$, and their norm saturates the upper bound, $\|\mathbf{w}\| = \Gamma$ (Fig. S3B). Interestingly, for a wide range of input parameters, solutions that achieve the maximal input robustness, $\kappa_{\mathrm{in}}^{\max}$, are unbalanced (Fig. S3C; see also SI Replica Theory for Sign- and Norm-Constrained Perceptron, Effects of E and I Input Statistics, and Fig. S2B). Nevertheless, below the critical balance load, $\alpha_B$, the $\kappa_{\mathrm{in}}$ values of the balanced maximal-$\kappa_{\mathrm{out}}$ solutions are of the same order as, and indeed close to, $\kappa_{\mathrm{in}}^{\max}$ (Fig. 3C, dashed shaded line). In fact, the balanced solution with maximal $\kappa_{\mathrm{out}}$ also possesses the maximal value of $\kappa_{\mathrm{in}}$ that is possible for balanced solutions.

Fig. 3.

Maximal values of input and output robustness. (A) Maximal value of $\kappa_{\mathrm{out}}$ vs. load. No solutions exist above the maximal line ($\kappa_{\mathrm{out}}^{\max}$, solid line). Below $\alpha_B$, only balanced solutions achieve output robustness of order 1. (B) Maximal value of $\kappa_{\mathrm{out}}$ for loads between $\alpha_B$ and $\alpha_c$. In this range only unbalanced solutions exist, and the maximal values (solid line) scale as $1/\sqrt{N}$. (C) Maximal value of $\kappa_{\mathrm{in}}$ vs. load. No solutions exist above the maximal line ($\kappa_{\mathrm{in}}^{\max}$, solid line). For the parameters used, solutions that achieve $\kappa_{\mathrm{in}}^{\max}$ are unbalanced. The maximal value of $\kappa_{\mathrm{in}}$ for balanced solutions (dashed shaded line) is not far from $\kappa_{\mathrm{in}}^{\max}$. It is attained by the solutions that maximize $\kappa_{\mathrm{out}}$ for $\alpha < \alpha_B$. In A–C, theoretical results are depicted as solid or shaded lines and numerical results as shaded circles. Error bars depict SE of the mean. See SI Materials and Methods for parameters used. For further simulation results see Fig. S3.

Fig. S3.

Properties of maximal output and input robustness solutions. (A) Input robustness, $\kappa_{\mathrm{in}}$, vs. the load for the two maximal-robustness solutions (red and blue). (B) Norm of synaptic weight vector vs. the load for the maximal $\kappa_{\mathrm{out}}$ solution. In the balanced regime ($\alpha < \alpha_B$) the norm saturates its upper bound $\Gamma$. Since the norm is constant, maximizing $\kappa_{\mathrm{out}}$ in the balanced regime is equivalent to maximizing $\kappa_{\mathrm{in}}$ under the constraint $\|\mathbf{w}\| = \Gamma$. (C) Rescaled norm of synaptic weight vector ($\sqrt{N}\,\|\mathbf{w}\|$) vs. the load for the maximal $\kappa_{\mathrm{in}}$ solution. To demonstrate the scaling of the weight vector norm, colors depict results for different values of $N$ (gray, green, and red). In A–C, lines depict theoretical predictions; results are averaged over 100 samples.

We conclude that solutions that are robust to both input and output noise exist for loads less than $\alpha_B$, which for $f > f_0$ is smaller than $\alpha_c$. However, as long as $f$ is close to $f_0$, the reduction in capacity from $\alpha_c$ to $\alpha_B$ imposed by the requirement of robustness is small.

Balanced and Unbalanced Solutions for Spiking Neurons.

Neurons typically receive their input and communicate their output through action potentials. Thus, a fundamental question is, How will the introduction of spike-based input and spiking output affect our results? Here we show that the main properties of balanced and unbalanced synaptic efficacies, as discussed above, remain when the inputs are spike trains and the model neuron implements spiking and membrane potential reset mechanisms.

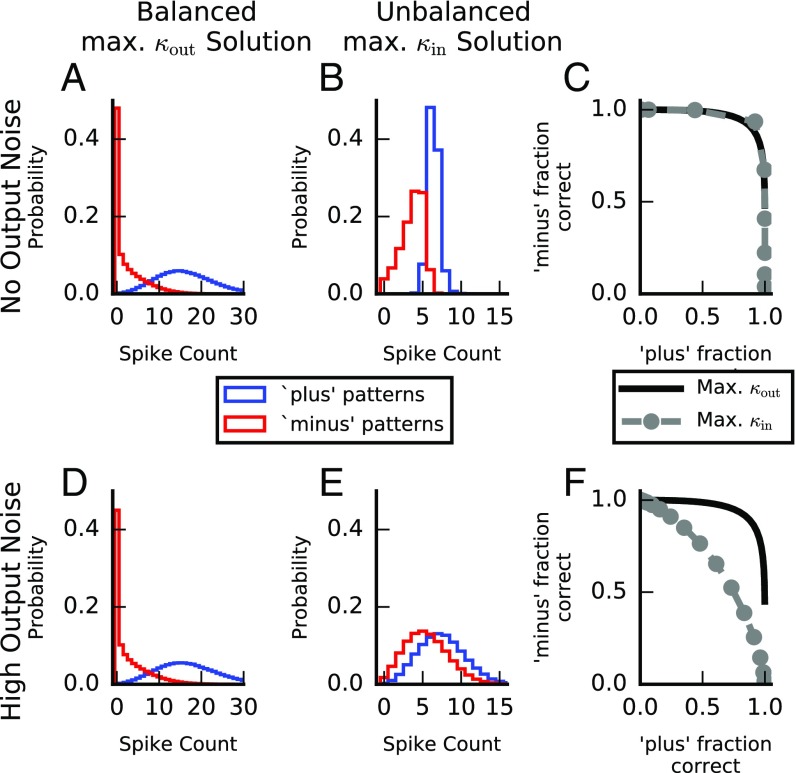

We consider a leaky integrate-and-fire (LIF) neuron that must perform the same binary classification task we considered using the perceptron. Each input pattern is characterized by a vector of firing rates, $\mathbf{x}^{\mu}$. Each afferent generates a Poisson spike train from time $t = 0$ to $t = T$, with mean rate $x_i^{\mu}$. The LIF neuron integrates these input spikes (SI Materials and Methods) and emits an output spike whenever its membrane potential crosses a firing threshold. After each output spike, the membrane potential is reset to the resting potential, and the integration of inputs continues. We define the output state of the LIF neuron using the total number of output spikes, $n_{\mathrm{out}}$. The neuron is quiescent if $n_{\mathrm{out}} \le n_{\theta}$ and active if $n_{\mathrm{out}} > n_{\theta}$, where $n_{\theta}$ is chosen to maximize classification performance. We do not discuss the properties of learning in LIF neurons (41–45). Instead, we test the solutions (weights) obtained from the perceptron model when they are used for the LIF neuron. In particular, we compare the performance of the balanced, maximal-$\kappa_{\mathrm{out}}$ solution and the unbalanced, maximal-$\kappa_{\mathrm{in}}$ solution. When the synaptic weights of the LIF neuron are set according to the two perceptron solutions, the mean output of the LIF neuron correctly classifies the input patterns (Fig. S4). Consistent with the results for the perceptron, with no output noise both solutions perform well. This holds even in the presence of the substantial input noise caused by Poisson fluctuations in the number of input spikes and their timings (Fig. 4 A–C). When the output noise magnitude is increased (SI Materials and Methods), however, the performance of the unbalanced maximal-$\kappa_{\mathrm{in}}$ solution quickly deteriorates. The performance of the balanced maximal-$\kappa_{\mathrm{out}}$ solution remains largely unaffected (Fig. 4 D–F). Thus, the spiking model recapitulates the general results found for the perceptron.
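A spike-count readout of this kind can be sketched as follows. This is not the SI model, and every modeling choice below is an assumption. Each input spike instantaneously changes $V$ by $w_i$ (delta-current synapses). Poisson inputs are approximated by one Bernoulli trial per time step. Output noise is Gaussian noise added to $V$ at each step. The parameter values and the function name are hypothetical.

```python
import math
import random


def simulate_lif(w, rates, t_max=0.5, dt=1e-4, tau_m=0.02,
                 theta=1.0, v_rest=0.0, sigma_out=0.0, seed=0):
    """Return the output spike count n_out of a leaky integrate-and-fire neuron.

    Assumptions (illustrative, not the SI parameters): delta-current synapses
    (an input spike from afferent i changes V by w[i]); Poisson inputs with
    rates in Hz approximated by Bernoulli trials per step; output noise added
    to V each step with SD sigma_out * sqrt(dt / tau_m).
    """
    rng = random.Random(seed)
    v = v_rest
    n_out = 0
    noise_sd = sigma_out * math.sqrt(dt / tau_m)
    for _ in range(int(round(t_max / dt))):
        v += (v_rest - v) * dt / tau_m       # leak toward rest (forward Euler)
        for wi, r in zip(w, rates):
            if rng.random() < r * dt:        # input spike in this time step
                v += wi
        if noise_sd > 0.0:
            v += rng.gauss(0.0, noise_sd)    # output noise
        if v >= theta:
            n_out += 1
            v = v_rest                       # reset after an output spike
    return n_out


# Hypothetical usage: a readout would compare n_out with a count threshold n_theta.
print(simulate_lif([0.3] * 10 + [-0.3] * 10, [20.0] * 20, sigma_out=0.5))
```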

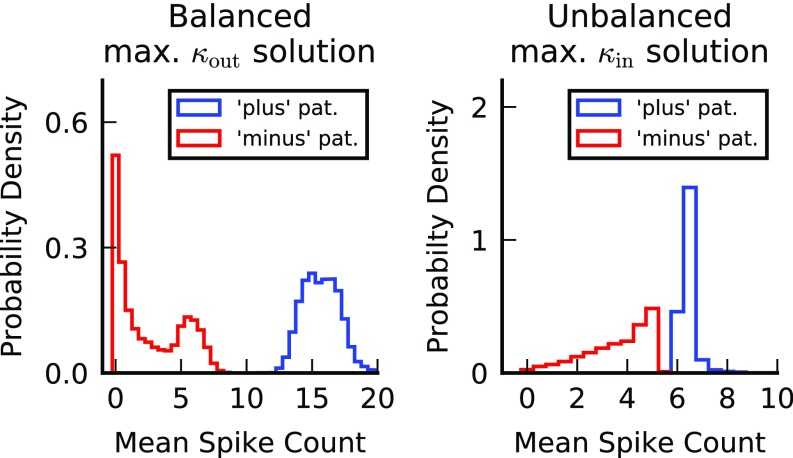

Fig. S4.

Neuronal selectivity for a spiking neuron. Both panels depict histograms of the mean output spike count of an LIF neuron for patterns belonging to the plus (blue) and minus (red) classes. The neuron has balanced weights maximizing $\kappa_{\mathrm{out}}$ (Left) or unbalanced weights maximizing $\kappa_{\mathrm{in}}$ (Right). Here the magnitude of the output noise is zero. In both cases the mean output spike count can be used to correctly classify the patterns. For parameters used see Fig. 3.

Fig. 4.

Selectivity in a spiking model. A and B (D and E) depict the output of an LIF neuron with no (high) output noise for the balanced maximal-$\kappa_{\mathrm{out}}$ solution (A and D) and the unbalanced maximal-$\kappa_{\mathrm{in}}$ solution (B and E). C and F depict the receiver operating characteristic (ROC) curves for the two solutions under the no output noise (C) and high output noise (F) conditions. The curves are obtained as the decision threshold ($n_{\theta}$) is varied, starting from 0. Consistent with the results for the perceptron, the two solutions perform very similarly with no output noise, with a slight advantage for the maximal-$\kappa_{\mathrm{in}}$ solution. With higher levels of output noise, the performance of the unbalanced maximal-$\kappa_{\mathrm{in}}$ solution quickly deteriorates. The performance of the balanced maximal-$\kappa_{\mathrm{out}}$ solution is only slightly affected. The norm bound $\Gamma$ of the balanced solution was chosen to equalize the mean output spike count across all patterns in both solutions. See SI Materials and Methods for parameters used.

Balanced and Unbalanced Synaptic Weights in Associative Memory Networks.

Thus far, we have considered the selectivity of a single neuron, but our results also have important implications for recurrently connected neuronal networks, in particular recurrent networks implementing associative memory functions. In models of associative memory, stable fixed points of the network dynamics represent memories. Memory retrieval corresponds to the dynamic transformation of an initial state to one of the memory-representing fixed points. Such models have been a major focus of memory research for many years (24, 27, 28, 46–48). For the network to function as an associative memory, memory states must have large basins of attraction so that the network can perform pattern completion. That is, it must be able to recall a memory from an initial state that is similar but not identical to it. In addition, memory retrieval must be robust to output noise. As we will show, the quantities $\kappa_{\mathrm{in}}$ and $\kappa_{\mathrm{out}}$ of the synaptic weights projecting onto individual neurons in the network are closely related to these two properties. $\kappa_{\mathrm{in}}$ is related to the sizes of the basins of attraction of the memories, and $\kappa_{\mathrm{out}}$ to the robustness to output noise.

We consider a network of excitatory (E) and inhibitory (I) recurrently connected binary neurons. The network operates in discrete time steps, and at each step the state $S_i$ of one randomly chosen neuron, $i$, is updated according to

$$S_i(t+1) = \Theta\Big(\sum_{j \neq i} W_{ij} S_j(t) - \theta + \eta_i(t)\Big). \qquad [5]$$

Here $\Theta(x) = 1$ for $x > 0$ and 0 otherwise, $W_{ij}$ is the weight of the synapse from neuron $j$ to neuron $i$, $\theta$ is the firing threshold, and $\eta_i$, the output noise, is a zero-mean Gaussian random variable with SD $\sigma_{\mathrm{out}}$. $P$ randomly chosen binary activity patterns $\xi^\mu$ (where each $\xi_i^\mu \in \{0, 1\}$), representing the stored memories, are encoded in the recurrent synaptic matrix $W$. This is achieved by treating each neuron, say $i$, as a perceptron whose weight vector (the $i$th row of $W$) maps its inputs $\{\xi_j^\mu\}_{j \neq i}$ from all other neurons to its desired output $\xi_i^\mu$ for each memory state (Fig. 5 A and B and SI Materials and Methods). This creates an attractor network in which the memory states are fixed points of the dynamics in the noise-free condition ($\sigma_{\mathrm{out}} = 0$) (20).
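As a rough illustration of Eq. 5, the sketch below implements the asynchronous update with NumPy. It assumes a single threshold θ shared by all neurons and 0/1 states. The one-pattern outer-product weight matrix in the usage lines is a hypothetical stand-in, chosen only because its memory is trivially a fixed point; it is not one of the perceptron-trained balanced or unbalanced matrices studied here.

```python
import numpy as np

def async_update(S, W, theta, sigma_out, rng):
    """Update one randomly chosen neuron in place according to Eq. 5."""
    i = rng.integers(len(S))
    h = W[i] @ S - W[i, i] * S[i]        # recurrent input, excluding self-coupling
    eta = rng.normal(0.0, sigma_out)     # output noise with SD sigma_out
    S[i] = 1 if h - theta + eta > 0 else 0
    return S

def run_dynamics(S0, W, theta, sigma_out, n_steps, seed=0):
    """Apply n_steps asynchronous updates starting from state S0."""
    rng = np.random.default_rng(seed)
    S = np.array(S0, dtype=int)          # copy, so S0 is left untouched
    for _ in range(n_steps):
        async_update(S, W, theta, sigma_out, rng)
    return S

# Hypothetical example: one stored pattern with outer-product weights.
N = 50
xi = (np.arange(N) % 2).astype(int)      # every other neuron active
W = np.outer(2 * xi - 1, 2 * xi - 1) / N
np.fill_diagonal(W, 0.0)
S_final = run_dynamics(xi, W, theta=0.0, sigma_out=0.0, n_steps=1000)
print(np.array_equal(S_final, xi))       # prints True: the memory is a fixed point
```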

Fig. 5.

Recurrent associative memory network constructed using single-neuron feedforward learning. (A) A fully connected recurrent network of E and I neurons in a particular memory state. Active (quiescent) neurons are shown in black (white). E and I synaptic connections are shown in yellow and blue, respectively (not all connections are depicted). Lines symbolize axons, and synapses are shown as small circles. (B) To find an appropriate $W$, the postsynaptic weights of each neuron are set using the memory-state activities of the other neurons as input and its own memory state as the desired output. In this example, neuron 4 implements its desired memory state through modification of its incoming weights $W_{4j}$, $j \neq 4$. C and E show the fraction of erroneous neurons (those differing from a given memory pattern) in the network as a function of time. (C) Network dynamics without output noise. An initial state of the network can either converge to the memory state (blue) or diverge to other network states (red). (D) Probability of converging to a memory state vs. initial pattern distortion (SI Materials and Methods) for a network with unbalanced maximal-$\kappa_{\mathrm{in}}$ weights (green), a network with balanced maximal-$\kappa_{\mathrm{out}}$ weights (black), and a network with balanced maximal-$\kappa_{\mathrm{out}}$ weights with unlearned inhibition (gray, main text). (E) Network dynamics with output noise. The network is initialized at the memory state. The dynamics can be stable (blue; the network remains close to the memory state) or unstable (red; the network diverges to another state). (F) Probability of stable dynamics for at least a fixed number of time steps for networks initialized at the memory state in the presence of output noise vs. the output noise SD. Colors are the same as in D. (G) Maximal output noise magnitude vs. load for networks whose synaptic weight matrices maximize $\kappa_{\mathrm{out}}$. As with $\kappa_{\mathrm{out}}$, the maximal output noise magnitude is of order 1 only below the balanced capacity. Above it, even though solutions exist, they are extremely sensitive to output noise. Results are shown for two parameter settings (green and magenta).
See SI Materials and Methods for parameters used.

We do not attempt to perform a complete analysis of the effects of input and output noise in recurrent networks, a difficult challenge. Instead, we link observations from our single-neuron analysis to key features of a recurrent network performing a memory function. The capacity of such a memory network is defined as the maximal load for which the memory patterns can be fixed points of the noise-free dynamics that are stable against single-neuron perturbations. This condition is met as long as the synaptic weights of every neuron possess substantial input robustness $\kappa_{\mathrm{in}}$. Thus, the single-neuron capacities determine the overall network capacity. As we showed before, the capacity of a single-neuron perceptron depends on the statistics of its desired output (which in our case is the sparsity of activity across memory states). Since this statistic may differ between the E and I populations, the single-neuron capacities of the two populations may differ, and the global capacity of the recurrent network is the minimum of the single-neuron capacities of the two neuron types. As long as the load is smaller than this critical capacity, a recurrent weight matrix exists for which all memory states are stable fixed points of the noiseless dynamics. However, such solutions are not unique, and the choice of a particular matrix can endow the network with different robustness properties. As stated above, to function properly as an associative memory, the fixed points must have large basins of attraction. Corrupting the initial state away from the parent memory pattern introduces variability into the inputs of each neuron for subsequent dynamic iterations and is therefore equivalent to injecting input noise in the single-neuron feedforward case. The network propagates this initial input noise in a nontrivial way; however, its magnitude always remains proportional to the norm of the neurons' synaptic weights.
We therefore expect that a large basin of attraction is achieved when the matrix $W$ yields a large input noise robustness for each neuron at the (noise-free) fixed points (49, 50). When output noise is introduced into the network dynamics, the network may propagate it as input noise to other neurons in subsequent time steps. However, its initial magnitude is set only by the output noise SD and is unaffected by the scale of the synaptic weights. Thus, we expect the requirement that memory states and retrieval be robust against output noise to be satisfied when $W$ yields a large output noise robustness for each neuron at the (noise-free) fixed points. We therefore consider two types of recurrent connections: one in which each row of $W$ is a weight vector that maximizes $\kappa_{\mathrm{in}}$ and is hence, in the chosen parameter regime, necessarily unbalanced, and a second in which the rows of the connection matrix correspond to balanced solutions that maximize $\kappa_{\mathrm{out}}$.

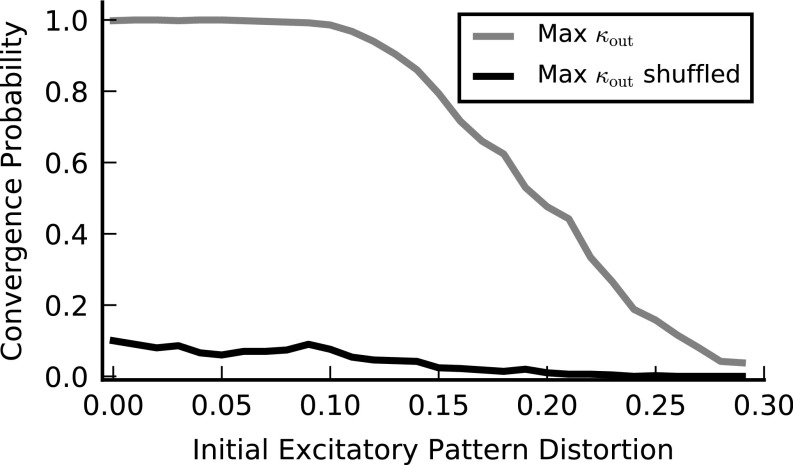

We estimate the basins of attraction of the memory patterns numerically by initializing the network in states that are corrupted versions of the memory states (SI Materials and Methods) and observing whether the network, without output noise, converges to the parent memory state (Fig. 5C, blue) or diverges away from it (Fig. 5C, red). We define the size of the basin of attraction as the maximum distortion of the initial state that ensures convergence to the parent memory with high probability.

Comparing the basins of attraction of the two types of networks, we find that the mean basin of attraction of the unbalanced network is moderately larger than that of the balanced one (Fig. 5D), consistent with the slightly lower value of $\kappa_{\mathrm{in}}$ in the balanced case (Fig. 5D). In contrast, the two networks behave strikingly differently in the presence of output noise. To illustrate this, we start each network at a memory state and determine whether it is stable (remains in the vicinity of this state for an extended period) despite the noise in the dynamics (Fig. 5E). We estimate the output noise tolerance of the network by measuring the maximal output noise SD for which the memory states are stable (Fig. 5F). We find that memory states in the balanced network with maximal $\kappa_{\mathrm{out}}$ are stable for noise levels that (for the network sizes used in the simulation) are an order of magnitude larger than those tolerated by the unbalanced network with maximal $\kappa_{\mathrm{in}}$ (Fig. 5F).
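The two numerical protocols (convergence from distorted initial states without output noise, and stability of a memory state under output noise) can be sketched as follows. The mean-preserving distortion rule, the 10% error tolerance, the trial and step counts, and the outer-product weights are all hypothetical choices for illustration; they are not the procedures or parameters used for Fig. 5.

```python
import numpy as np

def run(S0, W, theta, sigma_out, n_steps, xi, rng):
    """Asynchronous Eq. 5 dynamics. Returns the final state and the largest
    fraction of neurons that disagreed with memory xi along the trajectory."""
    S = np.array(S0, dtype=int)
    n_wrong = int(np.count_nonzero(S != xi))
    worst = n_wrong
    for _ in range(n_steps):
        i = rng.integers(len(S))
        h = W[i] @ S - W[i, i] * S[i]
        new = 1 if h - theta + rng.normal(0.0, sigma_out) > 0 else 0
        if new != S[i]:
            n_wrong += 1 if S[i] == xi[i] else -1   # flip away from / back to xi
            S[i] = new
        worst = max(worst, n_wrong)
    return S, worst / len(S)

def distort(xi, d, rng):
    """Hypothetical mean-preserving distortion: with probability d a neuron is
    redrawn from Bernoulli(mean activity of xi); otherwise it keeps its state."""
    redraw = rng.random(len(xi)) < d
    fresh = (rng.random(len(xi)) < xi.mean()).astype(int)
    return np.where(redraw, fresh, xi)

def p_converge(xi, W, theta, d, n_trials=20, n_steps=2000, seed=0):
    """Fraction of noise-free runs from distorted starts that end exactly at xi."""
    rng = np.random.default_rng(seed)
    hits = 0
    for _ in range(n_trials):
        S, _ = run(distort(xi, d, rng), W, theta, 0.0, n_steps, xi, rng)
        hits += np.array_equal(S, xi)
    return hits / n_trials

def p_stable(xi, W, theta, sigma_out, n_trials=20, n_steps=2000, tol=0.1, seed=0):
    """Fraction of noisy runs started at xi that never drift more than tol away."""
    rng = np.random.default_rng(seed)
    hits = 0
    for _ in range(n_trials):
        _, worst = run(xi, W, theta, sigma_out, n_steps, xi, rng)
        hits += worst <= tol
    return hits / n_trials

N = 100
xi = (np.arange(N) % 2).astype(int)
W = np.outer(2 * xi - 1, 2 * xi - 1) / N       # hypothetical one-pattern weights
np.fill_diagonal(W, 0.0)
print(p_converge(xi, W, 0.0, d=0.1), p_stable(xi, W, 0.0, sigma_out=10.0))
```

Sweeping `d` gives a convergence curve like Fig. 5D, and sweeping `sigma_out` gives a stability curve like Fig. 5F.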

Finally, we ask how the noise robustness of the memory states in the balanced network depends on the number of memories. As shown in Fig. 5F, for a fixed load below capacity, memory patterns are stable as long as the noise level remains below a threshold value, which we call the critical noise level. When the noise increases beyond this critical level, the stability of the memory states rapidly deteriorates. The critical noise level decreases smoothly from a large value at small loads to zero at a specific load. This load coincides with the maximal load for which both E and I neurons have balanced solutions (Fig. 5G). For larger loads, all solutions are unbalanced, and hence the tolerable magnitude of the stochastic dynamical component is limited by the small output robustness of unbalanced solutions.

The Role of Inhibition in Associative Memory Networks.

In our associative memory network model, we assumed that both E and I neurons code desired memory states and that all network connections are modified by learning. Most previous models of associative memory that separate excitation and inhibition assume that memory patterns are restricted to the E population, whereas inhibition provides stabilizing inputs (14, 48, 51–54). To address the emergence of balanced solutions in scenarios where the I neurons do not represent long-term memories, we studied an architecture in which I to E, I to I, and E to I connections are random sparse matrices with large amplitudes, so that I activity patterns are driven by the E memory states. Under these conditions, the I subnetwork exhibits irregular asynchronous activity with an overall mean activity that is proportional to the mean activity of the driving E population (7, 55, 56). Although the mean I feedback provided to the E neurons can balance the mean excitation, the variability in this feedback injects substantial noise into the E neurons, which degrades system performance (SI Recurrent Networks with Nonlearned Inhibition). This variability stems from the differences in the I activity patterns generated by the different E memory states (albeit with the same mean). Additional noise is caused by the temporally irregular activity of the chaotic I dynamics. Next we ask whether the system's performance can be improved through plasticity in the I to E connections, for which some experimental evidence exists (23, 57–60). Indeed, we find an appropriate plasticity rule for this pathway (SI Recurrent Networks with Nonlearned Inhibition) that suppresses the spatiotemporal fluctuations in the I feedback, yielding a balanced state that behaves similarly to the fully learned networks described above (Fig. 5 D and F, gray lines).
Interestingly, in this case the basins of attraction of the balanced network are comparable to or even larger than those of the unbalanced fully learned network (compare gray to green curves in Fig. 5D). Although no explicit memory patterns are assigned to the I population, the I activity plays a computational role that goes beyond providing global I feedback; when the weights of the I to E connections are shuffled, the network's performance significantly degrades (Fig. S5).

Fig. S5.

Effect of shuffling learned I weights in recurrent networks with nonlearned I activity. Shaded line depicts the performance of the network with random E to I and I to I connections and learned E to E and I to E connections (Recurrent Networks with Nonlearned Inhibition) (same as gray line in Fig. 5D). Solid line depicts the performance of the same network with the I weights of each E neuron randomly shuffled. Thus, the distribution of I synaptic weights for each E neuron is identical in both cases. This result shows that the learned I weights are important for network performance and stability.

Learning Robust Solutions.

Thus far, we have presented analytical and numerical investigations of solutions that support selectivity or associative memory and provide substantial robustness to noise. However, we have not addressed how these robust solutions could be learned by a biological system. In fact, as stated above, the majority of solutions for these tasks have vanishingly small output and input robustness, and the maximum-robustness solutions above were found numerically using specialized optimization algorithms. Therefore, an important question is whether noise-robust weights can emerge naturally from synaptic learning rules that are appropriate for neuronal circuits.

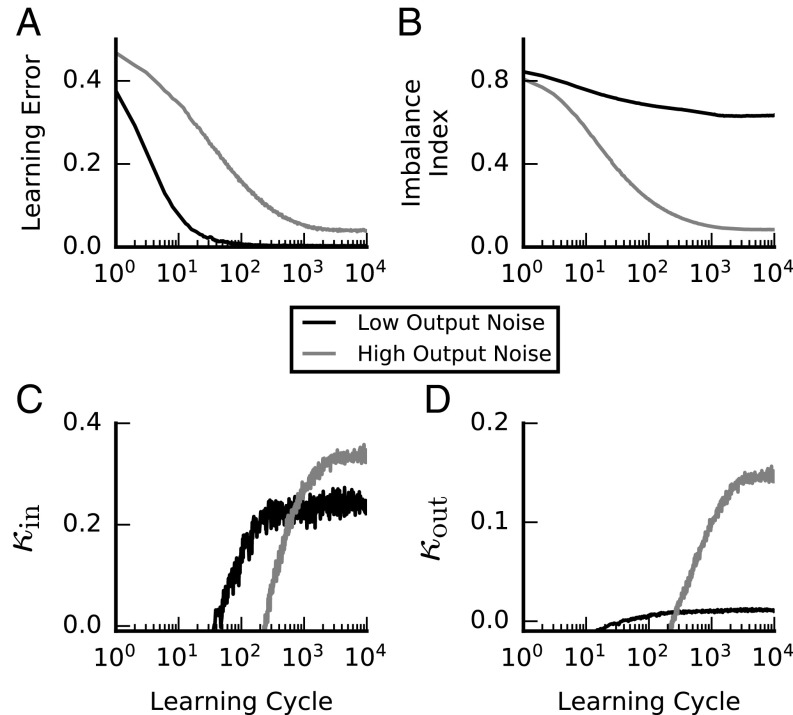

The actual algorithms used for learning in neural circuits are generally unknown, especially in a supervised learning scenario. Experiments suggest that learning rules may depend on brain area and on both pre- and postsynaptic neuron types (for example, refs. 57–59, 61; for reviews see refs. 60, 62–64). From a theoretical perspective, the properties of the solutions found through learning, and in particular their noise robustness, depend on both the type and parameters of the algorithm and the properties of the space of possible solutions. However, our theory suggests that a general, simple way to ensure that learning arrives at a robust solution is to introduce noise during learning. Indeed, this is a common practice in machine learning for improving generalization [a specific form of data augmentation (65, 66)]. The rationale is that learning algorithms that achieve low error in the presence of noise necessarily lead to solutions that are robust against noise levels at least as large as those present during learning. In the case we are considering, learning in the presence of substantial input noise should lead to solutions with substantial $\kappa_{\mathrm{in}}$, and introducing output noise during learning should lead to solutions with substantial $\kappa_{\mathrm{out}}$. We note that $\kappa_{\mathrm{in}}$ may be large even if $\kappa_{\mathrm{out}}$ remains small (for example, in unbalanced solutions with maximal $\kappa_{\mathrm{in}}$) but not vice versa [because $\kappa_{\mathrm{out}}$ of order 1 implies that $\kappa_{\mathrm{in}}$ is of order 1 as well]. Therefore, learning in the presence of significant output noise should lead to solutions that are robust to both input and output noise, whereas learning in the presence of input noise alone may lead to unbalanced solutions that are sensitive to output noise, depending on the details of the learning algorithm. We therefore predict that successful learning in the presence of output noise is a sufficient condition for the emergence of excitation–inhibition balance.

To demonstrate that robust balanced solutions emerge in the presence of output noise, we consider a variant of the perceptron learning algorithm (18) in which we enforce the sign constraints on the weights (29) and, in addition, add a weight decay term implementing a soft constraint on the magnitude of the weights (SI Materials and Methods). This supervised learning rule possesses several properties that are required for biological plausibility: It is online, with weights modified incrementally after each pattern presentation; it is history independent, so that each weight update depends only on the current pattern and error signal; and finally, it is simple and local, with weight updates that are a function of the error signal and of quantities available locally at the synapse (presynaptic activity and synaptic efficacy). When this learning rule is applied to train a selectivity task in the presence of substantial output noise, the resulting solution has a balanced weight vector with substantial $\kappa_{\mathrm{in}}$ and $\kappa_{\mathrm{out}}$ (Fig. 6, shaded lines). In contrast, if learning occurs with weak output noise, the algorithm's tendency to reduce the magnitude of the weights causes the resulting solution to be unbalanced with small $\kappa_{\mathrm{out}}$, although its $\kappa_{\mathrm{in}}$ may be large if substantial input noise is present during learning (Fig. 6, solid lines). When this learning rule is applied in the load regime where only unbalanced solutions exist (above the balanced capacity), learning fails to achieve reasonable performance in the presence of large output noise. When the noise is scaled down to the level that unbalanced solutions can tolerate, learning yields unbalanced solutions with robustness values of the order of the maximum allowed in this region (Fig. S6).

Fig. 6.

Emergence of E-I balance from learning in the presence of output noise. All panels show the outcome of perceptron learning for a noisy neuron (SI Materials and Methods) under low (solid lines) and high (shaded lines) output noise conditions. Except for the output noise level, all model and learning parameters are identical for the two conditions. (A) Mean training error vs. learning cycle. On each cycle, all of the input patterns to be learned are presented once. The error decays and plateaus at its minimal value under both low and high output noise conditions. (B) Mean IB (Eq. 3) vs. learning cycle. IB remains of order 1 under low output noise conditions and drops close to zero under high output noise conditions. (C) Mean input robustness ($\kappa_{\mathrm{in}}$) vs. learning cycle. Input robustness is high under both output noise conditions. (D) Mean output robustness ($\kappa_{\mathrm{out}}$) vs. learning cycle. Output robustness is substantial only under the high output noise learning condition. These results demonstrate that robust balanced solutions naturally emerge under learning in the presence of high output noise. See SI Materials and Methods for other parameters used.

Fig. S6.

Perceptron learning with input and output noise above the balanced capacity. A–D depict the outcome of simple perceptron learning for a noisy neuron (SI Materials and Methods) under low output noise conditions (solid lines) and high output noise conditions (shaded lines). Except for the output noise level, all model and learning parameters are identical for the two conditions. (A) Mean training error vs. learning cycle. At each cycle all of the learned input patterns are presented once. (B) Mean imbalance index vs. learning cycle. IB remains of order 1 under low output noise conditions and drops to lower values under high output noise conditions. (C) Mean input robustness ($\kappa_{\mathrm{in}}$) vs. learning cycle. (D) Mean rescaled output robustness vs. learning cycle. The error decays and plateaus at its minimal value under both low and high output noise conditions; however, for high output noise the error remains substantial. Both output and input robustness are negative under the high output noise conditions (the learning does not find a weight vector that classifies the noise-free patterns correctly). Input and output robustness are positive when the output noise is scaled down sufficiently. Random patterns are binary patterns with equal probabilities and an even split of plus and minus patterns. Results are averaged over 50 samples.

SI Materials and Methods

Finding Perceptron Solutions.

There are a number of numerical methods for choosing a weight vector w that generates a specified selectivity (25, 27, 29, 39). For numerical simulations, we developed algorithms that find the maximal-$\kappa_{\mathrm{in}}$ and maximal-$\kappa_{\mathrm{out}}$ solutions that obey the imposed biological constraints. These solutions can be found directly by solving conic programming optimization problems, for which efficient algorithms exist and are widely available (73). For details, see Finding Maximal $\kappa_{\mathrm{in}}$ and Maximal $\kappa_{\mathrm{out}}$ Solutions.

Random Patterns in Numerical Estimation of and Solutions.

In numerical experiments for Figs. 3 and 4 and Figs. S1 and S3, E inputs for the random patterns were drawn i.i.d. from an exponential distribution with unit mean and SD. I inputs were drawn from a Gamma distribution with shape parameter $k$ and scale parameter $c$ [the probability density function of the Gamma distribution is given by $p(x) = x^{k-1} e^{-x/c} / (\Gamma(k)\, c^k)$ for $x > 0$, where $\Gamma$ is the Gamma function].
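A minimal sketch of how such input patterns could be sampled with NumPy's `Generator.exponential` and `Generator.gamma`. The Gamma shape and scale below are hypothetical (the values used for the figures are not reproduced here); they give I inputs with mean 2 and SD 1, and `gamma_moments` returns the corresponding closed-form mean $kc$, SD $\sqrt{k}\,c$, and CV $1/\sqrt{k}$.

```python
import numpy as np

def sample_patterns(P, N_E, N_I, shape_I, scale_I, seed=0):
    """Draw P input patterns: E inputs ~ Exponential(mean 1), I inputs ~ Gamma."""
    rng = np.random.default_rng(seed)
    x_E = rng.exponential(scale=1.0, size=(P, N_E))            # mean 1, SD 1
    x_I = rng.gamma(shape=shape_I, scale=scale_I, size=(P, N_I))
    return x_E, x_I

def gamma_moments(shape, scale):
    """Mean, SD, and coefficient of variation of a Gamma(shape, scale) variable."""
    mean = shape * scale
    sd = np.sqrt(shape) * scale
    return mean, sd, sd / mean

# Hypothetical I statistics (mean 2, SD 1, CV 0.5); not the paper's values.
x_E, x_I = sample_patterns(P=20000, N_E=5, N_I=5, shape_I=4.0, scale_I=0.5)
print(x_E.mean(), x_E.std(), x_I.mean(), x_I.std(), gamma_moments(4.0, 0.5))
```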

Fig. S1.

Numerical measurement of capacity and balanced capacity. (A) Capacity of the sign-constrained-weights perceptron, $\alpha_c$, vs. the fraction of excitatory inputs, as a fraction of the capacity of an unconstrained perceptron (Capacity for Noneven Split of Plus and Minus Patterns). Theory is depicted in black. Simulation results are shown in blue and red for two parameter values. To measure $\alpha_c$, we measure the probability that a solution exists as a function of the load and estimate $\alpha_c$ as the load at which this probability is 1/2. (B) Capacity of balanced solutions, $\alpha_b$, as a fraction of $\alpha_c$, vs. the fraction of excitatory inputs. Since maximal-$\kappa_{\mathrm{out}}$ solutions are balanced whenever balanced solutions exist, to measure $\alpha_b$ we measure the probability of finding a balanced solution, i.e., a solution that saturates the corresponding upper bound, and estimate $\alpha_b$ as the load at which this probability is 1/2.
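The 1/2-crossing procedure in (A) can be sketched as a linear-programming feasibility test per random problem, here with `scipy.optimize.linprog` (HiGHS). This is an illustration under assumptions, not the authors' code: both E and I inputs are exponential, labels are random, and the fixed positive threshold is replaced by a free nonnegative threshold variable `b` with unit margin, which is equivalent for strict separability because weights and threshold can be rescaled together.

```python
import numpy as np
from scipy.optimize import linprog

def has_solution(X, y, is_exc):
    """LP feasibility: is there w (w_i >= 0 for E, w_i <= 0 for I) and b >= 0
    with s_mu * (w . x_mu - b) >= 1 for every pattern mu?"""
    P, N = X.shape
    s = np.where(y, 1.0, -1.0)                                 # labels as +/-1
    A_ub = -s[:, None] * np.hstack([X, -np.ones((P, 1))])       # -s (w.x - b) <= -1
    b_ub = -np.ones(P)
    bounds = [(0, None) if e else (None, 0) for e in is_exc] + [(0, None)]
    res = linprog(np.zeros(N + 1), A_ub=A_ub, b_ub=b_ub, bounds=bounds, method="highs")
    return res.status == 0                                      # 2 means infeasible

def p_solution(alpha, N, frac_exc, n_samples, rng):
    """Fraction of random problems at load alpha = P / N that admit a solution."""
    P = max(1, int(round(alpha * N)))
    is_exc = np.arange(N) < int(round(frac_exc * N))
    hits = 0
    for _ in range(n_samples):
        X = rng.exponential(1.0, size=(P, N))      # hypothetical input statistics
        y = rng.random(P) < 0.5                    # random labels
        hits += has_solution(X, y, is_exc)
    return hits / n_samples

def estimate_capacity(alphas, N, frac_exc, n_samples=10, seed=0):
    """Linearly interpolate the load at which the solution probability crosses 1/2."""
    rng = np.random.default_rng(seed)
    probs = np.array([p_solution(a, N, frac_exc, n_samples, rng) for a in alphas])
    below = np.nonzero(probs <= 0.5)[0]
    if len(below) == 0 or below[0] == 0:
        return None, probs                         # crossing not bracketed
    k = below[0]
    a0, a1, p0, p1 = alphas[k - 1], alphas[k], probs[k - 1], probs[k]
    return a0 + (p0 - 0.5) * (a1 - a0) / (p0 - p1), probs

alpha_c, probs = estimate_capacity([0.1, 4.0], N=40, frac_exc=0.5, n_samples=5)
```

The two-point grid in the usage line only brackets the crossing; a real estimate would use a fine grid of loads and many samples per load.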

Dynamics of LIF Neuron.

Input spike trains.

For each input pattern, the spike train of each input afferent was drawn from a Poisson process with the rate given by that afferent's activation in the pattern, for a fixed duration $T$.
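A minimal sketch of drawing homogeneous Poisson spike trains: the spike count on $[0, T)$ is Poisson with mean $rT$, and, conditioned on the count, spike times are independent and uniform. The 5 Hz rate and 2 s duration in the usage lines are hypothetical.

```python
import numpy as np

def poisson_spike_trains(rates, T, rng):
    """One homogeneous Poisson spike train per afferent on [0, T): draw the
    spike count from Poisson(rate * T), then place spikes uniformly in time."""
    trains = []
    for r in rates:
        n = rng.poisson(r * T)
        trains.append(np.sort(rng.uniform(0.0, T, size=n)))
    return trains

rng = np.random.default_rng(0)
rates = np.full(2000, 5.0)                              # hypothetical: 5 Hz each
trains = poisson_spike_trains(rates, T=2.0, rng=rng)     # T in seconds
counts = np.array([len(s) for s in trains])
print(counts.mean(), counts.var())                       # both close to rate * T = 10
```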

Synaptic input.

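Given the set of input spike trains $\{t_i^j\}$, the contribution of synaptic input to the membrane potential is given by $V_{\mathrm{syn}}(t) = \sum_i w_i \sum_{t_i^j < t} K(t - t_i^j)$, where $w_i$ is the synaptic efficacy of the synapse from the $i$th input afferent and $K$ is a postsynaptic potential kernel. $K(t) = 0$ for $t < 0$ and is given by $K(t) = V_0\left(e^{-t/\tau_m} - e^{-t/\tau_s}\right)$ for $t \ge 0$, where $\tau_m$ and $\tau_s$ are the membrane and synaptic time constants, respectively, and $V_0$ is such that the maximal value of $K$ is one.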
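The normalization constant $V_0$ can be computed in closed form: setting $dK/dt = 0$ gives the peak time $t^* = \tau_m \tau_s \ln(\tau_m/\tau_s)/(\tau_m - \tau_s)$, so $V_0 = 1/(e^{-t^*/\tau_m} - e^{-t^*/\tau_s})$. The sketch below builds the kernel this way and sums it over input spikes to obtain $V_{\mathrm{syn}}$. It assumes $\tau_m > \tau_s$; the time constants (15 and 3.75 ms) and the two-spike input are hypothetical.

```python
import numpy as np

def make_psp_kernel(tau_m, tau_s):
    """Return K(t) = V0 (exp(-t/tau_m) - exp(-t/tau_s)) for t >= 0, 0 for t < 0,
    with V0 chosen so that max_t K(t) = 1, together with the peak time."""
    if tau_m <= tau_s:
        raise ValueError("this sketch assumes tau_m > tau_s")
    t_peak = tau_m * tau_s * np.log(tau_m / tau_s) / (tau_m - tau_s)
    V0 = 1.0 / (np.exp(-t_peak / tau_m) - np.exp(-t_peak / tau_s))

    def K(t):
        t = np.asarray(t, dtype=float)
        tp = np.maximum(t, 0.0)                  # avoid overflow for t < 0
        return np.where(t >= 0, V0 * (np.exp(-tp / tau_m) - np.exp(-tp / tau_s)), 0.0)

    return K, t_peak

def synaptic_potential(t_grid, spike_trains, weights, K):
    """V_syn(t) = sum_i w_i sum_{t_i^j < t} K(t - t_i^j), evaluated on t_grid."""
    V = np.zeros(len(t_grid))
    for w_i, spikes in zip(weights, spike_trains):
        for t_spike in spikes:
            V += w_i * K(t_grid - t_spike)
    return V

K, t_peak = make_psp_kernel(tau_m=15.0, tau_s=3.75)      # hypothetical values (ms)
t = np.linspace(0.0, 100.0, 100001)
V = synaptic_potential(t, [[10.0], [20.0]], [1.0, -0.5], K)
```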

Output noise.

Output noise was added to the neuron's membrane potential as a random synaptic input, $V_{\mathrm{noise}}(t)$, whose event amplitudes were drawn from a zero-mean Gaussian distribution with a fixed SD and whose event times were drawn from a uniform distribution.

Voltage reset.

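After each threshold crossing, the membrane potential was reset to its resting potential. Given the set of output spike times $\{t_{\mathrm{sp}}\}$, the total contribution of voltage reset to the membrane potential can be written as $V_{\mathrm{reset}}(t) = -(V_{\mathrm{thr}} - V_{\mathrm{rest}}) \sum_{t_{\mathrm{sp}} < t} R(t - t_{\mathrm{sp}})$, where $V_{\mathrm{rest}}$ and $V_{\mathrm{thr}}$ are the neuron's resting and threshold potentials, respectively, and $R$ implements the postspike voltage reset. $R(t) = 0$ for $t < 0$ and is given by $R(t) = e^{-t/\tau_m}$ for $t \ge 0$. This form ensures that the voltage is reset to the resting potential immediately after an output spike.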

Membrane potential.

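Finally, the neuron's membrane potential is given by $V(t) = V_{\mathrm{rest}} + V_{\mathrm{syn}}(t) + V_{\mathrm{noise}}(t) + V_{\mathrm{reset}}(t)$, where $V_{\mathrm{reset}}$ is computed given $V_{\mathrm{syn}}$ and $V_{\mathrm{noise}}$.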
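A discretized sketch of the threshold-and-reset scheme. It assumes the reset kernel is $e^{-(t - t_{\mathrm{sp}})/\tau_m}$ scaled by $-(V_{\mathrm{thr}} - V_{\mathrm{rest}})$, takes the summed synaptic and noise input on a uniform time grid as given, and records a spike at the first grid point at or above threshold. The grid spacing and all values in the usage lines are hypothetical. With a constant input of 1.5 (threshold 1, rest 0, $\tau_m = 10$), the second spike occurs once $1.5 - e^{-t/10} \ge 1$, i.e., at the first grid point after $10 \ln 2 \approx 6.93$.

```python
import numpy as np

def simulate_lif(V_input, dt, V_thr, V_rest, tau_m):
    """Threshold-and-reset on a time grid t_k = k * dt.

    V_input holds the summed synaptic and noise contributions. Every threshold
    crossing at t_sp adds -(V_thr - V_rest) * exp(-(t - t_sp) / tau_m) for t > t_sp.
    V keeps the (suprathreshold) value at the spike sample itself.
    """
    n = len(V_input)
    t = np.arange(n) * dt
    reset = np.zeros(n)
    V = np.empty(n)
    spikes = []
    for k in range(n):
        V[k] = V_rest + V_input[k] + reset[k]
        if V[k] >= V_thr:
            spikes.append(t[k])
            reset[k + 1:] -= (V_thr - V_rest) * np.exp(-(t[k + 1:] - t[k]) / tau_m)
    return V, spikes

# Hypothetical check with a constant input of 1.5 (threshold 1, rest 0).
V, spikes = simulate_lif(np.full(1000, 1.5), dt=0.1, V_thr=1.0, V_rest=0.0, tau_m=10.0)
print(spikes[:2])
```

The quadratic cost of updating the future reset trace at every spike is acceptable for short simulations; a recursive exponential trace would be the usual choice for long ones.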

Simulations of Recurrent Networks.

Memory states.

Networks were trained to implement a set of memory states as stable fixed points of the noise-free dynamics. Memory states were drawn i.i.d. from binary distributions whose parameter (the probability that a neuron is active) depends on whether neuron $i$ is E or I.

Initial pattern distortion.

To start the network close to a memory state, the initial state of each neuron was chosen randomly based on its state in the parent memory and on the initial pattern distortion level (Fig. 5D). This procedure ensures that the mean activity levels of E and I neurons in the initial state are the same as their mean activity levels in the memory state (74).

Perceptron Learning Algorithm.

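The perceptron learning algorithm (Fig. 6 and Fig. S6) learns to classify a set of labeled patterns. At learning time step $t$, one pattern $\mathbf{x}^\mu$ with desired output $y^\mu \in \{0, 1\}$ is presented to the neuron. The output of the perceptron is given by $y_{\mathrm{out}} = \Theta(\mathbf{w} \cdot \mathbf{x}^\mu - \theta + \eta)$, where $\eta$ is a Gaussian random variable with zero mean and variance $\sigma_{\mathrm{out}}^2$. The error signal is defined as $\delta = y^\mu - y_{\mathrm{out}}$, where $\Theta(x) = 1$ for $x > 0$ and zero otherwise. After each pattern presentation, all synapses are updated. The synaptic weights of E inputs are updated according to $w_i \leftarrow \max(0,\, w_i + \Delta w_i)$ and the weights of I inputs according to $w_i \leftarrow \min(0,\, w_i + \Delta w_i)$, where $\Delta w_i = \lambda(\delta\, x_i^\mu - \gamma w_i)$, $\gamma$ is a weight decay constant, and $\lambda$ is a constant learning rate. At each learning cycle ($P$ learning time steps), all patterns are presented sequentially in a random order (randomized at each learning cycle).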
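A minimal NumPy sketch of this online rule, under an assumed form of the update: $\Delta w_i = \lambda(\delta\, x_i^\mu - \gamma w_i)$, followed by clipping E weights at zero from below and I weights at zero from above. The exact functional form, the initialization, and all parameter values are assumptions for illustration. The usage lines build a hypothetical sign-constrained teacher problem with a margin and train with `decay=0` and no noise so that zero training error can be checked; the learning rate is kept small because, with a fixed threshold, a simple convergence argument needs the step size to be small compared with the margin divided by the squared input norm.

```python
import numpy as np

def train_perceptron(X, y, is_exc, theta=1.0, sigma_out=0.0, lr=0.01,
                     decay=1e-3, n_cycles=200, seed=0):
    """Online, sign-constrained perceptron with weight decay and output noise.

    Assumed rule per presentation of pattern mu (labels y in {0, 1}):
        out   = Theta(w . x_mu - theta + noise),  noise ~ N(0, sigma_out^2)
        delta = y_mu - out
        w     = w + lr * (delta * x_mu - decay * w)
        E weights clipped to >= 0, I weights clipped to <= 0
    """
    rng = np.random.default_rng(seed)
    P, N = X.shape
    w = np.where(is_exc, 0.01, -0.01) * rng.random(N)   # small start, correct signs
    for _ in range(n_cycles):
        for mu in rng.permutation(P):                   # fresh random order each cycle
            noise = rng.normal(0.0, sigma_out)
            out = 1.0 if w @ X[mu] - theta + noise > 0 else 0.0
            delta = y[mu] - out
            w = w + lr * (delta * X[mu] - decay * w)
            w = np.where(is_exc, np.maximum(w, 0.0), np.minimum(w, 0.0))
    return w

def training_error(w, X, y, theta=1.0):
    """Fraction of patterns misclassified without noise."""
    return float(np.mean((X @ w - theta > 0).astype(float) != y))

# Hypothetical teacher problem: E weights > 0, I weights < 0, margin >= 2.
rng = np.random.default_rng(1)
N, P = 40, 6
is_exc = np.arange(N) < 20
w_teacher = np.where(is_exc, rng.random(N), -0.5 * rng.random(N))
X_all = rng.exponential(1.0, size=(500, N))
v = X_all @ w_teacher
theta_t = np.median(v)
keep = np.abs(v - theta_t) >= 2.0               # discard patterns near the boundary
X = X_all[keep][:P]
y = (v[keep][:P] > theta_t).astype(float)
w = train_perceptron(X, y, is_exc, lr=0.002, decay=0.0, n_cycles=2000)
print(training_error(w, X, y))
```

Passing a nonzero `sigma_out` and `decay` gives the noisy, weight-decaying variant used for learning in the presence of output noise.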

Figure Parameters.

Fig. 2.

In all panels, patterns have an even split between responsive and unresponsive labels.

Fig. 3.

In all panels, inputs were drawn as described in Random Patterns in Numerical Estimation of and Solutions, with an even split between responsive/unresponsive labels. Numerical results are averaged over 100 samples.

Fig. 4.

LIF parameters are as defined in Dynamics of LIF Neuron. Random patterns were drawn as described in Random Patterns in Numerical Estimation of and Solutions. No output noise was added in A–C. In D and E, output noise was added (Dynamics of LIF Neuron).

Fig. 5.

In D and F, results are averaged over 10 networks and 10 patterns from each network. See Recurrent Networks with Nonlearned Inhibition for the parameters of I connectivity of the nonlearned inhibition networks (gray lines). In G, the maximal output noise magnitude is defined as the output noise SD for which the stable pattern probability is 1/2. To minimize finite-size effects, a separate set of network parameters was used in G. Stable pattern probability for each load and noise level was estimated by averaging over five networks and 20 patterns from each network.

Fig. 6.

Random patterns are binary patterns with equal probabilities and an even split of plus and minus patterns. Learning algorithm parameters are defined in Perceptron Learning Algorithm. Results are averaged over 50 samples.

Finding Maximal $\kappa_{\mathrm{in}}$ and Maximal $\kappa_{\mathrm{out}}$ Solutions.

Here we describe how finding the maximal-$\kappa_{\mathrm{in}}$ and maximal-$\kappa_{\mathrm{out}}$ solutions can be expressed as convex conic optimization problems. This allows us to validate the theoretical results efficiently. As noted in the main text, maximizing $\kappa_{\mathrm{in}}$ is equivalent to maximizing the margin of the solution's weight vector, as is done by support vector machines (39). However, to our knowledge, the application of conic optimization tools for maximizing $\kappa_{\mathrm{out}}$ is a novel contribution of our work.
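Because maximizing $\kappa_{\mathrm{in}}$ is a margin-maximization problem, a small sign-constrained analogue can be sketched with SciPy alone: minimize $\|u\|^2$ subject to $s^\mu(u \cdot x^\mu - b) \ge 1$ (labels $s^\mu = \pm 1$), a nonnegative threshold variable $b$, and per-synapse sign bounds, so that the geometric margin is $1/\|u\|$. This is a generic quadratic-program sketch (a zero-objective LP for a feasible start, then SLSQP), not the conic formulation of Eqs. S1–S9; it omits the norm constraint and the $\kappa_{\mathrm{out}}$ objective, and the data in the usage lines are a hypothetical teacher problem.

```python
import numpy as np
from scipy.optimize import linprog, minimize

def max_margin_sign_constrained(X, y, is_exc):
    """Minimize ||u||^2 s.t. s_mu (u . x_mu - b) >= 1, b >= 0, sign bounds on u.

    Returns (u, b, margin) with geometric margin 1 / ||u||.
    """
    P, N = X.shape
    s = np.where(y, 1.0, -1.0)
    A = s[:, None] * np.hstack([X, -np.ones((P, 1))])     # A @ [u, b] = s (u.x - b)
    bounds = [(0, None) if e else (None, 0) for e in is_exc] + [(0, None)]
    # Feasible starting point from a zero-objective LP.
    lp = linprog(np.zeros(N + 1), A_ub=-A, b_ub=-np.ones(P), bounds=bounds, method="highs")
    if lp.status != 0:
        raise ValueError("no sign-constrained separating solution")
    res = minimize(
        lambda z: z[:N] @ z[:N],
        lp.x,
        jac=lambda z: np.concatenate([2.0 * z[:N], [0.0]]),
        bounds=bounds,
        constraints=[{"type": "ineq", "fun": lambda z: A @ z - 1.0, "jac": lambda z: A}],
        method="SLSQP",
        options={"maxiter": 1000, "ftol": 1e-10},
    )
    u, b = res.x[:N], res.x[N]
    return u, b, 1.0 / np.linalg.norm(u)

# Hypothetical teacher problem with sign-constrained weights.
rng = np.random.default_rng(2)
N, P = 20, 10
is_exc = np.arange(N) < 10
w_t = np.where(is_exc, rng.random(N), -0.2 * rng.random(N))
X = rng.exponential(1.0, size=(P, N))
v = X @ w_t
theta_t = np.median(v)
y = v > theta_t
teacher_margin = np.min(np.abs(v - theta_t)) / np.linalg.norm(w_t)   # rescaled teacher
u, b, margin = max_margin_sign_constrained(X, y, is_exc)
worst = np.min(np.where(y, 1.0, -1.0) * (X @ u - b))                 # should be ~1
```

The teacher, rescaled to unit functional margin, is one feasible point, so the optimized margin should be at least as large as `teacher_margin`.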

Solution weight vectors, w, with input robustness $\kappa_{\mathrm{in}}$ or output robustness $\kappa_{\mathrm{out}}$ satisfy the inequalities

| [S1] |

where and (here we assume without loss of generality that ).

For each solution w we define effective weights, u, and effective threshold [the so-called canonical weights and threshold (39)] given by

| [S2] |

| [S3] |

where is chosen such that (for either or ).

Together with the sign and norm constraints on the weights, u and must satisfy the linear constraints

| [S4] |

where if is excitatory and if is inhibitory, and the quadratic constraint

| [S5] |

which enforces the constraint .

For the effective weights and threshold, is given by and is given by . Thus, maximizing is equivalent to minimizing and maximizing is equivalent to minimizing . We therefore define a minimization cost function that is given by

| [S6] |

for the maximal-$\kappa_{\mathrm{in}}$ solution, and

| [S7] |

for the maximal-$\kappa_{\mathrm{out}}$ solution.

To find the maximal-$\kappa_{\mathrm{in}}$ or maximal-$\kappa_{\mathrm{out}}$ solution, we solve the conic program

| [S8] |

in the limit of , subject to

| [S9] |

A global regularization variable ensures the existence of a solution to the optimization problem (Eqs. S8 and S9) even when the linear constraints S4 are not realizable. In practice, it is sufficient to set its coefficient to a large constant. If the optimal value of the regularization variable is zero, the solution corresponds to the optimal perceptron solution for the classification task. If its optimal value is greater than zero, the labeled patterns are not linearly separable and there is no zero-error solution to the classification task. Given that a solution with a zero regularization variable is found, the optimal weights are obtained from the effective weights and threshold by inverting Eqs. S2 and S3.

SI Capacity for Noneven Split of Plus and Minus Patterns

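The capacity of a perceptron with no sign constraints on synaptic weights for classification of random patterns is a function of the fraction of plus patterns in the desired classification, $f_+$ (20–22), and is given by

$$\alpha_c^{-1}(f_+) = f_+ \int_{-x}^{\infty} Dt\,(t + x)^2 + (1 - f_+) \int_{x}^{\infty} Dt\,(t - x)^2,$$

where $Dt = e^{-t^2/2}\,dt/\sqrt{2\pi}$ is the Gaussian integration measure and the order parameter $x$ is given by the solution to the equation

$$f_+ \int_{-x}^{\infty} Dt\,(t + x) = (1 - f_+) \int_{x}^{\infty} Dt\,(t - x).$$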

Fig. S1A depicts the theoretical and measured capacity of our “constrained” perceptron as a fraction of the corresponding unconstrained capacity vs. the fraction of excitatory inputs, for two parameter values. Fig. S1B depicts the theoretical and measured capacity of balanced solutions as a fraction of the constrained capacity, for two parameter values.
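For reference, the unconstrained capacity with a fraction $f_+$ of plus patterns can be evaluated numerically, assuming the standard Gardner result $\alpha_c^{-1} = \min_x \big[f_+ \int_{-x}^{\infty} Dt\,(t + x)^2 + (1 - f_+) \int_{x}^{\infty} Dt\,(t - x)^2\big]$. Using $\int_a^\infty Dt\,(t - a) = \phi(a) - aH(a)$ and $\int_a^\infty Dt\,(t - a)^2 = (1 + a^2)H(a) - a\phi(a)$, with $\phi$ the Gaussian density and $H$ its upper tail, the stationarity condition becomes $f_+[\phi(x) + x\Phi(x)] = (1 - f_+)[\phi(x) - xH(x)]$. Its left-minus-right side increases monotonically in $x$, so the sketch solves it by bisection. At $f_+ = 1/2$ it recovers $\alpha_c = 2$.

```python
import math

def phi(x):
    """Standard Gaussian density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def Phi(x):
    """Standard Gaussian CDF."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))

def H(x):
    """Standard Gaussian upper tail, 1 - Phi(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def optimal_shift(f, lo=-20.0, hi=20.0, tol=1e-13):
    """Solve f (phi + x Phi) = (1 - f)(phi - x H) for x by bisection."""
    F = lambda x: f * (phi(x) + x * Phi(x)) - (1.0 - f) * (phi(x) - x * H(x))
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if F(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def unconstrained_capacity(f):
    """Gardner capacity of an unconstrained perceptron with plus fraction f."""
    x = optimal_shift(f)
    inv = (f * ((1 + x * x) * Phi(x) + x * phi(x))
           + (1 - f) * ((1 + x * x) * H(x) - x * phi(x)))
    return 1.0 / inv

print(unconstrained_capacity(0.5), unconstrained_capacity(0.2))
```

Biased labels ($f_+ \neq 1/2$) raise the unconstrained capacity above 2, and the result is symmetric under $f_+ \to 1 - f_+$.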

SI Effects of E and I Input Statistics

Our results depend, of course, on parameters, but in a fairly reduced way. In particular, the properties we discuss depend on the ratio of the inputs' standard deviations and the ratio of their coefficients of variation (Replica Theory for Sign- and Norm-Constrained Perceptron). As discussed in the main text, the ratio of standard deviations determines the optimal fraction of excitatory synapses (Eq. 2 in the main text). Thus, the shape of the phase diagram changes with this ratio (Fig. S2A). The ratio of coefficients of variation has more subtle effects. We note here its main effects on the maximal-$\kappa_{\mathrm{in}}$ and maximal-$\kappa_{\mathrm{out}}$ solutions.

Balanced and Unbalanced Maximal Solutions.

The maximal solutions can be either balanced or unbalanced, depending on and the value of (example in Fig. S2B). Importantly, for a wide range of reasonable parameters [for example, and ] the solution is unbalanced for all values of .

Fraction of “Silent” Weights in Maximal $\kappa_{\mathrm{in}}$ and Maximal $\kappa_{\mathrm{out}}$ Solutions.

As noted in previous studies (25, 27), a prominent feature of “critical” solutions with sign-constrained weights, such as the maximal-$\kappa_{\mathrm{in}}$ and maximal-$\kappa_{\mathrm{out}}$ solutions, is that a finite fraction of the synapses are silent; i.e., $w_i = 0$. Our theory allows us to derive the full distribution of synaptic efficacies (Distribution of synaptic weights) and to calculate the fraction of silent weights for each solution. For the maximal-$\kappa_{\mathrm{out}}$ solutions in the unbalanced regime (loads above the balanced capacity), the fraction of E (I) silent weights is always larger (smaller) than 1/2 (Fig. S2C). However, in the balanced regime (loads below the balanced capacity), the qualitative behavior depends on the input statistics (Fig. S2C). Interestingly, for unbalanced maximal-$\kappa_{\mathrm{in}}$ solutions, the fraction of silent weights is constant and equals 1/2 for both E and I inputs (Saddle-point equations for the maximal $\kappa_{\mathrm{in}}$ solution and Distribution of synaptic weights).

Tuning Properties of Cortical Neurons Suggest That in Cortex .

In cortical circuits, I neurons tend to fire with higher firing rates and are thought to be more broadly tuned than E neurons, implying, under reasonable assumptions, that both and are greater than 1, leading to .

To see this, we consider input neurons with Gaussian tuning curves to some external stimulus variable $s$; i.e., the mean response, $r_i(s)$, of neuron $i$ to stimulus $s$ is given by

$$r_i(s) = a_i \exp\left(-\frac{(s - s_i)^2}{2\Delta_i^2}\right), \qquad [S10]$$

where , , and characterize the response properties of the neuron. Assuming that is distributed uniformly and, for simplicity, that , the mean and variance of the neurons’ responses are given by

| [S11] |

and

| [S12] |

where we neglect terms of higher order. We now assume that the tuning parameters take one set of values for E neurons and another for I neurons. Further, we assume that I neurons respond with higher firing rates and are more broadly tuned than E neurons. In this case we have

| [S13] |

and

| [S14] |
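Both conclusions (a larger SD for I inputs and a larger CV for E inputs) can be checked numerically for Gaussian tuning curves. The sketch averages hypothetical tuning curves over a stimulus drawn uniformly from $[-L/2, L/2]$ with the preferred stimulus at the center, and compares the result with the narrow-tuning approximations mean $\approx a\Delta\sqrt{2\pi}/L$ and second moment $\approx a^2\Delta\sqrt{\pi}/L$ (exact up to exponentially small tails when $\Delta \ll L$). Under these approximations the SD scales as $a\sqrt{\Delta}$ and the CV falls with $\Delta$, so higher-rate, more broadly tuned I neurons give both ratios above 1. The amplitudes, widths, range, and the orientation of the two ratios (I over E for SDs, E over I for CVs) are assumptions chosen for illustration.

```python
import numpy as np

def tuning_stats(amp, width, L=100.0, n=200_001):
    """Mean and SD of amp * exp(-s^2 / (2 width^2)) for s uniform on [-L/2, L/2]."""
    s = np.linspace(-L / 2, L / 2, n)
    r = amp * np.exp(-s**2 / (2.0 * width**2))
    return r.mean(), r.std()

def narrow_tuning_approx(amp, width, L=100.0):
    """Leading-order mean and SD for width << L."""
    mean = amp * width * np.sqrt(2.0 * np.pi) / L
    second = amp**2 * width * np.sqrt(np.pi) / L
    return mean, np.sqrt(second - mean**2)

# Hypothetical parameters: I neurons fire 3x higher and are 2x more broadly tuned.
mE, sdE = tuning_stats(amp=1.0, width=5.0)
mI, sdI = tuning_stats(amp=3.0, width=10.0)
sd_ratio = sdI / sdE                      # ratio of SDs (I over E)
cv_ratio = (sdE / mE) / (sdI / mI)        # ratio of CVs (E over I)
print(sd_ratio, cv_ratio)
```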

SI Recurrent Networks with Nonlearned Inhibition

In our basic model for an associative memory network we assume that the activity of both E and I neurons is specified in the desired memory states and that all network connections are learned. Both of these assumptions can be modified, creating new scenarios with different computational properties.

First, we assume that memory state is specified only by the activity of E neurons and that the memory is recalled when the activity of E neurons matches the memory state regardless of the activity of I neurons. The problem of learning in such a network is computationally hard since the learning needs to optimize the activity of the I neurons, using the full connectivity matrix. In our work we do not address this scenario. Instead we forgo the assumption that E and I connections onto I neurons are learned and replace them with randomly chosen connections, i.e., assume that E to I and I to I connections are not learned and random.

Choosing Random Synapses for I Neurons.

In this scenario the activity of I neurons is determined by the network dynamics. We consider random I to I and E to I weights with specified means and standard deviations. We examine the distribution of the I neurons' membrane potentials, given that the activity of the E neurons is held at a memory state in which a fixed number of E neurons are active. When the number of inputs is large, this distribution is Gaussian, and we assume that correlations are weak. Thus, the mean activity $m_I$ of the I neurons is the probability that the membrane potential is above threshold and is given by the equation

$$m_I = H\left(\frac{\theta - \mu_I}{\sigma_I}\right), \qquad [S15]$$

where $H(x) = \int_x^{\infty} e^{-z^2/2}\,dz/\sqrt{2\pi}$, and $\mu_I$ and $\sigma_I^2$ are the mean and variance of the membrane potential of I neurons, respectively.

On the other hand, given the mean activity $m_I$, the mean and variance of the membrane potentials are given by

| [S16] |

| [S17] |

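Together, Eqs. S15–S17 define the relations between the mean I activity, the mean and variance of the I membrane potential, and the statistics of the I to I and E to I weights.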
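These relations form a self-consistency problem for the mean I activity. The sketch below solves $m = H\big((\theta - \mu(m))/\sigma(m)\big)$ by bisection on $[0, 1]$ for user-supplied functions $\mu(m)$ and $\sigma^2(m)$; a root is always bracketed because $0 < H < 1$. The mean and variance used in the usage lines are hypothetical forms (a clamped E drive plus recurrent inhibition from I neurons that are each active with probability $m$, with independent weights), not a reproduction of Eqs. S16 and S17, and all parameter values are illustrative.

```python
import math

def H(x):
    """Gaussian tail probability P(z > x) for z ~ N(0, 1)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def mean_field_activity(mu, var, theta, tol=1e-12):
    """Solve m = H((theta - mu(m)) / sqrt(var(m))) for m in [0, 1] by bisection."""
    g = lambda m: m - H((theta - mu(m)) / math.sqrt(var(m)))
    lo, hi = 0.0, 1.0                 # g(0) < 0 and g(1) > 0 because 0 < H < 1
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical statistics: K_E clamped active E inputs and N_I I inputs, each
# I neuron active with probability m; weights independent with the given mean/SD.
K_E, N_I = 100, 200
J_EI, s_EI = 0.05, 0.02               # mean and SD of E-to-I weights (assumed)
J_II, s_II = 0.10, 0.01               # mean and SD of |I-to-I| weights (assumed)
mu = lambda m: K_E * J_EI - N_I * m * J_II
# Var(w S) = (s^2 + J^2) m - J^2 m^2 for S ~ Bernoulli(m) independent of w.
var = lambda m: K_E * s_EI**2 + N_I * ((s_II**2 + J_II**2) * m - (J_II * m) ** 2)
m_I = mean_field_activity(mu, var, theta=1.0)
print(m_I)
```

The inverse question posed in the text (choosing E-to-I statistics for a target activity) can be handled the same way by bisecting over the E-to-I mean instead of over $m$.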

In our simulations, we fix the target mean I activity and the mean and variance of the I to I connections, and we choose the mean and variance of the E to I connections according to the solution of Eq. S15. In particular, we choose an I network with binary weights in which each I neuron projects to another I neuron with a fixed probability and synaptic efficacy. Each E neuron projects to an I neuron with a fixed probability, with a synaptic efficacy that ensures that the mean I activity level at the memory states equals its target value.

In this parameter regime, the I subnetwork exhibits asynchronous activity at the E memory states, with mean activity equal to the target value. However, different memory states lead to different asynchronous states.

Training Set Definition.

E neurons need to learn to remain stationary at the desired memory states, given the network activity at this state. However, since the activity of the I subnetwork is not stationary at the desired memory states, the training set for learning is not well defined.

To properly define the training set, we sample instances of the generated I activity for each memory state while the activity of the E neurons is clipped to this memory state. Sampling was performed by running the I network dynamics and recording the state of the I neurons after time steps. We then use the sampled activity patterns together with the E memory states as an extended training set (with patterns) for the E neurons.
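The construction of the extended training set can be sketched as follows. This is a minimal illustration under stated assumptions: binary I neurons updated synchronously with a fixed threshold `theta_i`, a random initial I state, and small hypothetical network sizes. The update rule, sizes, and all names are ours, not the authors' simulation code.

```python
import numpy as np

def run_i_network(x_e, w_ie, w_ii, theta_i, n_steps, rng):
    """Run binary I dynamics with E activity clamped to x_e; return the final I state.

    Assumptions (hypothetical): synchronous updates, random initial I state,
    an I neuron is active when its total input exceeds theta_i.
    """
    s_i = (rng.random(w_ii.shape[0]) < 0.5).astype(float)
    drive_e = w_ie @ x_e                      # E input is constant while E is clamped
    for _ in range(n_steps):
        s_i = (drive_e + w_ii @ s_i > theta_i).astype(float)
    return s_i

def build_extended_training_set(memories, w_ie, w_ii, theta_i,
                                n_samples, n_steps, rng):
    """Pair each E memory state with n_samples sampled I states.

    Returns inputs of shape (P * n_samples, N_E + N_I) and targets of shape
    (P * n_samples, N_E); column k of the targets holds E neuron k's labels.
    """
    inputs, targets = [], []
    for x_e in memories:
        for _ in range(n_samples):
            s_i = run_i_network(x_e, w_ie, w_ii, theta_i, n_steps, rng)
            inputs.append(np.concatenate([x_e, s_i]))
            targets.append(x_e.copy())
    return np.array(inputs), np.array(targets)

# Usage with hypothetical sizes: 100 E neurons, 25 I neurons, 5 memories.
rng = np.random.default_rng(0)
n_e, n_i, p_mem = 100, 25, 5
memories = (rng.random((p_mem, n_e)) < 0.2).astype(float)
w_ie = 0.05 * (rng.random((n_i, n_e)) < 0.5)       # random binary E to I weights
w_ii = -0.05 * (rng.random((n_i, n_i)) < 0.5)      # random binary I to I weights
X, Y = build_extended_training_set(memories, w_ie, w_ii, theta_i=0.3,
                                   n_samples=4, n_steps=20, rng=rng)
```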

Learned Network Stability.

The non-fixed-point dynamics of the I subnetwork imply that convergence of the learning on the training set does not guarantee that the memory states themselves are dynamically stable, in contrast to our prior model in which I neurons learn their synaptic weights. Therefore, after training we measure the probability that patterns are stable. This is done by the following procedure: First, we run the network dynamics (with ) while the E neurons’ activity is clipped to the memory state, for time steps. We then release the E neurons to evolve according to the natural network dynamics and observe whether their activity remains in the vicinity of the memory state for time steps. In a similar way we test the basins of attraction, starting the E network from a distorted version of the memory state instead of the memory state itself.
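The clamp-then-release test can be written generically in terms of one-step update rules for the two populations. In this sketch `step_i` and `step_e` are hypothetical callables standing in for the network dynamics, and "vicinity" is measured as the fraction of E neurons that differ from the memory state; the tolerance and the distortion procedure are our assumptions.

```python
import numpy as np

def distort(x, frac, rng):
    """Flip a fraction `frac` of the binary entries of x (used for basin tests)."""
    y = x.copy()
    idx = rng.choice(x.size, size=int(round(frac * x.size)), replace=False)
    y[idx] = 1.0 - y[idx]
    return y

def free_run_distances(x_mem, x_start, s_i0, step_i, step_e, t_clamp, t_free):
    """Clamp E to x_start while I evolves, then release E and track recall.

    step_i(x_e, s_i) and step_e(x_e, s_i) are hypothetical one-step update
    rules. Returns, for each free-running step, the fraction of E neurons
    that differ from the memory state x_mem.
    """
    s_i = s_i0.copy()
    for _ in range(t_clamp):
        s_i = step_i(x_start, s_i)            # E held fixed at x_start
    x_e = x_start.copy()
    distances = []
    for _ in range(t_free):
        # synchronous update: both right-hand sides use the previous state
        x_e, s_i = step_e(x_e, s_i), step_i(x_e, s_i)
        distances.append(float(np.mean(x_e != x_mem)))
    return distances
```

For the stability test one would start from `x_start = x_mem` and require `max(distances)` to stay below a small tolerance; for the basin test one would start from `distort(x_mem, frac, rng)` and check the final entries.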

Learning Only E to E Connections.

First, we consider the case in which I to E connections are random: Each I neuron projects to an E neuron with probability with synaptic efficacy . We then try to find appropriate E to E connections, using the learning scheme described above. We find that the pattern-to-pattern fluctuations in the I feedback, due to the variance of the I to E connections and the variability of the I neurons’ activation, are substantial and of the same order as the signal differentiating the memory states. In fact, in this scenario the parameters we consider (, , , , ) are above the system’s memory capacity, and we are unable to find E weights that implement the desired memory states for the training set. We conclude that this form of balancing I feedback is too restrictive due to the heterogeneity of I to E connections and the variability of I neurons’ activity.

Learning Both E to E and I to E Connections.

In this scenario we find the maximal solution for the extended training set described above. For the parameters used (, , ) we are able to find solutions that implement all of the desired memory states for the extended training set. In addition, we find that the E memory states are dynamically stable with very high probability (we did not observe any unstable pattern). For numerical results, see Figs. 5 and S5.
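The learning scheme itself is described elsewhere. As a stand-in that shows what learning E to E and I to E weights under sign constraints involves, the sketch below uses a classic perceptron-style rule in which any weight that crosses zero is clipped back to zero. This is a swapped-in illustration, not necessarily the authors' algorithm, and it finds some solution with margin `kappa` rather than the maximal solution. Each E neuron would be trained separately on its own column of labels; the function name and parameters are hypothetical.

```python
import numpy as np

def train_sign_constrained(X, y, signs, theta, eta=0.01, kappa=0.0, max_epochs=1000):
    """Perceptron-style learning with sign constraints (illustrative stand-in).

    X: (P, N) inputs; y: (P,) desired outputs in {0, 1};
    signs: (N,) +1 for E afferents, -1 for I afferents.
    Returns the weights and whether every pattern satisfies the margin kappa.
    """
    w = np.zeros(X.shape[1])
    s = 2 * np.asarray(y) - 1                       # map {0, 1} targets to {-1, +1}
    for _ in range(max_epochs):
        n_errors = 0
        for x_mu, s_mu in zip(X, s):
            if s_mu * (w @ x_mu - theta) <= kappa:  # wrong side of threshold or inside the margin
                w += eta * s_mu * x_mu
                w = signs * np.maximum(signs * w, 0.0)  # clip sign-violating weights to zero
                n_errors += 1
        if n_errors == 0:
            return w, True
    return w, False

# Toy task: one E afferent (index 0) and one I afferent (index 1).
X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
y = np.array([1, 0, 0])
w, converged = train_sign_constrained(X, y, np.array([1.0, -1.0]), theta=0.5, eta=0.1)
```

Clipping rather than reflecting keeps every update inside the sign-constrained region, which is the region over which the Gardner volume below is defined.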

SI Replica Theory for Sign- and Norm-Constrained Perceptron

We use the replica method (75) to calculate the system’s typical properties. For the perceptron architecture the replica symmetric solution has been shown to be stable and exact (20–22).

Given a set of patterns, and desired labels for , the Gardner volume is given by

| [S18] |

where is the Heaviside step function and is an integration domain obeying the sign and norm constraint .
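For small N, the Gardner volume can be made concrete as the fraction of admissible weight vectors that solve all classifications. The sketch below estimates this fraction by Monte Carlo. It assumes, for illustration only, that the norm constraint is a sphere of squared radius N, that labels are in {0, 1}, and that a pattern is correctly classified when the signed distance from threshold is positive; these choices and all names are ours.

```python
import numpy as np

def gardner_fraction(X, y, signs, theta, n_samples=100_000, seed=0):
    """Monte Carlo estimate of the fraction of sign-constrained weights solving the task.

    Weights are drawn uniformly in direction within the sign orthant
    (E weights >= 0, I weights <= 0) and rescaled so that |w|^2 = N.
    """
    rng = np.random.default_rng(seed)
    n = signs.size
    w = np.abs(rng.standard_normal((n_samples, n))) * signs
    w *= np.sqrt(n) / np.linalg.norm(w, axis=1, keepdims=True)
    s = 2 * np.asarray(y) - 1                        # labels {0, 1} -> {-1, +1}
    margins = s[None, :] * (w @ X.T - theta)         # shape (n_samples, P)
    return float(np.mean(np.all(margins > 0, axis=1)))
```

Because the weight samples depend only on `seed`, adding patterns with the same seed can only shrink the estimate, mirroring how the Gardner volume decreases with load.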

We assume that input patterns and labels are drawn independently, with the inputs drawn from distributions with nonnegative means and SD . Labels are independently drawn from a binary distribution with and .

We handle both input and output robustness criteria by using different for each case,

| [S19] |

where and are dimensionless numbers representing the input robustness in units of and the output robustness in units of , respectively.

Further, we define the parameters

| [S20] |

and

| [S21] |

The Order Parameters.

We calculate the mean logarithm of the Gardner volume averaged over the E and I input distributions and the desired label distribution. The calculation expresses as a stationary-phase integral over a free energy that is a function of several order parameters. The values of the order parameters are determined by the saddle-point equations of the free energy.

In our model the saddle-point equations are a system of six equations for the six order parameters: , and .

The order parameters have a straightforward physical interpretation.

The parameter is the mean typical correlation coefficient between the s elicited by two different solutions to the same classification task: Given two typical solution weight vectors and , is given by

| [S22] |

where if is E and if is I.

Given a typical solution w, the physical interpretation of and is given by

| [S23] |

| [S24] |

The norm constraint on the weights is satisfied as long as

| [S25] |

Thus, there are two types of solutions: one in which the value of is determined by the saddle-point equation (unbalanced solutions) and the other in which is clipped to its lower-bound value (balanced solutions). Note that and remain of order 1 for any scaling of while scales as when is of order and is of order when is of order 1.

The physical interpretation of can be expressed through the relation

| [S26] |

where if is E and if is I.

Summary of Main Results.

Before describing the full saddle-point (SP) equations and their various solutions in detail, we provide a brief general summary of the results, which conveys the flavor of the derivations to the interested reader.

Since is bounded from below by , we have two sets of SP equations which we term the balanced and the unbalanced sets. In both sets, given the free energy , five of the SP equations are given by

| [S27] |

The sixth equation is

| [S28] |

in the unbalanced set and is

| [S29] |

in the balanced set. Importantly, we find that Eq. S28 has solutions only when , which implies , and Eq. S29 implies , justifying the naming of the two sets. The solutions to the two sets of SP equations define the range of possible values of , , and that permits the existence of solution weight vectors. There are a number of interesting cases that we analyze below.

We first consider the solutions of the SP equations for zero and . In this case the SP describes the typical solutions that dominate the Gardner volume. Since the -dim. volume of balanced solutions with is exponentially larger than the volume of unbalanced solutions with , we expect that balanced solutions will dominate the Gardner volume whenever they exist. Indeed, solving the two sets of SP equations we find that solutions to the balanced set exist only for while solutions for the unbalanced set exist only for .

Next, we examine the values of that permit solutions to the balanced and unbalanced sets of SP equations. Importantly, we show that the unbalanced set can be solved only for . Thus, unbalanced solutions cannot have of ; conversely, all solutions with of are balanced.

Of particular interest are the so-called critical solutions for which . In this limit the typical correlation coefficient between the s elicited by two different solutions to the same classification task approaches unity, which implies that only one solution exists and the Gardner volume shrinks to zero. Thus, for a given or , the value of for which is the maximal load for which solutions exist. In this case, the SP describes the properties of the maximal or solutions.

The structure of the equations in this limit is relatively simple. First, the order parameter is given by the solutions to

| [S30] |

with the robustness parameter being or and the integration measure, , is given by . Second, we find a simple relation between critical loads of the constrained perceptron considered here and the critical loads of the classic unconstrained perceptron, :

| [S31] |

is given by

| [S32] |

which is indeed the critical load of an unconstrained perceptron with a given margin (21). Finding the critical load is then reduced to solving for the order parameter . For each value of or only one set of SP equations can be solved, determining whether the maximal or solutions are balanced or unbalanced. By examining the range of solutions for each set we can find the value of and for any and determine that (i) the maximal solution is balanced for and unbalanced for and (ii) for a wide range of parameters the maximal is unbalanced for all . In addition, we find that for , is given by

| [S33] |

where is finite and larger than zero when approaches from above and approaches zero when approaches . The above result implies that output robustness can be increased when the tuning of the input is increased. As we discuss in the main text in the context of neuronal selectivity in purely E circuits, sparse input activity is one way to increase the input tuning. If we consider sparse binary inputs with mean activity level , the output robustness will be given by .
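Eq. S32 is identified above with the critical load of an unconstrained perceptron with a given margin (21). For reference, the standard Gardner result for margin $\kappa$ is $\alpha_c(\kappa) = \left[\int_{-\kappa}^{\infty} Dt\,(t+\kappa)^2\right]^{-1}$ with $Dt = e^{-t^2/2}\,dt/\sqrt{2\pi}$, and the integral has the closed form $(1+\kappa^2)\,\Phi(\kappa) + \kappa\,\phi(\kappa)$. The sketch below evaluates it; matching the symbols of Eq. S32 to this form is our assumption, and the function names are hypothetical.

```python
import math

def gaussian_pdf(t):
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)

def gaussian_cdf(t):
    return 0.5 * math.erfc(-t / math.sqrt(2.0))

def critical_load_unconstrained(kappa):
    """Gardner capacity alpha_c(kappa) = 1 / int_{-kappa}^inf Dt (t + kappa)^2.

    Uses the closed form of the Gaussian integral:
    (1 + kappa^2) * Phi(kappa) + kappa * phi(kappa).
    """
    integral = (1.0 + kappa ** 2) * gaussian_cdf(kappa) + kappa * gaussian_pdf(kappa)
    return 1.0 / integral
```

At zero margin the integral equals 1/2, recovering the classic capacity of 2, and the capacity decreases monotonically as the margin grows.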

Finally, we consider the solutions of the SP equations in the critical limit () for . In this limit the SP describes the capacity and balanced capacity. We note that for , (and as a result ) is independent of all of the other order parameters, simplifying the equations. In this case, we have only two coupled SP equations (for the order parameters , , and ), given by

| [S34] |

| [S35] |

where we defined the functions

| [S36] |

| [S37] |

For the balanced SP equations we have and for the unbalanced SP equations, Eq. S28 reduces to . Finally, is given by Eq. S31.

For the unbalanced set () we have and . We immediately get and

| [S38] |

This solution suggests that at capacity the solutions are unbalanced and that capacity as a function of is constant with

| [S39] |

However, this solution is valid only as long as , which is true only as long as

| [S40] |

which implies

| [S41] |

For the solution of the balanced set, terms with can be neglected, and we have the equation

| [S42] |

for the order parameter . and are given by

| [S43] |

| [S44] |

This solution gives us the balanced capacity line

| [S45] |

where is given by the solution of Eq. S42 and is given by Eqs. S32 and S30 with .

Detailed Solutions of the SP Equations.

Below we provide the SP equations and their solutions under various conditions. We also provide the derived form of the distributions of synaptic weights for critical solutions.

The general SP equations.

We define the following:

| [S46] |

| [S47] |

| [S48] |

| [S49] |

| [S50] |

| [S51] |

| [S52] |

| [S53] |

| [S54] |

| [S55] |

| [S56] |

| [S57] |

| [S58] |

| [S59] |

| [S60] |

| [S61] |

The SP equations are given by

| [S62] |

| [S63] |

| [S64] |

| [S65] |

| [S66] |

| [S67] |

It is important to note the relation between and . is of the order of ( remains of order 1 under all conditions). Thus, for unbalanced solutions and is of while for balanced solutions is of and is of and can be neglected.

SP equations for typical solutions.

For typical solutions we solve the SP equations for or , leading to . In this case we have and thus

| [S68] |

and the SP equation for is

| [S69] |

We now can solve the SP equations for the unbalanced case with

| [S70] |

and for the balanced case with

| [S71] |

We find that for a solution exists only for the balanced set of equations, while for a solution exists only for the unbalanced set. Thus, typical solutions are balanced below and unbalanced above it. The norm of the weights and the IB depicted in Fig. 2 B and C are given by

| [S72] |

| [S73] |

Solutions with significant are balanced.

In this section we show that all unbalanced solutions have output robustness of order and, equivalently, solutions with of order 1 are balanced.

Theorem.

All unbalanced solutions have output robustness of the order of

Proof.

In the case of output robustness we have and thus . We are looking for unbalanced solutions so we have the equations

| [S74] |

| [S75] |

Both equations must be satisfied and therefore we have (using Eqs. S65, S74, and S75)

| [S76] |

Performing the derivatives, we get

| [S77] |

Now, we use Eq. S74, leading to

| [S78] |

where we defined as

| [S79] |

which remains of . Thus, we are left with

| [S80] |

Note that and the first term is negative (nonzero). The other two terms scale as and therefore the equation can be satisfied only if .

SP equations for critical solutions.

To find the capacity, balanced capacity, and solutions with maximal output and input robustness we consider the limit .

We define

| [S81] |

and thus in this limit is given by

| [S82] |

In addition,

| [S83] |

| [S84] |

| [S85] |

| [S86] |

and, in the limit we have

| [S87] |

| [S88] |

| [S89] |

| [S90] |

We now write the final form of the SP equations for critical solutions:

| [S91] |

| [S92] |

| [S93] |

| [S94] |

| [S95] |

Finally is given by

| [S96] |

Capacity and balanced capacity.

The capacity is obtained for and . In this case both and are zero, and Eq. S95 has two possible solutions:

| [S97] |

for unbalanced solutions or

| [S98] |

for balanced solutions. In both cases we have .

Unbalanced solution.

The SP equations become

| [S99] |

| [S100] |

| [S101] |

and the capacity is given by

| [S102] |

where is given by .

This solution is valid only when is larger than its lower bound, which is guaranteed in the large limit as long as . Using Eq. S100, this entails that

| [S103] |

with

| [S104] |

or conversely

Balanced solution.