Abstract

Humans, like many other species, employ three fundamental forms of strategies to navigate: allocentric, egocentric, and beacon. Here, we review each of these different forms of navigation with a particular focus on how our high-resolution visual system contributes to their unique properties. We also consider how we might employ allocentric and egocentric representations, in particular, across different spatial dimensions, such as 1-D vs. 2-D. Our high-acuity visual system also leads to important considerations regarding the scale of space we are navigating (e.g., smaller, room-sized “vista” spaces or larger city-sized “environmental” spaces). We conclude that a hallmark of human spatial navigation is our ability to employ these representational systems in a parallel and flexible manner, and that these systems differ as a function of both dimension and spatial scale.

Keywords: Spatial navigation, hippocampus, parahippocampal cortex, retrosplenial cortex, place cell, electrophysiology, fMRI, neurophysiology, lesion, allocentric, egocentric, virtual reality, non-human primate, rodent

Much of our knowledge about navigation, particularly its neural basis, derives from studies in rodents [1]. How we navigate, however, differs fundamentally from how these mammals navigate: we are highly visual creatures, and vision, under normal situations, forms a more critical foundation for how we represent space than it does for rodents [2]. At the same time, we share with rodents the basic strategies, and access to similar forms of representation, that we employ to navigate. In this review, we will focus on the cognitive and behavioral basis of human spatial navigation. We will base much of our discussion on the idea that, like the rodent, we use three fundamental strategies to get to our goal: allocentric, egocentric, and beacon. Because of the advantages that our high-acuity visual system confers on navigation, we will also consider how this impacts our ability to represent different dimensions (1-D to 3-D) and scales of space, such as room- vs. city-sized environments.

Tolman first argued for the importance of an allocentric representation to navigation in the rodent in the context of the cognitive map [3]. As elaborated on later by many others [4–8], an allocentric representation is referenced outside of one’s current body position, most often to multiple landmarks external to the navigator (Figure 1a). In 2-D space (e.g., Figure 2), mathematically at least, this involves a minimum of three such landmarks because these are needed to define a plane in X-Y space (alternatively, a boundary and landmark will also suffice because a line and a point can also define a 2-D plane) [7]. The “purest” form of an allocentric representation emerges when we draw a cartographic map of an environment because these are not possible without detailed knowledge of the relative directions and distances of stationary landmarks [9–13]. Other tasks, such as the widely used judgments of relative direction (JRD) task [12, 14–16], also involve some use of an allocentric representation because the task requires reference to the positions of landmarks relative to each other [17]. Specifically, in this task, participants imagine themselves standing at one location, facing a second, and point to a third location. Thus, two primary assays to determine whether participants employ allocentric coordinates are map drawing and the JRD task.
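The geometry of a JRD trial can be made concrete with a short sketch. The code below is only an illustration under our own assumptions: the landmark names and coordinates are hypothetical, the function name `jrd_angle` is ours, and it is not the scoring procedure used in any of the cited studies. It computes the correct pointing response from landmark coordinates alone, and shows that the answer is unchanged when the whole layout is shifted, which is the sense in which the task depends on landmark-to-landmark relations rather than on the navigator's actual position.

```python
import math

def jrd_angle(standing, facing, target):
    """Correct pointing response (degrees) for one JRD trial.

    The participant imagines standing at `standing`, facing `facing`,
    and points to `target`. Positive values mean the target lies to the
    right (clockwise) of the imagined heading; negative values, to the left.
    """
    # Direction of the imagined heading and of the target, both measured
    # counterclockwise from the +x axis.
    heading = math.atan2(facing[1] - standing[1], facing[0] - standing[0])
    to_target = math.atan2(target[1] - standing[1], target[0] - standing[0])
    # Clockwise offset of the target from the heading, wrapped to [-180, 180).
    diff = math.degrees(heading - to_target)
    return (diff + 180.0) % 360.0 - 180.0

# Hypothetical landmark layout (arbitrary units); not taken from any study.
landmarks = {"bakery": (0.0, 0.0), "bank": (0.0, 10.0), "cafe": (10.0, 0.0)}

# "Imagine standing at the bakery, facing the bank. Point to the cafe."
answer = jrd_angle(landmarks["bakery"], landmarks["bank"], landmarks["cafe"])

# Shifting every landmark by the same offset leaves the answer unchanged.
shifted = {name: (x + 5.0, y - 3.0) for name, (x, y) in landmarks.items()}
answer_shifted = jrd_angle(shifted["bakery"], shifted["bank"], shifted["cafe"])
print(answer, answer_shifted)
```

In an actual experiment, pointing error would be the angular difference between this value and the participant's response; how that error is aggregated is a design choice we leave open here.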

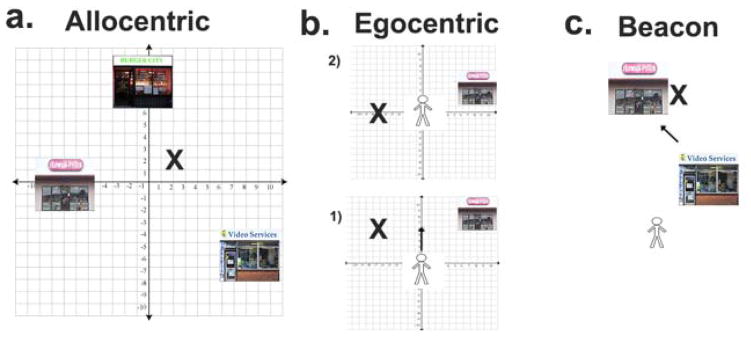

Figure 1.

a. Allocentric navigation: The navigator treats the location of the target (“x”) as a coordinate on a 2-D plane defined by three landmarks (stores). The coordinates in allocentric space are constant as long as the landmarks remain stable. b. Egocentric navigation: The coordinates of the target location (“x”) change continuously as the navigator moves from location 1 to location 2. In other words, egocentric coordinates change continuously as a function of displacement. c. Beacon/response navigation: The navigator uses the visible locations of stores to find the target. Finding the target is simply based on using its size on the retina to gauge the relative distance of the target. Thus, it is not necessary to encode or retrieve a spatial representation or coordinate system when using beacon navigation.

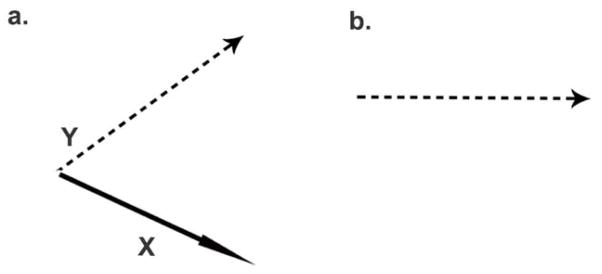

Figure 2.

a. Spatial representation in two dimensions (ignoring the vertical [Z] dimension). b. Spatial representation in one dimension. When used to code time, we refer to this as the mental timeline.

Landmarks themselves, however, are not necessary for an allocentric representation. The surrounding spatial geometry, like a square or rectangle shape defined by the boundaries of an environment, can also serve as a powerful cue for organizing externally referenced knowledge [15, 18–21]. For example, when participants perform the JRD task, they tend to point more accurately when they are aligned (parallel) with the major axis of the surrounding environmental boundaries, like a rectangle, compared to when they are misaligned with these axes. Numerous studies have replicated this advantage in pointing accuracy when aligned with the spatial boundaries, and it has held across a variety of testing conditions [15, 18–23]. Thus, while past theoretical proposals have conceptualized allocentric representations as largely dependent on multiple landmarks [4, 7], decades of work in human spatial navigation have demonstrated that the surrounding spatial geometry defined by environment boundaries can also serve as a powerful cue for organizing an allocentric coordinate system.

Another form of spatial representation, arguably more commonly used in everyday situations like reaching for an object or remembering where a chair is in the room, is the egocentric representation [7]. Egocentric representations involve reference to our current body position; for example, a chair may be located 30 feet in front of us, about 10 degrees off our current facing direction (Figure 1b). As suggested in numerous studies of human spatial cognition [16, 24, 25], we often employ egocentric forms of representation for avoiding collisions with objects and navigating our immediate, peripersonal space. Consistent with this notion, several studies suggest that egocentric representations tend to be high-resolution visual “snapshots” linked to our current bearing [16, 24]. By taking a series of these high-resolution, static, body-referenced snapshots, we can integrate them together to form a single coherent egocentric representation linked to our current location in space [26] (Figure 1b). Each of these representations can then be updated as we move throughout an environment (Figure 1b), forming the basis for a system of vector addition called path integration [17, 27]. However, during disorientation [16, 24, 28], or when moving through large-scale environments [29], these representations degrade, necessitating other forms of representation, like an allocentric one.
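The contrast between a stable allocentric coordinate and a continuously changing egocentric one, and the role of vector addition in updating position, can be sketched in a few lines. This is a minimal, idealized illustration under our own assumptions (a noise-free navigator, compass-style headings measured clockwise from the +y axis, hypothetical function names and coordinates); it is not a model of how humans actually perform path integration, in which error accumulates with each step.

```python
import math

def egocentric(navigator_xy, heading_deg, target_xy):
    """Distance and bearing of a target relative to the navigator's body.

    `heading_deg` is the facing direction, measured clockwise from the +y
    axis. The returned bearing is in [-180, 180); positive means the target
    is to the navigator's right, negative to the left.
    """
    dx = target_xy[0] - navigator_xy[0]
    dy = target_xy[1] - navigator_xy[1]
    distance = math.hypot(dx, dy)
    absolute = math.degrees(math.atan2(dx, dy))  # clockwise from +y
    bearing = (absolute - heading_deg + 180.0) % 360.0 - 180.0
    return distance, bearing

def path_integrate(start_xy, steps):
    """Estimate position by summing displacement vectors (vector addition).

    `steps` is a sequence of (heading_deg, distance) pairs, standing in for
    the self-motion information available to the navigator.
    """
    x, y = start_xy
    for heading_deg, dist in steps:
        x += dist * math.sin(math.radians(heading_deg))
        y += dist * math.cos(math.radians(heading_deg))
    return x, y

# Hypothetical chair whose allocentric coordinates never change.
chair = (0.0, 30.0)

# Before moving: the chair is 30 units straight ahead.
print(egocentric((0.0, 0.0), 0.0, chair))

# Walk 10 units "north", then 10 units "east"; position follows by addition.
position = path_integrate((0.0, 0.0), [(0.0, 10.0), (90.0, 10.0)])

# Same chair, same allocentric coordinates, new egocentric coordinates.
print(egocentric(position, 90.0, chair))
```

The chair's allocentric coordinates are identical in both calls; only the body-referenced distance and bearing change with displacement, as in Figure 1b.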

What conditions emphasize egocentric over allocentric representations? To what extent can the two develop in parallel [30]? In one particular study, Zhang et al. compared performance on the JRD task after studying a map and after navigating a route with performance on the scene- and orientation-dependent pointing (SOP) task, commonly used to assay egocentric forms of representation [12]. In this task, all visual cues (except the target locations) remain and participants use these orienting cues to point to the hidden location (i.e., “Point to the Supermarket”). Studying a map resulted in rapid, non-linear improvements in JRD pointing accuracy but slow, modest improvements in SOP accuracy. In contrast, navigating a route resulted in greater improvements in SOP accuracy than JRD accuracy. These findings suggest that 1) map learning provides more immediate improvements in allocentric knowledge and 2) route learning provides more immediate improvements in egocentric knowledge. These findings support the idea that the two forms of representation are partially dissociable in humans, but suggest that both develop in parallel during learning. See also [16, 31–33] for similar conclusions.

A third navigation strategy is often called a beacon or response strategy [34–37], which involves navigating to a single object, or series of objects, such as salient landmarks or objects in an environment. This type of navigation does not necessitate a representation of a coordinate space and only requires one’s memory for the object itself and the ability to discriminate it from other features and objects. Navigation thus involves moving either closer to, or further from, a specific object, such that its image gets bigger or smaller on the retina, thus providing a basic cue for getting to the object (sometimes termed a “response strategy” [37]). Beacon navigation, when combined with egocentric codes like “right and left,” forms the basis of how we navigate with mobile devices like GPS on our phone. This is because GPS instructions reduce the navigator’s job to searching for a specific target paired with a response (e.g., “At first street, take a right”).
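The retinal-size cue described above can be illustrated with a simple sketch. The code below is our own toy example, not a procedure from the cited work: the function names, the eight candidate headings, and the object size are all assumptions. The navigator stores no coordinates; it simply takes whichever step makes the beacon subtend the largest visual angle. The beacon's coordinates appear in the code only so that the simulation can compute what the navigator would see.

```python
import math

def visual_angle_deg(object_size, distance):
    """Visual angle (degrees) subtended by an object of a given size."""
    return math.degrees(2.0 * math.atan(object_size / (2.0 * distance)))

def beacon_step(position, beacon, object_size, step=1.0, n_headings=8):
    """Take the candidate step after which the beacon looks largest.

    `beacon` is used only by the simulation to compute the retinal size
    at each candidate position; the navigator itself compares sizes and
    needs no coordinate system.
    """
    best_angle, best_position = None, position
    for k in range(n_headings):
        theta = 2.0 * math.pi * k / n_headings
        candidate = (position[0] + step * math.cos(theta),
                     position[1] + step * math.sin(theta))
        # Guard against division by zero if a step lands on the beacon.
        distance = max(math.dist(candidate, beacon), 1e-9)
        angle = visual_angle_deg(object_size, distance)
        if best_angle is None or angle > best_angle:
            best_angle, best_position = angle, candidate
    return best_position

# Hypothetical store front, 2 units wide, 5 units away along the x axis.
position, beacon = (0.0, 0.0), (5.0, 0.0)
for _ in range(3):
    position = beacon_step(position, beacon, object_size=2.0)
print(position)
```

Pairing each reached beacon with a stored response ("turn right here") would extend this sketch toward the GPS-style instruction following described above.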

One important question then regards the optimal means for acquiring information about locations within our surrounding spatial environment. Both route and map learning, as discussed above, contribute to egocentric and allocentric knowledge, although route knowledge acquired under real-world, rather than virtual, navigation is more precise and accurate [38]. But what about GPS? In one study, participants navigated a real-world environment either by studying a map first, being guided by an experimenter and then navigating the route, or navigating it with GPS [39]. GPS users tended to make more errors when having to later navigate without the device, resulting in greater errors in judgments of direction and in map drawing compared to those with direct navigation experience. These results are consistent with past findings suggesting that beacon strategies lead to limited recruitment of brain structures important to navigation-related memory, like the hippocampus [35, 37, 40]. An important question not answered in this research, though, regards the extent to which participants can nonetheless acquire some allocentric knowledge under these otherwise impoverished learning conditions [for example, see: 41].

Spatial dimensions: Not all are created equal

So far, we have considered representations primarily in two dimensions, although it is useful to consider the simpler case of 1-D (the reader is referred elsewhere to papers considering the vertical [z] dimension [42]). If we consider a simple line, we can think of an allocentric representation involving two landmarks (where are we relative to two points on the line?). An egocentric representation then involves our body position along that line. This situation may arise during everyday navigation, for example, when walking down a hallway or using distal landmarks (mountains) to walk a straight path to a goal [29]. In this way, by reducing the dimensionality of the space we traverse, either physically (hallways) or mentally (imagining walking in a straight line in a 2-D environment), we can employ a simpler egocentric or allocentric representation because it involves the need for fewer landmarks and coordinates.

One particularly interesting case of a 1-D spatial representation involves the so-called “mental number line,” which may form one basis for how we represent time [43, 44]. These 1-D spatial representations of time could either be egocentric or allocentric, and their underlying spatial nature remains unclear. In one particularly thoughtful test of this idea, Frassinetti et al. examined whether inducing an egocentric bias during prismatic adaptation could also induce temporal distortions [45]. The adaptation process, which involves having participants point while wearing goggles that shift their view to the right or left, results in a post-adaptation egocentric bias in participant pointing error. Moreover, time perception was also altered: a leftward aftereffect induced an underestimation, and a rightward aftereffect an overestimation, of temporal duration. These findings are consistent with the idea that the mental number line flows from left to right [43, 44], suggesting that their findings regarding 1-D egocentric spatial representations might generalize to other temporal coding schemes. Similar results have been reported for longer-duration autobiographical mental time travel [46] and duration judgments of tones [47]. These findings, however, are inconsistent with other data suggesting dissociable neural representations for temporal order, time duration, and space [48–51]. It therefore remains to be determined whether 1-D spatial representations underlie all forms of temporal estimation or only specific instances, and how these might differ for 1-D allocentric spatial representations.

Scales of space: Vista vs. environmental space

Most research regarding how we structure spatial knowledge derives from studies conducted in small-scale, room-sized environments, termed “vista space.” One limitation with this work, however, is that most relevant information in “vista space” can be acquired from a single viewpoint [52, 53], at least by humans [2, 17]. In contrast, large-scale space, termed “environmental space”, would appear to require integration of information across multiple trajectories and viewpoints experienced at different time points because not all of it can be acquired from a single viewpoint [9, 12, 54] (Figure 3). Consistent with this notion, some recent studies suggest potential differences in how we represent different scales of space.

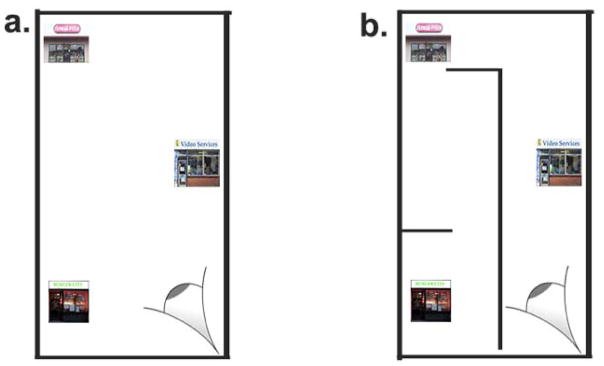

Figure 3.

a. An example of a vista space. Here, all landmarks are visible from a single viewpoint. b. An example of an environmental space. Here, navigation is required to view and encode all the landmarks.

As one example of how representations differ as a function of spatial scale, egocentric and allocentric representations form rapidly in small-scale space while those in large-scale space typically evolve dynamically over time [12, 31]. Additionally, how we use spatial boundaries to anchor our representations also differs as a function of spatial scale. In a virtual reality study [55], Meilinger et al. compared two navigation conditions of an environment. In one condition, the corridors prevented participants from seeing the whole space at once (environmental space) while in the other, participants could see the entire space from a single viewpoint (vista space, Figure 3). The authors found that the distance traveled and the sequence of objects influenced pointing error in environmental space but not in vista space. This finding is consistent with the idea that environmental space involves a time-dependent conversion of egocentric into allocentric coordinates by integrating over multiple egocentric reference frames (see Figure 1) [9, 12, 56]. Additionally, these findings suggest that participants treat environmental space as compartmentalized micro-environments, consistent with past studies suggesting that spatial representations can often be learned in a hierarchical fashion for neighboring spaces [57, 58]. These findings again emphasize the need for considering the time-dependent integration of representations in environmental space, suggesting its fundamental difference from vista space.

Summary

While much of our knowledge about spatial navigation derives from other species, understanding navigation in humans is an important research endeavor in its own right. Like rodents, highly studied mammals in the context of navigation, we also employ allocentric, egocentric, and beacon strategies to navigate. Our comparatively superior visual system provides for high-resolution visual forms of these representations, particularly transient egocentric representations. An important consideration, then, is how different scales of space interact with different forms of spatial representation. An emerging picture in the human spatial navigation literature is that not all forms of space are created equal, with vista space relying heavily, although not exclusively, on high-resolution egocentric “snapshots” and environmental space involving more gradual acquisition of stable allocentric representations.

Highlights

Human spatial navigation involves three fundamental forms of representations and strategies: allocentric, egocentric, and beacon.

How we navigate differs from rodents because of our high-resolution visual system.

We discuss how our high-resolution visual system directly impacts the nature of these three fundamental forms of representation.

We also discuss how these representations differ as a function of spatial dimension and spatial scale.

Acknowledgments

Funding: NIH (NS076856, NS093052) and NSF (BCS-1630296) to PI Ekstrom.

Glossary

- Allocentric

A representation of a spatial environment referenced to an external coordinate system that is not dependent on the view or direction navigated.

- Cognitive map

A representation of a spatial environment that contains information about metric and directional relationships of objects in that environment. By definition, these representations are allocentric.

- Egocentric

A representation of a spatial environment tied to a self or body centered coordinate system.

- Path-integration

Computation of the optimal, or shortest, path to a location based on previous paths. Based primarily on egocentric representation.

Footnotes

The authors state that they have no conflicts of interest.

References

- 1. Moser EI, Kropff E, Moser MB. Place Cells, Grid Cells, and the Brain’s Spatial Representation System. Annu Rev Neurosci. 2008. doi: 10.1146/annurev.neuro.31.061307.090723.

- 2*. Ekstrom AD. Why vision is important to how we navigate. Hippocampus. 2015;25(6):731–735. doi: 10.1002/hipo.22449. This paper discusses the foundational nature of human vision to spatial navigation, on both the behavioral and neural levels.

- 3. Tolman EC. Cognitive Maps in Rats and Men. Psychol Rev. 1948;55:189–208. doi: 10.1037/h0061626.

- 4. O’Keefe J, Nadel L. The Hippocampus as a Cognitive Map. Oxford: Clarendon Press; 1978.

- 5. Gallistel CR. The Organization of Learning. Cambridge, MA: MIT Press; 1990.

- 6. Meilinger T, Vosgerau G. Putting Egocentric and Allocentric into Perspective. In: Holscher C, et al., editors. Spatial Cognition VII. Springer; Germany: 2010. pp. 207–221.

- 7. Klatzky R. Allocentric and egocentric spatial representations: Definitions, Distinctions, and Interconnections. In: Freksa C, Habel C, Wender CF, editors. Spatial cognition: An interdisciplinary approach to representation and processing of spatial knowledge. Springer-Verlag; Berlin: 1998. pp. 1–17.

- 8. Burgess N. Spatial memory: how egocentric and allocentric combine. Trends in Cognitive Sciences. 2006;10(12):551–7. doi: 10.1016/j.tics.2006.10.005.

- 9. Siegel AW, White SH. The development of spatial representations of large-scale environments. In: Reese HW, editor. Advances in child development and behavior. Academic; New York: 1975.

- 10. Appleyard D. Styles and Methods of Structuring a City. Environment and Behavior. 1970;2:100–117.

- 11. Lynch K. The image of the city. Vol. 11. MIT press; 1960.

- 12. Zhang H, Zherdeva K, Ekstrom AD. Different “routes” to a cognitive map: dissociable forms of spatial knowledge derived from route and cartographic map learning. Mem Cognit. 2014;42(7):1106–17. doi: 10.3758/s13421-014-0418-x. This empirical study compares the acquisition of putative allocentric and egocentric representations during route and map learning. The findings suggest that while map learning favors allocentric and route learning favors egocentric, both develop in parallel. See also Torok et al. 2014.

- 13. Thorndyke PW, Hayes-Roth B. Differences in spatial knowledge acquired from maps and navigation. Cogn Psychol. 1982;14(4):560–89. doi: 10.1016/0010-0285(82)90019-6.

- 14. Rieser JJ. Access to knowledge of spatial structure at novel points of observation. J Exp Psychol Learn Mem Cogn. 1989;15(6):1157–65. doi: 10.1037//0278-7393.15.6.1157.

- 15. Shelton AL, McNamara T. Systems of spatial reference in human memory. Cognit Psychology. 2001;43(4):274–310. doi: 10.1006/cogp.2001.0758.

- 16**. Waller D, Hodgson E. Transient and enduring spatial representations under disorientation and self-rotation. J Exp Psychol Learn Mem Cogn. 2006;32(4):867–82. doi: 10.1037/0278-7393.32.4.867. This seminal empirical paper resolves decades of debate in human spatial navigation by showing that while egocentric representations typically dominate in room-sized (vista space) environments, allocentric spatial representations emerge during periods of disorientation. By double dissociating the two fundamental forms of representation, this paper establishes both a means of measuring the two forms of representations and the conditions under which they emerge.

- 17. Ekstrom AD, Arnold AE, Iaria G. A critical review of the allocentric spatial representation and its neural underpinnings: Toward a network-based perspective. Frontiers in Human Neuroscience. 2014. doi: 10.3389/fnhum.2014.00803.

- 18. Richard L, Waller D. Toward a definition of intrinsic axes: the effect of orthogonality and symmetry on the preferred direction of spatial memory. J Exp Psychol Learn Mem Cogn. 2013;39(6):1914–29. doi: 10.1037/a0032995.

- 19. McNamara TP, Rump B, Werner S. Egocentric and geocentric frames of reference in memory of large-scale space. Psychon Bull Rev. 2003;10(3):589–95. doi: 10.3758/bf03196519.

- 20. Mou W, Zhao M, McNamara TP. Layout geometry in the selection of intrinsic frames of reference from multiple viewpoints. J Exp Psychol Learn Mem Cogn. 2007;33(1):145–54. doi: 10.1037/0278-7393.33.1.145.

- 21. Chan E, et al. Reference frames in allocentric representations are invariant across static and active encoding. Front Psychol. 2013;4:565. doi: 10.3389/fpsyg.2013.00565.

- 22. Mou W, et al. Roles of egocentric and allocentric spatial representations in locomotion and reorientation. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32(6):1274–90. doi: 10.1037/0278-7393.32.6.1274.

- 23. Frankenstein J, et al. Is the Map in Our Head Oriented North? Psychological Science. 2012;23(2):120–125. doi: 10.1177/0956797611429467.

- 24. Wang RF, Spelke ES. Updating egocentric representations in human navigation. Cognition. 2000;77(3):215–50. doi: 10.1016/s0010-0277(00)00105-0.

- 25. Diwadkar VA, McNamara TP. Viewpoint dependence in scene recognition. Psychological Science. 1997;8(4):302–307.

- 26*. Holmes CA, Marchette SA, Newcombe NS. Multiple Views of Space: Continuous Visual Flow Enhances Small-Scale Spatial Learning. 2017. doi: 10.1037/xlm0000346. This empirical paper demonstrates how a combined egocentric representation can emerge from numerous individual high-resolution viewpoints linked to body position and orientation.

- 27. Mittelstaedt ML, Mittelstaedt ML. Homing by path integration in a mammal. Naturwissenschaften. 1980;67:566.

- 28. Holmes MC, Sholl MJ. Allocentric coding of object-to-object relations in overlearned and novel environments. J Exp Psychol Learn Mem Cogn. 2005;31(5):1069–87. doi: 10.1037/0278-7393.31.5.1069.

- 29. Souman JL, et al. Walking Straight into Circles. Current Biology. 2009;19(18):1538–1542. doi: 10.1016/j.cub.2009.07.053.

- 30. Ishikawa T, Montello DR. Spatial knowledge acquisition from direct experience in the environment: Individual differences in the development of metric knowledge and the integration of separately learned places. Cognitive Psychology. 2006;52(2):93–129. doi: 10.1016/j.cogpsych.2005.08.003.

- 31. Török Á, et al. Reference frames in virtual spatial navigation are viewpoint dependent. Frontiers in human neuroscience. 2014;8. doi: 10.3389/fnhum.2014.00646.

- 32. Gramann K, et al. Evidence of separable spatial representations in a virtual navigation task. Journal of Experimental Psychology-Human Perception and Performance. 2005;31(6):1199–1223. doi: 10.1037/0096-1523.31.6.1199.

- 33. Li X, Mou W, McNamara TP. Retrieving enduring spatial representations after disorientation. Cognition. 2012;124(2):143–155. doi: 10.1016/j.cognition.2012.05.006.

- 34. Morris RGM, et al. Place navigation impaired in rats with hippocampal lesions. Nature. 1982;297:681–683. doi: 10.1038/297681a0.

- 35. Morris R, Hagan J, Rawlins J. Allocentric spatial learning by hippocampectomised rats: a further test of the “spatial mapping” and “working memory” theories of hippocampal function. The Quarterly journal of experimental psychology. 1986;38(4):365–395.

- 36. Waller D, Lippa Y. Landmarks as beacons and associative cues: Their role in route learning. Memory & Cognition. 2007;35(5):910–924. doi: 10.3758/bf03193465.

- 37. Packard MG, Hirsh R, White NM. Differential effects of fornix and caudate nucleus lesions on two radial maze tasks: evidence for multiple memory systems. Journal of Neuroscience. 1989;9(5):1465–1472. doi: 10.1523/JNEUROSCI.09-05-01465.1989.

- 38. Ruddle RA, Lessels S. For efficient navigational search, humans require full physical movement, but not a rich visual scene. Psychological Science. 2006;17(6):460–465. doi: 10.1111/j.1467-9280.2006.01728.x.

- 39**. Ishikawa T, et al. Wayfinding with a GPS-based mobile navigation system: A comparison with maps and direct experience. Journal of Environmental Psychology. 2008;28(1):74–82. This important empirical paper is one of the first to directly compare spatial knowledge gained during exploration with GPS. Consistent with the idea that GPS involves a beacon-based strategy that leads to only limited allocentric knowledge, the results show impoverished learning with numerous metrics following GPS compared to map learning or exploration.

- 40. Iaria G, et al. Cognitive strategies dependent on the hippocampus and caudate nucleus in human navigation: variability and change with practice. J Neurosci. 2003;23(13):5945–52. doi: 10.1523/JNEUROSCI.23-13-05945.2003.

- 41. Yamamoto N, Shelton AL. Sequential versus simultaneous viewing of an environment: Effects of focal attention to individual object locations on visual spatial learning. Visual Cognition. 2009;17(4):457–483.

- 42. Zwergal A, et al. Anisotropy of Human Horizontal and Vertical Navigation in Real Space: Behavioral and PET Correlates. Cereb Cortex. 2015. doi: 10.1093/cercor/bhv213.

- 43. Dehaene S. The neural basis of the Weber–Fechner law: a logarithmic mental number line. Trends in cognitive sciences. 2003;7(4):145–147. doi: 10.1016/s1364-6613(03)00055-x.

- 44. Walsh V. A theory of magnitude: common cortical metrics of time, space and quantity. Trends in cognitive sciences. 2003;7(11):483–488. doi: 10.1016/j.tics.2003.09.002.

- 45*. Frassinetti F, Magnani B, Oliveri M. Prismatic lenses shift time perception. Psychol Sci. 2009;20(8):949–54. doi: 10.1111/j.1467-9280.2009.02390.x. This empirical paper demonstrates that 1-D egocentric spatial representations underlie some forms of temporal duration estimates.

- 46. Anelli F, et al. Prisms to travel in time: Investigation of time-space association through prismatic adaptation effect on mental time travel. Cognition. 2016;156:1–5. doi: 10.1016/j.cognition.2016.07.009.

- 47. Magnani B, Pavani F, Frassinetti F. Changing auditory time with prismatic goggles. Cognition. 2012;125(2):233–243. doi: 10.1016/j.cognition.2012.07.001.

- 48. Macdonald CJ, et al. Hippocampal “Time Cells” Bridge the Gap in Memory for Discontiguous Events. Neuron. 2011;71(4):737–749. doi: 10.1016/j.neuron.2011.07.012.

- 49. Kraus BJ, et al. Hippocampal “time cells”: time versus path integration. Neuron. 2013;78(6):1090–101. doi: 10.1016/j.neuron.2013.04.015.

- 50. Vass LK, et al. Oscillations go the distance: Low frequency human hippocampal oscillations code spatial distance in the absence of sensory cues during teleportation. Neuron. 2016;89(6):1180–1186. doi: 10.1016/j.neuron.2016.01.045.

- 51. Ekstrom AD, et al. Dissociable networks involved in spatial and temporal order source retrieval. Neuroimage. 2011;2011:18. doi: 10.1016/j.neuroimage.2011.02.033.

- 52**. Wolbers T, Wiener JM. Challenges in identifying the neural mechanisms that support spatial navigation: the impact of spatial scale. Frontiers in Human Neuroscience. 2014;8(571):1–12. doi: 10.3389/fnhum.2014.00571. This seminal theoretical paper is the first to explore in depth the quantitative and qualitative differences between egocentric and allocentric representations as a function of spatial scale. Wolbers and Wiener firmly establish the idea, theoretically at least, that vista space involves qualitatively different forms of spatial representation than environmental space.

- 53. Montello DR. Scale and multiple psychologies of space. In: Frank AU, Campari I, editors. Spatial information theory: A theoretical basis for GIS. Springer-Verlag Lecture Notes in Computer Science; Berlin: 1993.

- 54. Poucet B. Spatial cognitive maps in animals: new hypotheses on their structure and neural mechanisms. Psychol Rev. 1993;100(2):163–82. doi: 10.1037/0033-295x.100.2.163.

- 55**. Meilinger T, Strickrodt M, Bulthoff HH. Qualitative differences in memory for vista and environmental spaces are caused by opaque borders, not movement or successive presentation. Cognition. 2016;155:77–95. doi: 10.1016/j.cognition.2016.06.003. This empirical paper provides the first direct evidence for the idea that vista and environmental spaces lead to different forms of spatial representation. Specifically, they show that environmental space involves less binding to the surrounding spatial geometry than vista space, and allocentric representations in environmental space are more experience dependent than in vista space.

- 56. Kolarik B, Ekstrom A. The Neural Underpinnings of Spatial Memory and Navigation.

- 57. McNamara TP, Hardy JK, Hirtle SC. Subjective Hierarchies in Spatial Memory. Journal of Experimental Psychology-Learning Memory and Cognition. 1989;15(2):211–227. doi: 10.1037//0278-7393.15.2.211.

- 58. Han X, Becker S. One Spatial Map or Many? Spatial Coding of Connected Environments. J Exp Psychol Learn Mem Cogn. 2013. doi: 10.1037/a0035259.