Abstract

Inferring the directionality of interactions between cellular processes is a major challenge in systems biology. Time-lagged correlations allow to discriminate between alternative models, but they still rely on assumed underlying interactions. Here, we use the transfer entropy (TE), an information-theoretic quantity that quantifies the directional influence between fluctuating variables in a model-free way. We present a theoretical approach to compute the transfer entropy, even when the noise has an extrinsic component or in the presence of feedback. We re-analyze the experimental data from Kiviet et al. (2014) where fluctuations in gene expression of metabolic enzymes and growth rate have been measured in single cells of E. coli. We confirm the formerly detected modes between growth and gene expression, while prescribing more stringent conditions on the structure of noise sources. We furthermore point out practical requirements in terms of length of time series and sampling time which must be satisfied in order to infer optimally transfer entropy from times series of fluctuations.

Introduction

Quantifying information exchange between variables is a general goal in many studies of biological systems because the complexity of such systems prohibits mechanistic bottom-up approaches. Several statistical methods have been proposed to exploit either the specific dependence of the covariances between input and output variables with respect to a perturbation applied to the network [1], or the information contained in 3-point correlations [2]. These methods are potentially well suited for datasets obtained from destructive measurements, such as RNA sequencing or immunohistochemistry.

However, none of these methods exploits the information contained in time-lagged statistics, which is provided for instance by non-destructive measurements obtained from time-lapse microscopy of single cells. Such experimental data should be quite relevant to understand functional relationships since they merely reflect the time delays present in the dynamics of the system. Time-delayed cross-correlations between gene expression fluctuations have indeed been shown to discriminate between several mechanistic models of well characterized genetic networks [3]. However, such methods become difficult to interpret in the presence of feedback.

This situation is illustrated in reference [4] where the fluctuations in the growth rate and in the expression level of metabolic enzymes have been measured as a function of time by tracking single cells of E. coli with time-lapse microscopy. The interplay between these variables has been characterized using cross-correlations as proposed in [3]. To circumvent the difficulty of discriminating between many complex and poorly parametrized metabolic models, the authors reduced functional relations to effective linear responses with a postulated form of effective couplings.

In the present work, we instead use a time-lagged and information-based method to analyze the interplay between the two fluctuating variables. A crucial feature in this method is that it is model-free and it is able to disentangle the two directions of influence between the two variables, unlike the cross-correlations discussed above. This type of approach was first proposed by Granger [5] in the field of econometrics and found applications in a broader area. More recently, transfer entropy [6], which is a non-linear extension of Granger causality, has become a popular information-theoretic measure to infer directional relationships between jointly dependent processes [7]. It has been successfully applied to various biomedical time series (see for instance [8]) and used extensively in the field of neurobiology, as shown in Ref. [9] and in references therein. This is the tool that will be used in this work.

The plan of this paper is as follows. We first introduce two measures of information dynamics, transfer entropy (TE) and information flow (IF). We then illustrate our numerical method on a well controlled case, namely a simple linear Langevin model, and show that we can properly estimate these quantities from the generated time series. We then analyze experimental data on the fluctuations of metabolism of E. coli taken from Ref. [4]. We provide analytical expressions for the transfer entropy and information flow rates for the model proposed in that reference. After identifying a divergence in one TE rate as the sampling time goes to zero, we introduce a simplified model which is free of divergences while still being compatible with the experimental data. We conclude that the inference of information-theoretic dynamical quantities can be helpful to build physically sound models of the various noise components present in chemical networks.

Information theoretic measures

Unlike the mutual information I(X : Y) that only quantifies the amount of information exchanged between two random variables X and Y as defined in the section on Methods, the transfer entropy (TE) is an asymmetric measure that can discriminate between a source and a target [6]. Consider two sampled time series {‥xi−1, xi, xi+1‥} and {‥yi−1, yi, yi+1‥}, where i is the discrete time index, generated by a source process X and a target process Y. The transfer entropy TX→Y from X to Y is a conditional, history-dependent mutual information defined as

| (1) |

where and denote two blocks of past values of Y and X of length k and l respectively, is the joint probability of observing , and are conditional probabilities. In the second line, H(.|.) denotes the conditional Shannon entropy (see Section on Methods for definition). In the first equation, the summation is taken over all possible values of the random variables and over all values of the time index i.

To put it in simple terms, TX→Y quantifies the information contained from the past of X about the future of Y, which the past of Y did not already provide [7, 8]. Therefore, it should be regarded as a measure of predictability rather than a measure of causality between two time-series [10]. For instance, when does not bring new information on yi+1, then and the transfer entropy vanishes because the prediction on yi+1 is not improved. With a similar definition for TY→X, one can define the net variation of transfer entropy from X to Y as ΔTX→Y ≡ TX→Y − TY→X. The sign of ΔTX→Y informs on the directionality of the information transfer.

The statistics required for properly evaluating the transfer entropy rapidly increases with k and l, which in practice prohibits the use of large values of k and l. The most accessible case thus corresponds to k = l = 1, which we denote hereafter as . This quantity is then simply defined as

| (2) |

When the dynamics of the joint process {X, Y} is Markovian, one has and since one has (see Ref. [11]). Therefore, represents an upper bound on the transfer entropy. In the case of stationary time series, which is the regime we consider in this work, it is natural to also introduce the TE rate

| (3) |

where the continuous time variable t replaces the discrete index i. In practice , but only for sufficiently small time step τ.

The most direct strategy to evaluate Eq (1) would be to construct empirical estimators of the probabilities from histograms of the data. Although this procedure works well for evaluating other quantities, for instance the entropy production in small stochastic systems [12], it completely fails in the case of transfer entropy. Indeed, such a method leads to a non-zero TE even between uncorrelated signals, due to strong biases in standard estimators based on data binning. In order to overcome this problem, we used the Kraskov-Stögbauer-Grassberger (KSG) estimator which does not rely on binning, as implemented in the software package JIDT (Java Information Dynamics Toolkit) [13]. Using estimators of this kind is particularly important for variables that take continuous values.

In the following, the inference method will be applied to time series generated by diffusion processes. It will then be interesting to compare the TE rate to another measure of information dynamics, the so-called information flow [14–16] (also dubbed learning rate in the context of sensory systems [11, 17]), which is defined as the time-shifted mutual information [18]

| (4) |

In the special case where the two processes X and Y experience independent noises (the system is then called bipartite) [15], one has the inequality [17], which in turn implies that

| (5) |

when the joint process is Markovian. Observing a violation of this inequality is thus a strong indication that the noises on X and Y are correlated. As will be seen later, this is indeed the situation in biochemical networks, due the presence of the so-called extrinsic noise generated by the stochasticity in the cell and in the cell environment [19] which acts on all chemical reactions within the cell, and thus induces correlations.

Results

Test of the inference method on a Langevin model

In order to benchmark our inference method and perform a rigorous test in a controlled setting, we first applied it on times series generated by a simple model for which the transfer entropy and the information flow can be computed analytically. The data were obtained by simulating the two coupled Langevin equations

| (6) |

that describe the dynamics of a particle of mass m subjected to a velocity-dependent feedback that damps thermal fluctuation [16, 20, 21] (in these equations, the dependence of the variables on the time t is implicit). Here, ξ(t) is the noise generated by the thermal environment with viscous damping γ and temperature T, while η(t) is the noise associated with the measurement of the particle’s velocity v(t). The two noises are independent and Gaussian with zero-mean and variances ⟨ξ(t)ξ(t′)⟩ = 2γkBTδ(t − t′) and ⟨η(t)η(t′)⟩ = σ2 δ(t − t′). a is the feedback gain and τr is a time constant.

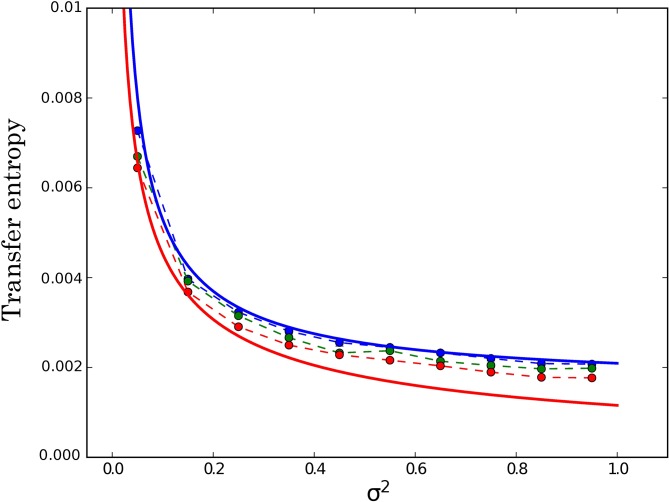

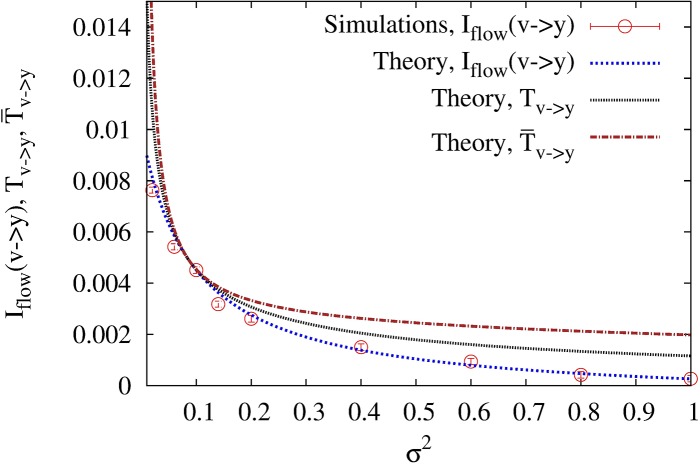

The two Langevin equations were numerically integrated with the standard Heun’s method [22] using a time step Δt = 10−3, and the transfer entropy in the steady state was estimated from 100 time series of duration t = 2000 with a sampling time (i.e., the time between two consecutive data points) τ = Δt. We first checked that the TE in the direction Y → V does vanish in the absence of feedback, i.e. for a = 0, whereas it is non-zero as soon as a > 0. We then tested the influence of the measurement error σ2 for a fixed value of the gain a. As can be seen in Fig 1, TV→Y diverges as σ2 → 0, a feature that will play an important role in our discussion of the model for the metabolic network. In the figure, the color of the symbols correspond to three different values of the parameter k which represents the history length in the definition of the transfer entropy (see Eq (1)). One can see that the estimates of TV→Y for k = 1 are in very good agreement with the theoretical prediction for (upper solid line). Moreover, the estimates decrease as k is increased from 1 to 5, and one can reasonably expect that the theoretical value of TV→Y (lower solid line) computed in Ref. [16] and given by Eq (21) in the section on Methods would be reached in the limit k → ∞.

Fig 1. Transfer entropy TY→V for the feedback model governed by Eq (6) as a function of the noise intensity σ2 for k = 1 (blue circles), k = 3 (green circles) and k = 5 (red circles).

The parameter l present in the definition of Eq (1) is fixed to 1. The lower red (resp. upper blue) solid line represents the value of TY→V (resp. ) obtained by multiplying the theoretical rate (resp. ) given by Eq (21) (resp. Eq (23) by the sampling time τ = 10−3. The parameters of the model are T = 5, γ = m = 1, τr = 0.1, and a = 8.

Finally, by estimating the information flow and the transfer entropy, we checked that inequality (5) holds, as a result of the independence of the two noises ξ and η (see section on Methods).

Analysis of stochasticity in a metabolic network

Experimental time series

We are now in position to analyze the fluctuations in the metabolism of E. coli at the single cell level obtained in Ref. [4] using the information-theoretic notions introduced and tested in the previous section. Since there are a multitude of reactions and interactions involved in the metabolism of E. coli, a complete mechanistic description is not feasible, and our model-free inference method has a crucial advantage. In Ref. [4], the length of the cells was recorded as a function of time using image analysis, and the growth rate was then obtained by fitting this data over subparts of the cell cycle. In the same experiment, the fluorescence level of GFP, which is co-expressed with growth enzymes LacY and LacZ was recorded. Three set of experiments were carried out corresponding to three levels of an inducer IPTG: low, intermediate and high.

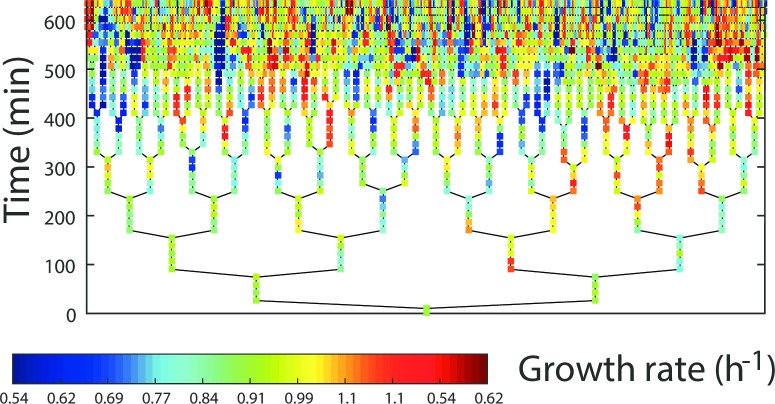

The two time series have a branching structure due to the various lineages, which all start from a single mother cell as shown in Fig 2. The experimental data thus come in the form of a large ensemble of short times series which represent a record of all the cell cycles. There are about ∼3000 time series, with 2 to 8 measurement points in each of them which are represented as colored points in Fig 2. In order to correctly estimate the transfer entropy from such data, we have analyzed the multiple time series as independent realizations of the same underlying stochastic process. For the present analysis, we fix the history length parameters k and l to the value k = l = 1, which means that we focus on rather than T. We infer the values of in the two directions, from growth (denoted μ) to gene expression (denoted E) and vice versa. The results obtained for the three concentrations of IPTG are represented in Table 1. The negative value of which is found in the intermediate case is due to the numerical inference method and should be regarded as a value which cannot be distinguished from zero.

Fig 2. Pedigree tree representing the evolution of the colony of E. coli. studied in Ref. [4].

The splitting of the branches corresponds to cell division events, each colored point is associated to a measurement of a single cell and the colors represent the growth rates as shown in the bar in the lower part of the figure.

Table 1. Inferred values of the transfer entropies in the directions E → μ and μ → E, and the difference for low, medium and high concentrations of IPTG based on the data of ref. [4].

The TE are given in nats.

| Conc. of IPTG | Low | Intermediate | High |

|---|---|---|---|

| 2.35 ⋅ 10−2 | 1.37 ⋅ 10−2 | 1.06 ⋅ 10−3 | |

| 2.16 ⋅ 10−2 | −4.08 ⋅ 10−3 | 9.94 ⋅ 10−3 | |

| 1.84 ⋅ 10−4 | 1.78 ⋅ 10−2 | −8.88 ⋅ 10−3 |

Based on this analysis, we conclude that the influence between the variables is directed primarily from enzyme expression to growth in the low and intermediate IPTG experiments, while it mainly proceeds in the reverse direction in the high IPTG experiment. Such results are in line with the conclusions of Ref. [4] based on the measured asymmetry of the time-lagged cross-correlations. Moreover, the present analysis provides an estimate of the influence between the two variables separately in the two directions from E to μ and from μ to E. In particular, we observe for the low experiment that the values of TE in the two directions are of same order of magnitude, whereas in the intermediate experiment the TE from E to μ is larger, a feature which could not have been guessed from measured time delays.

Theoretical models

We now turn to the analysis of the model proposed in Ref. [4] to account for the experimental data. The question we ask is whether the model correctly reproduces the above results for the transfer entropies, in particular the change in the sign of for the high concentration of IPTG.

The central equation of the model describes the production of the enzyme as

| (7) |

where E is the enzyme concentration, p its production rate, and μ the rate of increase in cell volume. Although the function p is typically non-linear, its precise expression is irrelevant because (7) is linearized around the stationary point defined by the mean values E = E0 and μ = μ0. This linearization then yields

| (8) |

in terms of perturbed variables δX(t) = X(t) − X0, where X0 denotes the mean of X.

The model of Ref. [4] is essentially phenomenological in nature because it approximates the noises as Gaussian processes. Although this approximation is often done in this field, it may not always hold since fluctuations due to low copy numbers are generally not Gaussian [23]. In any case, the model contains three Gaussian noises: NG is a common component while NE and Nμ are component specific to E and μ. These noises are assumed to be independent Ornstein-Uhlenbeck noises with zero mean and autocorrelation functions (i = E, μ, G). As commonly done, the three Ornstein-Uhlenbeck noises are generated by the auxiliary equations

| (9) |

where the are zero-mean Gaussian white noises satisfying with . Introducing the constant logarithmic gains TXY that represent how a variable X responds to the fluctuations of a source Y, the equations of the model read [4]

| (10) |

where specifically TEμ = −1 and TμG = 1. Then, eliminating δp from Eqs (8) and (10), one obtains the coupled equations

| (11) |

where we have defined the reduced variables x = δE/E0, y = δμ/μ0. We stress that NG is an extrinsic noise that affects both the enzyme concentration and the growth rate, whereas NE (resp. Nμ) is an intrinsic noise that only affects E (resp. μ). Note that the two effective noises TEGNG + NE and TμGNG + Nμ acting on and y are colored and correlated, which makes the present model more complicated than most stochastic models studied in the current literature. In fact, since we are mainly interested in the information exchanged between x and y, it is convenient to replace one of the noises, say NG, by the dynamical variable y. Differentiating the second equation in Eq (11), using Eq (9) and performing some simple manipulations, one then obtains a new set of equations for the four random variables x, y, u ≡ NE, v ≡ Nμ:

| (12) |

where the coefficients aj and bj (j = 1…4) are defined by Eq (24) in the section on Methods and ξy = ξμ + ξG is a new white noise satisfying and .

The calculation of the transfer entropy rate (which coincides with since the TE is invariant under the change of variables from E to x and μ to y) is detailed in the section on Methods, together with the calculation of the information flows. The final expression reads

| (13) |

where p(x, y) is the steady state probability distribution and the functions and are defined in Eqs (40) and (43), respectively. This result agrees with that obtained in Refs. [11, 18] and in [24] in special cases.

In Table 2, we show the results of the analysis of the time series generated by Eq (12) using our numerical inference method with a sampling time τ = 1min (equal to the time step Δt used to numerically integrate the model). One can see that the estimates of are in good agreement with the predictions of Eq (13), with the values of the model parameters taken from Table S1 in Ref. [4]. Note that the negative number given by the inference method in the high IPTG experiment signals that the actual value of cannot be distinguished from zero, which is indeed the theoretical prediction. In contrast, the estimated and theoretical results for do not agree, as the inference method yields finite values in all cases whereas the theoretical values diverge.

Table 2. Comparison between the theoretical values of the transfer entropy rates and for the model of Ref. [4] and the values inferred from simulation data.

Averages are taken over 100 times series of duration 106 min, sampled every 1 min.

| Conc. of IPTG | Low | Intermediate | High |

|---|---|---|---|

| (in h−1) (theo.) | 0.033 | 0.034 | 0 |

| (simul.) | 0.031 | 0.034 | −0.011 |

| (theo.) | ∞ | ∞ | ∞ |

| (simul.) | 0.202 | 0.123 | 0.347 |

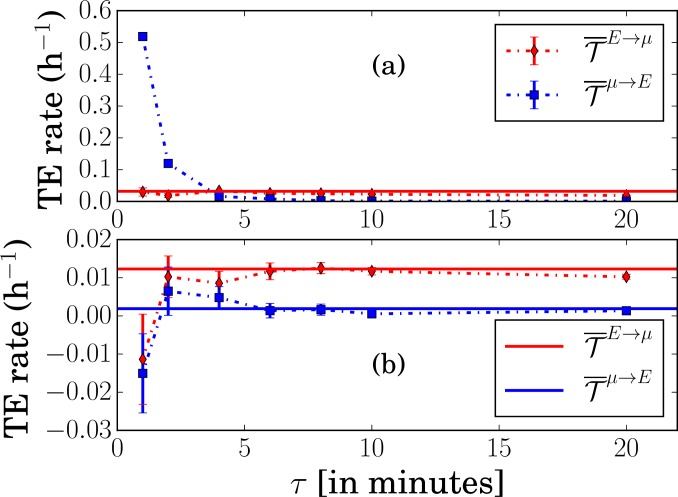

This behavior is due to the absence of a white noise source directly affecting the dynamical evolution of x in the set of Eq (12). Indeed, as pointed out in Ref. [6] and also observed above in Fig 1, a TE rate diverges when the coupling between the variables is deterministic. In the model of Ref. [4], this feature can be traced back to the fact that the noise NE affecting the enzyme concentration is colored with a finite relaxation time . Therefore, when taking the limit τ → 0 in Eq (3), one explores a time interval where NE is not really random. This is illustrated in Fig 3a that corresponds to the low IPTG experiment: we see that the estimate of with the inference method is indeed diverging when the sampling time τ approaches zero. On the other hand, as expected, remains finite and the points nicely lie on the plateau determined by Eq (13).

Fig 3. Transfer entropy rates and in the low IPTG experiment.

(a) Original model of Ref. [4] (b) Modified model where NE is a white noise. The symbols are the estimates from the inference method when varying the sampling time τ, and the solid lines are the theoretical predictions from Eq (13) in (a) and from Eq (60) in (b). Note that diverges as τ goes to zero in (a) but not (b).

The obvious and simplest way to cure this undesirable feature of the original model is to treat NE as a purely white noise, which amounts to taking the limit . In fact, it is noticeable that the values of extracted from the fit of the correlation functions in Ref. [4] (resp. and 8.15 min for the low, intermediate, and high IPTG concentrations) are significantly smaller than the time steps τexp used for collecting the data (resp. τexp = 28, 20 and 15.8 min). Therefore, it is clear that the experimental data are not precise enough to decide whether NE is colored or not. This issue does not arise for the other relaxation times in the model, and , which are much longer (at least for the low and intermediate IPTG concentrations), and can be correctly extracted from the experimental data.

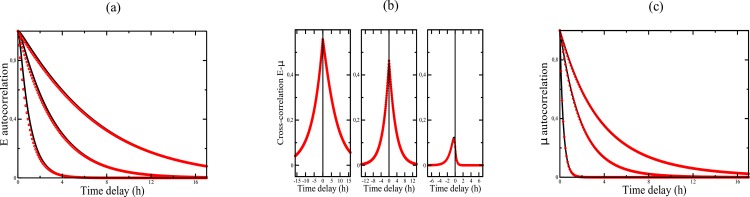

We thus propose to modify the model of Ref. [4] by describing NE as a Gaussian white noise with variance 〈NE(t)NE(t′)〉 = 2DEδ(t − t′) and the same intensity as the colored noise in the original model, i.e. (which yields DE ≈ 0.188h, 0.100h, 0.031h for the three IPTG concentrations). Unsurprisingly, this modification does not affect the auto and cross-correlation functions used to fit the data, as shown in Fig 4 (see also section on Methods for a detailed calculation). On the other hand, the values of are changed (compare Tables 2 and 3) and, more importantly, , given by Eq (60) is now finite. As a result, the model predicts that the difference is positive at low and intermediate IPTG concentrations and becomes negative at high concentration, which is in agreement with the direct analysis of the experimental data in Table 1. In contrast, was always negative in the original model as is infinite.

Fig 4.

(a) Autocorrelation function Rμμ(τ) for the three IPTG concentrations. Black lines: original model of Ref. [4], red circles: simplified model where NE is a white noise. (b) Same as (a) for REE(τ). (c) Same as (a) for REμ(τ).

Table 3. Theoretical values of the transfer entropy rates and and their difference in the modified model.

| Conc. of IPTG | Low | Intermediate | High |

|---|---|---|---|

| (h−1) | 1.23 ⋅ 10−2 | 8.2 ⋅ 10−3 | 0 |

| (h−1) | 1.9 ⋅ 10−3 | 5 ⋅ 10−4 | 2.97 ⋅ 10−2 |

| (h−1) | 1.04 ⋅ 10−2 | 7.7 ⋅ 10−3 | −2.97 ⋅ 10−2 |

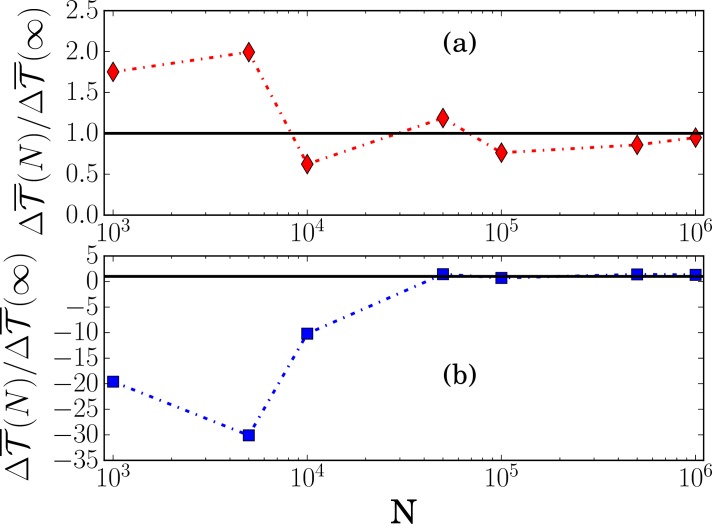

This new behavior of the TE rates is also manifest when the inference method is applied to the time series generated by the model and the sampling time τ is varied. As observed in Fig 3b, the inferred value of no longer diverges as τ → 0 (compare the vertical scale with that in Fig 3a). The estimates of and are also in good agreement with the theoretical predictions, except for the shortest value of τ which is equal to the time step Δt = 1 min used to numerically integrate the equations. It worth mentioning, however, that the error bars increase as τ is decreased.

While the change in the sign of is now confirmed by the model, which is the main outcome of our analysis, one may also wonder whether the numerical values in Table 1 are recovered. This requires to multiply the rates in Table 3 by the experimental sampling times τexp which are different in each experiment, as indicated above. One then observes significant discrepancies for the low and intermediate IPTG experiments. We believe that the problem arises from the presence of many short time series in the set of experimental data. This is a important issue that needs to be examined in more detail since it may be difficult to obtain long time series in practice.

To this aim, we have studied the convergence of the estimates of to the exact asymptotic value as a function of N, the length of the time series generated by the model in the stationary regime. As shown in Fig 5, the convergence with N is slow, which means that one can make significant errors in the estimation of if N is small. On the other hand, the convergence can be greatly facilitated by choosing a value of the sampling time which is not too short (but of course shorter than the equilibration time of the system), for instance τ = 6min instead of 1 min in the case considered in Fig 5. The important observation is that the sign of is then correctly inferred even with N ≈ 1000. In contrast, with τ = 1min, this is only possible for much longer series, typically N ≈ 50000. This is an encouraging indication for experimental studies, as the overall acquisition time of the data can be significantly reduced.

Fig 5. Inferred values of for the low IPTG experiment as a function of the length N of the time series generated by the modified model.

Panels (a) and (b) correspond to sampling times τ = 6 min and τ = 1 min, respectively. is the exact asymptotic value.

Finally, we briefly comment on the results for the information flows and . As already pointed out, the fact that the noises acting on the two random variables are correlated invalidates inequality (5). This is indeed what is observed in Table 4. It is also noticeable that , except in the high IPTG experiment where TμE = 0.

Table 4. Comparison between the theoretical values of the TE rates and the information flows for the modified model and the values inferred from simulation data (all quantities are expressed in h−1).

The analysis was performed with a sampling τ = 6 min and 100 time series of 106 points.

| Conc. of IPTG | Low | Intermediate | High |

|---|---|---|---|

| , analytical | 0.0123 | 0.0082 | 0 |

| , simulation | 0.0128 ± 6 ⋅ 10−4 | 0.0064 ± 6 ⋅ 10−4 | −0.0002 ± 5 ⋅ 10−4 |

| , analytical | 0.0019 | 0.0005 | 0.0297 |

| , simulation | 0.0023 ± 6 ⋅ 10−4 | 0.0012 ± 6 ⋅ 10−4 | 0.0215 ± 7 ⋅ 10−4 |

| , analytical | 0.0751 | 0.092 | −0.0214 |

| , simulation | 0.076 ± 10−3 | 0.09 ± 8 ⋅ 10−4 | −0.018 ± 8 ⋅ 10−4 |

| , analytical | 0.0455 | 0.0743 | 0.0214 |

| , simulation | 0.047 ± 10−3 | 0.072 ± 10−3 | 0.015 ± 10−3 |

Discussion and conclusion

A challenge when studying any biochemical network is to properly identify the direction of information. In this work, using the notion of transfer entropy, we have characterized the directed flow of information between the single cell growth rate and the gene expression, using a method that goes beyond what could be obtained from correlation functions, or from other inference techniques which do not exploit dynamical information.

Another crucial challenge in the field is to properly model the various noise components. It turns out that biological systems are generally non-bipartite due the presence of an extrinsic component in the noise. The present work provides on the one hand analytical expressions for the magnitude of the transfer entropy (or at least an upper bound on it) and of the information flow when the system is not bipartite, and, on the other hand a numerical method to infer the TE in all cases. Furthermore, we have shown that one can correctly infer the sign of the TE difference even with short time series by properly choosing the sampling time (see Ref. [25] for more details on the dependence of TE on the sampling time).

To conclude, we would like to emphasize that the transfer entropy is a general tool to identify variables which are relevant for time series prediction [26]. As such, the method has a lot of potential beyond the particular application covered in this paper: Predicting the current or future state of the environment by sensing it is an adaptation strategy followed by biological systems which can be understood using information-theoretic concepts [11, 27]. Similarly, during evolution, biological systems accumulate information from their environment, process it and use it quasi-optimally to increase their own fitness [28, 29]. In this context, transfer entropy-based methods have the potential to identify the directional interactions in co-evolution processes, which could be for instance the genomic evolution of a virus compared to that of its antigenes [30]. With the recent advances in high-throughput techniques and experimental evolution, we might soon be able to predict reliably the evolution of biological systems [31], and without doubt tools of information theory will play a key role in these advances.

Methods

In this section, we provide a detailed analysis of the information-theoretic quantities for the various models considered in this paper. The section is organized as follows:

Basic information-theoretic measures

Transfer entropy and information flow in the feedback cooling model

Transfer entropy rates and information flows in the model of Ref. [4] for a metabolic network

Transfer entropy rates and information flows in the modified model for the metabolic network

Basic information-theoretic measures

Below we briefly recall some definitions and properties of the information-theoretic measures. A fundamental quantity is the Shannon entropy which quantifies the uncertainty associated with the measurement x of a random variable X:

| (14) |

where P(x) is the probability that event x is realized, given an ensemble of possible outcomes. With this convention, the entropy is measured in nats. Similarly, for two random variables X and Y, one defines the joint Shannon entropy

| (15) |

and the conditional Shannon entropy

| (16) |

where P(x, y) and P(x|y) are joint and conditional probability distribution functions, respectively. The mutual information I(X : Y) is then a symmetric measure defined as

| (17) |

which quantifies the reduction of the uncertainty about X (resp. Y) resulting from the knowledge of the value of Y (respX). The more strongly X and Y are correlated, the larger I(X : Y) is.

These notions can be readily extended to random processes X = {Xi} and Y = {Yi} viewed as collections of individual random variables sorted by an integer time index i. The mutual information between the ordered time series {xi} and {yi}, realizations of X and Y, is then defined as

| (18) |

and characterizes the undirected information exchanged between the two processes. The conditional mutual information is defined similarly.

In contrast, the transfer entropy TX→Y is a information-theoretic measure that is both asymmetric and dynamic as it captures the amount of information that a source process X provides about the next state of a target process Y. More precisely, as defined by Eq (1) in the introduction,

| (19) |

where k and l define the lengths of the process histories, i.e., and . In this work, we have focused on a history length of 1 (i.e. k = l = 1) and denoted the corresponding TE by . Hence, , which is an upper bound to TX→Y(k, l) for l = 1 when the joint process {X, Y} obeys a Markovian dynamics [11].

On the other hand, the information flow from X to Y is defined as the time-shifted mutual information

| (20) |

and informs on the reduction of uncertainty in Yi when knowing about Xi+1 as compared to what we had with Xi only. In practice, can be obtained by shifting in time one time series with respect to the other one. Contrary to the transfer entropy which is always a positive quantity, the information flow may be negative or positive, depending on whether X sends information to Y (or X gains control of Y), or Y sends information to X (or X looses control over Y). In a bipartite system one has in the stationary regime. This is no longer true when the system is non-bipartite.

Transfer entropy and information flow in the feedback cooling model

We first recall the theoretical expressions of the transfer entropy rates and the information flows for the feedback-cooling model described by Eq (6). These quantities were computed in Ref. [16]. The transfer entropy rates in the stationary state are given by

| (21) |

Note that 2T/(γσ2) is the signal-to-noise ratio that quantifies the relative size of the measurement accuracy to the thermal diffusion of the velocity. Accordingly, the TE rate diverges when the control is deterministic. The information flow is given by

| (22) |

where is the determinant of the covariance matrix. The analytical expressions of the elements of the matrix, 〈v2〉, 〈y2〉 and 〈vy〉, are given by Eqs (A2) in Ref. [16]. In contrast with , the information flow remains finite as the noise intensity vanishes.

The upper bounds to the transfer entropies (see Eq (2)) were computed in Ref. [24] in the general case of coupled linear Langevin equations. For the feedback cooling model, one obtains

| (23) |

As shown in Fig 1, the estimate of the transfer entropy obtained by the inference method is in good agreement with the theoretical value (we stress that the figure shows the rates multiplied by the sampling time τ = 10−3). In Fig 6, we also obtain satisfactory agreement between inferred value of the information flow and theoretical value, when representing these quantities against the noise intensity σ2. These results of this figure confirm the inequalities .

Fig 6. and as a function of the noise intensity σ2.

The parameters of the model are T = 5, γ = m = 1, τr = 0.1 and a = −0.7.

Transfer entropy rates and information flows in the model of Ref. [4] for a metabolic network

Stationary distributions and correlation functions

We first compute the stationary probability distributions (pdfs) associated with Eq (12) were the coefficients aj and bj are given by

| (24) |

We recall that μE = μ0(1 + TμE − TEE) sets the timescale of E-fluctuations [4]. Since Eq (12) describe a set of coupled Markovian Ornstein-Uhlenbeck processes, the stationary pdf pxuvy(x, u, v, y) is Gaussian and given by

| (25) |

where is the covariance matrix which obeys the Lyapunov equation [32]

| (26) |

where

The solution of Eq (26) reads

| (27) |

From this we can compute all marginal pdfs, in particular

| (28) |

and

| (29) |

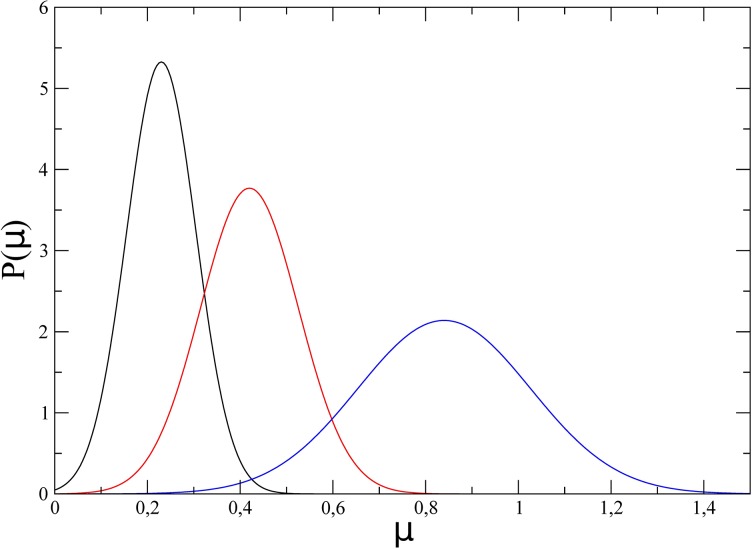

As an illustration, the steady-state pdf is plotted in Fig 7 for the three different IPTG concentrations (low, intermediate, and high). The agreement with the experimental curves displayed in Fig 1d of Ref. [4] is satisfactory.

Fig 7. Steady-state probability distribution of the growth rate for the three IPTG concentrations: Low (black), intermediate (red), high (blue).

For completeness, we also quote the expressions of Rpp(0) and Rpμ(0) (properly normalized) obtained from the definition :

| (30) |

| (31) |

with Rμμ(0) = σ44.

The correlation functions Rμμ(τ), REE(τ), and REμ(τ), obtained by taking the inverse Fourier transform of Eqs (6) in the Supplementary Information of [4] are plotted in Fig 4. In passing, we correct a few misprints in these equations: i) The correct expression of Rμμ(τ) is obtained by replacing AE(τ) by REE(τ) in the first term of Eq (12) in the Supplementary Information of [4]. ii) Eq (10) corresponds to REμ(τ) and not to RμE(τ) = REμ(−τ). Eq (8) then gives the correct expression of REμ(τ) (and not of RμE(τ)) provided the function AX(τ) defined in Eq (10) is altered. For τ ≥ 0, one should have

| (32) |

Transfer entropy rates

We now address the computation of the conditional probabilities and at first order in τ. This will allow us to obtain the expressions of the upper bounds to the transfer entropy rates defined by

| (33) |

where I is the mutual information, for instance in the steady state (where pxy(x′, y′) and py(y) become time independent pdfs). Therefore,

| (34) |

Note that the actual transfer entropy rates are defined as

| (35) |

where {xt′}t′≤t and {yt′}t′≤t denote the full trajectories of xt and yt in the time interval [0, t]. Since the present model is not bipartite, the calculation of these quantities is a nontrivial task that is left aside.

The two-time distributions and are given by

| (36) |

where is the transition probability from the state at time t to the state at time t + τ. From the definition of the Fokker-Planck operator associated with the 4-dimensional diffusion process described by Eq (12), the transition probability for small times is given by [32]

| (37) |

where is the drift coefficient in the equation for zi (with z1 = x, z2 = u, z3 = v, z4 = y), and all other θi, j being equal to 0.

Let us first consider the calculation of . By integrating over x, u, and v, we readily obtain

where the terms involving ∂x, ∂u, ∂v cancel due to natural boundary conditions. Hence,

| (38) |

which yields

| (39) |

after integration over u′ and v′, where we have defined the averaged drift coefficient

| (40) |

We thus finally obtain

| (41) |

Similarly, by also integrating over x′, we obtain

| (42) |

where

| (43) |

Due to the linearity of Eq (12) and the Gaussian character of the pfds, one simply has and , where a, b, c are complicated functions of the model parameters which we do not display here.

Eq (41) (resp. Eq (42)) merely shows that (resp. ) at the lowest order in τ is identical to the transition probability associated with an Ornstein-Uhlenbeck process with drift coefficient (resp. ) and diffusion coefficient . To proceed further, it is then convenient to use to the Fourier integral representation of the δ function and re-express and for small times as

| (44) |

and

| (45) |

up to corrections of the order τ2 [32]. This leads to

| (46) |

and from Eq (39) and the definition of the transfer entropy rate [Eq (34)],

| (47) |

We then use

| (48) |

and

| (49) |

to finally arrive at Eq (13), namely

| (50) |

A similar expression can be found in Ref. [11] (see Eq (A.31) in that reference). Note also that the result given in Ref. [24] is obtained as a special case.

Inserting into Eq (13) the values of the parameters given in Table S1 of Ref. [4], we obtain the values given in Table 2. Note that for the high IPTG concentration because TμE = 0, and therefore μ(t) no longer depends on E(t) as can be seen from Eq (10).

There is no need to detail the calculation of (i.e. ) because it goes along the same line, with y replaced by x. The crucial difference is that there is no white noise acting on . Therefore, the denominator in Eq (13), which is the variance of the noise ξy, is replaced by 0. This implies that is infinite.

Information flows

The information flows and are derived from the time-shifted mutual informations I[xt+τ : yt] and I[yt+τ : xt]. Specifically,

| (51) |

Let us first consider the second flow which requires the knowledge of whose expression is obtained by integrating Eq (39) over x′. This yields

| (52) |

Hence

| (53) |

We finally obtain

| (54) |

A similar calculation yields

| (55) |

where

| (56) |

is an averaged drift coefficient. Contrary to the case of the transfer entropy rate , the absence of a white noise acting on does not lead to an infinite result for . In fact, one has the symmetry relation

| (57) |

which is readily obtained by noting that pxy(x, y), the stationary solution of the Fokker-Planck equation, satisfies the equation

| (58) |

Inserting the numerical values of the parameters given in Table S1 of Ref. [4], we obtain the values given in Table 5 below. Interestingly, decreases as the IPTG concentration increases and that it becomes negative at high concentration.

Table 5. Theoretical values of in the original model of Ref. [4].

| Conc. of IPTG | Low | Intermediate | High |

|---|---|---|---|

| (in h−1) | 0.0148 | 0.0088 | -0.0243 |

Transfer entropy rates and information flows in the modified model for the metabolic network

We now repeat the above calculations for the modified model where NE is treated as a white noise. Eliminating again the variable w (i.e. NG) in favor of y, the new set of equations that describe the stochastic dynamics and replace Eq (12) reads

| (59) |

where we have defined the white noises ξx = μ0NE and satisfying and , respectively. These two noises are correlated, with .

The pdfs and the correlation functions can be computed as before. In fact, it is clear that this simply amounts to taking the limit βE → ∞ with finite in the previous equations (for instance in Eq (27) for the covariances). The new correlation functions are plotted in Fig 4. As expected, they are almost indistinguishable from those obtained with the original model and they fit the experimental data just as well (this of course is also true for the pdfs).

Much more interesting are the results for the transfer entropy rates and the information flows. Again, there is no need to repeat the calculations as they follow the same lines as before. We now obtain

| (60) |

| (61) |

where

| (62) |

| (63) |

and

| (64) |

| (65) |

(Again, gx(x, v, y) and gy(x, v, y) denote the drift coefficients in Eq (59)). The crucial difference with the results for the original model is that is now finite. Similarly, we have

| (66) |

| (67) |

The numerical values of and are given in Table 3. For completeness, we also compare these values with the estimates obtained by the inference method in Table 4. We see that satisfactory results are obtained by properly choosing the sampling time τ. This is also true for the information flows and . It is worth noting that the symmetry relation no longer holds, except for the high IPTG concentration (as TμE = 0). This contrasts with the preceding case where NE was modeled by an Ornstein-Uhlenbeck noise. We also observe that the information flows are not always smaller than the transfer entropy rates, contrary to what occurs in bipartite systems. Therefore, the concept of a “sensory capacity” as introduced in Ref. [11] is here ineffective.

Acknowledgments

We acknowledge J. Lizier for many insightful comments regarding the numerical evaluation of transfer entropies, and L. Peliti for stimulating discussions. S.L. thanks the Institute of Complex Systems (ISC-PIF), the Region Ile-de-France, and the Labex CelTisPhyBio (No. ANR-10- LBX-0038) part of the IDEX PSL (No. ANR-10-IDEX-0001-02 PSL) for financial support.

Data Availability

All relevant data are within the paper.

Funding Statement

S.L. thanks the Institute of Complex Systems (ISC-PIF), the Region Ile-de-France, and the Labex CelTisPhyBio (No. ANR-10- LBX-0038) part of the IDEX PSL (No. ANR-10-IDEX-0001-02 PSL) for financial support (to DL). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Prill RJ, Vogel R, Cecchi GA, Altan-Bonnet G, Stolovitzky G. Noise-Driven Causal Inference in Biomolecular Networks. PLoS ONE. 2015;10(6):e0125777 doi: 10.1371/journal.pone.0125777 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Affeldt S, Verny L, Isambert H. 3off2: A network reconstruction algorithm based on 2-point and 3-point information statistics. BMC Bioinformatics. 2016;17(S2). doi: 10.1186/s12859-015-0856-x [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Dunlop MJ, Cox RS, Levine JH, Murray RM, Elowitz MB. Regulatory activity revealed by dynamic correlations in gene expression noise. Nat Genet. 2008;40(12):1493–1498. doi: 10.1038/ng.281 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Kiviet DJ, Nghe P, Walker N, Boulineau S, Sunderlikova V, Tans SJ. Stochasticity of metabolism and growth at the single-cell level. Nature. 2014;514:376 doi: 10.1038/nature13582 [DOI] [PubMed] [Google Scholar]

- 5. Granger CWJ. Investigating Causal Relations by Econometric Models and Cross-spectral Methods. Econometrica. 1969;37(3):424–438. doi: 10.2307/1912791 [Google Scholar]

- 6. Schreiber T. Measuring information transfer. Phys Rev Lett. 2000;85:461 doi: 10.1103/PhysRevLett.85.461 [DOI] [PubMed] [Google Scholar]

- 7. Wibral M, Pampu N, Priesemann V, Siebenhühner F, Seiwert H, Lindner M, et al. Measuring Information-Transfer Delays. PLoS ONE. 2013;8:e55809 doi: 10.1371/journal.pone.0055809 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Pahle J, Green AK, Dixon CJ, Kummer U. Information transfer in signaling pathways: A study using coupled simulated and experimental data. BMC Bioinformatics. 2008;9(1):139 doi: 10.1186/1471-2105-9-139 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Vicente R, Wibral M, Lindner M, Pipa G. Transfer entropy—a model-free measure of effective connectivity for the neurosciences. Journal of Computational Neuroscience. 2011;30(1):45–67. doi: 10.1007/s10827-010-0262-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Lizier TJ, Prokopenko M. Differentiating information transfer and causal effect. Eur Phys J B. 2010;73(4):605–615. doi: 10.1140/epjb/e2010-00034-5 [Google Scholar]

- 11. Hartich D, Barato AC, Seifert U. Sensory capacity: An information theoretical measure of the performance of a sensor. Phys Rev E. 2016;93:022116 doi: 10.1103/PhysRevE.93.022116 [DOI] [PubMed] [Google Scholar]

- 12. Tusch S, Kundu A, Verley G, Blondel T, Miralles V, Démoulin D, et al. Energy versus Information Based Estimations of Dissipation Using a Pair of Magnetic Colloidal Particles. Phys Rev Lett. 2014;112:180604 doi: 10.1103/PhysRevLett.112.180604 [DOI] [PubMed] [Google Scholar]

- 13.Lizier JT. JIDT: An information-theoretic toolkit for studying the dynamics of complex systems. Frontiers in Robotics and AI. 2014;1:11(11).

- 14. Parrondo JMR, Horowitz JM, Sagawa T. Thermodynamics of Information. Nature Physics. 2015;11:131 doi: 10.1038/nphys3230 [Google Scholar]

- 15. Horowitz JM, Esposito M. Thermodynamics with Continuous Information Flow. Phys Rev X. 2014;4:031015. [Google Scholar]

- 16. Horowitz JM, Sandberg H. Second-law-like inequalities with information and their interpretations. New J Phys. 2014;16:125007 doi: 10.1088/1367-2630/16/12/125007 [Google Scholar]

- 17. Hartich D, Barato AC, Seifert U. Stochastic thermodynamics of bipartite systems: transfer entropy inequalities and a Maxwell’s demon interpretation. J Stat Mech. 2014;2014(2):P02016 doi: 10.1088/1742-5468/2014/02/P02016 [Google Scholar]

- 18. Allahverdyan AE, Janzing D, Mahler G. Thermodynamic efficiency of information and heat flow. J Stat Mech. 2009;2009(09):P09011 doi: 10.1088/1742-5468/2009/09/P09011 [Google Scholar]

- 19. Bowsher CG, Swain PS. Identifying sources of variation and the flow of information in biochemical networks. Proc Natl Acad Sci USA. 2012;109(20):E1320–E1328. doi: 10.1073/pnas.1119407109 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Kim KH, Qian H. Entropy Production of Brownian Macromolecules with Inertia. Phys Rev Lett. 2004;93:120602 doi: 10.1103/PhysRevLett.93.120602 [DOI] [PubMed] [Google Scholar]

- 21. Munakata T, Rosinberg ML. Feedback cooling, measurement errors, and entropy production. J Stat Mech. 2013;2013(06):P06014 doi: 10.1088/1742-5468/2013/06/P06014 [Google Scholar]

- 22. Sauer T. Computational solution of stochastic differential equations. Wiley Interdisciplinary Reviews: Computational Statistics. 2013;5(5):362–371. doi: 10.1002/wics.1272 [Google Scholar]

- 23. Monteoliva D, Diambra L. Information propagation in a noisy gene cascade. Phys Rev E. 2017;96:012403 doi: 10.1103/PhysRevE.96.012403 [DOI] [PubMed] [Google Scholar]

- 24. Ito S, Sagawa T. Maxwell’s demon in biochemical signal transduction with feedback loop. Nat Commun. 2014;6:7498 doi: 10.1038/ncomms8498 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Barnett L, Seth AK. Detectability of Granger causality for subsampled continuous-time neurophysiological processes. Journal of Neuroscience Methods. 2017;275:93–121. doi: 10.1016/j.jneumeth.2016.10.016 [DOI] [PubMed] [Google Scholar]

- 26.Tishby N, Pereira FC, Bialek W. The Information bottleneck. arXiv preprint physics/0004057. 2000;.

- 27. Tostevin F, ten Wolde PR. Mutual Information between Input and Output Trajectories of Biochemical Networks. Phys Rev Lett. 2009;102:218101 doi: 10.1103/PhysRevLett.102.218101 [DOI] [PubMed] [Google Scholar]

- 28. Kobayashi T, Sughiyama Y. Fluctuation Relations of Fitness and Information in Population Dynamics. Phys Rev Lett. 2015;115:238102 doi: 10.1103/PhysRevLett.115.238102 [DOI] [PubMed] [Google Scholar]

- 29. Halabi N, Rivoire O, Leibler S, Ranganathan R. Protein Sectors: Evolutionary Units of Three-Dimensional Structure. Cell. 2009;138(4):774–786. doi: 10.1016/j.cell.2009.07.038 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30. Smith DJ, Lapedes AS, de Jong JC, Bestebroer TM, Rimmelzwaan GF, Osterhaus ADME, et al. Mapping the Antigenic and Genetic Evolution of Influenza Virus. Science. 2004;305(5682):371–376. doi: 10.1126/science.1097211 [DOI] [PubMed] [Google Scholar]

- 31. Lässig M, Mustonen V, Walczak AM. Predicting evolution. Nat Ecol Evol. 2017;1(0077):1–9. [DOI] [PubMed] [Google Scholar]

- 32. Risken H. The Fokker-Planck equation. Springer; 1989. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

All relevant data are within the paper.