Abstract

Fabricated tissue phantoms are instrumental in optical in-vitro investigations concerning cancer diagnosis, therapeutic applications, and drug efficacy tests. We present a simple non-invasive computational technique that, when coupled with experiments, has the potential to characterize a wide range of biological tissues. The fundamental idea of our approach is to find a supervised learner that links the scattering pattern of a turbid sample to its thickness and scattering parameters. Once found, this supervised learner is employed in an inverse optimization problem for estimating the scattering parameters of a sample given its thickness and scattering pattern. Multi-response Gaussian processes are used for the supervised learning task and a simple setup is introduced to obtain the scattering pattern of a tissue sample. To increase the predictive power of the supervised learner, the scattering patterns are filtered, enriched by a regressor, and finally characterized with two parameters, namely, transmitted power and scaled Gaussian width. We computationally illustrate that our approach achieves errors of roughly 5% in predicting the scattering properties of many biological tissues. Our method has the potential to facilitate the characterization of tissues and the fabrication of phantoms used for diagnostic and therapeutic purposes over a wide range of the optical spectrum.

Introduction

Recently, considerable effort has been devoted to improving the quality of fabricated tissue phantoms1–4 as they are instrumental in the optical in-vitro investigations concerning cancer diagnosis5, therapeutic applications6,7, and drug efficacy tests8. In this regard, one avenue of research has pursued the use of accurate and cost-effective phantom characterization techniques to guide the fabrication process. The most widely recognized characterization techniques for this purpose are spatial frequency domain imaging (SFDI)9,10, frequency domain photon migration (FDPM)11,12, and inverse adding-doubling (IAD)13,14. The fundamental idea of these techniques is to computationally model the scattering phenomenon in tissue phantoms and subsequently estimate the scattering properties of such materials by calibrating the computational model against some experimental data. Below, we briefly describe these methods and then introduce our approach which enables the data-driven estimation of scattering properties of tissues by employing a supervised learner (which is fitted to a training dataset of tissues’ characteristics) in an inverse optimization procedure. Our method is inexpensive, non-intrusive, efficient, and applicable to a wide range of materials.

In the case of a wavefront with a lateral sinusoidal intensity profile, the penetration depth and the diffuse reflectance depend on the lateral spatial frequency. The latter quantity can be used to obtain the optical properties as well as the optical tomography of the sample10. The essence of the SFDI technique is to exploit this relation by matching the measured and calculated diffuse reflectance for a set of wavefronts with different spatial frequencies. As for the FDPM technique, the analytical expressions of the phase lag, amplitude attenuation, and complex wave vector of a semi-infinite turbid medium are fitted to the corresponding measured values of the reflected beam to find the scattering parameters. In FDPM, the fitting data are collected in two ways: (i) for a fixed temporal modulation frequency, the distance between the LED source and the detector is changed, and (ii) for a fixed distance between the source and the detector, the temporal modulation frequency is varied over a wide range.

In both SFDI and FDPM methods, the diffusion equation is used to approximate the Boltzmann transport equation. This results in the overestimation (underestimation) of the diffuse reflectance at low (high) spatial frequencies. In addition to the fitting error, enforcing the boundary conditions in the diffusion equation15 introduces some error in arriving at the analytical formulas for realistic semi-infinite media. Moreover, the experimental setups in both SFDI and FDPM methods are complex and costly. In SFDI, in addition to a spatial light modulator, two polarizers at the source and detector are needed to reject the specular reflection collected normal to the surface. As for the FDPM technique, a network analyzer is required to modulate the current of the LED and to detect the diffuse reflectance of the temporally modulated beam. Furthermore, these methods are incapable of measuring the anisotropy coefficient of the sample, g, which is an important parameter for characterizing turbid media16–18. In biological tissues, the probability of scattering a beam of light at a given angle (with respect to the incoming beam) can be described suitably by the Henyey-Greenstein phase function19,20:

| 1 |

where the optical properties of the turbid medium depend on both g (which characterizes the angular profile of scattering) and the scattering length, i.e., the average distance over which the scattering occurs.
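For reference, the Henyey-Greenstein phase function is commonly written in the form below. The symbol θ for the scattering angle and the normalization over the full solid angle are assumptions here and may differ from the convention used in Eq. 1.

```latex
p(\theta) = \frac{1}{4\pi}\,
            \frac{1 - g^{2}}{\left(1 + g^{2} - 2g\cos\theta\right)^{3/2}},
\qquad
g = \langle \cos\theta \rangle
```

In this form, g = 0 corresponds to isotropic scattering, while g approaching 1 corresponds to strongly forward-peaked scattering.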

Among these techniques, IAD is the most popular one due to its relatively higher accuracy and simpler experimental setup. Briefly, IAD is based on matching the measured and the calculated diffuse reflectance and transmittance by calibrating the scattering and absorption coefficients used in the simulations. When an accurate measurement of the un-scattered transmission can be made, it is possible to obtain g as well. In IAD, the errors are mostly attributed to the experimental data. For instance, when measuring the total transmission and reflectance, part of the light scattered from the edge of the sample can be lost, or when measuring the un-scattered transmission, the scattered rays may unavoidably influence the measurement13.

We propose an efficient method to address the above challenges and achieve a better compromise between accuracy and the cost of measuring the scattering parameters (i.e., g and the scattering length). Our method is based on a supervised learner that can predict the scattering pattern of a turbid medium given its thickness (t) and scattering parameters. Once this supervised learner is found, the scattering parameters of any turbid sample can be calculated given its thickness and the image of the scattered rays’ pattern, either by inverting the supervised learner or by performing an optimization task.

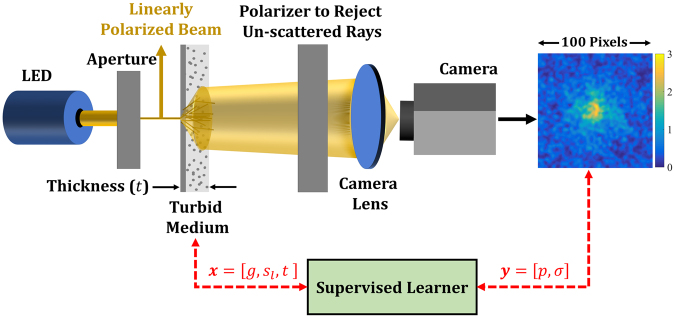

Our process for obtaining the scattering pattern, as illustrated in Fig. 1, starts by producing a pencil beam from an LED placed behind an aperture. The pencil beam has a well-defined but arbitrary polarization and is incident on the turbid medium with a known thickness. The surface of this medium is then imaged onto a camera sensor through a lens, where the un-scattered beam with the well-defined polarization is rejected via a polarizer placed next to the turbid medium. We note that with such a non-coherent and phase-insensitive measurement, the size of the image as well as the components scale with the diameter of the incident beam. Because of this scaling rule, the length unit of the image shown in Fig. 1 equals the number of the scaled pixels of the camera. We also note that for a collimated illumination, the distance between the source and the sample is arbitrary. A similar argument holds for the distance between the polarizer and the sample because the un-scattered light is collimated.

Figure 1.

Schematic of the simulation setup: The image of the transmitted scattered waves on the surface of the turbid medium is used to obtain its scattering properties. The input to the turbid medium is a linearly polarized pencil beam from an LED. A free-space polarizer is used to reject the un-scattered beam based on the polarization of the input LED. The color bar beside the image indicates the range of gray intensity. See the text for more details on our simulation setup.

We employed the same configuration as in Fig. 1 in our computational simulations. In particular, we placed the camera lens far from the sample such that the scattered light is almost parallel to the optical axis. We employed a lens with a focal length, radius, and maximum numerical aperture of, respectively, , , and . Additionally, the optical resolution of the system according to Rayleigh’s criterion was at 21, which is equal to the pixel pitch of the detector in our simulations. Since the pixel pitch was larger than half of the optical resolving limit and hence the Nyquist criterion was not satisfied, the scattering patterns are slightly blurred. As for the LED bandwidth, Δλ, we chose it wide enough to have a coherence length much smaller than the optical path length of the rays. With this choice, coherence effects do not distort the scattered images. In particular, we ensured that Δλ where n is the refractive index and is the wavelength of interest. The minimum required bandwidth is when , , and .
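The resolution and bandwidth checks described above can be sketched as follows. This is a rough illustration, not the authors' calculation: the Rayleigh limit 0.61 λ / NA and the order-of-magnitude coherence length λ² / (n Δλ) are standard textbook expressions, and every numerical value below is a hypothetical placeholder rather than a setting from the simulations.

```python
import math


def rayleigh_resolution(wavelength, numerical_aperture):
    """Rayleigh resolution limit of a circular aperture: 0.61 * lambda / NA."""
    return 0.61 * wavelength / numerical_aperture


def satisfies_nyquist(pixel_pitch, resolution):
    """Nyquist sampling requires at least two pixels per resolvable distance."""
    return pixel_pitch <= resolution / 2.0


def coherence_length(wavelength, bandwidth, refractive_index=1.0):
    """Order-of-magnitude coherence length in the medium: lambda^2 / (n * dlambda)."""
    return wavelength ** 2 / (refractive_index * bandwidth)


def min_bandwidth(wavelength, path_length, refractive_index=1.0):
    """Bandwidth at which the coherence length equals the given path length."""
    return wavelength ** 2 / (refractive_index * path_length)


# Hypothetical values (all lengths in micrometers), chosen only for illustration.
wavelength_um = 0.6
resolution_um = rayleigh_resolution(wavelength_um, numerical_aperture=0.1)
pixel_pitch_um = resolution_um  # pixel pitch equal to the resolution, as in the text
print("Nyquist satisfied:", satisfies_nyquist(pixel_pitch_um, resolution_um))
print("Minimum bandwidth (um):", min_bandwidth(wavelength_um, 500.0, 1.4))
```

With a pixel pitch equal to the Rayleigh limit, the Nyquist check fails, which is consistent with the slight blurring noted in the text.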

We note that, in our method, the simulations are performed on thin slabs of phantom or tissue with known thickness. Although performing the same type of experiment using reflection is in principle possible, we expect much weaker reflection than transmission for such thin slabs. Additionally, the reduction of the signal strength translates into a lower SNR and higher measurement errors in the case of reflection. We have also found some experimental works which are based on the quantitative phase of the transmission images of thin samples for which 22,23. As opposed to this latter approach, our method is based on the intensity of the scattering patterns, which is simpler and applicable to thicker samples.

To fit our supervised learner, a high-fidelity training dataset of input-output pairs is required. Here, the inputs (collectively denoted by x) are the characteristics of the turbid samples (i.e., t, g, and the scattering length) while the outputs (collectively denoted by y) are some finite set of parameters that characterize the corresponding scattering patterns (i.e., the images similar to the one in Fig. 1). We elaborate on the choice of the latter parameters in Sec. 0 but note that they must be sufficiently robust to noise so that, given , the scattering parameters of any turbid sample can be predicted with relatively high accuracy using the supervised learner.

Results

To construct the computational training dataset, we used the Sobol sequence24,25 to build a space-filling design of experiments (DOE) with 400 points (i.e., simulation settings) over the hypercube , , and . The lower limit on the sample thickness is imposed because of the considerable inaccuracies associated with the negligible probability of scattering in thin samples. In contrast, the upper limit on the sample thickness is imposed by the computational costs associated with tracing the large number of ray scatterings. As for the ranges of g and the scattering length, they cover the scattering properties of a wide range of biological tissues including but not limited to liver26, white brain matter, grey brain matter, cerebellum, and brainstem tissues (pons, thalamus)27.
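A space-filling design of this kind can be sketched with SciPy's quasi-Monte Carlo module. The bounds below are hypothetical placeholders (only the 600 μm upper thickness limit is stated in the text), and the unscrambled sequence is an assumption made so that shorter designs are exact prefixes of longer ones.

```python
import warnings

import numpy as np
from scipy.stats import qmc

# Hypothetical bounds for (t [um], g, scattering length [um]); placeholders only.
L_BOUNDS = [100.0, 0.5, 50.0]
U_BOUNDS = [600.0, 0.95, 200.0]


def sobol_doe(n_points, l_bounds=L_BOUNDS, u_bounds=U_BOUNDS):
    """Return the first n_points of an unscrambled Sobol sequence scaled to the bounds."""
    sampler = qmc.Sobol(d=len(l_bounds), scramble=False)
    with warnings.catch_warnings():
        # SciPy warns when n_points is not a power of two; this is harmless here.
        warnings.simplefilter("ignore", UserWarning)
        unit_points = sampler.random(n_points)
    return qmc.scale(unit_points, l_bounds, u_bounds)


doe = sobol_doe(400)   # each row is one simulation setting
print(doe.shape)
```

Because the sequence is deterministic, the first 50, 100, and so on points of `doe` are themselves valid Sobol designs, which is convenient when the training set is grown incrementally.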

Once the simulation settings were determined, following the schematic in Fig. 1, the scattering pattern corresponding to each of them was obtained by the commercial ray-tracing software Zemax OpticStudio. Although there are many software programs applicable to this task (such as CODE V, OSLO, and FRED), Zemax is perhaps the most widely used software for ray tracing. Unlike mode solvers, ray tracing is computationally fast. The significant scattering effects as well as the employed broadband light source (i.e., the LED) further justify the use of a ray-tracing software. In our simulations with Zemax, rigorous Monte Carlo simulations were conducted for higher accuracy (instead of solving the simplified diffusion equation) and the turbid media were simulated with the built-in Henyey-Greenstein model28. To push the upper limit on the sample thickness to 600 μm, we increased the number of Monte Carlo intersections and observed that the maximum capacity of Zemax (roughly two million segments per ray) must be employed for sufficient accuracy. Additionally, we found that a 100 × 100 rectangular detector and five million launched rays provide a reasonable compromise between the accuracy and the simulation costs (about 3 minutes for each input setting).

As mentioned in Sec. 1, the scattering patterns corresponding to the simulation settings (i.e., the DOE points) must be characterized with a finite set of parameters (denoted by y in Fig. 1) to reduce the problem dimensionality and enable the supervised learning process. To determine the sufficient number of parameters, we highlight that our end goal is to arrive at an inverse relation where g and the scattering length of a tissue sample with a specific thickness can be predicted. Therefore, if the parameters are chosen such that both g and the scattering length are monotonic functions of them, two characterizing parameters are required for a one-to-one relation. It must be noted that these parameters must be sufficiently robust to the inherent errors in the simulations mentioned above. We will elaborate on this latter point below and in Sec. 3.

We have conducted extensive studies and our results indicate that the transmitted power, p, and the scaled Gaussian width, σ, can sufficiently and robustly characterize the scattering patterns of a wide range of tissue samples. While p measures the amount of the LED beam power transmitted through the sample and collected at the image, σ measures the extent to which the sample scatters the LED beam. It is evident that these parameters are negatively correlated, i.e., increasing p would decrease σ and vice versa.

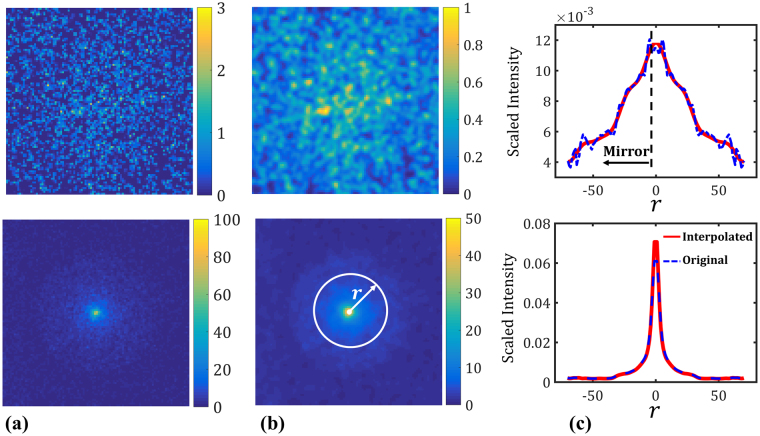

Measuring p for an image is straightforward as it only requires integrating the gray intensity over all the image pixels. Measuring σ, however, requires some pre-processing because the amount of scattering in an image is sensitive to noise and has a strong positive correlation with it (i.e., high scattering would involve a high degree of noise in the image and vice versa). As illustrated in Fig. 2, we take the following steps to measure σ for an image:

1. Filter the image with a Gaussian kernel to eliminate the local noise (see panel b in Fig. 2). In general, the width of the Gaussian kernel depends on the resolution of the original image as well as the amount of noise. In our case, the filtering was conducted (in the frequency space) with a kernel width of 7 pixels.

2. Obtain the radial distribution of the intensity by angularly averaging it over the image.

3. Mirror the radial distribution to obtain a symmetric curve and then scale it so that the area under the curve equals unity (see panel c in Fig. 2). At this point, the resulting symmetric curve approximates a zero-mean Gaussian probability distribution function (PDF).

4. Fit a regressor to further reduce the noise and enrich the scattered data which resemble a Gaussian PDF (compare the solid and dashed lines in panel c).

5. Estimate the standard deviation of the Gaussian PDF via the enriched data. Divide this standard deviation by the power of the image (i.e., p) to obtain σ.
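The steps above can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the kernel width is interpreted as the standard deviation of the Gaussian kernel in pixels, the regressor of step 4 is replaced by a direct second-moment estimate of the normalized curve, and all function names are hypothetical.

```python
import numpy as np


def transmitted_power(image):
    """p: gray intensity integrated over all image pixels."""
    return float(np.sum(image))


def gaussian_filter_fft(image, kernel_std_px):
    """Step 1: Gaussian low-pass filtering carried out in the frequency space."""
    fy = np.fft.fftfreq(image.shape[0])[:, None]   # cycles per pixel
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    transfer = np.exp(-2.0 * np.pi ** 2 * kernel_std_px ** 2 * (fx ** 2 + fy ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * transfer))


def radial_profile(image):
    """Step 2: angular average of the intensity in one-pixel-wide rings."""
    rows, cols = np.indices(image.shape)
    cy, cx = (image.shape[0] - 1) / 2.0, (image.shape[1] - 1) / 2.0
    ring = np.floor(np.hypot(rows - cy, cols - cx)).astype(int)
    sums = np.bincount(ring.ravel(), weights=image.ravel())
    counts = np.bincount(ring.ravel())
    return sums / np.maximum(counts, 1)


def trapezoid(y, x):
    """Trapezoidal integration written out to avoid NumPy version differences."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def scaled_gaussian_width(image, kernel_std_px=7.0):
    """Return (sigma, std): the scaled Gaussian width and the unscaled width in pixels."""
    power = transmitted_power(image)
    half = radial_profile(gaussian_filter_fft(image, kernel_std_px))
    r_half = np.arange(half.size) + 0.5                  # ring centers
    r = np.concatenate([-r_half[::-1], r_half])          # step 3: mirror
    curve = np.concatenate([half[::-1], half])
    pdf = curve / trapezoid(curve, r)                    # unit area
    # Steps 4-5 (simplified): second moment instead of a fitted regressor.
    std = np.sqrt(trapezoid(r ** 2 * pdf, r))
    return std / power, std


# Synthetic, noise-free Gaussian spot (100 x 100 pixels) as a toy input.
yy, xx = np.indices((100, 100))
spot = np.exp(-((yy - 49.5) ** 2 + (xx - 49.5) ** 2) / (2.0 * 6.0 ** 2))
sigma_scaled, width_px = scaled_gaussian_width(spot, kernel_std_px=2.0)
print(sigma_scaled, width_px)
```

For a noise-free Gaussian spot, the recovered width is close to the quadrature sum of the spot width and the filter width, since Gaussian filtering broadens the pattern. On noisy images, a regressor such as the one in step 4 would be expected to give a more robust estimate than the raw second moment.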

Figure 2.

Measuring σ for two different images: (a) The original scattering patterns obtained by Zemax following the schematic in Fig. 1. Both images have a side length of 100 pixels. The images in the top and bottom rows correspond to two different simulation settings. The color bars indicate the range of gray intensity for each image. (b) The filtered images. The same Gaussian kernel is used to filter out the local noise in both images in panel (a). (c) The scaled radial distribution of gray intensity. The area under the dashed blue curve is approximately unity in both cases and the solid red curves are the regressors fitted to the original data. While in the top plot the noise is considerable (even after filtering with a Gaussian kernel), in the bottom plot the noise is negligible and hence the regressor almost interpolates. Gaussian processes are employed as regressors here with an estimated nugget of 10⁻³ and 10⁻⁵ for the top and bottom plots, respectively.

As for the regressor, we recommend employing a method that can address the potentially high amount of noise in some of the images which, as mentioned earlier, arises when scattering is significant (e.g., when t is large while g and the scattering length are small). We have used Gaussian processes (GP’s), neural networks, and polynomials for this purpose but recommend the use of GP’s mainly because they, following the procedure outlined in ref.29, can automatically address large or small amounts of noise. Additional attractive features of GP’s are discussed in Sec. 2.1 and Sec. 5.

The reason behind scaling the standard deviation in step 5 by p is to leverage the negative correlation between the transmitted power and the noise to arrive at a better measure for estimating scattering. To demonstrate this, consider two images where one of them is noisier than the other. The noisier image must be more scattered and hence have a larger scattering measure. To increase the difference between the scattering measures (and, subsequently, increase the predictive power of the supervised learner), one can divide them by a variable that is larger (smaller) for the smaller (larger) scattering measure. This variable, in our case, is the transmitted power, which is rather robust to the noise.

Finally, we note that the images were not directly used in the supervised learning stage as outputs because: (i) Predicting the scattering pattern is not our only goal. Rather, we would like to have a limited set of parameters (i.e., outputs) that can sensibly characterize the image and hence provide guidance as to how the inputs (i.e., t, g, and the scattering length) affect the outputs (and correspondingly the scattering patterns). Using the images directly as outputs is a more straightforward approach but renders monitoring the trends difficult. (ii) With 100 × 100 outputs (the total number of pixels), fitting a multi-response supervised learner becomes computationally very expensive and, more importantly, may face severe numerical issues. One can also fit single-response supervised learners but this is rather cumbersome, expensive, and prone to errors due to high amounts of noise in some pixels. (iii) With 100 × 100 outputs, the inverse optimization process (for estimating g and the scattering length given t and an image) becomes expensive.

Supervised Learner: Linking Scattering Patterns with Tissue Sample Characteristics

With the advances in computational capabilities, supervised and unsupervised learning methods have drawn considerable attention in a wide range of applications including computational materials science30–51, neuroscience52, clinical medicine53–56, biology57,58, protein analysis and genetics59, biotechnology60,61, robotics62, psychology63, climatology64, paleoseismology65, and economics66. These methods provide the means to predict the response of a system where no or limited data is available. Neural networks, support vector machines, decision trees, Gaussian processes (GP’s), clustering, and random forests are amongst the most widely used methods. In case of biological tissues, supervised learning via neural networks has been previously employed, e.g., for classification of tissues using SFDI-based training datasets67–72.

We employ GP’s to link the characterizing parameters of the scattering patterns (i.e., p and σ) with those of the tissue samples (i.e., t, g, and the scattering length). Briefly, the essential idea behind using GP’s as supervised learners is to model the input-output relation as a realization of a Gaussian process. GP’s are well established in the statistics73, computational materials science33,40, and computer science74 communities as they, e.g., readily quantify the prediction uncertainty75,76 and enable tractable and efficient Bayesian analyses77,78. In addition, GP’s are particularly suited to emulating highly nonlinear functions, especially when only a limited number of training samples is available.

In our case, the inputs and outputs correspond to x (i.e., t, g, and the scattering length) and y (i.e., p and σ), respectively. As there are two outputs, we can either fit a multi-response GP (MRGP) model or two independent single-response GP (SRGP) models. With the former approach, one GP model is fitted to map the three-dimensional (3D) space of x to the two-dimensional (2D) space of y. With the latter approach, however, two GP models are fitted: one for mapping x to p and another for mapping x to σ. The primary advantage of an MRGP model lies in capturing the correlation between the responses (if there is any) and, subsequently, requiring less data for a desired level of accuracy. An MRGP model might not provide more predictive power if the responses are independent, have vastly different behavior, or contain different levels of noise.
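To make the emulation idea concrete, the following is a minimal GP regression sketch in NumPy. It is an assumption-laden illustration rather than the MRGP used here: the squared-exponential kernel, the fixed (not estimated) length scales and nugget, and the class name `SimpleGP` are all hypothetical choices, and the two responses are fitted with a shared kernel without modeling their cross-correlation.

```python
import numpy as np


def sq_exp_kernel(a, b, length_scale):
    """Squared-exponential correlation between the rows of a and the rows of b."""
    diff = (a[:, None, :] - b[None, :, :]) / length_scale
    return np.exp(-0.5 * np.sum(diff ** 2, axis=-1))


class SimpleGP:
    """GP posterior mean with a constant prior mean and fixed hyperparameters."""

    def __init__(self, length_scale, nugget=1e-6):
        self.length_scale = np.asarray(length_scale, dtype=float)
        self.nugget = nugget   # larger values smooth noisy data instead of interpolating

    def fit(self, x, y):
        self.x = np.asarray(x, dtype=float)
        y = np.asarray(y, dtype=float)
        self.mean = y.mean(axis=0)
        k = sq_exp_kernel(self.x, self.x, self.length_scale)
        k[np.diag_indices_from(k)] += self.nugget
        chol = np.linalg.cholesky(k)
        # Solve K alpha = (y - mean) through the Cholesky factor.
        self.alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y - self.mean))
        return self

    def predict(self, x_new):
        k_star = sq_exp_kernel(np.asarray(x_new, dtype=float), self.x, self.length_scale)
        return self.mean + k_star @ self.alpha


def toy_responses(x):
    """Hypothetical smooth two-output function standing in for (p, sigma)."""
    return np.column_stack([np.sin(x[:, 0]) + x[:, 1] * x[:, 2],
                            np.exp(-x[:, 0]) * x[:, 1]])


rng = np.random.default_rng(0)
x_train = rng.random((80, 3))          # inputs scaled to the unit cube
gp = SimpleGP(length_scale=[1.0, 1.0, 1.0]).fit(x_train, toy_responses(x_train))
x_test = rng.random((20, 3))
print(np.max(np.abs(gp.predict(x_test) - toy_responses(x_test))))
```

In practice the hyperparameters (including the nugget) would be estimated from the data, for example by maximum likelihood, rather than fixed by hand as in this sketch.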

We conducted convergence studies to decide between the two modeling options and, additionally, determine the minimum DOE size required to fit a sufficiently accurate model. As mentioned earlier, the Sobol sequence was employed to build one DOE of size 400 over the hypercube , , and . The Sobol sequence was chosen over other design methods (e.g., Latin hypercube) because consecutive subsets of a Sobol sequence all constitute space-filling designs50. Following this, we partitioned the first 300 points in the original DOE of size 400 into six nested subsets with an increment of 50, i.e., the i-th DOE (i = 1,…, 6) included the first 50i points from the original DOE. The last 100 points in the original DOE (which are space-filling and different from all the training points) were reserved for estimating the predictive power of the models. Next, three GP models were fitted to each DOE: (i) an MRGP model to map x to y, and (ii) two SRGP models, one to map x to p and another to map x to σ. Finally, the reserved 100 DOE points were used to estimate the scaled root-mean-squared error (RMSE) as:

| 2 |

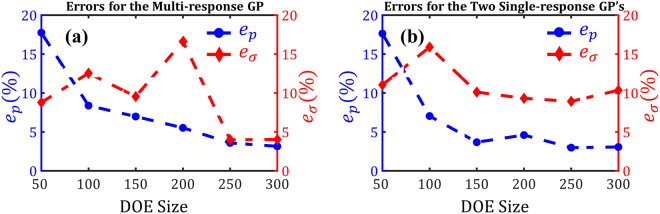

where N is the number of prediction points (N = 100 in our case), q is the quantity of interest (either p or σ), and its estimate is the value predicted by the fitted model. Figure 3 summarizes the results of our convergence studies (see Sec. 5.1 for fitting costs) and indicates that:

As the sample size increases, the errors generally decrease. The sudden increases in the errors are either due to overfitting or the addition of some noisy data points.

of the MRGP model is almost always smaller than that of the SRGP (compare the red curves in Fig. 3a and b). The opposite statement holds for . This is because p, as compared to σ, is much less noisy.
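The error measure and the nested training subsets used in the convergence studies can be sketched as follows. The normalization of the RMSE by the mean magnitude of the true values is an assumption made for illustration; the scaling in Eq. 2 may differ.

```python
import numpy as np


def scaled_rmse(q_true, q_pred):
    """RMSE in percent, scaled by the mean magnitude of the true values (assumed scaling)."""
    q_true = np.asarray(q_true, dtype=float)
    q_pred = np.asarray(q_pred, dtype=float)
    rmse = np.sqrt(np.mean((q_true - q_pred) ** 2))
    return 100.0 * rmse / np.mean(np.abs(q_true))


def nested_subsets(n_train=300, increment=50):
    """Index ranges of the nested training sets: the first 50, 100, ..., 300 points."""
    return [np.arange(size) for size in range(increment, n_train + 1, increment)]


# The last 100 of the 400 DOE points are held out for testing.
test_indices = np.arange(300, 400)
print([subset.size for subset in nested_subsets()])
print(scaled_rmse([2.0, 2.0], [1.0, 3.0]))
```

A convergence curve is then obtained by fitting one model per subset and evaluating `scaled_rmse` on the held-out points for each response.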

Figure 3.

Reduction of prediction error (in percent) by increasing the number of training samples: (a) Prediction errors for the MRGP model. (b) Prediction errors for the SRGP models. The errors are all calculated based on 100 test points that were not used in fitting.

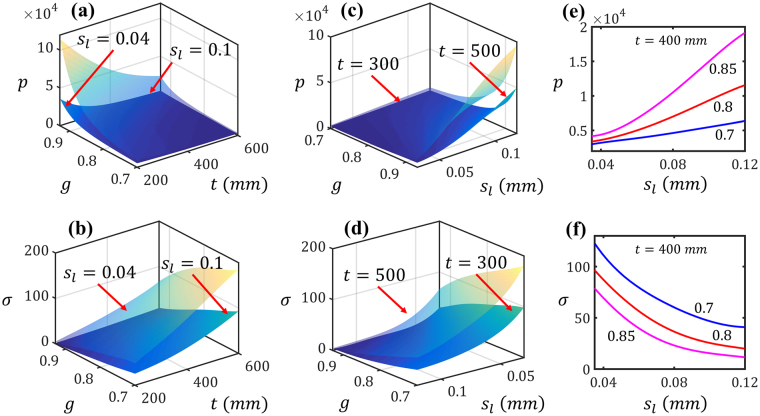

Based on the convergence studies, we can conclude that an MRGP model with at least 300 training data points can provide, on average, prediction errors smaller than 5%. Following this, we fitted an MRGP model in 28.6 seconds to the entire dataset (i.e., DOE of size 400) and employed it in the subsequent analyses in Sec. 2.2. Figure 4 illustrates how p and σ (and hence the scattering patterns) change as a function of tissue sample characteristics based on this MRGP model. The plots in the top and bottom rows of Fig. 4 demonstrate the effect of the inputs on, respectively, the transmitted power and the scaled Gaussian width. In Fig. 4(a) and (b), the scattering length is fixed to one of two values and the outputs are plotted versus t and g. In Fig. 4(c) and (d), t is fixed to either 300 μm or 500 μm and the outputs are plotted versus g and the scattering length. In Fig. 4(e) and (f), p and σ are plotted versus the scattering length for three values of g while having t fixed to 400 μm. In summary, these plots demonstrate that decreasing a sample’s g or scattering length, or increasing its thickness, would decrease the transmitted power while increasing the scattering (i.e., σ). Moreover, both p and σ change monotonically as a function of the inputs. This latter feature enables us to uniquely estimate g and the scattering length given t, p, and σ.

Figure 4.

Effect of the characteristics of tissue samples (i.e., t, g, and μ) on the characteristics of scattering patterns (i.e., p and σ): The effects on the transmitted power and the scaled Gaussian width are demonstrated in the figures in, respectively, the top and bottom rows. In (a) through (d), either t or μ is fixed and the outputs are plotted versus the other two inputs. In (e) and (f), p and σ are plotted versus μ for three values of g while having t fixed to 400 μm.

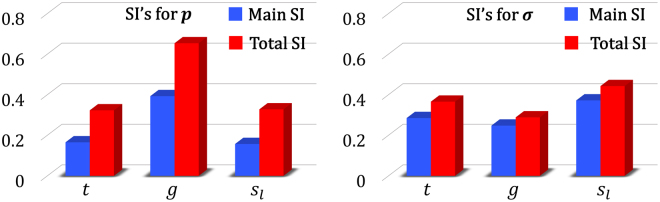

To quantify the relative importance of each input parameter on the two model outputs, we conducted global sensitivity analysis (SA) by calculating the Sobol indices (SI’s)79,80. As opposed to local SA methods, which are based on the gradient, SI’s are variance-based quantities and provide a global measure of variable importance by decomposing the output variance as a sum of the contributions of each input parameter or combinations thereof. Generally, two indices are calculated for each input parameter of the model: main SI and total SI81. While a main SI measures the first-order (i.e., additive) effect of an input on the output, the total SI measures both the first- and higher-order effects (i.e., including the interactions). SI’s are normalized quantities and are known to be efficient indicators of variable importance because they do not presume any specific form (e.g., linear, monotonic, etc.) for the input-output relation.

Using the MRGP model, we conducted quasi Monte Carlo simulations to calculate the main and total SI’s of the three inputs for each of the outputs. The results are summarized in Fig. 5 and indicate that all the inputs affect both outputs. While p is noticeably sensitive to g (and equally sensitive to t and the scattering length), σ is almost equally sensitive to all the inputs. It is also evident (as captured by the difference between the heights of the two bars for each input) that there is more interaction between the inputs in the case of p than σ.
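The calculation of main and total indices can be sketched with a sampling-based estimator. This illustration makes several assumptions: it uses pseudo-random rather than quasi-random samples, inputs scaled to the unit cube, the commonly used Saltelli-type estimator for the main index and the Jansen estimator for the total index, and a hypothetical toy function in place of the fitted MRGP.

```python
import numpy as np


def sobol_indices(model, n_inputs, n_samples=32768, seed=0):
    """Estimate main and total Sobol indices of a scalar model on the unit cube."""
    rng = np.random.default_rng(seed)
    a = rng.random((n_samples, n_inputs))
    b = rng.random((n_samples, n_inputs))
    f_a, f_b = model(a), model(b)
    # Centering the outputs reduces the estimator variance without adding bias.
    center = np.mean(np.concatenate([f_a, f_b]))
    f_a, f_b = f_a - center, f_b - center
    variance = np.var(np.concatenate([f_a, f_b]))
    main = np.empty(n_inputs)
    total = np.empty(n_inputs)
    for i in range(n_inputs):
        ab = a.copy()
        ab[:, i] = b[:, i]                 # matrix A with column i taken from B
        f_ab = model(ab) - center
        main[i] = np.mean(f_b * (f_ab - f_a)) / variance
        total[i] = 0.5 * np.mean((f_a - f_ab) ** 2) / variance
    return main, total


def toy_model(x):
    """Hypothetical additive test function: the third input has no effect."""
    return x[:, 0] + 2.0 * x[:, 1]


main_si, total_si = sobol_indices(toy_model, n_inputs=3)
print(np.round(main_si, 2), np.round(total_si, 2))
```

For this additive toy function the main and total indices coincide (about 0.2 and 0.8 for the first two inputs and zero for the third). A gap between the two indices, as reported for p, signals interactions between the inputs.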

Figure 5.

Results of the sensitivity analyses: Main and total sensitivity indices (SI’s) for the transmitted power (left) and the scaled Gaussian width (right).

Inverse Optimization: Estimating the Scattering Properties of a Tissue

Noting that the sample thickness can be controlled in an experiment, tissue characterization is achieved by finding the scattering parameters of the sample given how it scatters a pencil beam in a setup similar to that in Fig. 1. More formally, in our case, tissue characterization requires estimating g and the scattering length given p, σ, and the sample thickness t. Although in principle we can invert the MRGP model at any fixed t to map p and σ to g and the scattering length, this is rather cumbersome. Hence, we cast the problem as an optimization one by minimizing the cost function, F, defined as:

| 3 |

where F is the cost function which measures the difference between the experimental values of p and σ and the ones predicted by the MRGP model. We note that the model predictions are subject to the constraint that t equals the sample thickness.
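The inverse problem can be sketched with SciPy as shown below. Everything specific here is an assumption made for illustration: the cost is taken as the sum of squared relative differences in p and σ, the bounds are placeholders, the solver is `scipy.optimize.least_squares` rather than the MATLAB routine used in this work, and `toy_forward_model` is a hypothetical monotonic stand-in for the fitted MRGP.

```python
import numpy as np
from scipy.optimize import least_squares


def toy_forward_model(t, g, ell):
    """Hypothetical stand-in for the MRGP: maps (t, g, scattering length) to (p, sigma)."""
    p = np.exp(-t * (1.0 - g) / ell)
    sigma = (t / ell) * np.sqrt(1.0 - g)
    return p, sigma


def estimate_scattering(t, p_exp, sigma_exp,
                        lower=(0.5, 50.0), upper=(0.95, 200.0)):
    """Estimate (g, scattering length) at fixed thickness t; returns (estimate, F)."""
    lower, upper = np.asarray(lower), np.asarray(upper)

    def residuals(params):
        p_hat, sigma_hat = toy_forward_model(t, params[0], params[1])
        return np.array([(p_hat - p_exp) / p_exp,
                         (sigma_hat - sigma_exp) / sigma_exp])

    start = 0.5 * (lower + upper)
    result = least_squares(residuals, start, bounds=(lower, upper),
                           x_scale=upper - lower)
    return result.x, 2.0 * result.cost   # F = sum of squared relative residuals


# Synthetic "measurement" generated from known parameters (thickness in micrometers).
t_sample, g_true, ell_true = 400.0, 0.8, 100.0
p_meas, sigma_meas = toy_forward_model(t_sample, g_true, ell_true)
(g_hat, ell_hat), cost = estimate_scattering(t_sample, p_meas, sigma_meas)
print(g_hat, ell_hat, cost)
```

Because the thickness is held fixed, only two variables are searched, which keeps the optimization inexpensive. Scaling the residuals by the measured values keeps the two responses on a comparable footing.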

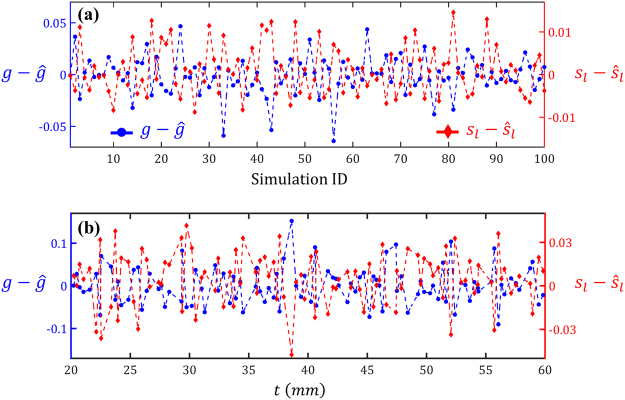

To test the accuracy of the fitted MRGP model in estimating the scattering parameters, we generated a space-filling test dataset of size 100 while ensuring that none of the test points coincided with the 400 training points used in fitting the MRGP. For each test point, the outputs (i.e., p and σ) and the sample thickness (i.e., t) were then used to estimate the remaining inputs (i.e., g and the scattering length) by minimizing Eq. 3. To solve Eq. 3, we used the command in the optimization toolbox of MATLAB®. Figure 6(a) illustrates the prediction errors of estimating g (on the left axis) and the scattering length (on the right axis) for the 100 test points. It is evident that the average errors are close to zero in estimating either g or the scattering length, indicating that the results are unbiased. In Fig. 6(b) the errors are plotted with respect to the sample thickness to investigate whether they are correlated with t. As no obvious pattern can be observed, it can be concluded that our procedure for estimating g and the scattering length is quite robust over the range where t is sampled in the training stage.

Figure 6.

Errors in predicting the scattering properties of 100 tissue samples: The scattering properties of most samples have been estimated quite accurately. In (a) and (b) the simulations are ordered with respect to, respectively, simulation number and the sample thickness.

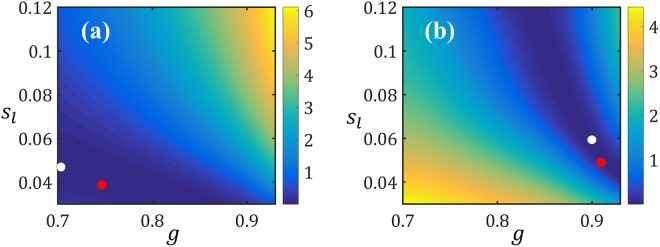

For further investigations, we normalize the errors and provide the summary statistics in Table 1. As quantified by the scaled RMSE, on average, the prediction errors are relatively small, especially for g. The maximum scaled errors are 9.1% (corresponding to simulation ID 56 in Fig. 6) and 18.1% (simulation ID 22) for g and the scattering length, respectively. Figure 7 demonstrates the contour plots of the cost function for these two simulations. As can be observed, in each case there are regions in the search space where the cost function F is approximately constant. In fact, the true optimum and the estimated solution (indicated, respectively, with white and red dots in Fig. 7) are on the loci where F is minimized. The existence of such loci can be explained by noting that g and the scattering length have similar effects on both responses, i.e., increasing (decreasing) either of them would decrease (increase) σ while increasing (decreasing) p.

Table 1.

Summary of prediction errors: The scaled RMSE (see Eq. 2) and scaled maximum error are calculated for the 100 data points in Fig. 6.

| Parameter | Scaled RMSE (%) | Scaled Max. Error (%) |
|---|---|---|
| g | 2.41 | 9.1 |
| Scattering length | 7.87 | 18.1 |

Figure 7.

Contours of F as a function of the optimization variables: The fixed variables are in (a) and in (b). The true and estimated optima are indicated with, respectively, white and red dots in each case.

Finally, we note that the inverse optimization cost is negligible (less than 10 seconds) in our case. The employed gradient-based optimization technique converges fast because (i) it uses the predictions from the MRGP model for both the response and its gradient (which are obtained almost instantaneously), and (ii) we have reduced the dimensionality of the problem from 100 × 100 (the number of pixels in each image) to two (i.e., p and σ).

Discussions

In our computational approach, the accuracy in predicting the scattering parameters of a turbid medium mainly depends on (i) the errors in Zemax simulations, (ii) the predictive power of p and σ in characterizing the scattering patterns, and (iii) the effectiveness of the supervised learner and the optimization procedure.

The inherent numerical errors in Zemax inevitably introduce some error into the training dataset. In addition, the number of launched rays in our Monte Carlo simulations, though having utilized the maximum capacity of Zemax, might be insufficient and hence introduce some inaccuracies. This latter source of error particularly affects samples which scatter the incoming LED beam more (e.g., thick samples with small g and scattering length) because once the number of segments per launched ray exceeds the software’s limit, the ray is discarded.

To reduce the problem dimensionality and enable the supervised learning process, the images of the scattering patterns (see, e.g., Fig. 2a) were characterized with two negatively correlated parameters, namely, p and σ. Since the images are not entirely symmetric and may not completely resemble a Gaussian pattern, employing only σ to capture their patterns’ spread will introduce some error. We have addressed this source of error, to some extent, by filtering the images with a Gaussian kernel (see, e.g., Fig. 2b) and enriching the radial distribution of the scattering patterns by a GP regressor (see Fig. 2c). Our choice of regressor, in particular, enabled automatic filtering of small to large amounts of noise through the so-called nugget parameter. Additionally, we leveraged the negative correlation between p and σ in the definition of σ to increase its sensitivity to the spreads. The supervised learning and inverse optimization procedures will, of course, benefit from reducing the simulation errors and finding parameters with more predictive power than σ.

As for the supervised learner, we illustrated that a multi-response Gaussian process can provide sufficient accuracy with a relatively small training dataset (see Fig. 3a). Learning both responses (i.e., p and σ) simultaneously, in fact, helped to better address the noise due to the negative correlation between the responses. As demonstrated in Fig. 3a, the MRGP model with 300 training samples can achieve, on average, errors smaller than 5%. Increasing the size of the training dataset would decrease the error but, due to the simulation errors, an RMSE of zero cannot be achieved. Additionally, sensitivity analyses were conducted by calculating the Sobol indices of the inputs (i.e., t, g, and $s_L$) using the MRGP model. As illustrated in Fig. 5, all the inputs are effective and affect both outputs, with p being noticeably sensitive to g and embodying more interactions between the inputs.

We cast the problem of determining the scattering parameters as an inverse optimization one where g and $s_L$ of a tissue sample were estimated given its thickness t and the corresponding scattering pattern (i.e., p and σ). In optimization parlance, the objective or cost function (defined in Eq. 3) achieves the target scattering pattern by searching for the two unknown inputs while constraining the sample thickness. As illustrated in Fig. 6 for 100 test cases, our optimization procedure provides an unbiased estimate for the scattering parameters with an error of roughly 5%. The inaccurate estimations in our optimization studies arise because g and $s_L$ have similar effects on p and σ. This is demonstrated in Fig. 7 where the local optima of the objective function create a locus and hence overestimating g or $s_L$ would result in underestimating the other and vice versa. To quantify this effect, we calculated Spearman’s rank-order correlation between the errors in g and $s_L$ for the 100 data points reported in Fig. 6 and found a strong negative correlation. Such a value (Spearman’s rank-order correlation is, in the absence of repeated data values, between −1 and 1) indicates that when g is underestimated, $s_L$ will be overestimated and vice versa.
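The rank-correlation check described above can be reproduced in a few lines. The sketch below uses synthetic, purely illustrative error vectors (not the 100 data points of Fig. 6) that are constructed to be negatively related, and computes Spearman's coefficient with `scipy.stats.spearmanr`.

```python
import numpy as np
from scipy.stats import spearmanr

# Synthetic estimation errors (illustrative only): an overestimate of g is
# paired with an underestimate of s_L, plus a small independent perturbation.
rng = np.random.default_rng(0)
err_g = rng.normal(0.0, 0.05, size=100)
err_s = -err_g + rng.normal(0.0, 0.01, size=100)

# Spearman's coefficient depends only on the ranks of the two error vectors.
rho, p_value = spearmanr(err_g, err_s)
print(f"Spearman rank correlation: {rho:.3f}")
```

A coefficient close to −1 signals the compensating behavior between the two estimates, whereas a value near zero would indicate that the two errors are unrelated.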

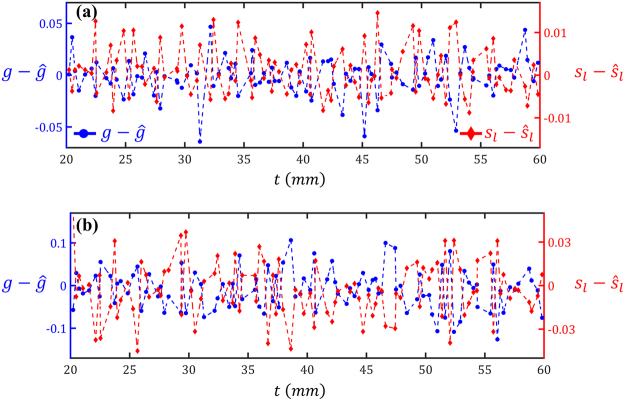

When coupling our method with experimental data, there will be some measurement errors primarily due to the noise of the camera sensor, insufficient rejection of the un-scattered waves, and the inaccuracy in determining the sample thickness. To address the dark current noise of the camera, the camera integration time should be increased. It is also favorable to increase the power of the LED to reduce the influence of the parasitic rays of the environment, but care must be taken to avoid saturating the camera or damaging the sample. To ensure proper rejection of the un-scattered rays, an image corresponding to the absolute pixel-by-pixel difference of the two images with and without the polarization filter should be obtained. Then, the maximum intensity on the difference image should be compared with that of the image obtained with the polarization filter.

Lastly, to minimize the errors due to sample thickness, samples with uniform and carefully measured thickness must be prepared. Inaccuracies in measuring the sample thickness, or the use of considerably non-uniform samples, will adversely affect the prediction results. To quantify the sensitivity of the predictions to inaccuracies associated with thickness, we repeated the inverse optimization process in Sec. 2.2 considering potential measurement errors of 10%. In particular, we redid the inverse optimization for the test dataset while employing thickness values with 10% difference from the true values (i.e., instead of t, 1.1t or 0.9t were used). The estimated values of g and $s_L$ were then compared to the true ones. As summarized in Table 2, the scaled RMSE’s have increased. To see whether the sample thickness has a correlation with the errors, in Fig. 8 the errors are plotted versus t. Although the errors are quite large in some cases (which is expected because the true t value is not used in the inverse optimization), the overall results are unbiased (i.e., the average errors are close to zero). These results indicate that, as long as the sample thickness is measured sufficiently accurately, the model can provide an unbiased estimate for g and $s_L$.

Table 2.

Summary of prediction errors while enforcing 10% error in thickness: The scaled RMSE’s (see Eq. 2) are calculated for the 100 test points while enforcing 10% difference between the sample thickness used in the simulations and the thickness used in the inverse optimization.

| | Errors with 10% Overestimation of t | Errors with 10% Underestimation of t |
|---|---|---|
| g | | |
| $s_L$ | | |

Figure 8.

Errors in predicting the scattering properties of 100 tissue samples while enforcing 10% error in thickness value: In (a) and (b) the thickness value used in the inverse optimization is, respectively, increased and decreased by 10% from the original value used in Zemax simulations. The simulations are ordered with respect to the sample thickness.

Lastly, we compare the accuracy of our approach to other methods. The reported errors in Fig. 3, Table 1, and Fig. 6 do not consider the errors that will be introduced upon experimental data collection. As explained below, our error estimates are comparable to those of the FDPM, SFDI, and IAD methods from a computational standpoint. It is noted that in all these methods (including ours) the dominating error will be associated with experimental data (once it is used in conjunction with simulations).

In FDPM, the model bias77 originates from the assumptions made for solving the diffusion equation (e.g., using a semi-infinite medium as opposed to a finite-size sample)82. Besides this, there are two other error sources in FDPM: (i) the preliminary error due to approximating light transport in tissues with the diffusion equation, which is estimated to be 5–10%83, and (ii) the error due to the quantum shot noise limit of the instrument, which depends on the configuration and components of the system. For a reasonable system comprising two detectors and one source at a fixed modulation frequency, the quantum shot noise limit results in about 2% error in estimating the scattering coefficient82. The scattering length, $s_L$, is the inverse of the scattering coefficient and will roughly have the same error. Similarly, the percentage error of g roughly equals that of the reduced scattering coefficient, defined as $\mu_s' = \mu_s(1 - g)$ where $\mu_s$ is the scattering coefficient. Considering only these two sources of noise, we can assume a total noise of around 10% for the FDPM technique. As for the SFDI technique, the diffusion approximation results in an overall reported error of around 3% for the reduced scattering coefficient9. In the IAD method, the prediction errors are sensitive to the input data. For instance, it is reported that with a 1% perturbation in the inputted transmission and reflection amounts, the relative error in estimating the scattering coefficient and anisotropy factor increases 10 and 4 times, respectively13.
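The statement that the scattering length inherits the error of the scattering coefficient follows from a first-order error propagation. The short derivation below assumes the notation $s_L$ for the scattering length and $\mu_s$ for the scattering coefficient, and holds only for small perturbations:

$$
s_L = \frac{1}{\mu_s}
\quad\Rightarrow\quad
\delta s_L \approx -\frac{\delta \mu_s}{\mu_s^{2}}
\quad\Rightarrow\quad
\left|\frac{\delta s_L}{s_L}\right| \approx \left|\frac{\delta \mu_s}{\mu_s}\right| .
$$

For example, a 2% relative error in $\mu_s$ translates into a relative error of approximately 2% in $s_L$.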

Conclusion

We have introduced a non-invasive method for computational characterization of the scattering parameters (i.e., the anisotropy factor and the scattering length) of a medium. The essence of our approach lies in finding a supervised learner that can predict the scattering pattern of a turbid medium given its thickness and scattering parameters. Once this supervised learner is found, we solve an inverse optimization problem to estimate the scattering parameters of any turbid sample given its thickness and the image of the scattered rays’ pattern. Additionally, our approach is computationally inexpensive because the majority of the cost lies in building the training dataset which is done once.

To the best of our knowledge, this is one of the simplest and most inexpensive methods of tissue characterization because, in practice, only a few basic and low-cost instruments such as an LED, an aperture, a polarizer, and a camera are required. Additionally, our analyses and results are independent of the wavelength of the LED and therefore the scattering parameters of many tissues can be estimated over a wide range of visible and infrared wavelengths. We note that our method assumes that the absorption is much weaker than the scattering and thus its effect on the output images is negligible. This assumption holds for some tissues including white brain matter, grey brain matter, cerebellum, and brainstem tissues where the scattering coefficient is more than 100 times larger than the absorption coefficient in most of the visible and in the reported near-infrared range27 (see Table 3 in ref.84 for more details). Measuring weak absorption of tissue with our method requires more intense data analysis and processing. However, we believe that this limit does not translate into impracticality of our method as there are methods22,23 which can only estimate g and $s_L$.

We plan to experimentally validate our approach and quantify the effect of measurement errors (due to, e.g., the noise of the camera sensor and insufficient rejection of the un-scattered waves) on estimating the scattering parameters. We believe that this method has the potential to facilitate the fabrication of tissue phantoms used for diagnostic and therapeutic purposes over a wide range of optical spectrum.

Methods

Gaussian Process Modeling

GP modeling has become the de facto supervised learning technique for fitting a response surface to training datasets of either costly physical experiments or expensive computer simulations due to its simplicity, flexibility, and accuracy74,85–88. The fundamental idea of GP modeling is to model the dependent variable, y, as a realization of a random process, Y, with inputs $\mathbf{x} = [x_1, \ldots, x_d]$ where $d$ is the number of inputs. The underlying regression model can be formally stated as:

$$Y(\mathbf{x}) = \sum_{i} \beta_i f_i(\mathbf{x}) + Z(\mathbf{x}) \tag{4}$$

where $f_i(\mathbf{x})$ are a set of known basis functions, $\beta_i$ are unknown coefficients, and $Z(\mathbf{x})$ is the random process. In Eq. 4, following the statistical conventions, the random variables/processes and their realizations are denoted with, respectively, upper and lower cases. Assuming $Z(\mathbf{x})$ is a zero-mean GP with covariance function of the form

$$\mathrm{cov}\left(Z(\mathbf{x}), Z(\mathbf{x}')\right) = s\, r(\mathbf{x}, \mathbf{x}') \tag{5}$$

between $Z(\mathbf{x})$ and $Z(\mathbf{x}')$, GP modeling essentially consists of estimating the β coefficients, process variance s, and parameters of the correlation function $r(\cdot)$. Often, the maximum likelihood estimation (MLE) method is used for this purpose89,90.

We implemented an in-house GP modeling code in Matlab® following the procedure outlined in ref.29. The so-called Gaussian correlation function was employed with an addition of a nugget parameter, δ, to address the possible noises:

$$r(\mathbf{x}, \mathbf{x}') = \exp\left\{-\sum_{i=1}^{d} \theta_i \left(x_i - x_i'\right)^2\right\} + \delta\, \mathbb{1}\{\mathbf{x} = \mathbf{x}'\} \tag{6}$$

where θ are the roughness parameters estimated via MLE. For noiseless datasets, δ is generally set to either a very small number (e.g., $10^{-8}$) to avoid numerical issues, or zero. In our work, we have used GP’s for two purposes: (i) to smooth out the radial distribution of the scattered rays and enrich the associated PDF for a better estimation of its standard deviation (see Fig. 2), and (ii) to fit a response surface for mapping (t, g, $s_L$) to (p, σ). We emphasize that the adaptive procedure of ref.29 allows δ in Eq. 6 to be adjusted to address negligible to large amounts of noise.
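To make the role of the roughness and nugget parameters concrete, the sketch below fits a single-response GP with a constant basis function by maximizing the profile likelihood. It is a simplified illustration in Python rather than the in-house Matlab code, it does not implement the adaptive nugget procedure of ref.29, and all function names, starting values, and bounds are assumptions.

```python
import numpy as np
from scipy.optimize import minimize


def corr_matrix(xa, xb, theta):
    """Gaussian correlation exp(-sum_i theta_i (x_i - x'_i)^2) between point sets."""
    diff = xa[:, None, :] - xb[None, :, :]
    return np.exp(-np.sum(theta * diff ** 2, axis=2))


def profile_terms(log_params, x, y):
    """Closed-form beta and process variance s for given log10(theta), log10(delta)."""
    d = x.shape[1]
    theta, delta = 10.0 ** log_params[:d], 10.0 ** log_params[d]
    r_mat = corr_matrix(x, x, theta) + delta * np.eye(len(y))  # nugget on diagonal
    chol = np.linalg.cholesky(r_mat)

    def solve(b):
        return np.linalg.solve(chol.T, np.linalg.solve(chol, b))

    ones = np.ones(len(y))
    beta = ones @ solve(y) / (ones @ solve(ones))   # constant basis, f(x) = 1
    resid = y - beta
    s = resid @ solve(resid) / len(y)               # process variance estimate
    return beta, s, chol, solve(resid)


def neg_log_likelihood(log_params, x, y):
    """Profile negative log-likelihood (up to additive constants)."""
    try:
        _, s, chol, _ = profile_terms(log_params, x, y)
    except np.linalg.LinAlgError:
        return 1e10                                  # penalize ill-conditioned cases
    return len(y) * np.log(s) + 2.0 * np.sum(np.log(np.diag(chol)))


def fit_gp(x, y):
    """Estimate log10(theta) and log10(delta) via bounded MLE."""
    d = x.shape[1]
    start = np.r_[np.ones(d), -2.0]
    bounds = [(-2.0, 3.0)] * d + [(-8.0, 0.0)]
    res = minimize(neg_log_likelihood, start, args=(x, y),
                   method="L-BFGS-B", bounds=bounds)
    return res.x


def predict(x_new, x, y, log_params):
    """Posterior mean: beta + r(x_new, X) R^{-1} (y - beta)."""
    d = x.shape[1]
    beta, _, _, weights = profile_terms(log_params, x, y)
    return beta + corr_matrix(x_new, x, 10.0 ** log_params[:d]) @ weights


# Noisy one-dimensional example: the nugget lets the model smooth the noise.
rng = np.random.default_rng(1)
x_train = np.linspace(0.0, 1.0, 40)[:, None]
y_train = np.sin(2 * np.pi * x_train[:, 0]) + 0.1 * rng.standard_normal(40)
params = fit_gp(x_train, y_train)
x_test = np.linspace(0.0, 1.0, 50)[:, None]
y_pred = predict(x_test, x_train, y_train, params)
rmse = np.sqrt(np.mean((y_pred - np.sin(2 * np.pi * x_test[:, 0])) ** 2))
print(f"log10(theta), log10(delta) = {params}, RMSE = {rmse:.3f}")
```

Working with the logarithms of θ and δ keeps the search well scaled across several orders of magnitude, which is a common choice in GP fitting but is not claimed to be the one made by the authors.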

Multiple studies have extended GP modeling to multi-output datasets. Of particular interest has been the work of Conti et al.91 where the essential idea is to concatenate the vector of responses (i.e., $\mathbf{y} = [\mathbf{y}_1^T, \ldots, \mathbf{y}_u^T]^T$ for u outputs) and model the covariance function as $c(\mathbf{x}, \mathbf{x}') = \boldsymbol{\Sigma} \otimes r(\mathbf{x}, \mathbf{x}')$ where $\boldsymbol{\Sigma}$ is the covariance matrix of the responses and ⊗ is the Kronecker product. Finally, it is noted that since we did not know a priori how p and σ change as a function of (t, g, $s_L$), a constant basis function was used (i.e., $f(\mathbf{x}) = 1$) in all our simulations.
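The separable covariance structure described above can be assembled directly with a Kronecker product. The sketch below is illustrative only: the sample locations, roughness parameters, and the 2 × 2 response covariance (with a negative off-diagonal term to mimic two negatively correlated responses) are made-up values, and the helper name `mrgp_covariance` is hypothetical.

```python
import numpy as np


def mrgp_covariance(x, theta, sigma_mat, delta=1e-8):
    """Covariance of the stacked responses: Sigma (u x u) Kronecker R (n x n)."""
    diff = x[:, None, :] - x[None, :, :]
    r_mat = np.exp(-np.sum(theta * diff ** 2, axis=2)) + delta * np.eye(len(x))
    # Stacking order: all samples of response 1, then all samples of response 2.
    return np.kron(sigma_mat, r_mat), r_mat


rng = np.random.default_rng(0)
x_train = rng.random((5, 3))                    # 5 samples of three inputs
theta = np.array([1.0, 1.0, 1.0])               # illustrative roughness parameters
sigma_mat = np.array([[1.0, -0.6],
                      [-0.6, 2.0]])             # illustrative response covariance

cov, r_mat = mrgp_covariance(x_train, theta, sigma_mat)
print(cov.shape)
```

Each n × n block of the result is the input correlation matrix scaled by the corresponding entry of the response covariance, so the cross-response block carries the (here negative) dependence between the two outputs.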

The computational cost of fitting each of the MRGP models used in the convergence study is summarized in Table 3. As can be observed, the costs are all small and increase with the size of the training dataset.

Table 3.

Computational cost of fitting MRGP models in our convergence study: As the number of the training samples increases, the fitting cost increases as well.

| | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 | Model 6 |
|---|---|---|---|---|---|---|
| Training Size | | | | | | |
| Fitting Cost (seconds) | | | | | | |

Sensitivity Analysis with Sobol Indices

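Sobol indices (SI’s) are variance-based measures for quantifying the global sensitivity of a model output to its inputs. For a model of the form $Y = f(X_1, \ldots, X_d)$, the main SI for the input $X_i$ is calculated as:

$$S_i = \frac{V_{X_i}\left(E_{\mathbf{X}_{\sim i}}\left(Y \mid X_i\right)\right)}{V(Y)} \tag{7}$$

where $V(Y)$ is the total variance of the output, $\mathbf{X}_{\sim i}$ denotes all the inputs except $X_i$, $V_{X_i}$ is the variance with respect to $X_i$, and $E_{\mathbf{X}_{\sim i}}(Y \mid X_i)$ is the expectation of Y for all the possible values of $\mathbf{X}_{\sim i}$ while keeping $X_i$ fixed. Using the law of total variance, one can show that the $S_i$’s are normalized quantities and vary between zero and one. It is noted that, similar to the above, the random variables and their realizations are denoted with, respectively, upper and lower cases.

The total SI for the input $X_i$ is calculated as:

$$S_{Ti} = \frac{E_{\mathbf{X}_{\sim i}}\left(V_{X_i}\left(Y \mid \mathbf{X}_{\sim i}\right)\right)}{V(Y)} = 1 - \frac{V_{\mathbf{X}_{\sim i}}\left(E_{X_i}\left(Y \mid \mathbf{X}_{\sim i}\right)\right)}{V(Y)} \tag{8}$$

which includes the contributions from all the terms in the variance decomposition that include $X_i$. Comparing Eqs 7 and 8, it is evident that $S_{Ti} \geq S_i$. Saltelli et al.81 provided numerical methods based on quasi Monte Carlo simulations for efficiently calculating both the main and total SI’s.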

Data availability

The datasets and statistical models generated during this study are available from the corresponding author.

Acknowledgements

The authors appreciate the anonymous reviewers for their insightful comments. Grant support from National Science Foundation (NSF EEC-1530734) is appreciated. We would like to acknowledge partial support from ARO award #W911NF-11-1-0390. In addition, the authors would like to thank the Digital Manufacturing and Design Innovation Institute (DMDII), a UI LABS collaboration, for its funding support to Ramin Bostanabad through award number 15-07-07.

Author Contributions

I.H. initiated the project and performed the Zemax simulations. R.B. conducted the design of experiments, supervised learning, sensitivity analyses, and optimization tasks. All authors discussed the results and contributed to the manuscript.

Competing Interests

The authors declare that they have no competing interests.

Footnotes

Iman Hassaninia and Ramin Bostanabad contributed equally to this work.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Chen C, et al. Preparation of a skin equivalent phantom with interior micron-scale vessel structures for optical imaging experiments. Bio. opt. exp. 2014;5:3140–3149. doi: 10.1364/BOE.5.003140.

- 2. Martelli F, et al. Phantoms for diffuse optical imaging based on totally absorbing objects, part 2: experimental implementation. J. bio. opt. 2014;19:076011–076011. doi: 10.1117/1.JBO.19.7.076011.

- 3. Krauter P, et al. Optical phantoms with adjustable subdiffusive scattering parameters. J. bio. opt. 2015;20:105008–105008. doi: 10.1117/1.JBO.20.10.105008.

- 4. Böcklin C, Baumann D, Stuker F, Fröhlich J. Mixing formula for tissue-mimicking silicone phantoms in the near infrared. J. Phy. D: Appl. Phys. 2015;48:105402. doi: 10.1088/0022-3727/48/10/105402.

- 5. Manoharan R, et al. Raman spectroscopy and fluorescence photon migration for breast cancer diagnosis and imaging. Photochem. Photobiol. 1998;67:15–22. doi: 10.1111/j.1751-1097.1998.tb05160.x.

- 6. Ng DC, et al. On-chip biofluorescence imaging inside a brain tissue phantom using a CMOS image sensor for in vivo brain imaging verification. Sens. and Act. B: Chem. 2006;119:262–274. doi: 10.1016/j.snb.2005.12.020.

- 7. Ley, S., Stadthalter, M., Link, D., Laqua, D. & Husar, P. In Engineering in Medicine and Biology Society (EMBC), 36th Annual International Conference of the IEEE. 1432–1435 (IEEE) (2014).

- 8. Tien LW, et al. Silk as a Multifunctional Biomaterial Substrate for Reduced Glial Scarring around Brain‐Penetrating Electrodes. Adv. Func. Mat. 2013;23:3185–3193. doi: 10.1002/adfm.201203716.

- 9. Cuccia, D. J., Bevilacqua, F., Durkin, A. J., Ayers, F. R. & Tromberg, B. J. Quantitation and mapping of tissue optical properties using modulated imaging. J. bio. opt. 14, 024012-024012-024013 (2009).

- 10. Cuccia DJ, Bevilacqua F, Durkin AJ, Tromberg BJ. Modulated imaging: quantitative analysis and tomography of turbid media in the spatial-frequency domain. Opt. lett. 2005;30:1354–1356. doi: 10.1364/OL.30.001354.

- 11. Tromberg BJ, et al. Non–invasive measurements of breast tissue optical properties using frequency–domain photon migration. Phil. Trans. Roy. Soc. London B: Bio. Sci. 1997;352:661–668. doi: 10.1098/rstb.1997.0047.

- 12. Pham TH, Coquoz O, Fishkin JB, Anderson E, Tromberg BJ. Broad bandwidth frequency domain instrument for quantitative tissue optical spectroscopy. Rev. Sci. Inst. 2000;71:2500–2513. doi: 10.1063/1.1150665.

- 13. Prahl SA, van Gemert MJ, Welch AJ. Determining the optical properties of turbid media by using the adding–doubling method. Appl. opt. 1993;32:559–568. doi: 10.1364/AO.32.000559.

- 14. Prahl, S. Everything I think you should know about Inverse Adding-Doubling. Oregon Medical Laser Center, St. Vincent Hospital, 1–74 (2011).

- 15. Haskell RC, et al. Boundary conditions for the diffusion equation in radiative transfer. JOSA. A. 1994;11:2727–2741. doi: 10.1364/JOSAA.11.002727.

- 16. Peters V, Wyman D, Patterson M, Frank G. Optical properties of normal and diseased human breast tissues in the visible and near infrared. Phys. in med. and bio. 1990;35:1317. doi: 10.1088/0031-9155/35/9/010.

- 17. Van der Zee, P., Essenpreis, M. & Delpy, D. T. In Proc. SPIE. 454–465.

- 18. Patterson MS, Wilson BC, Graff R. In vivo tests of the concept of photodynamic threshold dose in normal rat liver photosensitized by aluminum chlorosulphonated phthalocyanine. Photochem. Photobiol. 1990;51:343–349. doi: 10.1111/j.1751-1097.1990.tb01720.x.

- 19. Cornette WM, Shanks JG. Physically reasonable analytic expression for the single-scattering phase function. Appl. opt. 1992;31:3152–3160. doi: 10.1364/AO.31.003152.

- 20. Henyey LG, Greenstein JL. Diffuse radiation in the galaxy. The Astro. J. 1941;93:70–83. doi: 10.1086/144246.

- 21. Goodman, J. W. Introduction to Fourier optics. (Roberts and Company Publishers, 2005).

- 22. Lee M, et al. Label-free optical quantification of structural alterations in Alzheimer’s disease. Sci. Rep. 2016;6:31034. doi: 10.1038/srep31034.

- 23. Ding H, et al. Measuring the scattering parameters of tissues from quantitative phase imaging of thin slices. Opt. lett. 2011;36:2281–2283. doi: 10.1364/OL.36.002281.

- 24. Sobol IM. On quasi-Monte Carlo integrations. Math. Comput. Simul. 1998;47:103–112. doi: 10.1016/S0378-4754(98)00096-2.

- 25. Sobol’ IYM. On the distribution of points in a cube and the approximate evaluation of integrals. Zhurnal Vychislitel'noi Matematiki i Matematicheskoi Fiziki. 1967;7:784–802.

- 26. De Jode M. Monte Carlo simulations of light distributions in an embedded tumour model: studies of selectivity in photodynamic therapy. Lasers. Med. Sci. 2000;15:49–56. doi: 10.1007/s101030050047.

- 27. Yaroslavsky A, et al. Optical properties of selected native and coagulated human brain tissues in vitro in the visible and near infrared spectral range. Phys. Med. Biol. 2002;47:2059. doi: 10.1088/0031-9155/47/12/305.

- 28. Kim M, et al. Optical lens-microneedle array for percutaneous light delivery. Biomedical opt. expr. 2016;7:4220–4227. doi: 10.1364/BOE.7.004220.

- 29. Bostanabad, R., Kearney, T., Tao, S., Apley, D. W. & Chen, W. Leveraging the Nugget Parameter for Efficient Gaussian Process Modeling. Intl. J. Num. Meth in Eng. (2017).

- 30. Bostanabad R, Bui AT, Xie W, Apley DW, Chen W. Stochastic microstructure characterization and reconstruction via supervised learning. Acta. Mat. 2016;103:89–102. doi: 10.1016/j.actamat.2015.09.044.

- 31. Breneman CM, et al. Stalking the Materials Genome: A Data-Driven Approach to the Virtual Design of Nanostructured Polymers. Adv. Funct. Mater. 2013;23:5746–5752. doi: 10.1002/adfm.201301744.

- 32. Kalidindi SR, De Graef M. Materials Data Science: Current Status and Future Outlook. Ann. Rev. of Mat. Res. 2015;45:171–193. doi: 10.1146/annurev-matsci-070214-020844.

- 33. Bessa MA, et al. A framework for data-driven analysis of materials under uncertainty: Countering the curse of dimensionality. Comp. Meth. in Appl. Mech. and Eng. 2017;320:633–667. doi: 10.1016/j.cma.2017.03.037.

- 34. Liu R, Yabansu YC, Agrawal A, Kalidindi SR, Choudhary AN. Machine learning approaches for elastic localization linkages in high-contrast composite materials. Integ. Mat. and Manufac. Innov. 2015;4:13. doi: 10.1186/s40192-015-0042-z.

- 35. Le B, Yvonnet J, He QC. Computational homogenization of nonlinear elastic materials using neural networks. Int. J. Numeric. Meth. in Eng. 2015;104:1061–1084. doi: 10.1002/nme.4953.

- 36. Bostanabad R, Chen W, Apley DW. Characterization and reconstruction of 3D stochastic microstructures via supervised learning. J. Microsc. 2016;264:282–297. doi: 10.1111/jmi.12441.

- 37. Matouš K, Geers MG, Kouznetsova VG, Gillman A. A review of predictive nonlinear theories for multiscale modeling of heterogeneous materials. J. of Comp. Phys. 2017;330:192–220. doi: 10.1016/j.jcp.2016.10.070.

- 38. Xu HY, Liu RQ, Choudhary A, Chen W. A Machine Learning-Based Design Representation Method for Designing Heterogeneous Microstructures. J. Mech. Design. 2015;137:051403. doi: 10.1115/1.4029768.

- 39. Geers M, Yvonnet J. Multiscale modeling of microstructure–property relations. MRS. Bullet. 2016;41:610–616. doi: 10.1557/mrs.2016.165.

- 40. Xue D, et al. Accelerated search for materials with targeted properties by adaptive design. Nat. Commun. 2016;7:11241. doi: 10.1038/ncomms11241.

- 41. Curtarolo S, et al. The high-throughput highway to computational materials design. Nat. Mater. 2013;12:191–201. doi: 10.1038/nmat3568.

- 42. Kalinin SV, Sumpter BG, Archibald RK. Big-deep-smart data in imaging for guiding materials design. Nat. Mater. 2015;14:973–980. doi: 10.1038/nmat4395.

- 43. Balachandran PV, Young J, Lookman T, Rondinelli JM. Learning from data to design functional materials without inversion symmetry. Nat. Comm. 2017;8:14282. doi: 10.1038/ncomms14282.

- 44. Seko, A., Maekawa, T., Tsuda, K. & Tanaka, I. Machine learning with systematic density-functional theory calculations: Application to melting temperatures of single- and binary-component solids. Phys. Rev. B. 89, 10.1103/PhysRevB.89.054303 (2014).

- 45. Cang, R. J. & Ren, M. Y. Deep Network-Based Feature Extraction and Reconstruction of Complex Material Microstructures. Proc. ASME Int. Design Eng. Tech. Conf. and Comp. and Infor. in Eng. Conference, 2016, Vol 2b, 95–104 (2016).

- 46. Balachandran PV, Theiler J, Rondinelli JM, Lookman T. Materials Prediction via Classification Learning. Sci. Rep. 2015;5:13285. doi: 10.1038/srep13285.

- 47. Liu R, et al. A predictive machine learning approach for microstructure optimization and materials design. Sci. Rep. 2015;5:11551. doi: 10.1038/srep11551.

- 48. Pilania G, Wang C, Jiang X, Rajasekaran S, Ramprasad R. Accelerating materials property predictions using machine learning. Sci. Rep. 2013;3:2810. doi: 10.1038/srep02810.

- 49. Balachandran, P. V., Xue, D., Theiler, J., Hogden, J. & Lookman, T. Adaptive Strategies for Materials Design using Uncertainties. Sci. Rep. 6 (2016).

- 50. Kolb B, Lentz LC, Kolpak AM. Discovering charge density functionals and structure-property relationships with PROPhet: A general framework for coupling machine learning and first-principles methods. Sci. Rep. 2017;7:1192. doi: 10.1038/s41598-017-01251-z.

- 51. Wang, C., Yu, S., Chen, W. & Sun, C. Highly efficient light-trapping structure design inspired by natural evolution. Sci. Rep. 3 (2013).

- 52. Akil H, Martone ME, Van Essen DC. Challenges and Opportunities in Mining Neuroscience Data. Science. 2011;331:708–712. doi: 10.1126/science.1199305.

- 53. Bellazzi R, Zupan B. Predictive data mining in clinical medicine: Current issues and guidelines. Int. J. Med. Infor. 2008;77:81–97. doi: 10.1016/j.ijmedinf.2006.11.006.

- 54. Buonamici, S. et al. CCR7 signalling as an essential regulator of CNS infiltration in T-cell leukaemia. Nat. 459, 1000–1004, http://www.nature.com/nature/journal/v459/n7249/suppinfo/nature08020_S1.html (2009).

- 55. Hanash SM, Pitteri SJ, Faca VM. Mining the plasma proteome for cancer biomarkers. Nat. 2008;452:571–579. doi: 10.1038/nature06916.

- 56. Shlush LI, et al. Identification of pre-leukaemic haematopoietic stem cells in acute leukaemia. Nat. 2014;506:328–333. doi: 10.1038/nature13038.

- 57. Hehemann, J.-H. et al. Transfer of carbohydrate-active enzymes from marine bacteria to Japanese gut microbiota. Nat. 464, 908–912, http://www.nature.com/nature/journal/v464/n7290/suppinfo/nature08937_S1.html (2010).

- 58. Warnecke, F. et al. Metagenomic and functional analysis of hindgut microbiota of a wood-feeding higher termite. Nat. 450, 560–565, http://www.nature.com/nature/journal/v450/n7169/suppinfo/nature06269_S1.html (2007).

- 59. Vinayagam A, et al. Protein Complex–Based Analysis Framework for High-Throughput Data Sets. Sci. Sign. 2013;6:rs5–rs5. doi: 10.1126/scisignal.6288er5.

- 60. Besnard, J. et al. Automated design of ligands to polypharmacological profiles. Nat. 492, 215–220, http://www.nature.com/nature/journal/v492/n7428/abs/nature11691.html#supplementary-information (2012).

- 61. Tatonetti NP, Ye PP, Daneshjou R, Altman RB. Data-Driven Prediction of Drug Effects and Interactions. Sci. Trans. Med. 2012;4:125ra131–125ra131. doi: 10.1126/scitranslmed.3003377.

- 62. Cully, A., Clune, J., Tarapore, D. & Mouret, J.-B. Robots that can adapt like animals. Nat. 521, 503–507, 10.1038/nature14422, http://www.nature.com/nature/journal/v521/n7553/abs/nature14422.html#supplementary-information (2015).

- 63. Whelan, R. et al. Neuropsychosocial profiles of current and future adolescent alcohol misusers. Nat. 512, 185–189, 10.1038/nature13402, http://www.nature.com/nature/journal/v512/n7513/abs/nature13402.html#supplementary-information (2014).

- 64. Chavez, E., Conway, G., Ghil, M. & Sadler, M. An end-to-end assessment of extreme weather impacts on food security. Nat. Clim. Change. 5, 997–1001, 10.1038/nclimate2747, http://www.nature.com/nclimate/journal/v5/n11/abs/nclimate2747.html#supplementary-information (2015).

- 65. Yoon, C. E., O’Reilly, O., Bergen, K. J. & Beroza, G. C. Earthquake detection through computationally efficient similarity search. Sci. Adv. 1, 10.1126/sciadv.1501057 (2015).

- 66. Einav, L. & Levin, J. Economics in the age of big data. Science 346, 10.1126/science.1243089 (2014).

- 67. Warncke, D., Lewis, E., Lochmann, S. & Leahy, M. In J. of Phys.: Conf. Ser. 012047 (IOP Publishing).

- 68. Farrell, T. J., Patterson, M. S., Hayward, J. E., Wilson, B. C. & Beck, E. R. In OE/LASE'94. 117–128 (International Society for Optics and Photonics).

- 69. Pfefer TJ, et al. Reflectance-based determination of optical properties in highly attenuating tissue. J. Bio. Opt. 2003;8:206–215. doi: 10.1117/1.1559487.

- 70. Sharma D, Agrawal A, Matchette LS, Pfefer TJ. Evaluation of a fiberoptic-based system for measurement of optical properties in highly attenuating turbid media. Biomed. Eng. Online. 2006;5:49. doi: 10.1186/1475-925X-5-49.

- 71. Bruulsema J, et al. Correlation between blood glucose concentration in diabetics and noninvasively measured tissue optical scattering coefficient. Opt. lett. 1997;22:190–192. doi: 10.1364/OL.22.000190.

- 72. Zhang L, Wang Z, Zhou M. Determination of the optical coefficients of biological tissue by neural network. J. of Mod. Opt. 2010;57:1163–1170. doi: 10.1080/09500340.2010.500106.

- 73. Plumlee, M. & Apley, D. W. Lifted Brownian kriging models. Technometrics (2016).

- 74. Rasmussen, C. E. Gaussian processes for machine learning (2006).

- 75. Jin R, Du X, Chen W. The use of metamodeling techniques for optimization under uncertainty. Struc. and Multi. Opt. 2003;25:99–116. doi: 10.1007/s00158-002-0277-0.

- 76. Worley, B. Deterministic uncertainty analysis. (Oak Ridge National Lab., 1987).

- 77. Kennedy MC, O'Hagan A. Bayesian calibration of computer models. J. Roy. Stat. Soc: Ser. B. (Stat. Meth.) 2001;63:425–464. doi: 10.1111/1467-9868.00294.

- 78. Farhang-Mehr A, Azarm S. Bayesian meta-modelling of engineering design simulations: a sequential approach with adaptation to irregularities in the response behaviour. Int. J. Num. Meth. Eng. 2005;62:2104–2126. doi: 10.1002/nme.1261.

- 79. Sobol’ IYM. On sensitivity estimation for nonlinear mathematical models. Matematicheskoe Modelirovanie. 1990;2:112–118.

- 80. Sudret B. Global sensitivity analysis using polynomial chaos expansions. Rel. Eng. & Sys. Safe. 2008;93:964–979. doi: 10.1016/j.ress.2007.04.002.

- 81. Saltelli A, et al. Variance based sensitivity analysis of model output. Design and estimator for the total sensitivity index. Comp. Phys. Comm. 2010;181:259–270. doi: 10.1016/j.cpc.2009.09.018.

- 82. Pogue BW, Patterson MS. Error assessment of a wavelength tunable frequency domain system for noninvasive tissue spectroscopy. J. Biomed. Opt. 1996;1:311–323. doi: 10.1117/12.240679.

- 83. Fantini S, Franceschini MA, Gratton E. Semi-infinite-geometry boundary problem for light migration in highly scattering media: a frequency-domain study in the diffusion approximation. JOSA. B. 1994;11:2128–2138. doi: 10.1364/JOSAB.11.002128.

- 84. Cheong W-F, Prahl SA, Welch AJ. A review of the optical properties of biological tissues. IEEE. J. Quan. Elect. 1990;26:2166–2185. doi: 10.1109/3.64354.

- 85. Sacks, J., Welch, W. J., Mitchell, T. J. & Wynn, H. P. Design and analysis of computer experiments. Stat. Sci. 409–423 (1989).

- 86. MacDonald, B., Ranjan, P. & Chipman, H. GPfit: An R package for fitting a gaussian process model to deterministic simulator outputs. J. Stat. Soft. 64 (2015).

- 87. Ba, S. & Joseph, V. R. Composite Gaussian process models for emulating expensive functions. Ann. Appl. Stat. 1838–1860 (2012).

- 88. Zhang, L., Wang, K. & Chen, N. Monitoring wafer geometric quality using additive gaussian process model. IIE Transactions (2015).

- 89. Martin JD, Simpson TW. Use of kriging models to approximate deterministic computer models. AIAA. J. 2005;43:853–863. doi: 10.2514/1.8650.

- 90. Jin R, Chen W, Simpson TW. Comparative studies of metamodelling techniques under multiple modelling criteria. Struc. and Multi. Opt. 2001;23:1–13. doi: 10.1007/s00158-001-0160-4.

- 91. Conti, S., Gosling, J. P., Oakley, J. E. & O’hagan, A. Gaussian process emulation of dynamic computer codes. Biometrika asp028 (2009).
