Abstract

Objective

To provide metrics for quantifying the capability of hospitals and the degree of care regionalization.

Data Source

Administrative database covering more than 10 million hospital encounters during a 3‐year period (2012–2014) in Massachusetts.

Principal Findings

We calculated the condition‐specific probabilities of transfer for all acute care hospitals in Massachusetts and devised two new metrics, the Hospital Capability Index (HCI) and the Regionalization Index (RI), for analyzing hospital systems. The HCI had face validity, accurately differentiating academic, teaching, and community hospitals of varying size. Individual hospital capabilities were clearly revealed in “fingerprints” of their condition‐specific transfer behavior. The RI also performed well, with those of specific conditions successfully quantifying the concentration of care arising from regulatory and public health activity. The median RI of all conditions within the Massachusetts health care system was 0.21 (IQR, 0.13–0.36), with a long tail of conditions that were very highly regionalized. Application of the HCI and RI metrics together across the entire state identified the degree of interdependence among its hospitals.

Conclusions

Condition‐specific transfer activity, as captured in the HCI and RI, provides quantitative measures of hospital capability and regionalization of care.

Keywords: Health care organizations and systems; access, demand, utilization of services; hospital administrative data; network adequacy

Inter‐hospital transfer of patients is common throughout the developed world. Although transfer can frequently serve patient and provider preferences, it is first and foremost a mechanism for conducting sick patients to higher levels of care. In this way, inter‐hospital transfer supports the regionalization of services, which improves outcome in many conditions, including trauma (Gabbe et al. 2012), stroke (Albright et al. 2010), acute myocardial infarction (Topol and Kereiakes 2003), and cardiac arrest (Spaite et al. 2014).

Despite the critical importance of hospital transfer, practices typically result from complex processes that arise informally (Bosk, Veinot, and Iwashyna 2011). Many transfer practices originated from physicians’ patient‐sharing relationships (Landon et al. 2012), leaving hospitals embedded in complex networks of care over which they had little control (Lee et al. 2011). Modern hospital affiliations, fueled initially by economics and recently accelerated by incentives within the Affordable Care Act, are rapidly creating new care networks with transfer priorities that will supersede these older clinical relationships. Although federal agencies offer broad guidelines for network structure, the details of regulation are left to the states and quantitative tools for evaluating network adequacy are limited (Baicker and Levy 2015).

Unhindered transfer promotes efficient resource allocation by permitting some hospitals to focus on common conditions while transferring patients with uncommon conditions to other hospitals providing more specialized or complex care. In this manner, decentralized patient‐sharing decisions can, over time, yield networks of care that effectively move patients from less to more capable hospitals (Lomi et al. 2014). Yet while system‐wide transfer behavior may be a natural evolutionary process, tools are absent for assessing and comparing the resultant clinical capabilities of individual hospitals or their combinations. Common experience and intuition teach that not all hospitals are the same, but quantitative measures are necessary to address modern questions of cost variation (Seltz et al. 2016), network narrowness (Baicker and Levy 2015), emergency medical system design (Institute of Medicine 2007), and disaster preparedness (Farmer and Carlton 2006). We hypothesized that the frequency and patterns of patient transfer could fill this gap and serve as measures of both hospital capability and regionalization of care.

Methods

Data Source

We used the 2012–2014 Acute Hospital Case Mix dataset obtained from the Massachusetts (MA) Center for Health Information and Analysis (CHIA). The dataset contains high‐quality encounter‐level data submitted quarterly by all acute care hospitals (ACHs) in the state. Similar to data from the Healthcare Cost and Utilization Project (HCUP 2015), the Case Mix dataset encompasses all inpatient, outpatient, and emergency department hospital visits of a given fiscal year (FY). Each visit contains both demographic and clinical elements, such as patient insurance and zip code of residence, medical diagnoses and procedures, lengths of stay, synthetic patient identifiers, and hospital charges for individuals admitted to Massachusetts hospitals. Our work was reviewed and approved by the CHIA Data Release Board and by the Boston Children's Hospital Institutional Review Board.

Hospital and Patient Selection

We analyzed all inpatient and outpatient admissions and emergency department visits for the 2012–2014 fiscal years. We studied acute care general hospitals with active emergency departments (EDs), including multispecialty pediatric hospitals, and excluded facilities limited to a single specialty. For each encounter, we attributed a categorized diagnosis based on the primary diagnosis code using the Clinical Classifications Software, CCS (HCUP 2013). CCS aggregates the over 14,000 International Classification of Diseases, Ninth Revision, Clinical Modification (ICD‐9‐CM) diagnosis codes into 285 mutually exclusive diagnostic categories. Excluded from our analysis were mental health conditions (CCS codes 65–75, 7.1 percent of the total number of admissions and 19.0 percent of transfers), where the vast majority of patients are transferred outside of the acute care system, and rare conditions (codes with fewer than 30 statewide appearances per year). For some analyses, hospitals were aggregated into geographic regions or academic, teaching, community, and community‐disproportionate share hospital (DSH) cohorts as defined by CHIA.

Admissions, Transfers, and Probability of Completing Care

For these analyses, we define an admission as any inpatient or observation admission to an acute care hospital. Similarly, an ED visit refers to any and all emergency department encounters. A transfer was first identified according to the disposition status recorded for each admission or ED visit, including all sources and sites (i.e., ED to inpatient, ED to ED, inpatient to inpatient, and inpatient to ED). We then used synthetic patient identifiers and other fields to match each transfer to admissions and visits in different acute care hospitals. Only transfers between two different hospitals that can be matched by our algorithm are included in the analysis.
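The matching step can be illustrated with a minimal sketch. The text specifies only that synthetic patient identifiers "and other fields" were used, so everything else here is an assumption made for illustration: the record field names (`patient_id`, `hospital`, `start`, `end`, `disposition`), the `"transfer"` disposition label, and the one‐day window between leaving one hospital and arriving at the next are all hypothetical.

```python
from datetime import date

def match_transfers(encounters, max_gap_days=1):
    """Pair each encounter flagged as a transfer with a later encounter of the
    same synthetic patient at a DIFFERENT hospital.

    Assumption: a match requires arrival within `max_gap_days` of departure.
    The window and all field names are hypothetical, not taken from the paper.
    """
    by_patient = {}
    for enc in encounters:
        by_patient.setdefault(enc["patient_id"], []).append(enc)

    matched = []
    for enc in encounters:
        if enc["disposition"] != "transfer":
            continue
        for other in by_patient[enc["patient_id"]]:
            gap = (other["start"] - enc["end"]).days
            if other["hospital"] != enc["hospital"] and 0 <= gap <= max_gap_days:
                matched.append((enc["id"], other["id"]))
                break  # keep only the first qualifying destination
    return matched

encounters = [
    # Patient p1: transferred from hospital A and received at hospital B.
    {"id": 1, "patient_id": "p1", "hospital": "A", "start": date(2014, 1, 1),
     "end": date(2014, 1, 2), "disposition": "transfer"},
    {"id": 2, "patient_id": "p1", "hospital": "B", "start": date(2014, 1, 2),
     "end": date(2014, 1, 6), "disposition": "home"},
    # Patient p2: flagged as a transfer, but no receiving encounter is found.
    {"id": 3, "patient_id": "p2", "hospital": "A", "start": date(2014, 2, 1),
     "end": date(2014, 2, 1), "disposition": "transfer"},
    # Patient p3: next encounter is at the same hospital, so it is not counted.
    {"id": 4, "patient_id": "p3", "hospital": "A", "start": date(2014, 3, 1),
     "end": date(2014, 3, 1), "disposition": "transfer"},
    {"id": 5, "patient_id": "p3", "hospital": "A", "start": date(2014, 3, 1),
     "end": date(2014, 3, 4), "disposition": "home"},
]

matched_pairs = match_transfers(encounters)
print(matched_pairs)
```

In this toy input only the first patient yields a matched transfer; the unmatched and same‐hospital cases are dropped, mirroring the rule that only transfers between two different hospitals that can be matched are analyzed.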

In the destination hospital, patients may be admitted directly to the hospital or present to the ED. Those presenting to the ED may be treated and discharged home, admitted to that hospital, or transferred to another facility for further evaluation and care. Those discharged directly home from the first ED visited were presumed to have completed their care as outpatients and were excluded from further analysis. Those admitted to the hospital (either directly or through the ED) and those transferred to another facility were considered inpatients. Inpatients may complete their care in their original institution and be discharged home or require transfer for additional care elsewhere. For the purpose of our indices, care is considered complete when a patient has any discharge status that is not a transfer to another acute care hospital. Using this definition for each CCS diagnosis at each hospital, we calculated the fraction of inpatients that ultimately completed their care in that hospital. Mathematically, this probability of care completion (P) is the ratio of the number of admissions (A) to the sum of admissions (A) and transfers (T):

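$$P(\text{hospital}, \text{CCS}, \text{time}) = \frac{A(\text{hospital}, \text{CCS}, \text{time})}{A(\text{hospital}, \text{CCS}, \text{time}) + T(\text{hospital}, \text{CCS}, \text{time})} \tag{1}$$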

Note that the set of variables given by hospital, period of time, and CCS code is the minimal set of variables that can be used to define this probability (and all subsequent indices). However, additional variables can also be used to stratify this probability, including for instance, (i) patient's demographics such as age, ethnicity, gender, and other study groups; (ii) hospital characteristics such as hospital service areas, provider networks; and/or (iii) a subset of clinical conditions, among other possibilities and combinations of the characteristics discussed above. In this manner, all indices below may be easily calculated along multiple dimensions as needed to focus upon the particular question at hand. In the work presented here, we primarily aim to provide a global overview of the health care system in MA, so we present most results using the variables shown in equation (1). To illustrate the flexibility of stratification by factors such as population or hospital characteristics, we will present some results and analyses by region, age, and cohort of hospitals.
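As an illustration of the probability of care completion and its stratification, the following is a minimal sketch, not the analysis code used in this study. It assumes pre‐aggregated records with hypothetical field names (`hospital`, `ccs`, `age_group`, `admissions`, `transfers`), where `admissions` counts inpatients who completed care and `transfers` counts those sent to another acute care hospital.

```python
from collections import defaultdict

def completion_probability(records, keys=("hospital", "ccs")):
    """Return {group: A / (A + T)} for groups defined by `keys`.

    Passing extra keys (for example an age group) stratifies the probability.
    Groups with no inpatients at all are omitted from the result.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [admissions, transfers]
    for rec in records:
        group = tuple(rec[k] for k in keys)
        counts[group][0] += rec["admissions"]
        counts[group][1] += rec["transfers"]
    return {g: a / (a + t) for g, (a, t) in counts.items() if a + t > 0}

# Toy data; the numbers are invented for illustration only.
records = [
    {"hospital": "H1", "ccs": 122, "age_group": "adult", "admissions": 90, "transfers": 10},
    {"hospital": "H1", "ccs": 122, "age_group": "child", "admissions": 2, "transfers": 8},
    {"hospital": "H2", "ccs": 122, "age_group": "adult", "admissions": 50, "transfers": 0},
]

p = completion_probability(records)
p_by_age = completion_probability(records, keys=("hospital", "ccs", "age_group"))
print(p)
print(p_by_age)
```

With the minimal variable set, hospital H1 completes care for 92 of 110 inpatients with this condition; stratifying by age shows that the same hospital completes care for only 2 of 10 children, which the pooled figure hides.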

Hospital Capability

Highly capable hospitals will complete the care of nearly all patients presenting for assistance. Less capable hospitals will stabilize patients to the best of their ability and transfer those that require additional care (EMTALA 1985). We reasoned that the overall capability of each hospital might be described by its average probability of completing the care of patients across all conditions. For a given hospital, therefore, we defined the Hospital Capability Index (HCI) as the institution's weighted average probability of completing care across all CCS codes:

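$$\text{HCI}(\text{hospital}, \text{time}) = \frac{\sum_{\text{CCS}} w(\text{hospital}, \text{CCS}, \text{time})\, P(\text{hospital}, \text{CCS}, \text{time})}{\sum_{\text{CCS}} w(\text{hospital}, \text{CCS}, \text{time})} \tag{2}$$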

Note that the weight w(hospital, CCS, time) can be defined in multiple ways depending on the focus of analysis. If that focus is understanding the capability of a hospital in serving the most common conditions it sees, the weight could be given by the normalized frequency of conditions at the hospital (see Hospital Demand Capability). If that focus is the overall capability of a hospital to treat all medical conditions, each condition is given the same weight, that is, w(hospital, CCS, time) = 1, and equation (2) can be rewritten as follows:

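$$\text{HCI}(\text{hospital}, \text{time}) = \frac{1}{N_{\text{CCS}}} \sum_{\text{CCS}} P(\text{hospital}, \text{CCS}, \text{time}) \tag{3}$$

where $N_{\text{CCS}}$ is the number of CCS codes included in the analysis.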

According to this measure, a highly capable hospital will complete the care of most patients with a wide range of clinical conditions, transfer very few, and carry an HCI close to 1 (i.e., the average probability of care completion for all conditions approaches 1). Conversely, a hospital with very low capability may transfer all of its sick patients, admit no one, and carry an HCI of zero. For conditions that are never encountered in the 3‐year period (i.e., both numerator and denominator in equation (2) are zero), we chose to also assign a zero value, reasoning that clinical experience and capability with that condition are limited. To avoid sampling bias in this regard, we compared multiyear and single‐year measures for each hospital.
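The weighted and equal‐weight forms of the HCI, together with the zero assignment for conditions never encountered, can be sketched as follows. This is an illustrative sketch under stated assumptions: the function name, the integer condition codes, and the input layout (a mapping from CCS code to probability of care completion) are hypothetical.

```python
def hospital_capability_index(p_by_ccs, all_ccs, weights=None):
    """Weighted average probability of care completion over `all_ccs`.

    p_by_ccs : {ccs: P} for the conditions the hospital actually encountered.
    all_ccs  : every CCS code in the analysis; codes missing from p_by_ccs
               contribute P = 0, following the convention described above.
    weights  : optional {ccs: w}; if omitted, every condition has weight 1.
    """
    if weights is None:
        weights = {c: 1.0 for c in all_ccs}
    total_weight = sum(weights[c] for c in all_ccs)
    weighted_sum = sum(weights[c] * p_by_ccs.get(c, 0.0) for c in all_ccs)
    return weighted_sum / total_weight

all_ccs = [1, 2, 3, 4]
p_by_ccs = {1: 1.0, 2: 0.5, 3: 0.9}  # condition 4 was never encountered

hci_equal = hospital_capability_index(p_by_ccs, all_ccs)
hci_weighted = hospital_capability_index(
    p_by_ccs, all_ccs, weights={1: 3.0, 2: 1.0, 3: 0.0, 4: 0.0}
)
print(hci_equal, hci_weighted)
```

In this toy case the equal‐weight index is (1.0 + 0.5 + 0.9 + 0) / 4 = 0.6, with the unseen condition pulling the value down, while weights concentrated on the first two conditions give (3 × 1.0 + 1 × 0.5) / 4 = 0.875.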

Hospital Demand Capability

As noted above, the HCI gives information concerning the range of conditions for which a hospital routinely completes care, but it treats all conditions equally and includes some conditions that a hospital may never see. For example, patients with unconventional conditions may self‐select to specialty centers or those in different regions may have different disease patterns. To complement the HCI, therefore, we also define the Hospital Demand Capability Index (HDCI) as the average probability of completing care across all CCS codes that are seen at an institution:

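$$\text{HDCI}(\text{hospital}, \text{time}) = \sum_{\text{CCS}} f(\text{hospital}, \text{CCS}, \text{time})\, P(\text{hospital}, \text{CCS}, \text{time}) \tag{4}$$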

where the frequency of the CCS conditions seen at the hospital is given by the following:

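$$f(\text{hospital}, \text{CCS}, \text{time}) = \frac{A(\text{hospital}, \text{CCS}, \text{time}) + T(\text{hospital}, \text{CCS}, \text{time})}{\sum_{\text{CCS}} \left[ A(\text{hospital}, \text{CCS}, \text{time}) + T(\text{hospital}, \text{CCS}, \text{time}) \right]} \tag{5}$$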

Note that demand capability corresponds to the limit of equation (2) where the weights are given by the frequency of the CCS codes present at the hospital, normalized to the total number of patients seen at the hospital during the period. The HDCI quantifies the ability of a hospital to complete the care of the patients it encounters and it corresponds to the percentage of patients that completed their care at the institution across all conditions. While the HCI will approach unity only for hospitals capable of managing a wide range of clinical conditions, HDCI will approach unity when a hospital completes the care of those who come to it. That is, hospitals with high‐demand capability may transfer very few patients but actually manage only a limited set of conditions. A specialty hospital, for example, may have a very high HDCI (it completes the care of the patients it sees) but have a very low HCI (it only cares for a limited set of conditions). Taken together, the HCI and HDCI quantify different aspects of hospital activity, addressing different questions while giving a fuller representation of capability than either alone.
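The contrast between the two indices for a specialty hospital can be made concrete with a small sketch. The counts, condition codes, and function names below are invented for illustration; the frequency weight is taken to be each condition's share of the hospital's inpatients, as described in the text.

```python
def hdci(counts):
    """Frequency-weighted completion probability.

    counts: {ccs: (admissions, transfers)} for conditions seen at the hospital.
    Each condition is weighted by its share of all inpatients at the hospital,
    so the result equals total admissions / total (admissions + transfers).
    """
    total = sum(a + t for a, t in counts.values())
    if total == 0:
        return 0.0
    return sum(((a + t) / total) * (a / (a + t))
               for a, t in counts.values() if a + t > 0)

def hci_equal_weight(counts, all_ccs):
    """Equal-weight average over all conditions; unseen conditions count as 0."""
    probs = {c: a / (a + t) for c, (a, t) in counts.items() if a + t > 0}
    return sum(probs.get(c, 0.0) for c in all_ccs) / len(all_ccs)

# Hypothetical specialty hospital: sees only 2 of the 10 conditions analyzed.
all_ccs = list(range(101, 111))
specialty = {101: (95, 5), 102: (98, 2)}

specialty_hdci = hdci(specialty)
specialty_hci = hci_equal_weight(specialty, all_ccs)
print(specialty_hdci, specialty_hci)
```

Here the hospital completes care for 193 of its 200 inpatients (HDCI = 0.965), yet its equal‐weight HCI is only (0.95 + 0.98) / 10 = 0.193 because eight conditions are never seen.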

Regionalization

Across a region, hospitals may or may not vary in capability. In some regions, there may be a small number of highly capable hospitals, each completely serving the needs of its local population. In other regions, there may be many less capable hospitals, serving routine local needs and transferring their sickest patients to one or more highly capable hospitals. Regionalization, therefore, may be inferred from the number of admissions and the average probability of transfer among hospitals within a region. That is, if transfers are infrequent and definitive care is available in many institutions, there is little regionalization. If transfers are frequent, and definitive care is only available in a few institutions, there is substantial regionalization. We therefore define the Regionalization Index (RI) for a single condition or collection of conditions using the average probability of transfer over all hospitals in the system:

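$$\text{RI}(\text{CCS}, \text{time}) = \frac{\sum_{\text{hospital}} \left[ 1 - P(\text{hospital}, \text{CCS}, \text{time}) \right]}{N_{\text{hospitals}}} \tag{6}$$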

The RI, then, describes the degree to which definitive care is concentrated within a system for a particular condition or population. It captures concentration arising both from transfer and from self‐selection. In this expression, the denominator represents the total number of active hospitals within a system. A hypothetical condition with which patients present to all hospitals and complete their care without transfer will have an RI equal to 0 (not regionalized). A condition that is frequently transferred and/or seen in only a few hospitals will have an RI that approaches 1 (i.e., highly regionalized).
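The limiting cases described above can be checked with a short sketch. It assumes that the RI is the mean of (1 − P) over all active hospitals and that a hospital which never encounters the condition contributes P = 0, consistent with the convention used for the HCI; the function and hospital names are hypothetical.

```python
def regionalization_index(p_by_hospital, hospitals):
    """Average probability that care is NOT completed, over all active hospitals.

    p_by_hospital: {hospital: P} for hospitals that encountered the condition.
    hospitals    : all active hospitals in the system; those missing from
                   p_by_hospital are treated as P = 0 (assumption).
    """
    return sum(1.0 - p_by_hospital.get(h, 0.0) for h in hospitals) / len(hospitals)

hospitals = ["H1", "H2", "H3", "H4"]

# Seen everywhere, never transferred: not regionalized.
ri_everywhere = regionalization_index({h: 1.0 for h in hospitals}, hospitals)

# Concentrated by self-selection: only H1 ever sees the condition.
ri_self_selected = regionalization_index({"H1": 1.0}, hospitals)

# Concentrated by transfer: seen everywhere, but only H1 completes care.
ri_transferred = regionalization_index(
    {"H1": 1.0, "H2": 0.0, "H3": 0.0, "H4": 0.0}, hospitals
)
print(ri_everywhere, ri_self_selected, ri_transferred)
```

The first case returns 0, and the last two both return 0.75: under this definition the index alone does not distinguish concentration by self‐selection from concentration by transfer, which is why it is informative to examine it alongside the fraction of hospitals encountering each condition.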

For statistical robustness, all calculations are conducted within each of the three study years and then all indices are reported as 3‐year averages. All analyses were performed using Python 3.5, an open‐source programming language (Perkel 2015), and the IPython interactive environment (Perez and Granger 2007). We calculated HCIs, HDCIs, and RIs, for all hospitals, hospital cohorts, and all conditions, reporting descriptive statistics for each. Simple correlations were calculated by linear regression. Comparisons between cohorts and regions were performed using the Kruskal–Wallis H‐test, and rank tests were performed with the Wilcoxon signed‐rank test.

Results

During the study period, there were 66 active acute care hospitals in Massachusetts that satisfied our inclusion criteria. Our complete dataset included 10,559,761 encounters (7,531,799 ED visits, 2,421,492 inpatient admissions, and 606,470 outpatient observation admissions), of which 81.9 percent (8,644,520) had synthetic patient identifiers that allowed transfer verification. After exclusion of single‐specialty facilities, ED visits discharged directly home, mental health visits, transfers between facilities of the same hospital, and infrequent conditions, the final dataset contained 2,171,571 admissions and 125,513 transfers.

Hospital Capability

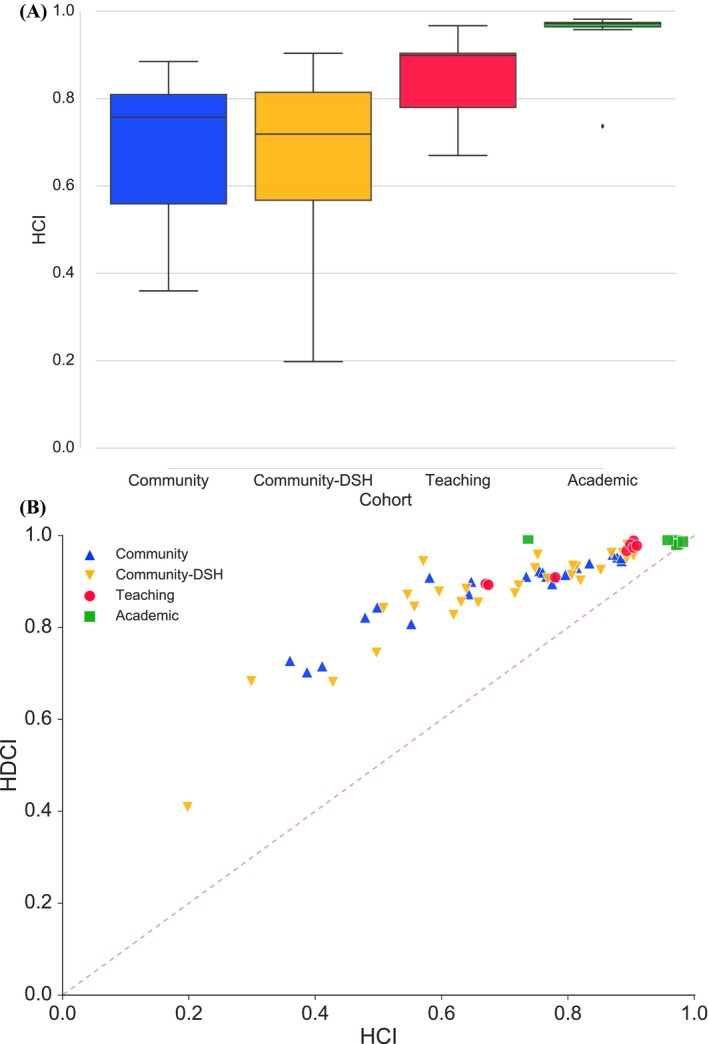

Overall, the HCI had face validity. The five most capable hospitals, all widely recognized as important referral centers, returned indices of 0.97–0.98. The five least capable hospitals, all small institutions serving limited populations, returned indices of 0.20–0.41. The median overall capability of hospitals was 0.73 (IQR: 0.62–0.88) with clear and significant differences among hospital cohorts. As illustrated in Figure 1A, community and community‐DSH hospitals returned similar capabilities (median 0.76 and 0.72, respectively), while teaching and academic hospitals returned much higher capabilities (median 0.90 and 0.97, respectively). The differences between academic and all other cohorts and teaching and all other cohorts were significant (p < .05). Variation within cohorts was particularly evident among community hospitals with an absolute range of 0.20–0.90. In this group, the least capable hospital transferred more than 59 percent of patients to complete their care through admission or evaluation elsewhere.

Figure 1.

(A) Hospital Capability Index (HCI) for Hospital Cohorts within the MA Health Care System. (B) Hospital Capabilities (HCI) and Demand Capabilities (HDCI) for the 66 Acute Care Hospitals in MA [Color figure can be viewed at wileyonlinelibrary.com]

The HDCI gave similar information. Recall that demand capability refers to a hospital's ability to meet the needs of the patients who come to it. HDCI attempts to capture this by corresponding to the percentage of arriving patients that complete their care without transfer. Figure 1B demonstrates that demand capability is very closely related to overall capability (R² = .89). Statewide hospital rankings for the HCI and HDCI were also similar (p < .01). As expected, median HDCIs were much higher than HCIs for community and community‐DSH hospitals (0.91 vs. 0.76 and 0.89 vs. 0.72, respectively), suggesting that their capabilities meet most of their patients’ needs. However, all academic and most teaching hospitals returned demand capabilities near 1, while only one large community hospital did so. Thus, nearly all hospitals in the Massachusetts system relied heavily on transfer to academic medical centers to meet the needs of their patients.

Capability “Fingerprints”

Among hospitals with similar overall capability indices, there were clear differences in capabilities with respect to individual conditions. Relative strengths, weaknesses, and gaps were readily apparent when condition‐specific capabilities were arranged for side‐by‐side comparison. Figure 2 displays five such capability “fingerprints” for a representative high‐capability hospital, a representative low‐capability hospital, and three average‐capability hospitals of similar size and volume.

Figure 2.

Hospital Capabilities Fingerprint Depicting the Probability of Completing Care for All CCS Codes (HCI = Hospital Capability Index, described in Methods; CCS descriptions available at https://www.hcup-us.ahrq.gov/toolssoftware/ccs/ccs.jsp) [Color figure can be viewed at wileyonlinelibrary.com]

As HCIs are plotted for all CCS codes and then aligned for comparison, gaps represent entire conditions for which patients were never seen or always transferred. It is immediately obvious that high‐capability hospitals have few gaps, low‐capability hospitals have many, and increasing HCI reflects this filling. More interesting, however, was that hospitals of similar size and volume frequently exhibited very different capabilities with respect to specific conditions. It was not the case that all hospitals of a given size predictably completed the care of a similar set of conditions. Rather, even hospitals with similar HCIs frequently differed in their ability to complete the care of their patients. The practical meaning of this is that individuals presenting to their local hospital for care may, depending on their problem, have very different experiences.

Sample Size and Geography

We assigned a capability of zero whenever a condition was not encountered by a hospital during our 3‐year sample. To explore the impact of time sample size on HCI, we compared capability indices for each hospital as calculated from single‐year samples (FY2014) to averages calculated from multiyear samples (FY2012–2014). Overall, capability indices were robust to sample size with median values increasing just 5 percent in the larger sample. As might be expected, sample size‐related differences were larger among low‐volume than high‐volume hospitals, but the relative capability rankings of individual hospitals were unaffected (p < .01).

To understand the impact of hospital location on our results, we also compared the capabilities of hospitals in the different regions of the state according to CHIA definitions (Cape and Islands, Central MA, Metro Boston, Metro South, Metro West, Northeastern MA, Southcoast, and Western MA). While we observed strong variations within the regions, the differences among them were not statistically significant (p = .59).

Regionalization Index

The median RI for all conditions within the Massachusetts acute care hospital system for the FY2012–2014 period was 0.21 (IQR: 0.13–0.36), including a long tail of highly regionalized conditions (Figure 3A). As might be expected, some conditions were very highly regionalized and others were completely unregionalized. Overall, only 25 percent of CCS codes returned an RI above 0.36 and only 5 percent above 0.67. For example, Gastritis and duodenitis and Asthma, exemplifying extremely common conditions that are routinely cared for in all hospitals, returned regionalization indices close to zero (both 0.03). Uncommon conditions that benefit from specialized care (e.g., Cystic fibrosis and Other central nervous system infection and poliomyelitis) returned high regionalization indices (0.87 and 0.67, respectively), suggesting that definitive care was rendered in only a few institutions. For Cystic fibrosis, with a low transfer rate of 19/1012 or 0.019, this was mainly due to direct referral. For Other central nervous system infection and poliomyelitis, with a high transfer rate of 105/650 or 0.16, this was in significant part due to transfer. Conditions that are intentionally regionalized, such as trauma and congenital heart disease, were also identified by the index (e.g., Spinal cord injury, 0.66; Cardiac and circulatory congenital anomalies, 0.62). Additional representative conditions are described in Table 1, and a complete list is included in the Supplementary Materials.

Figure 3.

(A) Distribution of Regionalization Indices (RI) for the 231 CCS Codes in the Years 2012–2014. (B) Regionalization Index (RI) versus Fraction of Hospitals Encountering Each of the 231 CCS Codes Seen in MA from 2012 to 2014; Annotated CCS Conditions Are Discussed in the Text [Color figure can be viewed at wileyonlinelibrary.com]

Table 1.

Selected CCS Conditions with High, Medium, and Low RI and the Fraction of Acute Care Hospitals (ACH) That Encountered This Condition

| CCS Condition | Admissions | Transfers | RI | Fraction of ACHs |
|---|---|---|---|---|
| Cystic fibrosis | 1,012 | 19 | 0.87 | 0.17 |
| Rehabilitation care, fitting of prostheses, and adjustment of devices | 6,774 | 163 | 0.74 | 0.27 |
| Spinal cord injury | 544 | 113 | 0.66 | 0.58 |
| Cardiac and circulatory congenital anomalies | 2,374 | 40 | 0.60 | 0.45 |
| Burns | 1,271 | 341 | 0.57 | 0.84 |
| Acute myocardial infarction | 29,624 | 7,346 | 0.44 | 0.99 |
| Open wounds of head, neck, and trunk | 2,500 | 626 | 0.39 | 0.98 |
| Chronic kidney disease | 1,760 | 85 | 0.28 | 0.82 |
| Peritonitis and intestinal abscess | 1,818 | 174 | 0.23 | 0.93 |
| Fracture of upper limb | 9,443 | 1,033 | 0.18 | 1.00 |
| Influenza | 5,302 | 69 | 0.10 | 0.92 |
| Appendicitis and other appendiceal conditions | 16,434 | 416 | 0.07 | 1.00 |
| Pneumonia | 59,109 | 2,378 | 0.06 | 1.00 |
| Asthma | 28,023 | 575 | 0.03 | 1.00 |

Public health regulations that effectively force regionalization were also captured by the index. In Massachusetts, for example, inpatient care of children under age 15 years is limited to hospitals with specifically licensed pediatric beds. As a result, the all‐condition median RI for patients under age 15 was 0.87 (IQR: 0.81–0.92), while that of patients 15 years of age and over was 0.21 (IQR: 0.13–0.36; p < .05). Similarly, invasive cardiac services, including open‐heart surgery and cardiac catheterization, are subject to strict determination of need limitations. As a result, CCS codes related to the most serious cardiac conditions also returned very high regionalization indices (e.g., Cardiac arrest and ventricular fibrillation = 0.64). Indeed, all conditions specifically covered by Massachusetts Department of Public Health point‐of‐entry policies (e.g., trauma, stroke, ST‐segment elevation myocardial infarction, burns, pediatrics, and obstetrics) were identified by the index.

When RI is plotted against the fraction of hospitals encountering a condition, a fingerprint of the system emerges. Figure 3B shows the RI of all 231 CCS conditions treated in MA versus the fraction of hospitals that encountered each condition. For presentation, corresponding Multilevel CCS aggregation is used following HCUP definitions. From this perspective, conditions at the top are being seen everywhere (fraction of hospitals encountering the condition = 1) and conditions falling on the dashed line are completing their care in the first hospital to which they present. Highly regionalized conditions falling near the dashed line are regionalized by demand (self‐selection, etc.), while highly regionalized conditions falling off the dashed line are being transferred to varying degrees. A quick glance confirms that cystic fibrosis and cancer care are highly regionalized in Massachusetts, yet most patients present to the centers capable of caring for them. Head trauma, acute myocardial infarction, and cardiac arrest, in contrast, are seen everywhere but definitively cared for in only a few centers. Since these plots were created with the minimal variable set, additional pictures can be obtained through incorporation of other variables (age, sex, ethnicity, insurance status, etc.) or their combination. Pictures of individual networks or health service areas may also be generated by limiting the number of included hospitals. In this manner, network adequacy with respect to specific populations and conditions could be placed side by side for comparison.
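The distance of a condition from the dashed line can be approximated directly from the values reported in Table 1. The sketch below rests on an assumption that is not stated explicitly in the text: that the dashed line corresponds to RI = 1 − (fraction of hospitals encountering the condition), which is the value the index takes when every encountering hospital completes care and every other hospital contributes a completion probability of zero. The function name and the two‐part split are illustrative only.

```python
# (condition, RI, fraction of ACHs encountering it), copied from Table 1.
table1 = [
    ("Cystic fibrosis", 0.87, 0.17),
    ("Spinal cord injury", 0.66, 0.58),
    ("Burns", 0.57, 0.84),
    ("Acute myocardial infarction", 0.44, 0.99),
    ("Asthma", 0.03, 1.00),
]

def split_regionalization(ri, fraction):
    """Split RI into the part implied by where patients present (the assumed
    dashed line, 1 - fraction) and the remainder attributable to transfer."""
    by_presentation = 1.0 - fraction
    by_transfer = ri - by_presentation
    return round(by_presentation, 2), round(by_transfer, 2)

for name, ri, fraction in table1:
    presentation, transfer = split_regionalization(ri, fraction)
    print(f"{name}: presentation={presentation:.2f}, transfer={transfer:.2f}")
```

Under this reading, nearly all of the regionalization of Cystic fibrosis (0.83 of 0.87) comes from where patients present, whereas nearly all of that for Acute myocardial infarction (0.43 of 0.44) comes from transfer, in line with the qualitative description of Figure 3B.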

Discussion

All hospitals are not equal, and complex transfer networks have naturally evolved to conduct sick patients to the care they need. As new networks are forged, deeper understanding of hospital capability and systemic regionalization is necessary to maintain network adequacy and access to care. Here, we propose simple metrics that employ transfer frequency as a window on both. We measure a hospital's capability as the condition‐specific probability that new patients will stay until their care is complete. Regionalization, conversely, is a measure of the degree to which definitive care is concentrated within a few centers. The capability metric (HCI) may be used to evaluate the abilities of individual hospitals or groups of hospitals to provide definitive care for specific conditions (e.g., heart disease) or to specific populations (e.g., children). The regionalization metric (RI) may also be calculated with respect to conditions or populations in order to better understand how their care is concentrated. In this manner, different conditions, hospitals, and systems may be compared, gaps identified, and changes monitored over time.

We studied the Massachusetts acute care hospital system to test the feasibility of these new metrics. We found that the HCI accurately described a continuum of hospitals with the lowest quartile containing small community hospitals and the highest containing major academic centers. We observed that highly competent academic hospitals in this system seldom transferred patients to other centers (independent of their condition) while less competent community and community‐DSH hospitals exhibited a long‐tail distribution of condition‐specific capabilities. We observed that most hospitals in Massachusetts depend on transfer and that each hospital's unique capabilities can be presented in an all‐condition “fingerprint” summarizing its experience with specific diagnoses.

As a corollary to hospital capability, we found that our regionalization index metric (RI) accurately identified conditions whose care is regionalized in Massachusetts. This was true whether by virtue of the specialized services required (e.g., cystic fibrosis and spinal cord injury) or the regulatory environment (e.g., pediatric and cardiac care). Although most common conditions were not regionalized at all, the degree of regionalization for a few was surprising. We believe that further investigation into these patterns is warranted.

Although the decision to transfer a patient represents the summation of both medical and nonmedical factors, it is usually considered when satisfactory services are unavailable within the transferring hospital. Even when adequate services are available, transfer from low‐volume to high‐volume facilities may improve outcome in conditions for which clear mortality‐volume relationships are established (Dudley et al. 2000). If inter‐hospital transfer can effectively conduct patients to higher levels of care, and such cooperation can significantly improve hospital performance (Mascia and Di Vincenzo 2011), deeper understanding of the transfer network is essential. This is particularly true as the formation of Accountable Care Organizations promises to disrupt the existing hospital ecosystem. Since, as we show here, individual facilities vary significantly in their ability to provide definitive care for different conditions, these capability and regionalization indices may be useful in measuring and comparing the adequacy of these new networks.

Transfer, like mortality, offers uniquely accurate information that is less subject to data entry error than some other possible measures of hospital activity. Even so, we attempted to minimize error by tracking specific patients on synthetic identifiers rather than relying on a single transfer field. For illustrative purposes, we chose the minimum set of variables in equations (1)–(6) to provide a general view of a statewide system. We also demonstrate, however, that the data can easily be grouped using demographic variables or hospital characteristics as needed to address particular questions raised by this bird's‐eye view. For example, specific questions concerning pediatric care can be addressed by adding an age variable.

Our analysis is subject to several limitations. First, all administrative datasets are vulnerable to errors of coding and to changes in coding behavior over time. As a result, administrative data can disagree with clinical registries for some conditions (Lawson et al. 2016). We chose to report calculations using CCS rather than ICD‐9 codes to simplify presentation and minimize coding variability. Second, we tested our metrics in the state of Massachusetts, where insurance coverage is high, the health care system is uniquely dependent on academic centers, and legislators have capped increases in health care spending (Steinbrook 2012). Thus, hospital capabilities and the extent of regionalization reported here may differ significantly in other states. Even so, we believe that the metrics described here can be useful in capturing those differences, quantifying them, and facilitating the comparison of different systems. Indeed, such comparisons are now a subject of our ongoing investigations. Third, we excluded mental health conditions because their definitive management lies beyond the acute care hospitals we studied. We acknowledge, however, that a complete understanding of the health care system will require the future inclusion of mental health. Fourth, our analysis can only take into account direct transfers and is blind to those that are accomplished through unconventional means, such as discharging a patient with recommendations for admission elsewhere. Finally, we elected to exclude a small number of very rare conditions that, in aggregate, represented less than 1 percent of all admissions. We believe that this is warranted because patients with those conditions are typically followed by a very small number of providers, and transfer is often more related to personal relationships than to hospital capability. Nevertheless, we performed a sensitivity analysis utilizing different cutoffs and saw no significant difference in hospital capability ratings or overall results.
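
One way such a cutoff could be varied is sketched below. The procedure is an assumption made for illustration, since the text does not specify how the rare conditions were selected: conditions are ranked from rarest to most common and excluded for as long as their combined share of admissions stays below the cutoff. The function name `rare_conditions` and the condition labels are hypothetical.

```python
from collections import Counter


def rare_conditions(admission_codes, cutoff=0.01):
    """Return the rarest conditions whose combined share of admissions
    stays strictly below `cutoff` (assumed selection rule)."""
    counts = Counter(admission_codes)
    total = sum(counts.values())
    excluded, running = set(), 0
    # Walk from rarest to most common; stop before the share reaches the cutoff.
    for code, n in sorted(counts.items(), key=lambda kv: (kv[1], kv[0])):
        if (running + n) / total >= cutoff:
            break
        excluded.add(code)
        running += n
    return excluded


# Toy admission counts for illustration only; not real data.
codes = ["c1"] * 600 + ["c2"] * 390 + ["c3"] * 6 + ["c4"] * 3 + ["c5"] * 1

# Exclusion sets under a stricter and a looser cutoff.
strict = rare_conditions(codes, cutoff=0.005)
loose = rare_conditions(codes, cutoff=0.02)
```

Recomputing the metrics after removing each exclusion set, and comparing the resulting hospital rankings, would then show whether the results depend on the chosen cutoff.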

We believe that these new metrics will prove useful in a variety of settings. For regulators, they can provide insight into on‐the‐ground performance and interdependences of hospitals when addressing questions of cost variability, monopoly, mergers, and closures. For policy makers, capability indices can inform questions of network adequacy, while regionalization indices, tracked over time, can illuminate the systemic response to payment reform. For researchers, the metrics can provide a clinical basis for comparing individual hospitals, networks of hospitals, and systems of care. They can also be used to study health outcomes that may follow from regionalization. First responders may use hospital capability fingerprints to improve point‐of‐entry policies, and individuals with complex or chronic conditions may use them to help decide among options for care.

In conclusion, we offer two metrics, the HCI and the RI, based on the frequency of transfer from one institution to another. The HCI may be applied statewide, to subsets of hospitals, by specific condition, or to yield an all‐condition “fingerprint” of individual institutions. The RI may be applied to any condition and any combination of hospitals to assess the degree to which care is concentrated. Using these metrics, we demonstrate that transfer activity is a critical element of hospital care in Massachusetts and that, for some conditions, care is so regionalized that definitive management occurs in only a small subset of centers. As transfer and referral patterns are revised by new financial arrangements, the clinical capabilities of the resulting entities should be monitored. We believe that the HCI and RI may be useful for this purpose and for comparing the condition‐specific outcomes of regionalization among different systems.

Supporting information

Appendix SA1: Author Matrix.

Table S1. Regionalization of All CCS Codes Admitted in MA Hospitals from 2012 to 2014.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: This work was supported by the Boston Children's Hospital Department of Anesthesiology, Perioperative, and Pain Medicine and by the Boston Children's Hospital Chair for Critical Care Anesthesia.

Disclosures: None.

Disclaimers: None.

Notes

The denominator of equation (2) is obtained from the unique CCS conditions presented by patients within the dataset. In this analysis, N_CCS = 231 (see full list in Table S1).

References

- Albright, K. C., Branas C. C., Meyer B. C., Matherne‐Meyer D. E., Zivin J. A., Lyden P. D., and Carr B. G. 2010. “ACCESS: Acute Cerebrovascular Care in Emergency Stroke Systems.” Archives of Neurology 67 (10): 1210–8.

- Baicker, K., and Levy H. 2015. “How Narrow a Network Is Too Narrow?” JAMA Internal Medicine 175 (3): 337–8.

- Bosk, E. A., Veinot T., and Iwashyna T. J. 2011. “Which Patients and Where.” Medical Care 49 (6): 592–8.

- Dudley, R. A., Johansen K. L., Brand R., Rennie D. J., and Milstein A. 2000. “Selective Referral to High‐Volume Hospitals: Estimating Potentially Avoidable Deaths.” Journal of the American Medical Association 283 (9): 1159–66.

- EMTALA. 1985. The Emergency Medical Treatment and Active Labor Act as Established Under the Consolidated Omnibus Budget Reconciliation Act (COBRA), (42 USC 1395 dd) and 42 CFR 489.24; 42 CFR 489.20.

- Farmer, J. C., and Carlton P. K. Jr. 2006. “Providing Critical Care during a Disaster: The Interface between Disaster Response Agencies and Hospitals.” Critical Care Medicine 34 (3): S56–9.

- Gabbe, B. J., Simpson P. M., Sutherland A. M., Wolfe R., Fitzgerald M. C., Judson R., and Cameron P. A. 2012. “Improved Functional Outcomes for Major Trauma Patients in a Regionalized, Inclusive Trauma System.” Annals of Surgery 255 (6): 1009–15.

- HCUP. 2013. Clinical Classifications Software (CCS) for ICD‐9‐CM [accessed on June 15, 2016]. Available at https://www.hcup-us.ahrq.gov/toolssoftware/ccs/ccs.jsp

- HCUP. 2015. Agency for Healthcare Cost and Utilization Project [accessed on June 15, 2016]. Available at http://www.hcup-us.ahrq.gov/

- Institute of Medicine. 2007. “IOM Report: The Future of Emergency Care in the United States Health System.” Academic Emergency Medicine 13 (10): 1081–5.

- Landon, B. E., Keating N. L., Barnett M. L., Onnela J.‐P., Paul S., O'Malley A. J., Keegan T., and Christakis N. A. 2012. “Variation in Patient‐Sharing Networks of Physicians across the United States.” Journal of the American Medical Association 308 (3): 1–24.

- Lawson, E. H., Louie R., Zingmond D. S., Sacks G. D., Brook R. H., Hall B. L., and Ko C. Y. 2016. “Using Both Clinical Registry and Administrative Claims Data to Measure Risk‐adjusted Surgical Outcomes.” Annals of Surgery 263 (1): 50–7.

- Lee, B. Y., McGlone S. M., Song Y., Avery T. R., Eubank S., Chang C.‐C., Bailey R. R., Wagener D. K., Burke D. S., Platt R., and Huang S. S. 2011. “Social Network Analysis of Patient Sharing among Hospitals in Orange County, California.” American Journal of Public Health 101 (4): 707–13.

- Lomi, A., Mascia D., Vu D. Q., Pallotti F., Conaldi G., and Iwashyna T. J. 2014. “Quality of Care and Interhospital Collaboration.” Medical Care 52 (5): 407–14.

- Mascia, D., and Di Vincenzo F. 2011. “Understanding Hospital Performance: The Role of Network Ties and Patterns of Competition.” Health Care Management Review 36 (4): 327–37.

- Perez, F., and Granger B. E. 2007. “IPython: A System for Interactive Scientific Computing.” Computing in Science & Engineering 9 (3): 21–9.

- Perkel, J. M. 2015. “Programming: Pick Up Python.” Nature 518 (7537): 125–6.

- Seltz, D., Auerbach D., Mills K., Wrobel M., and Pervin A. 2016. “Addressing Price Variation in Massachusetts.” Health Affairs Blog [accessed on June 15, 2016]. Available at http://healthaffairs.org/blog/2016/05/12/addressing-price-variation-in-massachusetts/

- Spaite, D. W., Bobrow B. J., Stolz U., Berg R. A., Sanders A. B., Kern K. B., Chikani V., Humble W., Mullins T., Stapczynski J. S., and Ewy G. A., Arizona Cardiac Receiving Center Consortium. 2014. “Statewide Regionalization of Postarrest Care for Out‐of‐Hospital Cardiac Arrest: Association with Survival and Neurologic Outcome.” Annals of Emergency Medicine 64 (5): 496–506.e1.

- Steinbrook, R. 2012. “Controlling Health Care Costs in Massachusetts with a Global Spending Target.” Journal of the American Medical Association 308 (12): 1215–6.

- Topol, E. J., and Kereiakes D. J. 2003. “Regionalization of Care for Acute Ischemic Heart Disease: A Call for Specialized Centers.” Circulation 107 (11): 1463–6.
