Abstract

Orthopaedic surgeons still follow the decades-old workflow of using dozens of two-dimensional fluoroscopic images to drill through complex 3D structures such as the pelvis. This Letter presents a mixed reality support system that incorporates multi-modal data fusion and model-based surgical tool tracking to create a mixed reality environment supporting screw placement in orthopaedic surgery. A red–green–blue–depth camera is rigidly attached to a mobile C-arm and is calibrated to the cone-beam computed tomography (CBCT) imaging space via the iterative closest point algorithm. This allows real-time automatic fusion of reconstructed surface and/or 3D point clouds and synthetic fluoroscopic images obtained through CBCT imaging. An adapted 3D model-based tracking algorithm with automatic tool segmentation allows for tracking of surgical tools occluded by the hand. The proposed interactive 3D mixed reality environment provides an intuitive understanding of the surgical site and supports surgeons in quickly localising the entry point and orienting the surgical tool during screw placement. The authors validate the augmentation by measuring target registration error and also evaluate the tracking accuracy in the presence of partial occlusion.

Keywords: orthopaedics, surgery, medical image processing, diagnostic radiography, bone, image fusion, computerised tomography, iterative methods, image reconstruction, image segmentation, image registration

Keywords: multimodal imaging, mixed reality visualisation, orthopaedic surgery, workflow, two-dimensional fluoroscopic images, complex 3D structures, pelvis, multimodal data fusion, model-based surgical tool tracking, mixed reality environment, screw placement, red-green-blue-depth camera, mobile C-arm, cone-beam computed tomography imaging space, iterative closest point algorithm, real-time automatic fusion, reconstructed surface, 3D point clouds, synthetic fluoroscopic images, CBCT imaging, adapted 3D model-based tracking algorithm, automatic tool segmentation, surgical tools, interactive 3D mixed reality environment, entry point, target registration error, tracking accuracy, partial occlusion

1. Introduction

Minimally invasive orthopaedic surgical procedures can be technically challenging for a variety of reasons including complex anatomy, limitations in screw starting point and trajectory, and in some cases a slim margin for error. For example, during percutaneous pelvis fixation, screws are placed through narrow tunnels of bone in the pelvis. The orientation of these osseous tunnels is complex and a slight deviation of a guide wire (k-wire) from the desired path could result in severe damage to vital internal structures.

Mobile C-arms are frequently used in these procedures to provide intra-operative two-dimensional (2D) fluoroscopic imaging. The usage of intra-operative medical images results in reduction of blood loss, collateral tissue damage, and total operation time [1]. Furthermore, imaging can negate the need for direct visualisation of osseous structures through extensile open incisions making minimally invasive percutaneous techniques possible. However, mental mapping between 2D fluoroscopic images, the patient's body, and surgical tools remains a challenge [2].

Typically, in order to place a k-wire or screw in a percutaneous pelvis fixation procedure, numerous fluoroscopic images are taken and several attempts may be required before the target is reached from the correct orientation. This results in relatively high radiation exposure and operating time in addition to potential harm to the patient.

Medical augmented reality (AR) is gaining importance in these interventions to facilitate the screw placement task; however, most proposed AR solutions rely on complicated external navigation systems and have, therefore, not been deployed in common surgeries. In this Letter, we propose a novel mixed reality support system for screw placement that integrates and fuses 3D sensing data, medical data, intra-operative planning, and virtually tracked tools. This approach to orthopaedic interventions is intended to allow the surgeon to perform procedures in a safer and more efficient manner.

1.1. State of the art

Surgical navigation systems are used to track tools and the patient with respect to the medical images; they therefore assist surgeons with their mental alignment and localisation. These systems are mainly based on outside-in tracking of optical markers on the C-arm and on recovering the spatial transformation between the patient, medical images, and the surgical tool. Modern navigation systems reach sub-millimetre accuracy [3, 4]. However, they do not significantly reduce operating room (OR) time, but rather require cumbersome pre-operative calibration, occupy valuable space, and suffer from line-of-sight limitations [5, 6]. Furthermore, navigation is mostly computed based on pre-operative patient data. As a result, deformations and displacements of the patient's anatomy are not considered.

Alternative solutions attach cameras to the C-arm, and co-register them with the fluoroscopic image [7]. The camera is mounted near the X-ray source, and by utilising an X-ray transparent mirror, the camera and fluoroscopic views would be similar. Therefore, the camera and X-ray origins are aligned, and they remain calibrated due to the rigid construction. To overlay undistorted and semi-translucent fluoroscopic images onto the live camera feed, optical and radiopaque markers on a calibration phantom are detected and aligned. The AR provides an intuitive visualisation of the fluoroscopic and a live optical view of the surgical site.

These alternative solutions were tested during 40 orthopaedic and trauma surgeries, and demonstrated promising improvement in X-ray dose reduction and localisation in the 2D plane perpendicular to the fluoroscopic view [8, 9]. However, this technique requires the introduction of large spherical markers and the medical imaging is limited to 2D fluoroscopic images co-registered to the view of the optical camera. Furthermore, this requires the X-ray source to be positioned above the patient rather than under the table, which reduces the surgeon work space, and increases scatter radiation to the clinical staff. In [10], a red–green–blue–depth (RGBD) camera was mounted on a mobile C-arm, and calibrated with X-ray. However, no contribution toward tool tracking, or simplification of surgery was presented.

Vision-based tracking of markers and a simple AR visualisation combining digitally reconstructed radiographs (DRRs) with a live video feed from a stereo camera attached to the detector plane of the C-arm were presented in [11]. Using a hex-face optical and radiopaque calibration phantom, paired-point registration is performed to recover the relationship between the fluoroscopic image and the camera origin. The vision-based tracking requires visual markers and the stereo camera on the C-arm. This work takes a step further from static 2D–2D visualisation to interactive 2D–3D visualisation. However, tracking for image-guided navigation requires the introduction of markers on the surgical instrument.

Another solution in C-arm guided interventions is to track the tools with fluoroscopic images [12, 13]. Image processing is performed on the fluoroscopic images to extract the tools and thus determine the tool position and orientation. This approach does not have line-of-sight problems, but increases radiation exposure. On the other hand, in the computer vision industry, marker-less tracking of rigid objects with an RGBD camera has previously been performed using the iterative closest point (ICP) algorithm. A 3D user-interface application based on this method was introduced in [14], which allows 3D tracking of an object held by the user. This work assumes a static background, and the tracking requires the generation of user-specific hand models for tracking objects occluded by hands. This inspires the idea of using 3D sensing for tool tracking, which requires neither markers nor additional fluoroscopic images. In [15, 16], 3D inside-out visual sensing has been used for automatic registration between pre- and intra-operative data and for estimating rigid patient movement during image acquisition.

1.2. Contributions

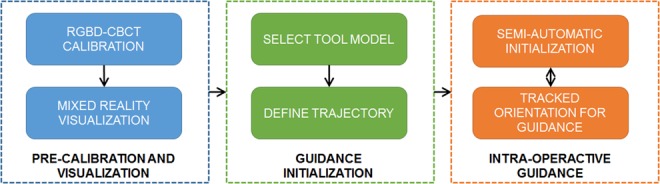

In the computer-aided intervention community, Lee et al. [17] suggested registering 3D medical data with 3D optics, and provided a mixed reality visualisation environment for clinical data realisation, which is evaluated in [18] showing that it could help reduce radiation, shorten operating time, and lower surgical task load. The system evaluated used simulated pre-clinical phantoms, where the users performed drilling tasks inside a thin plastic tube. Furthermore, that system did not provide tool tracking and relied solely on live point cloud feedback to locate the entry point and the orientation. On the other hand, tool tracking with single 3D optics is also investigated in recent research in the computer vision community such as [19, 20] which give reliable tracking results of arbitrary tools. In light of these two recent advancements, this Letter presents a mixed reality support system which incorporates marker-less surgical tool tracking via model-based 3D tracking with automatic tool segmentation into multi-modal data fusion via a one-time calibration. The suggested workflow using the system is illustrated in Fig. 1. The cone-beam computed tomography (CBCT) device and RGBD sensor are pre-calibrated, which allows data fusion for a mixed reality visualisation. At the beginning of the intervention, surgeons select the surgical tool model and define the expected trajectory on the CBCT or CT data. During the intervention, we use the tracking information and augment this virtual model at the position of the drill inside the mixed reality environment. When the drill is sufficiently close to the initialisation, the tracking will automatically start. Thereafter, surgeons can interact with the scene and align the tracked tool's orientation with the planned trajectory. Finally, a few fluoroscopic images are acquired from different viewpoints to ensure the correct alignment and compensate for minute tracking errors.

Fig. 1.

Workflow of the tracking of surgical tools for interventional guidance in the mixed reality environment. The system is pre-calibrated which enables a mixed reality visualisation platform. During intervention, the surgeon first selects the tool model and defines the trajectory (planning) on the medical data. Next, the mixed reality environment is used together with the tracking outcome for supporting the tool placement

We combine a mixed reality visualisation with an advanced tracking technique, which enables the simultaneous and real-time display of the patient's surface and anatomy (CBCT or X-ray), the surgical site, surgical planning, and objects within the surgical site (clinician's hand, tools etc.). While the literature focuses on the accuracy of tool tracking and precise navigation, our mixed reality system concentrates on providing guidance and support for fast entry point localisation. Without having additional navigation setting, this integrated system enables surgeons to intuitively align the tracked surgical tool's orientation with the planned trajectory or anatomy of interest. Our evaluation shows it could bring surgeons sufficiently close to their desired entry point, and thereby considerably shorten the operation time, and reduce radiation exposure.

2. Materials and methods

Our system requires an RGBD camera to be installed on the gantry of the mobile C-arm, preferably near the detector to remain above the surgical site during the intervention. We adapted the calibration method and marker-less tool tracking algorithm in [17, 19] to provide the mixed reality support system. In the following sections, we describe the calibration technique for RGBD camera and CBCT data produced by the mobile C-arm, the marker-less tool tracking algorithm, and the integration of tool tracking and mixed reality visualisation.

2.1. Calibration of RGBD camera and CBCT space

The calibration is performed by obtaining an RGBD and a CBCT scan of a radiopaque and infrared-visible calibration phantom [17]. After surface extraction, the meshes are pre-processed to remove outliers and noise (Fig. 2a). The resulting sets of points are $P_{\mathrm{CBCT}}$ and $P_{\mathrm{RGBD}}$. The surfaces are registered using the sample consensus initial alignment (SAC-IA) with fast point feature histograms (FPFH) [21]. This method provides a fast and reliable initialisation $T_0$, which is then used by the ICP algorithm to obtain the final calibration result (Fig. 2b)

$$\hat{T} = \arg\min_{T} \sum_{(i,j)} \left\lVert p_i - T q_j \right\rVert_2^2 \qquad (1)$$

where $p_i \in P_{\mathrm{CBCT}}$ and $q_j \in P_{\mathrm{RGBD}}$. $(i, j)$ are the resulting point correspondence index pairs, and the resulting transformation $\hat{T}$ allows the fusion of CBCT and RGBD information in a common coordinate frame. This enables tool tracking relative to anatomical structures, and mixed reality visualisation which is depicted in Fig. 2c.
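The refinement stage of such a registration can be illustrated with a minimal point-to-point ICP sketch in Python. It is not the authors' implementation: the SAC-IA/FPFH initialisation is stood in for by the `R0` and `t0` arguments (identity in the demo), nearest neighbours are found by brute force, and all function names and the synthetic grid of points are assumptions made for illustration.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rigid transform (R, t) mapping paired src onto dst (SVD/Kabsch)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection in the SVD solution.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(src, dst, R0=None, t0=None, iterations=20):
    """Point-to-point ICP: alternate nearest-neighbour matching and rigid fitting."""
    R = np.eye(3) if R0 is None else R0
    t = np.zeros(3) if t0 is None else t0
    for _ in range(iterations):
        moved = src @ R.T + t
        # Brute-force nearest neighbour in dst for every transformed src point.
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nearest = d2.argmin(axis=1)
        R, t = best_rigid_transform(src, dst[nearest])
    return R, t


# Synthetic stand-ins for the two surfaces (hypothetical data, arbitrary units).
ii, jj, kk = np.meshgrid(np.arange(4), np.arange(4), np.arange(4), indexing="ij")
rgbd_points = np.stack([ii.ravel(), 1.3 * jj.ravel(), 1.7 * kk.ravel()], axis=1).astype(float)

angle = np.radians(1.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([0.03, -0.02, 0.04])
cbct_points = rgbd_points @ R_true.T + t_true

R_est, t_est = icp(rgbd_points, cbct_points)
```

The demo uses a small misalignment so that nearest-neighbour matching is already correct from the identity start; with real scans, a feature-based initialisation such as the one described above is what makes this assumption reasonable.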

Fig. 2.

Pre-processed point clouds acquired from

a Depth camera and radiation space, during the CBCT scan

b They are registered by using SAC-IA with FPFH and ICP

c It enables the overlay between DRR of CBCT and reconstructed object surface at any desired angle as an example

2.2. Real-time tool tracking using fast projective ICP with tool region segmentation

Tool tracking is carried out by registering the 3D tool model to automatically extracted surface segments in each depth frame using ICP with fast projective point correspondence [19] (implementation available at: http://campar.in.tum.de/Chair/ProjectInSeg). For the specific application at hand, however, the presence of a hand holding the drill causes severe occlusions that affect tracking convergence and accuracy. To deal with these limitations, we propose to exploit the automatic 3D surface segmentation to track only the segment corresponding to the drill, thereby removing possible outliers during the ICP stage.

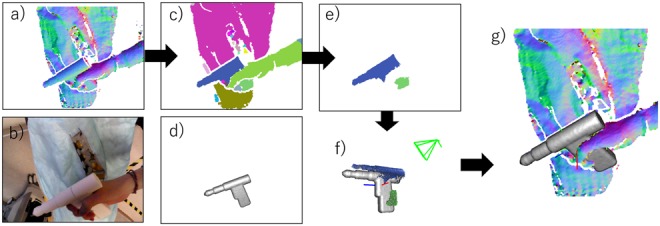

Fig. 3 shows an overview of the 3D tool tracking process. The depth image of the input frame is segmented into individual smoothly connected segments by means of connected component analysis on the angles between the normals of neighbouring pixels in the depth image [22] (Fig. 3c). Among these segments, we detect those that might correspond to the 3D tool model by computing the 3D overlap ratio between each segment and the visible part of the 3D tool model. The visible part is computed by rendering a virtual view using the camera pose estimated in the previous frame (Fig. 3d). All segments of the depth image that yield a 3D overlap with the visible tool model higher than a threshold are merged into a set of tool segments (TSs) (Fig. 3e). The TS, which is a subset of the depth image, is used for ICP. The 3D tool model is then registered to the TS by means of ICP with a point-to-plane error metric to estimate the current pose of the tool. Correspondences between the points in the TS and the 3D tool model are obtained by projecting the current visible part of the tool model to the TS [23] (Fig. 3f). The registered 3D model in the depth image is shown in Fig. 3g. Using a limited subset of points belonging to the tool surface not only copes better with occlusion, but also allows the drill to be tracked in view of the camera.
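The segment selection step can be sketched as follows. This is a simplified illustration under stated assumptions, not the InSeg implementation: the overlap ratio is taken here as the fraction of a segment's pixels whose measured depth lies within `depth_tol` of the rendered model depth, and the function name, thresholds, and toy images are all hypothetical.

```python
import numpy as np


def select_tool_segments(labels, depth, model_depth, overlap_thresh=0.5, depth_tol=0.01):
    """Return ids of segments that overlap the rendered (visible) tool model.

    labels      : HxW int image of segment ids (negative = unsegmented)
    depth       : HxW measured depth in metres
    model_depth : HxW depth of the tool model rendered from the previous pose,
                  NaN where the model is not visible
    """
    model_valid = np.isfinite(model_depth)
    close = np.zeros(depth.shape, dtype=bool)
    close[model_valid] = np.abs(depth[model_valid] - model_depth[model_valid]) < depth_tol

    selected = []
    for seg_id in np.unique(labels):
        if seg_id < 0:
            continue
        ratio = close[labels == seg_id].mean()   # assumed overlap measure
        if ratio > overlap_thresh:
            selected.append(int(seg_id))
    return selected


# Toy frame: segment 0 = background, 1 = tool, 2 = hand in front of the tool.
labels = np.zeros((4, 6), dtype=int)
labels[:, 2:4] = 1
labels[:, 4:6] = 2
depth = np.where(labels == 0, 1.00, np.where(labels == 1, 0.50, 0.45))

model_depth = np.full((4, 6), np.nan)
model_depth[:, 2:5] = 0.505                      # rendered model covers columns 2-4

tool_segments = select_tool_segments(labels, depth, model_depth)
tool_mask = np.isin(labels, tool_segments)       # pixels passed on to ICP
```

In this toy frame only the tool segment is kept; the hand segment is rejected because its depth disagrees with the rendered model even where the two overlap in the image.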

Fig. 3.

Overview of the 3D tool tracking process

a Depth image with surface normals in input frame

b Colour image in input frame

c Geometrical segmentation result

d Visible part of 3D tool model in the previous frame

e Detected TS in input frame

f ICP between the 3D tool model and TS

g Registered 3D tool model in depth image
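For readers unfamiliar with the point-to-plane error metric, one linearised least-squares step can be sketched as below. It assumes that correspondences and target normals are already given (in the text they come from projective association), uses the usual small-angle approximation of the rotation, and is only an illustration; the function name and synthetic data are hypothetical.

```python
import numpy as np


def point_to_plane_step(src, dst, normals):
    """One linearised point-to-plane step.

    Minimises sum_i (((src_i + omega x src_i + t) - dst_i) . n_i)^2 over the
    small rotation vector omega and the translation t, and returns (omega, t).
    """
    # (omega x p) . n == omega . (p x n), so each row of A is [p x n, n].
    A = np.hstack([np.cross(src, normals), normals])
    b = -np.einsum("ij,ij->i", src - dst, normals)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:3], x[3:]


# Synthetic check: the source is the target shifted by a known translation.
rng = np.random.default_rng(0)
dst = rng.uniform(-1.0, 1.0, size=(200, 3))
normals = rng.normal(size=(200, 3))
normals /= np.linalg.norm(normals, axis=1, keepdims=True)

t_true = np.array([0.01, -0.02, 0.03])
src = dst - t_true

omega, t = point_to_plane_step(src, dst, normals)
```

For a pure translation the linearisation is exact, so a single step recovers it; with rotation, the step is repeated and the correspondences are recomputed after each update.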

To improve robustness toward occlusion, we also deploy frame-to-frame temporal tracking. We add the correspondences found in the previous frame to the ICP, alongside those of the current TS set. Hence, the merged set of correspondences is jointly used to minimise the registration residual. This is particularly useful in the presence of high levels of occlusion, where the 3D surface of the hand holding the tool is used to robustly estimate the current camera pose with respect to the tool. Additionally, to detect tracking failure, the standard deviation of the residuals between the points in the current depth map and the surface of the tool model is computed as the tracking quality described in [20]. When this standard deviation exceeds a certain threshold, the tracking is considered to have failed.
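The failure test can be sketched in a few lines. The residual is taken here as the signed point-to-plane distance to the matched model surface point, and the threshold `max_std` is a hypothetical value, since the text does not state the one used.

```python
import numpy as np


def tracking_failed(points, model_points, model_normals, max_std=0.005):
    """Flag failure when the residual spread exceeds max_std (metres, assumed)."""
    residuals = np.einsum("ij,ij->i", points - model_points, model_normals)
    return bool(np.std(residuals) > max_std)


# Toy model surface: the plane z = 0 with upward normals.
xy = np.stack(np.meshgrid(np.arange(4.0), np.arange(4.0)), axis=-1).reshape(-1, 2)
model_points = np.column_stack([xy, np.zeros(len(xy))])
model_normals = np.tile([0.0, 0.0, 1.0], (len(xy), 1))

signs = (-1.0) ** np.arange(len(xy))
good = model_points + np.column_stack([np.zeros((len(xy), 2)), 0.001 * signs])
bad = model_points + np.column_stack([np.zeros((len(xy), 2)), 0.020 * signs])

good_failed = tracking_failed(good, model_points, model_normals)
bad_failed = tracking_failed(bad, model_points, model_normals)
```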

2.3. Integrating mixed reality visualisation and tool tracking

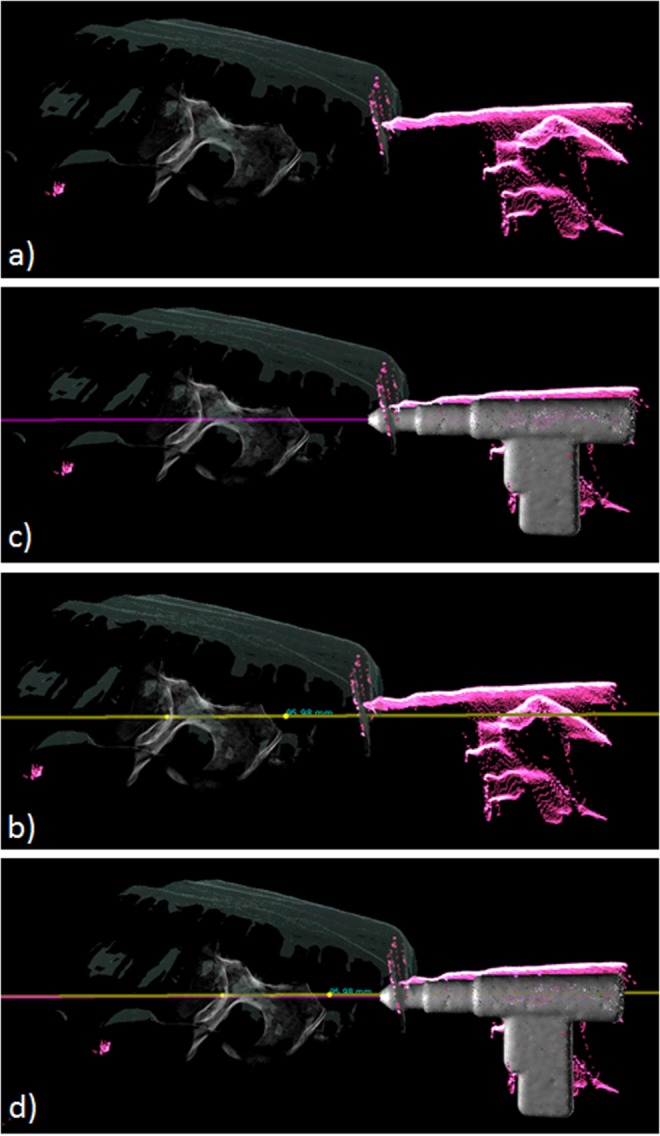

We generate a mixed reality scene using a CBCT volume, and the real-time RGBD information. The user can position multiple virtual cameras in this scene to view the anatomy and tools from multiple desired angles. An example is shown in Fig. 4.

Fig. 4.

Example of multiple desired views with marker-less tracking. The tool is tracked based on the 3D features while the overlay of DRR from CBCT and reconstructed surface can be viewed at any desired angles

The visualisation has several advantages as evaluated in [18, 24]. However, for a realistic clinical setting, we can clearly identify the need to further improve the mixed reality visualisation by extrapolating and predicting the trajectory of the tool by deploying marker-less tracking of the surgical instruments. By combining the tracking results and augmenting the tracked tools in the multi-view visualisation, this interaction allows users to perceive the target depth, orientation, and their relationship intuitively and quickly. Together with the overlaid planned trajectory (yellow line) and the estimated tool's orientation (purple line), as shown in Fig. 5, it simplifies the complicated k-wire/screw placement procedure, which typically requires numerous fluoroscopic images, to a similar setting in the phantom study of [18, 24]. Our system allows the user to achieve a nearly correct alignment quickly (generally within a minute).

Fig. 5.

Overlaid planned trajectory (yellow line) and the estimated tool's orientation (purple line)

a Mixed reality scene, which overlays the reconstructed surface, DRR, and live point clouds

b Planned trajectory in yellow added on top of (a). User can then use the live point cloud feedback for 3D localisation

c Tracked surgical tool and its extended orientation line (purple) integrated into (a). With the planned trajectory, the user can now intuitively align the extended orientation with the planned trajectory as illustrated in (d)
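The alignment between the planned trajectory and the tracked tool axis can be quantified by the angle between the two lines. The sketch below is illustrative only; the function name and the example direction vectors are hypothetical, and the lines are treated as undirected, so opposite directions count as aligned.

```python
import numpy as np


def angle_between_lines_deg(d1, d2):
    """Angle in degrees between two undirected 3D lines given by direction vectors."""
    d1 = np.asarray(d1, dtype=float)
    d2 = np.asarray(d2, dtype=float)
    cos_angle = abs(np.dot(d1, d2)) / (np.linalg.norm(d1) * np.linalg.norm(d2))
    return float(np.degrees(np.arccos(np.clip(cos_angle, 0.0, 1.0))))


planned_dir = np.array([0.0, 0.0, 1.0])                     # planned trajectory
tilt = np.radians(10.0)
tool_dir = np.array([0.0, np.sin(tilt), np.cos(tilt)])      # tracked tool axis

deviation_deg = angle_between_lines_deg(planned_dir, tool_dir)
```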

3. Experimental validation and results

3.1. System setup

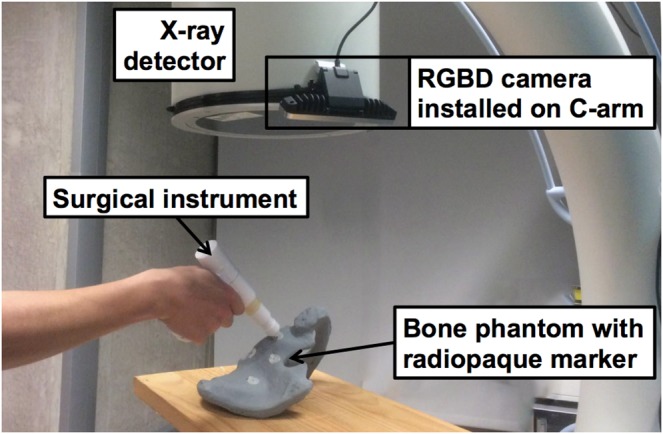

The system comprises a Siemens ARCADIS Orbic 3D C-arm (Siemens Healthineers, Erlangen, Germany) and an Intel RealSense SR300 RGBD camera (Intel Corporation, Santa Clara, CA, USA). As shown in Fig. 6, the camera is rigidly mounted on the detector of the C-arm and the transformation between the camera and CBCT origin is modelled as a rigid transformation. They are calibrated using the method described in Section 2.1. The tracking algorithm discussed in Section 2.2 is used for tracking the surgical tools.

Fig. 6.

RGBD camera is installed on the detector of a C-arm. Radiopaque markers on a pelvis phantom are targeted using a surgical instrument. The Euclidean distance between markers in CBCT and tracked tool tip is computed as error (TRE). Although only a small part of the tool is visible to the camera the tracking remains functional
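As an illustration of how the rigid camera-to-CBCT transformation enters the TRE computation, the sketch below maps a tracked tool tip from the camera frame into the CBCT frame and measures its distance to a marker. The transform, the tip position, and the marker position are made-up numbers; only the procedure follows the text.

```python
import numpy as np


def to_homogeneous(R, t):
    """Build a 4x4 rigid transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def transform_point(T, p):
    """Apply a 4x4 rigid transform to a 3D point."""
    return (T @ np.append(p, 1.0))[:3]


# Hypothetical calibration result: 90 degree rotation about z plus a shift (mm).
R_cam_to_cbct = np.array([[0.0, -1.0, 0.0],
                          [1.0,  0.0, 0.0],
                          [0.0,  0.0, 1.0]])
T_cam_to_cbct = to_homogeneous(R_cam_to_cbct, [10.0, 0.0, 5.0])

tip_camera = np.array([1.0, 2.0, 3.0])        # tracked tool tip, camera frame
marker_cbct = np.array([8.0, 1.0, 9.0])       # marker centre located in the CBCT

tip_cbct = transform_point(T_cam_to_cbct, tip_camera)
tre = float(np.linalg.norm(tip_cbct - marker_cbct))
```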

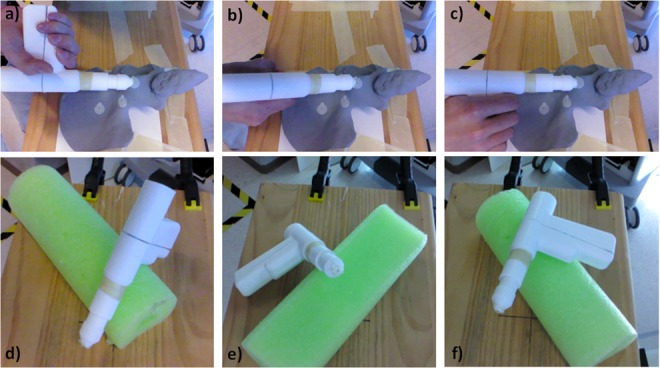

We attached five radiopaque markers to a phantom and positioned the drill tip on the markers (Figs. 7a–c). The corresponding coordinates in the CBCT are recovered and compared by measuring the target registration error (TRE). To mimic the clinical setting, we evaluated the tracking accuracy with different levels of occlusion. A tracking accuracy of 1.36 mm is reached when a sufficient number of features are observed by the camera. An accuracy of 6.40 mm is reached when the drill is partially occluded, and about 2 cm (20.68 mm) when it is fully occluded (Table 1).

Fig. 7.

Measuring the TRE with the drill

a Facing the depth camera

b Perpendicular to the camera

c Occluded

d–f Corresponding poses for the second experiment

Table 1.

TRE measurements of the target localisation experiment, where $\delta_x$, $\delta_y$, $\delta_z$, and $\lVert\delta\rVert_2$ are the Euclidean distances

| Occlusion | $\delta_x$ | $\delta_y$ | $\delta_z$ | $\lVert\delta\rVert_2$ |
|---|---|---|---|---|
| partial occlusion | 6.02 ± 1.80 | 1.35 ± 0.85 | 5.78 ± 0.41 | 6.40 ± 1.85 |
| low occlusion | 1.28 ± 0.12 | 0.30 ± 0.19 | 1.68 ± 0.64 | 1.36 ± 1.12 |
| high occlusion | 17.50 ± 4.70 | 7.50 ± 2.18 | 8.91 ± 4.47 | 20.68 ± 4.54 |

Values are reported in millimetres as mean ± standard deviation.

3.2. Target localisation experiment

3.2.1. Tracking accuracy

We first assessed the tracking accuracy by attaching a radiopaque marker on the drill tip and moving the drill to arbitrary poses. The tracking results are compared with measurements from the marker position in the CBCT (results as shown in Table 2). The measurements show an average accuracy of 3.04 mm. Owing to the symmetric geometry of the drill, the rotational element along the drill tube axis is lost under high occlusion (Fig. 7d). The best outcome is achieved when a large portion of the handle remains visible (Fig. 7f).

Table 2.

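Measurements of the tracking quality, where $\delta_x$, $\delta_y$, $\delta_z$, and $\lVert\delta\rVert_2$ are the Euclidean distances

| Pose | $\delta_x$ | $\delta_y$ | $\delta_z$ | $\lVert\delta\rVert_2$ |
|---|---|---|---|---|
| pose 1 | 1.09 | 0.83 | 4.03 | 4.26 |
| pose 2 | 2.45 | 4.50 | 0.65 | 5.16 |
| pose 3 | 0.67 | 1.14 | 0.18 | 1.33 |
| average | 1.40 | 2.16 | 1.62 | 3.04 |

Results are shown in millimetres.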
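The relationship between the per-axis errors and the last column of Table 2 can be checked with a short calculation. The numbers below are copied from the table; the variable names are ours. The check suggests that the 3.04 mm in the average row is the norm of the averaged per-axis errors, whereas averaging the three per-pose norms would give roughly 3.6 mm.

```python
import numpy as np

# Per-axis errors in millimetres for poses 1-3, copied from Table 2.
per_axis = np.array([
    [1.09, 0.83, 4.03],
    [2.45, 4.50, 0.65],
    [0.67, 1.14, 0.18],
])

norms = np.linalg.norm(per_axis, axis=1)        # per-pose Euclidean error
mean_per_axis = per_axis.mean(axis=0)           # "average" row, first three columns
norm_of_mean = float(np.linalg.norm(mean_per_axis))
mean_of_norms = float(norms.mean())

print(np.round(norms, 2), np.round(mean_per_axis, 2), round(norm_of_mean, 2))
```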

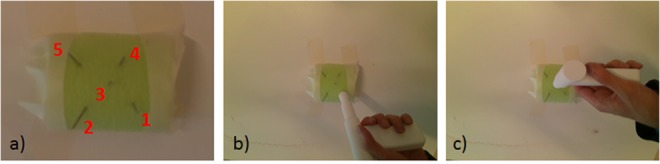

To evaluate the guidance quality of the tracking method, we designed a phantom and measured the errors in a simulated surgical scenario. The phantom, shown in Fig. 8, comprises a foam base and several pins placed at different orientations. The tracked surgical drill is oriented to align with each pin. We then measure the distance from the tool tip to the desired path given by the pin's orientation, which can be easily extracted from the CBCT images. The results are shown in Table 3. The measurements show a deviation of ∼3 mm from the planned trajectory when the tool is partially occluded by the hand. For pin 3, however, many features are occluded and the tracking accuracy is the worst.

Fig. 8.

Phantom

a Guidance quality phantom, composed of five metal pins inserted into a foam block at different orientations

b and c Drill aligned with pins 1 and 3

Table 3.

Measurements of the guidance quality

| | Pin 1 | Pin 2 | Pin 3 | Pin 4 | Pin 5 |
|---|---|---|---|---|---|
| Distance (mm) | 3.0563 | 3.4618 | 6.3178 | 3.0304 | 2.5764 |

Each value is the Euclidean distance from the tool tip to the expected path, in millimetres.
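The guidance error reported here is a point-to-line distance. A minimal sketch of that computation is given below; the function name and the example coordinates are hypothetical and are not taken from the experiment.

```python
import numpy as np


def point_to_line_distance(point, line_point, line_dir):
    """Shortest distance from a 3D point to the line through line_point along line_dir."""
    point = np.asarray(point, dtype=float)
    line_point = np.asarray(line_point, dtype=float)
    d = np.asarray(line_dir, dtype=float)
    d = d / np.linalg.norm(d)
    v = point - line_point
    # Remove the component of v along the line; what remains is the offset.
    return float(np.linalg.norm(v - np.dot(v, d) * d))


# Example: pin axis along z through the origin, tool tip at (3, 4, 10) mm.
tip = [3.0, 4.0, 10.0]
distance_mm = point_to_line_distance(tip, [0.0, 0.0, 0.0], [0.0, 0.0, 2.0])
```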

4. Discussion

We proposed a mixed reality support system for image-guided interventions using real-time model-based tracking. The visualisation provides simultaneous views from different perspectives. In each view, anatomical image data, the surgical site, the planned trajectory, and the tracked surgical tool are depicted, which facilitates intuitive perception of the target depth, orientation, and its relationship to the surgical tool, and thereby allows for faster correction of tool orientation while minimising total radiation dose. The incorporation of the tracking algorithm into the system transforms the complicated surgical task (entry point finding) into a simplified line alignment between the planned trajectory and the tracked drill path in multiple views, which helps surgeons reach an entry point closer to the planned trajectory in a shorter time.

The RGBD camera and CBCT are registered using SAC-IA with FPFH, followed by an ICP-based refinement. The surgical tool is tracked using InSeg with the same RGBD camera. TRE measurements are presented to assess the accuracy. The results indicate that, in general, the marker-less tracking provides reasonable accuracy of 3.04 mm. When the tracking features are fully seen by the depth camera, it can achieve an accuracy of up to 1.36 mm. In the worst case, when most of the important 3D features are occluded, its accuracy decreases to 2 cm.

In a typical clinical scenario, the instruments are partially occluded by hands. In this case, the system maintains an accuracy of 6.40 mm. To improve the tracking quality, multiple RGBD cameras could be placed to maximise the surface coverage of tracked objects. Additionally, in comparison to a conventional outside-in tracking system, our system uses a camera attached near the detector, which is close to the surgical site and is therefore less likely to suffer from line-of-sight issues. In addition, the depth-based algorithm incorporated in the system tracks the surgical tools directly with the RGBD camera. Under this setting, the tracking target is larger than the optical marker target in a conventional optical tracking system; therefore, it is more tolerant to occlusion. Furthermore, occlusion by blood would not significantly affect the depth-based tracking algorithm, while it may dramatically reduce the tracking quality when using colour images.

For several orthopaedic interventions, knowledge of the correct orientation of the surgical tool (with respect to trajectory planning or medical data) is crucial. As opposed to only viewing the desired trajectory on the anatomy of interest, adding the trajectory from the tip of the drill (made possible with tool tracking) allows the surgeon to simply align the two trajectories for correct placement. We evaluated the quality of the tracking by measuring the distance from the tool tip positions to a desired ground truth path. For the majority of cases in which the drill is partially occluded, the tracking error is ∼3 mm. This accuracy is not sufficient for precise k-wire/screw placement. However, it provides a good starting point and an insertion angle that is roughly aligned with the planned trajectory. To further improve the accuracy, multiple RGBD cameras with different perspectives could be integrated into the system, so that the tracking algorithm gathers sufficient information to compensate for the occlusion and thereby provides higher accuracy. Alternatively, multiple simultaneous tracking algorithms could be deployed and a Kalman filter scheme could be used to cross-check and regulate the tracking result, which could improve the quality of the tracking algorithm.
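The Kalman filter idea mentioned above is future work in this Letter and is not implemented in the described system. Purely as an illustration of the principle, the sketch below shows the measurement update that would fuse tool-tip estimates from two trackers, assuming a directly observed static state; the function name, positions, and covariances are all hypothetical.

```python
import numpy as np


def kalman_update(x, P, z, R):
    """Kalman measurement update for a directly observed state (H = I).

    x, P : current estimate and its covariance
    z, R : new measurement and its covariance
    """
    K = P @ np.linalg.inv(P + R)               # Kalman gain
    x_new = x + K @ (z - x)
    P_new = (np.eye(len(x)) - K) @ P
    return x_new, P_new


# Tool-tip estimates (mm) from two hypothetical trackers with equal uncertainty.
tip_a, cov_a = np.array([10.0, 20.0, 30.0]), 4.0 * np.eye(3)
tip_b, cov_b = np.array([12.0, 20.0, 30.0]), 4.0 * np.eye(3)

tip_fused, cov_fused = kalman_update(tip_a, cov_a, tip_b, cov_b)
```

With equal covariances the fused estimate is the midpoint of the two inputs and its variance is halved; a large innovation `z - x` relative to `P + R` could serve as the cross-check signal.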

Moreover, as evaluated in [18, 24] on pre-clinical phantoms, the visualisation system leads to a considerable reduction of radiation dose by 63.9% and a shortening of operation time by 59.1%. In this Letter, the drilling path is clearly visible from the scene, and surgeons can quickly align the tool with live point cloud feedback; however, in real-life surgery, surgeons may not clearly see the drill path in a complicated anatomical structure. They need to plan the drilling path and align the surgical tools by relying on fluoroscopic images. We bridged this gap by incorporating depth-based tracking to help align the surgical tools with a planned drilling path. The planned drill path and the tracked tool drilling trajectory are augmented on the medical data for quicker alignment, which transforms the entry point localisation task into a simplified task as studied in [18, 24]. Therefore, with the support of this system, the surgical tools are aligned with the planning data, and thereafter only a few fluoroscopic images are required to confirm the correct placement of tools and medical instruments in the patient. This can lead to quick placement of k-wires and screws during an orthopaedic procedure. Note that the proposed system should not replace the judgement of the surgeons, but rather help them align their instruments with the medical data more quickly.

5. Conclusions

In this work, we adapt advanced vision-based methods to track surgical tools in C-arm guided interventions. The tracking outcome is integrated in a mixed reality environment. Our proposed mixed reality system supports the surgeon with the complex task of placing tools in 3D and helps them localise a starting point on their pre-operative planned trajectory faster without using fluoroscopic images. As a result, it can improve the efficiency in the operating room by shortening the operation time, reducing the surgical task load, and decreasing radiation usage.

In conclusion, this Letter presents an intuitive intra-operative mixed reality visualisation of the 3D medical data, surgical site, and tracked surgical tools using a marker-less tracking algorithm for orthopaedic interventions. This method integrates advanced computer vision techniques using RGBD cameras into a clinical setting and enables the surgeon to quickly reach a better entry point for the rest of the procedure. The authors believe that the system is a novel solution to intra-operative 3D visualisation.

6. Acknowledgments

The authors thank Wolfgang Wein and his team from ImFusion GmbH, Munich for the opportunity of using the ImFusion Suite and Gerhard Kleinzig and Sebastian Vogt from SIEMENS for their support and making a SIEMENS ARCADIS Orbic 3D available for this research.

7. Declaration of interests

The authors declare that a mobile C-arm was provided for this work by Siemens Research. Dr. Fuerst reports a patent RGBD-based Simultaneous Segmentation, and Tracking of Surgical Tools pending to Johns Hopkins University. Dr. Navab acted as consultant on a different project for Siemens Healthcare in 2015 and has a patent 6,473,489 issued to Siemens Corporate Research, and a patent 6,447,163 issued to Siemens Corporate Research.

8. References

- 1. Parker P.J., Copeland C.: ‘Percutaneous fluoroscopic screw fixation of acetabular fractures’, Injury, 1997, 28, (9), pp. 597–600 (doi: 10.1016/S0020-1383(97)00097-1)

- 2. Starr A., Jones A., Reinert C., et al.: ‘Preliminary results and complications following limited open reduction and percutaneous screw fixation of displaced fractures of the acetabulum’, Injury, 2001, 32, pp. 45–50 (doi: 10.1016/S0020-1383(01)00060-2)

- 3. Matthews F., Hoigne D., Weiser M., et al.: ‘Navigating the fluoroscope's C-arm back into position: an accurate and practicable solution to cut radiation and optimize intraoperative workflow’, J. Orthop. Trauma, 2007, 21, pp. 687–692 (doi: 10.1097/BOT.0b013e318158fd42)

- 4. Liu L., Ecker T., Schumann S., et al.: ‘Computer assisted planning and navigation of periacetabular osteotomy with range of motion optimization’ (Springer International Publishing, Cham, 2014), pp. 643–650

- 5. Boszczyk B.M., Bierschneider M., Panzer S., et al.: ‘Fluoroscopic radiation exposure of the kyphoplasty patient’, Eur. Spine J., 2006, 15, (3), pp. 347–355 (doi: 10.1007/s00586-005-0952-0)

- 6. Synowitz M., Kiwit J.: ‘Surgeon's radiation exposure during percutaneous vertebroplasty’, J. Neurosurg., Spine, 2006, 4, (2), pp. 106–109 (doi: 10.3171/spi.2006.4.2.106)

- 7. Navab N., Heining S.M., Traub J.: ‘Camera augmented mobile C-arm (CAMC): calibration, accuracy study, and clinical applications’, IEEE Trans. Med. Imaging, 2010, 29, pp. 1412–1423 (doi: 10.1109/TMI.2009.2021947)

- 8. Navab N., Blum T., Wang L., et al.: ‘First deployments of augmented reality in operating rooms’, Computer, 2012, 45, pp. 48–55 (doi: 10.1109/MC.2012.75)

- 9. Diotte B., Fallavollita P., Wang L., et al.: ‘Radiation-free drill guidance in interlocking of intramedullary nails’ (Springer Berlin Heidelberg, Berlin, Heidelberg, 2012), pp. 18–25

- 10. Habert S., Gardiazabal J., Fallavollita P., et al.: ‘Rgbdx: first design and experimental validation of a mirror-based RGBD X-ray imaging system’. 2015 IEEE Int. Symp. Mixed and Augmented Reality (ISMAR), September 2015, pp. 13–18

- 11. Reaungamornrat S., Otake Y., Uneri A., et al.: ‘An on-board surgical tracking and video augmentation system for C-arm image guidance’, Int. J. Comput. Assist. Radiol. Surg., 2012, 7, (5), pp. 647–665 (doi: 10.1007/s11548-012-0682-9)

- 12. Grützner P.A., Zheng G., Langlotz U., et al.: ‘C-arm based navigation in total hip arthroplasty background and clinical experience’, Injury, 2004, 35, (1), pp. 90–95, supplement (doi: 10.1016/j.injury.2004.05.016)

- 13. Siewerdsen J.H., Moseley D.J., Burch S., et al.: ‘Volume CT with a flat-panel detector on a mobile, isocentric C-arm: pre-clinical investigation in guidance of minimally invasive surgery’, Med. Phys., 2005, 32, (1), pp. 241–254 (doi: 10.1118/1.1836331)

- 14. Held R., Gupta A., Curless B., et al.: ‘3d puppetry: a kinect-based interface for 3d animation’. Proc. 25th Annual ACM Symp. User Interface Software and Technology, UIST '12, New York, NY, USA, 2012, pp. 423–434

- 15. Fotouhi J., Fuerst B., Johnson A., et al.: ‘Pose-aware C-arm for automatic re-initialization of interventional 2d/3d image registration’, Int. J. Comput. Assist. Radiol. Surg., 2017, 12, (7), pp. 1221–1230 (doi: 10.1007/s11548-017-1611-8)

- 16. Fotouhi J., Fuerst B., Wein W., et al.: ‘Can real-time RGBD enhance intraoperative cone-beam CT?’, Int. J. Comput. Assist. Radiol. Surg., 2017, 12, (7), pp. 1211–1219 (doi: 10.1007/s11548-017-1572-y)

- 17. Lee S.C., Fuerst B., Fotouhi J., et al.: ‘Calibration of RGBD camera and cone-beam CT for 3d intra-operative mixed reality visualization’, Int. J. Comput. Assist. Radiol. Surg., 2016, 11, (6), pp. 967–975 (doi: 10.1007/s11548-016-1396-1)

- 18. Fischer M., Fuerst B., Lee S.C., et al.: ‘Preclinical usability study of multiple augmented reality concepts for k-wire placement’, Int. J. Comput. Assist. Radiol. Surg., 2016, 11, (6), pp. 1007–1014 (doi: 10.1007/s11548-016-1363-x)

- 19. Tateno K., Tombari F., Navab N.: ‘Real-time and scalable incremental segmentation on dense slam’. 2015 IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), September 2015, pp. 4465–4472

- 20. Tateno K., Tombari F., Navab N.: ‘Large scale and long standing simultaneous reconstruction and segmentation’, Comput. Vis. Image Underst., 2017, 157, pp. 138–150 (doi: 10.1016/j.cviu.2016.05.013)

- 21. Rusu R.B., Blodow N., Beetz M.: ‘Fast point feature histograms (FPFH) for 3d registration’. Proc. 2009 IEEE Int. Conf. Robotics and Automation, ICRA'09, Piscataway, NJ, USA, 2009, pp. 1848–1853

- 22. Uckermann A., Elbrechter C., Haschke R., et al.: ‘3D scene segmentation for autonomous robot grasping’. 2012 IEEE/RSJ Int. Conf. Intelligent Robots and Systems, October 2012, pp. 1734–1740

- 23. Rusinkiewicz S., Levoy M.: ‘Efficient variants of the ICP algorithm’. Int. Conf. 3D Digital Imaging and Modeling, 2001

- 24. Fotouhi J., Fuerst B., Lee S., et al.: ‘Interventional 3d augmented reality for orthopedic and trauma surgery’. 16th Annual Meeting of the International Society for Computer Assisted Orthopedic Surgery (CAOS), 2016