Abstract

Image-guided surgery (IGS) has allowed for more minimally invasive procedures, leading to better patient outcomes, reduced risk of infection, less pain, shorter hospital stays and faster recoveries. One drawback that has emerged with IGS is that the surgeon must shift their attention from the patient to the monitor for guidance. Yet both cognitive and motor tasks are negatively affected by attention shifts. Augmented reality (AR), which merges the real-world surgical scene with preoperative virtual patient images and plans, has been proposed as a solution to this drawback. In this work, we studied the impact of two different types of AR IGS set-ups (mobile AR and desktop AR) and traditional navigation on attention shifts for the specific task of craniotomy planning. We found a significant difference in the time taken to perform the task and in the number of attention shifts between traditional navigation and both AR set-ups, but no significant difference between the two AR set-ups. With mobile AR, however, users felt that the system was easier to use and that their performance was better. These results suggest that regardless of where the AR visualisation is shown to the surgeon, AR may reduce attention shifts, leading to more streamlined and focused procedures.

Keywords: augmented reality, medical image processing, tumours, neurophysiology, surgery

Keywords: desktop augmented reality, mobile augmented reality, tumour, craniotomy planning, augmented reality image-guided neurosurgery

1. Introduction

Augmented reality (AR) is increasingly being studied in image-guided surgery (IGS) for its potential to improve intraoperative surgical planning, simplify anatomical localisation and guide the surgeon in their tasks. In AR IGS, preoperative patient models are merged with the surgical field of view, allowing the surgeon to understand the mapping between the surgical scene and the preoperative plans and images and to see the anatomy of interest below the surface of the patient. This may facilitate decision making in the operating room (OR) and reduce attention shifts from the IGS system to the patient, allowing for more minimally invasive and quicker procedures.

Numerous technical solutions have been proposed to present AR views in IGS. These include the use of tablets, projectors, surgical microscopes, half-silvered mirrors, head-mounted displays (HMDs) or the use of the monitor of the IGS system itself [1]. These different solutions can be categorised as either presenting the AR visualisation within the field of view of the surgeon, i.e. in situ (e.g. via tablets, HMDs, the microscope or a projector), or outside the surgical sterile field on the IGS system itself. Whereas the main advantage of the former is that the surgeon does not have to look away from the surgical scene, the disadvantage is that additional hardware is needed, with the exception of the surgical microscope. Although the surgical microscope can present the AR view to the surgeon without the use of additional hardware, it is not used for all surgical steps or by all surgeons. For example, it is not used during craniotomy planning or by surgeons who prefer to use surgical loupes throughout a case. Conversely, the advantage of using the IGS system to display the AR view is that no additional hardware is needed in an already cluttered and busy OR. The disadvantage of presenting the AR view on the IGS monitor is that the surgeon may need to shift their attention back and forth between the IGS system (where they are looking for guidance) and the patient (where they are working). To the best of our knowledge, no previous work has looked at the impact of different AR solutions on attention shifts for specific tasks in IGS. Yet, evaluating how different technologies compare with desktop AR is an important task, which will help determine which technologies are most appropriate in the OR.

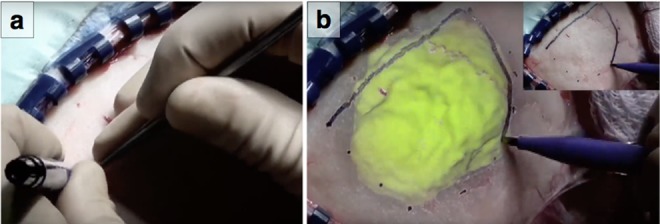

In this Letter, we present a mobile-based (e.g. smartphone/tablet) AR IGS system and compare it with (i) visualisation of the AR view on the monitor of the IGS system and (ii) traditional IGS navigation. We do this for the specific task of outlining the extent of a tumour on the skull (Fig. 1). Tumour localisation and delineation are done during craniotomy planning in neurosurgery in order to determine the location, size and shape of the bone flap to be removed to access the brain. This task does not require high accuracy in outlining the tumour contour; rather, it is important to localise the extent of the tumour. Therefore, in testing these three methods we specifically focus on the time taken to delineate a tumour and the number of attention shifts from patient to screen that are required to do this.

Fig. 1.

Specific task of outlining the extent of a tumour on the skull

a A surgeon uses a pointer in his right hand to locate the boundary of the tumour and draws dots with his left hand at different locations

b AR visualisation would allow the surgeon to see the tumour merged with the real surgical scene and use that view to draw the extent of the tumour. (b) Inlay: with traditional neuronavigation this surgeon has drawn dots using guidance and then connects the dots to create the contour of the tumour

2. Related work

In the following section, we first review related work on the use of AR in image-guided neurosurgery (IGNS). Second, we explore previous work on attention shifts in surgery.

2.1. AR in IGNS

The first neurosurgical AR system was proposed in the early 1990s by Kikinis et al. [2]. In their work, they combined three-dimensional (3D) segmented virtual objects (e.g. tumours) from preoperative patient images with live video images of the patient. For ear–nose–throat and neurosurgery, Edwards et al. [3] developed microscope-assisted guided intervention, a system that allowed for stereo projection of virtual images into the microscope. Varioscope AR was a custom-built head-mounted operating microscope for neurosurgery that allowed for virtual objects to be presented to the viewer using video graphics array displays. The Zeiss OPMI® Pentero microscope and its multivision function (AR visualisation) were used by Cabrilo et al. [4, 5] for AR in neurovascular surgery. One of the findings of this work was that surgeons believed that AR visualisation enabled a more tailored surgical approach that involved determining the craniotomy. Kersten-Oertel et al. used AR visualisation in a number of neurovascular [6] and tumour surgery [7] cases. In both studies, the authors found that AR visualisation (presented on the monitor of the IGNS system) could facilitate tailoring the size and shape of the craniotomy.

Over the past several years, mobile devices have been increasingly used to display AR views in order to ease and speed up several tasks in surgery. Mobasheri et al. [8] presented a review of the different tasks for which mobile devices can be used, including diagnostics, telemedicine, operative navigation and planning, and training. To the best of our knowledge, no research has examined the use of mobile AR specifically for craniotomy planning. However, Deng et al. [9] and Watanabe et al. [10] have built mobile neuronavigation AR systems, which they tested in surgery including craniotomy planning. Bieck et al. [11] introduced an iPad-based system aimed at neurosurgery. Hou et al. [12] also built an iPhone-based system to project preoperative images of relevant anatomy onto the scalp. Some prototypes of mobile AR for surgery have also been tested in other contexts, for example, in nephrolithotomy by Müller et al. [13]. We have expanded on this previous work in AR IGNS by looking at how different AR display methods, specifically in the surgical field via a mobile device versus on the IGNS monitor, may impact the surgeon in terms of attention.

For more information on the use of AR in IGS the reader is referred to [1], and for AR in neurosurgery specifically to [14–16].

2.2. Attention shifts in surgery

As summarised by Wachs [17], attention shifts have negative effects on surgical tasks. In general, attention shifts can deteriorate performance. The work of Graydon and Eysenck [18] and Weerdesteyn et al. [19] showed how distractions and attention shifts impact various types of cognitive and motor tasks such as counting backwards and avoiding obstacles while walking. Goodell et al. [20] showed how surgical tasks in particular are impacted. They observed an increase of 30–40% in the time required to complete a task when a subject was distracted compared with when they were not distracted. In our work, we study the number of attention shifts needed to perform a simple surgical planning task using both AR and traditional navigation. We did not focus on accuracy of the tracings; however, the previous work has shown that in both a laboratory and clinical environment, AR guidance is no less accurate than the traditional navigation systems [5, 21].

3. Methodology

Our IGS system comprises a Polaris tracking system (Northern Digital Technologies, Waterloo, Canada) and the intraoperative brain imaging system (IBIS) Neuronav open-source platform for IGNS [22]. IBIS runs on a desktop computer with an i7-3820 3.6 GHz central processing unit (CPU), NVIDIA GTX670 graphics processing unit (GPU), ASUS PCE-AC55BT (Intel 7260 chipset) wireless peripheral component interconnect card and Ubuntu 14.04.5 LTS [with the latest available wireless drivers (iwlwifi 25.30.0.14.0)]. To extend the functionality from IGS to mobile AR IGS, we use a smartphone (OnePlus One phone with a Qualcomm MSM8974AC Snapdragon 801 chipset, Quad-core 2.5 GHz Krait 400 CPU, Adreno 330 GPU and Android 6.0.1) outfitted with a passive tracker that is attached to a case to obtain the live view.

The IBIS Neuronav package comes with plug-ins for tracking, patient-to-image registration, camera calibration and the capability to do AR visualisation by capturing a live video stream from a microscope or video camera and merging this with preoperative images on the monitor of the system itself. In our work, we extended the IBIS Neuronav system to allow for augmenting an image not only on the monitor of the system, but also on a mobile device that captures the surgical field of view, thus allowing for in situ AR visualisation.

To make use of IBIS’ existing functionality, the mobile device serves merely as a camera and display. The costly computations are handled by the desktop on which IBIS runs. To create the AR view, we first calibrate the camera of the mobile phone. Calibration (intrinsic and extrinsic) is done using a modification of Zhang's camera calibration method [23], followed by a second optimisation procedure to find the transform from the tracker to optical centre of the camera (for more details the reader is referred to [22]). Patient-to-image registration is done using skin landmark registration. For desktop AR, the mobile device captures live video frames and sends them to the desktop using OpenIGTLink [24]. These live frames are then augmented with virtual objects, in our case the 3D surface of a tumour. For mobile AR, the rendered virtual object is sent using OpenIGTLink to the mobile device on which it is blended with the live video feed using OpenGL (version ES 3.0) and GLSL. The Qt framework (version 5.8) was used to handle the phone's camera and create the AR mobile phone application.
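As an illustration of the final blending step only, the following is a minimal CPU sketch of compositing a rendered virtual object over a live video frame. It is not the GLSL code running on the phone: the function names `blend_pixel` and `blend_frame`, the 8-bit RGBA overlay format and the use of a standard "over" alpha blend are all assumptions made for this example.

```python
# Hypothetical sketch: "over" alpha blending of a rendered overlay onto a video frame.
# Frames are nested lists of pixels; video pixels are (R, G, B), overlay pixels are
# (R, G, B, A) with 8-bit channels. The real system performs this step on the GPU.

def blend_pixel(video_rgb, overlay_rgba):
    """Blend one overlay pixel over one video pixel using the overlay's alpha."""
    r, g, b, a = overlay_rgba
    alpha = a / 255.0
    return tuple(
        round(alpha * o + (1.0 - alpha) * v)
        for o, v in zip((r, g, b), video_rgb)
    )


def blend_frame(video, overlay):
    """Blend two frames of identical size, pixel by pixel."""
    return [
        [blend_pixel(v, o) for v, o in zip(video_row, overlay_row)]
        for video_row, overlay_row in zip(video, overlay)
    ]


if __name__ == "__main__":
    # A 1x2 grey video frame; the overlay is transparent on the left pixel and
    # opaque green (the tumour surface colour in this example) on the right.
    video = [[(100, 100, 100), (100, 100, 100)]]
    overlay = [[(0, 255, 0, 0), (0, 255, 0, 255)]]
    print(blend_frame(video, overlay))
```

With a fully transparent overlay pixel the video pixel is left unchanged, and with a fully opaque one the virtual object replaces it; intermediate alpha values give the semi-transparent appearance typical of AR overlays.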

4. Experiment

To determine the impact of using AR with in situ visualisation (mobile AR) in contrast to AR visualisation on the navigation system (desktop AR) and traditional neuronavigation (traditional nav) on attention shifts, 12 subjects (aged 24–41, 3 female and 9 male) working in medical neuroimaging and/or IGS took part in a laboratory study. The subjects were graduate students, researchers, engineers and neurosurgery residents. All subjects were familiar with IGNS and craniotomy planning.

The task of the subjects was to draw the contour of a segmented tumour on the surface of the skull of a phantom – a task typically done during craniotomy planning in tumour resections, see Fig. 1. Prior to the study, the subjects were re-familiarised with the purpose of tumour delineation, craniotomy planning and AR visualisation and were shown the system under each of the conditions (described below). The order in which the different systems were used in the experiment was alternated between subjects, and each of the possible condition orders was used an equal number of times to reduce learning bias.
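The counterbalancing described above can be sketched as follows. With three conditions there are six possible orders, so 12 subjects allow each order to be used twice. The function name `assign_orders` and the round-robin assignment are assumptions for illustration; the text does not state how orders were assigned to individual subjects.

```python
# Hypothetical sketch of full counterbalancing of condition order across subjects.
from collections import Counter
from itertools import permutations

CONDITIONS = ("mobile AR", "desktop AR", "traditional nav")


def assign_orders(n_subjects, conditions=CONDITIONS):
    """Return one condition order per subject, using every order equally often."""
    orders = list(permutations(conditions))
    if n_subjects % len(orders) != 0:
        raise ValueError("number of subjects must be a multiple of the number of orders")
    # Round-robin assignment (an assumption): subject i gets order i mod 6.
    return [orders[i % len(orders)] for i in range(n_subjects)]


if __name__ == "__main__":
    schedule = assign_orders(12)
    for subject, order in enumerate(schedule, start=1):
        print(subject, " -> ".join(order))
    # Each of the six possible orders appears exactly twice.
    print(Counter(schedule))
```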

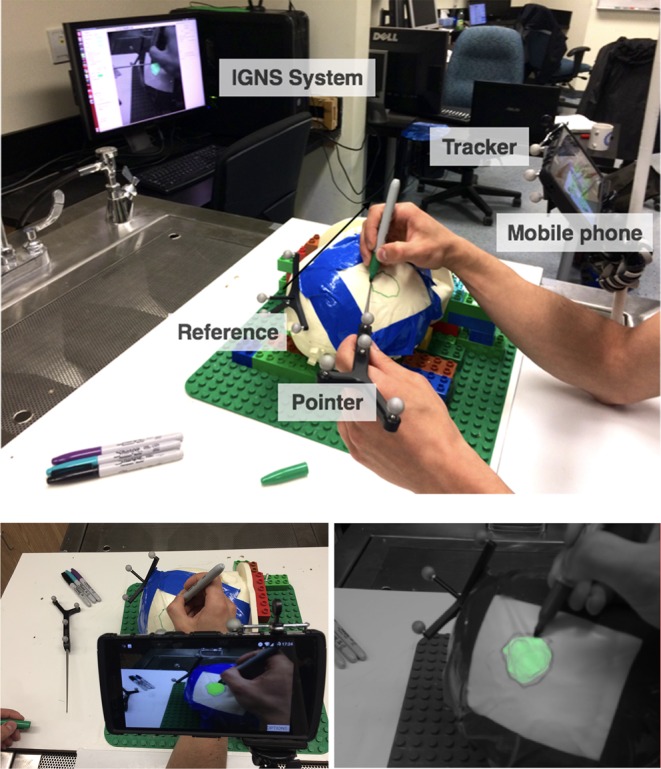

To perform the task, subjects used a permanent marker to draw the tumour outline on a 3D printed phantom that was covered in self-adhesive plastic wrap. Each subject delineated four segmented tumours that were mapped to the 3D phantom under each of the three conditions: mobile AR, desktop AR and traditional nav. For mobile AR the tumour was blended with the camera image on the phone, whereas for desktop AR the AR visualisation was shown on the monitor of the IGNS system. Finally, for traditional nav, both the tumour and the head of the patient were rendered in order to give the subject contextual information about the location of the tumour, see Fig. 2. For each delineation task, subjects could decide whether to use the surgical pointer and see its location with respect to the tumour on the IGNS system or phone. However, regardless of whether they used it, they held it in their hand throughout the experiment. The set-up of the experiment is shown in Fig. 3.

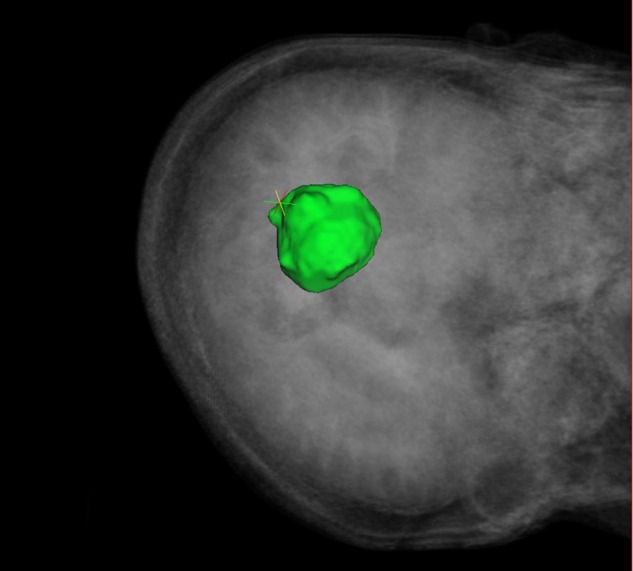

Fig. 2.

Screenshot of the IGNS monitor view for the traditional nav condition: the user has access to the pointer position (coloured cross-hair) as well as patient's preoperative scan and the segmented tumour model (green)

Fig. 3.

Top: experimental set-up: the user holds the pointer in one hand and the marker in the other. Depending on the condition, he or she looks either at the mobile phone (outfitted with a tracker) for the AR visualisation or at the desktop for either AR or IGNS navigation. The purpose of the task is to draw the contour of the tumour on the surface of the phantom. Bottom left: subject's point of view of the experimental set-up when testing the mobile AR condition. Bottom right: screenshot of the desktop AR view

Whereas in traditional IGS a surgeon must use the surgical pointer to determine its location with respect to the virtual anatomy of the patient and the surgical plans, this is not necessary when using in situ AR, as the virtual data is visible in the surgical field of view. We therefore allowed each subject to decide how and whether to make use of the surgical pointer and under which conditions to use it. For the mobile AR condition, the phone was attached to an arm that remained in place throughout the study. Although allowing for these differences between conditions could potentially lead to confounds in the time taken to delineate the tumour, we believe it allows for the most realistic scenario and one which would mimic how a surgeon would work under the different conditions in the OR. For example, the surgical pointer would be used with traditional neuronavigation, but not necessarily with in situ AR, making the task take longer under the non-AR condition. Finally, subjects could also decide whether to use a connect-the-dots strategy (mark dots on the phantom at the edges of the tumour and draw a line between them) or simply outline the tumour with a continuous contour.

For each of the tasks we measured both the time to complete the task as well as the number of times the subject switched their attention from the 3D phantom to the IGNS system or mobile phone.

After performing the experiment, all subjects filled out a questionnaire (available at http://tinyurl.com/y9svldpw). The questionnaire includes the NASA–TLX (which pertains to the perceived workload of using the system) [25], as well as a number of other questions about their experience. Furthermore, subjects were asked to provide any additional comments on performing the task under each of the different conditions.

5. Results

In terms of the system itself, the pointer calibration was 0.24 mm root-mean-square (RMS) error, the registration between phantom and virtual models was 1.76 mm RMS error and the camera's intrinsic reprojection error was 1.75 mm. For the mobile AR system, a frame rate between 15 and 20 fps at a resolution of was achieved (without compression).
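For readers unfamiliar with the RMS error figures quoted above, the following minimal sketch shows how such a value can be computed from pairs of corresponding 3D points (for example, registered landmarks and their reference positions). The function name `rms_error` and the sample coordinates are hypothetical and are not taken from the study.

```python
# Hypothetical sketch: RMS of the Euclidean distances between corresponding points.
import math


def rms_error(points_a, points_b):
    """Root-mean-square distance between two equally long lists of 3D points."""
    if len(points_a) != len(points_b) or not points_a:
        raise ValueError("point lists must be non-empty and of equal length")
    squared = [
        sum((a - b) ** 2 for a, b in zip(p, q))
        for p, q in zip(points_a, points_b)
    ]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    # Made-up coordinates in millimetres, for illustration only.
    measured = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    reference = [(3.0, 4.0, 0.0), (1.0, 0.0, 0.0)]
    print(round(rms_error(measured, reference), 4))
```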

For the experiments, we measured the time it took to delineate the tumour and when and for how long subjects looked at the 3D phantom, at the mobile phone or at the monitor of the IGNS system. We analysed the data using an analysis of variance (ANOVA) and post hoc Tukey honestly significant difference (HSD) tests. The JMP statistical software package and MATLAB were used. In addition to the time and the number of attention shifts, we also looked at the ratio of the time the subject spent looking at one of the screens to the total time taken to delineate the tumour.
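To make the two attention measures concrete, the sketch below derives them from a chronological gaze log. The log format, a list of `(target, start_s, end_s)` tuples with targets `"phantom"` and `"screen"`, and the function name `attention_metrics` are assumptions for illustration; the text does not describe how gaze was recorded or annotated.

```python
# Hypothetical sketch: attention shifts and screen-time ratio from a gaze log.
# Each entry is (target, start_s, end_s); entries are contiguous and chronological.

def attention_metrics(intervals):
    """Return (number of phantom-to-screen shifts, screen time / total time)."""
    shifts = 0
    screen_time = 0.0
    previous_target = None
    for target, start, end in intervals:
        # A shift is counted each time gaze moves from the phantom to a screen.
        if previous_target == "phantom" and target == "screen":
            shifts += 1
        if target == "screen":
            screen_time += end - start
        previous_target = target
    total_time = intervals[-1][2] - intervals[0][1]
    return shifts, screen_time / total_time


if __name__ == "__main__":
    # Made-up gaze log for one delineation task (times in seconds).
    log = [
        ("phantom", 0.0, 2.0),
        ("screen", 2.0, 5.0),
        ("phantom", 5.0, 6.0),
        ("screen", 6.0, 10.0),
    ]
    print(attention_metrics(log))  # (2, 0.7)
```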

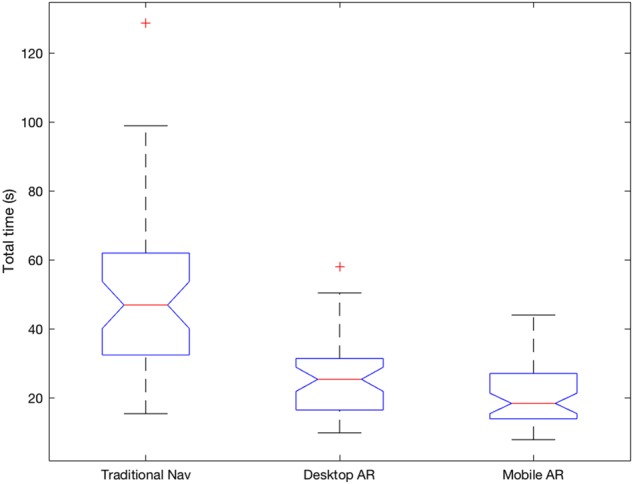

For all the measures, we found that both AR systems were statistically different from the traditional navigation system, but they were not statistically different from one another. Specifically, a one-way repeated measures ANOVA showed that there was a significant effect of AR display type on the total time to delineate the tumour . The mean times for tumour delineation were (s) for traditional nav, for desktop AR and for mobile AR. Post hoc Tukey HSD tests showed that there was a significant difference between traditional nav and desktop AR and mobile AR , but that there was no significant difference between desktop AR and mobile AR .
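For reference, the F statistic of a one-way repeated measures ANOVA can be sketched as below. This is not the JMP or MATLAB analysis used in the study: it omits the p-value and any sphericity correction, the function name `rm_anova_f` is hypothetical and the sample data are made up purely to show the arithmetic.

```python
# Hypothetical sketch: F statistic for a one-way repeated measures ANOVA.
# `data` holds one row per subject and one column per condition.

def rm_anova_f(data):
    """Return (F, df_conditions, df_error) for a subjects x conditions table."""
    n = len(data)        # number of subjects
    k = len(data[0])     # number of conditions
    grand_mean = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_cond = n * sum((m - grand_mean) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand_mean) ** 2 for m in subj_means)
    ss_total = sum((x - grand_mean) ** 2 for row in data for x in row)
    # Between-subject variability is removed from the error term.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


if __name__ == "__main__":
    # Made-up measurements: 3 subjects x 3 conditions.
    example = [[1, 2, 3], [2, 3, 5], [3, 5, 6]]
    print(rm_anova_f(example))
```

Removing the between-subject sum of squares from the error term is what distinguishes this from a between-groups ANOVA, and it is the reason a within-subject design such as this one can detect condition effects with relatively few subjects.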

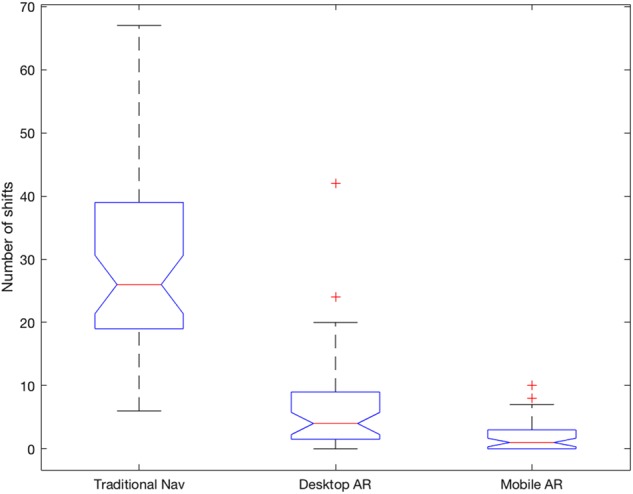

For the total number of attention shifts from the phantom to either the desktop or the mobile screen, a one-way repeated measures ANOVA showed that there was a significant effect of IGNS display type on the number of attention shifts . The median number of attention shifts during tumour delineation was 26 (median absolute deviation (MAD) = 7.5) for traditional nav, 4 (MAD = 4) for desktop AR and 1 (MAD = 1) for mobile AR. Post hoc Tukey HSD tests showed that there was a significant difference between traditional nav and desktop AR and mobile AR , but that there was no significant difference between desktop AR and mobile AR .
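The median and MAD reported above are robust summaries suited to skewed count data. A minimal sketch of their computation follows, assuming the unscaled MAD (no consistency constant); the function name `median_abs_deviation` and the sample counts are hypothetical and are not the study data.

```python
# Hypothetical sketch: median and (unscaled) median absolute deviation.
from statistics import median


def median_abs_deviation(values):
    """Median of the absolute deviations from the median."""
    centre = median(values)
    return median(abs(v - centre) for v in values)


if __name__ == "__main__":
    # Made-up attention-shift counts, for illustration only.
    counts = [1, 2, 4, 7, 11]
    print(median(counts), median_abs_deviation(counts))  # 4 3
```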

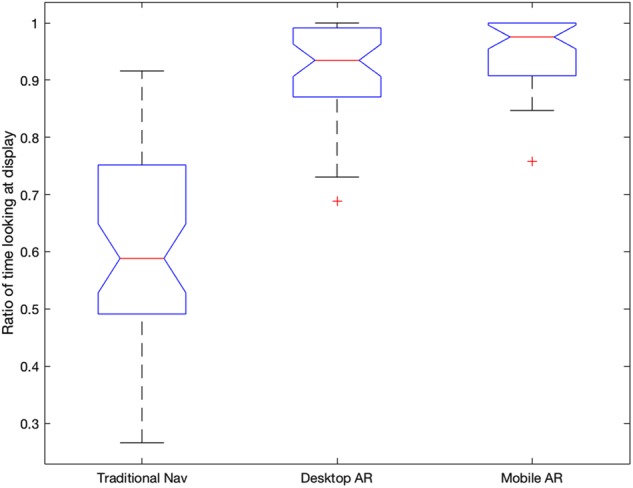

For ratio of total time spent looking at the display over total time taken to delineate the tumour, a one-way repeated measures ANOVA showed that there was a significant effect of display type on the ratio . The mean ratio of time spent looking at the screen over time taken during tumour delineation was for traditional nav, for desktop AR and for mobile AR. Post hoc Tukey tests showed that there was a significant difference between traditional nav and desktop AR and mobile AR , but that there was no significant difference between desktop AR and mobile AR (Figs. 4–6).

Fig. 4.

Boxplots of the total times taken per condition (in seconds). The average times to delineate a tumour were , and for traditional nav, desktop AR and mobile AR, respectively

Fig. 5.

Boxplots of the number of attention shifts per condition. The average number of attention shifts were , and for traditional nav, desktop AR and mobile AR, respectively

Fig. 6.

Boxplots of the ratio of time looking at desktop/mobile over total time per condition. The averages were , and for traditional nav, desktop AR and mobile AR, respectively

5.1. Questionnaire results

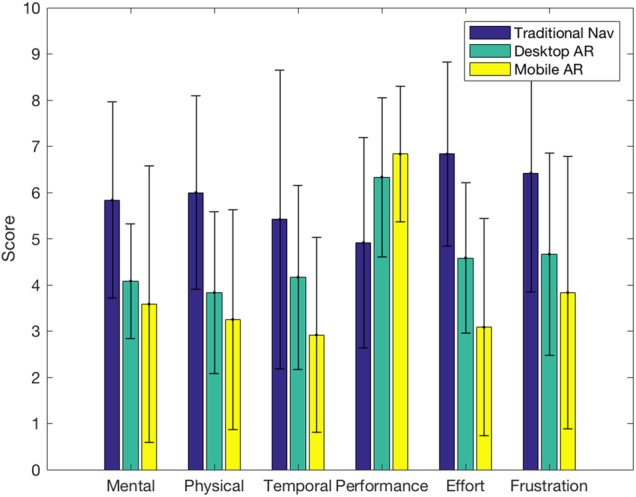

All subjects filled out a questionnaire after performing the study. As mentioned in the last section, we asked the subjects to hold the pointer in all conditions; however, according to the post-task questionnaire, 58% of the subjects did not use it for desktop AR and 67% of subjects did not use it in the mobile AR condition. In terms of user reporting of accuracy, only one subject found that he/she was more accurate with traditional nav. All others found AR to be more accurate; specifically, 67% found that mobile AR was the most accurate. Furthermore, all subjects found that one of the two types of AR was the most intuitive and comfortable; of those, 83% thought that mobile AR was the most intuitive and 92% thought mobile AR to be the most comfortable. Overall, 92% of subjects preferred mobile AR. Finally, the TLX confirms these findings: on average, traditional nav scored 59 points, desktop AR 46 points and mobile AR 39 points, where a lower score means a lower perceived cognitive load.

As we can see in Fig. 7, mobile AR is perceived as the least demanding system to use overall, followed by desktop AR. In terms of mental demand, subjects found both AR systems to be equally demanding and less demanding than traditional nav. However, in terms of physical demand, temporal demand, effort and frustration, mobile AR was perceived as less demanding than desktop AR, which was less demanding than traditional nav. In terms of performance, mobile AR was perceived as best, again followed by desktop AR.

Fig. 7.

Individual scales of the NASA–TLX for the different conditions, ranging from 0 to 10. The results show that for all measures mobile AR was perceived to be better/easier to use than desktop AR, which in turn performs better than traditional nav

6. Discussion

Our results showed that for both AR guidance methods, the attention of the subject remains almost the whole time (90–95%) on the guidance images. In contrast, for traditional nav the attention is split almost 50–50 between the patient and the monitor. Such attention shifts can be detrimental to the motor task at hand, add time to performing the task and may increase the cognitive burden of the surgeon.

The ratio of time looking at the screen against total time taken may also give an estimate of the user's confidence in what he/she is doing. The users shift to look at the patient when they need to confirm that the pointer and the marker are where they expect them to be. Consequently, the higher ratios obtained for both AR systems may indicate that AR gives users more confidence that they are correct with respect to the data presented. The NASA–TLX results in terms of perceived performance further seem to support this claim with subjects being most confident in their performance using AR.

Our results also show that the time needed to accomplish the tumour outlining and the number of attention shifts made during the task are significantly lower when using either of the AR systems than when using traditional navigation. Although mobile AR is not significantly different from desktop AR on these two factors, it was considered by subjects to be more intuitive, more comfortable to use and generally preferred. Furthermore, one should keep in mind that in the OR, the IGNS monitor may be much further away or less conveniently positioned due to other equipment, which could degrade the performance of desktop AR. Thus, we believe that even though our study was limited to a laboratory set-up, the findings we have made would translate to the OR, similarly to how Cabrilo et al. [5] found that their AR system made a positive difference in two thirds of the clinical cases and a major improvement in 17% of the cases.

Although we did not quantitatively measure the difference in accuracy of the tracings between conditions, we believe they should be comparable, as Tabrizi and Mahvash [21] and Cabrilo et al. [5] have shown that AR is not less accurate than the traditional systems. There is however a need to do a thorough study of accuracy of craniotomy planning between conditions. This will be done in future work.

The two most frequent negative comments that we received concerning our system were that there was some lag in the video feed and that the small size of the screen made it harder to be precise. We plan to address both limitations in a future version of our system. The first, caused by bandwidth limitations and network latency, should be greatly diminished by using compressed images. The second should be addressed by porting our system to a larger tablet device such as an iPad.

This work was motivated by feedback from a surgeon who has previously used desktop AR in neurosurgery and wanted to be able to walk around the patient and see the location of relevant anatomy below the surface of the skin and skull during craniotomy planning. On presenting the prototype system to the surgeon, he commented that as well as having the tumour projected, it would be useful to include vessels, gyri and sulci to further facilitate planning a resection approach. Furthermore, he commented that being able to look at the AR view on the mobile phone could assist in teaching and allow easy discussion with residents in terms of surgical plan and approach. Given this feedback, in future work we plan to add more features to our AR system so that the surgeon can interact with the view on the phone, for example, by turning anatomy of interest on and off and going through slice views mapped in depth to the real image.

7. Conclusions

In this Letter, we examined the effect of in situ AR, desktop AR and traditional navigation on attention shifts between different IGNS displays and the patient. The results show that tumour outlining with AR systems takes less time and requires fewer attention shifts than with a traditional navigation system. It is not clear that mobile AR performs better on these two factors than desktop AR, but it is clear that users find it more intuitive and comfortable. Reducing the disconnect between the AR display and the scene of interest does have an influence on the ease of use of the AR navigation system.

In future work, in addition to porting to iPad and compressing images, we also intend to bring the system into the OR to test it in its intended environment and with its intended users. As attention shifts have been shown to impact accuracy, we will further study the effect of the system compared with traditional image-guidance on the accuracy of different surgical tasks.

8. Funding and Declaration of Interests

This work was supported by the Engineering Faculty at Concordia University. Dr. Drouin reports grants from the Canadian Institutes of Health Research, Fonds Québécois de la recherche sur la nature et les technologies and the Natural Sciences and Engineering Research Council of Canada during the conduct of the study.

9. References

- 1. Kersten-Oertel M., Jannin P., Collins D.L.: ‘The state of the art of visualization in mixed reality image guided surgery’, Comput. Med. Imaging Graph., 2013, 37, (2), pp. 98–112 (doi: 10.1016/j.compmedimag.2013.01.009)

- 2. Kikinis R., Gleason P.L., Lorensen W.E., et al.: ‘Image guidance techniques for neurosurgery’. Visualization in Biomedical Computing 1994 Int. Society for Optics and Photonics, 1994, pp. 537–540

- 3. Edwards P.J., King A.P., Maurer C.R., et al.: ‘Design and evaluation of a system for microscope-assisted guided interventions (MAGI)’, IEEE Trans. Med. Imaging, 2000, 19, (11), pp. 1082–1093 (doi: 10.1109/42.896784)

- 4. Cabrilo I., Bijlenga P., Schaller K.: ‘Augmented reality in the surgery of cerebral aneurysms: a technical report’, Oper. Neurosurg., 2011, 10, (2), pp. 252–261

- 5. Cabrilo I., Bijlenga P., Schaller K.: ‘Augmented reality in the surgery of cerebral arteriovenous malformations: technique assessment and considerations’, Acta Neurochir., 2014, 156, (9), pp. 1769–1774 (doi: 10.1007/s00701-014-2183-9)

- 6. Kersten-Oertel M., Gerard I.J., Drouin S., et al.: ‘Augmented reality for specific neurovascular surgical tasks’. Workshop on Augmented Environments for Computer-Assisted Interventions, 2015, pp. 92–103

- 7. Kersten-Oertel M., Gerard I.J., Drouin S., et al.: ‘Towards augmented reality guided craniotomy planning in tumour resections’. Int. Conf. Medical Imaging and Virtual Reality, 2016, pp. 163–174

- 8. Mobasheri M.H., Johnston M., Syed U.M., et al.: ‘The uses of smartphones and tablet devices in surgery: a systematic review of the literature’, Surgery, 2015, 158, (5), pp. 1352–1371 (doi: 10.1016/j.surg.2015.03.029)

- 9. Deng W., Li F., Wang M., et al.: ‘Easy-to-use augmented reality neuronavigation using a wireless tablet PC’, Stereotact. Funct. Neurosurg., 2014, 92, (1), pp. 17–24 (doi: 10.1159/000354816)

- 10. Watanabe E., Satoh M., Konno T., et al.: ‘The trans-visible navigator: a see-through neuronavigation system using augmented reality’, World Neurosurg., 2016, 87, pp. 399–405 (doi: 10.1016/j.wneu.2015.11.084)

- 11. Bieck R., Franke S., Neumuth T., et al.: ‘Computer-assisted neurosurgery: an interaction concept for a tablet-based surgical assistance system’. Conf.: 14th Annual Conf. the German Society of Computer and Robotic Assisted Surgery, Bremen, Germany, 2015

- 12. Hou Y., Ma L., Zhu R., et al.: ‘A low-cost iPhone-assisted augmented reality solution for the localization of intracranial lesions’, PLoS ONE, 2016, 11, (7), pp. 1–18 (doi: 10.1371/journal.pone.0159185)

- 13. Müller M., Rassweiler M.-C., Klein J., et al.: ‘Mobile augmented reality for computer-assisted percutaneous nephrolithotomy’, Int. J. Comput. Assist. Radiol. Surg., 2013, 8, (4), pp. 663–675 (doi: 10.1007/s11548-013-0828-4)

- 14. Guha D., Alotaibi N.M., Nguyen N., et al.: ‘Augmented reality in neurosurgery: a review of current concepts and emerging applications’, Can. J. Neurol. Sci., 2017, 44, (33), pp. 235–245 (doi: 10.1017/cjn.2016.443)

- 15. Tagaytayan R., Kelemen A., Sik-Lanyi C.: ‘Augmented reality in neurosurgery’, Arch. Med. Sci., 2016, 12, (1), pp. 1–11

- 16. Meola A., Cutolo F., Carbone M., et al.: ‘Augmented reality in neurosurgery: a systematic review’, 2016

- 17. Wachs J.P.: ‘Gaze, posture and gesture recognition to minimize focus shifts for intelligent operating rooms in a collaborative support system’, Int. J. Comput. Commun. Control, 2010, 5, (1), pp. 106–124 (doi: 10.15837/ijccc.2010.1.2467)

- 18. Graydon J., Eysenck M.W.: ‘Distraction and cognitive performance’, Eur. J. Cogn. Psychol., 1989, 1, (2), pp. 161–179 (doi: 10.1080/09541448908403078)

- 19. Weerdesteyn V., Schillings A.M., Van Galen G.P., et al.: ‘Distraction affects the performance of obstacle avoidance during walking’, J. Mot. Behav., 2003, 35, (1), pp. 53–63 (doi: 10.1080/00222890309602121)

- 20. Goodell K.H., Cao C.G.L., Schwaitzberg S.D.: ‘Effects of cognitive distraction on performance of laparoscopic surgical tasks’, J. Laparoendosc. Adv. Surg. Tech., 2006, 16, (2), pp. 94–98 (doi: 10.1089/lap.2006.16.94)

- 21. Tabrizi L.B., Mahvash M.: ‘Augmented reality–guided neurosurgery: accuracy and intraoperative application of an image projection technique’, J. Neurosurg., 2015, 123, (1), pp. 206–211 (doi: 10.3171/2014.9.JNS141001)

- 22. Drouin S., Kochanowska A., Kersten-Oertel M., et al.: ‘IBIS: an OR ready open-source platform for image-guided neurosurgery’, Int. J. Comput. Assist. Radiol. Surg., 2016, 12, (3), pp. 363–378 (doi: 10.1007/s11548-016-1478-0)

- 23. Zhang Z.: ‘Flexible camera calibration by viewing a plane from unknown orientations’. The Proc. Seventh IEEE Int. Conf. Computer Vision, 1999, 1999, vol. 1, pp. 666–673

- 24. Tokuda J., Fischer G.S., Papademetris X., et al.: ‘OpenIGTLink: an open network protocol for image-guided therapy environment’, Int. J. Med. Robot. Comput. Assist. Surg., 2009, 5, (4), pp. 423–434 (doi: 10.1002/rcs.274)

- 25. Hart S.G., Staveland L.E.: ‘Development of NASA–TLX (task load index): results of empirical and theoretical research’, Adv. Psychol., 1988, 52, pp. 139–183 (doi: 10.1016/S0166-4115(08)62386-9)